Abstract

This paper presents closed-form optimal cooperative guidance laws for two UAVs under information constraints that achieve the required relative approach angle. Two UAVs cooperate to optimize a common cost function under a coupled constraint on terminal velocity vectors and the information constraint which defines the sensor information availability. To handle the information constraint, a general two-player partially nested decentralized optimal control problem is considered in the continuous finite-horizon time domain. It is shown that under the state-separation principle the optimal solution of the decentralized control problem can be obtained by solving two centralized subproblems which cover the prediction problem for the information-deficient player and the prediction error minimization problem for the player with full information. Based on the solution of the decentralized optimal control problem, the explicit closed-form cooperative guidance laws that can be efficiently implemented on conventional guidance computers are derived. The performance of the proposed guidance laws is investigated on both centralized and decentralized cooperative scenarios with nonlinear engagement kinematics of networked two-UAV systems.

1. Introduction

1.1. Cooperative Control of Networked Systems

Cooperative control problems in networks of multiple autonomous agents have received considerable attention in civilian and military applications. This is due to the advantages that swarm of multi-agent system brings and the growing interest in understanding the tactical hunting behaviors of animal group that realize greater efficiency and operational capability. Especially for cooperative missions of multiple unmanned aerial vehicles (UAVs), cooperative control techniques can be used to improve the operational performance and survivability, as well as greatly reducing the overall effort that would have been previously required by independently operating multiple agents for attack or surveillance missions. For instances, cooperative attack techniques are devised as a countermeasure against the formidable defense systems [1,2,3] and cooperative surveillance techniques are adopted in various applications in order to broadening the time and space coverage of monitoring and detection [4,5,6].

To achieve high-level autonomy for cooperative UAVs, one of the fundamental capabilities is to approach the destination with relative geometric constraints (e.g., terminal time and angle). For instance, the terminal time and angle constraints are the fundamental components that lead the cooperative loitering munitions to saturate and penetrate the defense systems [2]. Also they perform the key role in cooperative surveillance missions by enhancing the observability of the multi-measuring environment [7] and maximizing the mutual information obtained by multiple sensors [8,9]. Even though there have been lots of studies on this domain, complicated numerical trajectory optimization techniques are usually used to obtain the optimal time or energy path with the geometric constraints [10,11,12]. Considering the computational capacity of conventional guidance computers, numerical optimization techniques might not be suitable to swarm UAV systems as the computational complexity typically increases with the number of UAVs. Therefore, adopting analytical guidance algorithm can lead more efficiency for cooperation of multiple UAVs [13].

1.2. Related Works

The most widely accepted approach for deriving analytical guidance algorithms is based on the linear optimal control theory, e.g., linear quadratic regulator (LQR), which gives the optimal control input in a state-feedback form [14,15]. There have been many studies on cooperative guidance problems. To control the terminal time of multiple vehicles [1,3] have proposed impact-time control guidance (ITCG) and cooperative proportional navigation (CPN) which synchronize the time-to-go of each vehicle. The ITCG controls the impact time of vehicles to a predetermined desired value and CPN decreases the variance of time-to-go during the homing. To control the impact angle of multiple vehicles, there have been lots of studies and application such as the impact-angle control guidance (IACG) techniques [16,17,18]. The IACG independently guides each of vehicle to a predetermined desired value based on the one-on-one solution. Furthermore, studies have been extended to control the impact time and angle simultaneously (ITACG) [2,19,20].

The aforementioned cooperative guidance laws can be categorized by two types, which are the implicit and explicit cooperation. In implicit cooperation, multiple vehicles are guided to the target without an information sharing networks but with predetermined objectives (e.g., ITCG, IACG), while explicit cooperation shares information to optimize a common team criteria (e.g., CPN). Compared to the impact-time control guidance laws, for the impact-angle control guidance laws it is not common to construct a closed-loop structure using communication networks. Shaferman and Shima [21] proposed an explicit cooperative guidance law for controlling the relative impact angle of multiple vehicles for any team size which provides substantially better results than implicit guidance laws in the acceleration requirements aspect.

However, networked systems should be considered from a realistic point of view, such as failure or security issues that could seriously impact the system. The connectivity of networked systems becomes a challenge for long-distance applications [4] and they are prone to malicious attacks as the network size increases [22]. To overcome such challenges, [23] has proposed the emergent self-organization algorithm where the strategies of the cooperative UAVs depends only on locally available information while providing robust and dependable connections on UAV-relay networks and [24] has proposed a distributed matching game model where the source UAVs select the preferred relay UAVs competitively, according to their own transmission requirements. In [25] a flight planning procedure is addressed for maintaining the connectivity in multi-UAV swam sensing missions where the message passing procedure on decentralized coordination algorithm is used for propagating information in an on-line learning approach. For maintaining and tracking the network connectivity throughout the formation process of multiple UAVs, [26] presents a decentralized controller which provides a target-centric formation.

The key approach of the aforementioned studies is handling the network with limited information which can be described in information constraints, in optimal control problems. Accordingly, the control problems with network under information constraints should be considered. Such problems can be distinguished as either centralized or decentralized depend on the network structure. In centralized problems, systems involve a single decision maker. This may be because there is only one system involved or multiple subsystems might communicate to the central processor which decides the decisions for the overall system. By contrast, decentralized problems are basically defined as any system which is not centralized. Intuitively, one might think of a system in which each subsystems have their own processing unit and make their own decisions based on their own measurements [27]. Although the optimal solution of centralized control problems are well known, it is hard to obtain the optimal solution of decentralized problems. The well-known example of Witsenhausen [28] showed that the optimal controller of decentralized system with feedback is generally nonlinear and computationally intractable. Ho and Chu [29] developed a class of information structure for decentralized control problems, called partially nested, for which the optimal controller is linear. A broader class of problems called quadratically invariant was developed by Rotkowitz et al. [30] which includes the partially nested systems and have the property that the set of closed-loop maps is convex [31]. The explicit optimal solution of quadratically invariant problems are obtained in various search space. Swigart et al. [32] attained an explicit state-space solution in the discrete finite-horizon time domain, using a spectral factorization approach and dynamic programming. Kim and Lall [33,34,35,36] attained the explicit solution in the continuous infinite-horizon time domain by defining a unifying condition that split the decentralized optimal control problem into multiple centralized problems. However, to apply the decentralized solution to the cooperative guidance laws given as a polynomial function of time-to-go, it is necessary to obtain an explicit solution in the continuous finite-horizon time domain.

1.3. Contributions of This Paper

This paper aims to obtain the explicit guidance laws to control the relative approach angle of two UAVs under the nested dynamical structures with sensor information constraints. The relative approach angle constraints and the information constraints are considered to enhance the observability of networked two-UAV systems on the target and to cover the failure or security issues on networked systems, respectively. The centralized and decentralized optimal guidance solutions of networked two-UAV systems are derived in the continuous finite-horizon time domain. The key difference from the conventional LQR-based optimal guidance laws is that our approach considers the information deficiency constraints between the two UAVs, i.e., the first UAV’s sensor measurement is available to the second UAV (via communication networks or by direct measurements), so the second UAV can use the first UAV’s information for cooperation, while the first UAV is not able to use the second UAV’s measurements. Please note that the conventional LQR frameworks are not able to handle these information constraints. Motivated by [35], the state-separation principle is proposed which enables separation of the decentralized control problem into multiple centralized problems. Based on the optimal solution of the decentralized control problem, the explicit closed-form cooperative guidance solutions are derived in terms of the line-of-sight angles and the line-of-sight angle rates, thus it can be easily implemented on typical guidance computers. To the best knowledge of the authors, this is the first attempt to describe the closed-form cooperative guidance solution that explicitly minimizes the finite-horizon linear quadratic objective function under information constraints. Finally, the solutions are converted to the guidance form with the cost function with the terminal velocity constraint.

The remainder of this paper is organized as follows: the two-UAV cooperative engagement geometry is presented first, and the optimal control problem under the nested dynamical structures with information constraints are formulated in Section 2. Section 3 shows the solution and the proofs of the decentralized control problem followed by the derivation of the cooperative guidance laws. In Section 4, numerical simulation results of networked two-UAV systems are presented. The concluding remarks are given in Section 5.

2. Problem Statement

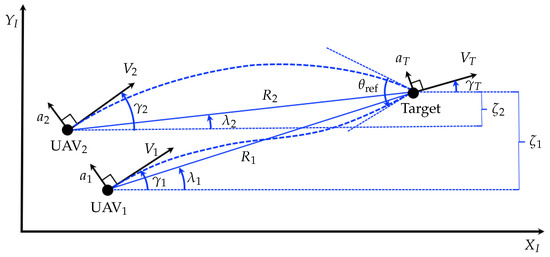

Let us consider the planar homing guidance geometry of two UAVs and a stationary (or slowly moving) target, as shown in Figure 1. The frame is an inertial Cartesian coordinate system which is fixed in space. Variables associated with the i-th UAV for and the target are denoted by subscripts i and T. Here and denote the velocity, flight-path angle and the line-of-sight (LOS) angle, respectively. The normal acceleration of each vehicle is denoted by a and the predetermined relative approach angle constraint of two UAVs are denoted by . The relative distance of each UAV and target in axis is denoted by . Other variables in Figure 1 are self-explanatory.

Figure 1.

Two UAVs in cooperative engagement geometry.

2.1. Kinematics Relations for Cooperative Engagement

The kinematics of each UAV for the homing problem can be expressed in vector form as follows:

where the variables in bold font represent the value in coordinate. Here and are the unit vectors of axis and axis and the velocities of each UAV and target are assumed to be constant. Under the assumption, the constant closing velocity and the interception time can be calculated as

where is the nominal range-to-go of the i-th UAV at time t, and is the initial time.

The homing kinematics in Equation (1) is clearly nonlinear, which needs to be linearized in order for deriving guidance laws based on the linear quadratic (LQ) optimal control theory. For this purpose, the near-collision course assumption, which is valid for small and , is used. Then the linearized kinematics of two UAVs can be expressed in state-space form as follows:

where

with the process noise w which covers the wind gust or the target maneuver . Please note that this is a straight-forward two-agent extension of the very widely used linearization techniques for classical optimal guidance problems [37,38,39].

2.2. Nested Dynamical Systems with Sensor Information Deficiency Constraints

Let us consider the nested dynamical structures for two UAVs, as shown in Figure 2 which implies that the dynamical interactions are directional. Without loss of generality, we consider two interconnected linear systems with nested dynamics as follows:

Figure 2.

Two-player nested dynamical structures.

Notice the two-UAV system model in Equation (3) can be described in this form. For generality, from here the word ’player’ is used instead of ’UAV’ until the optimal strategies are derived.

In the nested dynamics framework described above, and denote the state variable and control input of i-th player, respectively. Please note that the above equations imply that the first player’s state and decision affects the second player, while the second player’s does not affect the first player. Also, the first player’s sensor measurement is available to the second player (via communication networks or by direct measurements), so the second player can use the first player’s information for its control ( depends on and ), while the first player is not able to use the second player’s measurements ( depends on only). Here, we assume that each player’s sensor measures its state directly which indicates that , and therefore for .

Define the random variables of the initial states which are mutually independent with the following known probability density functions:

and the process noises are assumed to be stationary zero-mean Gaussian which are characterized by the following covariance matrices:

The set of available information for each player is defined as follows:

which gives the control strategies as:

where is a function or a dynamical system that describes the controller of the i-th player.

The performance index in the finite-horizon quadratic form which couples the states and is defined as follows:

where the weighting parameters H and Q are positive semidefinite and R is positive definite as follows:

Then the optimization problem describing the cooperation of the two players can be stated as follows:

Problem 1.

(Two player LQR) For the following two-player nested dynamical systems model,

and find the optimal strategies and that minimize the finite-horizon quadratic cost

where is a function of for , and is a function of and for .

2.3. State Separation

The centralized controller for linear quadratic regulation problem is well known that the full-state feedback is the optimal strategy, i.e., every player needs access to every information. However, in decentralized control problem it is impracticable because of the information asymmetry; player 1 does not have measurement information on player 2. Therefore, the state-separation principle is proposed which separates player 2’s state () into two parts: (1) player 1’s best estimate on player 2’s state (), and (2) the remainder (, i.e., the estimation error). The accessible information with information set can be written to the conditional estimation which are as follows:

- For player 1:where .

- For player 2:where and .

The subscript indicates the state of player j estimated by player i. Notice that player 1 must estimate the state of player 2 which is defined by , and the best estimation is given by the dynamical propagation

which player 1 can compute only using player 1’s measurement information and player 2’s control strategy (it is assumed that the collaborator’s control strategy is known to each other). The definition of the estimation error of and the corresponding control input are as follows:

where the estimation error dynamics is given by:

2.4. Uncorrelated Variance Propagation

In this subsection, it is shown that the variances of state accessible to player 1 and the estimation error are propagated independently, and it is used to derive two independent subproblems that give insight of the optimal strategy. The initial probability density functions in Equation (5) are assumed to be known to each other, where the initial probability density functions of and are as follows:

giving the structured covariance matrix of as follows:

Consider a block covariance matrix which is structured as follows:

where is block (size compatible with A) and is block (size compatible with . Suppose an arbitrary feedback gain matrix which has the same structure as , then P propagates with the following Lyapunov equation:

where and are the system and input matrix in Equation (16). In addition, the autocorrelation of process noise matrix, Q, is:

hence , , , and Q have the same structures as , and consequently the covariance matrix also follows the same structure as below:

Therefore, and are uncorrelated and independent if follows the same structure as .

2.5. Cost Separation

The cost function in Equation (9) can be rewritten by substituting the state with the separated state in Equation (15) which yields

where the state variables and the control variables are given by = and , and , , are given by:

which helps to rewrite Problem 1 by using the following state-separated model.

Problem 2.

(Decentralized two player LQR) Consider the separated system model

and find the optimal strategies that minimize the cost

where and are functions of for , and is a function of and for .

Based on the fact that and are independent, Equation (24) can be separated into three parts following the structures of .

- Part 1:

- Part 2:

- Part 3:

The cost and represent the performance index of the strategies by and respectively, while describes the cross-coupling of and . Suppose the weighting matrix Hdec and Qdec are symmetric, then can be simplified as

where and are linear in and respectively. Since and are independent random variables with the initial probability density functions given in Equations (5) and (19) where their variance propagate with the Lyapunov equation in Equation (22), the expected values of the linear combinations of and can be derived as follows:

and

Therefore, and are functions of independent variables and consequently . Then the minimum of J is given by the summation of the minimums of and as follows:

Now the optimal solution of Problem 2 can be obtained by solving two independent subproblems. The solution of each subproblem can be obtained by each player, the leader and the follower.

Subproblem 1.

(Leader problem) Consider the separated model for the leader

where the accessible information for the leader is limited to

Find the optimal strategies and that minimize the cost

where and are functions of for .

Subproblem 2.

(Follower problem) Consider the separated model for the follower

where the follower is accessible to every information

Find the optimal strategy that minimizes the cost

where is a function of and for .

3. Main Results

In this section, the explicit optimal control strategy of the Problem 1 is obtained. Then based on the solution, the centralized and decentralized optimal two-agent cooperative guidance laws are derived.

3.1. Decentralized Two-Player Optimal Controller

Lemma 1.

Consider the Subproblem 1 and suppose that there exists a stabilizing solution X for the Riccati equation

with the terminal condition

where are the system and input matrix in Equation (17) and H is the terminal weighting matrix defined in Equation (26).

Then the optimal strategies for Subproblem 1 are as follows:

where

and evolves with the following dynamics:

Lemma 2.

Consider the Subproblem 2 and suppose that there exists a stabilizing solution Y for the Riccati equation

with the terminal condition

Then the optimal strategy for Subproblem 2 is as follows:

where

Proof.

(for both Lemma 1 and Lemma 2) For Subproblems 1 and 2, all the necessary information is accessible to each player as follows:

therefore, each subproblem is a centralized problem with full information, for which the optimal strategy is the well-known full-state feedback obtained by solving the Riccati equations in Equations (33) and (37). For more technical details including the solution uniqueness issues, one may refer to [33] or [35].

Theorem 1.

Proof.

The proof follows from Lemma 1, Lemma 2 and the definition of and as follows:

which achieves the minimum cost given in Equation (32).

3.2. Optimal Cooperative Guidance Laws for Two UAVs Relative Approach Angle Control

In this section, the main results in Theorem 1 are used for deriving the optimal cooperative guidance solutions for two UAVs under information constraints with the relative approach angle constraints. The solutions are expressed in terms of the line-of-sight parameters, so that they can be easily understood by aerospace guidance communities and can be efficiently implemented on conventional guidance computers.

3.2.1. Centralized Solution with Full Information Sharing

For controlling the relative approach angle between two UAVs, a linear quadratic problem with the following cost function is considered:

where the notations follow from Figure 1, and the positive real number b and c are the penalties imposed on the UAVs’ miss distance and the approach angle error, respectively. The parameter r above represents the reference value inducing the terminal relative approach angle. The flight-path angle of each UAV is related to and as follows:

and the parameter is the ratio of and . Without loss of generality, it is assumed that is not smaller than , hence:

The relative approach angle of two UAVs, , which is required to be at the terminal time, can be expressed as follows:

To obtain the optimal control input that minimizes Equation (45), the Hamiltonian is defined as follows:

where and , with the definitions of x and u from Equation (3). Hereafter in this subsection, we follow the same definitions for x and u. The Lagrangian multiplier is a column vector which consists of and , and the first necessary conditions for optimality are the following costate equations:

with the terminal conditions as follows:

thus, the dynamic equations of the costates are as follows:

The second necessary condition for optimality is as follows:

which yields

Substituting the above optimal control solutions to the linear system description in Equation (3) and integrating yields:

along with and , Equation (55) can be expressed in terms of as follows:

Evaluating the above equation with the transition matrix and r gives the following linear relations:

where

and

Then the resulting linear algebraic equations can be solved for the unknown terminal values and thus arrive at the expression for by replacing the arbitrary by t as follows:

where the optimal feedback gains for and and the feed-forward gains for are obtained as follows:

Taking the limit , for obtaining the zero-miss distance with the required relative approach angle, yields

and then the optimal control strategies are obtained as follows:

which can be rewritten in terms of the line-of-sight angles as follows:

where the relation of line-of-sight angle , line-of-sight rate , closing velocity and time-to-go are given below:

Notice in Equation (63) that each agent is required to access the measurement information from both UAVs, to be able to compute the optimal solutions.

3.2.2. Decentralized Solution with Information Deficiency Constraints

Based on Theorem 1 the decentralized solution of the leader problem can be obtained from Equation (62) as follows:

and the follower problem is the well-known optimal rendezvous problem where the solution is given as:

which can be rewritten in terms of the line-of-sight angles as follows:

and

Please note that the decentralized optimal strategy for agent1 can be computed using and only, while the optimal strategy for agent2 requires all the measurement information from both UAVs.

3.2.3. Potential Issues on Practical Implementation

The cooperative guidance laws proposed in this paper considers the matched time-horizon for both UAVs. However, they can vary for each agent because of the different launch conditions, uncertainties of the dynamical model, or external disturbances. To manage this issue, the cost function in Equation (45) can be extended for different time-horizons as follows:

where the leader is assumed to intercept the target earlier than the follower does:

Before the time-to-go of the follower reach to the initial time-to-go of the leader, is assumed to be 0 that yields . Therefore for , the follower is guided with the proportional navigation (PN) guidance law for the centralized case and for the decentralized case, computed as follows:

For the proposed guidance laws are used, where the leader predicts , and which depend on the time-to-go of the follower by using the dynamical propagation in Equation (12), whereas the follower retains the necessary information by communication networks or by direct measurements.

4. Numerical Experiments

In this section, the performance of the proposed guidance strategies are investigated on networked two-UAV systems. We consider the planar nonlinear kinematics for the UAVs as follows:

where the positions of the i-th UAV in the frame from Figure 1 are denoted by , in this section, and the other notations follow from Section 2. The process noise can be interpreted as the disturbance factor that includes the wind gust acting on the i-th UAV or target maneuver. Please note that it has been used and proven useful in a wide range of literature from classical optimal guidance problems [1,3,16,17,35,37].

In this example, two UAVs are launched from different locations with identical launch angle of 10 deg and guided to approach a stationary target with different approach angles separated by 30 deg. Please note that no explicit approach angle command for each UAV was given. For each UAV, the maximum available guidance command is assumed to be , where g represents the gravitational acceleration. The initial states of the UAVs and the target are listed in Table 1.

Table 1.

Initial states for the numerical example.

Both the centralized and decentralized cooperation scenarios are considered here. In the centralized cooperation scenario, the deterministic UAV dynamics, i.e., , in Equation (70) is considered for reference analysis. The two UAVs are assumed to share the perfect information on , and for . In the decentralized cooperation scenario, the directional information constraint is considered, i.e., the first UAV’s sensor measurement is available to the second UAV, while the second’s measurement is not available to the first, and the stochastic dynamics is considered, where disturbs the dynamics and trajectories. Our main interest is to see whether the proposed decentralized cooperation scheme let the two UAVs approach the target with the required relative approach angle while making up the perturbed dynamics and trajectories caused by the random disturbance ’s.

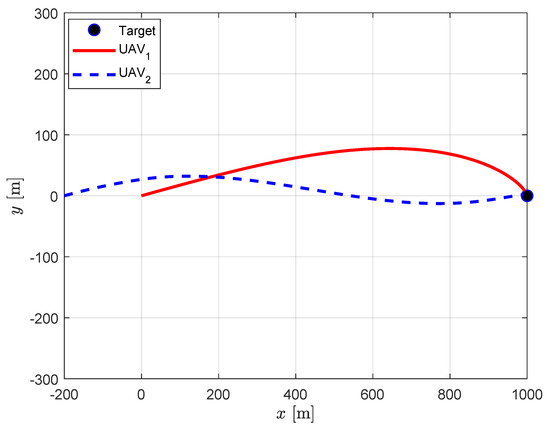

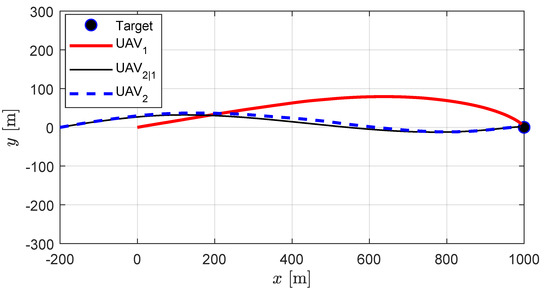

4.1. Centralized Cooperation under Full Information Sharing

Figure 3 presents the trajectories obtained by the centralized guidance strategy. It is apparent that the guidance strategy enforces the required relative approach angle of 30 deg with a small error of 0.15 deg while both UAVs perfectly approaching to the target. Although both UAVs are launched to identical direction, UAV1 approaches the target from above while UAV2 approaches from below to satisfy the relative approach angle constraint. The impact time of each UAV is 10.12 s and 11.95 s, respectively. The detailed results are listed in Table 2.

Figure 3.

Centralized cooperation: UAV trajectories.

Table 2.

Results from the centralized cooperation with full information.

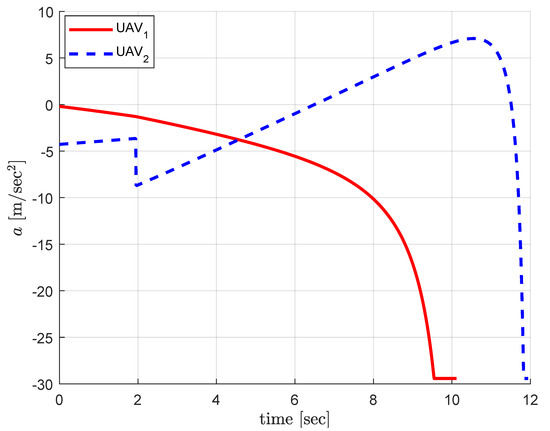

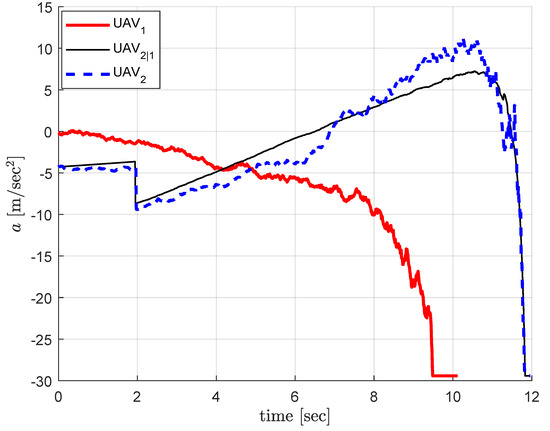

Figure 4 shows the acceleration commands during the engagement. The guidance command for UAV1 decreases to negative value which allows UAV1 to approach the target from above to satisfy the relative angle constraint. The guidance command for UAV2 increases from negative value to positive to approach the target from below to satisfy the relative approach angle constraint. Please note that UAV2 is guided with the PN guidance law for the first 1.95 s where the matched time-to-go for UAV1 does not exist in this engagement scenario. After that UAV2 cooperates with UAV1 for the matched time-horizon.

Figure 4.

Centralized cooperation: guidance commands.

4.2. Decentralized Cooperation under Information Deficiency

Figure 5 and Figure 6 present the decentralized cooperation scenario. In this case, UAV1 estimates the state of UAV2 by the dynamic propagation and UAV2 issues a correction command in order to minimize the error caused by UAV1’s estimate. Figure 5 presents the trajectories obtained by the decentralized guidance strategy where the thin black line (UAV2|1) represents UAV1’s best estimate on UAV2’s position. It is evident that UAV2’s position converges to that of UAV2|1 which allows the relative approach angle to be maintained for a similar level with the centralized cooperation. The detailed results are listed in Table 3.

Figure 5.

Decentralized cooperation: UAV trajectories. Thin black line represents UAV1’s best estimate on UAV2’s position, and the dotted blue line represents the actual trajectory of UAV2.

Figure 6.

Decentralized cooperation: guidance commands. Thin black line (UAV2|1) represents UAV1’s best estimate on UAV2’s guidance command, and the dotted blue line (UAV2) represents the actual guidance command of UAV2 as the sum .

Table 3.

Results from the decentralized cooperation with information constraints.

Figure 6 shows the acceleration commands during the engagement where the thin black line (UAV2|1) represents UAV1’s best estimate on UAV2’s guidance command. UAV2’s guidance command is maintained slightly less than that of UAV2|1 until UAV2’s correction command increases sufficiently, and become greater than the estimated value in order to making up the perturbed trajectories caused by the disturbance. It is obvious that the guidance command of UAV2 converges to that of UAV2|1 at the terminal time which indicates that the correction command converges to zero.

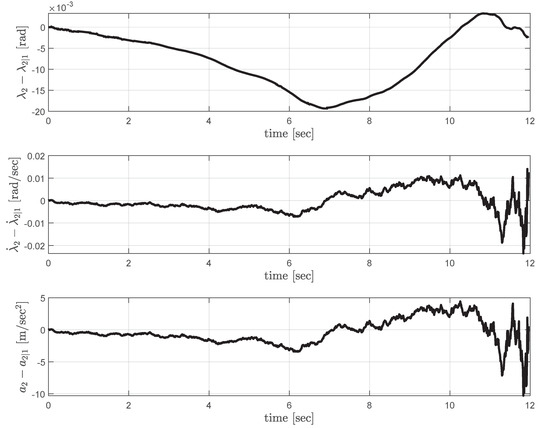

Figure 7 shows the error in UAV1’s estimate on UAV2’s line-of-sight angle and the line-of-sight rate. The last plot in Figure 7 shows the corresponding correction command computed by UAV2. The estimation errors increase under the influence of the disturbance, but they quickly converge to zero, which indicates the decentralized guidance strategy tries to follow the centralized guidance strategy. Please note that the fluctuating guidance commands observed when the time-to-go approaches to zero is usual as in most of the classical and practical guidance laws [1,3,16,17,35,37].

Figure 7.

Decentralized cooperation: estimation error profiles (top, middle), and the correction command (bottom) computed by UAV2.

5. Conclusions

In this study, optimal cooperative guidance laws for two UAVs under sensor information deficiency constraints and the relative approach angle constraints are proposed. The general decentralized optimal control problem is formulated with the nested dynamics and information structure where the communication between the UAVs is directional. The optimal control problem is solved by adopting the state-separation principle which separates the decentralized optimal control problem into two centralized optimal control subproblems. The solution of the first subproblem considers the information deficiency which the leader is associated with according to the accessible information. The solution of the second subproblem considers additional effort for the follower that tries to minimize the prediction error of the leader. The optimal cooperative guidance solutions are derived in terms of the line-of-sight angles and the line-of-sight angle rates, so that it can be easily understood by the guidance community and can be easily implemented on conventional guidance computers.

Based on the proposed optimal cooperative guidance solution, the centralized and decentralized cooperative guidance laws are derived in closed form. Two UAVs cooperate to optimize a common objective function which couples their vertical velocity components at the terminal states. The performance of the proposed guidance strategies is investigated with nonlinear kinematics on both of centralized and decentralized cooperation setups. In the centralized cooperation setup, a deterministic scenario is considered, while a stochastic scenario is considered for decentralized cooperation setup. The simulation results show the guidance strategy enforces the required relative approach angle constraints and the decentralized guidance strategy converges to the centralized guidance strategy as the follower supports the prediction of the leader.

Future research directions may include extensions to general n-UAV cooperative guidance solutions or other cooperative mission objectives. Other realistic constraints such as collision avoidance, communication range, communication delay, and so on, should also be taken into account for practical implementation of the proposed approach.

Author Contributions

Conceptualization, J.-H.K.; methodology, J.-H.K. and D.L.; validation, J.-H.K. and D.L.; formal analysis, D.L.; investigation, D.L.; resources, H.-L.C.; writing—original draft preparation, D.L.; writing—review and editing, D.L., H.-L.C. and J.-H.K.; visualization, D.L.; supervision, J.-H.K. and H.-L.C.; funding acquisition, J.-H.K. and H.-L.C. All authors have read and agreed to the published version of the manuscript.

Funding

D.L. and H.-L.C. were supported in part by Unmanned Vehicles Core Technology Research and Development Program through the National Research Foundation of Korea (NRF), Unmanned Vehicle Advanced Research Center (UVARC) funded by the Ministry of Science and ICT, the Republic of Korea (No. 2020M3C1C1A01082375). J.-H.K. was supported in part by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2019R1C1C1011579).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jeon, I.S.; Lee, J.I.; Tahk, M.J. Homing guidance law for cooperative attack of multiple missiles. J. Guid. Control Dyn. 2010, 33, 275–280. [Google Scholar] [CrossRef]

- Lee, J.I.; Jeon, I.S.; Tahk, M.J. Guidance law to control impact time and angle. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 301–310. [Google Scholar]

- Jeon, I.S.; Lee, J.I.; Tahk, M.J. Impact-time-control guidance law for anti-ship missiles. IEEE Trans. Control Syst. Technol. 2006, 14, 260–266. [Google Scholar] [CrossRef]

- Acevedo, J.J.; Maza, I.; Ollero, A.; Arrue, B.C. An Efficient Distributed Area Division Method for Cooperative Monitoring Applications with Multiple UAVs. Sensors 2020, 20, 3448. [Google Scholar] [CrossRef]

- Casbeer, D.W.; Kingston, D.B.; Beard, R.W.; McLain, T.W. Cooperative forest fire surveillance using a team of small unmanned air vehicles. Int. J. Syst. Sci. 2006, 37, 351–360. [Google Scholar] [CrossRef]

- Gu, J.; Su, T.; Wang, Q.; Du, X.; Guizani, M. Multiple moving targets surveillance based on a cooperative network for multi-UAV. IEEE Commun. Mag. 2018, 56, 82–89. [Google Scholar] [CrossRef]

- Fonod, R.; Shima, T. Estimation enhancement by cooperatively imposing relative intercept angles. J. Guid. Control Dyn. 2017, 40, 1711–1725. [Google Scholar] [CrossRef]

- Hoffmann, G.M.; Tomlin, C.J. Mobile sensor network control using mutual information methods and particle filters. IEEE Trans. Autom. Control 2009, 55, 32–47. [Google Scholar] [CrossRef]

- Choi, H.L.; Lee, S.J. A potential-game approach for information-maximizing cooperative planning of sensor networks. IEEE Trans. Control Syst. Technol. 2015, 23, 2326–2335. [Google Scholar] [CrossRef]

- Kaplan, A.; Kingry, N.; Uhing, P.; Dai, R. Time-optimal path planning with power schedules for a solar-powered ground robot. IEEE Trans. Autom. Sci. Eng. 2016, 14, 1235–1244. [Google Scholar] [CrossRef]

- He, S.; Shin, H.S.; Tsourdos, A. Trajectory optimization for target localization with bearing-only measurement. IEEE Trans. Robot. 2019, 35, 653–668. [Google Scholar] [CrossRef]

- Spangelo, I.; Egeland, O. Generation of energy-optimal trajectories for an autonomous underwater vehicle. In Proceedings of the 1992 IEEE International Conference on Robotics and Automation, Nice, France, 12–14 May 1992; pp. 2107–2108. [Google Scholar]

- He, S.; Lee, C.H.; Shin, H.S.; Tsourdos, A. Optimal Guidance and Its Applications in Missiles and UAVs; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Bryson, A.E. Applied Optimal Control: Optimization, Estimation and Control; CRC Press: Boca Raton, FL, USA, 1975. [Google Scholar]

- Kirk, D.E. Optimal Control Theory: An Introduction; Courier Corporation: New York, NY, USA, 2012. (Original Work Published 1970). [Google Scholar]

- Ryoo, C.K.; Cho, H.; Tahk, M.J. Optimal guidance laws with terminal impact angle constraint. J. Guid. Control Dyn. 2005, 28, 724–732. [Google Scholar] [CrossRef]

- Ryoo, C.K.; Cho, H.; Tahk, M.J. Time-to-go weighted optimal guidance with impact angle constraints. IEEE Trans. Control Syst. Technol. 2006, 14, 483–492. [Google Scholar] [CrossRef]

- Kim, M.; Grider, K.V. Terminal guidance for impact attitude angle constrained flight trajectories. IEEE Trans. Aerosp. Electron. Syst. 1973, 9, 852–859. [Google Scholar] [CrossRef]

- Kumar, S.R.; Ghose, D. Impact time and angle control guidance. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Kissimmee, FL, USA, 5–9 January 2015; p. 0616. [Google Scholar]

- Erer, K.S.; Tekin, R. Impact time and angle control based on constrained optimal solutions. J. Guid. Control Dyn. 2016, 39, 2448–2454. [Google Scholar] [CrossRef]

- Shaferman, V.; Shima, T. Cooperative optimal guidance laws for imposing a relative intercept angle. J. Guid. Control Dyn. 2015, 38, 1395–1408. [Google Scholar] [CrossRef]

- Shakeri, R.; Al-Garadi, M.A.; Badawy, A.; Mohamed, A.; Khattab, T.; Al-Ali, A.K.; Harras, K.A.; Guizani, M. Design challenges of multi-UAV systems in cyber-physical applications: A comprehensive survey and future directions. IEEE Commun. Surv. Tutor. 2019, 21, 3340–3385. [Google Scholar] [CrossRef]

- Orfanus, D.; de Freitas, E.P.; Eliassen, F. Self-organization as a supporting paradigm for military UAV relay networks. IEEE Commun. Lett. 2016, 20, 804–807. [Google Scholar] [CrossRef]

- Liu, D.; Xu, Y.; Wang, J.; Xu, Y.; Anpalagan, A.; Wu, Q.; Wang, H.; Shen, L. Self-organizing relay selection in UAV communication networks: A matching game perspective. IEEE Wirel. Commun. 2019, 26, 102–110. [Google Scholar] [CrossRef]

- Teacy, W.L.; Nie, J.; McClean, S.; Parr, G. Maintaining connectivity in UAV swarm sensing. In Proceedings of the 2010 IEEE Globecom Workshops, Miami, FL, USA, 6–10 December 2010; pp. 1771–1776. [Google Scholar]

- Dutta, R.; Sun, L.; Pack, D. A decentralized formation and network connectivity tracking controller for multiple unmanned systems. IEEE Trans. Control Syst. Technol. 2017, 26, 2206–2213. [Google Scholar] [CrossRef]

- Bemporad, A.; Heemels, M.; Johansson, M. Networked Control Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 406. [Google Scholar]

- Witsenhausen, H.S. A counterexample in stochastic optimum control. SIAM J. Control 1968, 6, 131–147. [Google Scholar] [CrossRef]

- Ho, Y.C.; Chu, K.H. Team decision theory and information structures in optimal control problems–Part I. IEEE Trans. Autom. Control 1972, 17, 15–22. [Google Scholar] [CrossRef]

- Rotkowitz, M.; Lall, S. Decentralized control information structures preserved under feedback. In Proceedings of the 41st IEEE Conference on Decision and Control, Las Vegas, NV, USA, 10–13 December 2002; Volume 1, pp. 569–575. [Google Scholar]

- Lessard, L.; Lall, S. Quadratic invariance is necessary and sufficient for convexity. In Proceedings of the 2011 American Control Conference, San Francisco, CA, USA, 29 June–1 July 2011; pp. 5360–5362. [Google Scholar]

- Swigart, J.; Lall, S. An explicit dynamic programming solution for a decentralized two-player optimal linear-quadratic regulator. In Proceedings of the Mathematical Theory of Networks and Systems, Budapest, Hungary, 5–9 July 2010. [Google Scholar]

- Kim, J.H.; Lall, S. A unifying condition for separable two player optimal control problems. In Proceedings of the 2011 50th IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), Orlando, FL, USA, 12–15 December 2011; pp. 3818–3823. [Google Scholar]

- Kim, J.H.; Lall, S. Separable optimal cooperative control problems. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 5868–5873. [Google Scholar]

- Kim, J.H.; Lall, S. Explicit solutions to separable problems in optimal cooperative control. IEEE Trans. Autom. Control 2014, 60, 1304–1319. [Google Scholar] [CrossRef]

- Kim, J.H.; Lall, S.; Ryoo, C.K. Optimal cooperative control of dynamically decoupled systems. In Proceedings of the 2012 IEEE 51st IEEE Conference on Decision and Control (CDC), Maui, HI, USA, 10–13 December 2012; pp. 4852–4857. [Google Scholar]

- Zarchan, P. Tactical and Strategic Missile Guidance; American Institute of Aeronautics and Astronautics, Inc.: Washington, DC, USA, 2012. [Google Scholar]

- Wang, Y.; Lei, H.; Ye, J.; Bu, X. Backstepping Sliding Mode Control for Radar Seeker Servo System Considering Guidance and Control System. Sensors 2018, 18, 2927. [Google Scholar] [CrossRef] [PubMed]

- Fang, F.; Cai, Y.; Yu, Z. Adaptive estimation and cooperative guidance for active aircraft defense in stochastic scenario. Sensors 2019, 19, 979. [Google Scholar] [CrossRef]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).