A High-Speed Low-Cost VLSI System Capable of On-Chip Online Learning for Dynamic Vision Sensor Data Classification

Abstract

1. Introduction

2. Algorithm Review

2.1. Algorithm Flow

2.2. Algorithm Optimization

3. VLSI Hardware System

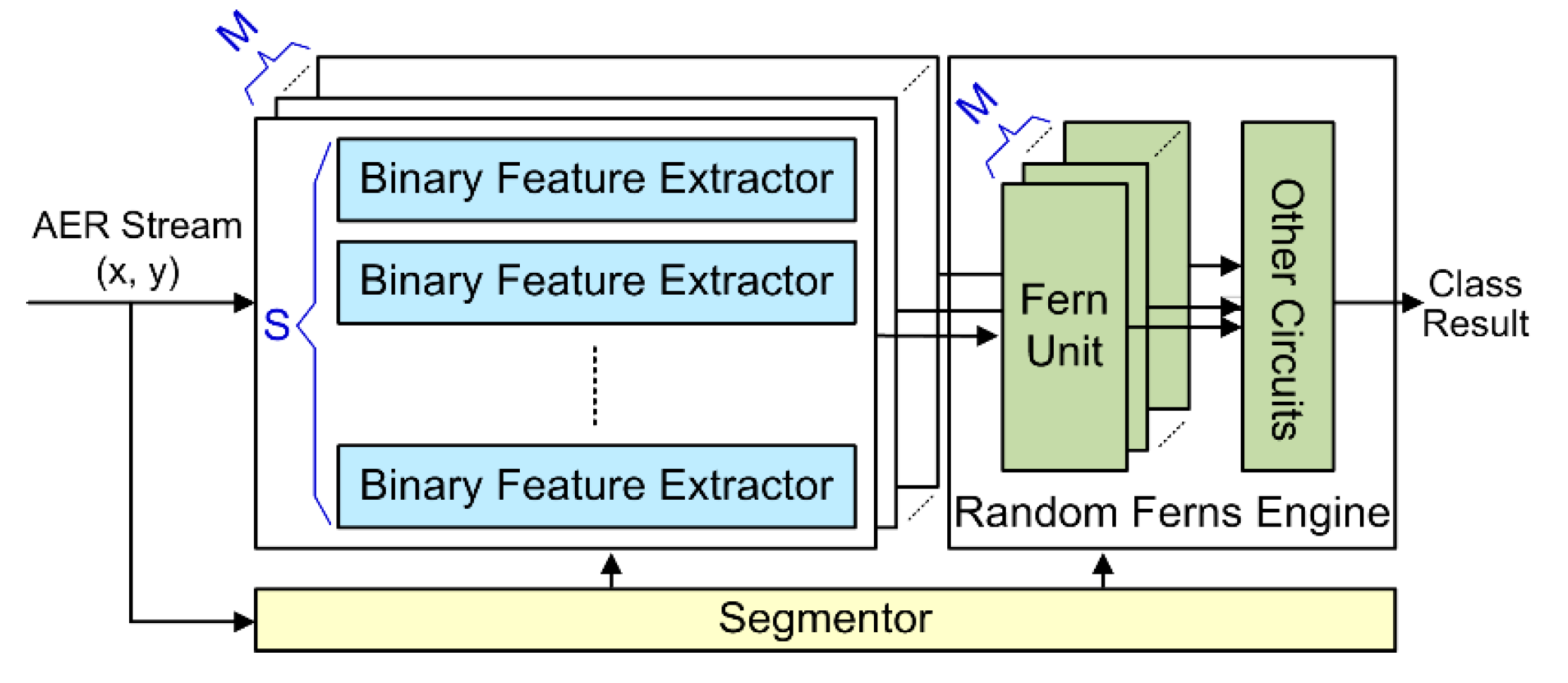

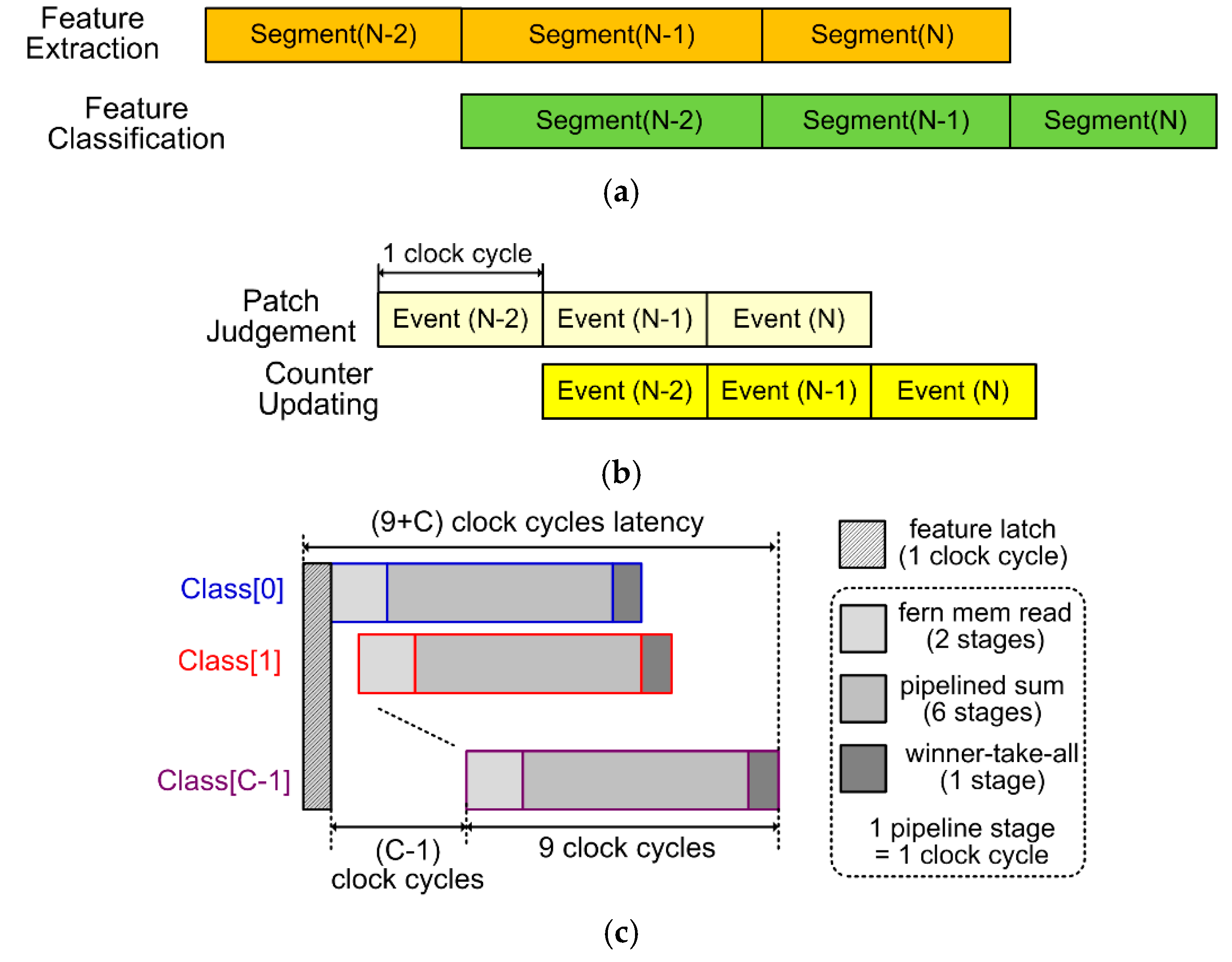

3.1. System Architecture

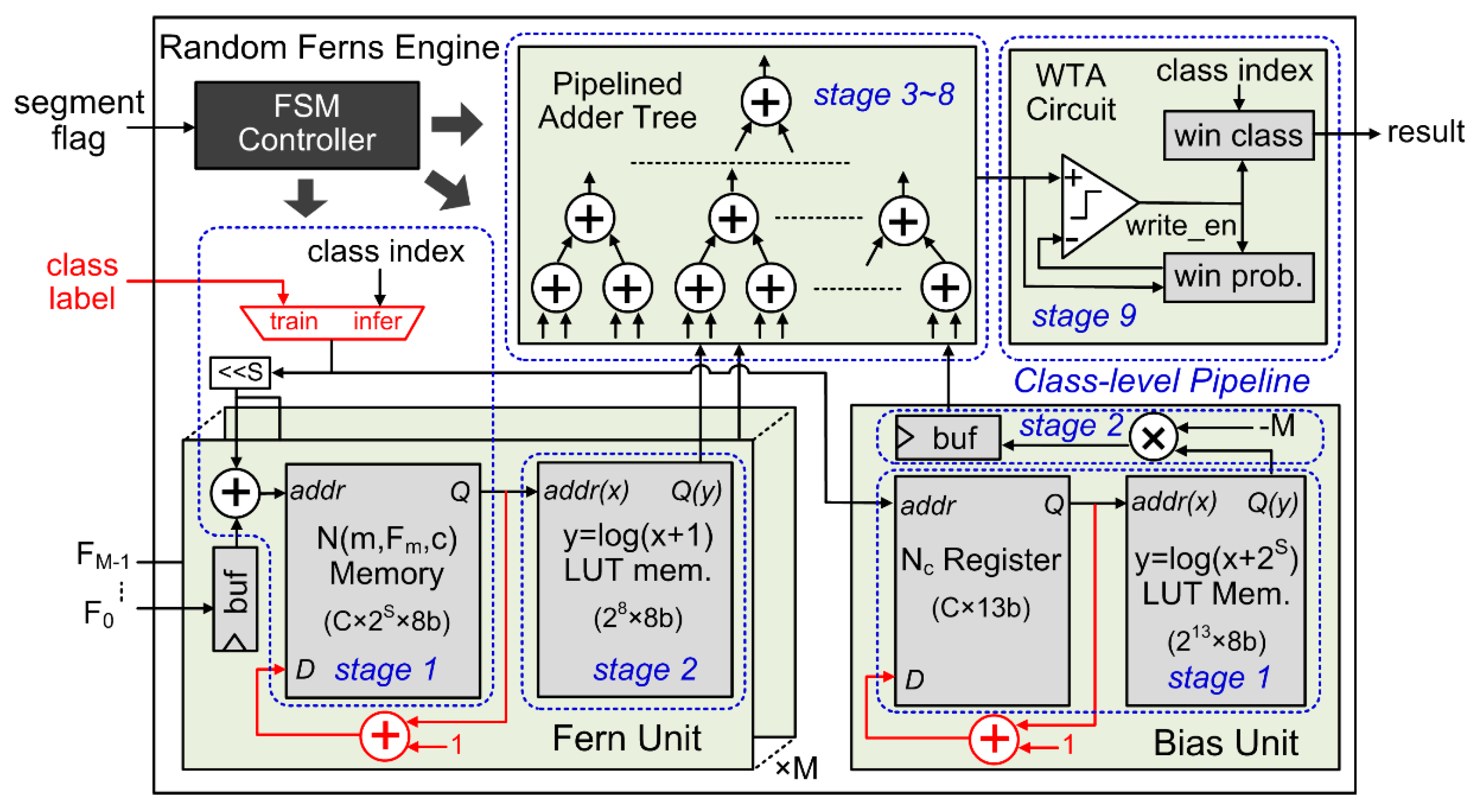

3.2. Circuit Design

4. Experimental Results

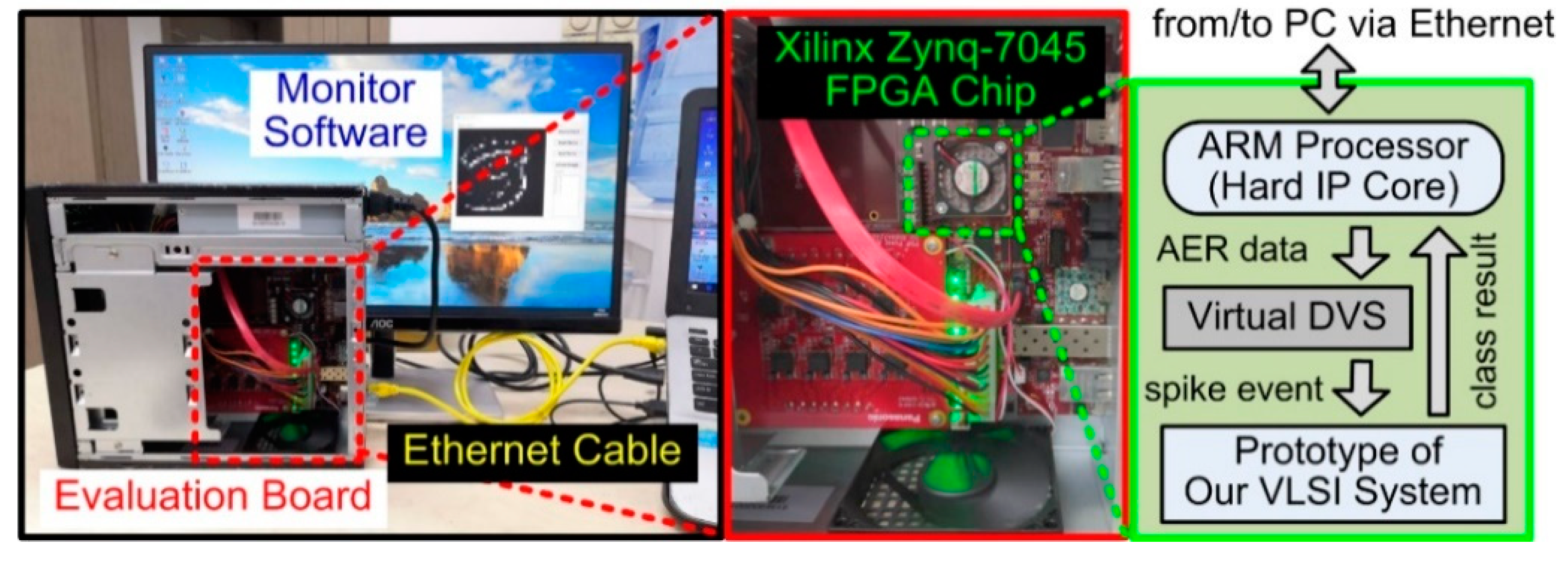

4.1. FPGA Prototype

4.2. Comparison and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Huang, C.; Ai, H.; Li, Y.; Lao, S. High-performance rotation invariant multiview face detection. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 671–686.

- Chen, S.; Zhang, S.; Shang, J.; Chen, B. Brain-inspired cognitive model with attention for self-driving cars. IEEE Trans. Cogn. Dev. Syst. 2017, 11, 13–25.

- Puvvadi, U.L.N.; Benedetto, K.D.; Patil, A.; Kang, K.; Park, Y. Cost-effective security support in real-time video surveillance. IEEE Trans. Ind. Inform. 2015, 11, 1457–1465.

- Haskell, M.W.; Cauley, S.F.; Wald, L.L. TArgeted Motion Estimation and Reduction (TAMER): Data Consistency Based Motion Mitigation for MRI Using a Reduced Model Joint Optimization. IEEE Trans. Med. Imaging 2018, 37, 1253–1265.

- Shi, C.; Yang, J.; Han, Y.; Cao, Z.; Qin, Q.; Liu, L.; Wu, N.; Wang, Z. A 1000 fps Vision Chip Based on a Dynamically Reconfigurable Hybrid Architecture Comprising a PE Array Processor and Self-Organizing Map Neural Network. IEEE J. Solid-State Circuits 2014, 49, 2067–2082.

- Li, H.; Liu, H.; Ji, X.; Li, G.; Shi, L. CIFAR10-DVS: An event-stream dataset for object classification. Front. Neurosci. 2017, 11, 309.

- Posch, C.; Matolin, D.; Wohlgenannt, R.; Maier, T.; Litzenberger, M. A Microbolometer Asynchronous Dynamic Vision Sensor for LWIR. IEEE Sens. J. 2009, 9, 654–664.

- Posch, C.; Serrano-Gotarredona, T.; Linares-Barranco, B.; Delbruck, T. Retinomorphic event-based vision sensors: Bioinspired cameras with spiking output. Proc. IEEE 2014, 102, 1470–1484.

- Won, J.-Y.; Ryu, H.; Delbruck, T.; Lee, J.H.; Hu, J. Proximity Sensing Based on a Dynamic Vision Sensor for Mobile Devices. IEEE Trans. Ind. Electron. 2015, 62, 536–544.

- van Schaik, A.; Liu, S.-C. AER EAR: A matched silicon cochlea pair with address event representation interface. IEEE Trans. Circuits Syst. I Regul. Pap. 2007, 54, 48–59.

- Lenero-Bardallo, J.A.; Serrano-Gotarredona, T.; Linares-Barranco, B. A 3.6 μs Latency Asynchronous Frame-Free Event-Driven Dynamic-Vision-Sensor. IEEE J. Solid-State Circuits 2011, 46, 1443–1455.

- Song, S.; Miller, K.D.; Abbott, L.F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 2000, 3, 919–926.

- Gütig, R.; Sompolinsky, H. The tempotron: A neuron that learns spike timing–based decisions. Nat. Neurosci. 2006, 9, 420–428.

- Kulkarni, S.R.; Rajendran, B. Spiking neural networks for handwritten digit recognition—Supervised learning and network optimization. Neural Netw. 2018, 103, 118–127.

- Yu, Q.; Tang, H.; Tan, K.C.; Li, H. Rapid feedforward computation by temporal encoding and learning with spiking neurons. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1539–1552.

- Zhao, B.; Ding, R.; Chen, S.; Linares-Barranco, B.; Tang, H. Feedforward Categorization on AER Motion Events Using Cortex-Like Features in a Spiking Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1963–1978.

- Furber, S.B.; Lester, D.R.; Plana, L.A.; Garside, J.D.; Painkras, E.; Temple, S.; Brown, A.D. Overview of the SpiNNaker System Architecture. IEEE Trans. Comput. 2013, 62, 2454–2467.

- Painkras, E.; Plana, L.A.; Garside, J.; Temple, S.; Galluppi, F.; Patterson, C.; Lester, D.R.; Brown, A.D.; Furber, S.B. SpiNNaker: A 1-W 18-Core System-on-Chip for Massively-Parallel Neural Network Simulation. IEEE J. Solid-State Circuits 2013, 48, 1943–1953.

- Akopyan, F.; Sawada, J.; Cassidy, A.; Alvarez-Icaza, R.; Arthur, J.; Merolla, P.; Imam, N.; Nakamura, Y.; Datta, P.; Nam, G.-J.; et al. TrueNorth: Design and Tool Flow of a 65mW 1 Million Neuron Programmable Neurosynaptic Chip. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2015, 34, 1537–1557.

- Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673.

- Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99.

- Ma, D.; Shen, J.; Gu, Z.; Zhang, M.; Zhu, X.; Xu, X.; Xu, Q.; Shen, Y.; Pan, G. Darwin: A neuromorphic hardware co-processor based on spiking neural networks. J. Syst. Archit. 2017, 77, 43–51.

- Frenkel, C.; Lefebvre, M.; Legat, J.D.; Bol, D. A 0.086-mm² 12.7-pJ/SOP 64k-Synapse 256-Neuron Online-Learning Digital Spiking Neuromorphic Processor in 28-nm CMOS. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 145–158.

- Camunas-Mesa, L.; Zamarreno-Ramos, C.; Linares-Barranco, A.; Acosta-Jimenez, A.J.; Serrano-Gotarredona, T.; Linares-Barranco, B. An Event-Driven Multi-Kernel Convolution Processor Module for Event-Driven Vision Sensors. IEEE J. Solid-State Circuits 2012, 47, 504–517.

- Zamarreno-Ramos, C.; Linares-Barranco, A.; Serrano-Gotarredona, T.; Linares-Barranco, B. Multicasting mesh AER: A scalable assembly approach for reconfigurable neuromorphic structured AER systems. Application to ConvNets. IEEE Trans. Biomed. Circuits Syst. 2013, 7, 82–102.

- Camunas-Mesa, L.A.; Dominguez-Cordero, Y.L.; Linares-Barranco, A.; Serrano-Gotarredona, T.; Linares-Barranco, B. A Configurable Event-Driven Convolutional Node with Rate Saturation Mechanism for Modular ConvNet Systems Implementation. Front. Neurosci. 2018, 12, 63.

- Tapiador-Morales, R.; Linares-Barranco, A.; Jimenez-Fernandez, A.; Jimenez-Moreno, G. Neuromorphic LIF Row-by-Row Multiconvolution Processor for FPGA. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 159–169.

- Peng, X.; Zhao, B.; Yan, R.; Tang, H.; Yi, Z. Bag of events: An efficient probability-based feature extraction method for AER image sensors. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 791–803.

- Li, H.; Li, G.; Shi, L. Classification of Spatiotemporal Events Based on Random Forest. In Advances in Brain Inspired Cognitive Systems; Springer Nature: Berlin/Heidelberg, Germany, 2016; pp. 138–148.

- Shi, C.; Li, J.; Wang, Y.; Luo, G. Exploiting Lightweight Statistical Learning for Event-Based Vision Processing. IEEE Access 2018, 6, 19396–19406.

- Ozuysal, M.; Calonder, M.; Lepetit, V.; Fua, P. Fast keypoint recognition using random ferns. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 448–461.

- Huang, J.; He, W.; Zhou, X.; He, J.; Wang, Y.; Shi, C.; Lin, Y. A Hardware System for Fast AER Object Classification with On-chip Online Learning. In Proceedings of the 2019 IEEE International Conference on Integrated Circuits, Technologies and Applications (ICTA), Chengdu, China, 13–15 November 2019; pp. 85–86.

- Huang, J.; Lin, Y.; He, W.; Zhou, X.; Shi, C.; Wu, N.; Luo, G. High-speed Classification of AER Data Based on a Low-cost Hardware System. In Proceedings of the International Conference on ASIC (ASICON), Chongqing, China, 29 October–1 November 2019; pp. 1–4.

- Farabet, C.; Paz, R.; Perez-Carrasco, J.; Zamarreno-Ramos, C.; Linares-Barranco, A.; LeCun, Y.; Culurciello, E.; Serrano-Gotarredona, T.; Linares-Barranco, B. Comparison between Frame-Constrained Fix-Pixel-Value and Frame-Free Spiking-Dynamic-Pixel ConvNets for Visual Processing. Front. Neurosci. 2012, 6, 32.

- Mini-ITX Board. Available online: http://www.zedboard.org/product/mini-itx-board (accessed on 20 July 2020).

- Shi, C.; Luo, G. A Compact VLSI System for Bio-Inspired Visual Motion Estimation. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1021–1036.

- Shi, C.; Luo, G. A Streaming Motion Magnification Core for Smart Image Sensors. IEEE Trans. Circuits Syst. II Express Briefs 2018, 65, 1229–1233.

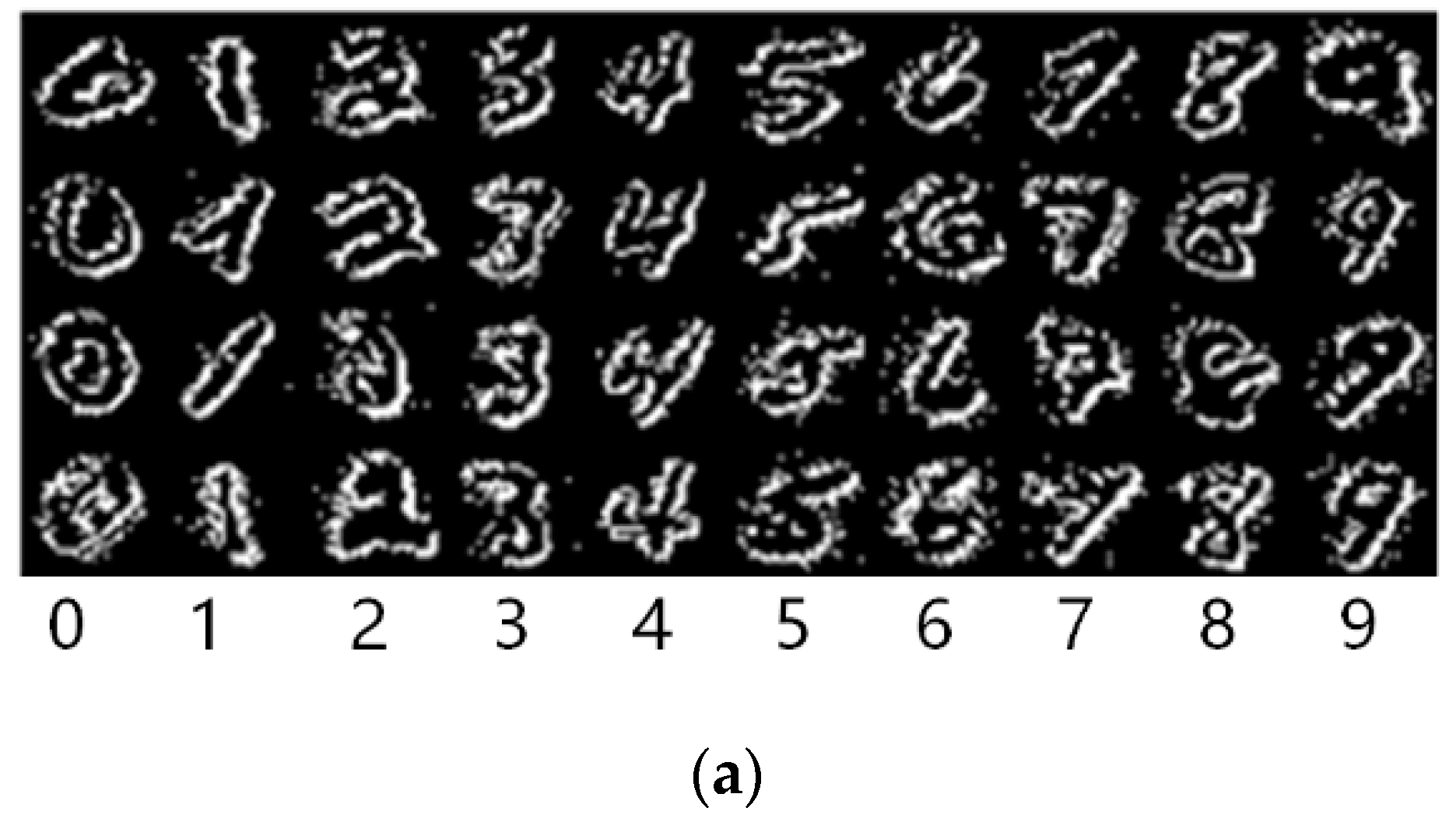

- Serrano-Gotarredona, T.; Linares-Barranco, B. Poker-DVS and MNIST-DVS. Their History, How They Were Made, and Other Details. Front. Neurosci. 2015, 9, 481.

- Chen, G.K.; Kumar, R.; Sumbul, H.E.; Knag, P.C.; Krishnamurthy, R.K. A 4096-Neuron 1M-Synapse 3.8-pJ/SOP Spiking Neural Network With On-Chip STDP Learning and Sparse Weights in 10-nm FinFET CMOS. IEEE J. Solid-State Circuits 2019, 54, 992–1002.

- Tapiador-Morales, R.; Maro, J.M.; Jimenez-Fernandez, A.; Jimenez-Moreno, G.; Benosman, R.; Linares-Barranco, A. Event-Based Gesture Recognition through a Hierarchy of Time-Surfaces for FPGA. Sensors 2020, 20, 3404.

- Serrano-Gotarredona, R.; Oster, M.; Lichtsteiner, P.; Linares-Barranco, A.; Paz-Vicente, R.; Gomez-Rodriguez, F.; Camunas-Mesa, L.; Berner, R.; Rivas-Perez, M.; Delbruck, T.; et al. CAVIAR: A 45k Neuron, 5M Synapse, 12G Connects/s AER Hardware Sensory–Processing–Learning–Actuating System for High-Speed Visual Object Recognition and Tracking. IEEE Trans. Neural Netw. 2009, 20, 1417–1438.

| Hyperparameter | M (# of Ferns) | S (# of Binary Features) | α (# of Events per Segment) | D (Patch Size Range) | C (# of Classes) |
|---|---|---|---|---|---|
| MNIST-DVS | 50 | 12 | 300 | 3, 4 or 5 | 10 |
| Poker-DVS | 50 | 8 | 100 | 3, 4 or 5 | 4 |
| Posture-DVS | 50 | 10 | 500 | 3, 4 or 5 | 3 |
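The hyperparameters above map onto the Random Ferns classifier of Ozuysal et al. [31]: M ferns, each packing S binary features into an index over a 2^S-bin histogram per class, evaluated on segments of α events. The Python sketch below illustrates that structure and the one-counter-per-fern online update. It is not the authors' design: the hypothetical `events_to_frame` helper (an event-count image per segment), the pixel-pair comparison features, the random patch placement with sides drawn from D, and the add-one counter initialisation are all assumptions made for illustration.

```python
import numpy as np


def events_to_frame(xs, ys, height, width):
    """Hypothetical helper: accumulate one segment of events into a count image."""
    frame = np.zeros((height, width), dtype=np.int32)
    np.add.at(frame, (ys, xs), 1)  # unbuffered add handles repeated (y, x) addresses
    return frame


class RandomFerns:
    """Semi-naive Bayes Random Ferns classifier with incremental (online) learning.

    Assumption: each binary feature compares two pixels drawn from a randomly
    placed square patch whose side is picked from patch_sizes (the D range).
    """

    def __init__(self, M, S, C, height, width, patch_sizes=(3, 4, 5), seed=0):
        rng = np.random.default_rng(seed)
        self.M, self.S, self.C = M, S, C
        side = rng.choice(patch_sizes, size=(M, S))        # patch side per feature
        top = rng.integers(0, height - side + 1)           # patch top-left row
        left = rng.integers(0, width - side + 1)           # patch top-left column
        # Two pixel positions inside each patch; feature bit = frame[p1] > frame[p2].
        self.p1 = (top + rng.integers(0, side), left + rng.integers(0, side))
        self.p2 = (top + rng.integers(0, side), left + rng.integers(0, side))
        self.bit_weights = 1 << np.arange(S)               # packs S bits into one index
        # One 2^S-bin histogram per fern and class, initialised to 1 (add-one prior).
        self.counts = np.ones((M, 2 ** S, C), dtype=np.int64)

    def _fern_indices(self, frame):
        bits = frame[self.p1] > frame[self.p2]             # (M, S) booleans
        return bits.astype(np.int64) @ self.bit_weights    # (M,) leaf index per fern

    def learn(self, frame, label):
        """Online update: increment exactly one counter per fern for the true class."""
        idx = self._fern_indices(frame)
        self.counts[np.arange(self.M), idx, label] += 1

    def predict(self, frame):
        """Return argmax over classes of the summed per-fern log-likelihoods."""
        idx = self._fern_indices(frame)
        hits = self.counts[np.arange(self.M), idx, :]      # (M, C) counts at the leaves
        totals = self.counts.sum(axis=1)                   # (M, C) counts per fern/class
        return int(np.argmax(np.log(hits / totals).sum(axis=0)))
```

In this sketch, learning touches only M counters per segment and classification reads M histogram rows, which is the property that makes incremental updates cheap enough for on-chip learning; a hardware version would typically replace the floating-point logarithms with fixed-point arithmetic.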

| FPGA Platform (Zynq-7045) | Logic: Flip-Flops ¹ (437,200 available) | Logic: Slice LUTs (218,600 available) | Logic: DSP/Multipliers (900 available) | Memory: Block RAM (545 available) | Power Consumption (mW) |
|---|---|---|---|---|---|
| MNIST-DVS | 46505 (10.6%) | 21882 (10.0%) | 1 (0.11%) | 527 (96.7%) | 690 |
| Poker-DVS | 31318 (7.2%) | 14702 (6.7%) | 1 (0.11%) | 52 (9.5%) | 564 |
| Posture-DVS | 38843 (8.9%) | 16923 (7.7%) | 1 (0.11%) | 79 (14.5%) | 652 |
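A rough way to relate the hyperparameter table to the Block RAM column is to count the fern histogram entries, M × 2^S × C, and compare that with the 36 Kb capacity of each Xilinx 7-series block RAM. The sketch below does this for the MNIST-DVS row only. The helper names are hypothetical, and the counter width and the mapping of counters onto BRAM are not given here, so the bits-per-counter figure is only an upper bound under the assumption that all used blocks hold fern counters.

```python
BRAM36_BITS = 36 * 1024  # raw capacity of one 36 Kb block RAM in Xilinx 7-series devices


def fern_table_entries(M, S, C):
    """Histogram counters in a Random Ferns model: M ferns x 2**S leaves x C classes."""
    return M * (2 ** S) * C


def bram_bits(num_blocks):
    """Raw storage of num_blocks 36 Kb block RAMs, in bits."""
    return num_blocks * BRAM36_BITS


entries = fern_table_entries(M=50, S=12, C=10)  # MNIST-DVS hyperparameters
used_bits = bram_bits(527)                      # Block RAM reported for MNIST-DVS
# Upper bound on bits per counter if every used block stored fern counters (assumption).
print(entries, used_bits, round(used_bits / entries, 2))
```

About two million counters for MNIST-DVS, versus far fewer for the smaller S and C of the other two datasets, is consistent with MNIST-DVS being the configuration that nearly fills the device's block RAM.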

| Method | MNIST-DVS Acc. (%) | MNIST-DVS Train. Time (s) | MNIST-DVS Class. Time (s) | Poker-DVS Acc. (%) | Poker-DVS Train. Time (s) | Poker-DVS Class. Time (s) | Posture-DVS Acc. (%) | Posture-DVS Train. Time (s) | Posture-DVS Class. Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| SCNN [15] (DoG + Tempotron) | 62.50 | 1208 | 7825 | 92.53 | 31 | 23.34 | 91.88 | 1548 | 118,717 |
| SCNN [16] (Gabor + Tempotron) | 75.52 | 8805 | 982 | 91.76 | 73.12 | 7.91 | 95.61 | 11,794 | 2984 |
| Statistical Learn. [28] (BoE + SVM) | 74.82 | 31.5 | 27.4 | 93.00 | 0.03 | 0.02 | 98.66 | 45.46 | 44.64 |
| Statistical Learn. [30] (Random Ferns) | 78.08 | 41.3 | 5.0 | 97.2 | 0.6 | 0.1 | 99.59 | 39.3 | 5.0 |
| This work (Random Ferns) | 77.9 | 0.06 (×688) | 0.0066 (×758) | 99.4 | 0.00046 (×1304) | 0.00006 (×1667) | 99.3 | 0.11 (×357) | 0.011 (×456) |

Factors in parentheses are the speed-ups of this work over the Random Ferns method of [30].
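The parenthesised factors in the "This work" row appear to be ratios of the [30] times to the times of this work. The short check below recomputes them from the rounded values in the table; the `TIMES` dictionary and `speedup` helper are illustrative names, not part of the original work.

```python
# (time of [30], time of this work) in seconds, copied from the comparison table.
TIMES = {
    ("MNIST-DVS", "training"): (41.3, 0.06),
    ("MNIST-DVS", "classification"): (5.0, 0.0066),
    ("Poker-DVS", "training"): (0.6, 0.00046),
    ("Poker-DVS", "classification"): (0.1, 0.00006),
    ("Posture-DVS", "training"): (39.3, 0.11),
    ("Posture-DVS", "classification"): (5.0, 0.011),
}


def speedup(t_reference, t_new):
    """Speed-up factor of the new implementation over the reference one."""
    return t_reference / t_new


for (dataset, phase), (t_ref, t_new) in TIMES.items():
    print(f"{dataset} {phase}: x{round(speedup(t_ref, t_new))}")
```

Five of the six factors reproduce exactly. Posture-DVS classification comes out at about ×455 rather than ×456, presumably because the tabulated 0.011 s is itself rounded.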

| Design Work | Implementation | Technology | Clock Freq. (MHz) | Power (mW) | DVS Event Throughput (Meps) | Energy Efficiency | Latency (μs) | Algorithm | On-Chip Learning | Benchmark Dataset | Classification Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Darwin [22] | 25 mm² ASIC | 180 nm | 70 | 59 | N/A | N/A | 160,000 | SNN | No | MNIST (non-DVS) | 93.8 |
| ODIN [23] | 0.086 mm² (core) ASIC | 28 nm FDSOI | 75 | 0.477 | N/A | 78.6 Gsops/W ¹ | 31,447 | SNN | Yes | MNIST (non-DVS) | 84.5 |
| [39] | 1.72 mm² ASIC | 10 nm FinFET | 105 | 9.2 | N/A | 120.5 Gsops/W | 160 | SNN | Yes | MNIST (non-DVS) | 89 |
| [24] | 31.9 mm² ASIC | 350 nm | 100 | 200 | 16.6 | 83 Meps/W | 0.175 | SCNN | No | Real DVS camera | N/A |
| [26] | Spartan-6 FPGA | 45 nm | 50 | 7.7 | 0.05–3 | 6.4–389 Meps/W | 0.5–32 | SCNN | No | Poker-DVS | 97.5 |
| [27] | Zynq-7100 FPGA | 28 nm | 100 | 59 | 0.779 | 13.2 Meps/W | 9.01 | SCNN | No | Poker-DVS | N/A |
| [40] | Zynq-7100 FPGA | 28 nm | 100 | 77 | 2 | 26.0 Meps/W | 6.7 | HOTS | No | NavGestures-sit (DVS) | 93.3 |
| This work | Zynq-7045 FPGA | 28 nm | 100 | 690 | 100 | 145 Meps/W | 0.21 | Random Ferns | Yes | MNIST-DVS / Poker-DVS / Posture-DVS | 77.9 / 99.4 / 99.3 |

Meps: million events per second; Gsops/W: billion synaptic operations per second per watt.
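Several columns of the comparison table follow from others: energy efficiency in Meps/W is throughput divided by power, and at a 100 MHz clock a throughput of 100 Meps corresponds to one event per cycle, while a 0.21 μs latency corresponds to 21 cycles. The hypothetical helpers below recompute these figures, assuming throughput is a sustained rate and latency is per event; the table itself does not define either.

```python
def efficiency_meps_per_w(throughput_meps, power_mw):
    """Energy efficiency in Meps/W: throughput divided by power in watts."""
    return throughput_meps / (power_mw / 1000.0)


def events_per_cycle(throughput_meps, clock_mhz):
    """Average events handled per clock cycle (Meps / MHz is dimensionless)."""
    return throughput_meps / clock_mhz


def latency_cycles(latency_us, clock_mhz):
    """Latency expressed in clock cycles (microseconds x MHz)."""
    return latency_us * clock_mhz


# Rows copied from the table: (throughput Meps, power mW, clock MHz, latency us).
ROWS = {
    "[24]": (16.6, 200, 100, 0.175),
    "[27]": (0.779, 59, 100, 9.01),
    "[40]": (2, 77, 100, 6.7),
    "This work": (100, 690, 100, 0.21),
}

for name, (meps, mw, mhz, lat) in ROWS.items():
    print(f"{name}: {efficiency_meps_per_w(meps, mw):.1f} Meps/W, "
          f"{events_per_cycle(meps, mhz):.3f} events/cycle, "
          f"{latency_cycles(lat, mhz):.1f} cycles latency")
```

The same formula gives about 6.5 Meps/W for the low end of [26] (0.05 Meps at 7.7 mW), so the tabulated 6.4–389 range appears truncated rather than rounded.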

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


He, W.; Huang, J.; Wang, T.; Lin, Y.; He, J.; Zhou, X.; Li, P.; Wang, Y.; Wu, N.; Shi, C. A High-Speed Low-Cost VLSI System Capable of On-Chip Online Learning for Dynamic Vision Sensor Data Classification. Sensors 2020, 20, 4715. https://doi.org/10.3390/s20174715
