Abstract

Disaster robotics is a growing field that is concerned with the design and development of robots for disaster response and disaster recovery. These robots assist first responders by performing tasks that are impractical or impossible for humans. Unfortunately, current disaster robots usually lack the maneuverability to efficiently traverse disaster sites, which often demand extreme navigational capabilities, such as moving through centimeter-scale clearances. Recent work has shown that it is possible to control the locomotion of insects such as the Madagascar hissing cockroach (Gromphadorhina portentosa) through bioelectrical stimulation of their neuro-mechanical system. This provides access to a novel agent that can traverse areas that are inaccessible to traditional robots. In this paper, we present a data-driven inertial navigation system that is capable of localizing cockroaches in areas where GPS is not available. We pose the navigation problem as a two-point boundary-value problem where the goal is to reconstruct a cockroach’s trajectory between the starting and ending states, which are assumed to be known. We validated our technique using nine trials that were conducted in a circular arena using a biobotic agent equipped with a thorax-mounted, low-cost inertial measurement unit. Results show that we can achieve centimeter-level accuracy. This is accomplished by estimating the cockroach’s velocity (using regression models that have been trained to estimate the speed and heading from the inertial signals themselves) and solving an optimization problem so that the boundary-value constraints are satisfied.

1. Introduction

Disasters are defined as discrete meteorological, geological, or man-made events that exceed local resources to respond and contain [1]. Disaster response is the phase of emergency management that is focused on saving the lives of those affected by the disaster and mitigating further damage by the disaster. Over the past 50 years, mankind has become increasingly urbanized, with roughly 55% of the human population living in urban areas [2]. As such, it is increasingly likely that disasters will occur in urban areas. This has led to the formation of specialized Urban Search and Rescue (USAR) teams that are capable of responding to a wide range of disasters in urban areas [3]. Time sensitivity and operation under harsh conditions are among the main challenges for search and rescue. USAR teams have been called upon to conduct operations in areas of extreme heat [4] or radiation [5], as well as environments containing explosive gases [6] or airborne pollutants such as carcinogens [7]. The aforementioned issues have birthed an entire discipline of field robotics, coined disaster robotics (or alternatively, search and rescue robotics) [8]. Disaster robotics is concerned with the design and deployment of robotic agents—whether they be ground, aerial, or marine—that are capable of addressing the challenges of disaster response.

USAR teams often use Unmanned Ground Vehicles (UGVs) to explore areas that responders could not explore rapidly and safely themselves. Existing robotic platforms can be used in areas where there are several meters of clearance; however, urban ruins can contain rubble piles or damaged buildings with voids that are only several centimeters wide, that are highly tortuous and vertical, and that exhibit a wide range of surface properties. Current technology is limited in its ability to miniaturize a robot to this scale while retaining enough mobility to traverse these environments. A potential solution to the mobility problem comes in the form of biologically-inspired robotics [9], a field of robotics that is interested in creating robots that mimic animal locomotion. Biomimetic modes of motion include legged locomotion (e.g., RHex [10] and VelociRoACH [11]) and serpentine locomotion (e.g., Active Scope Camera [12]). Research has also been conducted into creating grippers that mimic the adhesive behavior of insects and geckos (e.g., [13,14]). Though these methods are promising, it is still uncertain how they will be miniaturized to the centimeter scale while retaining their mobility across a wide range of surfaces.

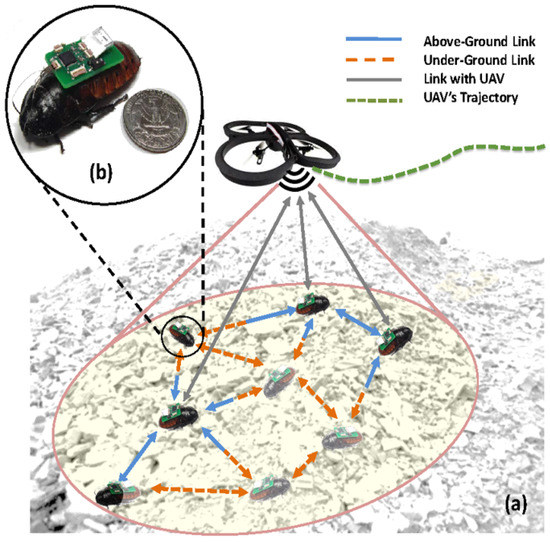

Researchers [15,16,17] have shown that it is possible to remotely control a Madagascar hissing cockroach (Gromphadorhina portentosa) via the bioelectrical stimulation of its neuro-mechanical system. These roaches grow to be approximately 60 mm long and 30 mm wide, with a payload capacity of approximately 15 g [15]. They use a combination of pretarsal claws and adhesive pads to cling to and move on a wide variety of surfaces [18], with top speeds of several cm/s. Their exoskeleton is a compliant structure, allowing them to fall from heights and squeeze under obstacles [19] without issue. Additionally, G. portentosa has the ability to survive days without water and weeks without food. This combination of features could make G. portentosa suitable for USAR teams in disaster response. As shown in the literature, these cockroaches can be outfitted with various electronic sensor payloads to be used for search, reconnaissance, and mapping tasks in urban ruins necessitating extreme mobility [20] (see Figure 1). These agents are referred to as biological robots, or biobots. Biobots can be used, both individually and in larger groups, to perform sensing tasks that are impractical or impossible to accomplish by other means. Sensing modalities may include microphone arrays for two-way audio, environmental sensors such as temperature and gas monitors, and cameras or infrared sensors for video feed. Each biobot has wireless capabilities, and special sensor payloads can be fabricated so that biobots can act as mobile repeaters to improve communication reliability.

Figure 1.

Diagram of a biobotic network: (a) Biobots serve as above/below ground agents and can be controlled either individually or collectively via a leader agent (in this case, a UAV). (b) Each biobot is several centimeters in length and a United States quarter dollar is shown for scale comparison [21].

USAR scenarios present substantial challenges for localization and mapping. Traditional techniques for localization that rely on Global Positioning Systems (GPS) are not feasible, as GPS signals may be unavailable under the rubble. Furthermore, environmental hazards such as fire and smoke make many commonly used ranging techniques (e.g., LIDAR/RADAR) unreliable. Even vision-based techniques can fail in the presence of dirt, mud, and debris. Dirafzoon et al. [22] have recently proposed a solution for mapping that is based on Topological Data Analysis. This method generates a coordinate-free map of an environment using a group of biobots by keeping track of when they come into close proximity with one another. This map can be used to track the connectivity of a group of biobots, and given sufficient coverage of an area, it can also provide a coarse estimate of what obstacles (e.g., voids in the environment or physical impediments) are present. Two limitations of this approach are: first, the map does not contain accurate metric information (i.e., it cannot give responders the location of a point of interest); second, the algorithm requires a large number of biobotic agents to be deployed in an area, which may not always be feasible. The two main contributions of this work are as follows:

- Development of a data-driven model for determining the speed of a biobotic agent (G. portentosa) based solely on inertial signals obtained from a thorax-mounted Inertial Measurement Unit (IMU).

- Design and verification of an inertial navigation system that is capable of estimating the pose of G. portentosa without the aid of additional sensing modalities.

Our navigation system requires minimal sensing modalities and will function with a single biobotic agent, eliminating the need for the biobot to use high-bandwidth/high-power sensors, such as cameras, for navigation.

The remainder of the paper is organized as follows: Section 2 introduces the topic of inertial navigation as well as work that is related to our system; Section 3 provides an overview and mathematical formulation of our navigation system; Section 4 describes the details of our navigation system; Section 5 details the experimental setup used for analysis and validation; Section 6 documents the performance of our navigation system; Section 7 concludes the paper and discusses ongoing and future work.

2. Related Work

A localization system that relies purely on inertial signals is known as an Inertial Navigation System (INS) [23]. Furthermore, inertial signals are often used in conjunction with other sensing modalities to create integrated navigation systems that are capable of localization. A brief review of integrated navigation systems, focusing on those that use IMUs, is presented next.

The goal of a navigation system is to estimate the position and/or orientation of an agent. When both position and orientation are tracked, the resultant system is said to estimate the pose of an agent. In the context of this paper, we will refer to pose estimation as ’localization’. There are a variety of sensing modalities that can be combined with inertial signals to localize an agent, one of the most common being Global Navigation Satellite Systems (GNSS) such as GPS. Systems combining both visual and inertial data are also becoming more common due to a combination of improved on-board processing capabilities, lower camera costs, and increased camera resolution (e.g., [24,25,26,27]). Other common sensors used to supplement inertial navigation systems include range finders such as LIDAR [28,29,30], RADAR [31,32], and SONAR (typically for underwater applications) [33,34].

The position and orientation of an agent can be obtained through double integration of the accelerometer signals and single integration of the gyroscope signals; however, IMU sensor noise renders these results unusable after a short period of time for all but the most precise (i.e., expensive) IMUs. As such, navigation systems that rely solely on integrating the inertial signals are rare, and navigation is usually achieved by supplementing inertial signals with data obtained via additional sensing modalities. Usually, the sensor modalities are integrated using a Kalman Filter. Two common INS/GNSS frameworks are the ’loosely-coupled’ and ’tightly-coupled’ approaches [23], which use GNSS position/velocity and GNSS pseudo-range/pseudo-range rate, respectively. When GNSS is unavailable, as is the case for our application, supplemental sensors, such as those listed in the previous paragraph, can be used for position and/or velocity estimation.
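To make the error-growth argument concrete, the sketch below dead-reckons a stationary IMU whose only error is a constant bias. The function name and the bias values (0.05 m/s² and 0.01 rad/s) are illustrative assumptions, not the specifications of the IMU used in this work. The point is only that single integration (heading) produces an error proportional to t, while double integration (position) produces an error proportional to t².

```python
import numpy as np


def integrate_biased_imu(accel_bias, gyro_bias, dt, duration):
    """Dead-reckon a stationary IMU whose only error is a constant sensor bias.

    The true acceleration and angular rate are zero, so every non-zero output
    below is pure integration error.
    """
    n = int(round(duration / dt))
    t = dt * np.arange(1, n + 1)
    accel = np.full(n, accel_bias)  # measured acceleration (m/s^2); truth is 0
    gyro = np.full(n, gyro_bias)    # measured angular rate (rad/s); truth is 0

    heading_err = np.cumsum(gyro) * dt  # single integration: grows like b_g * t
    vel_err = np.cumsum(accel) * dt     # first integration: grows like b_a * t
    pos_err = np.cumsum(vel_err) * dt   # second integration: grows like b_a * t^2 / 2
    return t, heading_err, pos_err


if __name__ == "__main__":
    t, heading_err, pos_err = integrate_biased_imu(0.05, 0.01, dt=0.01, duration=60.0)
    print(f"after {t[-1]:.0f} s: position error {pos_err[-1]:.1f} m, "
          f"heading error {heading_err[-1]:.2f} rad")
```

With these hypothetical biases, one minute of integration already yields roughly 90 m of position error (½·b·t²) and 0.6 rad of heading error, which is why purely integrated low-cost IMU solutions are rarely usable on their own.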

Pedestrian Dead Reckoning (PDR) is a particular application of inertial navigation where IMUs are used in a novel way [35]. The idea behind PDR is to use the inertial signals to determine a person’s stride by keeping track of when the feet hit the ground, thus limiting error growth by providing a measure of position/orientation displacement without the need to integrate the inertial signals themselves. The stance phase, during which a foot rests on the ground and its velocity can be reset to zero (a zero-velocity update), can be detected using an analytical [36,37] or a data-driven model [38,39,40]. Additionally, the stride lengths themselves can be determined analytically [41] or via a data-driven approach [42]. Unfortunately, many agents do not exhibit distinctive (and consistent) events such as zero-velocity (stance) phases. This is the root cause of the difficulty of inertial navigation in GNSS-denied areas: a lack of sensors and/or events that can be used to reduce the growth of pose error that is caused by noisy IMU signals [43]. As a result, machine learning techniques have been leveraged to learn position and velocity models as an alternative to deriving them from first principles. The effect of the IMU noise is mitigated since the noisy IMU signals are incorporated into the training of the model itself. The downside to this approach is that navigation accuracy is directly dependent on the data used to train the model(s). If the data used to train the model are dissimilar to the data obtained in the field, then the learned model(s) will provide poor approximations. Nevertheless, machine learning techniques have been successfully demonstrated for inertial navigation in areas where GNSS is unavailable.
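As an illustration of the analytical style of stance detection used in PDR, the sketch below flags intervals in which the angular-rate magnitude stays below a threshold for a minimum duration. The function name, the 0.5 rad/s threshold, and the 0.1 s minimum duration are hypothetical choices for a foot-mounted IMU; practical detectors often combine several accelerometer and gyroscope statistics.

```python
import numpy as np


def detect_stance(gyro, dt, rate_thresh=0.5, min_duration=0.1):
    """Return (start, end) sample indices of intervals judged to be stance phases.

    gyro: (N, 3) angular rates in rad/s. A sample is a stance candidate when the
    angular-rate magnitude is below rate_thresh; candidate runs shorter than
    min_duration seconds are discarded. `end` is exclusive.
    """
    quiet = np.linalg.norm(gyro, axis=1) < rate_thresh
    # Pad with False so every quiet run has both a rising and a falling edge.
    padded = np.concatenate(([False], quiet, [False])).astype(np.int8)
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    min_len = int(round(min_duration / dt))
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_len]
```

Each returned interval is where a PDR system would apply a zero-velocity update. Reliably recurring intervals of this kind are exactly what a biobotic agent does not provide.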

Most inertial navigation systems track the position, velocity, and orientation, as well as the bias terms on the accelerometers and gyroscopes. These states comprise the traditional 15-state inertial navigation system, and it is these states that are estimated via data-derived models. Early examples of position/velocity models can be seen in [44,45]. In both papers, the target application was land vehicle navigation. The novelty of the papers came from using the GPS signal as the ground truth for training two neural networks that would be responsible for position and velocity estimation when GPS was unavailable. Under this framework, the vehicle used an INS/GPS system when GPS was available and switched to using the neural networks when GPS was unavailable. The position displacements were estimated using a neural network that took the INS estimates of velocity and orientation as inputs. In [44], the velocity estimation neural network took the INS velocity estimate and time information as inputs, whereas [45] only used the INS velocity estimate as input. Other authors have also taken the approach of using INS estimates to learn models for position and velocity estimation (e.g., [46,47]); however, the need to use INS estimates creates a potential problem—if GPS is lost for an extended period of time, then the navigation system will degrade in performance since the INS estimates will become increasingly erroneous. In [44], this problem was mitigated by creating a variant of their system that used the output of the velocity estimation neural network as input to the position estimation neural network instead of the INS velocity estimates. In [47], this issue was resolved by combining random forest regression with Principal Component Regression (PCR) [48]. Other authors have proposed using windowed inertial signals for pose estimation, thus avoiding this particular issue. 
Windowed approaches can be broken into two categories: models that use Long Short-Term Memory (LSTM) neural networks [49,50,51,52] and models that use Convolutional Neural Networks (CNN) [38,53].

Some authors have created learning models that combine both LSTM and CNN approaches (e.g., [54]) while others have favored using ensemble learning methods in lieu of neural networks [55,56]. The majority of the models in the literature involve position or velocity estimation; however, these are not the only quantities that can be estimated. Orientation [50,54] and speed [38] can be estimated and the noise parameters for Kalman Filter frameworks can be learned as well [53].

There are two competing paradigms on how learned models should be incorporated into a navigation system: end-to-end frameworks and pseudo-measurements. The idea behind the end-to-end framework is that a learned model is sufficient to output the pose of an agent given its inertial signals. Systems utilizing the end-to-end paradigm tend to incorporate deep neural networks involving CNNs or LSTMs [50,51,54,57]. The primary benefit of an end-to-end framework is the ability to implicitly model the relationship between the agent’s egomotion (measured by the inertial signals) and its pose. As such, it becomes possible to create navigation systems using lower quality IMUs. The major downside of this approach is that the accuracy of these models is highly dependent on the data used to train them. Proponents of the pseudo-measurement approach argue that the best way to incorporate learned models is by adding them as additional measurements to an existing navigation system (e.g., a Kalman filter) [38,49,52,53,55,58]. The benefit of this approach is that the existing navigation system is augmented rather than replaced; however, the challenge of this approach comes from determining the details of how the learned model(s) will be integrated into the existing system. A middle-ground approach has also been used in the literature: use the original navigation system when possible and the learned model(s) only when necessary [44,45,46,47,56]. A list of current trends and challenges in integrated navigation systems can be found in [59,60].

Our target application, navigation of centimeter-scale rubble stacks using biobotic agents, is a form of terrestrial navigation in a GNSS-denied environment. It shares similarities with the pedestrian and automobile localization problems that are commonly seen in the literature; however, there are two key distinctions: first, there are no zero-velocity events that occur with guaranteed regularity, and second, biobotic agents frequently change both their speed and their direction. To resolve these issues, we developed an inertial navigation system that utilizes regression models for estimating speed and heading. Speed regression was chosen as an alternative to velocity regression to simplify the training process. Other papers, such as [38], estimate speed for zero-velocity detection; however, these papers are concerned with determining whether an agent is moving (i.e., a classification problem), whereas we are interested in how fast an agent is moving. Our algorithm computes heading by using a regression model to estimate the heading correction that must be applied to headings that are computed by an Attitude and Heading Reference System (AHRS). This idea of using a data-driven model to correct an INS output is similar to [55]; however, in that paper, the authors developed a model for determining position error. Although our speed and heading models use windowed inertial signals, similar to many of the papers listed in the preceding paragraphs, we explicitly extract the features from the inertial signals [61], whereas other approaches, such as [50,54], do this implicitly. Our models use random forests to mitigate the overfitting issues that are commonly seen in neural networks. Our approach of using random forests is similar to [46]; however, that paper proposed a navigation system that took INS velocities as input and returned position displacements as output.
We incorporate the speed and heading models into our navigation system by using them to solve a two-point boundary-value problem.

3. Problem Formulation

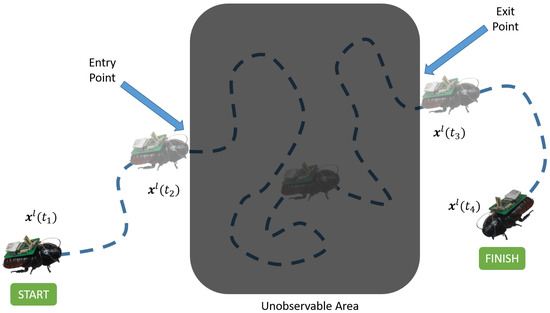

Consider the following scenario, illustrated in Figure 2: a USAR team needs to search a target area that is not easily accessible via conventional tools (e.g., a rubble stack exhibiting high tortuosity and centimeter-scale clearance). The team deploys a biobotic agent into the target area, where it explores the environment while simultaneously collecting pertinent sensor data. Once the biobot enters the target area, its pose is no longer observable; however, the biobot will eventually leave the target area, whereby its pose will once again be observable. The goal is to reconstruct the biobot’s trajectory using inertial data so that any signals of interest can be localized.

Figure 2.

Problem Description: Suppose we want to track the trajectory of an agent over time. The agent can be observed before it enters the target area and after it exits it; however, the agent is not observable during the time interval $(t_0, t_f)$, where $t_0$ and $t_f$ denote the entry and exit times, respectively. As such, the agent’s state is unknown during this time interval. The goal of our algorithm is to estimate the agent’s state during $(t_0, t_f)$ so that the trajectory over the entire observation period can be reconstructed. See Section 3 for a description of the agent’s state, $\mathbf{x}$.

Our biobots use low-cost IMUs to decrease unit cost and increase scalability. These IMUs have noisy gyroscope signals that make it difficult to accurately estimate the orientation of the biobot. As such, the gyroscope signals must be supplemented with additional information to limit the error growth of the orientation. We chose to use the direction of gravity [62] as the supplemental information. A downside to this approach is that it necessitates an algorithm for determining the direction of gravity in the body frame of the biobot. Ordinarily, this process would be accomplished using an orientation estimate that is obtained from integrating the gyroscopes; however, this strategy is not viable due to sensor noise. To avoid this issue, we restrict the agent to 2D planar environments so that the direction of gravity in the body frame is known, and we leave the extension to 3D for future work.

The trajectory estimation problem can be formulated as a nonlinear two-point boundary-value problem [63,64], where the biobot’s pose at both the entry (start state) and exit (final state) points are known. In this boundary-value problem, the objective is to find an optimal state trajectory between the start and end pose. Optimality is measured by how well the reconstructed state trajectory matches the estimated speeds and headings that are obtained from the IMU mounted on the biobot.

For our application, we define a local tangent frame, l, and use it as both the reference and resolving frame of our navigation system, where frame l uses Cartesian coordinates. Additionally, we define the body frame (denoted by b) to be centered on the IMU that is mounted to the body of the biobotic agent itself, with origin $o_b$. Note that the subscript of a term such as $\mathbf{r}^{l}_{lb}$ means “frame b with respect to (w.r.t.) frame l”, and the superscript means “resolved using frame l”. The coordinate frames are illustrated in Figure 8.

We define the biobot’s state, $\mathbf{x}$, to be its position, speed, and heading:

$$\mathbf{x} = \begin{bmatrix} r_x & r_y & s & \psi \end{bmatrix}^{\top} \qquad (1)$$

where $(r_x, r_y)$, $s$, and $\psi$ denote the biobot’s position (the components of $\mathbf{r}^{l}_{lb}$), speed, and heading, respectively. We assume that the biobotic agent always moves in the direction that it is facing. Under this assumption, we can recover the biobot’s velocity, $\mathbf{v}^{l}_{lb} = [v_x, v_y]^{\top}$, by combining its speed and heading: $v_x = s\cos\psi$ and $v_y = s\sin\psi$. Note that this model is very similar to the Dubins car [65] and Reeds–Shepp car [66] models that are commonly used in robotics; the difference is that those models use speed and angular rate as inputs, whereas our model defines speed to be a state and uses specific force and angular rate as inputs (see Figure 3), denoted as $\mathbf{f}^{b}_{ib}$ and $\boldsymbol{\omega}^{b}_{ib}$, respectively.
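A minimal sketch of how this kinematic model turns speed and heading samples into planar positions, assuming each sample is held constant over its sampling interval (forward-Euler integration). `propagate_position` is a hypothetical helper, and this plain dead reckoning ignores the boundary conditions that the full problem imposes.

```python
import numpy as np


def propagate_position(p0, speed, heading, dt):
    """Integrate the planar model v = s * [cos(psi), sin(psi)].

    p0: (2,) starting position in the local tangent frame l.
    speed, heading: length-K arrays of s and psi (rad), one per interval of length dt.
    Returns a (K + 1, 2) array of positions, starting with p0.
    """
    p0 = np.asarray(p0, dtype=float)
    speed = np.asarray(speed, dtype=float)
    heading = np.asarray(heading, dtype=float)
    # Velocity recovered from speed and heading (the agent moves where it faces).
    vel = np.column_stack((speed * np.cos(heading), speed * np.sin(heading)))
    steps = np.cumsum(vel * dt, axis=0)  # accumulated displacement after each interval
    return np.vstack((p0, p0 + steps))
```

For example, ten samples at 2 cm/s with a heading of π/2 over 0.1 s intervals move the agent 2 cm along +y.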

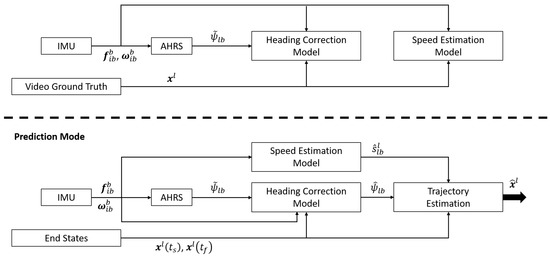

Figure 3.

System Pipeline: The algorithm is broken into two modes of operation: Training Mode and Prediction Mode. In training mode, inertial signals are combined with video ground truth data to train regression models that can be used to estimate the speed and heading of the agent. In prediction mode, the trained speed/heading models are used to estimate the speed and heading from the inertial signal input. The estimated speeds/headings are then combined to reconstruct the trajectory of the agent. $\mathbf{f}^{b}_{ib}$ and $\boldsymbol{\omega}^{b}_{ib}$ denote the specific force measured by the accelerometer and the angular rate measured by the gyroscope, respectively; frame i denotes the Earth-centered inertial frame. $\hat{s}$ is the biobot’s estimated speed, $\hat{\psi}_{\mathrm{AHRS}}$ is the heading estimate obtained from the AHRS, and $\hat{\psi}$ is the biobot’s estimated heading after it has been corrected.

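We represent the biobot’s true trajectory as a smooth mapping in $\mathbb{R}^2$, $\gamma : [0, t_f] \to \mathbb{R}^2$, where $t_f$ denotes the biobot’s exit time. Our goal is to find a reconstruction of $\gamma$, $\hat{\gamma}(t;\boldsymbol{\theta})$, where $\boldsymbol{\theta}$ denotes the set of parameters that govern $\hat{\gamma}$. The optimal parameters are obtained by minimizing the following cost functional:

$$\boldsymbol{\theta}^{*} = \arg\min_{\boldsymbol{\theta}} \int_{t_0}^{t_f} \left\lVert \dot{\gamma}(t) - \dot{\hat{\gamma}}(t;\boldsymbol{\theta}) \right\rVert^{2} dt \qquad (2)$$

subject to the boundary conditions $\hat{\gamma}(t_0;\boldsymbol{\theta}) = \gamma(t_0)$ and $\hat{\gamma}(t_f;\boldsymbol{\theta}) = \gamma(t_f)$, where $t_0$ and $t_f$ denote the entry and exit times, respectively. We pose this problem as a supervised machine learning problem, where the objective is to minimize Equation (2) by training a model that is capable of generating $\dot{\hat{\gamma}}$ using inertial signals. The ground truth values of $\dot{\gamma}$ are obtained from video footage of the biobot.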
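To illustrate how boundary conditions constrain a reconstruction, the sketch below solves a deliberately simplified, discretized analogue of Equation (2): estimated velocities on a uniform time grid are adjusted as little as possible (in the squared-error sense) so that the integrated path starts at the known entry position and ends at the known exit position. The Lagrange conditions force the same constant correction onto every velocity sample, so the answer is closed form. The helper name is hypothetical, and this is not claimed to be the optimization procedure used in this work.

```python
import numpy as np


def enforce_endpoints(v_hat, dt, p_start, p_end):
    """Minimally adjust estimated velocities so the path hits both endpoints.

    Solves  min_u  sum_k ||u_k - v_hat_k||^2 dt   s.t.  p_start + sum_k u_k dt = p_end.
    Setting the gradient of the Lagrangian to zero gives u_k = v_hat_k + delta
    for a single constant vector delta, which the constraint then determines.
    """
    v_hat = np.asarray(v_hat, dtype=float)  # (K, 2) estimated velocities
    p_start = np.asarray(p_start, dtype=float)
    p_end = np.asarray(p_end, dtype=float)
    K = v_hat.shape[0]
    miss = p_end - (p_start + v_hat.sum(axis=0) * dt)  # endpoint error of dead reckoning
    delta = miss / (K * dt)                            # spread the error evenly in time
    u = v_hat + delta
    path = np.vstack((p_start, p_start + np.cumsum(u * dt, axis=0)))
    return u, path
```

Spreading the correction evenly is the simplest choice consistent with the constraint; a richer parameterization of the reconstruction would distribute the correction according to how uncertain each velocity estimate is.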

4. Methodology

The goal of our navigation system is to estimate a biobot’s trajectory during time intervals where it cannot be observed. We assume that the biobot’s state is known at the beginning and end of these time intervals, and use the inertial signals obtained from an IMU mounted on the biobot to generate a curve that best approximates the biobot’s trajectory. Our algorithm uses machine learning to accomplish this goal and the system pipeline is shown in Figure 3.

The models used in the algorithm are trained via supervised learning. As such, there are two phases to our algorithm: Training Mode and Prediction Mode. In training mode, features are extracted from the inertial signals and used to generate regression models for estimating the speed of the biobot and correcting the heading that is obtained from an Attitude and Heading Reference System (AHRS). In prediction mode, these two models are used to estimate the biobot’s trajectory.

This section provides the details necessary to implement our algorithm, and is broken down into subsections that correspond to the modules shown in Figure 3. Additionally, all models were implemented using the MATLAB Statistics and Machine Learning Toolbox [67].

4.1. Attitude and Heading Reference System (AHRS)

An AHRS is a partial INS that only tracks orientation. These systems are often used to supplement gyroscopes that are too noisy to be used as standalone systems for computing orientation. Due to the noise on our gyroscopes, we use the Madgwick Filter [62], an AHRS commonly used in the robotics community, to compute the biobot’s orientation. The Madgwick Filter is a complementary filter that combines gyroscope integration, accelerometer leveling, and magnetic heading to produce an accurate estimate of orientation. Furthermore, the Madgwick Filter generates an orientation estimate for each IMU sample that is given as input. Since our application is prone to magnetic interference, we do not use the magnetic heading component of the Madgwick Filter. The Madgwick Filter and the ramifications of excluding the magnetic heading component from it are elaborated upon next, starting with the filter’s cost function:

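$$\min_{\hat{\mathbf{q}}} \; \tfrac{1}{2}\left\lVert f(\hat{\mathbf{q}}, \hat{\mathbf{d}}^{l}, \hat{\mathbf{s}}^{b}) \right\rVert^{2}, \qquad f(\hat{\mathbf{q}}, \hat{\mathbf{d}}^{l}, \hat{\mathbf{s}}^{b}) = \hat{\mathbf{q}}^{*} \circ \hat{\mathbf{d}}^{l} \circ \hat{\mathbf{q}} - \hat{\mathbf{s}}^{b} \qquad (3)$$

where $\hat{\mathbf{q}}$, $\hat{\mathbf{d}}^{l}$, and $\hat{\mathbf{s}}^{b}$ denote the estimated orientation (in quaternion form) of the body w.r.t. the local tangent reference frame; the direction of the body’s acceleration due to gravity (i.e., a unit vector), resolved in the local tangent reference frame; and the estimated direction of the body’s acceleration due to gravity, resolved in the body frame, respectively. Note that $(\cdot)^{*}$ and $\circ$ denote the quaternion conjugate and quaternion product, respectively. The interested reader can learn more about using quaternions as rotation operators in [68].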

The idea behind Equation (3) is that the correct orientation estimate will be the orientation that minimizes the difference between the direction of the acceleration due to gravity resolved in the reference frame (in quaternion form), $\hat{\mathbf{d}}^{l}$, after it has been rotated into the body frame, and the direction of the acceleration due to gravity resolved in the body frame, $\hat{\mathbf{s}}^{b}$. The underlying assumption of Equation (3) is that there exists a means by which $\hat{\mathbf{s}}^{b}$ can be estimated. As mentioned in Section 3, we assume that the biobot is operating on the plane, and under this assumption, $\hat{\mathbf{s}}^{b} = [0,\ 0,\ 0,\ 1]^{\top}$ (a pure quaternion; i.e., gravity lies along the body z-axis). The gradient of Equation (3) w.r.t. $\hat{\mathbf{q}}$, denoted as $\nabla f$, is used to update the orientation of the biobot, as follows:

$$\hat{\mathbf{q}}^{(+)} = \hat{\mathbf{q}}^{(-)} + \Delta t \left( \tfrac{1}{2}\, \hat{\mathbf{q}}^{(-)} \circ \begin{bmatrix} 0 \\ \boldsymbol{\omega}^{b}_{ib} \end{bmatrix} - \beta \frac{\nabla f}{\lVert \nabla f \rVert} \right) \qquad (4)$$

where $\Delta t$ denotes the sampling interval of the IMU, $\beta$ is the gain of the filter, $\boldsymbol{\omega}^{b}_{ib}$ is the gyroscope measurement (written as a pure quaternion), and (-) and (+) are used to designate whether a term has been computed before or after the update, respectively. The derivation of Equation (4) can be found in [62]. Since the biobot is restricted to the plane, we do not need to worry about singular points in Euler Angle sequences. As such, we extract the estimated heading of the biobot from the Madgwick Filter, $\hat{\psi}_{\mathrm{AHRS}}$, by converting the quaternion orientation output to an extrinsic ZYX Euler Angle Sequence and storing the rotation around the +Z axis. The details on converting between quaternions and Euler Angles can be found in [68], Chapter 7.
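A minimal NumPy sketch of the magnetometer-free update in Equation (4), assuming a [w, x, y, z] quaternion layout, Hamilton products, gravity reference d = [0, 0, 0, 1], and the fixed planar value of the body-frame gravity direction in place of a measured one. The objective and Jacobian follow the gravity-only form in [62]. The function names and the default gain β = 0.1 are illustrative assumptions, not the tuning used in this work.

```python
import numpy as np


def quat_mult(p, q):
    """Hamilton product p ∘ q for quaternions stored as [w, x, y, z]."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def madgwick_step(q, gyro, dt, beta=0.1, s_b=(0.0, 0.0, 1.0)):
    """One gradient-descent update of Eq. (4) without the magnetometer term.

    q:    orientation [w, x, y, z] of the body w.r.t. the local tangent frame.
    gyro: angular rate (rad/s) in the body frame.
    s_b:  gravity direction in the body frame; fixed to +z under the planar assumption.
    """
    q1, q2, q3, q4 = q
    sx, sy, sz = s_b
    # f = q* ∘ d ∘ q - s with d = [0, 0, 0, 1]: reference gravity rotated into the body frame, minus s_b.
    f = np.array([
        2.0 * (q2 * q4 - q1 * q3) - sx,
        2.0 * (q1 * q2 + q3 * q4) - sy,
        2.0 * (0.5 - q2**2 - q3**2) - sz,
    ])
    # Jacobian of f w.r.t. [q1, q2, q3, q4]; J^T f is the gradient of 0.5 * ||f||^2.
    J = np.array([
        [-2.0 * q3, 2.0 * q4, -2.0 * q1, 2.0 * q2],
        [2.0 * q2, 2.0 * q1, 2.0 * q4, 2.0 * q3],
        [0.0, -4.0 * q2, -4.0 * q3, 0.0],
    ])
    grad = J.T @ f
    # Gyroscope term: 0.5 * q ∘ [0, omega].
    q_dot = 0.5 * quat_mult(q, np.concatenate(([0.0], gyro)))
    norm = np.linalg.norm(grad)
    if norm > 1e-12:  # the gradient vanishes when the body is already level
        q_dot = q_dot - beta * grad / norm
    q_new = np.asarray(q, dtype=float) + dt * q_dot
    return q_new / np.linalg.norm(q_new)  # keep a unit quaternion


def yaw_from_quat(q):
    """Rotation about +Z from the ZYX Euler decomposition (the heading)."""
    w, x, y, z = q
    return np.arctan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y**2 + z**2))
```

Feeding a constant yaw rate of 0.5 rad/s for one second from the identity quaternion yields a heading of about 0.5 rad, and a tilted starting orientation is pulled back toward level by the gradient term. Nothing in this update corrects a heading error, however, which is the source of the drift discussed next.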

As mentioned previously, the Madgwick filter has a third component to it that involves magnetic heading. Specifically, that term’s purpose is to create a unique orientation fix by using the direction of the Magnetic North Pole as an orthogonal direction to the direction of gravity. Since we cannot determine Magnetic North due to the magnetic interference that is likely present in our application, we can only restrict the orientation to a plane that is orthogonal to the estimated direction of gravity, $\hat{\mathbf{s}}^{b}$; the rotation about the gravity axis (i.e., the heading) remains unconstrained. As such, the orientation generated by our AHRS will drift over time. This drift is caused by the gyroscope error and grows linearly in time, as shown in Figure 5 and discussed in Section 4.4.1.

4.2. Feature Extraction

Inertial Measurement Units produce specific force and angular rate readings, $\mathbf{f}^{b}_{ib}$ and $\boldsymbol{\omega}^{b}_{ib}$, at a specified sampling rate. Authors commonly use these inertial signals in their direct form (i.e., specific force/angular rate) or integrated form (e.g., velocity/orientation) when adding machine learning to an INS. Our speed estimation (Section 4.3) and heading correction (Section 4.4) models use time-domain features extracted from windowed inertial signals. This section describes the process of generating the inputs to our models from the calibrated inertial signals themselves.

Our model is trained using the dataset, $\mathcal{D} = \{\mathbf{z}(t_k)\}_{k=1}^{n}$, where n denotes the number of data points in the dataset. The kth feature vector is denoted as $\mathbf{z}(t_k)$, where $t_k$ denotes the timestamp associated with the kth data point. Henceforth, “IMU sample” will refer to the IMU readings themselves, and “data point” will refer to the elements of $\mathcal{D}$, unless explicitly stated otherwise.

Each data point is computed from a window of inertial data. We use a one-second sliding window with 50% overlap. This particular configuration was chosen based on empirical evidence that was shown in [61]. The goal of that paper was to recognize when biobots were exhibiting various motion-based activities using inertial signals obtained from an IMU mounted on their thorax. Speed regression and heading correction are also motion-based, hence we chose this particular configuration for the sliding window.

Each data point is timestamped using the timestamp of the first video frame in the window. The ground truth speed and heading that are associated with each data point are obtained via an algorithm that corrects the ground truth video frames so that the ground truth speeds and headings integrate to match the ground truth positions. This corrective algorithm is detailed in Section 4.6.
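The windowing step can be sketched as follows, assuming a hypothetical 100 Hz IMU rate (the rate is not stated in this section) and using the timestamp of each window's first IMU sample as a stand-in for the first video frame, which presumes that the IMU and video clocks are synchronized. The function name is illustrative.

```python
import numpy as np


def sliding_windows(signal, timestamps, fs, window_s=1.0, overlap=0.5):
    """Split an (N, C) IMU recording into overlapping windows.

    Each window is window_s seconds long and consecutive windows share a
    fraction `overlap` of their samples. Each window is labelled with the
    timestamp of its first sample.
    """
    win = int(round(window_s * fs))            # samples per window
    hop = int(round(win * (1.0 - overlap)))    # samples between window starts
    starts = np.arange(0, signal.shape[0] - win + 1, hop)
    windows = np.stack([signal[s:s + win] for s in starts])
    return windows, timestamps[starts]
```

With a one-second window and 50% overlap, a new data point is produced every half second, and each IMU sample contributes to (at most) two data points.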

Each feature vector consists of 60 time-domain features shown in Table 1 that are extracted from the windowed IMU data. These features are commonly used for activity recognition using wearable sensors and were shown in [61] to also be useful for classifying motion-based activities for biobots. We also normalize the features to have zero mean and unit variance—this is commonly done to prevent features from having undue influence due to their relative magnitude to other features. By extracting features from the windowed IMU data, we reduce the dimensionality of our model input to 60, irrespective of window size. Furthermore, the extracted features increase our models’ robustness to noise and reduce their susceptibility to spurious IMU readings (e.g., outliers and/or missing data).
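The sketch below shows the general shape of this step: per-channel time-domain statistics computed over each window, followed by zero-mean, unit-variance scaling. Table 1's actual feature list is not reproduced here; the five statistics used (mean, standard deviation, minimum, maximum, RMS) are a hypothetical subset, giving 30 features for six IMU channels rather than the 60 used in this work. Fitting the scaling statistics on training data only and reusing them at prediction time is assumed good practice, not a detail taken from the text.

```python
import numpy as np


def window_features(window):
    """Hypothetical time-domain features for one (W, C) window of IMU data.

    Computes five statistics per channel (mean, standard deviation, minimum,
    maximum, root mean square), concatenated grouped by statistic: 5 * C values.
    """
    return np.concatenate([
        window.mean(axis=0),
        window.std(axis=0),
        window.min(axis=0),
        window.max(axis=0),
        np.sqrt(np.mean(window**2, axis=0)),
    ])


def fit_standardizer(X_train):
    """Per-feature mean and standard deviation, estimated on training data only."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)
    sigma = np.where(sigma > 0, sigma, 1.0)  # leave constant features unscaled
    return mu, sigma


def standardize(X, mu, sigma):
    """Zero-mean, unit-variance scaling using previously fitted statistics."""
    return (X - mu) / sigma
```

Whatever the window length, each window collapses to a fixed-length vector, which is what lets the downstream regressors use a constant input dimension.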

Table 1.

Feature vector.

4.3. Speed Estimation Model

The goal of our speed estimation model is to estimate the speed of the biobot, $\hat{s}$, using feature vectors created from windowed inertial data. There are two components to the speed estimation model: a classification model that can detect when the biobot is stationary, denoted as $\mathcal{C}$, and a regression model that can estimate the speed of the biobot when it is not stationary, denoted as $\mathcal{R}$. Explicitly, the structure of the speed estimation model is given by this equation:

$$\hat{s}(t_k) = \begin{cases} 0, & \text{if } \mathcal{C}\big(\mathbf{z}(t_k)\big) \text{ classifies the biobot as stationary} \\ \mathcal{R}\big(\mathbf{z}(t_k)\big), & \text{otherwise} \end{cases} \qquad (5)$$

where $t_k$ denotes the timestamp of the kth feature vector, $\mathbf{z}(t_k)$, as discussed in Section 4.2.
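The gating structure of Equation (5) can be sketched as below. The classifier and regressor are passed in as plain callables so that the example stays independent of any particular learning library; the function name and the toy models in the usage line are hypothetical.

```python
import numpy as np


def estimate_speed(Z, is_stationary, regress_speed):
    """Two-stage speed estimate with the structure of Eq. (5).

    Z: (K, F) standardized feature vectors, one per window.
    is_stationary: callable mapping (M, F) features -> (M,) booleans (classifier).
    regress_speed: callable mapping (M, F) features -> (M,) speeds (regressor).
    """
    Z = np.asarray(Z, dtype=float)
    speed = np.zeros(Z.shape[0])  # stationary windows keep a speed of exactly 0
    moving = ~np.asarray(is_stationary(Z), dtype=bool)
    if moving.any():
        # The regressor is only evaluated on windows classified as moving.
        speed[moving] = regress_speed(Z[moving])
    return speed


# Toy usage: "stationary" when the first feature is negative, speed = |second feature|.
demo = estimate_speed(np.array([[-1.0, 5.0], [1.0, 2.0]]),
                      lambda Z: Z[:, 0] < 0, lambda Z: np.abs(Z[:, 1]))
```

Gating with a classifier lets the regressor concentrate on the moving regime and guarantees an exact zero speed (and hence no spurious displacement) whenever the biobot is judged to be at rest.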

4.3.1. Speed Regression

Biobots (G. portentosa) exhibit a tripod gait, and preliminary analysis [69] has shown that, as a result of the wobbling motion induced by the tripod gait, it is possible to directly estimate a biobot’s speed from its inertial signals, as opposed to integrating the signals over time. Using these findings, we designed $\mathcal{R}$ to map feature vectors directly to speed, i.e., $\mathcal{R} : \mathbb{R}^{F} \to \mathbb{R}$, where F denotes the number of features; $\mathcal{R}$ is only used when the biobot is moving, as described in Equation (5).

Ordinarily, speed is estimated by integrating the accelerations that are extracted from the IMU’s specific force readings; however, this is not feasible for low-cost IMUs because sensor noise renders these values unusable after a brief period of time. The error characteristics of our IMU (see Table 5) place it in this category. Furthermore, this issue is exacerbated by the lack of regularly available measurements (e.g., zero-velocity updates) that can be used to curtail error growth, which limits our ability to apply traditional INS frameworks such as Kalman filters. Fortunately, by using $g_s$, the biobot’s speed can be estimated directly from its inertial signals, which eliminates the linear error growth over time that occurs when the speed is obtained by integrating the acceleration. We realized $g_s$ using a random forest [70] of regression decision trees [71,72]. Specifically, we used the Classification and Regression Tree (CART) proposed in [73]. Random forests are a type of ensemble learner [74,75] that use a collection of decision trees as base learners. Random forests are widely used for their interpretability, strong generalization, and computational efficiency. We use 100 trees in our model; this number was chosen by analyzing the error of our model as the number of trees was varied (see Figure 4). Our decision trees are grown until each leaf node contains at most five data points. The average speed of each leaf node is given by:

$$\beta = \frac{1}{n}\sum_{i=1}^{n} s_i, \tag{6}$$

where $s_i$ denotes the speed of the $i$th data point in the node, $n$ denotes the number of data points in the node, and $\beta$ is the coefficient used to fit a piecewise-constant approximation of the biobot’s speed. Each decision tree is trained using approximately 27% of the training data, obtained via bagging, and 20 of the 60 possible features, chosen randomly. We use the mean squared residual, denoted as $\mathrm{MSE}$, as the splitting criterion of our decision trees:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(s_i - \beta\right)^2. \tag{7}$$

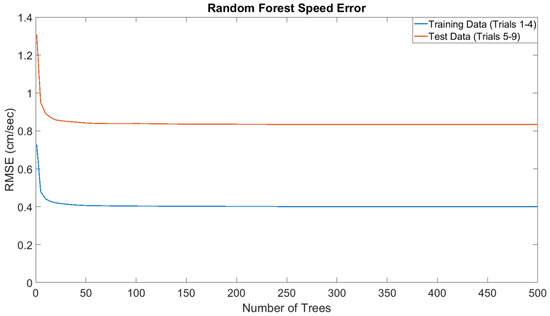

Figure 4.

Analysis of the Number of Trees: The root mean square error (RMSE) of the biobot’s estimated speed is shown as a function of the number of trees in the random forest speed regression model, $g_s$. We use 100 trees for our speed regression model because the RMSE does not decrease when additional trees are added. Four trials were used to train the random forest regression model, and the errors are reported for both the training data and the test data. Each trial is approximately 30 min long.

More information on splitting criteria for decision trees can be found in [74], Chapter 5. The speed of the biobot is determined by traversing each regression tree in the random forest down to a leaf node, obtaining that leaf node’s coefficient, $\beta$, and averaging the results of the decision trees as follows:

$$g_s(\mathbf{x}_k) = \frac{1}{m}\sum_{j=1}^{m}\beta_j, \tag{8}$$

where $\beta_j$ denotes the average speed computed by the $j$th decision tree, and $m$ denotes the number of trees in the random forest (100 in our case). The hyperparameters for $g_s$ are shown in Table 2.

Table 2.

Random forest model hyperparameters.
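The following pure-Python sketch illustrates Equations (6)–(8) under simplifying assumptions: each regression tree greedily chooses the threshold that minimizes the size-weighted mean squared residual of its two children, stops splitting once a node holds at most five points, and stores the leaf mean β as its prediction; the forest averages the per-tree outputs. The per-tree random feature subset follows the description above, the bagging fraction is a parameter, and all names are hypothetical; it is not the MATLAB implementation used in this work.

```python
import random
from statistics import fmean
from typing import List, Sequence, Union

Tree = Union[float, tuple]  # a leaf value, or (feature, threshold, left, right)


def leaf_value(y: Sequence[float]) -> float:
    """Eq. (6): the leaf coefficient beta is the mean target in the node."""
    return fmean(y)


def mse(y: Sequence[float]) -> float:
    """Eq. (7): mean squared residual about the leaf mean."""
    beta = leaf_value(y)
    return fmean((v - beta) ** 2 for v in y)


def grow(X, y, feats, max_leaf: int = 5) -> Tree:
    """Grow one CART regression tree until nodes hold at most `max_leaf` points."""
    if len(y) <= max_leaf or len(set(y)) == 1:
        return leaf_value(y)
    best = None
    for f in feats:
        for thr in sorted({row[f] for row in X})[:-1]:  # both children stay non-empty
            left = [i for i, row in enumerate(X) if row[f] <= thr]
            right = [i for i, row in enumerate(X) if row[f] > thr]
            cost = (len(left) * mse([y[i] for i in left])
                    + len(right) * mse([y[i] for i in right]))
            if best is None or cost < best[0]:
                best = (cost, f, thr, left, right)
    if best is None:  # all candidate features are constant in this node
        return leaf_value(y)
    _, f, thr, left, right = best
    return (f, thr,
            grow([X[i] for i in left], [y[i] for i in left], feats, max_leaf),
            grow([X[i] for i in right], [y[i] for i in right], feats, max_leaf))


def predict_tree(tree: Tree, x: Sequence[float]) -> float:
    """Descend to a leaf and return its coefficient."""
    while isinstance(tree, tuple):
        f, thr, left, right = tree
        tree = left if x[f] <= thr else right
    return tree


def fit_forest(X, y, n_trees: int = 100, frac: float = 0.27,
               n_feats: int = 20, seed: int = 0) -> List[Tree]:
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        k = max(1, round(frac * len(y)))
        idx = [rng.randrange(len(y)) for _ in range(k)]               # bootstrap sample (bagging)
        feats = rng.sample(range(len(X[0])), min(n_feats, len(X[0])))  # per-tree feature subset
        forest.append(grow([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict_forest(forest: List[Tree], x: Sequence[float]) -> float:
    """Eq. (8): average the leaf coefficients reached in every tree."""
    return fmean(predict_tree(t, x) for t in forest)
```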

4.3.2. Stationarity Detection

Zero-velocity detection is commonly used in INS applications. It was shown in [61] that the zero-velocity (i.e., zero-speed) state could be accurately tracked in biobots using a random forest model. We used a similar model to construct a stationarity detector, $g_z: \mathbb{R}^F \to \{0, 1\}$, where $F$ denotes the number of features. The goal of $g_z$ is to assign one of two labels to each feature vector, denoting whether the biobot is moving ($g_z = 0$) or stationary ($g_z = 1$). These labels are used in Equation (5) to estimate the biobot’s speed. $g_z$ is very similar to the speed regression model, $g_s$, and its hyperparameters are shown in Table 2. The primary differences between $g_z$ and $g_s$ stem from the fact that $g_z$ is a binary classifier. As such, classification decision trees are used, and the splitting criterion of the decision trees is different. Specifically, we use the Gini index, denoted as $G$:

$$G = 1 - \left(\frac{1}{n}\sum_{i=1}^{n}\mathbb{1}(s_i = 0)\right)^2 - \left(\frac{1}{n}\sum_{i=1}^{n}\mathbb{1}(s_i \neq 0)\right)^2, \tag{9}$$

where $\mathbb{1}(\cdot)$ denotes the indicator function, $s_i$ denotes the speed of the $i$th data point in the node, and $n$ denotes the number of data points in the node. The stationarity of the biobot is predicted by taking the majority vote of the decision trees in $g_z$.

Since each feature vector, $\mathbf{x}_k$, is associated with a window of data, multiple ground truth video frames can fall within the window. As such, the number of zero-speed video frames needed to flag $\mathbf{x}_k$ as stationary is a parameter. We flagged data points as stationary when 100% of their video frames were stationary.
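The classification side can be sketched in the same spirit. The snippet below, with hypothetical function names, computes the Gini index of Equation (9), applies the window-labeling rule described above (a data point is stationary only if the required fraction of its video frames, 100% in our case, has zero speed), takes a majority vote over tree outputs, and gates the speed regressor as in Equation (5). Treating a tied vote as "moving" is an assumption of the sketch.

```python
from typing import Callable, Sequence


def gini(speeds: Sequence[float]) -> float:
    """Eq. (9): Gini index of a node with classes 'zero speed' and 'nonzero speed'."""
    n = len(speeds)
    p_stationary = sum(1 for s in speeds if s == 0) / n
    return 1.0 - p_stationary ** 2 - (1.0 - p_stationary) ** 2


def label_window(frame_speeds: Sequence[float], required_fraction: float = 1.0) -> int:
    """Return 1 (stationary) if at least `required_fraction` of the frames have zero speed."""
    zero = sum(1 for s in frame_speeds if s == 0)
    return int(zero >= required_fraction * len(frame_speeds))


def majority_vote(tree_votes: Sequence[int]) -> int:
    """Forest output: 1 if more than half of the trees vote stationary (ties count as moving)."""
    return int(2 * sum(tree_votes) > len(tree_votes))


def speed_estimate(x, g_z: Callable, g_s: Callable) -> float:
    """Eq. (5): zero speed when the detector fires, otherwise the regressor's output."""
    return 0.0 if g_z(x) == 1 else g_s(x)
```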

4.4. Heading Correction Model

Our AHRS generates an estimate of heading for each IMU sample, denoted by $\psi^{\mathrm{AHRS}}$; however, these estimates have an error that increases linearly in time, as discussed in Section 4.1. Our heading correction model resolves this issue and has three goals: first, it detrends the error in $\psi^{\mathrm{AHRS}}$; secondly, it averages the detrended heading to produce a heading for each data point, $\bar{\psi}(t_k)$; finally, it corrects $\bar{\psi}(t_k)$ to generate a more accurate estimate of the biobot’s heading. Succinctly, this process can be written as:

$$\hat{\psi}(t_k) = \bar{\psi}(t_k) + \Delta\hat{\psi}(t_k), \qquad \Delta\hat{\psi}(t_k) = g_\psi(\mathbf{x}_k), \tag{10}$$

where $\hat{\psi}(t_k)$ denotes the final heading estimate that is output by the heading correction model, and $g_\psi: \mathbb{R}^F \to \mathbb{R}$, where $F$ denotes the number of features. $g_\psi$ is a regression model that corrects $\bar{\psi}(t_k)$, and $\Delta\hat{\psi}(t_k)$ is the estimated heading correction. Equation (10) is solved by splitting the heading correction model into two submodules. The first submodule detrends the AHRS output and averages it to generate $\bar{\psi}(t_k)$; the second submodule is $g_\psi$, which applies the corrective term needed to generate $\hat{\psi}(t_k)$. The hyperparameters for $g_\psi$ can be found in Table 2.

4.4.1. Detrending the Heading Error

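The actual and estimated headings of the biobot are known at the entry and exit times, $t_0$ and $t_f$, respectively. Using this information, we create a linear model of the heading error, $\ell(t)$:

$$\ell(t) = e_\psi(t_0) + \frac{e_\psi(t_f) - e_\psi(t_0)}{t_f - t_0}\,(t - t_0), \tag{11}$$

where the heading error is defined as $e_\psi(t) = \psi^{\mathrm{AHRS}}(t) - \psi(t)$. $\ell(t)$ is then used to detrend the heading error in $\psi^{\mathrm{AHRS}}$, yielding the detrended heading $\tilde{\psi}(t) = \psi^{\mathrm{AHRS}}(t) - \ell(t)$.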

The heading associated with the $k$th feature vector, $\bar{\psi}(t_k)$, is computed by averaging the detrended AHRS output for that window:

$$\bar{\psi}(t_k) = \frac{1}{N_k}\sum_{i=1}^{N_k}\tilde{\psi}_i, \tag{12}$$

where $N_k$ denotes the number of IMU samples in the $k$th data point’s window, and $\tilde{\psi}_i$ denotes the detrended AHRS output for the $i$th IMU sample in the window. Figure 5 shows the effect of detrending the heading error on a biobot dataset. In this figure, detrending replaces the linear error growth with an error that remains roughly constant and fluctuates due to sensor noise.

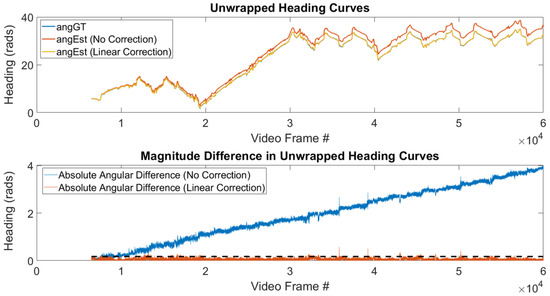

Figure 5.

Detrending AHRS Output: The unwrapped heading of the biobot is shown for a 30-min trial. The top graph shows the heading in radians and the bottom graph shows the original error between the estimated and ground truth headings as well as the error after detrending the AHRS output. The dashed line represents a heading error of . Notice how the error of the detrended AHRS does not grow over time.
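A minimal sketch of the first submodule of the heading correction model follows, assuming unwrapped headings in radians (as plotted in Figure 5) and the error convention e = ψ_AHRS − ψ. It removes the linear trend of Equation (11), averages each window as in Equation (12), and adds a correction as in Equation (10); in practice the correction would come from a trained regressor such as g_ψ. The function names are hypothetical.

```python
from statistics import fmean
from typing import List, Sequence


def detrend_heading(t: Sequence[float], psi_ahrs: Sequence[float],
                    psi_entry: float, psi_exit: float) -> List[float]:
    """Subtract the linear heading-error model of Eq. (11) from unwrapped AHRS headings.

    t[0] and t[-1] are the entry and exit times; psi_entry and psi_exit are the
    true headings at those times.
    """
    e0 = psi_ahrs[0] - psi_entry    # heading error at entry
    ef = psi_ahrs[-1] - psi_exit    # heading error at exit
    slope = (ef - e0) / (t[-1] - t[0])
    return [p - (e0 + slope * (ti - t[0])) for ti, p in zip(t, psi_ahrs)]


def window_heading(detrended: Sequence[float], start: int, stop: int) -> float:
    """Eq. (12): mean detrended heading over the IMU samples [start, stop) of one window."""
    return fmean(detrended[start:stop])


def corrected_heading(psi_bar: float, delta_hat: float) -> float:
    """Eq. (10): add the regression model's correction to the window heading."""
    return psi_bar + delta_hat
```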

4.4.2. Learning Heading Corrections

We designed $g_\psi$ to be a random forest of CART regression trees, similar to the speed regression model, $g_s$. The goal of $g_\psi$ is to correct the data point’s heading estimate using the feature vector $\mathbf{x}_k$. This heading correction, denoted as $\Delta\psi$, is needed to remove the error that is introduced as a consequence of averaging the original AHRS estimates. $g_\psi$ approximates $\Delta\psi$ using a piecewise-constant function, where the coefficient associated with each leaf node, $\gamma$, is the average of that leaf node’s data points, $\gamma = \frac{1}{n}\sum_{i=1}^{n}\Delta\psi_i$. The mean squared residual is used as the splitting criterion:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(\Delta\psi_i - \gamma\right)^2, \tag{13}$$

where $i$ denotes the $i$th data point in the node and $n$ denotes the number of data points in the node. $\Delta\hat{\psi}(t_k)$ is predicted by averaging the predictions of the decision trees in $g_\psi$:

$$\Delta\hat{\psi}(t_k) = g_\psi(\mathbf{x}_k) = \frac{1}{m}\sum_{j=1}^{m}\gamma_j, \tag{14}$$

where $\gamma_j$ denotes the average heading correction computed by the $j$th decision tree, and $m$ denotes the number of trees in the random forest (100 in our case). The estimated heading of the biobot at time $t_k$, $\hat{\psi}(t_k)$, is computed using Equation (10).

4.5. Trajectory Estimation

Thus far, we have discussed how to obtain an estimate of the biobot’s heading, $\hat{\psi}(t_k)$, and speed, $\hat{s}(t_k)$, for each feature vector, $\mathbf{x}_k$. In this section, we discuss how to use these estimates to obtain an estimate of the biobot’s position, $\hat{\mathbf{p}}(t)$. Furthermore, we explain how to estimate the biobot’s state trajectory, $\hat{\mathbf{X}}(t)$, which tracks the biobot’s state over the time interval $[t_0, t_f]$, where $t_0$ and $t_f$ denote the biobot’s entry and exit times, respectively. Before we begin, we need to introduce the terminology needed to describe $\hat{\mathbf{X}}(t)$.

We define a trajectory segment to be the state trajectory over a time interval $[t_{0,i}, t_{f,i}]$. Until now, we have described a biobot as having a single entry point and a single exit point; however, this need not be the case. It is possible for a biobot to have multiple entry and exit points over the course of its trajectory; for example, the biobot could repeatedly enter and leave a rubble stack. The $i$th entry/exit point is used to define the time bounds of the $i$th trajectory segment, and the trajectory segments are concatenated in a piecewise fashion to obtain the estimate of the biobot’s state trajectory:

$$\hat{\mathbf{X}}(t) = \hat{\mathbf{X}}_i(t), \quad t \in [t_{0,i}, t_{f,i}], \quad i = 1, \dots, n, \tag{15}$$

where $n$ denotes the number of entry/exit points, and the subscript of $\hat{\mathbf{X}}_i$ emphasizes that the estimated state trajectory is only valid for the $i$th trajectory segment, $[t_{0,i}, t_{f,i}]$. Additionally, we require that the biobot’s state at the entry point of the $i$th trajectory segment be identical to its state at the exit point of the previous trajectory segment. This means that $t_{0,i} = t_{f,i-1}$ and $\hat{\mathbf{X}}_i(t_{0,i}) = \hat{\mathbf{X}}_{i-1}(t_{f,i-1})$. This requirement ensures that $\hat{\mathbf{X}}(t)$ is a continuous approximation of $\mathbf{X}(t)$.

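The estimated state, $\hat{\mathbf{X}}_i(t)$, requires the evaluation of $\hat{\mathbf{p}}_i(t)$, $\hat{s}_i(t)$, and $\hat{\psi}_i(t)$. We can compute $\hat{s}_i(t)$ and $\hat{\psi}_i(t)$ by linearly interpolating the speed (Section 4.3) and heading (Section 4.4) estimates obtained from data points that fall within the time bounds of $\hat{\mathbf{X}}_i$. In order to satisfy the constraint that $\hat{\mathbf{p}}_i(t_{f,i}) = \mathbf{p}(t_{f,i})$, the estimated and actual velocity trajectories need to have the same area under their curves: $\int_{t_{0,i}}^{t_{f,i}}\hat{\mathbf{v}}_i(t)\,dt = \int_{t_{0,i}}^{t_{f,i}}\mathbf{v}(t)\,dt$. This is unlikely to happen, as it would require the expected error of $\hat{\mathbf{v}}_i$ to be zero; in other words, both $\hat{s}_i$ and $\hat{\psi}_i$, the signals that are used to construct $\hat{\mathbf{v}}_i$, would need to have expected errors of zero. To resolve this issue, we perturb the speed and heading trajectories with piecewise-cubic splines (described in Section 4.5.1) so that $\hat{\mathbf{p}}_i(t_{f,i}) = \mathbf{p}(t_{f,i})$. The perturbed speed and heading trajectories are denoted as $\tilde{s}_i(t)$ and $\tilde{\psi}_i(t)$, respectively, and are generated as follows:

$$\tilde{s}_i(t) = \hat{s}_i(t) + S_i^{s}(t), \qquad \tilde{\psi}_i(t) = \hat{\psi}_i(t) + S_i^{\psi}(t), \tag{16}$$

where $S_i^{s}$ and $S_i^{\psi}$ denote the speed and heading perturbation splines, respectively. The perturbed speed and heading trajectories, illustrated in Figure 6, are then used to compute $\hat{\mathbf{X}}_i$:

$$\hat{\mathbf{v}}_i(t) = \tilde{s}_i(t)\begin{bmatrix}\cos\tilde{\psi}_i(t) \\ \sin\tilde{\psi}_i(t)\end{bmatrix}, \qquad \hat{\mathbf{p}}_i(t) = \mathbf{p}(t_{0,i}) + \int_{t_{0,i}}^{t}\hat{\mathbf{v}}_i(t')\,dt', \tag{17}$$

where $\hat{\mathbf{p}}_i$ and $\hat{\mathbf{v}}_i$ denote the estimated position and velocity trajectories of $\hat{\mathbf{X}}_i$, respectively.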

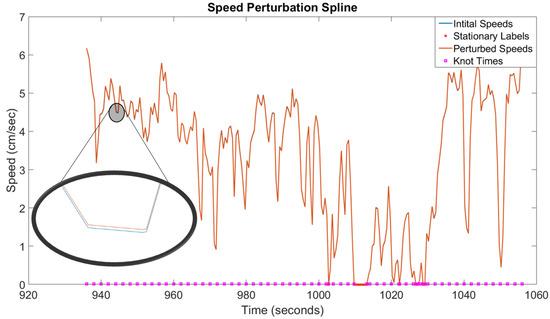

Figure 6.

Perturbation Splines: A two-minute section of a biobot’s estimated speed trajectory is shown. The interpolated speed trajectory, $\hat{s}_i(t)$, is shown in blue. The perturbed speed trajectory, $\tilde{s}_i(t)$, is shown in orange. $\hat{s}_i$ and $\tilde{s}_i$ are magnified in the inset picture to highlight the fact that the trajectories are different. The knot locations of the speed perturbation spline are marked with magenta squares. Additionally, data points that have zero speed are marked with red x’s. Notice how $\tilde{s}_i$ is zero during the stationary sections of $\hat{s}_i$; this behavior is achieved by using the algorithm discussed in Section 4.5.3.

To summarize, the biobot’s estimated trajectory, $\hat{\mathbf{X}}(t)$, consists of a set of trajectory segments, $\hat{\mathbf{X}}_i(t)$, where the $i$th trajectory segment is constructed by using splines to perturb its speed and heading trajectories so that $\hat{\mathbf{p}}_i(t_{f,i}) = \mathbf{p}(t_{f,i})$.
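The following Python sketch integrates Equations (16) and (17) for a single trajectory segment: it linearly interpolates the per-window speed and heading estimates, adds optional perturbation functions, and integrates the resulting velocity with the trapezoidal rule on a 30 Hz grid. The heading convention (angle measured from the x-axis of the local frame), the trapezoidal rule, and the function names are assumptions of this sketch.

```python
import math
from bisect import bisect_right
from typing import Callable, List, Sequence, Tuple


def interp(t: float, ts: Sequence[float], vs: Sequence[float]) -> float:
    """Linear interpolation of samples (ts, vs), held constant outside [ts[0], ts[-1]]."""
    if t <= ts[0]:
        return vs[0]
    if t >= ts[-1]:
        return vs[-1]
    j = bisect_right(ts, t)
    w = (t - ts[j - 1]) / (ts[j] - ts[j - 1])
    return vs[j - 1] + w * (vs[j] - vs[j - 1])


def integrate_segment(tk: Sequence[float], s_hat: Sequence[float], psi_hat: Sequence[float],
                      p0: Tuple[float, float], t0: float, tf: float,
                      dS_s: Callable[[float], float] = lambda t: 0.0,
                      dS_psi: Callable[[float], float] = lambda t: 0.0,
                      rate: float = 30.0) -> List[Tuple[float, float, float]]:
    """Return [(t, x, y), ...] from Eqs. (16)-(17) for one trajectory segment."""
    n = max(1, round((tf - t0) * rate))
    dt = (tf - t0) / n

    def velocity(t: float) -> Tuple[float, float]:
        s = interp(t, tk, s_hat) + dS_s(t)          # perturbed speed
        psi = interp(t, tk, psi_hat) + dS_psi(t)    # perturbed heading
        return s * math.cos(psi), s * math.sin(psi)

    x, y = p0
    path = [(t0, x, y)]
    vx0, vy0 = velocity(t0)
    for i in range(1, n + 1):
        t = t0 + i * dt
        vx1, vy1 = velocity(t)
        x += 0.5 * dt * (vx0 + vx1)                 # trapezoidal rule
        y += 0.5 * dt * (vy0 + vy1)
        path.append((t, x, y))
        vx0, vy0 = vx1, vy1
    return path
```

For example, a constant speed of 2 cm/s at heading 0 over 10 s moves the biobot 20 cm along the x-axis, and adding a constant speed perturbation of 1 cm/s extends that to 30 cm.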

4.5.1. Perturbation Spline Construction

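$S^{s}$ and $S^{\psi}$ are the speed and heading perturbation splines that are needed to correct the speed and heading of a trajectory segment so that the boundary condition, $\hat{\mathbf{p}}(t_f) = \mathbf{p}(t_f)$, is satisfied; this process was described in Equation (16). The index $i$ is reused in this section to indicate the $i$th spline piece of a particular trajectory segment, whose entry and exit times we write simply as $t_0$ and $t_f$. The speed and heading perturbation splines of a trajectory segment are clamped piecewise-cubic splines whose pieces have the following form:

$$S_i(t) = a_i + b_i\,(t - \tau_i) + c_i\,(t - \tau_i)^2 + d_i\,(t - \tau_i)^3, \quad t \in [\tau_i, \tau_{i+1}], \tag{18}$$

where $\tau_i$ denotes the start time of the spline piece, $\tau_{i+1}$ denotes its end time, and $a_i$, $b_i$, $c_i$, and $d_i$ are the four coefficients of $S_i$. The spline pieces are concatenated to form the entire perturbation spline:

$$S(t) = S_i(t), \quad t \in [\tau_i, \tau_{i+1}], \quad i = 1, \dots, n, \tag{19}$$

where $n$ denotes the number of pieces in the spline, each $S_i$ takes the form described by Equation (18), $\tau_1 = t_0$, and $\tau_{n+1} = t_f$. The duration of each spline piece, $\Delta\tau = \tau_{i+1} - \tau_i$, is one of the four hyperparameters of the trajectory estimation algorithm, shown in Table 3.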

Table 3.

Trajectory estimation hyperparameters for varying trajectory segment lengths.

Each of the spline pieces has a set of four coefficients that can be modified to alter the shape of the spline. Since we are interested in using the splines to perturb the speed and heading trajectories, it makes sense to define the coefficients of Equation (18) in terms of the spline piece’s knot locations, as these locations control how much the speed and heading are perturbed at specific times. Each spline piece has two knot locations, obtained by evaluating the spline piece at its start and end times, and denoted as $y_i^0 = S_i(\tau_i)$ and $y_i^1 = S_i(\tau_{i+1})$. Additionally, to ensure that the spline pieces fit together, we also need to consider the first time derivative of $S_i$:

$$\dot{S}_i(t) = b_i + 2c_i\,(t - \tau_i) + 3d_i\,(t - \tau_i)^2.$$

The first time derivative evaluated at the knot locations is denoted as $\dot{y}_i^0 = \dot{S}_i(\tau_i)$ and $\dot{y}_i^1 = \dot{S}_i(\tau_{i+1})$. Evaluating $S_i$ and $\dot{S}_i$ at the knot locations generates the following four equations:

$$y_i^0 = a_i, \tag{20}$$
$$y_i^1 = a_i + b_i h_i + c_i h_i^2 + d_i h_i^3, \tag{21}$$
$$\dot{y}_i^0 = b_i, \tag{22}$$
$$\dot{y}_i^1 = b_i + 2c_i h_i + 3d_i h_i^2, \tag{23}$$

where $h_i = \tau_{i+1} - \tau_i$. Equations (20)–(23) can be solved to find the coefficients of each spline piece:

$$a_i = y_i^0, \quad b_i = \dot{y}_i^0, \quad c_i = \frac{3\,(y_i^1 - y_i^0) - (2\dot{y}_i^0 + \dot{y}_i^1)\,h_i}{h_i^2}, \quad d_i = \frac{2\,(y_i^0 - y_i^1) + (\dot{y}_i^0 + \dot{y}_i^1)\,h_i}{h_i^3}. \tag{24}$$

Each spline piece has its own set of $y$ and $\dot{y}$ parameters; however, two restrictions limit the values that these parameters can take. The first restriction is that the perturbation spline must be zero at a trajectory segment’s entry and exit points because those states are known and should remain unaltered. The implication of this is that $y_1^0 = y_n^1 = 0$, where $n$ denotes the final spline piece of $S$. The second restriction is that the perturbation spline and its first derivative must be continuous. This necessitates that $y_i^1 = y_{i+1}^0$ and $\dot{y}_i^1 = \dot{y}_{i+1}^0$ for $i = 1, \dots, n-1$. These two restrictions fix the number of degrees of freedom of each perturbation spline, which depends on $n$, the number of spline pieces in $S$. As such, each trajectory segment has twice that number of optimizable parameters, since each trajectory segment contains both a speed perturbation spline and a heading perturbation spline.
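Equation (24) is the standard cubic Hermite parameterization, and it can be checked numerically. The sketch below (hypothetical names) builds each piece from its knot values and slopes, evaluates Equation (18) and its derivative, and shows that sharing a knot’s value and slope between neighbouring pieces yields the continuity required by the second restriction.

```python
from typing import List, Sequence, Tuple

Coeffs = Tuple[float, float, float, float]


def hermite_coeffs(y0: float, y1: float, dy0: float, dy1: float, h: float) -> Coeffs:
    """Eq. (24): coefficients (a, b, c, d) of one piece from its knot values and slopes."""
    a = y0
    b = dy0
    c = (3.0 * (y1 - y0) - (2.0 * dy0 + dy1) * h) / h ** 2
    d = (2.0 * (y0 - y1) + (dy0 + dy1) * h) / h ** 3
    return a, b, c, d


def eval_piece(k: Coeffs, tau: float, t: float) -> float:
    """Eq. (18) for a piece starting at time tau."""
    a, b, c, d = k
    u = t - tau
    return a + b * u + c * u ** 2 + d * u ** 3


def eval_piece_deriv(k: Coeffs, tau: float, t: float) -> float:
    """First time derivative of Eq. (18)."""
    a, b, c, d = k
    u = t - tau
    return b + 2.0 * c * u + 3.0 * d * u ** 2


def build_spline(tau: Sequence[float], y: Sequence[float], dy: Sequence[float]) -> List[Coeffs]:
    """One coefficient set per piece. Neighbouring pieces share a knot's value and slope,
    which enforces y_i^1 = y_{i+1}^0 and dy_i^1 = dy_{i+1}^0 (C1 continuity)."""
    return [hermite_coeffs(y[i], y[i + 1], dy[i], dy[i + 1], tau[i + 1] - tau[i])
            for i in range(len(tau) - 1)]
```

Setting the first and last entries of `y` to zero imposes the first restriction, so the free parameters of a spline are its interior knot values and its knot slopes.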

4.5.2. Perturbation Spline Optimization

We denote the optimizable parameters of a trajectory segment’s perturbation splines as $\boldsymbol{\theta} = [\boldsymbol{\theta}_s^\top, \boldsymbol{\theta}_\psi^\top]^\top$, where $\boldsymbol{\theta}_s$ are the parameters associated with the speed perturbation spline and $\boldsymbol{\theta}_\psi$ are the parameters associated with the heading perturbation spline.

Recall that the goal of our navigation system is to solve the two-point boundary-value problem introduced in Equation (2). The cost functional itself, $\mathcal{J}$, measures the distance between the true and estimated state trajectories. The estimated speed and heading trajectories, $\hat{s}(t)$ and $\hat{\psi}(t)$, were constructed by linearly interpolating the estimates obtained via Equations (5) and (10). These estimates represent our best guess of the actual speed and heading trajectories since they are constructed from models trained to minimize the error in the speed and heading estimates, as described in Equations (7), (9), and (13). Additionally, we know that $\hat{\mathbf{X}}(t)$ can be constructed by combining $\hat{s}(t)$ and $\hat{\psi}(t)$ as described in Equations (16) and (17). This means that we already have the $\hat{\mathbf{X}}(t)$ that minimizes $\mathcal{J}$, sans the constraints. We also know the biobot’s state at the entry and exit times. This means that we can define $\hat{s}(t_0)$, $\hat{\psi}(t_0)$, $\hat{s}(t_f)$, and $\hat{\psi}(t_f)$ so that all constraints involving speed and heading are satisfied. Additionally, since the biobot’s state is known at the time of entry, we can define $\hat{\mathbf{p}}(t_0) = \mathbf{p}(t_0)$ so that the starting position constraint is satisfied. As a result of these manipulations, all of the constraints are satisfied except for the end position constraint, $\hat{\mathbf{p}}(t_f) = \mathbf{p}(t_f)$. This endpoint constraint is the reason why we require the speed and heading perturbation splines, and the rest of this section discusses how to optimize these perturbation splines so that the end position constraint is satisfied.

We define a surrogate cost functional for each trajectory segment, denoted as $J_i$, which keeps the perturbation of our estimates, $\hat{s}_i$ and $\hat{\psi}_i$, small by placing a weighted cost on the amount of speed and heading perturbation. Additionally, we incorporate the trajectory segment’s end position constraint into $J_i$ as a weighted penalty term, so that the end position constraint is satisfied approximately, with an accuracy governed by the penalty weight. The cost has the following form:

The first term is obtained by numerically integrating the integrand using a sampling rate of 30 Hz. $w_s$, $w_\psi$, and $w_p$ are weights that adjust the impact of the amount of speed perturbation, the amount of heading perturbation, and the end position constraint violation, respectively. These weights are hyperparameters of the trajectory estimation algorithm, and the values that we used can be found in Table 3.

$J_i$ is optimized using the fminunc function of the MATLAB Optimization Toolbox [76]. This function uses the Broyden–Fletcher–Goldfarb–Shanno (BFGS) quasi-Newton algorithm ([77], Chapter 6), where the line search ([77], Chapter 3) is performed via cubic interpolation. Note that none of the parameters in $\boldsymbol{\theta}$ are shared between trajectory segments. This means that each trajectory segment in $\hat{\mathbf{X}}$ can be optimized in parallel, which reduces the algorithm’s overall run time.
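To illustrate the optimization in Section 4.5.2, the sketch below tunes the perturbation splines of a single segment so that the integrated end position approaches the known exit position. Several parts are assumptions rather than the method used here: Equation (26) is not reproduced in this text, so the cost uses quadratic penalties, w_s∫S_s²dt + w_ψ∫S_ψ²dt + w_p‖p̂(t_f) − p(t_f)‖²; plain gradient descent with central-difference gradients and backtracking stands in for the BFGS solver of fminunc; the spline end slopes are left free; and all names and weight values are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def hermite(tau: Sequence[float], y: Sequence[float], dy: Sequence[float], t: float) -> float:
    """Evaluate a C1 piecewise-cubic Hermite spline (equivalent to Eqs. (18) and (24))."""
    i = 0
    while i < len(tau) - 2 and t > tau[i + 1]:
        i += 1
    h = tau[i + 1] - tau[i]
    u = (t - tau[i]) / h
    return ((2 * u**3 - 3 * u**2 + 1) * y[i] + (u**3 - 2 * u**2 + u) * h * dy[i]
            + (-2 * u**3 + 3 * u**2) * y[i + 1] + (u**3 - u**2) * h * dy[i + 1])


def unpack(theta: Sequence[float], m: int):
    """Split theta into (values, slopes) for the speed and heading splines (m knots each).
    End values are pinned to zero; interior values and all slopes are free (2m - 2 each)."""
    k = 2 * m - 2

    def one(p):
        return [0.0, *p[:m - 2], 0.0], list(p[m - 2:])
    return one(theta[:k]), one(theta[k:])


def segment_cost(theta, tau, s_hat, psi_hat, p0, p_end,
                 w=(1.0, 1.0, 100.0), rate=30.0) -> Tuple[float, float]:
    """Return (assumed quadratic cost, end-position miss distance) for one segment."""
    w_s, w_psi, w_p = w
    (ys, dys), (yh, dyh) = unpack(theta, len(tau))
    n = max(1, round((tau[-1] - tau[0]) * rate))
    dt = (tau[-1] - tau[0]) / n
    x, y, effort = p0[0], p0[1], 0.0
    for i in range(n):                                   # midpoint rule on a `rate`-Hz grid
        t = tau[0] + (i + 0.5) * dt
        ds, dpsi = hermite(tau, ys, dys, t), hermite(tau, yh, dyh, t)
        s, psi = s_hat(t) + ds, psi_hat(t) + dpsi        # perturbed speed and heading
        x += dt * s * math.cos(psi)
        y += dt * s * math.sin(psi)
        effort += dt * (w_s * ds**2 + w_psi * dpsi**2)
    miss2 = (x - p_end[0])**2 + (y - p_end[1])**2
    return effort + w_p * miss2, math.sqrt(miss2)


def gradient_descent(f: Callable[[List[float]], float], theta: List[float],
                     iters: int = 100, h: float = 1e-6) -> List[float]:
    """Stand-in for BFGS: central-difference gradients plus a backtracking step."""
    fx = f(theta)
    for _ in range(iters):
        g = []
        for j in range(len(theta)):
            tp, tm = list(theta), list(theta)
            tp[j] += h
            tm[j] -= h
            g.append((f(tp) - f(tm)) / (2 * h))
        step = 1.0
        while step > 1e-12:
            cand = [a - step * b for a, b in zip(theta, g)]
            fc = f(cand)
            if fc < fx:
                theta, fx = cand, fc
                break
            step *= 0.5
        else:
            break                                        # no descent step found
    return theta
```

Because the surrogate cost of one segment shares no parameters with any other segment, calls such as this could run in parallel across segments, as noted above.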

4.5.3. Handling Stationary Points on the Perturbation Spline

The perturbation splines should not alter the biobot’s speed and heading trajectories when the biobot is stationary. To guarantee this, the perturbation splines must be zero when the biobot is stationary. This can be accomplished by first creating the perturbation spline that is described in Equations (18)–(25). The resulting perturbation spline, $S$, is then modified using the following steps:

1. Stationary points are defined to be points where $\hat{s}(t_k) = 0$. Stationary intervals occur whenever there are two or more consecutive stationary points. Find all stationary points and stationary intervals in $S$.

2. Each stationary interval becomes its own spline piece with coefficients equal to zero: $a_i = b_i = c_i = d_i = 0$. The new spline piece starts and ends at the first and last points of the stationary interval, respectively.

3. Stationary points become the ends of spline pieces, and the knot location and knot derivative at each stationary point are zero. This is accomplished as follows:

   (a) Any spline piece that falls entirely within a stationary interval is removed from $S$.

   (b) Any spline piece that ends at a stationary point has $y_i^1 = \dot{y}_i^1 = 0$; its remaining coefficients follow from Equation (24).

   (c) Any spline piece that begins at a stationary point has $y_i^0 = \dot{y}_i^0 = 0$, i.e., $a_i = b_i = 0$.

   (d) Any spline piece that contains stationary intervals and/or stationary points is split into multiple spline pieces such that the new spline pieces terminate on a stationary point:

      i. If both ends of a spline piece are stationary points, then the spline piece takes the form described in step 2.

      ii. If one end of the spline piece is a stationary point, then it takes the form of step 3b or 3c, depending on whether the stationary point is at the end or the beginning of the spline piece.

Once $S$ has been altered, the remaining optimizable coefficients can be optimized using Equation (26), as described in Section 4.5.2. A positive side effect of this algorithm is that it can reduce the total number of optimizable parameters in $\boldsymbol{\theta}$ when the biobot is stationary for prolonged periods of time.
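The bookkeeping in steps 1–3 can be sketched as follows. The helper names are hypothetical, and the knot times are assumed to share a time base with the stationary data points. A convenient property of the Hermite form in Equation (24) is that any piece whose two end knots both have zero value and zero slope has a = b = c = d = 0, so pinning knots at stationary points automatically reproduces step 2 and step 3(d)i. The zero-value condition at the segment’s own entry and exit knots (Section 4.5.1) is handled separately and is not shown.

```python
from typing import List, Sequence, Tuple


def find_stationary(times: Sequence[float], speeds: Sequence[float]):
    """Step 1: isolated stationary points and stationary intervals (runs of >= 2 zero speeds)."""
    points: List[float] = []
    intervals: List[Tuple[float, float]] = []
    i = 0
    while i < len(speeds):
        if speeds[i] == 0.0:
            j = i
            while j + 1 < len(speeds) and speeds[j + 1] == 0.0:
                j += 1
            if j > i:
                intervals.append((times[i], times[j]))
            else:
                points.append(times[i])
            i = j + 1
        else:
            i += 1
    return points, intervals


def rebuild_knots(knots: Sequence[float], points: Sequence[float],
                  intervals: Sequence[Tuple[float, float]]) -> List[Tuple[float, bool]]:
    """Steps 2-3: drop knots strictly inside stationary intervals, add knots at stationary
    points and interval ends, and flag those knots as pinned (value = slope = 0)."""
    pinned = set(points) | {a for a, _ in intervals} | {b for _, b in intervals}
    kept = {k for k in knots if not any(a < k < b for a, b in intervals)}
    return [(k, k in pinned) for k in sorted(kept | pinned)]
```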

4.6. Enhancing the Ground Truth

Our trajectory estimation algorithm (Section 4.5) uses two random forest regression models to estimate a biobot’s speed (Section 4.3) and heading (Section 4.4). These models are only as good as the ground truth data that is used to train them, so it is imperative that we use ground truth speeds and headings that are as accurate as possible. A key component of this is ensuring that the ground truth speeds and headings integrate to match the ground truth positions. We use video recordings to obtain the ground truth state, $\mathbf{X}(t)$, and the specifics of this are detailed in Section 5.4.1. In this section, we present the algorithm that we use to ensure that the ground truth speeds and headings are correct, i.e., that they integrate to match the ground truth positions obtained from the video data.

The ground truth video data give us a discrete set of ground truth speeds and ground truth headings. We linearly interpolate these discrete speeds and headings to generate continuous speed and heading trajectories for the ground truth, denoted as $s^{\mathrm{g}}(t)$ and $\psi^{\mathrm{g}}(t)$, respectively. We use the same notation as in Equation (16) to differentiate the original interpolated trajectories from their perturbed counterparts. We refine the ground truth speeds and headings using the same approach that was used for trajectory estimation. First, we split the ground truth state trajectory into a series of trajectory segments, as described in Equation (15), which we denote as $\mathbf{X}^{\mathrm{g}}_i(t)$. The starts and ends of the trajectory segments in $\mathbf{X}^{\mathrm{g}}$ are arbitrary; we use trajectory segments that are one minute long, but this need not be the case. Once the segments $\mathbf{X}^{\mathrm{g}}_i$ have been created, we define the perturbed ground truth speeds and headings for each trajectory segment as $\tilde{s}^{\mathrm{g}}_i(t)$ and $\tilde{\psi}^{\mathrm{g}}_i(t)$, respectively, using a form similar to Equation (16):

$$\tilde{s}^{\mathrm{g}}_i(t) = s^{\mathrm{g}}_i(t) + S_i^{s,\mathrm{g}}(t), \qquad \tilde{\psi}^{\mathrm{g}}_i(t) = \psi^{\mathrm{g}}_i(t) + S_i^{\psi,\mathrm{g}}(t), \tag{27}$$

where the only difference is that we now use interpolated ground truth speeds and headings as opposed to interpolated estimates of the speed and heading. The ground truth perturbation splines of the $i$th trajectory segment, denoted as $S_i^{s,\mathrm{g}}$ and $S_i^{\psi,\mathrm{g}}$, are piecewise-cubic perturbation splines that take the same form as the perturbation splines described in Equations (18)–(25). Additionally, the coefficients of $S_i^{s,\mathrm{g}}$ and $S_i^{\psi,\mathrm{g}}$ take the form of Equation (24). Finally, we use an almost identical variant of the algorithm defined in Section 4.5.3 to ensure that the ground truth perturbation splines do not alter the ground truth speed and heading trajectories when the biobot is stationary. The only difference in the algorithm is that the stationary points are determined by using the ground truth speeds obtained from the video frames, $s^{\mathrm{g}}$, instead of the speed estimates, $\hat{s}(t_k)$.

Using this information, we define a cost functional for each ground truth trajectory segment, $J^{\mathrm{g}}_i$, which has a form similar to Equation (26):

where $\tilde{\mathbf{p}}^{\mathrm{g}}_i$ is the perturbed ground truth position obtained by integrating $\tilde{s}^{\mathrm{g}}_i$ and $\tilde{\psi}^{\mathrm{g}}_i$. The only difference between Equations (28) and (26) is the introduction of a position-tracking term, which measures how much the perturbed ground truth position differs from the original ground truth position obtained by linearly interpolating the video frames, $\mathbf{p}^{\mathrm{g}}(t)$. The end position constraint is retained as a weighted penalty term because the ground truth trajectory, $\mathbf{X}^{\mathrm{g}}$, must be continuous. $w_g$, $w_s$, $w_\psi$, and $w_p$ are weights that adjust the emphasis placed on matching the ground truth position trajectory, the amount of speed perturbation, the amount of heading perturbation, and the end position constraint violation, respectively. These weights are hyperparameters of the ground truth optimization algorithm, and the values that we used can be found in Table 4.

Table 4.

Hyperparameters for Ground Truth Optimization.
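The ground truth refinement reuses the trajectory machinery with two additions: fixed one-minute segments and a term that keeps the perturbed ground truth close to the interpolated video positions. The sketch below shows both pieces. Since Equation (28) is not reproduced in this text, the squared-distance integral used for the tracking term is an assumption, as are the function names.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple


def split_segments(t_start: float, t_end: float, length: float = 60.0) -> List[Tuple[float, float]]:
    """Split [t_start, t_end] into consecutive segments of `length` seconds (last may be shorter)."""
    bounds, a = [], t_start
    while a < t_end:
        b = min(a + length, t_end)
        bounds.append((a, b))
        a = b
    return bounds


def interp2(t: float, ts: Sequence[float], xy: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Linear interpolation of 2-D video positions, held constant outside the samples."""
    if t <= ts[0]:
        return xy[0]
    if t >= ts[-1]:
        return xy[-1]
    j = bisect_right(ts, t)
    w = (t - ts[j - 1]) / (ts[j] - ts[j - 1])
    (x0, y0), (x1, y1) = xy[j - 1], xy[j]
    return x0 + w * (x1 - x0), y0 + w * (y1 - y0)


def tracking_term(path: Sequence[Tuple[float, float, float]],
                  video_t: Sequence[float], video_xy: Sequence[Tuple[float, float]]) -> float:
    """Left-rectangle approximation of the integral of ||p_tilde(t) - p_g(t)||^2 over a segment."""
    total = 0.0
    for (t0, x, y), (t1, _, _) in zip(path, path[1:]):
        gx, gy = interp2(t0, video_t, video_xy)
        total += (t1 - t0) * ((x - gx) ** 2 + (y - gy) ** 2)
    return total
```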

5. Experimental Setup

This section details the experimental setup that we used to test and verify our algorithm. The hardware and software that we used are listed, and details are provided as to how we constructed our experimental testbed, as well as the biobots themselves. The section concludes with the experimental procedure that we used to collect data, including how we extracted the ground truth from the video data.

5.1. Hardware

We use a MetaMotion C sensor board (Mbientlab Inc., San Francisco, CA, USA). The MetaMotion C sensor board, shown in Figure 7, is a circular system-on-chip that weighs 3 g and measures 24 mm in diameter and 6 mm in height. The board has 8 MB of onboard flash storage and is powered by a 3 V CR2032 200 mAh lithium coin-cell battery. The board has a 16-bit BMI160 IMU (Bosch GmbH, Reutlingen, Germany) that contains a three-axis accelerometer and a three-axis gyroscope. Processing is handled by a 32-bit Arm Cortex-M4F CPU, and communication is accomplished via a 2.4 GHz transceiver that uses Bluetooth 4.2 Low Energy. The performance of the BMI160 IMU is shown in Table 5.

Figure 7.

MetaMotion C Sensor Board: From left to right: (1) Coordinate frame of MetaMotion C sensor board (this is the body frame of our algorithm); (2) 3D-printed MetaMotion C case; (3) MetaMotion C PCB; (4) The IMU (inside the case) is mounted to the thorax of the biobot with the +Y direction of the IMU facing in the direction of the biobot’s antennae.

Table 5.

BMI160 specifications and performance *.

We chose the MetaMotion C for two main reasons: first, it is small enough to fit over the thorax of a biobot (see Figure 7), so it does not substantially alter the biobot’s center of mass; secondly, it is light enough not to interfere with the biobot’s locomotion. In this study, we only needed to localize the insect; therefore, we did not need the custom backpacks that we previously designed for biobotic control of insects (e.g., [17,69,78]).

5.2. Arena

All data were collected inside a circular arena with a diameter of 115 cm, shown in Figure 8. This circular arena is inscribed inside a 48 in. (approx. 122 cm) square of plywood with 155 mm high walls made of poster board. The walls were coated with petroleum jelly to prevent the biobot from climbing them. Weights were placed at the corners of the plywood base to ensure that the arena did not shift relative to a LifeCam HD-3000 (Microsoft, Redmond, WA, USA) that was mounted 74 in. (approx. 188 cm) off the ground via a tripod. A laptop was connected to the camera to stream 1280 × 720 resolution video at 30 frames per second.

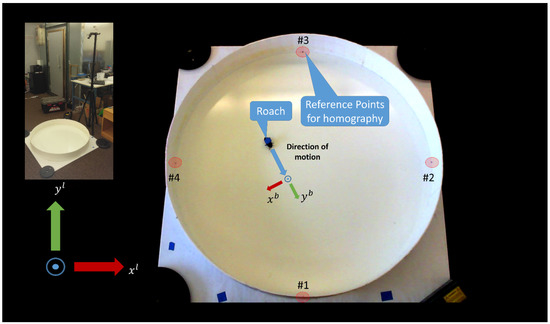

Figure 8.

Experimental Setup: Circular arena of 115 cm diameter. Camera and perspective views are presented. The blue object on top of the roach is the IMU. The local tangent reference frame (frame l) and roach body frame (frame b) are also illustrated. Note that the roach body frame is centered on the IMU and the local tangent reference frame has its origin at the center of the circular arena—these frames have been shifted in this figure for illustration purposes only.

The reference frame for the system has its origin at the center of the arena, and is aligned with the four points that are numbered in Figure 8. The aforementioned four points were used for computing the homography to convert image space (pixels) to the local tangent reference frame (centimeters), which forms our physical space. The four points form two diameters, and the intersection of these diameters was used to find the center of the circular arena in the physical space. Figure 8 highlights the important components of the arena and includes both a perspective view of the setup and a camera view.
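A homography from four point correspondences can be computed with the direct linear transform. The dependency-free sketch below fixes h33 = 1, solves the resulting 8 × 8 linear system, maps pixels to arena centimeters, and intersects the two mapped diameters to locate the arena center. The pixel coordinates in the test and the function names are hypothetical; in practice a library routine (for example, OpenCV’s getPerspectiveTransform) and coordinate normalization for better numerical conditioning would typically be used.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """3x3 homography (h33 fixed to 1) mapping four pixel points onto four arena points."""
    A: List[List[float]] = []
    b: List[float] = []
    for (u, v), (x, y) in zip(src, dst):
        A.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * x, -v * x])
        b.append(x)
        A.append([0.0, 0.0, 0.0, u, v, 1.0, -u * y, -v * y])
        b.append(y)
    h = solve(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_h(H: List[List[float]], u: float, v: float) -> Point:
    """Map pixel (u, v) to arena coordinates."""
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return ((H[0][0] * u + H[0][1] * v + H[0][2]) / w,
            (H[1][0] * u + H[1][1] * v + H[1][2]) / w)


def intersect(p1: Point, p2: Point, p3: Point, p4: Point) -> Point:
    """Intersection of line p1-p2 with line p3-p4 (e.g., the two arena diameters)."""
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    a, c = x1 * y2 - y1 * x2, x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * c) / den,
            (a * (y3 - y4) - (y1 - y2) * c) / den)
```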

5.3. Biobotic Agent

The biobot is an adult female Madagascar hissing cockroach (Gromphadorhina portentosa) that is not fitted with a stimulation backpack (see Section 5.1) and measures roughly 60 mm in length and 30 mm in width (see Figure 7). The roach was taken from a colony that we have raised at NC State since 2013. The biobot was kept near room temperature (75–80 °F, approx. 24–27 °C) and fed a diet of dog treats.

5.4. Data Collection

The MetaMotion C board (firmware version 1.3.7) was mounted on the thorax of a biobot as shown in Figure 7. The biobot was then placed inside the arena as shown in Figure 8 and allowed to move around inside the arena for 30 min while accelerometer and gyroscope data were logged to the MetaMotion C’s internal storage. The accelerometer and gyroscope ranges were set to the values listed in Table 5, with the gyroscope range set to ±500 °/s. These values were chosen because the biobot’s movement never exceeded these sensor limits. Both sensors were sampled at 100 Hz, which is consistent with the IMU sampling rate used in [69]. The IMU was interfaced via Mbientlab’s MetaBase app (version 3.3.0 for Android) on an Android 6.0.1 device.

Video data were used to determine the ground truth position, speed, and heading of the biobot, as discussed further in Section 5.4.1. The IMU data and video data were synchronized by tapping the IMU three times before and three times after the experiment. The synchronization taps could be located in both the video feed and the inertial signals, allowing us to map the video time (measured in seconds) to the IMU time (measured in Unix epoch time). We found that a linear mapping was sufficient to align the IMU and video data to within one video frame. The coefficients of the line were found using a least-squares fit.
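The linear video-to-IMU time mapping is an ordinary least-squares line fit through matched tap times. In the sketch below, the tap times and the clock-drift factor used in the test are invented for illustration, and the function names are hypothetical.

```python
from statistics import fmean
from typing import Sequence, Tuple


def fit_time_map(video_t: Sequence[float], imu_t: Sequence[float]) -> Tuple[float, float]:
    """Least-squares line imu_t ~ a * video_t + b through matched tap times."""
    mx, my = fmean(video_t), fmean(imu_t)
    sxx = sum((x - mx) ** 2 for x in video_t)
    sxy = sum((x - mx) * (y - my) for x, y in zip(video_t, imu_t))
    a = sxy / sxx
    return a, my - a * mx


def video_to_imu(t_video: float, a: float, b: float) -> float:
    """Convert a video timestamp (s) to IMU time (Unix epoch, s)."""
    return a * t_video + b
```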

The MetaMotion C sensor board does not start and stop the accelerometer and gyroscope at exactly the same time. As such, there is a slight offset between the timestamps of these two sensors. To address this, we manually aligned the accelerometer and gyroscope signals by comparing their IMU times (Unix epoch time) and discarding any readings before their first shared sample time and any readings after their last shared sample time. This alignment ensured that the number of accelerometer and gyroscope samples was identical. Furthermore, we used the accelerometer timestamps to mark the IMU samples (i.e., the timestamp of the ith accelerometer/gyroscope reading was defined to be the ith accelerometer timestamp). This method aligned the IMU sensors to within two milliseconds of each other, which was acceptable because our video ground truth was only accurate to one video frame (1/30 s ≈ 33 ms). The protocol for data collection is as follows:

- Start the MetaBase app and configure the MetaMotion C board to log data internally.

- Attach the MetaMotion C to the thorax of the biobot.

- Start the video recording and place the biobot in the arena.

- Start IMU data logging via the MetaBase app and tap the IMU three times in succession.

- Let the biobot move in the arena for 30 min.

- Tap the IMU three times in succession.

- Stop IMU data logging via the MetaBase app and stop video recording.

- Retrieve the data from the MetaMotion C via the MetaBase app.
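The accelerometer/gyroscope trimming described before the protocol can be sketched as follows. The half-sample-period tolerance used to decide which readings count as "shared" is an assumption of this sketch (at 100 Hz it is 5 ms), as are the function name and data layout; the returned offset reports how far apart the paired timestamps are.

```python
from typing import List, Sequence, Tuple

Reading = Tuple[float, Tuple[float, float, float]]  # (Unix time in s, (x, y, z))


def align_imu(acc: Sequence[Reading], gyro: Sequence[Reading], period: float = 0.01):
    """Trim both streams to their shared time range and pair them sample by sample.

    Returns ([(t_acc, acc_xyz, gyro_xyz), ...], max |t_acc - t_gyro|).
    """
    tol = period / 2
    start = max(acc[0][0], gyro[0][0])
    end = min(acc[-1][0], gyro[-1][0])
    a = [r for r in acc if start - tol <= r[0] <= end + tol]
    g = [r for r in gyro if start - tol <= r[0] <= end + tol]
    n = min(len(a), len(g))                                   # identical sample counts
    pairs = [(a[i][0], a[i][1], g[i][1]) for i in range(n)]   # stamp with accelerometer time
    offset = max(abs(a[i][0] - g[i][0]) for i in range(n))
    return pairs, offset
```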

This protocol was used to create a dataset consisting of nine trials. The same biobot was used for all nine trials and was allowed to rest for at least 24 h between trials. This rest period was intended to ensure that the biobot was fully rested before each trial, minimizing any effect that exhaustion could have on its movement.

5.4.1. Video Ground Truth

The ground truth state of the biobot was obtained from the biobot’s video footage. Specifically, we placed an elliptical bounding box around the biobot and used it to compute the biobot’s position, speed, and heading. To accomplish this, we placed blue tape over the MetaMotion C case (see Figure 9) and used the following approach (summarized in Algorithm 1) to compute the biobot’s pose for each video frame:

Figure 9.

Video Tracker Output: Left Image: Biobotic agent inside the arena. The video frame number is shown in the upper left corner of the image. The center of the IMU is marked as a green square and the heading of the biobot is shown with a blue arrow. Right Image: Close-up shot of the biobot. The green square denotes the center of the IMU and the blue arrow denotes the biobot’s heading. Additionally, the contour of the biobot’s body is highlighted with a red ellipse and the center of the biobot’s body is marked with a magenta square. All of the aforementioned elements were obtained using the computer vision algorithm described in Section 5.4.1.

- Compute a background image for the video that excludes the biobot and convert the image to the HSV color space.

- For each video frame:

  - (a) Isolate the elements of the video frame that differ from the background: (i) convert the video frame to the HSV color space and subtract the background image from the video frame, which generates a difference image; (ii) apply a set of thresholds to the S and V channels of the difference image to identify the parts of the image that differ substantially from the background image. As such, the only nonzero pixels in the thresholded difference image will be those of the MetaMotion C and the biobot.

  - (b) Find the MetaMotion C by applying a color threshold to the HSV image. In our setup, we applied a color threshold to isolate blue objects, since blue was the color of the taped MetaMotion C case in the video feed (see Figure 9).

  - (c) Generate an edge image by applying a Canny edge detector [79] to the binary detections of the MetaMotion C and the biobot. Set the pixel locations of the MetaMotion C in the edge image to zero, so that the only nonzero pixels in the edge image belong to the body of the biobot.

  - (d) Fit an ellipse to the elliptical edge (i.e., the body of the biobot) in the edge image and store the ellipse’s center and orientation in pixel coordinates. This ellipse is the elliptical bounding box that is used to compute the ground truth state for the video frame.

Algorithm 1 Pose Algorithm.

Input: video F; thresholds used to detect the agent, t_ag; thresholds used to detect the MetaMotion C, t_mm

Output: P, the set of biobot poses

1: B ← CREATEBACKGROUNDIMAGE(F, 'hsv')

2: for each video frame f in F do

3: H ← CONVERTIMAGE(f, 'hsv')

4: D ← REMOVEBACKGROUND(H, B)

5: A ← FINDAGENT(D, t_ag)

6: M ← FINDMETAMOTION(H, A, t_mm)

7: E ← GENERATEEDGEIMAGE(A, 'CANNY')

8: E ← REMOVEMETAMOTION(E, M)

9: L ← FINDELLIPSE(E)

10: p ← EXTRACTPOSE(L)

11: Store pose p in P

12: end for

13: return P
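The per-frame steps (a) to (d) can be sketched in NumPy as follows. This is an illustrative approximation under stated assumptions, not the implementation used in this work: the median background, the S/V difference thresholds, and the blue hue band are hypothetical values, and, to stay dependency-free, the Canny edge detection and ellipse fit of steps (c) and (d) are replaced by second-order image moments of the filled foreground mask (OpenCV users would more typically call `cv2.Canny` and `cv2.fitEllipse`). Because the tag lies inside the body outline, the moment fit does not need the tag removed; the tag mask is used only to locate the IMU center (the green square in Figure 9).

```python
import numpy as np

# Hypothetical thresholds (HSV channels scaled to [0, 1]); not the values used in the paper.
S_DIFF_MIN = 0.15        # min. saturation change to count as foreground, step (a)
V_DIFF_MIN = 0.15        # min. brightness change to count as foreground, step (a)
TAG_HUE = (0.55, 0.70)   # assumed hue band of the blue tape, step (b)
TAG_SAT_MIN = 0.4        # assumed minimum saturation of the blue tape, step (b)


def background_image(frames_hsv):
    """Per-pixel median over frames: one assumed way to get a biobot-free background."""
    return np.median(np.stack(frames_hsv), axis=0)


def foreground_mask(frame_hsv, background_hsv):
    """Step (a): threshold the S and V channels of the HSV difference image."""
    diff = np.abs(frame_hsv - background_hsv)
    return (diff[..., 1] > S_DIFF_MIN) | (diff[..., 2] > V_DIFF_MIN)


def tag_mask(frame_hsv):
    """Step (b): color threshold that keeps blue pixels (the taped MetaMotion C)."""
    hue, sat = frame_hsv[..., 0], frame_hsv[..., 1]
    return (hue >= TAG_HUE[0]) & (hue <= TAG_HUE[1]) & (sat >= TAG_SAT_MIN)


def centroid(mask):
    rows, cols = np.nonzero(mask)
    return cols.mean(), rows.mean()  # (x, y) in pixels


def moment_ellipse(mask):
    """Stand-in for steps (c)-(d): center and major-axis angle from second-order moments."""
    rows, cols = np.nonzero(mask)
    x, y = cols.astype(float), rows.astype(float)
    cx, cy = x.mean(), y.mean()
    mu20 = np.mean((x - cx) ** 2)
    mu02 = np.mean((y - cy) ** 2)
    mu11 = np.mean((x - cx) * (y - cy))
    angle = 0.5 * np.arctan2(2.0 * mu11, mu20 - mu02)  # radians, image axes (y points down)
    return (cx, cy), angle


def frame_pose(frame_hsv, background_hsv):
    fg = foreground_mask(frame_hsv, background_hsv)  # biobot body + tag
    tag = tag_mask(frame_hsv) & fg                   # tag pixels only
    body_center, body_angle = moment_ellipse(fg)     # tag lies inside the body outline
    return {"body_center": body_center, "body_angle": body_angle,
            "imu_center": centroid(tag)}
```

Note that the major-axis angle is only defined modulo π, so distinguishing head from tail requires additional information that this sketch does not model.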

We applied a homography to convert the biobot’s elliptical bounding box from image space (pixel coordinates) to physical space (centimeter coordinates); the reference points used in the homography are shown in Figure 8. The biobot’s heading was defined as the direction of the major axis of the elliptical bounding box, and the biobot’s position was defined as the center of the elliptical bounding box. The speed was determined from the biobot’s displacement between video frames over time. Additionally, we used a speed threshold to determine when the biobot was stationary. This was necessary because, even when the biobot was stationary, its computed speed could fluctuate due to pixel-level differences in its estimated position between video frames.
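The conversion from pixel ellipses to centimeter-scale states can be sketched as below. This is a minimal sketch under assumptions: the homography is estimated with the standard direct linear transform (DLT) from at least four reference-point pairs (OpenCV's `cv2.findHomography` provides an equivalent routine), the heading is obtained by mapping a point one pixel along the major axis into the arena frame, and the 0.5 cm/s stationarity threshold is a hypothetical value. The 30 fps frame rate matches the video rate reported in Section 6.2.

```python
import numpy as np


def fit_homography(src_px, dst_cm):
    """Direct linear transform: 3x3 H mapping pixel points to centimeter points (>= 4 pairs)."""
    rows = []
    for (x, y), (u, v) in zip(src_px, dst_cm):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)  # null-space vector = homography up to scale
    return H / H[2, 2]


def apply_homography(H, pts):
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def ground_truth_states(centers_px, axis_angles_px, H, fps=30.0, v_stationary=0.5):
    """Positions (cm), headings (rad), and speeds (cm/s) from per-frame ellipses.

    v_stationary is a hypothetical stationarity threshold in cm/s.
    """
    centers = np.asarray(centers_px, dtype=float)
    angles = np.asarray(axis_angles_px, dtype=float)
    # Map the center and a point one pixel along the major axis into centimeters,
    # so the heading is measured in the arena frame rather than the image frame.
    tips = centers + np.column_stack([np.cos(angles), np.sin(angles)])
    pos = apply_homography(H, centers)
    axis = apply_homography(H, tips) - pos
    heading = np.arctan2(axis[:, 1], axis[:, 0])
    # Speed from frame-to-frame displacement: one value per consecutive frame pair.
    speed = np.linalg.norm(np.diff(pos, axis=0), axis=1) * fps
    speed[speed < v_stationary] = 0.0  # suppress pixel jitter when the biobot is still
    return pos, heading, speed
```

In this sketch a 0.005 cm shift between consecutive frames corresponds to 0.15 cm/s at 30 fps, which falls below the assumed threshold and is therefore reported as zero speed.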

The procedure for computing the ground truth does not guarantee that the biobot’s ground truth speed and heading trajectories will integrate to match the biobot’s ground truth position trajectory. We resolved this by using the algorithm discussed in Section 4.6 to refine our ground truth.
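Why small per-frame errors matter can be illustrated with planar dead reckoning. The sketch below assumes a forward-Euler integration of speed and heading (the exact discretization used in Section 4.6 may differ) and compares a straight 2 cm/s path with the same path integrated using a constant, hypothetical 0.02 rad heading bias.

```python
import math


def integrate(p0, speeds, headings, dt):
    """Forward-Euler dead reckoning: p_{k+1} = p_k + v_k * dt * (cos psi_k, sin psi_k)."""
    x, y = p0
    path = [(x, y)]
    for v, psi in zip(speeds, headings):
        x += v * math.cos(psi) * dt
        y += v * math.sin(psi) * dt
        path.append((x, y))
    return path


def position_errors(path_a, path_b):
    """Euclidean distance between two trajectories at each time step."""
    return [math.dist(a, b) for a, b in zip(path_a, path_b)]


dt = 1 / 30  # one video frame at 30 fps
true_path = integrate((0.0, 0.0), [2.0] * 300, [0.0] * 300, dt)
biased_path = integrate((0.0, 0.0), [2.0] * 300, [0.02] * 300, dt)
err = position_errors(true_path, biased_path)
```

Because the bias is never corrected, the drift at frame 200 is twice the drift at frame 100: a constant speed or heading offset produces a position error that grows linearly with time.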

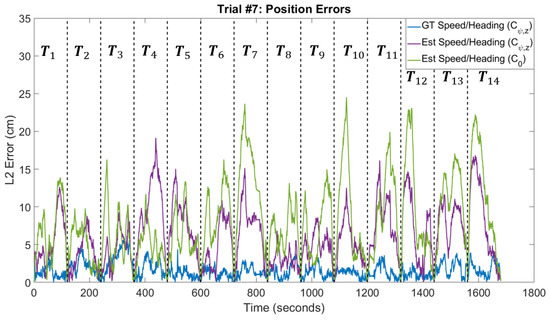

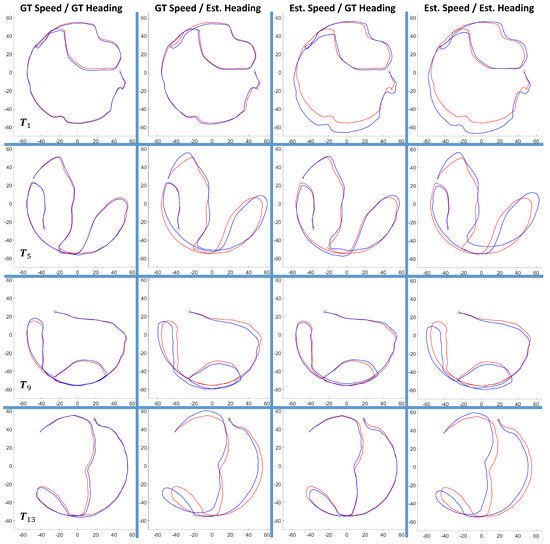

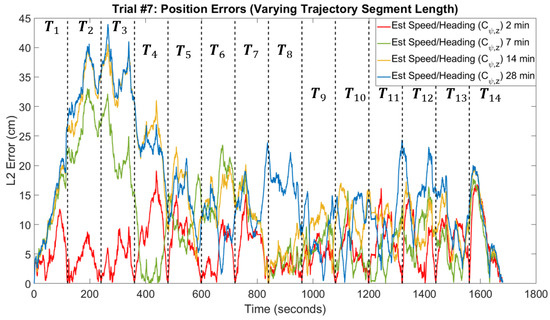

6. Results and Discussion

We analyzed our navigation framework using a dataset that consists of nine trials. Each trial is approximately 30 min and the trials were conducted using the procedure described in Section 5. The first four trials were used for training and the remaining five trials were used for testing. The hyperparameters for the speed and heading correction models were set to the values specified in Table 2. The hyperparameters for the trajectory estimation algorithm can be found in Table 3 for varying trajectory segment lengths. Ground truth refinement was performed using the hyperparameter values in Table 4 and trajectory segments of one-minute length. All other results were obtained using trajectory segments of two-minute length, unless explicitly stated otherwise. Finally, data points were obtained by using a one-second sliding window with 50% overlap.
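The one-second sliding window with 50% overlap can be sketched as index arithmetic over the IMU stream. The sampling rate is not restated in this section, so the 100 Hz used below is a hypothetical value, and trailing samples that do not fill a complete window are assumed to be dropped.

```python
def sliding_windows(n_samples, rate_hz, window_s=1.0, overlap=0.5):
    """(start, stop) sample indices of fixed-length windows with fractional overlap."""
    win = int(round(window_s * rate_hz))
    hop = int(round(win * (1.0 - overlap)))
    if win <= 0 or hop <= 0:
        raise ValueError("window length and hop must be positive")
    # Only full windows are kept; a trailing partial window is dropped.
    return [(s, s + win) for s in range(0, n_samples - win + 1, hop)]


windows = sliding_windows(300, 100)  # 3 s of data at an assumed 100 Hz
```

With these assumptions, a 30-minute trial yields 3599 windows, because each window advances by half a second.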

6.1. Speed Regression

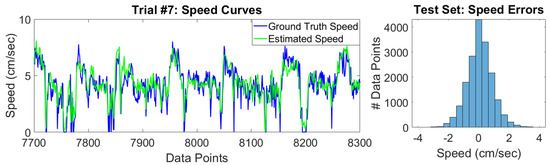

The performance of our speed estimation model is shown in Table 6. Figure 10 highlights a section of trial #7’s estimated speed curve as well as the overall distribution of speed errors in the test set. As expected, the training set error is lower than the test set error because the random forest model was trained to minimize the errors in the training data.

Table 6.

Performance of speed estimation model.

Figure 10.

Test Set Speed Errors: (Left Plot) A five-minute section of Trial #7’s speed curve is shown, where the estimated and ground truth speeds are compared. (Right Plot) Distribution of the speed errors between the estimated and ground truth speeds. Each bin has a width of 0.4 cm/s.

Overall, our model is able to track the ground truth speeds, with an RMSE of less than 1 cm/s for each of the trials (see Table 6). That said, our model underestimates large speeds. This is due to the scarcity of training samples with large speeds, as shown in Figure 11. Interestingly, our model has a very low mean signed error, which means that underestimates of the speed in some data points are counteracted by overestimates in others. Because the length of a trajectory is the integral of its speed over time, this implies that the estimated and true trajectories have comparable lengths.
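The distinction between RMSE and mean signed error, and why the latter governs trajectory length, can be shown with a small sketch. The sign convention (estimate minus ground truth, so negative values indicate underestimation) and the toy speed values are assumptions for illustration only.

```python
import math


def speed_metrics(est, true):
    """RMSE and mean signed error, with error defined as estimate minus ground truth."""
    errs = [e - t for e, t in zip(est, true)]
    n = len(errs)
    rmse = math.sqrt(sum(e * e for e in errs) / n)
    mean_signed = sum(errs) / n
    return rmse, mean_signed


def path_length_error(est, true, dt):
    """Difference in integrated path length; equals n * dt * mean signed error."""
    return sum(est) * dt - sum(true) * dt


true_speeds = [1.0, 2.0, 3.0, 4.0]  # cm/s, toy values
est_speeds = [2.0, 1.0, 4.0, 3.0]   # over- and underestimates that cancel
rmse, mse_signed = speed_metrics(est_speeds, true_speeds)
```

Here every sample is off by 1 cm/s (RMSE of 1 cm/s), yet the mean signed error is zero and the integrated path lengths agree exactly.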

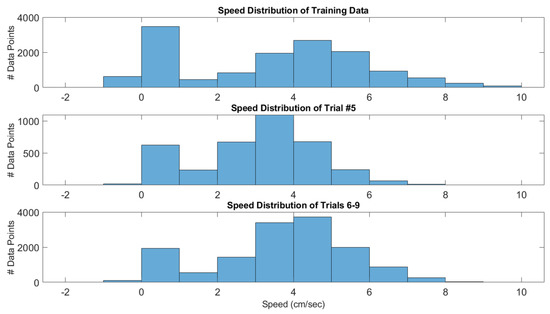

Figure 11.

Ground Truth Speed Distributions: Each bin has a width of 1 cm/s. Note that the negative speeds are a result of the perturbation that occurs in the ground truth refinement algorithm. (Top Plot) Speed distribution of the training data (trials 1–4). (Middle Plot) Speed distribution of trial #5. Trial #5 has a large number of data points in the 2–4 cm/s range, which is not well sampled in the training data; this has an adverse effect on its estimated speed. (Bottom Plot) Speed distribution of trials 6–9.

Lastly, we would like to highlight trial #5 in Table 6, which has a mean signed error that is much larger than that of the other trials. The reason for this is that trial #5 is abnormally slow, as shown in Figure 11. Specifically, it has a large number of speeds in the 2–4 cm/s range, which happens to be sparsely sampled in the training set. This highlights the limitation of the machine learning approach to inertial navigation that was mentioned in Section 2, namely that machine learning approaches depend on the test set data being similar to the training set data. Had we added trial #5 to the training data, we expect that performance on the test set would have improved, especially for trial #8, which has a speed distribution similar to that of trial #5.

6.2. Stationarity Detection

The confusion matrix for our stationarity detector can be found in Table 7, where “Stationary” is the positive class and “Moving” is the negative class. Additionally, several performance metrics are shown in Table 8.

Table 7.

Test set confusion matrix for stationarity detection.

Table 8.

Performance of stationarity detector.

The performance metrics show that our stationarity detector has a high accuracy; however, this is misleading because the biobot is much more likely to be moving than stationary, as shown in the confusion matrix, so even a detector that rarely predicts “Stationary” would score a high accuracy. As a result, the Matthews Correlation Coefficient (MCC), which measures the correlation between the predicted and true labels using all four cells of the confusion matrix, is a better indicator of the stationarity detector’s performance. Since the MCC is high, we can conclude that the stationarity detector performs well. Furthermore, most of the false-positives and false-negatives result from ambiguities and choices in our annotation, which are discussed next.
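The gap between accuracy and MCC under class imbalance can be made concrete with a short sketch using the standard binary MCC formula, with “Stationary” as the positive class. The confusion-matrix counts below are hypothetical and chosen only to illustrate the effect; they are not the values in Table 7.

```python
import math


def accuracy_and_mcc(tp, fp, fn, tn):
    """Accuracy and Matthews Correlation Coefficient from binary confusion-matrix counts."""
    acc = (tp + tn) / (tp + fp + fn + tn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, MCC is taken to be 0 when any marginal total is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return acc, mcc


# Hypothetical: 50 stationary and 950 moving windows, detector always predicts "Moving".
acc_trivial, mcc_trivial = accuracy_and_mcc(tp=0, fp=0, fn=50, tn=950)
acc_perfect, mcc_perfect = accuracy_and_mcc(tp=50, fp=0, fn=0, tn=950)
```

The trivial detector reaches 95% accuracy but an MCC of 0, whereas a perfect detector reaches an MCC of 1; this is why the MCC is the more informative metric here.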

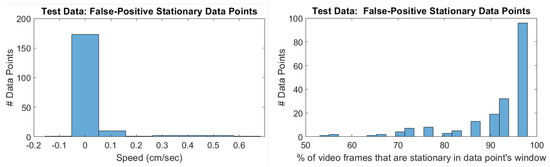

Each data point’s label is created from the video frames that fall within its window, as stated in Section 4.3.2. As a result, the percentage of video frames needed to flag a data point as stationary is a hyperparameter. We set this percentage to 100% (i.e., all of the video frames need to be stationary). Figure 12 shows two things: first, the overwhelming majority of the false-positive data points have zero-speed; second, most of the false-positive data points have nearly all of their video frames labeled as stationary. Since we are using data points that are one second long, with a video frame rate of 30 fps, most of the false-positive data points have 28 of their 30 video frames labeled as stationary. Lowering the number of video frames needed to flag a data point as stationary from 30 to 28 would therefore remove most of the false-positive samples. Trial #9, in particular, had a large number of false-positive data points, so this change would likely improve its performance.
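The window-labeling rule can be sketched as a simple count threshold over the per-frame stationarity flags in a one-second window (30 frames at 30 fps). The function name and signature are hypothetical; the sketch only shows how moving the threshold from 30 to 28 frames flips the label of a window with two non-stationary frames.

```python
def window_label(frame_is_stationary, min_stationary_frames):
    """'stationary' if at least min_stationary_frames of the window's frames are stationary."""
    n_still = sum(bool(f) for f in frame_is_stationary)
    return "stationary" if n_still >= min_stationary_frames else "moving"


window = [True] * 28 + [False] * 2  # 28 of 30 frames stationary
strict = window_label(window, 30)   # current rule: all 30 frames required
relaxed = window_label(window, 28)  # relaxed rule discussed above
```

Under the strict rule this window is labeled “moving”, so a detector that predicts “stationary” for it is counted as a false positive; under the relaxed rule the same prediction becomes a true positive.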

Figure 12.

Speed Distribution of False-Positive Stationary Samples: (Left Plot) Ground truth speeds for samples falsely flagged as stationary. Bin width of 0.1 cm/s. (Right Plot) Percentage of video frames that are labeled as stationary in false positive data points. Bin width of 2%. Many false-positives are caused by data points that have most, but not all, of their video frames flagged as stationary—altering the video frame threshold in the stationarity detector would resolve this issue.

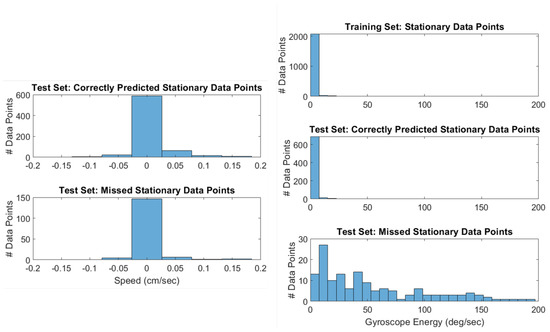

Our stationarity labels, and hence the detector trained on them, only consider whether the agent has zero-speed. As a consequence, when the biobot is rotating in place, it is still labeled “stationary”, even though its inertial signals indicate motion. This is the cause of most of the false-negative data points, as shown in Figure 13. Figure 13 reveals that the overwhelming majority of false-negatives are indeed zero-speed, and that the speed distribution of the false-negative data points is similar to that of the true-positive data points. Figure 13 also shows that false-negatives occur because the biobot is rotating in place, as evidenced by the gyroscope energy. A solution to this issue would be to split the current stationary label into two labels: “stationary” and “rotating in place”. This should resolve most of the false-negatives, and would be especially useful in trials 6–7, which have a large number of instances where the biobot is rotating in place (i.e., false-negatives).
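The proposed three-way labeling could be sketched as follows. Both the definition of gyroscope energy (here, the RMS angular-rate magnitude over a window, in deg/s) and the 20 deg/s rotation threshold are assumptions made for illustration; neither is specified in this section.

```python
import math


def gyro_energy(gyro_xyz):
    """RMS angular-rate magnitude over a window in deg/s (assumed definition)."""
    total = sum(gx * gx + gy * gy + gz * gz for gx, gy, gz in gyro_xyz)
    return math.sqrt(total / len(gyro_xyz))


def three_class_label(is_zero_speed, gyro_xyz, rot_threshold_dps=20.0):
    """Split zero-speed windows into 'stationary' and 'rotating in place'."""
    if not is_zero_speed:
        return "moving"
    if gyro_energy(gyro_xyz) > rot_threshold_dps:
        return "rotating in place"
    return "stationary"


spinning = three_class_label(True, [(0.0, 0.0, 30.0)] * 10)  # yaw at 30 deg/s, no translation
resting = three_class_label(True, [(0.0, 0.0, 1.0)] * 10)    # sensor noise only
```

Windows that are currently counted as false-negatives because the biobot spins in place would fall into the separate “rotating in place” class under this scheme.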

Figure 13.

Missed Stationary Samples: (Top Left Plot) Ground truth speeds for samples falsely flagged as moving. Bins have widths of 0.04 cm/s. (Bottom Left Plot) Ground truth speeds for samples correctly flagged as stationary. (Top Right Plot) Gyroscope energy in stationary samples for training data. Bins have widths of approximately 7.6 deg/s. (Middle Right Plot) Gyroscope energy in correctly predicted stationary samples. (Bottom Right Plot) Gyroscope energy in missed stationary samples. This shows that false-negatives occur when the biobot is rotating in place (i.e., high gyroscope energy).

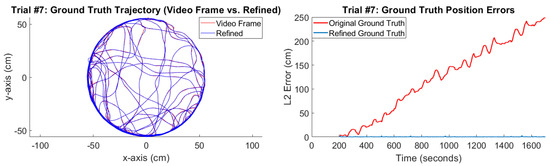

6.3. Ground Truth Refinement

The ground truth refinement algorithm, discussed in Section 4.6, is an optimization algorithm that perturbs the speeds and headings obtained from each video frame (Section 5.4.1) so that they integrate to match the positions in those video frames. Table 9 and Figure 14 show the results of the ground truth refinement algorithm. Table 9 reports the error, defined as the distance between the true position trajectory obtained from the video frames and the position trajectory integrated from the speeds and headings. The “baseline” method uses the speeds and headings obtained directly from the video frames, whereas the “refined” method uses the perturbed speeds and headings produced by the ground truth refinement algorithm. The refinement is necessary because the position trajectory obtained from the baseline method does not track the true position trajectory. This error arises because the speed and heading of each video frame are slightly off from their true values; because these small errors are never corrected, they accumulate during integration and cause the position error to grow linearly over time, as shown in Figure 14. By contrast, the refined speeds and headings are perturbed so that the position error does not grow over time. Figure 14 also shows the ground truth trajectory after refinement.

Table 9.

Error (cm) for ground truth positions.

Figure 14.

Ground Truth Refinement: (Left Plot) Refined Ground Truth Trajectory. Red Line: The position trajectory obtained from the video frames themselves. Blue Line: The position trajectory obtained by integrating the speeds and headings that have been refined via the ground truth refinement algorithm (Section 4.6). (Right Plot) Ground Truth Position Errors. The figure shows the distance between the position trajectory obtained from the video frames and the position trajectory that is integrated from the speeds and headings. Red Line: The original speeds and headings that are obtained from the video frames themselves. Blue Line: The refined speeds and headings that are obtained from the ground truth refinement algorithm. The ground truth refinement algorithm corrects the speeds and headings obtained from the video frames so that the position error does not grow over time.

6.4. Heading Correction

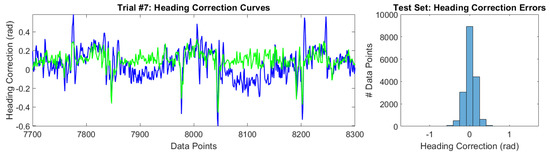

The heading correction model is broken into two components, as discussed in Section 4.4: the first component detrends the error in the AHRS output, and its results were shown in Figure 5; the second component is a regression model that estimates the heading correction needed to align the AHRS output with the refined ground truth headings. This section presents the second component, and the performance of the heading correction regression model is shown in Table 10. Additionally, Figure 15 shows a sample heading correction curve together with the distribution of the heading correction errors. Table 10 reveals that our heading regression model reduced the heading error in of the data points in the test set. Furthermore, the heading regression model reduced the average heading error of the test set by , where the percentage improvement over using only the detrended AHRS outputs was calculated as follows:

$$\text{Improvement (\%)} = 100 \times \frac{\bar{e}_{\text{AHRS}} - \bar{e}_{\text{reg}}}{\bar{e}_{\text{AHRS}}},$$

where $\bar{e}_{\text{AHRS}}$ is the mean heading error of the detrended AHRS outputs and $\bar{e}_{\text{reg}}$ is the mean heading error after applying the heading correction from the regression model.

Table 10.

Performance of heading correction model.

Figure 15.

Test Set Heading Correction Errors: (Left Plot) A five-minute section of Trial #7’s heading correction curve is shown, where the estimated heading correction (Green line) is compared against the ground truth heading correction (Blue line). (Right Plot) Distribution of the heading correction errors between the estimated and ground truth heading corrections. Each bin has a width of 0.17 radians.
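Heading errors must be compared on the circle; otherwise, an estimate of 2π − 0.1 rad against a true heading of 0 would be scored as a 6.18 rad error instead of 0.1 rad. The sketch below wraps angular differences to [−π, π) before computing the mean absolute error, the fraction of data points whose error decreased, and the relative improvement. It assumes the standard relative-improvement definition given above; all function names are hypothetical.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def mean_abs_heading_error(est, true):
    return sum(abs(wrap_angle(e - t)) for e, t in zip(est, true)) / len(est)


def fraction_improved(est_baseline, est_model, true):
    """Share of data points where the corrected heading is closer to the ground truth."""
    better = sum(
        abs(wrap_angle(m - t)) < abs(wrap_angle(b - t))
        for b, m, t in zip(est_baseline, est_model, true)
    )
    return better / len(true)


def percent_improvement(err_baseline, err_model):
    """Relative reduction of the mean error, in percent."""
    return 100.0 * (err_baseline - err_model) / err_baseline
```

For example, reducing a mean heading error of 0.4 rad to 0.3 rad corresponds to a 25% improvement.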

The underlying premise of the heading correction regression model is that heading corrections are independent of the heading trajectory itself; instead, they are determined by the egomotion of the biobot. As evidence in support of this hypothesis, we observed that the distribution of the heading corrections is almost identical between the training and test datasets, even though these datasets comprise nine different heading trajectories.

Furthermore, the similarity between the heading corrections in the training and test datasets explains the consistency in the performance of the heading correction regression model, as shown in Table 10. The only outlier is trial #6, which has a larger error than the other trials because the biobot attempted to climb on the arena wall during the trial.
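One way to put a number on the similarity between the training and test distributions of heading corrections is the two-sample Kolmogorov–Smirnov (KS) statistic, i.e., the largest vertical gap between the two empirical cumulative distribution functions. This is an illustrative suggestion rather than an analysis reported in this work; values near 0 indicate nearly identical distributions, and a value of 1 indicates no overlap.

```python
import bisect


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # The maximum gap is attained at one of the observed sample values.
    for x in a + b:
        fa = bisect.bisect_right(a, x) / len(a)
        fb = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(fa - fb))
    return d
```

Applied to the heading corrections of trials 1–4 versus trials 5–9, a small statistic would support the observation that the two distributions are almost identical.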