An Evaluation of Posture Recognition Based on Intelligent Rapid Entire Body Assessment System for Determining Musculoskeletal Disorders

Abstract

1. Introduction

1.1. Traditional Assessment Methods

1.2. The State of the Art

1.3. Summary of Previous Studies and Main Contributions of This Study

2. Methods

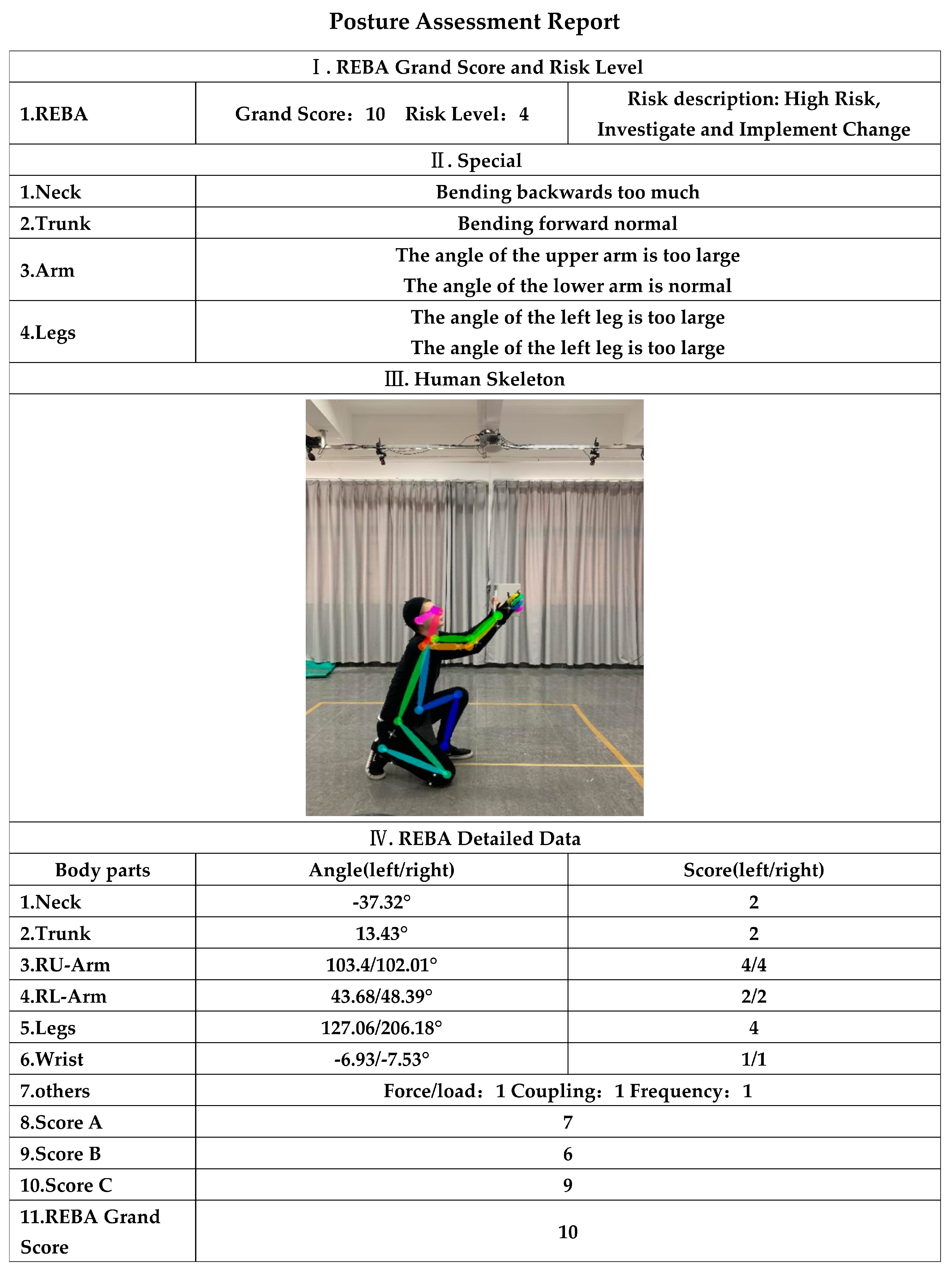

2.1. Quick Capture System: A CPM-Based REBA System

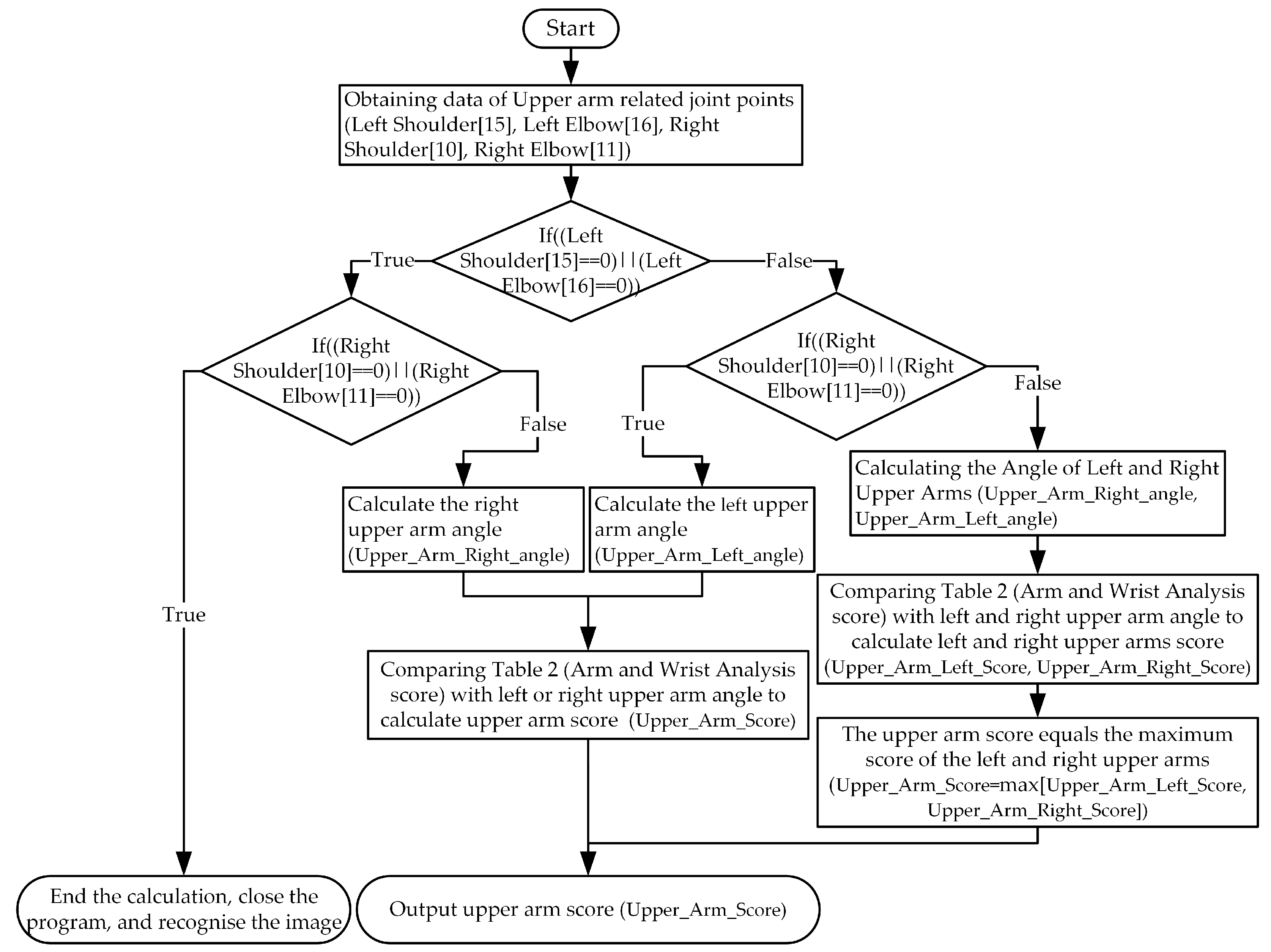

2.1.1. REBA with Rule-Based Human Risk Calculating (HRC) Formula

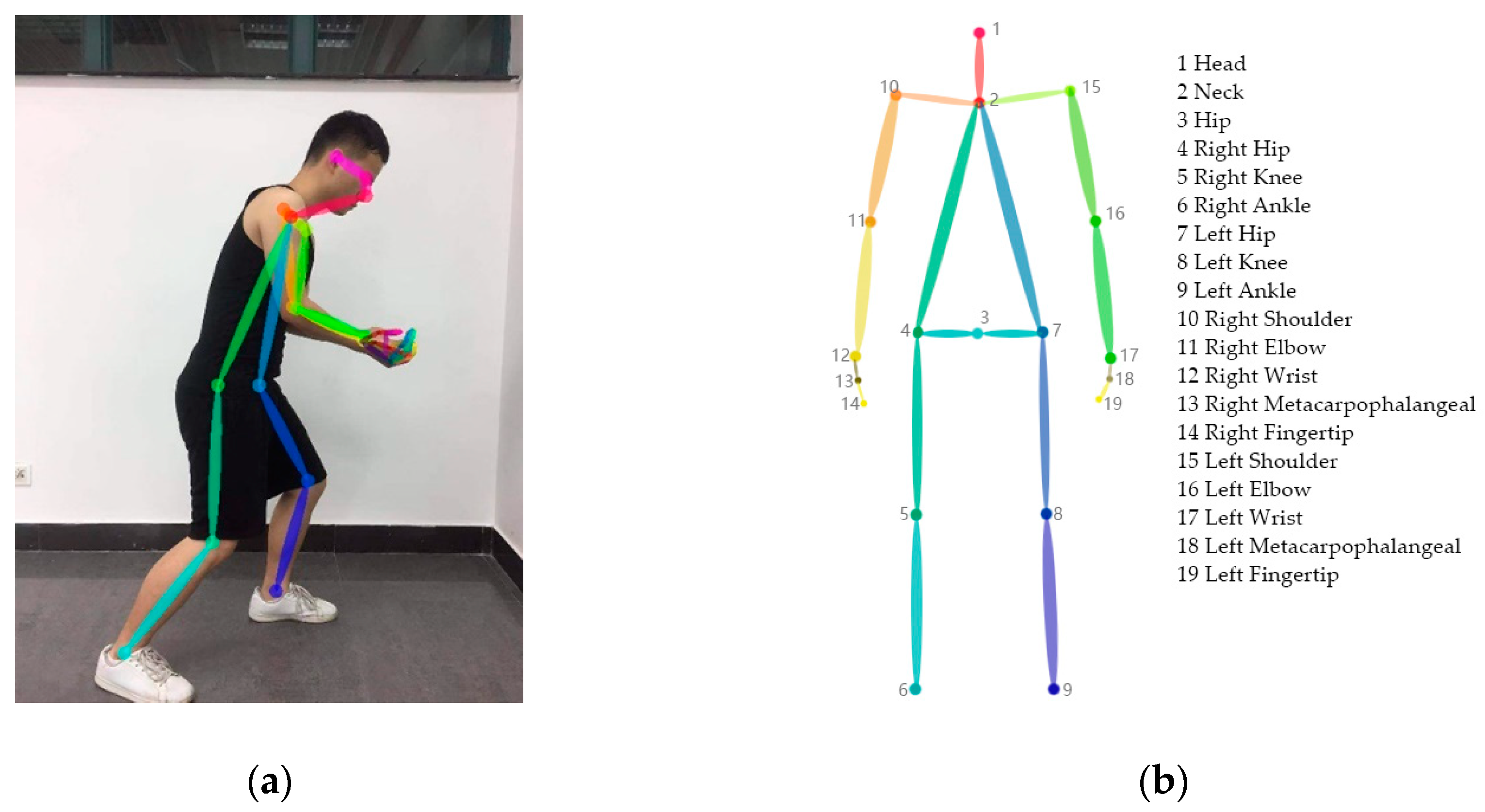

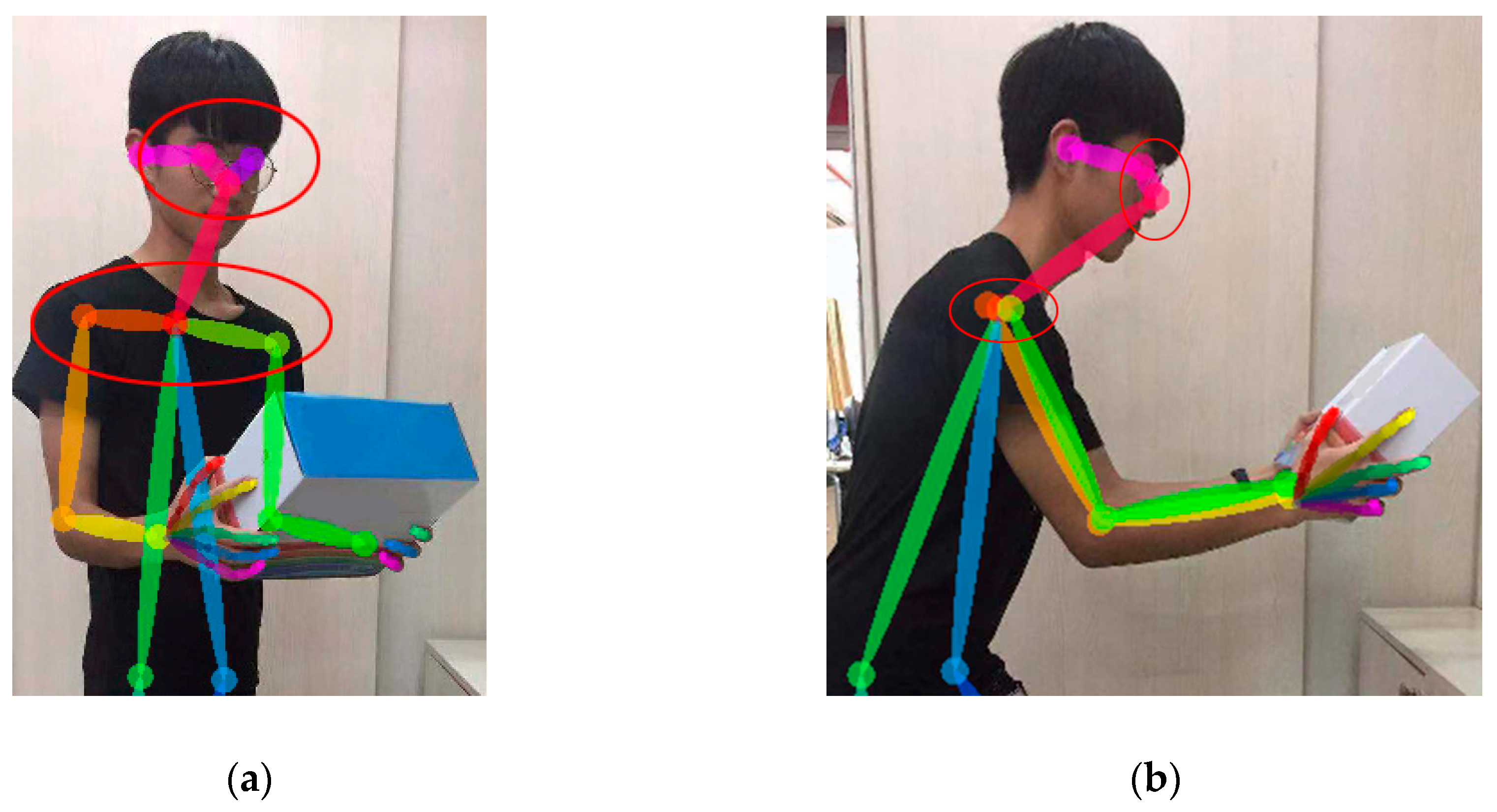

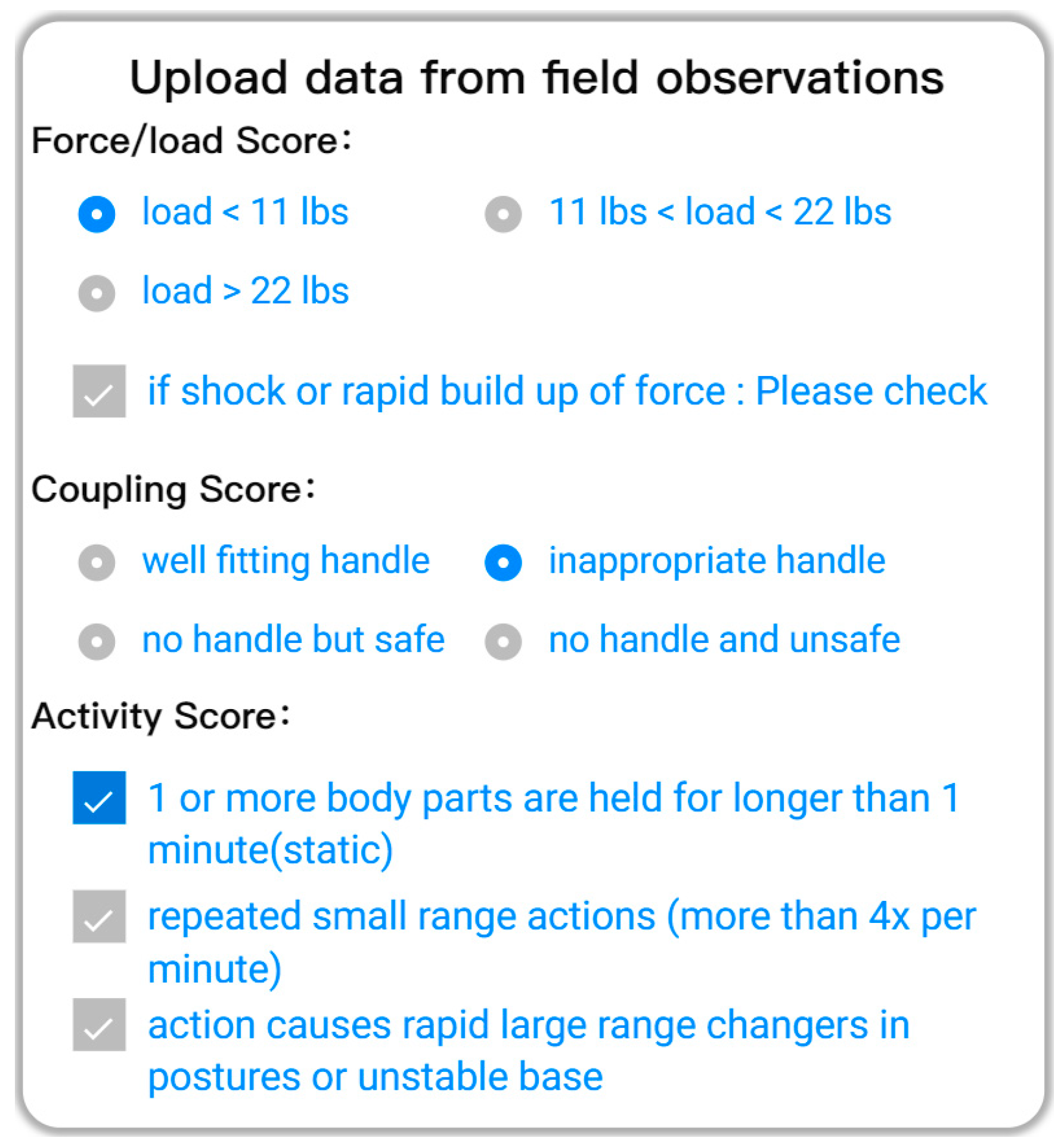

2.1.2. Data Retrieval

2.2. Evaluating Experiment

2.2.1. Participants

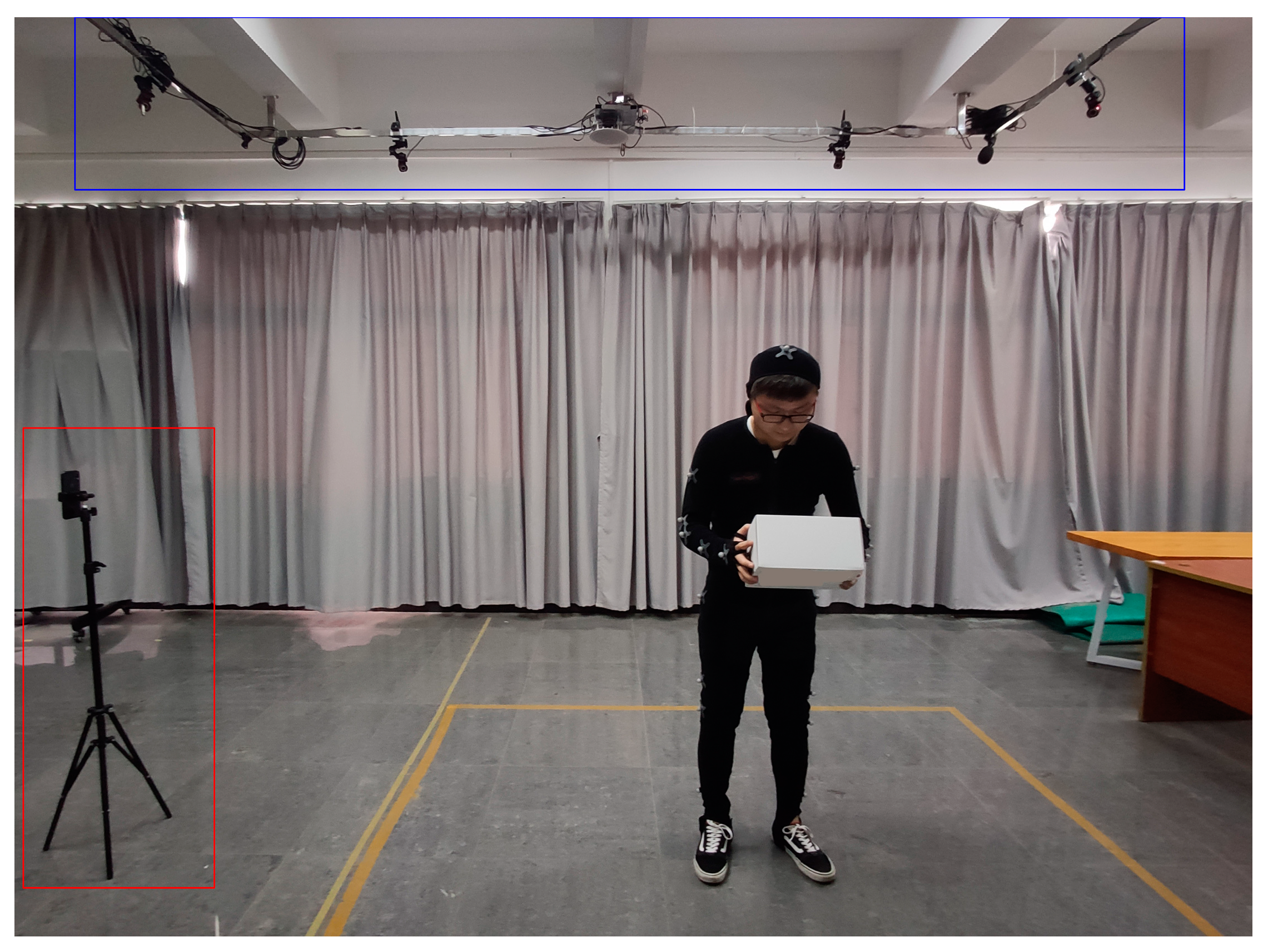

2.2.2. Equipment and Apparatus

2.2.3. Experimental Setting

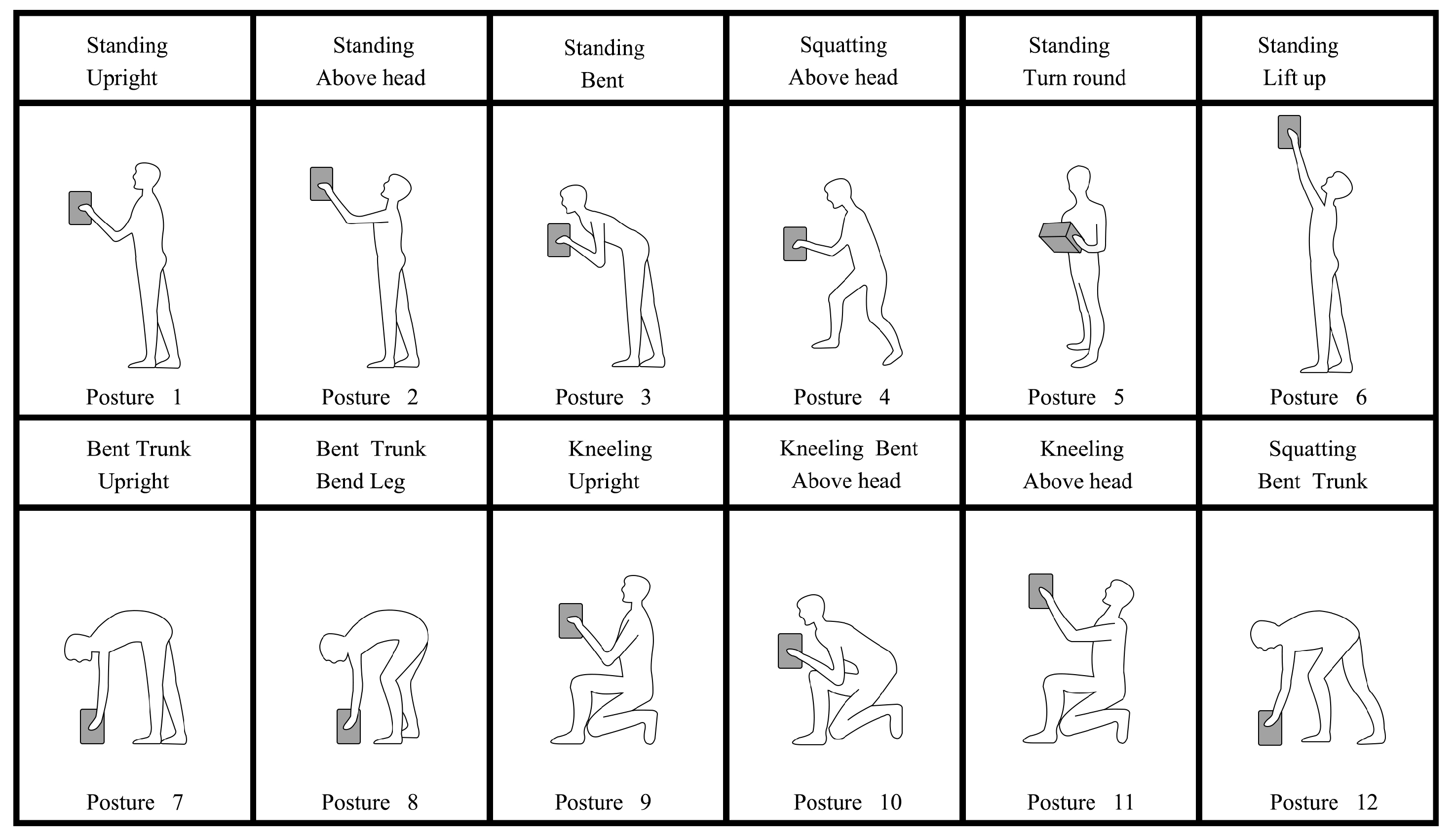

2.2.4. Procedure

2.2.5. Data Analysis

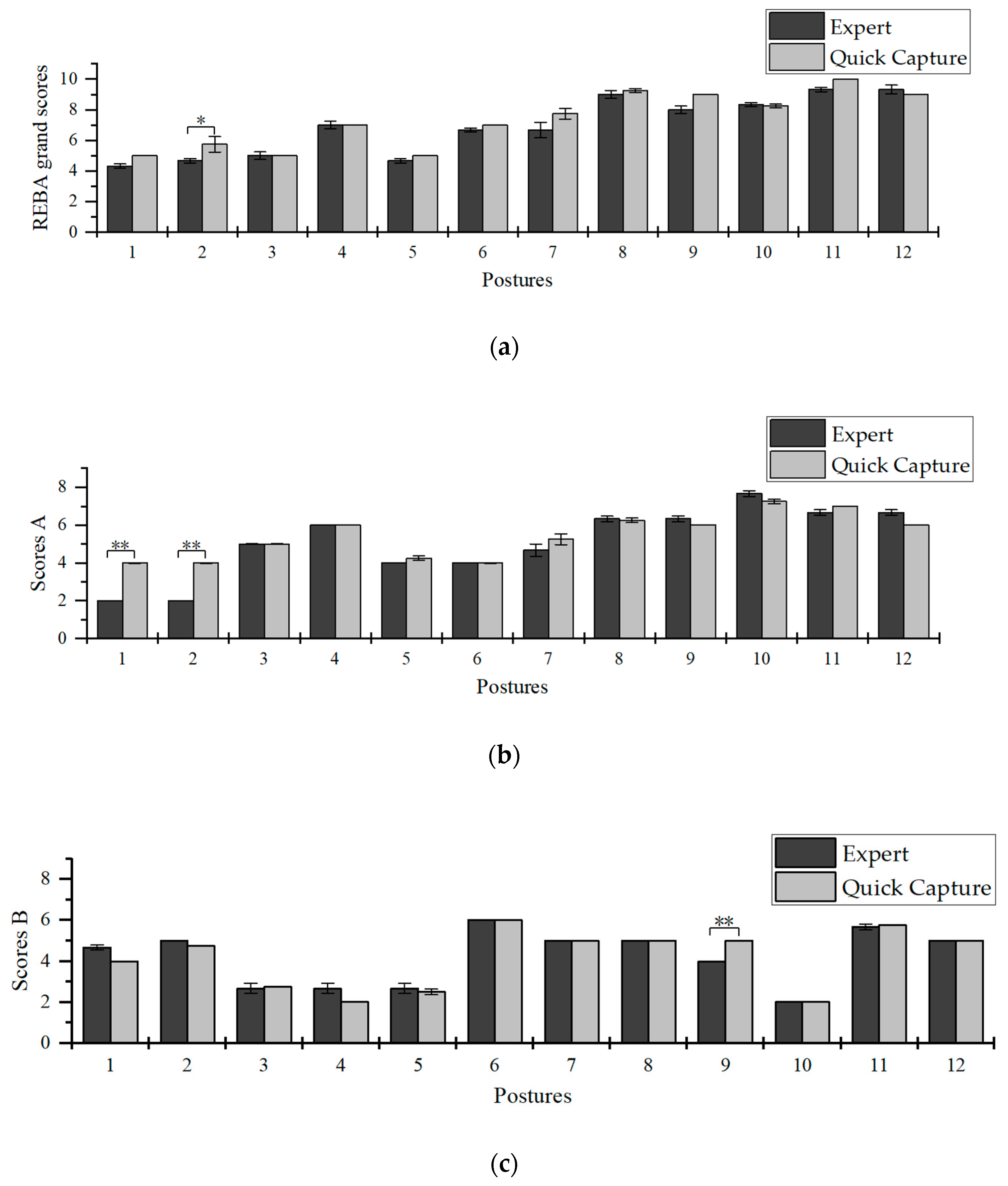

3. Results

4. Discussion

4.1. Theoretical Contributions and Empirical Implications

Theoretical contributions:

- (1) The study applied a novel convolutional pose machine (CPM)-based REBA system for MSDs risk assessment, named “Quick Capture”. To the best of the authors’ knowledge, this is the first MSDs risk assessment system built on CPM-based REBA. It demonstrates an in-depth application of CPM theory and the REBA method, and broadens the adoption of CPM-based image recognition in ergonomics.

- (2) To compare MSDs risk assessments experimentally, ergonomic experiments involving Quick Capture, ergonomic experts, and a motion capture system were conducted. The experimental design built around Quick Capture and the results of this study could provide considerable insights into MSDs risk assessment in ergonomics.

Empirical implications:

- (1) Quick Capture automates parameter adjustment in REBA-based MSDs risk assessment, which can also improve scoring accuracy.

- (2) Quick Capture runs on a smartphone, which removes tedious manual operations from MSDs assessment and makes widespread application feasible.

- (3) The system can quickly complete MSDs assessments in real-life scenarios, thereby minimizing the cost and time of MSDs assessment.

4.2. Summary of Expected Results

5. Conclusions

- (1) Quick Capture’s angle recognition accuracy was consistent with that of the motion capture system;

- (2) The REBA scores calculated by Quick Capture were consistent with those of the experts;

- (3) The Quick Capture system could compensate for possible errors made by experts.

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B


| REBA Score | Risk Level | Risk Description |
|---|---|---|
| 1 | 1 | Negligible risk |
| 2–3 | 2 | Low risk. Change may be needed |
| 4–7 | 3 | Medium risk. Further investigate, change soon |
| 8–10 | 4 | High risk. Investigate and implement change |
| 11+ | 5 | Very high risk. Implement change |
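The REBA score bands above translate directly into a lookup. The following Python sketch, assuming integer grand scores of 1 or more, returns the risk level and description from the table; the names `RISK_BANDS` and `reba_risk_level` are hypothetical and are not taken from the Quick Capture system.

```python
# Minimal sketch: map a REBA grand score to the risk level and description
# in the REBA risk table. `RISK_BANDS` and `reba_risk_level` are
# hypothetical names, not part of the Quick Capture implementation.

RISK_BANDS = [
    # (lowest score in band, highest score in band, risk level, description)
    (1, 1, 1, "Negligible risk"),
    (2, 3, 2, "Low risk. Change may be needed"),
    (4, 7, 3, "Medium risk. Further investigate, change soon"),
    (8, 10, 4, "High risk. Investigate and implement change"),
    (11, None, 5, "Very high risk. Implement change"),  # 11 and above
]


def reba_risk_level(score: int) -> tuple[int, str]:
    """Return (risk level, description) for an integer REBA grand score >= 1."""
    if not isinstance(score, int) or score < 1:
        raise ValueError(f"REBA grand score must be an integer >= 1, got {score!r}")
    for low, high, level, description in RISK_BANDS:
        if score >= low and (high is None or score <= high):
            return level, description
    raise AssertionError("unreachable: the bands cover every score >= 1")


print(reba_risk_level(6))  # (3, 'Medium risk. Further investigate, change soon')
```

Keeping the bands as data rather than a chain of `if` statements makes it easy to check them against the table row by row.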

| Body Part | Motion Capture System, Mean (SD) | Quick Capture System, Mean (SD) | Significance |
|---|---|---|---|
| Neck | −6.178 (12.455) | −6.072 (10.790) | NS |
| Trunk | 36.066 (34.010) | 32.793 (32.628) | NS |
| L-Legs | 39.802 (48.076) | 42.796 (48.690) | NS |
| R-Legs | 52.839 (59.726) | 51.314 (60.963) | NS |
| LU-Arm | 43.974 (39.558) | 43.204 (40.474) | NS |
| RU-Arm | 39.413 (39.322) | 41.975 (39.657) | NS |
| LL-Arm | 45.398 (26.327) | 50.595 (25.277) | NS |
| RL-Arm | 53.156 (25.459) | 55.236 (26.058) | NS |
| L-Wrist | 6.983 (4.634) | 6.404 (4.435) | NS |
| R-Wrist | 8.462 (5.272) | 7.888 (4.201) | NS |

NS: not significant.

| No. | Neck | Trunk | L-Legs | R-Legs | LU-Arm | RU-Arm | LL-Arm | RL-Arm | L-Wrist | R-Wrist | AVE | ρ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.95(0.98) | 2.28(2.42) | 2.83(1.61) | 2.34(1.38) | 5.50(3.13) | 4.29(3.14) | 4.83(3.32) | 3.36(3.06) | 2.73(1.90) | 1.71(1.88) | 3.09 | 0.896 ** |
| 2 | 4.89(5.62) | 3.12(2.05) | 3.20(1.00) | 2.25(2.60) | 1.98(0.66) | 2.13(0.26) | 5.17(3.03) | 5.27(5.76) | 3.08(1.84) | 5.53(3.11) | 3.66 | 0.968 ** |
| 3 | 1.86(0.84) | 3.03(2.29) | 2.66(2.00) | 4.04(0.91) | 3.12(2.64) | 1.91(1.89) | 9.70(7.98) | 5.10(5.50) | 3.10(2.53) | 3.50(2.78) | 3.80 | 0.963 ** |
| 4 | 3.33(2.36) | 4.27(1.30) | 1.49(1.60) | 6.49(3.11) | 2.64(2.99) | 3.10(3.19) | 5.06(4.37) | 4.45(4.43) | 3.57(2.47) | 5.09(2.92) | 3.95 | 0.988 ** |
| 5 | 3.72(3.23) | 3.29(0.52) | 2.63(2.16) | 2.02(1.71) | 5.14(4.25) | 3.70(1.54) | 7.58(1.26) | 5.34(1.94) | 4.14(3.02) | 4.79(3.61) | 4.24 | 0.824 ** |
| 6 | 4.24(3.09) | 2.03(2.07) | 1.73(1.15) | 3.38(0.74) | 4.38(3.50) | 5.58(3.76) | 10.76(6.3) | 7.55(4.11) | 2.86(3.16) | 2.37(1.86) | 4.49 | 0.963 ** |
| 7 | 3.73(3.58) | 7.90(0.93) | 7.63(4.73) | 4.26(4.73) | 5.59(1.43) | 4.51(4.61) | 8.21(8.01) | 4.42(4.95) | 3.39(3.90) | 4.64(1.48) | 5.54 | 0.726 ** |
| 8 | 4.96(4.96) | 4.43(2.02) | 9.06(6.25) | 2.87(1.88) | 3.15(3.19) | 5.10(1.17) | 15.66(8.65) | 4.24(0.03) | 2.18(2.42) | 4.96(2.72) | 5.78 | 0.874 ** |
| 9 | 4.44(4.77) | 4.10(2.29) | 7.26(5.60) | 1.98(1.83) | 4.40(4.10) | 4.81(4.28) | 5.11(5.74) | 5.01(4.89) | 2.72(2.27) | 4.59(1.50) | 4.56 | 0.980 ** |
| 10 | 3.11(3.29) | 1.94(1.44) | 8.55(6.92) | 3.63(1.49) | 11.68(2.09) | 5.62(1.46) | 12.81(9.96) | 5.05(4.9) | 4.29(4.95) | 4.41(4.73) | 6.25 | 0.959 ** |
| 11 | 2.38(2.70) | 3.53(0.77) | 10.95(3.33) | 3.46(3.94) | 3.43(3.39) | 4.55(2.33) | 11.07(2.76) | 5.12(3.22) | 3.70(4.22) | 4.94(5.38) | 5.31 | 0.983 ** |
| 12 | 5.41(2.07) | 6.40(5.04) | 6.55(2.78) | 4.53(1.34) | 5.26(6.01) | 10.3(1.45) | 7.20(3.80) | 6.82(3.26) | 7.31(6.92) | 5.55(5.00) | 6.56 | 0.850 ** |
| AVE | 3.58 | 3.86 | 5.38 | 3.44 | 4.69 | 4.63 | 8.60 | 5.14 | 3.59 | 4.76 | 4.77 | 0.915 |
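The per-body-part RMSEs above compare joint angles from the motion capture system with those from Quick Capture. A minimal Python sketch of that comparison follows, assuming paired, time-aligned angle samples for each body part and assuming ρ denotes Pearson's correlation coefficient (the table does not say which coefficient was used). The helper names are hypothetical. The AVE column appears to be the arithmetic mean of each row's RMSEs: for row 1, the mean of the ten listed values is about 3.08, against a reported 3.09, presumably computed from unrounded inputs.

```python
import math
from statistics import mean


def rmse(reference: list[float], estimate: list[float]) -> float:
    """Root-mean-square error between paired samples (e.g. joint angles)."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(mean((r - e) ** 2 for r, e in zip(reference, estimate)))


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two paired series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least 2 samples")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_rmse(per_part: dict[str, float]) -> float:
    """Mean of per-body-part RMSEs, analogous to the AVE column (assumption)."""
    return mean(per_part.values())


# Hypothetical example: three frames of neck angles (degrees) from each system.
mocap_neck = [10.0, 20.0, 30.0]
quick_neck = [12.0, 18.0, 30.0]
print(round(rmse(mocap_neck, quick_neck), 3))       # 1.633
print(round(pearson_r(mocap_neck, quick_neck), 3))  # 0.982
```

RMSE captures the absolute size of the angle error, while the correlation only captures whether the two systems move together, which is why the table reports both.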

| Score | Quick Capture ICC | Expert ICC |
|---|---|---|
| REBA Grand Score | 0.980 | 0.961 |
| Score A | 0.973 | 0.981 |
| Score B | 0.989 | 0.926 |
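The ICC table does not state which ICC form was used. As one possibility, the sketch below computes two common single-measure forms from a subjects × raters matrix using two-way ANOVA mean squares: ICC(2,1) (absolute agreement) and ICC(3,1) (consistency). The choice of these forms and the function name `icc_two_way` are assumptions for illustration only.

```python
from statistics import mean


def icc_two_way(ratings: list[list[float]]) -> tuple[float, float]:
    """Return (ICC(2,1), ICC(3,1)) for an n-subjects x k-raters matrix.

    ICC(2,1): two-way random effects, absolute agreement, single measure.
    ICC(3,1): two-way mixed effects, consistency, single measure.
    """
    n = len(ratings)
    k = len(ratings[0]) if ratings else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular matrix with >= 2 subjects and >= 2 raters")

    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]                       # per subject
    col_means = [mean(row[j] for row in ratings) for j in range(k)]  # per rater

    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    # Mean squares.
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    icc2 = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    icc3 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return icc2, icc3


# Hypothetical REBA grand scores for 4 postures, each scored twice (columns).
scores = [[3, 3], [5, 6], [8, 8], [10, 11]]
print(icc_two_way(scores))
```

A constant offset between the two columns lowers ICC(2,1) but leaves ICC(3,1) unchanged, so the two forms can disagree noticeably on systematically biased ratings.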

| Score | RMSE | P0 | Cohen’s Kappa | p-Value |
|---|---|---|---|---|
| REBA Grand Score | 0.622 | 0.968 | 0.710 | <0.01 |
| Score A | 0.878 | 0.931 | 0.742 | <0.01 |
| Score B | 0.408 | 0.957 | 0.763 | <0.01 |
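The agreement statistics in the table above can be computed from paired categorical ratings. The sketch below assumes that P0 denotes the observed proportion of agreement and that unweighted Cohen's kappa was used (the table does not say whether a weighted variant was applied to the ordinal REBA scores); `cohens_kappa` is a hypothetical helper name. Rearranging κ = (P0 − Pe)/(1 − Pe) also gives the implied chance agreement Pe = (P0 − κ)/(1 − κ), about 0.89 for the REBA grand score row, which explains why a high P0 of 0.968 corresponds to a more moderate κ of 0.710.

```python
from collections import Counter


def cohens_kappa(rater_a: list, rater_b: list) -> tuple[float, float]:
    """Return (P0, kappa) for two raters labelling the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p0 = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    pe = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if pe == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return p0, (p0 - pe) / (1 - pe)


# Hypothetical REBA risk levels assigned by Quick Capture and by an expert.
quick_capture = [1, 1, 2, 2]
expert = [1, 2, 2, 2]
print(cohens_kappa(quick_capture, expert))  # (0.75, 0.5)
```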

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Z.; Zhang, R.; Lee, C.-H.; Lee, Y.-C. An Evaluation of Posture Recognition Based on Intelligent Rapid Entire Body Assessment System for Determining Musculoskeletal Disorders. Sensors 2020, 20, 4414. https://doi.org/10.3390/s20164414
