Precise Estimation of NDVI with a Simple NIR Sensitive RGB Camera and Machine Learning Methods for Corn Plants

Abstract

1. Introduction

2. Materials and Methods

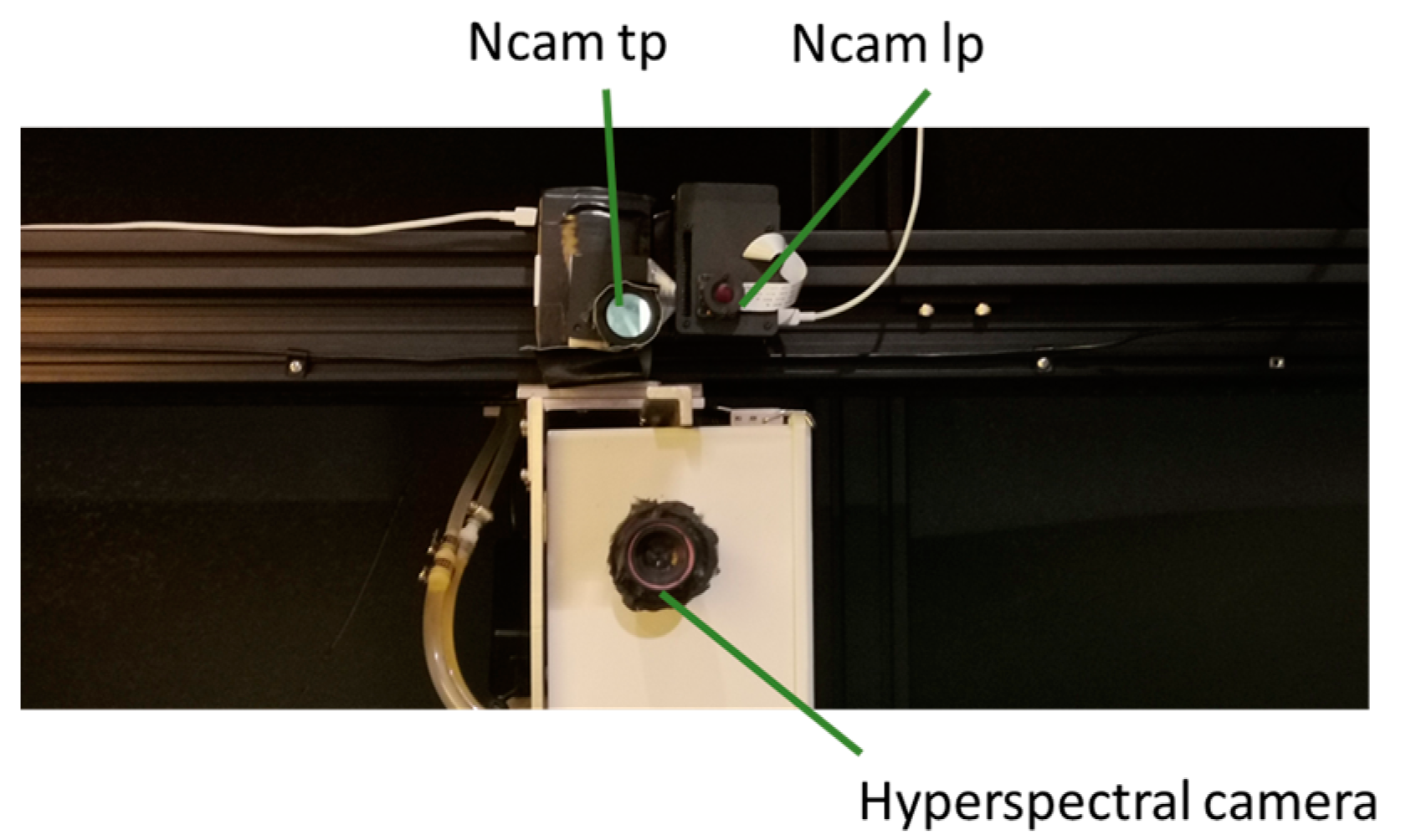

2.1. Ncam System Development

2.2. Plant Samples

2.3. Image Acquisition

2.4. Image Processing

2.5. Data Analysis

2.5.1. Dataset Analysis

2.5.2. Data Preprocessing

2.5.3. NDVI Estimation

2.5.4. Other Applications

3. Results and Discussion

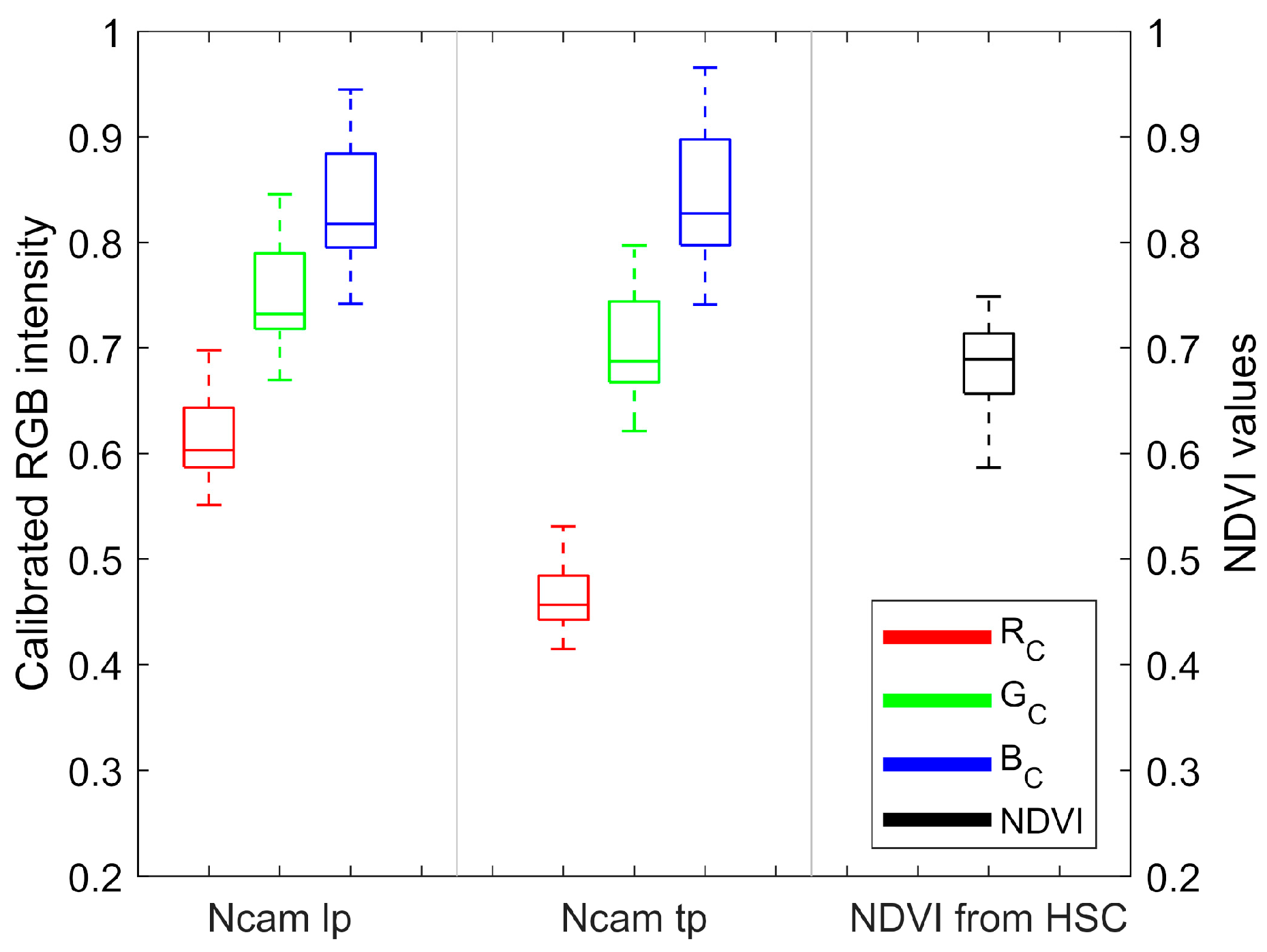

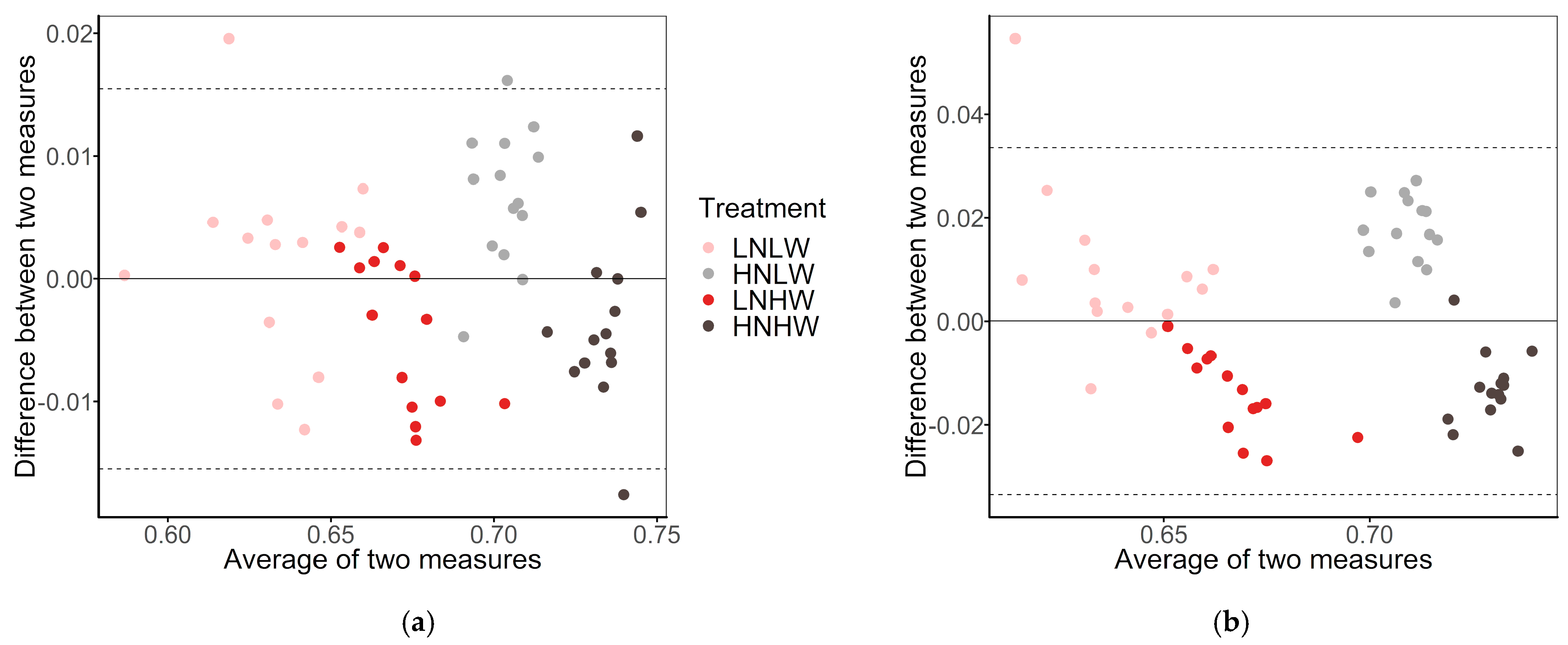

3.1. Dataset Analysis

3.2. Channel Components of Ncam Images

3.3. NDVI Estimation

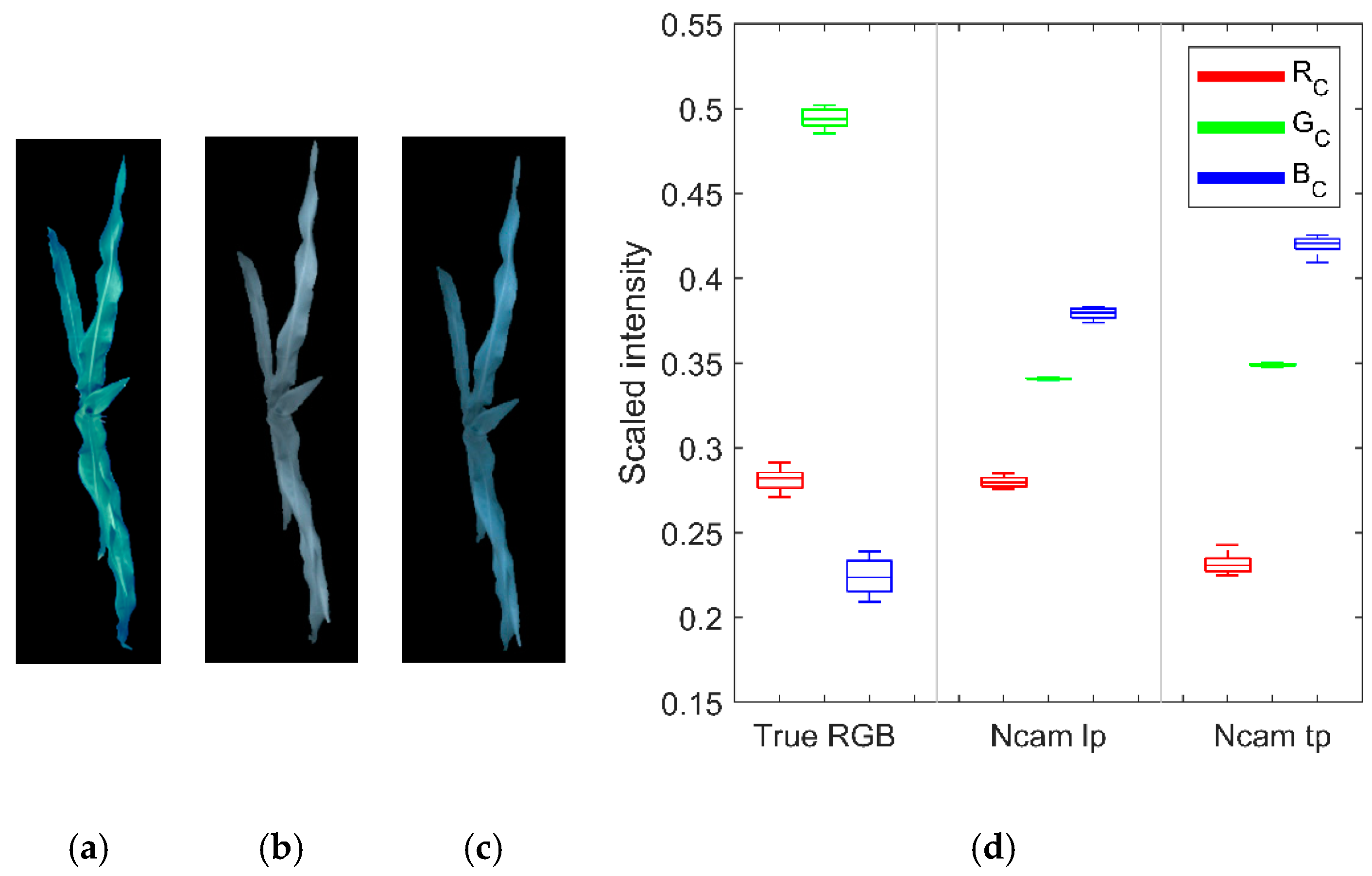

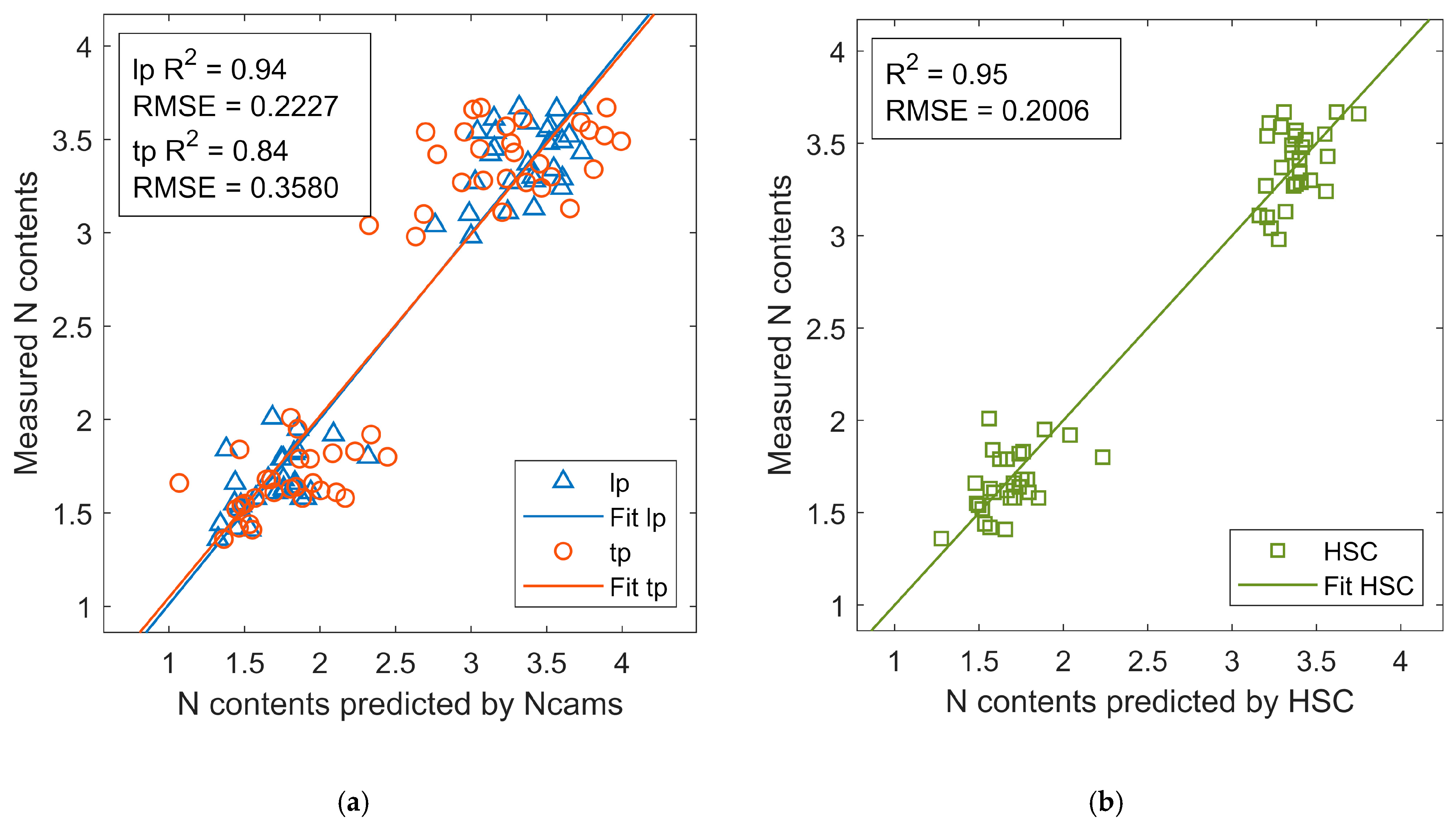

3.4. Other Applications

3.5. Ncam Advantages

3.6. Ncam Limitations

3.7. Opportunities for Future Work

4. Conclusion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameters | HSC | Ncam lp | Ncam tp |
|---|---|---|---|
| Frame rate (FPS) | 60 | 2 | 2 |
| Exposure time (ms) | 6 | 10 | 16 |
| Resolution (pixels) | 1760 × 1620 | 3280 × 2464 | 3280 × 2464 |

| Model | Ncam tp R² | Ncam tp RMSE | Ncam tp MAE | Ncam tp MPE | Ncam lp R² | Ncam lp RMSE | Ncam lp MAE | Ncam lp MPE |
|---|---|---|---|---|---|---|---|---|
| MLR | 0.96 | 0.0079 | 0.0064 | 0.93% | 0.82 | 0.0169 | 0.0143 | 2.09% |
| GP Matern | 0.96 | 0.0079 | 0.0067 | 0.97% | 0.82 | 0.0168 | 0.0144 | 2.10% |
| SVR quadratic | 0.96 | 0.0081 | 0.0065 | 0.94% | 0.81 | 0.0173 | 0.0149 | 2.18% |
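
The coefficient of determination (R2), RMSE, MAE, and MPE reported in the table above are standard regression error metrics. As a minimal sketch of how such values could be computed from paired reference and estimated NDVI readings, the Python below defines a hypothetical helper, `regression_metrics`. It assumes that MPE denotes the mean absolute percentage error, which the table itself does not state, and the sample values are invented for illustration rather than taken from the study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, MAE and MPE for paired reference/estimated values.

    MPE is computed here as the mean absolute percentage error (in %);
    that interpretation is an assumption, not taken from the paper.
    """
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n

    ss_res = sum(r * r for r in residuals)              # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares

    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in residuals) / n,
        "MPE": 100.0 * sum(abs(r / t) for r, t in zip(residuals, y_true)) / n,
    }


# Hypothetical NDVI values for illustration only (not data from the study).
reference_ndvi = [0.60, 0.65, 0.70, 0.75, 0.80]
estimated_ndvi = [0.61, 0.64, 0.70, 0.76, 0.79]

metrics = regression_metrics(reference_ndvi, estimated_ndvi)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because MPE divides each residual by the reference value, it is only meaningful when the reference NDVI values are well away from zero, which is the case for the healthy-vegetation range used in this sketch.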

| Category | Parameter | HSC | Ncam lp | Ncam tp |
|---|---|---|---|---|
| Configuration | Spatial resolution | 1760 × 1620 | 3280 × 2464 | 3280 × 2464 |
| | Effective bands | 513 | 3 | 3 |
| | Spectral range (nm) | 400 to 1000 | 580 to 1000 | 620 to 690 and 810 to 890 |
| Imaging method | Imaging type | Push broom scan | One-shot | One-shot |
| | Imaging speed | 1 min | Less than 1 s | Less than 1 s |
| Size and weight | Dimension (mm) | 320 × 90 × 96 | 25 × 24 × 9 | 25 × 24 × 9 |
| | Weight (kg) | 5 | About 0.1 | About 0.1 |
| Operation | -- | Professional | Easy | Easy |
| Price | -- | Over $10,000 | $85 | $69 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, L.; Duan, Y.; Zhang, L.; Rehman, T.U.; Ma, D.; Jin, J. Precise Estimation of NDVI with a Simple NIR Sensitive RGB Camera and Machine Learning Methods for Corn Plants. Sensors 2020, 20, 3208. https://doi.org/10.3390/s20113208