WatchPose: A View-Aware Approach for Camera Pose Data Collection in Industrial Environments

Abstract

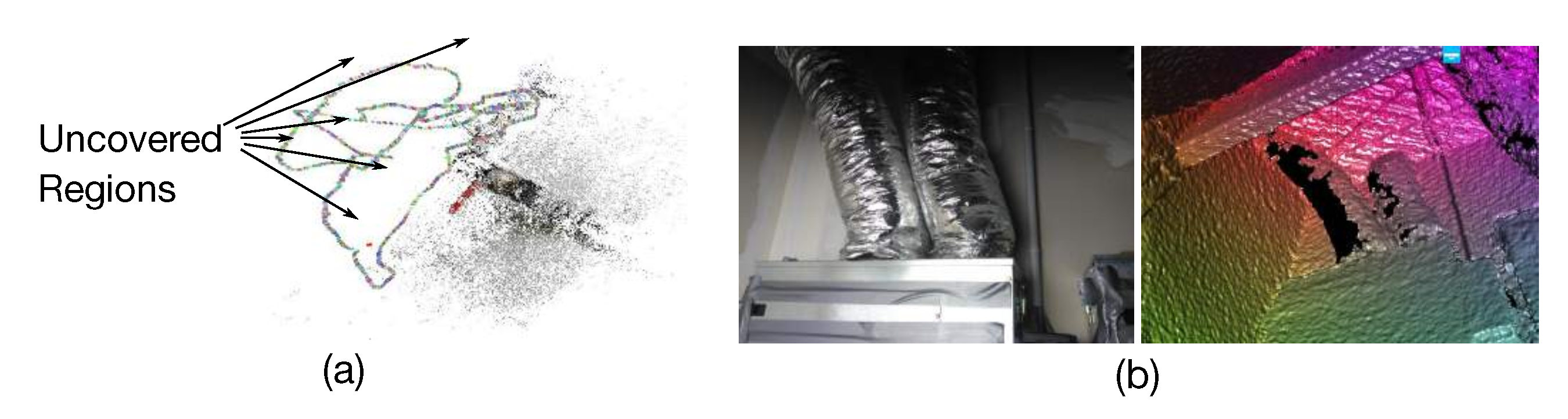

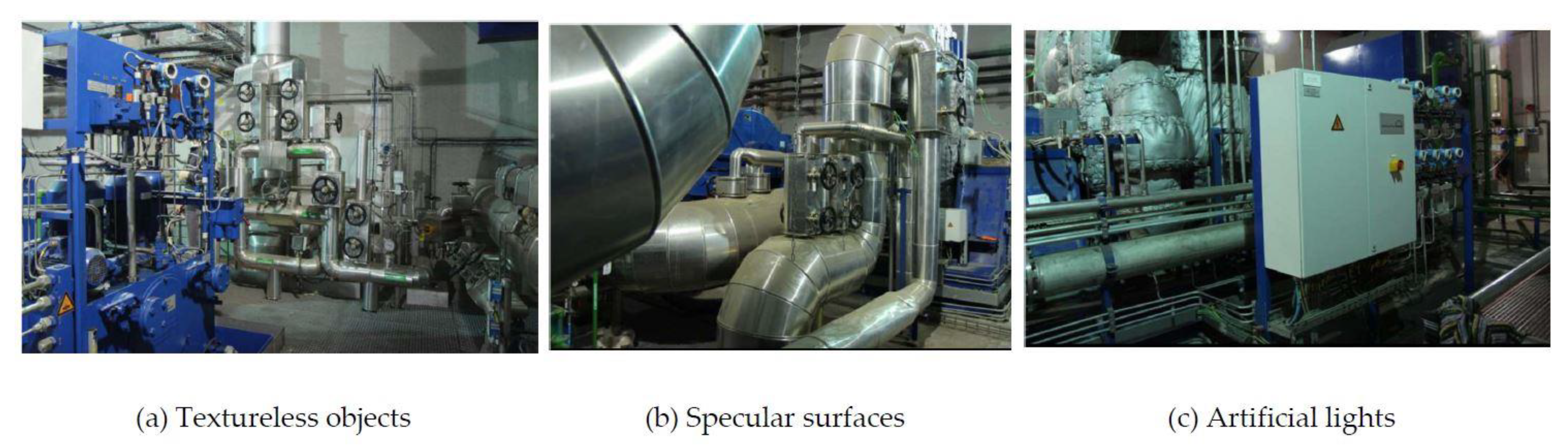

1. Introduction

2. Related Works

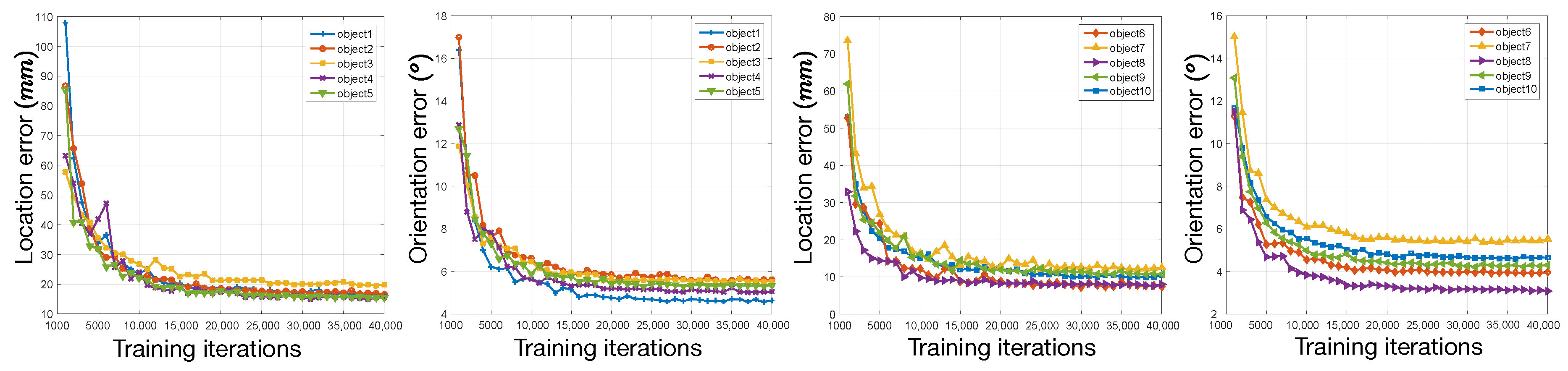

2.1. Camera Pose Data Collection

2.2. Camera Pose Estimation

3. WatchPose

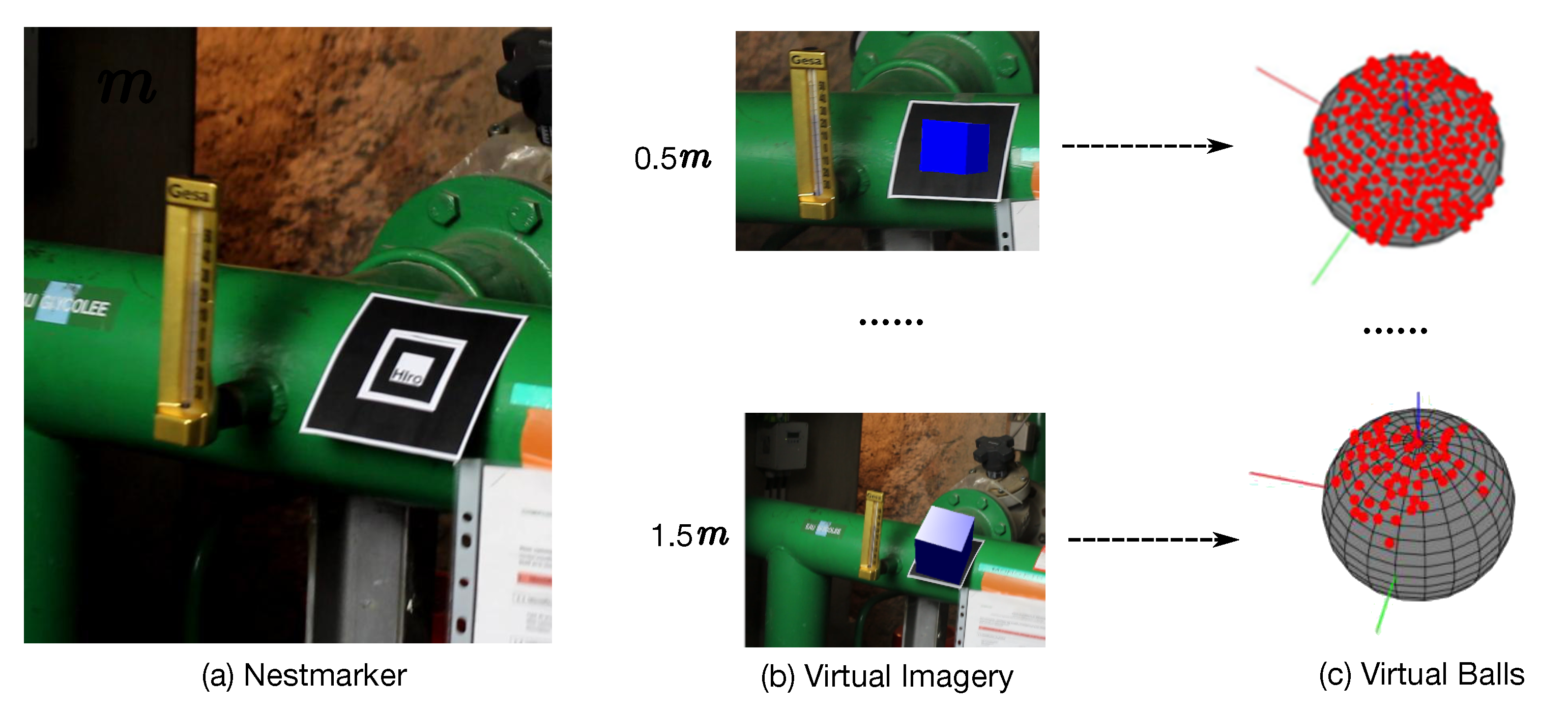

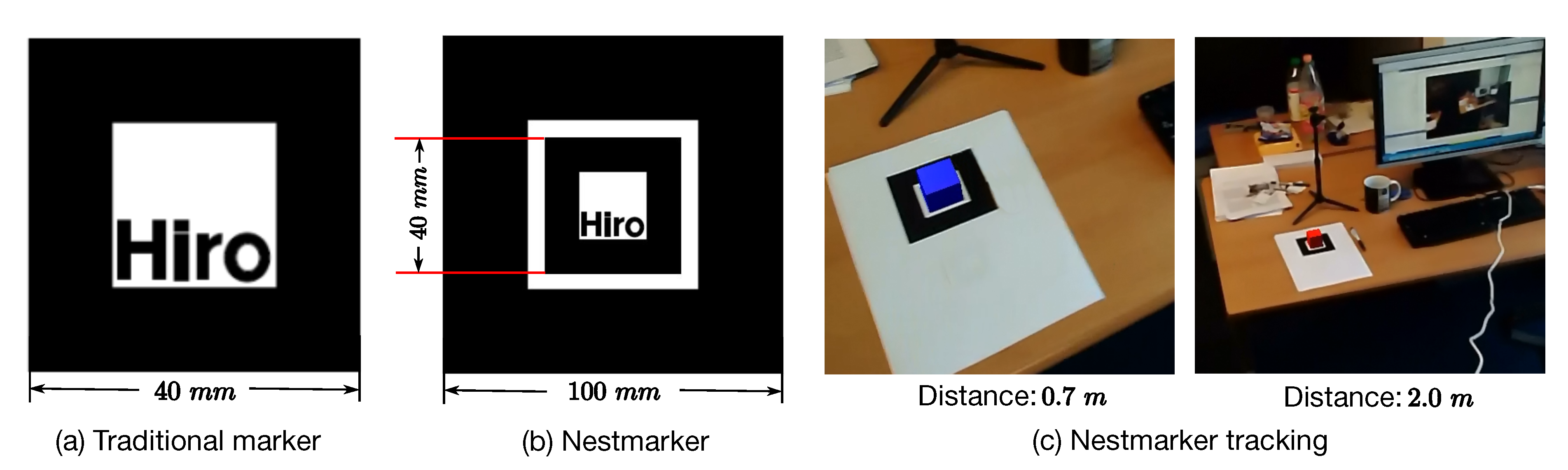

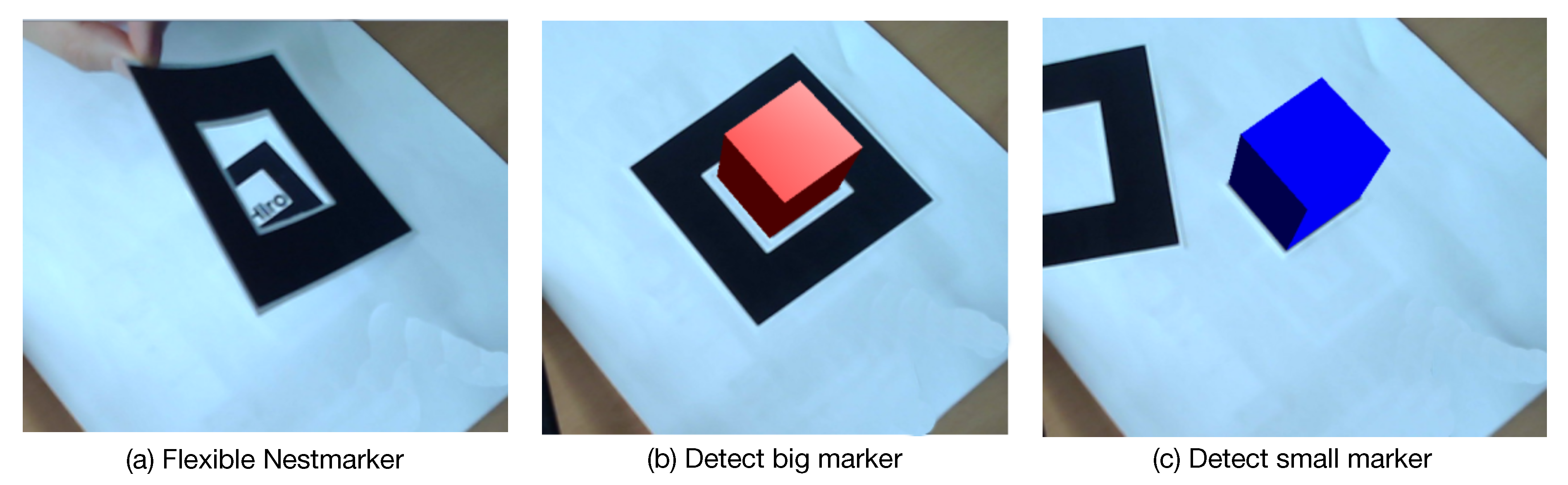

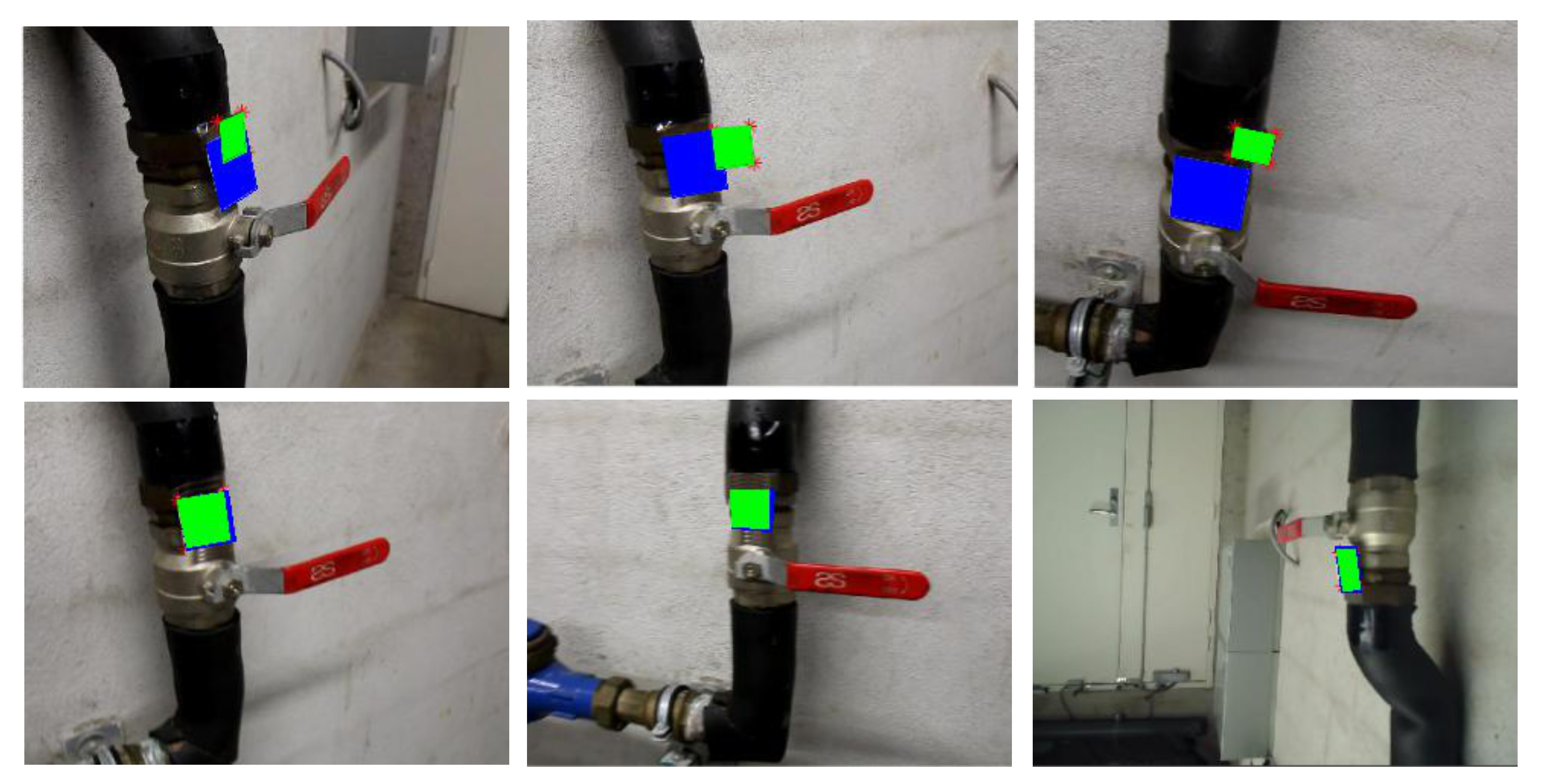

3.1. Nestmarker

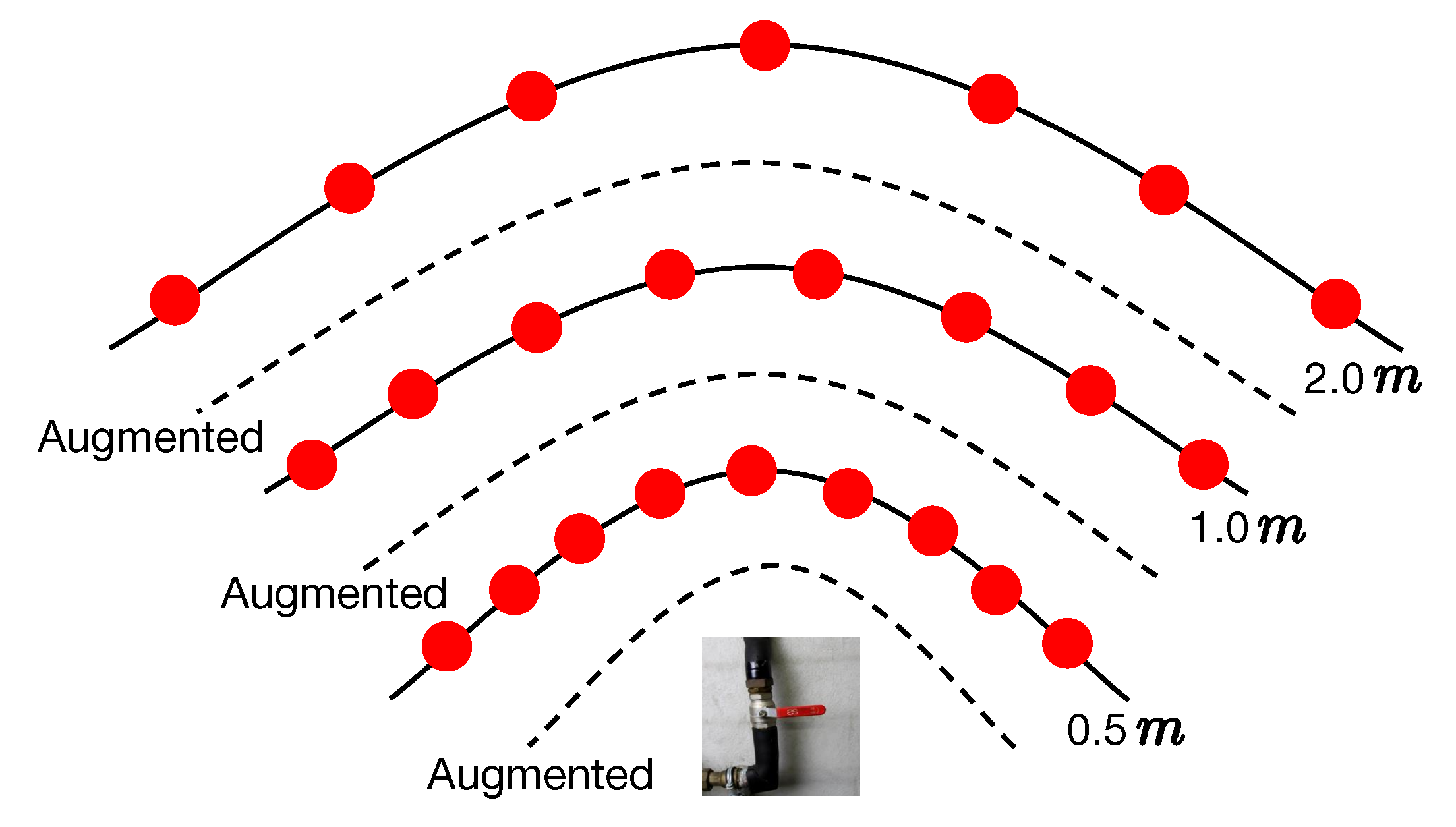

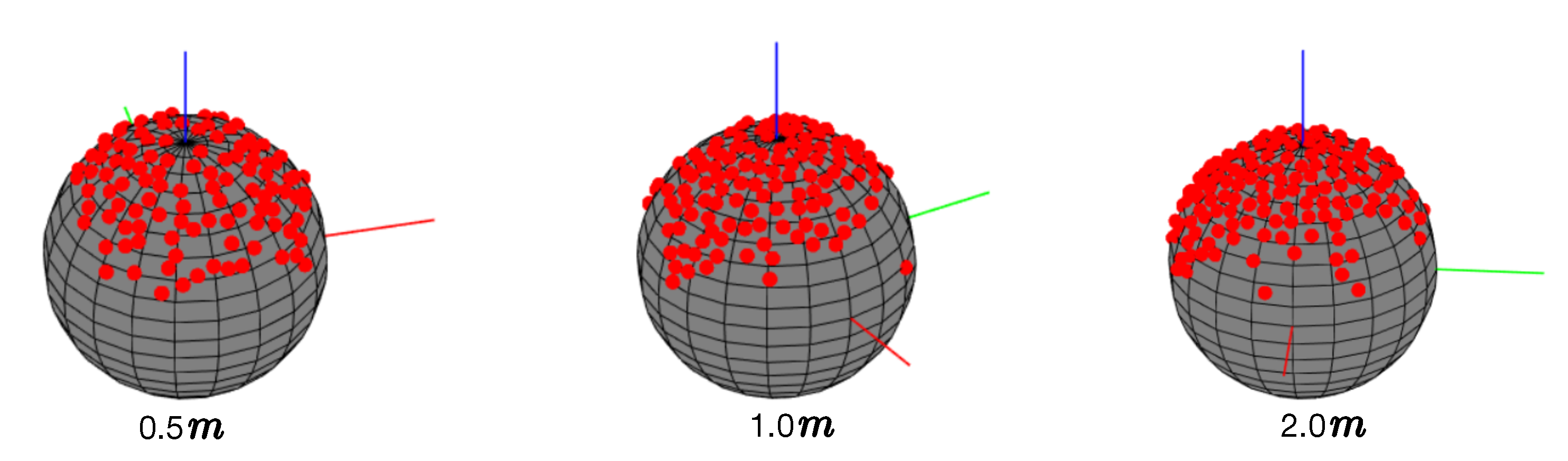

3.2. Data Collection

3.3. Parametrization and Augmentation

4. Experiments

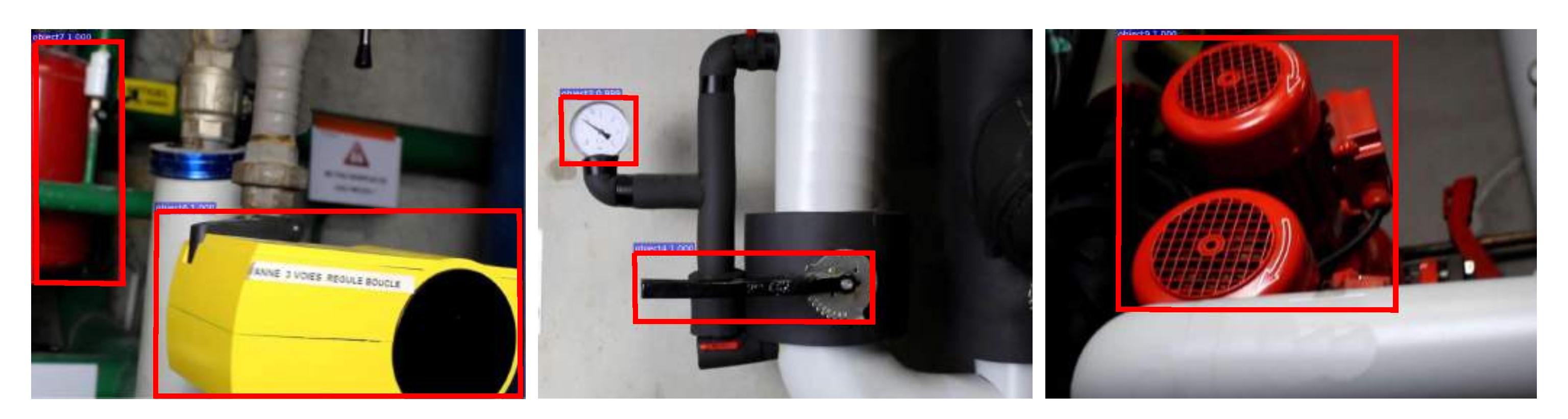

4.1. Industrial10

4.2. Target Object Detection

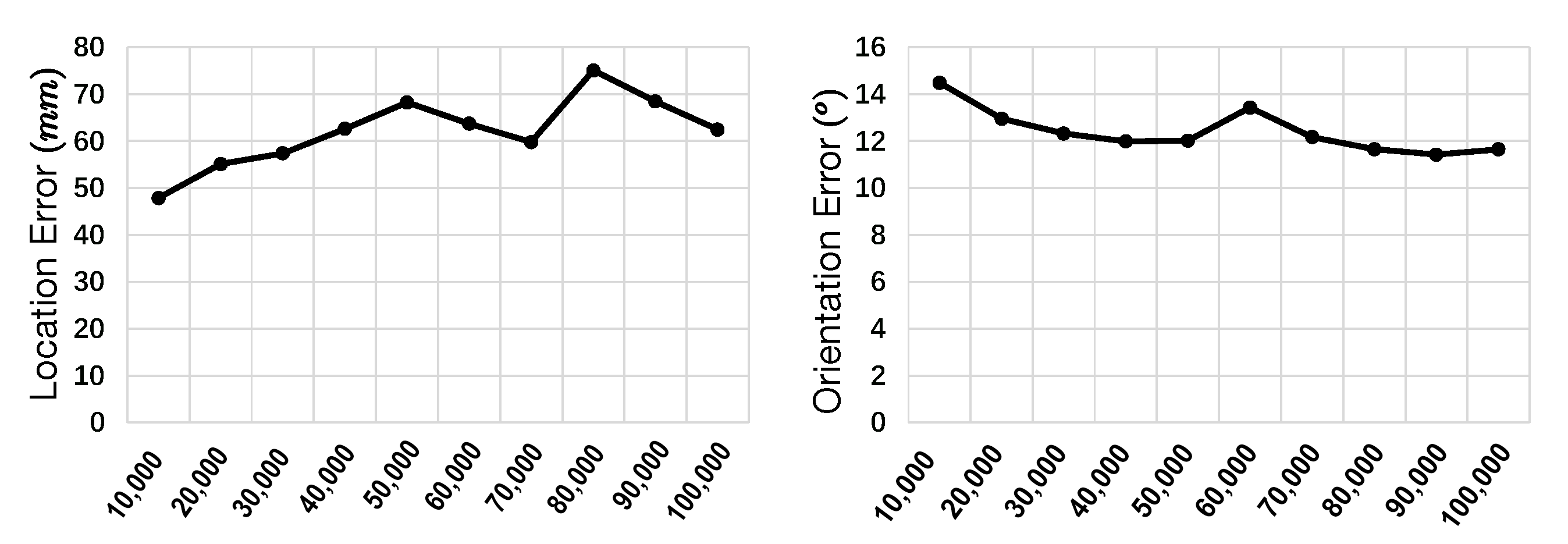

4.3. Ablation Studies of WatchPose

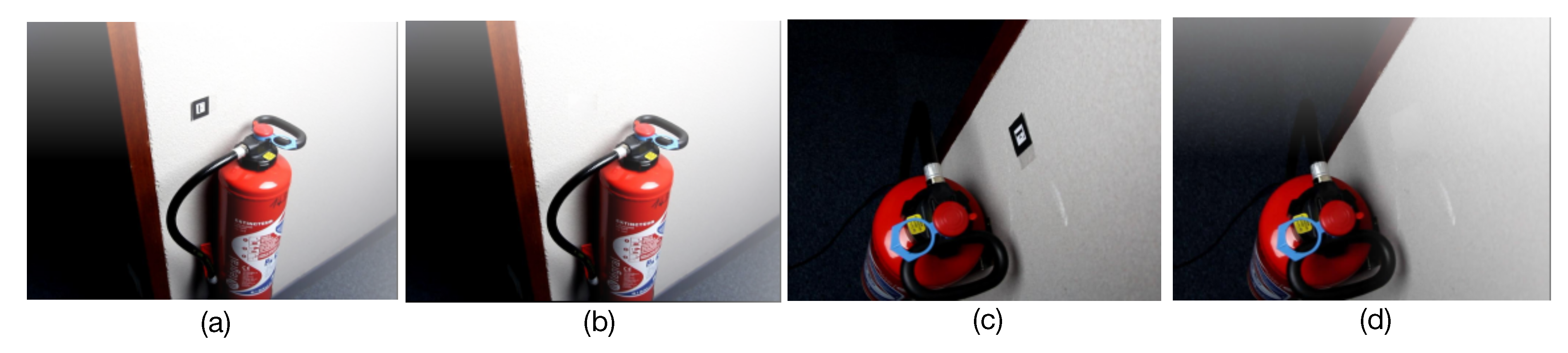

4.3.1. Original Images

4.3.2. Augmentation

4.3.3. Dense Control

4.4. Deep Absolute Pose Estimators

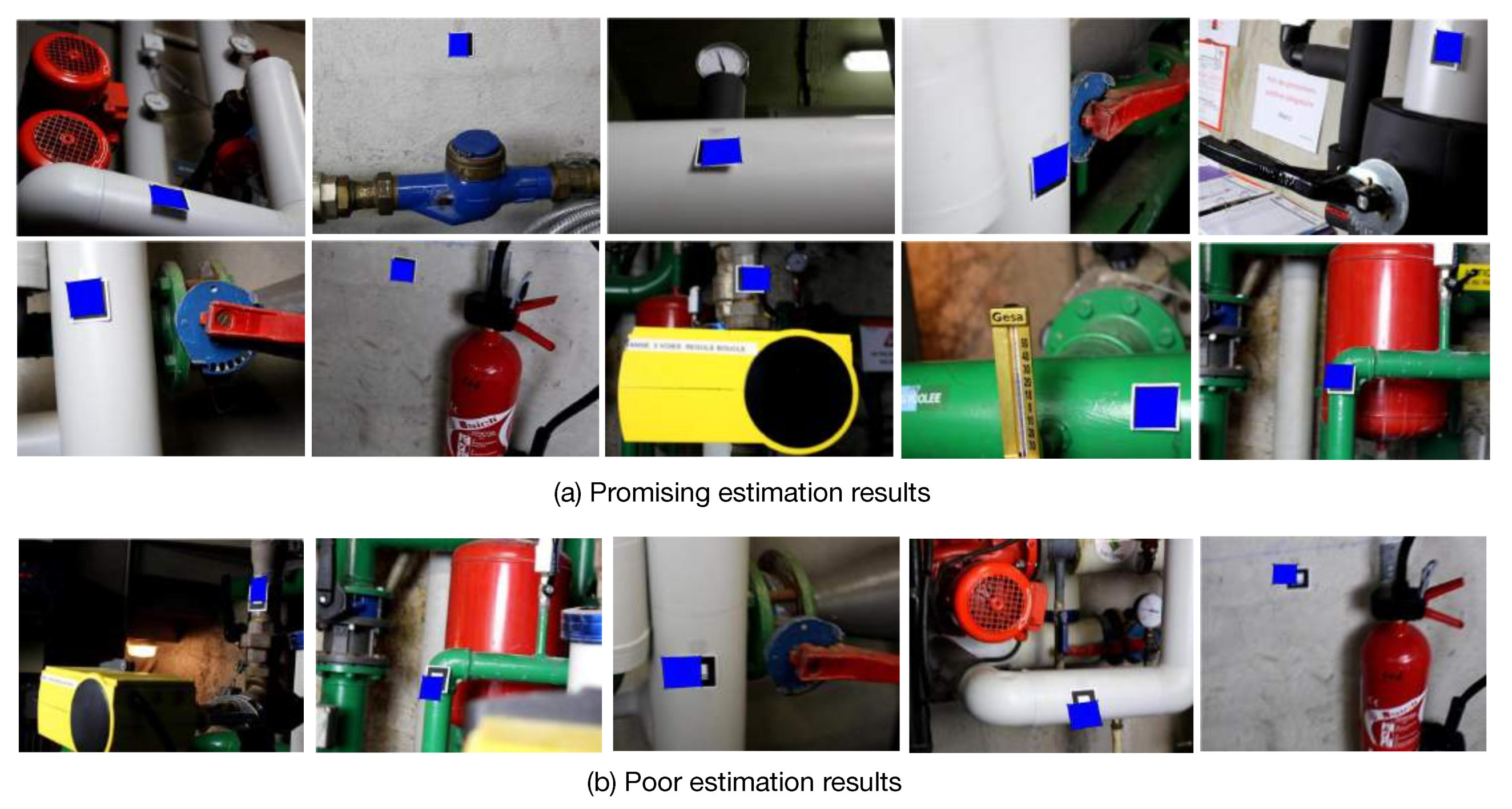

4.5. Discussion on Restrictions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Fernando, L.; Mariano, F.; Zorzal, E. A survey of industrial augmented reality. Comput. Ind. Eng. 2020, 139, 106–159.

2. Williams, B.; Klein, G.; Reid, I. Real-time SLAM relocalisation. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

3. Billinghurst, M.; Clark, A.; Lee, G. A survey of augmented reality. Found. Trends Hum.-Comput. Interact. 2015, 8, 73–272.

4. Sattler, T.; Zhou, Q.; Pollefeys, M.; Leal-Taixe, L. Understanding the Limitations of CNN-based Absolute Camera Pose Regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1–11.

5. Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A convolutional network for real-time 6-dof camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2938–2946.

6. Kendall, A.; Cipolla, R. Geometric loss functions for camera pose regression with deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5974–5983.

7. Brahmbhatt, S.; Gu, J.; Kim, K.; Hays, J.; Kautz, J. Geometry-Aware Learning of Maps for Camera Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2616–2625.

8. Melekhov, I.; Ylioinas, J.; Kannala, J.; Rahtu, E. Image-based localization using hourglass networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 879–886.

9. Naseer, T.; Burgard, W. Deep regression for monocular camera-based 6-dof global localization in outdoor environments. In Proceedings of the International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 1525–1530.

10. Wu, J.; Ma, L.; Hu, X. Delving deeper into convolutional neural networks for camera relocalization. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 5644–5651.

11. Laskar, Z.; Melekhov, I.; Kalia, S.; Kannala, J. Camera relocalization by computing pairwise relative poses using convolutional neural network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 929–938.

12. Brachmann, E.; Krull, A.; Nowozin, S.; Shotton, J.; Michel, F.; Gumhold, S.; Rother, C. DSAC-differentiable RANSAC for camera localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6684–6692.

13. Brachmann, E.; Rother, C. Learning less is more-6d camera localization via 3d surface regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4654–4662.

14. He, Y.; Sun, W.; Huang, H.; Liu, J.; Fan, H.; Sun, J. PVN3D: A Deep Point-wise 3D Keypoints Voting Network for 6DoF Pose Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1–10.

15. Moo, Y.K.; Ono, E.T.Y.; Lepetit, V.; Salzmann, M.; Fua, P. Learning to Find Good Correspondences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1–11.

16. Toft, C.; Stenborg, E.; Hammarstrand, L.; Brynte, L.; Pollefeys, M.; Sattler, T.; Kahl, F. Semantic Match Consistency for Long-Term Visual Localization. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 383–399.

17. Schonberger, J.L.; Pollefeys, M.; Geiger, A.; Sattler, T. Semantic Visual Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1–11.

18. Yogamani, S.; Hughes, C.; Horgan, J.; Sistu, G.; Varley, P.; ODea, D.; Uricar, M.; Milz, S.; Simon, M.; Amende, K.; et al. WoodScape: A multi-task, multi-camera fisheye dataset for autonomous driving. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 1–9.

19. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 1–60.

20. Wohlhart, P.; Lepetit, V. Learning descriptors for object recognition and 3d pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3109–3118.

21. Cao, Z.; Sheikh, Y.; Banerjee, N.K. Real-time scalable 6DOF pose estimation for textureless objects. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 2441–2448.

22. Schwarz, M.; Schulz, H.; Behnke, S. RGB-D object recognition and pose estimation based on pre-trained convolutional neural network features. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 1329–1335.

23. Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the Art on 3D Reconstruction with RGB-D Cameras. Comput. Graph. Forum 2018, 37, 625–652.

24. Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A.W. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the IEEE/ACM International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

25. Rabbi, I.; Ullah, S.; Rahman, S.U.; Alam, A. Extending the Functionality of ARToolKit to Semi Controlled/Uncontrolled Environment. Information 2014, 17, 2823–2832.

26. Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent advances in augmented reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

27. Shavit, Y.; Ferens, R. Introduction to Camera Pose Estimation with Deep Learning. arXiv 2019, arXiv:1907.05272v3.

28. Brachmann, E.; Krull, A.; Michel, F.; Gumhold, S.; Shotton, J.; Rother, C. Learning 6d object pose estimation using 3d object coordinates. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 536–551.

29. Tiebe, O.; Yang, C.; Khan, M.H.; Grzegorzek, M.; Scarpin, D. Stripes-Based Object Matching. In Computer and Information Science; Springer: Cham, Switzerland, 2016; pp. 59–72.

30. Shotton, J.; Glocker, B. Scene coordinate regression forests for camera relocalization in RGB-D images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2930–2937.

31. Bui, M.; Baur, C.; Navab, N.; Ilic, S.; Albarqouni, S. Adversarial Networks for Camera Pose Regression and Refinement. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019; pp. 1–9.

32. Wu, C. Towards Linear-Time Incremental Structure from Motion. In Proceedings of the International Conference on 3D Vision, Seattle, WA, USA, 29 June–1 July 2013; pp. 127–134.

33. Gu, C.; Ren, X. Discriminative Mixture-of-templates for Viewpoint Classification. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 408–421.

34. Aubry, M.; Maturana, D.; Efros, A.A.; Russell, B.C.; Sivic, J. Seeing 3d chairs: Exemplar part-based 2d-3d alignment using a large dataset of cad models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3762–3769.

35. Masci, J.; Migliore, D.; Bronstein, M.M.; Schmidhuber, J. Descriptor Learning for Omnidirectional Image Matching. In Registration and Recognition in Images and Videos; Springer: Berlin/Heidelberg, Germany, 2014; pp. 49–62.

36. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

37. Gall, J.; Yao, A.; Razavi, N.; Van Gool, L.; Lempitsky, V. Hough forests for object detection, tracking, and action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2188–2202.

38. Gupta, S.; Arbeláez, P.; Girshick, R.; Malik, J. Aligning 3D models to RGB-D images of cluttered scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4731–4740.

39. Kendall, A.; Cipolla, R. Modelling uncertainty in deep learning for camera relocalization. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 4762–4769.

40. Walch, F.; Hazirbas, C.; Leal-Taixe, L.; Sattler, T.; Hilsenbeck, S.; Cremers, D. Image-based localization using lstms for structured feature correlation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 627–637.

41. Tateno, K.; Kitahara, I.; Ohta, Y. A Nested Marker for Augmented Reality. In Proceedings of the IEEE Virtual Reality Conference, Charlotte, NC, USA, 10–14 March 2007; pp. 259–262.

42. Crete, F.; Dolmiere, T.; Ladret, P.; Nicolas, M. The blur effect: Perception and estimation with a new no-reference perceptual blur metric. In Proceedings of the Human Vision and Electronic Imaging, San Jose, CA, USA, 29 January–1 February 2007; pp. 1–11.

43. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

44. Zhong, B.; Li, Y. Image Feature Point Matching Based on Improved SIFT Algorithm. In Proceedings of the International Conference on Image, Vision and Computing, Xiamen, China, 5–7 July 2019; pp. 489–493.

45. Korman, S.; Avidan, S. Coherency Sensitive Hashing. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1099–1112.

46. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

47. Russell, B.; Torralba, A.; Murphy, K.; Freeman, W. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

48. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

49. Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge Results. 2007. Available online: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/ (accessed on 26 May 2020).

50. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

51. Tian, Y.; Jiang, W. Gimbal Handheld Holder. U.S. Patent 10,208,887, 19 February 2019.

52. Kim, Y.T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

53. ARCore. Google ARCore. 2017. Available online: https://developers.google.com/ar/ (accessed on 26 May 2020).

| Object | Average Precision | Object | Average Precision |
|---|---|---|---|
| Object1 | 95.0% | Object6 | 97% |
| Object2 | 99.2% | Object7 | 99.6% |
| Object3 | 100% | Object8 | 100% |
| Object4 | 99.5% | Object9 | 100% |
| Object5 | 100% | Object10 | 99.5% |

| Objects | Traditional [5,7] location error | Traditional [5,7] orientation error | WatchPose location error | WatchPose orientation error | Objects | Traditional [5,7] location error | Traditional [5,7] orientation error | WatchPose location error | WatchPose orientation error |
|---|---|---|---|---|---|---|---|---|---|
| Object1 | 368.2857 mm | 77.0220° | 79.1773 mm | 19.7309° | Object6 | 391.6417 mm | 79.5715° | 82.9850 mm | 17.1254° |
| Object2 | 350.5098 mm | 63.8454° | 81.3936 mm | 20.2622° | Object7 | 291.6453 mm | 81.2755° | 61.2231 mm | 23.2668° |
| Object3 | 381.9421 mm | 80.2673° | 84.8384 mm | 21.4031° | Object8 | 237.5793 mm | 59.0616° | 50.4712 mm | 16.5201° |
| Object4 | 341.1610 mm | 83.6164° | 69.9175 mm | 20.8298° | Object9 | 280.1659 mm | 81.4738° | 56.7338 mm | 18.3799° |
| Object5 | 355.6238 mm | 86.3258° | 73.7141 mm | 22.0913° | Object10 | 278.5611 mm | 77.3197° | 58.4822 mm | 19.9535° |

| Train Types | Testing Set: With-Inpainting | Testing Set: Without-Inpainting |
|---|---|---|
| Training set: with-inpainting | 69.8936 mm, 19.9563° | 210.5763 mm, 25.1174° |
| Training set: without-inpainting | 360.1666 mm, 31.7290° | 60.6378 mm, 20.5549° |

| Objects | Traditional location error | Traditional orientation error | WatchPose location error | WatchPose orientation error | Objects | Traditional location error | Traditional orientation error | WatchPose location error | WatchPose orientation error |
|---|---|---|---|---|---|---|---|---|---|
| Object1 | 321.4866 mm | 69.1536° | 16.6907 mm | 4.6411° | Object6 | 389.0706 mm | 76.0915° | 7.3524 mm | 3.9631° |
| Object2 | 311.8624 mm | 60.1966° | 16.5327 mm | 5.6037° | Object7 | 280.2132 mm | 79.5342° | 12.4772 mm | 5.5193° |
| Object3 | 360.3298 mm | 76.3290° | 19.7768 mm | 5.5316° | Object8 | 229.0576 mm | 51.5531° | 7.9837 mm | 3.0772° |
| Object4 | 339.3160 mm | 80.3916° | 15.6313 mm | 5.0524° | Object9 | 266.6300 mm | 75.0026° | 10.7575 mm | 4.3059° |
| Object5 | 354.3400 mm | 81.3303° | 15.3658 mm | 5.3537° | Object10 | 271.8297 mm | 72.1473° | 10.4555 mm | 4.6450° |
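The tables above report a location error in millimetres and an orientation error in degrees for each object. The text here does not spell out how these two numbers are computed. The sketch below assumes the convention commonly used in absolute pose regression work such as PoseNet [5]: the Euclidean distance between predicted and ground-truth camera positions, and the angle of the rotation separating the predicted and ground-truth quaternions. The function names and the sample poses are hypothetical and only illustrate the arithmetic.

```python
import math


def location_error(t_pred, t_true):
    """Euclidean distance between two 3D positions (same unit as the inputs)."""
    return math.dist(t_pred, t_true)


def orientation_error_deg(q_pred, q_true):
    """Angle in degrees between two rotations given as (w, x, y, z) quaternions."""
    # Normalise so that unnormalised regressor outputs are handled as well.
    norm_pred = math.sqrt(sum(c * c for c in q_pred))
    norm_true = math.sqrt(sum(c * c for c in q_true))
    dot = sum(a * b for a, b in zip(q_pred, q_true)) / (norm_pred * norm_true)
    # q and -q encode the same rotation, hence the absolute value;
    # clamp to 1.0 to guard acos against floating-point overshoot.
    dot = min(1.0, abs(dot))
    return math.degrees(2.0 * math.acos(dot))


# Hypothetical poses (positions in mm), for illustration only.
t_true, t_pred = (0.0, 0.0, 0.0), (3.0, 4.0, 0.0)
q_true = (1.0, 0.0, 0.0, 0.0)  # identity rotation
q_pred = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))  # 90 degrees about z

print(location_error(t_pred, t_true))         # 5.0 (mm)
print(orientation_error_deg(q_pred, q_true))  # close to 90 (degrees)
```

Under this assumption, each table cell would be such an error aggregated over the test images of one object; whether the aggregate is a mean or a median is not stated here.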

| | Original Images | Location Error (mm) | Orientation Error (°) |
|---|---|---|---|
| 0 | 2367 | 16.7402 | 4.6613 |
| 0.2 | 1793 | 16.6907 | 4.6411 |
| 0.4 | 1434 | 29.989 | 7.2499 |
| 0.6 | 1075 | 103.663 | 26.8137 |
| 0.8 | 717 | 212.5046 | 47.2115 |
| 1 | 358 | 325.8071 | 72.7677 |

| WatchPose | PoseNet [5] location | PoseNet [5] orientation | Bayesian-PoseNet [39] location | Bayesian-PoseNet [39] orientation | Hourglass-Pose [8] location | Hourglass-Pose [8] orientation | PoseNet+ [6] location | PoseNet+ [6] orientation | MapNet [7] location | MapNet [7] orientation |
|---|---|---|---|---|---|---|---|---|---|---|
| Object1 | 16.691 mm | 4.641° | 17.717 mm | 3.223° | 7.132 mm | 2.952° | 6.551 mm | 2.338° | 5.259 mm | 2.100° |
| Object2 | 16.533 mm | 5.604° | 15.505 mm | 4.213° | 11.040 mm | 4.041° | 11.608 mm | 4.084° | 9.646 mm | 3.568° |
| Object3 | 19.777 mm | 5.532° | 18.740 mm | 4.102° | 12.183 mm | 4.095° | 11.953 mm | 3.843° | 10.676 mm | 3.288° |
| Object4 | 15.631 mm | 5.052° | 17.946 mm | 3.986° | 9.635 mm | 3.541° | 8.325 mm | 3.476° | 9.466 mm | 3.096° |
| Object5 | 15.366 mm | 5.354° | 14.364 mm | 3.585° | 10.865 mm | 3.193° | 12.225 mm | 2.778° | 9.105 mm | 2.405° |
| Object6 | 7.352 mm | 3.963° | 7.311 mm | 2.475° | 5.803 mm | 2.087° | 4.285 mm | 2.058° | 4.882 mm | 1.988° |
| Object7 | 12.477 mm | 5.519° | 13.307 mm | 3.985° | 7.523 mm | 3.371° | 6.950 mm | 3.112° | 5.973 mm | 3.020° |
| Object8 | 7.984 mm | 3.077° | 8.154 mm | 2.603° | 5.419 mm | 2.677° | 5.727 mm | 2.539° | 3.785 mm | 2.395° |
| Object9 | 10.758 mm | 4.306° | 10.587 mm | 3.369° | 6.276 mm | 2.923° | 5.785 mm | 2.716° | 4.308 mm | 2.467° |
| Object10 | 10.456 mm | 4.645° | 11.988 mm | 3.555° | 6.405 mm | 3.114° | 6.620 mm | 2.996° | 4.685 mm | 2.411° |
| Mean | 13.303 mm | 4.769° | 13.562 mm | 3.510° | 8.228 mm | 3.199° | 8.003 mm | 2.99° | 6.779 mm | 2.674° |

| Traditional | PoseNet [5] location | PoseNet [5] orientation | Bayesian-PoseNet [39] location | Bayesian-PoseNet [39] orientation | Hourglass-Pose [8] location | Hourglass-Pose [8] orientation | PoseNet+ [6] location | PoseNet+ [6] orientation | MapNet [7] location | MapNet [7] orientation |
|---|---|---|---|---|---|---|---|---|---|---|
| Object1 | 321.487 mm | 69.154° | 315.742 mm | 66.544° | 295.021 mm | 62.792° | 284.158 mm | 57.688° | 260.900 mm | 55.758° |
| Object2 | 311.862 mm | 60.197° | 312.967 mm | 55.300° | 253.023 mm | 56.655° | 241.538 mm | 51.312° | 230.533 mm | 48.319° |
| Object3 | 360.330 mm | 76.329° | 355.475 mm | 71.164° | 290.545 mm | 70.936° | 279.047 mm | 72.325° | 233.519 mm | 64.396° |
| Object4 | 339.316 mm | 80.392° | 341.601 mm | 76.356° | 295.942 mm | 74.763° | 280.991 mm | 71.527° | 239.109 mm | 63.830° |
| Object5 | 354.340 mm | 81.330° | 352.981 mm | 74.372° | 299.807 mm | 79.449° | 286.456 mm | 72.869° | 256.954 mm | 57.061° |
| Object6 | 389.071 mm | 76.092° | 265.340 mm | 72.558° | 322.610 mm | 73.795° | 310.644 mm | 69.460° | 269.646 mm | 56.306° |
| Object7 | 280.213 mm | 79.534° | 282.665 mm | 77.425° | 261.754 mm | 79.773° | 250.111 mm | 77.497° | 233.322 mm | 67.701° |
| Object8 | 229.058 mm | 51.553° | 221.250 mm | 56.541° | 210.119 mm | 49.858° | 190.307 mm | 47.544° | 155.271 mm | 40.131° |
| Object9 | 266.630 mm | 75.003° | 275.934 mm | 75.422° | 215.861 mm | 71.598° | 203.967 mm | 69.300° | 181.857 mm | 57.695° |
| Object10 | 271.830 mm | 72.147° | 268.961 mm | 71.184° | 234.627 mm | 72.644° | 222.443 mm | 70.313° | 200.762 mm | 64.317° |
| Mean | 312.414 mm | 72.173° | 299.292 mm | 69.687° | 267.931 mm | 69.226° | 254.966 mm | 65.984° | 226.187 mm | 57.551° |
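The Mean rows of the two estimator comparison tables above can be checked directly against the per-object entries. The sketch below does this for the PoseNet [5] columns of the first of the two tables (the one whose errors stay below 20 mm). The values are copied from that table; the helper name `column_mean` is made up for this example.

```python
from statistics import fmean

# PoseNet [5] entries for Object1..Object10, copied from the first table above.
location_mm = [16.691, 16.533, 19.777, 15.631, 15.366,
               7.352, 12.477, 7.984, 10.758, 10.456]
orientation_deg = [4.641, 5.604, 5.532, 5.052, 5.354,
                   3.963, 5.519, 3.077, 4.306, 4.645]


def column_mean(values):
    """Arithmetic mean of one table column."""
    return fmean(values)


print(f"mean location error:    {column_mean(location_mm):.4f} mm")
print(f"mean orientation error: {column_mean(orientation_deg):.4f} deg")
```

The two means come out at about 13.3025 mm and 4.7693°, which agrees with the 13.303 mm and 4.769° reported in the Mean row after rounding to three decimals.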

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, C.; Simon, G.; See, J.; Berger, M.-O.; Wang, W. WatchPose: A View-Aware Approach for Camera Pose Data Collection in Industrial Environments. Sensors 2020, 20, 3045. https://doi.org/10.3390/s20113045
