Simultaneous Hand Gesture Classification and Finger Angle Estimation via a Novel Dual-Output Deep Learning Model

Abstract

1. Introduction

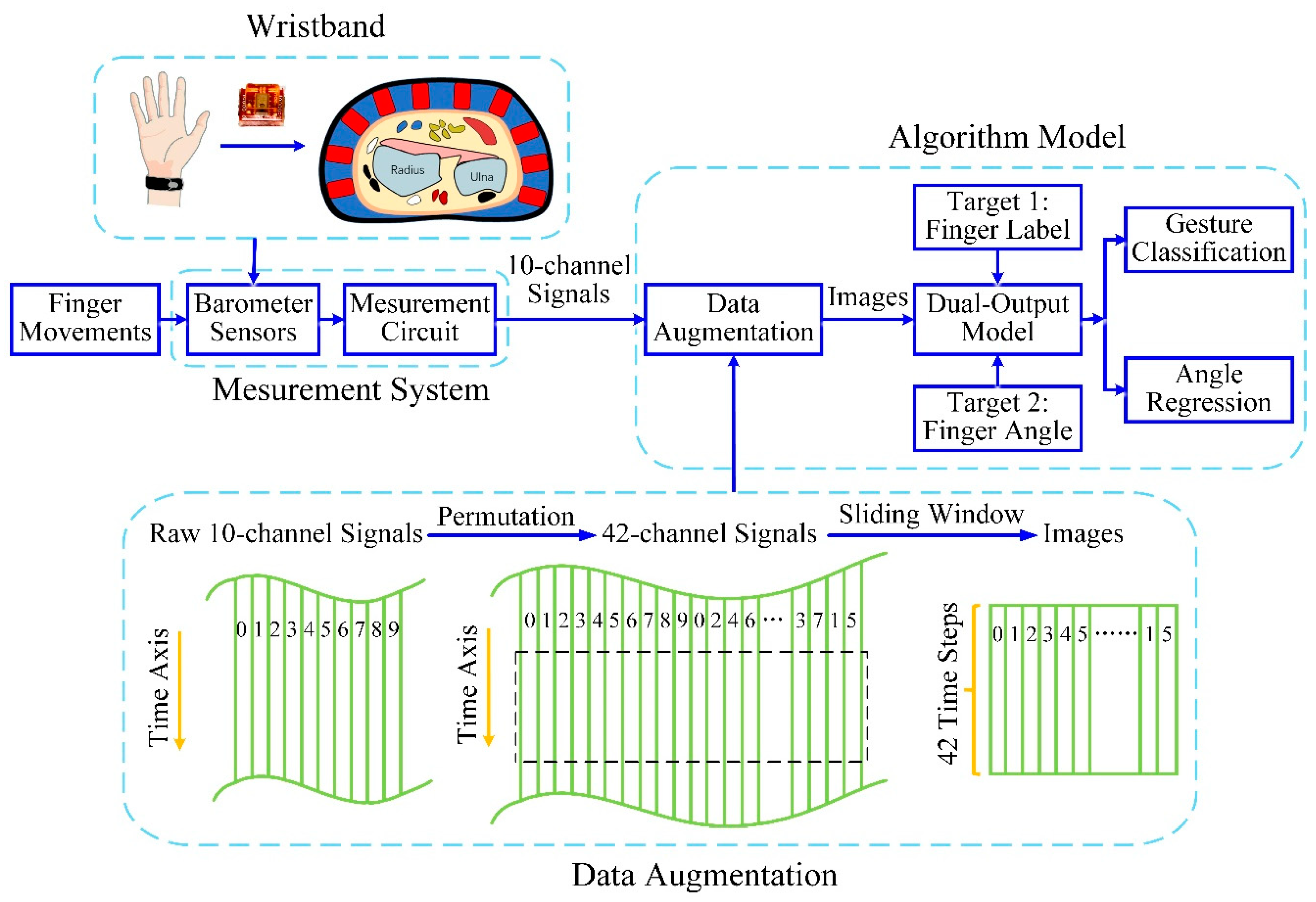

2. Materials and Methods

2.1. Wristband Prototype and Experiment Protocol

2.1.1. Wristband Prototype

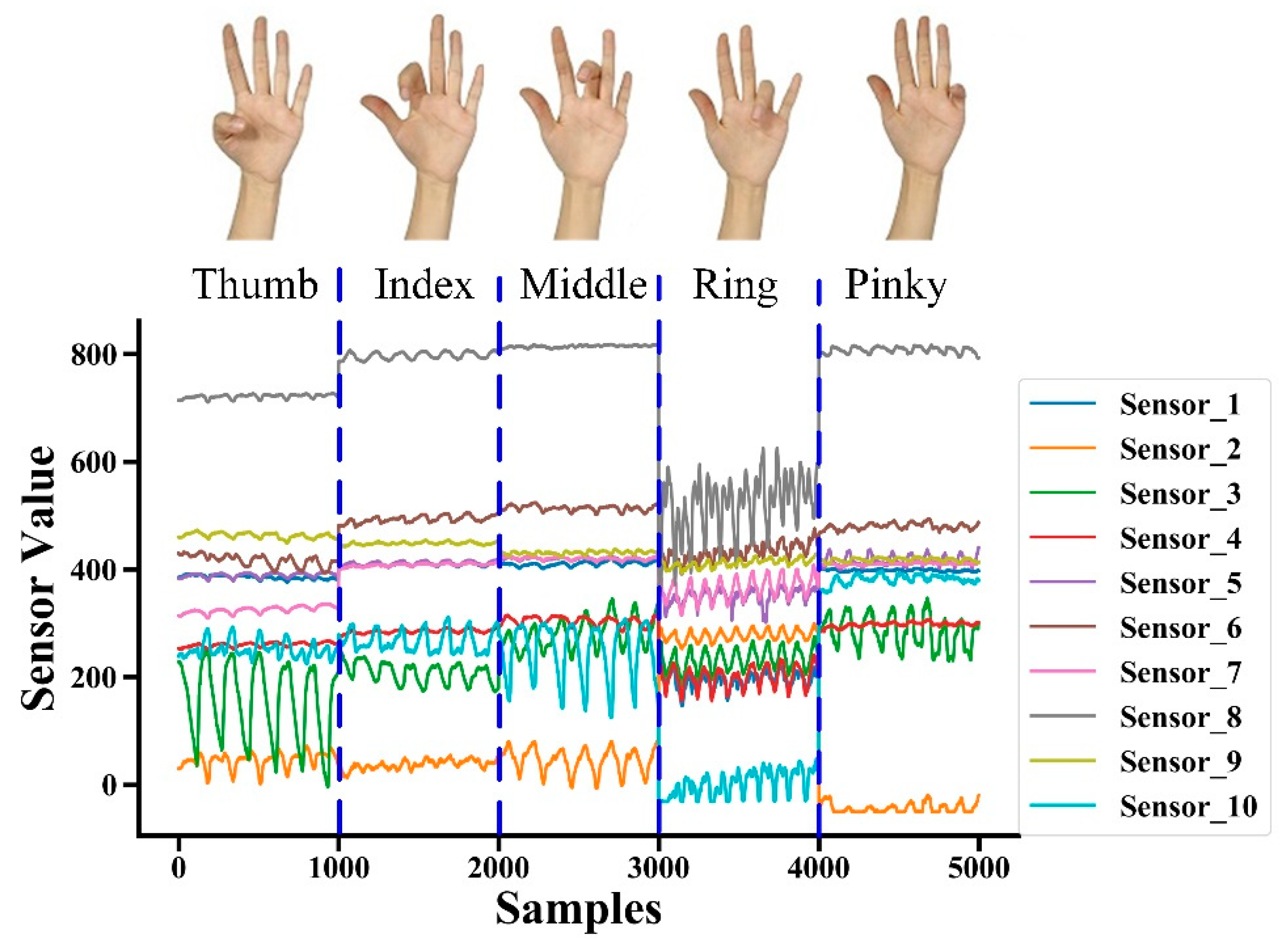

2.1.2. Gesture Set

2.1.3. Experiment Protocol

2.2. Data Augmentation

1. The N-channel signals are arranged into a 2D array of size $L \times N$, where $L$ is the time length of the signal sequences. This is the original signal array.

2. The 2D array from Step 1 is permuted into an array of size $L \times N^{*}$, where $N^{*}$ is the number of columns of the newly generated array, chosen so that each signal sequence is adjacent to every other sequence [28]. For example, in 3-channel signals [1, 2, 3], columns 3 and 1 are not adjacent; rearranging them into [1, 2, 3, 1] makes every column adjacent to every other column, which captures the spatial information between columns. In this example, $N^{*} = 4$.

3. The new signal array from Step 2 is converted into a movement image by windowing; the image size is $W \times N^{*}$, where $W$ is the window length.

4. The window slides along the time axis to generate different movement images, which are used as new inputs to the model.
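The steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function names `adjacency_order` and `movement_images` and the `stride` parameter are hypothetical, and the greedy ordering rule is an assumption chosen only because it reproduces the 3-channel example ([1, 2, 3] becomes [1, 2, 3, 1], written 0-based in the code). The text does not specify how the permutation is built for larger channel counts.

```python
import numpy as np


def adjacency_order(n_channels):
    """Return a column order in which every channel pair is adjacent at least once.

    Assumed greedy rule (not taken from the paper): start from the natural
    order, then keep appending a channel that forms a not-yet-adjacent pair
    with the last column.
    """
    order = list(range(n_channels))
    uncovered = {(i, j) for i in range(n_channels) for j in range(i + 1, n_channels)}
    # Pairs that are already neighbours in the natural order.
    for a, b in zip(order, order[1:]):
        uncovered.discard((min(a, b), max(a, b)))
    while uncovered:
        cur = order[-1]
        partners = [j for j in range(n_channels)
                    if (min(cur, j), max(cur, j)) in uncovered]
        # If the last column has no missing neighbour, jump to a channel that does.
        nxt = partners[0] if partners else min(uncovered)[0]
        order.append(nxt)
        uncovered.discard((min(cur, nxt), max(cur, nxt)))
    return order


def movement_images(signals, window, stride=1):
    """Convert a (time, channels) array into a stack of windowed movement images."""
    if signals.shape[0] < window:
        raise ValueError("signal is shorter than the window length")
    permuted = signals[:, adjacency_order(signals.shape[1])]  # Step 2: permute columns
    starts = range(0, permuted.shape[0] - window + 1, stride)  # Step 4: slide the window
    return np.stack([permuted[s:s + window] for s in starts])  # Step 3: cut out images


# Toy data: 10 time samples from 3 channels.
signals = np.arange(30).reshape(10, 3)
images = movement_images(signals, window=4)
print(adjacency_order(3), images.shape)
```

Each slice `images[k]` is one movement image whose rows are time samples and whose columns follow the permuted channel order. A larger `stride` yields fewer, less overlapping images.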

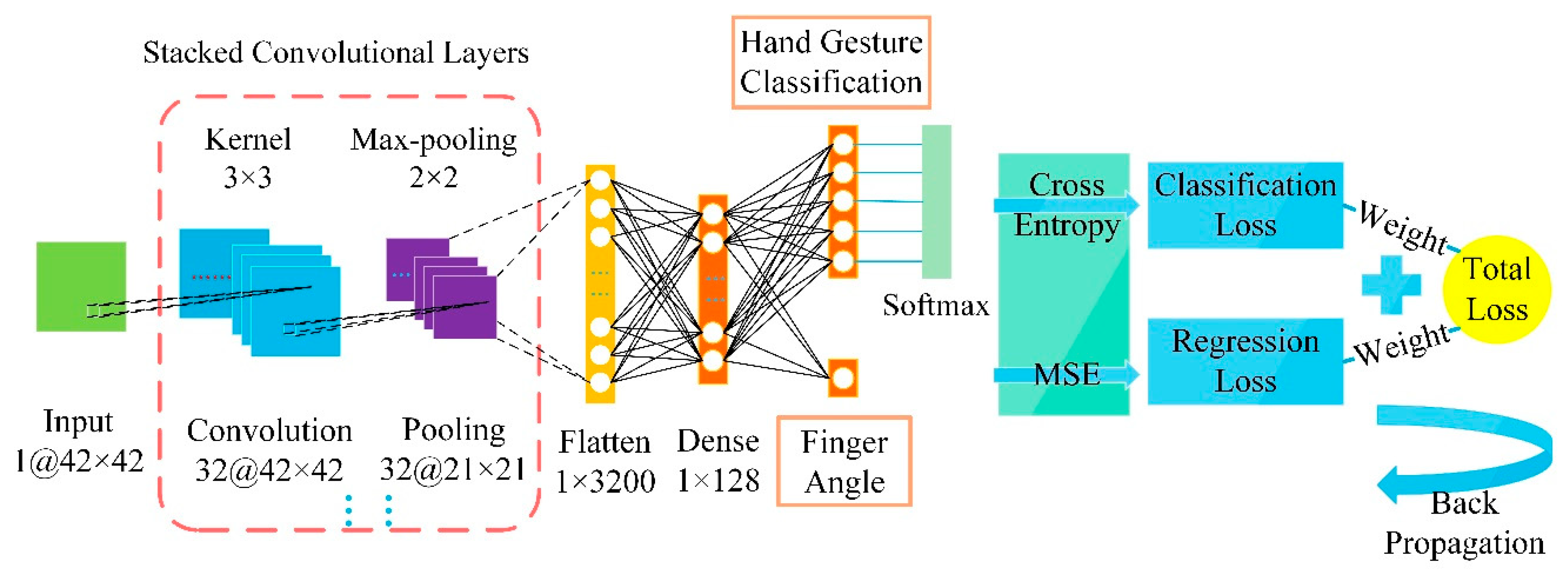

2.3. Model Architecture

2.3.1. Convolutional Layer

2.3.2. Dual Outputs

2.3.3. Loss Weighting and Training Algorithm

2.4. Model Performance Evaluation

2.5. Benchmarking against Other Machine Learning Models

2.5.1. CNN without Data Augmentation

2.5.2. RNN

2.5.3. SVM

2.5.4. RF

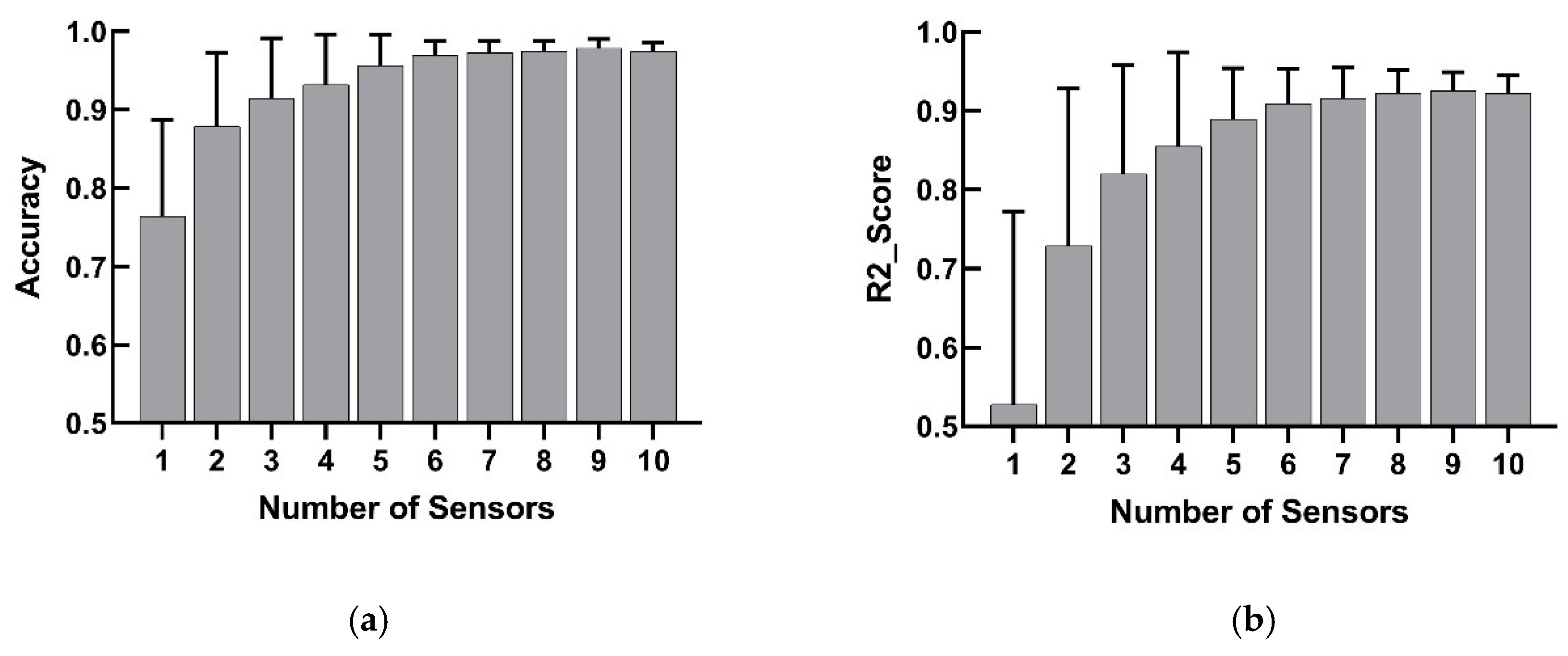

2.6. The Impact of Sensor Numbers on the Dual-Output Model

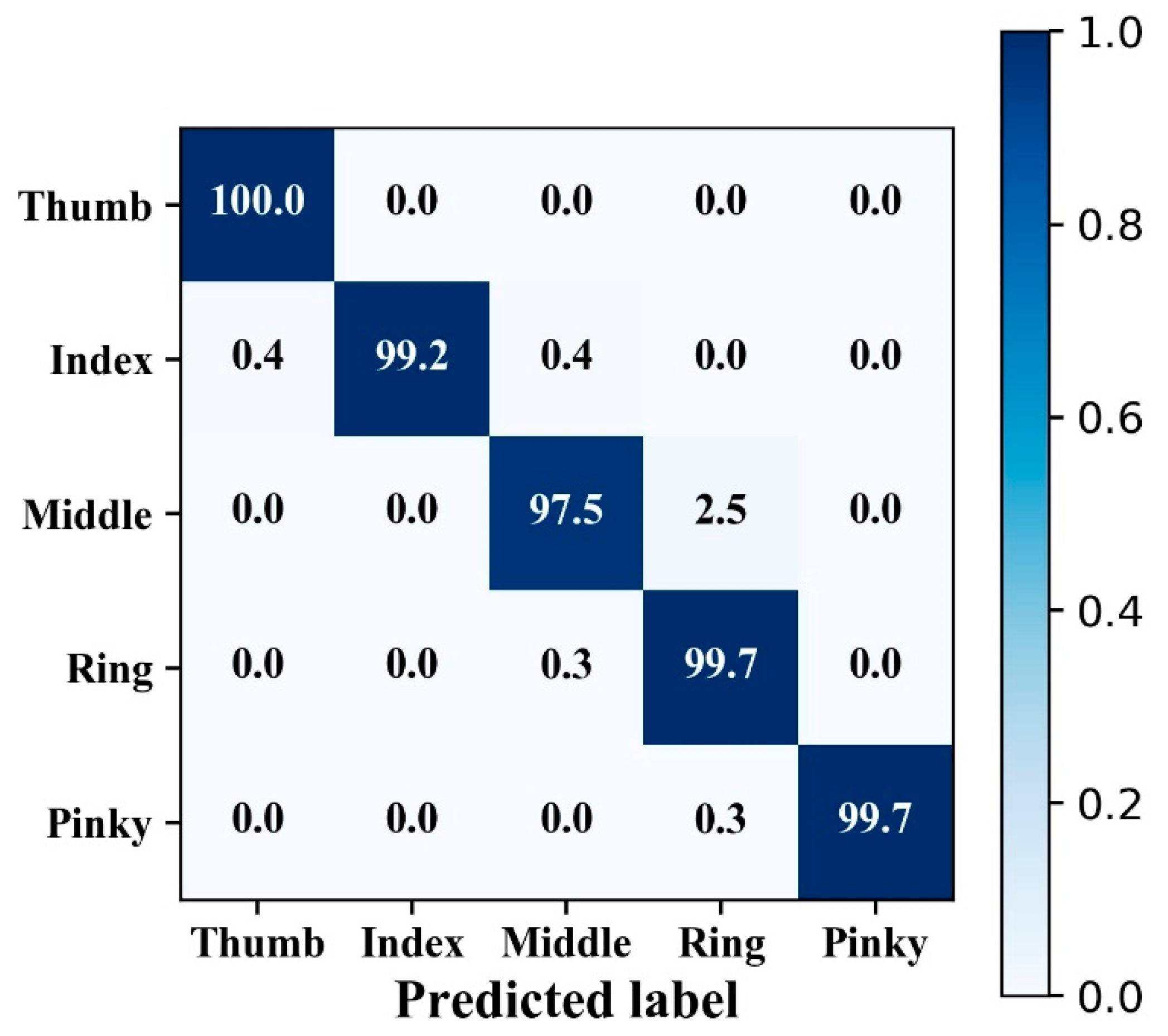

3. Results

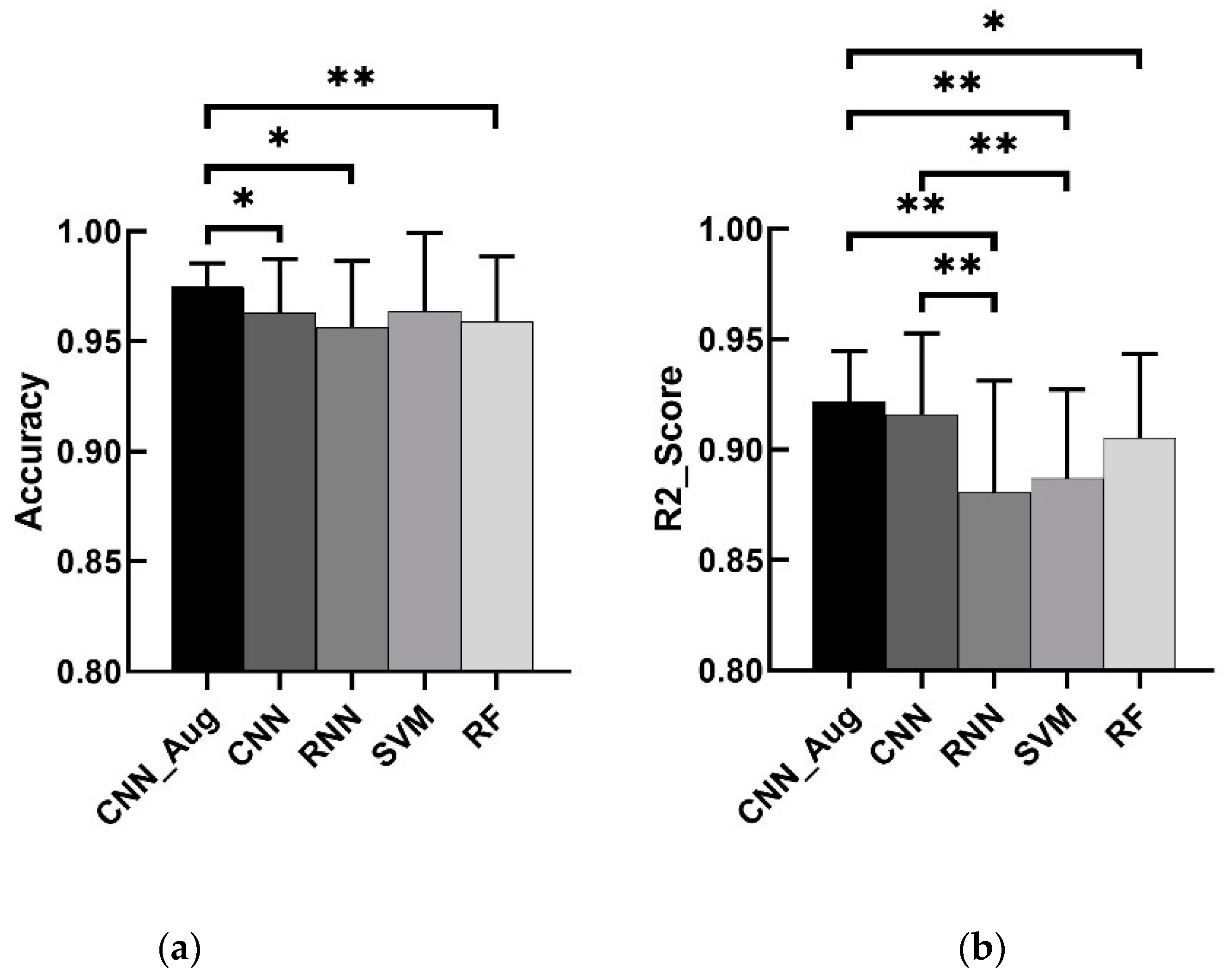

4. Discussion

4.1. Comparison with Previous Results

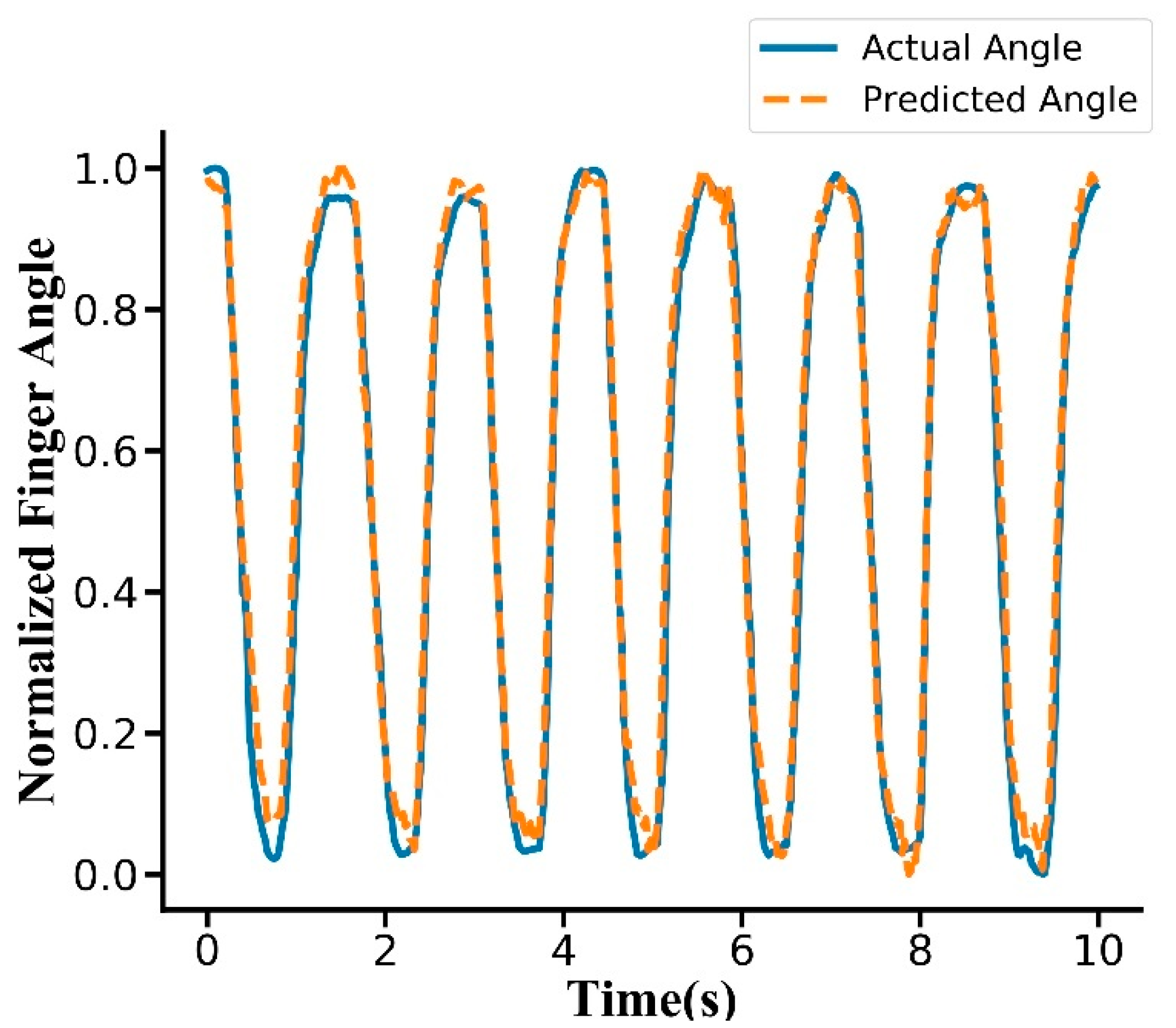

4.2. Analysis of Confusion Matrix and Fitting Curve

4.3. Effects of Hyperparameters

4.3.1. Window Length

4.3.2. Network Structure of the Model

4.3.3. Batch Size and Epoch

4.3.4. Weight Ratio

4.4. Feature Extraction in Sequential Signals and Image Signals for CNN

4.4.1. Feature Extraction in Sequential Signals for CNN

4.4.2. Feature Extraction in Converted Image Signals for CNN

4.5. Comparison with Other Machine Learning Models

4.5.1. Comparison between Dual-Output Models with and without Data Augmentation

4.5.2. Comparison between Dual-Output Models Based on CNN with Data Augmentation and RNN

4.5.3. Comparison between Dual-Output Models and Single-Output Models

4.6. Comparison of Results with Different Sensor Numbers

4.7. Calculation Time and Real-Time Application

4.8. Improvement of Dual-Output Model

4.9. Benefits of the Dual-Output Model Compared with Stacked Single-Output Models

4.10. Limitations and Future Work

4.11. Potential Applications

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Mitra, S.; Acharya, T. Gesture Recognition: A Survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 311–324.

2. Galka, J.; Masior, M.; Zaborski, M.; Barczewska, K. Inertial Motion Sensing Glove for Sign Language Gesture Acquisition and Recognition. IEEE Sens. J. 2016, 16, 6310–6316.

3. Xu, D. A neural network approach for hand gesture recognition in virtual reality driving training system of SPG. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006.

4. Lee, S.; Hara, S.; Yamada, Y. A safety measure for control mode switching of skill-assist for effective automotive manufacturing. IEEE Trans. Autom. Sci. Eng. 2009, 7, 817–825.

5. Chen, F.; Lv, H.; Pang, Z.; Zhang, J.; Hou, Y.; Gu, Y.; Yang, H.; Yang, G. WristCam: A Wearable Sensor for Hand Trajectory Gesture Recognition and Intelligent Human-Robot Interaction. IEEE Sens. J. 2019, 19, 8441–8451.

6. Ren, Z.; Meng, J.; Yuan, J.; Zhang, Z. Robust hand gesture recognition with Kinect sensor. In Proceedings of the 19th ACM international conference on Multimedia, Scottsdale, AZ, USA, 28 November–1 December 2011.

7. Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013.

8. Liu, J.; Zhong, L.; Wickramasuriya, J.; Vasudevan, V. uWave: Accelerometer-based personalized gesture recognition and its applications. Pervasive Mob. Comput. 2009, 5, 657–675.

9. Jiang, S.; Lv, B.; Guo, W.; Zhang, C.; Wang, H.; Sheng, X.; Shull, P.B. Feasibility of Wrist-Worn, Real-Time Hand, and Surface Gesture Recognition via sEMG and IMU Sensing. IEEE Trans. Ind. Inf. 2018, 14, 3376–3385.

10. Zhang, Y.; Harrison, C. Tomo: Wearable, low-cost electrical impedance tomography for hand gesture recognition. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 1–4 November 2015.

11. Jiang, S.; Li, L.; Xu, H.; Xu, J.; Gu, G.; Shull, P.B. Stretchable e-Skin Patch for Gesture Recognition on the Back of the Hand. IEEE Trans. Ind. Electron. 2020, 67, 647–657.

12. Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016.

13. Kim, Y.; Toomajian, B. Hand Gesture Recognition Using Micro-Doppler Signatures with Convolutional Neural Network. IEEE Access 2016, 4, 7125–7130.

14. Kawaguchi, J.; Yoshimoto, S.; Kuroda, Y.; Oshiro, O. Estimation of Finger Joint Angles Based on Electromechanical Sensing of Wrist Shape. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1409–1418.

15. Zhou, Y.; Zeng, J.; Li, K.; Fang, Y.; Liu, H. Voluntary and FES-induced Finger Movement Estimation Using Muscle Deformation Features. IEEE Trans. Ind. Electron. 2020, 67, 4002–4012.

16. Smith, R.J.; Tenore, F.; Huberdeau, D.; Etienne-Cummings, R.; Thakor, N.V. Continuous decoding of finger position from surface EMG signals for the control of powered prostheses. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008.

17. Hioki, M.; Kawasaki, H. Estimation of finger joint angles from sEMG using a neural network including time delay factor and recurrent structure. ISRN Rehabil. 2012, 2012, 604314.

18. Pan, L.; Zhang, D.; Liu, J.; Sheng, X.; Zhu, X. Continuous estimation of finger joint angles under different static wrist motions from surface EMG signals. Biomed. Signal Process. Control 2014, 14, 265–271.

19. Antfolk, C.; Cipriani, C.; Controzzi, M.; Carrozza, M.C.; Lundborg, G.; Rosén, B.; Sebelius, F. Using EMG for real-time prediction of joint angles to control a prosthetic hand equipped with a sensory feedback system. J. Med. Biol. Eng. 2010, 30, 399–406.

20. Abraham, Z.; Tan, P.N. An integrated framework for simultaneous classification and regression of time-series data. In Proceedings of the 2010 SIAM International Conference on Data Mining, Columbus, OH, USA, 29 April–1 May 2010.

21. Wang, Z.; Li, W.; Kao, Y.; Zou, D.; Wang, Q.; Ahn, M.; Hong, S. HCR-Net: A Hybrid of Classification and Regression Network for Object Pose Estimation. In Proceedings of the 2018 IJCAI, Stockholm, Sweden, 13–19 July 2018.

22. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X. A public domain dataset for human activity recognition using smartphones. In Proceedings of the ESANN, Bruges, Belgium, 24–26 April 2013.

23. Jiang, S.; Gao, Q.; Liu, H.; Shull, P.B. A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sens. Actuators A 2020, 301, 111738.

24. Liang, R.H.; Ouhyoung, M. A sign language recognition system using hidden markov model and context sensitive search. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, Hong Kong, China, 1–4 July 1996.

25. Huang, Y.; Yang, X.; Li, Y.; Zhou, D.; He, K.; Liu, H. Ultrasound-based sensing models for finger motion classification. IEEE J. Biomed. Health Inf. 2017, 22, 1395–1405.

26. Dwivedi, S.K.; Ngeo, J.G.; Shibata, T. Extraction of Nonlinear Synergies for Proportional and Simultaneous Estimation of Finger Kinematics. IEEE Trans. Biomed. Eng. 2020.

27. Shull, P.B.; Jiang, S.; Zhu, Y.; Zhu, X. Hand Gesture Recognition and Finger Angle Estimation via Wrist-Worn Modified Barometric Pressure Sensing. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 724–732.

28. Murthy, G.; Jadon, R. Hand gesture recognition using neural networks. In Proceedings of the 2010 IEEE 2nd International Advance Computing Conference (IACC), Patiala, India, 19–20 February 2010.

29. Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. In Proceedings of the 9th International Conference on Artificial Neural Networks: ICANN ’99, Edinburgh, UK, 7–10 September 1999.

30. Zhao, R.; Wang, D.; Yan, R.; Mao, K.; Shen, F.; Wang, J. Machine health monitoring using local feature-based gated recurrent unit networks. IEEE Trans. Ind. Electron. 2017, 65, 1539–1548.

31. Al-Timemy, A.H.; Bugmann, G.; Escudero, J.; Outram, N. Classification of finger movements for the dexterous hand prosthesis control with surface electromyography. IEEE J. Biomed. Health Inf. 2013, 17, 608–618.

32. Geng, W.; Du, Y.; Jin, W.; Wei, W.; Hu, Y.; Li, J. Gesture recognition by instantaneous surface EMG images. Sci. Rep. 2016, 6, 36571.

33. Ngeo, J.G.; Tamei, T.; Shibata, T. Continuous and simultaneous estimation of finger kinematics using inputs from an EMG-to-muscle activation model. J. Neuroeng. Rehabil. 2014, 11, 122.

34. Yang, X.; Yan, J.; Chen, Z.; Ding, H.; Liu, H. A Proportional Pattern Recognition Control Scheme for Wearable A-mode Ultrasound Sensing. IEEE Trans. Ind. Electron. 2020, 67, 800–808.

35. Ma, X.; Zhao, Y.; Zhang, L.; Gao, Q.; Pan, M.; Wang, J. Practical Device-Free Gesture Recognition Using WiFi Signals Based on Metalearning. IEEE Trans. Ind. Inf. 2020, 16, 228–237.

36. Farina, D.; Jiang, N.; Rehbaum, H.; Holobar, A.; Graimann, B.; Dietl, H.; Aszmann, O.C. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: Emerging avenues and challenges. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 797–809.

37. Gupta, H.P.; Chudgar, H.S.; Mukherjee, S.; Dutta, T.; Sharma, K. A Continuous Hand Gestures Recognition Technique for Human-Machine Interaction using Accelerometer and Gyroscope sensors. IEEE Sens. J. 2016, 16, 6425–6432.

38. Cenedese, A.; Susto, G.A.; Belgioioso, G.; Cirillo, G.I.; Fraccaroli, F. Home automation oriented gesture classification from inertial measurements. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1200–1210.

39. Megalingam, R.K.; Rangan, V.; Krishnan, S.; Edichery Alinkeezhil, A.B. IR sensor based Gesture Control Wheelchair for Stroke and SCI Patients. IEEE Sens. J. 2016, 16, 6755–6765.

40. Kruse, D.; Wen, J.T.; Radke, R.J. A sensor-based dual-arm tele-robotic system. IEEE Trans. Autom. Sci. Eng. 2014, 12, 4–18.

41. Premaratne, P.; Nguyen, Q. Consumer electronics control system based on hand gesture moment invariants. IET Comput. Vis. 2007, 1, 35–41.

| Model | Time (s) |
|---|---|
| CNN with data augmentation | 0.0444 |
| CNN without data augmentation | 0.0489 |
| RNN | 0.0424 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gao, Q.; Jiang, S.; Shull, P.B. Simultaneous Hand Gesture Classification and Finger Angle Estimation via a Novel Dual-Output Deep Learning Model. Sensors 2020, 20, 2972. https://doi.org/10.3390/s20102972
