Unsupervised Action Proposals Using Support Vector Classifiers for Online Video Processing

Abstract

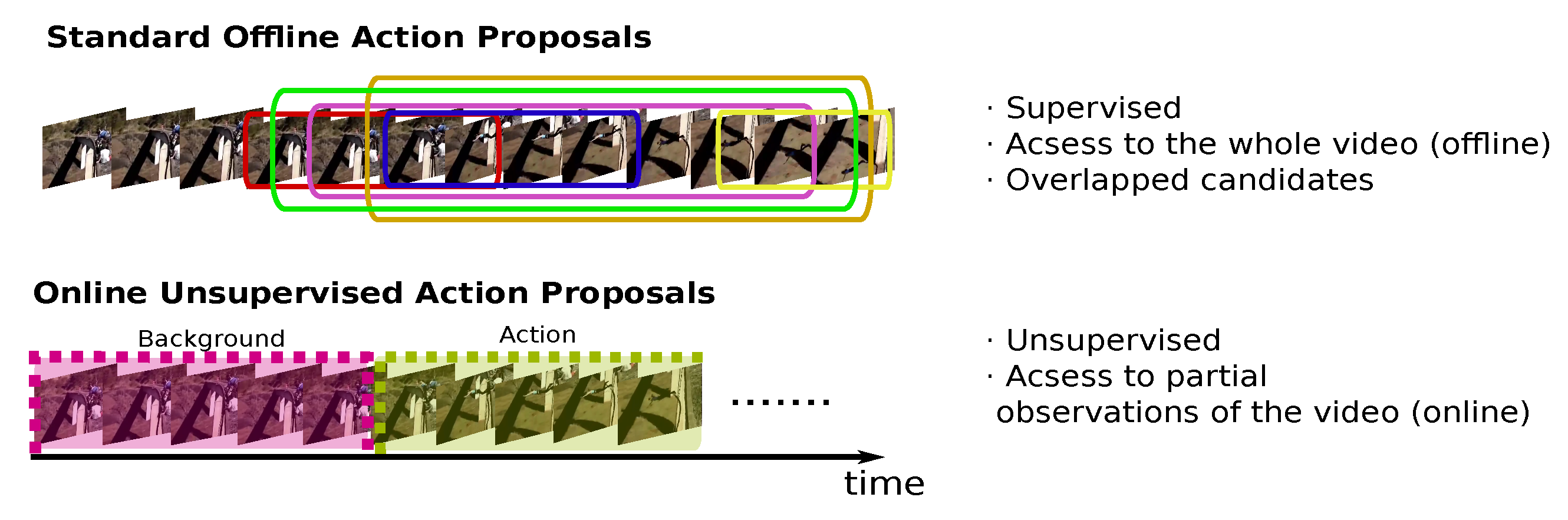

1. Introduction

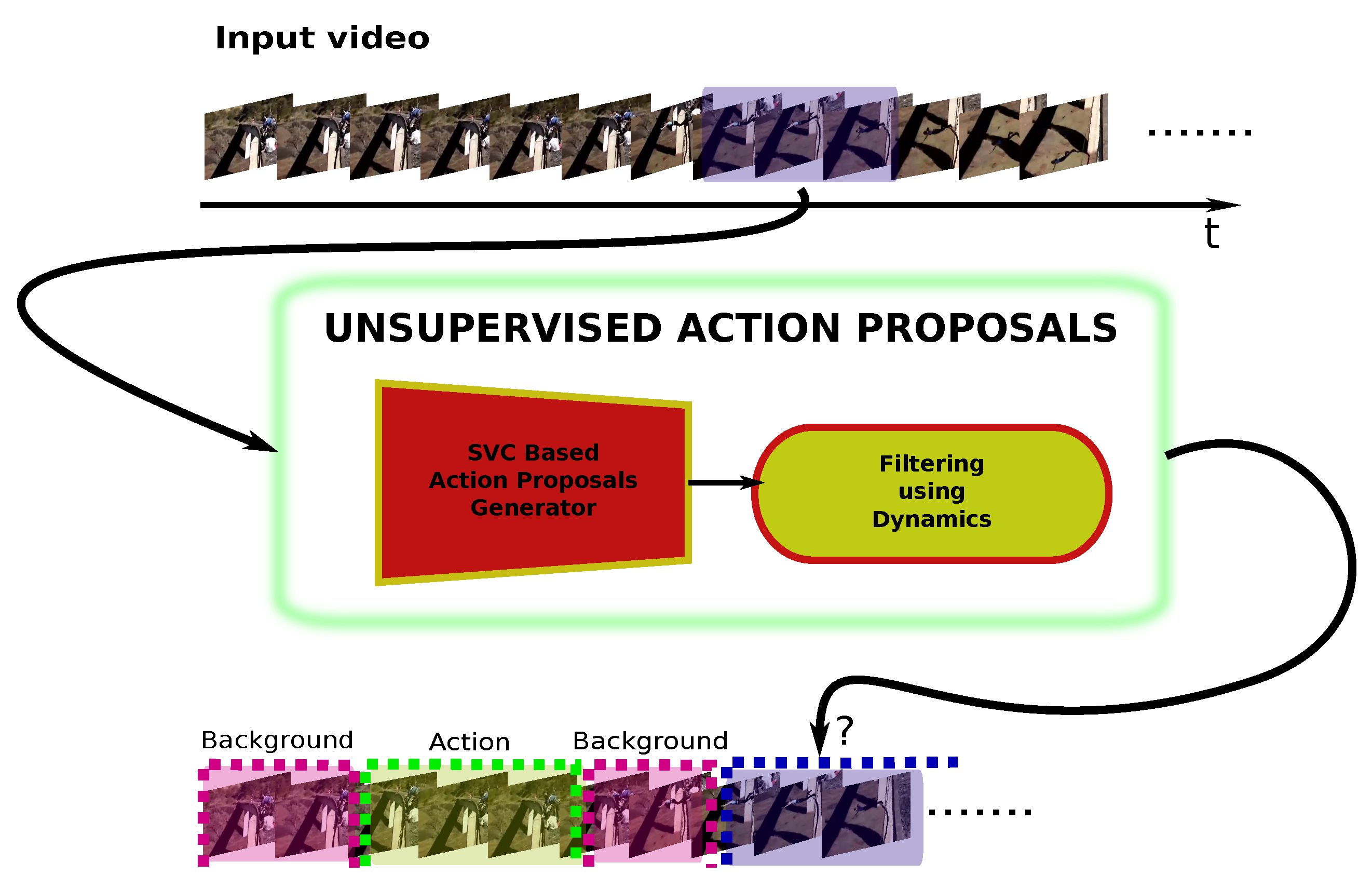

- To the best of our knowledge, we are the first to address the Action Proposals (AP) problem with an unsupervised solution. It is based on two main modules: a Support Vector Classifier (SVC) and a filter based on dynamics. The former discriminates between contiguous sets of video frames to generate candidate segments; the latter computes the dynamics of each segment and applies a distance criterion between those dynamics and the dynamics of a randomly shuffled version of the same segment.

- Unlike all state-of-the-art approaches, ours is the first completely online approach: the video is processed as it arrives at the sensor, without accessing any information from the future or modifying any past decision.

- Compared with the state of the art, our best unsupervised configuration achieves more than 41% and 59% of the performance of the best supervised model on the ActivityNet and THUMOS’14 datasets, respectively.
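As a rough illustration of the first module, the sketch below trains a linear soft-margin classifier to tell two contiguous sets of frame features apart and reports its error rate on those same samples. Everything in it is an assumption made for illustration: the function name `linear_svc_error_rate`, the hyperparameters, the toy 2-D features, and the plain subgradient-descent trainer, which stands in for a library SVC (the reference list cites scikit-learn) so that the example needs only the standard library. It is not the authors' implementation.

```python
def linear_svc_error_rate(set_a, set_b, lam=0.01, lr=0.1, epochs=200):
    """Train a linear soft-margin SVM to separate set_a from set_b and
    return its error rate on those same samples (hypothetical helper)."""
    samples = [list(x) for x in set_a] + [list(x) for x in set_b]
    labels = [-1.0] * len(set_a) + [1.0] * len(set_b)
    n, dim = len(samples), len(samples[0])

    # Centre the pooled features so the bias term is easy to learn.
    mean = [sum(x[j] for x in samples) / n for j in range(dim)]
    samples = [[x[j] - mean[j] for j in range(dim)] for x in samples]

    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        # Gradient of (lam / 2) * ||w||^2 + mean hinge loss.
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for x, y in zip(samples, labels):
            margin = y * (sum(wj * xj for wj, xj in zip(w, x)) + b)
            if margin < 1.0:  # hinge loss is active for this sample
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b

    # Classification error rate on the two sets themselves.
    errors = 0
    for x, y in zip(samples, labels):
        score = sum(wj * xj for wj, xj in zip(w, x)) + b
        predicted = 1.0 if score > 0 else -1.0
        errors += predicted != y
    return errors / n


if __name__ == "__main__":
    earlier = [(0, 0), (0, 1), (1, 0), (1, 1)]   # toy features, older frames
    later = [(5, 5), (5, 6), (6, 5), (6, 6)]     # toy features, newer frames
    print("different content:", linear_svc_error_rate(earlier, later))
    print("same content:     ", linear_svc_error_rate(earlier, earlier))
```

Under this reading, an error rate near 0.5 suggests that the two sets look alike, while an error rate near 0 suggests a change in content and therefore a candidate boundary. The exact decision rule and threshold used by the method are not reproduced here.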

2. Related Work

2.1. Temporal Action Proposals

2.2. Online Action Detection

3. Proposed Method

3.1. Problem Setup

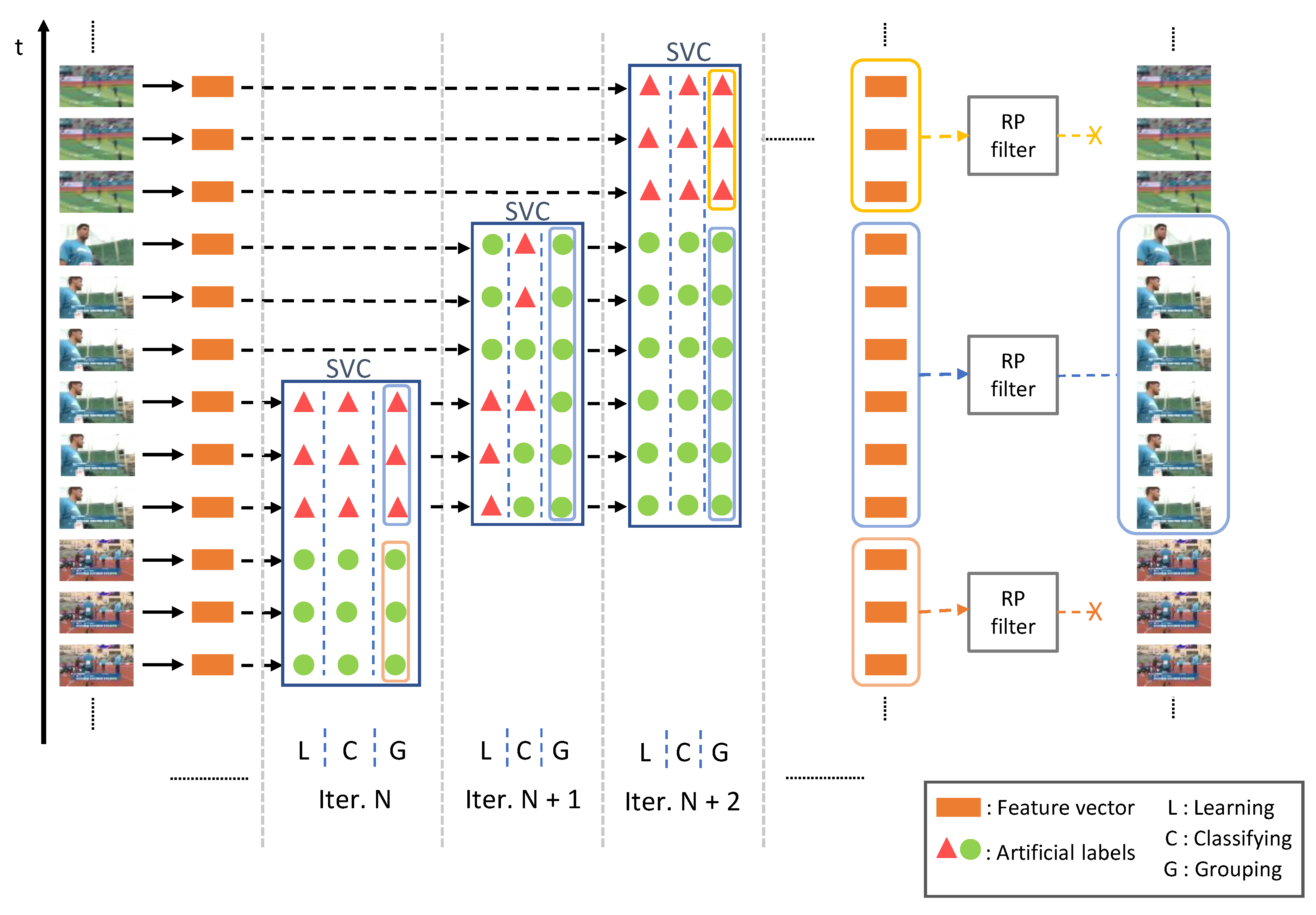

3.2. Learning Unsupervised SVC for AP

3.3. Rank-Pooling Filtering

| Algorithm 1: SVC-UAP method applied to a video to obtain its action proposals | |
|---|---|
| Given a certain video | |
| Input: Incoming frames of a certain video: | |
| Features to collect in each iteration: N | |
| threshold: | |
| Rank Pooling filter threshold: r | |
| Output: Set of action proposals: | |
| 1: | |
| 2: | |
| 3: while not end of video do | |
| 4: if first iteration then | |
| 5: | ▹ First incoming video frames |
| 6: | ▹ Feature extraction |
| 7: | ▹ Split into two sets of features |
| 8: | |
| 9: | |
| 10: else | |
| 11: | ▹ Next incoming video frames |
| 12: | |
| 13: | |
| 14: | |
| 15: | |
| 16: end if | |
| 17: | ▹ Train and apply the SVC module and get the classification error rate |
| 18: if then | ▹ No action proposal found |
| 19: | ▹ Join the two sets |
| 20: else | ▹ Possible action proposal found |
| 21: | ▹ Randomly shuffle the input set |
| 22: | ▹ Distance between rank pooling vectors |
| 23: if then | |
| 24: | ▹ If similar, it is background, so the candidate is discarded |
| 25: else | |
| 26: | ▹ Proposal confirmed |
| 27: | |
| 28: end if | |
| 29: end if | |
| 30: end while | |
| 31: return AP | |
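The filtering steps of Algorithm 1 (shuffle the candidate, compare rank pooling vectors, then keep or discard) can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: it replaces the learned ranking model with a simple closed-form approximation of rank pooling (a weighted sum of frames with weights α_t = 2t − T − 1), it assumes the Euclidean distance, and it assumes a candidate is kept when the distance reaches the threshold `r`. The function names and the `seed` parameter are hypothetical.

```python
import math
import random


def approx_rank_pool(features):
    """Approximate rank pooling: weighted sum of the frame features with
    weights alpha_t = 2t - T - 1 for t = 1..T (a stand-in for a learned
    ranking function)."""
    total = len(features)
    dim = len(features[0])
    pooled = [0.0] * dim
    for t, x in enumerate(features, start=1):
        alpha = 2 * t - total - 1
        for j in range(dim):
            pooled[j] += alpha * x[j]
    return pooled


def dynamics_distance(features, rng):
    """Euclidean distance between the dynamics of a segment and the
    dynamics of a randomly shuffled copy of it."""
    shuffled = list(features)
    rng.shuffle(shuffled)
    return math.dist(approx_rank_pool(features), approx_rank_pool(shuffled))


def keep_candidate(features, r, seed=0):
    """Assumed rule: keep the candidate when shuffling changes its
    dynamics by at least r; otherwise treat it as background."""
    return dynamics_distance(features, random.Random(seed)) >= r


if __name__ == "__main__":
    static = [(1.0, 2.0)] * 20                       # nothing changes over time
    trend = [(float(t),) for t in range(1, 21)]      # steadily evolving feature
    print("static kept:", keep_candidate(static, r=1.0))
    print("trend kept: ", keep_candidate(trend, r=1.0))
```

The intuition is that shuffling a segment with no temporal structure leaves its pooled dynamics almost unchanged, whereas shuffling a segment that evolves over time moves the pooled vector noticeably.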

4. Experiments

4.1. Experimental Setup

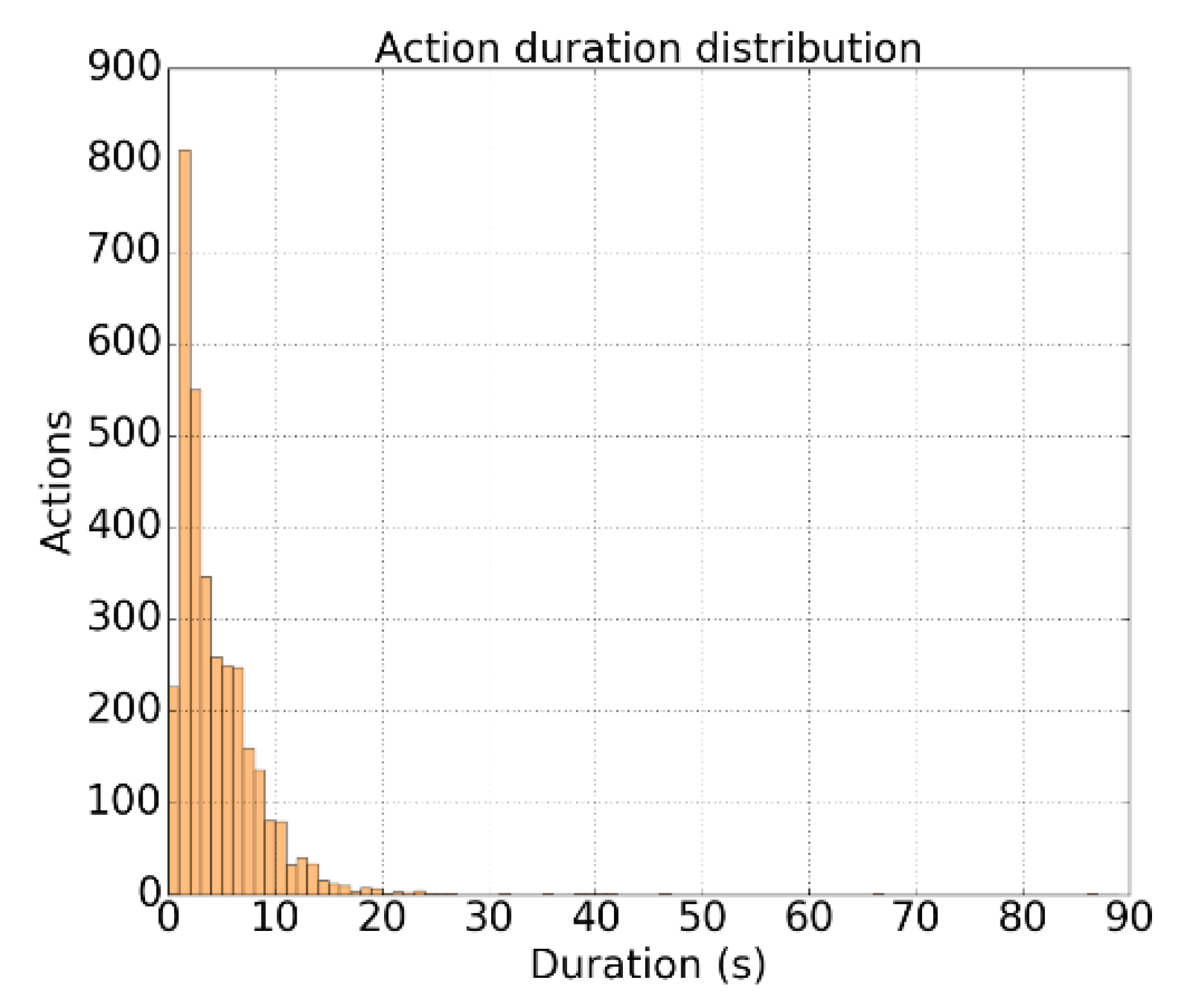

4.1.1. Datasets

4.1.2. Evaluation Metric

4.1.3. Implementation Details

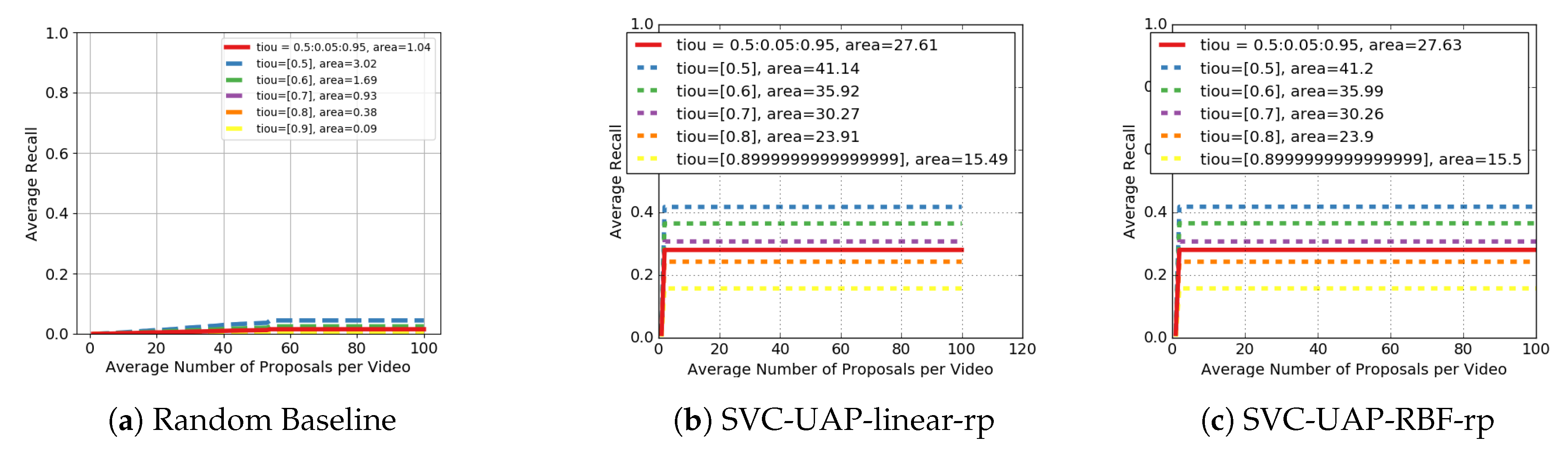

4.2. Experimental Evaluation on ActivityNet

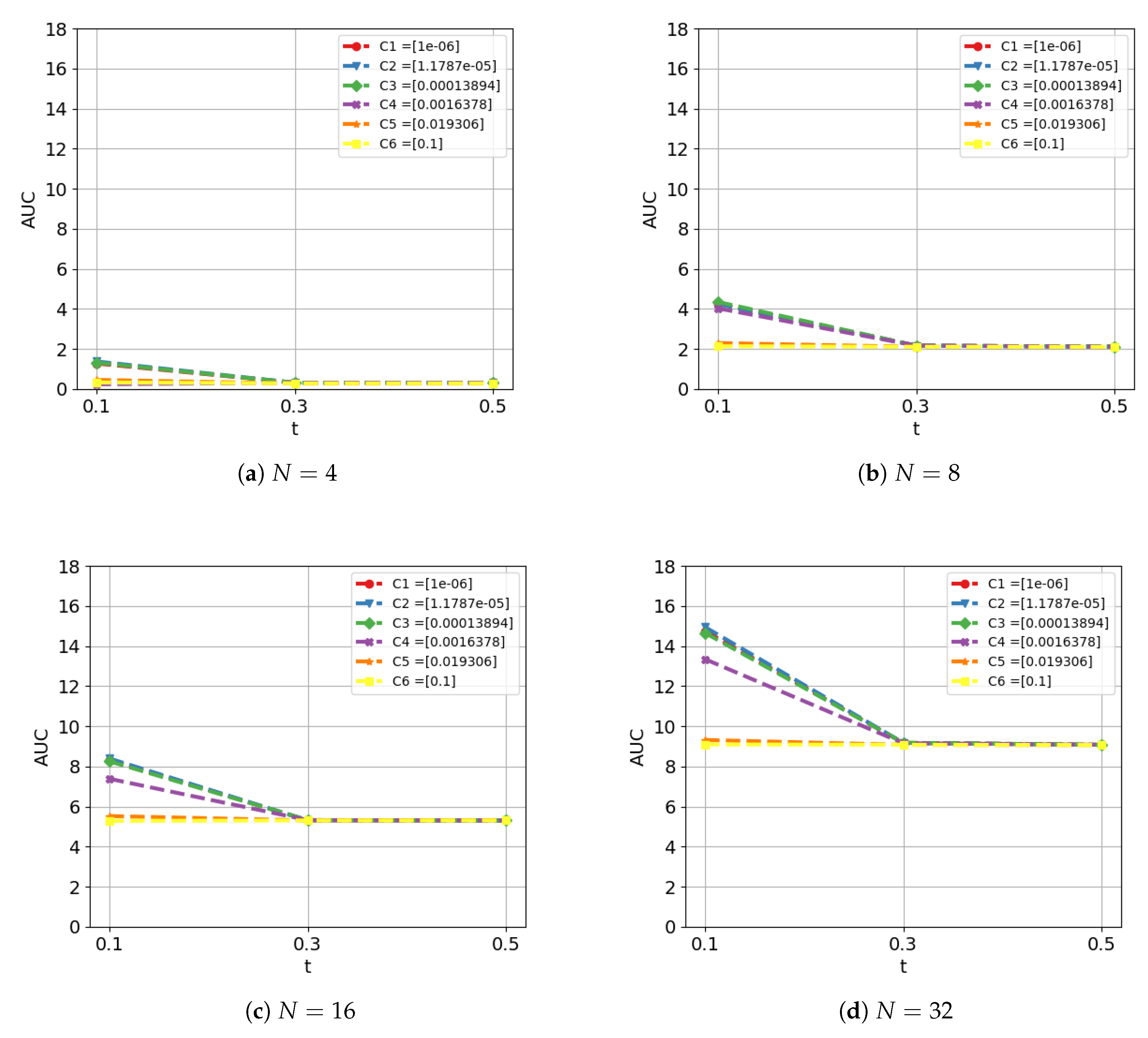

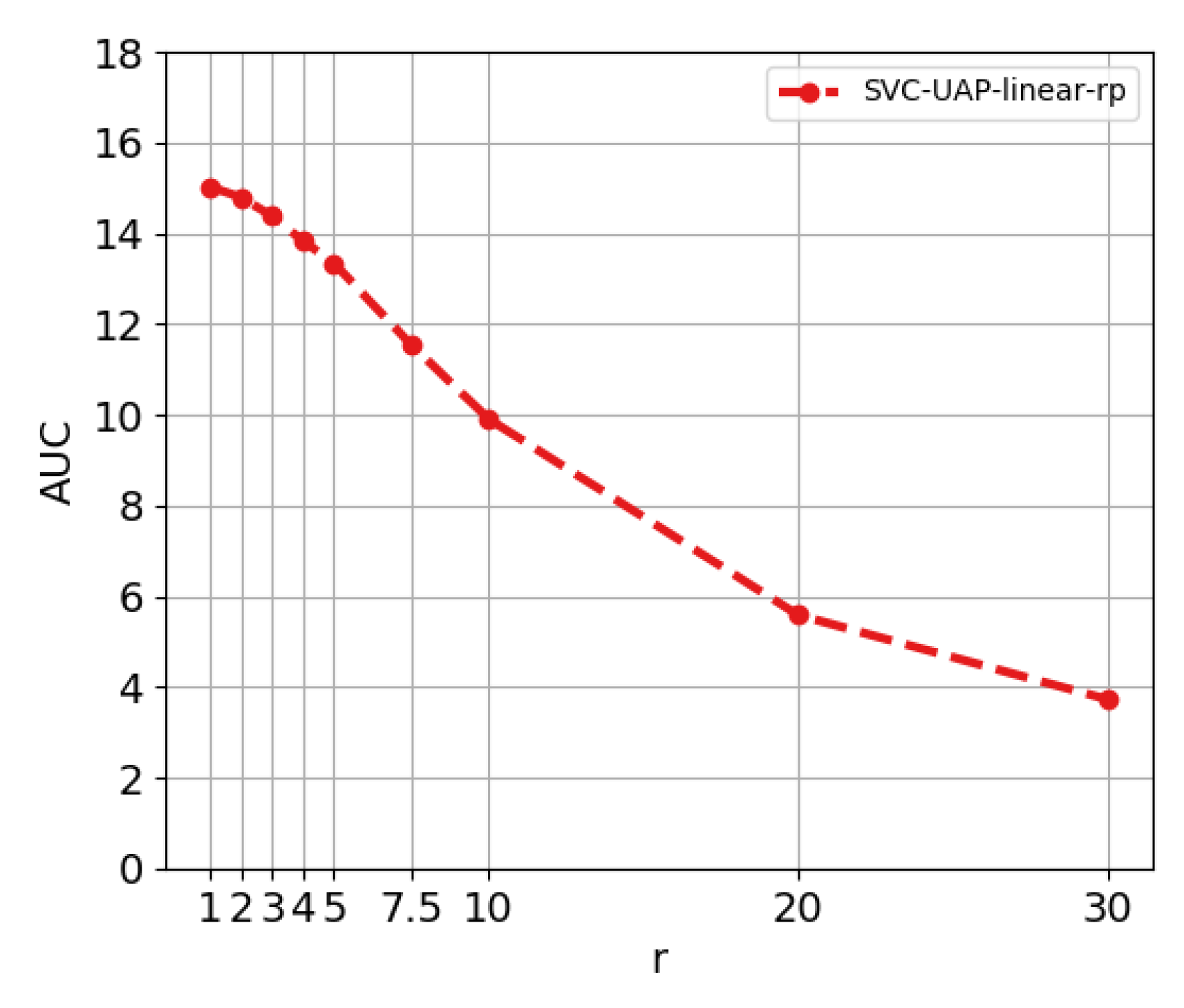

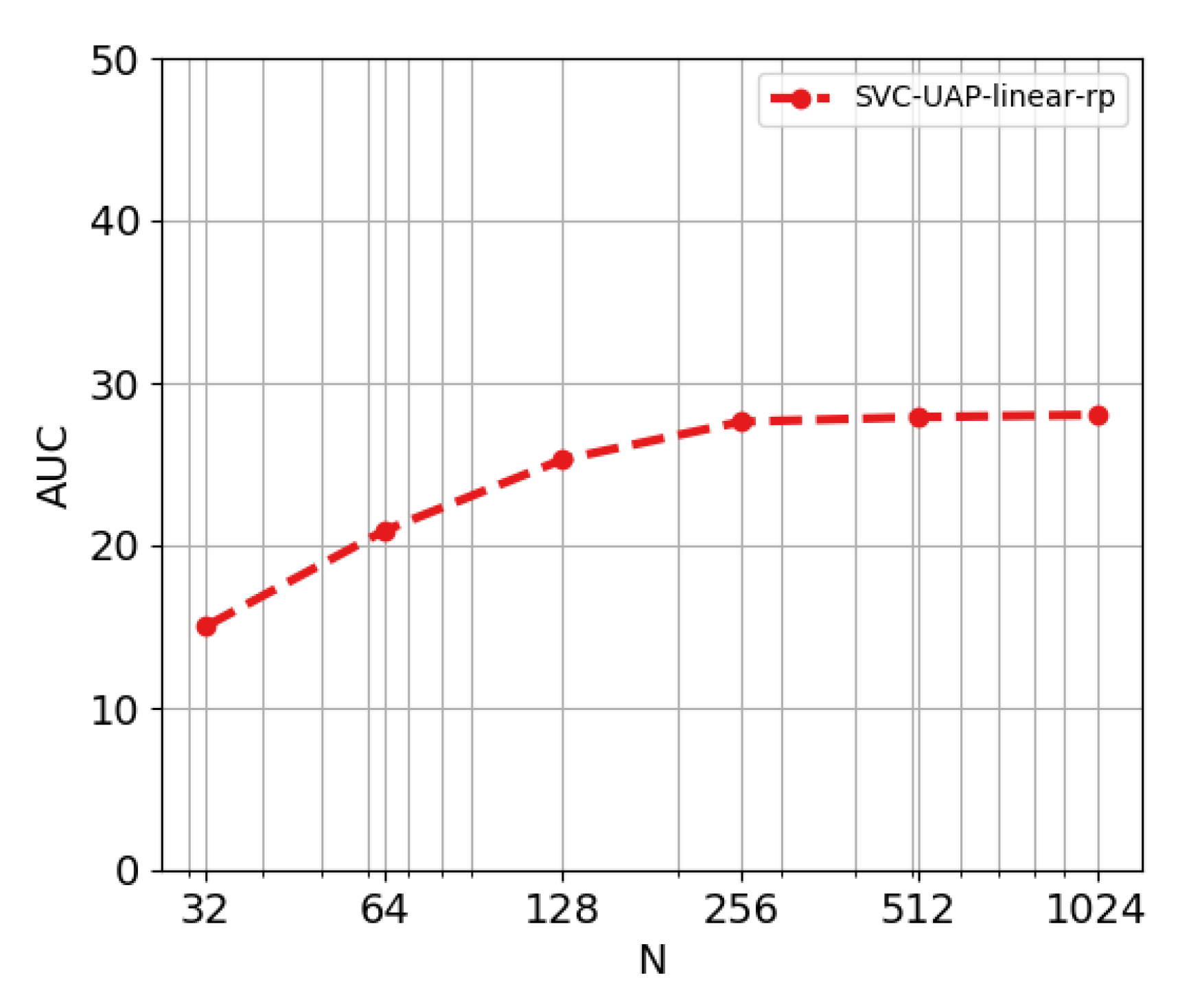

4.2.1. Ablation Study

4.2.2. Main Results

4.2.3. Comparison to State-of-the-Art Supervised AP Models

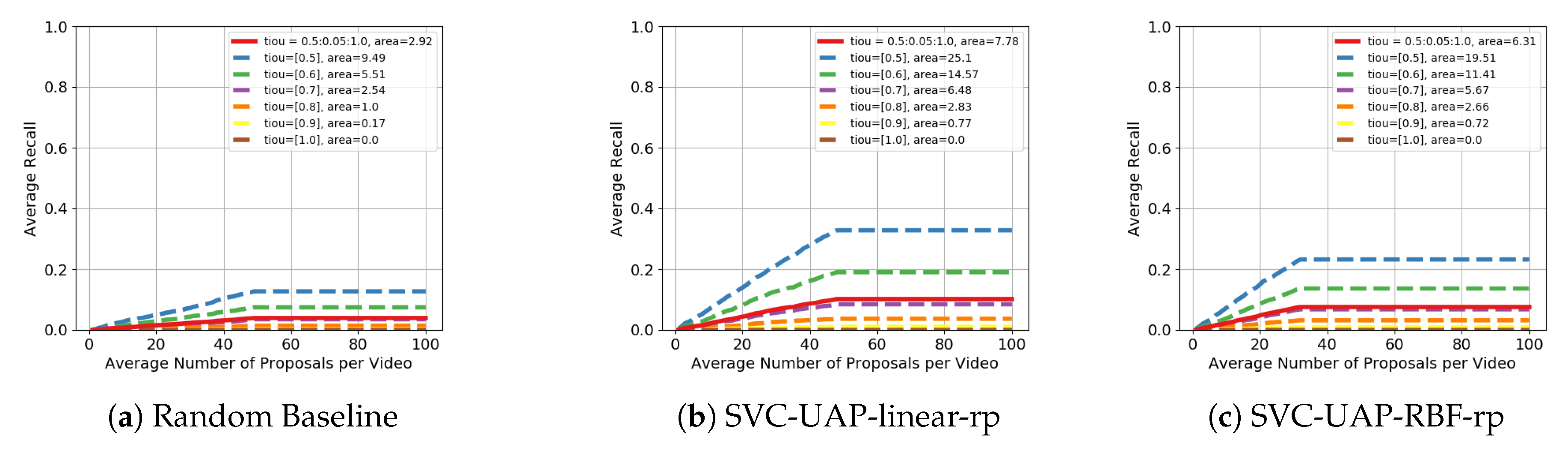

4.3. Experimental Evaluation on THUMOS’14

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| TAD | Temporal Action Detection |

| AP | Action Proposal |

| OAD | Online Action Detection |

| SVM | Support Vector Machine |

| SVC | Support Vector Classifier |

| RBF | Radial basis function |

| SVC-UAP | Support Vector Classifiers for Unsupervised Action Proposals |

| SVR | Support Vector Regression |

| PCA | Principal Component Analysis |

References

1. Jiang, Y.; Dai, Q.; Liu, W.; Xue, X.; Ngo, C. Human Action Recognition in Unconstrained Videos by Explicit Motion Modeling. IEEE Trans. Image Process. (TIP) 2015, 24, 3781–3795.

2. Richard, A.; Gall, J. Temporal Action Detection Using a Statistical Language Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3131–3140.

3. Yeung, S.; Russakovsky, O.; Mori, G.; Fei-Fei, L. End-to-End Learning of Action Detection from Frame Glimpses in Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2678–2687.

4. Yuan, J.; Ni, B.; Yang, X.; Kassim, A.A. Temporal Action Localization with Pyramid of Score Distribution Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3093–3102.

5. Gao, J.; Sun, C.; Yang, Z.; Nevatia, R. TALL: Temporal Activity Localization via Language Query. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5277–5285.

6. Xu, H.; Das, A.; Saenko, K. R-C3D: Region Convolutional 3D Network for Temporal Activity Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5794–5803.

7. Gao, J.Y.; Yang, Z.H.; Nevatia, R. Cascaded Boundary Regression for Temporal Action Detection. arXiv 2017, arXiv:1705.01180.

8. Yao, G.; Lei, T.; Liu, X.; Jiang, P. Temporal Action Detection in Untrimmed Videos from Fine to Coarse Granularity. Appl. Sci. 2018, 8, 1924.

9. Lee, J.; Park, E.; Jung, T.D. Automatic Detection of the Pharyngeal Phase in Raw Videos for the Videofluoroscopic Swallowing Study Using Efficient Data Collection and 3D Convolutional Networks. Sensors 2019, 19, 3873.

10. Zhang, H.B.; Zhang, Y.X.; Zhong, B.; Lei, Q.; Yang, L.; Du, J.X.; Chen, D.S. A Comprehensive Survey of Vision-Based Human Action Recognition Methods. Sensors 2019, 19, 1005.

11. Ghanem, B.; Niebles, J.C.; Snoek, C.; Caba-Heilbron, F.; Alwassel, H.; Escorcia, V.; Khrisna, R.; Buch, S.; Duc-Dao, C. The ActivityNet Large-Scale Activity Recognition Challenge 2018 Summary. arXiv 2018, arXiv:1808.03766.

12. Shou, Z.; Wang, D.; Chang, S. Temporal Action Localization in Untrimmed Videos via Multi-stage CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1049–1058.

13. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal Segment Networks: Towards Good Practices for Deep Action Recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 20–36.

14. Wang, L.; Xiong, Y.; Lin, D.; Van Gool, L. UntrimmedNets for Weakly Supervised Action Recognition and Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6402–6411.

15. Gao, J.; Chen, K.; Nevatia, R. CTAP: Complementary Temporal Action Proposal Generation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 70–85.

16. Lin, T.; Zhao, X.; Su, H.; Wang, C.; Yang, M. BSN: Boundary Sensitive Network for Temporal Action Proposal Generation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–21.

17. Lin, T.; Liu, X.; Li, X.; Ding, E.; Wen, S. BMN: Boundary-Matching Network for Temporal Action Proposal Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3888–3897.

18. Liu, Y.; Ma, L.; Zhang, Y.; Liu, W.; Chang, S. Multi-Granularity Generator for Temporal Action Proposal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 3599–3608.

19. Heilbron, F.C.; Niebles, J.C.; Ghanem, B. Fast Temporal Activity Proposals for Efficient Detection of Human Actions in Untrimmed Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1914–1923.

20. Escorcia, V.; Caba Heilbron, F.; Niebles, J.C.; Ghanem, B. DAPs: Deep Action Proposals for Action Understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 768–784.

21. Buch, S.; Escorcia, V.; Shen, C.; Ghanem, B.; Niebles, J.C. SST: Single-Stream Temporal Action Proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6373–6382.

22. Gao, J.; Yang, Z.; Sun, C.; Chen, K.; Nevatia, R. TURN TAP: Temporal Unit Regression Network for Temporal Action Proposals. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3648–3656.

23. Chao, Y.; Vijayanarasimhan, S.; Seybold, B.; Ross, D.A.; Deng, J.; Sukthankar, R. Rethinking the Faster R-CNN Architecture for Temporal Action Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1130–1139.

24. Yuan, Z.; Stroud, J.C.; Lu, T.; Deng, J. Temporal Action Localization by Structured Maximal Sums. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3215–3223.

25. Zhao, Y.; Xiong, Y.; Wang, L.; Wu, Z.; Tang, X.; Lin, D. Temporal Action Detection with Structured Segment Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2933–2942.

26. Heilbron, F.C.; Escorcia, V.; Ghanem, B.; Niebles, J.C. ActivityNet: A large-scale video benchmark for human activity understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 961–970.

27. Jiang, Y.G.; Liu, J.; Roshan Zamir, A.; Toderici, G.; Laptev, I.; Shah, M.; Sukthankar, R. THUMOS Challenge: Action Recognition with a Large Number of Classes. 2014. Available online: http://crcv.ucf.edu/THUMOS14/ (accessed on 20 August 2014).

28. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

29. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

30. Ji, J.; Cao, K.; Niebles, J.C. Learning Temporal Action Proposals With Fewer Labels. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7072–7081.

31. Khatir, N.; López-Sastre, R.J.; Baptista-Ríos, M.; Nait-Bahloul, S.; Acevedo-Rodríguez, F.J. Combining Online Clustering and Rank Pooling Dynamics for Action Proposals. In Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis (IbPRIA), Madrid, Spain, 1–4 July 2019; pp. 77–88.

32. De Geest, R.; Gavves, E.; Ghodrati, A.; Li, Z.; Snoek, C.; Tuytelaars, T. Online Action Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 269–284.

33. Gao, J.; Yang, Z.; Nevatia, R. RED: Reinforced Encoder-Decoder Networks for Action Anticipation. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; pp. 921–9211.

34. De Geest, R.; Tuytelaars, T. Modeling Temporal Structure with LSTM for Online Action Detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1549–1557.

35. Xu, M.; Gao, M.; Chen, Y.; Davis, L.; Crandall, D. Temporal Recurrent Networks for Online Action Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 5531–5540.

36. Baptista-Ríos, M.; López-Sastre, R.J.; Caba-Heilbron, F.; van Gemert, J.; Acevedo-Rodríguez, F.J.; Maldonado-Bascón, S. The Instantaneous Accuracy: A Novel Metric for the Problem of Online Human Behaviour Recognition in Untrimmed Videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 1282–1284.

37. Baptista-Ríos, M.; López-Sastre, R.J.; Caba-Heilbron, F.; van Gemert, J.; Acevedo-Rodríguez, F.J.; Maldonado-Bascón, S. Rethinking Online Action Detection in Untrimmed Videos: A Novel Online Evaluation Protocol. IEEE Access 2019, 8, 5139–5146.

38. Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A Training Algorithm for Optimal Margin Classifiers. In Proceedings of the ACM Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152.

39. Fernando, B.; Gavves, E.; Oramas, J.; Ghodrati, A.; Tuytelaars, T. Rank pooling for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 773–787.

40. Fernando, B.; Anderson, P.; Hutter, M.; Gould, S. Discriminative hierarchical rank pooling for activity recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

41. Bilen, H.; Fernando, B.; Gavves, E.; Vedaldi, A.; Gould, S. Dynamic image networks for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

42. Wang, J.; Cherian, A.; Porikli, A. Ordered pooling of optical flow sequences for action recognition. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016.

43. Cherian, A.; Fernando, B.; Harandi, M.; Gould, S. Generalized rank pooling for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

44. Cherian, A.; Sra, S.; Gould, S.; Hartley, R. Non-Linear Temporal Subspace Representations for Activity Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

45. Cherian, A.; Gould, S. Second-order Temporal Pooling for Action Recognition. Int. J. Comput. Vis. 2019, 127, 340–362.

46. Liu, T. Learning to Rank for Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2011.

47. Carreira, J.; Noland, E.; Banki-Horvath, A.; Hillier, C.; Zisserman, A. A Short Note about Kinetics-600. arXiv 2018, arXiv:1808.01340.

48. Monfort, M.; Andonian, A.; Zhou, B.; Ramakrishnan, K.; Bargal, S.A.; Yan, T.; Brown, L.; Fan, Q.; Gutfreund, D.; Vondrick, C.; et al. Moments in Time Dataset: One Million Videos for Event Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 502–508.

49. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

50. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F.-F. Large-Scale Video Classification with Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 1725–1732.

51. Dai, X.; Singh, B.; Zhang, G.; Davis, L.S.; Chen, Y.Q. Temporal Context Network for Activity Localization in Videos. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5727–5736.

52. Lin, T.; Zhao, X.; Shou, Z. Single Shot Temporal Action Detection. In Proceedings of the ACM International Conference on Multimedia (ACMM), Mountain View, CA, USA, 23–27 October 2017; pp. 988–996.

| | Linear Kernel | RBF Kernel | Rank Pooling |
|---|---|---|---|
| SVC-UAP-linear | ✓ | ✗ | ✗ |
| SVC-UAP-linear-rp | ✓ | ✗ | ✓ |
| SVC-UAP-RBF | ✗ | ✓ | ✗ |
| SVC-UAP-RBF-rp | ✗ | ✓ | ✓ |

| | Modified Parameters | Fixed Parameters | SVC-UAP Variant |
|---|---|---|---|
| Experiment 1 | | - | SVC-UAP-linear |
| Experiment 2 | | | SVC-UAP-linear-rp |
| Experiment 3 | | | SVC-UAP-linear-rp |

| | SVC-UAP-linear | SVC-UAP-linear-rp |
|---|---|---|
| AUC | 14.94 | 15.02 |

| Method | AUC |
|---|---|
| [51]s | 59.58 |
| [52]s | 64.40 |
| CTAP [15]s | 65.72 |
| BSN [16]s | 66.17 |
| BMN [17]s | 67.10 |
| SVC-UAP-linear-rp | 27.61 |
| SVC-UAP-RBF-rp | 27.63 |
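The "more than 41%" figure quoted in the contributions can be checked against the AUC values above with a short calculation. The snippet assumes that this AUC table is the ActivityNet comparison and that "performance relative to the best supervised model" means the plain ratio of AUC values; the helper name `relative_performance` is hypothetical.

```python
def relative_performance(score, reference):
    """Score expressed as a percentage of a reference score."""
    return 100.0 * score / reference


# AUC values copied from the table above.
best_supervised_auc = 67.10  # BMN [17]
unsupervised_auc = {
    "SVC-UAP-linear-rp": 27.61,
    "SVC-UAP-RBF-rp": 27.63,
}

for name, auc in unsupervised_auc.items():
    share = relative_performance(auc, best_supervised_auc)
    print(f"{name}: {share:.1f}% of the best supervised AUC")
```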

| Method | AR@50 | AR@100 |
|---|---|---|
| SCNN-prop [12]s | 17.22 | 26.17 |
| SST [21]s | 19.90 | 28.36 |
| CTAP [15]s | 32.49 | 42.61 |
| BSN [16]s | 37.46 | 46.06 |
| BMN [17]s | 39.36 | 47.72 |
| SVC-UAP-linear-rp | 10.16 | 10.16 |
| SVC-UAP-RBF-rp | 7.53 | 7.53 |
| Random baseline | 3.96 | 3.96 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Baptista Ríos, M.; López-Sastre, R.J.; Acevedo-Rodríguez, F.J.; Martín-Martín, P.; Maldonado-Bascón, S. Unsupervised Action Proposals Using Support Vector Classifiers for Online Video Processing. Sensors 2020, 20, 2953. https://doi.org/10.3390/s20102953
