1. Introduction

Road traffic crashes are one of the leading causes of death worldwide, and reducing the number of traffic-related crashes has become a major social and public health challenge given the ever-increasing number of vehicles on the road. One of the most common causes of vehicle crashes is driver inattention. A study conducted by the National Highway Traffic Safety Administration (NHTSA) reported that approximately 80 percent of vehicle crashes and 65 percent of near-crashes involved driver inattention within three seconds prior to the incident [1]. As human life expectancy continues to increase, it has become crucial to assist older and physically impaired drivers and to achieve higher road safety through research and development of advanced driver assistance systems (ADAS) technology.

The safety functions of ADAS require accurate information on the environment surrounding the vehicle. A popular approach in recent years for obtaining this information involves fusing the data generated by multiple types of sensors (e.g., radar, lidar, and cameras) installed on the vehicle [2,3,4,5,6,7]. In this way, it is possible to overcome the functional and environmental limitations of each type of sensor and to estimate the state of each surrounding object with higher accuracy. However, this sensor fusion approach is limited in its reliability and data collection range. The accuracy of sensor-derived driving environment information is affected by a number of factors such as weather and solar irradiance. In addition, no data can be acquired when the target is outside the field of view of the sensors or when the line of sight to the target is obstructed. In order to further enhance road safety, it is therefore critical to improve the reliability and the detection range of the perception system and also to find a way to obtain information on objects in non-line-of-sight (NLOS) regions.

A wireless vehicular communication system can be viewed as a new type of automotive sensor that allows engineers to design the next generation of ADAS, enabling drivers to exchange information on their own vehicles as well as the environment surrounding them. Whereas on-board sensor data obtained with radar, lidar, and cameras enable the estimation of target vehicle information such as relative position, speed, and heading, vehicular communication data additionally provide the best possible measurements of vital vehicle data including speed, yaw rate, and steering angle, which are obtained directly from the remote vehicle bus. This communication network can further extend its reach when vehicles, roadside infrastructure, and vulnerable road users (e.g., pedestrians, cyclists, and motorcyclists) are equipped with wireless communication devices. Wireless vehicular communications, often referred to as vehicle-to-everything (V2X) communications, can be classified into different types including vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), and vehicle-to-pedestrian (V2P) communications. While V2V communications involve two or more vehicles exchanging data with each other, V2I communications allow data exchange between vehicles and roadside units. Furthermore, V2P communications involve vehicles exchanging data with pedestrians. Studies have shown that combining V2V and V2I technologies can help address about 80 percent of all vehicle crashes [8].

These significant road safety advantages of V2X communications can be increased further when V2X data are combined with on-board sensor measurements via data fusion.

Figure 1 summarizes the advantages and disadvantages of perception through V2X communications and those of remote sensing with on-board sensors such as radar, lidar, and cameras. The two groups of data complement each other, resulting in a more accurate, robust, and complete perception of the vehicle surroundings. As mentioned earlier, the implementation of V2X communications greatly enhances the perception capability, as it enables detection of targets in NLOS regions and extends the detection range up to 1 km [9], while the longest detection range that can be achieved with on-board sensors is 200–250 m (through radar systems). Exchanging V2X communication data is possible regardless of weather conditions, whereas the accuracy and reliability of on-board sensors can be significantly reduced by adverse weather conditions such as rain, snow, and fog [10]. Furthermore, safety applications of camera systems such as collision warning and pedestrian detection are often inactive in a dark environment or at night. V2X communication data also include accurate target dimension information (width, length, and height), but the dimension information obtained with on-board sensors is often inaccurate or even unavailable due to the effects of occlusion and the limitations of the sensor field of view (FOV). On the other hand, there are some negative aspects to perception based solely on V2X communications. Transmitted V2X communication data can be delayed or even lost in an adverse radio frequency propagation environment (e.g., blockage and multipath) and/or a high communication channel load scenario (e.g., heavily congested urban intersections). In addition, V2X safety messages such as the basic safety message (BSM) are transmitted with a period of 100 ms, whereas on-board sensor measurements can be collected with a period of about 50 ms or even faster depending on the sensor model. Locating targets through V2X communications is also limited in that vehicles must be equipped with vehicular communication devices to participate in the exchange of the safety messages, and in that the accuracy and reliability of positioning depend largely on the quality and availability of the global navigation satellite system (GNSS) signals. In an environment where GNSS signals are not available (e.g., inside a tunnel or under an overpass), vehicles can no longer transmit the safety messages, which results in a discontinuous acquisition of data on surrounding vehicles.

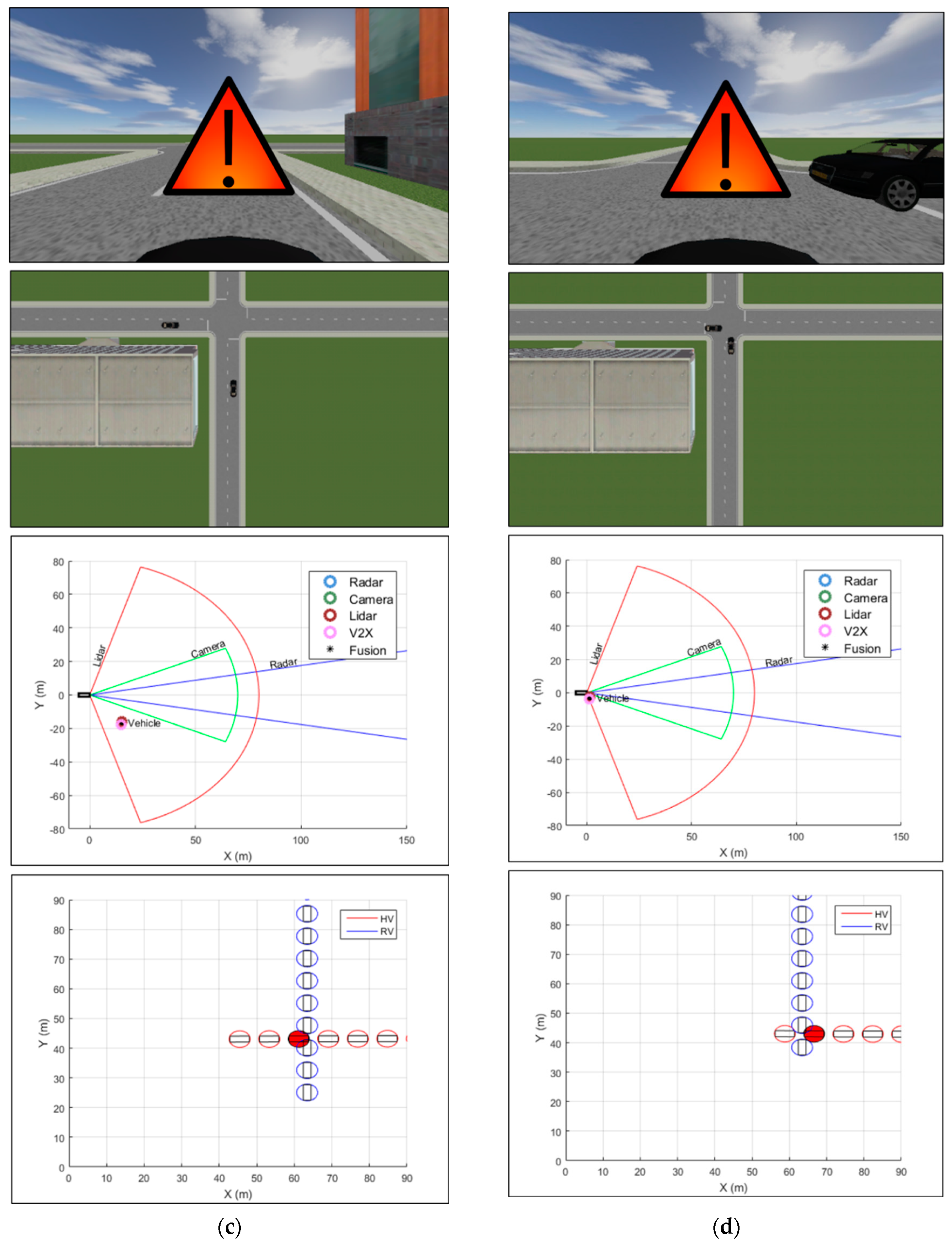

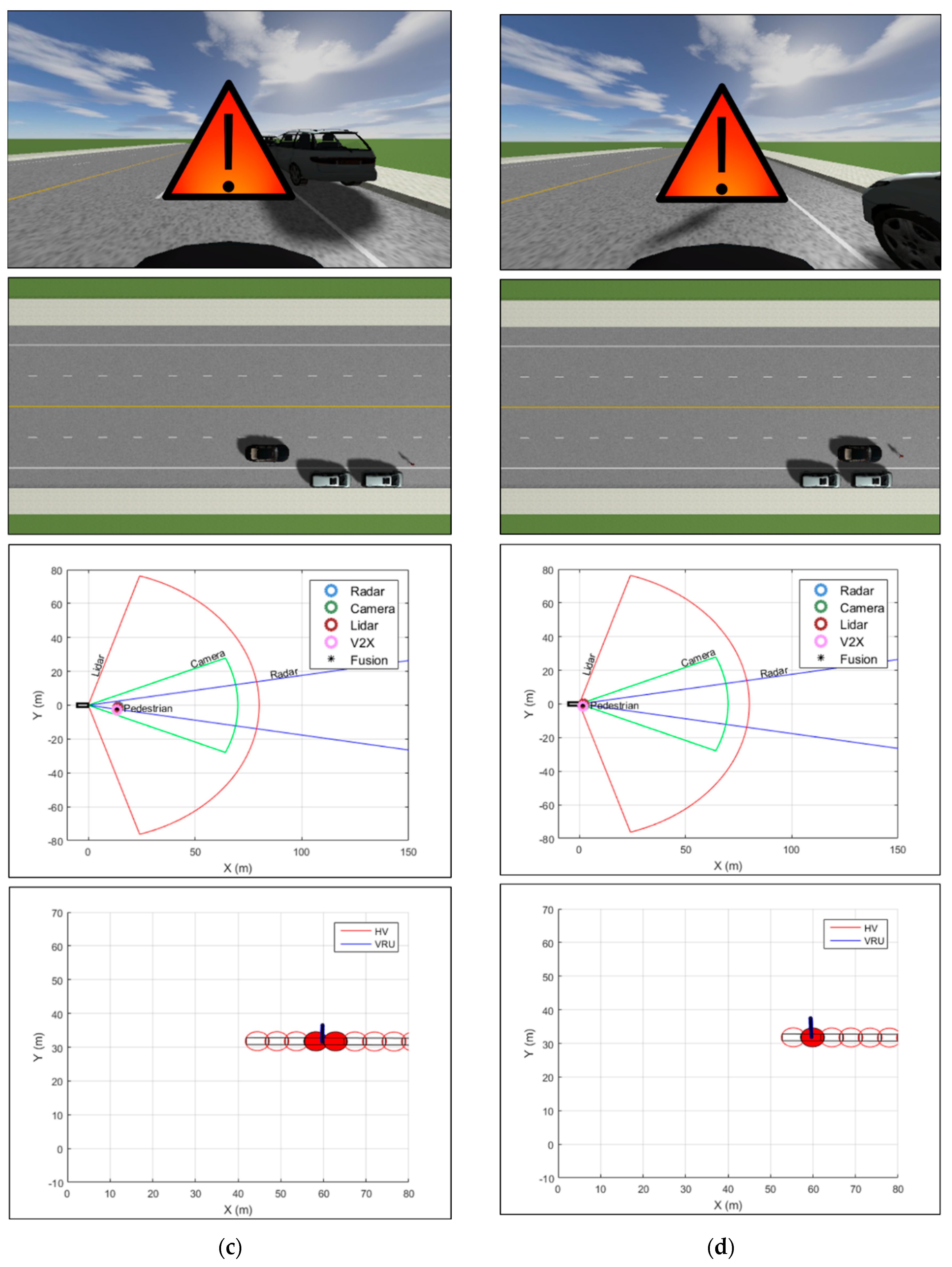

In this paper, we propose a method for vehicle trajectory prediction and collision warning through fusion of multisensors and V2X communications. In order to enhance the perception capabilities and reliability of traditional on-board sensors, we employ a Kalman filter-based approach for a high-level fusion of radar, lidar, camera, and V2X communication data. To verify the performance of the proposed method, we constructed co-simulation environments using MATLAB/Simulink and PreScan [11], which is designed for simulation of ADAS and active safety systems. In addition to radar, lidar, and camera sensor systems, the host vehicle is equipped with a dedicated short-range communications (DSRC) transceiver, which enables the collection of information on the surrounding vehicles and vulnerable road users (VRUs) equipped with DSRC devices through exchanging safety messages. The performance of the proposed vehicle collision warning system is evaluated in a vehicle–vehicle collision scenario and a vehicle–pedestrian collision scenario.

The rest of the paper is organized as follows. Section 2 introduces related research work. In Section 3, we describe the architecture of the proposed system and discuss background information about automotive sensors for remote sensing and V2X communications. The proposed method for vehicle collision warning is presented in Section 4, and the experimental results are given in Section 5. Finally, Section 6 concludes the paper by summarizing the main points and addressing future work.

2. Related Work

Vehicle collision warning systems have been studied by many researchers. Typical vehicle collision warning systems are based on sensor measurements from radar and camera sensors. Vehicle collision warning and automatic partial braking systems based on radar sensors that have been implemented in commercially available Mercedes-Benz cars are described in [12]. A vehicle collision warning system with a single Mobileye camera is presented in [13], where rear-end collision scenarios are considered and the warning is generated based on the time-to-collision (TTC) calculation. More recently, there have been efforts to develop cooperative collision warning systems that utilize vehicular communications. In [14], a crossroad scenario with two vehicles equipped with GPS receivers and vehicular communication devices is considered, where the trajectory prediction is performed with a Kalman filter and TTC is used as the collision risk indicator. A rear-end collision warning model based on a neural network approach is presented in [15], where participating vehicles are equipped with GPS receivers and vehicular communication devices and are assumed to be moving in the same lane.

Despite the advantages of vehicular communications, the cooperative sensing approach based on the fusion of vehicular communications and on-board sensors has not yet been examined extensively. Inter-vehicle object association using point matching algorithms is proposed in [16] to determine the relative position and orientation offsets between measurements taken by different vehicles. In [17], a vision-based multiobject tracking system is presented to check the plausibility of the data received via V2V communications. A radar and V2V communication fusion approach is suggested in [18] for a longer perception range and lower position and velocity errors. In the case of maritime navigation, the automatic radar plotting aids (ARPA) and the automatic identification system (AIS) technologies are widely implemented to identify and track vessels and to prevent collisions between vessels based on radar measurements as well as static and dynamic information (e.g., vessel name, call sign, position, course, and speed) of other AIS-equipped vessels exchanged over the marine VHF radio channels [19,20]. Although these papers present promising applications, the potential of the fusion of on-board sensor data and V2X communication data in the context of ADAS applications, such as vehicle collision prevention, has not been extensively investigated.

3. System Overview

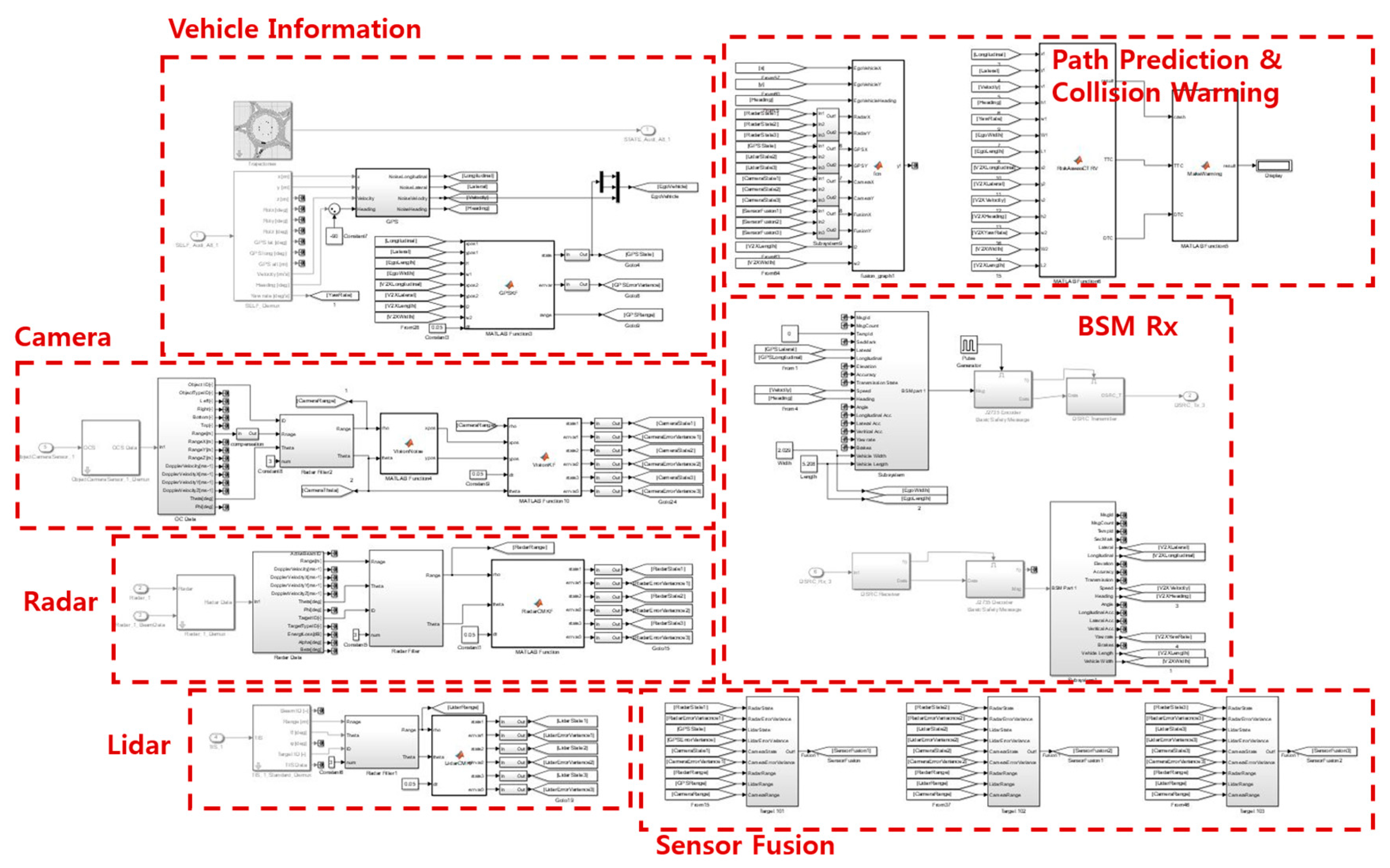

As each type of sensor has its advantages and disadvantages, combining data from multiple types of sensors is necessary in order to maximize detection and tracking capability. In this work, a high-level fusion of radar, lidar, camera, and V2X communication data was performed to predict the trajectories of nearby targets and generate an appropriate warning to the driver prior to a possible collision. In an effort to perform simulations under close-to-real conditions, we modeled the sensors on the characteristics of local environment perception sensors that are widely used for ADAS functions in commercially available vehicles.
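
To make the idea of high-level (track-level) fusion concrete, the following is a minimal sketch that combines per-sensor state estimates by inverse-covariance weighting. This is a common textbook scheme used here as a stand-in; it is not necessarily the quality-score rule used in the proposed system, and it assumes that the sensor errors are independent. The function name `fuse_estimates` and the numerical values are illustrative assumptions.

```python
import numpy as np

def fuse_estimates(states, covariances):
    """Fuse independent state estimates by inverse-covariance weighting.

    states:      list of 1-D arrays, one filtered estimate per sensor
    covariances: list of matching error covariance matrices
    Assumption: estimation errors of different sensors are uncorrelated.
    """
    # The inverse covariance (information matrix) acts as the weight:
    # a sensor with small error covariance contributes more.
    infos = [np.linalg.inv(P) for P in covariances]
    fused_cov = np.linalg.inv(sum(infos))
    fused_state = fused_cov @ sum(I @ x for I, x in zip(infos, states))
    return fused_state, fused_cov

# Made-up relative position estimates [longitudinal, lateral] in metres.
radar_x, radar_P = np.array([50.0, 2.0]), np.diag([0.25, 4.0])
v2x_x, v2x_P = np.array([51.0, 1.0]), np.diag([1.0, 1.0])

fused_x, fused_P = fuse_estimates([radar_x, v2x_x], [radar_P, v2x_P])
print(fused_x, np.diag(fused_P))
```

In this example the radar estimate dominates the longitudinal component, where its variance is smaller, while the V2X estimate dominates the lateral component.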

3.1. Architecture of the Proposed System

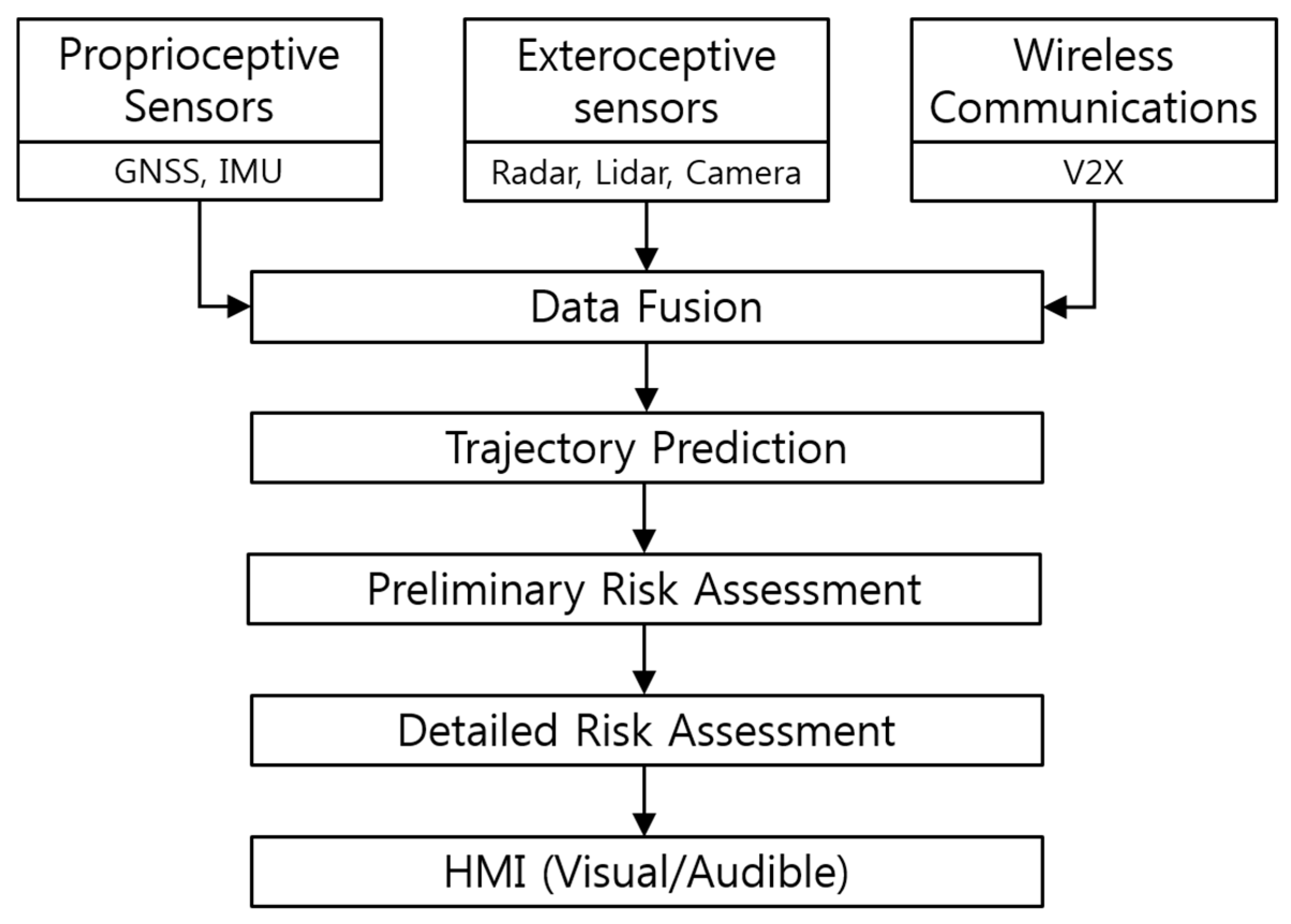

The framework of the proposed vehicle collision warning system is illustrated in Figure 2. The first step of the proposed system involves perception. To estimate the position of each target in the surrounding space relative to the host vehicle, the host vehicle obtains the relative range and azimuth from the radar and the lidar, the relative lateral and longitudinal position from the camera, and the GNSS measurements of the remote target as well as its dynamic information such as speed and yaw rate via the DSRC transceiver. The measurements from each sensor are processed with a Kalman filter algorithm, which reduces the measurement noise and outputs the state and error covariance at each time step. Note that, when computing the relative target position and orientation from V2X communication data, it is necessary to consider the heading and GNSS measurements of the host vehicle as well. A high-level fusion is performed using the estimated quality scores for sensor data, which are based on the error covariance computed through the prediction and update steps of the Kalman filter. Trajectory prediction for the targets detected in the perception stage is performed by employing the constant turn rate and velocity (CTRV) motion model. In the risk assessment steps, possible vehicle collisions are detected based on the results from the preceding trajectory prediction step. A preliminary assessment that requires significantly less computation is first carried out to detect possible collisions, and if collisions are expected, a more detailed assessment is performed to estimate a precise TTC. Finally, appropriate visual and audible warnings are issued to the driver based on the TTC estimate, where the warning information is provided through the human–machine interface (HMI) in four different threat levels.
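
The trajectory prediction and TTC steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual implementation: the state layout `(x, y, heading, speed, yaw_rate)`, the function names, the circular collision region with radius `radius`, and the fixed prediction step are all hypothetical choices, and the two-stage (preliminary and detailed) assessment is collapsed into a single loop.

```python
import math

def ctrv_predict(state, dt):
    """Propagate a CTRV state (x, y, heading, speed, yaw_rate) by dt seconds."""
    x, y, psi, v, omega = state
    if abs(omega) > 1e-6:
        # Turning: the vehicle moves along a circular arc of radius v / omega.
        x += v / omega * (math.sin(psi + omega * dt) - math.sin(psi))
        y += v / omega * (math.cos(psi) - math.cos(psi + omega * dt))
    else:
        # Near-zero yaw rate: fall back to straight-line motion.
        x += v * math.cos(psi) * dt
        y += v * math.sin(psi) * dt
    return (x, y, psi + omega * dt, v, omega)

def estimate_ttc(host, target, radius=2.0, horizon=5.0, dt=0.05):
    """Return the first predicted time (s) at which the two centres are
    within `radius` metres of each other, or None if that does not happen
    within `horizon` seconds. `radius` is an assumed collision threshold."""
    steps = int(round(horizon / dt))
    for k in range(steps + 1):
        if math.hypot(host[0] - target[0], host[1] - target[1]) <= radius:
            return k * dt
        host = ctrv_predict(host, dt)
        target = ctrv_predict(target, dt)
    return None

# Head-on example: both vehicles at 10 m/s, initially 50 m apart.
host = (0.0, 0.0, 0.0, 10.0, 0.0)
oncoming = (50.0, 0.0, math.pi, 10.0, 0.0)
print(estimate_ttc(host, oncoming))
```

A TTC obtained this way could then be compared against thresholds to select one of the threat levels; those thresholds are not given in this section, so none are assumed here.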

3.2. Automotive Sensors for Remote Sensing

We selected on-board sensors that have already been adopted in production vehicles such that by adding V2X communication devices we can evaluate the benefits of introducing V2X communications to today’s vehicles in terms of road safety. The types of sensors installed on vehicles produced in recent years include radar, cameras, and also lidar, which enable ADAS features such as forward collision warning (FCW), automatic emergency braking (AEB), adaptive cruise control (ACC), and lane keeping assist system (LKAS).

Automotive radar, which is an active ranging sensor designed for detecting and tracking remote targets in the surrounding environment, is currently one of the most widely used ranging sensors for ADAS functions. The long-range radar sensors most commonly found on production vehicles include the Delphi ESR, Bosch LRR, and Continental ARS series, whose characteristics are shown in Table 1. The specification values are from the respective manufacturer’s specification sheet. In this work, the technical data of the Delphi ESR were employed to model the radar in the experimental environment.

Lidar is an active ranging sensor that operates in a similar fashion to radar except that it utilizes light rather than radio waves. Most automotive lidars currently use near-infrared light with a wavelength of 905 nm. Lidar became a popular choice for automated driving technology research after it was used by a large number of teams that participated in the DARPA Grand Challenges. Lidar offers more accurate ranging performance than radar and cameras, but despite this advantage, most automakers have yet to adopt lidar, mainly due to its high cost. However, it appears that automakers will gradually consider using lidar in the near future because low-cost lidar sensors are becoming more available. Audi became the first automaker to adopt lidar in a production vehicle when it recently started shipping its flagship sedan equipped with an on-board lidar sensor [21]. The performance of the Ibeo Scala sensor is summarized in Table 2.

In contrast to the ranging sensors above, vision sensors do not directly provide range information. Instead, range information is often estimated using the road geometry and the point of contact of the vehicle and the road [22], optical flow velocity vectors [23], bird’s-eye view [24], and object knowledge [24]. Considering that the detection and tracking performance of a vision-based system may vary considerably depending on the algorithm used, the technical data of the Mobileye vehicle detection system, as reported in [22], were employed to model the vision sensor. Table 3 shows the performance characteristics of the Mobileye system.

3.3. V2X Communications

The IEEE 802.11p and the IEEE 1609 family of standards are collectively called wireless access in vehicular environments (WAVE) standards. The IEEE developed IEEE 802.11p as an amendment to IEEE 802.11 to include vehicular environments [25]. This amendment was required to support wireless communications among vehicles and infrastructure. The IEEE 1609 protocol suite is a higher-layer standard based on IEEE 802.11p. In the case of V2V communications, on-board units (OBUs) are installed in vehicles to enable wireless communication. These devices operate independently and exchange data using the 5.9 GHz DSRC frequency band, which is divided into seven 10-MHz channels. One of them is the control channel (CCH), which is used for safety and control messages, while the other six are the service channels (SCHs), which are used for data transfer [26]. The characteristics of the WAVE standards are summarized in Table 4.
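
As a small illustration of this channel layout, the sketch below lists the seven 10-MHz channels together with their center frequencies. The channel numbers (172 to 184, with 178 as the CCH) follow the original US 5.9 GHz DSRC band plan (5.850–5.925 GHz) and are an assumption added here, since the text above does not give the numbering; the center frequency follows the IEEE 802.11 rule of 5000 MHz plus 5 MHz times the channel number.

```python
# Assumed numbering from the original US 5.9 GHz DSRC band plan;
# the channel numbers themselves are not stated in the text above.
CONTROL_CHANNEL = 178
DSRC_CHANNELS = list(range(172, 185, 2))  # 172, 174, ..., 184

def center_frequency_mhz(channel):
    """IEEE 802.11 5 GHz numbering: 5000 MHz + 5 MHz * channel number."""
    return 5000 + 5 * channel

def channel_role(channel):
    """Label a channel as control channel (CCH) or service channel (SCH)."""
    return "CCH" if channel == CONTROL_CHANNEL else "SCH"

for ch in DSRC_CHANNELS:
    print(ch, center_frequency_mhz(ch), "MHz", channel_role(ch))
```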

For the purpose of V2X communications, the host vehicle in this work is equipped with a DSRC antenna in addition to the sensors described in the previous section. This makes it possible for the host vehicle to gather information on the remote vehicles in the surrounding area (up to a distance of 1000 m) by exchanging BSMs, which are sent over the CCH with a period of 100 ms. The BSM, which is defined in the SAE J2735 message set dictionary [27], contains safety data regarding the vehicle state such as the GNSS position, speed, heading, and yaw rate of the vehicle, as well as the vehicle size. A BSM consists of two parts: Part I and Part II. The BSM Part I contains the core data that must be included in every BSM, whereas the BSM Part II content is optional. Table 5 describes the data contained in a BSM.
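
Because a BSM reports an absolute GNSS position, the host vehicle has to combine it with its own GNSS position and heading to obtain a relative target position. The following is a minimal sketch of that conversion. The `VehicleReport` class and its field names are hypothetical simplifications and are not the SAE J2735 data element names; the conversion uses a local flat-earth (equirectangular) approximation, which is an assumption that is reasonable only over the short distances considered here.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, spherical approximation

@dataclass
class VehicleReport:
    """Hypothetical subset of the state a BSM carries (not J2735 names)."""
    lat_deg: float
    lon_deg: float
    heading_deg: float   # clockwise from true north
    speed_mps: float
    yaw_rate_dps: float

def relative_position(host, remote):
    """Return (longitudinal, lateral_right) of remote in the host frame, in metres."""
    lat0 = math.radians(host.lat_deg)
    # Local east/north offsets using a flat-earth approximation.
    north = EARTH_RADIUS_M * math.radians(remote.lat_deg - host.lat_deg)
    east = EARTH_RADIUS_M * math.radians(remote.lon_deg - host.lon_deg) * math.cos(lat0)
    # Rotate into the host frame using the host heading.
    h = math.radians(host.heading_deg)
    longitudinal = east * math.sin(h) + north * math.cos(h)
    lateral_right = east * math.cos(h) - north * math.sin(h)
    return longitudinal, lateral_right

def relative_heading_deg(host, remote):
    """Remote heading relative to the host, wrapped to [-180, 180)."""
    return (remote.heading_deg - host.heading_deg + 180.0) % 360.0 - 180.0

host = VehicleReport(37.0, -122.0, 0.0, 10.0, 0.0)
remote = VehicleReport(37.001, -122.0, 180.0, 10.0, 0.0)
print(relative_position(host, remote), relative_heading_deg(host, remote))
```

With the host heading north, a remote vehicle 0.001 degrees of latitude further north appears roughly 111 m directly ahead.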

Similar to the BSM, the personal safety message (PSM) contains important kinematic state information on VRUs, such as pedestrians, bicyclists, and road workers. It is possible to detect VRUs located within the DSRC coverage area by collecting the PSMs transmitted from the VRU communication devices. The PSM, which is also defined in the SAE J2735 message set dictionary [27], is currently under development, but the core data elements that must be included in a PSM are specified in advance, as shown in Table 6.

The accuracy of the BSM and the PSM information we assumed in the implementation of the proposed vehicle collision warning system is presented in Table 7. For the BSM, typical measurement noise characteristics of a relatively simple differential GPS (DGPS) receiver, as well as those of a wheel speed sensor and a yaw rate sensor, are considered. It is important that the position data included in the BSM meet lane-level accuracy, which is described in the United States Department of Transportation (USDOT) report on vehicular safety communications [28] as a minimum relative positioning requirement for collision warning applications. With regard to the PSM, the parameter settings for VRU safety as reported in the SAE J2945/9 VRU safety message performance requirements [29] are employed in this work for V2P communications.

6. Conclusions

In this paper, we present the development of a vehicle collision warning system based on multisensors and V2X communications. On-board sensors including radar, lidar, and camera systems that have already been adopted in production vehicles are chosen for this work such that by adding V2X communication devices to the vehicle, we can evaluate the benefits of introducing V2X communications to today’s vehicles in terms of road safety. The proposed design employs a Kalman filter-based approach for high-level fusion of V2X communications and on-board automotive sensors for remote sensing. Based on the TTC estimate result from the trajectory prediction and the risk assessment steps, an appropriate visual and audible warning is provided to the driver prior to the collision. The performance of the proposed system is evaluated in virtual driving environments, where two types of vehicle collision scenarios are considered: a vehicle–vehicle collision in an SCP scenario and a vehicle–pedestrian collision in the Euro NCAP test scenario. The results from the proof-of-concept test demonstrate that the proposed system enables higher driver and pedestrian safety through improved perception performance and proper collision warning, even in situations where collision mitigation is difficult with existing safety systems. For future work, we plan to implement the proposed vehicle collision warning method in an in-vehicle prototyping system and evaluate the performance in various driving conditions. In order to ensure the collision warning application reliability, we also aim to investigate the effects of various factors (e.g., distance between vehicles and transmission power) that could adversely affect the reliability of V2X communications.