Time Series Multiple Channel Convolutional Neural Network with Attention-Based Long Short-Term Memory for Predicting Bearing Remaining Useful Life

Abstract

1. Introduction

2. Related Work

3. Proposed Methods

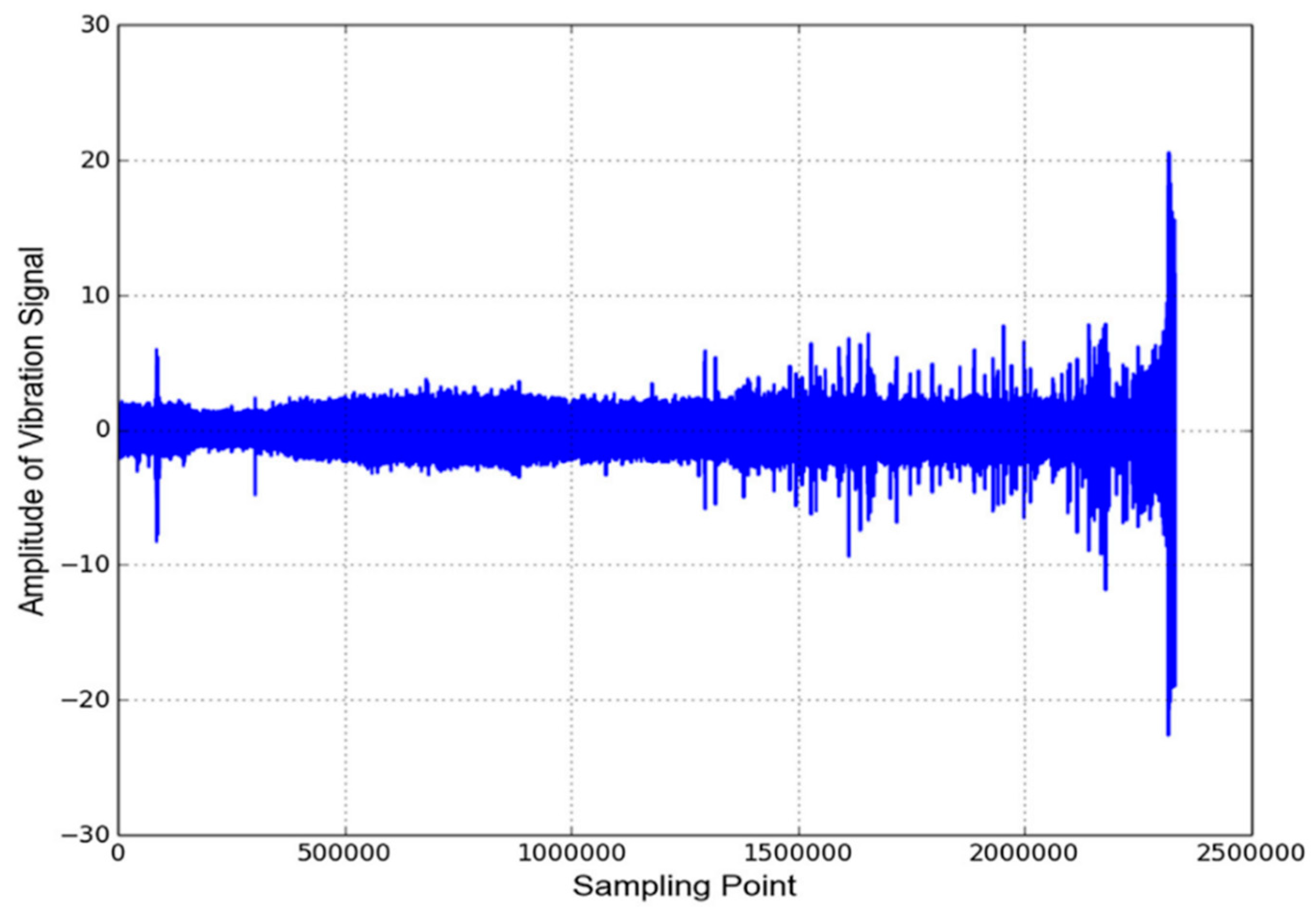

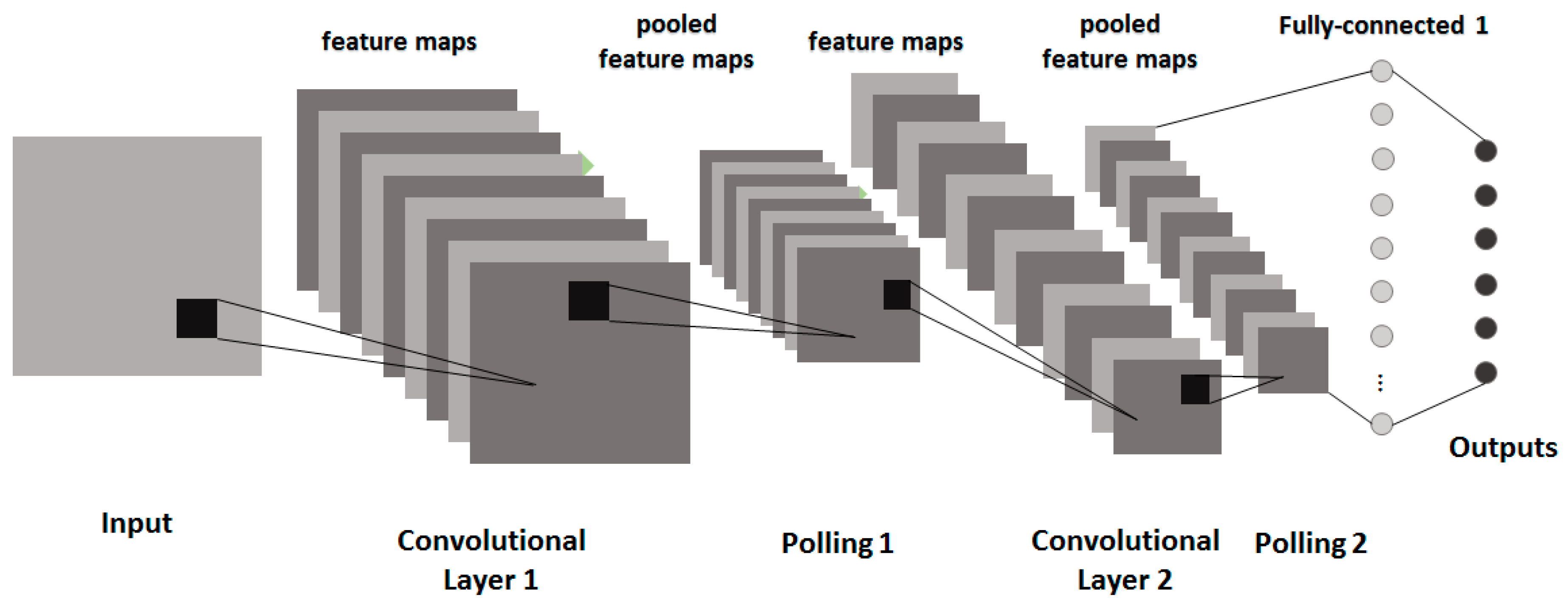

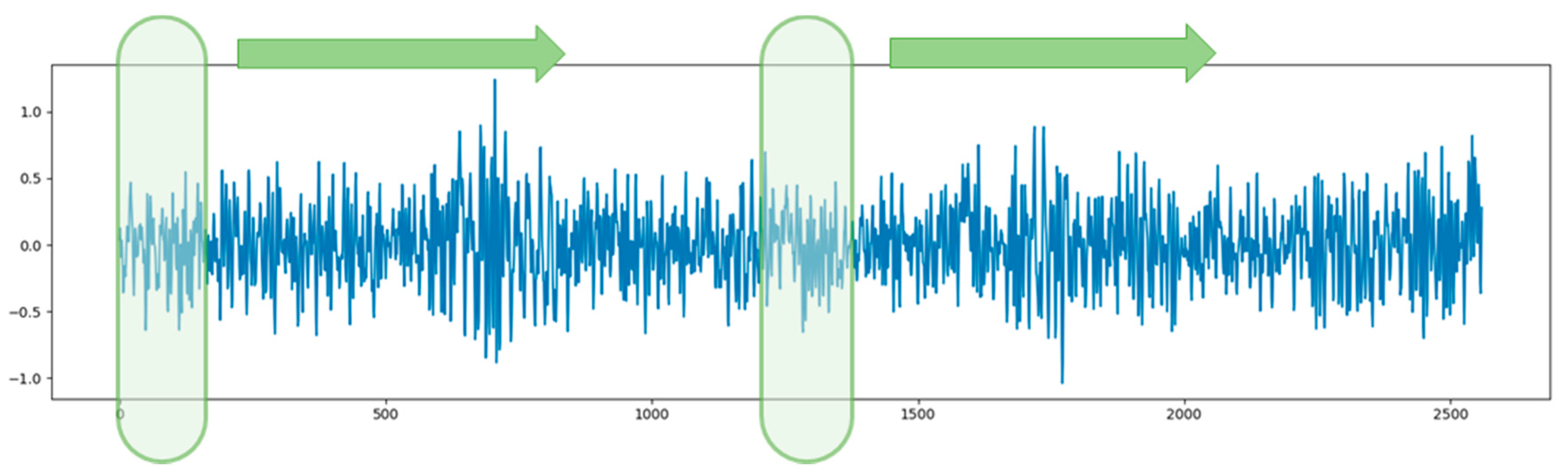

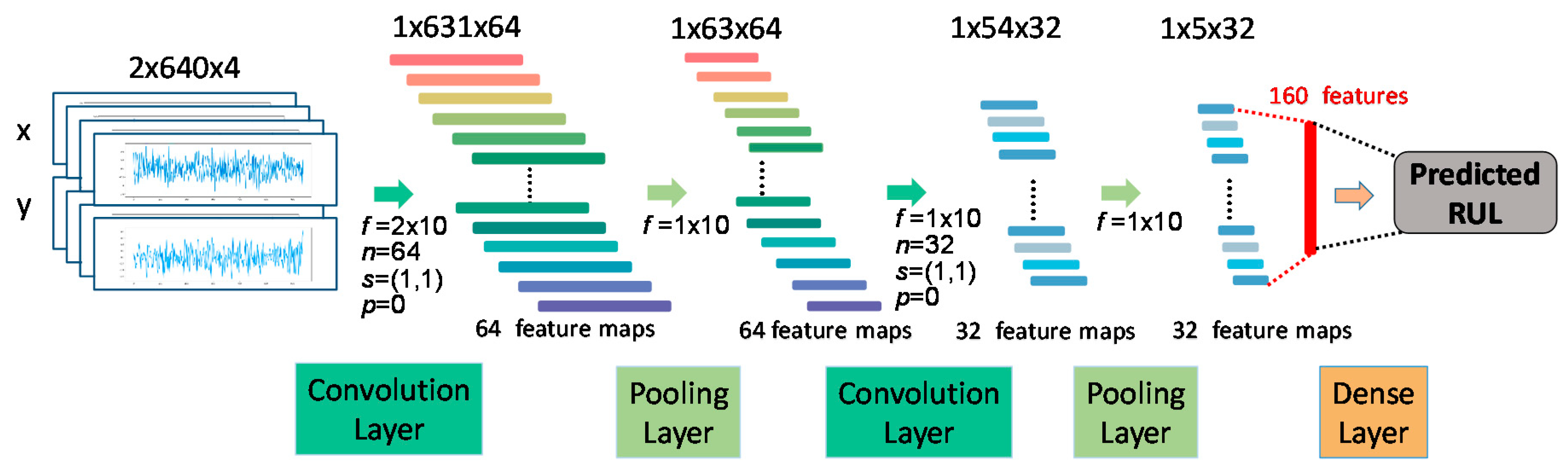

3.1. TSMC-CNN

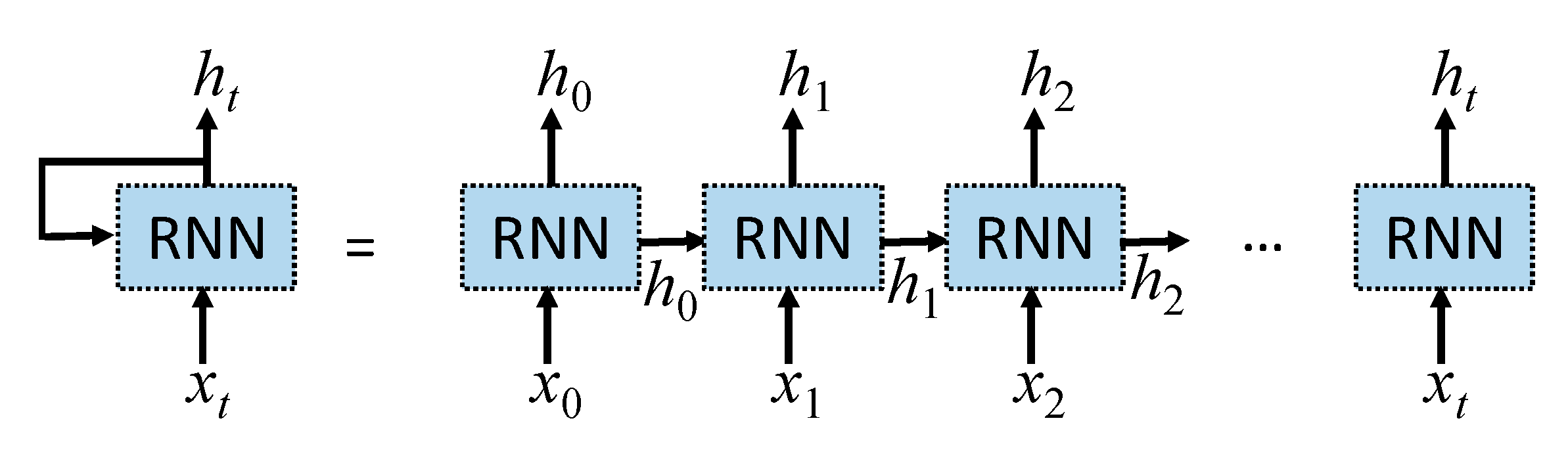

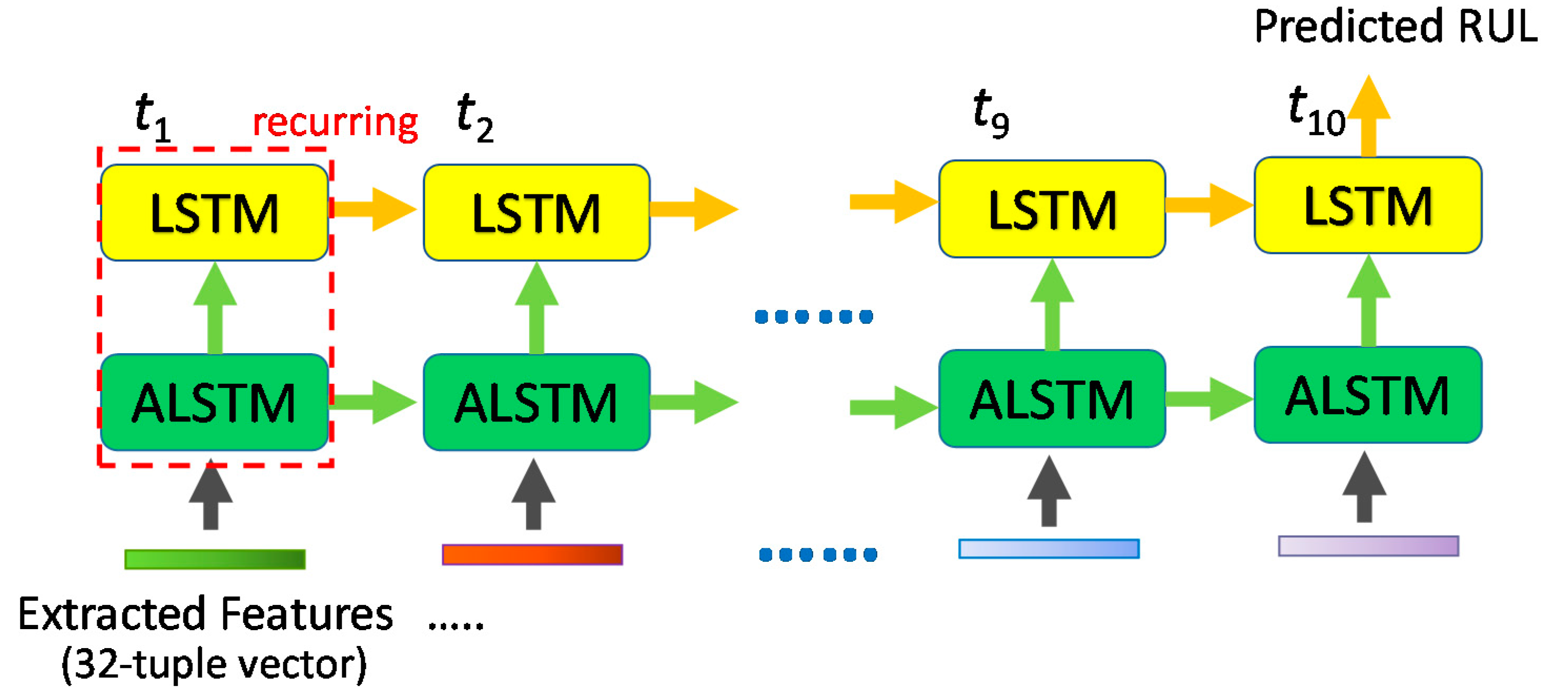

3.2. TSMC-CNN-ALSTM

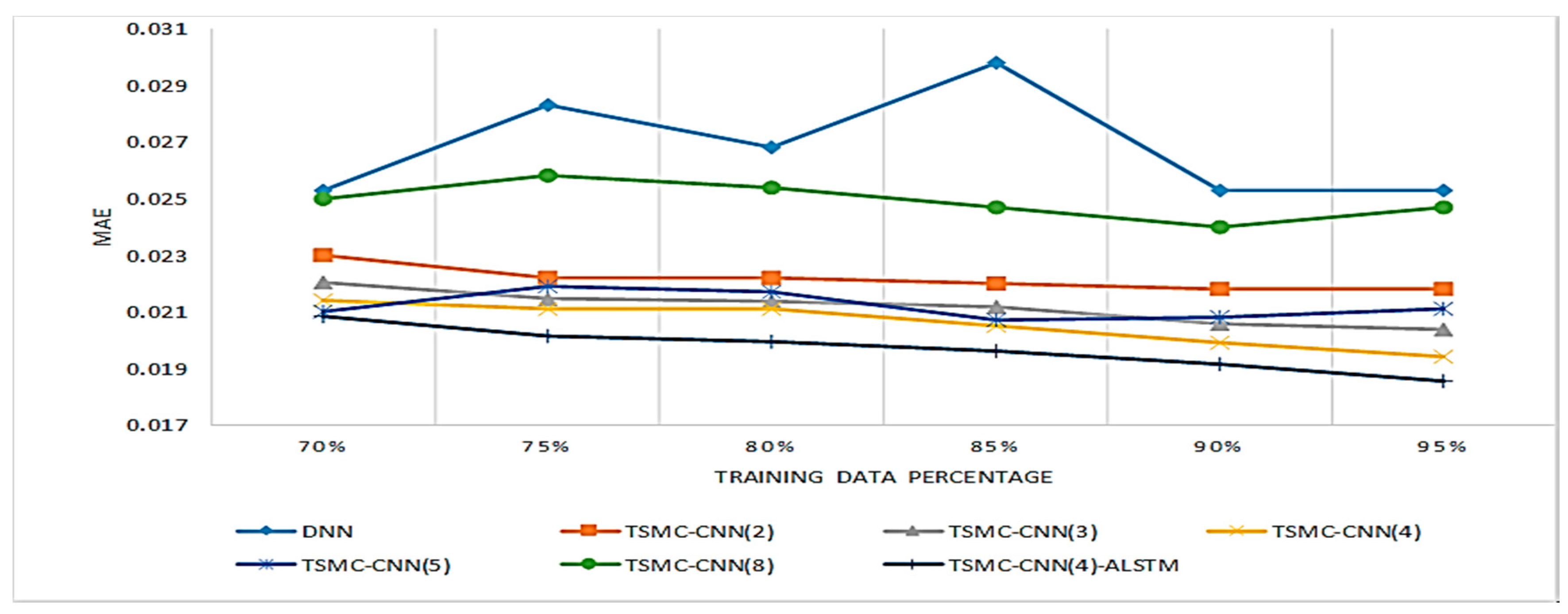

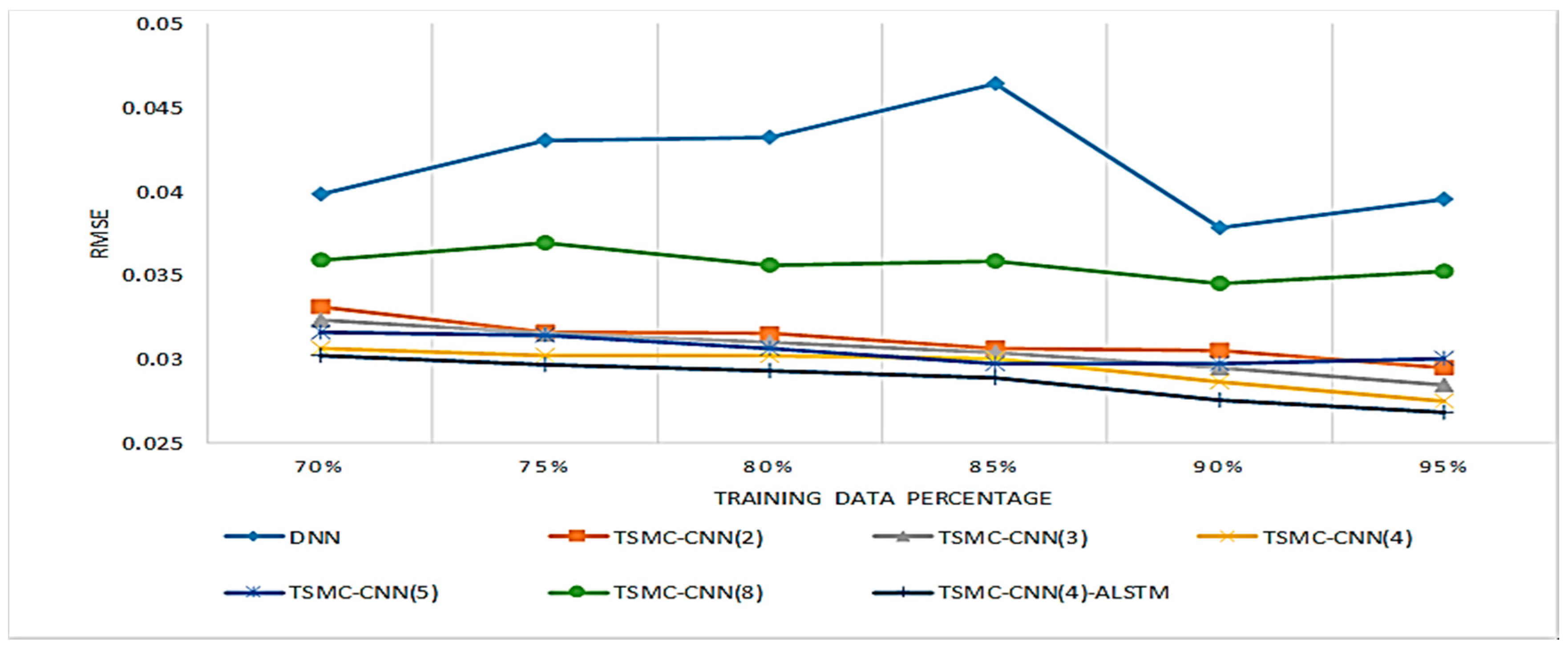

4. Performance Comparisons

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| Adam | adaptive moment estimation |
| AI | artificial intelligence |
| ALSTM | attention-based long short-term memory |
| BPNN | backpropagation neural network |
| BR | Bayesian regression |
| CALCE | Center for Advanced Life Cycle Engineering |
| CL | convolutional layer |
| CNN | convolutional neural network |
| CWT | continuous wavelet transform |
| CWTCNN-HI | continuous wavelet transform and CNN-based health indicator |
| DAE | deep autoencoder |
| DNN | deep neural network |
| ELM | extreme learning machine |
| FDF | frequency domain feature |
| FFT | fast Fourier transformation |
| FPT | first predicting time |
| FSPS | frequency spectrum partition summation |
| GBDT | gradient boosting decision tree |
| GPR | Gaussian process regression |
| GR | Gaussian regression |
| GRU | gated recurrent unit |
| HHT | Hilbert-Huang transform |
| HLR | high-level representation |
| Leaky ReLU | leaky rectified linear unit |
| LDA | linear discriminant analysis |
| LR | linear regression |
| LS-SVR | least squares-support vector regression |
| LSTM | long short-term memory |
| MAE | mean absolute error |
| MDGRU | multi-dimensional gated recurrent units |
| MSCNN | multi-scale CNN |
| MSE | mean squared error |
| PCA | principal component analysis |
| PHM | prognostics and health management |
| RBM | restricted Boltzmann machine |
| ReLU | rectified linear unit |
| RF | random forest |
| RMS | root mean square |
| RMSE | root mean squared error |
| RNN | recurrent neural network |
| RNN-HI | RNN-health indicator |
| RS | related-similarity |
| RUL | remaining useful life |
| SOM-HI | self-organizing map based health indicator |
| SSH | single scale-high |
| SSL | single scale-low |
| STFT | short-time Fourier transform |
| SVD | support vector data |
| SVM | support vector machine |
| SVR-HI | support vector regression health indicator |
| TDF | time domain feature |
| TFDF | time-frequency domain feature |
| TFR | time frequency representation |
| TSMC | time series multiple channel |
| WT | wavelet transform |

References

1. Jiang, J.R. An improved cyber-physical systems architecture for Industry 4.0 smart factories. Adv. Mech. Eng. 2018, 10.
2. Lee, G.Y.; Kim, M.; Quan, Y.-J.; Kim, M.-S.; Kim, T.J.Y.; Yoon, H.-S.; Min, S.; Kim, D.-H.; Mun, J.-W.; Oh, J.W.; et al. Machine health management in smart factory: A review. J. Mech. Sci. Technol. 2018, 32, 987–1009.
3. Lee, J.; Wu, F.J.; Zhao, W.Y.; Ghaffari, M.; Liao, L.X.; Siegel, D. Prognostics and health management design for rotary machinery systems—Reviews, methodology and applications. Mech. Syst. Signal Process. 2014, 42, 314–334.
4. Xia, T.; Dong, Y.; Xiao, L.; Du, S.; Pan, E.; Xi, L. Recent advances in prognostics and health management for advanced manufacturing paradigms. Reliab. Eng. Syst. Saf. 2018, 178, 255–268.
5. Saxena, A.; Goebel, K.; Simon, D.; Eklund, N. Damage propagation modeling for aircraft engine run-to-failure simulation. In Proceedings of the IEEE International Conference on Prognostics and Health Management (PHM 2008), Denver, CO, USA, 6–9 October 2008; pp. 1–9.
6. Nielsen, J.; Sørensen, J. Bayesian estimation of remaining useful life for wind turbine blades. Energies 2017, 10, 664.
7. Carroll, J.; Koukoura, S.; McDonald, A.; Charalambous, A.; Weiss, S.; McArthur, S. Wind turbine gearbox failure and remaining useful life prediction using machine learning techniques. Wind Energy 2019, 22, 360–375.
8. Jiang, Z.; Banjevic, D.E.M.; Jardine, A.; Li, Q. Remaining useful life estimation of metropolitan train wheels considering measurement error. J. Qual. Maint. Eng. 2018, 24, 422–436.
9. Li, T.; Wang, S.; Shi, J.; Ma, Z. An adaptive-order particle filter for remaining useful life prediction of aviation piston pumps. Chin. J. Aeronaut. 2018, 31, 941–948.
10. Hsu, C.S.; Jiang, J.R. Remaining useful life estimation using long short-term memory deep learning. In Proceedings of the IEEE International Conference on Applied System Innovation 2018 (IEEE ICASI 2018), Chiba, Japan, 13–17 April 2018.
11. Liao, L. Discovering prognostic features using genetic programming in remaining useful life prediction. IEEE Trans. Ind. Electron. 2013, 61, 2464–2472.
12. Li, N.; Lei, Y.; Jing, L.; Ding, S.X. An improved exponential model for predicting remaining useful life of rolling element bearings. IEEE Trans. Ind. Electron. 2015, 62, 7762–7773.
13. Paris, P.C.; Erdogan, F. A critical analysis of crack propagation laws. J. Basic Eng. 1963, 85, 528–533.
14. Sutrisno, E.; Oh, H.; Vasan, A.S.S.; Pecht, M. Estimation of remaining useful life of ball bearings using data driven methodologies. In Proceedings of the 2012 IEEE Conference on Prognostics and Health Management, Denver, CO, USA, 18–21 June 2012; pp. 1–7.
15. Xia, M.; Kong, F.; Hu, F. An approach for bearing fault diagnosis based on PCA and multiple classifier fusion. In Proceedings of the 6th IEEE Joint International Information Technology and Artificial Intelligence Conference, Chongqing, China, 20–22 August 2011; pp. 321–325.
16. Jiang, L.; Fu, X.; Cui, J.; Li, Z. Fault detection of rolling element bearing based on principal component analysis. In Proceedings of the 24th Chinese Control and Decision Conference (CCDC), Taiyuan, China, 23–25 May 2012; pp. 2944–2948.
17. Georgoulas, G.; Loutas, T.; Stylios, C.D.; Kostopoulos, V. Bearing fault detection based on hybrid ensemble detector and empirical mode decomposition. Mech. Syst. Signal Process. 2013, 41, 510–525.
18. Kang, M.; Kim, J.; Kim, J.M. Reliable fault diagnosis for incipient low-speed bearings using fault feature analysis based on a binary bat algorithm. Inf. Sci. 2015, 294, 423–438.
19. Dong, S.; Sun, D.; Tang, B.; Gao, Z.; Wang, Y.; Yu, W.; Xia, M. Bearing degradation state recognition based on kernel PCA and wavelet kernel SVM. J. Mech. Eng. Sci. 2015, 229, 2827–2834.
20. Xie, Y.; Zhang, T. A fault diagnosis approach using SVM with data dimension reduction by PCA and LD method. In Proceedings of the 2015 Chinese Automation Congress (CAC), Wuhan, China, 27–29 November 2015; pp. 869–874.
21. Yunusa-Kaltungo, A.; Sinha, J.K.; Nembhard, A.D. A novel fault diagnosis technique for enhancing maintenance and reliability of rotating machines. Struct. Health Monit. 2015, 14, 604–621.
22. Yunusa-Kaltungo, A.; Sinha, J.K. Generic vibration-based faults identification approach for identical rotating machines installed on different foundations. Vib. Rotating Mach. 2016, 11, 499–510.
23. Yunusa-Kaltungo, A.; Sinha, J.K. Sensitivity analysis of higher order coherent spectra in machine faults diagnosis. Struct. Health Monit. 2016, 15, 555–567.
24. Sheriff, M.Z.; Mansouri, M.; Karim, M.N.; Nounou, H.; Nounou, M. Fault detection using multiscale PCA-based moving window GLRT. J. Process Control 2017, 1, 47–64.
25. Fadda, M.L.; Moussaoui, A. Hybrid SOM–PCA method for modeling bearing faults detection and diagnosis. J. Braz. Soc. Mech. Sci. Eng. 2018, 40, 268.
26. Wang, Y.; Ma, X.; Qian, P. Wind turbine fault detection and identification through PCA-based optimal variable selection. IEEE Trans. Sustain. Energy 2018, 9, 1627–1635.
27. Stief, A.; Ottewill, J.R.; Baranowski, J.; Orkisz, M. A PCA and two-stage Bayesian sensor fusion approach for diagnosing electrical and mechanical faults in induction motors. IEEE Trans. Ind. Electron. 2019, 66, 9510–9520.
28. Hamadache, M.; Jung, J.H.; Park, J.; Youn, B.D. A comprehensive review of artificial intelligence-based approaches for rolling element bearing PHM: Shallow and deep learning. JMST Adv. 2019, 1, 125–151.
29. Hoang, D.T.; Kang, H.J. A survey on deep learning based bearing fault diagnosis. Neurocomputing 2019, 335, 327–335.
30. Ren, L.; Cui, J.; Sun, Y.; Cheng, X. Multi-bearing remaining useful life collaborative prediction: A deep learning approach. J. Manuf. Syst. 2017, 43, 248–256.
31. Guo, L.; Li, N.; Jia, F.; Lei, Y.; Lin, J. A recurrent neural network based health indicator for remaining useful life prediction of bearings. Neurocomputing 2017, 240, 98–109.
32. Ren, L.; Sun, Y.; Wang, H.; Zhang, L. Prediction of bearing remaining useful life with deep convolution neural network. IEEE Access 2018, 6, 13041–13049.
33. Mao, W.; He, J.; Tang, J.; Li, Y. Predicting remaining useful life of rolling bearings based on deep feature representation and long short-term memory neural network. Adv. Mech. Eng. 2018, 10, 12.
34. Ren, L.; Sun, Y.; Cui, J.; Zhang, L. Bearing remaining useful life prediction based on deep autoencoder and deep neural networks. J. Manuf. Syst. 2018, 48, 71–77.
35. Hinchi, A.Z.; Tkiouat, M. Rolling element bearing remaining useful life estimation based on a convolutional long-short-term memory network. Procedia Comput. Sci. 2018, 127, 123–132.
36. Zhu, J.; Chen, N.; Peng, W. Estimation of bearing remaining useful life based on multiscale convolutional neural network. IEEE Trans. Ind. Electron. 2018, 66, 3208–3216.
37. Yoo, Y.; Baek, J.G. A novel image feature for the remaining useful lifetime prediction of bearings based on continuous wavelet transform and convolutional neural network. Appl. Sci. 2018, 8, 1102.
38. Li, X.; Zhang, W.; Ding, Q. Deep learning-based remaining useful life estimation of bearings using multi-scale feature extraction. Reliab. Eng. Syst. Saf. 2019, 182, 208–218.
39. Ren, L.; Cheng, X.; Wang, X.; Cui, J.; Zhang, L. Multi-scale dense gate recurrent unit networks for bearing remaining useful life prediction. Future Gener. Comput. Syst. 2019, 94, 601–609.
40. Acoustics and Vibration Database: IEEE PHM 2012 Data Challenge Bearing Dataset. Available online: http://data-acoustics.com/measurements/bearing-faults/bearing-6/ (accessed on 19 December 2019).
41. Nectoux, P.; Gouriveau, R.; Medjaher, K.; Ramasso, E.; Chebel-Morello, B.; Zerhouni, N.; Varnier, C. PRONOSTIA: An experimental platform for bearings accelerated degradation tests. In Proceedings of the IEEE International Conference on Prognostics and Health Management, PHM’12, Denver, CO, USA, 18–21 June 2012; pp. 1–8.
42. Sarlin, P. Self-organizing time map: An abstraction of temporal multivariate patterns. Neurocomputing 2013, 99, 496–508.
43. Huang, N.E. Hilbert-Huang Transform and Its Applications; World Scientific: Singapore, 2014.
44. Zhao, X.; Ye, B.; Chen, T. Difference spectrum theory of singular value and its application to the fault diagnosis of headstock of lathe. J. Mech. Eng. 2010, 46, 100–108.
45. Soualhi, A.; Medjaher, K.; Zerhouni, N. Bearing health monitoring based on Hilbert–Huang transform, support vector machine, and regression. IEEE Trans. Instrum. Meas. 2015, 64, 52–62.
46. Yang, Y.; Sun, L.; Guo, C. Aero-material consumption prediction based on linear regression model. Procedia Comput. Sci. 2018, 131, 825–831.
47. Zhang, Y.; Liu, D.; Yu, J.; Peng, Y.; Peng, X. EMA remaining useful life prediction with weighted bagging GPR algorithm. Microelectron. Reliab. 2017, 75, 253–263.
48. Liu, Z.; Cheng, Y.; Wang, P.; Yu, Y.; Long, Y. A method for remaining useful life prediction of crystal oscillators using the Bayesian approach and extreme learning machine under uncertainty. Neurocomputing 2018, 305, 27–38.
49. Ding, X.; He, Q. Energy-fluctuated multiscale feature learning with deep convnet for intelligent spindle bearing fault diagnosis. IEEE Trans. Instrum. Meas. 2017, 66, 1926–1935.
50. Hong, S.; Zhou, Z.; Zio, E.; Hong, K. Condition assessment for the performance degradation of bearing based on a combinatorial feature extraction method. Digit. Signal Process. 2014, 27, 159–166.
51. Lei, Y.; Li, N.; Gontarz, S.; Lin, J.; Radkowski, S.; Dybala, J. A model-based method for remaining useful life prediction of machinery. IEEE Trans. Reliab. 2016, 65, 1314–1326.
52. Albelwi, S.; Mahmood, A. A framework for designing the architectures of deep convolutional neural networks. Entropy 2017, 19, 242.
53. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.
54. Lipton, Z.C.; Berkowitz, J.; Elkan, C. A critical review of recurrent neural networks for sequence learning. arXiv 2015, arXiv:1506.00019.
55. Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhutdinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

| Ref. | Networks | Features | Methods Compared | Year |
|---|---|---|---|---|
| Ren et al. [30] | DNN | TDF + FDF | GBDT, SVM, BPNN, GR, BR | 2017 |
| Guo et al. [31] | RNN (LSTM) | RNN-HI (RS + TFDF) | SOM-HI | 2017 |
| Ren et al. [32] | CNN + DNN | Spectrum-Principal-Energy-Vector | SVM and DNN with wavelet features | 2018 |
| Mao et al. [33] | CNN + LSTM | Hilbert-Huang Transform Marginal Spectrum | SVM, LR, GPR, ELM | 2018 |
| Ren et al. [34] | DAE + DNN | TDF + FDF + TFDF | NAE-DNN, DNN/FSPS, SVM | 2018 |
| Hinchi et al. [35] | Convolutional LSTM | Raw data | CALCE | 2018 |
| Zhu et al. [36] | MSCNN | Time-Frequency-Representation (TFR) | RNN-HI, SOM-HI, SVR-HI | 2018 |
| Yoo et al. [37] | CNN | Continuous Wavelet Transform and CNN-based Health Indicator (CWTCNN-HI) | CALCE and methods proposed by Hong et al., Guo et al., and Lei et al. | 2018 |
| Li et al. [38] | MSCNN | Multi-scale High-level Representation | DNN, SSL, SSH, NoFPT | 2019 |
| Ren et al. [39] | RBM + MDGRU | TDF + FDF + TFDF | SVM, RF, BR | 2019 |

| Conditions | Load (N) | Speed (rpm) | Bearings |
|---|---|---|---|
| 1 | 4000 | 1800 | Bearing1-1, Bearing1-2, Bearing1-3, Bearing1-4, Bearing1-5, Bearing1-6, Bearing1-7 |
| 2 | 4200 | 1650 | Bearing2-1, Bearing2-2, Bearing2-3, Bearing2-4, Bearing2-5, Bearing2-6, Bearing2-7 |
| 3 | 5000 | 1500 | Bearing3-1, Bearing3-2, Bearing3-3 |

Column headers give the percentage of data used for training.

| Method | Metric | 70% | 75% | 80% | 85% | 90% | 95% |
|---|---|---|---|---|---|---|---|
| GBDT | MAE | 0.0378 | 0.0393 | 0.0378 | 0.0303 | 0.0378 | 0.0378 |
| GBDT | RMSE | 0.056 | 0.0547 | 0.0564 | 0.0552 | 0.0569 | 0.0866 |
| SVM | MAE | 0.06 | 0.058 | 0.057 | 0.057 | 0.058 | 0.059 |
| SVM | RMSE | 0.081 | 0.0797 | 0.0785 | 0.0787 | 0.079 | 0.0866 |
| BPNN | MAE | 0.1075 | 0.1106 | 0.1121 | 0.1121 | 0.1196 | 0.1181 |
| BPNN | RMSE | 0.1428 | 0.1471 | 0.1457 | 0.1485 | 0.1514 | 0.1557 |
| GR | MAE | 0.059 | 0.0575 | 0.0575 | 0.0575 | 0.0575 | 0.059 |
| GR | RMSE | 0.0927 | 0.0915 | 0.0947 | 0.092 | 0.0922 | 0.0925 |
| BR | MAE | 0.1368 | 0.1396 | 0.1393 | 0.1391 | 0.1403 | 0.1385 |
| BR | RMSE | 0.17 | 0.1728 | 0.1714 | 0.1714 | 0.1728 | 0.1714 |
| DNN | MAE | 0.0253 | 0.0283 | 0.0268 | 0.0298 | 0.0253 | 0.0253 |
| DNN | RMSE | 0.0398 | 0.043 | 0.0432 | 0.0464 | 0.0378 | 0.0395 |
| TSMC-CNN (2) | MAE | 0.023 | 0.0222 | 0.0222 | 0.022 | 0.0218 | 0.0218 |
| TSMC-CNN (2) | RMSE | 0.0331 | 0.0316 | 0.0315 | 0.0306 | 0.0305 | 0.0295 |
| TSMC-CNN (3) | MAE | 0.022 | 0.0214 | 0.0214 | 0.0212 | 0.0206 | 0.0204 |
| TSMC-CNN (3) | RMSE | 0.0323 | 0.0315 | 0.031 | 0.0304 | 0.0295 | 0.0285 |
| TSMC-CNN (4) | MAE | 0.0214 | 0.0211 | 0.0211 | 0.0205 | 0.0199 | 0.0194 |
| TSMC-CNN (4) | RMSE | 0.0306 | 0.0302 | 0.0302 | 0.03 | 0.0286 | 0.0275 |
| TSMC-CNN (5) | MAE | 0.021 | 0.0219 | 0.0217 | 0.0207 | 0.0208 | 0.0211 |
| TSMC-CNN (5) | RMSE | 0.0316 | 0.0314 | 0.0306 | 0.0297 | 0.0297 | 0.03 |
| TSMC-CNN (8) | MAE | 0.025 | 0.0258 | 0.0254 | 0.0247 | 0.024 | 0.0247 |
| TSMC-CNN (8) | RMSE | 0.0359 | 0.0369 | 0.0356 | 0.0358 | 0.0345 | 0.0352 |
| TSMC-CNN (4)-ALSTM | MAE | 0.0208 | 0.0201 | 0.0199 | 0.0196 | 0.0192 | 0.0186 |
| TSMC-CNN (4)-ALSTM | RMSE | 0.0302 | 0.0296 | 0.0293 | 0.0289 | 0.0275 | 0.0268 |
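
The table above reports MAE and RMSE for each method. As a reading aid, the sketch below shows the standard definitions of the two metrics and how a relative error reduction between two table entries can be computed. The sample `y_true` and `y_pred` values are hypothetical and assume RUL normalized to [0, 1]. The function names are illustrative and not taken from the paper. Only the four 95% entries for DNN and TSMC-CNN (4)-ALSTM come from the table.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |true - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared difference."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def relative_reduction(baseline, proposed):
    """Fraction by which `proposed` lowers the error relative to `baseline`."""
    return (baseline - proposed) / baseline


# Hypothetical normalized RUL targets and predictions (not data from the paper).
y_true = [1.0, 0.75, 0.5, 0.25, 0.0]
y_pred = [0.9, 0.8, 0.5, 0.2, 0.1]
print(f"MAE  = {mae(y_true, y_pred):.4f}")
print(f"RMSE = {rmse(y_true, y_pred):.4f}")

# Table entries at 95% training data: DNN versus TSMC-CNN (4)-ALSTM.
mae_gain = relative_reduction(0.0253, 0.0186)
rmse_gain = relative_reduction(0.0395, 0.0268)
print(f"MAE reduction vs. DNN:  {mae_gain:.1%}")
print(f"RMSE reduction vs. DNN: {rmse_gain:.1%}")
```

RMSE squares each error before averaging, so it penalizes large deviations more heavily than MAE. This is why every RMSE entry in the table is at least as large as the corresponding MAE entry.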

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jiang, J.-R.; Lee, J.-E.; Zeng, Y.-M. Time Series Multiple Channel Convolutional Neural Network with Attention-Based Long Short-Term Memory for Predicting Bearing Remaining Useful Life. Sensors 2020, 20, 166. https://doi.org/10.3390/s20010166
