Abstract

The rapid growth of location-based services has increased the demand for indoor positioning and localization. Wi-Fi fingerprint-based localization is one of the promising indoor localization techniques, yet the variation of received signal strength is a major obstacle to accurate localization. Magnetic field-based localization has emerged as an alternative and has shown potential as an indoor localization technology. However, one of its major limitations is that localization accuracy degrades when different smartphones are used: the localization performance differs across smartphones even with the same localization technique. This research uses a deep neural network-based ensemble classifier to perform indoor localization with heterogeneous devices. The chief aim is to devise an approach that achieves similar localization accuracy across various smartphones. Features extracted from the magnetic data of a Galaxy S8 are fed into neural networks (NNs) for training. The experiments are performed with Galaxy S8, LG G6, LG G7, and Galaxy A8 smartphones to investigate the impact of device dependence on localization accuracy. Results demonstrate that NNs can play a significant role in mitigating the impact of device heterogeneity and increasing indoor localization accuracy. The proposed approach achieves a localization accuracy of 2.64 m at 50% on four different devices. The mean error is 2.23 m, 2.52 m, 2.59 m, and 2.78 m for Galaxy S8, LG G6, LG G7, and Galaxy A8, respectively. Experiments on a publicly available magnetic dataset collected with a Sony Xperia M2 show a mean error of 2.84 m with a standard deviation of 2.24 m, while the error at 50% is 2.33 m. Furthermore, the impact of various device attitudes on the localization accuracy is investigated.

1. Introduction

Indoor localization has become an active research area over the last decade. The proposal of RADAR [1] pioneered indoor positioning using radio signals. The emergence of smartphones in the 21st century paved the way for localization to flourish, and the inception and penetration of location-based services (LBS) further accelerated research in the field. Today, LBS are offered to a large number of users, both indoors and outdoors. The global positioning system (GPS) can achieve localization accuracy ranging from 17 m to better than a few meters [2]. However, this accuracy depends upon many factors, including the number and geometry of collected observations, the mode and type of observation, the measurement model, the level of biases used, the design of the GPS receiver, and the receiving environment, such as the presence or absence of obstacles [2,3]. GPS is used for outdoor positioning, yet its sensitivity to occlusions, including ceilings and walls, makes it inappropriate and inefficient for indoor localization. Although GPS can be used indoors when the user is close to wide windows and the receiver can get signals, the provided location may have a high error, which in certain scenarios can be larger than the indoor localization area itself. The lower signal-to-noise ratio and multipath phenomena result in a less reliable position [4]. This led researchers to investigate alternative technologies that could overcome such limitations and work efficiently in indoor environments.

A large body of work has been presented on such technologies, including ultra-wideband (UWB) [5], radio frequency identification (RFID) [6], Wi-Fi [7], and vision [8]. Such technologies are, however, limited by their dependence on additional hardware (with the exception of vision), which needs to be installed in the area intended for localization. Additionally, their wide applicability is restricted by the software and hardware limitations of these techniques. For example, RFID is based on short-range communication and works only in a small area where RFID tags have been installed. UWB-based indoor localization systems provide precise position information but are expensive. Additionally, in complex and occluded environments, more nodes are required to achieve higher accuracy, which further increases the cost [9]. Vision-based indoor localization does not need additional infrastructure but requires a significant amount of computational resources to perform image matching. A modern graphics processing unit (GPU) can do the image matching in a reduced amount of time; however, the performance of vision-based systems degrades under low lighting conditions and with poor image quality, which can result from the way the user holds the phone.

The proliferation and wide usage of modern smartphones present a potential solution to many of the above-mentioned limitations. Modern smartphones are equipped with a variety of sensors that can be leveraged to perform indoor localization. Smartphone sensors including Wi-Fi, Bluetooth, and the camera have resulted in the development of many localization techniques. Wi-Fi and Bluetooth based localization systems are limited by the inherent limitations of wireless communication, e.g., propagation losses and environmental changes cause substantial changes in received signal strength (RSS) [1,10,11]. Multipath, shadowing, signal fading, and the impact of other dynamic factors, including human mobility, on signal fluctuation may lead to very high localization error. In the same fashion, human body loss causes signal absorption, and the resulting change in the RSS leads to higher localization error [12]. Additionally, the RSS has been found to depend on hardware and antenna design, which may be an inherent limitation of Wi-Fi positioning accuracy [13].

The sensors embedded in the smartphone are used in a variety of practical tasks. The authors in [14] present an object classification framework using a hyperspectral camera. Similarly, wearable sensors are used in many practical applications. Triaxial accelerometer-based human motion detection systems are proposed in various research works [15,16], where the features extracted from the data are used in machine learning-based models. Feature extraction is very important for such applications, and various works aim at finding suitable features for these tasks. Research [17] works on feature extraction on unmanned aerial vehicles (UAV). An algebraic representation of spatio-temporal real-world objects is presented in [18]. Local descriptors are used with support vector machines (SVM) to track a person across two different cameras [19]. In the same way, similar human interactions are recognized with a supervised framework in [20]. Motion estimation is another important task in today’s real-world applications, and a large body of work exists on human activity detection [21], motion estimation, and real-time motion detection through multiple cameras [22,23]. Various sensors have been employed to achieve such tasks. For example, the authors in [24] present a geometric-constrained multi-view image matching method that aims at the efficient and reliable processing of multiple remote sensing images. Machine learning [25] as well as deep learning frameworks [26] have also been used for human and human interaction detection.

Geomagnetic field-based localization has emerged as a new paradigm during the last few years [27,28,29], and a large body of work [30,31,32,33] now uses the earth’s magnetic field data for indoor localization. The geomagnetic field (referred to as the magnetic field for convenience) is a natural phenomenon caused by the flow of convection currents in the earth’s outer core. The magnetic field is a vector field with a direction and a magnitude, so three parameters are required to represent it at a point. The north, east, and downward components are denoted x, y, and z. A common way to represent the magnetic field is with the total intensity F, the inclination I, and the declination D. However, the most widely used representation is through magnetic x, y, z, and F. Another representation uses the horizontal component H, the vertical component z, and the declination D [34]. The total magnetic strength on the earth’s surface varies from 25 µT to 65 µT [35]. The magnetic strength and direction do not change over a small restricted area, yet man-made structures obstruct the magnetic field and alter it, causing magnetic disturbances. Such disturbances are called anomalies and are observed to exhibit unique behavior. These magnetic anomalies have been studied and used as fingerprints in many research works [30,36]. However, techniques that use magnetic field fingerprints have two major limitations. First, because heterogeneous smartphones use different magnetometers, the measured magnetic strength differs even at the same location [27]. This limits the wide applicability of magnetic field-based localization systems, as building a common fingerprint for various smartphones is not possible. As a result, the localization error differs across smartphones even when a single localization approach is adopted. Second, multiple distant locations may have very similar magnetic signatures due to the indoor environment, which is highly probable when the localization space is large. On account of these shortcomings, the fingerprinting technique is not well suited to indoor positioning with magnetic data. This study aims to leverage deep neural networks (NNs) to address these issues.
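The relationship between the component representation (x, y, z) and the intensity and angle representation (F, H, I, D) described above can be illustrated with a short sketch. It uses the standard geomagnetic definitions; the function name and the sample values are illustrative assumptions and are not taken from the experiments.

```python
import math

def magnetic_elements(x, y, z):
    """Return (F, H, I, D) from north (x), east (y), and down (z) components.

    F and H are in the same unit as the inputs; I and D are in degrees.
    """
    h = math.hypot(x, y)                           # horizontal component H
    f = math.hypot(h, z)                           # total intensity F
    inclination = math.degrees(math.atan2(z, h))   # angle below the horizontal
    declination = math.degrees(math.atan2(y, x))   # angle from north toward east
    return f, h, inclination, declination

# Illustrative reading in microtesla (assumed values).
f, h, inc, dec = magnetic_elements(30.0, 0.0, 40.0)
print(round(f, 2), round(h, 2), round(inc, 2), round(dec, 2))
```

Because F depends only on the magnitude of the vector, it is the one element that does not change when the sensor frame is rotated, which is relevant to the device attitude discussion later in the paper.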

Deep learning has recently been used to solve many problems, and indoor localization is no exception. Deep NNs and convolutional neural networks (CNNs) have been used for indoor scene recognition, object detection, localization, etc. However, a single NN may perform poorly on noisy data. This study therefore proposes an ensemble based on multiple NNs that are trained separately. The prediction from each of these classifiers is then used to find the final location of the user. Deep learning is a data-intensive technique and requires a large amount of data for training. For this purpose, thousands of magnetic samples have been collected. The key contributions of this research can be summarized as:

- A deep neural network (NN) based approach is presented which performs the indoor localization based on the features extracted from the magnetic data.

- A soft voting criterion is defined to combine the predictions of multiple NNs. All NNs are trained with the same magnetic data to predict the user’s current location.

- The proposed approach is tested with heterogeneous devices including Galaxy S8, LG G6, LG G7, and Galaxy A8 to evaluate the localization accuracy. The results are compared with support vector machines (SVM) and another magnetic localization approach.

- Besides our own collected dataset, the proposed approach is tested on a publicly available magnetic dataset where the data have been collected with a Sony Xperia M2 smartphone.

- The impact of varying device attitude is also investigated: the device attitude is changed from ‘navigation’ to ‘call listening’ and ‘front pocket’ mode to analyze the localization performance of the proposed approach.

The rest of the paper is organized in the following manner. Section 2 presents an overview of a few studies related to this research. The current challenges of magnetic field based positioning are discussed in Section 3. Section 4 describes the proposed approach while Section 5 details the experiment setup and analyzes the results. Finally, conclusions are given in Section 6.

2. Related Work

The application of magnetic field data for indoor localization has been investigated by many research works. Such research includes the analysis of properties of magnetic field data that can be used for localization, as well as the impact of various devices usage, and the attitude of these devices [28,30,36]. Research works using the magnetic field can broadly be categorized into three groups: using magnetic field data alone, hybrid approaches that combine magnetic field with Wi-Fi, pedestrian dead reckoning (PDR), vision, etc., and approaches that utilize machine/deep learning. A few works related to each category are discussed here.

Authors in [37] investigated the use of a smartphone magnetometer to perform indoor localization. The investigation is aimed at studying the localization performance of magnetic data alone. The localization error is low if more elements of the magnetic field are used. However, the error may rise up to 20 m when the localization area is large and complicated by structure. The proposed technique is based on the fingerprint database of magnetic field data, which is laborious and time-consuming. Authors in [31] used the crowdsourcing approach to build the fingerprint and minimize the labor and cost involved in fingerprinting. They employed a revised Monte Carlo technique to locate a pedestrian indoor. The proposed approach is able to converge to a 5 m area by using 30 s data. The research suggests that localization error using magnetic field data alone is higher and other assistive technologies could help to lower the error. Therefore, many research works focusing on the use of magnetic field data with other localization techniques can be found.

For example, an indoor localization system is presented in [38] that combines Wi-Fi signals with the magnetic field data to build the fingerprint database. Initially, Wi-Fi access points (AP) are used to calculate an approximate location. This position is later used to restrict the search space that helps reduce the localization error to 4.5 m. The use of magnetic field data alone with the proposed technique results in an error of up to 16.6 m. Similarly, authors in [39] present an approach that is based on the fusion of magnetic field data with PDR. An artificial neural network is used to identify the user walking and stationary modes. The user movement is tracked at regular intervals and its relevant position is utilized to refine the magnetic position. The reported accuracy is 2–3 m at 50% with two different smartphones. Furthermore, an approach is proposed in [40] which works with PDR and magnetic data to locate a user in the indoor environment. An approach similar to particle filter has been adopted which takes into account the PDR and magnetic position of the user and predicts the final location of the user. Experiment results show under 2 m accuracy with two different devices.

Research shows that fusing more than one localization technology can significantly improve the localization accuracy. For instance, the authors in [41] present a system called WAIPO, which is based on the fusion of Wi-Fi and magnetic fingerprints, image matching, and people’s co-occurrence. Initially, the position is estimated using Wi-Fi fingerprints and can be further refined with image matching and Bluetooth beacons. The final position is then calculated with the magnetic data from the user’s smartphone. The reported accuracy of WAIPO is under 2 m at 98%.

Various machine learning models have also been proposed for human detection in an indoor environment. Such models work on various features extracted from sensor data and perform human and object detection. For example, research works [42,43] focus on human interaction recognition with the help of artificial neural networks. A genetic algorithm is applied to identify prominent objects under varying environmental settings. Likewise, the authors in [44] make use of a graph kernel-based SVM and bag-of-words to perform abnormal activity detection. The authors in [45] investigate the use of K-means++ and support vector data description to cluster the data for regions of interest. Additionally, the use of pyroelectric infrared sensors to perform abnormal activity detection is reported in [46]. The similarity between normal training samples is measured using Kullback–Leibler divergence, and one-class SVMs are used to perform the activity detection. Similarly, depth information from depth sensors has been shown to improve human activity recognition and tracking in smart houses [47,48].

Recently, deep learning has been reported to perform localization with smartphone sensors. The authors in [49] propose a system that makes use of a variety of smartphone sensors to localize a pedestrian. The research uses a smartphone camera, motion sensors, compass, magnetometer, and Wi-Fi to do the localization. A CNN is designed to identify the indoor scene, and the recognized scene is later used to narrow down the search space in the magnetic database. The reported localization error is 1.32 at 95%. In the same fashion, the research in [50] proposes a multi-story localization approach based on smartphone sensors, in which the smartphone camera is used along with the magnetometer. Instead of magnetic intensity, magnetic patterns are used to build the database. Smartphone camera pictures are used for indoor scene recognition. The CNN model helps to identify a specific floor and also increases the localization accuracy by narrowing down the magnetic database search space. The reported localization error is 1.04 m at 50%. CNNs have been used directly with magnetic data as well. For example, the authors in [51] present a magnetic field-based indoor localization method that uses a CNN with a smartwatch. The magnetic data along with the smartwatch orientation data are used for training, and the experimental results are promising. NNs have been used in Wi-Fi based localization as well. The authors in [52] propose stacked denoising auto-encoder based feature extraction to extract Wi-Fi fingerprints for localization. The proposed approach tackles the problem of RSS fluctuation in dynamic environments and improves the localization accuracy.

The above-mentioned research works are limited by two factors in essence. The first problem lies in the use of Wi-Fi signals, which, as already discussed, are vulnerable to propagation loss. Furthermore, the RSS value is subject to dynamic factors including the presence of obstacles, shadowing, and human mobility. Secondly, the impact of device heterogeneity is not studied very well. Very few studies consider device heterogeneity, yet they, in turn, use longer data samples. For example, the authors in [39] consider 14 s data while the authors in [40] employ 8 s data to calculate the final location of the user. Additionally, the use of a smartphone camera consumes the battery very fast and is not an efficient solution. Similarly, the camera has its own inherent limitations including image quality under poor light conditions and dark environments. It is noteworthy to point out that deep learning has been utilized on smartphone camera images alone. This study aims to use deep neural networks on the magnetic field data to perform indoor localization.

3. Current Challenges in Magnetic Field Based Localization

3.1. Indoor Infrastructure and Time Variance

Although the magnetic field has proven to be time-invariant, it is highly affected by indoor infrastructural changes, especially those involving ferromagnetic materials such as iron and steel. Similarly, the addition of steel doors and cupboards causes changes in the magnetic field strength [27]. Additionally, the placement of refrigerators and vending machines in corridors also tends to have a considerable impact on the magnetic field.

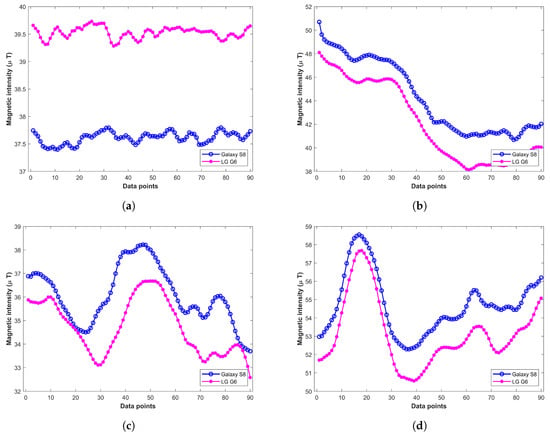

3.2. Heterogeneity of Smartphones

The magnetic field readings differ across devices even for the same magnetic field [27]. Hence, it is a big challenge to build a localization system that works in the same fashion with heterogeneous devices, given the diverse categories of devices available today. This is shown in Figure 1, where the magnetic readings from a Samsung Galaxy S8 and an LG G6 are plotted. Each subplot shows the magnetic readings from the two devices at a separate location. The readings are collected while standing at the same place with the smartphone held in the hand in front of the body.

Figure 1.

Magnetic readings from various smartphones at different indoor locations, (a) Location 1; (b) Location 2; (c) Location 3; (d) Location 4.

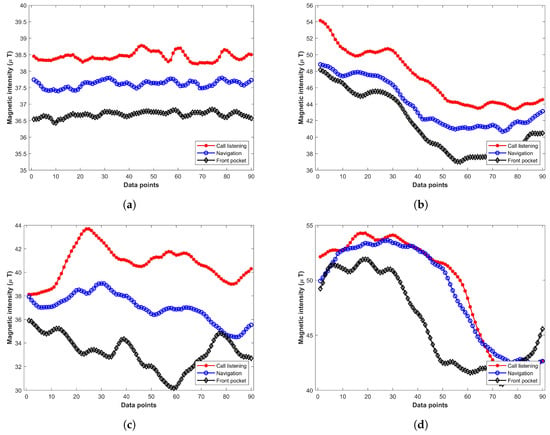

3.3. Various Device Attitudes

Different attitudes of even the same device change the magnetic readings significantly. For example, Figure 2 shows the magnetic readings taken with a Galaxy S8 at various locations with different orientations. Each subgraph shows the magnetic readings at the same location. The readings are collected for three attitudes: ‘navigation’, ‘call listening’, and ‘front pocket’. The figure shows that the total magnetic intensity differs across orientations. Experiments show, however, that the change in total magnetic intensity is minimal compared to that of the x and y components. Thus, for a magnetic field-based positioning system that intends to use the magnetic x, y, and z components, the common assumption is to fix the attitude of the device [27,53,54]. Traditionally, the phone is held in the hand in front of the body while the user walks.

Figure 2.

Magnetic readings with various attitudes of device at different places, (a) Location 1; (b) Location 2; (c) Location 3; (d) Location 4.

3.4. Low Strength of the Magnetic Field

The magnetic field is commonly very weak, with its strength measured in µT. Thus, it is possible to observe the same magnetic field strength at a number of locations in the indoor environment. Although the magnetic field is directional and the 3D magnetic signals in the x, y, and z directions can be utilized for positioning, this is difficult to implement in practice, as it requires tracking the device attitude during the positioning process.

4. Materials and Methods

This section provides the details of the proposed approach. The proposed approach is based on the use of deep learning to train NNs for localization. The first task is to find suitable features that are fed into the NNs.

4.1. Features Selection

The major limitation of using the magnetic field is the device dependence. The intensity of collected magnetic data may be different depending on the sensitivity of the installed magnetometer in various smartphones.

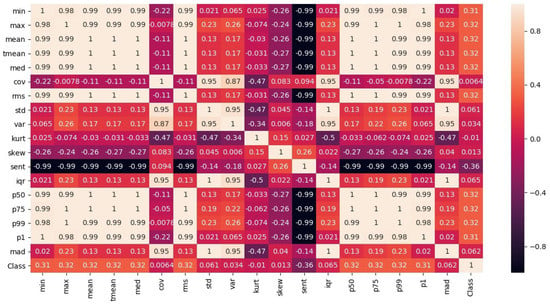

Another shortcoming of magnetic data is its low dimensionality. The magnetic x, y, and z are traditionally used to build the fingerprint. These values may be very similar at multiple locations, especially in a large space. Thus, instead of using the raw magnetic field data, this study works with features extracted from these data. Initially, a total of 18 features, as shown in Table 1, are shortlisted. Then, a feature analysis is performed and the correlation of each feature to the prediction is evaluated, upon which the features ‘coefficient of variance’, ‘kurtosis’, ‘Shannon’s entropy’, and ‘skewness’ are dropped due to their low correlation with the classification label. The correlation of the features is shown in Figure 3.
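The feature screening described above can be sketched as follows. This is only an illustration under stated assumptions: the feature set below is hypothetical (the contents of Table 1 are not reproduced here), Pearson correlation against a numeric label is used as a stand-in for the feature analysis, and the 0.3 cut-off and the synthetic data are arbitrary.

```python
import numpy as np

def window_features(window):
    """Statistical features of one 1-D window of magnetic samples (hypothetical set)."""
    w = np.asarray(window, dtype=float)
    mean, std = w.mean(), w.std()
    centered = w - mean
    return {
        "mean": float(mean),
        "std": float(std),
        "min": float(w.min()),
        "max": float(w.max()),
        "median": float(np.median(w)),
        "range": float(w.max() - w.min()),
        # Skewness and kurtosis are the standardized third and fourth moments.
        "skewness": float((centered ** 3).mean() / std ** 3) if std > 0 else 0.0,
        "kurtosis": float((centered ** 4).mean() / std ** 4) if std > 0 else 0.0,
    }

def correlation_with_label(feature_matrix, labels):
    """Absolute Pearson correlation of each feature column with the label."""
    X = np.asarray(feature_matrix, dtype=float)
    y = np.asarray(labels, dtype=float)
    return np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])

# Synthetic example: five locations whose mean intensity grows with the label.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(5), 20)
windows = [45.0 + lab + rng.normal(0.0, 0.3, size=20) for lab in labels]
feats = [window_features(w) for w in windows]
names = list(feats[0])
X = np.array([[f[n] for n in names] for f in feats])
corr = correlation_with_label(X, labels)
kept = [n for n, c in zip(names, corr) if c >= 0.3]  # assumed cut-off
print(kept)
```

In practice a class label is categorical, so a ranking based on mutual information or a model-based importance score may be more appropriate than Pearson correlation; the sketch only shows the compute-then-filter pattern.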

Table 1.

Features extracted from magnetic data.

Figure 3.

Correlation of selected features to predict a specific class. ’Class’ weight in rows shows the importance of features.

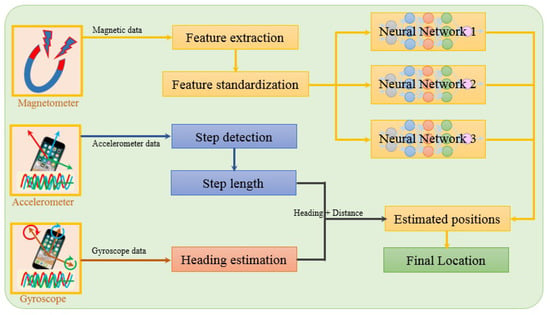

4.2. Proposed Approach

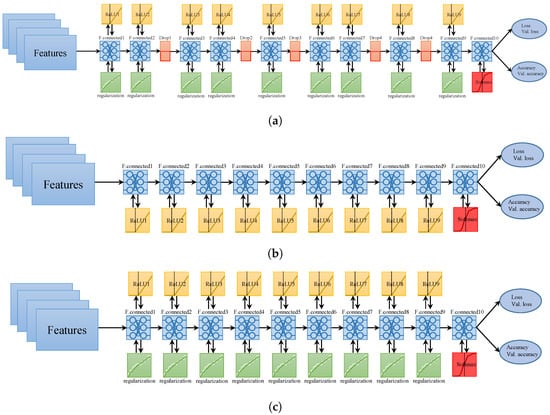

The architecture of the proposed approach is shown in Figure 4. The features extracted from the magnetic data are fed into neural networks for training. In addition, two other sensors, the accelerometer and the gyroscope, are used to approximate the user’s relative motion and direction. Three different NNs use the magnetic features to predict the user’s position. The purpose of using three different NNs is to combine their predictions such that the prediction accuracy can be maximized. Each NN has a different architecture in terms of the number of hidden layers, the sequence of layers, the activation functions, and the placement of dropout and regularization layers. The structure of each NN is shown in Figure 5.

Figure 4.

Architecture of the proposed approach.

Figure 5.

Structure of neural networks (NN), (a) $NN_1$; (b) $NN_2$; (c) $NN_3$.

In the same fashion, during the positioning phase, the features are first extracted from the user-collected data and fed into each NN to get the prediction. The user data are collected for three consecutive frames at a sampling rate of 10 Hz, where each frame comprises 2 s of data. Pre-processing plays an important role in the prediction process, as noise in the data degrades the performance of the classification models. The data from smartphone sensors contain noise, so a low-pass filter is applied to the sensor data before feature extraction. The positioning process follows the steps given in Algorithm 1. Here, each step is described in detail.
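The pre-processing and framing described above can be sketched as follows. The text does not specify the type of low-pass filter, so a first-order exponential smoother is used here as an assumption; the smoothing factor `alpha` and the synthetic signal are also assumed, while the 10 Hz rate, 2 s frame length, and three frames come from the text.

```python
import numpy as np

SAMPLING_RATE_HZ = 10   # from the text
FRAME_SECONDS = 2       # from the text

def low_pass(signal, alpha=0.3):
    """First-order IIR low-pass (exponential smoothing); alpha is an assumed value."""
    signal = np.asarray(signal, dtype=float)
    out = np.empty_like(signal)
    out[0] = signal[0]
    for i in range(1, len(signal)):
        out[i] = alpha * signal[i] + (1.0 - alpha) * out[i - 1]
    return out

def split_frames(signal, n_frames=3):
    """Cut the signal into consecutive, non-overlapping 2 s frames."""
    size = SAMPLING_RATE_HZ * FRAME_SECONDS
    signal = np.asarray(signal, dtype=float)
    if len(signal) < size * n_frames:
        raise ValueError("not enough samples for the requested frames")
    return [signal[i * size:(i + 1) * size] for i in range(n_frames)]

# Six seconds of noisy synthetic intensity readings.
rng = np.random.default_rng(0)
raw = 45.0 + rng.normal(0.0, 0.5, size=60)
frames = split_frames(low_pass(raw))
print([len(f) for f in frames])
```

Each frame then holds 20 samples, from which the magnetic features are extracted.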

Step 1 (line 1): The first step is to get the predictions from the three NNs. Instead of a single prediction from each NN, the top k classes with the highest probability are selected, where the value of k is 10. The value of k is based on empirical findings. The predictions are collected for the first frame $T_1$ and denoted as $P_1$, $P_2$, and $P_3$ for $NN_1$, $NN_2$, and $NN_3$, respectively.
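Selecting the top k classes from a network's output can be sketched as below. The function name and the toy probability vector are illustrative assumptions; only the value k = 10 comes from the text.

```python
import numpy as np

def top_k_classes(probabilities, k=10):
    """Return the indices of the k most probable classes, most probable first."""
    probabilities = np.asarray(probabilities, dtype=float)
    k = min(k, probabilities.size)
    order = np.argsort(probabilities)[::-1]  # descending probability
    return order[:k]

# Toy output over five location classes; k is reduced to 3 for readability.
probs = [0.05, 0.40, 0.10, 0.30, 0.15]
print(top_k_classes(probs, k=3).tolist())
```

Each returned class index would then be mapped to the coordinates of the corresponding location before the distance-based voting.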

Algorithm 1. Find user location.

Step 2 (lines 2–8): Once the top k predictions have been taken from each NN, a voting mechanism is needed to combine them. For this purpose, the Euclidean distance d between the predictions is calculated and a soft voting scheme is followed. A threshold $th_d$ is set to select the common predictions from the three NNs. The value of $th_d$ is 2 m, and the location candidates $L_c$ are selected with the following criterion:

$$L_c = \left\{ P_1^{i},\, P_2^{j},\, P_3^{l} \;\middle|\; d\left(P_1^{i}, P_2^{j}\right) \le th_d \ \text{and}\ d\left(P_1^{i}, P_3^{l}\right) \le th_d \right\}, \quad i, j, l \in \{1, \dots, k\} \tag{1}$$

Equation (1) states that, if the distance between one prediction by $NN_1$ and any of the predictions by $NN_2$ and $NN_3$ is less than or equal to 2 m, then all such predictions from $NN_1$, $NN_2$, and $NN_3$ are added to $L_c$. Since the three NNs may make the same predictions, duplicate predictions are removed from $L_c$.
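The distance-based voting described above can be sketched as follows. This is one reading of the criterion, not a definitive implementation: a prediction of the first network is treated as an anchor, and it is kept together with its neighbours from the other two networks only when both networks have at least one prediction within the 2 m threshold. The function name and the sample coordinates are assumptions.

```python
import math

def select_candidates(p1, p2, p3, threshold=2.0):
    """Collect location candidates shared by three prediction sets.

    p1, p2, p3 are lists of (x, y) tuples in metres, one list per network.
    """
    candidates = []
    for anchor in p1:
        near2 = [q for q in p2 if math.dist(anchor, q) <= threshold]
        near3 = [r for r in p3 if math.dist(anchor, r) <= threshold]
        if near2 and near3:
            for point in [anchor, *near2, *near3]:
                if point not in candidates:   # drop duplicate predictions
                    candidates.append(point)
    return candidates

p1 = [(0.0, 0.0), (10.0, 10.0)]
p2 = [(1.0, 0.0), (30.0, 5.0)]
p3 = [(0.0, 1.5), (1.0, 0.0)]
print(select_candidates(p1, p2, p3))
```

Isolated predictions such as (10.0, 10.0) and (30.0, 5.0) are discarded because no other network places a prediction near them.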

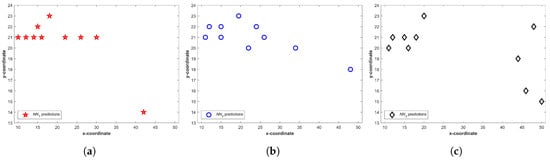

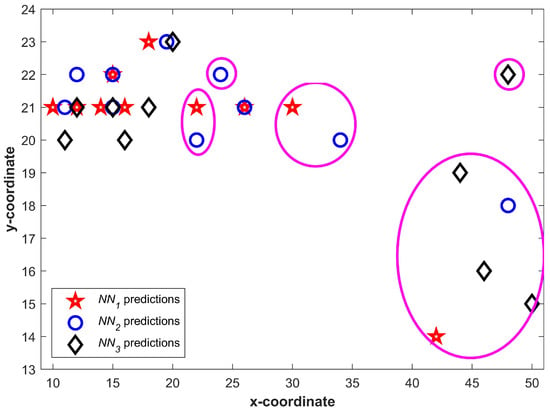

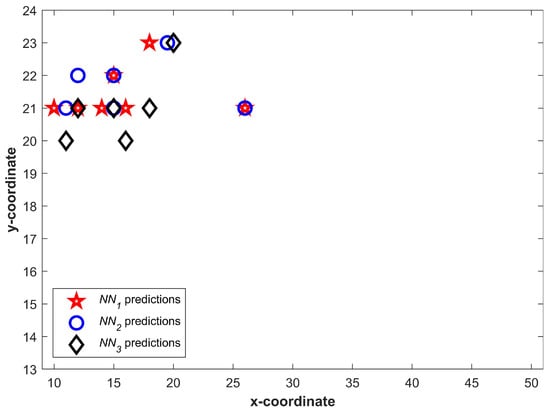

Figure 6 shows the predictions from the three NNs for $T_1$. A large number of predictions fall in a small area, and a few of them may even overlap. This becomes clearer when the predictions are drawn together, as in Figure 7, where the predictions that are close in the spatial dimension can easily be seen. Once Equation (1) is applied, the predictions not shared by the three NNs can easily be identified. The circled predictions in Figure 7 are made by only one of the NNs and are not shared by the other NNs (they do not fulfill the criterion set in Equation (1)). Once these predictions are removed, the selected location candidates $L_c$ can be seen in Figure 8.

Figure 6.

Predictions from three neural networks, (a) $NN_1$; (b) $NN_2$; (c) $NN_3$.

Figure 7.

All predictions from neural networks drawn together.

Figure 8.

Selected predictions from neural networks after using Equation (1).

Step 3 (lines 9–12): Now, the location candidates $L_c$ are updated for $T_2$ and $T_3$ using the user’s estimated step length and heading. To this end, the user’s approximate relative position is calculated, which requires step detection, step length estimation, and heading estimation. Step detection is performed using the method proposed in [39], while step length estimation is done with the Weinberg model [55]:

$$s = K \sqrt[4]{a_{max} - a_{min}}$$

where $a_{max}$ and $a_{min}$ are the maximum and minimum acceleration during a time period, and $K$ is a constant. Once the step length $s$ is estimated, the position $x_T$, $y_T$ can be calculated using $s$ and the heading estimate $\theta$ as follows:

$$x_T = s \cos\theta, \qquad y_T = s \sin\theta$$

where $x_T$ and $y_T$ give the approximate relative position and show only how much the user has traveled in a particular direction during time T. The $L_c$ can be updated using the approximated position of the user.
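The relative-position update can be sketched as below. The Weinberg model computes the step length as a constant times the fourth root of the difference between the maximum and minimum acceleration in a step; the value of the constant `k`, the heading convention (radians measured from the x axis), and all sample numbers are assumptions made for illustration.

```python
import math

def weinberg_step_length(a_max, a_min, k=0.5):
    """Weinberg model: k * (a_max - a_min) ** 0.25; k is an assumed calibration constant."""
    return k * max(a_max - a_min, 0.0) ** 0.25

def displacement(step_lengths, headings_rad):
    """Sum per-step displacements; each heading is measured from the x axis."""
    dx = sum(s * math.cos(h) for s, h in zip(step_lengths, headings_rad))
    dy = sum(s * math.sin(h) for s, h in zip(step_lengths, headings_rad))
    return dx, dy

def shift_candidates(candidates, dx, dy):
    """Move every location candidate by the estimated displacement."""
    return [(x + dx, y + dy) for x, y in candidates]

# Two steps detected in one frame: one along x, one along y.
steps = [weinberg_step_length(10.0, -6.0), weinberg_step_length(10.0, -6.0)]
dx, dy = displacement(steps, [0.0, math.pi / 2])
print(shift_candidates([(0.0, 0.0), (3.0, 4.0)], dx, dy))
```

Shifting the candidates by the displacement keeps them aligned with the user's motion before the predictions of the next frame are compared against them.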

After that, the location candidates $L_c$ are refined with the predictions from the NNs for $T_2$. The refining criterion is the same as given in Equation (1); however, d is now calculated between $L_c$ and the NN predictions. As mentioned before, each T comprises 2 s of data and, at a moderate speed, the user can travel up to 2 m during the considered time window. Thus, if the new predictions are within 2 m of the locations given in $L_c$, they are selected; otherwise, they are dropped. Since the distance data may contain an error due to noise, a compensating factor $\epsilon$ is introduced in the threshold, and its value is 0.18 m. The value of $\epsilon$ is based on the error found during experiments and represents the average error in pedestrian dead reckoning (PDR) estimation over 2 s. Now, the threshold becomes $th_d + \epsilon$, where $th_d$ is the 2 m threshold of Equation (1), and $L_c$ is defined as follows:

$$L_c = \left\{ P_n^{i} \;\middle|\; d\left(L_c^{j}, P_n^{i}\right) \le th_d + \epsilon \right\}, \quad n \in \{1, 2, 3\}$$

The same process is repeated for $T_3$, where the new $L_c$ are updated with PDR data and the new predictions from the NNs are refined with respect to $L_c$.

Step 4 (line 13): After this step, the location candidates converge to a small area. Their centroid is then calculated, which gives the user’s current predicted location.
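The refinement against the PDR-updated candidates and the final centroid can be sketched as follows. The 2 m window and the 0.18 m compensating factor come from the text; pooling the predictions of all networks into one list, the function names, and the sample coordinates are assumptions.

```python
import math

STEP_THRESHOLD_M = 2.0   # distance a user may cover in one 2 s frame (from the text)
PDR_ERROR_M = 0.18       # compensating factor for PDR error (from the text)

def refine(candidates, predictions, threshold=STEP_THRESHOLD_M + PDR_ERROR_M):
    """Keep new predictions that lie within `threshold` metres of any candidate."""
    return [p for p in predictions
            if any(math.dist(p, c) <= threshold for c in candidates)]

def centroid(points):
    """Mean x and mean y of a non-empty list of (x, y) points."""
    if not points:
        raise ValueError("no location candidates left")
    xs, ys = zip(*points)
    return sum(xs) / len(xs), sum(ys) / len(ys)

candidates = [(1.0, 0.0), (2.0, 0.0)]                          # after the PDR update
predictions = [(3.0, 0.0), (4.1, 0.0), (9.0, 9.0), (2.0, 1.0)]  # pooled NN outputs
kept = refine(candidates, predictions)
print(kept, centroid(kept))
```

The prediction at (4.1, 0.0) is 2.1 m from the nearest candidate, so it survives only because of the 0.18 m compensation.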

5. Results and Discussion

This section describes the experiment set up used and various scenarios followed during the experimentation. It also analyzes the results of the experiments.

5.1. Experiment Set Up

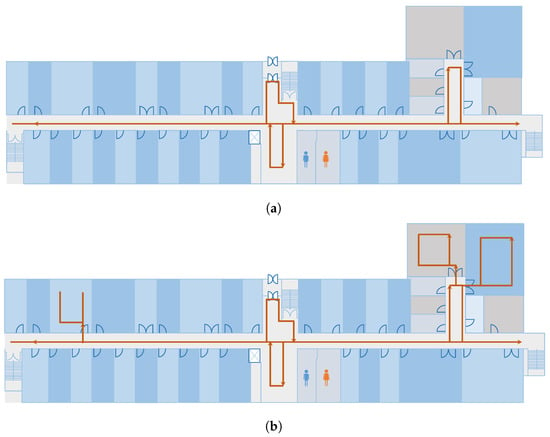

The experiments are conducted in the Information Technology (IT) Engineering building at Yeungnam University, Korea. The dimensions of the building where the localization is performed are 92 × 36 m². Two scenarios are tested using the proposed approach, as shown in Figure 9a,b. The first scenario covers the corridor space only, while scenario 2 includes three laboratories as well. The laboratories are added on account of their availability, while other rooms and offices are not open for experiments. The same path is followed in the forward and backward directions. The magnetic data for training are collected with a Samsung Galaxy S8 (SM-G950N) device (Samsung, Seoul, Korea). Since the model accuracy depends highly on the quality of the training data, the training data are collected in a grid where each point is separated by a distance of 1 m. Later, spline interpolation is used to generate the intermediate points. This process is carried out because continuous data collection from a walking user may change the size of the data depending upon the user’s speed. Instead, data are collected at specified points and intermediate data are interpolated. The interpolated data are then used to extract the magnetic features, which are fed into the NNs for training. The training is done using an Nvidia TitanX (Santa Clara, CA, USA) on an Intel i7 machine (San Jose, CA, USA) with 16.0 GB of random access memory. It takes approximately 2 to 2.5 h to finish the training process. A total of 33,500 samples are used for training with a split of 0.75 for training and 0.30 for validation, while 21,850 samples are used for testing. The testing is performed with an S8 and LG G6 (LG-G600L) device.

Figure 9.

The paths used for the experiments: (a) Scenario 1; (b) Scenario 2.

The performance of the proposed approach is evaluated in terms of the localization error. The test data are collected along with the ground truth points. The localization error is determined using the following equation:

$$e = \sqrt{(x_g - x_p)^2 + (y_g - y_p)^2}$$

where $x_g$ and $y_g$ are the x and y coordinates of the ground truth location, while $x_p$ and $y_p$ represent the location calculated using the proposed approach. It is noteworthy that the same path has been used for the training and testing experiments. However, the training data collection path and the test path do not coincide exactly: the user does not strictly follow the training path and may deviate. Thus, the training data collection points and the testing points on the path may differ.
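The localization error defined above is the Euclidean distance between the ground truth and the estimated position. A minimal sketch, with illustrative function names and coordinates:

```python
import math

def localization_error(truth, estimate):
    """Euclidean distance in metres between ground truth and estimated (x, y)."""
    return math.hypot(truth[0] - estimate[0], truth[1] - estimate[1])

def mean_error(truths, estimates):
    """Average localization error over paired test points."""
    errors = [localization_error(t, e) for t, e in zip(truths, estimates)]
    return sum(errors) / len(errors)

print(localization_error((0.0, 0.0), (3.0, 4.0)))
print(mean_error([(0.0, 0.0), (1.0, 1.0)], [(3.0, 4.0), (1.0, 2.0)]))
```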

5.2. Results with Total Magnetic Intensity Features

This study considers a number of scenarios to evaluate the performance of the proposed approach. Traditionally, four elements of magnetic field data are utilized to perform localization: magnetic x, y, z, and F. Here F represents the total magnetic intensity, i.e., the magnitude $\sqrt{x^2 + y^2 + z^2}$ of the three-axis reading, and is calculated as:

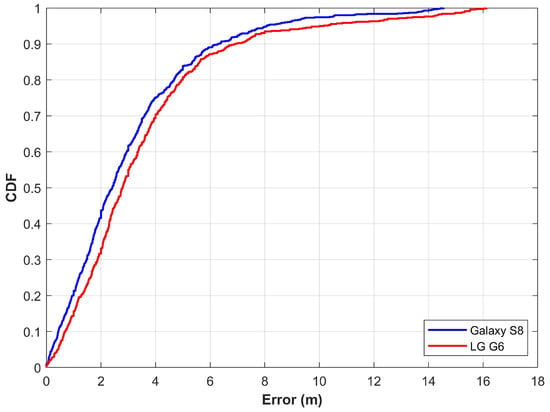
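The total magnetic intensity can be computed directly from the three axis readings. The sketch below assumes F is the magnitude of the magnetometer vector; the function name `total_intensity` and the sample values are illustrative.

```python
import math


def total_intensity(mx, my, mz):
    """Magnitude of the three-axis magnetic reading (same unit as the inputs)."""
    return math.sqrt(mx * mx + my * my + mz * mz)


f = total_intensity(3.0, 4.0, 12.0)  # 13.0
```

Because the magnitude of a vector does not change under rotation, F is in principle independent of how the axes are oriented, whereas the individual x, y, and z components are not.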

The first scenario uses magnetic F alone to extract the features for NN training, and testing is carried out in the same fashion. Results are shown in Figure 10. The proposed approach is able to localize a user within 2.41 m and 2.80 m at 50% for Galaxy S8 and LG G6, respectively. Although the magnetic data samples from these smartphones differ considerably in magnitude, the localization results are very similar. Similarly, the localization accuracy at 75% is 4.01 m and 4.43 m for S8 and G6, respectively. Localization results are good at 50% and 75%; however, the maximum error is high, i.e., 14.55 m and 16.12 m for S8 and G6, respectively. Thus, this study considers the use of four elements of the magnetic field to train the NNs and predict the user’s position.

Figure 10.

The cumulative distribution function (CDF) graph when using features from magnetic F alone.
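The "error at 50%" and "error at 75%" figures quoted in this section are read off the empirical CDF of the per-sample localization errors. The sketch below shows one common way to compute them; the exact percentile convention used for the reported numbers is not stated, so the nearest-rank rule here is an assumption, and the function names and error values are illustrative.

```python
import math


def error_at(errors, fraction):
    """Smallest error e such that at least `fraction` of samples have error <= e."""
    if not errors or not 0.0 < fraction <= 1.0:
        raise ValueError("need a non-empty list and a fraction in (0, 1]")
    ordered = sorted(errors)
    rank = math.ceil(fraction * len(ordered))   # nearest-rank percentile
    return ordered[rank - 1]


def fraction_within(errors, threshold_m):
    """Value of the empirical CDF at `threshold_m`."""
    return sum(1 for e in errors if e <= threshold_m) / len(errors)


errors_m = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]  # made-up errors in meters
p50 = error_at(errors_m, 0.50)            # 2.0
p75 = error_at(errors_m, 0.75)            # 3.0
share = fraction_within(errors_m, 3.0)    # 0.75
```

The two functions are inverses of each other in the sense that `error_at` maps a CDF level to an error, while `fraction_within` maps an error threshold to a CDF level.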

5.3. Results with Four Elements of Magnetic Data

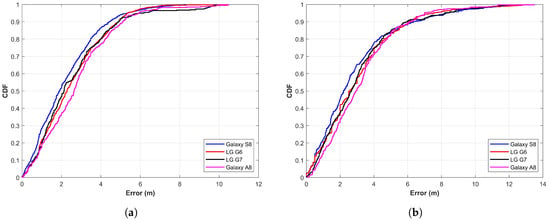

One challenge in using magnetic field data is that they are low-dimensional. Unlike a Wi-Fi fingerprint, which contains RSS values from a number of APs at a given point, the magnetic data have only four elements that can be used for fingerprints. Since the results with magnetic F alone do not meet the standards of indoor localization, the second experiment considers magnetic x, y, z, and F, and the features extracted from these elements are used for NN training. Hypothetically, the use of a higher number of magnetic elements would yield higher localization accuracy. The authors in [31,37] also point out that the localization accuracy is higher when magnetic x, y, z, and F are used than with magnetic F alone. Results generated using four elements of the magnetic field with the proposed approach are displayed in Figure 11. They show that the use of four magnetic elements improves localization accuracy. The localization accuracy at 50% is now 1.89 m, 2.27 m, 2.17 m, and 2.64 m for S8, G6, G7, and A8, respectively. In the same way, the proposed approach achieves a localization accuracy of 3.75 m at 75% irrespective of the smartphone when magnetic x, y, z, and F are used. The error exceeds 6 m only beyond 94.96% of the CDF. Similarly, the maximum error is reduced to 8.32 m, 10.44 m, 9.88 m, and 10.48 m for S8, G6, G7, and A8, respectively. Although the localization accuracy differs slightly across the four devices used in the experiment, it is very similar, which indicates that the approach is less affected by a change of smartphone. These results show that the proposed approach achieves the goal of the study, which is to devise a method that yields very similar localization results with various smartphones when magnetic data are used for localization.

Figure 11.

The CDF graph when using features from magnetic x, y, z, and F, (a) Scenario 1 and (b) Scenario 2.

Table 2 shows the statistics for localization with features from magnetic F alone and features from four elements of magnetic data for scenarios 1 and 2. It reveals that the mean error, as well as the standard deviation and maximum error, is high when localization is performed with magnetic F alone. The localization accuracy is better with four components of the magnetic field, as the feature vector is higher-dimensional than that of magnetic F alone. When the NNs are fed with more features, they perform better and the localization accuracy is higher. Scenario 2 adds three laboratories, which increase the complexity of the user’s walking movements and directions. This degrades the performance, and the mean error increases as a result. Even so, the proposed approach is able to localize the user within 4 m at 75% using two different devices.

Table 2.

Results statistics for single vs. multiple features based prediction.
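The summary statistics reported in the tables (mean error, standard deviation, and maximum error) can be computed from the per-sample errors as sketched below. Whether the reported standard deviation is the population or the sample version is not stated; the population form is assumed here, and the function name `summarize` and the error values are illustrative.

```python
import statistics


def summarize(errors):
    """Mean, population standard deviation, and maximum of a list of errors."""
    return {
        "mean": statistics.mean(errors),
        "std": statistics.pstdev(errors),   # assumption: population std
        "max": max(errors),
    }


stats = summarize([1.0, 2.0, 3.0, 6.0])  # made-up errors in meters
```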

5.4. Localization Results with Continuous Walk Training Data

As pointed out in Section 5.1, the training data are collected at grid points and later interpolated to extract features for training. This study also considers training data collected with a continuous walk in the localization area. For this purpose, the path shown in Figure 9a is followed with the Galaxy S8 held in the hand. During the data collection, the user walks continuously along the path at a medium pace without stopping. Only the training data collection has been changed, from ‘point to point data collection’ to ‘continuous data collection’; the testing procedure, in which the user is localized while walking on the test path, and the rest of the pipeline remain the same. The purpose of this experiment is to analyze whether the difference in training data collection impacts the localization accuracy. Figure 12 shows the results for the scenario where the training data are collected with a continuous walk.

Figure 12.

The CDF graph when localizing with features extracted from continuous walk data.

Detailed statistics for the experiment are shown in Table 3. Results show that the localization performance is slightly degraded when the training data are collected with a continuous walk. Each time the data are collected, the data samples may differ depending upon the walking speed of the user. Moreover, the walking speed may vary at different locations during the walk, which affects the extracted features and increases the localization error. Thus, the mean and maximum errors are higher for this scenario. Nevertheless, the proposed approach is able to localize a user within 3.94 m at 75% with two different devices.

Table 3.

Results statistics when training data are collected with a continuous walk.

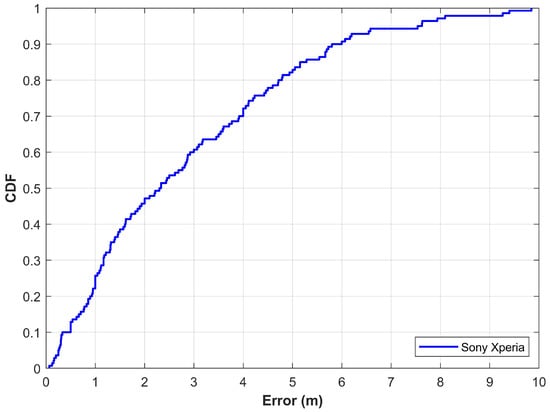

5.5. Localization Results with Publicly Available Magnetic Data

Most researchers evaluate their proposed approaches on private datasets, so the reported results cannot be reproduced. The current study, however, also makes use of a publicly available dataset to evaluate the performance of the proposed approach. For this purpose, the magnetic dataset introduced by Barsocchi et al. [56] is used. The dataset was presented at the International Conference on Indoor Positioning and Indoor Navigation (IPIN) held in 2016. It contains magnetic data collected with a Sony Xperia M2 (Minato, Tokyo, Japan) within a building that contains offices, corridors, and open halls. The dataset contains a total of 36,495 magnetic samples collected continuously in an indoor environment of 185.12 m² at a sampling rate of 10 Hz. The current study uses 70% of the data for training and 30% for testing. Results obtained using the proposed approach with these data are shown in Figure 13 and demonstrate promising localization accuracy. The mean error is 2.84 m with a standard deviation of 2.24 m, while the maximum error is 9.84 m with the Sony Xperia smartphone. The localization error is 2.33 m and 4.20 m at 50% and 75%, respectively. The performance of the proposed approach using the Sony Xperia is very similar to that obtained with the Galaxy S8, LG G6, LG G7, and Galaxy A8. Although these smartphones are equipped with different embedded magnetometers, the localization results are very similar, which indicates that the proposed approach can potentially mitigate the impact of various devices on localization accuracy.

Figure 13.

The CDF graph for localization with magnetic data from Barsocchi et al. [56].
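A 70/30 partition like the one used for the public dataset can be sketched as follows. How the samples were actually assigned to the two sets is not described, so the seeded random shuffle, the integer rounding, and the function name `split_samples` are assumptions made only for illustration.

```python
import random


def split_samples(samples, train_percent=70, seed=0):
    """Shuffle a copy of `samples` and split it into (train, test) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)          # reproducible shuffle
    cut = len(shuffled) * train_percent // 100     # integer arithmetic, rounds down
    return shuffled[:cut], shuffled[cut:]


train, test = split_samples(range(36495))  # sample indices stand in for the data
```

Fixing the seed keeps the partition reproducible, so that a reported error can be regenerated from the same public data.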

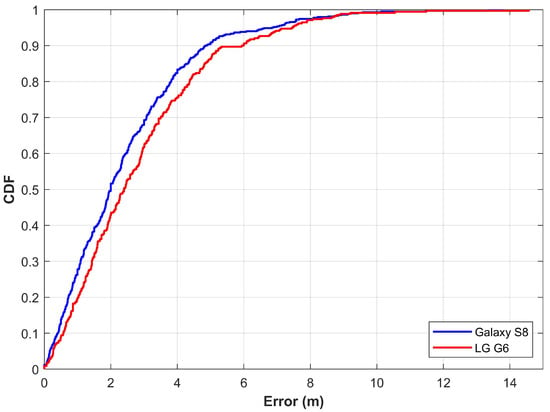

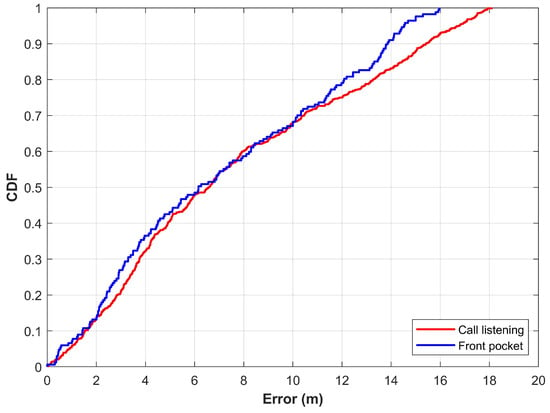

5.6. Impact of Various Device Attitudes on Localization Accuracy

As described in Section 3, different attitudes of even the same device cause a significant change in the magnetic readings. This study considers the attitudes of ‘call listening’ and ‘front pocket’ in addition to the regular mode of walking. Figure 14 shows the results for these attitudes using the proposed approach.

Figure 14.

CDF graph for ‘call listening’ and ‘front pocket’ attitudes.

The results show that a change in device attitude degrades the localization accuracy. The detailed statistics for the three attitudes of the device are given in Table 4. They reveal that the mean error, in addition to the standard deviation and 50% error, increases when localization is performed with different attitudes of the device. These differences are explained by the change in the magnetic readings when the device’s attitude is changed. Since the features are extracted from the magnetic data, a change in the magnetic data changes the extracted features, which, in turn, causes erroneous predictions of the user location. One possible solution to this problem is to utilize a separate NN for each attitude of a device. Furthermore, a module that identifies the device attitude could help to determine which NNs are to be used for localization.
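The attitude-specific idea suggested above could be organized as a simple dispatch step. The sketch below is purely hypothetical: the attitude labels, the stand-in models, and the function name `make_attitude_router` are not part of the study, and the attitude identification module is assumed to exist and to output one of the labels.

```python
def make_attitude_router(models, default_attitude="regular"):
    """Return a function that sends features to the model for a given attitude."""
    if default_attitude not in models:
        raise KeyError("default attitude must have a model")

    def localize(attitude, features):
        # Fall back to the default model for attitudes without a dedicated NN.
        model = models.get(attitude, models[default_attitude])
        return model(features)

    return localize


# Stand-in callables; in practice each would be a trained NN for that attitude.
models = {
    "regular": lambda feats: ("regular-NN", feats),
    "call listening": lambda feats: ("call-NN", feats),
    "front pocket": lambda feats: ("pocket-NN", feats),
}
localize = make_attitude_router(models)
used, _ = localize("front pocket", [0.1, 0.2])
```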

Table 4.

Results statistics for various attitudes of the device.

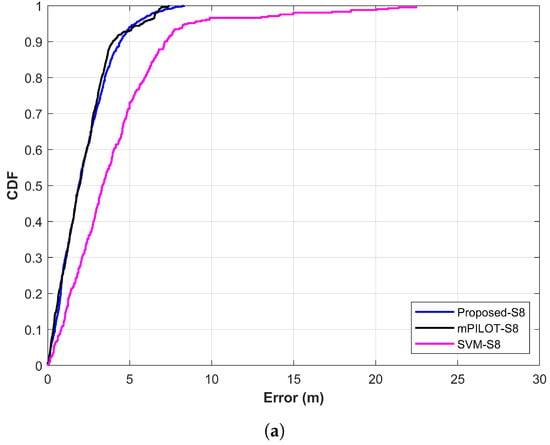

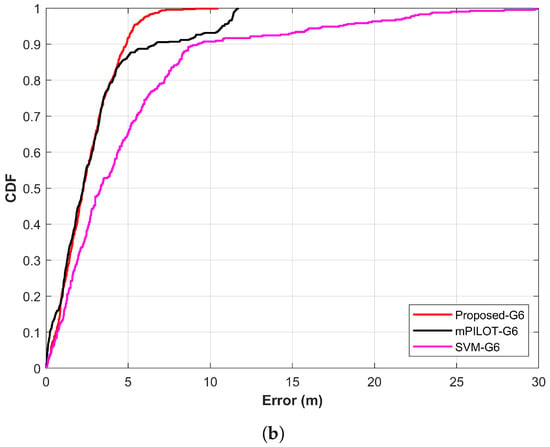

5.7. Performance Analysis

The results of the proposed approach have been compared with an SVM and another magnetic positioning approach called mPILOT [39]. The approach presented in [39] makes use of PDR data and magnetic field data. It works with the magnetic fingerprint database technique and uses a total of 14 s of data to calculate the current position of the user.

The SVM is used as well to analyze the performance of the proposed approach. Support vector machines, proposed by Cortes and Vapnik [57,58], are among the most widely used techniques for classification and regression problems. The SVM is basically designed to solve binary class problems; however, it can be used for multi-class classification too, for which it follows the “one to one” (one-vs-one) strategy [59]. This study uses it for multi-class classification and utilizes the top k predictions, just like the NNs used in the study. The localization process is the same for the NNs and the SVM. Figure 15a,b shows the comparison of localization results for Galaxy S8 and LG G6, respectively, for the proposed technique, mPILOT, and SVM. The accuracy comparison reveals that the proposed approach outperforms mPILOT and SVM. At first glance, Figure 15 appears to show very similar localization accuracy for the proposed and mPILOT approaches; however, the important factor in deciding the superiority of the approaches is the amount of data used for localization. As stated above, mPILOT uses 14 s of magnetic data. On the other hand, the proposed approach utilizes only three frames of 2 s data (6 s of data in total). In other words, the proposed approach achieves the same or better accuracy than mPILOT with only about 43% (6 s of 14 s) of the data used by mPILOT. The SVM shows poor performance compared to the proposed and mPILOT techniques. The detailed statistics for the accuracy comparison are shown in Table 5.
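The one-vs-one multi-class strategy combined with a top k selection can be sketched as follows. This is a generic illustration rather than the implementation used in the comparison: the pairwise decision function is a stand-in for trained binary SVMs, and the names `top_k_one_vs_one` and `pairwise_winner` are hypothetical.

```python
from collections import Counter
from itertools import combinations


def top_k_one_vs_one(classes, pairwise_winner, k):
    """Rank classes by votes from all pairwise contests and keep the top k.

    `pairwise_winner(a, b)` must return either a or b. For n classes there are
    n * (n - 1) / 2 pairwise contests.
    """
    votes = Counter({c: 0 for c in classes})
    for a, b in combinations(classes, 2):
        votes[pairwise_winner(a, b)] += 1
    return [c for c, _ in votes.most_common(k)]


# Toy contest: classes are candidate positions along a corridor (in meters),
# and each stand-in binary classifier prefers the one closer to 2.2 m.
positions = [0, 1, 2, 3]
closer = lambda a, b: min((a, b), key=lambda p: abs(p - 2.2))
candidates = top_k_one_vs_one(positions, closer, k=2)  # [2, 3]
```

Keeping the top k classes instead of only the winner leaves several candidate locations that a later stage can narrow down.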

Figure 15.

Comparison of proposed approach with mPILOT [39] and SVM: (a) Galaxy S8 results and (b) LG G6 results.

Table 5.

Results statistics for the accuracy comparison of the proposed approach, mPILOT, and SVM.

6. Conclusions

This study presents the use of deep neural networks (NNs) to perform magnetic field-based indoor localization using heterogeneous devices. Wi-Fi-based indoor positioning systems are unable to meet the requirements of fast-paced location-based services due to intrinsic limitations and dynamic environmental factors. Magnetic field-based positioning systems (MPS) have emerged as a potential candidate for indoor positioning; however, their performance varies across devices, which impairs their wide use. Contrary to conventional MPS that use the magnetic data directly, this study leverages features extracted from the magnetic data. An ensemble approach is proposed in which the predictions from three NNs, along with pedestrian dead reckoning (PDR), are exploited to locate a user. Experiments are conducted on Galaxy S8, LG G6, LG G7, and Galaxy A8 devices, where the NNs are trained on Galaxy S8 data alone. Results demonstrate that the proposed approach potentially mitigates the impact of the device change. The localization accuracy is 2.64 m at 50% and 3.75 m at 75% regardless of the device used. Furthermore, results with publicly available magnetic data from a Sony Xperia M2 corroborate the performance of the proposed approach and show a localization error of 2.33 m and 4.20 m at 50% and 75%, respectively. Results also show that the use of four elements of magnetic data, i.e., magnetic x, y, z, and F, produces higher localization accuracy than the use of magnetic F alone.

7. Limitations and Future Work

Although the proposed approach shows promising results even with four different devices, it is not without its demerits. The experiments are carried out with a fixed device attitude, which is a common assumption in the majority of works on magnetic field-based localization. The localization accuracy is degraded when the device’s attitude is changed. One possible solution to this issue is to train multiple neural networks (NNs) and use them accordingly. The addition of a device attitude identification module could help to determine which NN to utilize for the localization. Additionally, more devices are planned to be tested with the proposed approach.

Author Contributions

Conceptualization, I.A. and Y.P.; Data curation, S.H.; Formal analysis, S.H. and S.P.; Funding acquisition, Y.P.; Investigation, I.A.; Methodology, I.A.; Project administration, S.P.; Writing—original draft, I.A.; Writing—review and editing, Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the ICT R&D program of MSIP/IITP [2017-0-00543, Development of Precise Positioning Technology for the Enhancement of Pedestrian’s Position/Spatial Cognition and Sports Competition Analysis]. This research was also supported by the MSIT (Ministry of Science, ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2019-2016-0-00313) supervised by the IITP (Institute for Information and Communications Technology Promotion).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Bahl, P.; Padmanabhan, V.N. RADAR: An in-building RF-based user location and tracking system. In Proceedings of the IEEE INFOCOM 2000, Conference on Computer Communications, Nineteenth Annual Joint Conference of the IEEE Computer and Communications Societies, Tel Aviv, Israel, 26–30 March 2000.

2. Karimi, H.A. Advanced Location-Based Technologies and Services; CRC Press: Boca Raton, FL, USA, 2016.

3. Yoshimura, T.; Hasegawa, H. Comparing the precision and accuracy of GPS positioning in forested areas. J. For. Res. 2003, 8, 147–152.

4. Kjærgaard, M.B.; Blunck, H.; Godsk, T.; Toftkjær, T.; Christensen, D.L.; Grønbæk, K. Indoor positioning using GPS revisited. In Proceedings of the International Conference on Pervasive Computing; Springer: Berlin/Heidelberg, Germany, 2010; pp. 38–56.

5. Alarifi, A.; Al-Salman, A.; Alsaleh, M.; Alnafessah, A.; Al-Hadhrami, S.; Al-Ammar, M.A.; Al-Khalifa, H.S. Ultra wideband indoor positioning technologies: Analysis and recent advances. Sensors 2016, 16, 707.

6. Ni, L.M.; Liu, Y.; Lau, Y.C.; Patil, A.P. LANDMARC: Indoor location sensing using active RFID. In Proceedings of the First IEEE International Conference on Pervasive Computing and Communications, 2003 (PerCom 2003), Fort Worth, TX, USA, 26 March 2003; pp. 407–415.

7. Ashraf, I.; Hur, S.; Park, Y. Indoor positioning on disparate commercial smartphones using Wi-Fi access points coverage area. Sensors 2019, 19, 4351.

8. Wolf, J.; Burgard, W.; Burkhardt, H. Robust vision-based localization by combining an image-retrieval system with Monte Carlo localization. IEEE Trans. Robot. 2005, 21, 208–216.

9. Sahinoglu, Z. Ultra-Wideband Positioning Systems; Cambridge University Press: Cambridge, UK, 2008.

10. Bruno, R.; Delmastro, F. Design and analysis of a bluetooth-based indoor localization system. In Proceedings of the IFIP International Conference on Personal Wireless Communications; Springer: Berlin/Heidelberg, Germany, 2003; pp. 711–725.

11. Papapostolou, A.; Chaouchi, H. Orientation-based radio map extensions for improving positioning system accuracy. In Proceedings of the 2009 International Conference on Wireless Communications and Mobile Computing: Connecting the World Wirelessly, Leipzig, Germany, 21–24 June 2009; pp. 947–951.

12. Zou, H.; Lu, X.; Jiang, H.; Xie, L. A fast and precise indoor localization algorithm based on an online sequential extreme learning machine. Sensors 2015, 15, 1804–1824.

13. Lui, G.; Gallagher, T.; Li, B.; Dempster, A.G.; Rizos, C. Differences in RSSI readings made by different Wi-Fi chipsets: A limitation of WLAN localization. In Proceedings of the 2011 International Conference on Localization and GNSS (ICL-GNSS), Tampere, Finland, 29–30 June 2011; pp. 53–57.

14. Amini, S.; Homayouni, S.; Safari, A.; Darvishsefat, A.A. Object-based classification of hyperspectral data using Random Forest algorithm. Geo-Spat. Inf. Sci. 2018, 21, 127–138.

15. Jalal, A.; Quaid, M.A.K.; Kim, K. A Wrist Worn Acceleration Based Human Motion Analysis and Classification for Ambient Smart Home System. J. Electr. Eng. Technol. 2019, 14, 1733–1739.

16. Jalal, A.; Quaid, M.A.; Sidduqi, M. A Triaxial acceleration-based human motion detection for ambient smart home system. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 353–358.

17. Yu, H.; Wang, J.; Bai, Y.; Yang, W.; Xia, G.S. Analysis of large-scale UAV images using a multi-scale hierarchical representation. Geo-Spat. Inf. Sci. 2018, 21, 33–44.

18. Bakli, M.S.; Sakr, M.A.; Soliman, T.H.A. A spatiotemporal algebra in Hadoop for moving objects. Geo-Spat. Inf. Sci. 2018, 21, 102–114.

19. Huang, Q.; Yang, J.; Qiao, Y. Person re-identification across multi-camera system based on local descriptors. In Proceedings of the 2012 Sixth International Conference on Distributed Smart Cameras (ICDSC), Hong Kong, China, 30 October–2 November 2012; pp. 1–6.

20. Chattopadhyay, C.; Das, S. Supervised framework for automatic recognition and retrieval of interaction: A framework for classification and retrieving videos with similar human interactions. IET Comput. Vis. 2016, 10, 220–227.

21. Jalal, A.; Uddin, M.Z.; Kim, J.T.; Kim, T.S. Daily Human Activity Recognition Using Depth Silhouettes and R Transformation for Smart Home. In Proceedings of the International Conference on Smart Homes and Health Telematics; Springer: Berlin/Heidelberg, Germany, 2011; pp. 25–32.

22. Yoshimoto, H.; Date, N.; Yonemoto, S. Vision-based real-time motion capture system using multiple cameras. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Tokyo, Japan, 1 August 2003; pp. 247–251.

23. Koller, D.; Klinker, G.; Rose, E.; Breen, D.E.; Whitaker, R.T.; Tuceryan, M. Real-time vision-based camera tracking for augmented reality applications. In Proceedings of the VRST 97 ACM Symposium on Virtual Reality Software and Technology; ACM: New York, NY, USA, 1997; Volume 97, pp. 87–94.

24. Zhao, W.; Yan, L.; Zhang, Y. Geometric-constrained multi-view image matching method based on semi-global optimization. Geo-Spat. Inf. Sci. 2018, 21, 115–126.

25. Jalal, A.; Kamal, S.; Kim, D. Individual detection-tracking-recognition using depth activity images. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyang, Korea, 28–30 October 2015; pp. 450–455.

26. Berlin, S.J.; John, M. Human interaction recognition through deep learning network. In Proceedings of the 2016 IEEE International Carnahan Conference on Security Technology (ICCST), Orlando, FL, USA, 24–27 October 2016; pp. 1–4.

27. Shu, Y.; Bo, C.; Shen, G.; Zhao, C.; Li, L.; Zhao, F. Magicol: Indoor localization using pervasive magnetic field and opportunistic WiFi sensing. IEEE J. Sel. Areas Commun. 2015, 33, 1443–1457.

28. Ashraf, I.; Hur, S.; Park, Y. MDIRECT-Magnetic field strength and peDestrIan dead RECkoning based indoor localizaTion. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; pp. 24–27.

29. Ashraf, I.; Hur, S.; Park, Y. BLocate: A building identification scheme in GPS denied environments using smartphone sensors. Sensors 2018, 18, 3862.

30. Liu, Z.; Zhang, L.; Liu, Q.; Yin, Y.; Cheng, L.; Zimmermann, R. Fusion of magnetic and visual sensors for indoor localization: Infrastructure-free and more effective. IEEE Trans. Multimed. 2017, 19, 874–888.

31. Zhang, C.; Subbu, K.P.; Luo, J.; Wu, J. GROPING: Geomagnetism and crowdsensing powered indoor navigation. IEEE Trans. Mob. Comput. 2015, 14, 387–400.

32. Pasku, V.; De Angelis, A.; De Angelis, G.; Arumugam, D.D.; Dionigi, M.; Carbone, P.; Moschitta, A.; Ricketts, D.S. Magnetic field-based positioning systems. IEEE Commun. Surv. Tutor. 2017, 19, 2003–2017.

33. Ashraf, I.; Hur, S.; Shafiq, M.; Park, Y. Floor identification using magnetic field data with smartphone sensors. Sensors 2019, 19, 2538.

34. Gunnarsdottir, E.L. The Earth’s Magnetic Field. Ph.D. Thesis, University of Iceland, Reykjavik, Iceland, 2012.

35. Finlay, C.C.; Maus, S.; Beggan, C.; Bondar, T.; Chambodut, A.; Chernova, T.; Chulliat, A.; Golovkov, V.; Hamilton, B.; Hamoudi, M.; et al. International geomagnetic reference field: The eleventh generation. Geophys. J. Int. 2010, 183, 1216–1230.

36. Li, B.; Gallagher, T.; Rizos, C.; Dempster, A.G. Using geomagnetic field for indoor positioning. J. Appl. Geod. 2013, 7, 299–308.

37. Li, B.; Gallagher, T.; Rizos, C.; Dempster, A.G. How feasible is the use of magnetic field alone for indoor positioning. In Proceedings of the 2012 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, NSW, Australia, 13–15 November 2012; pp. 1–9.

38. Li, Y.; Zhuang, Y.; Lan, H.; Zhang, P.; Niu, X.; El-Sheimy, N. WiFi-aided magnetic matching for indoor navigation with consumer portable devices. Micromachines 2015, 6, 747–764.

39. Ashraf, I.; Hur, S.; Park, Y. mPILOT-magnetic field strength based pedestrian indoor localization. Sensors 2018, 18, 2283.

40. Ashraf, I.; Hur, S.; Shafiq, M.; Kumari, S.; Park, Y. GUIDE: Smartphone sensors-based pedestrian indoor localization with heterogeneous devices. Int. J. Commun. Syst. 2019.

41. Gu, F.; Niu, J.; Duan, L. WAIPO: A fusion-based collaborative indoor localization system on smartphones. IEEE/ACM Trans. Netw. 2017, 25, 2267–2280.

42. Ahmed, A.; Jalal, A.; Rafique, A.A. Salient Segmentation based Object Detection and Recognition using Hybrid Genetic Transform. In Proceedings of the 2019 International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 27–29 August 2019; pp. 203–208.

43. Jalal, A.; Mahmood, M.; Sidduqi, M. Robust spatio-temporal features for human interaction recognition via artificial neural network. In Proceedings of the IEEE Conference on FIT, Islamabad, Pakistan, 17–19 December 2018.

44. Singh, D.; Mohan, C.K. Graph formulation of video activities for abnormal activity recognition. Pattern Recognit. 2017, 65, 265–272.

45. Nguyen, T.N.; Ly, N.Q. Abnormal Activity Detection based on Dense Spatial-Temporal Features and Improved One-Class Learning. In Proceedings of the Eighth International Symposium on Information and Communication Technology, Nha Trang City, Viet Nam, 7–8 December 2017; pp. 370–377.

46. Luo, X.; Tan, H.; Guan, Q.; Liu, T.; Zhuo, H.; Shen, B. Abnormal activity detection using pyroelectric infrared sensors. Sensors 2016, 16, 822.

47. Jalal, A.; Kamal, S.; Kim, D. A depth video sensor-based life-logging human activity recognition system for elderly care in smart indoor environments. Sensors 2014, 14, 11735–11759.

48. Jalal, A.; Kamal, S.; Kim, D. Shape and motion features approach for activity tracking and recognition from kinect video camera. In Proceedings of the 2015 IEEE 29th International Conference on Advanced Information Networking and Applications Workshops, Gwangju, Korea, 24–27 March 2015; pp. 445–450.

49. Liu, M.; Chen, R.; Li, D.; Chen, Y.; Guo, G.; Cao, Z.; Pan, Y. Scene recognition for indoor localization using a multi-sensor fusion approach. Sensors 2017, 17, 2847.

50. Ashraf, I.; Hur, S.; Park, Y. The Application of Deep Convolutional Neural Networks and Smartphone Sensors in Indoor Localization. Appl. Sci. 2019, 9, 2337.

51. Al-homayani, F.; Mahoor, M. Improved indoor geomagnetic field fingerprinting for smartwatch localization using deep learning. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; pp. 1–8.

52. Wang, R.; Li, Z.; Luo, H.; Zhao, F.; Shao, W.; Wang, Q. A Robust Wi-Fi Fingerprint Positioning Algorithm Using Stacked Denoising Autoencoder and Multi-Layer Perceptron. Remote Sens. 2019, 11, 1293.

53. Bird, J.; Arden, D. Indoor navigation with foot-mounted strapdown inertial navigation and magnetic sensors [emerging opportunities for localization and tracking]. IEEE Wirel. Commun. 2011, 18, 28–35.

54. Galván-Tejada, C.; García-Vázquez, J.; Galván-Tejada, J.; Delgado-Contreras, J.; Brena, R. Infrastructure-less indoor localization using the microphone, magnetometer and light sensor of a smartphone. Sensors 2015, 15, 20355–20372.

55. Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications. Available online: https://www.analog.com/media/en/technical-documentation/application-notes/513772624AN602.pdf (accessed on 21 December 2019).

56. Barsocchi, P.; Crivello, A.; La Rosa, D.; Palumbo, F. A multisource and multivariate dataset for indoor localization methods based on WLAN and geo-magnetic field fingerprinting. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Alcala de Henares, Spain, 4–7 October 2016; pp. 1–8.

57. Piyathilaka, L.; Kodagoda, S. Gaussian mixture based HMM for human daily activity recognition using 3D skeleton features. In Proceedings of the 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA), Melbourne, VIC, Australia, 19–21 June 2013; pp. 567–572.

58. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

59. Wu, H.; Pan, W.; Xiong, X.; Xu, S. Human activity recognition based on the combined svm&hmm. In Proceedings of the 2014 IEEE International Conference on Information and Automation (ICIA), Hailar, China, 28–30 July 2014; pp. 219–224.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).