The Life of a New York City Noise Sensor Network

Abstract

1. Introduction

2. Prior Work

3. Sensor Network Deployment and Data Collected

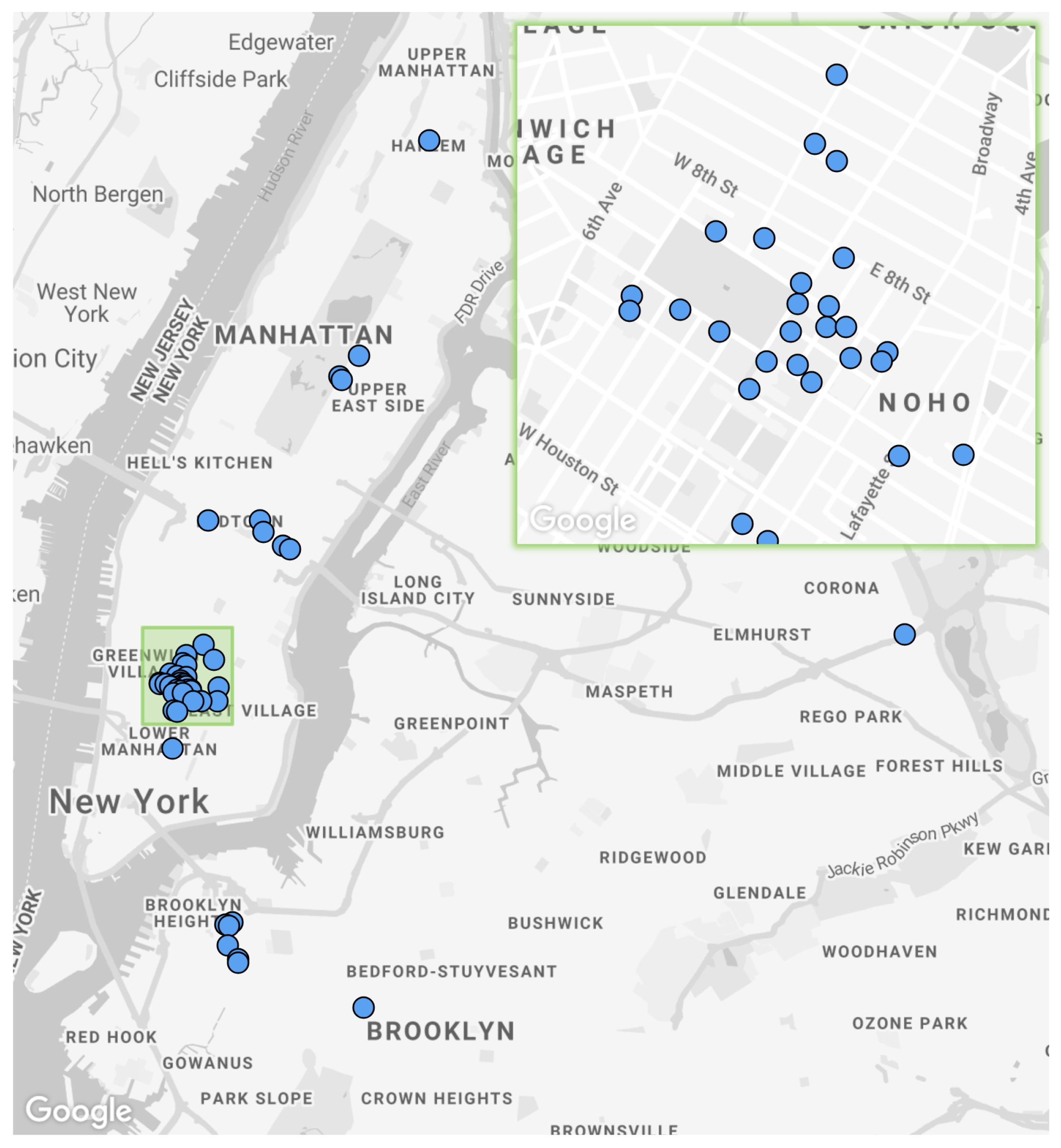

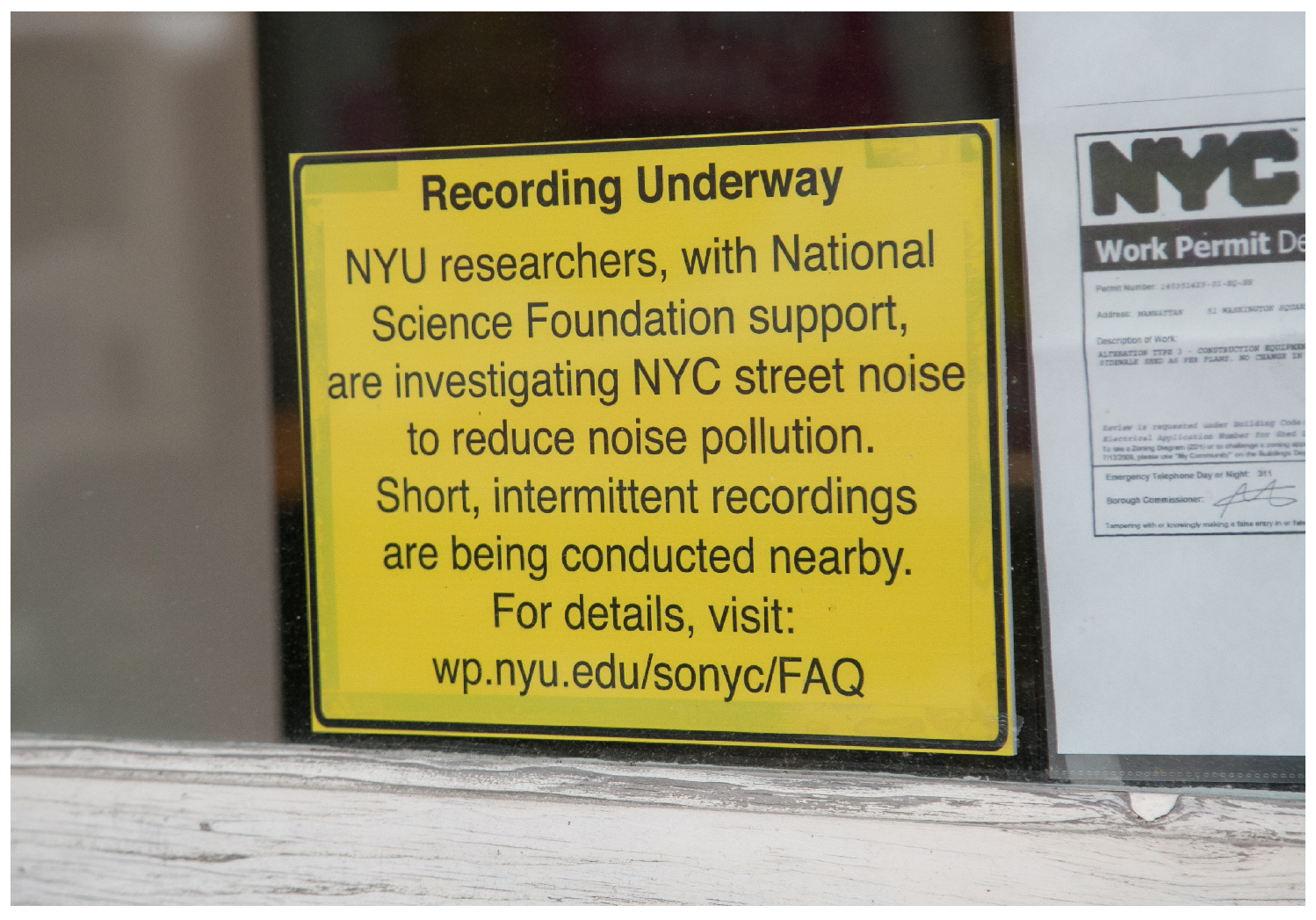

3.1. Deployment

3.2. Data Collected

4. Sensor Node

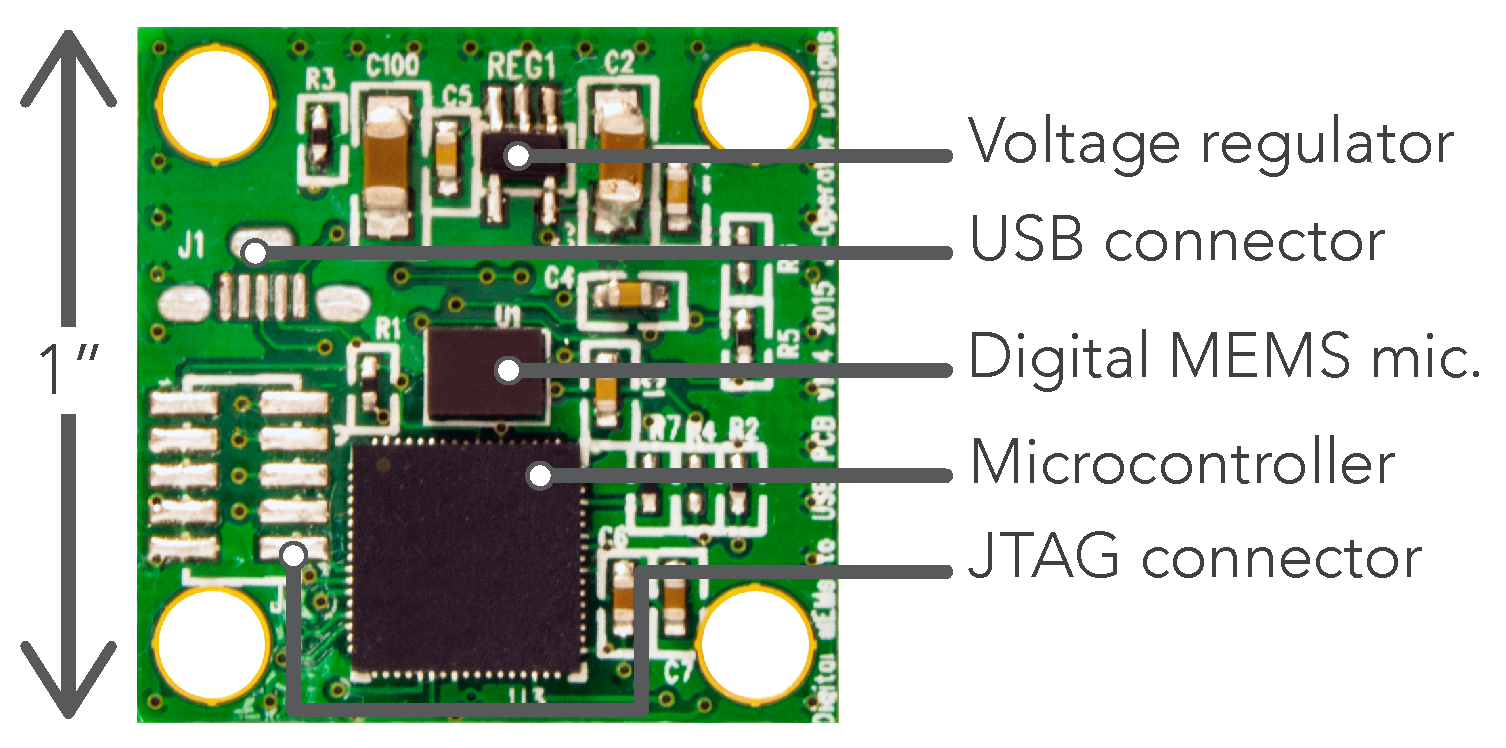

4.1. Sensor Core

4.2. Acoustic Sensing Module

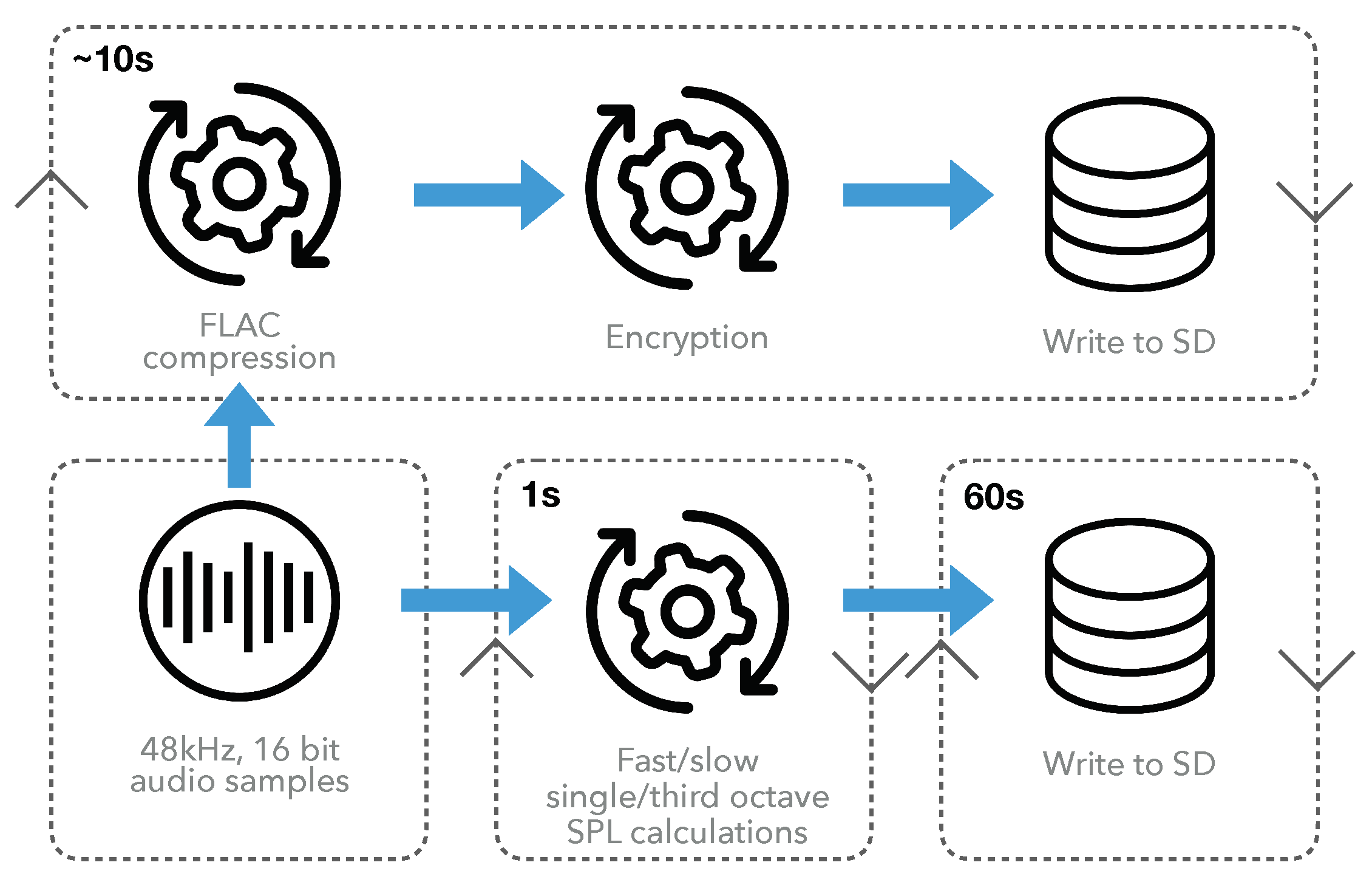

4.3. Data Capture Module

4.4. Operational Modules

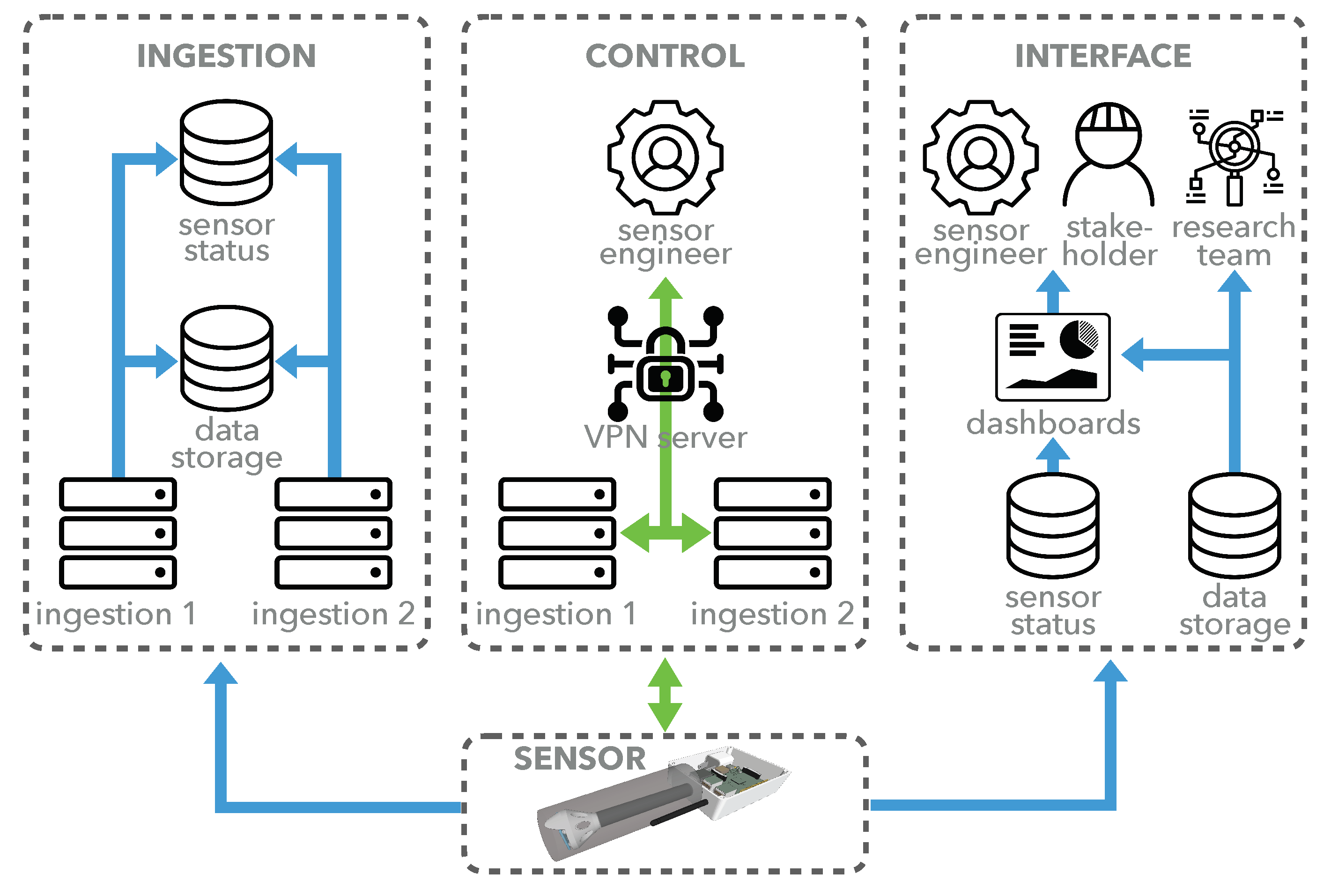

5. Sensor Network Infrastructure

5.1. Data Ingestion

5.2. Remote Sensor Control

6. User Interface

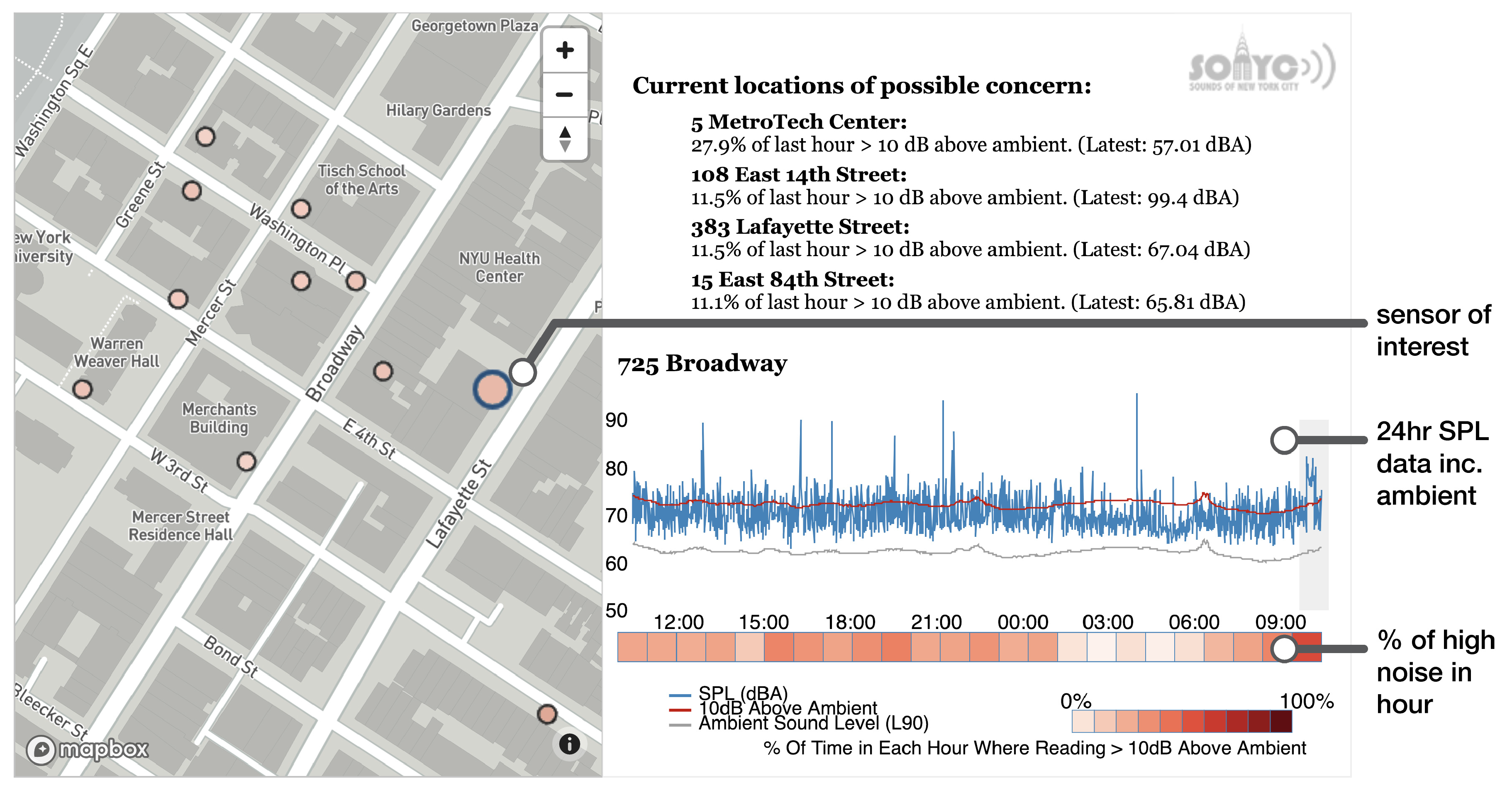

6.1. Stakeholder Interface

6.2. Data Access and Visualization

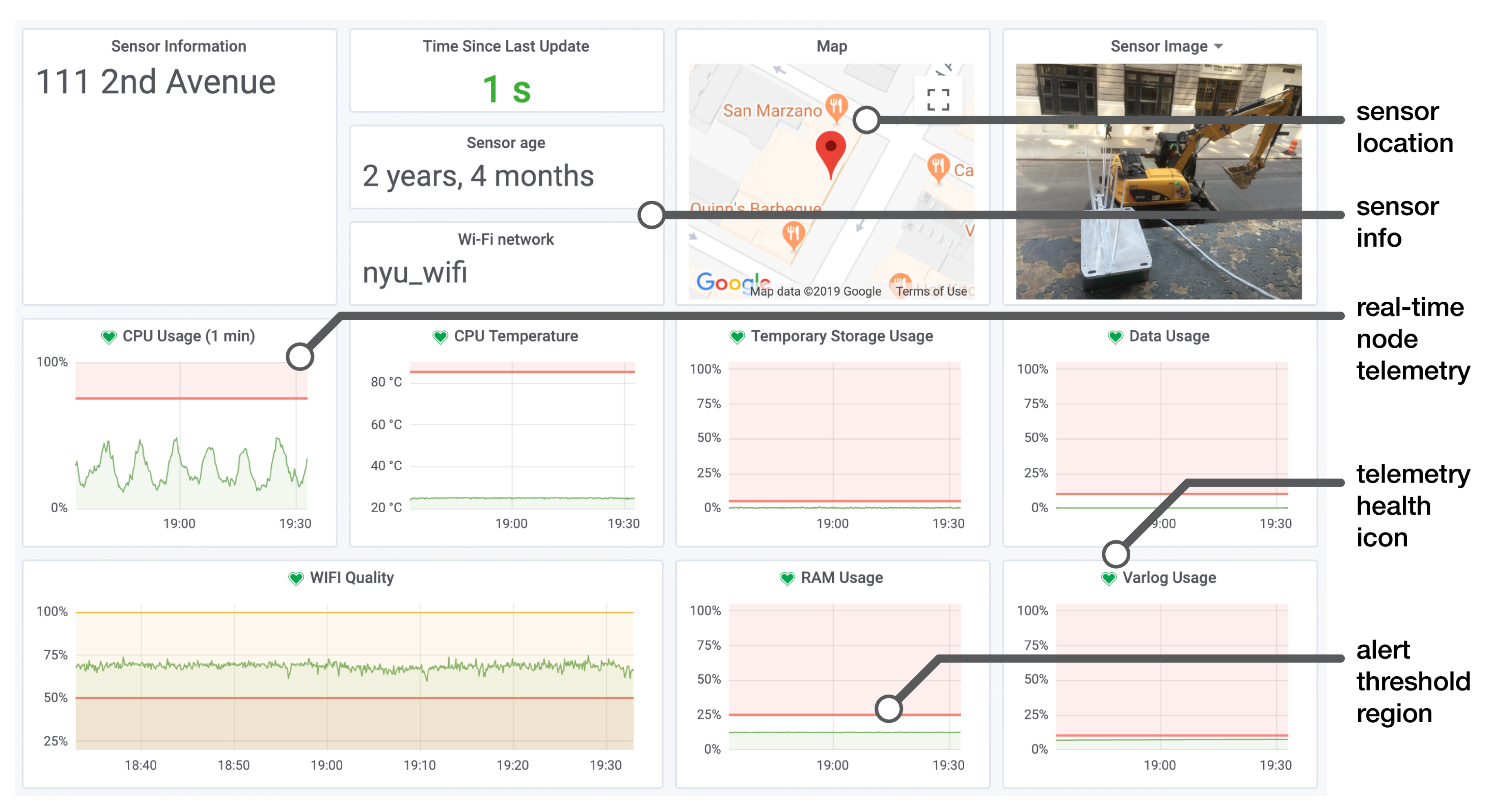

6.3. Sensor Network Monitoring

7. Sensor Network Downtime Analysis

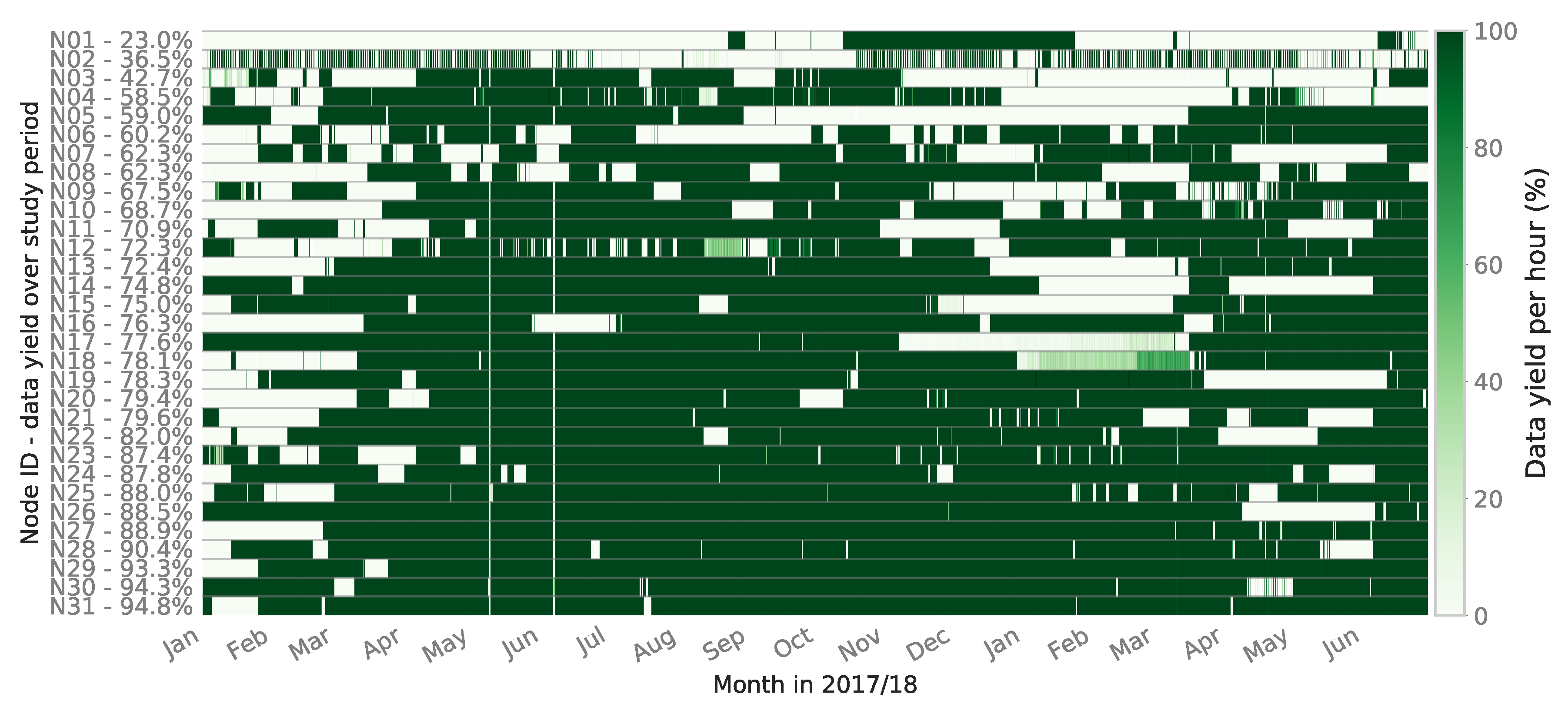

7.1. Data Yield

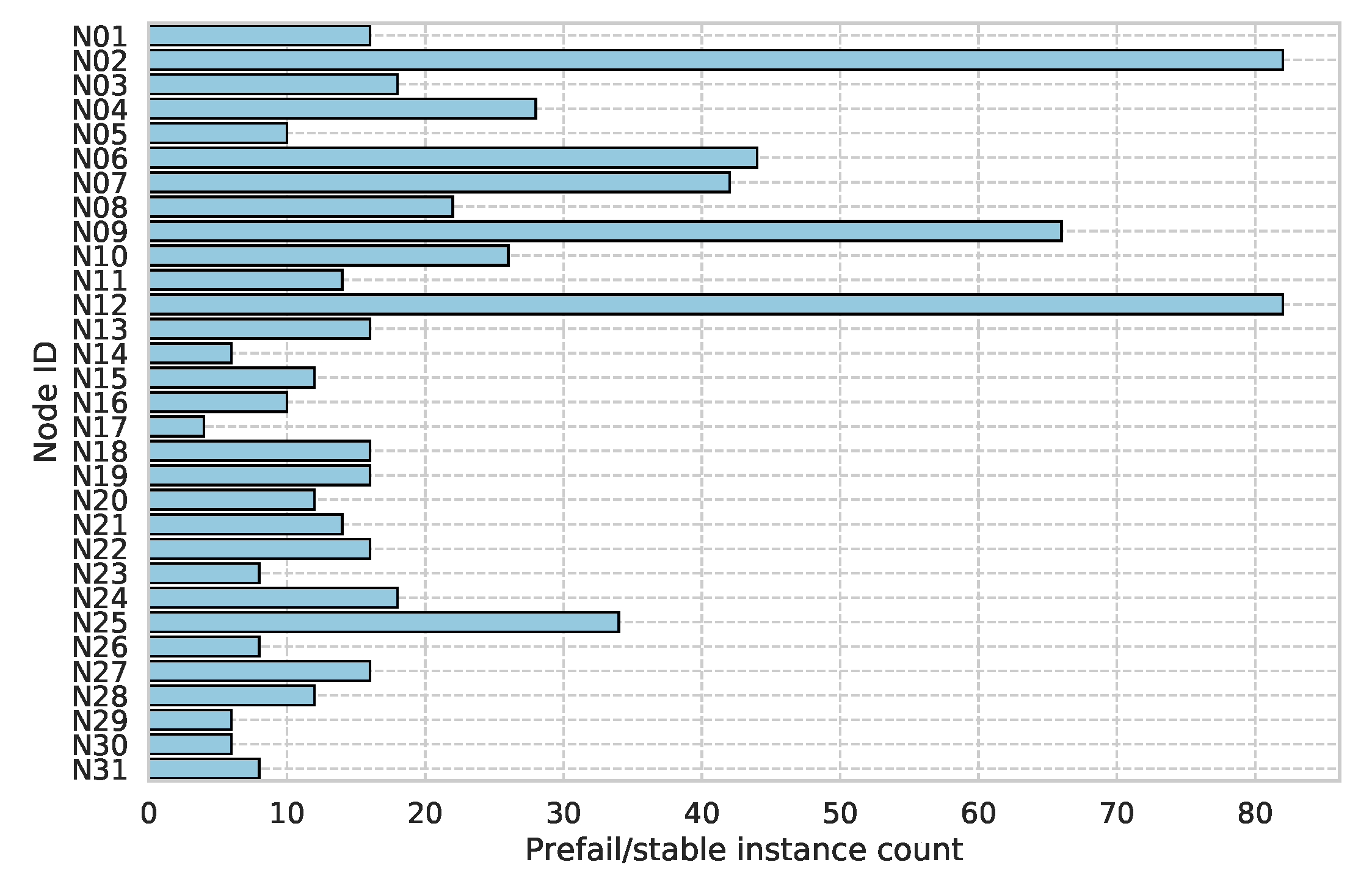

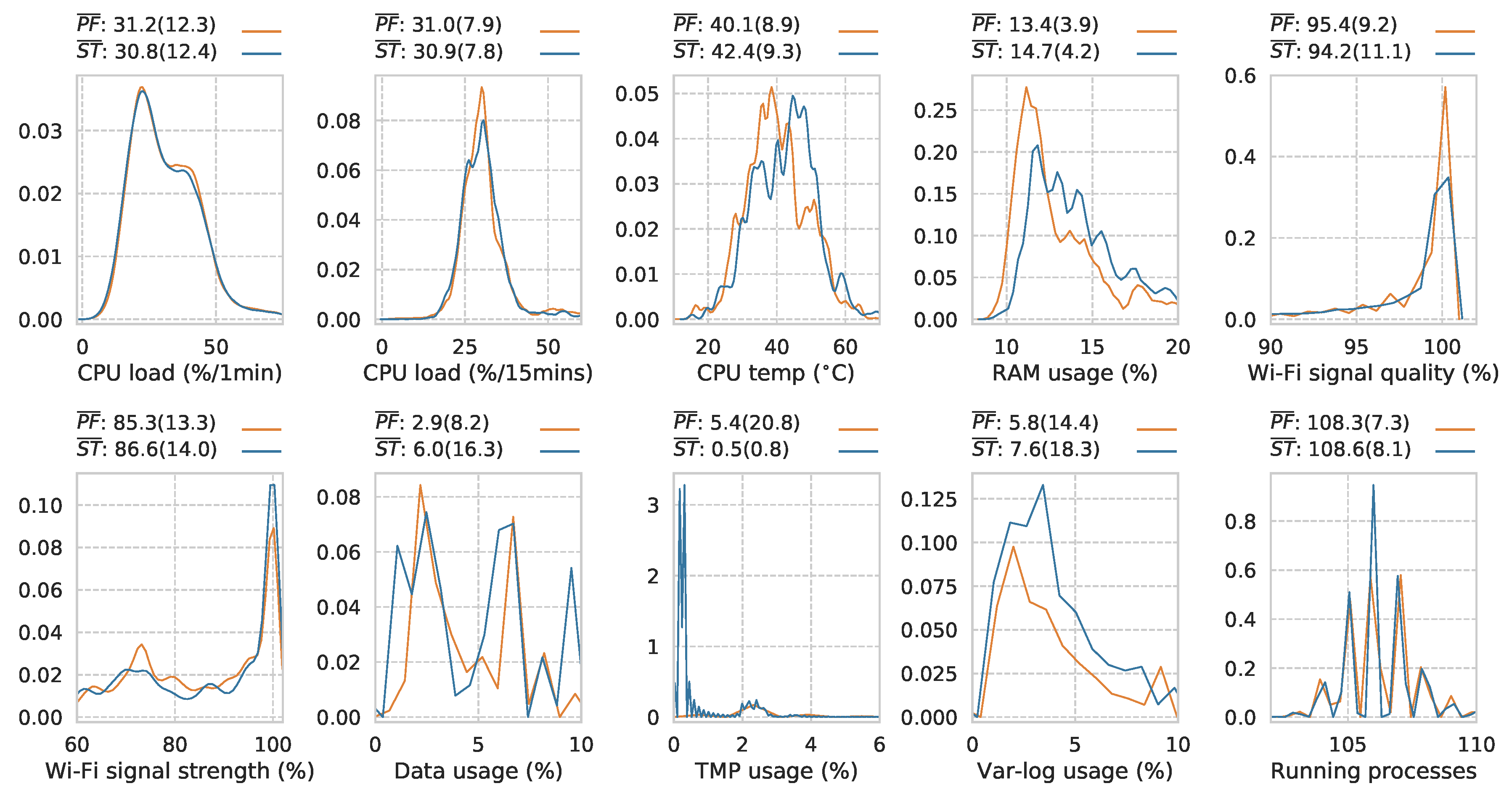

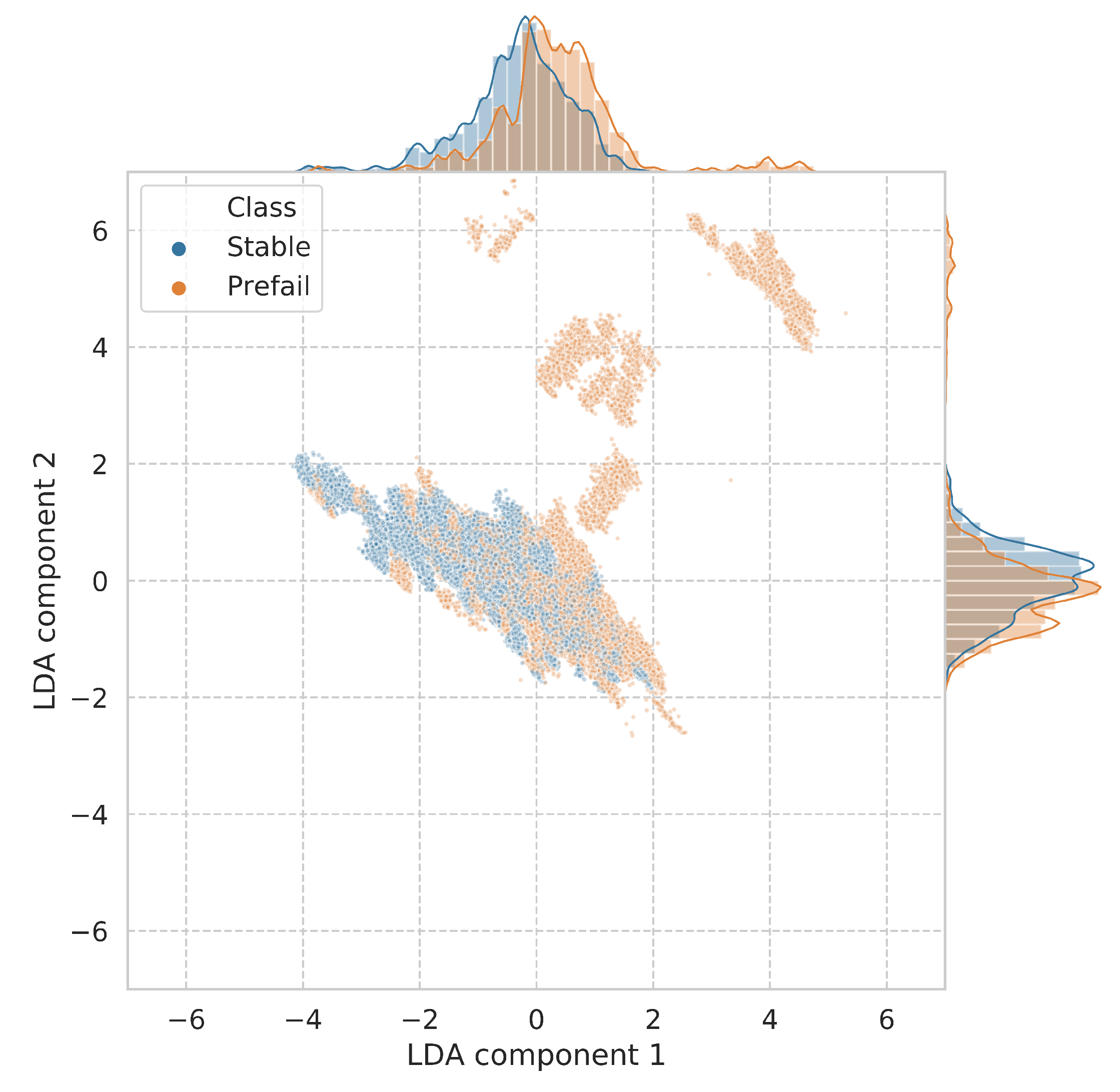

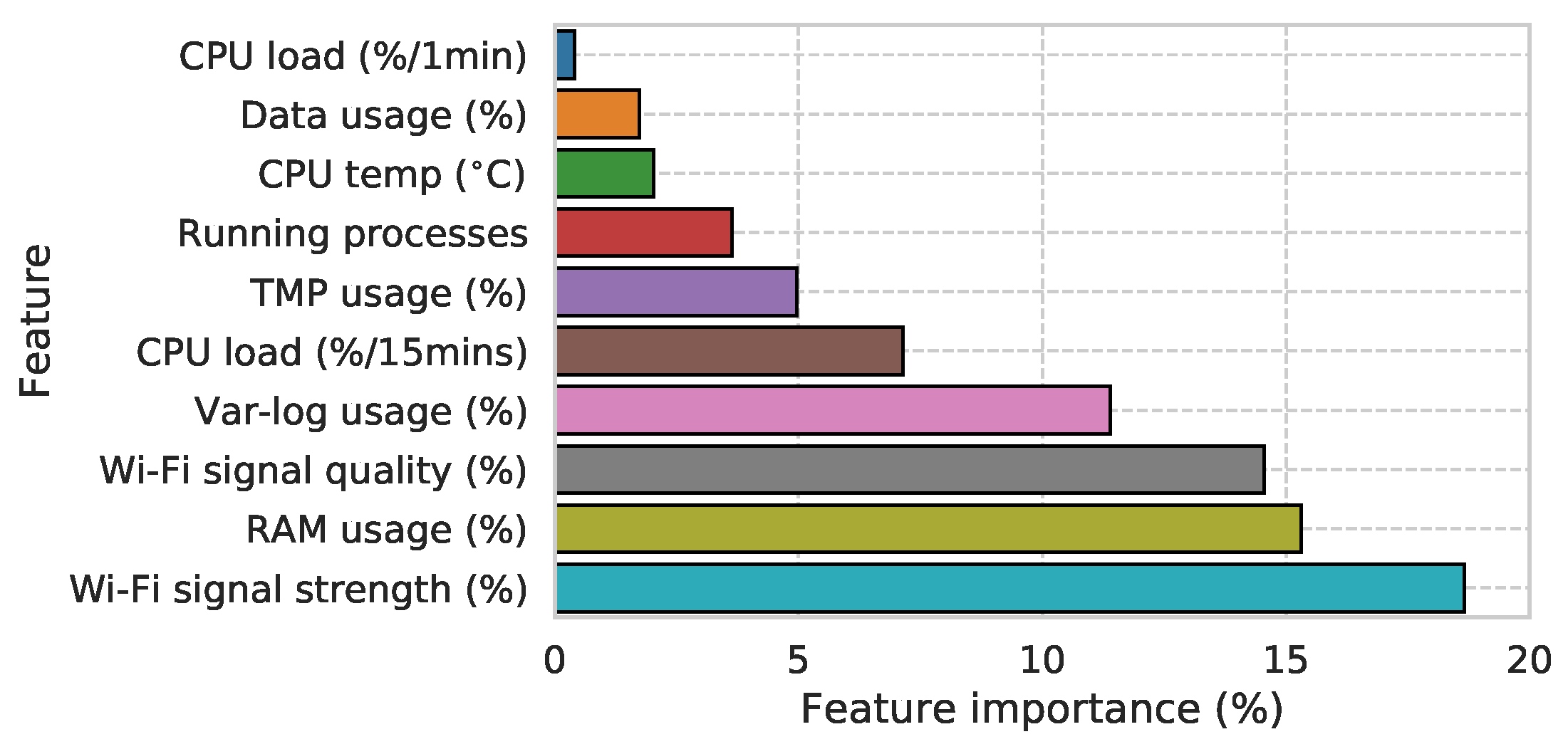

7.2. Telemetry Analysis and Fault Diagnosis

- CPU load (%/1 min): mean CPU usage over a 1 min period across all four 900 MHz CPU cores;

- CPU load (%/15 min): mean CPU usage over a 15 min period across all four 900 MHz CPU cores;

- CPU temp (°C): core CPU temperature in degrees Celsius;

- RAM usage (%): usage of 925 MB RAM;

- Wi-Fi signal strength (%): measure of Wi-Fi signal strength;

- Wi-Fi signal quality (%): measure that factors in signal-to-noise ratio (SNR) and signal strength;

- Data usage (%): usage of 12 GB SD card data partition;

- TMP usage (%): usage of 50 MB RAM disk partition used for fast temporary I/O operations;

- Var-log usage (%): usage of the 50 MB RAM disk log partition to which all log files are written;

- Running processes: count of running processes.
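As an illustration only, the telemetry fields listed above could be grouped into a single status record and serialized to JSON, the format the data table gives for node status. The class name, field names, and range checks below are hypothetical and are not taken from the deployed sensor software; this is a minimal sketch, assuming every percentage metric is reported on a 0 to 100 scale.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class NodeStatus:
    """One telemetry sample. Field names are hypothetical; units follow the list above."""
    cpu_load_1min_pct: float    # mean CPU usage over 1 min, all four cores
    cpu_load_15min_pct: float   # mean CPU usage over 15 min, all four cores
    cpu_temp_c: float           # core CPU temperature in degrees Celsius
    ram_usage_pct: float        # share of RAM in use
    wifi_strength_pct: float    # Wi-Fi signal strength
    wifi_quality_pct: float     # Wi-Fi quality (SNR and strength combined)
    data_usage_pct: float       # SD card data partition usage
    tmp_usage_pct: float        # RAM disk temporary partition usage
    varlog_usage_pct: float     # RAM disk log partition usage
    running_processes: int      # count of running processes

    def validate(self) -> None:
        """Raise ValueError if a percentage or the process count is out of range."""
        for name, value in asdict(self).items():
            if name.endswith("_pct") and not 0.0 <= value <= 100.0:
                raise ValueError(f"{name} out of range: {value}")
        if self.running_processes < 0:
            raise ValueError("running_processes must be non-negative")

    def to_json(self) -> str:
        """Validate the sample, then serialize it as compact JSON."""
        self.validate()
        return json.dumps(asdict(self), separators=(",", ":"))


# Example values are invented for illustration, not measured data.
sample = NodeStatus(12.5, 9.8, 48.3, 41.0, 72.0, 65.0, 18.0, 3.0, 7.5, 96)
payload = sample.to_json()
```

Keeping the record flat and validating before serialization means a malformed sample fails on the node rather than at ingestion. A payload of this shape stays well below the 1 KB size that the data table lists for node status messages.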

7.3. Summary

8. Conclusions

9. Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Solution | Data Quality | Scale | Longevity | Affordability | Accessibility |
|---|---|---|---|---|---|
| Participatory sensing | low | large | short | high | low |
| Piper et al. | high | small | short | high | low |
| Array of Things | low | large | long | high | high |
| B & K/Norsonic/01dB | high | small | long | low | low |
| SmartSensPort-Palma | high | small | short | low | low |
| Rumeur | high | large | long | low | high |
| Dynamap Life+ | low | large | short | high | high |
| MESSAGE project | low | large | short | high | low |
| IDEA project | high | large | short | high | high |
| SONYC | high | large | long | high | high |

| Description | Format | Size | Frequency | Cached | Total Size |
|---|---|---|---|---|---|
| Sound pressure level (SPL) | tar | 150 KB | 60 s | yes | 2 TB |
| Audio snippets | tar.gz | 500 KB | 20 s | yes | 40 TB |
| Node status | JSON | 1 KB | 3 s | no | 80 GB |
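
The per-file sizes and intervals in the table above can be turned into a rough daily data volume for a single node. The sketch below reads the Frequency column as the interval between consecutive files and assumes uninterrupted operation with 1 MB = 1000 KB; both are assumptions made for illustration, and the function and variable names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# (file size in KB, interval between files in seconds), copied from the table above.
STREAMS = {
    "spl": (150, 60),
    "audio": (500, 20),
    "status": (1, 3),
}


def daily_volume_mb(size_kb: float, interval_s: float) -> float:
    """Return MB per day for one node, assuming continuous uptime and 1 MB = 1000 KB."""
    files_per_day = SECONDS_PER_DAY / interval_s
    return size_kb * files_per_day / 1000


per_node_mb = {name: daily_volume_mb(*spec) for name, spec in STREAMS.items()}
total_mb = sum(per_node_mb.values())

for name, volume in per_node_mb.items():
    print(f"{name}: {volume:.1f} MB/day")
print(f"total: {total_mb:.1f} MB/day")
```

Under these assumptions the audio stream accounts for most of the volume, at roughly 2.2 GB per node per day, compared with about 0.2 GB for SPL data and under 30 MB for status messages. Real yields will be lower whenever a node is offline.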

| Split Group | Total Instances (Rows) | Class | Instance n (% of Total) | Row Count (% of Total) |
|---|---|---|---|---|
| Train | 550 (399,959) | Stable | 276 (50.2) | 204,304 (51.1) |
| | | Prefail | 274 (49.8) | 195,655 (48.9) |
| Test | 138 (101,891) | Stable | 68 (49.3) | 55,743 (54.7) |
| | | Prefail | 70 (50.7) | 46,148 (45.3) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mydlarz, C.; Sharma, M.; Lockerman, Y.; Steers, B.; Silva, C.; Bello, J.P. The Life of a New York City Noise Sensor Network. Sensors 2019, 19, 1415. https://doi.org/10.3390/s19061415