Robust Normal Estimation for 3D LiDAR Point Clouds in Urban Environments

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution

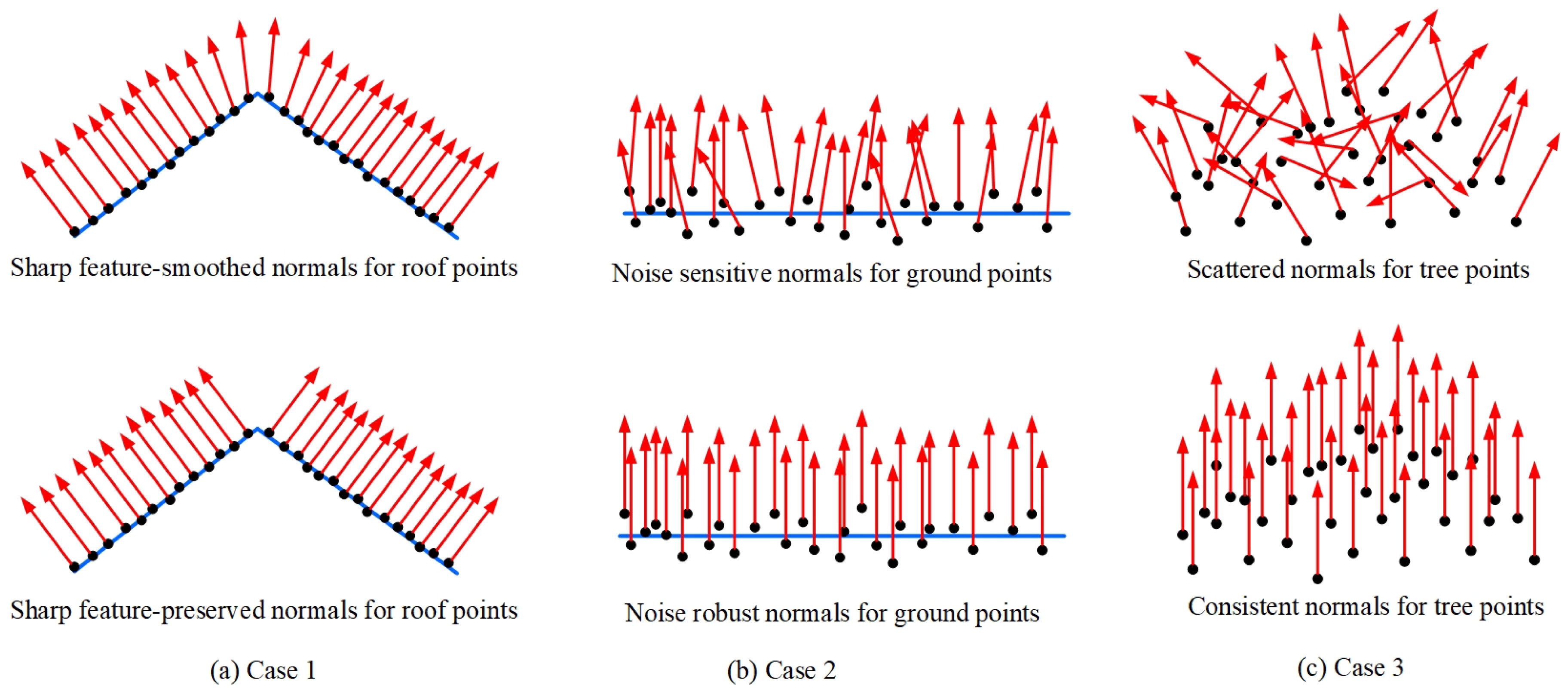

2. Methodology

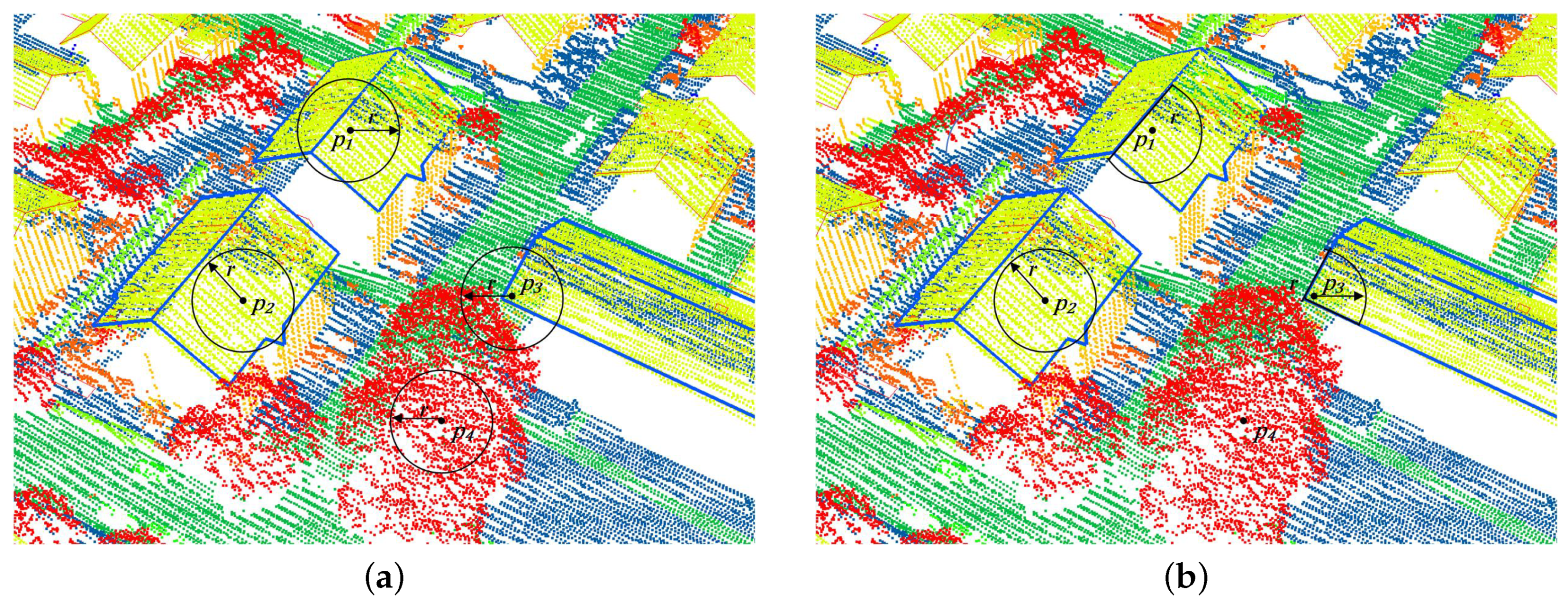

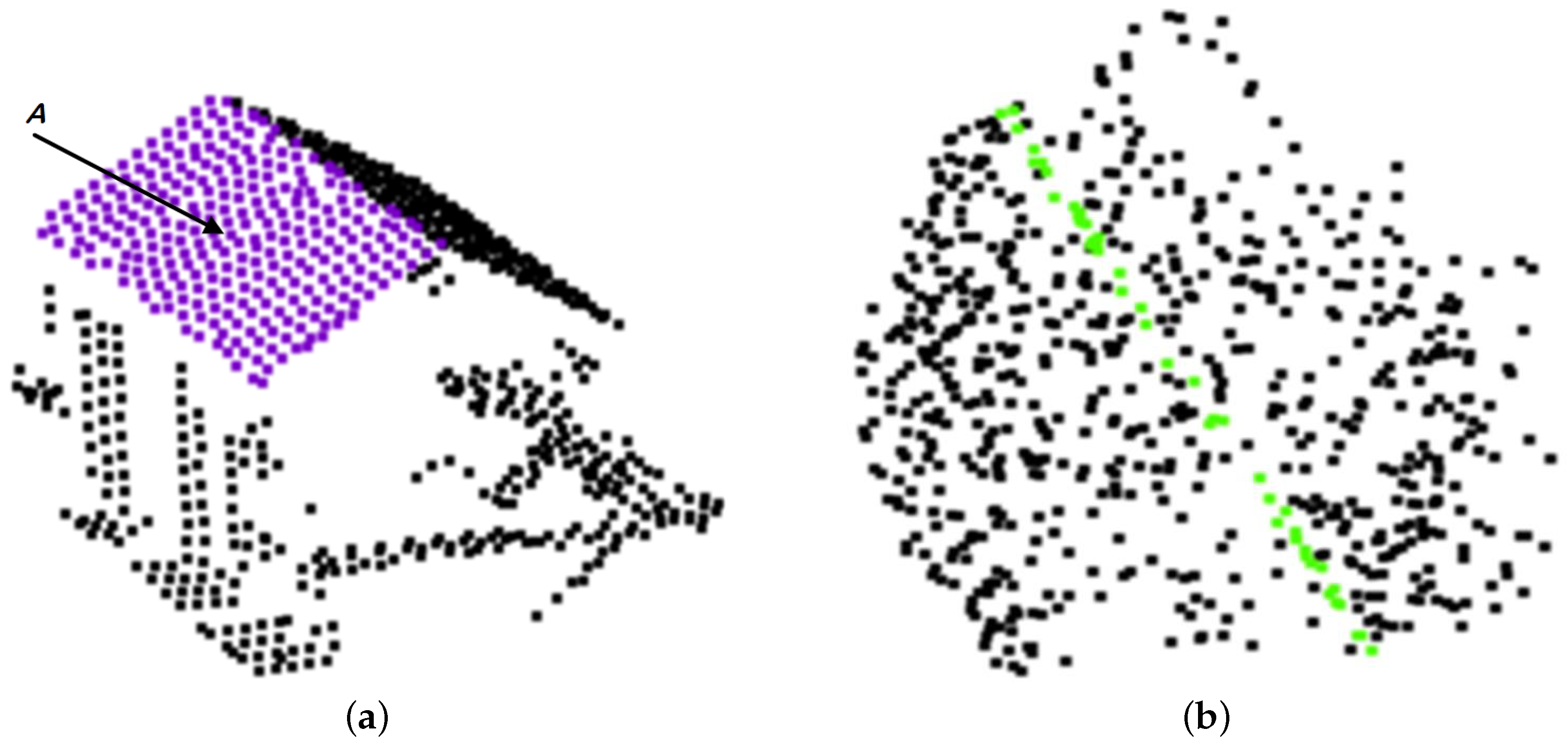

2.1. Consistent Neighborhood Determination

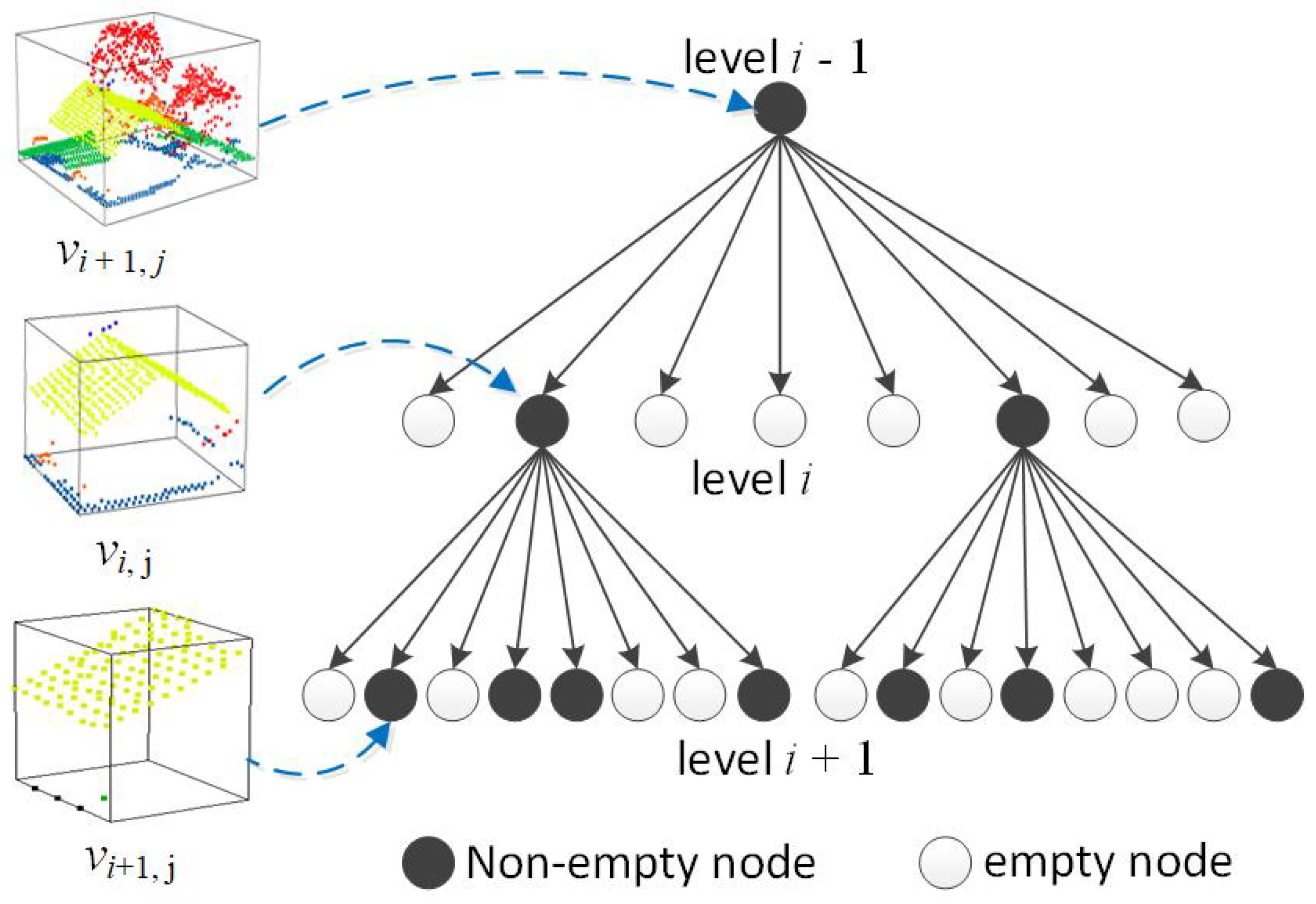

2.2. Detecting Consistent Neighborhoods at Multiple Scales

Algorithm 1. Progressive detection of consistent neighborhoods at multiple scales.
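Only the caption of Algorithm 1 survives here, so the authors' consistency criterion cannot be reconstructed from this text. The sketch below is a generic stand-in and is not the authors' algorithm. It shows one common way to choose a neighborhood scale per point: compute the surface variation λ0 / (λ0 + λ1 + λ2) of Pauly et al. (eigenvalues of the local covariance, ascending) for several candidate neighborhood sizes k, then keep the flattest neighborhood. The helper names `surface_variation` and `select_scale` and the candidate list `scales` are hypothetical.

```python
import numpy as np


def surface_variation(neighbors: np.ndarray) -> tuple[float, np.ndarray]:
    """Return (lambda0 / (lambda0 + lambda1 + lambda2), normal) for one neighborhood."""
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    total = eigvals.sum()
    variation = float(eigvals[0] / total) if total > 0 else 0.0
    return variation, eigvecs[:, 0]  # eigenvector of the smallest eigenvalue


def select_scale(points: np.ndarray, index: int, scales: list[int]) -> tuple[int, np.ndarray]:
    """Hypothetical helper: try each candidate k and keep the flattest neighborhood."""
    points = np.asarray(points, dtype=float)
    # Sort all points by squared distance to the query point (brute force).
    order = np.argsort(np.sum((points - points[index]) ** 2, axis=1))
    best_k, best_var, best_normal = None, np.inf, None
    for k in scales:
        k = min(k, len(points))
        var, normal = surface_variation(points[order[:k]])
        if var < best_var:  # strict "<": on ties the earlier candidate is kept
            best_k, best_var, best_normal = k, var, normal
    return best_k, best_normal
```

For a floor point near a floor/wall crease, small k values see only the floor, so their surface variation is about zero. A large k mixes in wall points and is rejected. This is the failure mode that a fixed-k estimator cannot avoid.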

Optimization

3. Experimental Results and Performance Evaluation

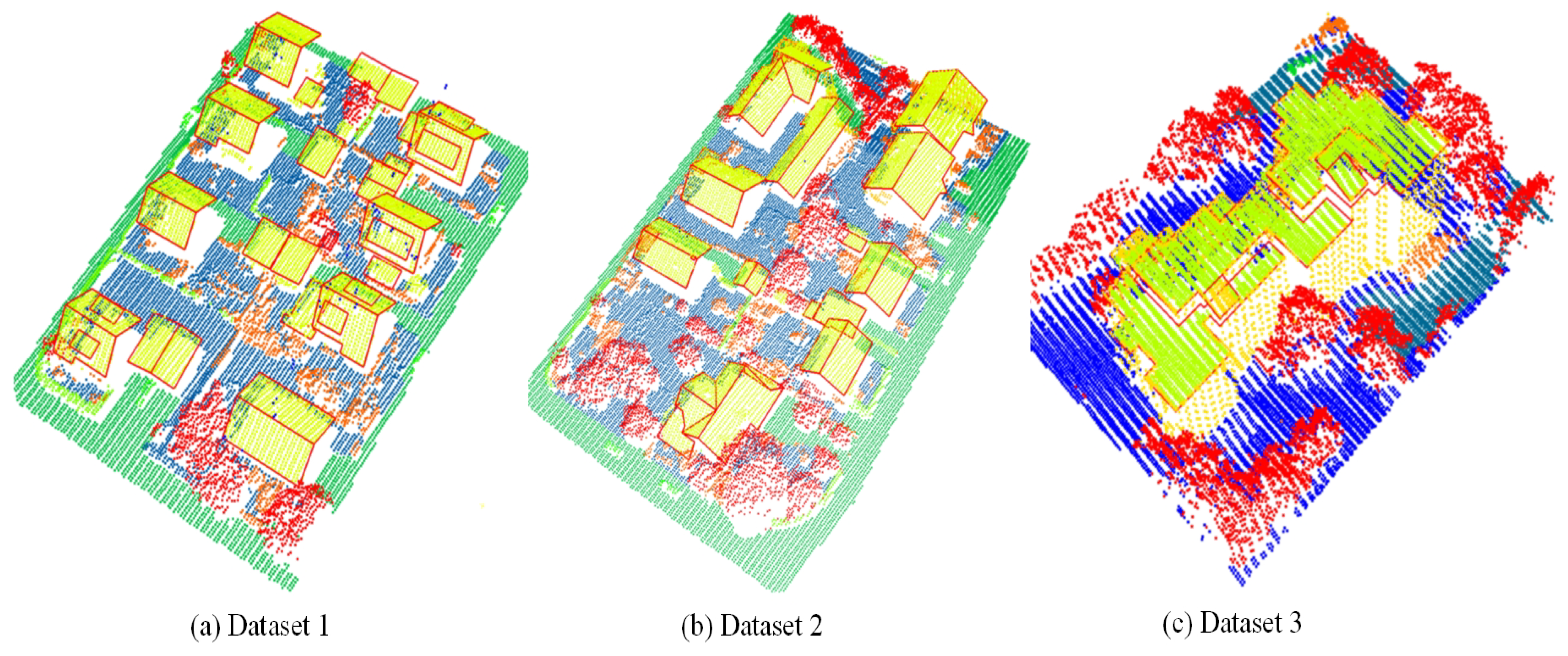

3.1. Datasets

3.2. Competing Methods and Parameter Selection

3.3. Experimental Results and Discussion

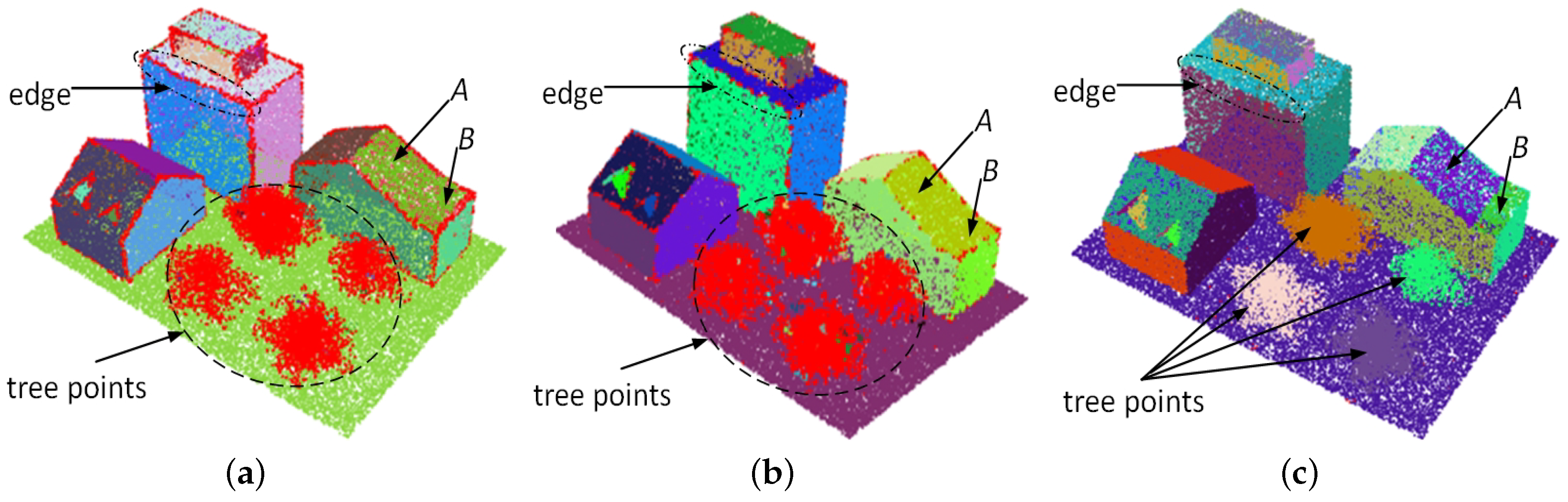

3.3.1. Synthetic Datasets

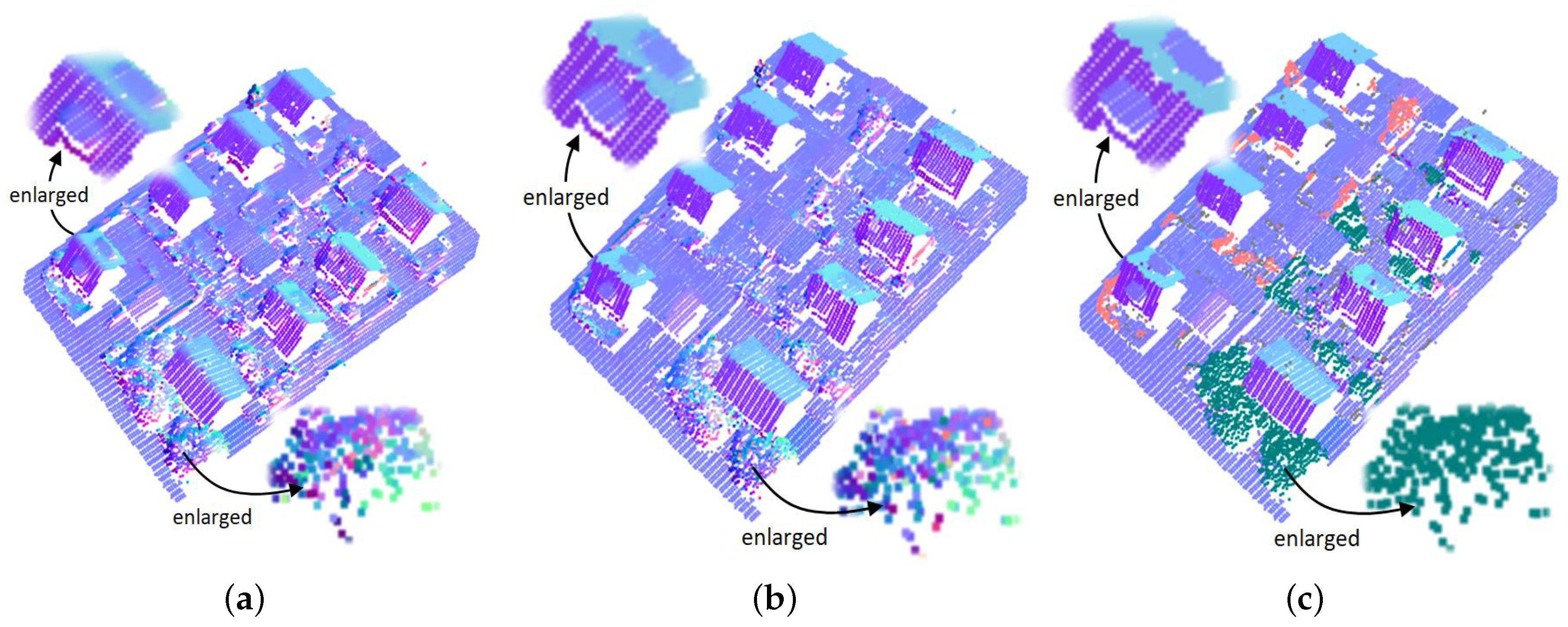

3.3.2. Real Datasets

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Yi, C.; Zhang, Y.; Wu, Q.; Xu, Y.; Remil, O.; Wei, M.; Wang, J. Urban building reconstruction from raw LiDAR point data. Comput. Aided Des. 2017, 93, 1–14.

- Dalponte, M.; Bruzzone, L.; Gianelle, D. A system for the estimation of single-tree stem diameter and volume using multireturn LiDAR data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2479–2490.

- Yang, B.; Fang, L.; Li, Q.; Li, J. Automated extraction of road markings from mobile LiDAR point clouds. Photogramm. Eng. Remote Sens. 2015, 78, 331–338.

- Mongus, D.; Žalik, B. Parameter-free ground filtering of LiDAR data for automatic DTM generation. ISPRS J. Photogramm. Remote Sens. 2012, 67, 1–12.

- Zhang, J.; Lin, X. Filtering airborne LiDAR data by embedding smoothness-constrained segmentation in progressive TIN densification. ISPRS J. Photogramm. Remote Sens. 2013, 81, 44–59.

- Li, S.; Dai, L.; Wang, H.; Wang, Y.; He, Z.; Lin, S. Estimating leaf area density of individual trees using the point cloud segmentation of terrestrial LiDAR data and a voxel-based model. Remote Sens. 2017, 9, 1202.

- Schuster, H.F. Segmentation of LiDAR data using the tensor voting framework. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 1073–1078.

- Zhao, R.; Pang, M.; Wang, J. Classifying airborne LiDAR point clouds via deep features learned by a multi-scale convolutional neural network. Int. J. Geogr. Inf. Sci. 2018, 32, 960–979.

- Crasto, N.; Hopkinson, C.; Forbes, D.; Lesack, L.; Marsh, P.; Spooner, I.; van der Sanden, J. A LiDAR-based decision-tree classification of open water surfaces in an Arctic delta. Remote Sens. Environ. 2015, 164, 90–102.

- Kovač, B.; Žalik, B. Visualization of LIDAR datasets using point-based rendering technique. Comput. Geosci. 2010, 36, 1443–1450.

- Hoppe, H.; DeRose, T.; Duchamp, T.; McDonald, J.; Stuetzle, W. Surface reconstruction from unorganized points. ACM SIGGRAPH Comput. Gr. 1992, 26, 71–78.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

- Yang, B.; Dong, Z. A shape-based segmentation method for mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 81, 19–30.

- Zancajo-Blázquez, S.; Lagüela-López, S.; González-Aguilera, D.; Martínez-Sánchez, J. Segmentation of indoor mapping point clouds applied to crime scenes reconstruction. IEEE Trans. Inf. Forensics Secur. 2015, 10, 1350–1358.

- Mattei, E.; Castrodad, A. Point cloud denoising via moving RPCA. Comput. Gr. Forum 2017, 36, 123–137.

- Zhao, R.; Pang, M.; Wei, M. Accurate extraction of building roofs from airborne light detection and ranging point clouds using a coarse-to-fine approach. J. Appl. Remote Sens. 2018, 12, 1–16.

- Wang, Y.; Feng, H.Y.; Delorme, F.E.; Engin, S. An adaptive normal estimation method for scanned point clouds with sharp features. Comput.-Aided Des. 2013, 45, 1333–1348.

- Holzer, S.; Rusu, R.B.; Dixon, M.; Gedikli, S.; Navab, N. Adaptive neighborhood selection for real-time surface normal estimation from organized point cloud data using integral images. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; Volume 7198, pp. 2684–2689.

- Mitra, N.J.; Nguyen, A.; Guibas, L. Estimating surface normals in noisy point cloud data. Int. J. Comput. Geom. Appl. 2004, 14, 261–276.

- Pauly, M.; Gross, M.; Kobbelt, L.P. Efficient Simplification of Point-sampled Surfaces. In Proceedings of the Conference on Visualization ’02, VIS ’02, Boston, MA, USA, 27 October–1 November 2002; IEEE Computer Society: Washington, DC, USA, 2002; pp. 163–170.

- Castillo, E.; Liang, J.; Zhao, H. Point Cloud segmentation and denoising via constrained nonlinear least squares normal estimates. In Innovations for Shape Analysis; Breuß, M., Bruckstein, A., Maragos, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 283–299.

- Aurenhammer, F. Voronoi diagrams-a survey of a fundamental geometric data structure. ACM Comput. Surv. 1991, 23, 345–405.

- Dey, T.K.; Li, G.; Sun, J. Normal Estimation for Point Clouds: A Comparison Study for a Voronoi Based Method. In Proceedings of the Second Eurographics/IEEE VGTC Conference on Point-Based Graphics, SPBG’05, Stony Brook, NY, USA, 21–22 June 2005; Eurographics Association: Aire-la-Ville, Switzerland, 2005; pp. 39–46.

- Amenta, N.; Bern, M. Surface Reconstruction by Voronoi Filtering. In Proceedings of the Fourteenth Annual Symposium on Computational Geometry, SCG ’98, Minneapolis, MN, USA, 7–10 June 1998; ACM: New York, NY, USA, 1998; pp. 39–48.

- Li, B.; Schnabel, R.; Klein, R.; Cheng, Z.; Dang, G.; Jin, S. Robust normal estimation for point clouds with sharp features. Comput. Gr. 2010, 34, 94–106.

- Dey, T.K.; Goswami, S. Provable surface reconstruction from noisy samples. In Proceedings of the Twentieth Annual Symposium on Computational Geometry, SCG ’04, Brooklyn, NY, USA, 8–11 June 2004; ACM: New York, NY, USA, 2004; pp. 330–339.

- Alliez, P.; Cohen-Steiner, D.; Tong, Y.; Desbrun, M. Voronoi-based variational reconstruction of unoriented point sets. In Proceedings of the Fifth Eurographics Symposium on Geometry Processing, SGP ’07, Barcelona, Spain, 4–6 July 2007; Eurographics Association: Aire-la-Ville, Switzerland, 2007; pp. 39–48.

- Nurunnabi, A.; Belton, D.; West, G. Robust statistical approaches for local planar surface fitting in 3D laser scanning data. ISPRS J. Photogramm. Remote Sens. 2014, 96, 106–122.

- Liu, X.; Zhang, J.; Cao, J.; Li, B.; Liu, L. Quality point cloud normal estimation by guided least squares representation. Comput. Gr. 2015, 51, 106–116.

- Boulch, A.; Marlet, R. Fast and robust normal estimation for point clouds with sharp features. Comput. Gr. Forum 2012, 31, 1765–1774.

- Zhang, J.; Cao, J.; Liu, X.; Wang, J.; Liu, J.; Shi, X. Point cloud normal estimation via low-rank subspace clustering. Comput. Gr. 2013, 37, 697–706.

- Khaloo, A.; Lattanzi, D. Robust normal estimation and region growing segmentation of infrastructure 3D point cloud models. Adv. Eng. Inform. 2017, 34, 1–16.

- Boulch, A.; Marlet, R. Deep Learning for Robust Normal Estimation in Unstructured Point Clouds. Comput. Gr. Forum 2016, 35, 281–290.

- Ben-Shabat, Y.; Lindenbaum, M.; Fischer, A. Nesti-Net: Normal Estimation for Unstructured 3D Point Clouds using Convolutional Neural Networks. arXiv, 2018; arXiv:1812.00709.

- Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for point-cloud shape detection. Comput. Gr. Forum 2007, 26, 214–226.

- Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS benchmark on urban object classification and 3D building reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 293–298.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of LiDAR data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

- Rabbani, T.; van den Heuvel, F.A.; Vosselman, G. Segmentation of point clouds using smoothness constraint. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, XXXVI, 248–253.

| Datasets | KNN–PCA | RHT | Proposed Approach |
|---|---|---|---|
| Synthetic datasets | k = 50 | = 50, k = 20, = 5, = 15 | = 0.15, = 4 |
| Real datasets | k = 30 | = 80, k = 40, = 5, = 15 | = 0.10, = 3 |
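The table sets k = 50 (synthetic) and k = 30 (real) for the KNN–PCA baseline. This is the classic estimator of Hoppe et al.: fit a plane to the k nearest neighbors and take the direction of least variance as the normal. Below is a minimal NumPy sketch of that baseline, assuming brute-force neighbor search. The function name `knn_pca_normals` is hypothetical, and this is not the code used in the paper.

```python
import numpy as np


def knn_pca_normals(points: np.ndarray, k: int) -> np.ndarray:
    """Unoriented unit normals by PCA over each point's k nearest neighbors."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    k = min(k, n)
    # Brute-force squared distances (O(n^2) memory); a k-d tree would be used
    # for real scans with millions of points.
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    normals = np.empty_like(points)
    for i in range(n):
        nbrs = points[np.argsort(d2[i])[:k]]  # k nearest, the point itself included
        centered = nbrs - nbrs.mean(axis=0)
        cov = centered.T @ centered / k
        _, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]        # direction of least variance
    return normals
```

The sign of each normal is arbitrary because PCA gives an unoriented direction. A single fixed k also means that neighborhoods straddling an edge are fitted by one plane, which blurs sharp features.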

| Noise () | KNN–PCA | | | RHT | | | Proposed Approach | | |
|---|---|---|---|---|---|---|---|---|---|
| | | | (%) | | | (%) | | | (%) |
| 0.00 | 0.152 | 0.406 | 7.98 | 0.200 | 0.210 | 2.14 | 0.147 | 0.154 | 1.16 |
| 0.25 | 0.156 | 0.414 | 8.28 | 0.193 | 0.234 | 2.65 | 0.148 | 0.157 | 1.20 |
| 0.50 | 0.171 | 0.443 | 9.47 | 0.192 | 0.234 | 3.62 | 0.154 | 0.162 | 1.27 |
| 0.75 | 0.194 | 0.605 | 17.52 | 0.195 | 0.319 | 4.87 | 0.169 | 0.185 | 1.40 |
| 1.00 | 0.225 | 0.785 | 29.59 | 0.204 | 0.360 | 6.22 | 0.171 | 0.171 | 1.58 |
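The metric symbols in the noise table were lost in extraction. Each method reports two error magnitudes and one percentage. Assuming these are standard normal-accuracy measures, a sketch of commonly used definitions follows:

- RMS of the unoriented angle between estimated and reference normals.
- A thresholded RMS variant, often written RMS_τ, which replaces any error of at least τ by π/2.
- The percentage of points whose error is at least τ.

Whether these exactly match the paper's columns is not confirmed by the surviving text. The function names and the threshold `tau` are hypothetical.

```python
import numpy as np


def angular_errors(est: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Unoriented angle (radians) between estimated and reference normals."""
    est = est / np.linalg.norm(est, axis=1, keepdims=True)
    ref = ref / np.linalg.norm(ref, axis=1, keepdims=True)
    cos = np.abs(np.sum(est * ref, axis=1))  # abs(): the sign of a normal is ignored
    return np.arccos(np.clip(cos, 0.0, 1.0))


def rms(angles: np.ndarray) -> float:
    return float(np.sqrt(np.mean(angles ** 2)))


def rms_tau(angles: np.ndarray, tau: float) -> float:
    # Errors of at least tau are treated as maximal (pi / 2).
    clipped = np.where(angles < tau, angles, np.pi / 2)
    return float(np.sqrt(np.mean(clipped ** 2)))


def bad_point_percentage(angles: np.ndarray, tau: float) -> float:
    return 100.0 * float(np.mean(angles >= tau))
```

Under these definitions, a method can have a low plain RMS while still producing a noticeable share of badly wrong normals, which the percentage column exposes.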

| Dataset | KNN–PCA | | | RHT | | | Proposed Approach | | |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | 14 | 770 | 1054 | 9 | 279 | 1044 | 2 | 138 | 45 |
| Dataset 2 | 11 | 3187 | 5303 | 4 | 826 | 4480 | 1 | 313 | 306 |
| Dataset 3 | 2 | 587 | 6091 | 5 | 91 | 5676 | 0 | 22 | 147 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, R.; Pang, M.; Liu, C.; Zhang, Y. Robust Normal Estimation for 3D LiDAR Point Clouds in Urban Environments. Sensors 2019, 19, 1248. https://doi.org/10.3390/s19051248
