Abstract

Automatic joint detection is of vital importance for the teaching of robots before welding and the seam tracking during welding. For narrow butt joints, the traditional structured light method may be ineffective, and many existing detection methods designed for narrow butt joints can only detect their 2D position. However, for butt joints with narrow gaps and 3D trajectories, their 3D position and orientation of the workpiece surface are required. In this paper, a vision based detection method for narrow butt joints is proposed. A crosshair laser is projected onto the workpiece surface and an auxiliary light source is used to illuminate the workpiece surface continuously. Then, images with an appropriate grayscale distribution are grabbed with the auto exposure function of the camera. The 3D position of the joint and the normal vector of the workpiece surface are calculated by the combination of the 2D and 3D information in the images. In addition, the detection method is applied in a robotic seam tracking system for GTAW (gas tungsten arc welding). Different filtering methods are used to smooth the detection results, and compared with the moving average method, the Kalman filter can reduce the dithering of the robot and improve the tracking accuracy significantly.

1. Introduction

In automatic welding, it is necessary to align the welding torch with the center of the joint to ensure the welding quality. Nowadays, the motion path of a welding robot is usually set by offline programming or manual teaching. However, during the welding process, the actual joint trajectory may deviate from the path set before welding due to factors, such as machining error, assembly error, and thermal deformation. In view of the abovementioned reason, it is necessary to perform automatic joint detection.

In the welding field, visual detection is widely used for the monitoring of weld defects [1], recognition of the weld joint [2,3,4], etc. For automatic joint detection, the structured light method based on optical triangulation is commonly used to detect the 3D position of joints with large grooves. Zou et al. projected a structured laser on the workpiece surface and extracted laser stripes from images strongly disturbed by an arc to calculate the 3D position of the joint in the world frame and control the motion of the welding torch in real time [5]. Li et al. proposed a robust automatic welding seam identification and tracking method by utilizing structured light vision, which can identify deformed laser stripes in the complex welding environment and find the position of the welding joint in the pixel coordinate [6]. Some companies have released commercial joint detection sensors based on the structured light method [7,8].

However, for the butt joint with a narrow gap (with a width less than 0.2 mm), the deformation of structured light stripes almost disappears, so it is difficult to detect the position of the narrow butt joint with the structured light method [9]. To solve this problem, researchers have proposed a variety of methods. Xu et al. developed a passive visual sensing method to capture the image of a molten pool and extracted the edge of the molten pool with an improved Canny operator to calculate the deviation of the joint relative to the torch [10]. Gao et al. tried to capture the image of a molten pool with an infrared camera and calculated the deviation of the joint relative to the torch by the shape of the weld pool. Then, an adaptive Kalman filter and Elman neural network were used to improve the accuracy [11]. Nilsen et al. estimated the offset of the joint relative to the torch in laser welding by the image of the keyhole and the spectrum of the plasma sprayed from the keyhole, respectively, and combined these two methods to construct a composite sensing system [12]. Shah et al. used an auxiliary light source to illuminate the workpiece. Considering the uneven brightness on the surface of the workpiece, the local thresholding method was used to extract the position of the joint in pixel coordinates [13]. Nele et al. constructed an image acquisition system, which was combined with the pattern learning algorithm to detect the position of the butt joint relative to the torch and corrected the torch position in real time [14]. Kramer et al. distinguished the boundaries between the two surfaces of the workpiece to be welded by their texture information, thereby finding the nearly invisible narrow line imaged by the joint gap [15]. Gao et al. introduced a novel method, in which the deviation of the weld joint relative to the torch was detected according to the magneto-optical effect [16].

The above methods for the detection of a narrow butt joint can only detect its 2D position. However, in the welding of a butt joint with a width less than 0.2 mm and with a 3D trajectory, the 3D position of the joint is required. Furthermore, the welding torch should also maintain a proper orientation relative to the workpiece surface to ensure the welding quality, so the normal vector of the workpiece surface also needs be obtained. Fang et al. presented a visual seam tracking system in which the deviation of the joint relative to the torch in the horizontal direction was detected according to the position of the joint in the image under natural light illumination, and the deviation in the vertical direction was detected using the structured light method, but the method was incapable of detecting the orientation of the workpiece surface [17]. Shao et al. projected three laser stripes onto the workpiece surface, blended the 2D information of the joint in the image with the 3D information of the structured light, calculated the 3D position of the joint and the normal vector of the workpiece surface, and adjusted the position and orientation of the torch in real time [18]. However, this method still relied on the deformation of laser stripes. Because of the machining error and assembly error, the width of the joint can be uneven and the gap of some points on the joint will disappear. Under this circumstance, this method will miss some joint points.

Zeng et al. designed a narrow butt detection sensor, which projected uniform light and crosshair structured light onto the surface of a workpiece and captured images alternately. Then, 2D and 3D information were combined to calculate the 3D position of the joint and the normal vector of the workpiece surface in a world frame, and the position of the torch was corrected in real time [9,19]. Based on this method, Peng et al. tried to fit the workpiece surface with the moving least squares (MLS) method in the calculation process to improve the fitting accuracy [20]. However, Zeng’s method [9,19] has certain limitations. To conveniently extract the 2D information of the joint from the image, the method requires strict lighting conditions for the auxiliary light source. When the auxiliary light source is on, the grayscale of the workpiece surface in the image needs to be almost saturated, but this requirement can be achieved only with a specular reflection workpiece surface and the workpiece surface needs to be close to the auxiliary light source. The working distance of those commercial joint detection sensors based on the structured light method can reach more than 100 mm [7,8], while the working distance in [9] does not exceed 40 mm generally. In the case of a diffuse reflection workpiece surface or remote detection, the illumination intensity of the LED light source is insufficient as the auxiliary light source. To achieve the desired high grayscale of the workpiece surface in the image, the exposure time of the camera needs to be extended, which will deteriorate the detection speed. If a laser light source is used as the auxiliary light source, speckle in the image will affect the image quality and make it difficult to extract the 2D information of the joint from the image. In this paper, a vision based detection method for a narrow butt joint is proposed, which reduces the requirements for the lighting conditions of the auxiliary light source, and the proposed method is used in building a robotic seam tracking system for GTAW (gas tungsten arc welding).

The rest of the paper is organized as follows. In Section 2, the processes and details of the proposed detection method are presented. To apply this detection method in the robotic seam tracking system, Section 3 introduces the necessary coordinate transformation. In Section 4, the configurations of the joint detection sensor and the robotic seam tracking system for GTAW are detailed. In Section 5, the detection results of the proposed method are presented, and different filtering methods are used to smooth the detection results to reduce the dithering of the robot and improve the seam tracking accuracy. Finally, Section 6 gives the conclusions of this paper.

2. Detection Method for the Narrow Butt Joint

In this section, the principle of the detection method is introduced first, then details of the method are discussed, including the grabbing of images with an appropriate grayscale distribution, image processing, and the calculation of the position and orientation of the joint. Finally, applications of the proposed method are discussed.

2.1. Principle of the Method

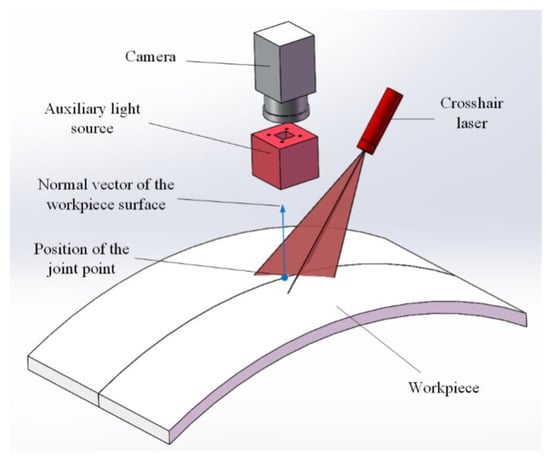

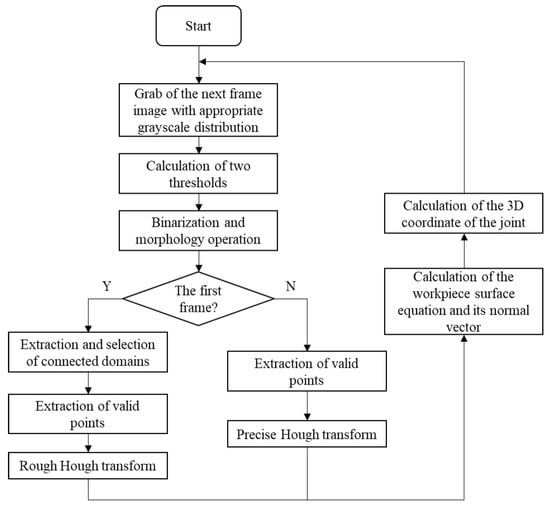

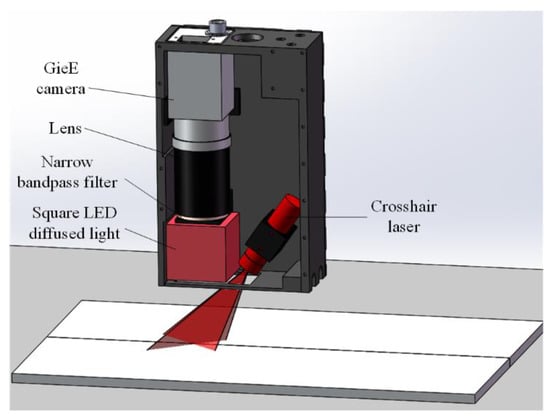

The basic experimental setup of the proposed detection method for a narrow butt joint is shown in Figure 1. A crosshair laser is projected onto the workpiece surface and the LED (light-emitting diode) auxiliary light source is used to illuminate the workpiece surface continuously. Images with an appropriate grayscale distribution are grabbed by using the auto exposure function of the camera to adjust the exposure time. Then, the joint region and laser stripe region can be extracted by different gray thresholds. The laser stripe region provides the 3D information of the workpiece surface (normal vector included) and the joint region provides the 2D information of the joint. By combining the 2D and 3D information together, the 3D position of the joint can be obtained. To improve the processing speed, different processing flows are used for the first frame and the subsequent frames. The flowchart of the proposed joint detection method is shown in Figure 2, and its details will be described in the following subsections.

Figure 1.

Basic experimental setup of the proposed method.

Figure 2.

Flowchart of the proposed joint detection method.

2.2. Grabbing of Images with an Appropriate Grayscale Distribution

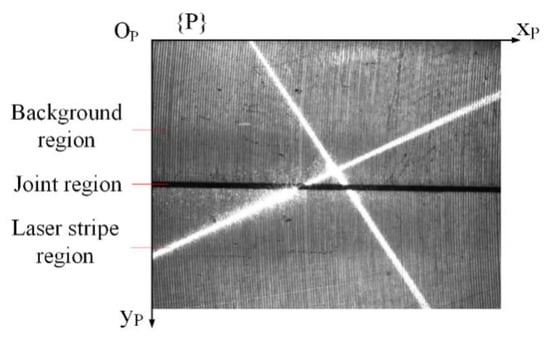

The ROI (region of interest) of the grabbed image is shown in Figure 3, and the field-of-view corresponding to it is 16.25 × 10 mm. The grayscale of the background region is affected by the illumination intensity of the auxiliary light source. The laser stripe region is created by the projection of the crosshair laser. Ideally, the grayscale for the joint region should be very low (close to 0) and that for the laser stripe region should be very high (close to 255) in the image. So, in the auto exposing of the camera, the expected average grayscale of the ROI is set to 128 to ensure that the joint region and laser stripe region can be differentiated clearly from the background region according to different gray thresholds. However, if the illumination intensity of the auxiliary light source is too strong, the exposure time will be reduced greatly, so the grayscale for the laser stripe region will be obviously smaller than 255 and be close to that of the background region when the expected average grayscale of the ROI is set to 128, which will make it difficult to differentiate the laser stripe region from the background region. So, to differentiate the laser stripe region from the background region, the illumination intensity of the auxiliary light source is controlled to be obviously weaker than that of the crosshair laser by adjusting its supply voltage to make sure that the grayscale for the laser stripe region is close to 255. In fact, this can easily be achieved, especially under the circumstance of remote detection, because the orientation of the LED light is much worse than that of the laser. The average grayscale of the ROI shown in Figure 3 is 128.4. The pixel coordinate system {P} is established on the ROI.

Figure 3.

The grabbed ROI (region of interest).

2.3. Image Processing

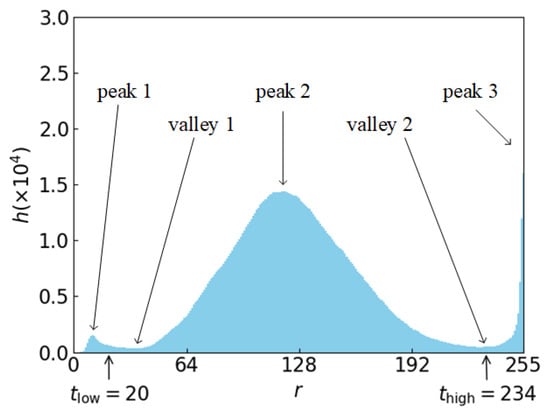

2.3.1. Determination of Thresholds for Binarization

The thresholds for the extraction of the laser stripe region and the joint region are determined according to the histogram, , of the ROI, where represents the grayscale and represents the number of pixels with a grayscale of . To eliminate false valleys caused by accidental factors, a Gaussian filter with a length of 5 is used to smooth the original histogram first, and the smoothed histogram is shown in Figure 4. The background region with a medium grayscale results in peak 2 in the histogram. For the laser stripe region, its grayscale is very high and its area is not very small, which causes peak 3 in the high grayscale region of the histogram. So, the grayscale corresponding to valley 2, which is between peak 2 and peak 3, can be regarded as the threshold, , and it can be used for extracting the laser stripe region from the background region. The grayscale corresponding to valley 2 is found to be 234 in the smoothed histogram, so .

Figure 4.

Smoothed histogram of the grabbed ROI.

For the joint region, the binarization threshold cannot be determined in the same way as the laser stripe region. This is because when the joint is extremely narrow, the area of the joint region in the ROI can be very small. In this case, peak 1 in the histogram does not exist at all, and neither does valley 1. To ensure the robustness of the threshold determination method, Equation (1) is used to determine the threshold, , for extracting the joint region from the background region:

where is the area of the ROI and is a ratio, which is . We get . The value of should be near the percentage of the joint region’s area in the ROI. If the value is set too high, the background region is not eliminated effectively. On the contrary, if the value is set too low, the main part of the joint region is not kept completely.

2.3.2. Binarization and Morphology Operation

The binarization of the images is processed with the thresholds, and , respectively, and the binary ROI are shown in Figure 5. In the two images of Figure 5, there are some disconnected small regions, so the morphology of the close operation is used to connect them in the two images, and the images after close operation are shown in Figure 6.

Figure 5.

ROI after binarization. (a) Laser stripe region. (b) Joint region.

Figure 6.

ROI after close operation. (a) Laser stripe region. (b) Joint region.

2.3.3. Extraction and Selection of Connected Domains

When the image is the first frame, the connected domains in the two images of Figure 6 are extracted. Because the laser stripe region and the joint region, ideally, do not have holes, only the outermost contours are kept if there are nested contours. For the laser stripe region and joint region, the connected domains have a relatively large area and a slender shape, while those falsely kept regions always have a relatively small area or a less slender shape, so we can retain the laser stripe region and joint region according to their area and circularity ratio [21] (pp. 844–845):

where is the area of the connected domains and is the area threshold. The values of are selected by several attempts to keep the main part of the laser stripe region or the joint region. For the laser stripe region, is set to 2000 pixel2 and for the joint region, is set to 1000 pixel2. This is because the area of the laser stripe region is obviously larger than that of the joint region. represents the circularity ratio of the connected domains and is the circularity ratio threshold. is defined as:

where is the perimeter of the connected ratio. The circularity ratio can represent the slenderness degree of a region. It is 1 for a circular region and 0 for a line, so the circularity ratio threshold, , is set to 0.5 for both the laser stripe region and the joint region and we find this value effective.

The connected domains kept are shown in Figure 7. It can be found that there is a false connected domain kept in Figure 7a, which results from the area threshold being not large enough. In fact, if the area threshold is set to 3000 pixel2, this false connected domain will be eliminated. However, even if it is kept, the laser stripe can still be extracted successfully in the following steps.

Figure 7.

Kept connected domains. (a) Laser stripe region. (b) Joint region.

Because the extraction and selection of the connected domains are time-consuming tasks, to improve the speed of image processing, they are only performed for the first frame. For the rest of the frames, those falsely kept regions do not influence the detection result because of the robustness of the image processing method, which is introduced in the content below.

2.3.4. Extraction of Valid Points

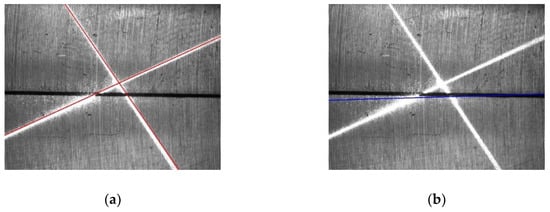

For the images in Figure 7, each column is scanned from top to bottom to find every line segment whose length is greater than 10, and then the midpoint of each found line segment is marked as a valid point, as shown in Figure 8. The valid points of the laser stripe region are marked in red and the valid points of the joint region are marked in blue. The use of a length threshold of the line segments is to eliminate those line segments located at the boundary of the connected domains.

Figure 8.

Extracted valid points. (a) Laser stripe region. (b) Joint region.

2.3.5. Line Extraction

Because the field-of-view corresponding to the ROI is small (with a size of 16.25 × 10 mm), the joint in the ROI can be approximated as a straight line when its trajectory does not change sharply. When the curvature of the joint trajectory increases, the size of the field-of-view corresponding to the ROI should be reduced to make sure that the joint region can be regarded as a straight line. Similarly, the workpiece surface in the ROI can be approximated as a plane when its curvature is small, so the laser stripes can be approximated as two straight lines.

The Hough transform [22] is a common method for the detection of straight lines. A line can be represented as , where is the perpendicular distance from the origin to the line and is the angle formed by this perpendicular line and the horizontal axis, so any line can be represented with . A 2D array or accumulator is created with a resolution of . For every point in the image, is changed within its domain of definition with a step size of and a different is obtained. For every pair, the value of the bin corresponding to it in the accumulator increases. Finally, the parameters of the bin corresponding to the maximum value in the accumulator are regarded as the extracted line.

However, the computational cost of the Hough transform increases with the improvement of its detection accuracy. To ensure the detection accuracy of the Hough transform and meanwhile ensure the speed of detection to meet the requirements of real-time performance, different parameter settings are adopted for the first frame and the subsequent frames. If the speed of detection is not fast enough, the detected point would not be dense enough so the detection accuracy of the joint trajectory will deteriorate.

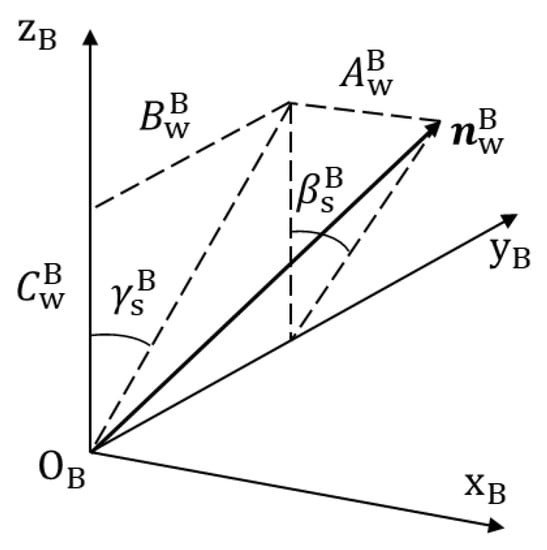

For the first frame, a rough Hough transform is used. The Hough transform is applied to extract two laser stripe lines and the joint line, respectively, and only valid points in Figure 8 are considered. To increase the detection speed, the resolution, and , are set with relatively large values, which means that the accuracy of the line extraction is relatively low. and are set here. Because we currently do not know any information about the line’s position, the value range of is set to , where is the diagonal length of the ROI and the value range of is set to . For the valid points of the laser stripe region, the accumulator’s bins with maximum values in the range of and are searched, respectively, and the parameters of these two found bins represent the two laser stripe lines. For the valid points of the joint region, the accumulator’s bin with the maximum value is searched for in the whole parameter space of and , and the parameters of the found bin represent the joint line. The found laser stripe lines and the joint line are drawn in Figure 9a,b, respectively, from which it can be seen that the accuracy of the line extraction is inadequate.

Figure 9.

Extracted lines with the rough Hough transform. (a) Laser stripe lines. (b) Joint line.

For the subsequent frames, a precise Hough transform is used. During two successive frames, there is no significant relative motion between the camera and the workpiece, so the position of the laser stripe region and the joint region only changes a little. Therefore, from the second frame, the value ranges of the parameters of the Hough transform only need to be near the extracted lines of the last frame. For example, suppose that the parameters of the extracted joint line in one frame are . Then, the value of is restricted to and is restricted to when extracting the joint line in the subsequent frame. and represent half of the value range of and in the Hough transform of the subsequent frame, which are set to be 100 pixels and 0.2 rad, respectively. In the same way, the value ranges for the extraction of two laser stripe lines are determined. Since the value ranges of the parameters become smaller, the value of and can be reduced to increase the extraction accuracy, so it is set that and . The laser stripe lines and the joint line extracted with this method are shown in Figure 10, from which we can see that the accuracy of the line extraction obviously increases.

Figure 10.

Extracted lines with a precise Hough transform. (a) Laser stripe lines. (b) Joint line.

It is worth noting that the extraction and selection of the connected domains described in Section 2.3.3 are not performed for the subsequent frames, so there may be some valid points falsely extracted. The restriction for the value ranges of the parameters of the Hough transform in the subsequent frames can eliminate the effect of these falsely extracted valid points on line extraction, which therefore increases the robustness of the line extraction method.

Below is a comparison of computational complexity between the rough Hough transform and the precise Hough transform. When the number of valid points is fixed, the computational complexity of the Hough transform is and the computational complexity of searching for the accumulator’s bin with the maximum value is , where and are the number of possible values for and , respectively.

For the first frame:

For the subsequent frames:

It can be seen that compared with the first frame, the computational complexity of the precise Hough transform for the subsequent frames does not change much though its accuracy increases significantly.

2.4. Calculation of the 3D Coordinates of the Joint and the Normal Vectors of the Workpiece Surface

By performing calibration in advance [23], the relationship between a point in the pixel coordinate system {P} and its corresponding point in the camera coordinate system {C} is obtained as:

where and represent the transformation function between {P} and {C}, which are determined by the camera itself. The equations of the two light planes of the crosshair laser source in {C} can also be obtained through calibration:

Two laser stripe lines are denoted as and . points centered on the intersection of and are selected with an equal distance on and in {P}, respectively, and these selected points are denoted as , . is set to 50 and the distance between two adjacent points is set to 10 pixels. For each selected point , its corresponding coordinate, , in {C} can be solved by combining Equations (6) and (7):

Because the field-of-view corresponding to the ROI is small, the workpiece surface in the ROI can be approximated as a plane in {C} when its curvature is small, and its least-squares plane can be estimated with , which can be represented with Equation (9):

Naturally, the normal vector of the workpiece surface in {C} can be represented as , which can also be regarded as the normal vector (or orientation) of the joint point.

Among the points on the joint line, only the point located at the middle along the y direction of the ROI is selected and calculated as the joint point here, which is denoted as in {P}. Then, its corresponding 3D coordinate, (, in {C} can be solved by combining Equations (6) and (9):

2.5. Applications of the Proposed Detection Method

The proposed detection method can be used both before welding and during welding. On the one hand, it can be used before welding to correct the path of the robot when the trajectory of the joint changes after teaching. Under this circumstance, it can be applied to welding methods, like laser welding, GMAW (gas metal arc welding), and GTAW. On the other hand, it can be used during welding to guide the motion of the torch in real time. Since this method requires images of the joint with little disturbance, it can be applied to welding methods that include almost no spatter, like GTAW.

3. Coordinate Transformation

In Section 2, the position and orientation of the joint point in the camera coordinate system {C} was calculated with the proposed joint detection method. When applying this method in the robotic seam tracking system, the position and orientation in the camera coordinate system {C} need to be transformed into the base coordinate system {B} of the robot to guide the motion of the robot.

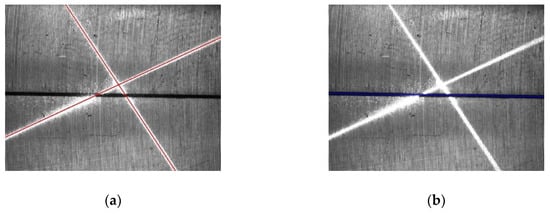

Coordinate systems involved in coordinate transformation include the camera coordinate system {C} fixed to the camera, the base coordinate system {B} attached to the robot base, and the tool coordinate system {T} fixed to the welding torch, as shown in Figure 11. A coordinate transformation can be described with a homogenous transformation matrix [24]. To describe the transformation relationship of {C} with respect to {B}, a homogenous transformation matrix, , is needed.

Figure 11.

Coordinate systems involved in the coordinate transformation.

To obtain a homogenous transformation matrix, , a homogenous transformation matrix, , is required, which describes the transformation relationship of {C} with respect to {T}, and , which describes the transformation relationship of {T} with respect to {B}. Then, can be derived from:

Since in the robotic seam tracking system the camera is fixed on the welding torch, can be predetermined by calibration. The origin of {T} is denoted as TCP (tool center point). is related to the position and orientation of the robot, which can be represented as , where represents the position of the TCP in {B} and represents the orientation of the welding torch in Euler angles. , , and are the roll, pitch, and yaw angles of {T} relative to {B}. With these six parameters known, can be derived, which is not detailed here. Additionally, can be derived from Equation (11). Then, the coordinates of the joint point, , in {B} can be derived from:

With derived, the normal vector of the joint point in {B} can be derived since is known. Suppose that can be represented as:

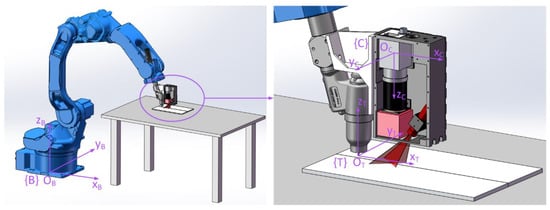

Therefore, the orientation of the joint point in {B} can be described with the Euler angles, and , as shown in Figure 12, which are given as follows:

Figure 12.

Illustration of and .

So, the position and orientation of the joint point in {B} can be represented with .

In the welding process, besides a relative position with the joint, the welding torch also needs to maintain a desired relative orientation with the workpiece surface to ensure the welding quality. Suppose that the welding torch should be perpendicular to the workpiece surface. Then, the axis of the welding torch, , needs to be parallel with . In the experiments described below, the joints are mainly along the direction and there is no sharp change of their trajectories, so the welding torch does not need to rotate around its axis, . Thus, is constant at 0. and are regarded as the target values of and in the seam tracking process, respectively. Therefore, in seam tracking, the target values of the position and orientation, , of the robot are that of the joint point, , when is fixed to 0.

4. Experiment Setup

The configuration of the joint detection sensor designed according to the abovementioned detection method for a narrow butt joint of GTAW is shown in Figure 13. The Gig (Gigabit Ethernet) camera has a resolution of 1600 × 1200 pixel and offers the auto exposure function. With a working distance (the distance from the bottom of the sensor to the detected workpiece) of 30 mm, the field-of-view of the camera is 20 × 15 mm. The size of the ROI is set to 1300 × 1000 pixel in the experiments. The square LED diffused light with a central wavelength of 630 nm is used as the auxiliary light source. Since the image captured by the camera is rectangular, and the shell of the sensor is cuboid, a square LED can make better use of the space in the sensor and the area in the image. The crosshair laser has a central wavelength of 635 nm and the narrow bandpass filter has a central wavelength of 635 nm and FWHM (full width at half maximum) of 10 nm. The central wavelength for the square LED diffused light, the crosshair laser, and the narrow bandpass filter can eliminate the effect of the arc light on joint detection in aluminum alloy welding using GTAW, since in the arc light spectrum, the intensity near 635 nm is relatively low [9].

Figure 13.

Configuration of the joint detection sensor.

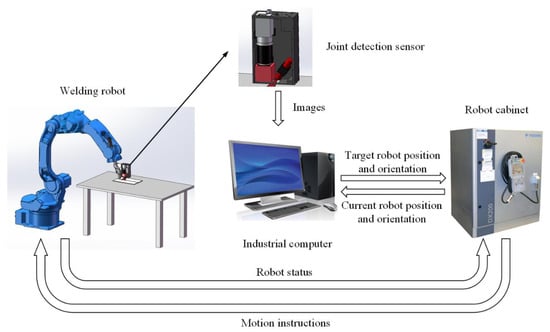

A schematic of the robotic seam tracking system for GTAW is shown in Figure 14. The joint detection sensor is fixed 52.3 mm in front of the welding torch. The welding torch is installed at the end of the robot arm, so its position and orientation can be controlled by changing the position and orientation of the robot arm. The robot is a Yaskawa MA1440 six-axis robot, which can be controlled directly with the DX200 robot cabinet. The industrial computer has 8 G RAM and i7-6700 CPU with a clock frequency of 2.60 GHz. The image of the workpiece surface is grabbed and sent to the industrial computer by the joint detection sensor. Then, the industrial computer performs image processing to obtain the position and orientation of the joint point in the camera coordinate system {C}. By combing them with the current position and orientation of the robot sent by the robot cabinet, the industrial computer performs coordinate transformation and calculates the position and orientation of the joint point in the base coordinate system {B}, namely, the target position and orientation of the robot.

Figure 14.

Schematic of the robotic seam tracking system for GTAW (gas tungsten arc welding).

5. Process and Result of the Joint Detection and Seam Tracking Experiment

In this section, a joint detection experiment is carried out with the robotic seam tracking system first. Then, a seam tracking experiment is carried out and in order to smooth the detection results and improve the tracking accuracy, different smoothing methods are used and their effects are compared.

5.1. Process and Results of the Joint Detection Experiment

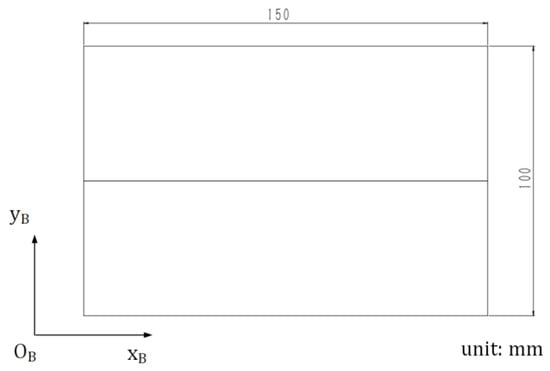

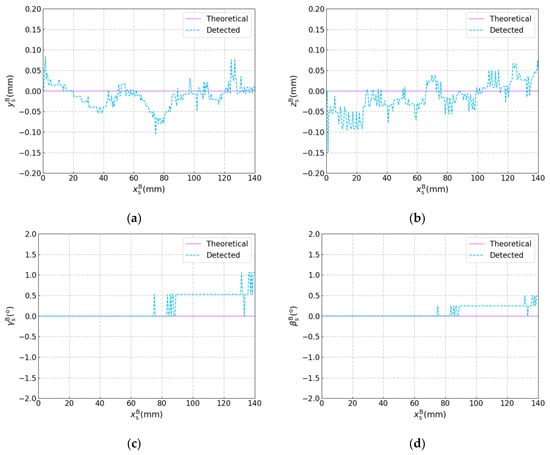

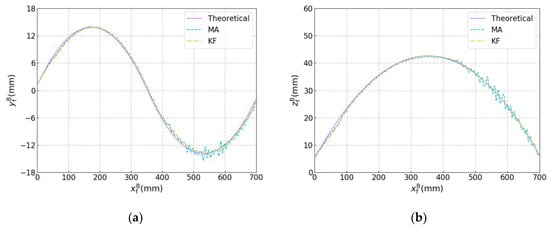

The joint detection experiment was performed with the robotic seam tracking system described in Section 4, in which the plane workpieces used are shown in Figure 15. The width of the joint between these two workpieces was less than 0.2 mm. The frame rate of the camera was 10 fps, and every image was used to calculate the target position and orientation. It should be noted that in this experiment, the welding torch moved along the axis of the base coordinate system {B} at a constant speed of 5 mm/s and did not change its motion status according to the detected result, so there was only joint detection and no seam tracking. The theoretical and detected results are shown in Figure 16 in which , , , and are plotted against , respectively. The theoretical results were calculated according to drawings of the plane workpieces in Figure 15. It can be seen that the position error does not exceed ±0.15 mm and the angle error does not exceed ±1.5°, which indicates the effectiveness of the proposed detection method for narrow butt joints.

Figure 15.

Dimension and orientation of the plane workpieces.

Figure 16.

Results of the joint detection experiment with the plane workpieces. (a) versus . (b) versus . (c) versus . (d) versus .

5.2. Process and Results of the Seam Tracking Experiment

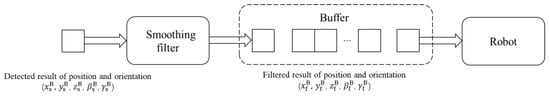

The seam tracking experiment was performed with the robotic seam tracking system, in which the curved workpieces used are shown in Figure 17. The joint to be tracked was a curve with a 3D trajectory and width less than 0.2 mm. The frame rate of the camera was 10 fps and the linear speed and angular speed of the robot were 5 mm/s and 5 °/s, respectively. From the results of the joint detection experiment shown in Figure 16, some fluctuations can be noted. If these detected results are used to guide the motion of the robot directly, dithering will happen in the robot’s motion because the detected results are not smooth enough, which will affect the accuracy of the detection and tracking. So, in the seam tracking experiment, the detected results need smoothing by a filter. Then, the smoothed results of position and orientation were sent to a buffer, and the results in the buffer were sent to the robot in sequence to guide its motion. The existence of the buffer is thus necessary. For the robot, we could only send it the next target after it had reached the last target. Because the next target may be detected before the robot had reached its last target, we needed the buffer to store these newly detected targets. The process is shown in Figure 18. In addition, only the filtered results whose positions were at a minimal distance (1.5 mm here) from that of the previous filtered result were sent to the buffer to make sure that positions of the filtered results used to guide the motion of the robot were not too close, otherwise obvious pauses in the motion of the robot would have resulted.

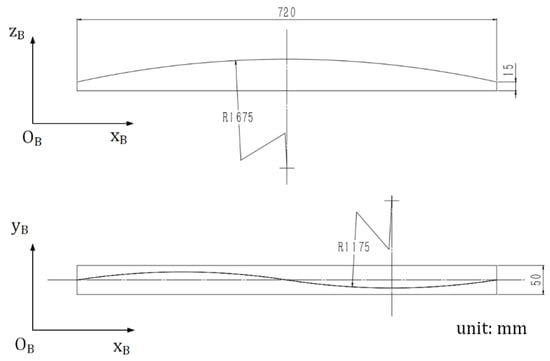

Figure 17.

Dimension and orientation of the curved workpieces.

Figure 18.

Filtering and sending of the position and orientation results.

Two smoothing methods were used to smooth the detected results, and their effects were compared.

The first smoothing method was the moving average (MA). The recent 10 detected results were taken into account in order to eliminate individual results with large errors. For each dimension in these results, the maximum and minimum were excluded and the average of the rest values were calculated and regarded as the filtered result. Taking as an example, the filtered value of is denoted as , which can be calculated from the following formula:

where is the th value of and is the th value of . For , , , and , the same method is applied and the filtered values are calculated, respectively.

The second smoothing method was the Kalman filter (KF) [25]. The state and measurement equations for a system can be described as:

where is the th value of the variable, and are the process and measurement noise, respectively, and they are assumed to be independent, white, and with normal probability distributions, and , respectively, where is the process noise covariance and is the measurement noise covariance. is the state transformation matrix and is the control matrix.

The Kalman filter iterated algorithm can be written as:

where is the th priori state estimate, is the th posteriori state estimate, is the th priori estimate error covariance, is the posteriori estimate error covariance, . is the th Kalman gain, is the th measurement, and is the measurement matrix.

The Kalman filter was applied for each dimension of the detected results to get the filtered results . Taking as an example, the average of the first 10 values of calculated from Equation (19) was regarded as . was regarded as the measurement, , so . Because it was unknown and uncontrollable how the position and orientation of the joint point would change, and were set. For the other parameters, , , and , which were determined from experience. The posteriori estimate, , was regarded as the filtered value of ; that is, .

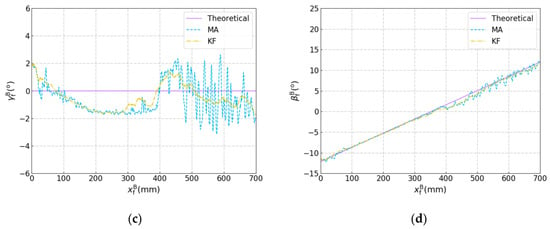

The theoretical and filtered results are shown in Figure 19. The theoretical results were calculated according to the drawings of the curved workpieces in Figure 17. When MA was used, obvious dithering happens in the robot’s motion. This indicates that MA was unable to smooth the detected results effectively, so the fluctuation of the detected results caused dithering of robot’s motion as the robot performs seam tracking and its motion follows the filtered position and orientation. Because the calculation and the communication between the robot cabinet and the industrial computer need time, there was some delay (about tens of milliseconds in our experiment) between the grab of the image and the acquisition of the current position and orientation of the robot, which may bring some detection error into the coordinate transformation. When dithering starts to happen, the detection error will increase and in turn aggravate the dithering of the robot’s motion. Compared with MA, the KF can smooth the detected results and eliminate the dithering of the robot’s motion much more effectively, therefore, increasing the accuracy of joint detection and seam tracking significantly, which indicates that KF is quite applicable for the proposed robotic seam tracking system.

Figure 19.

Results of the seam tracking experiment with the curved workpieces. (a) versus . (b) versus . (c) versus . (d) versus .

Next, the processing time for the joint detection and smoothing (including image processing, coordinate transformation and filtering) were tested and compared. One hundred results of the position and orientation of the joint points were detected and smoothed with MA and KF, respectively. Mean values of the required time were 44.7 ms and 44.5 ms, and the standard deviations were 9.8 ms and 8.0 ms, respectively. It can be found that KF does not lead to an increase in the processing time compared with MA. Suppose the welding speed is 10 mm/s, the distance between two detected points is less than 0.5 mm, so the processing speed of the proposed joint detection method meets the real-time requirements to make the detected trajectory accurate enough.

6. Conclusions

A vision based detection method for a narrow butt joint was proposed in this paper. The proposed method can detect the 3D position of the narrow butt joint with a width of less than 0.2 mm and the normal vector of the workpiece surface simultaneously. The position error does not exceed ±0.15 mm and the angle error does not exceed ±1.5 °. In addition, the proposed detection method was applied in a robotic seam tracking system for GTAW. It was found that the Kalman filter can reduce the dithering of the robot and improve the tracking accuracy significantly compared with the moving average method, which indicates that KF is applicable for the proposed robotic seam tracking system.

Author Contributions

Conceptualization, B.X.; Data curation, Y.G., Z.T. and G.W.; Formal analysis, B.X.; Investigation, G.P.; Supervision, B.C., D.D. and G.W.; Writing—original draft, B.X.; Writing—review & editing, B.C. and D.D.

Funding

This research was funded by the National Natural Science Foundation of China grant number U1537205 and the National Defence Basic Scientific Research Project grant number JCKY2014203A001.

Acknowledgments

The research was financially supported by the National Natural Science Foundation of China (No.U1537205), and the National Defence Basic Scientific Research Project (No.JCKY2014203A001).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hong, Y.; Chang, B.; Peng, G.; Yuan, Z.; Hou, X.; Xue, B.; Du, D. In-Process Monitoring of Lack of Fusion in Ultra-Thin Sheets Edge Welding Using Machine Vision. Sensors 2018, 18, 2411. [Google Scholar] [CrossRef] [PubMed]

- Zeng, J.; Chang, B.; Du, D.; Wang, L.; Chang, S.; Peng, G.; Wang, W. A Weld Position Recognition Method Based on Directional and Structured Light Information Fusion in Multi-Layer/Multi-Pass Welding. Sensors 2018, 18, 129. [Google Scholar] [CrossRef] [PubMed]

- Guo, J.; Zhu, Z.; Sun, B.; Yu, Y. Principle of an innovative visual sensor based on combined laser structured lights and its experimental verification. Opt. Laser Technol. 2019, 111, 35–44. [Google Scholar] [CrossRef]

- Guo, J.; Zhu, Z.; Sun, B.; Yu, Y. A novel multifunctional visual sensor based on combined laser structured lights and its anti-jamming detection algorithms. Weld. World 2018. [Google Scholar] [CrossRef]

- Zou, Y.; Chen, T. Laser vision seam tracking system based on image processing and continuous convolution operator tracker. Opt. Laser Eng. 2018, 105, 141–149. [Google Scholar] [CrossRef]

- Li, X.; Li, X.; Ge, S.S.; Khyam, M.O.; Luo, C. Automatic Welding Seam Tracking and Identification. IEEE Trans. Ind. Electron. 2017, 64, 7261–7271. [Google Scholar] [CrossRef]

- Non-Contact Seam Tracking TH6D System. Available online: https://www.scansonic.de/en/products/th6d-optical-sensor (accessed on 29 December 2018).

- Smart Laser Probe. Available online: http://meta-vs.com/slpr.html (accessed on 29 December 2018).

- Zeng, J.; Chang, B.; Du, D.; Hong, Y.; Chang, S.; Zou, Y. A Precise Visual Method for Narrow Butt Detection in Specular Reflection Workpiece Welding. Sensors 2016, 16, 1480. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y.; Fang, G.; Chen, S.; Zou, J.J.; Ye, Z. Real-time image processing for vision-based weld seam tracking in robotic GMAW. Int. J. Adv. Manuf. Technol. 2014, 73, 1413–1425. [Google Scholar] [CrossRef]

- Gao, X.; You, D.; Katayama, S. Seam Tracking Monitoring Based on Adaptive Kalman Filter Embedded Elman Neural Network During High-Power Fiber Laser Welding. IEEE Trans. Ind. Electron. 2012, 59, 4315–4325. [Google Scholar] [CrossRef]

- Nilsen, M.; Sikström, F.; Christiansson, A.; Ancona, A. Vision and spectroscopic sensing for joint tracing in narrow gap laser butt welding. Opt. Laser Technol. 2017, 96, 107–116. [Google Scholar] [CrossRef]

- Shah, H.N.M.; Sulaiman, M.; Shukor, A.Z.; Kamis, Z.; Rahman, A.A. Butt welding joints recognition and location identification by using local thresholding. Robot. CIM-Int. Manuf. 2018, 51, 181–188. [Google Scholar] [CrossRef]

- Nele, L.; Sarno, E.; Keshari, A. An image acquisition system for real-time seam tracking. Int. J. Adv. Manuf. Technol. 2013, 69, 2099–2110. [Google Scholar] [CrossRef]

- Kramer, S.; Fiedler, W.; Drenker, A.; Abels, P. Seam tracking with texture based image processing for laser materials processing. Proc. SPIE 2014, 8963, 89630P. [Google Scholar]

- Gao, X.; Liu, Y.; You, D. Detection of micro-weld joint by magneto-optical imaging. Opt. Laser Technol. 2014, 62, 141–151. [Google Scholar] [CrossRef]

- Fang, Z.; Xu, D.; Tan, M. Visual seam tracking system for butt weld of thin plate. Int. J. Adv. Manuf. Technol. 2010, 49, 519–526. [Google Scholar] [CrossRef]

- Shao, W.J.; Huang, Y.; Zhang, Y. A novel weld seam detection method for space weld seam of narrow butt joint in laser welding. Opt. Laser Technol. 2018, 99, 39–51. [Google Scholar] [CrossRef]

- Zeng, J.; Chang, B.; Du, D.; Peng, G.; Chang, S.; Hong, Y.; Wang, L.; Shan, J. A Vision-Aided 3D Path Teaching Method before Narrow Butt Joint Welding. Sensors 2017, 17, 1099. [Google Scholar] [CrossRef] [PubMed]

- Peng, G.; Xue, B.; Gao, Y.; Tian, Z.; Hong, Y.; Chang, B.; Du, D. Vision sensing and surface fitting for real-time detection of tight butt joints. J. Phys. Conf. Ser. 2018, 1074, 12001. [Google Scholar] [CrossRef]

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Publishing House of Electronics Industry: Beijing, China, 2007; pp. 844–845. [Google Scholar]

- Hough, P.V.C. Method and Means for Recognizing Complex Patterns. U.S. Patent 3069654, 18 December 1962. [Google Scholar]

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. 2000, 22, 1330–1334. [Google Scholar] [CrossRef]

- de Graaf, M.; Aarts, R.; Jonker, B.; Meijer, J. Real-time seam tracking for robotic laser welding using trajectory-based control. Control Eng. Pract. 2010, 18, 944–953. [Google Scholar] [CrossRef]

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; University of North Carolina: Chapel Hill, NC, USA, 1995; pp. 1–16. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).