Abstract

In the wild, wireless multimedia sensor network (WMSN) communication has limited bandwidth, and the transmission of wildlife monitoring images frequently suffers from signal interference, which makes transmission time-consuming and sometimes causes it to fail. Generally, only part of each wildlife image is valuable; therefore, if the images are transmitted according to the importance of their content, these issues can be avoided. Inspired by the progressive transmission strategy, we propose a hierarchical coding progressive transmission method that transmits the saliency object region (i.e., the animal) and its background with different coding strategies and priorities. Specifically, we first construct a convolutional neural network based on the MobileNet model to detect the saliency object region and obtain the mask of the wildlife. Then, according to the importance of the wavelet coefficients, set partitioning in hierarchical trees (SPIHT) lossless coding is used to transmit the saliency region, which ensures the transmission accuracy of the wildlife region. After that, the remaining background region is transmitted via the embedded zerotree wavelet (EZW) lossy coding strategy to improve the transmission efficiency. To verify the efficiency of our algorithm, a demonstration of the transmission of field-captured wildlife images is presented. Further, comparison with the existing EZW and discrete cosine transform (DCT) algorithms shows that the proposed algorithm improves the peak signal to noise ratio (PSNR) and structural similarity index (SSIM) by 21.11%, 14.72% and 9.47%, 6.25%, respectively.

1. Introduction

Wildlife monitoring is crucial for the balance and stability of the whole ecosystem [1,2]. Images and videos of wildlife are the main materials collected in the monitoring process. However, processing those materials effectively and in real time is a challenge. Conventional wildlife monitoring methods include crewed field surveys, GPS (Global Positioning System) collars [3], infrared cameras [4] and satellite remote sensing [5]. However, these methods have their own limitations, such as limited monitoring range, data acquisition lag and low resolution. Wireless multimedia sensor networks (WMSNs) [6] are applied in wildlife image collection because they offer better deployment ability and accuracy.

WMSNs have low processing capability, restricted power consumption and narrow transmission bandwidth, whereas the collected wildlife monitoring images have high resolution and a large data volume, which poses a challenge for the WMSN transmission process [7]. To transmit images through a resource-constrained WMSN, image compression coding is used to reduce the transmission workload. In this field, image compression algorithms such as the discrete cosine transform (DCT) [8], singular value decomposition (SVD) [9] and fast Fourier transform (FFT) [10,11] are capable of achieving high-efficiency compression of image samples. However, these algorithms are generally applied to the encoding and decoding of entire images, which limits their capability to transfer important regions in real time. In addition, the transmission result is susceptible to external interference, such as transmission interruption and signal disturbance.

To solve the problem of unsuccessful transmission effectively, the image progressive transmission method [12,13,14] is utilized, in which the transmission process adopts the strategy that important regions are transmitted prior to other regions. However, the progressive transmission methods [15,16], which are based on the general displacement and staggered plane lifting method [17], cannot satisfy the practical demand for quick use of the reconstructed image information to distinguish the species of wildlife.

Saliency object detection can provide the mask of the wildlife for the progressive transmission process, which is used to separate the object from the background information. Conventional saliency detection algorithms, such as human-computer interaction [18], the visual attention model [19] and the multilevel deep pyramid model [20], suffer from high algorithmic complexity.

This paper proposes a progressive transmission method for wildlife images based on saliency object detection. First, regions containing saliency objects (wildlife) are detected in the original image by a convolutional neural network, and the mask of the saliency object region is generated. After the mask is generated, set partitioning in hierarchical trees (SPIHT) [21] lossless coding transmission is performed preferentially on the saliency region to ensure the transmission accuracy of the wildlife region. Then, embedded zerotree wavelet (EZW) [22] lossy coding transmission is performed on the background region to improve the transmission efficiency while preserving the image reconstruction quality.

2. Wildlife Monitoring System

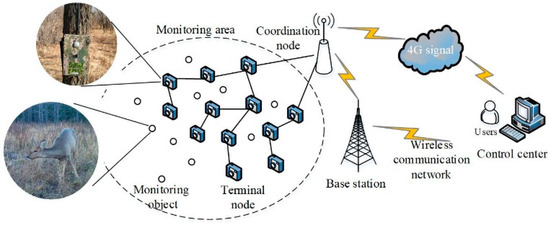

WMSNs are widely used in wildlife monitoring systems to capture wildlife images with industrial-grade cameras. Such a system consists of WMSN terminal nodes, coordination nodes, gateway nodes and a data storage center (back-end server). The wildlife monitoring system offers remote, real-time, all-weather and friendly monitoring, and its schematic diagram with detailed configuration is shown in Figure 1. The monitoring node devices developed by our laboratory are based on ZigBee network protocols, and their detailed parameters are shown in Table 1.

Figure 1. Wildlife monitoring system.

Table 1. Parameters of wireless multimedia sensor network (WMSN) node.

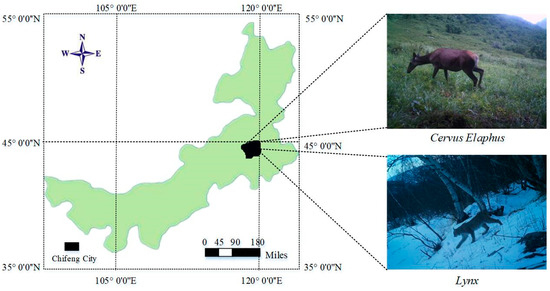

The WMSN system for wildlife monitoring was deployed in the Saihan Ula National Nature Reserve in Inner Mongolia, China. The experiment site has an average altitude of 1000 m above sea level and a temperate, semi-humid, rainy climate. Wildlife species recorded at the experimental site include Cervus elaphus, Lynx, Capreolus pygargus, Sus scrofa and Naemorhedus goral. Cervus elaphus and Lynx are national second-class protected species (shown in Figure 2). In this experiment, over 1600 images of more than 12 wildlife species were acquired, with a total data volume of 2.4 GB.

Figure 2. Wildlife monitoring images in Saihan Ula Nature Reserve.

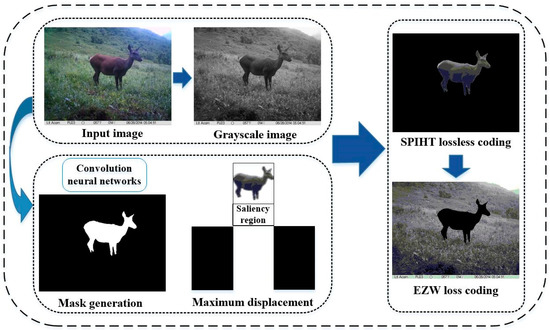

3. Hierarchical Coding Progressive Transmission Method

A novel hierarchical coding progressive transmission method is proposed to process wildlife monitoring images with high resolution and a large data volume, as shown in Figure 3. The region of interest (ROI) [23,24] that contains the wildlife is the major object of study, while the background regions only provide comparatively irrelevant reference information. The steps of the algorithm are as follows:

Figure 3. Structure of wildlife image progressive transmission process.

- (1) Saliency region extraction based on convolutional neural networks (CNN) [25], which are used to generate the mask image.

- (2) The maximum displacement method is applied to the saliency region; in other words, the wildlife region is given the highest priority in compression transmission based on SPIHT coding.

- (3) To guarantee the transmission efficiency, the EZW coding algorithm is used to transmit the background region once the image information of the saliency region has been received.

3.1. Mask Image Generation

Redundant background regions may consume transmission bandwidth unnecessarily, as background regions usually account for a large percentage of the area of wildlife monitoring images. Therefore, separating the wildlife region from the background is the most significant procedure in our experiment. However, the high resolution and complex content of wildlife images make saliency object detection and mask image generation more difficult.

In our algorithm, a CNN is applied in the saliency object detection step, as it has been validated to detect the most visually distinctive object regions in the monitoring image. We selected 700 images as the training set, 100 images as the validation set and 200 images as the testing set, all with a size of 256 × 256 pixels. The saliency object region is annotated at the pixel level for model training, with gray values 0 and 1 representing the background region and the saliency object region, respectively.

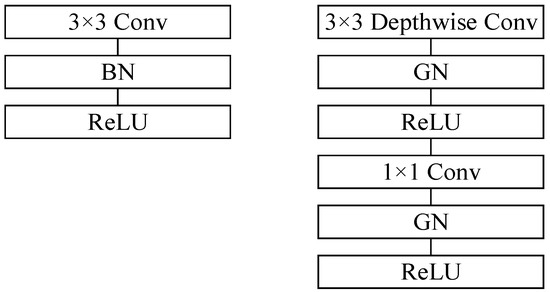

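A novel network architecture based on the MobileNet model [26] is used in this paper. In the conventional MobileNet model shown in Figure 4, batch normalization (BN) depends on the batch size, and its error increases rapidly as the batch size becomes smaller. To remove the impact of batch size on the model, the group normalization method [27] is used in this paper, shown as

$$\hat{x}_i = \frac{1}{\sigma_i}\left(x_i - \mu_i\right), \qquad \mu_i = \frac{1}{m}\sum_{k \in S_i} x_k, \qquad \sigma_i = \sqrt{\frac{1}{m}\sum_{k \in S_i}\left(x_k - \mu_i\right)^2 + \epsilon}$$

where $x_i$ is a feature value, $S_i$ is the set of $m$ feature values that lie in the same sample and the same channel group as $x_i$, and $\epsilon$ is a small constant.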

Figure 4. MobileNet model proposed in our algorithm. Left: Standard convolutional layer with batch normalization and ReLU (Rectified Linear Units). Right: Proposed convolutional layer with group normalization and ReLU.
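As a minimal sketch of the group normalization idea from [27], the following computes the mean and variance over each group of channels within a single sample, so the statistics do not depend on the batch size. The function name `group_norm`, the list-of-channels layout and the omission of the learnable scale and shift are simplifying assumptions for illustration, not the authors' implementation.

```python
import math


def group_norm(features, num_groups, eps=1e-5):
    """Normalize one sample's feature maps group by group.

    features: list of channels, each channel a flat list of activations
              (hypothetical layout chosen for this sketch).
    num_groups: number of channel groups; must divide the channel count.
    """
    channels = len(features)
    if channels % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    size = channels // num_groups

    normalized = []
    for g in range(num_groups):
        group = features[g * size:(g + 1) * size]
        # Statistics are shared by every activation in this channel group.
        values = [v for channel in group for v in channel]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        normalized.extend([[(v - mean) * scale for v in channel]
                           for channel in group])
    return normalized
```

Because no batch dimension enters the statistics, the output for one image is the same whether it is processed alone or in a large batch.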

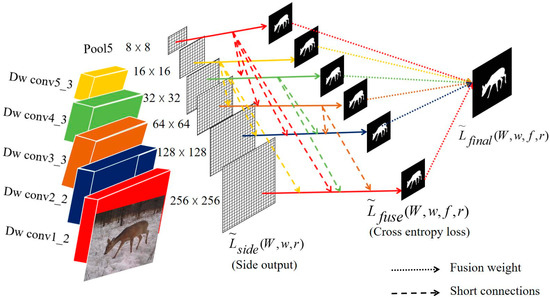

To obtain more detail and texture features, skip-layer short connections [28], which fuse the multi-level features extracted at different scales, are introduced into the network architecture, as shown in Figure 5.

Figure 5. Skip layer short connections in network architecture.

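In the network architecture, the side loss function and fusion loss function are calculated as

$$L_{\text{side}} = \sum_{m=1}^{M} \alpha_m\, l_{\text{side}}^{(m)}, \qquad L_{\text{fuse}} = \sigma\left(Z, \hat{Z}_{\text{fuse}}\right)$$

where $\alpha_m$ is the weight of the $m$th side loss among the $M$ side outputs and $l_{\text{side}}^{(m)}$ denotes the cross-entropy loss function for the $m$th side output, which is adopted from [28]. $\sigma(\cdot,\cdot)$ denotes the distance between the ground truth map and the fused prediction $\hat{Z}_{\text{fuse}}$, and $Z$ is the corresponding continuous ground truth saliency map.

Thus, we can get the final loss function

$$L_{\text{final}} = L_{\text{side}} + L_{\text{fuse}}$$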

Hyper-parameters used in this section are learning rate (1e-7), weight decay (0.0003), momentum (0.9) and loss weight for each side output (1). Our fusion layer weights are initialized at 0.2, and the group size is set to 32.
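To make the structure of the loss concrete, here is a hedged sketch that sums weighted cross-entropy losses over the side outputs and adds a fusion loss on their weighted combination. The function names, the use of plain binary cross-entropy as the distance, and the fusion of probabilities rather than raw activations are assumptions made for brevity. The default weights (1 per side output, 0.2 per fusion weight) are taken from the hyper-parameters above.

```python
import math


def cross_entropy(pred, target, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and labels."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return -total / len(pred)


def side_loss(side_preds, target, side_weights):
    """Weighted sum of the per-side-output losses."""
    return sum(w * cross_entropy(p, target)
               for w, p in zip(side_weights, side_preds))


def fusion_loss(side_preds, target, fusion_weights):
    """Loss of the weighted combination of all side outputs."""
    fused = [sum(f * p[k] for f, p in zip(fusion_weights, side_preds))
             for k in range(len(target))]
    return cross_entropy(fused, target)


def final_loss(side_preds, target, side_weights=None, fusion_weights=None):
    """Side loss plus fusion loss, with defaults from the text above."""
    count = len(side_preds)
    side_weights = side_weights or [1.0] * count
    # With 0.2 per weight the fused map stays in [0, 1] for up to 5 outputs.
    fusion_weights = fusion_weights or [0.2] * count
    return (side_loss(side_preds, target, side_weights)
            + fusion_loss(side_preds, target, fusion_weights))
```

Each prediction is a flat list of per-pixel probabilities here; a real training setup would compute the same quantities on tensors inside the deep learning framework.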

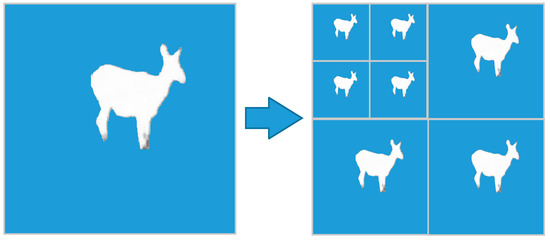

For saliency object detection and extraction, the coefficients in the saliency and background regions are set to 1 and 0, respectively, to obtain the binary mask image, as shown in Figure 6.

Figure 6. Saliency object detection and extraction. (a) Original image; (b) Saliency object region; (c) Ground truth.

3.2. Progressive Transmission Strategy

After generating the mask of the wildlife region, we need to determine which wavelet coefficients belong to the saliency region and which to the background region at the different levels of wavelet decomposition. In this paper, the Mallat wavelet decomposition algorithm is used to obtain the wavelet coefficients, which are calculated by

$$c_{j+1}[k] = \sum_{n} h[n-2k]\, c_j[n], \qquad d_{j+1}[k] = \sum_{n} g[n-2k]\, c_j[n]$$

where $c_j$ and $d_j$ are the approximation and detail coefficients at level $j$, and $h$ and $g$ are the low-pass and high-pass filters, respectively, whose length is set to 4.
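One level of Mallat decomposition can be sketched as filtering followed by downsampling by two. The text only states that the filters have length 4, so the Daubechies-2 (db2) coefficients, the periodic boundary extension and the function names below are assumptions chosen for illustration.

```python
import math

_SQ3 = math.sqrt(3.0)
_NORM = 4.0 * math.sqrt(2.0)
# Length-4 low-pass filter (db2, assumed) and its quadrature mirror high-pass.
H = [(1 + _SQ3) / _NORM, (3 + _SQ3) / _NORM,
     (3 - _SQ3) / _NORM, (1 - _SQ3) / _NORM]
G = [H[3], -H[2], H[1], -H[0]]


def analysis_1d(signal):
    """One decomposition level: returns (approximation, detail)."""
    n = len(signal)
    if n % 2 != 0:
        raise ValueError("signal length must be even")
    approx, detail = [], []
    for k in range(n // 2):
        # Filter and keep every second sample (periodic extension).
        approx.append(sum(H[m] * signal[(2 * k + m) % n] for m in range(4)))
        detail.append(sum(G[m] * signal[(2 * k + m) % n] for m in range(4)))
    return approx, detail


def _filter_columns(block):
    """Apply analysis_1d down every column of a row-major block."""
    cols = [analysis_1d(list(col)) for col in zip(*block)]
    low = [list(row) for row in zip(*(c[0] for c in cols))]
    high = [list(row) for row in zip(*(c[1] for c in cols))]
    return low, high


def analysis_2d(image):
    """One 2D level: rows first, then columns.

    Returns four subbands named by (row filter, column filter):
    low/low, low/high, high/low, high/high.
    """
    row_low, row_high = zip(*(analysis_1d(row) for row in image))
    low_low, low_high = _filter_columns(row_low)
    high_low, high_high = _filter_columns(row_high)
    return low_low, low_high, high_low, high_high
```

Applying `analysis_2d` again to the low/low subband gives the next level, which is how a three-level decomposition would be built up.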

To ensure the integrity of the reconstructed image edge, edge detection based on the Canny operator is used in this paper. It convolves a Gaussian smoothing filter with the above saliency detection result to obtain an approximation of the optimal edge operator:

$$S(x,y) = G(x,y) * f(x,y)$$

where $S(x,y)$ denotes the convolution result, $G(x,y)$ is the Gaussian smoothing filter, $*$ refers to the convolution operation and $(x,y)$ is the position of the pixel in the saliency result $f$.

Then the partial derivatives are obtained by calculating the first-order finite differences of the filter result.

$$E_x(x,y) \approx \frac{S(x+1,y) - S(x,y) + S(x+1,y+1) - S(x,y+1)}{2}, \qquad E_y(x,y) \approx \frac{S(x,y+1) - S(x,y) + S(x+1,y+1) - S(x+1,y)}{2}$$

Here, $E_x$ represents the partial derivative of the image gradient in the $x$ direction and $E_y$ is the partial derivative in the $y$ direction. The pixel amplitude matrix and gradient direction matrix are then calculated as shown in Equations (10) and (11).

$$M(x,y) = \sqrt{E_x(x,y)^2 + E_y(x,y)^2} \tag{10}$$

$$\theta(x,y) = \arctan\frac{E_y(x,y)}{E_x(x,y)} \tag{11}$$

Finally, non-maximum suppression is performed by searching for the amplitude maximum along the gradient direction. The pixels with maximum amplitude are considered edge pixels. To obtain closed edges, two appropriate thresholds (a high threshold and a low threshold) are selected, and the non-edge points that do not satisfy the threshold condition are removed. Then the connected domain is expanded to get the final edge detection result.
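The gradient and thresholding steps of the Canny procedure can be sketched as follows. This is an illustrative sketch that assumes the common 2 × 2 first-order differences and uses `atan2` instead of a plain arctangent to avoid division by zero. The function names and the 0/1/2 labels are hypothetical, and Gaussian smoothing, non-maximum suppression and edge linking are left out.

```python
import math


def gradient(smoothed):
    """Amplitude and direction from 2 x 2 first-order finite differences.

    smoothed: row-major list of rows (the Gaussian-filtered image).
    Returns two (rows-1) x (cols-1) matrices: amplitude and direction.
    """
    rows, cols = len(smoothed), len(smoothed[0])
    amplitude, direction = [], []
    for y in range(rows - 1):
        amp_row, dir_row = [], []
        for x in range(cols - 1):
            a, b = smoothed[y][x], smoothed[y][x + 1]
            c, d = smoothed[y + 1][x], smoothed[y + 1][x + 1]
            dx = (b - a + d - c) / 2.0  # horizontal difference
            dy = (c - a + d - b) / 2.0  # vertical difference
            amp_row.append(math.hypot(dx, dy))
            dir_row.append(math.atan2(dy, dx))
        amplitude.append(amp_row)
        direction.append(dir_row)
    return amplitude, direction


def double_threshold(amplitude, low, high):
    """Label pixels: 2 = strong edge, 1 = weak candidate, 0 = rejected."""
    return [[2 if m >= high else 1 if m >= low else 0 for m in row]
            for row in amplitude]
```

In a full detector, weak candidates would be kept only when they connect to a strong edge, which is what closes the contour.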

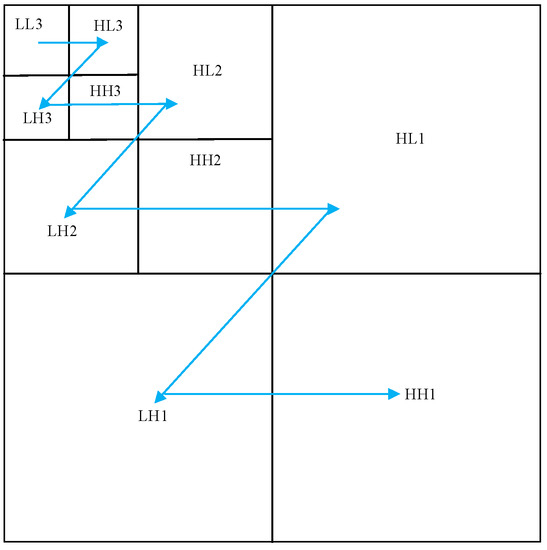

During wavelet decomposition, the mask image is updated simultaneously, as shown in Figure 7, to specify the classification of wavelet coefficients at the different levels.

Figure 7. Mask update with wavelet decomposition. Left: The mask of saliency object region. Right: Mask update of three level wavelet decomposition.
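One simple way to keep the mask aligned with the coefficients is to halve its resolution at every decomposition level. The sketch below marks a coefficient as belonging to the saliency region if any pixel in the corresponding 2 × 2 block does. This rule and the function names are assumptions for illustration; the text does not specify the exact update rule, and a longer filter support may call for a more conservative mask.

```python
def downsample_mask(mask):
    """Halve a binary mask; a cell is 1 if any pixel in its 2 x 2 block is 1."""
    rows, cols = len(mask), len(mask[0])
    return [[int(mask[r][c] or mask[r][c + 1]
                 or mask[r + 1][c] or mask[r + 1][c + 1])
             for c in range(0, cols, 2)]
            for r in range(0, rows, 2)]


def mask_pyramid(mask, levels):
    """Return the masks for decomposition levels 1..levels."""
    pyramid = []
    for _ in range(levels):
        mask = downsample_mask(mask)
        pyramid.append(mask)
    return pyramid
```

The mask at a given level would be applied to each subband of that level, since the subbands share the same spatial layout.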

For the preferential transmission of the important region, maximum displacement transmission is applied in this section. Researchers are most interested in the wildlife target, while the background region supplements the wildlife in the monitoring images. In this paper, all wavelet coefficients in the saliency object region are given priority over the wavelet coefficients in the background region. The background information is transmitted after the saliency object region information is completed, which helps to identify the wildlife species in the initial stage of transmission. The maximum displacement transmission strategy first calculates the bit plane layer according to the maximum value of the wavelet coefficients in the background region.

$$s = \left\lfloor \log_2 C_{\max} \right\rfloor + 1$$

where $\lfloor\cdot\rfloor$ denotes the integer operation and $C_{\max}$ represents the maximum wavelet coefficient in the background region.

When the value of the bit plane layer $s$ is determined, the wavelet coefficients in the saliency region are multiplied by $2^s$ to ensure that all of them are greater than the maximum value in the background region. In the decoding process, we only need to judge whether or not the wavelet coefficients are greater than $2^s$. If a coefficient is larger than $2^s$, we can determine that it belongs to the saliency region. When the bit plane layer is reduced by $s$, the image wavelet coefficients can be restored to obtain the reconstructed image.
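The maximum displacement idea can be illustrated on integer coefficients. In this hedged sketch the shift is the bit length of the largest background magnitude, which is one choice that guarantees every non-zero shifted saliency coefficient exceeds all background coefficients. The function names, the integer-valued coefficients and the `>=` comparison at the decoder are assumptions of this example.

```python
def scaling_shift(background_coeffs):
    """Number of bit planes needed to clear the largest background magnitude."""
    c_max = max((abs(c) for c in background_coeffs), default=0)
    return c_max.bit_length()  # equals floor(log2(c_max)) + 1 for c_max >= 1


def encode_maxshift(coeffs, mask):
    """Scale saliency-region coefficients (mask True) up by 2**s."""
    s = scaling_shift([c for c, m in zip(coeffs, mask) if not m])
    shifted = [c * (1 << s) if m else c for c, m in zip(coeffs, mask)]
    return shifted, s


def decode_maxshift(shifted, s):
    """Recover the region membership and the original coefficients."""
    limit = 1 << s
    # No mask is transmitted: magnitude alone identifies the region.
    mask = [abs(c) >= limit for c in shifted]
    coeffs = [c // limit if m else c for c, m in zip(shifted, mask)]
    return coeffs, mask
```

A saliency coefficient that is exactly zero cannot be told apart from background after decoding, but its value is still restored correctly, so the reconstruction is unaffected.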

3.3. Saliency Object Region Transmission

Wildlife protection and wildlife research, such as species identification and individual identification, both require high-resolution, high-quality wildlife monitoring images, especially of the wildlife itself. Therefore, after the bit plane layer of the saliency region is lifted, we use the SPIHT algorithm to achieve lossless compression transmission of the saliency region coefficients, which guarantees the transmission quality of the important region.

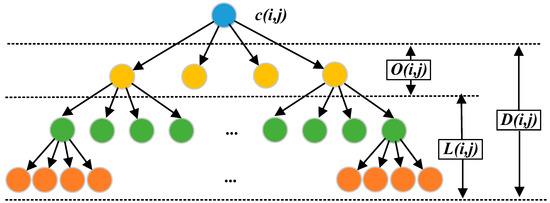

The spatial direction tree structure shown in Figure 8 is used in this section to reorganize the image wavelet coefficients. In the spatial direction tree structure, each node is represented by its coordinates, which are denoted as $(i,j)$, and the coefficient at that node is denoted as $c_{i,j}$.

Figure 8. Three-level wavelet direction tree partition set.

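Let $D(i,j)$ denote the coordinate set of all descendant nodes of node $(i,j)$, from which the spatial direction tree is obtained. $O(i,j)$ represents the coordinate set of the direct descendant nodes of node $(i,j)$, and $L(i,j) = D(i,j) - O(i,j)$.

The function $S_T(X)$ is used to evaluate the importance of a coefficient set $X$, and the set is important when $S_T(X) = 1$.

$$S_T(X) = \begin{cases} 1, & \max_{(i,j) \in X} |c_{i,j}| \geq T \\ 0, & \text{otherwise} \end{cases}$$

where $T$ is the current threshold and the initial threshold is selected as $T_0 = 2^{\lfloor \log_2 \max_{(i,j)} |c_{i,j}| \rfloor}$.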
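The sets and the importance test used by SPIHT can be sketched in a few lines. This sketch assumes the standard parent-child rule in which node (i, j) has children at (2i, 2j), (2i, 2j+1), (2i+1, 2j) and (2i+1, 2j+1). The special rule for roots in the coarsest band is omitted, and all function names are hypothetical.

```python
import math


def offspring(i, j, size):
    """Direct descendants of node (i, j) inside a size x size coefficient grid."""
    if (i, j) == (0, 0):
        return []  # coarsest-band roots follow a special rule, omitted here
    children = [(2 * i, 2 * j), (2 * i, 2 * j + 1),
                (2 * i + 1, 2 * j), (2 * i + 1, 2 * j + 1)]
    return [(r, c) for r, c in children if r < size and c < size]


def descendants(i, j, size):
    """All descendants of node (i, j), gathered level by level."""
    result = []
    frontier = offspring(i, j, size)
    while frontier:
        result.extend(frontier)
        frontier = [kid for node in frontier for kid in offspring(*node, size)]
    return result


def is_significant(coeffs, coords, threshold):
    """1 if any coefficient in the set reaches the threshold, else 0."""
    return int(any(abs(coeffs[r][c]) >= threshold for r, c in coords))


def initial_threshold(coeffs):
    """Largest power of two not exceeding the largest magnitude (non-zero)."""
    c_max = max(abs(v) for row in coeffs for v in row)
    return 2 ** int(math.floor(math.log2(c_max)))
```

The remaining set of a node is then simply its descendants minus its offspring, which is what the set-partitioning step splits further.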

Considering the importance of wavelet coefficients according to the importance function in different frequency bands, the image wavelet coefficients are scanned in zigzag order, as shown in Figure 9. Coefficients with large amplitudes are scanned preferentially, which preserves the main information and improves the quality of the reconstructed image.

Figure 9. The coefficient scan order of zigzag.

In the wavelet coefficient scanning process, three lists are used to store the relevant information of the wavelet coefficients according to their importance: LIP (list of insignificant pixels), LSP (list of significant pixels) and LIS (list of insignificant sets).

The SPIHT rules set in this section include:

- (1) If the coefficient at a coordinate in the LIP is greater than the current threshold T, the coordinate is stored in the LSP list.

- (2) All elements in the descendant set $D(i,j)$ are compared with the threshold T. A single symbol is used to represent the coefficient set if it contains no important element. Otherwise, $D(i,j)$ is divided into the direct-descendant set $O(i,j)$ and the remaining set $L(i,j)$.

- (3) If there are important elements in $O(i,j)$, they are stored in the LSP.

- (4) A single symbol is used to represent the coefficient set $L(i,j)$ when it contains no important element. Otherwise, $L(i,j)$ is split into four parts.

- (5) Steps (2)–(4) are repeated for each newly generated spatial direction tree until all the important elements are stored in the LSP.

Finally, the wavelet coefficients are refined and coded by successive approximation quantization. The wavelet coefficients are coded repeatedly with the updated threshold until all wavelet coefficients in the saliency region are coded.
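Successive approximation quantization can be illustrated without the tree structure. In this simplified sketch each pass halves the threshold, newly important coefficients are located, and previously found ones are refined by one more bit. The flat coefficient list, the midpoint reconstruction and the function name are assumptions of this example.

```python
def successive_approximation(coeffs, passes):
    """Reconstruct coefficients after a given number of threshold passes."""
    c_max = max(abs(c) for c in coeffs)
    threshold = 1
    while threshold * 2 <= c_max:
        threshold *= 2  # largest power of two not exceeding c_max

    lower = {}  # index -> known lower bound of the magnitude
    last = threshold
    for _ in range(passes):
        for idx, c in enumerate(coeffs):
            if idx in lower:
                # Refinement: add one more bit of precision.
                if abs(c) - lower[idx] >= threshold:
                    lower[idx] += threshold
            elif abs(c) >= threshold:
                # Sorting: the coefficient becomes important at this threshold.
                lower[idx] = threshold
        last = threshold
        threshold /= 2

    recon = [0.0] * len(coeffs)
    for idx, low in lower.items():
        sign = 1 if coeffs[idx] > 0 else -1
        recon[idx] = sign * (low + last / 2)  # midpoint of remaining interval
    return recon
```

Stopping after any pass yields a usable approximation, which is the property that makes the bit stream progressive; for integer coefficients, continuing down to a threshold of 1 pins every magnitude to its exact integer lower bound.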

3.4. Background Transmission

To improve the transmission efficiency, the lossy compression EZW algorithm is implemented to encode background information, which has some similarities with the SPIHT coding algorithm.

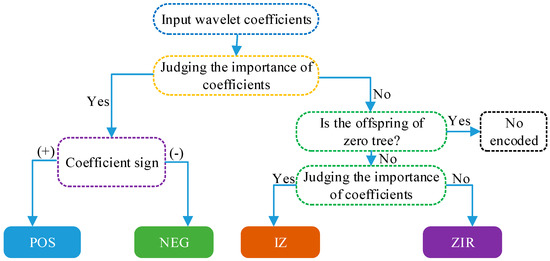

This section uses the zerotree structure, defined as a tree in which the root node and all of its descendant nodes are unimportant coefficients. A single encoding symbol can represent all coefficients in a zerotree, which greatly improves coding efficiency. In the EZW encoding process, the wavelet coefficients are classified into four symbols by comparison with the threshold T, as shown in Figure 10.

Figure 10. The encoding process of Embedded Zerotree Wavelets (EZW) algorithm.
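The four-way classification can be sketched as a small decision function. The symbol names `POS`, `NEG`, `ZTR` and `IZ` (positive, negative, zerotree root, isolated zero) follow the usual EZW convention and are not spelled out in the text, so they are labeled here as an assumption, as is the function name.

```python
def ezw_symbol(coefficient, descendant_values, threshold):
    """Classify one coefficient in the dominant pass of EZW.

    descendant_values: magnitudes or signed values of all descendants
                       of this node in the wavelet tree.
    """
    if abs(coefficient) >= threshold:
        return "POS" if coefficient > 0 else "NEG"
    if all(abs(d) < threshold for d in descendant_values):
        # The whole subtree is unimportant: one symbol covers it.
        return "ZTR"
    # The node is unimportant but some descendant is not.
    return "IZ"
```

Once a node is coded as a zerotree root, none of its descendants needs to be visited at the current threshold, which is where the coding gain comes from.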

When the wavelet coefficient classification is finished, coefficient scanning and refinement coding are carried out as in the SPIHT coding algorithm of the previous section until all coefficients in the background region are coded.

4. Comparison and Discussion

To verify the adaptability and effectiveness of the proposed algorithm, transmission analysis of field-captured wildlife monitoring images is presented. The result is evaluated by several evaluation criteria and it is compared to other conventional algorithms for image transmission.

4.1. Evaluation Criteria

Both peak signal to noise ratio (PSNR) and structural similarity index (SSIM) [29] are utilized as objective criteria to evaluate the quality of image reconstruction.

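PSNR is the ratio of the maximum possible signal power to the power of the destructive noise, which affects representation accuracy; it is based on the mean square error (MSE) [30].

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[\hat{I}(i,j) - I(i,j)\right]^2, \qquad \mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}}$$

where $MN$ is the total number of pixels in the sample image, $\hat{I}$ is the reconstructed image, $I$ is the original image and 255 is the peak value of an 8-bit image.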

The SSIM is another measure of the similarity between the reconstructed and original images. It calculates the degree of image distortion according to the change in image structure information.

$$\mathrm{SSIM}(x,y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $\mu_x$ and $\mu_y$ are the mean values of the luminance in the original and reconstructed images, respectively, $\sigma_x$ and $\sigma_y$ are the standard deviations of the luminance, and $\sigma_{xy}$ is their covariance. The constants $C_1$ and $C_2$ are used to suppress instability in the structural similarity comparison.
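Both criteria are straightforward to compute. The sketch below works on flattened pixel lists and evaluates SSIM once over the whole image rather than over sliding windows. The peak value of 255 and the constants $(0.01 \cdot 255)^2$ and $(0.03 \cdot 255)^2$ are the commonly used defaults and are assumptions here, since the text does not state the values used.

```python
import math


def psnr(original, reconstructed, peak=255.0):
    """Peak signal to noise ratio in dB for two flattened images."""
    mse = sum((o - r) ** 2
              for o, r in zip(original, reconstructed)) / len(original)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def ssim(original, reconstructed, peak=255.0):
    """Single-window structural similarity for two flattened images."""
    n = len(original)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_x = sum(original) / n
    mu_y = sum(reconstructed) / n
    var_x = sum((v - mu_x) ** 2 for v in original) / n
    var_y = sum((v - mu_y) ** 2 for v in reconstructed) / n
    cov = sum((a - mu_x) * (b - mu_y)
              for a, b in zip(original, reconstructed)) / n
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
```

Library implementations usually average SSIM over local windows, so their values can differ from this global version.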

4.2. Experiment Result and Analysis

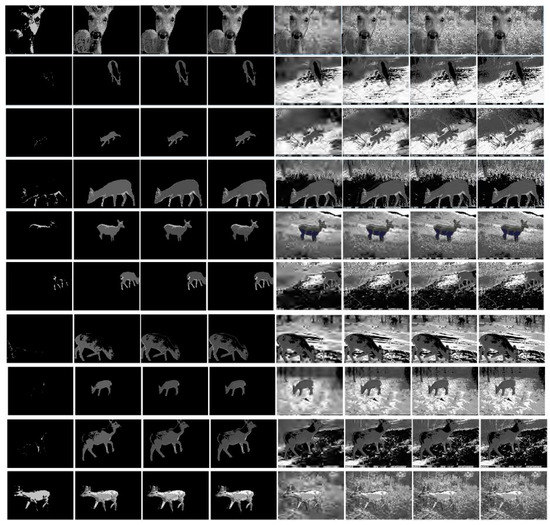

We applied our algorithm to field-captured wildlife monitoring images with high resolution, high noise interference and complex backgrounds, selected from our own image dataset [31]. Experimental results are presented from the left to the right column in Figure 11 as a time progression by setting the bits per pixel (bpp) to 0.1, 0.4, 0.7 and 1. All experiments were performed using MATLAB (2014a) on a workstation with an Intel(R) Core(TM) i5-4570 and 4 GB RAM.

In the proposed method, the saliency region is first transmitted in a lossless manner and then the background region in a lossy manner [32], as shown in the first four and last four columns of Figure 11, respectively. During transmission, we can quickly use the reconstructed image information to recognize the wildlife species, as in the progressive transmission effect in columns 2–3. We can also actively choose to terminate transmission after obtaining satisfactory information.

Figure 11. The flow chart of progressive transmission effect for wildlife images. The first 4 columns are the progressive transmission progress of saliency object region, and the last 4 columns are the progressive transmission progress of background region.

In the process of image transmission, the background region adopts lossy compression transmission to improve the efficiency of image transmission. We selected the PSNR and SSIM of the full reconstructed image to evaluate the proposed algorithm in this paper. The experimental results are shown in Table 2.

Table 2. Comparison of the reconstruction quality of the saliency region and the full image.

According to the transmission results in Table 2, the average PSNR and SSIM are 46.1596 dB and 0.9876 in the saliency region (the 4th column in Figure 11) and 39.0365 dB and 0.9014 in the full image (the 8th column in Figure 11). Therefore, the proposed algorithm is capable of ensuring the reconstruction quality of the saliency object region, and the full image reconstruction quality can meet the requirements of forest operators.

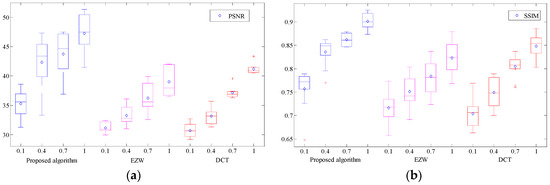

To verify the transmission effect, the EZW and DCT algorithms are compared with our proposed algorithm in this section. As shown in Figure 12, image quality improves as the bpp increases. The reconstructed image quality at each bpp is better than that of EZW and DCT, which indicates that the scheme of preferentially transmitting the saliency region is feasible. We can identify the wildlife species in the initial stage of transmission, which provides data support for subsequent research. In addition, the average PSNR and SSIM of the images reconstructed by our algorithm are 47.2515 and 0.9014 (bpp set to 1.0), which are increased by 21.11%, 14.72% and 9.47%, 6.25%, respectively, when compared with the EZW and DCT algorithms.

Figure 12. Comparison result of different algorithms. (a) Average peak signal to noise ratio (PSNR) experiment result. (b) Average structural similarity index (SSIM) experiment result.

The average running time comparison is shown in Table 3. The EZW algorithm requires the least calculation time, but its transmission quality is not ideal. Lossless coding can guarantee the quality of the transmitted image, but its running time is relatively high.

Table 3. Mean time comparison of transmission.

5. Conclusions

In this paper, we proposed a novel hierarchical coding progressive transmission method for wildlife images, which achieves progressive transmission of the saliency region and the background separately. We first use a convolutional neural network based on the MobileNet model to detect the saliency object region in a wildlife image with a complex background. Once the mask image of the wildlife region is obtained, a progressive transmission strategy using the maximum displacement method transfers the wavelet coefficients of the saliency region preferentially; lossless coding transmission is then performed on the saliency region and lossy coding on the background region. To demonstrate the efficiency and validity of the proposed method, images from the field-captured wildlife monitoring database are processed. Comparison results show that the proposed algorithm performs better than the existing classical algorithms, namely EZW and DCT. Specifically, the average PSNR and SSIM are increased, respectively, by 21.11%, 14.72% and 9.47%, 6.25%.

Author Contributions

W.F. and J.Z. conceived the experiments; W.F., Y.W. and H.Y. performed the experiments and simulations; W.F. analyzed the data; W.F. wrote the paper; J.Z. and C.H. revised the paper for intellectual content.

Funding

This study was financially supported by National Natural Science Foundation of China (Grant No. 31670553) and Fundamental Research Funds for the Central Universities (Grant No. 2016ZCQ08).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hori, B.; Petrell, R.J.; Fernlund, G.; Trites, A. Mechanical reliability of devices subdermally implanted into the young of long-lived and endangered wildlife. J. Mater. Eng. Perform. 2012, 21, 1924–1931.

2. Gor, M.; Vora, J.; Tanwar, S.; Tyagi, S.; Kumar, N.; Obaidat, M.S.; Sadoun, B. GATA: GPS-Arduino based Tracking and Alarm system for protection of wildlife animals. In Proceedings of the 6th International Conference on Computer, Information and Telecommunication Systems, Dalian, China, 21–23 July 2017; pp. 166–170.

3. Pérez, J.M.L.; de la Varga, M.E.A.; García, J.J.; Lacasa, V.R.G. Monitoring lidia cattle with GPS-GPRS technology: A study on grazing behaviour and spatial distribution. Vet. Mex. 2017, 4, 1–17.

4. Fernández-Caballero, A.; López, M.; Serrano-Cuerda, J. Thermal-infrared pedestrian ROI extraction through thermal and motion information fusion. Sensors 2014, 14, 6666–6676.

5. Handcock, R.N.; Swain, D.L.; Bishop-Hurley, G.J.; Patison, K.P.; Wark, T.; Valencia, P.; Corke, P.; O’Neill, C.J. Monitoring Animal Behaviour and Environmental Interactions Using Wireless Sensor Networks, GPS Collars and Satellite Remote Sensing. Sensors 2009, 9, 3586–3603.

6. Wang, H.; Fapojuwo, A.O.; Davies, R.J. A wireless sensor network for feedlot animal health monitoring. IEEE Sens. J. 2016, 16, 6433–6446.

7. Li, X.H. Energy efficient wireless sensor networks with transmission diversity. Electron. Lett. 2003, 39, 1753–1755.

8. Poornachandra, S.; Ravichandran, V.; Kumaravel, N. Mapping of discrete cosine transform (DCT) and discrete sine transform (DST) based on symmetries. IETE J. Res. 2003, 49, 35–42.

9. Kumar, R.; Kumar, A.; Singh, G.K. Electrocardiogram signal compression based on singular value decomposition (SVD) and adaptive scanning wavelet difference reduction (ASWDR) technique. AEU Int. J. Electron. Commun. 2015, 69, 1810–1822.

10. Kong, W.B.; Zhou, H.X.; Zheng, K.L.; Mu, X.; Hong, W. FFT-based method with near-matrix compression. IEEE Trans. Antennas Propag. 2017, 65, 5975–5983.

11. Cheepurupalli, V.; Tubbs, S.; Boykin, K.; Naheed, N. Comparison of SVD and FFT in image compression. In Proceedings of the International Conference on Computational Science and Computational Intelligence, CSCI 2015, Las Vegas, NV, USA, 7–9 December 2015; pp. 526–530.

12. Hung, K.L.; Chang, C.C.; Lin, I.C. Lossless compression-based progressive image transmission scheme. Imaging Sci. J. 2004, 52, 212–224.

13. Du, Y.G.; Li, Z.Y.; Stojmenovic, M.; Qu, W.Y.; Qi, H. A Low Overhead Progressive Transmission for Visual Descriptor Based on Image Saliency. J. Mult. Valued Logic Soft Comput. 2015, 25, 125–145.

14. Baeza, I.; Verdoy, J.A.; Villanueva, R.J.; Villanueva-Oller, J. SVD lossy adaptive encoding of 3D digital images for ROI progressive transmission. Image Vision Comput. 2010, 28, 449–457.

15. Rubino, E.M.; Centelles, D.; Sales, J.J.; Marti, V.; Marin, R.; Sanz, P.J.; Alvares, A.J. Underwater radio frequency image sensor using progressive image compression and region of interest. J. Braz. Soc. Mech. Sci. Eng. 2017, 39, 4115–4134.

16. Watanabe, O.; Kiya, H. An extension of ROI-based scalability for progressive transmission in JPEG2000 coding. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2003, E86A, 765–771.

17. Baeza, I.; Verdoy, J.A.; Villanueva-Oller, J.; Villanueva, R.J. ROI-based procedures for progressive transmission of digital images: A comparison. Math. Comput. Model. 2009, 50, 849–859.

18. Moghaddam, B.; Biermann, H.; Margaritis, D. Defining image content with multiple regions-of-interest. In Proceedings of the IEEE Workshop on Content-Based Access of Image and Video Libraries, Fort Collins, CO, USA, 20–22 June 1999; pp. 89–93.

19. Elazary, L.; Itti, L. A Bayesian model for efficient visual search and recognition. Vis. Res. 2010, 50, 1338–1352.

20. Wang, H.; Dai, L.; Cai, Y.F.; Chen, L.; Zhang, Y. Saliency detection by multilevel deep pyramid model. J. Sens. 2018, 2018.

21. Xiang, T.; Qu, J.Y.; Xiao, D. Joint SPIHT compression and selective encryption. Appl. Soft Comput. 2014, 21, 159–170.

22. Jang-Won, K. Improvement of Image Compression Using EZW Based in HWT. J. Korea Inst. Inf. Commun. Eng. 2011, 15, 2641–2646.

23. Murray, N.; Vanrell, M.; Otazu, X.; Parraga, C.A. Saliency estimation using a non-parametric low-level vision model. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 433–440.

24. Lin, Q.; Xu, X.G.; Zhan, Y.Z.; Liao, D.A. Extracting regions of interest based on visual attention model. In Proceedings of the 2011 International Conference on Multimedia Technology, Hangzhou, China, 26–28 July 2011; pp. 313–316.

25. Xie, S.; Tu, Z. Holistically-Nested Edge Detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

26. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

27. Wu, Y.X.; He, K.M. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

28. Hou, Q.B.; Cheng, M.M.; Hu, X.W.; Borji, A.; Tu, Z.W.; Torr, P. Deeply supervised salient object detection with short connections. IEEE Trans. Pattern Anal. Mach. Intell. 2018.

29. Rawat, C.; Meher, S. A hybrid image compression scheme using DCT and fractal image compression. Int. Arab J. Inf. Technol. 2013, 10, 553–562.

30. Lee, C.; Youn, S.; Jeong, T.; Lee, E.; Sagrista, J.S. Hybrid compression of hyperspectral images based on PCA with pre-encoding discriminant information. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1491–1495.

31. Feng, W.Z.; Zhang, J.G.; Hu, C.H.; Wang, Y.; Xiang, Q.M.; Yan, H. A novel saliency detection method for wild animal monitoring images with WMSN. J. Sens. 2018, 2018.

32. Hernández-Cabronero, M.; Blanes, I.; Pinho, A.J.; Marcellin, M.W.; Serra-Sagrista, J. Progressive lossy-to-lossless compression of DNA microarray images. IEEE Signal Process. Lett. 2016, 23, 698–702.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).