Detection of Performance of Hybrid Rice Pot-Tray Sowing Utilizing Machine Vision and Machine Learning Approach

Abstract

1. Introduction

2. Materials and Methods

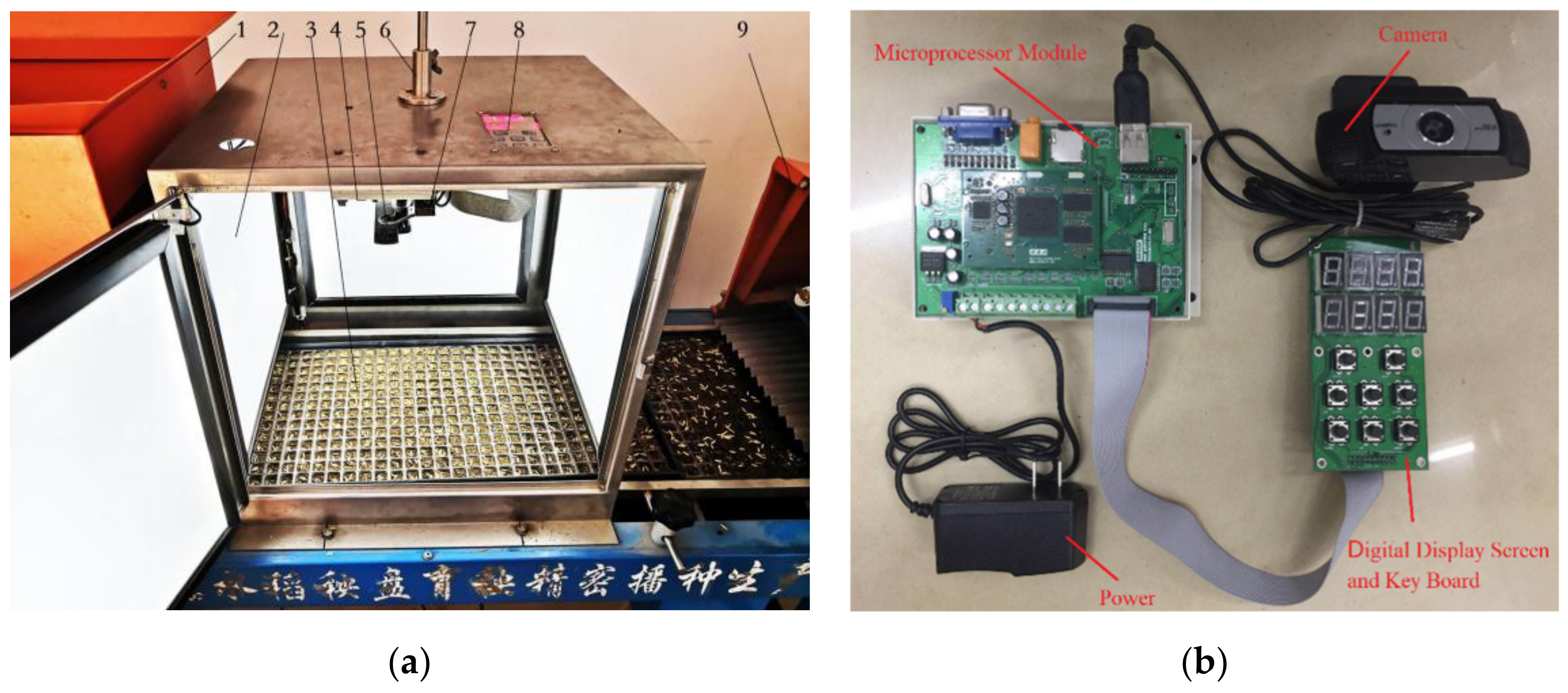

2.1. Device and Tools

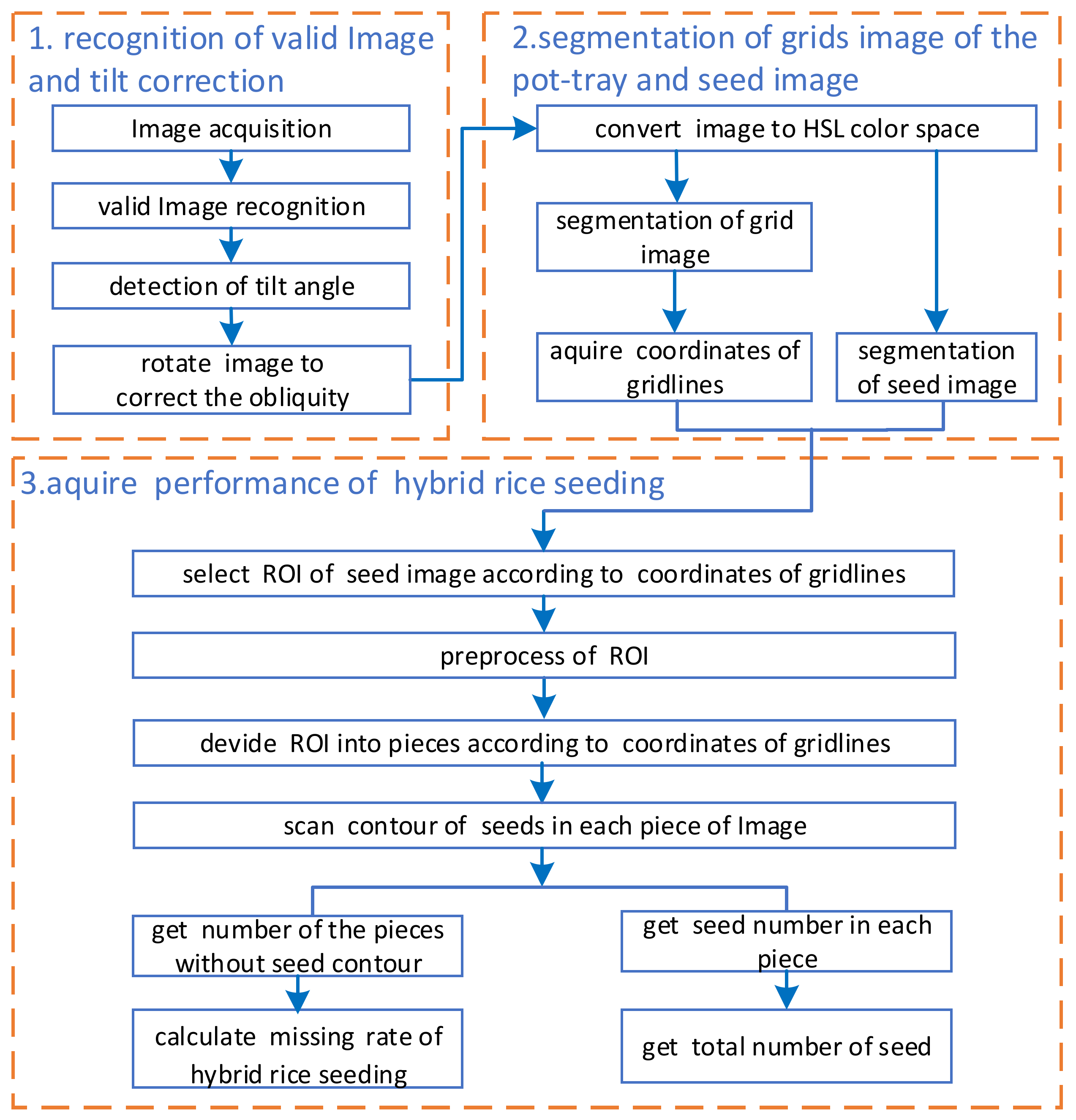

2.2. Algorithm for Seeding Performance Detection

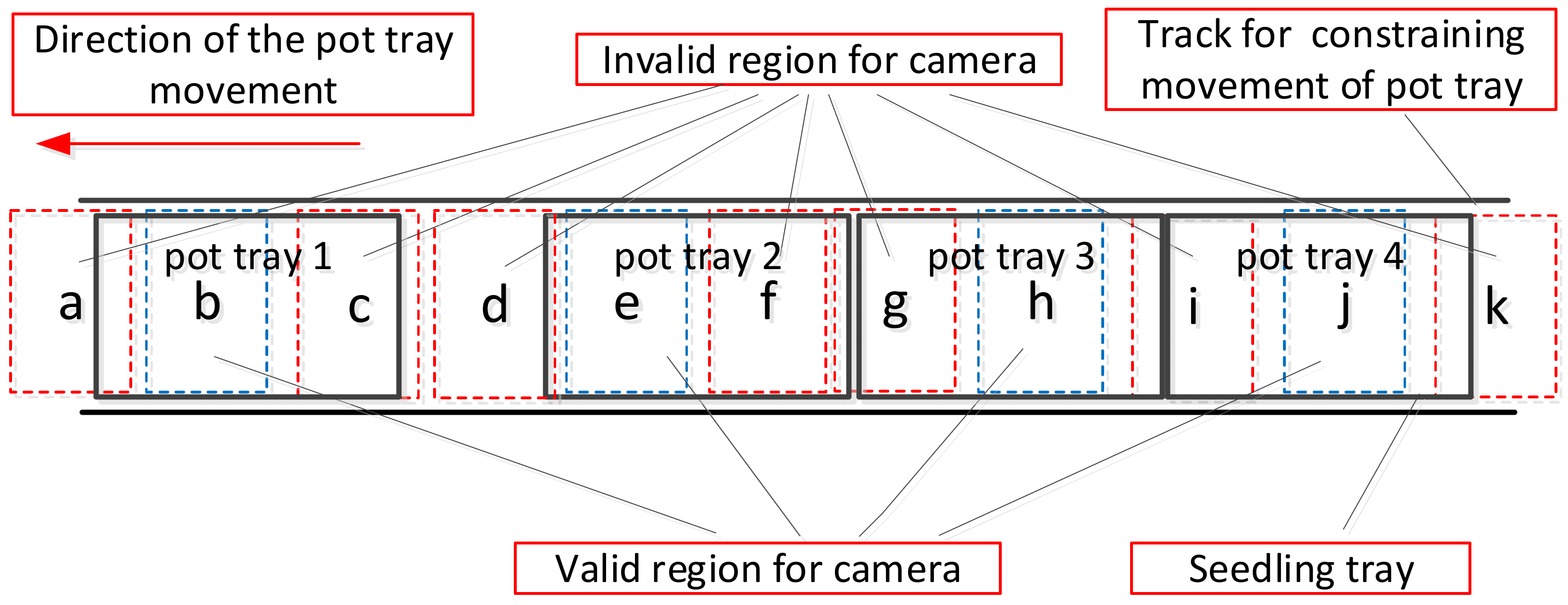

2.2.1. Recognition of Valid Images

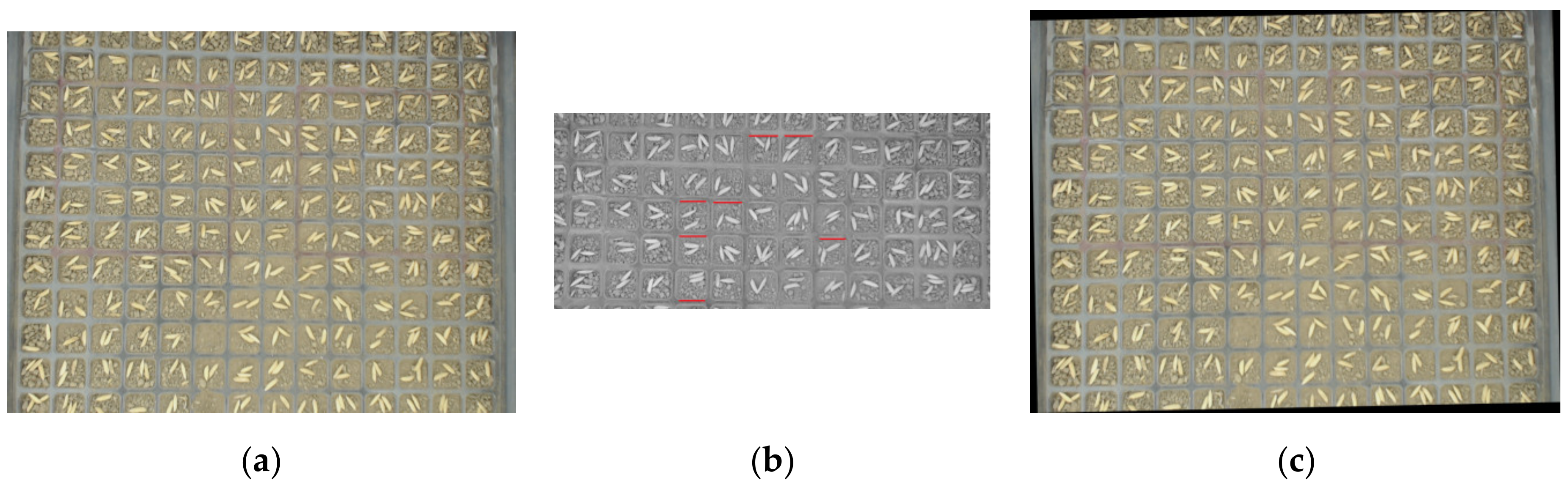

2.2.2. Image Tilt Angle Detection and Correction

Detection of Image Obliquity

Image Tilt Correction

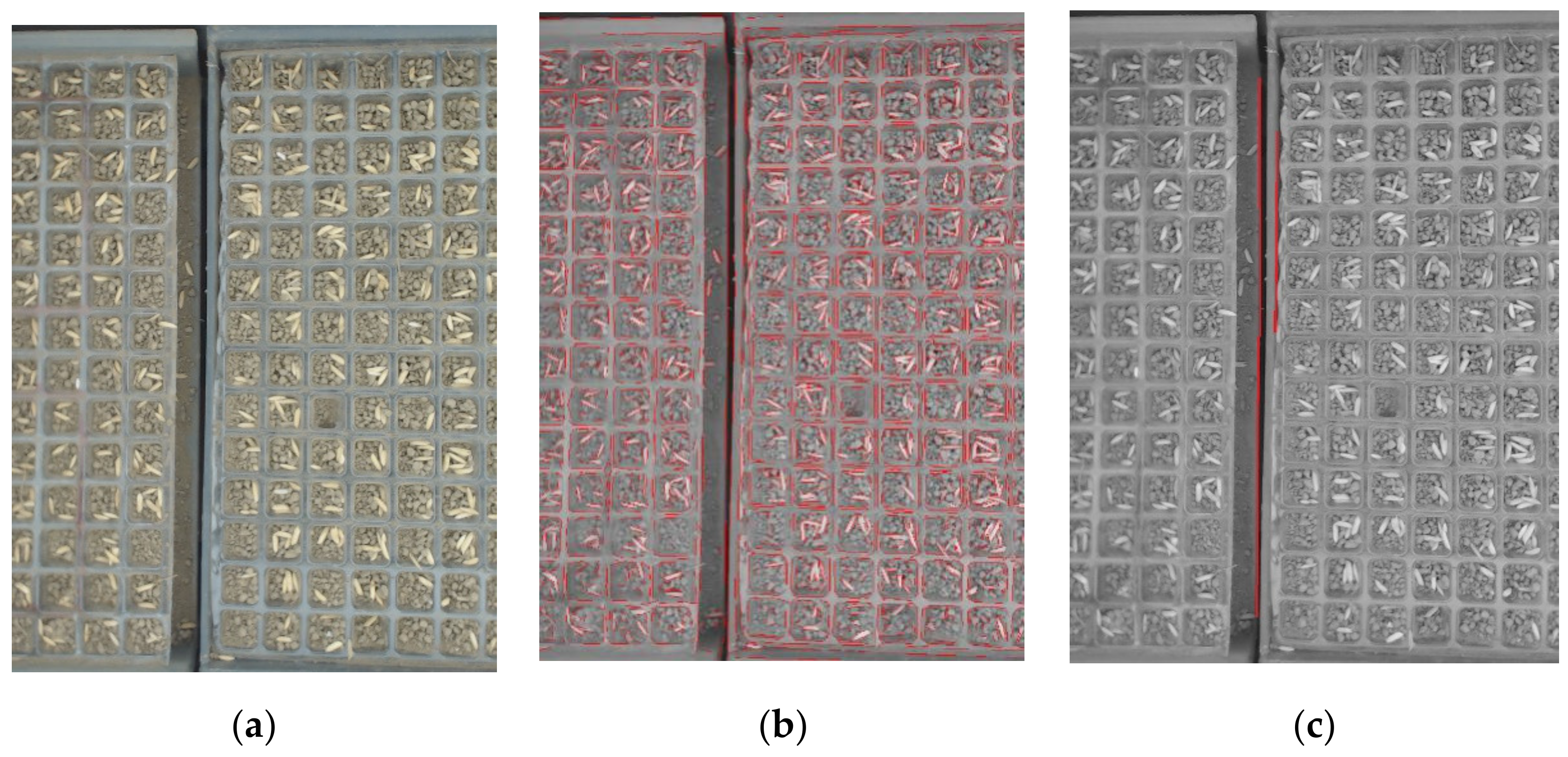

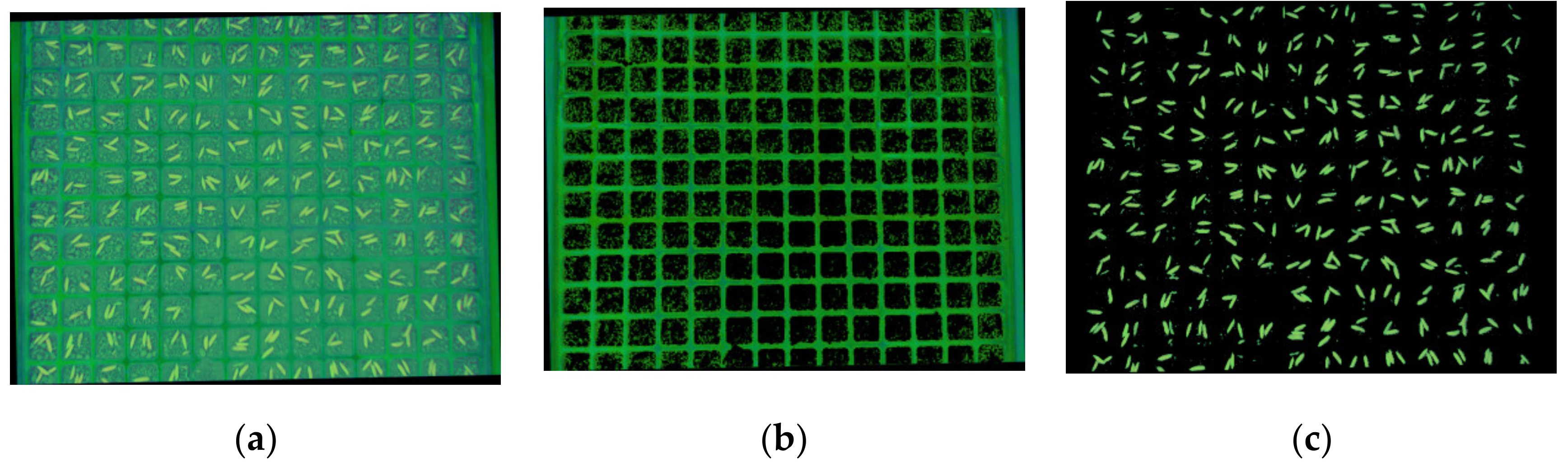

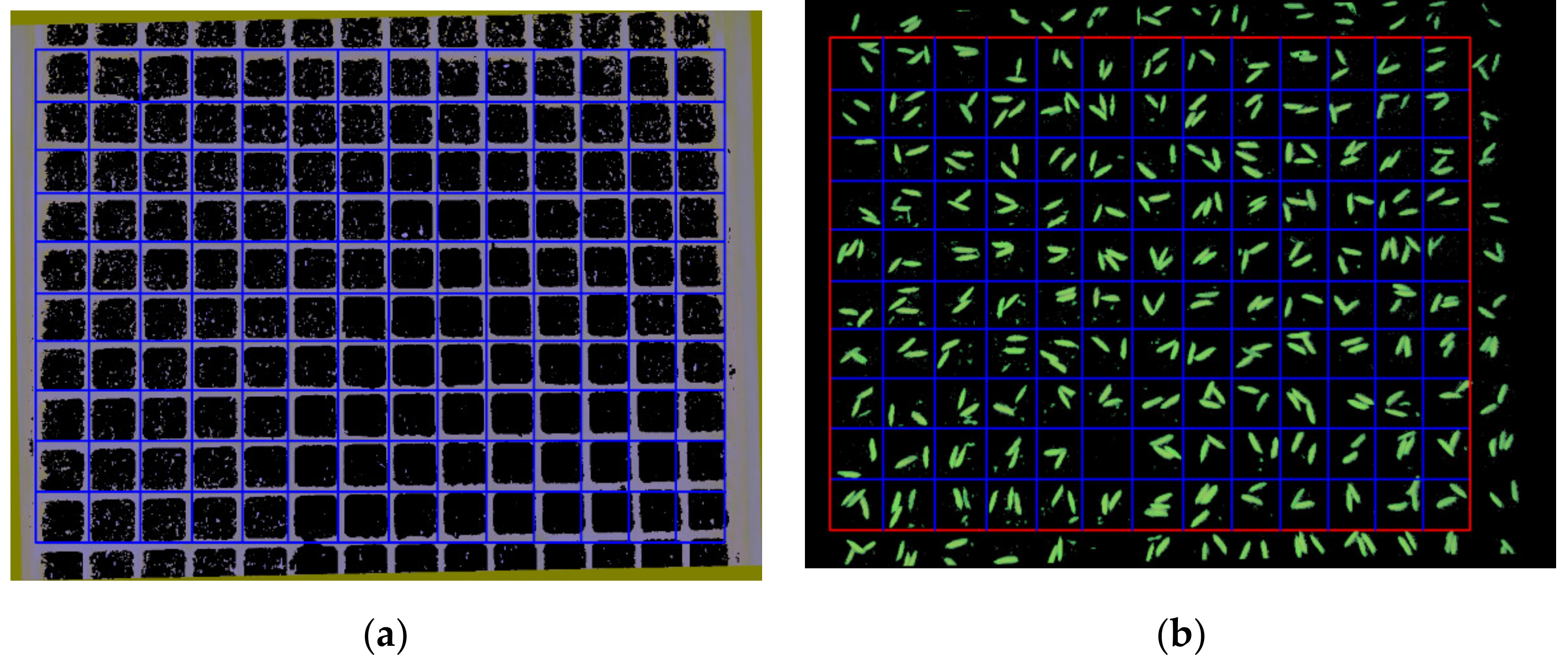

2.2.3. Segmentation of Grid and Seed Images

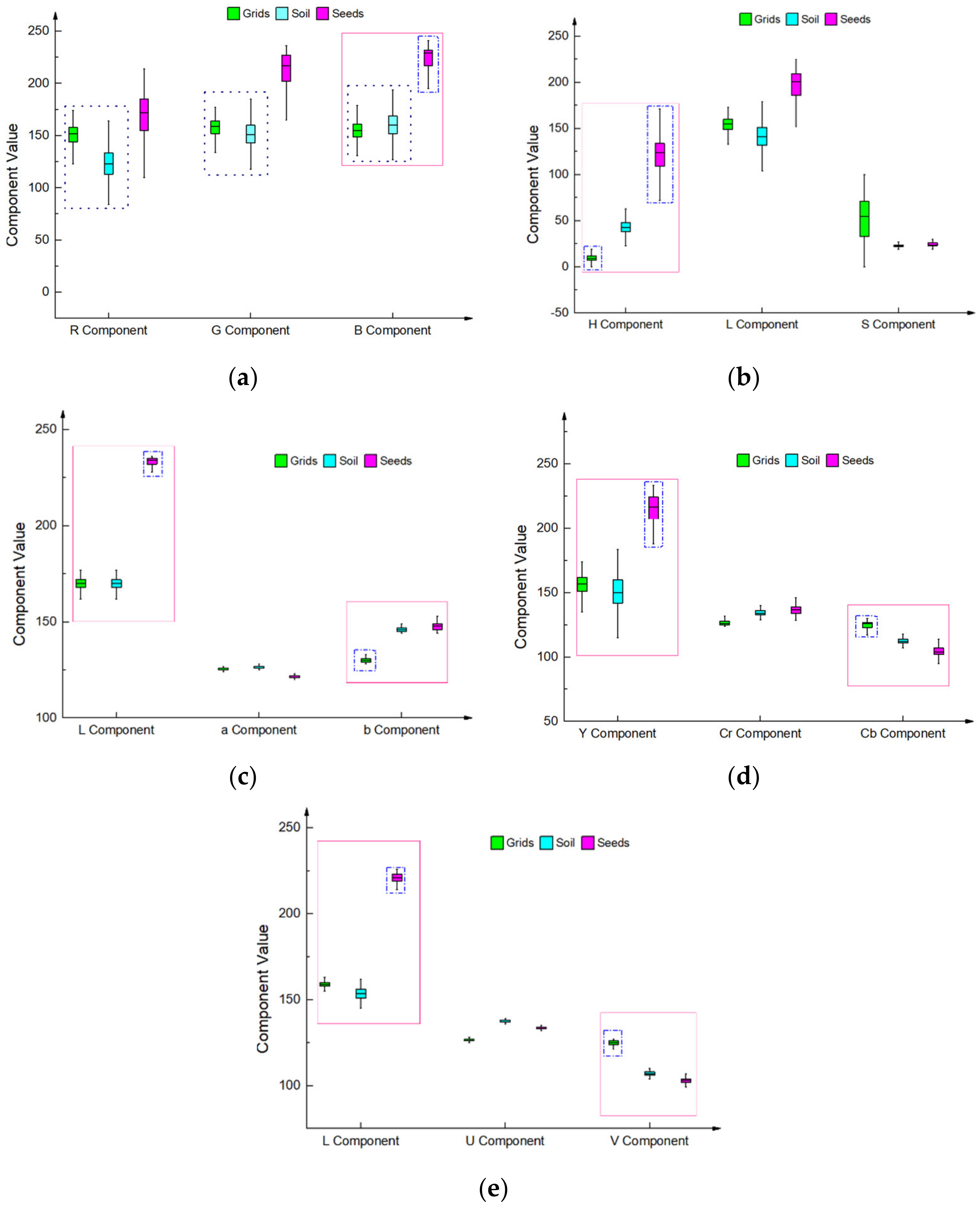

Analysis of Different Regions of the Image

Steps for Fixed Threshold Segmentation

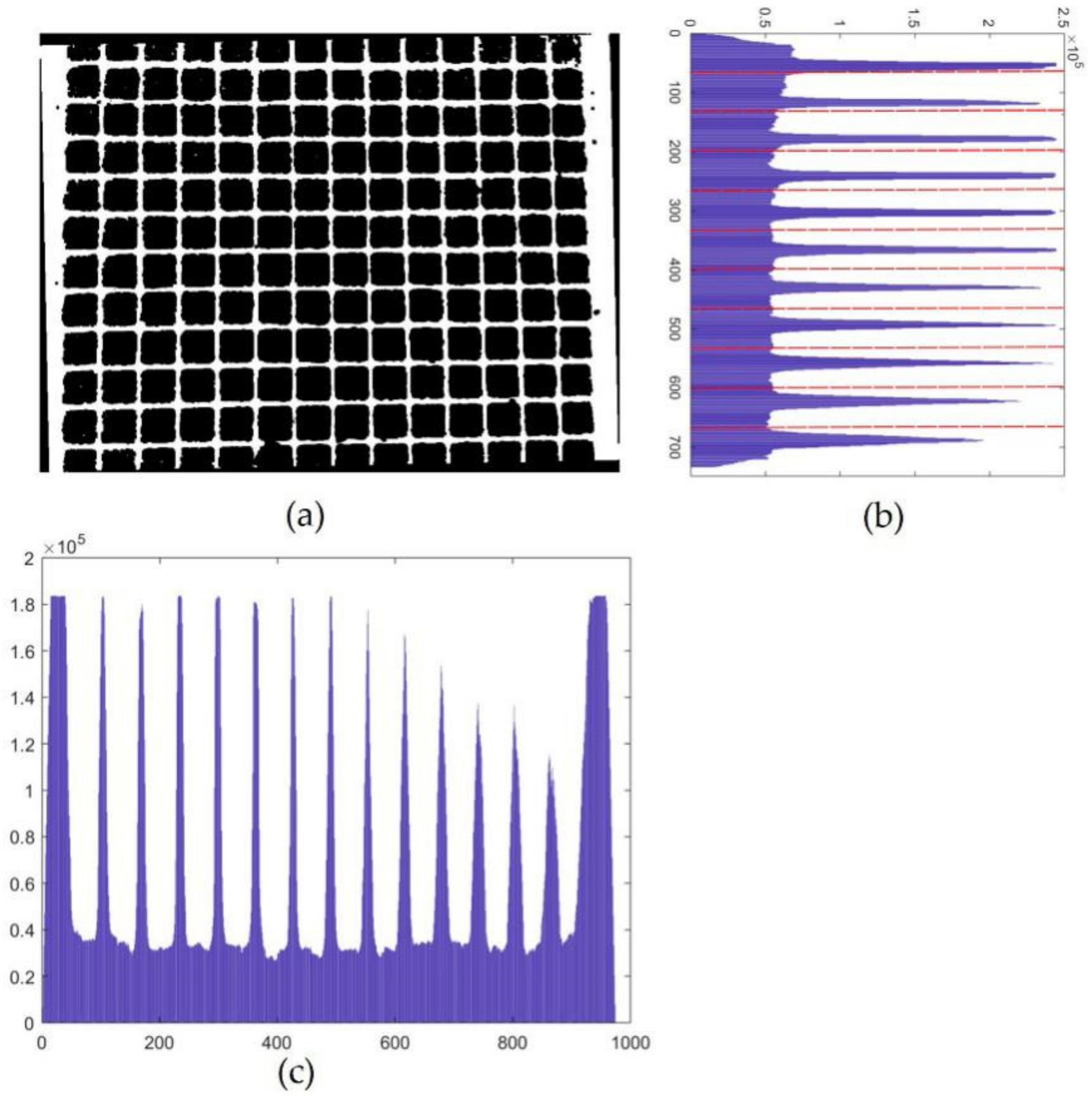

2.2.4. Obtaining Pixel Coordinates of Gridlines

2.2.5. ROI Selection of Seed Images

2.2.6. Preprocessing the ROI

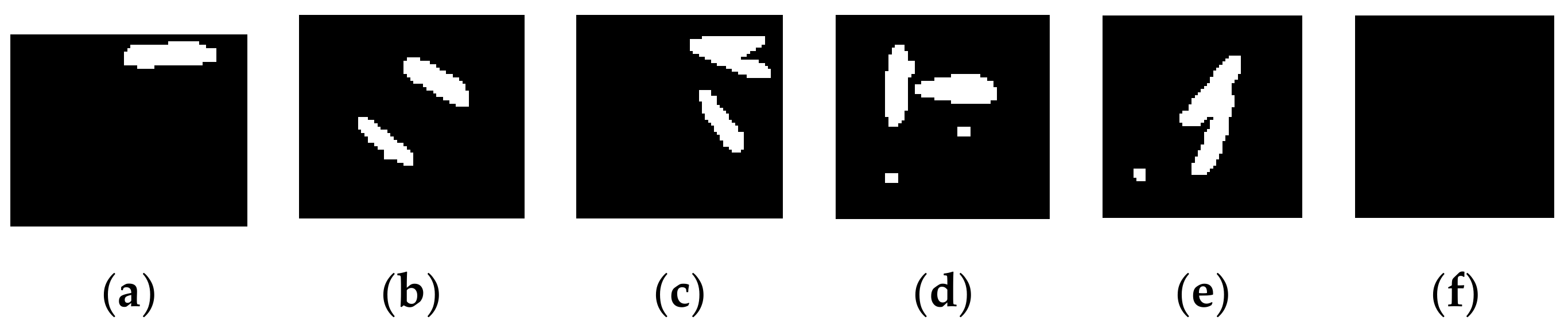

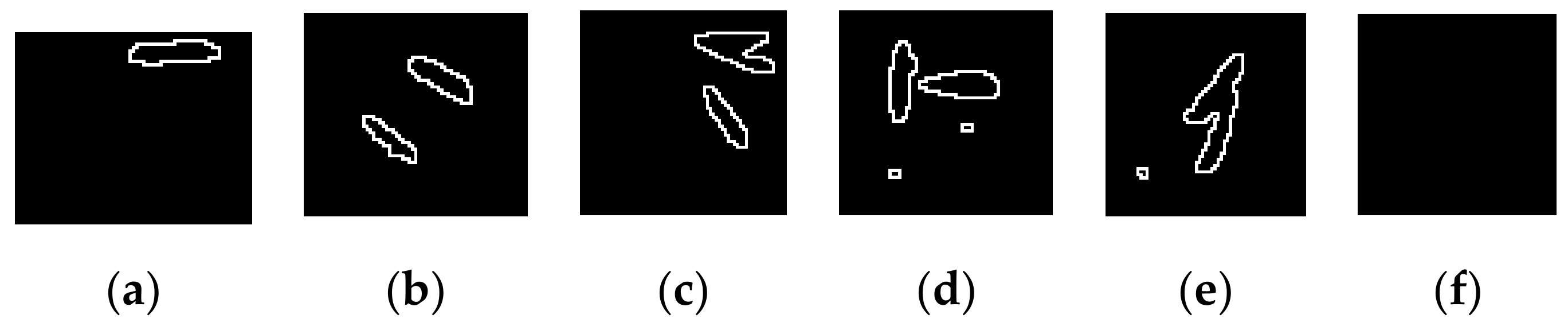

2.2.7. Detection of the Missing Rate

2.2.8. Detection of the Seed Number

3. Results and Discussions

3.1. Performance of Missing Rate Detection

3.2. Performance of Seed Number Detection

3.3. Discussion

- (1) Some seeds in the experiment were mildewed, and their color was very similar to that of the background soil. The fixed threshold segmentation algorithm therefore could not segment these seeds completely; only small fragments of them were extracted. Because the contour area of such a fragment was very small, the contour was removed during contour filtering, so the detected number of empty cells was larger than the actual number.

- (2) Large soil particles in some cells covered parts of the seeds, so the camera captured only the small uncovered parts. The contour area of these parts was so small that they were removed during contour filtering, which again made the detected number of empty cells larger than the actual number.

- (3) The subsoil contained a small number of impurities whose color was similar to that of the seeds. Some were large enough to be mistaken for parts of seeds, which decreased the detected number of empty cells and resulted in a lower missing rate.
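
The three error sources above all come down to how a contour-area filter decides whether a cell is empty. The following is a minimal sketch of that decision, not the authors' implementation: the function name `cell_is_empty`, the threshold `MIN_SEED_AREA`, and the example areas are hypothetical values chosen only for illustration.

```python
from typing import List

# Hypothetical minimum contour area in pixels; the actual value is not given here.
MIN_SEED_AREA = 50.0


def cell_is_empty(contour_areas: List[float], min_area: float = MIN_SEED_AREA) -> bool:
    """Return True when no contour in the cell survives the area filter."""
    kept = [area for area in contour_areas if area >= min_area]
    return len(kept) == 0


# A fully visible seed yields one large contour, so the cell counts as sown.
fully_visible = cell_is_empty([420.0])

# Cases (1) and (2): only a small fragment of a mildewed or soil-covered seed
# is segmented, the fragment is filtered out, and the cell is reported empty.
fragment_only = cell_is_empty([18.0])

# Case (3): a large seed-colored impurity in an empty cell passes the filter,
# so the cell is reported as sown.
impurity_only = cell_is_empty([95.0])

print(fully_visible, fragment_only, impurity_only)
```

Under these assumptions, lowering the area threshold would recover more fragmented seeds but would also let more impurities through, which is the trade-off the three cases describe.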

4. Conclusions

- (1) An embedded seeding performance detection system was developed using embedded technology and machine vision. The proposed system can be integrated into a rice seedling nursery production line to evaluate seeding performance on the go.

- (2) The component values of the different parts of the image were analyzed using different color models, and a fixed threshold segmentation method based on the HSL model was proposed. The grid and seed images were extracted using the proposed method with an average segmentation accuracy of 99.45%.

- (3) An algorithm was developed for calculating the missing rate of the seedling production line. The detection accuracy was 93.33%, with an average processing time of 4.863 s, which is shorter than the tray passing time of 7.2 s at a production rate of 500 trays per hour. This allows the detection to run in real time.

- (4) The number of seeds was also detected using a machine learning approach, with an average accuracy of 95.68%.
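
The real-time claim in conclusion (3) rests on simple arithmetic: at 500 trays per hour, one tray passes every 3600 / 500 = 7.2 s. The short sketch below reproduces that check; the function and variable names are illustrative and not taken from the paper.

```python
def tray_passing_time(trays_per_hour: float) -> float:
    """Seconds available per tray at a given production rate."""
    return 3600.0 / trays_per_hour


PROCESSING_TIME_S = 4.863  # average processing time reported in conclusion (3)

passing_time_s = tray_passing_time(500)
margin_s = passing_time_s - PROCESSING_TIME_S
is_real_time = PROCESSING_TIME_S < passing_time_s

print(f"passing time: {passing_time_s:.1f} s, margin: {margin_s:.3f} s, real time: {is_real_time}")
```

With these figures the average processing time leaves a margin of roughly 2.3 s per tray. The margin refers to the average only, since no worst-case processing time is reported here.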

Author Contributions

Funding

Conflicts of Interest


| Color Space | HSL | Lab | YCrCb | LUV |
|---|---|---|---|---|
| Time to convert (ms) | 191 | 612 | 598 | 602 |

| Component of Different Parts | H | S | L |
|---|---|---|---|
| Component value of grids | 3–30 | 10–88 | 108–173 |
| Component value of soil | 28–68 | 17–28 | 83–187 |
| Component value of seeds | 70–168 | 18–31 | 153–232 |
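
The H, S, and L ranges in the table above suggest how a fixed threshold segmentation can isolate seed pixels: the S and L ranges of seeds overlap those of soil, but the H range of seeds (70–168) does not overlap the other two. The sketch below is an assumption-laden illustration, not the authors' method. It treats the tabulated ranges directly as thresholds, assumes pixels are already given as (H, S, L) triples on the same scale as the table, and uses hypothetical names (`HSL_RANGES`, `matches`, `seed_mask`).

```python
from typing import Dict, List, Tuple

Range = Tuple[int, int]
Pixel = Tuple[int, int, int]  # (H, S, L) on the same scale as the table

# Ranges copied from the table; using them as thresholds is an assumption.
HSL_RANGES: Dict[str, Tuple[Range, Range, Range]] = {
    "grid": ((3, 30), (10, 88), (108, 173)),
    "soil": ((28, 68), (17, 28), (83, 187)),
    "seed": ((70, 168), (18, 31), (153, 232)),
}


def matches(pixel: Pixel, region: str) -> bool:
    """True when every component of the pixel lies inside the region's range."""
    return all(lo <= value <= hi for value, (lo, hi) in zip(pixel, HSL_RANGES[region]))


def seed_mask(image: List[List[Pixel]]) -> List[List[int]]:
    """Binary mask: 1 for pixels inside the seed ranges, 0 otherwise."""
    return [[1 if matches(pixel, "seed") else 0 for pixel in row] for row in image]


# Tiny illustrative image: a grid-like pixel, a soil-like pixel, two seed-like pixels.
image = [
    [(15, 40, 150), (50, 20, 120)],
    [(100, 25, 200), (120, 30, 160)],
]
print(seed_mask(image))
```

Because the grid and soil H ranges overlap at 28–30, the same approach would not separate those two regions cleanly from H alone, so a grid mask would need S and L as well.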

| Contour Type | Type 1 | Type 2 | Type 3 | Type 4 | Type 5 |
|---|---|---|---|---|---|
| Sample number | 2280 | 1216 | 822 | 620 | 586 |

| Code | Total Cells | Manual Measurement of Empty Cells | System Measurement of Empty Cells | Manual Measurement of Missing Rate (%) | System Measurement of Missing Rate (%) | Relative Error (%) |
|---|---|---|---|---|---|---|
| 1 | 390 | 10 | 11 | 2.56 | 2.82 | 10.00 |
| 2 | 390 | 9 | 10 | 2.31 | 2.56 | 11.11 |
| 3 | 390 | 8 | 8 | 2.05 | 2.05 | 0.00 |
| 4 | 390 | 7 | 7 | 1.79 | 1.79 | 0.00 |
| 5 | 390 | 10 | 11 | 2.56 | 2.82 | 10.00 |
| 6 | 390 | 9 | 10 | 2.31 | 2.56 | 11.11 |
| 7 | 390 | 6 | 6 | 1.54 | 1.54 | 0.00 |
| 8 | 390 | 5 | 5 | 1.28 | 1.28 | 0.00 |
| 9 | 390 | 9 | 10 | 2.31 | 2.56 | 11.11 |
| 10 | 390 | 4 | 4 | 1.03 | 1.03 | 0.00 |
| 11 | 390 | 8 | 9 | 2.05 | 2.31 | 12.50 |
| 12 | 390 | 3 | 3 | 0.77 | 0.77 | 0.00 |
| 13 | 390 | 6 | 6 | 1.54 | 1.54 | 0.00 |
| 14 | 390 | 7 | 7 | 1.79 | 1.79 | 0.00 |
| 15 | 390 | 10 | 11 | 2.56 | 2.82 | 10.00 |
| 16 | 390 | 11 | 12 | 2.82 | 3.08 | 9.09 |
| 17 | 390 | 9 | 10 | 2.31 | 2.56 | 11.11 |
| 18 | 390 | 7 | 7 | 1.79 | 1.79 | 0.00 |
| 19 | 390 | 5 | 4 | 1.28 | 1.03 | 20.00 |
| 20 | 390 | 7 | 7 | 1.79 | 1.79 | 0.00 |
| Average | 390 | 7.5 | 7.95 | 1.92 | 2.03 | 5.33 |
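
The per-tray columns in the table above are consistent with two simple formulas: missing rate = empty cells / total cells × 100, and relative error = |system − manual| / manual × 100, both computed on the empty-cell counts. These formulas are inferred from the tabulated values rather than quoted from the paper, and the function names below are illustrative. A minimal sketch that reproduces a few rows:

```python
def missing_rate(empty_cells: int, total_cells: int) -> float:
    """Percentage of cells in a tray that contain no seed."""
    return 100.0 * empty_cells / total_cells


def relative_error(manual_empty: int, system_empty: int) -> float:
    """Percentage deviation of the system count from the manual count."""
    return 100.0 * abs(system_empty - manual_empty) / manual_empty


# (code, total cells, manual empty cells, system empty cells), copied from the table
rows = [(1, 390, 10, 11), (11, 390, 8, 9), (16, 390, 11, 12), (19, 390, 5, 4)]

for code, total, manual, system in rows:
    print(
        f"tray {code}: manual {missing_rate(manual, total):.2f}%, "
        f"system {missing_rate(system, total):.2f}%, "
        f"relative error {relative_error(manual, system):.2f}%"
    )
```

The relative error is large in percentage terms even when the counts differ by a single cell, because the number of empty cells per tray is small.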

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dong, W.; Ma, X.; Li, H.; Tan, S.; Guo, L. Detection of Performance of Hybrid Rice Pot-Tray Sowing Utilizing Machine Vision and Machine Learning Approach. Sensors 2019, 19, 5332. https://doi.org/10.3390/s19235332
