Abstract

Reconstructing images from multi-view projections is a crucial task both in the computer vision community and in the medical imaging community, and dynamic positron emission tomography (PET) is no exception. Unfortunately, image quality is inevitably degraded by the limitations of photon emissions and the trade-off between temporal and spatial resolution. In this paper, we develop a novel tensor based nonlocal low-rank framework for dynamic PET reconstruction. Spatial structures are effectively enhanced not only by nonlocal and sparse features, but momentarily by tensor-formed low-rank approximations in the temporal realm. Moreover, the total variation is well regularized as a complementation for denoising. These regularizations are efficiently combined into a Poisson PET model and jointly solved by distributed optimization. The experiments demonstrated in this paper validate the excellent performance of the proposed method in dynamic PET.

1. Introduction

Positron emission tomography (PET) seeks to obtain radioactivity distributions by collecting numerous photons emitted by the annihilations of positrons that come from the isotope-labeled tracer injected in living tissue. Correspondently, considering the unique traits of physiological and molecular imaging, PET is universally and irreplaceably required in clinic imaging, especially in cancer diagnoses [1], lesion detection [2], and functional supervision in vivo. However, despite its predominance for functional imaging, PET is dwarfed in resolution due to limitations either from acquisition time or the injection dose when compared with other structural medical imaging systems such as magnetic resonance imaging (MRI) and computed tomography (CT). Moreover, in dynamic PET imaging, where the time-varying radioactivity concentration at each spatial location is obtained, the structural information and denoising performance are more critical, along with increasing demands for time activity curves (TAC) for different regions of interest (ROI). Under this framework, reliable algorithms for dynamic PET reconstruction have been discussed and debated for decades.

Initial attempts at PET reconstruction included the famous analytic filtered back-projected (FBP) [3] based algorithms, least squares (LS) [4], and the maximum likelihood-expectation maximization (ML-EM) [5] method. Although milestones, problematic conditions [6] always accompany the optimization of the mentioned algorithms (i.e., the solution might be sensitive to trivial fluctuation and thus consequently increase noise along the iterations). Thus, much effort has been devoted to this topic, among which the maximum a posteriori (MAP) [7] strategy, or penalized ML method [6], have become the key to the solution. The main idea for this strategy is to integrate regularization terms, or image priors from a Bayesian perspective, into the reconstruction model. There are various representative regularizations, including the weighted quadratic penalty [8], Gibbs prior [9], Gauss–Markov prior [10], Huber prior [11], total variation [12], and so on. More recently, especially for dynamic imaging, temporal priors are starting to be introduced in reconstruction (e.g., the tracer kinetics model [13,14,15], kernel model [16], and dose estimation [17]).

Nevertheless, in spite of the significant progress achieved by researchers, traditional reconstruction algorithms are still open to question. For one thing, traditional methods, to a large extent, only focus on denoising behavior while ignoring the structural information within the images. This has undoubtedly created a greater divide within the increasing clinical demand for resolution. The other problem, which is of greater significance, is that the trade-off between the temporal and the spatial resolution constantly degrades the quality of the dynamic reconstruction (i.e., a better temporal resolution requires more frequent sampling within a given duration, which causes fewer photon counts for each frame, thus undermining the spatial resolution, and vice versa).

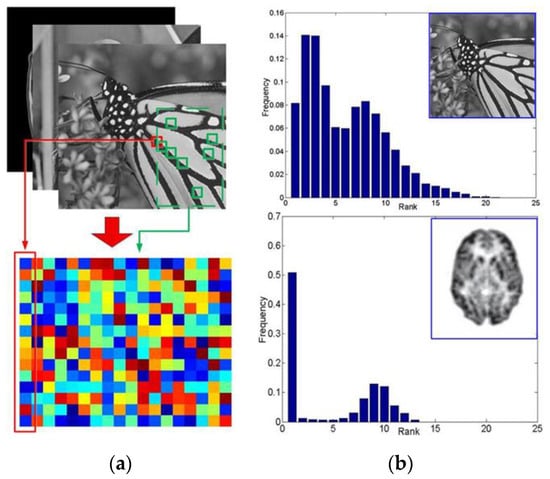

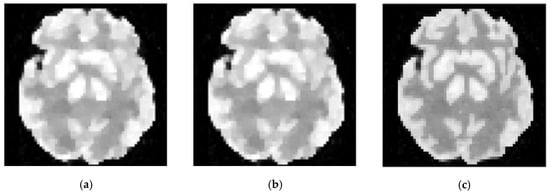

In this paper, we explore a potential solution for the mentioned dilemma by providing a novel tensor based nonlocal low-rank framework in dynamic PET reconstruction. Several efforts have been devoted to related topics. Low rank decomposition [18] and non-negative matrix factorization [19,20,21], on the one hand, have been found to be able to capture the inner temporal correlation in a dynamic PET. On the other hand, the nonlocal feature, which refers to the abundant self-similar structures within an image, are excellently adopted in image denoising (e.g., the nonlocal means (NLM) [22], weighted nuclear norm minimization (WNNM) [23], block-matching 3D denoising (BM3D) [24], nonlocal restoration [25], and nonlocal representation [26]). Moreover, in [27], Dong et al. demonstrate the existence of nonlocal self-similarities and sparse features in MRI images. As shown in Figure 1, nonlocal low-rank [28,29] features exist not only in ordinary natural images but also in PET reconstructed images. Furthermore, as will be illustrated in Section 3, we promote the use of matrix based nonlocal low-rank features in a novel tensor form and successfully apply it to dynamic PET reconstruction. In this way, the structures are not only enhanced spatially by the image itself but simultaneously completed by relevant frames across the temporal dimension.

Figure 1.

The nonlocal low-rank validation on images. For each random-selected exemplar patch (e.g., 5 × 5 sized), there can be found ample similar patches within the image itself. (a) By stretching the exemplar patch and its similar patches into vectors, a corresponding feature matrix (e.g., 25 × 30 sized) can be formulated. (b) The rank distribution for the matrices which is formulated from given images. Up: the monarch; Down: Positron emission tomography (PET) scan of an Alzheimer’s patient’s brain.

The main contributions are listed as follows:

- (1)

- An innovative form of a nonlocal low rank tensor constraint is adopted in the Poisson’s model, which captures data correlation in multiple dimensions in dynamic PET, beyond just spatiotemporal correlation. For one thing, without any additional information, Poisson’s model exploits the temporal information among the frames themselves, effectively complementing the structures in low-active frames and recovering severely corrupted data. For the other, it exploits the spatial information from nonlocal self-similarities within each frame, thereby enhancing the structured sparsity for each image.

- (2)

- As an additional regularization, the total variation (TV) constraint is employed to extract local structure and further complement the denoising function. On the other hand, the expectation–maximization method is employed as a fidelity term to incorporate hidden data in the objective function and thus increase efficiency in optimization.

- (3)

- In the optimization procedure, we develop a distributed optimization framework inspired by the alternative direction method of multipliers (ADMM) [30]. In this way, the mentioned terms can be explicitly organized in a united objective function and effectively handled as three subproblems during the iterations.

2. Background

2.1. Dynamic PET Imaging Model

Typically, during the detection procedure, the PET scanner collects the emitted photons and then pre-processes them into so-called sinogram data as the input of the reconstruction. For dynamic PET, let the sinogram matrix denote the collection of sinogram data vectors, where denotes the index of the frames; and sinogram vector = , denotes the sum of the photons collected in the t-th frame, where q represents the index of the total M pairs of detectors. Correspondently, denotes the collection of the images that are supposed to be recovered, where vector represents the t-th frame. Since Poisson distribution uses inherited PET systems, the reconstruction of each frame can be successfully modeled by the affine transformation:

where is the expectation of ; is the system matrix; and and represent the random coincidence and scatter coincidence, which inevitably contain heavy noise. In this way, we can obtain the likelihood function of as

Instead of maximizing Label (2), we estimate by minimizing the negative log-likelihood version for the convenience of optimization:

where the constant term is left out.

Therefore, in the scale of dynamic reconstruction, Equation (3) can be transformed into

However, optimization merely by Equation (4) is ill-conditioning—i.e., the reconstruction is vulnerable to accumulated iterative noise accompanying the iteration. The predominant solution to this shortcoming is to include the regularization terms in Equation (4) as the image prior. Thus, the object function of PET reconstruction can be written as

where denotes the fidelity term defined in Equation (4), and denotes the regularization term.

2.2. Tensor Decomposition

Just like the matrix, the low-rank approximation of the tensor is also inevitably based on tensor decomposition. At present, the strategies for tensor decomposition mainly fall into three groups: the CANDECOMP/PARAFAC [31] (CP) decomposition methods, Tucker decomposition [32,33] methods, and tensor-singular value decomposition [34,35,36] (t-SVD) methods. Because of the stability and efficiency inherited in their optimization, t-SVD has aroused increasing interest among the community.

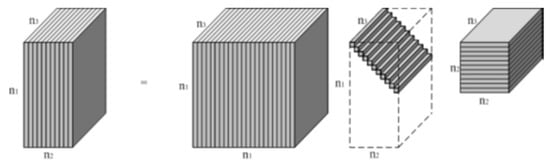

As shown in Figure 2, for a three-way tensor , t-SVD shares the same form as the matrix SVD:

where and are orthogonal tensors [37]; represents the conjugate transpose [34] of ; is a f-diagonal tensor in which each frontal slice is a diagonal matrix [35]; and denotes the t-product [37,38].

Figure 2.

Illustration of tensor-singular value decomposition (t-SVD) upon an tensor [35].

In this paper, we proposed a nonlocal tensor low-rank framework for dynamic PET reconstruction, where the tensor low-rank approximation is efficiently and effectively conducted by a t-SVD based method. The details of this method will be illustrated in Section 3.

3. Method

In this paper, we proposed a novel reconstruction framework that can jointly recover, denoise, and (mostly) critically complete the structures in the dynamic PET imaging system. The overall procedure is illustrated in Algorithm 1.

| Algorithm 1: Dynamic PET reconstruction via Nonlocal Low-rank Tensor Approximation and Total Variation |

| Input: Sinogram and system matrix , weighting parameters , and the reference frame index . |

| 1: Initialization: . |

| 2: Repeat: |

| 3: Compute the patch sets based on the -th frame using (7). |

| 4: Construct the nonlocal featured tensor by (8) and (9). 5: Approximate the low-rank tensor by adopting t-SVT method in (13) and (21). |

| 6: Construct by updating the differential vector via (22). 7: Update the Lagrangian multiplier via (23). |

| 8: Repeat: |

| 9: E-step: Introduce the expectation variable and construct the relevant objective function in (24). |

| 10: M-step: Update using (25) and (26). |

| 11: Until: Inner Relative change |

| 12: |

| 13: Until: Relative change |

| 14: Output: Reconstructed image sequence . |

3.1. Nonlocal Low Rank Tensor Approximation

The nonlocal tensor regularization consists of two parts: forming the nonlocal tensor within the recovered frames and formulating the low-rank property in the formed tensors.

3.1.1. Tensor Formulation

During the optimizing procedure, a temporary estimated image sequence will be obtained after each iteration, where represents the t-th frame of image vector as mentioned in Section 2.1. Similar to Figure 1, numerous W W sized overlapping patches (n = W W) can be extracted from each , where i denotes the index of the image patch. According to the nonlocal self-similar properties, within the image, there are plentiful patches that share the same structure with each . Based on the Euclidean distance, we choose the m nearest patches for each :

where is the index set of similar patches for the i-th positioned patch ; and is the threshold value defined by the distance between and its m-th nearest patch. Thus, for each exemplar , we formulate a matrix:

As shown in Figure 1, due to the nonlocal self-similarity, it is fairly assumed that each is low-rank.

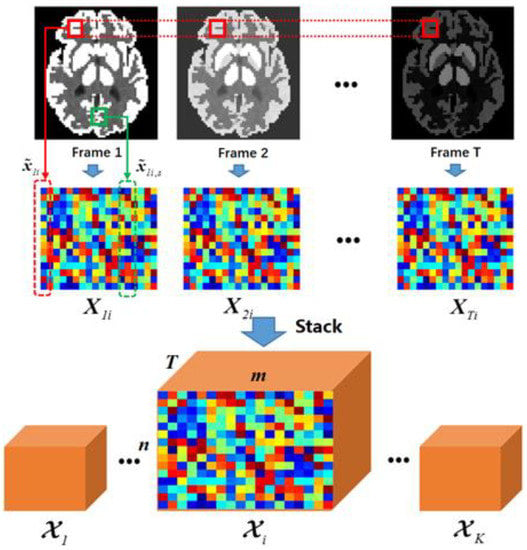

From a dynamic recovery perspective, sets for are identical, since the structure in dynamic PET is unchanged. Therefore, based on the patch position i, we can construct a 3D tensor , whose frontal slices are calculated as

Figure 3 illustrates the overall procedure for forming a nonlocal tensor. Within each iteration, numerous s for various i positions will be constructed and then approximated by a low-rank property.

Figure 3.

Illustration of tensor construction. The first row represents the temporary recovered image sequence. For the randomly chosen patch position i, similar patches can be found within its own frame and then grouped into matrices in the second row. By stacking the matrices along the frames, we managed to construct a feature tensor for position i.

3.1.2. Low Rank Tensor Approximation

The next step is the low rank approximation for constructed tensors . Traditionally, the primal model of rank regularization searches for the tensor , where

Nevertheless, this model is non-deterministic polynomial-time (NP) hard, and its direct optimization is nonconvex. Taking this situation into account, we choose a surrogate form of Equation (10) and turn it into a convex optimization issue:

Here, denotes the tensor nuclear norm [39] and denotes the Frobenius norm. Under this circumstance, it is feasible to get a closed-form solution by adopting tensor singular value thresholding (t-SVT) [39]:

with representing the mentioned t-SVD of and

where ; denotes the fast Inverse Fast Fourier Transform (IFFT) of X across dimension 3; and denotes the Fast Fourier Transform (FFT) of across dimension 3.

3.2. Total Variation Regularization in Dynamic PET

Apart from nonlocal low-rank tensor regularization, we also incorporate the total variation [40] (TV) as a complementary constraint into the dynamic PET reconstruction framework. Unlike the nonlocal tensor, the adopted TV regularization focuses on inherited local information within each frame. Moreover, this pixel-based regularization compromises patch-based regularization and thus improves the denoising performance of the proposed algorithm.

For each image frame in the estimated sequence , we adopt an l2 formed TV regularization, which is properly formulated in accordance with augmented Lagrangian optimization [41,42]:

where denotes the discrete gradient of at position j; and denotes the j-th element of the corresponding differential operator . Correspondingly, the augmented Lagrangian function can be written as:

where represents the collection of discrete gradient vectors for each recovered frame; represents the tunable weighting parameters of the quadratic term; and represents the updatable multiplier vector. Consequently, this method guarantees the convexity of TV regularization, and hence equips the proposed method with global convergence.

3.3. Expectation Maximization for Fidelity Term

Indisputably, solving the fidelity term Equation (4), to a large extent, is an essential mission in estimating the reconstruction images . Regardless of regularization, Equation (4) can be further written as:

where denotes the j-th pixel in image ; is the qj-th entry in system matrix G, representing the contribution of the j-th pixel given to the q-th detector; and the hidden variable represents the photon count from the j-th pixel to the q-th detector pair in the t-th frame.

The main challenge lies in the solving procedure, especially handling the hidden variable . In this work, we adopt the well-known expectation maximization (EM) [5,43], which introduces ‘complete data’ into the model and thus facilitates optimization. In order to solve Equation (18), there are two essential steps:

- E-step: This step employs the expectation = as the substitute for the hidden variable in Equation (18):

- M-step: This step maximizes the likelihood by zeroing the derivative of Equation (19):

The EM algorithm, which will be illustrated in greater detail in the next section, makes our proposed framework readily and efficiently solvable.

3.4. The Overall Optimization Framework

Based on Equation (5), the overall reconstruction model can be represented as

where is the fidelity term in the reconstruction model Equation (16); represents the tensor formed nonlocal low rank constraint in Section 3.1; represents the dynamic PET adopted total variation term in Section 3.2; and and denote the weighting parameters. By taking the mentioned terms into account, the objective function can be formulated as:

Normally, recovering directly from Equation (20) is a complex process. For this process, we refer to the alternative direction method of multipliers (ADMM) [30] and divide the model into three subproblems of and in a distributed optimization way.

- (1)

- -subproblem. Let denote the updated variable after the k-th iteration; e.g., represents the computed image sequence after the k-th iteration. In the (k + 1)-th iteration, nonlocal low-rank approximation is first implemented. After the formulation of nonlocal feature tensors , as illustrated in Section 3.1, we can obtain the function related to each low rank tensor:with

- (2)

- -subproblem. Unlike updating , the discrete gradient is updated frame by frame. As shown in Equation (15), we update by employing the shrinkage operator [44]:Correspondingly, the multiplier vector updates by:

- (3)

- -subproblem. After the update of and , the last critical process is to update . In addition to the fidelity term in Equation (18), the former mentioned regularizations must be considered. In this procedure, we adopt the EM algorithm and hence reform Equation (20) into a joint function relevant to (or ):

Here, (or ) denotes the t-th frontal slice of tensor ; denotes the contribution weight for pixel to tensor ; denotes the j-th element of , and denotes the j-th column of the differential operator ; and is the j-th element of multiplier .

According to the M-step in Equation (18), we can harvest a unitary quadratic equation:

Therefore, can be readily solved as the positive root of (25):

In this X sub-problem, given that we do not have a close-formed solution for the PET reconstruction model [5], the X-subproblem is not fully solved by the employment of EM. However, due to the implementation of ADMM based optimization, the overall framework will converge, even if its sub-problems are not carried out exactly [30].

Algorithm 1 demonstrates the overall procedure of our proposed algorithm. It is noteworthy that, in the initialization step, we employed the filtered backward projection (FBP) [3] method to produce a ‘warm start’. By doing so, as we will show in the next section, iterative performance is notably improved.

4. Experiments and Results

In this section, we perform various experiments, in order to validate the qualitative and quantitative measures of the proposed method. Data on diversified photon counts, sizes, tracers, and structures are recovered by our proposed method and compared with the results of representative and state-of-the-art algorithms.

4.1. Implementations

4.1.1. Evaluation Criteria

For the qualitative evaluation, randomly selected reconstructions are shown in this part, where the structural detail, noise level, and so on will be intuitively illustrated. For the quantitative evaluation, other than the peak signal-to-noise ratio (PSNR)), we also employ the relative bias and variance [45] as the indicators of the resolution and smoothness, respectively:

where denotes the ground truth in the j-th pixel; denotes the mean value of the ROI; and denotes the total number of pixels in the given ROI. Unlike the PSNR, the smaller the relative bias and variance are, the better the reconstruction is.

We also conduct the multiple simulations experiment. In this study, we employ the contrast recovery coefficient (CRC) and the standard deviation (STD) [16,46]:

Here, represents the number of realizations in the simulation. In Equation (29), represents the mean value of the ROI in r-th realization and represents the mean value of the background region in r-th realization. In Equation (30), denotes the total number of pixels in the background region; denotes the mean value of j-th pixel in background region across R realizations.

For the real data, we adopt the contrast to noise ratio (CNR) [47]:

where the and represent the intensity of the ROI and background region respectively, and is the standard deviation of the background region.

4.1.2. Dataset

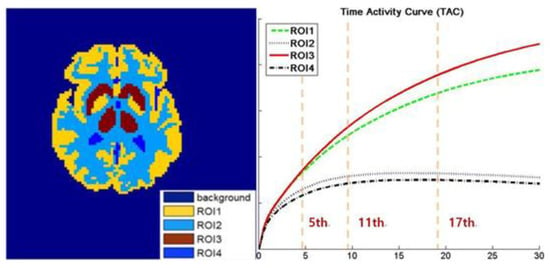

As shown in Figure 4, we mainly adopt a 64 64 sized Zubal brain phantom as the template and employ the 11C-dihydrotetrabenazine (denoted as DTBZ) as the tracer. The scanning procedure in simulated into 18 frames for a duration of 20 min with the corresponding TAC presented in Figure 1. Moreover, to validate the performance of the algorithms under a diversified tracer dose, the data are generated in 3 106, 107 and 3 107 total photon counts over 18 frames. Furthermore, in the multiple realizations experiment, we generate total 100 realizations for 3 106 and 3 107 counted data (R = 50 for each simulation). In addition, all the simulated settings correspond to real cases.

Figure 4.

The ground truth of brain phantom. Left: the region of interest (ROI) map. Right: the time activity curves (TAC) of this image sequence, with the 5th, 11th, and 17th frames are labeled in the figure.

Furthermore, 111 111 sized Zubal head phantoms [16] are recovered to validate the effectiveness of the proposed method on different tracers (18F-FDG) and image sizes. Moreover, real cardiac data are tested in this section. The data are scanned over 60 min by a Hamamatsu SHR-22000 (Hamamatsu Photonics K.K., Hamamatsu City, Japan). There are, overall, 19 frames, and each are scanned by 130 detector pairs from 192 angles.

4.1.3. Comparative Algorithms

To evaluate the performance of the proposed algorithm, we introduce five representative algorithms in comparison (the maximum likelihood-expectation maximization (ML–EM) algorithm [5], the penalized weighted least square (PWLS) method [8], the total variation optimized by augmented Lagrangian (TV-AL) method [48], the penalized likelihood incremental optimization method regularized by hyperbolic potential function [45,49] (denoted as PLH-IO), and the spatial-temporal total variation (ST-TV) method [50]) proposed for dynamic PET reconstruction.

4.1.4. Parameters Setting

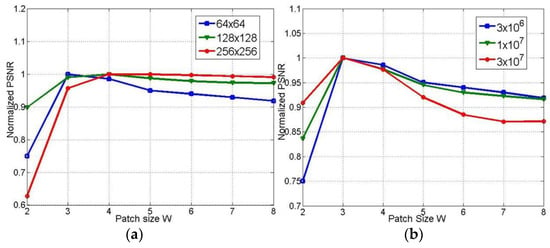

After deliberate examination, we set the weighting parameters as follows: the nonlocal tensor weight parameter ; TV weight parameter ; tensor thresholding parameter ; and and parameter . Another critical issue is the tensors’ sizes. As shown in Figure 5, the optimal patch size is 3 3. Thus, if the number of feature patches is set to 10 in the 18-frame sequence, each selected tensor’s size is 9 10 18. We set the maximum iteration to 500 for all methods in comparison.

Figure 5.

Normalized peak signal-to-noise ratio (PSNR) of reconstructions under different patch size W. (a) for images in different size, (b) for data under different photon count.

4.1.5. Experiment Description

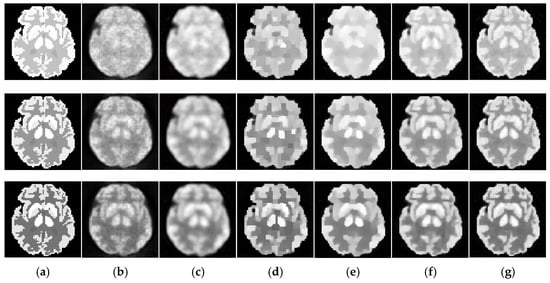

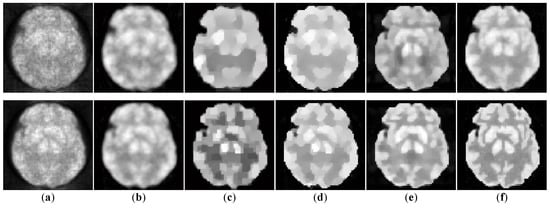

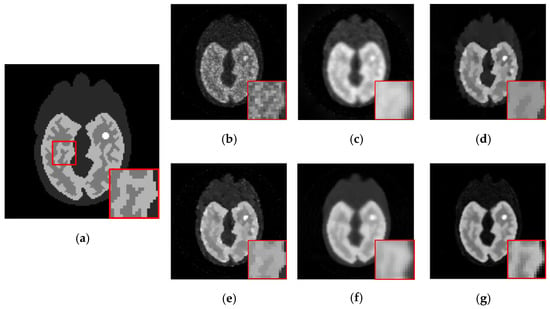

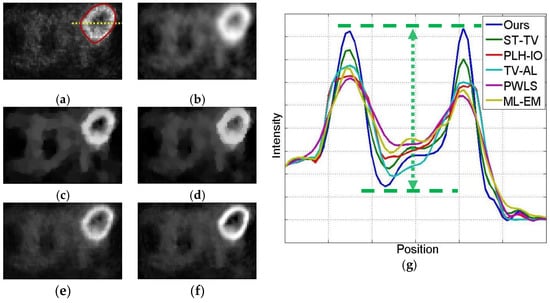

We evaluate our method both qualitatively and quantitatively on simulation and real PET data. We firstly compare the reconstructions for 64 64 sized Zubal brain data under 3 107 total photon counts, and demonstrate 5th, 11th, and 17th frame in Figure 6. In Figure 7, we further compare the 11th reconstructed frames under lower-counted sequences: 3 106 and 1 107 photon counts. In addition, we recover the 111 111 sized Zubal head phantom to validate the performance under different sizes and TACs, as shown in Figure 8. For the real patient study, we demonstrate the first frame of dynamic cardiac PET reconstructions in Figure 9, and compute the CNR for each method.

Figure 6.

Dynamic brain phantom reconstructed by different algorithms. The total photon counts are over 18 image frames. From the first to the last row: the 5th, 11th and 17th frame. (a) ground truth, (b) ML-EM (16.01 dB, 16.41 dB, 15.92 dB), (c) PWLS (17.71dB, 16.88dB, 16.23dB), (d) TV-AL (18.49 dB, 18.76 dB, 18.52 dB), (e) PLH-IO (21.26 dB, 19.56 dB, 18.39 dB), (f) ST-TV (21.27 dB, 20.59 dB, 19.02 dB), (g) Ours (21.79 dB, 21.71 dB, 20.63 dB).

Figure 7.

The simulated low-dosed images reconstructed by different algorithms. First row: the 11th frame under 186,337 photon counts. Second row: the 11th frame under 619,848 photon counts. (a) ML-EM (12.51 dB, 15.06 dB), (b) PWLS (15.89 dB, 16.67 dB), (c) TV-AL (16.09 dB, 16.45 dB), (d) PLH-IO (16.83 dB, 18.84 dB), (e) ST-TV (17.43 dB, 19.21 dB), (f) Ours (17.78 dB, 19.98 dB).

Figure 8.

The 15th frame of 111 111 sized Zubal head phantom reconstructed by different algorithms. (a) ground truth, (b) ML-EM (18.19 dB), (c) PWLS (18.35 dB), (d)TV-AL (19.22 dB), (e) PLH-IO (19.17 dB), (f) ST-TV (19.45 dB), (g) Ours (19.73 dB).

Figure 9.

The 2nd frame of the dynamic cardiac real data, under 221,858 photon counts. The ROI is marked by a red circle. (a) ML-EM (CNR = 10.97), (b) PWLS (CNR = 13.72), (c) TV-AL(CNR = 18.46), (d) PLH-IO (CNR = 19.59), (e) ST-TV (CNR = 22.06), (f) Ours (CNR = 22.70), (g) the intensity profile across the ROI (the yellow line in the first graph). Our proposed method presents a superior contrast between the ROI and background region.

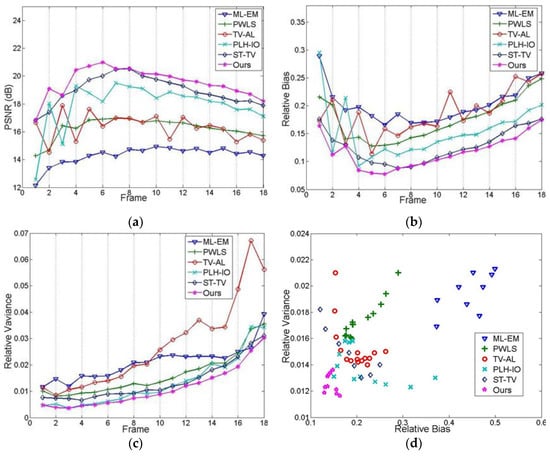

In the quantitative evaluations, we firstly present the PSNR, relative bias and variance for data under 3 107, 3 106 and 1 107 photon counts in Table 1; furthermore, we compute the relative bias and variance in each ROI for 3 106 counted data and demonstrate them in Table 2. In Figure 10a–c, we compute the PSNR, relative bias, and variance for each frame in 1 107 counted data. In Figure 10d, we demonstrate the performance of the trade-off between resolution and smoothness, by altering the parameters in each algorithm and plotting the bias and variance in each reconstruction.

Table 1.

Average value of statistical evaluation terms for reconstructed Brain Phantom sequences under different photon counts.

Table 2.

Average value of relative bias and variance in each region of interest (ROI). 3 × 106 photon counted data are tested and shown.

Figure 10.

The quantitative evaluation for each image frame. (a) PSNR, (b) relative bias, (c) relative variance, (d) the experiment on the trade-off between image resolution and denoising performance.

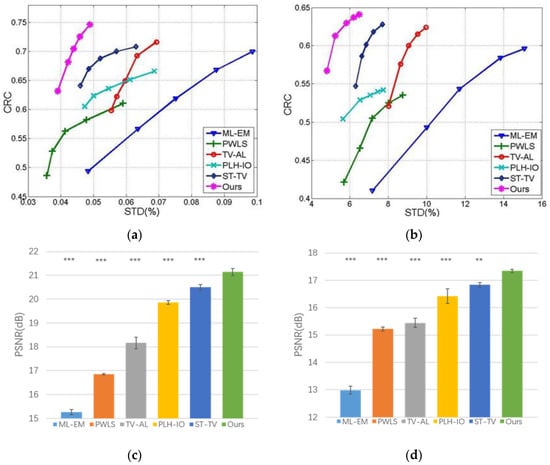

Moreover, we conduct the experiment on multiple simulations. In our experiment, we run 100 realizations for 3 107 and 3 106 counted data, and draw the CRC-STD curves, as shown in Figure 11a–b. Accordingly, the CRC is computed from ROI 2, and the background STD is computed from ROI 4, which represents the white matter. In addition, we run the Wilcoxon rank sum test on the multiple realization test, as demonstrated in Figure 11c–d. In addition, we further examine the universality of the proposed method by reconstructing data under wider range of photon counts, as demonstrated in Figure 11a, from 1 106 to 1 108. Further discussions on convergence are illustrated in Figure 11b and the discussion section.

Figure 11.

The statistical test on multiple realizations. First row: the CRC-STD curves for high-count and low-count simulations, with realization number R = 50 in each dataset. Second row: the PSNR bar plot and Wilcoxon rank sum test on multiple realizations datasets. Here ** and *** represent p-value < 0.01 and p-value < 0.001 respectively. (a) CRC-STD curves on 3 × 107 dataset, (b) CRC-STD curves on the 3 × 106 dataset, (c) Wilcoxon rank sum test on 3 × 107 dataset, and (d) Wilcoxon rank sum test on the 3 × 106 dataset.

4.2. Results

4.2.1. Qualitative Evaluation

The initial experiment focuses on the resolution and the denoising performance throughout the temporal dimension. According to the TAC in Figure 4, the distinction of activity between different ROIs is inconspicuous in the early imaging stage, which consequently hampers the recovery of the structural information in the corresponding image frames. As demonstrated in Figure 6, the reconstructions of ML-EM and PWLS suffer from severe iterative noise and fail to recover a clear boundary between regions. On the other hand, the TV-AL and PLH-IO show more acceptable results for the 17th frame. However, when it comes to former frames, neither of these two methods are able to recover clear structures. Moreover, the TV-AL suffers from the staircase effect and artifacts, and PLH-IO tends to over-smooth the image. Although ST-TV improves the resolution by incorporating temporal information, it is still limited by recovering more detailed structures. In contrast, our proposed method manages to recover more detailed structures and less noise in reconstructing the brain phantom sequence under photon counts. This contrast is more distinctive in simulated low-dose images. As we can see in Figure 7, when recovering early frames in the low-count data, our proposed method is able to recover substantially clearer structures than those of other methods under similar noise levels.

Meanwhile, our method also shows its universality in recovering sequences under different sizes and TACs. In this experiment, 111 111 sized Zubal head phantom data were tested in an 18F-FDG environment. In Figure 8, the 15th frame is randomly selected out of 24 frames. In addition, real patient data are tested. In Figure 9, the second sequence is shown, and the photon counts are around 2.2 105. Obviously, our proposed method yields clearer boundaries and more conspicuous contrast between ROIs and the background.

4.2.2. Quantitative Evaluation

The quantitative measurements are also meticulously implemented. Table 1 demonstrates the average statistical values for the dynamic image sequences under diversified photon counts. According to the table, the proposed method enjoys a higher PSNR and lower relative bias and variance, revealing solid and substantial merits in structural enhancement, resolution improvement, and image denoising. Table 2 provides more detailed measurements for each ROI reconstruction. As shown in the table, the proposed method reconstructs images at a lower bias and variance in each ROI to vary the count level, which demonstrates better resolution and smoothness than those of comparable methods.

Other than spatial information, temporal trends are also considered in Figure 10, where Figure 10a–c presents the PSNR, bias, and variance for each frame. It can be easily observed that the proposed method, overall, has better results for each frame, which will facilitate the exploitation of temporal information. In addition, given the fact that regular reconstruction methods are largely based on the trade-off between the resolution and the noise level, we also implemented an experiment of this trade-off, correspondingly represented by the relative bias and the relative variance in Figure 10d. As we can see, apart from the relatively poorer performances of ML-EM and PWLS, both TV-AL and PLH-IO show a negative correlation between these two indexes. In contrast, the marks of our proposed method are densely concentrated in the bottom-left of this graph, which shows a better image quality and better compromise for the mentioned trade-off.

To better validate the proposed method, we further implement the experiments on multiple realizations. As demonstrated in Figure 11a–b, we run the experiments under high-count and low-count scenarios (3 107 and 3 106 counted data) and draw the correspondent CRC-STD curves, which illustrate the performance of compared methods. In these curves, each point corresponds to a certain setting for parameters in the relative method. According to the figures, the proposed method manages to recover higher CRC while keeping the background STD in a low level, which validates the stability of our method in reducing the noise while keeping the contrast distinctive. Moreover, we analyze the statistical performance of the Wilcoxon rank sum test on the multiple realizations data. As shown in Figure 11c–d, we compute the p-value between the proposed method and compared methods, in terms of PSNR on multi-simulation. In this study, the proposed method significantly outperforms the ST-TV in 3 106 dataset, at p-value < 0.01; more distinctively, the proposed method outperforms other methods in 3 106 dataset and all methods in 3 107 dataset, at a p-value < 0.001.

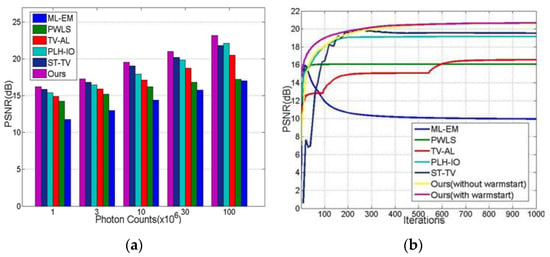

4.2.3. Robustness and Convergence Analysis

The robustness and convergence experiments are demonstrated in this section. As we can see from Figure 12a, the proposed method presents better performance and robustness than other methods under a broad range of photon counts.

Figure 12.

The robustness and convergence experiments. (a) the PSNR of reconstructed sequences under diversified photon counts; (b) the PSNR convergent trends along iterations.

5. Discussion

Other than merely denoising, the proposed method simultaneously provides enhancement and completion of structural sparsity by introducing a 3D tensor based nonlocal low-rank constraint. Unlike the tracer kinetics based dynamic reconstruction method, the proposed method spontaneously exploits the inner temporal correlation within the sequence without the need for tracer information and model fitting. In fact, our proposed method not only managed to suppress noise while recovering at a high resolution, it also enhanced, and even completed, the structural information in the reconstruction sequence.

This result is attributed to the tensor based low rank approximation. As presented in Figure 3, the third dimension of the exists alongside the temporal information. By implementing the tensor based low rank constraint on each , the spatial information is spontaneously infiltrated from high-counted frames to low-counted frames, while keeping the voxels’ relative intensities and edge arrangements fixed. Considering this feature, a dynamic PET sequence is considered ideal for this framework, given the unchanged boundary and structures along the dynamic sequences. On the other hand, since noise is randomly and sparsely arranged in the PET sequence, it is ruled out as the sparse component in the low rank approximation.

Furthermore, we demonstrate the contribution of different regularization components in Figure 13 by setting various hyper-parameters. According to the figure, tensor constraints can successfully recover detailed structures but are slightly limited in smoothing an image under low counts. Fortunately, the employment of a TV constraint compensates for this issue, as demonstrated in Figure 13c.

Figure 13.

The comparison of reconstructed images under different settings of hyper-parameters. (a) reconstruction constrained by TV regularization (by setting α = 0), (b) reconstruction constrained by nonlocal low rank tensor (by setting β = 0), (c) image reconstructed by proposed methods (α = 2.5, β = 1.4).

Nevertheless, there are still several concerns in this study. Firstly, for the data in unwilling but conspicuous motion, the proposed method is limited in harvesting temporal correlation, though other dynamic PET algorithms also suffer from this issue, to the best of our knowledge. Currently, the optimal solution is to conduct motion correction before reconstruction. Secondly, since 3D structural nonlocal features are not well proven in the computer vision community, this method is not guaranteed to function well in 3D PET reconstruction. However, we still provide two solutions for applying the proposed method in 3D data at the current stage: (1) Conduct the method slice by slice; and (2) rebin the data into a 2D form before reconstruction.

The last issue focuses on the computational cost. For simulated data in Figure 4, the computational time for each method is demonstrated in Table 3. Here, the computational experiments are implemented under Matlab R2014a (Mathworks, Natick, MA., USA), on the same desktop with an Intel Core i7-4720HQ CPU (Santa Clara, CA, USA) @2.60 GHz and 8 GB RAM. We have to concede that, compared with the traditional pixel-based algorithms, the computational cost is inevitable in the proposed method, due to the multiple decompositions for feature tensors generated by feature cubes (or patches in 2D situation [23,24]). To address this issue, we will continue optimizing the proposed algorithm and employing other algorithms, such as TCTF [51].

Table 3.

Computational time for each method.

In addition, the choice for the tensor decomposition model is still open in our future work. The T-SVD based method is proved effective in our work and [34,35,36], yet, strictly speaking, its tubal based rank is the analogous rank extended from SVD. In our future work, we will further analyze the data-structures, explore the feasibilities of other potential models, i.e., CP and Tucker rank [31], and testify the applicabilities of the latest proposed CP rank based methods [52,53] as well as Tucker rank based methods [54,55]).

6. Conclusions

In this paper, we provide a novel tensor based nonlocal low-rank framework for dynamic PET reconstruction. By introducing a nonlocal featured tensor and applying the t-SVT in low-rank tensor approximation, the image structures are efficiently enhanced while effectively depressing the noise. More significantly, structural information is further completed by other frames in an interactive way, thereby compromising the conflict between spatial and temporal resolution. On the other hand, accompanied by the TV term denoising (from a local and pixel-based perspective), the regularizations are firstly integrated in the Poisson reconstruction model and efficaciously optimized in a distributed framework.

Author Contributions

Conceptualization, N.X. and H.L.; Formal analysis, N.X.; Funding acquisition, H.L.; Investigation, N.X.; Methodology, N.X. and Y.C.; Project administration, H.L.; Supervision, Y.C. and H.L.; Validation, N.X.; Writing—original draft, N.X.; Writing—review and editing, Y.C. and H.L.

Funding

This research was funded by National Key Technology Research and Development Program of China (No: 2017YFE0104000, 2016YFC1300302); National Natural Science Foundation of China (No: 61525106, 61427807, U1809204); NSF/DMS1719932.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bohuslavizki, K.H.; Klutmann, S.; Kroger, S.; Sonnemann, U.; Buchert, R.; Werner, J.A.; Mester, J.; Clausen, M. FDG PET detection of unknown primary tumors. J. Nucl. Med. 2000, 41, 816. [Google Scholar] [PubMed]

- Ichiya, Y.; Kuwabara, Y.; Sasaki, M.; Yoshida, T.; Akashi, Y.; Murayama, S.; Nakamura, K.; Fukumura, T.; Masuda, K. FDG-PET in infectious lesions: The detection and assessment of lesion activity. Ann. Nucl. Med. 1996, 10, 185–191. [Google Scholar] [CrossRef] [PubMed]

- Basu, S.; Bresler, Y. O(N/sup 2/log/sub 2/N) filtered Backprojection Reconstruction Algorithm for Tomography. IEEE Trans. Image Process. 2000, 9, 1760–1773. [Google Scholar] [CrossRef] [PubMed]

- Kaufman, L. Maximum likelihood, least squares, and penalized least squares for PET. IEEE Trans. Med. Imaging 1993, 12, 200–214. [Google Scholar] [CrossRef]

- Shepp, L.A.; Vardi, Y. Maximum likelihood reconstruction for emission tomography. IEEE Trans. Med. Imaging 1982, 1, 113–122. [Google Scholar] [CrossRef]

- Qi, J.; Leahy, R.M. Iterative reconstruction techniques in emission computed tomography. Phys. Med. Biol. 2006, 51, R541. [Google Scholar] [CrossRef]

- Levitan, E.; Herman, G.T. A maximum a posteriori probability expectation maximization algorithm for image reconstruction in emission tomography. IEEE Trans. Med. Imaging 1987, 6, 185–192. [Google Scholar] [CrossRef]

- Fessler, J.A. Penalized weighted least-squares image reconstruction for positron emission tomography. IEEE Trans. Med. Imaging 1994, 13, 290–300. [Google Scholar] [CrossRef]

- Relaxation, S. Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 6, 721–741. [Google Scholar]

- Bouman, C.A.; Sauer, K. A unified approach to statistical tomography using coordinate descent optimization. IEEE Trans. Image Process. 1996, 5, 480–492. [Google Scholar] [CrossRef]

- Huber, P.J. Robust Statistics; Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Figueiredo, M.A.T.; Bioucas-Dias, J.M. Restoration of poissonian images using alternating direction optimization. IEEE Trans. Image Process. 2010, 19, 3133–3145. [Google Scholar] [CrossRef] [PubMed]

- Tong, S.; Shi, P. Tracer kinetics guided dynamic PET reconstruction. In Proceedings of the Biennial International Conference on Information Processing in Medical Imaging, Kerkrade, The Netherlands, 2–6 July 2007; pp. 421–433. [Google Scholar]

- Kamasak, M.E.; Bouman, C.A.; Morris, E.D.; Sauer, K. Direct reconstruction of kinetic parameter images from dynamic PET data. IEEE Trans. Med. Imaging 2005, 24, 636–650. [Google Scholar] [CrossRef] [PubMed]

- Kawamura, N.; Yokota, T.; Hontani, H.; Sakata, M.; Kimura, Y. Parametric PET Image Reconstruction via Regional Spatial Bases and Pharmacokinetic Time Activity Model. Entropy 2017, 19, 629. [Google Scholar] [CrossRef]

- Wang, G.; Qi, J. PET image reconstruction using kernel method. IEEE Trans. Med. Imaging 2015, 34, 61–71. [Google Scholar] [CrossRef]

- An, L.; Zhang, P.; Adeli, E.; Wang, Y.; Ma, G.; Shi, F.; Lalush, D.S.; Lin, W.; Shen, D. Multi-level canonical correlation analysis for standard-dose PET image estimation. IEEE Trans. Med. Imaging 2016, 25, 3303–3315. [Google Scholar] [CrossRef]

- Chen, S.; Liu, H.; Hu, Z.; Zhang, H.; Shi, P.; Chen, Y. Simultaneous reconstruction and segmentation of dynamic PET via low-rank and sparse matrix decomposition. IEEE Trans. Biomed. Eng. 2015, 62, 1784–1795. [Google Scholar] [CrossRef]

- Kawai, K.; Yamada, J.; Hontani, H.; Yokota, T.; Sakata, M.; Kimura, Y. A robust PET image reconstruction using constrained non-negative matrix factorization. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 1815–1818. [Google Scholar]

- Kawai, K.; Hontani, H.; Yokota, T.; Sakata, M.; Kimura, Y. Simultaneous PET Image Reconstruction and Feature Extraction Method using Non-negative, Smooth, and Sparse Matrix Factorization. In Proceedings of the 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 1334–1337. [Google Scholar]

- Yokota, T.; Kawai, K.; Sakata, M.; Kimura, Y.; Hontani, H. Dynamic PET Image Reconstruction Using Nonnegative Matrix Factorization Incorporated with Deep Image Prior. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October 2019. [Google Scholar]

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 60–65. [Google Scholar]

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted nuclear norm minimization with application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 2862–2869. [Google Scholar]

- Egiazarian, K.; Foi, A.; Katkovnik, V. Compressed sensing image reconstruction via recursive spatially adaptive filtering. In Proceedings of the Image Processing (ICIP 2007), San Antonio, TX, USA, 16 September–19 October 2007; pp. I-549–I-552. [Google Scholar]

- Zhang, X.; Yuan, X.; Carin, L. Nonlocal Low-Rank Tensor Factor Analysis for Image Restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8232–8241. [Google Scholar]

- Wang, L.; Xiong, Z.; Shi, G.; Wu, F.; Zeng, W. Adaptive nonlocal sparse representation for dual-camera compressive hyperspectral imaging. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2104–2111. [Google Scholar] [CrossRef]

- Dong, W.; Shi, G.; Li, X.; Ma, Y.; Huang, F. Compressive sensing via nonlocal low-rank regularization. IEEE Trans. Image Process. 2014, 23, 3618–3632. [Google Scholar] [CrossRef]

- Zhu, L.; Fu, C.W.; Brown, M.S.; Heng, P.A. A non-local low-rank framework for ultrasound speckle reduction. In Proceedings of the 2017 IE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’17), Honolulu, HI, USA, 21–26 July 2017; pp. 5650–5658. [Google Scholar]

- Xie, N.; Chen, Y.; Liu, H. Nonlocal Low-Rank and Total Variation Constrained PET Image Reconstruction. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3874–3879. [Google Scholar]

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122. [Google Scholar] [CrossRef]

- Kolda, T.G.; Bader, B.W. Tensor Decompositions and Applications. SIAM Rev. 2009, 51, 455–500. [Google Scholar] [CrossRef]

- Liu, J.; Musialski, P.; Wonka, P.; Ye, J. Tensor completion for estimating missing values in visual data. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 208–220. [Google Scholar] [CrossRef] [PubMed]

- Romera-Paredes, B.; Pontil, M. A new convex relaxation for tensor completion. In Proceedings of the Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013. [Google Scholar]

- Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor robust principal component analysis: Exact recovery of corrupted low-rank tensors via convex optimization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5249–5257. [Google Scholar]

- Zhang, Z.; Aeron, S. Exact tensor completion using t-SVD. IEEE Trans. Signal Process. 2015, 65, 1511–1526. [Google Scholar] [CrossRef]

- Kilmer, M.E.; Braman, K.; Hao, N.; Hoover, R.C. Third-order tensors as operators on matrices: A theoretical and computational framework with applications in imaging. SIAM J. Matrix Anal. Appl. 2013, 34, 148–172. [Google Scholar] [CrossRef]

- Kilmer, M.E.; Martin, C.D. Factorization strategies for third-order tensors. Linear Algebra Its Appl. 2011, 435, 641–658. [Google Scholar] [CrossRef]

- Yokota, T.; Hontani, H. Simultaneous visual data completion and denoising based on tensor rank and total variation minimization and its primal-dual splitting algorithm. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3732–3740. [Google Scholar]

- Lu, C.; Feng, J.; Liu, W.; Lin, Z.; Yan, S. Tensor robust principal component analysis with a new tensor nuclear norm. IEEE Trans. Pattern Anal. Mach. Intell. 2019. [Google Scholar] [CrossRef] [PubMed]

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268. [Google Scholar] [CrossRef]

- Hestenes, M.R. Multiplier and gradient methods. J. Optim. Theor. Appl. 1969, 4, 303–320. [Google Scholar] [CrossRef]

- Powell, M.J.D. A method for nonlinear constraints in minimization problems. Optimization 1969, 5, 283–298. [Google Scholar]

- Lange, K.; Carson, R. EM reconstruction algorithms for emission and transmission tomography. J. Comput. Assisted Tomogr. 1984, 8, 306–316. [Google Scholar]

- Elad, M. Why Simple Shrinkage Is Still Relevant for Redundant Representations? IEEE Trans. Inf. Theory 2006, 52, 5559–5569. [Google Scholar] [CrossRef]

- Ahn, S.; Fessler, J.A.; Blatt, D.; Hero, A.O. Incremental optimization transfer algorithms: Application to transmission tomography. In Proceedings of the IEEE Symposium Conference Record Nuclear Science, Rome, Italy, 16–22 October 2004; pp. 2835–2839. [Google Scholar]

- Gong, K.; Cheng-Liao, J.; Wang, G.; Chen, K.T.; Catana, C.; Qi, J. Direct patlak reconstruction from dynamic PET data using the kernel method with MRI information based on structural similarity. IEEE Trans. Med. Imaging 2017, 37, 955–965. [Google Scholar] [CrossRef] [PubMed]

- Dutta, J.; Ahn, S.; Li, Q. Quantitative statistical methods for image quality assessment. Theranostics 2013, 3, 741. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Yin, W.; Jiang, H.; Zhang, Y. An efficient augmented Lagrangian method with applications to total variation minimization. Comput. Optim. Appl. 2013, 56, 507–530. [Google Scholar] [CrossRef]

- Lingenfelter, D.J.; Fessier, J.A. Augmented Lagrangian methods for penalized likelihood reconstruction in emission tomography. In Proceedings of the Nuclear Science Symposium Conference Record (NSS/MIC), Knoxville, TN, USA, 30 October–6 November 2010; pp. 3288–3291. [Google Scholar]

- Abascal, J.; Lage, E.; Herraiz, J.L.; Martino, M.E.; Desco, M.; Vaquero, J.J. Dynamic PET reconstruction using the split bregman formulation. In Proceedings of the 2016 IEEE Nuclear Science Symposium, Medical Imaging Conference and Room-Temperature Semiconductor Detector Workshop (NSS/MIC/RTSD), Strasbourg, France, 29 October–6 November 2016; pp. 1–4. [Google Scholar]

- Zhou, P.; Lu, C.; Lin, Z.; Zhang, C. Tensor factorization for low-rank tensor completion. IEEE Trans. Image Process. 2017, 27, 1152–1163. [Google Scholar] [CrossRef]

- Battaglino, C.; Ballard, G.; Kolda, T.G. A practical randomized CP tensor decomposition. SIAM J. Matrix Anal. Appl. 2018, 39, 876–901. [Google Scholar] [CrossRef]

- Henderson, J.; Malin, B.A.; Denny, J.C.; Kho, A.N.; Sun, J.; Ghosh, J.; Ho, J.C. CP Tensor Decomposition with Cannot-Link Intermode Constraints. In Proceedings of the 2019 SIAM International Conference on Data Mining, Calgary, AB, Canada, 2–4 May 2019; pp. 711–719. [Google Scholar]

- Chakaravarthy, V.T.; Choi, J.W.; Joseph, D.J.; Murali, P.; Pandian, S.S.; Sabharwal, Y.; Sreedhar, D. On optimizing distributed Tucker decomposition for sparse tensors. In Proceedings of the International Conference on Supercomputing, Beijing, China, 12–15 June 2018; pp. 374–384. [Google Scholar]

- Smith, S.; Karypis, G. Accelerating the tucker decomposition with compressed sparse tensors. In Proceedings of the European Conference on Parallel Processing, Cham, Switzerland, 28 August–1 September 2017; pp. 653–668. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).