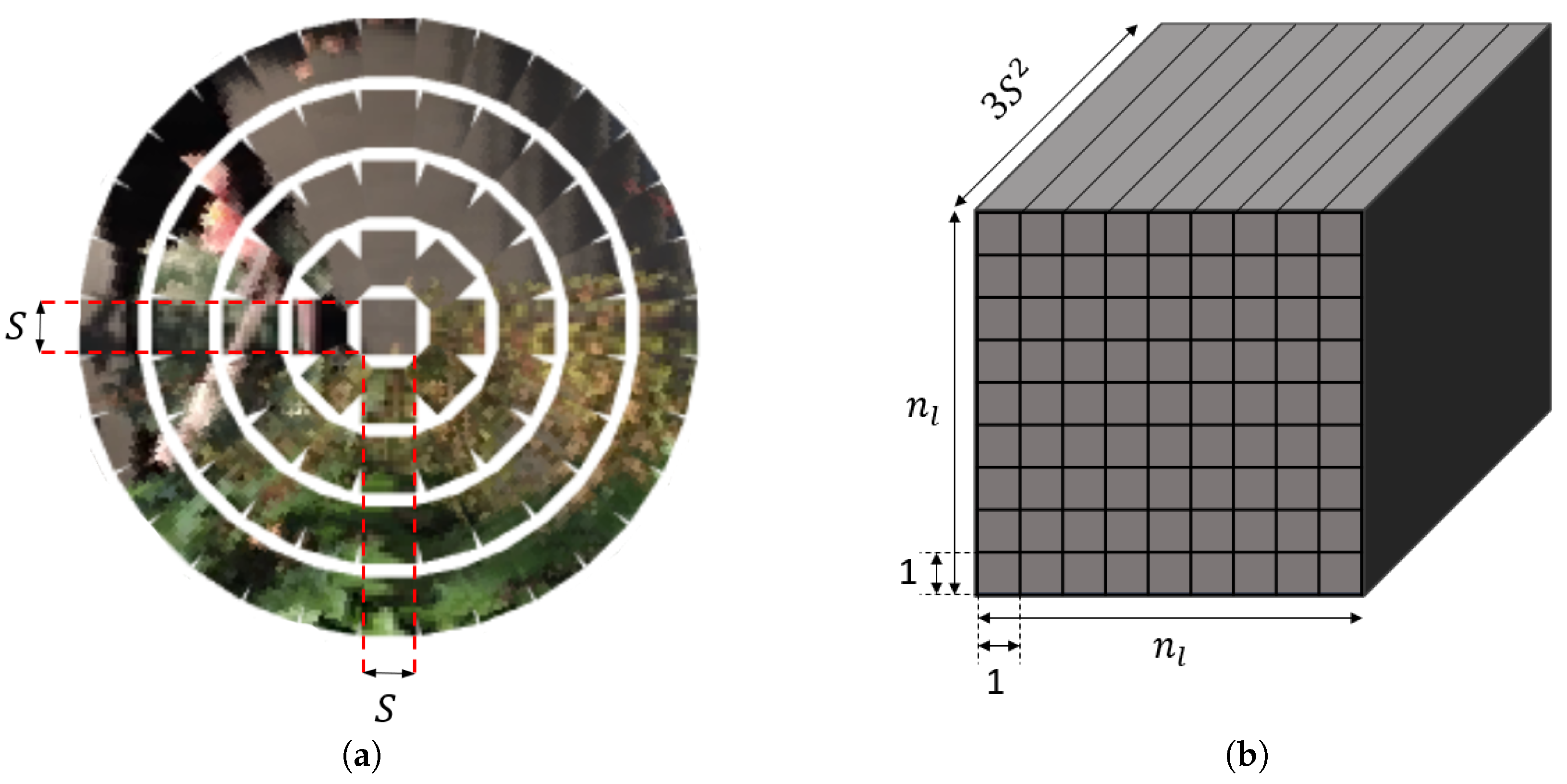

Figure 1.

An illustration of the compound image vectorization. Constrained compound images can be transformed to tensor representations of multi-dimensional 2D images. (a) Each single eye image has the size of , and reshaped into a vector of dimension . (b) The whole compound image is reshaped into a tensor of dimension . Here, is the size of the transformed tensor.
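The reshaping described in this caption can be sketched as follows. This is a minimal illustration only: the grid dimensions, the single eye size, and the function name `vectorize_compound_image` are assumptions made for the example (the symbols in the caption were lost), and grayscale pixels are assumed for brevity.

```python
def vectorize_compound_image(eyes):
    """Flatten every single eye image into a vector, keeping the eye grid layout.

    eyes[i][j] is the 2D single eye image (a list of pixel rows) at grid
    position (i, j). The result has one flat vector per grid position, i.e.
    a nested list of shape rows x cols x (eye_height * eye_width).
    """
    return [[[px for row in eye for px in row] for eye in eye_row]
            for eye_row in eyes]


# Hypothetical 2 x 3 grid of 2 x 2 single eye images.
eye = [[1, 2], [3, 4]]
compound = [[eye for _ in range(3)] for _ in range(2)]
tensor = vectorize_compound_image(compound)
shape = (len(tensor), len(tensor[0]), len(tensor[0][0]))  # (2, 3, 4)
```

After this step each grid position carries one feature vector, so the compound image can be treated like an ordinary multi-channel 2D image.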

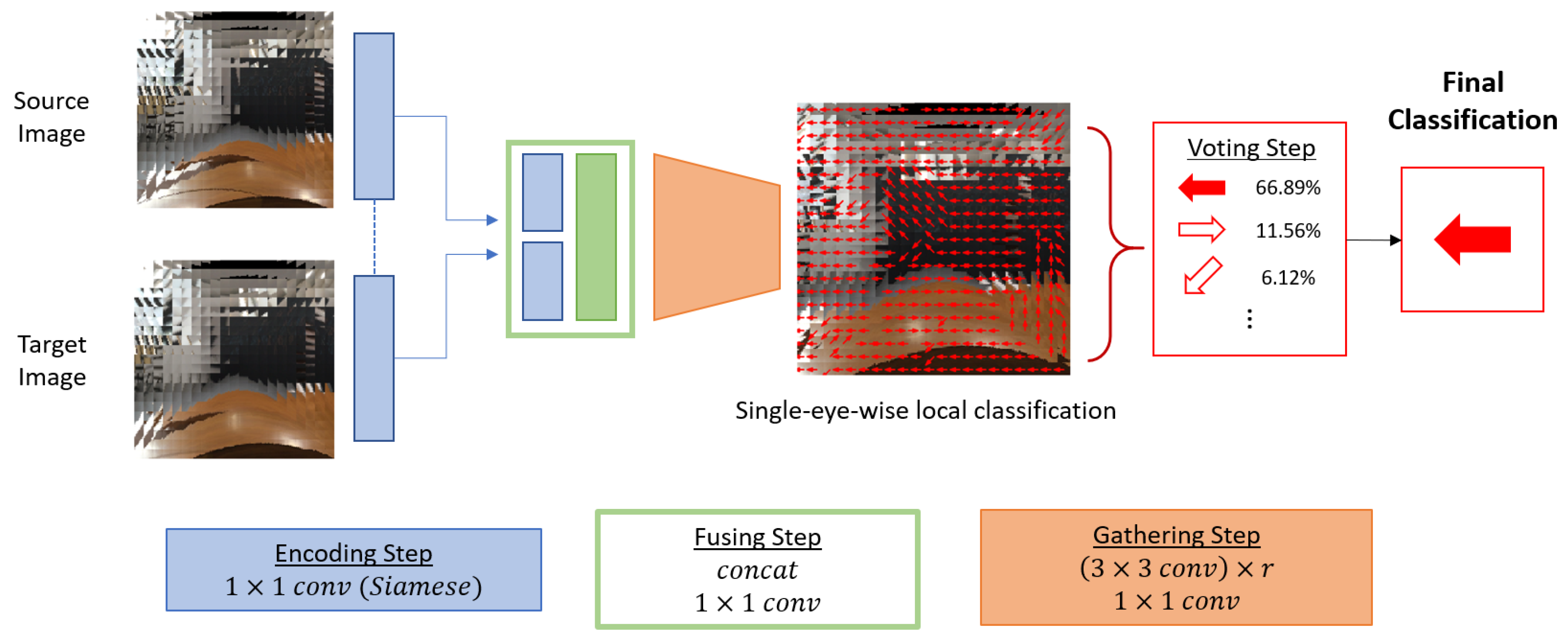

Figure 2.

Overview of the proposed compound eye ego-motion classification network. The whole structure consists of four steps: the encoding step, the fusing step, the gathering step, and the voting step. The encoding step consists of a convolutional layer with a Siamese network structure that encodes the two inputs in the same manner. In the fusing step, the encoded features from the two inputs are concatenated and fused by a convolutional layer. In the gathering step, convolutional layers are applied to widen the receptive field of each local classification. r is the number of stacked convolutional layers, which determines the range of the receptive field in each configuration. At the end of the gathering step, the local motion class of each single eye is obtained by a convolution. The voting step determines the final classification by finding the mode class among the local motion classifications.
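The voting step can be illustrated with a short sketch. It assumes the local classes are integer labels on the single eye grid, and it reports the share of votes received by the winning class, which is how Table 4 describes the confidence value. The function name `vote` and the tie-breaking rule (first class encountered) are assumptions of this sketch, not details taken from the paper.

```python
from collections import Counter


def vote(local_classes):
    """Return (mode class, confidence in percent) for a grid of local classes."""
    flat = [c for row in local_classes for c in row]
    winner, votes = Counter(flat).most_common(1)[0]
    return winner, 100.0 * votes / len(flat)


# Hypothetical 2 x 3 grid of single-eye-wise classifications.
winner, confidence = vote([[2, 2, 5],
                           [2, 7, 2]])
# Class 2 receives 4 of the 6 votes.
```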

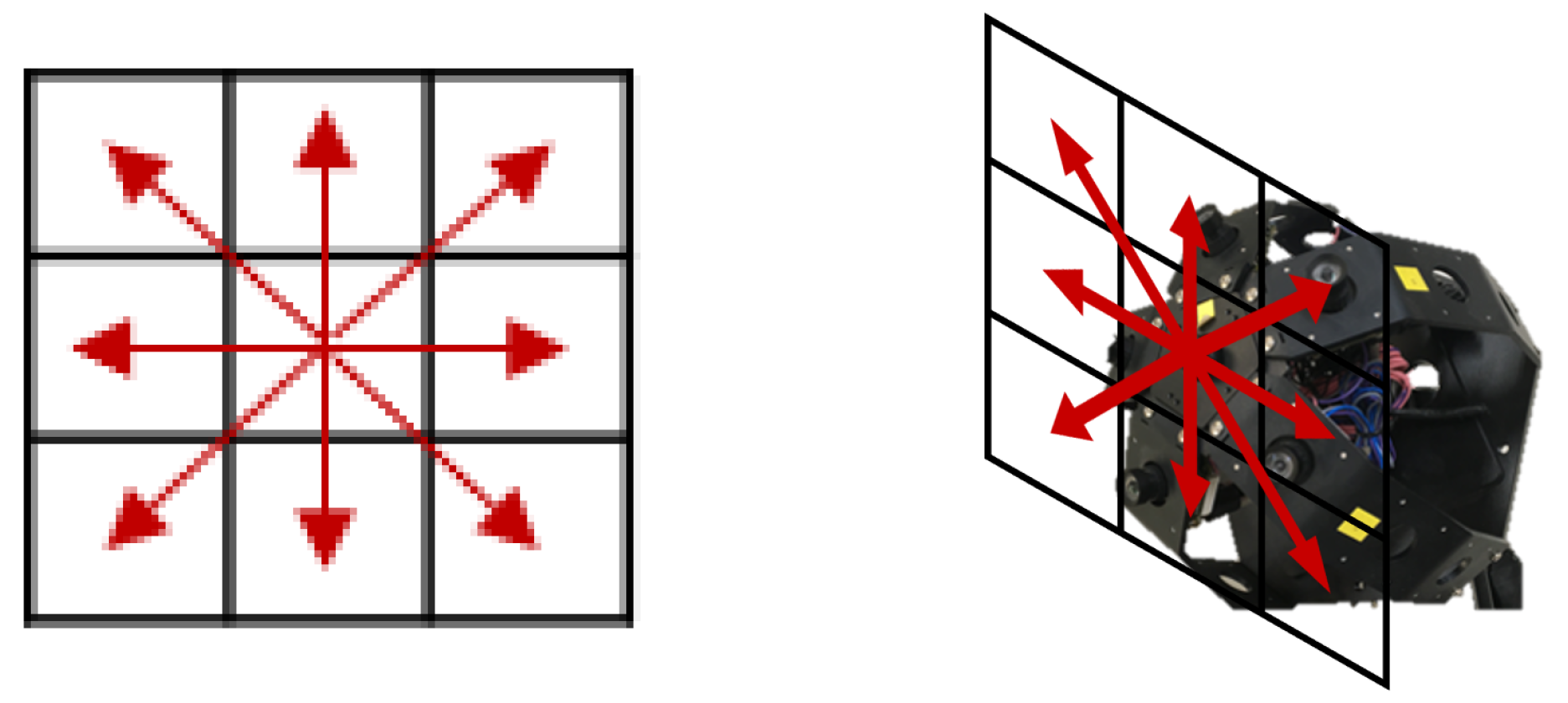

Figure 3.

(a,b): A visualization of the receptive field of a single-eye-wise local motion classification with . (c): A single-eye-wise local motion classification from compound images.
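As a rough guide to how the receptive field grows with r, the sketch below uses the standard relation for stacked stride-1 convolutions: each 3 × 3 layer widens the field by two single eyes, while 1 × 1 layers leave it unchanged. The function name is hypothetical, and the layer configuration is read from Table 1.

```python
def receptive_field(r, kernel=3):
    """Side length, in single eyes, covered after r stacked stride-1 convolutions."""
    return 1 + r * (kernel - 1)


# Receptive field side length for the values of r used in the experiments.
fields = {r: receptive_field(r) for r in range(1, 6)}
```

Under these assumptions, r = 1 covers a 3 × 3 neighborhood of single eyes and r = 5 covers an 11 × 11 neighborhood.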

Figure 4.

Discretized camera motion in eight directions. The discretized camera motion space is a subset of the 2D plane tangent to the center of the camera. The right image illustrates the discretized camera motion with the compound eye camera.
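One way to discretize a motion vector on this tangent plane into eight classes is to bin its angle into 45° sectors. The sketch below is illustrative only: the helper `direction_class` and the class ordering (class 0 along +x, increasing counterclockwise) are assumptions, since the caption does not specify how the classes are indexed.

```python
import math


def direction_class(dx, dy, num_classes=8):
    """Map a 2D motion vector to the index of the nearest of num_classes directions.

    Assumed ordering: class 0 points along +x and indices increase
    counterclockwise. A zero vector falls into class 0 by convention here.
    """
    angle = math.atan2(dy, dx) % (2 * math.pi)
    sector = 2 * math.pi / num_classes
    # Shift by half a sector so each class is centered on its direction.
    return int((angle + sector / 2) // sector) % num_classes


classes = [direction_class(1, 0), direction_class(1, 1),
           direction_class(0, 1), direction_class(-1, 0),
           direction_class(0, -1), direction_class(1, -0.1)]
```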

Figure 5.

An example of an RGB image and the corresponding compound image constructed by the compound image mapping method from [10].

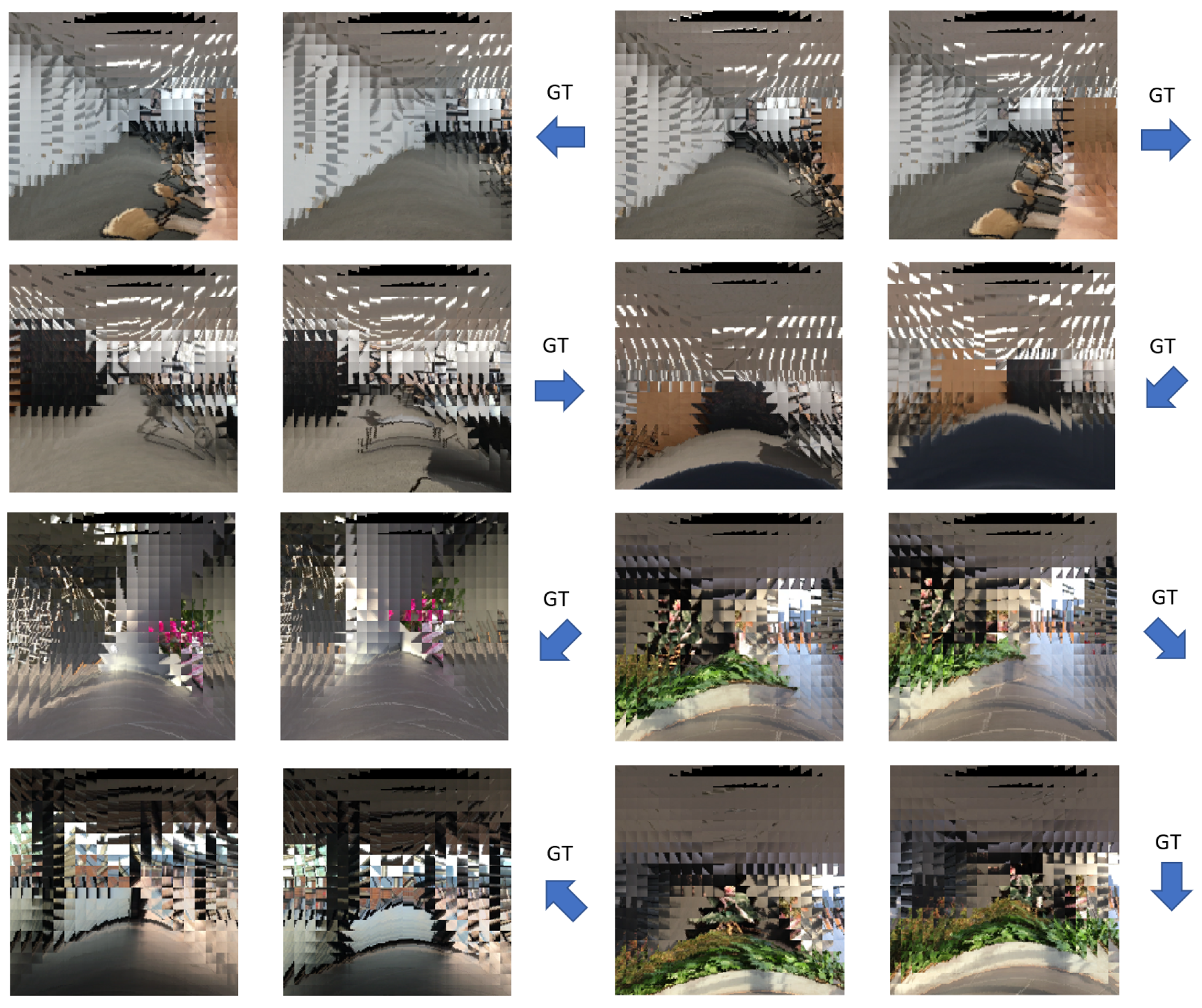

Figure 6.

Some examples from the proposed dataset. Each sample consists of two consecutive compound images and their corresponding ground truth camera motion. The proposed dataset contains scenes from inside and outside a building, such as a classroom, aisle, stairs, and terrace.

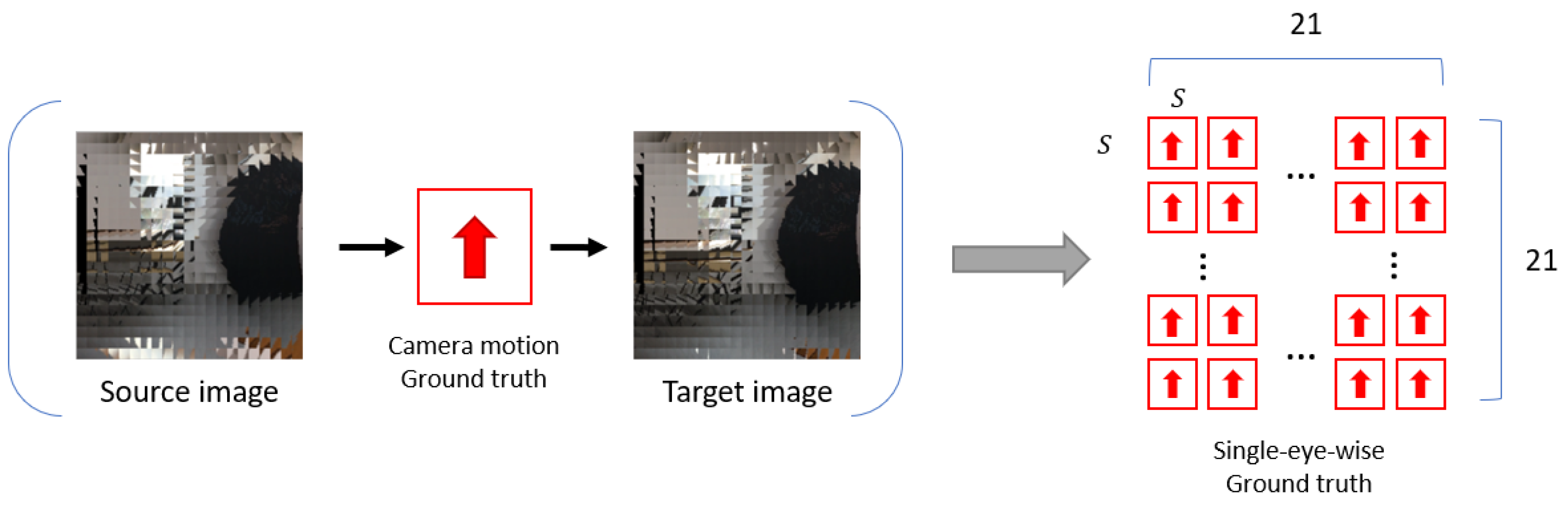

Figure 7.

Single-eye-wise ground truth generation method for training the proposed ego-motion classification network. We assign the ground truth camera motion from the proposed dataset to each of the single eyes. Under the assumption that the majority of single eyes capture the same motion as the ego-motion of the compound eye camera, the single-eye-wise ground truth data generated in this manner can be useful for models that consider the individual spatial information of single eyes, such as the proposed model.
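The label generation described here amounts to replicating the image-level motion class over the single eye grid. A minimal sketch, assuming integer class labels and a hypothetical grid size:

```python
def single_eye_ground_truth(camera_motion, rows, cols):
    """Assign the camera motion class to every single eye position."""
    return [[camera_motion] * cols for _ in range(rows)]


# Hypothetical 3 x 4 eye grid with ground truth camera motion class 5.
labels = single_eye_ground_truth(5, rows=3, cols=4)
```

Each grid position then acts as its own training target, so a per-eye classification loss can be applied at every location.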

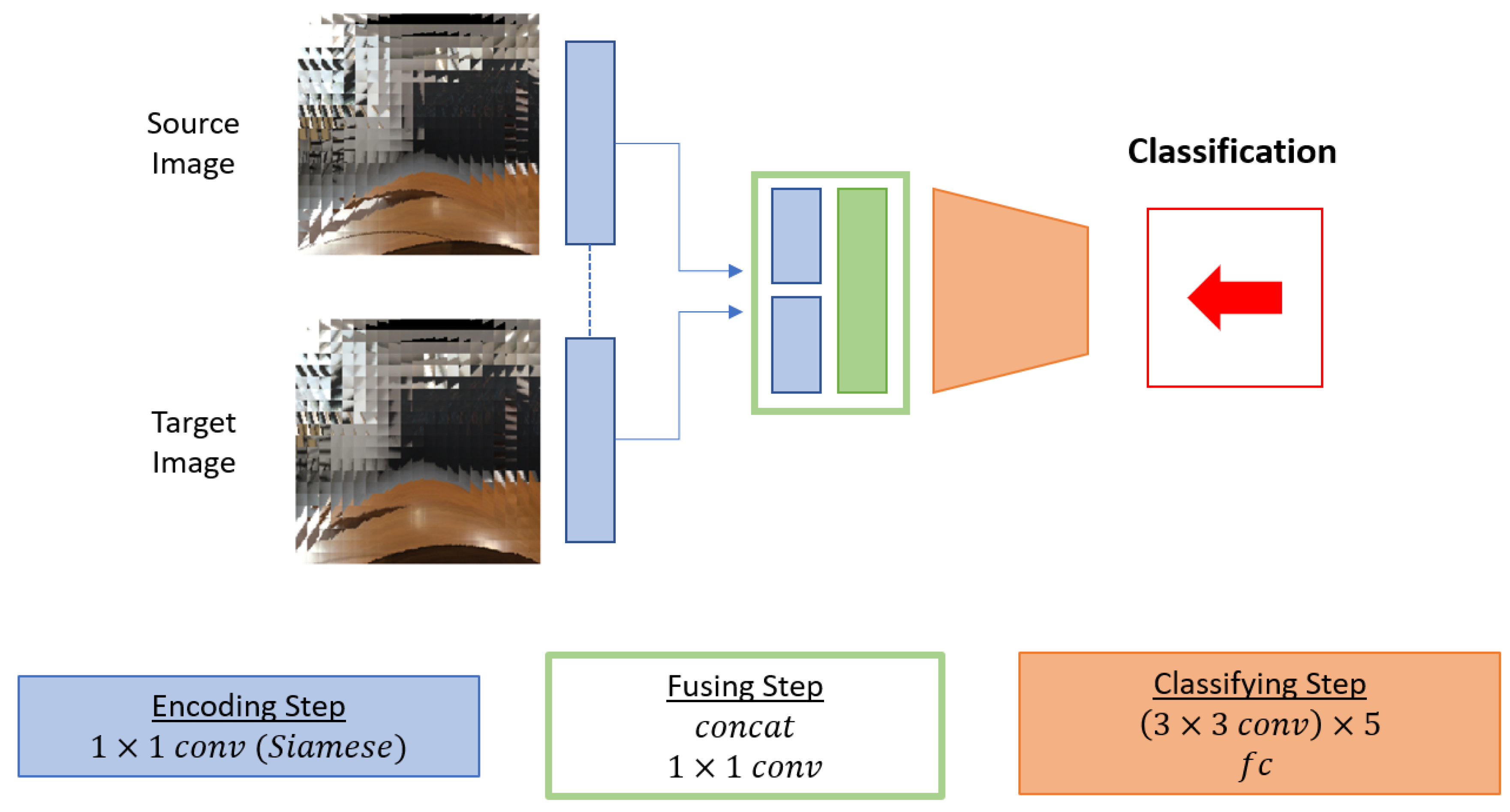

Figure 8.

An overview of the baseline network. The baseline structure consists of three steps: the encoding step, the fusing step, and the classifying step. The encoding step and the fusing step are the same as in the proposed motion estimation network described in Figure 2. The classifying step consists of five convolutional layers and two fully connected layers, where three of the convolutional layers have a stride of 2. The classifying step outputs one motion classification for the whole compound image rather than providing local classes for each single eye location like the proposed network.

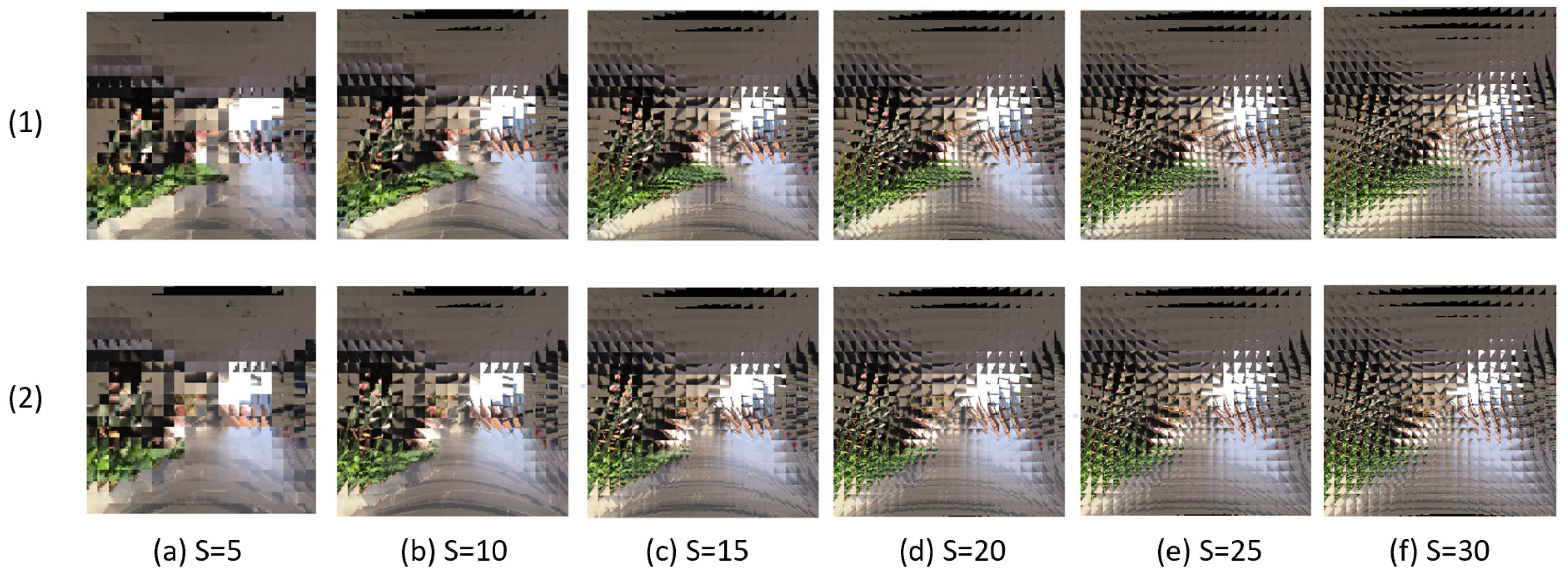

Figure 9.

Examples of pairs of compound images with various S. (1) and (2) represent two sequential scenes in the dataset. (a–f) show the compound images of different S from 5 to 30 at the same scene.

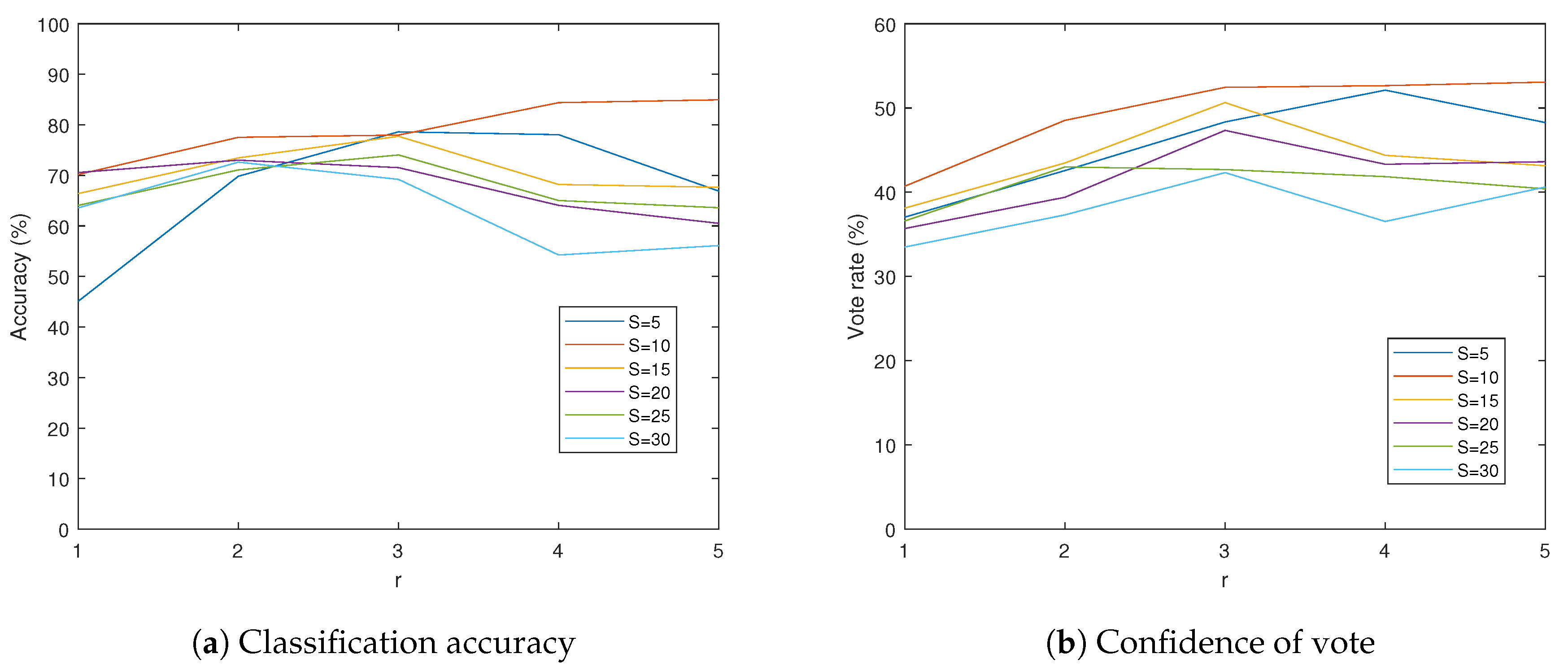

Figure 10.

Results of the proposed compound eye ego-motion classification network in varying S and r. achieves the highest accuracy and confidence value when r is fixed. Similarly, achieves the highest accuracy and confidence value when S is fixed, except for the case which has best performance at .

Figure 11.

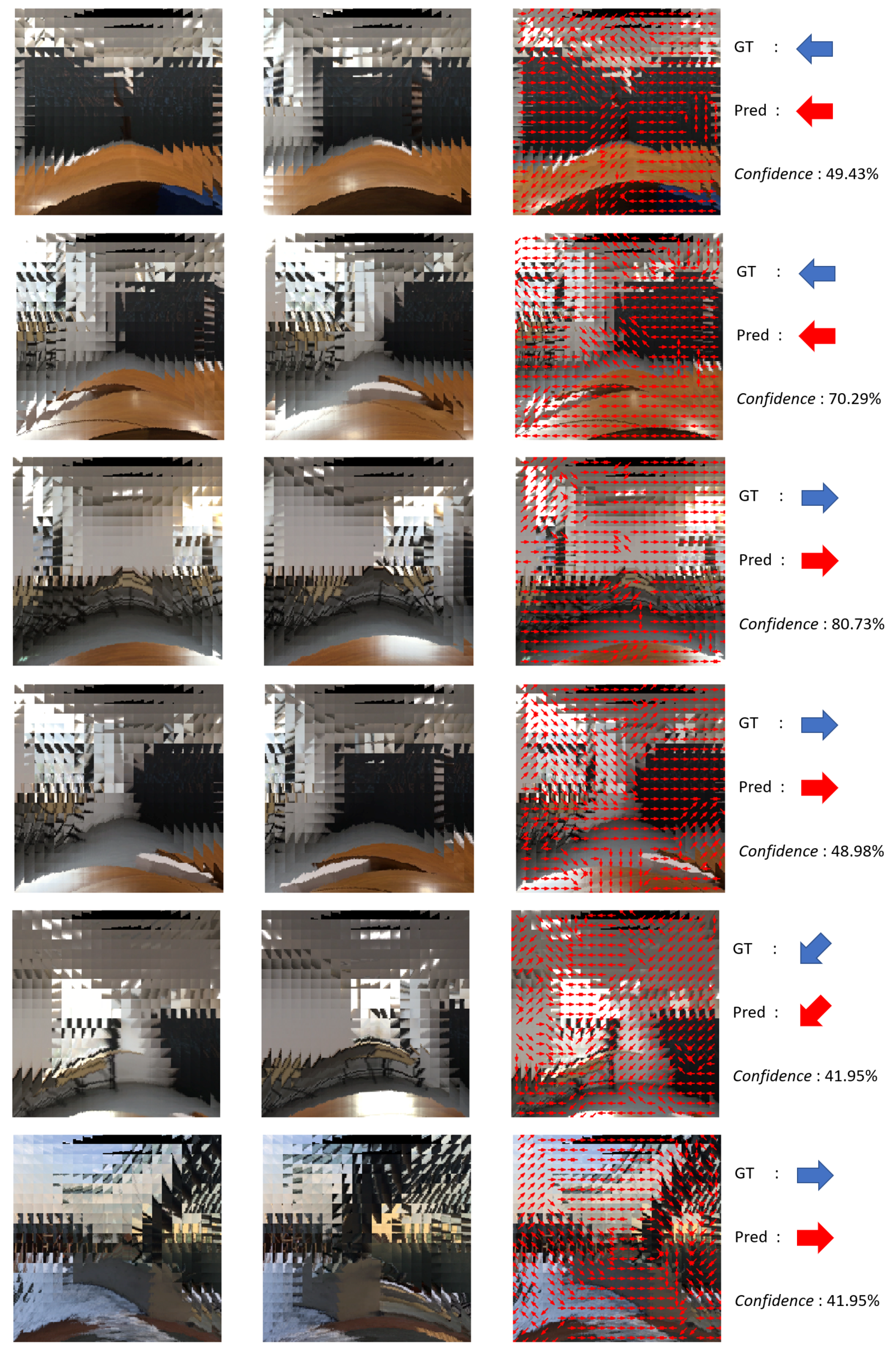

Some successful results of the proposed ego-motion classification network.The first, and the second columns are two consecutive compound images. The third column visualizes local classifications on each single eye position. The last column shows the ground truth and classified result of camera motion and confidence value.

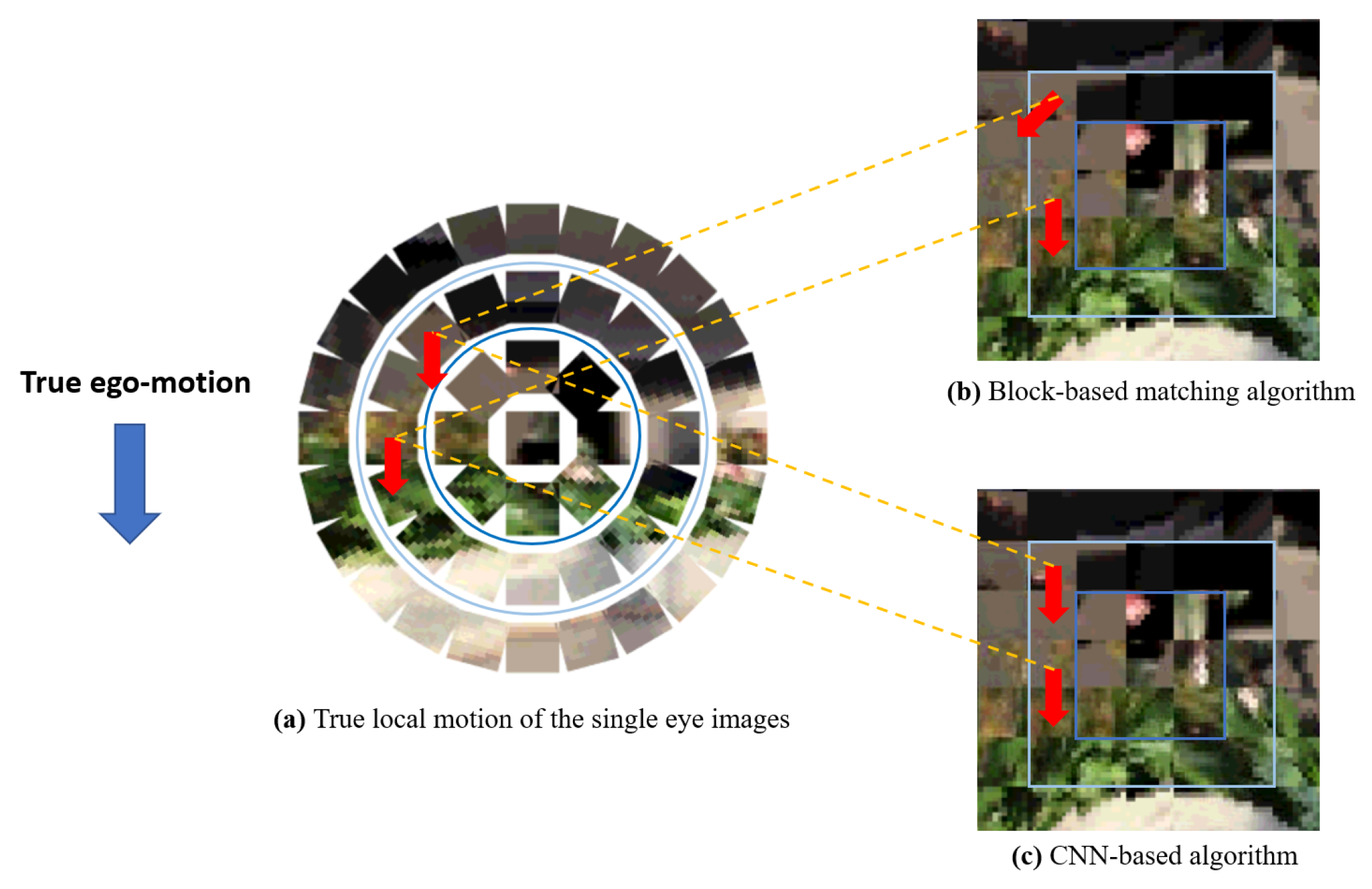

Figure 12.

An example of the geometric distortion when mapping a compound image to a 2D planar surface. (a) True local motion of the single eye images on the compound image hemisphere surface. (b) Local classification via the block-based matching algorithm. Since the upper single eye image is rotated when projected to the 2D plane, the direction of its matching block is also rotated. (c) Local classification via the CNN-based algorithm. The CNN learns how to classify the true motion of each single eye from the distorted compound image on the 2D plane.
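To make the block-based matching in (b) concrete, the sketch below classifies the shift between two single eye images by testing eight unit displacements and keeping the one with the lowest mean absolute difference. The matching cost, the one-pixel search range, the class ordering in `SHIFTS`, and the function names are all assumptions of this sketch; the caption does not specify them.

```python
# Eight unit shifts (dx, dy); this class ordering is an assumption of the sketch.
SHIFTS = [(1, 0), (1, -1), (0, -1), (-1, -1),
          (-1, 0), (-1, 1), (0, 1), (1, 1)]


def mean_abs_diff(prev, curr, dx, dy):
    """Mean absolute difference between prev and curr displaced by (dx, dy)."""
    h, w = len(prev), len(prev[0])
    total, count = 0, 0
    for y in range(h):
        for x in range(w):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:  # compare only the overlapping region
                total += abs(prev[y][x] - curr[yy][xx])
                count += 1
    return total / count


def block_match_class(prev, curr):
    """Index in SHIFTS of the displacement that best aligns prev with curr."""
    costs = [mean_abs_diff(prev, curr, dx, dy) for dx, dy in SHIFTS]
    return min(range(len(SHIFTS)), key=costs.__getitem__)


# Toy example: the content of prev moves one pixel in +x to form curr.
prev = [[10 * y + x for x in range(5)] for y in range(5)]
curr = [[prev[y][x - 1] if x > 0 else 0 for x in range(5)] for y in range(5)]
best = block_match_class(prev, curr)  # index of the (1, 0) shift
```

Because the match is computed purely in image coordinates, rotating a single eye image during projection rotates the best-matching shift as well, which is the failure mode the caption describes.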

Table 1.

Detailed structure of the proposed ego-motion classification network. Each layer in the row has output dimension of c, stride of s, zero padding of p, and is repeated n times. Since all the convolutional layers in the proposed network have stride of 1, and all the 3 × 3 convolutional layers have zero padding of 1, the size of the compound eye image () is preserved until the output. We also note that since all the layers with training parameters are convolutional layers, the proposed ego-motion classification network can be applied to any size of .

| Input | Operator | c | s | n | p |
|---|---|---|---|---|---|
| S | conv2d 1 × 1 | 16 | 1 | 1 | 0 |
| | concat | 32 | - | 1 | - |
| | conv2d 1 × 1 | 128 | 1 | 1 | 0 |
| | conv2d 3 × 3 | 128 | 1 | r | 1 |
| | conv2d 1 × 1 | 8 | 1 | 1 | 0 |

Table 2.

Detailed structure of the CNN-based baseline network without voting. Each layer in the row has output dimension of c, zero padding of p, and stride of s. In contrast to the proposed network described in Table 1, the baseline network contains some convolutional layers with stride 2, which reduce the output feature map size.

| Input | Operator | c | s | p |
|---|---|---|---|---|
| S | conv2d 1 × 1 | 16 | 1 | 0 |
| | concat | 32 | 1 | - |
| | conv2d 1 × 1 | 64 | 1 | 0 |
| | conv2d 3 × 3 | 64 | 2 | 1 |
| | conv2d 3 × 3 | 64 | 2 | 1 |
| | conv2d 3 × 3 | 64 | 1 | 1 |
| | conv2d 3 × 3 | 128 | 2 | 1 |
| | conv2d 1 × 1 | 128 | 1 | 0 |
| | fc | 512 | - | - |
| 512 | fc | 8 | - | - |
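The effect of stride and padding on the feature map size can be checked with the standard convolution output-size formula, floor((n + 2p − k) / s) + 1. The sketch below traces one spatial dimension through the convolutional layers listed in Tables 1 and 2. The input side length of 32 is a hypothetical value chosen for illustration, and the helper names are not from the paper.

```python
def conv_out(size, kernel, stride, pad):
    """Output side length of a convolution along one spatial dimension."""
    return (size + 2 * pad - kernel) // stride + 1


def proposed_layers(r):
    """(kernel, stride, pad) of the conv layers in Table 1 for a given r."""
    return [(1, 1, 0), (1, 1, 0)] + [(3, 1, 1)] * r + [(1, 1, 0)]


# (kernel, stride, pad) of the conv layers in Table 2 (fc layers omitted).
BASELINE_LAYERS = [(1, 1, 0), (1, 1, 0), (3, 2, 1), (3, 2, 1),
                   (3, 1, 1), (3, 2, 1), (1, 1, 0)]


def trace(size, layers):
    """Side length after each layer, starting from the input size."""
    sizes = [size]
    for kernel, stride, pad in layers:
        sizes.append(conv_out(sizes[-1], kernel, stride, pad))
    return sizes


proposed_sizes = trace(32, proposed_layers(3))  # stays at 32 throughout
baseline_sizes = trace(32, BASELINE_LAYERS)     # halves at each stride-2 layer
```

With these settings the proposed stack keeps one output per single eye position, whereas the three stride-2 layers of the baseline reduce a side length of 32 to 4 before the fully connected layers.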

Table 3.

Compound eye ego-motion classification accuracy (%).

| r \ S | 5 | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|---|
| 1 | 45.1 | 70.2 | 66.4 | 70.6 | 64.1 | 63.6 |
| 2 | 69.8 | 77.5 | 73.5 | 73.0 | 71.1 | 72.6 |
| 3 | 78.6 | 78.0 | 77.7 | 71.5 | 74.0 | 69.2 |
| 4 | 78.1 | 84.4 | 68.2 | 64.1 | 65.0 | 54.3 |
| 5 | 66.9 | 85.0 | 67.7 | 60.5 | 63.6 | 56.1 |

Table 4.

Average percentage of the vote of the most frequently chosen direction in the voting step (=confidence, %).

| r \ S | 5 | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|---|
| 1 | 37.0 | 40.7 | 38.1 | 35.7 | 36.6 | 33.5 |
| 2 | 42.6 | 48.5 | 43.5 | 39.4 | 43.0 | 37.3 |
| 3 | 48.3 | 52.5 | 50.6 | 47.3 | 42.7 | 42.3 |
| 4 | 52.1 | 52.7 | 44.4 | 43.3 | 41.9 | 36.5 |
| 5 | 48.3 | 53.1 | 43.2 | 43.6 | 40.4 | 40.6 |

Table 5.

Number of parameters of various configurations of the proposed model.

| r \ S | 5 | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|---|
| 1 | 303 K | 306 K | 312 K | 321 K | 332 K | 345 K |
| 2 | 450 K | 454 K | 460 K | 468 K | 479 K | 492 K |
| 3 | 598 K | 601 K | 607 K | 616 K | 627 K | 640 K |
| 4 | 745 K | 749 K | 755 K | 763 K | 774 K | 787 K |
| 5 | 893 K | 897 K | 903 K | 911 K | 922 K | 935 K |

Table 6.

FLOPs of various configurations of the proposed model.

| r \ S | 5 | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|---|
| 1 | 0.605 M | 0.612 M | 0.623 M | 0.640 M | 0.662 M | 0.688 M |
| 2 | 0.899 M | 0.907 M | 0.918 M | 0.935 M | 0.957 M | 0.983 M |
| 3 | 1.19 M | 1.20 M | 1.21 M | 1.23 M | 1.25 M | 1.29 M |
| 4 | 1.49 M | 1.50 M | 1.51 M | 1.53 M | 1.55 M | 1.57 M |
| 5 | 1.78 M | 1.79 M | 1.80 M | 1.82 M | 1.84 M | 1.87 M |

Table 7.

Comparison with some popular CNN-based image recognition algorithms. Note that MobileNetV2 [33] and ShuffleNetV2 () [34] are networks designed to be lightweight.

| Network | Number of Parameters | FLOPs |
|---|---|---|
| AlexNet [35] | 60 M | 720 M |
| VGG16 [36] | 138 M | 153 G |
| ResNet50 [30] | 25 M | 4 G |
| MobileNetV2 [33] | 3.4 M | 300 M |
| ShuffleNetV2 () [34] | 2.3 M | 146 M |
| Ours () | 0.9 M | 1.8 M |

Table 8.

Comparison with baseline algorithms.

| Method | 2D RGB Image: Accuracy (%) | 2D RGB Image: Confidence (%) | Compound Image: Accuracy (%) | Compound Image: Confidence (%) |
|---|---|---|---|---|
| Block-based matching | 46.5 | 28.6 | 19.3 | 22.3 |
| CNN w/o vote | 46.7 | - | 64.1 | - |
| CNN w/ vote (ours) | 88.0 | 49.3 | 85.0 | 53.1 |