Improved Visual Inspection through 3D Image Reconstruction of Defects Based on the Photometric Stereo Technique

Abstract

1. Introduction

2. Photometric Stereo Technique

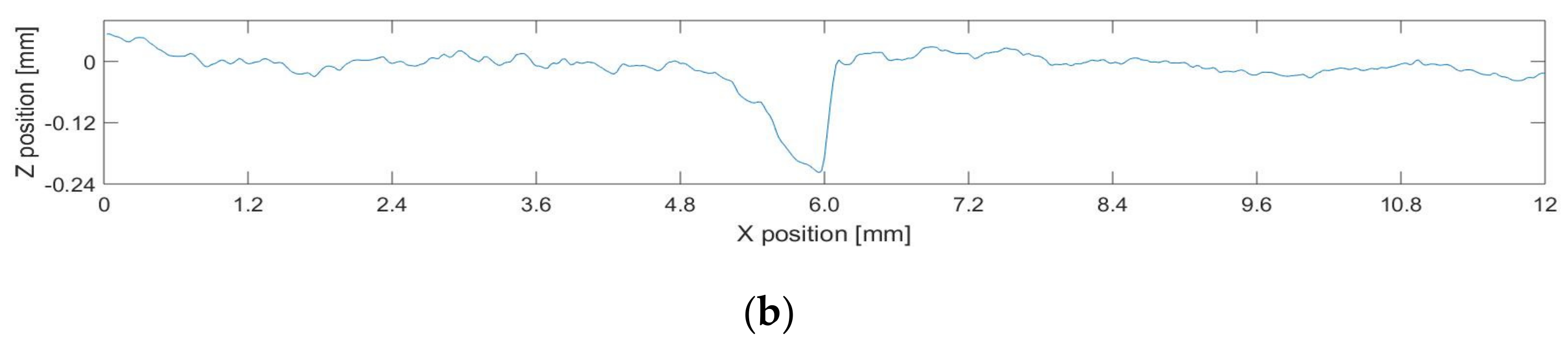

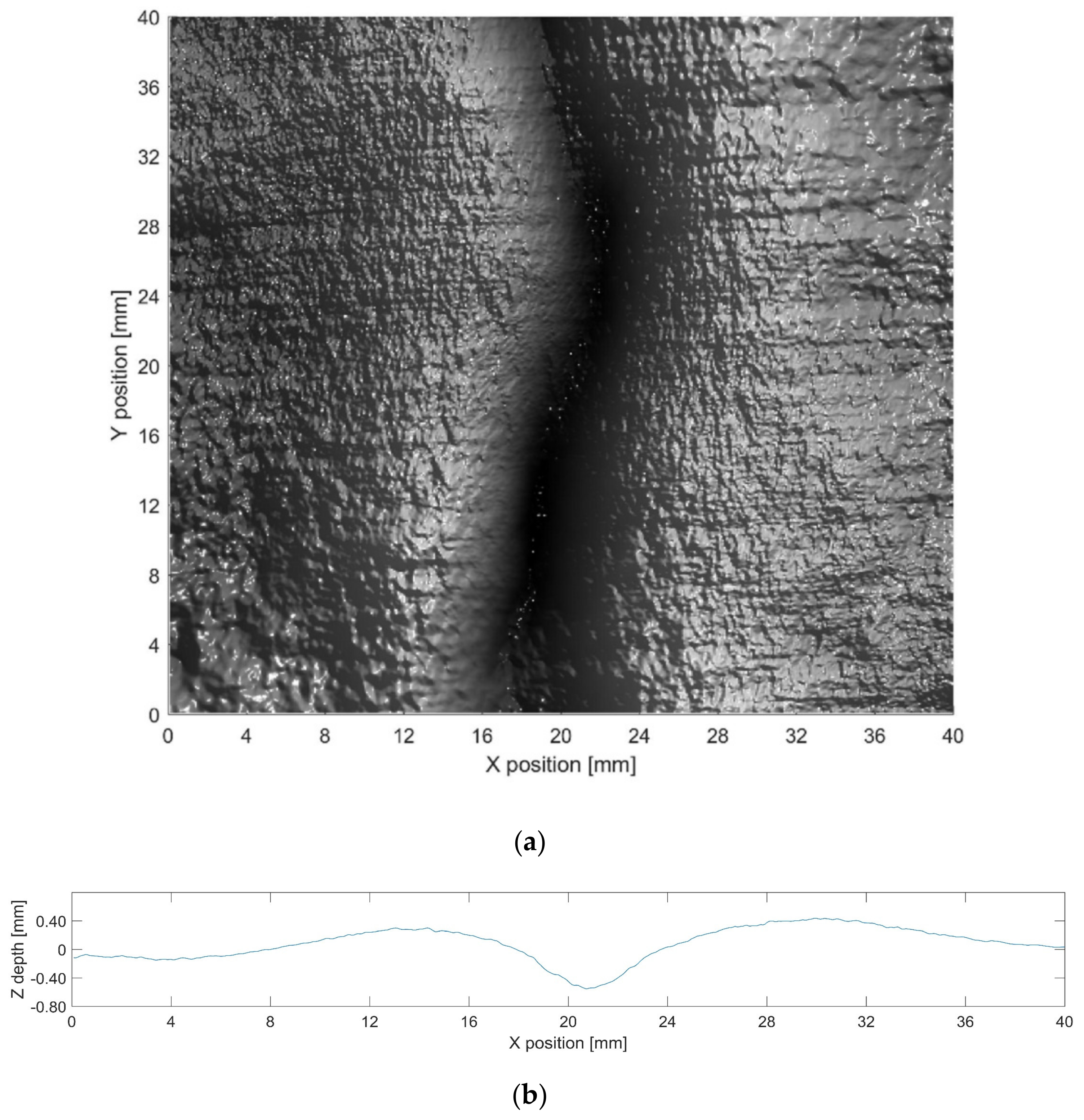

3. Three-Dimensional Shape Reconstruction of Defects

3.1. Existing Two-Dimensional Image Capture and Data Analysis Method

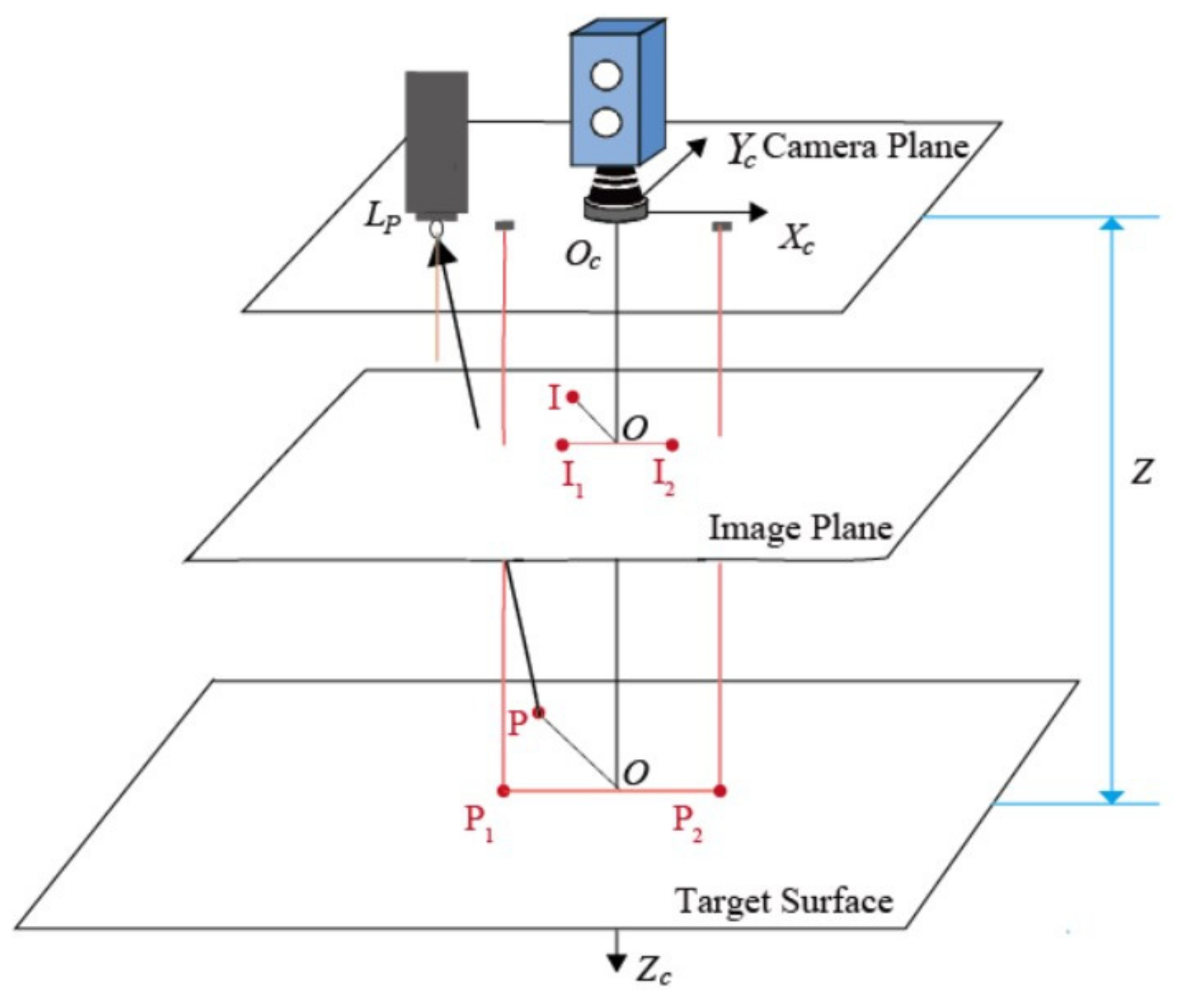

3.2. Photometric Stereo System

- (1) Estimate the distance between the PTZ camera (camera plane) and the target surface using laser points and prior knowledge;

- (2) Estimate the light direction l and intensity E for each pixel by relating each image pixel to its corresponding point on the target surface, using the near-field light model;

- (3) Compute the surface normal by solving the irradiance equations;

- (4) Compute the final 3D surface shape from the normal field via an optimization algorithm.
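Steps (3) and (4) above can be sketched as follows. This is a minimal sketch, not the authors' implementation: it assumes a Lambertian surface, at least three images, and per-pixel light directions l and intensities E already available from step (2), so that each pixel obeys I_i = ρ E_i (l_i · n). The function names are hypothetical, and the Fourier-domain integration of Frankot and Chellappa is swapped in as one common choice for step (4); the paper's own optimization algorithm may differ.

```python
import numpy as np


def estimate_normals(images, light_dirs, light_intensity):
    """Step (3): per-pixel Lambertian photometric stereo (hypothetical helper).

    images:          (k, h, w) intensities, one image per light, k >= 3
    light_dirs:      (k, h, w, 3) unit vectors from each surface point to each light
    light_intensity: (k, h, w) irradiance E_i reaching each surface point
    Returns unit normals (h, w, 3) and albedo (h, w).
    """
    _, h, w = images.shape
    normals = np.zeros((h, w, 3))
    albedo = np.zeros((h, w))
    for y in range(h):
        for x in range(w):
            # Rows of A are E_i * l_i, so I_i = rho * E_i * (l_i . n)
            # becomes the linear system A g = I with g = rho * n.
            A = light_intensity[:, y, x, None] * light_dirs[:, y, x, :]
            g, *_ = np.linalg.lstsq(A, images[:, y, x], rcond=None)
            rho = np.linalg.norm(g)
            albedo[y, x] = rho
            if rho > 0:
                normals[y, x] = g / rho
    return normals, albedo


def frankot_chellappa(normals, eps=1e-6):
    """Step (4): integrate a normal field into a height map, up to a constant.

    Fourier-domain least-squares projection (Frankot and Chellappa); it
    assumes the gradient field is periodic over the image.
    """
    nx, ny, nz = normals[..., 0], normals[..., 1], normals[..., 2]
    # Avoid division by ~0 for grazing normals, keeping the sign of nz.
    nz = np.where(np.abs(nz) < eps, np.copysign(eps, nz), nz)
    p = -nx / nz  # dz/dx for a normal proportional to (-z_x, -z_y, 1)
    q = -ny / nz  # dz/dy
    h, w = p.shape
    # u varies along columns (x), v along rows (y); both in radians per sample.
    u, v = np.meshgrid(2 * np.pi * np.fft.fftfreq(w), 2 * np.pi * np.fft.fftfreq(h))
    P, Q = np.fft.fft2(p), np.fft.fft2(q)
    denom = u**2 + v**2
    denom[0, 0] = 1.0  # DC term is undefined; avoid 0/0
    Z = (-1j * u * P - 1j * v * Q) / denom
    Z[0, 0] = 0.0  # fix the unknown constant offset (zero-mean height)
    return np.real(np.fft.ifft2(Z))
```

The per-pixel loop is kept because near-field lighting gives every pixel its own light matrix; for distant lights a single pseudo-inverse shared by all pixels would be faster.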

3.3. Estimation of Light Direction and Intensity

- (1) The distance z between the camera plane and the target plane is determined using lasers;

- (2) An orthographic projection-based method is used to relate each pixel on the image plane to its corresponding point on the target surface.
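The two steps above can be sketched under explicit assumptions. Everything specific here is hypothetical and not taken from the paper: two lasers mounted parallel to the optical axis at a known spacing (so their spots' pixel separation shrinks as 1/z in a pinhole camera), a known focal length in pixels, isotropic point light sources lying in the camera plane, and an inverse-square falloff for E. For example, spots 200 px apart with a 50-unit laser spacing and an 800 px focal length give z = 800 · 50 / 200 = 200 units.

```python
import numpy as np


def distance_from_laser_spots(spot_a, spot_b, laser_spacing, focal_px):
    """Step (1): camera-to-target distance z from two laser spots (pinhole model).

    Hypothetical set-up: two lasers parallel to the optical axis, laser_spacing
    apart, so their spots stay laser_spacing apart on the target while their
    pixel separation is focal_px * laser_spacing / z.
    """
    d_px = np.hypot(spot_a[0] - spot_b[0], spot_a[1] - spot_b[1])
    return focal_px * laser_spacing / d_px


def near_field_lights(light_xy, z, shape, principal_point, focal_px, flux=1.0):
    """Step (2): per-pixel light direction l and irradiance E (hypothetical helper).

    The target is the plane Z = 0 and the camera plane is Z = z; light_xy holds
    each point source's (x, y) position in the camera plane. Pixels map to the
    target orthographically with a fixed scale of z / focal_px units per pixel.
    """
    h, w = shape
    v, u = np.mgrid[0:h, 0:w]
    scale = z / focal_px
    X = (u - principal_point[0]) * scale
    Y = (v - principal_point[1]) * scale
    P = np.stack([X, Y, np.zeros_like(X)], axis=-1)          # (h, w, 3) surface points
    xy = np.asarray(light_xy, dtype=float)
    S = np.column_stack([xy, np.full(len(xy), float(z))])     # (k, 3) light positions
    vec = S[:, None, None, :] - P[None]                       # surface point -> light
    r = np.linalg.norm(vec, axis=-1)                          # (k, h, w) distances
    l = vec / r[..., None]                                    # unit light directions
    E = flux / r**2                                           # assumed inverse-square falloff
    return l, E
```

Real LED sources are often anisotropic, so a calibrated model would usually add an angular term to E; the isotropic form is kept here only for brevity.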

3.4. Three-Dimensional Reconstruction Using the Photometric Stereo Technique

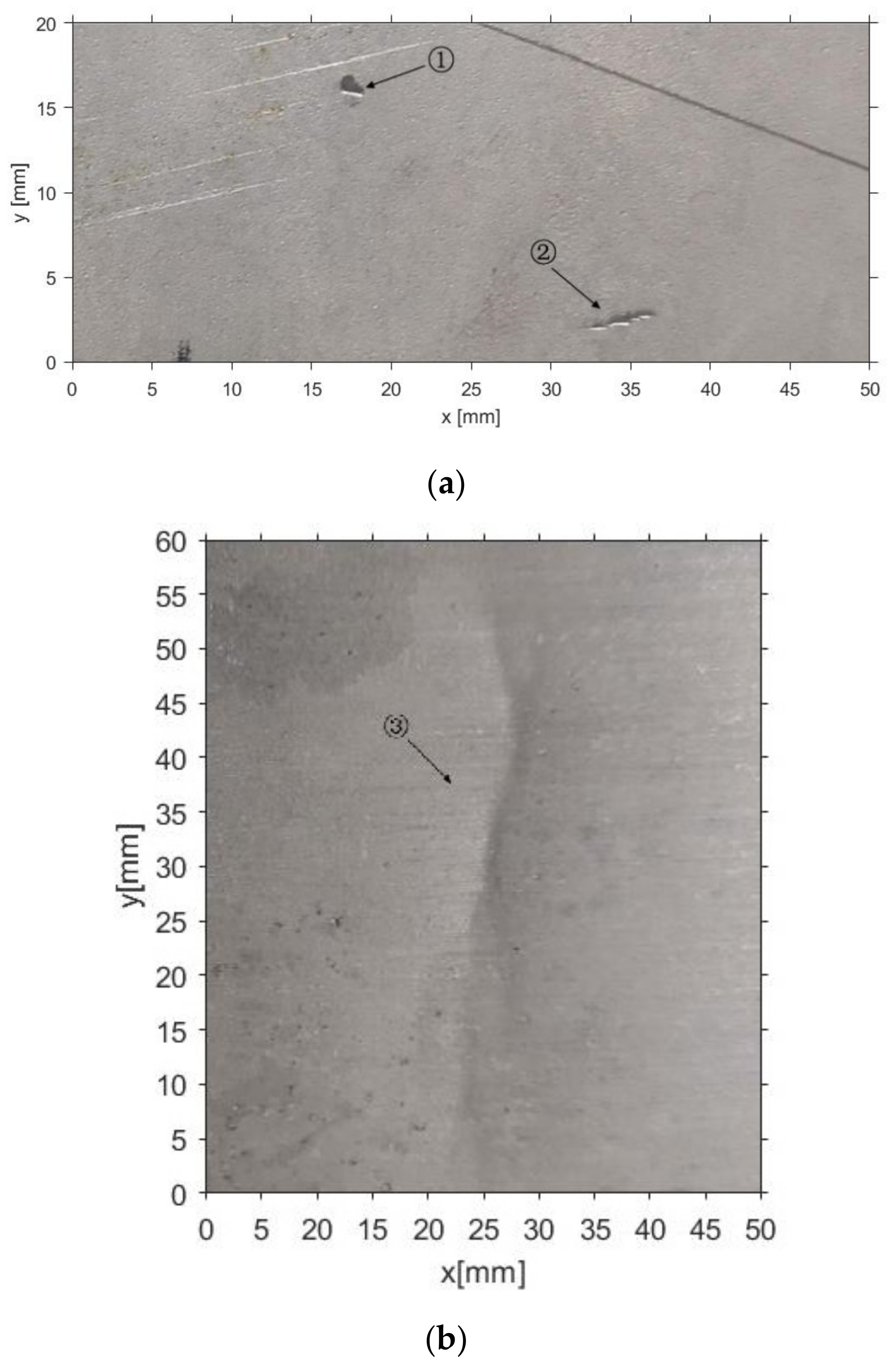

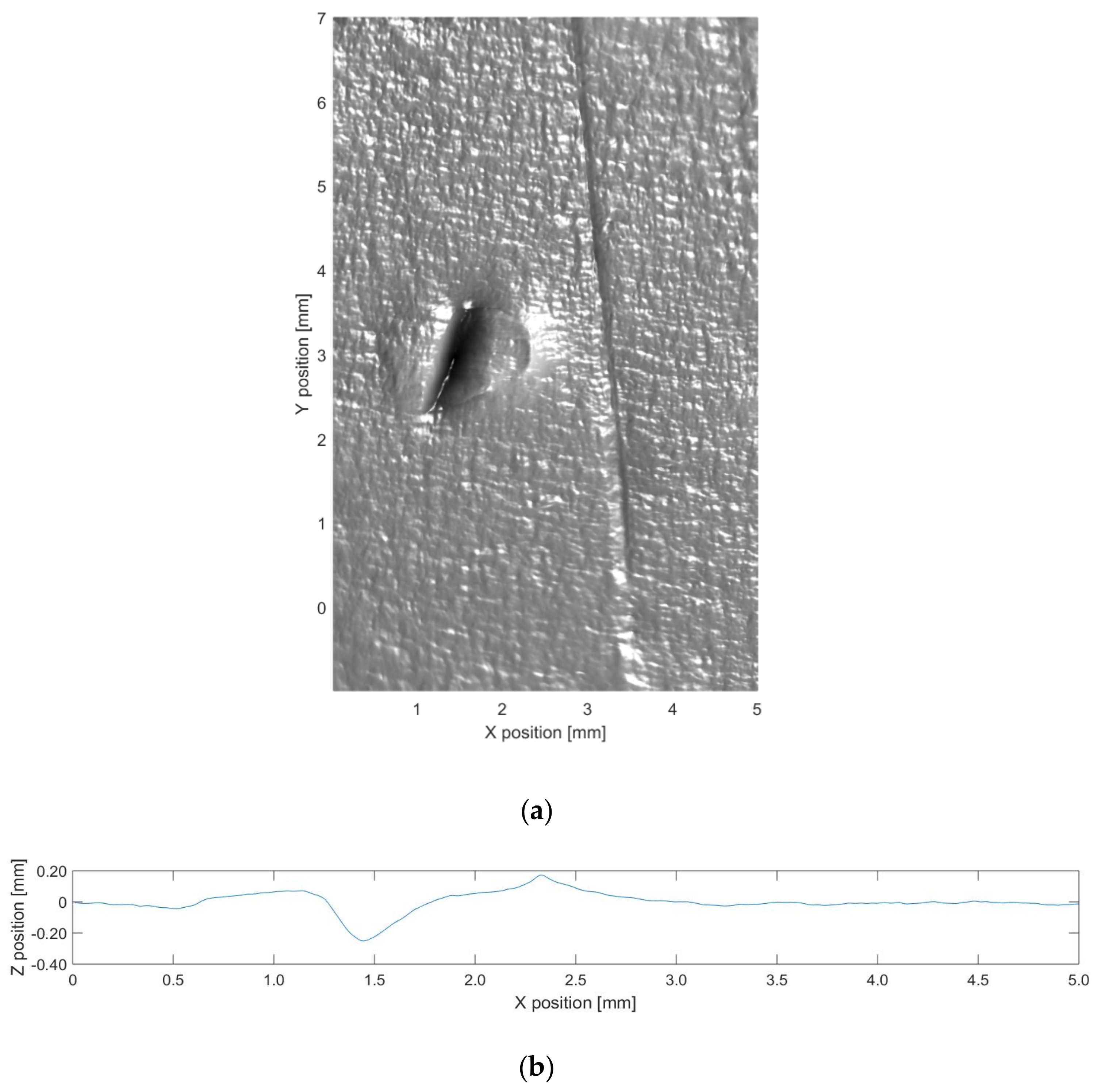

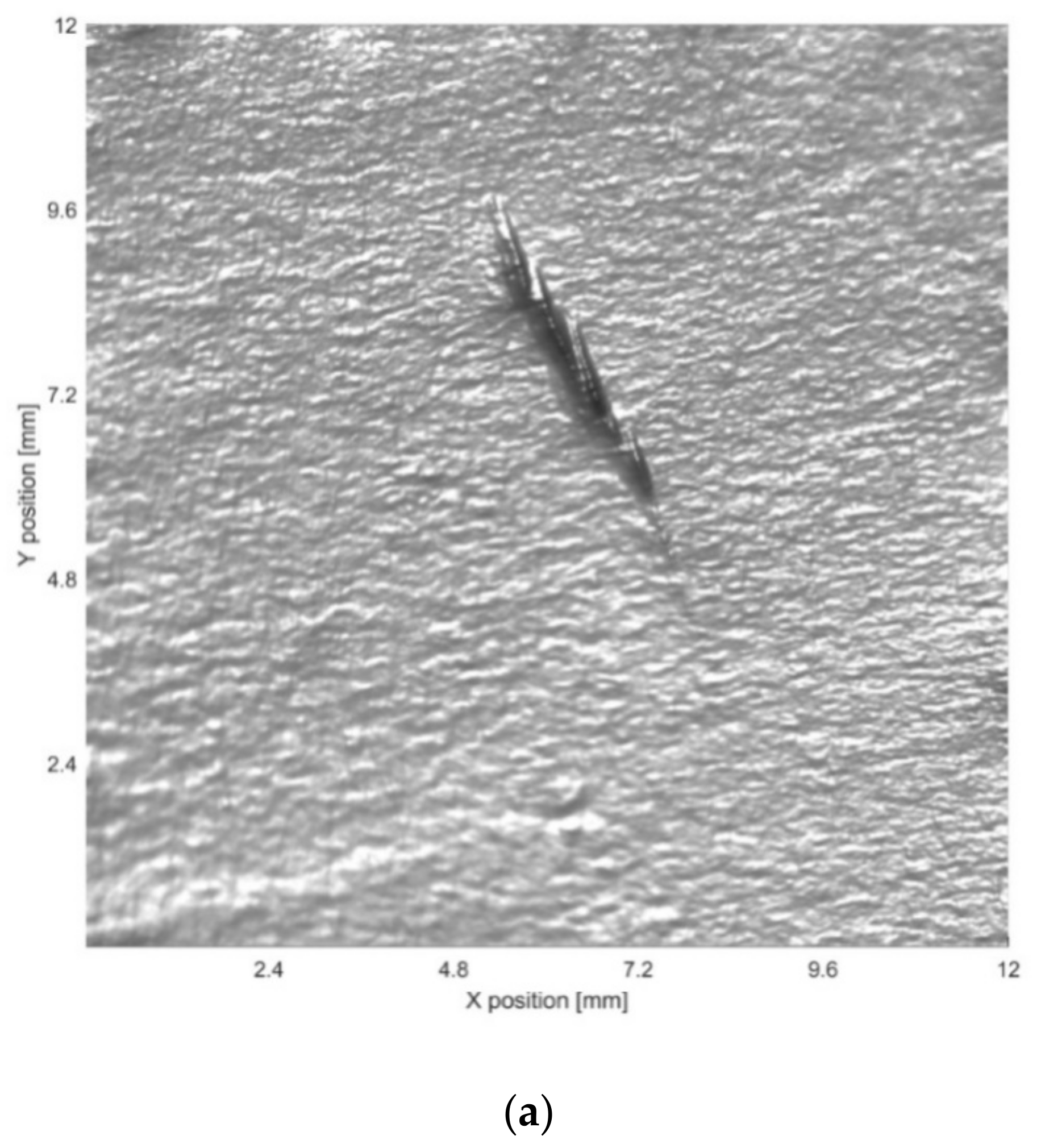

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Real Distance | Estimated Distance | Error |
|---|---|---|
| 133 | 138 | 5 |
| 193 | 196 | 3 |
| 253 | - | - |
| 313 | 318 | 5 |
| 375 | 376 | 1 |
| 433 | 441 | 8 |
| 493 | 510 | 17 |
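The distance table above can be checked with a few lines of arithmetic. The Error column equals the estimated minus the real distance in every row, so every estimate is slightly too large; relative to the real distance the error ranges from about 0.3% to 3.8%. The 253 row is left out because it has no estimate. Units are those of the table.

```python
# Rows of the distance table that have both values (the 253 row has no estimate).
real = [133, 193, 313, 375, 433, 493]
estimated = [138, 196, 318, 376, 441, 510]

# Signed error (estimated - real) and error as a percentage of the real distance.
abs_err = [e - r for r, e in zip(real, estimated)]
rel_err_pct = [100.0 * a / r for a, r in zip(abs_err, real)]

for r, a, p in zip(real, abs_err, rel_err_pct):
    print(f"{r:>4}  error {a:>2}  ({p:.2f}%)")
```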

| Defect Number | Z | Baseline |
|---|---|---|
| 1 | 0.29 | 0.23 |
| 2 | 0.22 | 0.20 |
| 3 | 0.53 | 0.49 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, S.; Xu, K.; Li, M.; Wu, M. Improved Visual Inspection through 3D Image Reconstruction of Defects Based on the Photometric Stereo Technique. Sensors 2019, 19, 4970. https://doi.org/10.3390/s19224970
