Behavioral Pedestrian Tracking Using a Camera and LiDAR Sensors on a Moving Vehicle

Abstract

1. Introduction

2. Related Work

2.1. Zero Velocity 2D–3D Trackers

2.2. Constant Velocity 2D–3D Trackers

2.3. Kalman Filter Based 2D–3D Trackers

2.4. Particle Filter Based 2D–3D Trackers

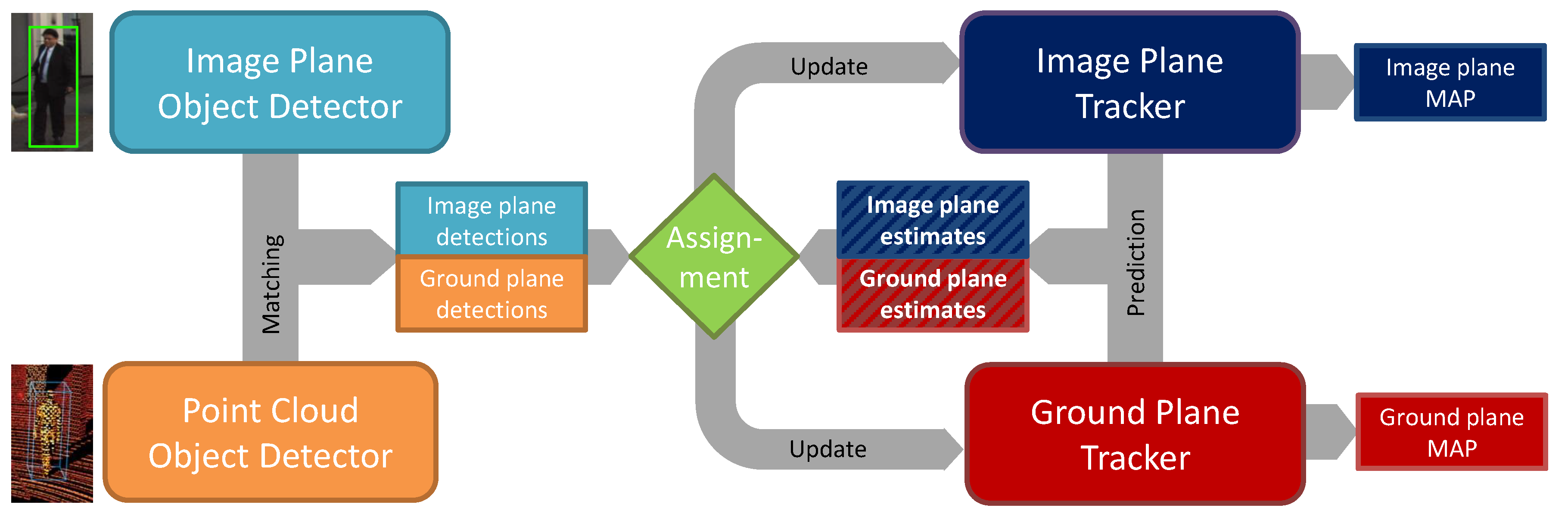

3. System Overview

3.1. General Layout and Contributions

3.2. Problem Definition

- Prediction prior to observing new data. Estimates are made based on the past state and the state transition, using particle filters whose particle motion is governed by our behavioral motion model (Sections 5.1 and 5.2).

- Association of newly detected pedestrians (observations) to the predicted targets. An assignment function based on the observation likelihood maximizes the matching similarity in a combined 2D–3D hyperspace. The result of the association is a list of feasible tuples; see Section 6.1 for details.

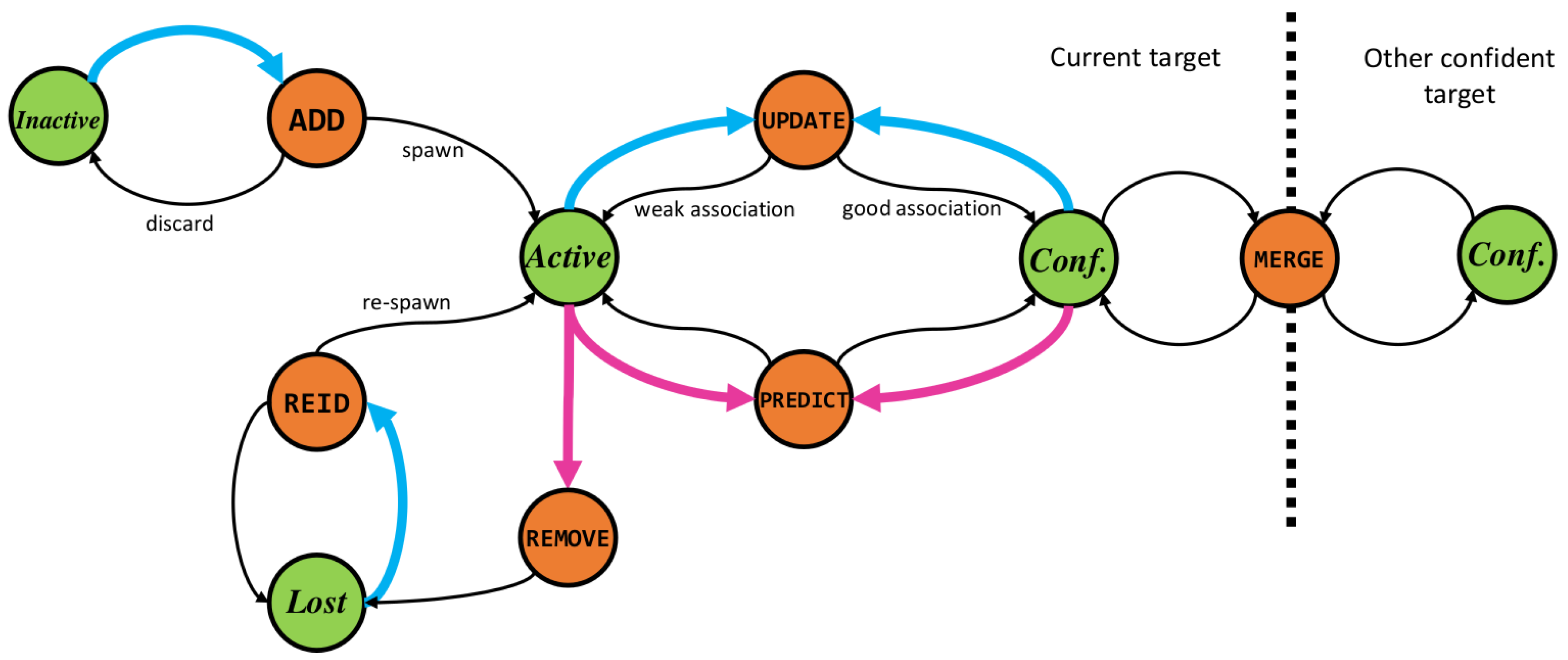

- Management, which finds an optimal policy for the creation, update, deletion, or merger of targets in the tracker based on the association result. Target updates are performed using the bootstrap particle filter formulation (Section 4.2), while creation, deletion, and merger of targets are performed based on our track confidence score (Section 6.3).
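The prediction and update steps listed above follow the general bootstrap particle filter pattern: propagate particles through a motion model, weight them by the observation likelihood, then resample. The following is a minimal sketch of that pattern only, not the tracker described in this paper. It assumes a noisy constant-velocity model on the ground plane as a stand-in for the behavioral motion model, and an isotropic Gaussian position likelihood as a stand-in for the 2D–3D observation model. All function names and parameters (`predict`, `update`, `resample`, `estimate`, `accel_std`, `obs_std`) are hypothetical.

```python
import math
import random


def predict(particles, dt, accel_std, rng):
    """Propagate particles (x, y, vx, vy) one time step.

    Assumption: a noisy constant-velocity model stands in for the
    behavioral motion model, which is not reproduced here.
    """
    moved = []
    for x, y, vx, vy in particles:
        vx_new = vx + rng.gauss(0.0, accel_std) * dt
        vy_new = vy + rng.gauss(0.0, accel_std) * dt
        moved.append((x + vx_new * dt, y + vy_new * dt, vx_new, vy_new))
    return moved


def update(particles, observation, obs_std):
    """Return normalised weights from a Gaussian position likelihood.

    Assumption: an isotropic Gaussian on ground-plane position stands in
    for the combined 2D-3D observation likelihood.
    """
    ox, oy = observation
    weights = []
    for x, y, _, _ in particles:
        d2 = (x - ox) ** 2 + (y - oy) ** 2
        weights.append(math.exp(-0.5 * d2 / obs_std ** 2))
    total = sum(weights)
    if total == 0.0:
        # Every likelihood underflowed: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def resample(particles, weights, rng):
    """Multinomial resampling: draw particles in proportion to their weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


def estimate(particles):
    """Mean ground-plane position of an (equally weighted) particle set."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)
```

In use, one tracking cycle for a single target would call `predict`, then `update` with the associated observation, then `resample`, and read the state from `estimate`. Resampling at every step is what makes this the bootstrap variant; other schemes resample only when the effective sample size drops.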

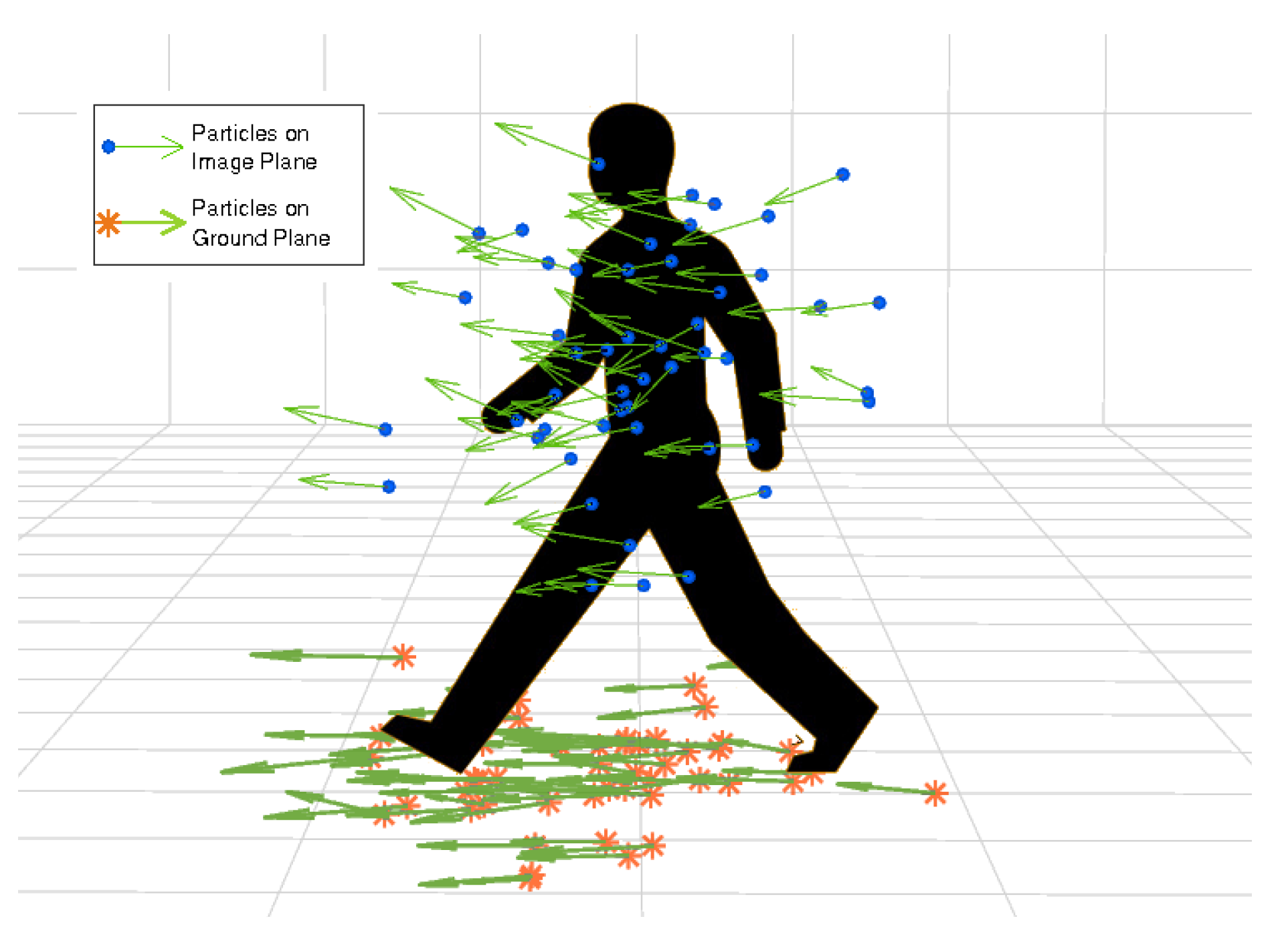
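The association and management steps described in the list above can be illustrated with a small sketch. It makes several assumptions. The similarity matrix is taken as given. The assignment is found by exhaustive search over permutations, which is a stand-in for an efficient solver and only practical for a handful of targets. The confidence rule (`gain`, `decay`, `initial`, `drop_below`) is a hypothetical placeholder and not the track confidence score of Section 6.2. Merging of targets is omitted.

```python
from itertools import permutations


def associate(similarity, min_similarity=0.1):
    """Return (target, observation) index pairs maximising total similarity.

    similarity[i][j] scores target i against observation j. Pairs scoring
    below min_similarity are left unmatched. Exhaustive search is an
    assumption made for brevity; it scales factorially.
    """
    n_targets = len(similarity)
    n_obs = len(similarity[0]) if n_targets else 0
    # Pad with None so that any target may stay unmatched.
    slots = list(range(n_obs)) + [None] * n_targets
    best_score, best_pairs = -1.0, []
    for perm in permutations(slots, n_targets):
        pairs = [(i, j) for i, j in enumerate(perm)
                 if j is not None and similarity[i][j] >= min_similarity]
        score = sum(similarity[i][j] for i, j in pairs)
        if score > best_score:
            best_score, best_pairs = score, pairs
    return best_pairs


def manage(tracks, n_obs, pairs, gain=0.2, decay=0.3, initial=0.3,
           drop_below=0.0):
    """Update, delete, and create tracks from an association result.

    tracks is a list of {"id": int, "confidence": float}. All numeric
    defaults are hypothetical illustration values.
    """
    matched_targets = {i for i, _ in pairs}
    matched_obs = {j for _, j in pairs}
    next_id = max((t["id"] for t in tracks), default=0) + 1
    result = []
    for i, track in enumerate(tracks):
        if i in matched_targets:
            conf = min(1.0, track["confidence"] + gain)   # update
        else:
            conf = track["confidence"] - decay            # missed detection
        if conf >= drop_below:
            result.append({"id": track["id"], "confidence": conf})
        # otherwise the track is deleted
    for j in range(n_obs):
        if j not in matched_obs:                          # creation
            result.append({"id": next_id, "confidence": initial})
            next_id += 1
    return result
```

For the matrix `[[0.9, 0.4], [0.8, 0.1]]`, a greedy matcher would first pair target 0 with observation 0 (0.9) and leave target 1 with a total of 1.0, whereas the exhaustive search prefers the crossed assignment with total 1.2. This is the reason for solving the assignment jointly rather than per target.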

4. Proposed 2D–3D MOT Tracker

4.1. Bayesian Tracking

4.2. Particle Filters

5. Motion and Observation Models

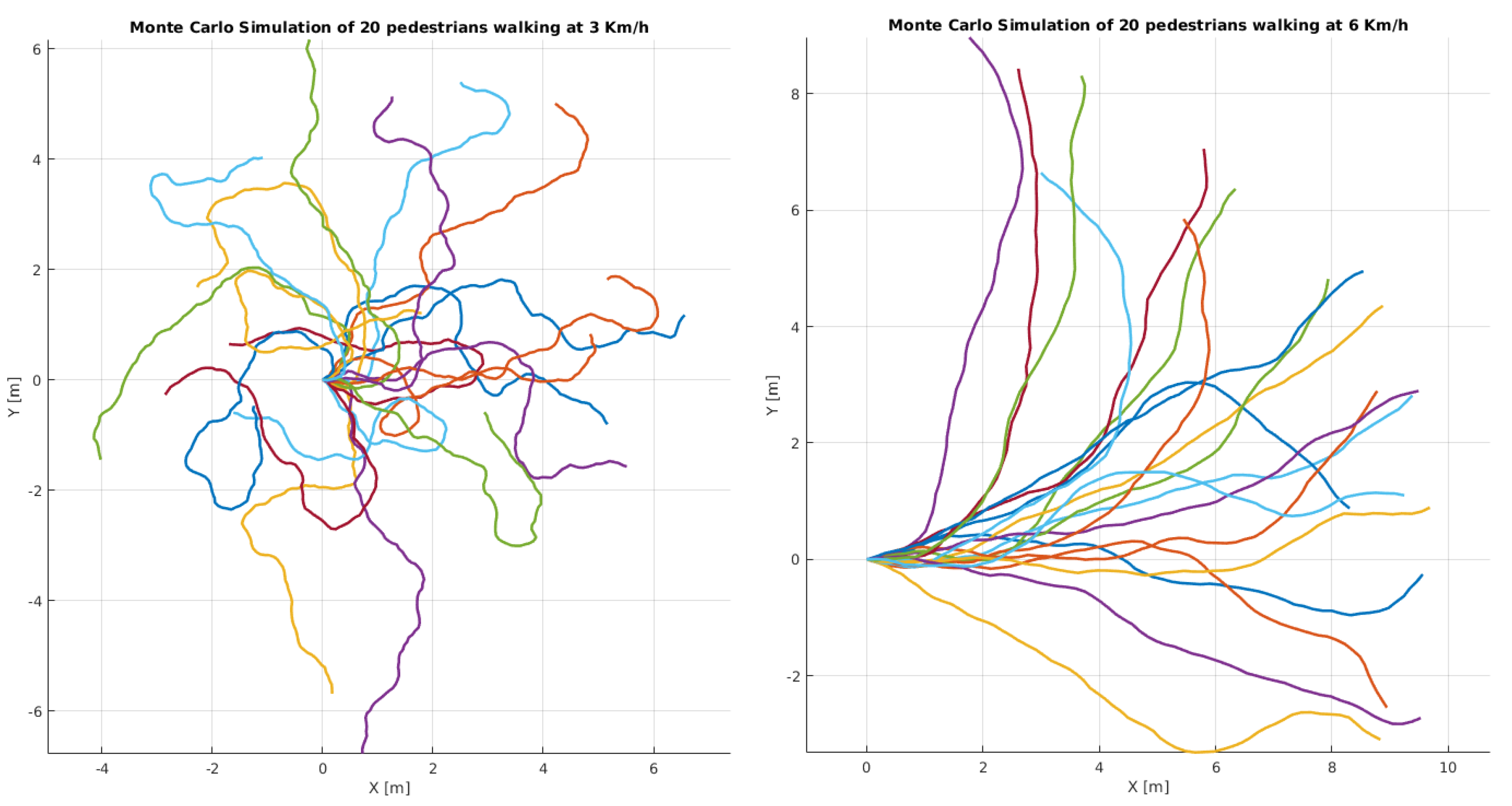

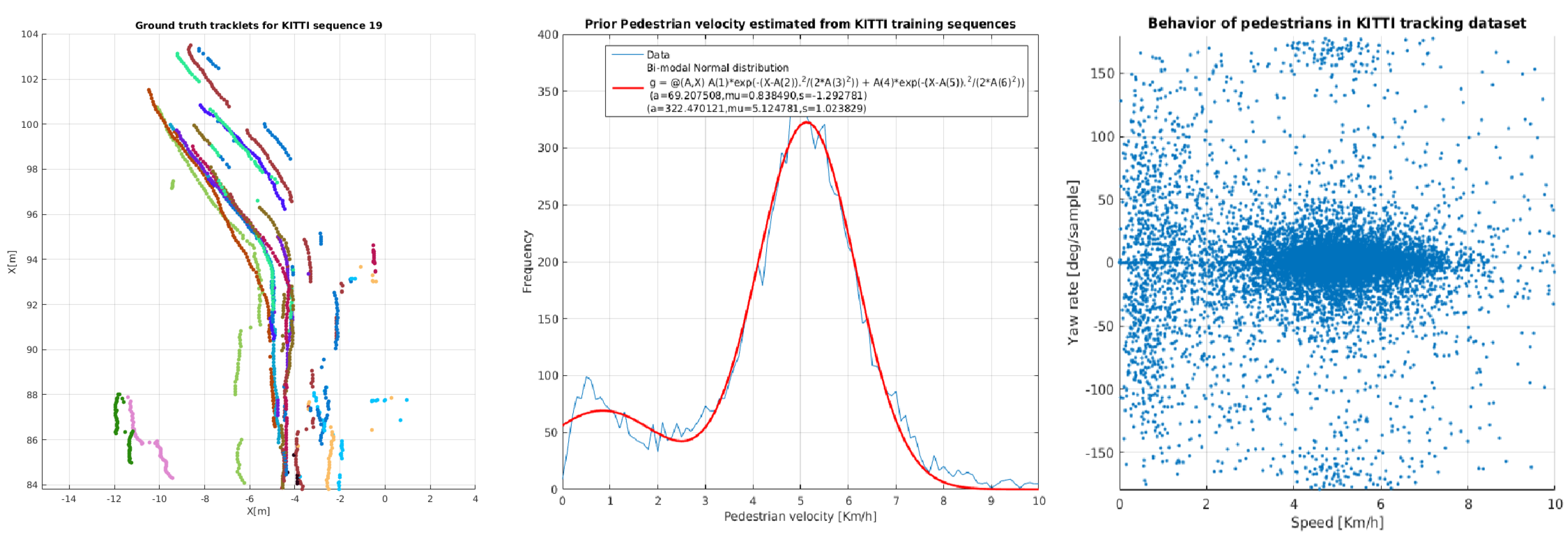

5.1. Ground Plane Motion Model

5.2. Image Plane Motion Model

5.3. Observation Likelihood Model

6. Data Association and Track Management

6.1. Optimal Data to Target Association

6.2. Track Confidence Score

6.3. Track Management

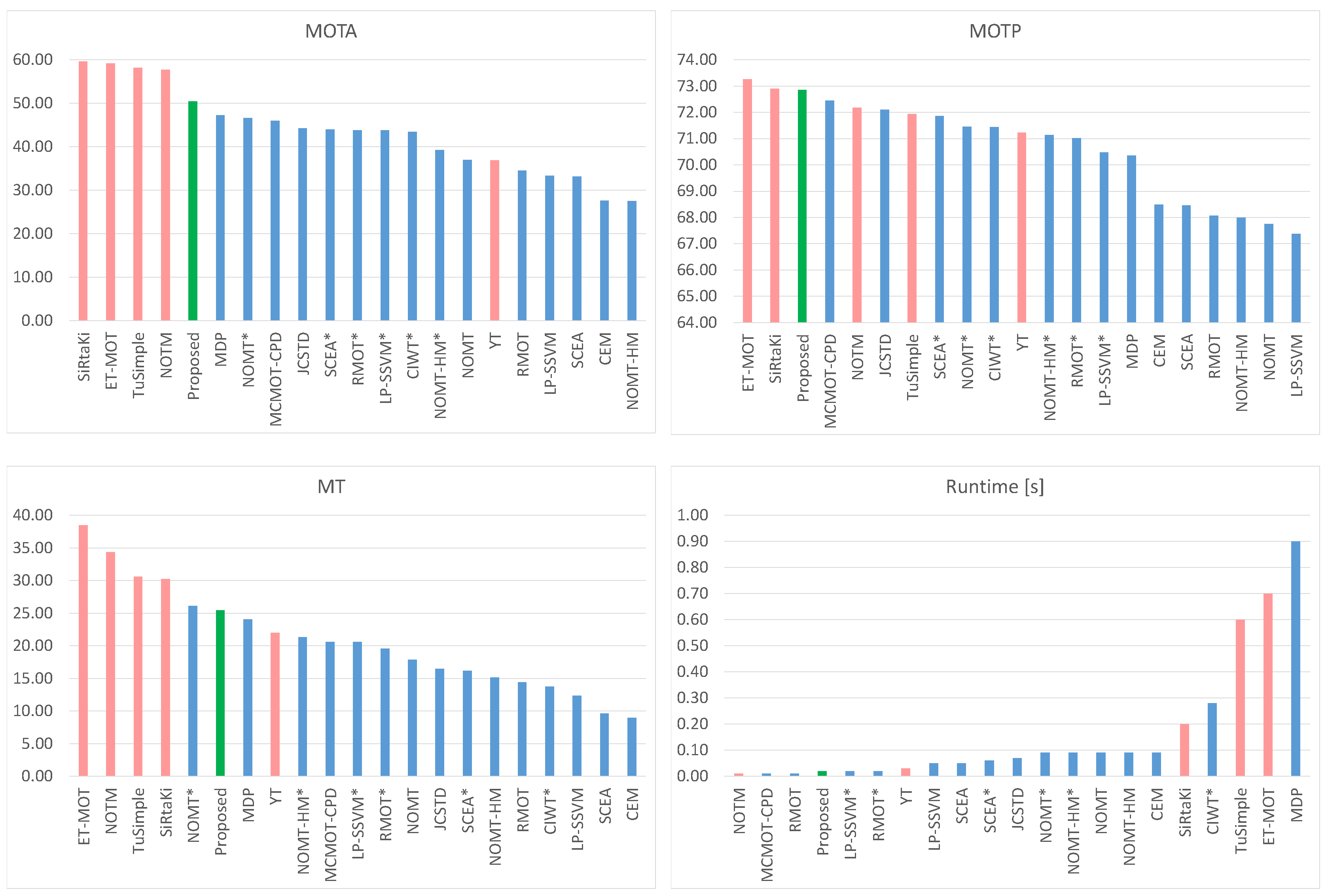

7. Evaluation and Results

7.1. Evaluation Metrics

7.2. Dataset and Implementation Details

7.3. Performance Evaluation

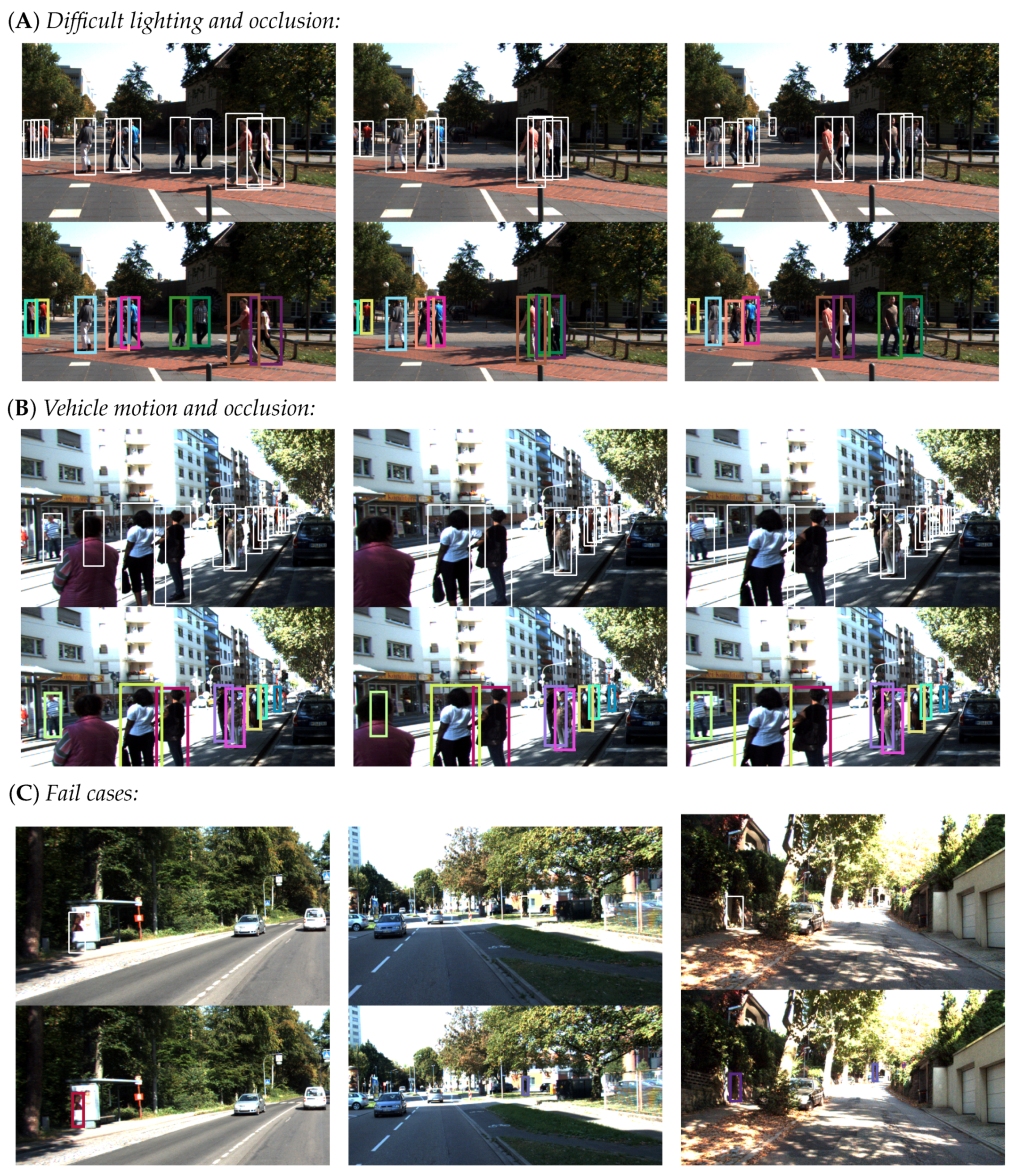

7.4. Qualitative Analysis and Discussion

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

2. Leal-Taixe, L.; Milan, A.; Schindler, K.; Cremers, D.; Reid, I.D.; Roth, S. Tracking the Trackers: An Analysis of the State of the Art in Multiple Object Tracking. arXiv, 2017; arXiv:1704.02781.

3. Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally-optimal Greedy Algorithms for Tracking a Variable Number of Objects. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR ’11), Washington, DC, USA, 20–25 June 2011; pp. 1201–1208.

4. Milan, A.; Schindler, K.; Roth, S. Multi-Target Tracking by Discrete-Continuous Energy Minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2054–2068.

5. Ban, Y.; Ba, S.; Alameda-Pineda, X.; Horaud, R. Tracking Multiple Persons Based on a Variational Bayesian Model. In Computer Vision – ECCV 2016 Workshops: Amsterdam, The Netherlands, 8–10 and 15–16 October 2016, Proceedings, Part II; Hua, G., Jégou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 52–67.

6. Dicle, C.; Camps, O.I.; Sznaier, M. The Way They Move: Tracking Multiple Targets with Similar Appearance. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013.

7. Milan, A.; Roth, S.; Schindler, K. Continuous Energy Minimization for Multitarget Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 58–72.

8. Leal-Taixe, L.; Fenzi, M.; Kuznetsova, A.; Rosenhahn, B.; Savarese, S. Learning an Image-based Motion Context for Multiple People Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

9. Fagot-Bouquet, L.; Audigier, R.; Dhome, Y.; Lerasle, F. Improving Multi-frame Data Association with Sparse Representations for Robust Near-online Multi-object Tracking. In Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part VIII; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 774–790.

10. Kim, C.; Li, F.; Ciptadi, A.; Rehg, J.M. Multiple Hypothesis Tracking Revisited. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 7–13 December 2015.

11. Kieritz, H.; Becker, S.; Hubner, W.; Arens, M. Online multi-person tracking using Integral Channel Features. In Proceedings of the 2016 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016; pp. 122–130.

12. Choi, W. Near-Online Multi-target Tracking with Aggregated Local Flow Descriptor. arXiv, 2015; arXiv:1504.02340.

13. Osep, A.; Mehner, W.; Mathias, M.; Leibe, B. Combined Image- and World-Space Tracking in Traffic Scenes. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

14. Sadeghian, A.; Alahi, A.; Savarese, S. Tracking The Untrackable: Learning To Track Multiple Cues with Long-Term Dependencies. arXiv, 2017; arXiv:1701.01909.

15. Tang, S.; Andres, B.; Andriluka, M.; Schiele, B. Multi-Person Tracking by Multicut and Deep Matching. arXiv, 2016; arXiv:1608.05404.

16. Darrell, T.; Gordon, G.; Harville, M.; Woodfill, J. Integrated Person Tracking Using Stereo, Color, and Pattern Detection. Int. J. Comput. Vis. 2000, 37, 175–185.

17. Salas, J.; Tomasi, C. People Detection Using Color and Depth Images. In Proceedings of the Pattern Recognition: Third Mexican Conference, MCPR 2011, Cancun, Mexico, 29 June–2 July 2011; Martínez-Trinidad, J.F., Carrasco-Ochoa, J.A., Ben-Youssef Brants, C., Hancock, E.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 127–135.

18. Bansal, M.; Jung, S.H.; Matei, B.; Eledath, J.; Sawhney, H. A real-time pedestrian detection system based on structure and appearance classification. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 903–909.

19. Dan, B.K.; Kim, Y.S.; Jung, J.Y.; Ko, S.J. Robust people counting system based on sensor fusion. IEEE Trans. Consum. Electron. 2012, 58, 1013–1021.

20. Han, J.; Pauwels, E.J.; de Zeeuw, P.M.; de With, P.H.N. Employing a RGB-D sensor for real-time tracking of humans across multiple re-entries in a smart environment. IEEE Trans. Consum. Electron. 2012, 58, 255–263.

21. Bajracharya, M.; Moghaddam, B.; Howard, A.; Brennan, S.; Matthies, L.H. A Fast Stereo-based System for Detecting and Tracking Pedestrians from a Moving Vehicle. Int. J. Robot. Res. 2009, 28.

22. Zhang, H.; Reardon, C.; Parker, L.E. Real-Time Multiple Human Perception With Color-Depth Cameras on a Mobile Robot. IEEE Trans. Cybern. 2013, 43, 1429–1441.

23. Liu, J.; Liu, Y.; Cui, Y.; Chen, Y.Q. Real-time human detection and tracking in complex environments using single RGBD camera. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 3088–3092.

24. Liu, J.; Liu, Y.; Zhang, G.; Zhu, P.; Qiu Chen, Y. Detecting and tracking people in real time with RGB-D camera. Pattern Recognit. Lett. 2015, 53, 16–23.

25. Junji, S.; Masaya Chiba, J.M. Visual Person Identification Using a Distance-dependent Appearance Model for a Person Following Robot. Int. J. Autom. Comput. 2013, 10, 438–446.

26. Muñoz Salinas, R.; Aguirre, E.; García-Silvente, M. People Detection and Tracking Using Stereo Vision and Color. Image Vis. Comput. 2007, 25, 995–1007.

27. Vo, D.M.; Jiang, L.; Zell, A. Real time person detection and tracking by mobile robots using RGB-D images. In Proceedings of the 2014 IEEE International Conference on Robotics and Biomimetics (ROBIO 2014), Bali, Indonesia, 5–10 December 2014; pp. 689–694.

28. Harville, M. Stereo person tracking with adaptive plan-view templates of height and occupancy statistics. Image Vis. Comput. 2004, 22, 127–142.

29. Munoz-Salinas, R. A Bayesian plan-view map based approach for multiple-person detection and tracking. Pattern Recognit. 2008, 41, 3665–3676.

30. Munoz-Salinas, R.; Medina-Carnicer, R.; Madrid-Cuevas, F.; Carmona-Poyato, A. People detection and tracking with multiple stereo cameras using particle filters. J. Visual Commun. Image Represent. 2009, 20, 339–350.

31. Munoz-Salinas, R.; Garcia-Silvente, M.; Medina-Carnicer, R. Adaptive multi-modal stereo people tracking without background modelling. J. Visual Commun. Image Represent. 2008, 19, 75–91.

32. Choi, W.; Pantofaru, C.; Savarese, S. Detecting and Tracking People using an RGB-D Camera via Multiple Detector Fusion. In Proceedings of the Workshop on Challenges and Opportunities in Robot Perception, at the International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011.

33. Choi, W.; Pantofaru, C.; Savarese, S. A General Framework for Tracking Multiple People from a Moving Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1577–1591.

34. Dimitrievski, M.; Hamme, D.V.; Veelaert, P.; Philips, W. Robust Matching of Occupancy Maps for Odometry in Autonomous Vehicles. In Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Rome, Italy, 27–29 February 2016; VISAPP (VISIGRAPP 2016), INSTICC. SciTePress: Setúbal, Portugal, 2016; Volume 3, pp. 626–633.

35. Liu, C. Beyond Pixels: Exploring New Representations and Application for Motion Analysis. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, May 2009.

36. Blackman, S.; Popoli, R. Design and Analysis of Modern Tracking Systems; Artech House Radar Library, Artech House: London, UK, 1999.

37. Cappe, O.; Moulines, E.; Ryden, T. Inference in Hidden Markov Models (Springer Series in Statistics); Springer Inc.: Secaucus, NJ, USA, 2005.

38. Van Der Merwe, R.; Doucet, A.; De Freitas, N.; Wan, E. The Unscented Particle Filter. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2001; Volume 13, pp. 584–590.

39. Isard, M.; Blake, A. ICONDENSATION: Unifying Low-Level and High-Level Tracking in a Stochastic Framework. In Proceedings of the 5th European Conference on Computer Vision, Freiburg, Germany, 2–6 June 1998; Springer: London, UK, 1998; Volume I, pp. 893–908.

40. Xiang, Y.; Choi, W.; Lin, Y.; Savarese, S. Subcategory-Aware Convolutional Neural Networks for Object Proposals and Detection. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 924–933.

41. Liu, C.; Gong, S.; Loy, C.C.; Lin, X. Evaluating Feature Importance for Re-identification. In Person Re-Identification; Gong, S., Cristani, M., Yan, S., Loy, C.C., Eds.; Springer: London, UK, 2014; pp. 203–228.

42. Gray, D.; Tao, H. Viewpoint Invariant Pedestrian Recognition with an Ensemble of Localized Features. In Computer Vision – ECCV 2008: 10th European Conference on Computer Vision, Marseille, France, 12–18 October 2008, Proceedings, Part I; Forsyth, D., Torr, P., Zisserman, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 262–275.

43. Burnham, K.P.; Anderson, D.R. Model Selection and Multi-Model Inference, 2nd ed.; Springer Inc.: Secaucus, NJ, USA, 2002; pp. 51–58.

44. Kőnig, D. Grafok es matrixok. Matematikai es Fizikai Lapok 1931, 38, 116–119.

45. Kuhn, H.W. The Hungarian Method for the assignment problem. Naval Res. Logist. Q. 1955, 2, 83–97.

46. Blackman, S. Multiple hypothesis tracking for multiple target tracking. IEEE Aerosp. Electron. Syst. Mag. 2004, 19, 5–18.

47. Xiang, Y.; Alahi, A.; Savarese, S. Learning to Track: Online Multi-object Tracking by Decision Making. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4705–4713.

48. Milan, A.; Leal-Taixe, L.; Reid, I.D.; Roth, S.; Schindler, K. MOT16: A Benchmark for Multi-Object Tracking. arXiv, 2016; arXiv:1603.00831.

49. Bernardin, K.; Stiefelhagen, R. Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. EURASIP J. Image Video Process. 2008, 2008, 246309.

50. Wu, B.; Nevatia, R. Detection and Tracking of Multiple, Partially Occluded Humans by Bayesian Combination of Edgelet based Part Detectors. Int. J. Comput. Vis. 2007, 75, 247–266.

51. De Vylder, J.; Goossens, B. Quasar: A Programming Framework for Rapid Prototyping. Presented at the GPU Technology Conference, Silicon Valley, CA, USA, 4–7 April 2016; NVIDIA: Santa Clara, CA, USA, 2016; p. 1.

52. Lee, B.; Erdenee, E.; Jin, S.; Nam, M.Y.; Jung, Y.G.; Rhee, P.K. Multi-class Multi-object Tracking Using Changing Point Detection. In Computer Vision – ECCV 2016 Workshops: Amsterdam, The Netherlands, 8–10 and 15–16 October 2016, Proceedings, Part II; Hua, G., Jégou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 68–83.

53. Tian, W.; Lauer, M. Joint tracking with event grouping and temporal constraints. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–5.

54. Yoon, J.H.; Lee, C.R.; Yang, M.H.; Yoon, K.J. Online Multi-object Tracking via Structural Constraint Event Aggregation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1392–1400.

55. Yoon, J.H.; Yang, M.H.; Lim, J.; Yoon, K.J. Bayesian Multi-object Tracking Using Motion Context from Multiple Objects. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 33–40.

56. Wang, S.; Fowlkes, C.C. Learning Optimal Parameters for Multi-target Tracking with Contextual Interactions. arXiv, 2016; arXiv:1610.01394.

| Method | Setting | MOTA | MOTP | MT | ML | IDS | FRAG | Runtime | Source |
|---|---|---|---|---|---|---|---|---|---|
| SiRtaKi | on | 59.61% | 72.89% | 30.24% | 15.81% | 136 | 1164 | 0.2 s/GPU | Unknown |
| ET-MOT | on | 59.10% | 73.26% | 38.49% | 10.31% | 316 | 1362 | 0.7 s/GPU | Unknown |
| TuSimple | on | 58.15% | 71.93% | 30.58% | 24.05% | 138 | 818 | 0.6 s/1 core | Unknown |
| NOTM | | 57.67% | 72.17% | 34.36% | 19.24% | 108 | 799 | 0.01 s/1 core | Unknown |
| Be-Track | la on | 50.39% | 72.85% | 25.43% | 27.84% | 235 | 967 | 0.02 s/GPU | PROPOSED |
| MDP | on | 47.22% | 70.36% | 24.05% | 27.84% | 87 | 825 | 0.9 s/8 cores | [47] |
| NOMT * | | 46.62% | 71.45% | 26.12% | 34.02% | 63 | 666 | 0.09 s/16 cores | [12] |
| MCMOT-CPD | | 45.94% | 72.44% | 20.62% | 34.36% | 143 | 764 | 0.01 s/1 core | [52] |
| JCSTD | on | 44.20% | 72.09% | 16.49% | 33.68% | 53 | 917 | 0.07 s/1 core | [53] |
| SCEA * | on | 43.91% | 71.86% | 16.15% | 43.30% | 56 | 641 | 0.06 s/1 core | [54] |
| RMOT * | on | 43.77% | 71.02% | 19.59% | 41.24% | 153 | 748 | 0.02 s/1 core | [55] |
| LP-SSVM * | | 43.76% | 70.48% | 20.62% | 34.36% | 73 | 809 | 0.02 s/1 core | [56] |
| CIWT * | st on | 43.37% | 71.44% | 13.75% | 34.71% | 112 | 901 | 0.28 s/1 core | [13] |
| NOMT-HM * | on | 39.26% | 71.14% | 21.31% | 41.92% | 184 | 863 | 0.09 s/8 cores | [12] |
| NOMT | | 36.93% | 67.75% | 17.87% | 42.61% | 34 | 789 | 0.09 s/16 cores | [12] |
| YT | on | 36.90% | 71.22% | 21.99% | 25.43% | 267 | 995 | 0.03 s/4 cores | Unknown |
| RMOT | on | 34.54% | 68.06% | 14.43% | 47.42% | 81 | 685 | 0.01 s/1 core | [55] |
| LP-SSVM | | 33.33% | 67.38% | 12.37% | 45.02% | 72 | 818 | 0.05 s/1 core | [56] |
| SCEA | on | 33.13% | 68.45% | 9.62% | 46.74% | 16 | 717 | 0.05 s/1 core | [54] |
| CEM | | 27.54% | 68.48% | 8.93% | 51.89% | 96 | 608 | 0.09 s/1 core | [7] |
| NOMT-HM | on | 27.49% | 67.99% | 15.12% | 50.52% | 73 | 732 | 0.09 s/8 cores | [12] |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dimitrievski, M.; Veelaert, P.; Philips, W. Behavioral Pedestrian Tracking Using a Camera and LiDAR Sensors on a Moving Vehicle. Sensors 2019, 19, 391. https://doi.org/10.3390/s19020391
