Superpixel-Based Temporally Aligned Representation for Video-Based Person Re-Identification †

Abstract

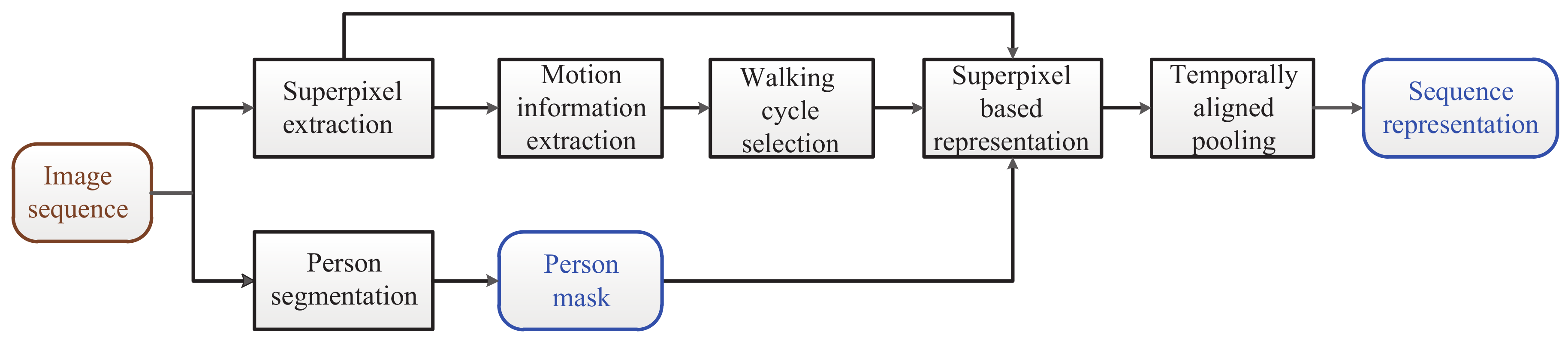

1. Introduction

- (1) We propose a robust temporal alignment method for video-based person re-id, which features superpixel-based motion information extraction, an effective criterion for selecting among candidate walking cycles, and the use of only the best walking cycle to build the appearance representation.

- (2)

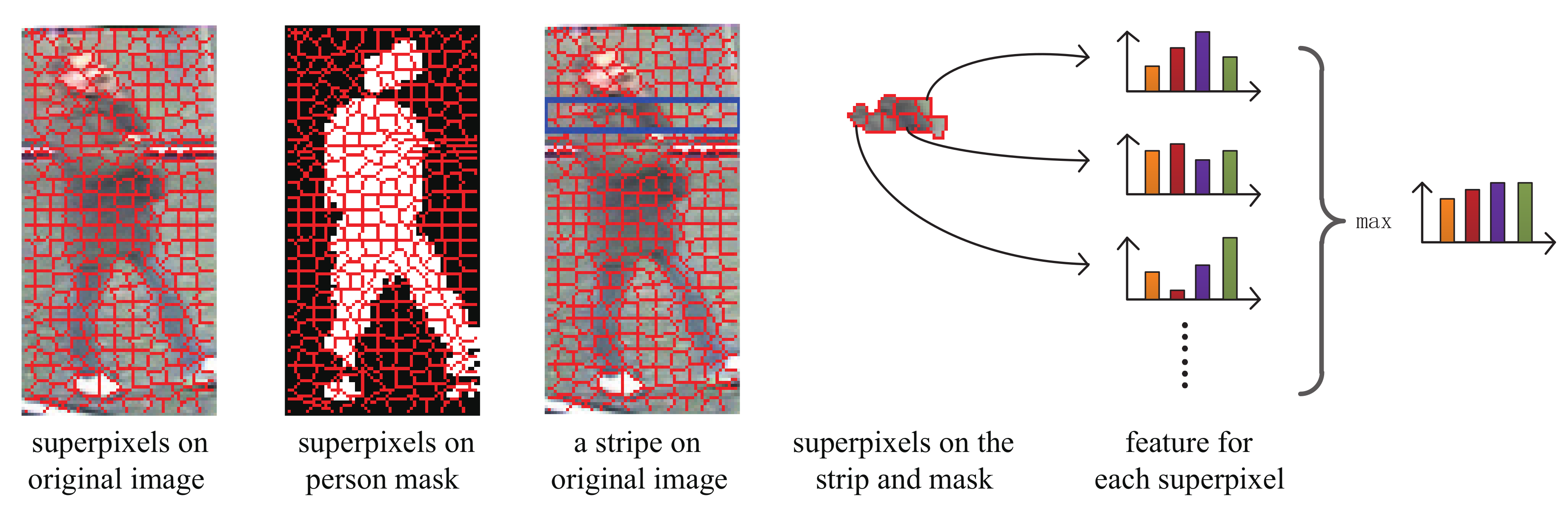

- Superpixel-based support regions and person masks are introduced to still image representation, so as to improve spatial alignment and thereby alleviate the undesired effects of the background.

- (3) The proposed method performs favorably against state-of-the-art methods, including deep learning-based ones.

2. Related Work

2.1. Multiple-Shot Re-Id vs. Video-Based Re-Id

2.2. Video-Based Re-Id Methods

- Temporal alignment. Temporal alignment has been shown to yield robust video-based representations in the context of gait recognition [59], and some recent works consider this problem in video-based re-id [30,31]. Inspired by motion energy in gait recognition [59], Wang et al. propose the Flow Energy Profile (FEP) to describe the motion of the two legs, and employ its local maxima/minima to temporally align the video sequences [30]. To improve the robustness of temporal alignment, Liu et al. propose to use the dominant frequency of the FEP in the discrete Fourier transform domain [31].

  Although these methods [30,31] demonstrate the effectiveness of FEP-based temporal alignment, they still suffer from heavy noise due to cluttered background and occlusions, since they apply optical flow to extract motion information from all the pixels of the lower body, including the background. Moreover, in real video surveillance applications, the video sequence of a person usually contains several walking cycles, which is redundant.

  To address these problems, this paper proposes a superpixel-based temporal alignment method that first extracts superpixels on the lowest portions of the human body in the first frame, then tracks them to obtain the curves of their horizontal displacements, and finally selects the “best” cycle in the curves. Note that only one cycle is used for person representation, which addresses both the redundancy and the noise from cluttered background and occlusions. Our work is partially inspired by the two aforementioned video-based re-id approaches [30,31]. However, our method departs from them in the following three aspects:

- (1) We adopt a superpixel-based strategy to extract the walking cycles of a walking person, i.e., we track the superpixels on the lowest portions of the human body (such as the feet, the ankles, or the legs near the ankles), instead of relying on the motion information of all the pixels of the lower body as in previous approaches [30,31], which is less accurate and less robust because of the heavy noise caused by background clutter or occlusions.

- (2) We propose to use only the best walking cycle for temporal alignment, rather than all the walking cycles. An effective criterion based on the intrinsic periodicity of walking persons is proposed to select the best walking cycle from the motion information of all the superpixels. If the motion information of a superpixel matches the human walking pattern, then to some extent the superpixel is likely to lie on the person and not be occluded.

- (3) Based on our temporal alignment, we introduce a novel temporally aligned pooling method to build the final feature representation. More specifically, we take superpixels as the support regions to characterize the individual still images, and we utilize person masks to further enhance the robustness.

- Spatial–temporal representation. Gait-based features are reliable information for person re-id [59]; however, they suffer from occlusions and cluttered background. Most spatial–temporal representations for video-based re-id are devised by treating videos as 3D volumes, inspired by existing works in action recognition, for instance, 3D-SIFT [60], extended SURF [61], HOG3D [62], local trinary patterns [63], and motion boundary histograms (MBH) [64]. Recently, some spatio–temporal representation methods have been proposed for person re-id [30,31,57,65,66,67]. For instance, Wang et al. use HOG3D [30], Liu et al. aggregate 3D low-level spatial–temporal descriptors into a single Fisher Vector (STFV3D) [31], and Liu et al. propose the Fast Adaptive Spatio-Temporal 3D feature (FAST3D) for video-based re-id [57]. Being built upon 3D volumes, these appearance-based spatial–temporal representations also capture dynamic motion information to some extent. It is worth noting that these representations become more robust when combined with temporal alignment.

  Considering that most 3D representations are extensions of widely used 2D descriptors, this paper proposes a simple framework to build a 3D representation by combining single-image-based representations with temporally aligned pooling. Another reason is that many successful single-image-based representations have been designed specifically for person re-id, such as the LOcal Maximal Occurrence representation (LOMO) [8] and Gaussian Of Gaussian (GOG) [35]. To bring these features to 3D video data, we propose temporally aligned pooling, which integrates all single-image-based features into a spatial–temporal representation.

- Metric learning. Learning a reliable metric for video matching is another important factor in video-based re-id [30,54,55,56,68,69]. Recently, several methods have been proposed for video matching. For instance, Simonnet et al. introduce the Dynamic Time Warping (DTW) distance into metric learning for video-based re-id [54], and Wang et al. propose the Discriminative Video fragments selection and Ranking (DVR) method for video matching [30]. Based on the observation that inter-class distances under a video-based representation are much smaller than those under a single-image-based representation, You et al. propose a top-push distance learning model (TDL) for video-based re-id [56].

  If a fixed-length representation is extracted for each video sequence, metric learning for video-based re-id is the same as that for single-image-based re-id. Thus, single-image-based metric learning methods can be used directly, such as KISSME in [8,31]. In this paper, we obtain fixed-length representations by temporally aligned pooling and use Cross-view Quadratic Discriminant Analysis (XQDA) [8], a well-known single-image-based metric learning method, for video matching.
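To illustrate why a fixed-length video representation lets single-image metric learning be reused unchanged, the following is a minimal sketch of a KISSME-style metric, the simpler method mentioned above. It is not XQDA, which additionally learns a discriminative subspace, and it omits the projection of the metric onto the positive semi-definite cone. The function names and the `ridge` regularizer are assumptions made for illustration only.

```python
import numpy as np


def kissme_metric(features, labels, ridge=1e-6):
    """Estimate M = inv(cov_similar) - inv(cov_dissimilar) from labeled vectors.

    features: (N, D) array, one fixed-length vector per video (assumed input).
    labels:   (N,) identity labels.
    ridge:    small diagonal regularizer (hypothetical) to keep inverses stable.
    """
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    i, j = np.triu_indices(len(x), k=1)  # all unordered pairs
    diffs = x[i] - x[j]
    same = y[i] == y[j]
    dim = x.shape[1]

    def covariance(d):
        # Pairwise differences are treated as zero-mean, so no centering.
        return d.T @ d / len(d) + ridge * np.eye(dim)

    cov_similar = covariance(diffs[same])
    cov_dissimilar = covariance(diffs[~same])
    return np.linalg.inv(cov_similar) - np.linalg.inv(cov_dissimilar)


def metric_distance(m, a, b):
    """Mahalanobis-like score (a - b)^T M (a - b); smaller means more similar."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(d @ m @ d)
```

In use, `features` would hold one pooled vector per video sequence, and `metric_distance` would rank gallery videos for each probe video.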

3. Superpixel-Based Temporally Aligned Representation

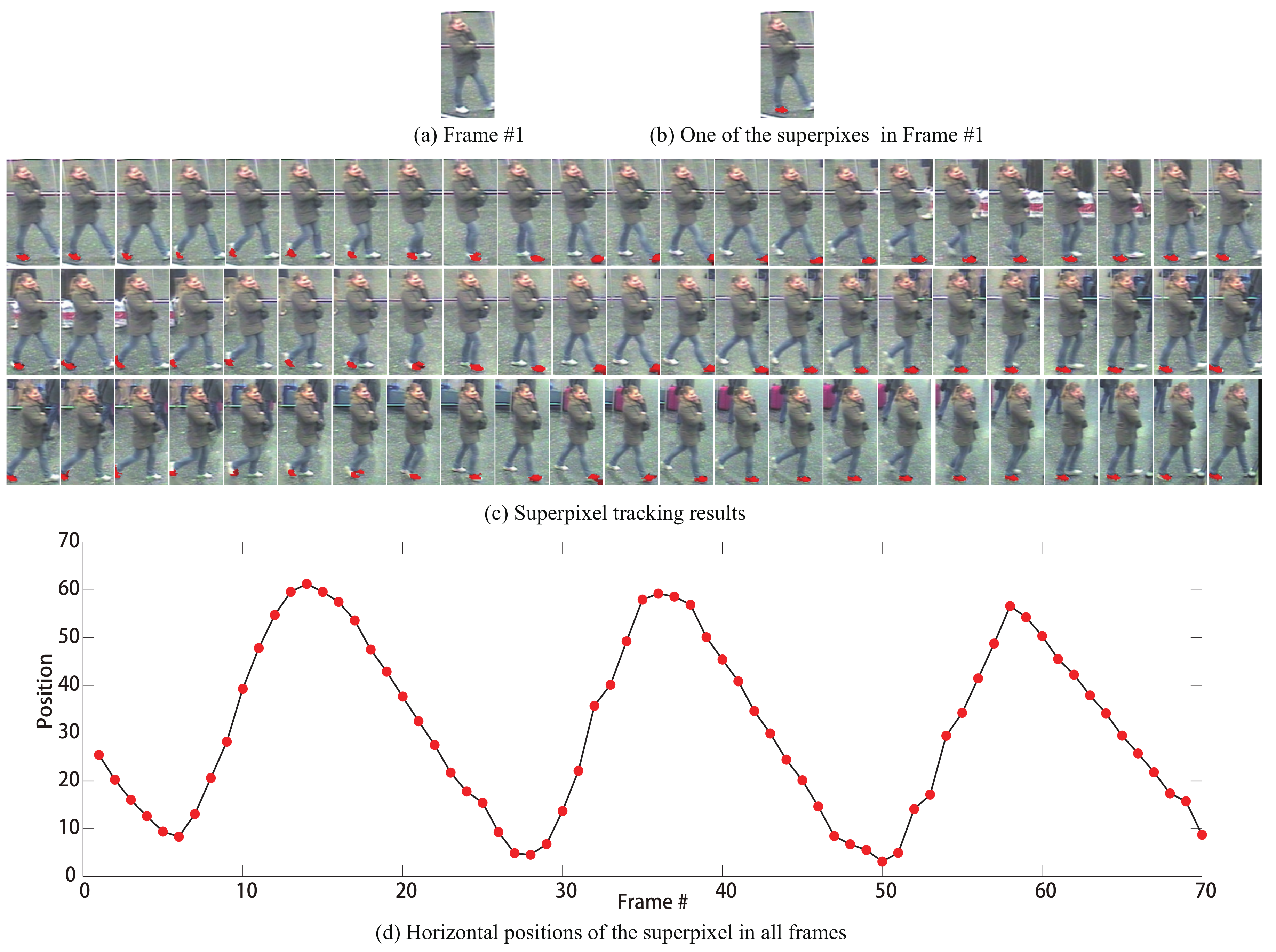

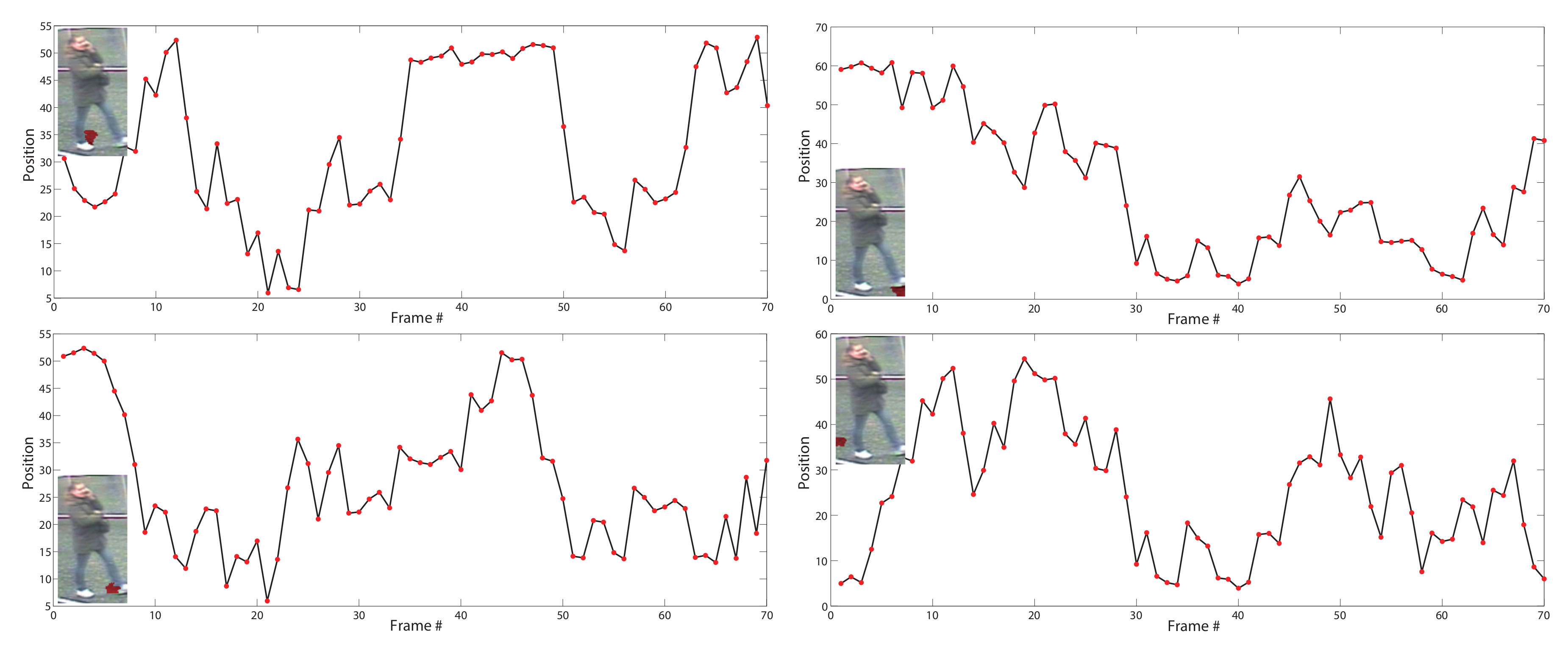

3.1. Motion Information Extraction

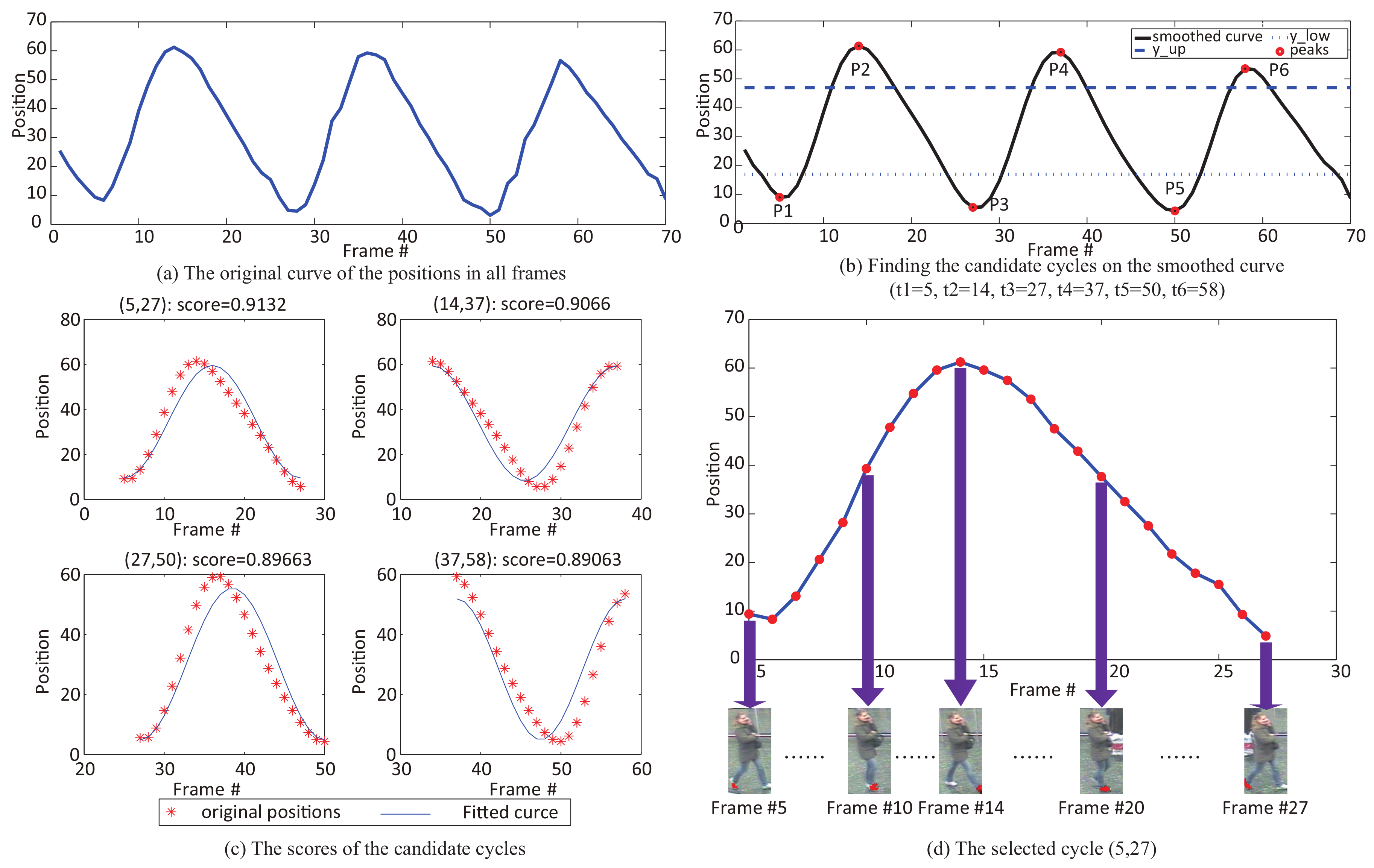

3.2. Walking Cycle Selection
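Section 2.2 describes tracking superpixels on the lowest portions of the body, obtaining their horizontal displacement curves, and keeping only the best walking cycle according to a periodicity criterion. The sketch below illustrates that idea under stated assumptions: the period of each curve is estimated from the peak of its discrete Fourier transform, and each candidate window of one period is scored by how well a single sinusoid fits it. The sinusoid-fit score and all function names are illustrative assumptions, not the criterion defined by the authors.

```python
import numpy as np


def dominant_period(curve):
    """Estimate the period (in frames) of a 1-D curve from its DFT peak."""
    x = np.asarray(curve, dtype=float)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x))
    spectrum[0] = 0.0  # ignore the DC component
    k = int(np.argmax(spectrum))
    if k == 0:
        return None  # flat curve: no periodic motion found
    return len(x) / k


def sinusoid_residual(window):
    """RMS error of fitting one full sine cycle, relative to its amplitude."""
    n = len(window)
    t = 2.0 * np.pi * np.arange(n) / n
    basis = np.column_stack([np.sin(t), np.cos(t), np.ones(n)])
    coef, *_ = np.linalg.lstsq(basis, window, rcond=None)
    amplitude = np.hypot(coef[0], coef[1])
    if amplitude < 1e-8:
        return np.inf
    fitted = basis @ coef
    return float(np.sqrt(np.mean((window - fitted) ** 2)) / amplitude)


def select_best_cycle(curves):
    """Return (superpixel index, start frame, end frame) of the best cycle.

    curves: iterable of 1-D horizontal-displacement curves, one per tracked
    superpixel (assumed input format). Returns None if no cycle is found.
    """
    best = None
    for index, curve in enumerate(curves):
        x = np.asarray(curve, dtype=float)
        period = dominant_period(x)
        if period is None:
            continue
        length = int(round(period))
        if length < 4 or length > len(x):
            continue
        # Slide a one-period window and keep the most sinusoid-like segment.
        for start in range(len(x) - length + 1):
            score = sinusoid_residual(x[start:start + length])
            if best is None or score < best[0]:
                best = (score, index, start, start + length)
    return None if best is None else best[1:]
```

A curve from a superpixel that lies on the background or is occluded tends to fit a sinusoid poorly, so under this assumed score it is unlikely to be selected.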

3.3. Superpixel-Based Representation

3.4. Temporally Aligned Representation
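Section 2.2 states that temporally aligned pooling integrates single-image features over the selected walking cycle into a fixed-length spatial–temporal representation. A minimal sketch of such pooling follows, assuming the cycle is divided into equal temporal segments whose per-frame features are average- or max-pooled and then concatenated. The segment count, its default value, and the function name are hypothetical choices for illustration, not values taken from the paper.

```python
import numpy as np


def temporally_aligned_pooling(frame_features, num_segments=8, mode="avg"):
    """Pool per-frame features of one walking cycle into a fixed-length vector.

    frame_features: (T, D) array, one row per frame of the selected cycle.
    num_segments:   number of temporal segments (hypothetical parameter).
    mode:           "avg" or "max" pooling inside each segment.
    Returns a vector of length num_segments * D regardless of T.
    """
    feats = np.asarray(frame_features, dtype=float)
    if feats.ndim != 2 or feats.shape[0] == 0:
        raise ValueError("expected a non-empty (T, D) array")
    if mode not in ("avg", "max"):
        raise ValueError("mode must be 'avg' or 'max'")
    reduce = np.mean if mode == "avg" else np.max
    num_frames = feats.shape[0]
    edges = np.linspace(0, num_frames, num_segments + 1)
    pooled = []
    for k in range(num_segments):
        lo = int(np.floor(edges[k]))
        # Each segment keeps at least one frame, so short cycles still work;
        # neighbouring segments may then share a frame.
        hi = max(int(np.ceil(edges[k + 1])), lo + 1)
        pooled.append(reduce(feats[lo:hi], axis=0))
    return np.concatenate(pooled)
```

Because the output length depends only on `num_segments` and the feature dimension, cycles of different durations map to vectors of the same size, which is what allows a single-image metric to be applied afterwards.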

4. Experimental Results

4.1. Datasets and Settings

4.1.1. Datasets

4.1.2. Settings

4.2. Comparison with the State-of-the-Art Methods

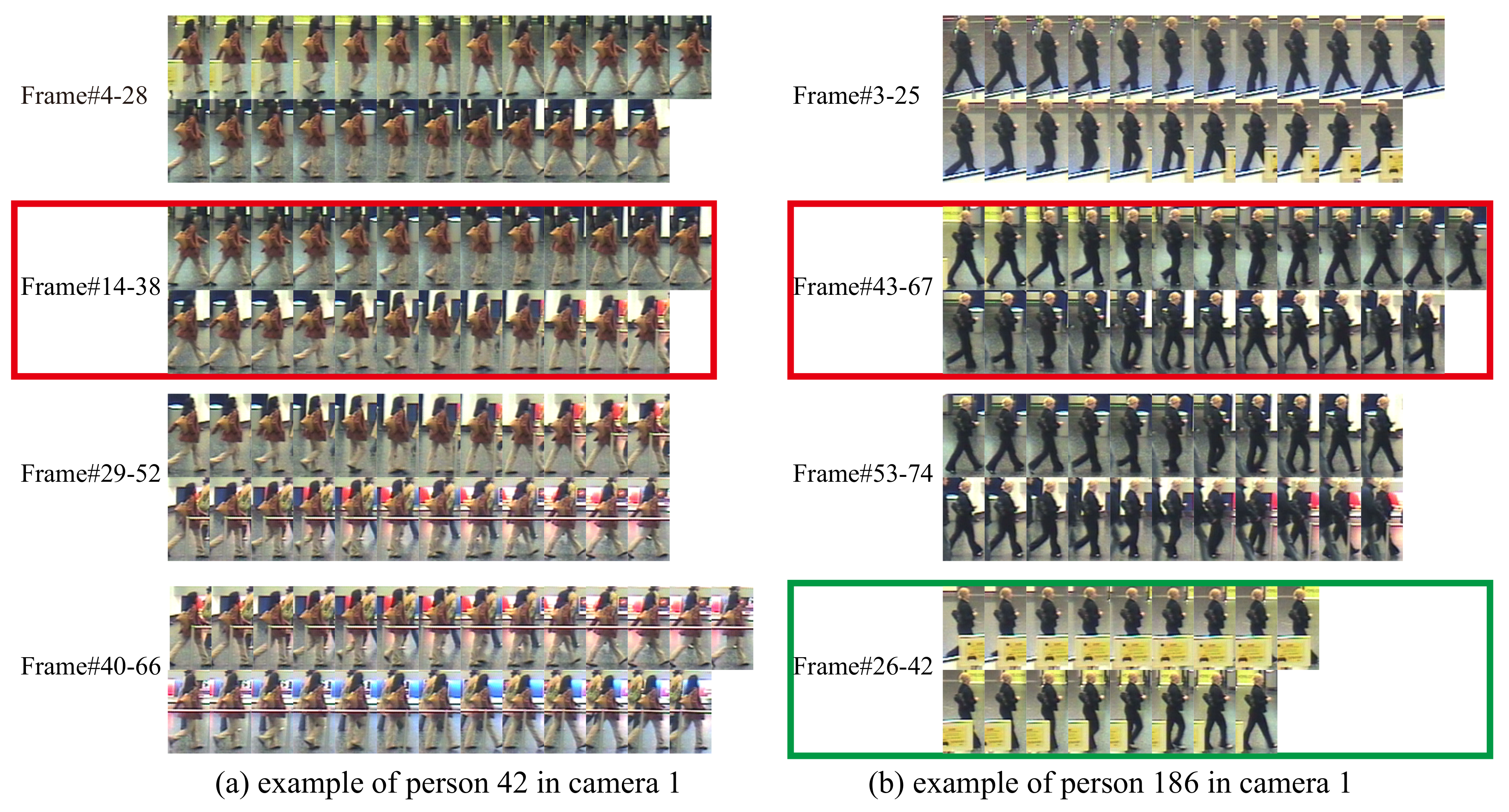

4.3. Examples of Selected Walking Cycles

4.4. Ablation Studies

4.4.1. Evaluation of Temporally Aligned Pooling Manners

4.4.2. Influence of Parameters

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Song, B.; Kamal, A.T.; Soto, C.; Ding, C.; Farrell, J.; Roy-Chowdhury, A.K. Tracking and activity recognition through consensus in distributed camera networks. IEEE Trans. Image Process. 2010, 19, 2564–2579.

2. Sunderrajan, S.; Manjunath, B. Context-Aware Hypergraph Modeling for Re-identification and Summarization. IEEE Trans. Multimed. 2016, 1, 51–63.

3. Vezzani, R.; Baltieri, D.; Cucchiara, R. People re-identification in surveillance and forensics: A survey. ACM Comput. Surv. 2013, 46, 29.

4. Li, W.; Wang, X. Locally aligned feature transforms across views. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3594–3601.

5. Bedagkar-Gala, A.; Shah, S.K. A survey of approaches and trends in person re-identification. Image Vis. Comput. 2014, 32, 270–286.

6. Gong, S.; Cristani, M.; Yan, S.; Loy, C.C. Person Re-Identification; Springer: Berlin, Germany, 2014.

7. Zheng, L.; Yang, Y.; Hauptmann, A.G. Person Re-identification: Past, Present and Future. arXiv 2016, arXiv:1610.02984.

8. Liao, S.; Hu, Y.; Zhu, X.; Li, S.Z. Person Re-identification by Local Maximal Occurrence Representation and Metric Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2197–2206.

9. Satta, R. Appearance descriptors for person re-identification: A comprehensive review. arXiv 2013, arXiv:1307.5748.

10. Bazzani, L.; Cristani, M.; Murino, V. Symmetry-driven accumulation of local features for human characterization and re-identification. Comput. Vis. Image Underst. 2013, 117, 130–144.

11. Zhao, R.; Ouyang, W.; Wang, X. Person re-identification by salience matching. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2528–2535.

12. Ma, B.; Su, Y.; Jurie, F. Covariance descriptor based on bio-inspired features for person re-identification and face verification. Image Vis. Comput. 2014, 32, 379–390.

13. An, L.; Chen, X.; Yang, S.; Bhanu, B. Sparse representation matching for person re-identification. Inf. Sci. 2016, 355, 74–89.

14. Wei, L.; Zhang, S.; Yao, H.; Gao, W.; Tian, Q. GLAD: Global-Local-Alignment Descriptor for Scalable Person Re-Identification. IEEE Trans. Multimed. 2018, 21, 986–999.

15. Yang, L.; Jin, R. Distance Metric Learning: A Comprehensive Survey; Michigan State University: East Lansing, MI, USA, 2006.

16. Zheng, W.S.; Gong, S.; Xiang, T. Person re-identification by probabilistic relative distance comparison. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 649–656.

17. Hirzer, M.; Roth, P.M.; Köstinger, M.; Bischof, H. Relaxed pairwise learned metric for person re-identification. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 780–793.

18. An, L.; Kafai, M.; Yang, S.; Bhanu, B. Reference-based person re-identification. In Proceedings of the IEEE Advanced Video and Signal-based Surveillance (AVSS), Krakow, Poland, 27–30 August 2013; pp. 244–249.

19. Ma, L.; Yang, X.; Tao, D. Person re-identification over camera networks using multi-task distance metric learning. IEEE Trans. Image Process. 2014, 23, 3656–3670.

20. Zhou, T.; Qi, M.; Jiang, J.; Wang, X.; Hao, S.; Jin, Y. Person re-identification based on nonlinear ranking with difference vectors. Inf. Sci. 2014, 279, 604–614.

21. Chen, J.; Zhang, Z.; Wang, Y. Relevance Metric Learning for Person Re-Identification by Exploiting Listwise Similarities. IEEE Trans. Image Process. 2015, 24, 4741–4755.

22. Wang, Z.; Hu, R.; Liang, C.; Yu, Y.; Jiang, J.; Ye, M.; Chen, J.; Leng, Q. Zero-shot Person Re-identification via Cross-view Consistency. IEEE Trans. Multimed. 2016, 18, 260–272.

23. Wang, J.; Sang, N.; Wang, Z.; Gao, C. Similarity Learning with Top-heavy Ranking Loss for Person Re-identification. IEEE Signal Process. Lett. 2016, 23, 84–88.

24. Ye, M.; Liang, C.; Yu, Y.; Wang, Z.; Leng, Q.; Xiao, C.; Chen, J.; Hu, R. Person reidentification via ranking aggregation of similarity pulling and dissimilarity pushing. IEEE Trans. Multimed. 2016, 18, 2553–2566.

25. Sun, C.; Wang, D.; Lu, H. Person Re-Identification via Distance Metric Learning With Latent Variables. IEEE Trans. Image Process. 2017, 26, 23–34.

26. Bazzani, L.; Cristani, M.; Perina, A.; Farenzena, M.; Murino, V. Multiple-shot person re-identification by hpe signature. In Proceedings of the IEEE International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 1413–1416.

27. Farenzena, M.; Bazzani, L.; Perina, A.; Murino, V.; Cristani, M. Person re-identification by symmetry-driven accumulation of local features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2360–2367.

28. Xu, S.; Cheng, Y.; Gu, K.; Yang, Y.; Chang, S.; Zhou, P. Jointly Attentive Spatial-Temporal Pooling Networks for Video-based Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

29. Zhou, S.; Wang, J.; Shi, R.; Hou, Q.; Gong, Y.; Zheng, N. Large Margin Learning in Set-to-Set Similarity Comparison for Person Reidentification. IEEE Trans. Multimed. 2018, 20, 593–604.

30. Wang, T.; Gong, S.; Zhu, X.; Wang, S. Person re-identification by video ranking. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 688–703.

31. Zhang, W.; Ma, B.; Liu, K.; Huang, R. Video-based pedestrian re-identification by adaptive spatio–temporal appearance model. IEEE Trans. Image Process. 2017, 26, 2042–2054.

32. Gao, C.; Wang, J.; Liu, L.; Yu, J.G.; Sang, N. Temporally aligned pooling representation for video-based person re-identification. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 4284–4288.

33. Wu, D.; Zheng, S.J.; Zhang, X.P.; Yuan, C.A.; Cheng, F.; Zhao, Y.; Lin, Y.J.; Zhao, Z.Q.; Jiang, Y.L.; Huang, D.S. Deep learning-based methods for person re-identification: A comprehensive review. Neurocomputing 2019, 337, 354–371.

34. Liu, C.; Zhao, Z. Person re-identification by local feature based on super pixel. In International Conference on Multimedia Modeling; Springer: Berlin/Heidelberg, Germany, 2013; pp. 196–205.

35. Matsukawa, T.; Okabe, T.; Suzuki, E.; Sato, Y. Hierarchical gaussian descriptor for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1363–1372.

36. Wang, J.; Wang, Z.; Liang, C.; Gao, C.; Sang, N. Equidistance constrained metric learning for person re-identification. Pattern Recognit. 2018, 74, 38–51.

37. Liao, S.; Li, S.Z. Efficient psd constrained asymmetric metric learning for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3685–3693.

38. Wang, Z.; Hu, R.; Chen, C.; Yu, Y.; Jiang, J.; Liang, C.; Satoh, S. Person reidentification via discrepancy matrix and matrix metric. IEEE Trans. Cybern. 2017, 48, 3006–3020.

39. Li, W.; Wu, Y.; Li, J. Re-identification by neighborhood structure metric learning. Pattern Recognit. 2017, 61, 327–338.

40. Liu, Y.; Yan, J.; Ouyang, W. Quality aware network for set to set recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5790–5799.

41. Adaimi, G.; Kreiss, S.; Alahi, A. Rethinking Person Re-Identification with Confidence. arXiv 2019, arXiv:1906.04692.

42. Wang, J.; Wang, Z.; Gao, C.; Sang, N.; Huang, R. Deeplist: Learning deep features with adaptive listwise constraint for person reidentification. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 513–524.

43. Liu, J.; Ni, B.; Yan, Y.; Zhou, P.; Cheng, S.; Hu, J. Pose transferrable person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4099–4108.

44. Song, G.; Leng, B.; Liu, Y.; Hetang, C.; Cai, S. Region-based quality estimation network for large-scale person re-identification. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

45. Li, M.; Zhu, X.; Gong, S. Unsupervised person re-identification by deep learning tracklet association. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 737–753.

46. Sun, Y.; Xu, Q.; Li, Y.; Zhang, C.; Li, Y.; Wang, S.; Sun, J. Perceive Where to Focus: Learning Visibility-aware Part-level Features for Partial Person Re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 393–402.

47. Zhong, Z.; Zheng, L.; Luo, Z.; Li, S.; Yang, Y. Invariance matters: Exemplar memory for domain adaptive person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 598–607.

48. Zhang, Z.; Lan, C.; Zeng, W.; Chen, Z. Densely Semantically Aligned Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 667–676.

49. Zheng, L.; Bie, Z.; Sun, Y.; Wang, J.; Su, C.; Wang, S.; Tian, Q. Mars: A video benchmark for large-scale person re-identification. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 868–884.

50. Zhang, W.; He, X.; Lu, W.; Qiao, H.; Li, Y. Feature Aggregation With Reinforcement Learning for Video-Based Person Re-Identification. IEEE Trans. Neural Netw. Learn. Syst. 2019, 1–6.

51. Wu, Y.; Lin, Y.; Dong, X.; Yan, Y.; Ouyang, W.; Yang, Y. Exploit the unknown gradually: One-shot video-based person re-identification by stepwise learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5177–5186.

52. Wu, L.; Wang, Y.; Gao, J.; Li, X. Where-and-when to look: Deep siamese attention networks for video-based person re-identification. IEEE Trans. Multimed. 2018, 21, 1412–1424.

53. Zhou, Z.; Huang, Y.; Wang, W.; Wang, L.; Tan, T. See the forest for the trees: Joint spatial and temporal recurrent neural networks for video-based person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6776–6785.

54. Simonnet, D.; Lewandowski, M.; Velastin, S.A.; Orwell, J.; Turkbeyler, E. Re-identification of pedestrians in crowds using dynamic time warping. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 423–432.

55. Karanam, S.; Li, Y.; Radke, R.J. Sparse re-id: Block sparsity for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 33–40.

56. You, J.; Wu, A.; Li, X.; Zheng, W.S. Top-push Video-based Person Re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

57. Liu, Z.; Chen, J.; Wang, Y. A fast adaptive spatio–temporal 3D feature for video-based person re-identification. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 4294–4298.

58. Ye, M.; Li, J.; Ma, A.J.; Zheng, L.; Yuen, P.C. Dynamic graph co-matching for unsupervised video-based person re-identification. IEEE Trans. Image Process. 2019, 28, 2976–2990.

59. Martín-Félez, R.; Xiang, T. Gait recognition by ranking. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 328–341.

60. Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional sift descriptor and its application to action recognition. In Proceedings of the ACM International Conference on Multimedia, Bavaria, Germany, 24–29 September 2007; pp. 357–360.

61. Willems, G.; Tuytelaars, T.; Van Gool, L. An efficient dense and scale-invariant spatio–temporal interest point detector. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 650–663.

62. Klaser, A.; Marszałek, M.; Schmid, C. A spatio–temporal descriptor based on 3d-gradients. In Proceedings of the British Machine Vision Conference, Leeds, UK, 1–4 September 2008; p. 275.

63. Yeffet, L.; Wolf, L. Local trinary patterns for human action recognition. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 492–497.

64. Dalal, N.; Triggs, B.; Schmid, C. Human detection using oriented histograms of flow and appearance. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 428–441.

65. Bak, S.; Charpiat, G.; Corvee, E.; Bremond, F.; Thonnat, M. Learning to match appearances by correlations in a covariance metric space. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 806–820.

66. Bedagkar-Gala, A.; Shah, S.K. Multiple person re-identification using part based spatio–temporal color appearance model. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–13 November 2011; pp. 1721–1728.

67. Gheissari, N.; Sebastian, T.B.; Hartley, R. Person reidentification using spatiotemporal appearance. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1528–1535.

68. Ma, X.; Zhu, X.; Gong, S.; Xie, X.; Hu, J.; Lam, K.; Zhong, Y. Person Re-Identification by Unsupervised Video Matching. arXiv 2016, arXiv:1611.08512.

69. Zhu, X.; Jing, X.Y.; Wu, F.; Feng, H. Video-Based Person Re-Identification by Simultaneously Learning Intra-Video and Inter-Video Distance Metrics. IEEE Trans. Image Process. 2016, 27, 3552–3559.

70. McLaughlin, N.; Martinez del Rincon, J.; Miller, P. Recurrent Convolutional Network for Video-based Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

71. Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. VRSTC: Occlusion-Free Video Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 7183–7192.

72. Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. Interaction-And-Aggregation Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 9317–9326.

73. Zhang, R.; Li, J.; Sun, H.; Ge, Y.; Luo, P.; Wang, X.; Lin, L. SCAN: Self-and-Collaborative Attention Network for Video Person Re-identification. IEEE Trans. Image Process. 2019, 28, 4870–4882.

74. Zhao, Y.; Shen, X.; Jin, Z.; Lu, H.; Hua, X.S. Attribute-driven feature disentangling and temporal aggregation for video person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 4913–4922.

75. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

76. Han, J. Bipedal Walking for a Full-Sized Humanoid Robot Utilizing Sinusoidal Feet Trajectories and Its Energy Consumption. Ph.D. Thesis, Virginia Polytechnic Institute and State University, Blacksburg, VA, USA, 2012.

77. Cross, R. Standing, walking, running, and jumping on a force plate. Am. J. Phys. 1999, 67, 304–309.

78. Luo, P.; Wang, X.; Tang, X. Pedestrian parsing via deep decompositional network. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2648–2655.

79. Hirzer, M.; Beleznai, C.; Roth, P.M.; Bischof, H. Person re-identification by descriptive and discriminative classification. In Scandinavian Conference on Image Analysis; Springer: Berlin/Heidelberg, Germany, 2011; pp. 91–102.

80. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

81. Dehghan, A.; Modiri Assari, S.; Shah, M. Gmmcp tracker: Globally optimal generalized maximum multi clique problem for multiple object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4091–4099.

82. Pedagadi, S.; Orwell, J.; Velastin, S.; Boghossian, B. Local fisher discriminant analysis for pedestrian re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3318–3325.

| Method | iLIDS-VID R = 1 | iLIDS-VID R = 5 | iLIDS-VID R = 20 | PRID 2011 R = 1 | PRID 2011 R = 5 | PRID 2011 R = 20 | MARS R = 1 | MARS R = 5 | MARS R = 20 | MARS mAP |
|---|---|---|---|---|---|---|---|---|---|---|
| GEI + RSVM [59] | 2.8 | 13.1 | 34.5 | - | - | - | - | - | - | - |
| HOG3D + DVR [30] | 23.3 | 42.4 | 68.4 | 28.9 | 55.3 | 82.8 | - | - | - | - |
| Color + LFDA [82] | 28.0 | 55.3 | 88.0 | 43.0 | 73.1 | 90.3 | - | - | - | - |
| STFV3D + KISSME [31] | 44.3 | 71.7 | 91.7 | 64.1 | 87.3 | 92.0 | - | - | - | - |
| CS-FAST3D + RMLLC [57] | 28.4 | 54.7 | 78.1 | 31.2 | 60.3 | 88.6 | - | - | - | - |
| SRID [55] | 24.9 | 44.5 | 66.2 | 35.1 | 59.4 | 79.7 | - | - | - | - |
| TDL [56] | 56.3 | 87.6 | 98.3 | 56.7 | 80.0 | 93.6 | - | - | - | - |
| RNN [70] | 58 | 84 | 96 | 70 | 90 | 97 | - | - | - | - |
| CNN + XQDA + MQ [49] | 53.0 | 81.4 | 95.1 | 77.3 | 93.5 | 99.3 | 68.3 | 82.6 | 89.4 | 49.3 |
| SPRNN [53] | 55.2 | 86.5 | 97.0 | 79.4 | 94.4 | 99.3 | 70.6 | 90.0 | 97.6 | 50.7 |
| ASTPN [28] | 62.0 | 86.0 | 94.0 | 77.0 | 95.0 | 99.0 | 44.0 | 70.0 | 81.0 | - |
| DSAN [52] | 61.9 | 86.8 | 98.6 | 77.0 | 96.4 | 99.4 | 73.5 | 85.0 | 97.5 | - |
| TAPR [32] | 55.0 | 87.5 | 97.2 | 68.6 | 94.6 | 98.9 | - | - | - | - |
| STAR | 67.5 | 91.7 | 98.8 | 69.2 | 94.9 | 99.1 | 80.0 | 89.3 | 95.1 | 70.0 |
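The Rank R columns in the table above are matching rates from the cumulative matching characteristic (CMC) curve. As a rough illustration of how such a rate is obtained from a probe-to-gallery distance matrix, here is a minimal sketch assuming every probe has at least one true match in the gallery. The function name and argument layout are hypothetical, and the evaluation protocol actually used in the paper (splits, number of trials, mAP computation) is not reproduced here.

```python
import numpy as np


def cmc_rank_rates(dist, query_ids, gallery_ids, ranks=(1, 5, 20)):
    """Percentage of queries whose true match appears within the top R.

    dist:        (Q, G) distance matrix, smaller means more similar.
    query_ids:   (Q,) identity label of each query video.
    gallery_ids: (G,) identity label of each gallery video.
    """
    dist = np.asarray(dist, dtype=float)
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)
    order = np.argsort(dist, axis=1, kind="stable")   # nearest gallery first
    hits = gallery_ids[order] == query_ids[:, None]   # (Q, G) boolean matrix
    if not hits.any(axis=1).all():
        raise ValueError("every query needs at least one gallery match")
    first_hit = hits.argmax(axis=1)                   # 0-based rank of match
    return {r: 100.0 * float(np.mean(first_hit < r)) for r in ranks}
```

For example, a query whose correct gallery entry is the fourth nearest counts toward R = 5 and R = 20 but not toward R = 1.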

| Methods | R = 1 | R = 5 | R = 10 | R = 20 |
|---|---|---|---|---|
| STAR_avg | 67.5 | 91.7 | 95.9 | 98.8 |
| STAR_max | 65.6 | 91.3 | 96.1 | 98.7 |
| STAR_key | 56.2 | 87.4 | 94.8 | 98.3 |
| STAR_no | 52.8 | 83.5 | 90.8 | 95.7 |

| Methods | R = 1 | R = 5 | R = 10 | R = 20 |
|---|---|---|---|---|
| STAR_1 | 52.8 | 83.5 | 90.8 | 95.7 |
| STAR_2 | 64.7 | 88.9 | 93.8 | 97.9 |
| STAR_4 | 67.3 | 90.8 | 95.5 | 98.4 |
| STAR_8 | 67.5 | 91.7 | 95.9 | 98.8 |
| STAR_16 | 66.2 | 91.7 | 95.7 | 98.6 |
| STAR_32 | 65.9 | 91.5 | 95.8 | 98.7 |

| Superpixel Number | R = 1 | R = 5 | R = 20 |
|---|---|---|---|
| 50 | 65.9 | 88.0 | 99.0 |
| 75 | 65.9 | 89.4 | 98.8 |
| 100 | 67.5 | 91.7 | 98.8 |
| 125 | 66.7 | 90.0 | 98.7 |
| 150 | 65.8 | 88.6 | 98.7 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gao, C.; Wang, J.; Liu, L.; Yu, J.-G.; Sang, N. Superpixel-Based Temporally Aligned Representation for Video-Based Person Re-Identification. Sensors 2019, 19, 3861. https://doi.org/10.3390/s19183861
