Abstract

Wearable devices offer a convenient means to monitor biosignals in real time at relatively low cost, and provide continuous monitoring without causing any discomfort. Among the signals that contain critical information about the status of the human body, the electromyography (EMG) signal is particularly useful for monitoring muscle functionality and activity during sport, fitness, or daily life. In particular, surface electromyography (sEMG) has proven to be a suitable technique in several health monitoring applications, thanks to its non-invasiveness and ease of use. However, recording EMG signals from multiple channels yields a large amount of data that increases the power consumption of wireless transmission, thus reducing the sensor lifetime. Compressed sensing (CS) is a promising data acquisition solution that takes advantage of the signal sparseness in a particular basis to significantly reduce the number of samples needed to reconstruct the signal. As a large variety of algorithms have been developed in recent years with this technique, it is of paramount importance to assess their performance in order to meet the stringent energy constraints imposed in the design of low-power wireless body area networks (WBANs) for sEMG monitoring. The aim of this paper is to present a comprehensive comparative study of computational methods for CS reconstruction of EMG signals, giving some useful guidelines for the design of efficient low-power WBANs. For this purpose, four of the most common reconstruction algorithms used in practical applications have been analyzed in depth and compared in terms of both accuracy and speed, and the sparseness of the signal has been estimated in three different bases. A wide range of experiments are performed on real-world EMG biosignals coming from two different datasets, giving rise to two independent case studies.

1. Introduction

Surface electromyography (sEMG) is a technique to capture and measure the electrical potential at the skin surface due to muscle activity [1,2]. The EMG signal recorded in a muscle is the collective action potential of all the muscle fibers of a motor unit, which work together because they are stimulated by the same motor neuron. The muscular contraction is generated by a stimulus that propagates from the brain cortex to the target muscle as an electrical potential, named action potential (AP). The sEMG signal is frequently used for the evaluation of muscle functionality and activity, thanks to the non-invasiveness and ease of this technique [3,4,5]. Common applications are fatigue analysis [6] of rehabilitation exercises [5,7], postural control [8], musculoskeletal disorder analysis [9], gait analysis [10], movement recognition [11], gesture recognition [12], and prosthetic control [13,14,15], to cite only a few. Among these applications, monitoring and automatic recognition of human activities are of particular interest both for sport and fitness and for the healthcare of elderly and impaired people [16,17]. Wireless body area networks (WBANs) provide an effective and relatively low-cost solution for biosignal monitoring in real time [18,19]. A WBAN typically consists of one or more low-power, miniaturized, lightweight devices with wireless communication capabilities that operate in the proximity of a human body [20]. However, power consumption represents a major problem for the design and for the widespread adoption of such devices. A large part of the device power consumption is required for the wireless transmission of the signals, which are recorded from multiple channels at a high sampling rate [21]. Standard compression protocols have a high computational complexity, and their implementation in the sensor nodes would add a large overhead to the power consumption.
Compressed sensing (CS) techniques, which rely on the sparsity property of many natural signals, have been successfully applied in WBAN long-term signal monitoring, since CS significantly saves transmit power by reducing the sampling rate [22,23,24,25,26]. Recent studies have applied CS to sEMG signals for gesture recognition, an innovative application field of sEMG signal analysis [27,28]. In this context, CS is of great importance in reducing the size of the transmitted sEMG data while still allowing good-quality signals to be reconstructed and hand movements to be recognized. The fundamental idea behind CS is that, rather than first sampling at a high rate and then compressing the sampled data, as usually done in standard techniques, we would like to find ways to directly sense the data in compressed form, i.e., at a lower sampling rate. To make this possible, CS relies on the concept of sparsity, which implies that certain classes of signals, called sparse signals, have only a small number of non-zero coordinates when expressed in a proper basis. The CS field grew out of the seminal work of Candès, Romberg, Tao and Donoho [29,30,31,32,33,34,35], who showed that a finite-dimensional sparse signal can be recovered from a number of samples much smaller than its length. The CS paradigm combines two fundamental stages, encoding and reconstruction. The reconstruction of a signal acquired with CS represents the most critical and expensive stage, as it involves an optimization that seeks the best solution to an underdetermined set of linear equations with no prior knowledge of the signal except that it is sparse when represented in a proper basis. To obtain better performance in the reconstruction of the undersampled signal, a large variety of algorithms have been developed in recent years [36].
In the class of computational techniques for solving sparse approximation problems, two approaches are computationally practical and lead to provably exact solutions under some defined conditions: convex optimization and greedy algorithms. Convex optimization is the original CS reconstruction algorithm, formulated as a linear programming problem [37]. Unlike convex optimization, greedy algorithms try to solve the reconstruction problem in a less exact manner. In this class, the most common algorithms used in practical applications are orthogonal matching pursuit (OMP) [38,39,40,41,42], compressive sampling matching pursuit (CoSaMP) [43,44], and normalized iterative hard thresholding (NIHT) [45]. All these algorithms are applicable in principle to a generic signal; however, in the design and implementation of a sensor architecture it is of paramount importance to assess their performance with reference to the specific signal to be acquired. Additionally, the performance of the algorithms can vary widely, so that a comparative study that demonstrates the practicability of such algorithms is welcome to designers of low-power WBANs for biosignal monitoring [26].

The aim of this paper is to explore the trade-off in the choice of a compressed sensing algorithm, belonging to the classes of techniques previously described, to be applied in EMG sensor applications. Thus, the ultimate goal of the paper is to present a comparative study of computational methods for CS reconstruction of EMG signals in real-world EMG signal acquisition systems, leading to efficient, low-power WBANs. For example, a useful application of this comparative study can be the selection of the best algorithm to be applied in EMG-based gesture recognition. In addition, the effect of the basis used for reconstruction on signal sparseness has been analyzed for three different bases.

This paper is organized as follows. Section 2 summarizes the basic concepts of CS theory. Section 3 is mainly focused on CS reconstruction algorithms and, in particular, gives a complete description of four algorithms: Convex Optimization, OMP, CoSaMP, and NIHT. Section 4 reports a comparative study of the performance of the four algorithms when applied to real-world EMG signals.

2. Compressed Sensing Background

In this section, we provide an overview of the basic concepts of CS theory. For ease of reference, Table 1 reports a list of the main symbols and definitions used throughout the text. Some of these are currently adopted in the literature, while other specific operators will be defined later.

Table 1.

Notation.

CS theory asserts that, rather than acquiring the entire signal and then compressing it, as is usually done in compression techniques, it is possible to capture only the useful information at rates smaller than the Nyquist sampling rate.

The CS paradigm combines two fundamental stages, encoding and reconstruction.

In the encoding stage, the N-dimensional input signal f is encoded into an M-dimensional set of measurements y through a linear transformation by the $M \times N$ measurement matrix $\Phi$, where $M < N$, i.e., $y = \Phi f$. In this way the CS data acquisition system directly translates analog data into a compressed digital form.

In the reconstruction stage, given the measurements $y = \Phi f$ and assuming the signal f to be recovered is known to be sparse in some basis $\Psi$, in the sense that all but a few coefficients $x_i$ of its expansion $f = \Psi x$ are zero, the sparsest solution x (fewest significant non-zero $x_i$) is found. The reconstruction algorithms exhibit better performance when the signal to be reconstructed is exactly k-sparse on the basis $\Psi$, i.e., when x has at most k non-zero components. Thus, in some algorithms the elements of x that give negligible contributions are discarded. To this end the following operator is defined

that selects the set of k indexes corresponding to the largest values of $|x_i|$. The set so derived represents the so-called set of sparsity. Another useful definition in this context is the operator that returns a vector with the same elements as x in the selected sub-set and zero elsewhere, formally

where $\setminus$ denotes the difference of two sets. The consecutive application of the two operators gives rise to a k-sparse vector obtained from x by keeping only the k components with the largest values of $|x_i|$; the resulting composite operator is called the reduced operator.
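The two operators and their composition can be illustrated with a short sketch. The function names `sparsity_set`, `restrict`, and `reduce_k` are hypothetical labels introduced here for illustration only; they are not the notation of Table 1.

```python
def sparsity_set(x, k):
    """Return the set of k indexes of x with the largest absolute values."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    return set(order[:k])


def restrict(x, support):
    """Keep the entries of x indexed by `support` and set the others to zero."""
    return [v if i in support else 0.0 for i, v in enumerate(x)]


def reduce_k(x, k):
    """Reduced operator: k-sparse approximation of x (hypothetical name)."""
    return restrict(x, sparsity_set(x, k))


x = [0.1, -3.0, 0.5, 2.0, -0.05]
print(sparsity_set(x, 2))  # {1, 3}
print(reduce_k(x, 2))      # [0.0, -3.0, 0.0, 2.0, 0.0]
```

Ties between equal magnitudes are broken here in favor of the lower index, which is an arbitrary choice of this sketch.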

A natural formulation of the recovery problem is within an $\ell_0$-norm minimization framework, which seeks a solution x of the problem

$$\min_{x} \|x\|_0 \quad \text{subject to} \quad y = \Phi \Psi x \tag{4}$$

where $\|\cdot\|_0$ is a counting function that returns the number of non-zero components in its argument. Unfortunately, the $\ell_0$-minimization problem is NP-hard, and hence cannot be used in practical applications. A method to avoid this computationally intractable formulation is to consider an $\ell_1$-minimization problem.

It has been shown [33] that when x is the solution to the convex approximation problem

$$\min_{x} \|x\|_1 \quad \text{subject to} \quad y = \Phi \Psi x \tag{5}$$

then the reconstruction is exact. More specifically, only M measurements in the $\Phi$ domain, selected uniformly at random, are needed to reconstruct the signal, provided M satisfies the inequality

$$M \geq C \, \mu^2(\Phi, \Psi) \, S \, \log N$$

where N represents the signal size, S the index of sparseness, C a constant and $\mu(\Phi, \Psi)$ the coherence between the sensing basis $\Phi$ and the representation basis $\Psi$. Coherence measures the largest correlation between any two elements of $\Phi$ and $\Psi$ and is given by $\mu(\Phi, \Psi) = \sqrt{N} \max_{k,j} |\langle \varphi_k, \psi_j \rangle|$, with $\mu(\Phi, \Psi) \in [1, \sqrt{N}]$. Random matrices are largely incoherent with any fixed basis $\Psi$. Therefore, since the smaller the coherence the fewer samples are needed, random matrices are the best choice for the sensing basis.
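As a small numerical illustration of coherence, the sketch below assumes the standard definition $\mu(\Phi, \Psi) = \sqrt{N} \max_{k,j} |\langle \varphi_k, \psi_j \rangle|$ with unit-norm vectors, and evaluates it for two toy pairs of $4 \times 4$ bases. The function name `coherence` and the example bases (spikes and a normalized Hadamard matrix) are choices made here, not taken from the experiments of this paper.

```python
import math


def coherence(phi_rows, psi_cols):
    """sqrt(N) times the largest absolute inner product between the two bases.

    Both arguments are lists of unit-norm vectors of length N.
    """
    n = len(phi_rows[0])
    largest = 0.0
    for p in phi_rows:
        for q in psi_cols:
            largest = max(largest, abs(sum(a * b for a, b in zip(p, q))))
    return math.sqrt(n) * largest


# Spike (identity) basis and a normalized 4x4 Hadamard basis.
spikes = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
signs = [[1, 1, 1, 1], [1, -1, 1, -1], [1, 1, -1, -1], [1, -1, -1, 1]]
hadamard = [[0.5 * s for s in row] for row in signs]

print(coherence(spikes, hadamard))  # 1.0, the lower bound (maximal incoherence)
print(coherence(spikes, spikes))    # 2.0, i.e. sqrt(N), the upper bound
```

The two printed values correspond to the two ends of the admissible range of the coherence for N = 4.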

The performance metric usually adopted to measure the reduction in the data required to represent the signal f is the compression ratio, defined as

$$\mathrm{CR} = \frac{N}{M}$$

that is, the ratio between the lengths of the original and compressed signal vectors. Sparsity is instead usually defined as the ratio $k/N$ between the number k of non-zero coefficients and the signal length N.

Sometimes it is more convenient to define sparsity with respect to the dimension M, thus giving $k/M$. The two definitions are related by $k/M = \mathrm{CR} \cdot k/N$.
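As a worked example of these definitions, consider purely hypothetical values $N = 1000$, $M = 250$ and $k = 50$ (chosen here for illustration and not taken from the experiments), and assume that sparsity is measured as the fraction of non-zero coefficients:

```latex
\mathrm{CR} = \frac{N}{M} = \frac{1000}{250} = 4, \qquad
\frac{k}{N} = \frac{50}{1000} = 0.05, \qquad
\frac{k}{M} = \frac{50}{250} = 0.2 = \mathrm{CR} \cdot \frac{k}{N}.
```

With these numbers, the sparsity referred to the number of measurements M is four times the sparsity referred to the frame length N, the factor being exactly the compression ratio.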

3. The Algorithms

As the CS sampling framework includes two main activities, encoding and reconstruction, some specific algorithms must be derived for this purpose.

3.1. Encoding

The CS encoder uses a linear transformation to project the vector f into the lower-dimensional vector y, through the measurement matrix $\Phi$. In addition to being incoherent with respect to the basis $\Psi$, the measurement matrix must facilitate the practical implementation of the encoder. One widely used approach is to use Bernoulli random matrices, whose entries take only two values (e.g., $\pm 1$). This choice avoids multiplications in the matrix-product operation $y = \Phi f$. Moreover, simple, fast and low-power digital and analog hardware implementations of the encoder are possible [26].
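The saving in multiplications can be seen in the following minimal sketch of a Bernoulli encoder. It assumes that the matrix entries are $\pm 1$ with equal probability (the scaling actually used in the experiments is not stated in the text), and the function names `bernoulli_matrix` and `encode` are hypothetical.

```python
import random


def bernoulli_matrix(m, n, seed=0):
    """Draw an m x n matrix with entries +1 or -1 (assumed equiprobable)."""
    rng = random.Random(seed)
    return [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(m)]


def encode(phi, f):
    """Compute y = phi * f using additions and subtractions only."""
    y = []
    for row in phi:
        acc = 0.0
        for sign, sample in zip(row, f):
            # A +1 entry adds the sample, a -1 entry subtracts it.
            acc = acc + sample if sign > 0 else acc - sample
        y.append(acc)
    return y


phi = [[1, -1, 1], [-1, -1, 1]]
print(encode(phi, [1.0, 2.0, 4.0]))  # [3.0, 1.0]
```

Each measurement is a signed sum of the input samples, which is what makes simple accumulate-based hardware encoders possible.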

3.2. Basis Matrix

A wide range of basis matrices can be adopted in Equation (4); three of the most familiar bases will be used in this paper, namely DCT, Haar and Daubechies' wavelet (DB4). Although DCT does not seem to be an adequately sparse basis for the EMG signal, it was used in one of the two case studies because of the signal pre-filtering applied during acquisition, as will be explained in Section 4. Additionally, other recent works [46,47] have demonstrated the validity of the DCT basis for CS applied to the EMG signal. The matrix $\Psi$ for Haar and DB4 was built using a parallel filter bank wavelet implementation [48].

3.3. Reconstruction

CS reconstruction algorithms can be divided into two classes: convex optimization and greedy algorithms.

3.3.1. Convex Optimization

L1-minimization

The CS theory asserts that when f is sufficiently sparse, the recovery via $\ell_1$-minimization is provably exact. Thus, a fundamental algorithm for reconstruction is convex optimization, wherein the $\ell_1$-norm of the reconstructed signal is an efficient measure of sparsity. The CS reconstruction process is described by Equation (5), which can be regarded as a linear programming problem. This approach is also known as basis pursuit.

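Assuming that $f \in \mathbb{R}^{N}$ is a frame of the EMG signal to be reconstructed, $\Psi$ is an $N \times N$ basis matrix, and $\Phi$ is an $M \times N$ Bernoulli matrix, the constraint in Equation (5) can be rewritten as

$$y = A x \tag{9}$$

with $A = \Phi \Psi$. Introducing the Lagrange function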

where T denotes matrix transposition, solving problem (5) is equivalent to determining the stationary point of the Lagrange function with respect to both x and the Lagrange multipliers. A usual technique for this problem is the projected-gradient algorithm, based on the following iterative scheme

where the step-size parameter regulates the convergence of the algorithm. By differentiating (10) we obtain

then, combining Equations (11) and (12) with the constraint $y = Ax$ and assuming that $AA^T$ is invertible, it results

Finally, the following iterative solution

is obtained. To make the convergence parameter independent of signal power the following normalized version of the algorithm can be adopted

with and . To initialize the algorithm a vector given by

that solves the following $\ell_2$-minimization problem

has been chosen. The step-size parameter determines the convergence of the algorithm; thus, to establish a proper choice of its value, a convergence criterion should be derived. However, a complete treatment of convergence is a difficult task and is beyond the scope of this paper. To address this problem, the value of the step size has been chosen using a semi-heuristic criterion that bounds the steady-state ripple given by

where specifies the desired accuracy. In such a way we obtain

which can be reduced to the more practical condition

An optimized version of the algorithm with a reduced number of products can be derived as follows. Let us rewrite Equation (15) as

where

and

The variation of q from to t

only depends on

which can be rewritten in a compact form as

where

By defining

Equation (27) is equivalent to

Finally, from Equations (24), (26) and (29) and defining the set we have

Thus, the summation in Equation (30) is extended only to the terms for which a sign changing from to occurs, thus reducing the number of products required at each step.

A pseudo-code of the algorithm is reported as Algorithm 1.

| Algorithm 1 L1-minimization |

| Input: Initialize: Output: k-sparse coefficient vector x while do // reduce to binary vector if then // define the set of indices corresponding to a change from to else end if end while |

3.3.2. Greedy Algorithms

Orthogonal Matching Pursuit (OMP)

This algorithm reconstructs a k-sparse coefficient vector x, i.e., a vector with at most k non-zero components. The algorithm tries to find the k directions corresponding to the non-zero components of x, starting from the residual given by the measurements y. At each step the column of A that is most strongly correlated with the residual is selected. Then the best coefficients are found by solving the following $\ell_2$-minimization problem

thus giving

Finally, the residual, i.e., the difference between the actual measurements y and their current approximation, is updated. A mathematical description of the algorithm is reported in Algorithm 2.

| Algorithm 2 OMP |

| Input: Initialize: Output: k-sparse coefficient vector x while do // find the column of A that is most strongly correlated with the residual // merge the new column // find the best coefficients from (31) // update the residual end while |
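The steps listed in Algorithm 2 can be sketched in plain Python as follows. This is an illustrative sketch rather than the implementation used in the experiments: the least-squares step is solved through the normal equations with a small Gaussian elimination, which is adequate only for small, well-conditioned problems, and the columns of A are assumed to have comparable norms. The function names `dot`, `solve`, and `omp` are introduced here.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(G, b):
    """Solve the small square system G z = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(G)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            w = M[r][c] / M[c][c]  # fails if the selected columns are dependent
            for j in range(c, n + 1):
                M[r][j] -= w * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][j] * z[j] for j in range(r + 1, n))) / M[r][r]
    return z


def omp(A, y, k):
    """Return a k-sparse x such that A x approximates y."""
    m, n = len(A), len(A[0])
    cols = [[A[i][j] for i in range(m)] for j in range(n)]
    residual, support, coef = list(y), [], []
    for _ in range(k):
        # Find the unused column most strongly correlated with the residual.
        candidates = [j for j in range(n) if j not in support]
        best = max(candidates, key=lambda j: abs(dot(cols[j], residual)))
        support.append(best)  # merge the new column
        # Best coefficients in the least-squares sense on the selected columns.
        G = [[dot(cols[a], cols[b]) for b in support] for a in support]
        coef = solve(G, [dot(cols[a], y) for a in support])
        # Update the residual.
        residual = [y[i] - sum(c * cols[j][i] for c, j in zip(coef, support))
                    for i in range(m)]
    x = [0.0] * n
    for c, j in zip(coef, support):
        x[j] = c
    return x


s = 2 ** -0.5
A = [[1.0, 0.0, 0.0, s], [0.0, 1.0, 0.0, s], [0.0, 0.0, 1.0, 0.0]]
y = [3.0, 0.0, -2.0]  # generated by the 2-sparse vector [3, 0, -2, 0]
print(omp(A, y, 2))
```

In this toy example the two selected columns are the first and the third, so the generating sparse vector is recovered.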

Compressive Sampling Matching Pursuit (CoSaMP)

Unlike OMP, the CoSaMP algorithm tries to find the columns of that are the most strongly correlated with the residual, thus correcting the reconstruction on the basis of the residual achieved at each step. The columns are determined by the selection step

where is the sub-matrix of made by columns indexed by the set W, and is the residual at current iteration step t. Thus, the algorithm proceeds to estimate the best coefficients h for approximating the residual with the new columns indexed by . As this step corresponds to an LMS problem, it results

Finally, the sparsity operator

with is applied to obtain the sparse vector x. At the end of the algorithm the residual is updated with the new signal reconstruction . A pseudo-code of the algorithm is reported in Algorithm 3.

Normalized Iterative Hard Thresholding (NIHT)

The basic idea underlying the NIHT algorithm is that the sparse components to be identified give a large contribution to the gradient of the residual. The algorithm tries to find these components by following the gradient of the residual, i.e.,

thus obtaining

The sparse vector is derived at each iteration t by applying the reduced operator to the estimated vector ,

| Algorithm 3 CoSaMP |

| Input: Initialize: // find k columns of that are most strongly correlated with residual Output: k-sparse coefficient vector x while do // number of new columns to be selected // find columns of that are most strongly correlated with residual // merge the new columns such that // find the best coefficients for residual approximation // find the set of sparsity // find sparse vector x // update the residual end while |

As in CoSaMP the initialization is made by choosing the columns of that are most strongly correlated with residual

and then estimating the best coefficients

for residual approximation. A different step size has been used for each component by defining the step size vector as

thus, normalizing the components of the gradient vector . In this way the updated equation for x becomes

where is the normalized gradient vector and ⊙ denotes the element-wise product of vectors. The value of has been estimated by minimizing the residual, i.e., such that

or

A closed form of cannot be derived as it depends on the set selected after the updating of . To circumvent this problem an iterative approach has been used, starting from an initial estimation of to compute and then updating to the true value. In this way from previous Equation (45) we obtain

where

| Algorithm 4 NIHT |

| Input: Initialize: Output: k-sparse coefficient vector x while do // update residual // step size vector // normalized gradient vector // initialize the set of sparsity if then while (stop criterion on ) do // update  with step size given by (46) // update set of sparsity // find sparse vector end while else // find sparse vector end if end while |
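To make the gradient-plus-thresholding idea concrete, the sketch below implements plain iterative hard thresholding with a fixed scalar step size. This is a deliberate simplification and not the NIHT variant of Algorithm 4: the per-component normalized step vector and the inner loop on the set of sparsity are omitted, and the names `hard_threshold` and `iht` as well as the step value are assumptions of this example.

```python
def hard_threshold(x, k):
    """Keep the k entries of x with the largest absolute values, zero the rest."""
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(x)]


def iht(A, y, k, step=0.5, iterations=50):
    """Plain IHT: x <- H_k(x + step * A^T (y - A x)), starting from x = 0."""
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for _ in range(iterations):
        # Residual of the current estimate.
        residual = [y[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(m)]
        # Correlation of each column of A with the residual.
        gradient = [sum(A[i][j] * residual[i] for i in range(m)) for j in range(n)]
        # Gradient step followed by the reduced (hard-thresholding) operator.
        x = hard_threshold([x[j] + step * gradient[j] for j in range(n)], k)
    return x


s = 2 ** -0.5
A = [[1.0, 0.0, 0.0, s], [0.0, 1.0, 0.0, s], [0.0, 0.0, 1.0, 0.0]]
y = [3.0, 0.0, -2.0]  # generated by the 2-sparse vector [3, 0, -2, 0]
print(iht(A, y, 2))   # approaches [3, 0, -2, 0]
```

A fixed step is safe here because it does not exceed the reciprocal of the squared spectral norm of this particular A; for a general matrix the step has to be adapted, which is the purpose of the normalization used in NIHT.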

4. Comparative Study

To quantify the performance of the CS algorithms previously described a comparative study has been conducted on two different sets of EMG signals, giving rise to case study A and case study B.

A similar study of CS applied to the EMG signal was performed in [49]. In that work sparsity is enforced on the signal with a time-domain thresholding technique, and the reconstruction SNR is measured with respect to the sparsified signal. In this work, to obtain an estimate of the overall information loss of the signal, after enforcing sparsity with the reduced operator for each basis, we measured the SNR with respect to the original signal x.

4.1. Case Study A

The signals used in this study refer to three different muscles, namely biceps brachii, deltoideus medius, and triceps brachii. They were recorded with the sEMG acquisition set-up described in [16], following the protocol outlined in [50,51]. The EMG signal was high-pass filtered at 5 Hz and low-pass filtered at 500 Hz before being sampled at 2 kHz. The algorithms were applied to frames of length , which is a value large enough to limit SNR variations among frames. In the simulations the index k and the compression factor , i.e., the inverse of , were varied. The performance has been measured based on the following equivalent signal-to-noise ratio

where is the reconstruction signal, by averaging the results obtained with different frames.
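A reconstruction SNR of this kind can be computed as in the sketch below. It assumes the common definition of SNR in decibels as the ratio between the energy of the original frame and the energy of the reconstruction error, and it averages the per-frame values in dB; both choices, as well as the names `snr_db` and `mean_snr_db`, are assumptions of this example rather than details confirmed by the text.

```python
import math


def snr_db(original, reconstructed):
    """10*log10(signal energy / error energy) for one frame (assumed definition)."""
    signal = sum(v * v for v in original)
    error = sum((a - b) ** 2 for a, b in zip(original, reconstructed))
    if error == 0.0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(signal / error)


def mean_snr_db(frames, reconstructions):
    """Average of the per-frame SNR values, taken in dB (assumed convention)."""
    values = [snr_db(f, r) for f, r in zip(frames, reconstructions)]
    return sum(values) / len(values)


print(snr_db([3.0, 4.0], [3.0, 3.5]))  # signal energy 25, error energy 0.25
```

In the printed example the energy ratio is 100, which corresponds to 20 dB.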

4.1.1. Basis Selection

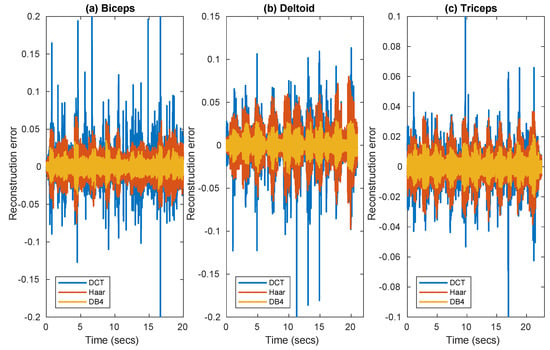

Figure 1 compares for the three muscles the reconstruction error in three frames extracted from the data set, as achieved with convex optimization using three different bases, DCT, Haar, and DB4. Since the signal was pre-filtered at 500 Hz, this can lead to an improvement of sparsity in the frequency domain making DCT worth testing.

Figure 1.

Reconstruction error of EMG signal as achieved with convex optimization, using DCT, Haar, and DB4 bases in frames corresponding to three muscles (a) Biceps (b) Deltoideus (c) Triceps.

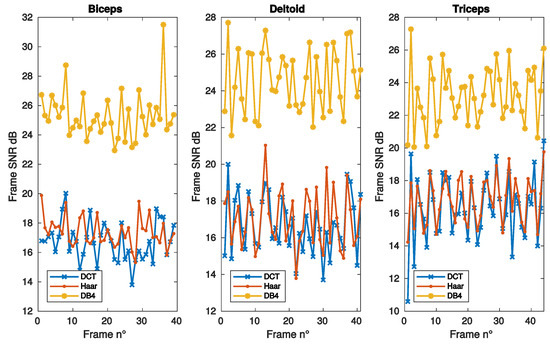

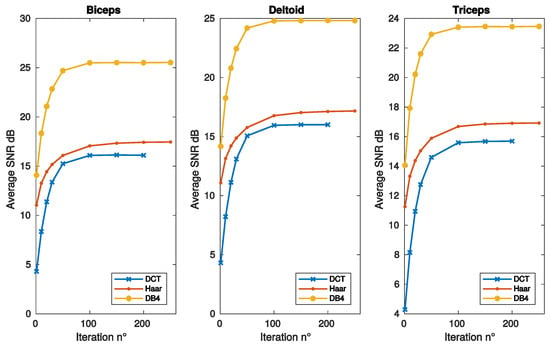

Figure 2 and Figure 3 report the SNR as a function of frame number and of algorithm iterations, respectively, for the same muscles of Figure 1. The DB4 basis clearly shows the best reconstruction performance in all the conditions considered in these figures.

Figure 2.

SNR vs. frame number, as achieved with convex optimization, using DCT, Haar, and DB4 bases, for the same muscles of Figure 1.

Figure 3.

SNR vs. algorithm iterations, as achieved with convex optimization, for the same bases and muscles of Figure 1.

4.1.2. Comparison of Algorithms Performance

As the ultimate goal of this paper is to study and compare the CS methods for the reconstruction of EMG signals, an extensive set of experiments has been carried out with the algorithms previously described.

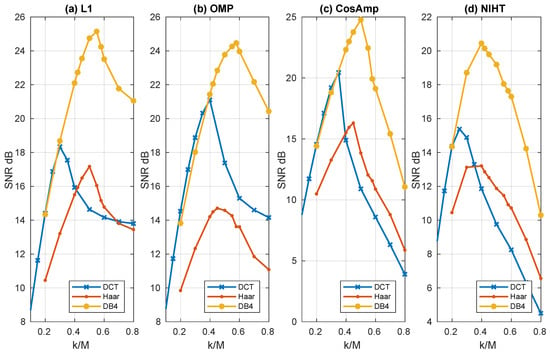

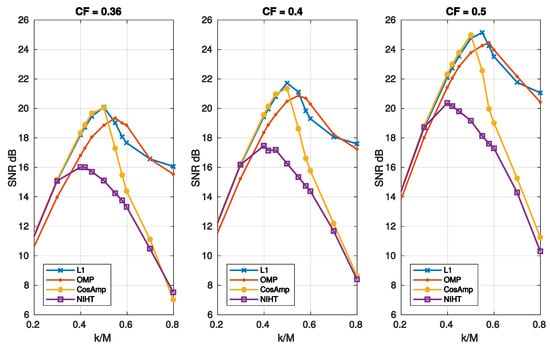

Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8 report the performance achieved with the four algorithms L1, OMP, CoSaMP, and NIHT under different experimental conditions. In particular, the behavior of SNR as a function of sparsity for the four algorithms and the three bases is shown in Figure 4, where a constant value of compression factor is used. Here the sparsity with respect to the dimension M has been adopted as for the behavior is not of particular significance. These results make evident the superiority of DB4 with respect to the other bases, as already pointed out in the previous figures. Concerning algorithm performance, all the algorithms show a pronounced peak near the value of . This behavior is due to the fact that the SNR is measured with respect to the original signal, and as decreases the fidelity between x and deteriorates. Moreover, while for OMP, CoSaMP, and NIHT, the SNR falls rapidly as increases, the L1 algorithm remains nearly constant beyond the maximum.

Figure 4.

Case Study A—SNR as a function of sparsity with a compression factor , for the four algorithms, (a) L1, (b) OMP, (c) CoSaMP, (d) NIHT, using the same bases of Figure 1.

Figure 5.

Case Study A—SNR as a function of sparsity and compression factor , for the four algorithms, L1, OMP, CoSaMP, NIHT, using the DB4 basis.

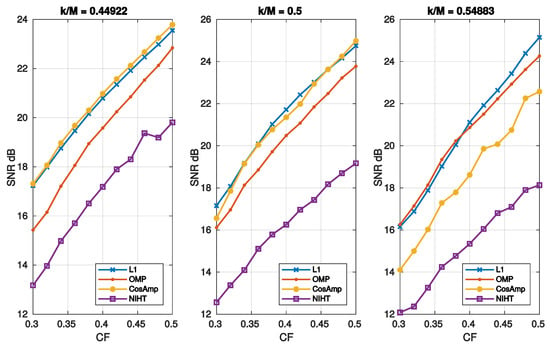

Figure 6.

Case Study A—SNR as function of compression factor for the four algorithms and three values of sparsity .

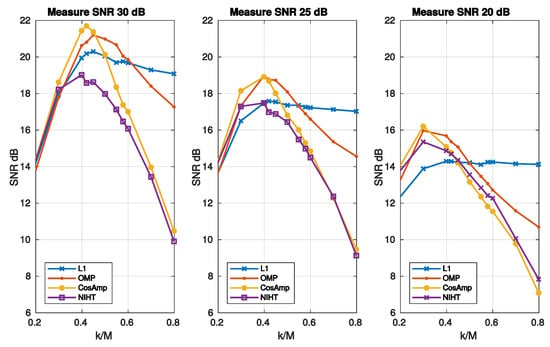

Figure 7.

Case Study A—SNR as a function of sparsity for the four algorithms and three values of noise superimposed to the signal.

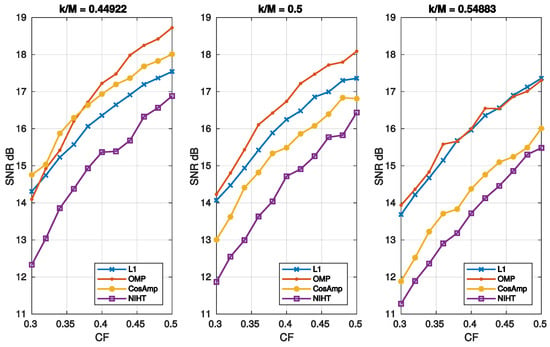

Figure 8.

Case Study A—SNR as a function of compression factor for the four algorithms and three values of sparsity . A value of SNR = 25 dB for the measurement signal y is used.

Figure 5 reports the SNR as a function of sparsity for different values of . In these cases, L1 and OMP perform best and show a similar behavior. Figure 6 depicts the SNR as a function of compression factor for different values of . In this case too, L1 and OMP show the best performance.

4.1.3. Noise Tolerance

Real-data CS acquisition systems are inherently noisy; thus, to simulate a more realistic situation, some experiments have been conducted with noise superimposed on the signal. The effect of a noisy signal y on CS reconstruction corresponds to an error in the sparse solution x given in this case by

where denotes the noise-free solution. The error term can be particularized for the four algorithms as follows:

where n is the noise superimposed to y. It is straightforward to show that the following inequality

holds, thus giving the relationship

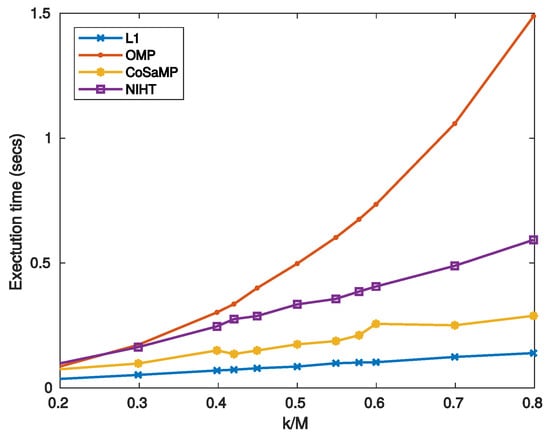

where and refer to the and components, respectively. For high values of noise SNR degenerates to SNR thus worsening the noise-free performance. It is worth noticing that for the SNR is independent of , as it results from Equation (50) and the definition of SNR. This implies that reducing the SNR does not affect the reconstruction, thus resulting almost constant with . For the other algorithms increases with , so that a maximum for the SNR is expected. Figure 7 reports the SNR as a function of sparsity for three values of superimposed noise, while Figure 8 is the noisy version of Figure 6, in which a value of SNR = 25 dB for the measurement signal y is used. The experimental results confirm the considerations stated above for L1, which shows the worst behavior when the measurement SNR decreases. As for OMP, CoSaMP, and NIHT, their performance is almost independent of for low values of it, while it suddenly worsens when exceeds a critical value of about 0.5. Finally, Figure 9 reports the computational cost and execution time on MATLAB respectively as functions of sparsity . The execution time was measured using the MATLAB tic and toc functions. These figures clearly show that L1-minimization outperforms the other algorithms.
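For the noisy experiments described above, measurements with a prescribed SNR (for instance the 25 dB used for Figure 8) can be generated as in the following sketch. It assumes additive white Gaussian noise scaled so that the ratio between measurement energy and noise energy matches the target exactly; the noise model actually used in the experiments is not specified in the text, and the function name `add_noise` is hypothetical.

```python
import math
import random


def add_noise(y, target_snr_db, seed=0):
    """Return y plus Gaussian noise scaled to the requested SNR in dB."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in y]
    signal_energy = sum(v * v for v in y)
    noise_energy = sum(v * v for v in noise)
    # Scale the noise so that signal_energy / scaled_noise_energy = 10^(SNR/10).
    scale = math.sqrt(signal_energy / (noise_energy * 10.0 ** (target_snr_db / 10.0)))
    return [v + scale * n for v, n in zip(y, noise)]


y = [1.0, -2.0, 0.5, 3.0]
noisy = add_noise(y, 25.0)
error_energy = sum((a - b) ** 2 for a, b in zip(noisy, y))
print(10.0 * math.log10(sum(v * v for v in y) / error_energy))  # close to 25
```

Feeding the noisy vector to a reconstruction routine in place of y then reproduces the kind of noise-tolerance test discussed in this section.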

Figure 9.

Case Study A—Execution time on MATLAB as a function of sparsity .

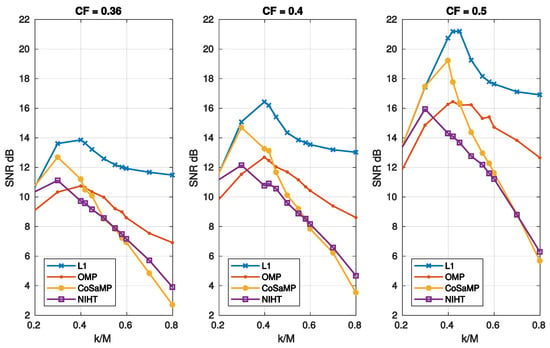

4.2. Case Study B

The EMG signals used in this case study come from PhysioBank [52], a large and growing archive of well-characterized digital recordings of physiological signals and related data for use by the biomedical research community. In particular, the data come from the ‘Neuroelectric and Myoelectric Databases’ of the PhysioBank archives. A class of this database, named ‘Examples of Electromyograms’ [53], has been used; it contains short EMG recordings from three subjects (one without neuromuscular disease, one with myopathy, one with neuropathy). The signals are sampled at a frequency of 4 kHz and the frame has a length , the same as case study A. As the signal from this dataset was not low-pass filtered, it contains all typical EMG frequency components; therefore, this time we discarded DCT and Haar, using only the DB4 basis. We chose to add this case study to analyse performance when the signal has the lowest possible sparsity, which is the worst-case scenario for reconstruction.

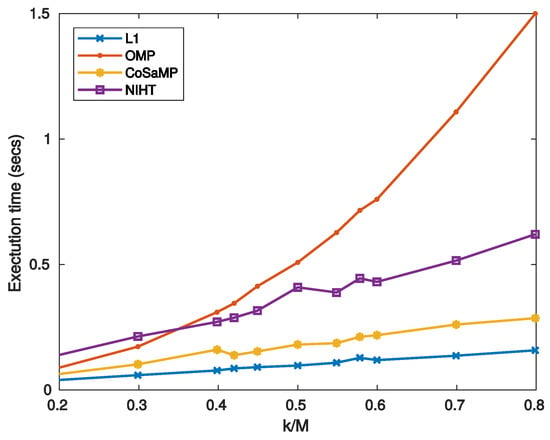

Figure 10 reports the execution time as a function of sparsity for the four algorithms. Figure 11 compares the SNR as a function of sparsity for three values of , as obtained with the four algorithms. As shown in these figures, the results behave similarly to those achieved in case study A.

Figure 10.

Case Study B—Execution time on MATLAB as a function of sparsity .

Figure 11.

Case Study B—SNR as a function of sparsity and compression factor , for the four algorithms, L1, OMP, CoSaMP, NIHT, using the DB4 basis.

Finally, based on the experimental results previously reported, a qualitative assessment of the four reconstruction algorithms can be derived that explores the trade-off in the choice of a CS reconstruction algorithm for EMG sensor applications. To this end, Table 2 summarizes the performance of the four reconstruction algorithms in terms of accuracy, noise tolerance, and speed.

Table 2.

Comparison, in terms of accuracy and speed, of the four algorithms L1, OMP, CoSaMP, NIHT.

The L1 minimization algorithm has an excellent behavior for accuracy, noise tolerance, and speed, thus outperforming the other algorithms. Among these, CoSaMP shows the best trade-off between accuracy and speed.

5. Conclusions

This paper presents a comprehensive comparative study of four of the most common algorithms for CS reconstruction of EMG signals, namely L1-minimization, OMP, CoSaMP, and NIHT. The study has been conducted using a wide range of EMG biosignals coming from two different datasets. Concerning algorithm accuracy, all the algorithms show a pronounced peak of SNR near the value of . However, while for OMP, CoSaMP, and NIHT, the SNR falls rapidly, the L1 algorithm remains nearly constant beyond the maximum. As for the effect of noise on CS reconstruction, L1-minimization shows a behavior that is almost independent of . The results on computational cost and execution time on MATLAB show that L1-minimization outperforms the other algorithms. Finally, Table 2 summarizes the performance, in terms of accuracy, noise tolerance, speed, and computational cost of the four algorithms.

Author Contributions

Investigation, L.M., C.T., L.F., and P.C.; Methodology, L.M., C.T., L.F., and P.C.; Writing—original draft, L.M., C.T., L.F., and P.C.

Funding

This work was supported by a Università Politecnica delle Marche Research Grant.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, and in the decision to publish the results.

References

- Naik, G.R.; Selvan, S.E.; Gobbo, M.; Acharyya, A.; Nguyen, H.T. Principal Component Analysis Applied to Surface Electromyography: A Comprehensive Review. IEEE Access 2016, 4, 4025–4037. [Google Scholar] [CrossRef]

- Merlo, A.; Farina, D.; Merletti, R. A fast and reliable technique for muscle activity detection from surface EMG signals. IEEE Trans. Biomed. Eng. 2003, 50, 316–323. [Google Scholar] [CrossRef] [PubMed]

- Fukuda, T.Y.; Echeimberg, J.O.; Pompeu, J.E.; Lucareli, P.R.G.; Garbelotti, S.; Gimenes, R.; Apolinário, A. Root mean square value of the electromyographic signal in the isometric torque of the quadriceps, hamstrings and brachial biceps muscles in female subjects. J. Appl. Res. 2010, 10, 32–39. [Google Scholar]

- Nawab, S.H.; Roy, S.H.; Luca, C.J.D. Functional activity monitoring from wearable sensor data. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Francisco, CA, USA, 1–5 September 2004; Volume 1, pp. 979–982. [Google Scholar]

- Lee, S.Y.; Koo, K.H.; Lee, Y.; Lee, J.H.; Kim, J.H. Spatiotemporal analysis of EMG signals for muscle rehabilitation monitoring system. In Proceedings of the 2013 IEEE 2nd Global Conference on Consumer Electronics, Tokyo, Japan, 1–4 October 2013; pp. 1–2. [Google Scholar]

- Biagetti, G.; Crippa, P.; Curzi, A.; Orcioni, S.; Turchetti, C. Analysis of the EMG Signal During Cyclic Movements Using Multicomponent AM–FM Decomposition. IEEE J. Biomed. Health Inform. 2015, 19, 1672–1681. [Google Scholar] [CrossRef] [PubMed]

- Chang, K.M.; Liu, S.H.; Wu, X.H. A wireless sEMG recording system and its application to muscle fatigue detection. Sensors 2012, 12, 489–499. [Google Scholar] [CrossRef]

- Ghasemzadeh, H.; Jafari, R.; Prabhakaran, B. A Body Sensor Network With Electromyogram and Inertial Sensors: Multimodal Interpretation of Muscular Activities. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 198–206. [Google Scholar] [CrossRef] [PubMed]

- Du, W.; Omisore, M.; Li, H.; Ivanov, K.; Han, S.; Wang, L. Recognition of Chronic Low Back Pain during Lumbar Spine Movements Based on Surface Electromyography Signals. IEEE Access 2018, 6, 65027–65042.

- Spulber, I.; Georgiou, P.; Eftekhar, A.; Toumazou, C.; Duffell, L.; Bergmann, J.; McGregor, A.; Mehta, T.; Hernandez, M.; Burdett, A. Frequency analysis of wireless accelerometer and EMG sensors data: Towards discrimination of normal and asymmetric walking pattern. In Proceedings of the 2012 IEEE International Symposium on Circuits and Systems, Seoul, Korea, 20–23 May 2012; pp. 2645–2648.

- Zhang, X.; Chen, X.; Li, Y.; Lantz, V.; Wang, K.; Yang, J. A Framework for Hand Gesture Recognition Based on Accelerometer and EMG Sensors. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2011, 41, 1064–1076.

- Rahimi, A.; Benatti, S.; Kanerva, P.; Benini, L.; Rabaey, J.M. Hyperdimensional biosignal processing: A case study for EMG-based hand gesture recognition. In Proceedings of the 2016 IEEE International Conference on Rebooting Computing (ICRC), San Diego, CA, USA, 17–19 October 2016; pp. 1–8.

- Brunelli, D.; Tadesse, A.M.; Vodermayer, B.; Nowak, M.; Castellini, C. Low-cost wearable multichannel surface EMG acquisition for prosthetic hand control. In Proceedings of the 2015 6th International Workshop on Advances in Sensors and Interfaces (IWASI), Gallipoli, Italy, 18–19 June 2015; pp. 94–99.

- Yang, D.; Jiang, L.; Huang, Q.; Liu, R.; Liu, H. Experimental Study of an EMG-Controlled 5-DOF Anthropomorphic Prosthetic Hand for Motion Restoration. J. Intell. Robot. Syst. 2014, 76, 427–441.

- Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

- Biagetti, G.; Crippa, P.; Falaschetti, L.; Turchetti, C. Classifier Level Fusion of Accelerometer and sEMG Signals for Automatic Fitness Activity Diarization. Sensors 2018, 18, 2850.

- Roy, S.H.; Cheng, M.S.; Chang, S.S.; Moore, J.; Luca, G.D.; Nawab, S.H.; Luca, C.J.D. A Combined sEMG and Accelerometer System for Monitoring Functional Activity in Stroke. IEEE Trans. Neural Syst. Rehabil. Eng. 2009, 17, 585–594.

- Varshney, U. Pervasive Healthcare and Wireless Health Monitoring. Mob. Netw. Appl. 2007, 12, 113–127.

- Movassaghi, S.; Abolhasan, M.; Lipman, J.; Smith, D.; Jamalipour, A. Wireless Body Area Networks: A Survey. IEEE Commun. Surv. Tutor. 2014, 16, 1658–1686.

- Cavallari, R.; Martelli, F.; Rosini, R.; Buratti, C.; Verdone, R. A Survey on Wireless Body Area Networks: Technologies and Design Challenges. IEEE Commun. Surv. Tutor. 2014, 16, 1635–1657.

- Zhang, Y.; Zhang, F.; Shakhsheer, Y.; Silver, J.D.; Klinefelter, A.; Nagaraju, M.; Boley, J.; Pandey, J.; Shrivastava, A.; Carlson, E.J.; et al. A Batteryless 19 μW MICS/ISM-Band Energy Harvesting Body Sensor Node SoC for ExG Applications. IEEE J. Solid-State Circuits 2013, 48, 199–213.

- Craven, D.; McGinley, B.; Kilmartin, L.; Glavin, M.; Jones, E. Compressed Sensing for Bioelectric Signals: A Review. IEEE J. Biomed. Health Inform. 2015, 19, 529–540.

- Cao, D.; Yu, K.; Zhuo, S.; Hu, Y.; Wang, Z. On the Implementation of Compressive Sensing on Wireless Sensor Network. In Proceedings of the 2016 IEEE First International Conference on Internet-of-Things Design and Implementation (IoTDI), Berlin, Germany, 4–8 April 2016; pp. 229–234.

- Ren, F.; Marković, D. A Configurable 12–237 kS/s 12.8 mW Sparse-Approximation Engine for Mobile Data Aggregation of Compressively Sampled Physiological Signals. IEEE J. Solid-State Circuits 2016, 51, 68–78.

- Kanoun, K.; Mamaghanian, H.; Khaled, N.; Atienza, D. A real-time compressed sensing-based personal electrocardiogram monitoring system. In Proceedings of the 2011 Design, Automation Test in Europe, Grenoble, France, 14–18 March 2011; pp. 1–6.

- Chen, F.; Chandrakasan, A.P.; Stojanovic, V.M. Design and Analysis of a Hardware-Efficient Compressed Sensing Architecture for Data Compression in Wireless Sensors. IEEE J. Solid-State Circuits 2012, 47, 744–756.

- Mangia, M.; Paleari, M.; Ariano, P.; Rovatti, R.; Setti, G. Compressed sensing based on rakeness for surface ElectroMyoGraphy. In Proceedings of the 2014 IEEE Biomedical Circuits and Systems Conference (BioCAS) Proceedings, Cleveland, OH, USA, 17–19 October 2014; pp. 204–207.

- Marchioni, A.; Mangia, M.; Pareschi, F.; Rovatti, R.; Setti, G. Rakeness-based Compressed Sensing of Surface ElectroMyoGraphy for Improved Hand Movement Recognition in the Compressed Domain. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candes, E.J.; Tao, T. Near-Optimal Signal Recovery From Random Projections: Universal Encoding Strategies? IEEE Trans. Inf. Theory 2006, 52, 5406–5425.

- Donoho, D.L.; Stark, P.B. Uncertainty Principles and Signal Recovery. SIAM J. Appl. Math. 1989, 49, 906–931.

- Candes, E.J.; Tao, T. Decoding by linear programming. IEEE Trans. Inf. Theory 2005, 51, 4203–4215.

- Candes, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Candes, E.J.; Wakin, M.B. An Introduction To Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

- Qaisar, S.; Bilal, R.M.; Iqbal, W.; Naureen, M.; Lee, S. Compressive sensing: From theory to applications, a survey. J. Commun. Netw. 2013, 15, 443–456.

- Tropp, J.A.; Wright, S.J. Computational Methods for Sparse Solution of Linear Inverse Problems. Proc. IEEE 2010, 98, 948–958.

- Kim, S.; Koh, K.; Lustig, M.; Boyd, S.; Gorinevsky, D. An Interior-Point Method for Large-Scale ℓ1-Regularized Least Squares. IEEE J. Sel. Top. Signal Process. 2007, 1, 606–617.

- Tropp, J.A. Greed is good: Algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 2004, 50, 2231–2242.

- Tropp, J.A.; Gilbert, A.C. Signal Recovery From Random Measurements Via Orthogonal Matching Pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Cai, X.; Zhou, Z.; Yang, Y.; Wang, Y. Improved Sufficient Conditions for Support Recovery of Sparse Signals Via Orthogonal Matching Pursuit. IEEE Access 2018, 6, 30437–30443.

- Davis, G.; Mallat, S.; Avellaneda, M. Adaptive greedy approximations. Constr. Approx. 1997, 13, 57–98.

- Pati, Y.C.; Rezaiifar, R.; Krishnaprasad, P.S. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; pp. 40–44.

- Needell, D.; Tropp, J. CoSaMP: Iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmon. Anal. 2009, 26, 301–321.

- Dai, W.; Milenkovic, O. Subspace Pursuit for Compressive Sensing Signal Reconstruction. IEEE Trans. Inf. Theory 2009, 55, 2230–2249.

- Blumensath, T.; Davies, M.E. Normalized Iterative Hard Thresholding: Guaranteed Stability and Performance. IEEE J. Sel. Top. Signal Process. 2010, 4, 298–309.

- Ravelomanantsoa, A.; Rabah, H.; Rouane, A. Compressed Sensing: A Simple Deterministic Measurement Matrix and a Fast Recovery Algorithm. IEEE Trans. Instrum. Meas. 2015, 64, 3405–3413.

- Ravelomanantsoa, A.; Rouane, A.; Rabah, H.; Ferveur, N.; Collet, L. Design and Implementation of a Compressed Sensing Encoder: Application to EMG and ECG Wireless Biosensors. Circuits Syst. Signal Process. 2017, 36, 2875–2892.

- Shukla, K.K.; Tiwari, A.K. Efficient Algorithms for Discrete Wavelet Transform: With Applications to Denoising and Fuzzy Inference Systems; Springer Publishing Company, Incorporated: Berlin/Heidelberg, Germany, 2013.

- Dixon, A.M.R.; Allstot, E.G.; Gangopadhyay, D.; Allstot, D.J. Compressed Sensing System Considerations for ECG and EMG Wireless Biosensors. IEEE Trans. Biomed. Circuits Syst. 2012, 6, 156–166.

- Biagetti, G.; Crippa, P.; Falaschetti, L.; Orcioni, S.; Turchetti, C. A portable wireless sEMG and inertial acquisition system for human activity monitoring. Lect. Notes Comput. Sci. 2017, 10209 LNCS, 608–620.

- Biagetti, G.; Crippa, P.; Falaschetti, L.; Orcioni, S.; Turchetti, C. Human Activity Monitoring System Based on Wearable sEMG and Accelerometer Wireless Sensor Nodes. BioMed. Eng. OnLine 2018, 17 (Suppl. 1), 132.

- PhysioBank. Available online: https://physionet.org/physiobank/ (accessed on 19 March 2019).

- Neuroelectric and Myoelectric Databases—Examples of Electromyograms. Available online: https://physionet.org/physiobank/database/emgdb/ (accessed on 19 March 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).