Abstract

Nondestructive plant growth measurement is essential for researching plant growth and health. A nondestructive measurement system to retrieve plant information includes the measurement of morphological and physiological information, but most systems use two independent measurement systems for the two types of characteristics. In this study, a highly integrated, multispectral, three-dimensional (3D) nondestructive measurement system for greenhouse tomato plants was designed. The system used a Kinect sensor, an SOC710 hyperspectral imager, an electric rotary table, and other components. A heterogeneous sensing image registration technique based on the Fourier transform was proposed, which was used to register the SOC710 multispectral reflectance in the Kinect depth image coordinate system. Furthermore, a 3D multiview RGB-D image-reconstruction method based on the pose estimation and self-calibration of the Kinect sensor was developed to reconstruct a multispectral 3D point cloud model of the tomato plant. An experiment was conducted to measure plant canopy chlorophyll and the relative chlorophyll content was measured by the soil and plant analyzer development (SPAD) measurement model based on a 3D multispectral point cloud model and a single-view point cloud model and its performance was compared and analyzed. The results revealed that the measurement model established by using the characteristic variables from the multiview point cloud model was superior to the one established using the variables from the single-view point cloud model. Therefore, the multispectral 3D reconstruction approach is able to reconstruct the plant multispectral 3D point cloud model, which optimizes the traditional two-dimensional image-based SPAD measurement method and can obtain a precise and efficient high-throughput measurement of plant chlorophyll.

1. Introduction

The growth, yield, and quality of crops depend on a variety of nutrient elements, among which nitrogen has the most significant impact [1,2]. Because 70–80% of nitrogen in the leaves is concentrated in the chloroplasts, the chlorophyll content reflects the nitrogen nutritional status of the plant [1,3,4]. The concentration of chlorophyll, a critical pigment related to photosynthesis, is often used as an important indicator for evaluating a plant’s photosynthesis ability, growth status, environmental stress, nutritional stress, and pest and disease stress [5,6,7]. Therefore, an efficient, accurate, and nondestructive method for measuring the chlorophyll content of plants is of great significance for the rapid assessment of plant growth status and, as a result, precise cultivation management and accurate plant yield estimation.

Spectrophotometry is the most commonly used technique for performing routine measurements of chlorophyll content in plant leaves [2,8]. In particular, the Arnon method is used throughout the world [8]; it requires a series of operational procedures, involving leaf grinding, filtration, and centrifugation, to remove debris. Although it yields accurate and reliable results, this method is destructive, costly, time-consuming, and complicated to implement because chlorophyll pigments are prone to photolytic decomposition. Hence, spectrophotometry is not an ideal tool for regular, systematic plant research because it cannot meet the requirements for high-throughput measurements and is unsuitable for field monitoring.

The rapid development of sensing and computer vision technologies has enabled the nondestructive assessment of plant chlorophyll using monocular vision [9,10,11,12,13], laser scanning imaging [14], multispectral imaging [15,16], hyperspectral imaging [17,18,19], chlorophyll fluorescence imaging [20], and other techniques, combined with image-processing methods. The spectral reflectance data in certain wavebands of light [2,6,7] can be used to establish a chlorophyll measurement model that rapidly and effectively predicts the chlorophyll contents of plants. However, the performance of prediction models that directly use spectral data in the relevant wavebands is prone to the effects of numerous factors, such as the soil, atmospheric, and environmental conditions, as well as the leaf structure. Therefore, a vegetation index, which is typically established by combining data from multiple relevant wavebands, is often used to construct a chlorophyll measurement model. This approach effectively reduces the impact of external factors and improves the prediction performance and stability of the model [2,6,7].

Monocular vision-based chlorophyll assessment technology primarily uses characteristic parameters or combinations of 2D images in RGB, HSV, YUV, or LAB color spaces [10,13] to establish a plant chlorophyll assessment model. This technology requires images that are taken under very high environmental illumination and its assessment model has relatively low applicability. Hyperspectral imaging is frequently applied to determine chlorophyll-sensitive wavebands using methods involving the correlation coefficient, inverse spectrum, spectral difference analysis, stepwise regression, discriminant analysis, partial least-squares regression, principal component analysis, continuous projection algorithms, independent component analysis, and artificial neural networks [2,6,7]. After the characteristic wavebands are selected, multispectral imaging is applied to obtain the actual chlorophyll measurement. The measurement model is constructed using a linear or nonlinear method according to the spectral reflectivity or vegetation indexes at the characteristic wavebands. The linear methods include principal component regression, linear regression, multiple linear regression, and partial least-squares regression. Nonlinear modeling methods include an artificial neural network, soft independent modeling of class analogies, a support vector machine, and the kernel partial least-squares method [2,6,7]. Chlorophyll fluorescence imaging technology provides physiological information, such as plant nutrients, diseases, and stresses, by using characteristic manifestations in plant fluorescence images [20]. This technology has strict requirements for the imaging environment, excitation light source, and imaging devices; hence, it cannot be widely promoted and applied because its operational conditions are limited and it requires very expensive equipment.

At present, all plant chlorophyll measurement technologies, including monocular vision and multispectral, hyperspectral, and chlorophyll fluorescence imaging technologies, extract the characteristic parameters from 2D images of plants (mostly plant leaves, seedling plants, or canopy images) to establish a chlorophyll measurement model. However, because plants have a complex 3D morphology, it is difficult to obtain accurate and stable results using these 2D-image-based technologies and to determine the 3D distribution of chlorophyll using only single-view plant canopy imaging data. To date, plant 3D reconstruction methods have been extensively developed on the basis of 3D LiDAR data [21,22], the Kinect camera [23,24], multiview photogrammetry [25,26], and multicamera synchronous reconstruction [27]. Kinect sensor-based 3D plant reconstruction can be divided into single-view [28] and multiview reconstruction [29], the latter mainly using the iterative closest point (ICP) algorithm [23,30]. However, first of all, we need to solve the problem around the rough registration of multiview point clouds, otherwise ICP cannot be used for accurate registration. Furthermore, a reconstructed plant 3D point cloud model contains only coordinate information and RGB color information. Various studies have been conducted to reconstruct multispectral 3D point cloud models. Using plant images obtained from 50 angles of view (AOVs), Kenta et al. [31] constructed a plant 3D point cloud model that was based on the “structure from motion” theorem and developed a chlorophyll measurement model using a normalized red value, r/(r + g + b). Using multispectral plant canopy images obtained from 24 AOVs, Zhang et al. [32] established a multispectral 3D point cloud model and a regression model using the difference vegetation index (DVI), normalized DVI (NDVI), green NDVI (GNDVI), and ratio vegetation index (RVI). The relative chlorophyll content was measured by a soil and plant analyzer development (SPAD). However, the construction of a multispectral 3D point cloud model is inefficient, because plant images from dozens of AOVs are required. Thus, this technology is unsuitable for high-throughput chlorophyll measurement.

In this study, depth vision technology and multispectral imaging technology were jointly applied to obtain images of the study subjects, namely, greenhouse tomato plants. The Red-Green-Blue and Depth (RGB-D), and multispectral images of each plant were collected synchronously by a Kinect camera and an hyperspectral imager (SOC710: 710 series by surface optics corporation) at four AOVs with an angle interval of 90° in the same imaging room. Subsequently, the multispectral reflectance was registered with a Fourier transform-based approach using depth coordinates. With a Kinect pose estimation and self-calibration method, the multiview point cloud coordinate system was unified for a rough multiview point cloud registration. Finally, the ICP algorithm was used for precise multiview point cloud registration to construct a multispectral 3D point cloud model of a tomato plant. According to the multispectral point cloud models of the plant canopy, the vegetation indexes, namely, the NDVI, GNDVI, RVI, difference vegetative index ratio (NDVIR), chlorophyll green index (CIG), red-edge CIG (RCIG), normalized green index (NG), normalized red index (NR), and green RVI (GRVI), were calculated and combined with the chlorophyll SPAD values to establish highly efficient prediction models and to measure the chlorophyll content in the plant canopy accurately. This measurement method can be expanded to assess the physiological features of other plant canopies. In summary, this method provides good technical support for high-throughput plant phenotypic analysis and is significant for the development of plant phenomics and other research fields.

2. Materials and Methods

2.1. Sample Cultivation

On 5 March 2019, 60 tomato seedlings (Fenguan No. 1) were transplanted into a Venlo greenhouse at the Modern Controlled-Environment Agricultural Technology and Equipment Engineering Laboratory in Jiangsu Province. Galuku coir substrate and Yamazaki tomato formula nutrient solution (pH 6.0–6.5, EC 1.2 mS·cm−1) were used for cultivation. To manipulate the plant chlorophyll levels, 60 tomato plant samples were divided into five groups that received five different doses of nutrient solution, i.e., 25%, 75%, 100%, 150%, or 200% of the standard formula [33], with 100% being the dose required for normal tomato growth [34]; each group included 12 plants. From the 5th to 6th week after transplantation, the chlorophyll SPAD readings of the tomato leaves, as well as the plant multispectral and RGB-D images, were obtained.

2.2. Instrument and Chlorophyll Content Measurement

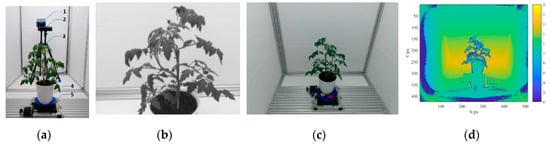

The multispectral 3D imaging system established in this study for greenhouse tomato plants consisted of an imaging room, a light source, an SOC710 hyperspectral imager, a Kinect sensor, an electric rotary table, a controller, and a graphics workstation (Figure 1a). The imaging room was made from aluminum profiles and its inner dimensions measured 180 (length) × 120 (width) × 160 cm (height); the interior sidewalls and floor were covered with white matte film. The power of the light source was 150 W, the response wavebands ranged from 380 to 2200 nm, and the intensity of the light source was stepless adjustable. The SOC710 hyperspectral imager had a built-in scanning system and covered a spectral range from 400 to 1000 nm for 128 wavebands; the image resolution was 696 × 510 px and the wavebands for imaging were selectable. The Kinect sensor (Microsoft Kinect 2.0) consisted of a color camera and a depth sensor. The RGB and depth images had resolutions of 1920 × 1080 px and 512 × 424 px, respectively; the frame rate was 30 fps, and the detection range was 0.50–4.50 m. The electric rotary table disc, which was 20.00 cm in diameter and was driven by a 57BYG stepper motor, could be rotated over a 360° range with a resolution of 0.0005° and a positioning precision of 0.01°. The controller used was an HW-36MT-3PG programmable controller with 20 input interfaces, 16 output interfaces, an RS232C communication interface, and a built-in 3-channel, 100 kHz, high-speed pulse output. The graphics workstation processor was an Intel (R) Xeon (R) E-2176M CPU @2.70 GHz with a 32G memory capacity and an NVIDIA Quadro P600 4G graphics card. Visual Studio 2015 and MATLAB 2017a were jointly used to program the system control.

Figure 1.

Multispectral three-dimensional reconstruction system for the greenhouse tomato plants. (a) Multispectral three-dimensional reconstruction system (1. SOC710 hyperspectral imager; 2. Kinect senor; 3. Tripod; 4. Tomato plant; 5. Electric turntable). (b) Spectral image at 466.93 nm by the SOC710 senor. (c) RGB image by the Kinect sensor. (d) Depth image by the Kinect sensor.

Figure 1b–d shows a representative spectral image taken by the SOC710 hyperspectral imager (using 466.93 nm as an example) and RGB and depth images obtained by the Kinect sensor. The multiview images collected by the SOC710 hyperspectral imager and the Kinect sensor were used to reconstruct the multispectral 3D point cloud model of the tomato plants using a multispectral 3D reconstruction algorithm.

In this study, a portable chlorophyll meter (Konica Minolta SPAD-502 Plus; Minolta, Osaka, Japan) was used to measure SPAD values ranging from −9.9 to 199.9 SPAD, with a precision of ±1.0 SPAD and a repeatability of ±0.3 SPAD. The SPAD value was measured next to the vein on the widest part of the tomato leaf surface. Each tomato canopy was divided into upper, middle, and lower layers and the SPAD values were measured at ten sites for each layer; a total of 30 SPAD readings were obtained and averaged to derive the final SPAD value for each plant.

2.3. Multispectral 3D Point Cloud Modeling

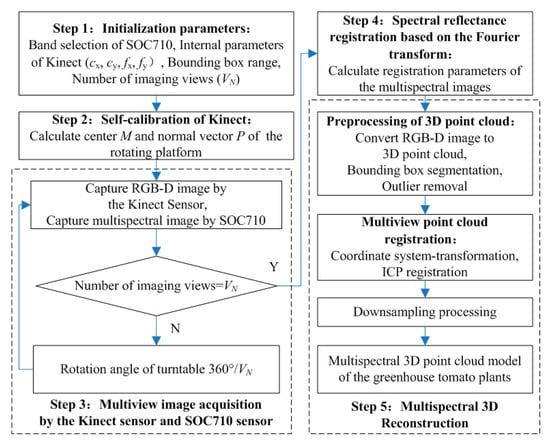

Figure 2 presents a flowchart of the multispectral 3D imaging system for greenhouse tomato plants. Step 1 was used to initialize the system parameters and included the selection of the characteristic wavebands: Blue, Green, Red, Red-edge, and Near infrared (Nir), by the SOC710 hyperspectral imager and the determination of (i) the intrinsic parameters for the Kinect sensor, such as the principal point coordinates (cx, cy) and focal length (fx, fy), (ii) the area of the plant region of interest (ROI) (row, width, and height), and (iii) the number of AOVs (VN) required for plant 3D modeling. Step 2 was the Kinect pose estimation and self-calibration process. RGB-D images of the electric rotary table surface were taken at two or more AOVs and used to determine the point cloud coordinates of the yellow and red calibration stickers on the table surface at different AOVs according to the color threshold value. Subsequently, the center points of the two calibration stickers were identified to calculate the center coordinates and the normal vector of the rotation axis on the rotary table. Step 3 was spectral reflectance registration and calibration. The registration transformation matrix was calculated on the basis of the Fourier transform, then the spectral images obtained by the SOC710 imager were registered to the RGB image coordinate system obtained by the Kinect sensor. The spectral reflectance of multiple channels was mapped to the corresponding depth coordinates by using the same transformation parameters. Step 4 was the acquisition of the plant multiview RGB-D images and multispectral images. The plant RGB-D images and multispectral images were taken at all the desired AOVs by turning the electric rotary table for 360°/VN after imaging at each AOV. Step 5 was the plant multispectral 3D reconstruction. According to the intrinsic parameters of the Kinect sensor, the RGB-D image at each AOV was converted into 3D point clouds. Subsequently, in accordance with the plant ROI, the 3D point clouds were pretreated by removing bounding boxes and outliers. Then, according to the center coordinates and normal vectors of the rotation axis on the rotary table, the coordinate system of the point cloud at each AOV was uniformly transformed, followed by the ICP registration of the point clouds at each AOV. Finally, the 3D point clouds were down-sampled to complete the plant 3D reconstruction, which also led to the successful reconstruction of the multispectral 3D point cloud model, because each spatial coordinate point contained information regarding the RGB value and the reflectance in each waveband.

Figure 2.

A flowchart for soil and plant analyzer development (SPAD) measurement of the greenhouse tomato plant canopy.

2.3.1. Spectral Reflectance Registration

When using both SOC710 images and Kinect images, one major task is to solve the transformation problems regarding the displacement, rotation angle, and zoom level. It is very difficult to obtain registration parameters by using an image feature point method (such as Scale-invariant feature transform [35], Speeded up robust feature [36], or Harris corner feature [37]) because (i) the image resolution of SOC710 is 696 × 510 px, the plant image area resolution of the Kinect sensor is 170 × 170 px, and the two imaging regions have large zoom multiples, (ii) the imaging regions of the two sensors are inconsistent, and (iii) the plant morphology is complex. In this study, the multispectral reflectance was registered to the depth coordinates using the principle of Fourier transform. In this approach, the translation, rotation matrix, and scaling coefficient between the two types of images are calculated according to the Fourier spectra of the images [38]. The frequency–domain relationship is shown in Formula (1), where the translation between the Kinect sensing image F (x, y) and the SOC710 multispectral image M (x, y) is (x0, y0), i.e., F (x, y) = M (x − x0, y − y0). As shown in Formula (2), the phase information on the cross-power spectrum includes the phase difference in the two images; through an inverse Fourier transformation of the cross-power spectrum, the 2D impact function δ (u − x0, v − y0) is obtained, which reaches its peak at (x0, y0) and is close to zero at the other positions. Finally, the translation parameter can be determined by identifying the peak.

where represents the Fourier transform of , represents the Fourier transform of , and represents the conjugate of .

Resolving the transformation problems in rotation and scaling between the SOC710 and Kinect images requires the transformation of the rotation and scaling relationship in the Cartesian coordinates into a translation relationship in the log–polar coordinates using the Fourier–Mellin transformation. If it is assumed that F (x, y) is obtained by rotating image M (x, y) by θ0 and that the scaling coefficient is σ, then the rotation in the frequency domain of the image can be determined by using Formula (3) because of the invariance and similarity features in the Fourier rotation. Subsequently, Formula (4) is used to map the frequency–domain coordinates to the log–polar coordinate space. Formula (5) is used to convert the rotation and scaling into a translation relationship and the rotation angle θ0 and scaling coefficient σ can be calculated.

where σ is the scaling coefficient, θ0 is the rotation angle, (x, y) are the image coordinates, results from mapping in the log–polar coordinate space, , and .

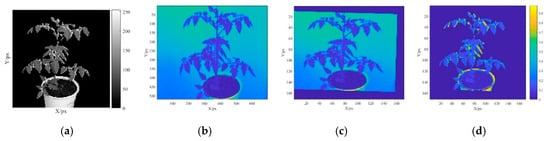

Figure 3a shows the to-be-registered ROI F (x, y) as a grayscale Kinect image. Figure 3b presents a grayscale SOC710 image M (x, y) and Figure 3c presents the image after the registration of M (x, y). Figure 3d shows an ROI region spectral reflectance of 466.93 nm, which reveals that the remarkable difference only appears in a small portion of the marginal region. This finding suggests that the spectral reflectance registration was successful.

Figure 3.

Spectral reflectance registration images. (a) Grayscale image of the calibration board (by SOC710), (b) grayscale image of the region of interest (ROI) on the calibration board (by Kinect), (c) image after SOC710 image registration, and (d) difference in the ROI.

It is unnecessary to calibrate the transformation matrix repeatedly if the relative position relationship between the SOC710 hyperspectral imager and the Kinect sensor is fixed. In this study, the same transformation parameters were used for all spectral reflectance images in each waveband to maintain the consistency of spectral reflectance mapping. Following the spectral registration, the RGB image, depth image, and registered multiband reflectance image of each plant sample were saved in a 3D array to lay the foundation for reconstructing a 3D point cloud model of the plant.

2.3.2. 3D Reconstruction of Multiview RGB-D Images

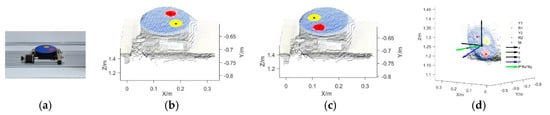

Figure 4a presents the electric rotary table used in this study, which had two circular calibration stickers (yellow and red, both 5 cm in diameter) on its round, blue surface. The Kinect pose estimation and self-calibration process was as follows. First, the RGB-D images of the rotary table were obtained and converted into 3D point clouds. According to the color threshold of the point clouds, the point cloud coordinates of the areas covered by the two calibration stickers were identified and the point cloud coordinates of the center of each sticker were calculated. Figure 4b demonstrates the coordinates of the two sticker centers, with Y1 representing the yellow sticker and R1 representing the red sticker, when the rotatory table was rotated by 0°. Similarly, Figure 4c shows the coordinates of the two sticker centers (Y2 and R2) when the rotatory table was rotated by 180°. Subsequently, according to the center coordinates of the calibration stickers in the two RGB-D images, the coordinates of the center M for the rotation axis of the rotary table were obtained. Finally, according to the coordinates of the calibration sticker centers in the two RGB-D images and the center coordinates M (a0, b0, c0) of the rotation axis, the normal vector P of the rotation axis of the rotary table was calculated and normalized to P (a, b, and c) (Figure 4d).

Figure 4.

Pose estimation and self-calibration of the Kinect sensor. (a) Electric rotary table, (b) identification of the centers of the calibration stickers (rotation angle 0°), (c) identification of the centers of the calibration stickers (rotation angle 180°), and (d) center of the rotation axis and the normal vector.

The rough registration stage: First, according to the intrinsic parameters of the Kinect sensor, i.e., the principal point coordinates (cx, cy) and focal length (fx, fy), the RGB-D images at various AOVs were converted into 3D point cloud images using Formula (6), in which the plant ROI area was identified from the determined ROI range. Subsequently, according to the center coordinates of the rotation axis M (a0, b0, c0), the translation transformation of the point cloud (xi, yi, zi) at each AOV was performed and the center of the rotation axis in the point cloud was shifted to the origin point (0, 0, 0) of the Kinect coordinate system, as shown in Formula (7). Next, according to the normal vector P (a, b, and c) of the rotation axis, the translated point cloud (xi′, yi′, zi′) was rotated until its normal vector reached the Y-axis, as shown in Formulas (8) and (9). Finally, using Formula (10), after the inverse rotation angle γ° around the Y-axis was determined according to the actual rotation angle of the image at each AOV, the coordinates of the point cloud (Xi, Yi, Zi) for the unified coordinate system were obtained.

The precise registration stage: The ICP algorithm was used to obtain a precise multiview point cloud registration [39] in this study. First, ICP registration was performed for the point cloud (Xi, Yi, Zi) at each AOV, that is, the point cloud at the first AOV was precisely ICP-registered with the point cloud at the adjacent second AOV. Then, the registration result was registered with the point cloud at the adjacent third AOV; the procedure was repeated until all the point clouds were registered to obtain a 3D point cloud model of the plant, namely, the 3DROI model.

where (i, j) are the coordinates of the depth image, (cx, cy) are the principal point coordinates of the Kinect sensor, (fx, fy) are the focal lengths of the Kinect sensor, Depth (i, j) is the depth value at coordinates (i, j) measured in mm, (xi, yi, zi) are the coordinates of the point cloud at the ith AOV, (xi′, yi′, zi′) are the post-translation coordinates of the point cloud at the ith AOV, (xi‴, yi‴, zi‴) are the coordinates of the point cloud at the ith AOV after one rotation around the X-axis, (xi‴, yi‴, zi‴) are the coordinates of the point cloud at the ith AOV after one rotation around the Z-axis, (Xi, Yi, Zi) are the coordinates of the point cloud at the ith AOV in the unified coordinate system, α is the angle between the Y-axis and the normal vector projection on the YOZ plane, measured in °, β is the angle between the Y-axis and the normal vector projection on the XOY plane, measured in °, γ is the rotation angle of the point cloud relative to the reference AOV (i.e., the first AOV for image acquisition in this study), measured in °, (a0, b0, c0) are the center coordinates of the rotation axis, and (a, b, c) is the normalized normal vector of the rotation axis on the rotary table.

2.4. Data Processing

This study included 60 tomato plant samples. For each plant, four RGB-D images were taken with a Kinect sensor at four AOVs with an interval angle of 90°. The four AOVs are represented by AOV1, AOV2, AOV3, and AOV4. The multispectral reflectances at the four AOVs were synchronously collected by an SOC710 imager and a Kinect sensor at the bandwidths of 466.93 (ρBlue), 564.91 (ρGreen), 696.32 (ρRed), 722.94 (ρRed-edge), and 841.4 nm (ρNir), which are the characteristic bands to which the nitrogen content in tomato plants were sensitive and therefore used as an example [6,7,30]. By following the method described in Section 2.3, we reconstructed the multispectral point cloud 3D models of the 60 tomato plant samples and we calculated the mean, standard deviation, number of points, and coefficient of variation of the spectral reflectance for the plant canopy in the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models. In addition, a series of vegetation indexes was calculated from the plant canopy multispectral 3D point cloud ROI model; these formulae are shown in Table 1. Prediction models that used various vegetation indexes and chlorophyll SPAD values were established and their coefficients of determination (R2) and root-mean-square-errors (RMSE) were calculated. The prototype functions of the prediction models included the linear function, quadratic function, exponential function, and power function, which were expressed as M1, M2, M3, and M4, respectively.

Table 1.

Vegetation indexes.

3. Results and Analysis

3.1. Multispectral 3D Point Cloud Modeling

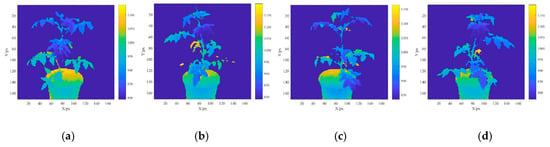

From the determined ROI range, the tomato plant area was identified and the background area was considered “not a number” (NaN). Figure 5a–d shows the depth images at AOV1, AOV2, AOV3, and AOV4, respectively. The spectral reflectance registration procedures described in Section 2.3.1 were used to register all the multispectral reflectance images at different AOVs in depth image coordinate systems. Figure 5e–h shows the spectral reflectance for the AOV1, AOV2, AOV3, and AOV4 images after the registration, respectively (with ρBlue as an example). The RGB-D image 3D reconstruction method described in Section 2.3.2 was used to convert the depth images at all the AOVs into point cloud images. Their multispectral reflectance values were ρBlue, ρGreen, ρRed, ρRed-edge, and ρNir. Figure 5i–l presents the 3D point clouds for the AOV1, AOV2, AOV3, and AOV4 images with the unified coordinate system (with ρBlue as an example). As a result of the morphological complexity of the plant canopy and the shielding effect of the leaves, it is impossible to establish a complete plant point cloud model from a single AOV; hence, it is necessary to integrate multiview point cloud models. In this study, the 3D point cloud models of the tomato plants at the four AOVs were obtained and used to reconstruct a multispectral 3D point cloud image through precise ICP registration. Figure 5m–q presents the 3D point cloud models of ρBlue, ρGreen, ρRed, ρRed-edge, and ρNir.

Figure 5.

Reconstruction of multispectral 3D point cloud models of tomato plants. (a) ROI depth image of ROI at angle of view (AOV)1, (b) ROI depth image of ROI at AOV2, (c) ROI depth image of ROI at AOV3, (d) ROI depth image of ROI at AOV4, (e) spectral reflectance profile of ROI at AOV1, (f) spectral reflectance profile of ROI at AOV2, (g) spectral reflectance profile of ROI at AOV3, (h) spectral reflectance profile of ROI at AOV4, (i) point cloud image of ROI at AOV1, (j) point cloud image of ROI at AOV2, (k) point cloud image of ROI at AOV3, (l) point cloud image of ROI at AOV4, (m) point cloud model at 466.93 nm, (n) point cloud model at 564.91 nm, (o) point cloud model at 696.32 nm, (p) point cloud model at 722.94 nm, and (q) point cloud model at 841.42 nm.

In this study, the SOC710 hyperspectral imager captured the image of the plant parts above the pot and the Kinect sensor captured the image of the entire plant area, including the pot and the background area. Because the range differs between the two types of images, the multiband spectral reflectance information was available only for the plant canopy area and not the plant part below the top edge of the pot. Therefore, the region with the missing spectral reflectance value was considered “not a number” (NaN). The ROI range was determined on the basis of the imaging distance and the relative positions of the sensor and lens parameters, which were appropriately selected according to the actual requirement for multispectral 3D reconstruction.

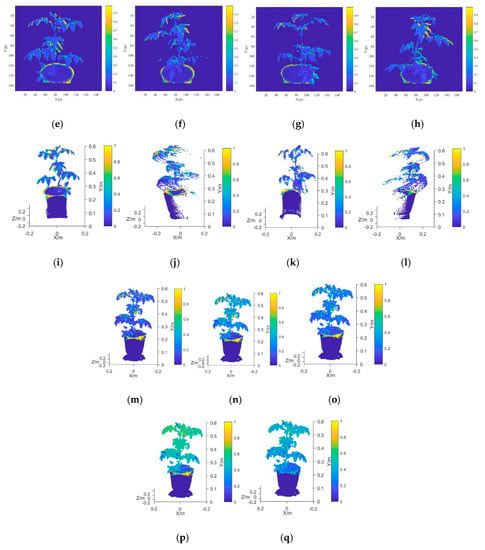

3.2. Spectral Reflectance Variability Analysis

In this study, the center coordinates of the rotation axis for the electric rotary table were (0, 0, 0) and the 3D point clouds of the plant canopy area were identified according to the height of the pot. The mean value, standard deviation (STD), and coefficient of variation (CV) of the spectral reflectance for the AOV1, AOV2, AOV3, AOV4, and canopy 3DROI point cloud models of 60 tomato plants in the Blue, Green, Red, Red-edge, and Nir characteristic wavebands were calculated (Figure 6a,b). In addition, the numbers of points in the AOV1, AOV2, AOV3, AOV4, and 3DROI canopy models were counted (Figure 6c).

Figure 6.

Analysis of spectral reflectance variability in multispectral point cloud models for the plant canopy. (a) Mean value and standard deviation of the spectral reflectance, (b) coefficients of variation of the spectral reflectance at different AOVs, (c) number of points in the point cloud models at different AOVs.

As shown in Figure 6a, because the 3D geometric morphological of the tomato plant canopy is complex, the plant ROI image varied at different AOVs, leading to significant variations in the spectral reflectance (Figure 5e–h). In this study, the STD was used to evaluate the degree of deviation in the spectral reflectance within the ROI region of the plants and the CV was used to assess the mean spectral reflectance variability among the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models.

The maximum value (MAX), minimum value (MIN) and average value (AVG) of spectral reflectance STD of the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models was calculated for all 60 tomato plants in the Blue, Green, Red, Red-edge, and Nir characteristic wavebands. As shown in Table 2, for the Blue, Green, Red, Red-edge, and Nir wavebands, the mean STD values of the spectral reflectance in the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models of the plant canopy had ranges of 0.1138–0.1193, 0.1046–0.1088, 0.1143–0.1200, 0.0944–0.1129, and 0.0870–0.1184, with maximum standard deviations of 0.1823, 0.1814, 0.1772, 0.2107, and 0.2889, respectively. This finding indicates that, regardless of whether the single-view or multiview reconstruction point cloud models was used, the spectral reflectance distribution of the plant canopy varied among the different spatial coordinates. Therefore, it is impossible to use multispectral reflectance under a single spatial coordinate system to construct an accurate SPAD prediction model. In addition, the variations in the chlorophyll distribution of the plant canopy should be considered.

Table 2.

Spectral reflectance standard deviation (STD) values.

In the Blue, Green, Red, Red-edge, and Nir wavebands, the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models of the 60 tomato plants had minimum spectral reflectance CVs of 3.25%, 0.97%, 2.09%, 0.67%, and 0.43%, maximum CVs of 16.95%, 12.03%, 22.08%, 23.00%, and 14.50%, and average CVs of 7.48%, 4.50%, 6.75%, 4.94%, and 3.73%, respectively (Figure 6b). This finding indicates that there were certain variations in the spectral reflectance among the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models and the differences were remarkable for different AOVs in some plants. Therefore, a SPAD value prediction model constructed using spectral reflectance data from single-view point cloud models would yield unstable results because of the variations among different AOVs.

Figure 6c shows the number of points in the point cloud models of the 60 tomato plants for AOV1, AOV2, AOV3, AOV4, and 3DROI. The mean numbers were 5695.83, 5226.22, 5063.57, 5421.18, and 13,782.83 for the AOV1, AOV2, AOV3, AOV4, and 3DROI models, respectively. Although the number of points in each single-view point cloud model did not vary significantly, there were far fewer points compared with the number of points in the multiview point cloud model reconstructed from the four AOV models. On average, the number of points in the AOV1, AOV2, AOV3, and AOV4 point cloud models respectively accounted for 43.23%, 39.39%, 38.08%, and 41.06% of the number of points in the 3DROI point cloud model. That is, overall, the number of points for all the single-view point cloud models accounted for a minimum of 21.62%, a maximum of 70.79%, and an average of 40.47% of the number in the multiview reconstructed point cloud model. Therefore, the spectral reflectance information for the plant canopy in a single-view model cannot fully reflect the actual status of the canopy and it is neither scientific nor rigorous enough to construct a plant canopy chlorophyll prediction model with only single-view spectral reflectance data.

In this study, the statistical analysis of characteristic variables in the plant canopy chlorophyll measurement model revealed that there were significant differences in the spectral reflectance distribution in the single-view point cloud models, as well as in the spectral reflectance value and the number of points among different AOV point cloud models. In addition, the number of points in the single-view point cloud models accounted for an average of only 40.47% of that in the 3D reconstructed point cloud model. This finding suggests that a single-view point cloud model cannot fully reflect the status of the characteristics of the spectral reflectance in a plant canopy. Therefore, this study proposes the construction of a prediction model for plant chlorophyll SPAD with the characteristic variables of multiview, multispectral point cloud models.

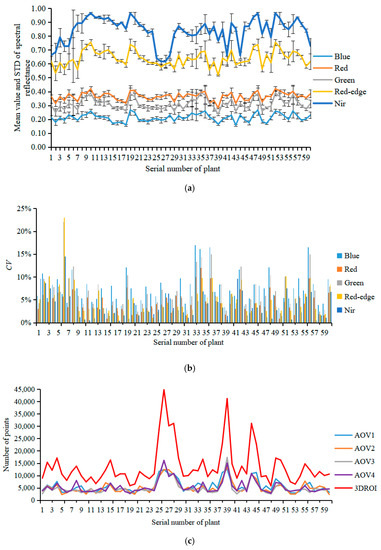

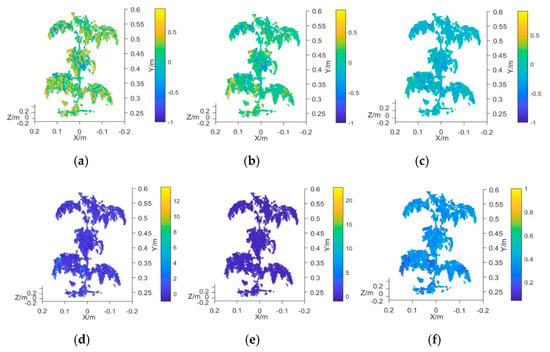

3.3. Plant Chlorophyll Measurement Model and Analysis

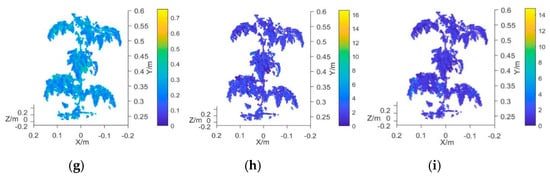

In this study, the average value of the vegetation index obtained from the 3D multispectral point cloud model of the plant canopy was employed as the characteristic input variable of the measurement model. The vegetation indexes used for the analysis were the NDVI, GNDVI, NDVIR, CIG, RCIG, NG, NR, RVI, and GRVI (Table 1). The measured mean value of plant chlorophyll was taken as the output variable of the model. The prototype functions used in the measurement model were the linear function, quadratic function, exponential function, and power function, which were represented by M1, M2, M3, and M4, respectively. Prediction models that each used a different vegetation index and the chlorophyll SPAD value were established and their R2 and RMSE values were calculated. Figure 7a–i presents the 3D multispectral point cloud models established by using NDVI, GNDVI, NDVIR, CIG, RCIG, NG, NR, RVI, and GRVI, respectively.

Figure 7.

Plant canopy point cloud model using different vegetation indexes. (a) Point cloud model using NDVI, (b) point cloud mode using GNDVI, (c) point cloud mode using NDVIR, (d) point cloud mode using CIG, (e) point cloud mode using RCIG, (f) point cloud mode using NG, (g) point cloud mode using NR, (h) point cloud mode using RVI, and (i) point cloud mode using GRVI.

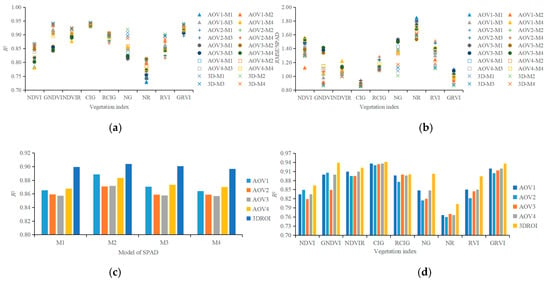

Figure 8a,b shows the R2 and RMSE values of the 180 regression models that were established using the SPAD value and nine vegetation indexes (NDVI, GNDVI, NDVIR, CIG, RCIG, NG, NR, RVI, and GRVI) from the AOV1, AOV2, AOV3, AOV4, and 3DROI point cloud models. The R2 and the RMSE values ranged from 0.7279 to 0.9443 and from 0.8505 to 1.8471, respectively.

Figure 8.

Performance of regression models used in plant canopy chlorophyll predictions. (a) R2 of regression models, (b) RMSE of regression models, (c) R2 when using different prototype functions to construct the regression models, and (d) R2 when using different vegetation indexes as input variable.

The comparison of the determination coefficient R2 values among the regression models with different prototype functions (Figure 8c) reveals that the mean R2 value of the regression model with the quadratic function (M2) as the prototype function was larger than that of the models using the linear function (M1), the exponential function (M3), or the power function (M4) as the prototype function. However, the difference in the overall regression/correlation was insignificant among all the M1–M4 regression models. This insignificance might be attributable to the small range of plant SPAD readings. In this study, the SPAD readings ranged from 31.46 to 60.90 and the average value ranged from 37.49 to 53.35. When the vegetation indexes from the 3DROI point cloud model were used as the input variables to construct the M1, M2, M3, and M4 regression models, the mean R2 values were 0.8995, 0.9041, 0.9008, and 0.8968 and the mean RMSE values were 1.1016, 1.0733, 1.0933, and 1.1206, respectively. The R2 of the regression model using the vegetation indexes from the 3DROI point cloud model was larger than that of the regression model using the vegetation indexes from the single-view point cloud models. This finding indicated that the chlorophyll measurement model that was established by using characteristic variables from the 3DROI point cloud model had a higher correlation regardless of the prototype function that was used for the regression analysis.

Figure 8d compares the mean determination coefficient R2 values of all four regression methods among the models with different vegetation indexes as the input variable. When the NDVI, GNDVI, NDVIR, CIG, RCIG, NG, NR, RVI, or GRVI from the 3DROI point cloud model were used as the input variables, the mean R2 values were 0.8641, 0.9390, 0.9222, 0.9418, 0.9002, 0.9022, 0.8027, 0.8945, and 0.9362 and the mean RMSE values were 1.3085, 0.8826, 1.003, 0.8623, 1.1133, 1.0950, 1.5927, 1.1409, and 0.8903, respectively. When the vegetation index from the 3DROI point cloud model was used for the regression, the determination coefficient R2 was larger than that obtained when the vegetation index from the single-view point cloud model was used. Furthermore, the highest R2 resulted from the regression model using CIG as the input, followed by GNDVI, GRVI, NDVIR, NG, RCIG, RVI, NDVI, and NR. Table 3 presents the SPAD regression equations and the results of using the NDVI, GNDVI, NDVIR, CIG, RCIG, NG, NR, RVI, or GRVI from the 3DROI point cloud model as the input variables.

Table 3.

SPAD Regression Equation and analysis results.

In summary, the vegetation indexes obtained from the 3DROI point cloud model can be used to predict the chlorophyll SPAD value and evaluate the spatial SPAD distribution in the plant canopy. The performance of the regression model that was constructed from 3DROI data is superior to those using single-view data. Because its accuracy and stability are high, the proposed method is a promising tool that can be expanded for canopy physiological assessments of other plants.

4. Conclusions

A multispectral, 3D, nondestructive measurement system for greenhouse tomato plants was designed and a heterogeneous image registration method based on the Fourier transform was proposed. The values of the translation, rotation angle, and scaling coefficient between the SOC710 hyperspectral and Kinect images were used to accurately register the multispectral reflectance information to the depth coordinate system, which laid the foundation for reconstructing a multispectral 3D point cloud model. A 3D reconstruction method using multiview RGB-D images was developed using Kinect pose estimation and self-calibration to obtain a unified transformation matrix for multiview RGB-D image coordinate systems, which solved the fast rough registration of multiview point clouds. Finally, a multispectral 3D point cloud model of the plant was reconstructed using a precise ICP registration algorithm. The vegetation index from the multispectral 3D point cloud model was used as the input variable and the measured SPAD value was the output, resulting in an SPAD measurement model of the plant canopy chlorophyll. The performance of the SPAD measurement model, based on the 3D multispectral point cloud model and single-view point cloud model, was compared and analyzed by a plant canopy chlorophyll measurement experiment. The results revealed that the measurement model that was established by using the characteristic variables from a multiview point cloud model was superior to the one established using the variables from a single-view point cloud model and obtained a precise and efficient high-throughput measurement of plant chlorophyll.

In the present study, only a single-frame RGB-D image was used to establish 3D point cloud models at each AOV; therefore, the accuracy of the point cloud modeling was limited by the Kinect sensor. This drawback could be overcome by using multiframe RGB-D images to construct point cloud models at each AOV. The integration of multiple point cloud models at each AOV might improve the performance of point cloud reconstruction for small plant stems, thereby increasing the precision of 3D point cloud modeling. As a result, the model performance for plant SPAD prediction can be further improved and can provide more precise information for optimizing the plant canopy SPAD spatial distribution and density. The multispectral 3D reconstruction approach for the greenhouse tomato plants used in this study can be used to reconstruct the plant multispectral 3D point cloud model, which optimizes the traditional 2D image measurement method and can serve as a precise and efficient tool for high-throughput plant chlorophyll measurements. This low-cost, easy-to-operate method can be expanded to the physiological assessments of other plant canopies. This approach is promising and of great significance to the development of plant phenomics and other research fields.

Author Contributions

Conceptualization, G.S. and X.W.; Methodology, G.S. and X.W.; Software, G.S. and Y.S.; Validation, G.S., and Y.S.; Formal Analysis, G.S. and W.L.; Investigation, G.S., X.W. and W.L.; Writing-Original Draft Preparation, G.S. and Y.S.; Writing-Review & Editing, G.S., X.W. and Y.D.; Project Administration, X.W. and Y.D.; Funding Acquisition, G.S., X.W.

Funding

This research was supported by the Natural Science Foundation of Jiangsu Province (Grant No. BK201707027), National Key R&D Program of China (Grant No. 2017YFD0701400), and the Fundamental Research Funds for the Central Universities (Grant No. KYGX201703), and the Natural Science Foundation of Jiangsu Province (Grant No. BK20150686).

Acknowledgments

The authors acknowledge the invaluable technical assistance from Yongbo Li and Yongnian Zhang.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Padilla, F.M.; Gallardo, M.; Pena-Fleitas, M.T.; de Souza, R.; Thompson, R.B. Proximal optical sensors for nitrogen management of vegetable crops: A review. Sensors 2018, 18, 2083. [Google Scholar] [CrossRef]

- Yang, Z.; Hu, J.; Duan, T.; Wang, S.; Wu, J.; Su, W.; Zhang, Y. Research progress of nondestructive diagnostic technique of chlorophyll in plants. Chin. Agric. Sci. Bull. 2019, 35, 139–144. [Google Scholar]

- Lin, F.F.; Qiu, L.F.; Deng, J.S.; Shi, Y.Y.; Chen, L.S.; Wang, K. Investigation of SPAD meter-based indices for estimating rice nitrogen status. Comput. Electron. Agric. 2010, 71, S60–S65. [Google Scholar] [CrossRef]

- Padilla, F.M.; Peña-Fleitas, M.T.; Gallardo, M.; Giménez, C.; Thompson, R.B. Derivation of sufficiency values of a chlorophyll meter to estimate cucumber nitrogen status and yield. Comput. Electron. Agric. 2017, 141, 54–64. [Google Scholar] [CrossRef]

- Ghasemi, M.; Arzani, K.; Yadollahi, A.; Ghasemi, S.; Khorrami, S.S. Estimate of leaf chlorophyll and nitrogen content in Asian pear (Pyrus serotina Rehd.) by CCM-200. Not. Sci. Biol. 2011, 3, 91–94. [Google Scholar] [CrossRef][Green Version]

- He, Y.; Peng, J.; Liu, F.; Zhang, C.; Kong, W. Critical review of fast detection of crop nutrient and physiological information with spectral and imaging technology. Trans. Chin. Soc. Agric. Eng. 2015, 31, 174–189. [Google Scholar]

- He, Y.; Zhao, C.; Wu, D. Fast detection technique and sensor instruments for crop-environment information: A review. Sci. China Ser. F Inf. Sci. 2010, 40, 1–20. [Google Scholar]

- Wang, X.; Huang, J. Principles and Techniques of Plant Physiological Biochemical Experiment; Higher Education Press: Beijing, China, 2006; pp. 134–136. [Google Scholar]

- Perez-Patricio, M.; Camas-Anzueto, J.L.; Sanchez-Alegria, A.; Aguilar-Gonzalez, A.; Gutierrez-Miceli, F.; Escobar-Gomez, E.; Voisin, Y.; Rios-Rojas, C.; Grajales-Coutino, R. Optical method for estimating the chlorophyll contents in plant leaves. Sensors 2018, 18, 650. [Google Scholar] [CrossRef]

- Jörg, P.; Pablo, R.; Hu, Y.; Sebastian, K.; Gero, B.; Bodo, M.; Urs, S. Use of a digital camera as alternative method for non-destructive detection of the leaf chlorophyll content and the nitrogen nutrition status in wheat. Comput. Electron. Agric. 2017, 140, 25–33. [Google Scholar] [CrossRef]

- Dario, C.P.; Maria, C.; Bernardo, P.; Antonio, F.L.; Giovanni, A. Contactless and non-destructive chlorophyll content prediction by random forest regression: A case study on fresh-cut rocket leaves. Comput. Electron. Agric. 2017, 140, 303–310. [Google Scholar] [CrossRef]

- Liang, Y.; Daisuke, U.; Liao, K.-L.; Hedrick, T.L.; Gao, Y.; Jones, A.M. A nondestructive method to estimate the chlorophyll content of Arabidopsis seedlings. Plant Methods 2017, 13, 26. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, D.; Shi, P.; Kenji, O. Estimating rice chlorophyll content and leaf nitrogen concentration with a digital still color camera under natural light. Plant Methods 2014, 10, 36. [Google Scholar] [CrossRef]

- Avinash, A.; Snehasish, D.G. Assessment of spinach seedling health status and chlorophyll content by multivariate data analysis and multiple linear regression of leaf image features. Comput. Electron. Agric. 2018, 152, 281–289. [Google Scholar] [CrossRef]

- Rei, S.; Tomohito, S.; Hideki, H. Using spectral reflectance to estimate leaf chlorophyll content of tea with shading treatments. Biosyst. Eng. 2018, 175, 168–182. [Google Scholar] [CrossRef]

- Yu, K.-Q.; Zhao, Y.-R.; Zhu, F.-L.; Li, X.-L.; He, Y. Mapping of chlorophyll and SPAD distribution in pepper leaves during leaf senescence using visible and near-infrared hyperspectral imaging. Trans. ASABE 2016, 59, 13–24. [Google Scholar] [CrossRef]

- Liu, B.; Yue, Y.M.; Li, R.; Shen, W.J.; Wang, K.L. Plant leaf chlorophyll content retrieval based on a field imaging spectroscopy system. Sensors 2014, 14, 19910–19925. [Google Scholar] [CrossRef]

- Zhang, J.; Han, W.; Huang, L.; Zhang, Z.; Ma, Y.; Hu, Y. Leaf chlorophyll content estimation of winter wheat based on visible and near-infrared sensors. Sensors 2016, 16, 437. [Google Scholar] [CrossRef]

- Qin, C.; Huang, J.; Wang, H.; Sun, H.; Zhang, Y.; Li, Y. Polarized hyperspectral characteristics and the ralationship with chlorophyll content of smooth leaves. J. Meteorol. Sci. 2019, 39, 421–426. [Google Scholar]

- Kuckenberg, J.; Tartachnyk, I.; Noga, G. Detection and differentiation of nitrogen-deficiency, powdery mildew and leaf rust at wheat leaf and canopy level by laser-induced chlorophyll fluorescence. Biosyst. Eng. 2009, 103, 121–128. [Google Scholar] [CrossRef]

- Thapa, S.; Zhu, F.; Walia, H.; Yu, H.; Ge, Y. A novel LiDAR-based instrument for high-throughput, 3D measurement of morphological traits Maize and Sorghum. Sensors 2018, 18, 1187. [Google Scholar] [CrossRef]

- Hosoi, F.; Nakabayashi, K.; Omasa, K. 3-D modeling of tomato canopies using a high-resolution portable scanning lidar for extracting structural information. Sensors 2011, 11, 2166–2174. [Google Scholar] [CrossRef]

- Hu, Y.; Wang, L.; Xiang, L.; Wu, Q.; Jiang, H. Automatic non-destructive growth measurement of leafy vegetables based on kinect. Sensors 2018, 18, 806. [Google Scholar] [CrossRef]

- Li, J.; Tang, L. Developing a low-cost 3D plant morphological traits characterization system. Comput. Electron. Agric. 2017, 143, 1–13. [Google Scholar] [CrossRef]

- Andujar, D.; Calle, M.; Fernandez-Quintanilla, C.; Ribeiro, A.; Dorado, J. Three-dimensional modeling of weed plants using low-cost photogrammetry. Sensors 2018, 18, 1077. [Google Scholar] [CrossRef]

- Rose, J.C.; Paulus, S.; Kuhlmann, H. Accuracy analysis of a multi-view stereo approach for phenotyping of tomato plants at the organ level. Sensors 2015, 15, 9651–9665. [Google Scholar] [CrossRef]

- Zhang, Y.; Teng, P.; Shimizu, Y.; Hosoi, F.; Omasa, K. Estimating 3D leaf and stem shape of nursery paprika plants by a novel multi-camera photography system. Sensors 2016, 16, 874. [Google Scholar] [CrossRef]

- George, A.; Michael, L.; Radu, B. Rapid characterization of vegetation structure with a microsoft kinect sensor. Sensors 2013, 13, 2384–2398. [Google Scholar] [CrossRef]

- Dionisio, A.; César, F.; José, D. Matching the best viewing angle in depth cameras for biomass estimation based on poplar seedling geometry. Sensors 2015, 15, 12999–13011. [Google Scholar] [CrossRef]

- Manuel, V.; David, R.; Dimitris, S.; Miguel, G.; Marlowe, E.; Hans, W. 3-D reconstruction of maize plants using a time-of-flight camera. Comput. Electron. Agric. 2018, 145, 235–247. [Google Scholar]

- Kenta, I.; Itchoku, K.; Fumiki, H. Three-dimensional monitoring of plant structural parameters and chlorophyll distribution. Sensors 2019, 19, 413. [Google Scholar] [CrossRef]

- Zhang, J.; Li, Y.; Xie, J.; Li, Z.N. Research on optimal near-infrared band selection of chlorophyll (SPAD) 3D distribution about rice plant. Spectrosc. Spectr. Anal. 2017, 37, 3749–3757. [Google Scholar] [CrossRef]

- Liu, H.; Mao, H.; Zhu, W.; Zhang, X.; Gao, H. Rapid diagnosis of tomato NPK nutrition level based on hyperspectral technology. Trans. Chin. Soc. Agric. Eng. 2015, 31, 212–220. [Google Scholar]

- Cai, X. Modern Vegetable Greenhouse Facilities and Management; Shanghai Science and Technology Press: Shanghai, China, 2000; p. 9. [Google Scholar]

- Mingjing, G.; Min, Y.; Hang, G.; Yuan, X. Mobile robot indoor positioning based on a combination of visual and inertial sensors. Sensors 2019, 19, 1773. [Google Scholar] [CrossRef]

- Yanli, L.; Heng, Z.; Hanlei, G.; Neal, N. A fast-brisk feature detector with depth information. Sensors 2018, 18, 3908. [Google Scholar] [CrossRef]

- Tomislav, P.; Tomislav, P.; Matea, Đ. 3D registration based on the direction sensor measurements. Pattern Recognit. 2019, 88, 532–546. [Google Scholar] [CrossRef]

- Henke, M.; Junker, A.; Neumann, K.; Altmann, T.; Gladilin, E. Automated alignment of multi-modal plant images using integrative phase correlation approach. Front. Plant Sci. 2018, 9, 1519. [Google Scholar] [CrossRef]

- Paul, B.J.; Neil, M.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).