1. Introduction

It is well known that multi-sensor fusion is an important issue for the autonomous navigation of unmanned vehicles, especially when they operate in real environments with unanticipated changes. With the aid of various types of sensors, such as temperature and humidity sensors, collision sensors, flow velocity and flow rate sensors, and displacement sensors, unmanned vehicles have been effectively applied to the fields of sounding survey [1], environment monitoring [2], underwater acoustics [3], marine rescue [4], target tracking [5], and water monitoring [6]. In these cases, the information from multiple sensors is combined to generate desirable trajectories for unmanned vehicles to follow, a task that is often formulated as a travelling salesman problem (TSP).

The TSP was proved to be a typical NP-hard (non-deterministic polynomial-time hard) combinatorial optimization problem in 1979 [7]. Its goal is to design the shortest route for a traveler to visit each city exactly once and ultimately return to the departure city. Because the search space grows factorially with the number of cities, traditional exact algorithms, such as the enumeration method, fail to reach an exact solution within a reasonable computation time. Hence, novel algorithms with the capability of self-organization and self-adaptation need to be developed to discover an adequate solution, sacrificing optimality, accuracy, and completeness for running speed. Inspired by natural evolution models and adaptive population evolution, collective intelligence methods, including the genetic algorithm [8], particle swarm optimization (PSO) [9], ant colony optimization (ACO) [10], the artificial fish swarm algorithm [11], and the artificial bee colony algorithm [12], have entered a stage of rapid development for the TSP.
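The growth of the search space can be made concrete with a small sketch. The code below enumerates every closed tour of a tiny instance and counts the distinct tours for larger ones; the five coordinates and the function names are hypothetical, chosen only for illustration.

```python
import itertools
import math


def tour_length(tour, coords):
    """Length of the closed route that visits `tour` in order and returns to the start."""
    return sum(
        math.dist(coords[tour[k]], coords[tour[(k + 1) % len(tour)]])
        for k in range(len(tour))
    )


def brute_force_tsp(coords):
    """Enumerate every tour that starts at city 0 and keep the shortest one."""
    n = len(coords)
    best_tour, best_len = None, math.inf
    for rest in itertools.permutations(range(1, n)):
        tour = (0,) + rest
        length = tour_length(tour, coords)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len


def count_distinct_tours(n):
    """Number of distinct closed tours of n >= 3 cities when distances are symmetric."""
    return math.factorial(n - 1) // 2


# Hypothetical 5-city instance: the corners of a unit square plus one point above it.
cities = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 1.5)]
best_tour, best_len = brute_force_tsp(cities)
```

Five cities give only 12 distinct tours, but 20 cities already give more than 6 × 10^16, which is why enumeration stops being practical so quickly.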

Particle swarm optimization, proposed by Eberhart and Kennedy in 1995, is an evolutionary metaheuristic technique [13]. It solves an optimization problem by maintaining a population of candidate solutions, called particles, and moving these particles around a multi-dimensional search space with a certain velocity. With a fitness function to assess each solution, the movements of all of the particles are dynamically guided by their own experience, as well as the entire swarm’s experience. Ultimately, the swarm is expected to move toward the most satisfactory solution. Owing to its fast convergence, simple parameter settings, and easy implementation, the PSO algorithm has been widely used in various fields, including function optimization [14], training of neural networks [15], and fuzzy system control [16].

Additionally, in order to improve the performance of PSO in solving the discrete TSP, valuable research has recently been conducted on the hybridization of heuristic methods. B. Shuang et al. proposed a hybrid algorithm that combined the respective advantages of PSO and ACO. The search mechanism of PSO was effectively utilized: the particle’s experience helped to expand the search space, while the swarm experience drove the global convergence [17]. X. Zhang et al. improved PSO by using a priority coding method to encode the solution, dynamically setting the velocity range to remove the side effects of the discrete search space, and introducing the k-centers method to avoid local optima. The improved algorithm performed well in preserving the swarm diversity [18]. H. M. Feng et al. proposed a hybrid fuzzy learning algorithm for large-scale search spaces. The adaptive fuzzy C-means algorithm was first used to divide the large set of cities into subsets, followed by transform-based particle swarm optimization and simulated annealing to acquire the local optimal solutions. The complete route was then rebuilt by the MAX-MIN merging algorithm [19]. M. Mahi et al. introduced PSO into ACO to optimize the city selection parameters, and used the 3-opt algorithm to escape local optima [20]. In addition, the combination of PSO with a genetic algorithm by W. Deng et al. [21] and the method combining PSO with the artificial fish swarm algorithm [22] also showed notable improvements.

It is well known that PSO performance depends heavily on the proper balance between exploration, namely searching a broader space, and exploitation, namely moving to the local optimum. Tuning the PSO parameters therefore has a significant impact on the optimization performance, and choosing proper parameters to improve the algorithm effectiveness has been the focus of many works. In the work by Y. Zhang et al., the raw fitness value was adjusted by the power-rank scaling method, the acceleration coefficients and the inertia weight were changed with iteration, and the random numbers were generated by a chaotic operator. Simulation results showed that the novel method outperformed the elite genetic algorithm with migration, simulated annealing, chaotic artificial bee colony, and PSO in both success rate and time cost [23]. To solve the vehicle routing problem with time windows, a variant of PSO with three adaptive strategies was used, in which all parameters started with random values and were gradually adapted to suitable values over the iterations, subject to certain limits [24]. K. R. Harrison et al. analyzed the results of PSO using 3036 configurations of control parameters on 22 benchmark problems and found that the optimal values were time-dependent. They also recommended optimal ranges for the acceleration coefficients and the inertia weight [25].

Moreover, with the fast development of intelligent algorithms and autonomous navigation technology, PSO has also been successfully applied to the vehicle path planning problem. R. J. Kenefic combined a heading constraint heuristic with PSO to solve the turn-rate-limited TSP for an unmanned aerial vehicle. Permutations of the order of the tour vertices were considered to eliminate self-crossing in the planned path. Results revealed that PSO performed better than a standard algorithm in MATLAB because of the discontinuous and multimodal nature of the objective function [26]. To plan the shortest and smoothest route for a robot, a novel algorithm was presented with the PSO component used as a global planner and the modified probabilistic road map method used as a local planner. Results showed that this PSO-based algorithm was advantageous in runtime and path length [27]. M. D. Phung et al. improved PSO by integrating deterministic initialization, random mutation, and edge exchange. Experimental tests with real-world datasets from unmanned aerial vehicle inspection showed that the proposed algorithm could improve both computing time and travelling cost [28]. To plan a multi-objective optimal path for an autonomous underwater vehicle in dynamic environments, PSO was used to find suitable temporary waypoints, combined with waypoint guidance to generate an optimal path [29].

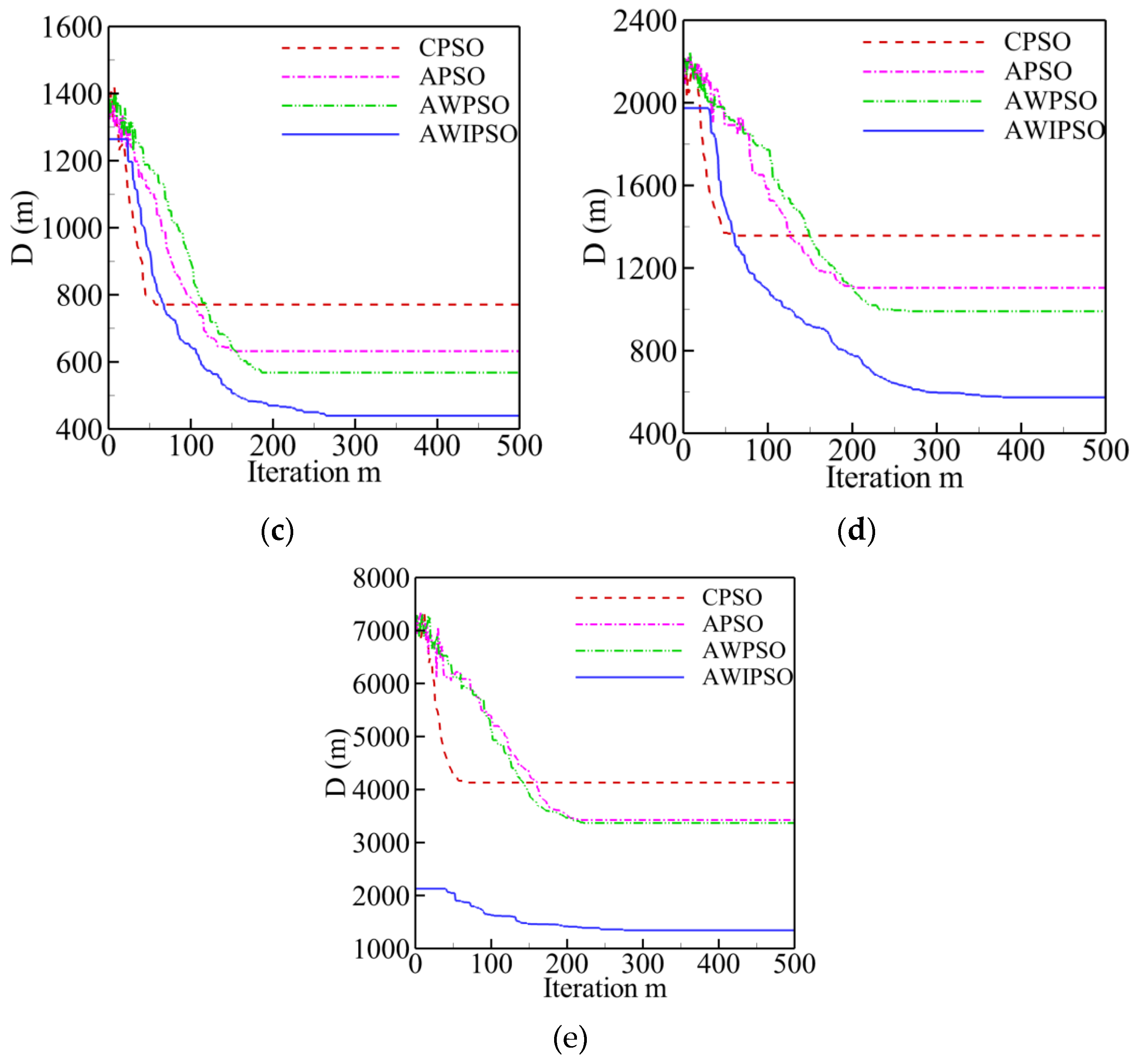

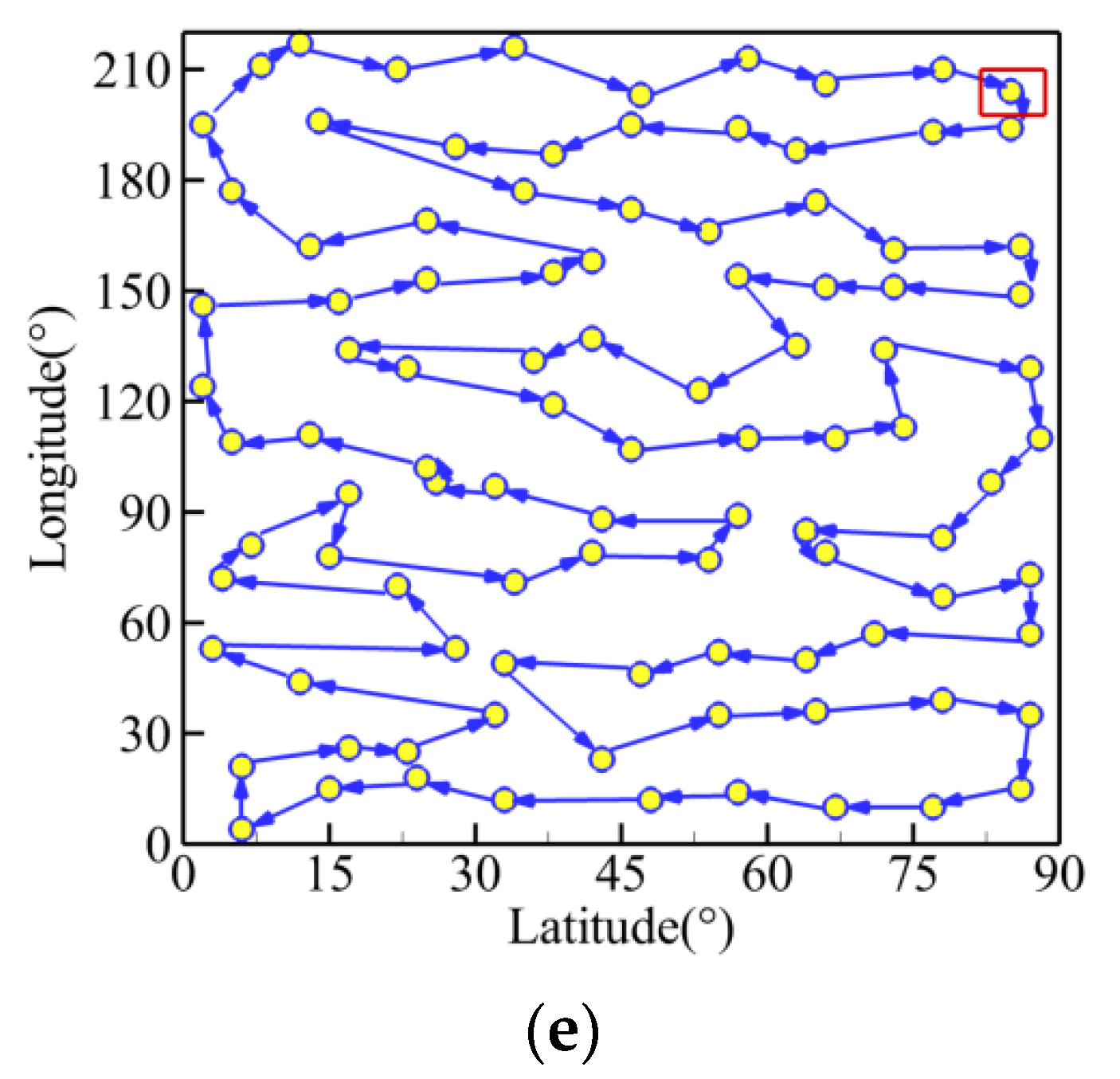

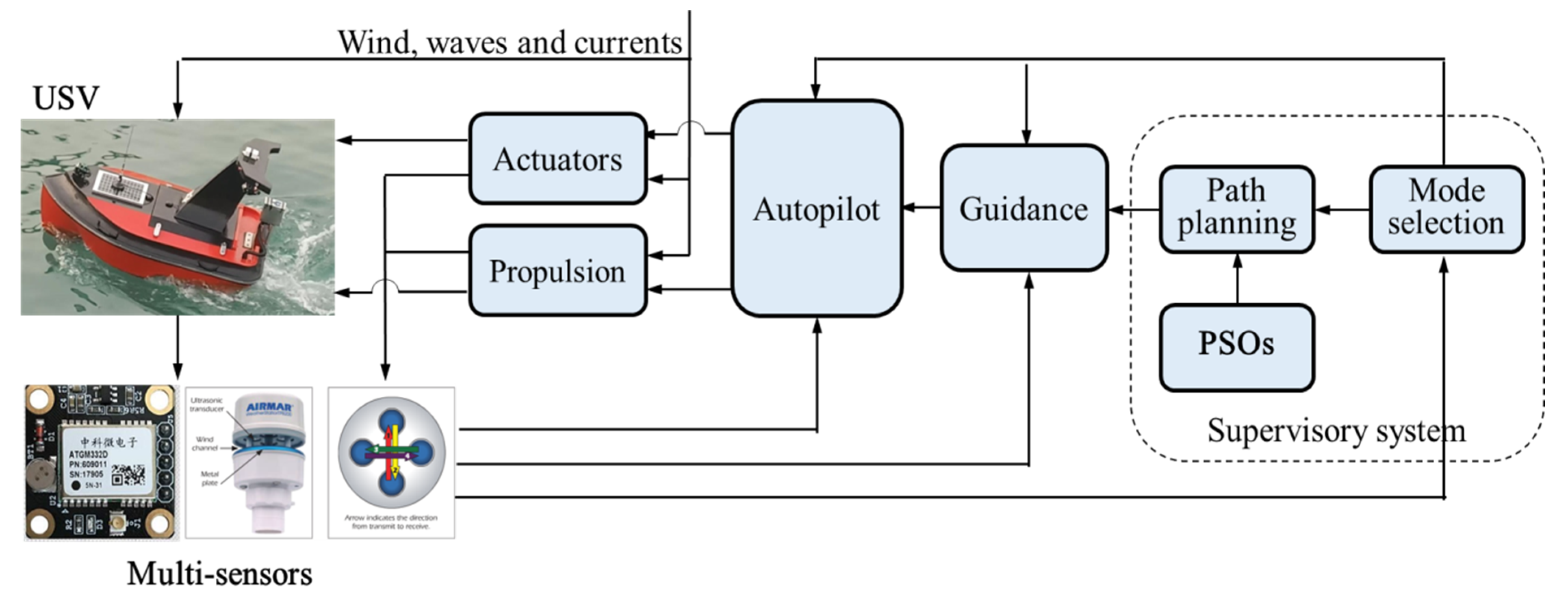

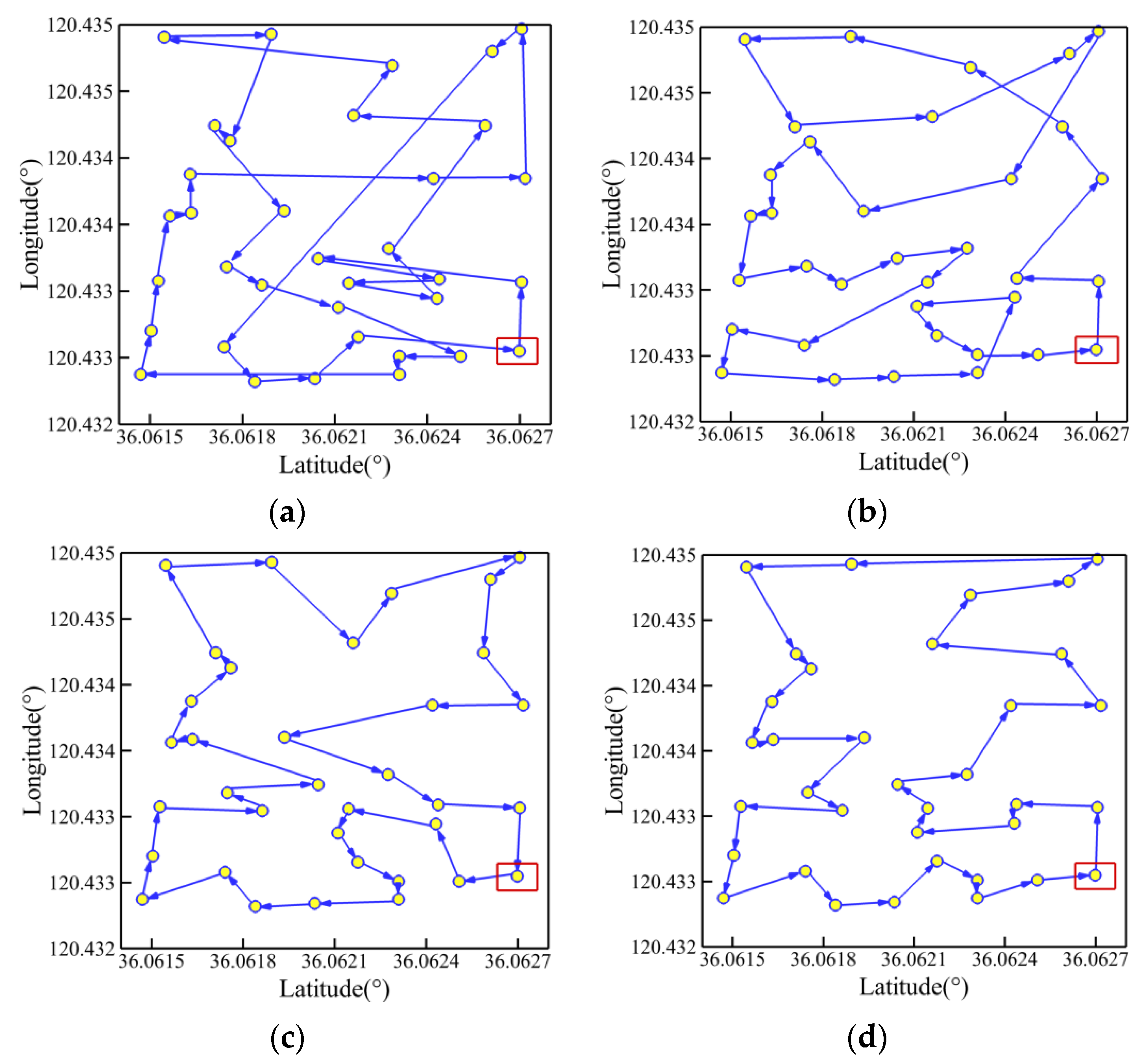

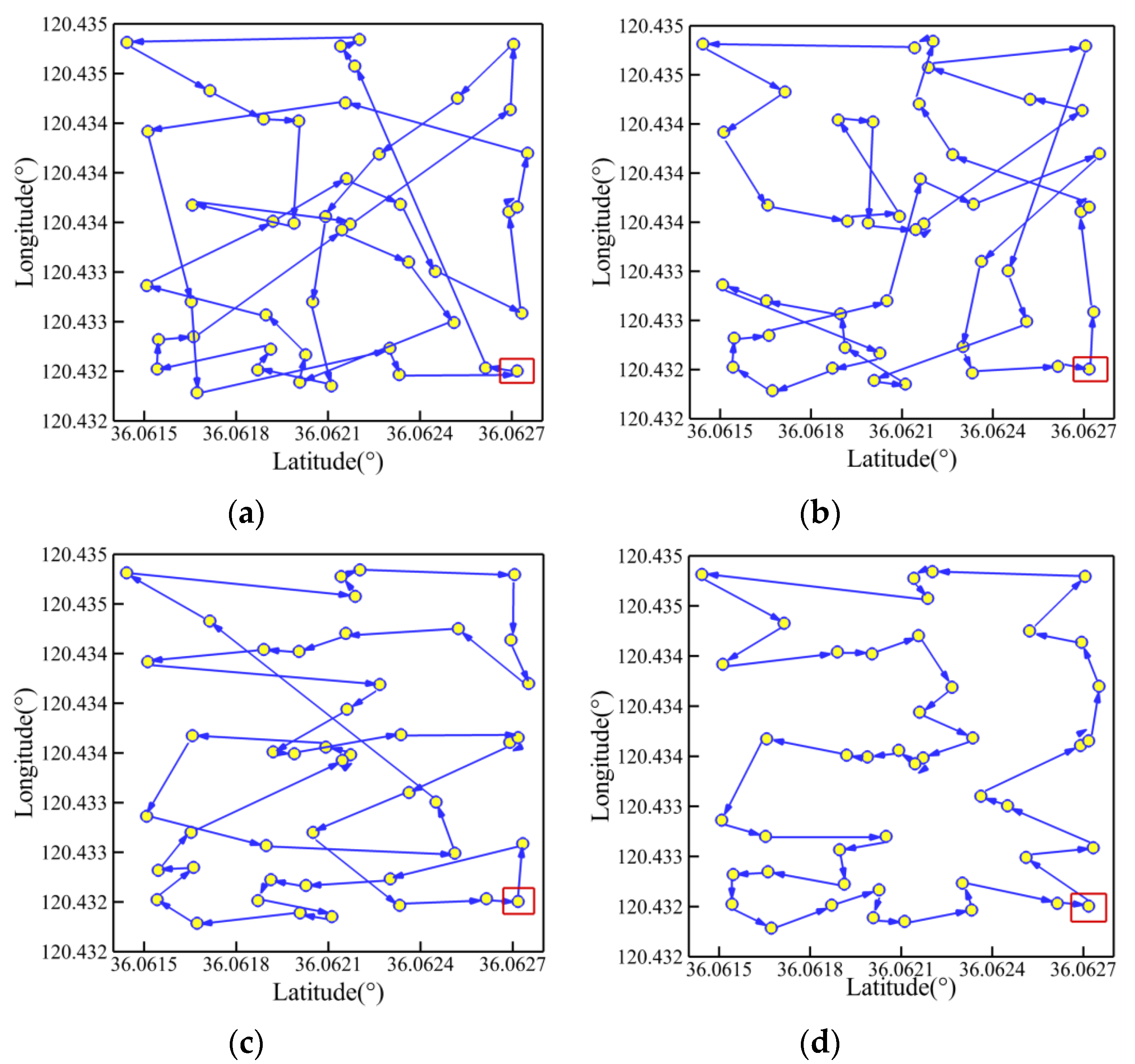

To avoid premature convergence and route self-crossing, and to enhance robustness, this work proposes three improved algorithms on the basis of the PSO method by combining one or two of the following optimization strategies: linearly descending inertia weight, adaptively controlled acceleration coefficients, and random grouping inversion. First, one hundred Monte Carlo simulations are conducted for five TSPLIB instances in order to compare the effectiveness of each improved algorithm in terms of route length, computing efficiency, and algorithm robustness. Furthermore, the improved PSO algorithms are applied to the navigation, guidance, and control (NGC) system of a self-developed unmanned surface vehicle (USV) with multi-sensor data in a real sea environment.

The main contributions of this work are as follows: (1) The important parameters, including the acceleration coefficients and inertia weight, are adjusted iteratively, with the aim of effectively reducing the path length and enhancing the robustness; (2) The strategy of random grouping inversion maintains the swarm diversity and accelerates the global convergence, which can avoid premature convergence and retain solution precision; (3) Path planning for a USV is conducted by combining the conventional PSO with the three optimization strategies, which generates feasible routes with satisfactory length and no self-crossing.

The rest of the paper is structured as follows. PSO algorithms with different optimization strategies are introduced concisely in Section 2. Results and discussions of Monte Carlo simulations and applications to a USV are presented in Section 3. Conclusions and future research directions are given in Section 4.

2. Proposed Algorithms

2.1. Particle Swarm Optimization

As mentioned in Section 1, the conventional PSO is a population-based stochastic optimization method. At the beginning of the evolutionary process, the PSO method generates $N$ candidate solutions (namely $N$ particles) randomly within an $S$-dimensional search space. For the $i$-th particle, its position can be represented by a vector $X_i = (x_{i1}, x_{i2}, \ldots, x_{iS})^T$. Meanwhile, its velocity can be defined by a vector $V_i = (v_{i1}, v_{i2}, \ldots, v_{iS})^T$. A fitness function is used to evaluate the quality of each solution. For the TSP and path planning problem in this work, the fitness function is defined as $1/D$ ($D$ stands for the route length). For every iteration, all the particles depend on two kinds of experience for guiding their movement: the best position ($P_{is}$) an individual has known so far, and the best position ($P_{gs}$) the entire swarm has known so far. Correspondingly, the velocity and position of each particle are updated following Equations (1) and (2) [30].

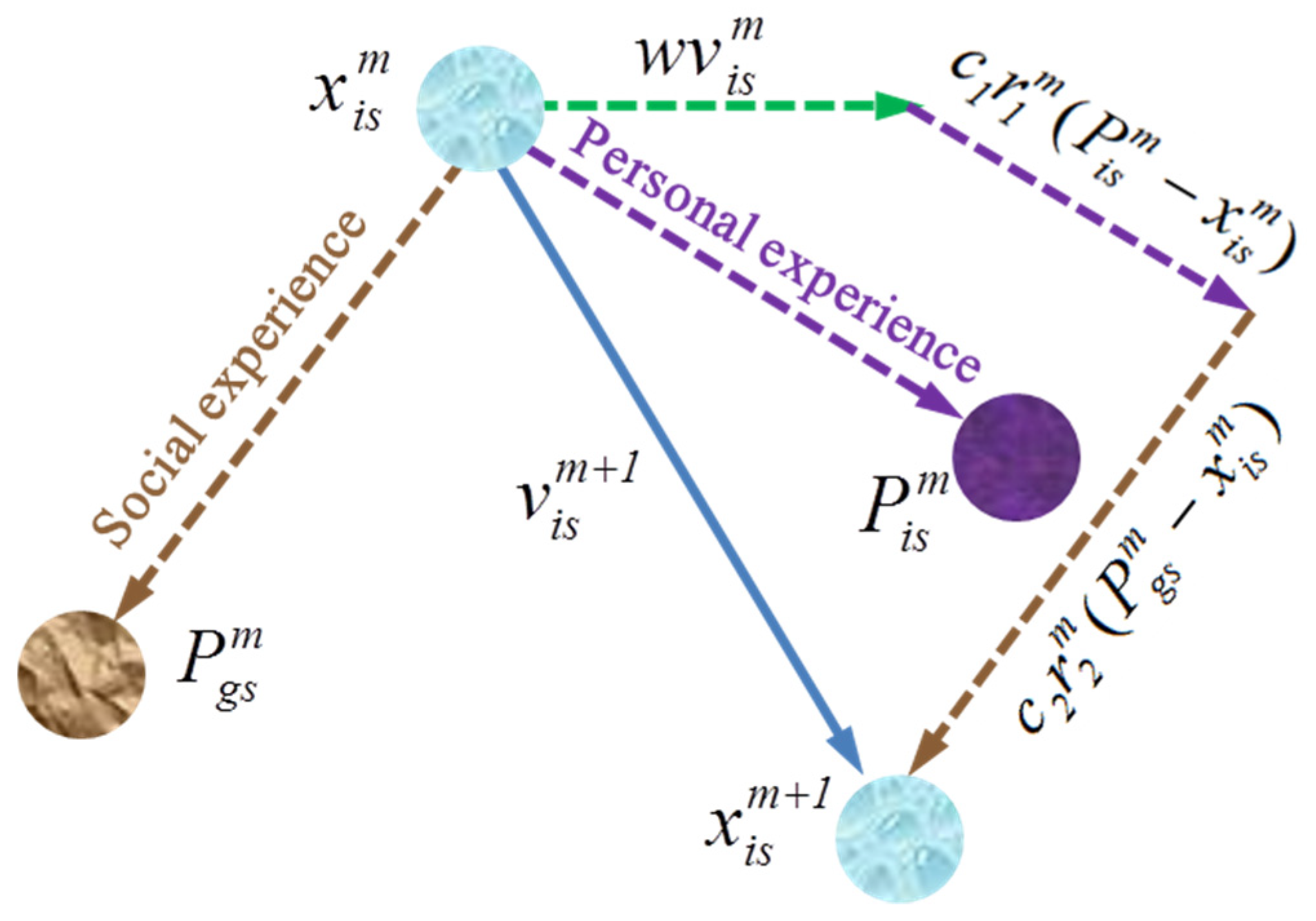

where $m$ is the current iteration number and $s$ denotes the $s$-th dimension. $r_1$ and $r_2$ are random numbers, uniformly distributed between 0 and 1 and regenerated at every iteration. $c_1$, $c_2$, and $w$ are PSO control parameters called the personal cognition coefficient, social cognition coefficient, and inertia weight, respectively.
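For readers working from the text alone, Equations (1) and (2) can be written out in the symbols just defined. The form below is the standard PSO update and is given under that assumption; the placement of the iteration superscript $m$ is a notational choice made here.

$$v_{is}^{m+1} = w\, v_{is}^{m} + c_1 r_1 \left(P_{is} - x_{is}^{m}\right) + c_2 r_2 \left(P_{gs} - x_{is}^{m}\right) \tag{1}$$

$$x_{is}^{m+1} = x_{is}^{m} + v_{is}^{m+1} \tag{2}$$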

It should be noted that there are three velocity terms on the right side of Equation (1). The first term, $w v_{is}^{m}$, is the inertia component, which keeps the particle moving in the direction of its last iteration. The inertia weight $w$, first proposed by Y. Shi and R. C. Eberhart in 1998, affects the capability of global search and algorithm convergence, and it is typically set between 0.8 and 1.2 [30]. The second term, $c_1 r_1 (P_{is} - x_{is}^{m})$, is called the personal cognition component, which causes the particle to move according to its memory of its individual best-known position. Meanwhile, the third term, $c_2 r_2 (P_{gs} - x_{is}^{m})$, is the social cognition component, which guides the particle towards the swarm’s best-known position based on communication with other particles. The acceleration coefficients $c_1$ and $c_2$ play an important role in balancing the effects of personal cognition and social cognition on guiding the particle towards the target optimal solution. The values of $c_1$ and $c_2$ are usually suggested to be 2. In addition, it is reported that the stochastic characteristics of $r_1$ and $r_2$ can weaken the effects of the individual best-known position and the swarm best-known position on the velocity update, so that the diversity of the population is maintained and premature convergence is avoided to some degree [31].
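The three velocity terms can be sketched for a single particle as follows. This is a minimal illustration that assumes the standard three-term update; the function name, the default parameter values, and the choice to draw fresh random numbers for every dimension are assumptions rather than details taken from the text.

```python
import random


def update_particle(x, v, p_best, g_best, w=1.0, c1=2.0, c2=2.0, rng=random):
    """Apply one velocity and position update to a single particle, dimension by dimension."""
    new_x, new_v = [], []
    for s in range(len(x)):
        r1, r2 = rng.random(), rng.random()       # fresh random numbers in [0, 1)
        inertia = w * v[s]                        # keeps the previous direction
        personal = c1 * r1 * (p_best[s] - x[s])   # pull towards the particle's own best
        social = c2 * r2 * (g_best[s] - x[s])     # pull towards the swarm's best
        v_next = inertia + personal + social
        new_v.append(v_next)
        new_x.append(x[s] + v_next)
    return new_x, new_v
```

When a particle already sits at both its personal best and the swarm best, the two cognition terms vanish and only the inertia term moves it.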

Figure 1 shows a schematic diagram of the position change of a particle over two successive iterations. The algorithm terminates when the maximum number of iterations ($M$) is reached or a minimum error threshold is met. The pseudo code of the conventional PSO is presented in Algorithm 1.

| Algorithm 1. Conventional Particle Swarm Optimization for TSP |
| --- |
| select swarm size and maximum iterations |
| define fitness function |
| preset acceleration coefficients ($c_1$, $c_2$) and inertia weight ($w$) |
| for each particle do |
| initialize velocity and position |
| evaluate initial fitness value |
| record initial $P_{is}$ and $P_{gs}$ |
| end |
| while neither the maximum number of iterations nor the minimum error criterion is reached do |
| for each particle do |
| calculate the new velocity using Equation (1) |
| update the new position using Equation (2) |
| evaluate new fitness value |
| update $P_{is}$ and $P_{gs}$ |
| end |
| end |
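The loop structure of Algorithm 1 can be sketched in a few lines. Because this section does not specify how velocities and positions are encoded for TSP tours, the sketch below runs on a continuous stand-in objective (the sphere function, minimized) instead of the $1/D$ fitness; the objective, the parameter values, and all names are assumptions chosen for a stable demonstration.

```python
import random


def sphere(x):
    """Stand-in objective to minimize; not the TSP fitness used in the paper."""
    return sum(xi * xi for xi in x)


def pso(objective, dim=2, swarm_size=20, max_iter=200, w=0.7, c1=1.5, c2=1.5,
        bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    # Initialize positions, velocities, and the personal and swarm bests.
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(swarm_size)]
    vs = [[0.0] * dim for _ in range(swarm_size)]
    p_best = [x[:] for x in xs]
    p_val = [objective(x) for x in xs]
    g_idx = min(range(swarm_size), key=p_val.__getitem__)
    g_best, g_val = p_best[g_idx][:], p_val[g_idx]

    for _ in range(max_iter):
        for i in range(swarm_size):
            for s in range(dim):
                r1, r2 = rng.random(), rng.random()
                vs[i][s] = (w * vs[i][s]
                            + c1 * r1 * (p_best[i][s] - xs[i][s])
                            + c2 * r2 * (g_best[s] - xs[i][s]))
                xs[i][s] += vs[i][s]
            val = objective(xs[i])
            if val < p_val[i]:                    # update the personal best
                p_best[i], p_val[i] = xs[i][:], val
                if val < g_val:                   # update the swarm best
                    g_best, g_val = xs[i][:], val
    return g_best, g_val
```

Calling `pso(sphere)` returns the best position found and its objective value. Only the maximum-iteration stopping rule is implemented; an error threshold could be added as a second exit condition.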

2.2. Linearly Descending Inertia Weight

Note that the inertia weight $w$ reflects the effect of the historical velocity on the current velocity of each particle, and can therefore balance the capacities of local and global searching. When $w = 0$, Equation (1) shows that the particle velocity depends only on its current cognition of the personal best-known position ($P_{is}$) and the swarm’s best-known position ($P_{gs}$). If a particle is located at the current $P_{gs}$, it will remain stationary, while the others will move towards a weighted combination of $P_{is}$ and $P_{gs}$. Under this circumstance, the entire swarm will be pulled towards the current $P_{gs}$ and converge to a local optimum. In contrast, with the aid of the inertia component, all the particles tend to explore a larger space. Hence, when faced with various optimization problems such as function optimization, training of neural networks, and fuzzy system control, it is necessary to adjust the value of $w$ to balance the local and global search capabilities of the algorithm.
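The $w = 0$ case can be made explicit. Assuming Equation (1) has the standard three-term form (an assumption here, written in the symbols of Section 2.1), dropping the inertia term leaves

$$v_{is}^{m+1} = c_1 r_1 \left(P_{is} - x_{is}^{m}\right) + c_2 r_2 \left(P_{gs} - x_{is}^{m}\right).$$

For a particle that currently sits at the swarm’s best position, $x_{is}^{m} = P_{is} = P_{gs}$, both differences vanish, so $v_{is}^{m+1} = 0$ and the particle does not move until another particle finds a better $P_{gs}$.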

In addition, the inertia weight $w$ also affects the global search behavior, especially the convergence behavior. Generally, a lower value of $w$ helps speed up convergence, while a larger value of $w$ contributes to the exploration of the entire search space. To obtain better global search capability during early iterations, which reduces the risk of being trapped in a local optimum, and to enhance local exploitation during later iterations, the inertia weight $w$ is decreased linearly over the iterations according to Equation (3).

$$w = w_{\max} - \frac{(w_{\max} - w_{\min})\, m}{M} \tag{3}$$

where $w_{\max}$ and $w_{\min}$ represent the maximum and minimum values of the inertia weight $w$, respectively, and $m$ and $M$ are the current and maximum numbers of iterations, as defined in Section 2.1.
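A linear decrease of the inertia weight can be sketched as a one-line schedule. The default bounds of 0.9 and 0.4 and the convention that the iteration counter starts at 0 are assumptions made for this illustration, not values stated in the text.

```python
def inertia_weight(m, max_iter, w_max=0.9, w_min=0.4):
    """Linearly decrease the inertia weight from w_max (m = 0) to w_min (m = max_iter)."""
    return w_max - (w_max - w_min) * m / max_iter


# Hypothetical schedule sampled at the start, middle, and end of a 100-iteration run.
schedule = [inertia_weight(m, 100) for m in (0, 50, 100)]
```

The schedule would be evaluated once per iteration and the result passed to the velocity update in place of a fixed $w$.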

2.3. Adaptively Controlled Acceleration Coefficients

The acceleration coefficients $c_1$ and $c_2$ reflect the information exchange among particles, and determine the distance a particle moves towards the target solution under the guidance of personal cognition and social cognition in a single iteration. Small values of the acceleration coefficients would let the particle wander far from the target region, while large values would urge the particle to move quickly towards the target region but ultimately overshoot it. When $c_1$ and $c_2$ are both equal to zero, the particle will fly at its current velocity until it hits the border of the search space. As a result, a satisfactory solution is hard to find within the restricted search space. If $c_1$ is zero, the particle loses its personal cognition. Although the search space could be enlarged by taking into account the particle interactions, the swarm is more likely to be trapped in a local optimum when faced with a complex optimization problem. In addition, when $c_2$ is zero, no information is exchanged within the swarm and each particle works independently, so it is almost impossible to find the optimal solution.

As mentioned in Section 2.1, the values of $c_1$ and $c_2$ are kept constant during the whole evolutionary procedure of the conventional PSO. However, fixed settings have inherent limitations: large values make each particle rapidly converge towards a local optimum, while low values cause each particle to wander far from the target regions. Hence, the concept of acceleration coefficients that change linearly with the iterations was employed by A. Ratnaweera et al. [32], Y. Zhang et al. [23], and Z. Yan et al. [29]. A relatively larger $c_1$ and a relatively lower $c_2$ were used during the early stage of iterations. As the iterations proceeded, the value of $c_1$ was linearly reduced, while the value of $c_2$ was linearly increased, as formulated by Equations (4) and (5). It was reported that the linearly changing acceleration coefficients could help to reduce the probability of premature convergence during early iterations, and also enhance the convergence performance during later iterations.

$$c_1 = c_{1\max} - \frac{(c_{1\max} - c_{1\min})\, m}{M} \tag{4}$$

$$c_2 = c_{2\min} + \frac{(c_{2\max} - c_{2\min})\, m}{M} \tag{5}$$

where the subscripts max and min stand for the maximum and minimum values of the acceleration coefficients $c_1$ and $c_2$, and $m$ and $M$ are the current and maximum numbers of iterations.
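The linearly changing coefficients described above can be sketched as a pair of schedules, one decreasing and one increasing. The bounds of 2.5 and 0.5 used as defaults are assumed illustrative values, and the function name is hypothetical.

```python
def linear_acceleration(m, max_iter, c1_max=2.5, c1_min=0.5, c2_max=2.5, c2_min=0.5):
    """Return (c1, c2) at iteration m: c1 falls linearly while c2 rises linearly."""
    frac = m / max_iter
    c1 = c1_max - (c1_max - c1_min) * frac   # personal cognition shrinks over time
    c2 = c2_min + (c2_max - c2_min) * frac   # social cognition grows over time
    return c1, c2


# Hypothetical 100-iteration run sampled at its start, midpoint, and end.
start, middle, end = (linear_acceleration(m, 100) for m in (0, 50, 100))
```

Early iterations are thus dominated by each particle's own memory, and late iterations by the swarm's best-known position.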

However, it is thought that the effects of the acceleration coefficients on algorithm convergence are restricted when their values change linearly during the whole evolutionary procedure. For instance, a larger influence of social information is of great significance in the later stage of the algorithm to improve the searching efficiency, which cannot be realized in a timely manner by simple linear variations of the acceleration coefficients. Consequently, an evaluation parameter ($K$) is introduced in this work according to the degree of swarm convergence. Its value is defined as the ratio of the number of successfully converged particles (called advantageous particles) in a single iteration to the initial swarm size, $K = P/N$, as represented in Equation (6). The evaluation parameter ($K$) is then employed to adaptively control the changing rate of the acceleration coefficients by using Equations (7) and (8).

Here, $P$ stands for the number of particles that successfully converge in a single iteration, and $N$ is the initial swarm size. The strategy of adaptively controlled acceleration coefficients associates the values of the acceleration coefficients with the optimization status through the evaluation parameter ($K$). As the iterations proceed, the number of advantageous particles in the swarm increases, which enlarges the influence of advantageous particles on the entire swarm. Hence, it is supposed that excellent solutions would be protected as much as possible to help avoid local optima.
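The evaluation parameter itself is a simple ratio and can be sketched as below. The text does not state how a particle is judged to have successfully converged, so the criterion used here (the particle improved on its personal best in the current iteration) is a hypothetical stand-in, as are the function names. The sketch stops at $K$ and does not attempt the adaptive update of the coefficients.

```python
def advantageous_flags(new_fitness, personal_best_fitness):
    """Hypothetical criterion: a particle is advantageous if it beat its personal best."""
    return [new > old for new, old in zip(new_fitness, personal_best_fitness)]


def convergence_ratio(flags, initial_swarm_size):
    """K = P / N, where P counts the particles flagged as advantageous in this iteration."""
    if initial_swarm_size <= 0:
        raise ValueError("initial_swarm_size must be positive")
    p = sum(1 for flag in flags if flag)
    return p / initial_swarm_size


# Four particles with fitness 1/D; two of them improve in this iteration.
flags = advantageous_flags([0.5, 0.2, 0.9, 0.4], [0.4, 0.3, 0.8, 0.4])
k = convergence_ratio(flags, 4)
```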

2.4. Random Grouping Inversion

It should be noted that the conventional PSO (CPSO) uses a single swarm consisting of all the particles for evolution. Hence, it is likely that all the particles cluster around a certain position and stop exploring other areas of the search space. To reduce the occurrence of premature convergence, the concept of random grouping inversion is proposed and added before the update of $P_{is}$ and $P_{gs}$ during every iteration. The single swarm is divided into several subgroups, each of which evolves independently. As a result, the diversity of the swarm can be strengthened and the global convergence of the entire swarm is accelerated.

As to the number of particles in a subgroup, preliminary research indicated that a larger number would decrease the inherent capability of merit-based selection, while a lower number would weaken the role of the grouping mechanism. The number was finally set to four; in other words, four randomly chosen particles form a subgroup.

On the basis of the random grouping strategy, a further operation is proposed that simulates the inversion operation in biological evolution. After the fitness of each particle is evaluated, all four particles of a subgroup cluster around the local optimum discovered by that subgroup. Inversion is then carried out to generate new particles that replace two original particles of the subgroup: the order of the TSP tour between two randomly selected inversion points is reversed.
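The inversion step on a tour can be sketched as a segment reversal, which is the same move that 2-opt local search uses and which can remove a self-crossing. The function names and the unit-square example are illustrative assumptions.

```python
import math
import random


def invert_segment(tour, i, j):
    """Return a copy of `tour` with the cities between positions i and j (inclusive) reversed."""
    i, j = min(i, j), max(i, j)
    return tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]


def random_inversion(tour, rng=random):
    """Pick two distinct inversion points at random and reverse the segment between them."""
    i, j = rng.sample(range(len(tour)), 2)
    return invert_segment(tour, i, j)


def tour_length(tour, coords):
    """Length of the closed route, including the return to the first city."""
    return sum(math.dist(coords[tour[k]], coords[tour[(k + 1) % len(tour)]])
               for k in range(len(tour)))


# Unit square visited in a self-crossing order (both diagonals are travelled).
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
crossing = [0, 2, 1, 3]
uncrossed = invert_segment(crossing, 1, 2)
```

Reversing positions 1 to 2 turns the crossing tour into the square's perimeter, and because a reversal only reorders cities, the result is always a valid tour.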

Indeed, the strategy of random grouping inversion is based on two elements of Darwin’s theory of evolution: internal competition within a population and uncertain mutation. In theory, the internal competition of the population is a process of merit-based selection, in which only the fittest survives. The inversion is a type of uncertain mutation that helps to maintain the swarm diversity. Ultimately, the pool of swarm particles retains not only the fittest individual of each subgroup, but also its inversion-based variants. Hence, it is supposed that this strategy would help to enhance the population diversity and improve the effectiveness of swarm optimization. The pseudo code of random grouping inversion is shown in Algorithm 2.

| Algorithm 2. Random Grouping Inversion |
| --- |
| randomly permute the particle indices (randperm over the swarm size) |
| for each subgroup do |
| find the fittest particle among four particles |
| randomly select inversion points |
| inversion |
| update $P_{gs}$ |
| end |
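One possible reading of Algorithm 2 is sketched below. It assumes that each subgroup of four is overwritten with two copies of its fittest tour and two inversion-based variants of that tour; this replacement rule, the handling of a final incomplete subgroup, and all names are assumptions rather than details confirmed by the text.

```python
import math
import random


def tour_length(tour, coords):
    return sum(math.dist(coords[tour[k]], coords[tour[(k + 1) % len(tour)]])
               for k in range(len(tour)))


def fitness(tour, coords):
    """Fitness 1/D, where D is the closed route length."""
    return 1.0 / tour_length(tour, coords)


def inverted(tour, rng):
    """Reverse the tour segment between two randomly selected inversion points."""
    i, j = sorted(rng.sample(range(len(tour)), 2))
    return tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]


def random_grouping_inversion(swarm, coords, group_size=4, rng=random):
    """One pass of grouping, in-group selection, and inversion; returns (new_swarm, best_tour)."""
    order = list(range(len(swarm)))
    rng.shuffle(order)                                    # random grouping
    new_swarm = [None] * len(swarm)
    for start in range(0, len(order), group_size):
        group = order[start:start + group_size]
        best = max((swarm[k] for k in group), key=lambda t: fitness(t, coords))
        for slot, k in enumerate(group):
            # Assumed rule: the first slots keep the fittest tour, the last two
            # slots receive inversion-based variants of it.
            keep_best = slot < max(1, len(group) - 2)
            new_swarm[k] = best[:] if keep_best else inverted(best, rng)
    best_tour = max(new_swarm, key=lambda t: fitness(t, coords))   # candidate for the swarm best
    return new_swarm, best_tour
```

Because every subgroup keeps at least one unmodified copy of its fittest tour, the best fitness in the swarm cannot decrease in a single pass.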