Higher Order Feature Extraction and Selection for Robust Human Gesture Recognition using CSI of COTS Wi-Fi Devices

Abstract

1. Introduction

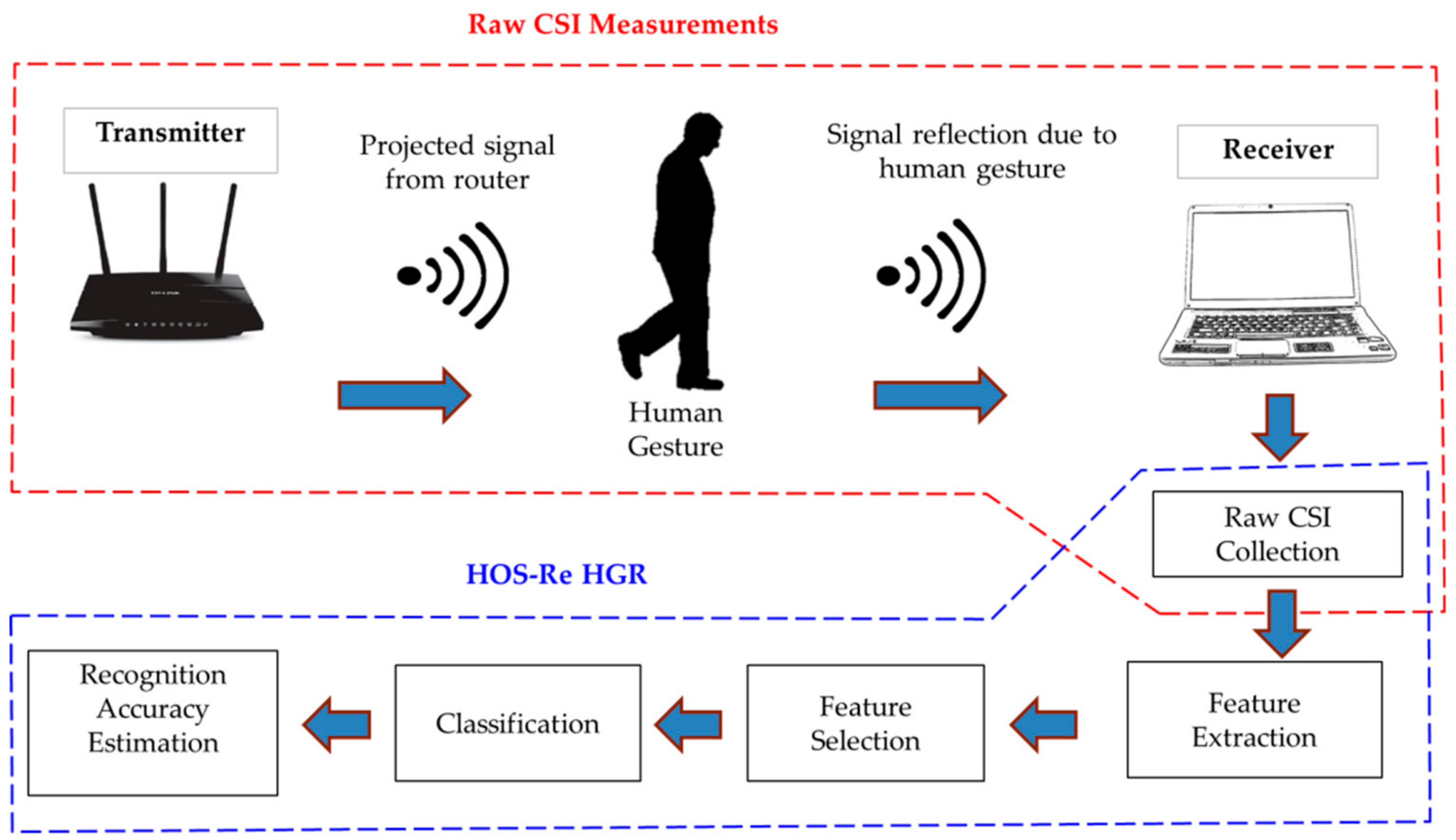

- This paper proposes third-order cumulant feature extraction, based on higher-order statistics (HOS), from raw CSI traces, and applies feature selection methods derived from information theory. Together they extract and select a robust feature subset that accounts for the non-Gaussian distribution of the signal.

- HOS-Re measures recognition performance with a machine learning (ML) classifier that takes the selected features as input. The experiments apply most of the mutual-information-based feature selection methods to the cumulant features and identify the methods that give higher recognition accuracy.

- To the best of our knowledge, this is the first reported work to combine cumulant features with feature selection for machine learning in the human gesture recognition (HGR) domain using CSI traces. It achieves recognition accuracies between 96.23% and 98.26% on the SignFi datasets.

2. Related Work

2.1. Pre-Processed CSI Signals

2.2. Raw CSI Signals

2.3. Feature Selection

3. Preliminaries

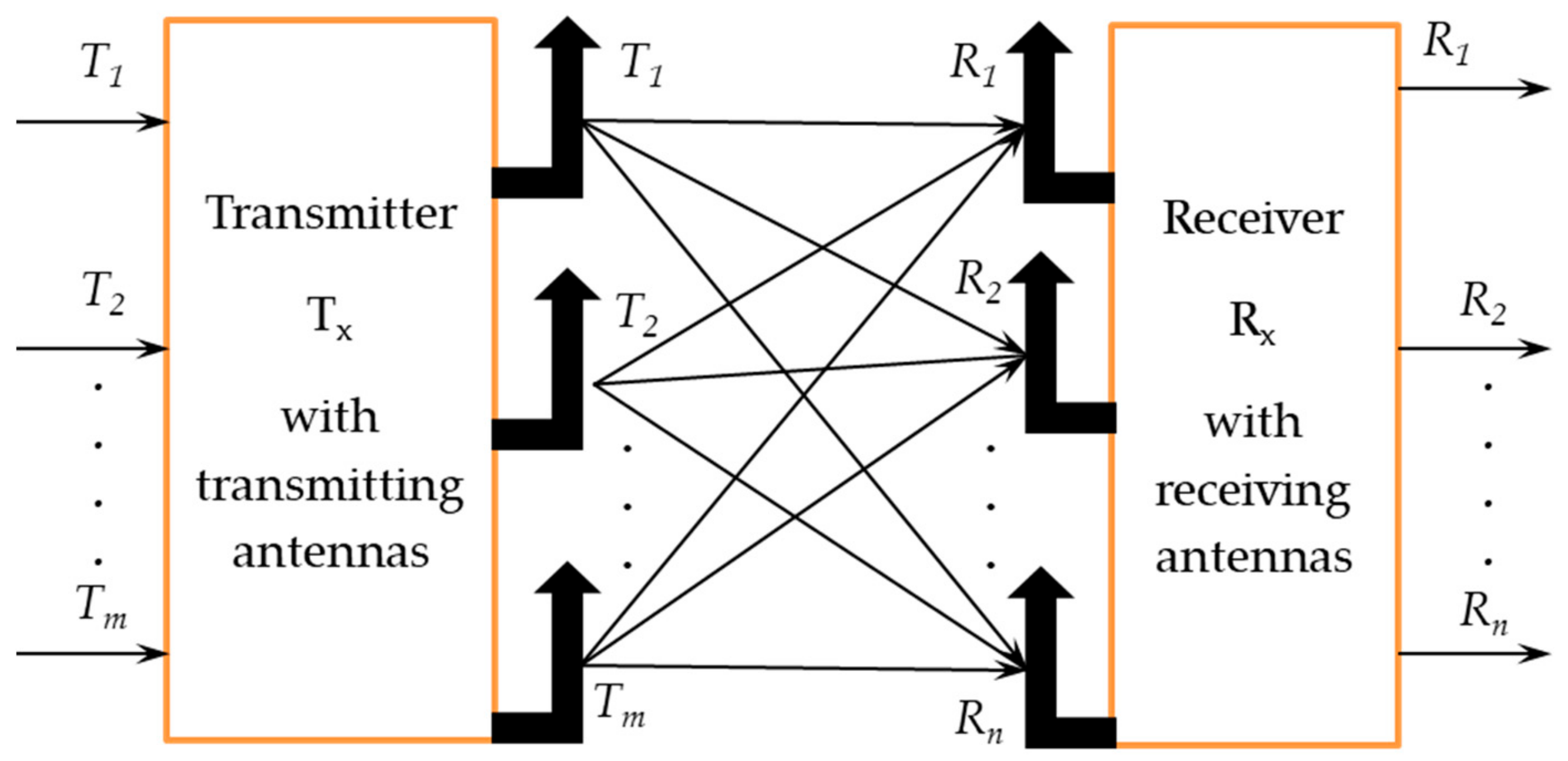

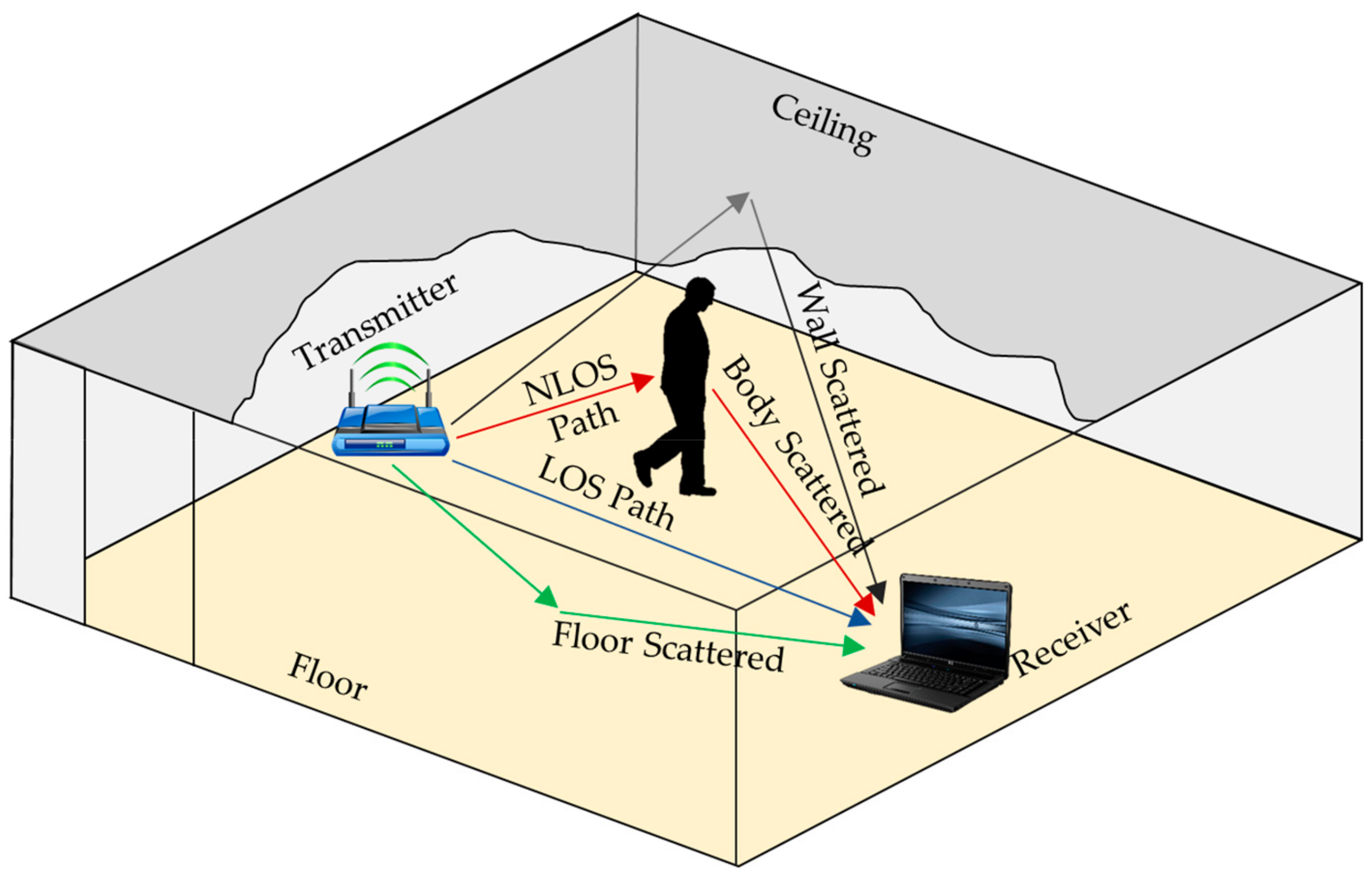

3.1. Channel State Information (CSI)

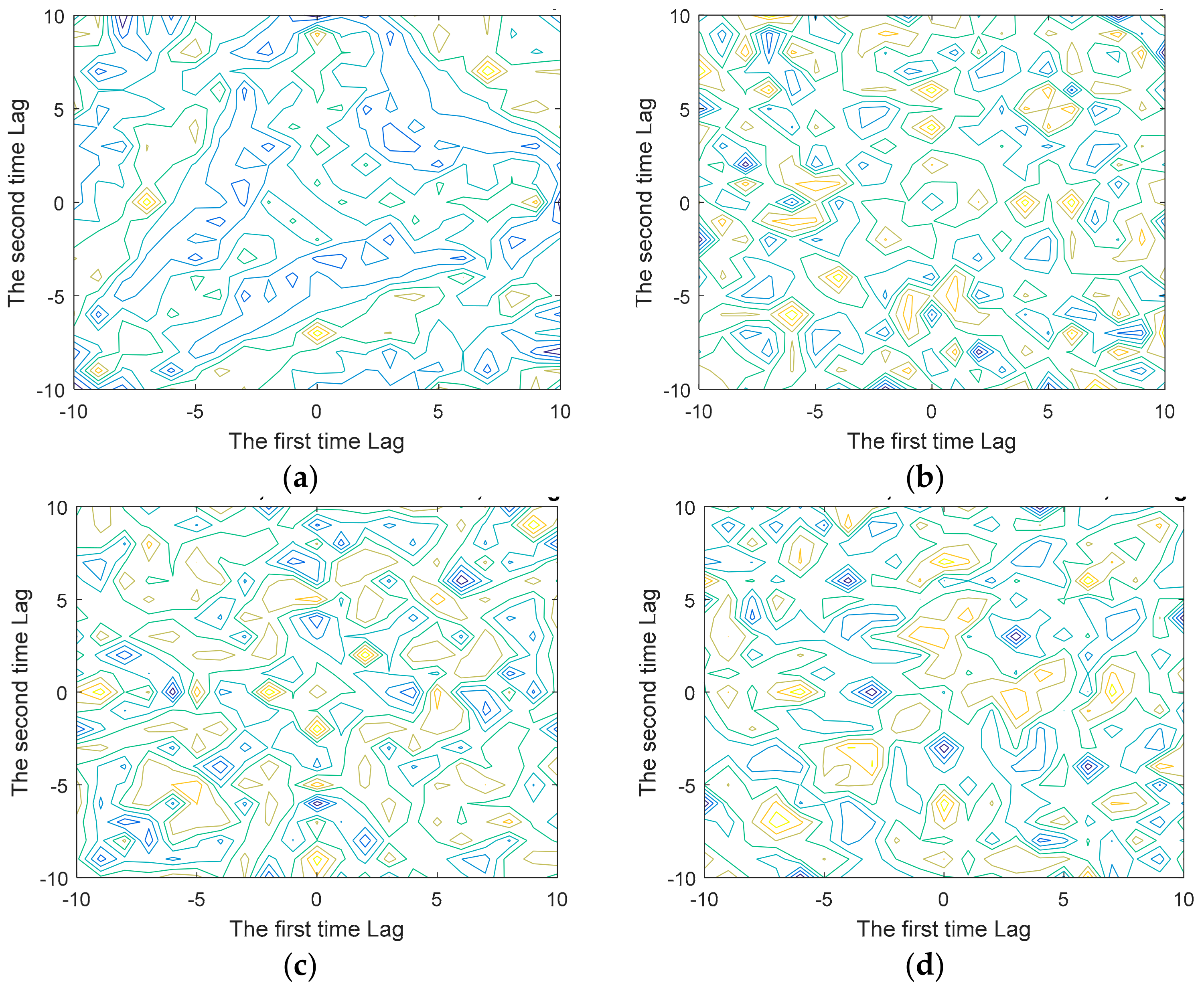

3.2. Higher Order Cumulants

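$$mg_{2}^{x}(\tau_1) = E\left[X(i)\,X(i+\tau_1)\right]$$

$$mg_{3}^{x}(\tau_1, \tau_2) = E\left[X(i)\,X(i+\tau_1)\,X(i+\tau_2)\right]$$

$$mg_{4}^{x}(\tau_1, \tau_2, \tau_3) = E\left[X(i)\,X(i+\tau_1)\,X(i+\tau_2)\,X(i+\tau_3)\right]$$

$$C_{2x}(\tau_1) = mg_{2}^{x}(\tau_1)$$

$$C_{3x}(\tau_1, \tau_2) = mg_{3}^{x}(\tau_1, \tau_2)$$

$$C_{4x}(\tau_1, \tau_2, \tau_3) = mg_{4}^{x}(\tau_1, \tau_2, \tau_3) - mg_{2}^{x}(\tau_1)\,mg_{2}^{x}(\tau_2 - \tau_3) - mg_{2}^{x}(\tau_2)\,mg_{2}^{x}(\tau_3 - \tau_1) - mg_{2}^{x}(\tau_3)\,mg_{2}^{x}(\tau_1 - \tau_2)$$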
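The third-order cumulant defined above can be estimated from a finite trace by replacing the expectation with a sample average. The following is a minimal sketch, not the authors' implementation: it assumes a real-valued 1-D series (for example, the amplitude of one CSI subcarrier), removes the mean first because the cumulant equals the moment only for zero-mean data, and uses the biased estimator that divides by the full length N. The function name `third_order_cumulant` and the parameter `max_lag` are illustrative choices.

```python
import numpy as np

def third_order_cumulant(x, max_lag):
    """Biased sample estimate of C3x(tau1, tau2) for lags in [-max_lag, max_lag]."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # centre the data (zero-mean assumption)
    n = x.size
    lags = range(-max_lag, max_lag + 1)
    c3 = np.zeros((2 * max_lag + 1, 2 * max_lag + 1))
    for a, t1 in enumerate(lags):
        for b, t2 in enumerate(lags):
            # keep only indices i for which i, i+t1 and i+t2 are all inside the trace
            lo = max(0, -t1, -t2)
            hi = min(n, n - t1, n - t2)
            if hi > lo:
                i = np.arange(lo, hi)
                c3[a, b] = np.sum(x[i] * x[i + t1] * x[i + t2]) / n
    return c3

# Synthetic, skewed (non-Gaussian) stand-in for a CSI amplitude trace.
rng = np.random.default_rng(0)
trace = rng.exponential(size=2000)
c3 = third_order_cumulant(trace, max_lag=3)
features = c3.ravel()                     # flatten the lag grid into a feature vector
print(c3.shape, features.shape)
```

The estimate is symmetric in (τ1, τ2), so roughly half of the flattened entries are duplicates; a feature selection stage would be expected to discard such redundancy.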

3.3. Feature Selection

4. System Overview—HOS–Re

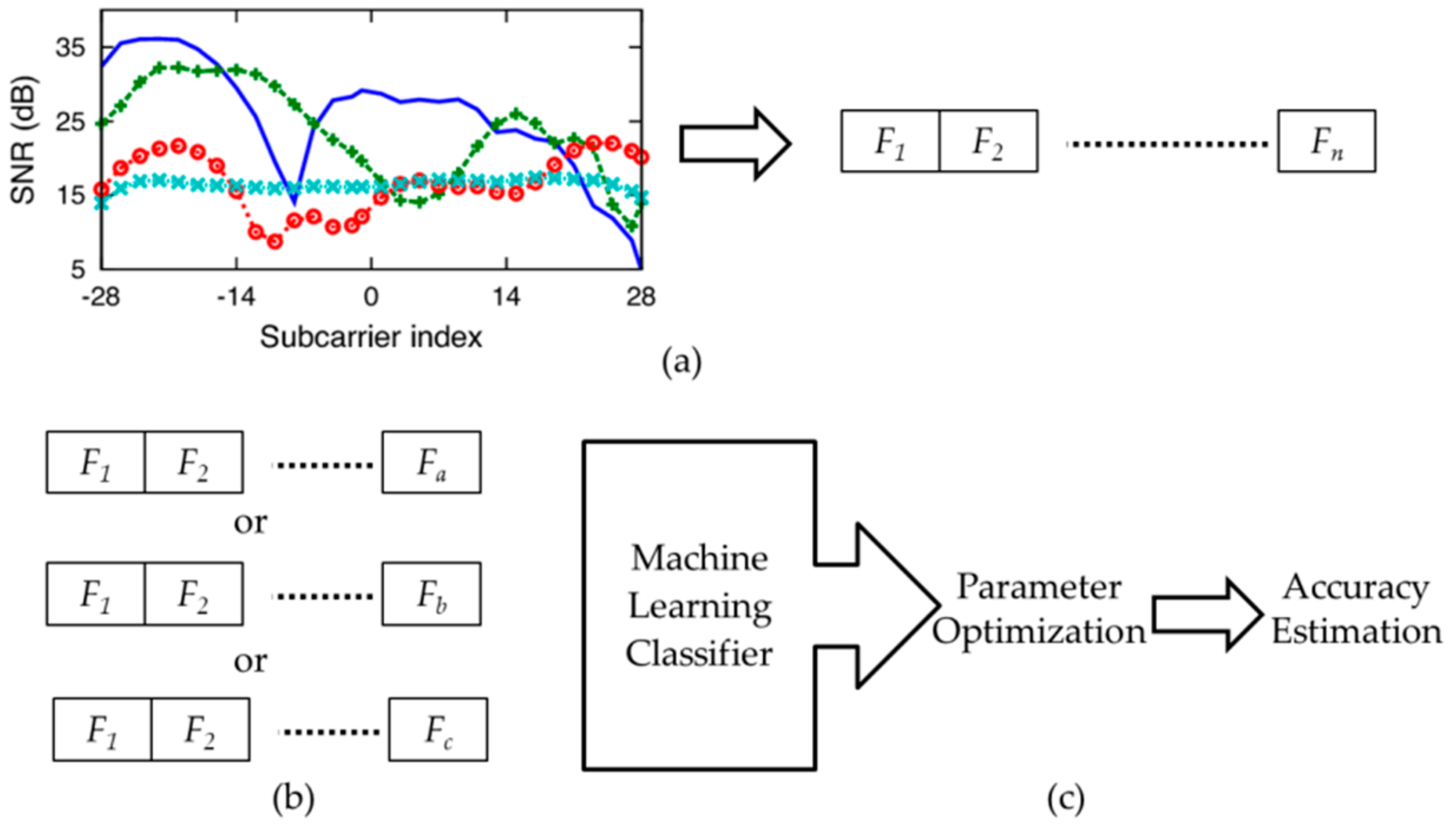

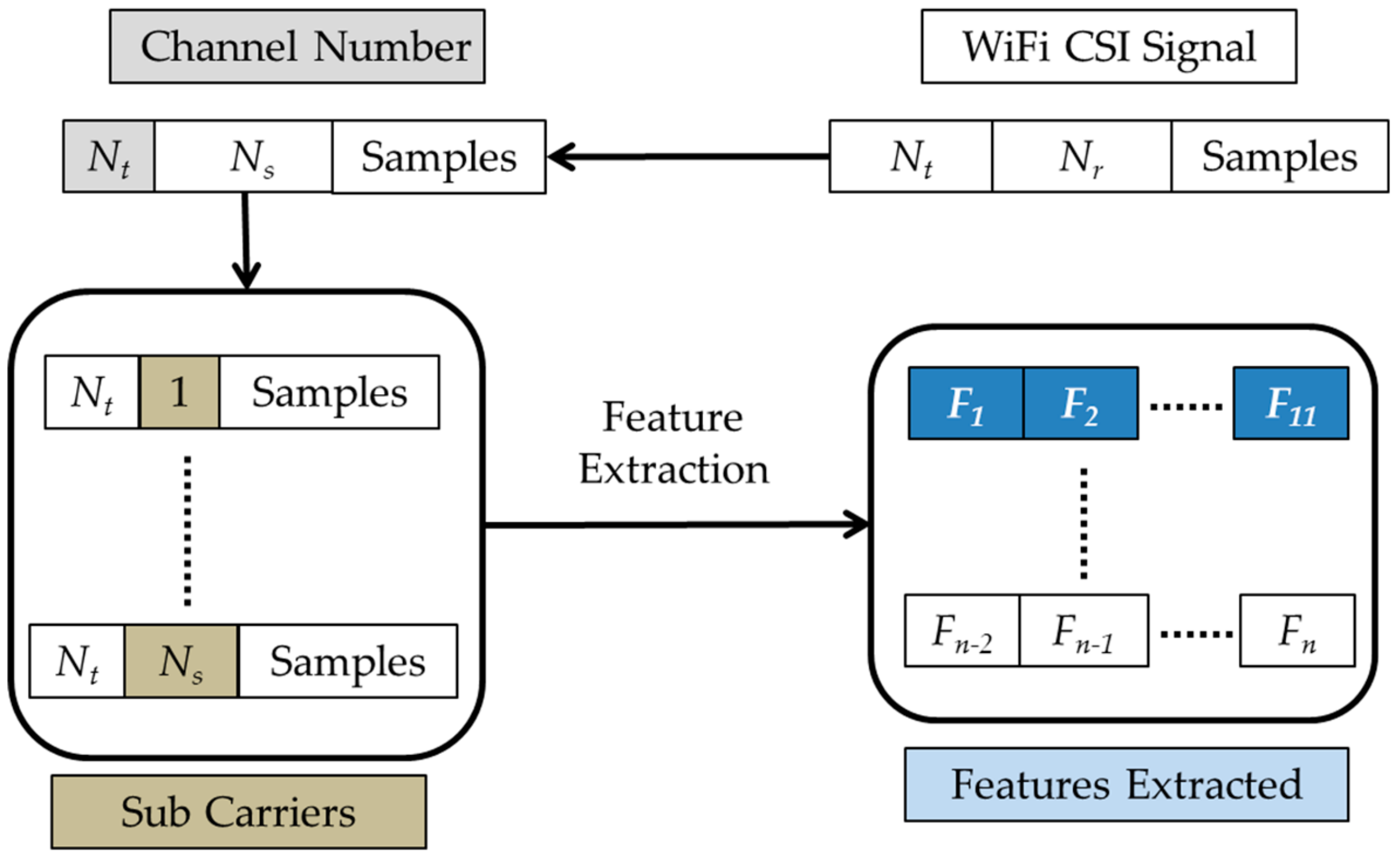

4.1. Feature Extraction

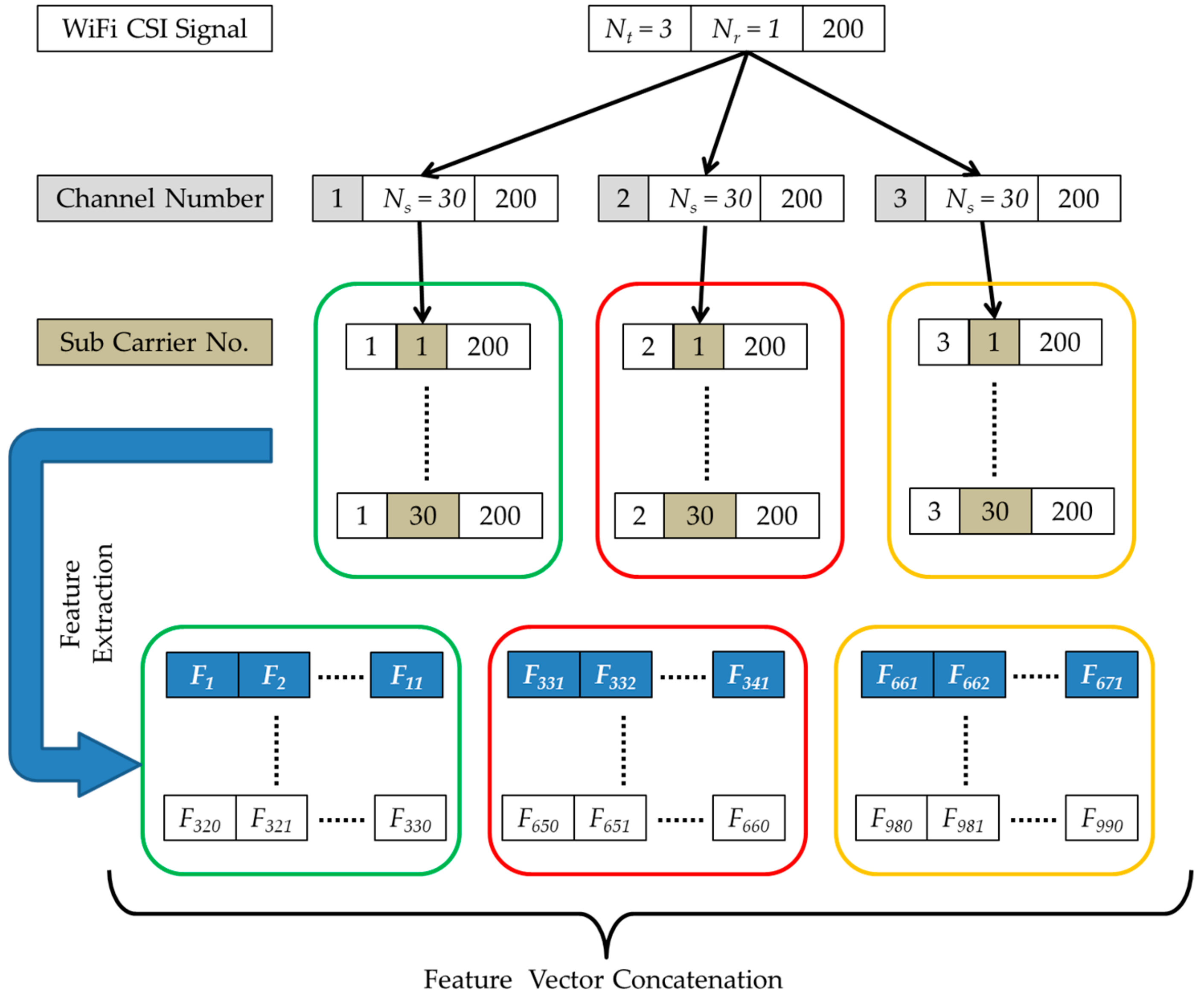

Third Order Cumulants

4.2. Feature Selection

4.3. Human Gesture Recognition

5. Implementation and Evaluation

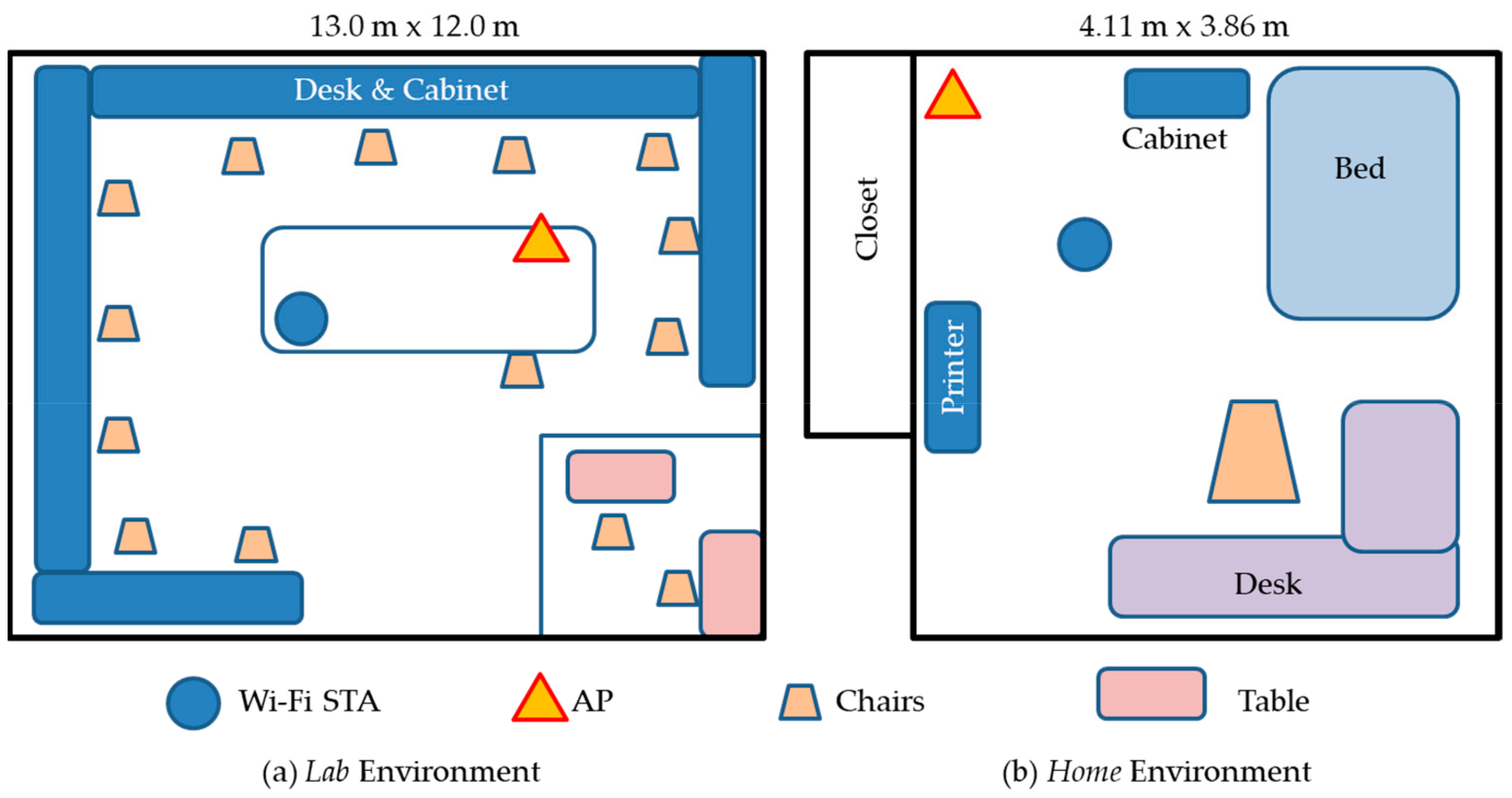

5.1. SignFi Dataset

5.2. Cumulant Feature Extraction

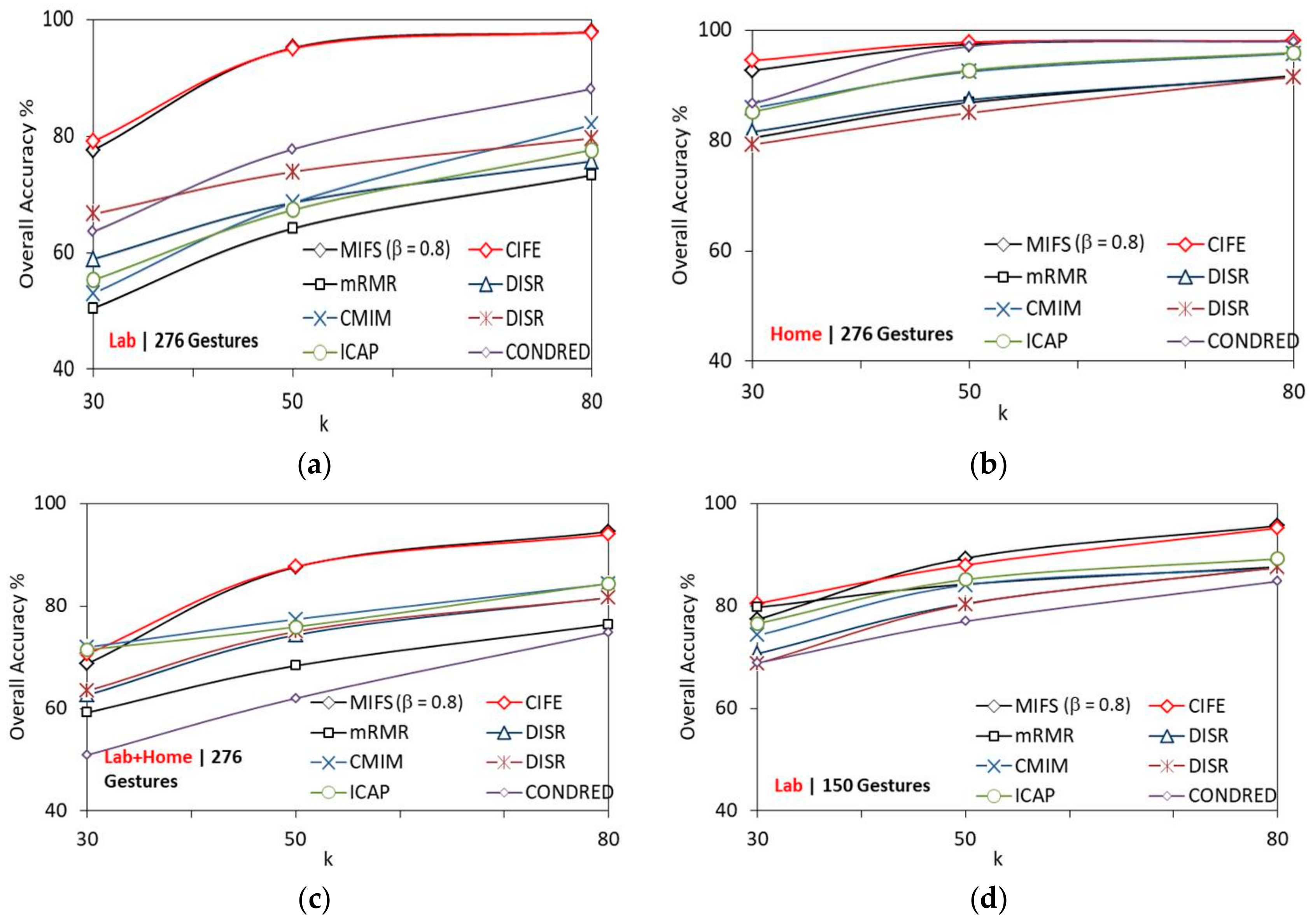

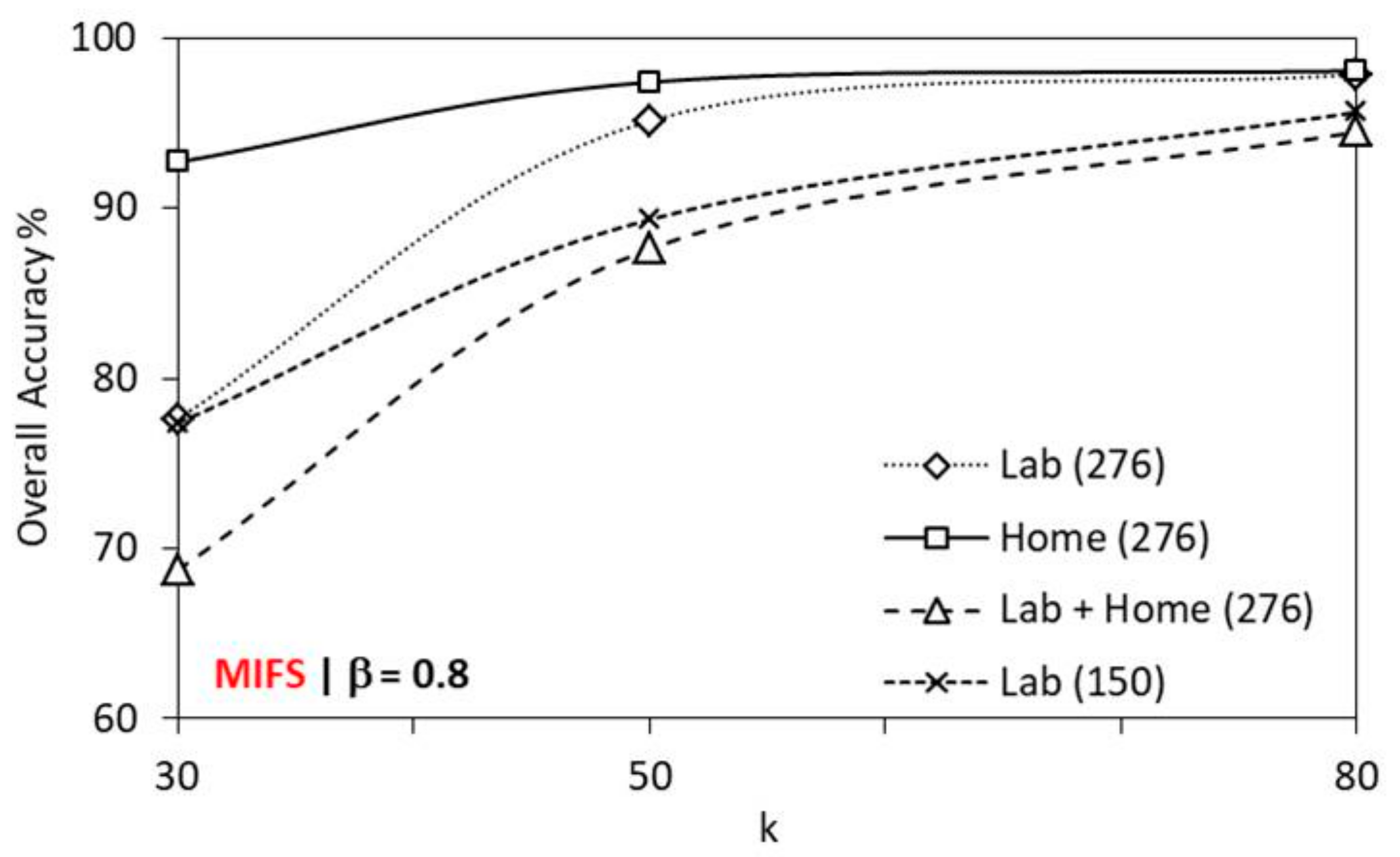

5.3. Feature Selection

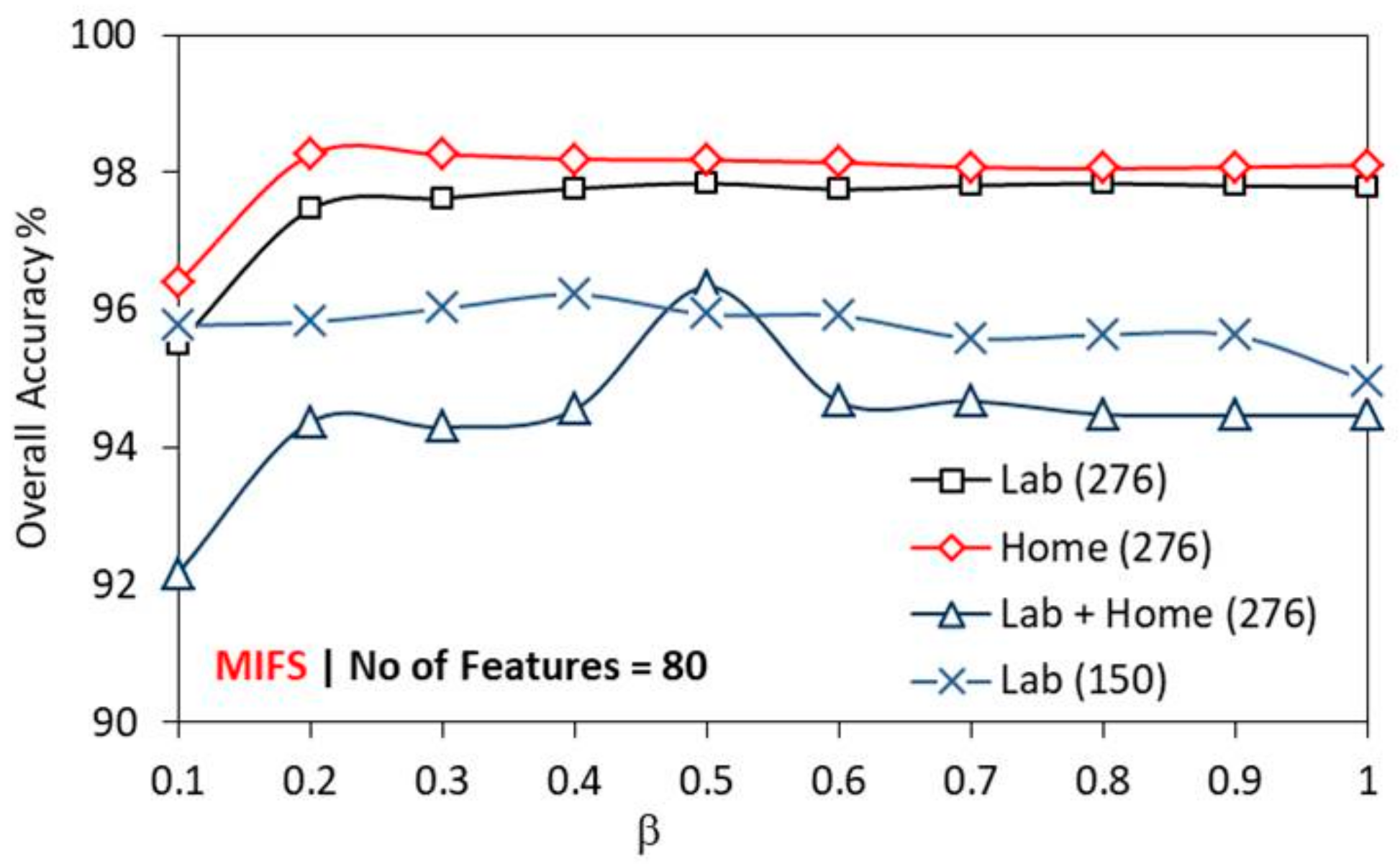

5.3.1. MIFS

- Initialization: set CF ← the initial n cumulant features (Fn CF); set S ← empty set

- For each cf ∈ CF, compute I(GC; cf), the mutual information between the individual feature cf and the output gesture class GC

- Find the cf that maximizes I(GC; cf)

- Set CF ← CF\{cf}; set S ← {cf}

- Greedy selection: repeat the following two steps until |S| = k (where k = 30, 50, or 80)

- Computation of MI between features: for every pair (cf, s) with cf ∈ CF and s ∈ S, compute I(cf; s) if it is not already available

- Selection of the next feature: choose the cf ∈ CF that maximizes I(GC; cf) − β Σ_{s ∈ S} I(cf; s); set CF ← CF\{cf}; set S ← S ∪ {cf}

- Output the set S containing the selected features (a subset of 30, 50, or 80 features)
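The greedy procedure above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' code: continuous features are discretized with equal-width bins so that mutual information can be estimated from joint counts, the redundancy weight `beta=0.5` is an arbitrary default, and the helper names `discretize`, `mutual_information`, and `mifs` are hypothetical.

```python
import numpy as np

def discretize(X, bins=8):
    """Equal-width binning of every column (an assumption made for MI estimation)."""
    X = np.asarray(X, dtype=float)
    cols = [np.digitize(c, np.histogram_bin_edges(c, bins=bins)[1:-1]) for c in X.T]
    return np.stack(cols, axis=1)

def mutual_information(a, b):
    """Mutual information (in nats) between two discrete 1-D arrays."""
    _, ai = np.unique(np.ravel(a), return_inverse=True)
    _, bi = np.unique(np.ravel(b), return_inverse=True)
    ai, bi = ai.ravel(), bi.ravel()
    joint = np.zeros((ai.max() + 1, bi.max() + 1))
    np.add.at(joint, (ai, bi), 1)         # joint counts
    joint /= joint.sum()                  # joint probabilities
    pa = joint.sum(axis=1, keepdims=True)
    pb = joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])))

def mifs(X, y, k, beta=0.5, bins=8):
    """Greedy selection maximizing I(GC; cf) - beta * sum_{s in S} I(cf; s)."""
    Xd = discretize(X, bins)
    remaining = list(range(Xd.shape[1]))
    relevance = {f: mutual_information(Xd[:, f], y) for f in remaining}
    first = max(remaining, key=relevance.get)      # most relevant single feature
    selected = [first]
    remaining.remove(first)
    redundancy = {f: 0.0 for f in remaining}
    while len(selected) < k and remaining:
        last = selected[-1]
        for f in remaining:                        # only the newest pairs are computed
            redundancy[f] += mutual_information(Xd[:, f], Xd[:, last])
        best = max(remaining, key=lambda f: relevance[f] - beta * redundancy[f])
        selected.append(best)
        remaining.remove(best)
    return selected

# Toy data: columns 0 and 1 are identical, column 2 is complementary, column 3 is noise.
y = np.tile([0, 1, 2, 3], 100)
noise = np.random.default_rng(0).normal(size=y.size)
X = np.column_stack([y // 2, y // 2, y % 2, noise]).astype(float)
print(mifs(X, y, k=2))
```

In the toy data the duplicated column carries as much class information as the original, but the redundancy penalty steers the second pick toward the complementary column instead. Setting `beta=0` would reduce the criterion to ranking by relevance alone.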

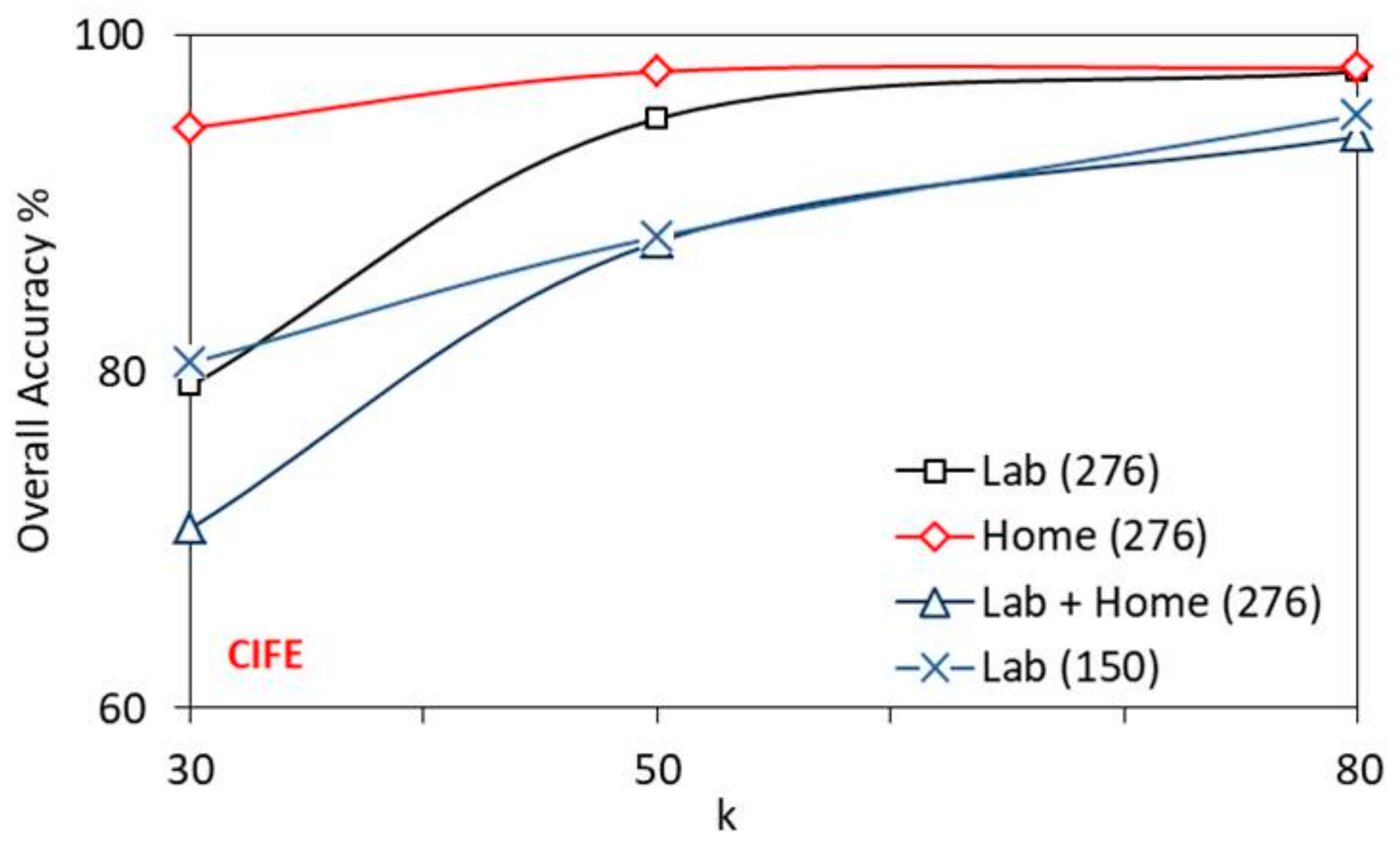

5.3.2. CIFE

5.4. HGR Performance Evaluation

5.4.1. Recognition Accuracy

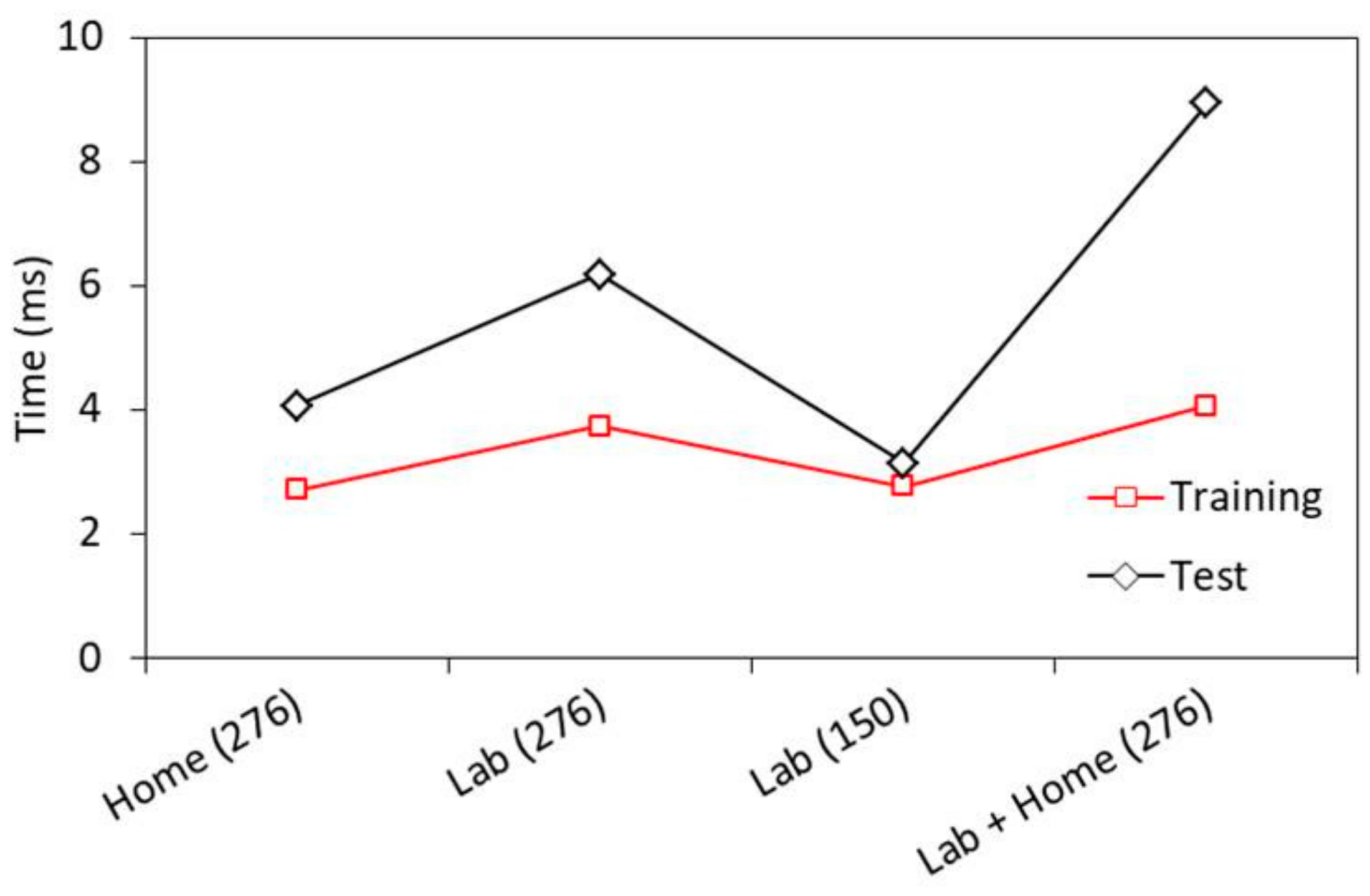

5.4.2. SVM Classifier Execution Time

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

1. Saini, R.; Kumar, P.; Roy, P.P.; Dogra, D.P. A novel framework of continuous human-activity recognition using Kinect. Neurocomputing 2018, 311, 99–111.

2. Kellogg, B.; Talla, V.; Gollakota, S. Bringing gesture recognition to all devices. In Proceedings of the 11th USENIX Symposium on Networked Systems Design and Implementation (NSDI 14), Seattle, WA, USA, 2–4 April 2014; pp. 303–316.

3. Zhao, M.; Adib, F.; Katabi, D. Emotion recognition using wireless signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 95–108.

4. Rana, S.P.; Dey, M.; Ghavami, M.; Dudley, S. Signature Inspired Home Environments Monitoring System Using IR-UWB Technology. Sensors 2019, 19, 385.

5. Li, W.; Tan, B.; Piechocki, R.J. WiFi-based passive sensing system for human presence and activity event classification. IET Wirel. Sens. Syst. 2018, 8, 276–283.

6. Zeng, Y.; Pathak, P.H.; Mohapatra, P. Analyzing shopper’s behavior through wifi signals. In Proceedings of the 2nd Workshop on Physical Analytics, Florence, Italy, 22 May 2015; pp. 13–18.

7. Chereshnev, R.; Kertész-Farkas, A. GaIn: Human gait inference for lower limbic prostheses for patients suffering from double trans-femoral amputation. Sensors 2018, 18, 4146.

8. Yang, J.; Zou, H.; Jiang, H.; Xie, L. Device-Free Occupant Activity Sensing Using WiFi-Enabled IoT Devices for Smart Homes. IEEE Internet Things J. 2018, 5, 3991–4002.

9. Lee, H.; Ahn, C.R.; Choi, N.; Kim, T.; Lee, H. The Effects of Housing Environments on the Performance of Activity-Recognition Systems Using Wi-Fi Channel State Information: An Exploratory Study. Sensors 2019, 19, 983.

10. Xin, T.; Guo, B.; Wang, Z.; Li, M.; Yu, Z.; Zhou, X. Freesense: Indoor human identification with Wi-Fi signals. In Proceedings of the 2016 IEEE Global Communications Conference (GLOBECOM), Washington, DC, USA, 4–8 December 2016; pp. 1–7.

11. Wang, G.; Zou, Y.; Zhou, Z.; Wu, K.; Ni, L.M. We can hear you with wi-fi! IEEE Trans. Mob. Comput. 2016, 15, 2907–2920.

12. Sun, L.; Sen, S.; Koutsonikolas, D.; Kim, K.-H. Widraw: Enabling hands-free drawing in the air on commodity wifi devices. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 77–89.

13. Xiao, F.; Chen, J.; Xie, X.H.; Gui, L.; Sun, J.L.; Wang, R. SEARE: A system for exercise activity recognition and quality evaluation based on green sensing. IEEE Trans. Emerg. Top. Comput. 2018.

14. Di Domenico, S.; De Sanctis, M.; Cianca, E.; Giuliano, F.; Bianchi, G. Exploring training options for RF sensing using CSI. IEEE Commun. Mag. 2018, 56, 116–123.

15. Ali, K.; Liu, A.X.; Wang, W.; Shahzad, M. Keystroke recognition using wifi signals. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 90–102.

16. Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Device-free human activity recognition using commercial WiFi devices. IEEE J. Sel. Areas Commun. 2017, 35, 1118–1131.

17. Xu, Y.; Yang, W.; Wang, J.; Zhou, X.; Li, H.; Huang, L. WiStep: Device-free Step Counting with WiFi Signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1.

18. Dang, X.; Si, X.; Hao, Z.; Huang, Y. A Novel Passive Indoor Localization Method by Fusion CSI Amplitude and Phase Information. Sensors 2019, 19, 875.

19. Zhuo, Y.; Zhu, H.; Xue, H. Identifying a new non-linear CSI phase measurement error with commodity WiFi devices. In Proceedings of the 2016 IEEE 22nd International Conference on Parallel and Distributed Systems (ICPADS), Wuhan, China, 13–16 December 2016; pp. 72–79.

20. Battiti, R. Using mutual information for selecting features in supervised neural net learning. IEEE Trans. Neural Networks 1994, 5, 537–550.

21. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 1226–1238.

22. Nguyen, X.V.; Chan, J.; Romano, S.; Bailey, J. Effective global approaches for mutual information based feature selection. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 512–521.

23. Lin, D.; Tang, X. Conditional infomax learning: An integrated framework for feature extraction and fusion. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 68–82.

24. Yang, H.; Moody, J. Feature Selection Based on Joint Mutual Information. Available online: https://pdfs.semanticscholar.org/dd69/1540c3f28decb477a7738f16aa92709b0f59.pdf (accessed on 28 June 2019).

25. Fleuret, F. Fast binary feature selection with conditional mutual information. J. Mach. Learn. Res. 2004, 5, 1531–1555.

26. Meyer, P.E.; Bontempi, G. On the Use of Variable Complementarity for Feature Selection in Cancer Classification. Available online: https://www.semanticscholar.org/paper/On-the-Use-of-Variable-Complementarity-for-Feature-Meyer-Bontempi/d72ff5063520ce4542d6d9b9e6a4f12aafab6091 (accessed on 28 June 2019).

27. Jakulin, A. Machine Learning Based on Attribute Interactions. Ph.D. Thesis, Univerza v Ljubljani, Ljubljana, Slovenia, 2005.

28. Brown, G.; Pocock, A.; Zhao, M.-J.; Luján, M. Conditional likelihood maximisation: A unifying framework for information theoretic feature selection. J. Mach. Learn. Res. 2012, 13, 27–66.

29. Ma, Y.; Zhou, G.; Wang, S.; Zhao, H.; Jung, W. Signfi: Sign language recognition using wifi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 23.

30. Kanokoda, T.; Kushitani, Y.; Shimada, M.; Shirakashi, J.-I. Gesture Prediction Using Wearable Sensing Systems with Neural Networks for Temporal Data Analysis. Sensors 2019, 19, 710.

31. Shukor, A.Z.; Miskon, M.F.; Jamaluddin, M.H.; bin Ali, F.; Asyraf, M.F.; bin Bahar, M.B. A new data glove approach for Malaysian sign language detection. Procedia Comput. Sci. 2015, 76, 60–67.

32. Goswami, P.; Rao, S.; Bharadwaj, S.; Nguyen, A. Real-Time Multi-Gesture Recognition using 77 GHz FMCW MIMO Single Chip Radar. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–13 January 2019; pp. 1–4.

33. Tan, S.; Yang, J. WiFinger: Leveraging commodity WiFi for fine-grained finger gesture recognition. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Paderborn, Germany, 5–8 July 2016; pp. 201–210.

34. Abdelnasser, H.; Youssef, M.; Harras, K.A. Wigest: A ubiquitous wifi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Kowloon, Hong Kong, 26 April–1 May 2015; pp. 1472–1480.

35. Wang, H.; Zhang, D.; Wang, Y.; Ma, J.; Wang, Y.; Li, S. RT-Fall: A real-time and contactless fall detection system with commodity WiFi devices. IEEE Trans. Mob. Comput. 2017, 16, 511–526.

36. Al-qaness, M.A.A.; Li, F. WiGeR: WiFi-Based Gesture Recognition System. ISPRS Int. J. Geo-Inf. 2016, 5, 92.

37. Tian, Z.; Wang, J.; Yang, X.; Zhou, M. WiCatch: A Wi-Fi Based Hand Gesture Recognition System. IEEE Access 2018, 6, 16911–16923.

38. Zhang, O.; Srinivasan, K. Mudra: User-friendly Fine-grained Gesture Recognition using WiFi Signals. In Proceedings of the 12th International Conference on emerging Networking EXperiments and Technologies, Irvine, CA, USA, 12–15 December 2016; pp. 83–96.

39. Zhou, R.; Lu, X.; Zhao, P.; Chen, J. Device-free presence detection and localization with SVM and CSI fingerprinting. IEEE Sens. J. 2017, 17, 7990–7999.

40. Hong, F.; Wang, X.; Yang, Y.; Zong, Y.; Zhang, Y.; Guo, Z. WFID: Passive device-free human identification using WiFi signal. In Proceedings of the 13th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Hiroshima, Japan, 28 November–1 December 2016; pp. 47–56.

41. Zhao, J.; Liu, L.; Wei, Z.; Zhang, C.; Wang, W.; Fan, Y. R-DEHM: CSI-Based Robust Duration Estimation of Human Motion with WiFi. Sensors 2019, 19, 1421.

42. Zhu, H.; Xiao, F.; Sun, L.; Wang, R.; Yang, P. R-TTWD: Robust device-free through-the-wall detection of moving human with WiFi. IEEE J. Sel. Areas Commun. 2017, 35, 1090–1103.

43. Palipana, S.; Rojas, D.; Agrawal, P.; Pesch, D. FallDeFi: Ubiquitous fall detection using commodity Wi-Fi devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 155.

44. Jia, W.; Peng, H.; Ruan, N.; Tang, Z.; Zhao, W. WiFind: Driver fatigue detection with fine-grained Wi-Fi signal features. IEEE Trans. Big Data 2018.

45. Zhang, Z.; Ishida, S.; Tagashira, S.; Fukuda, A. Danger-pose detection system using commodity Wi-Fi for bathroom monitoring. Sensors 2019, 19, 884.

46. Fang, B.; Lane, N.D.; Zhang, M.; Boran, A.; Kawsar, F. BodyScan: Enabling radio-based sensing on wearable devices for contactless activity and vital sign monitoring. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, Singapore, 26–30 June 2016; pp. 97–110.

47. Gong, L.; Yang, W.; Man, D.; Dong, G.; Yu, M.; Lv, J. WiFi-based real-time calibration-free passive human motion detection. Sensors 2015, 15, 32213–32229.

48. Gong, L.; Yang, W.; Zhou, Z.; Man, D.; Cai, H.; Zhou, X.; Yang, Z. An adaptive wireless passive human detection via fine-grained physical layer information. Ad Hoc Netw. 2016, 38, 38–50.

49. Gao, Q.; Wang, J.; Ma, X.; Feng, X.; Wang, H. CSI-based device-free wireless localization and activity recognition using radio image features. IEEE Trans. Veh. Technol. 2017, 66, 10346–10356.

50. Wang, W.; Chen, Y.; Zhang, Q. Privacy-preserving location authentication in Wi-Fi networks using fine-grained physical layer signatures. IEEE Trans. Wirel. Commun. 2016, 15, 1218–1225.

51. Wang, Y.; Jiang, X.; Cao, R.; Wang, X. Robust indoor human activity recognition using wireless signals. Sensors 2015, 15, 17195–17208.

52. Zhang, J.; Wei, B.; Hu, W.; Kanhere, S.S. WiFi-ID: Human identification using wifi signal. In Proceedings of the 2016 International Conference on Distributed Computing in Sensor Systems (DCOSS), Washington, DC, USA, 26–28 May 2016; pp. 75–82.

53. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2012.

54. De Sanctis, M.; Cianca, E.; Di Domenico, S.; Provenziani, D.; Bianchi, G.; Ruggieri, M. Wibecam: Device free human activity recognition through wifi beacon-enabled camera. In Proceedings of the 2nd Workshop on Physical Analytics, Florence, Italy, 22 May 2015; pp. 7–12.

55. Mendel, J.M. Tutorial on higher-order statistics (spectra) in signal processing and system theory: Theoretical results and some applications. Proc. IEEE 1991, 79, 278–305.

56. Swami, A.; Mendel, J.M.; Nikias, C.L. Higher Order Spectral Analysis Toolbox, for Use with MATLAB; The MathWorks Inc.: Natick, MA, USA, 1998.

57. Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

58. Caselli, N.K.; Sehyr, Z.S.; Cohen-Goldberg, A.M.; Emmorey, K. ASL-LEX: A lexical database of American Sign Language. Behav. Res. Methods 2017, 49, 784–801.

59. Halperin, D.; Hu, W.; Sheth, A.; Wetherall, D. Linux 802.11 n CSI Tool. 2010. Available online: http://dhalperi.github.io/linux-80211n-csitool (accessed on 2 December 2016).

60. Kumar, S.; Cifuentes, D.; Gollakota, S.; Katabi, D. Bringing cross-layer MIMO to today’s wireless LANs. In Proceedings of the ACM SIGCOMM Computer Communication Review, Hong Kong, China, 12–16 August 2013; pp. 387–398.

61. Harkat, H.; Ruano, A.; Ruano, M.; Bennani, S. GPR target detection using a neural network classifier designed by a multi-objective genetic algorithm. Appl. Soft Comput. 2019, 79, 310–325.

62. Hoque, N.; Bhattacharyya, D.K.; Kalita, J.K. MIFS-ND: A mutual information-based feature selection method. Expert Syst. Appl. 2014, 41, 6371–6385.

63. Mariello, A.; Battiti, R. Feature Selection Based on the Neighborhood Entropy. IEEE Trans. Neural Netw. Learn. Syst. 2018, 1–10.

Sample Availability: SignFi dataset: CSI traces, labels, and videos of the 276 sign words are made available by the authors at https://yongsen.github.io/SignFi/ for download.

| Gesture Class | Count of Samples | Description |
|---|---|---|
| Common | 16 | ‘FINISH’, ‘GO’, ‘HELP’, ‘LIKE’, ‘LOVE’, ‘MORE’, ‘NEED’, ‘NO’, ‘NOT’, ‘PLEASE’, ‘RIGHT’, ‘SORRY’, ‘WANT’, ‘WITH’, ‘WITHOUT’, ‘YES’ |
| Animals | 15 | ‘ANIMAL’, ‘BEAR’, ‘BIRD’, ‘CAT’, ‘COW’, ‘DOG’, ‘ELEPHANT’, ‘GIRAFFE’, ‘HORSE’, ‘LION’, ‘MONKEY’, ‘PET’, ‘RAT’, ‘SNAKE’, ‘TIGER’ |
| Colors | 12 | ‘BLACK’, ‘BLUE’, ‘BROWN’, ‘COLOR’, ‘GRAY’, ‘GREEN’, ‘ORANGE’, ‘PINK’, ‘PURPLE’, ‘RED’, ‘WHITE’, ‘YELLOW’ |
| Descriptions | 32 | ‘AWFUL’, ‘BAD’, ‘BALD’, ‘BEAUTIFUL’, ‘CLEAN’, ‘COLD’, ‘CUTE’, ‘DEPRESSED’, ‘DIFFERENT’, ‘EXCITED’, ‘FINE’, ‘GOOD’, … |
| Family | 31 | ‘AUNT’, ‘BABY’, ‘BOYFRIEND’, ‘BROTHER’, ‘CHILDREN’, ‘COUSIN (FEMALE)’, ‘COUSIN (MALE)’, ‘DAUGHTER’, ‘DIVORCE’, ‘FAMILY’, ‘FATHER’, ‘FRIEND’, … |
| Food | 54 | ‘DELICIOUS’, ‘DINNER’, ‘DRINK’, ‘EAT’, ‘EGGS’, ‘FISH’, ‘FOOD’, ‘FRUIT’, ‘GRAPES’, ‘HAMBURGER’, ‘HOTDOG’, ‘ICECREAM’, ‘KETCHUP’, ‘LEMON’, … |
| Home | 17 | ‘APARTMENT’, ‘BATHROOM’, ‘BICYCLE’, ‘BUY’, ‘CAR’, ‘CLEAN’, ‘DOOR’, ‘GARAGE’, ‘GO-IN/ENTER’, ‘HOME’, ‘HOUSE’, ‘KITCHEN’, ‘ROOM’, ‘SHOWER’, ‘SOFA’, ‘TELEPHONE’, ‘TOILET’ |
| People | 13 | ‘ASK-YOU’, ‘BOY’, ‘DEAF’, ‘GIRL’, ‘GIVE-YOU’, ‘HEARING’, ‘I/ME’, ‘MAN’, ‘MY/MINE’, ‘WOMAN’, ‘WORK’, ‘YOU’, ‘YOUR’ |
| Questions | 6 | ‘HOW’, ‘WHAT’, ‘WHEN’, ‘WHERE’, ‘WHO’, ‘WHY’ |
| School | 26 | ‘BOOK’, ‘BUS’, ‘CLASS’, ‘COLLEGE’, ‘DORM’, ‘DRAW’, ‘ELEMENTARY’, ‘ENGLISH’, ‘HIGH-SCHOOL’, ‘HISTORY’, ‘KNOW’, … |
| Time | 31 | ‘AFTER’, ‘AFTERNOON’, ‘AGAIN’, ‘ALWAYS’, ‘BEFORE’, ‘DAY’, ‘FRIDAY’, ‘FUTURE’, ‘HOUR’, ‘LAST/PAST’, ‘MIDNIGHT’, … |
| Others | 23 | ‘AIRCONDITIONER’, ‘BODY’, ‘LEG’, ‘FAN’, ‘RADIO’, ‘TV’, ‘REFRIGERATOR’, ‘BROWSER’, ‘WINDOW’, ‘WASHER’, ‘COMPUTER’, … |

| Algorithm | Description |
|---|---|
| MIFS [20] | A greedy approach that selects only highly informative features to form the feature subset. It captures the non-linear relationship between each selected feature and the output class, reducing redundancy and uncertainty in the feature vector [61,62,63]. |
| mRMR [21,22] | An incremental feature selection algorithm that forms the feature subset by selecting features with minimum redundancy and maximum relevance. |
| CIFE [23] | Forms a feature subset that maximizes the class-relevant information carried by the features while reducing the redundancy among them. |
| JMI [24] | Extends mutual information with conditional mutual information to define the joint mutual information among the features, and eliminates redundant features, if any. |
| CMI [25] | Selects a feature only if it carries additional information that eases prediction of the output class. |
| DISR [26] | Measures symmetrical relevance and combines feature variables to describe more information about the output class, instead of focusing on the information in individual features. |
| ICAP [27] | Selects features based on their interactions, which capture the regularities of the feature set. |
| CONDRED [28] | Identifies the conditional redundancy that exists between the features. |
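
Several of the criteria in the table above can be read as special cases of one scoring rule, following the unifying view of Brown et al. [28]. The expression below is a sketch in the notation used for MIFS in Section 5.3.1 (candidate feature cf, selected set S, gesture class GC); the weights β and γ are the only quantities that change between methods, and the parameter values listed are those commonly given in that framework rather than settings reported for HOS-Re.

$$J(cf) = I(GC; cf) - \beta \sum_{s \in S} I(cf; s) + \gamma \sum_{s \in S} I(cf; s \mid GC)$$

$$\text{MIFS: } \beta \in [0, 1],\ \gamma = 0 \qquad \text{mRMR: } \beta = \tfrac{1}{|S|},\ \gamma = 0 \qquad \text{CIFE: } \beta = \gamma = 1 \qquad \text{JMI: } \beta = \gamma = \tfrac{1}{|S|}$$

The second term penalizes redundancy with features that are already selected, while the third term restores credit when that shared information is still useful given the class.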

| Dataset | Number of Gestures (Ng) | Number of Users (Nu) | Number of Samples per Gesture per User (Nsu) | Total Number of Gesture Samples (Ngs) | Number of Samples per User | Overall Recognition Accuracy (%): SignFi + CNN without SP [29] | Overall Recognition Accuracy (%): SignFi + CNN with SP [29] | Overall Recognition Accuracy (%): Present Work without SP (HOS-Re) |
|---|---|---|---|---|---|---|---|---|
| Lab 276 | 276 | 1 | 20 | 5520 | 5520 | 95.72 | 98.01 | 97.84 |
| Home 276 | 276 | 1 | 10 | 2760 | 2760 | 93.98 | 98.91 | 98.26 |
| Lab + Home 276 | 276 | 1 | - | 8280 | 8280 | 92.21 | 94.81 | 96.34 |
| Lab 150 | 150 | 5 | 10 | 7500 | 1500 | - | 86.66 | 96.23 |

| Reference / Sensing Metric | Signal Processing (SP) | Learning Algorithm | Application | Number of Gestures | Recognition Accuracy |
|---|---|---|---|---|---|
| Wigest [34] / RSSI | Wavelet filter; FFT, DWT; threshold-based signal extraction | Pattern matching | Hand gesture recognition: hand movements with mobile device | 7 hand gestures | 87.5% / 96% (1 AP / 3 APs) |
| WiGeR [36] / CSI | Butterworth low-pass filter | Segmentation: multi-level wavelet decomposition algorithm and the short-time energy algorithm, DTW | Hand gesture recognition | 7 hand gestures | 97.28%, 91.8%, 95.5%, 94.4%, and 91% (Scenarios 1 to 5) |
| WiCatch [37] / CSI | MUSIC algorithm | SVM | Two-hand moving trajectory recognition | 9 hand gestures | 95% |
| WiFinger [33] / RSSI and CSI | Butterworth filter, wavelet-based denoising, and PCA | DTW | Finger gesture recognition | 8 finger gestures | 76% (RSSI) and 95% (CSI) |
| WiKey [15] / CSI | Low-pass filter, PCA, DWT shape features | DTW | Keystroke recognition | 37 keys | 77.4% to 93.4% |
| Mudra [38] / CSI | Thresholding | Stretch-limited DTW | Mudra recognition | 9 finger gestures | 96% |
| SignFi [29] / CSI | Without SP | CNN | Sign language gesture recognition | 276 gestures | 95.72%, 93.98%, and 92.21% for the lab 276, home 276, and lab + home 276 environments, respectively |
| SignFi [29] / CSI | With SP: multiple linear regression | CNN | Sign language gesture recognition | 276 gestures | 98.01%, 98.91%, 94.81%, and 86.66% for the lab 276, home 276, lab + home 276, and lab 150 environments, respectively |
| HOS-Re (present work) | Without SP; cumulant features | SVM | Sign language gesture recognition | 276 gestures | 97.84%, 98.26%, 96.34%, and 96.23% for the lab 276, home 276, lab + home 276, and lab 150 environments, respectively |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Farhana Thariq Ahmed, H.; Ahmad, H.; Phang, S.K.; Vaithilingam, C.A.; Harkat, H.; Narasingamurthi, K. Higher Order Feature Extraction and Selection for Robust Human Gesture Recognition using CSI of COTS Wi-Fi Devices. Sensors 2019, 19, 2959. https://doi.org/10.3390/s19132959
