A New Approach to Fall Detection Based on Improved Dual Parallel Channels Convolutional Neural Network

Abstract

1. Introduction

2. sEMG Signal Acquisition and Preprocessing

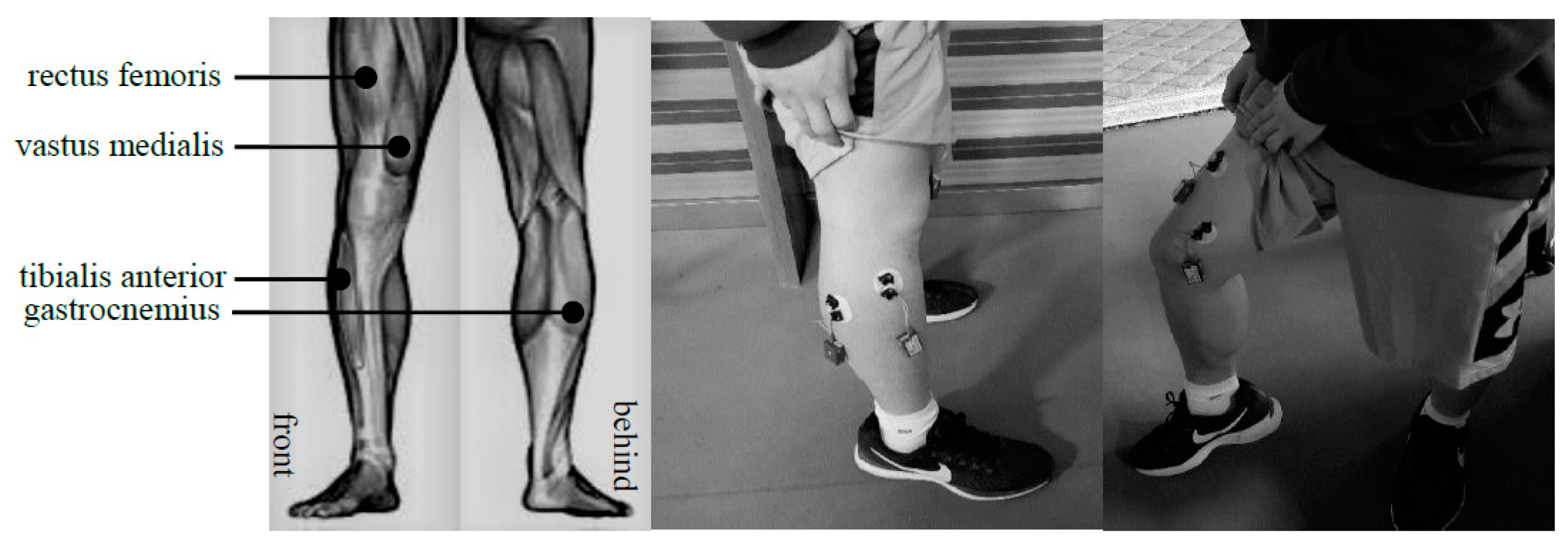

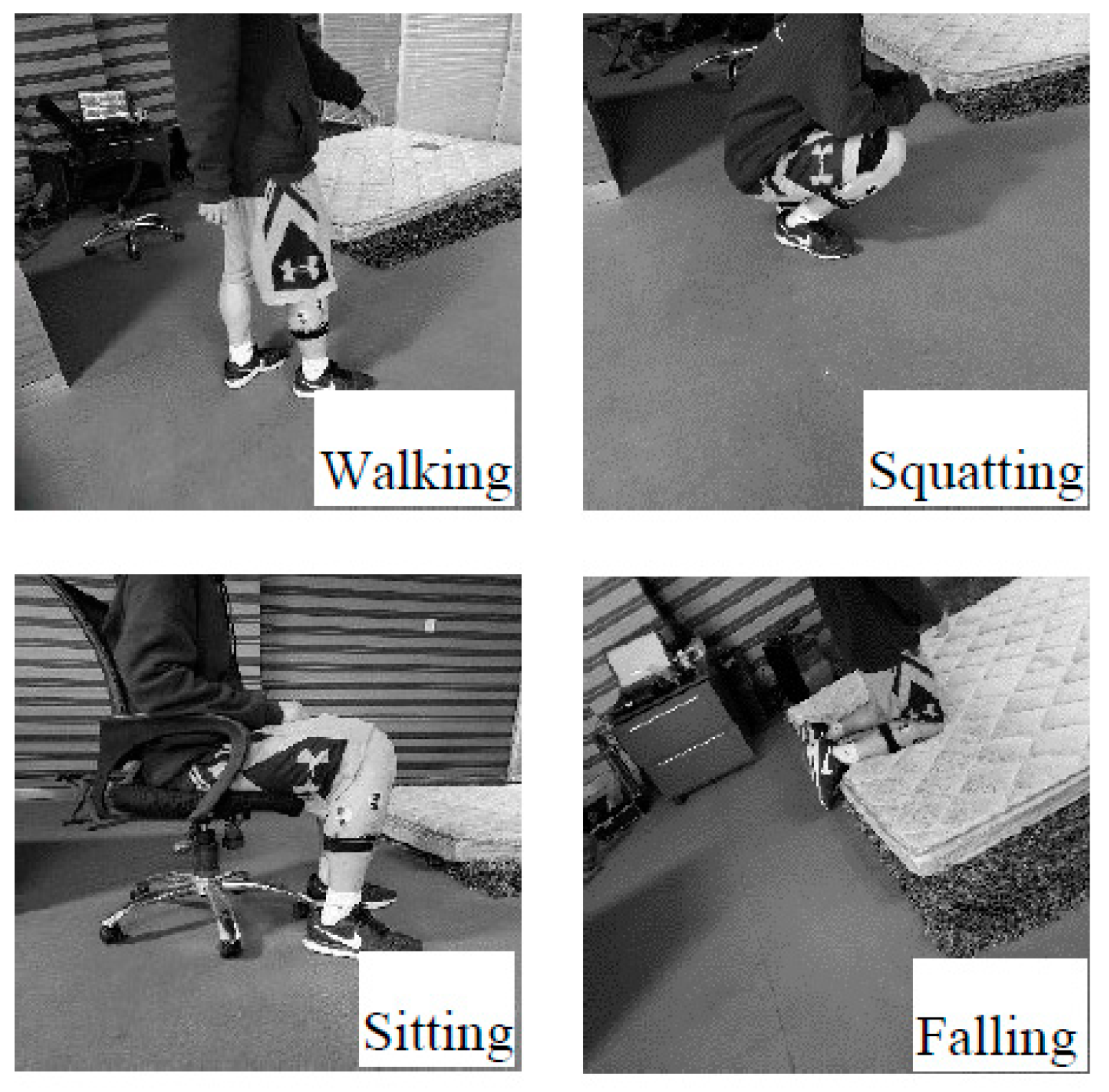

2.1. sEMG Acquisition

2.2. Signal Preprocessing

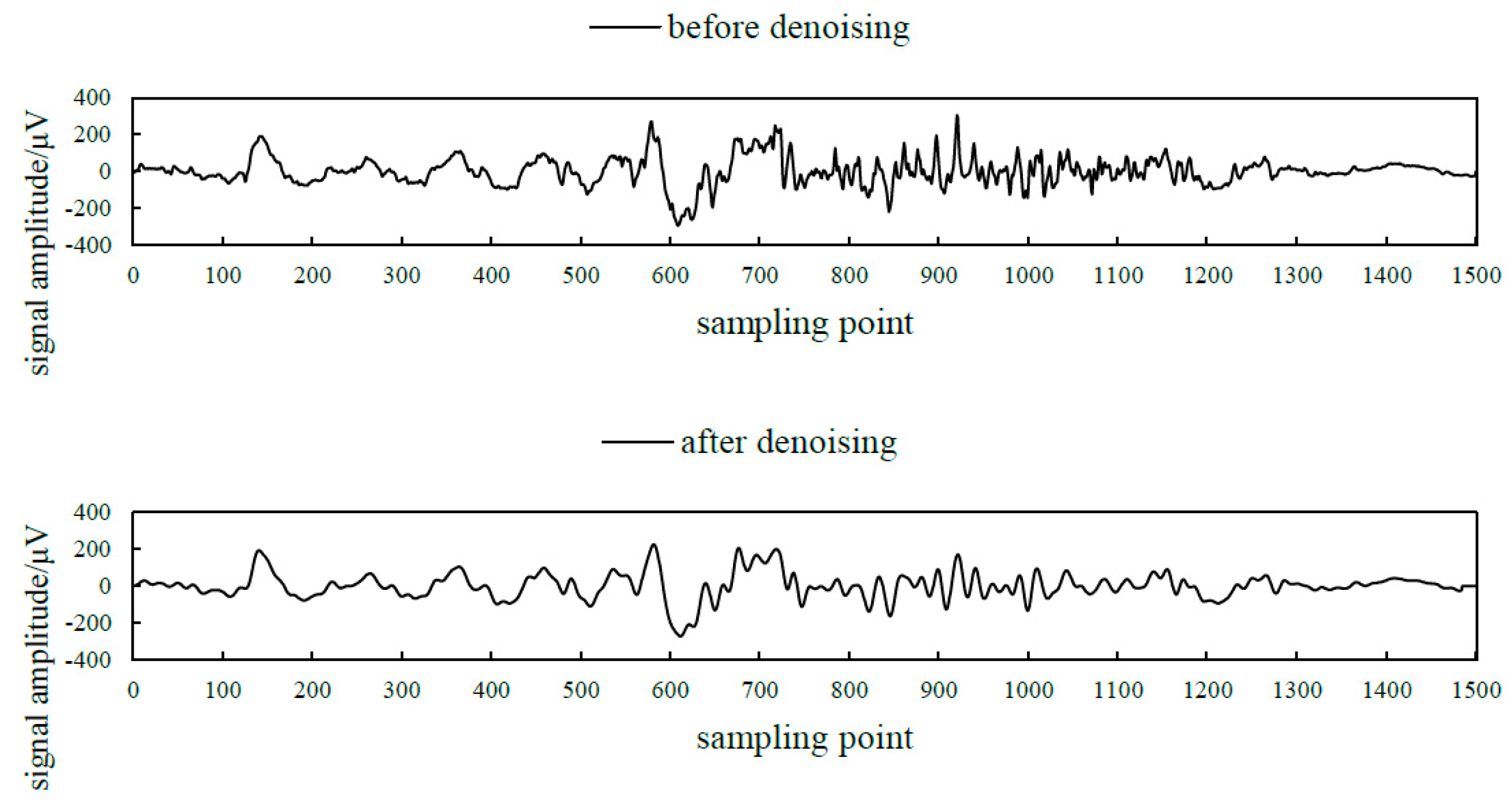

2.2.1. Signal Denoising

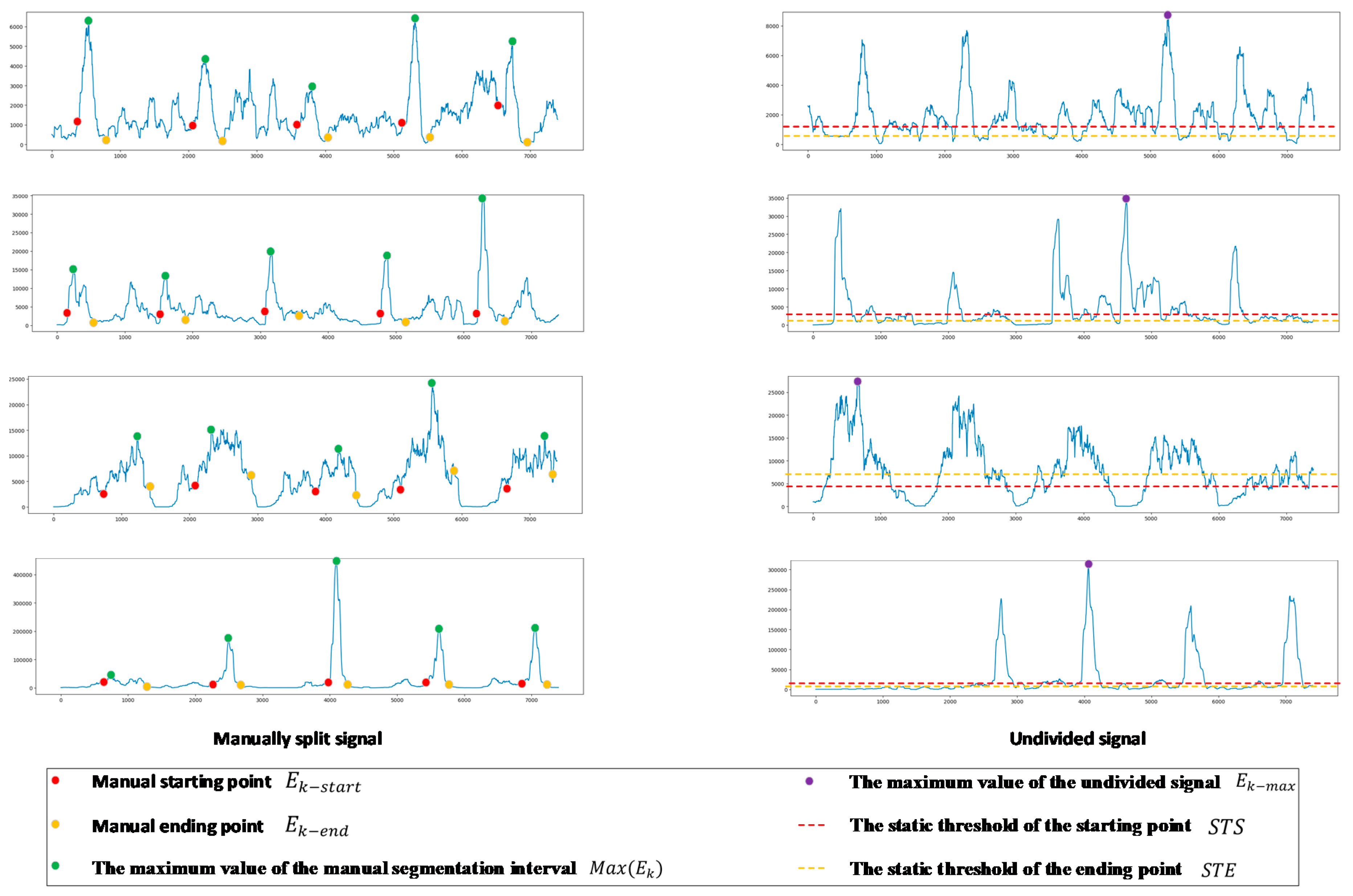

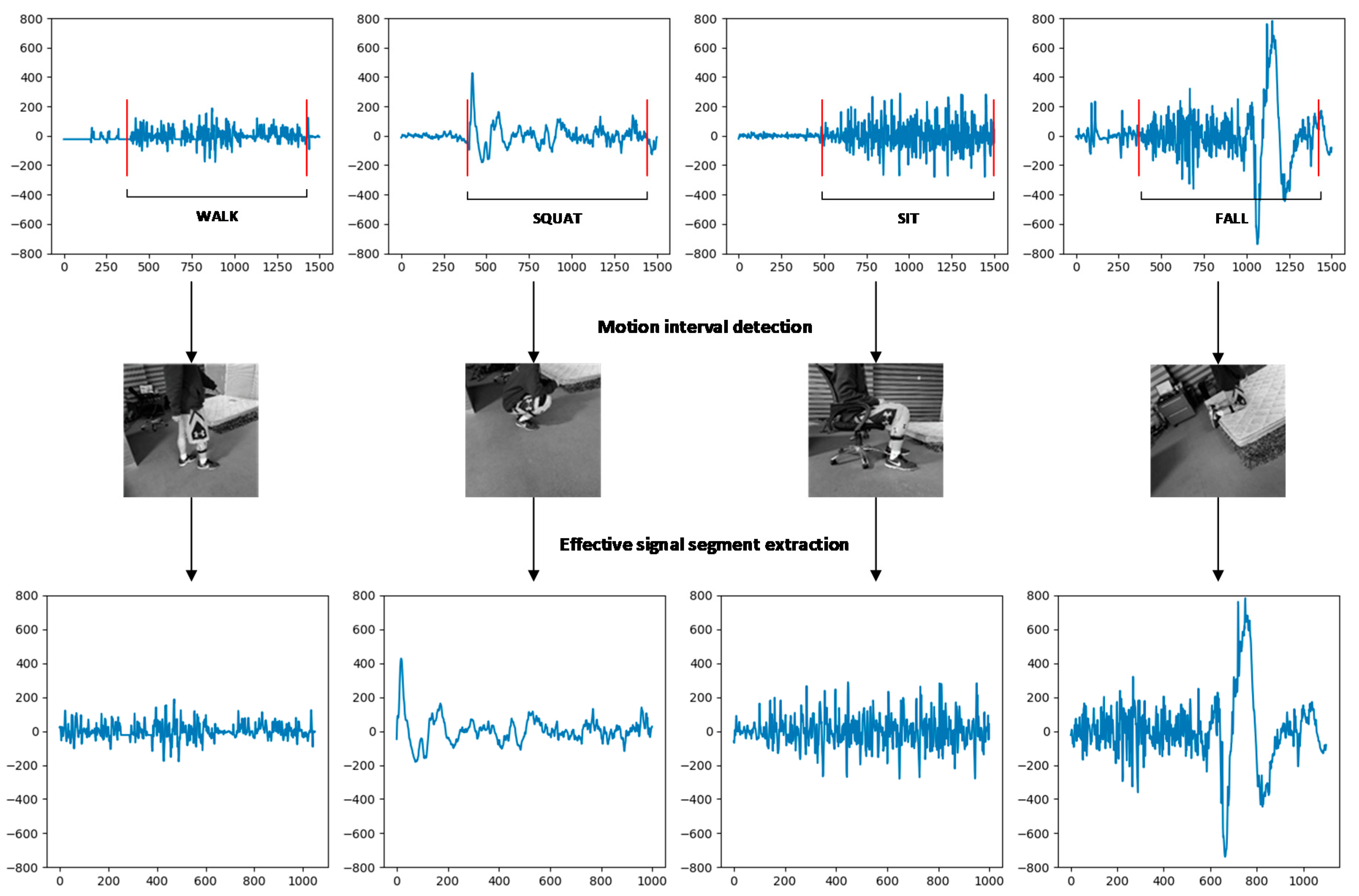

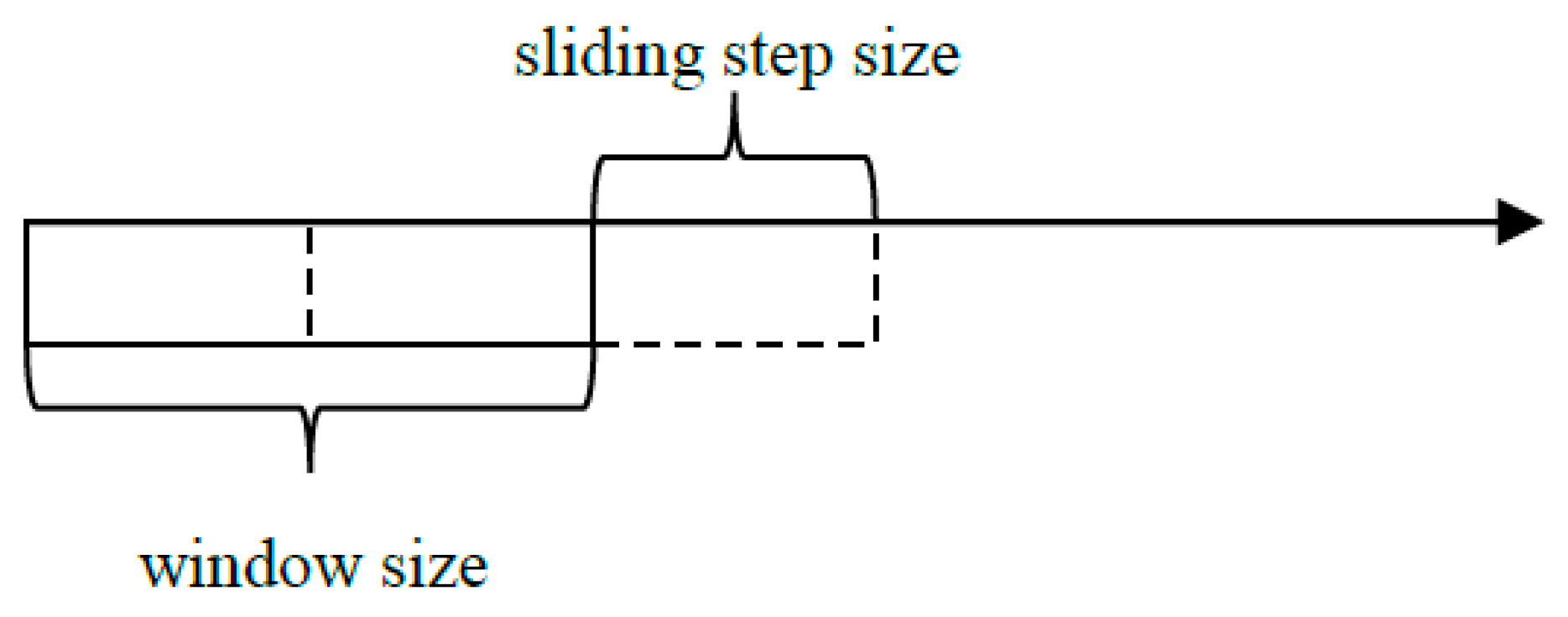

2.2.2. Extraction of Effective Signal Segment

3. sEMG Feature Selection and Fall Detection Method

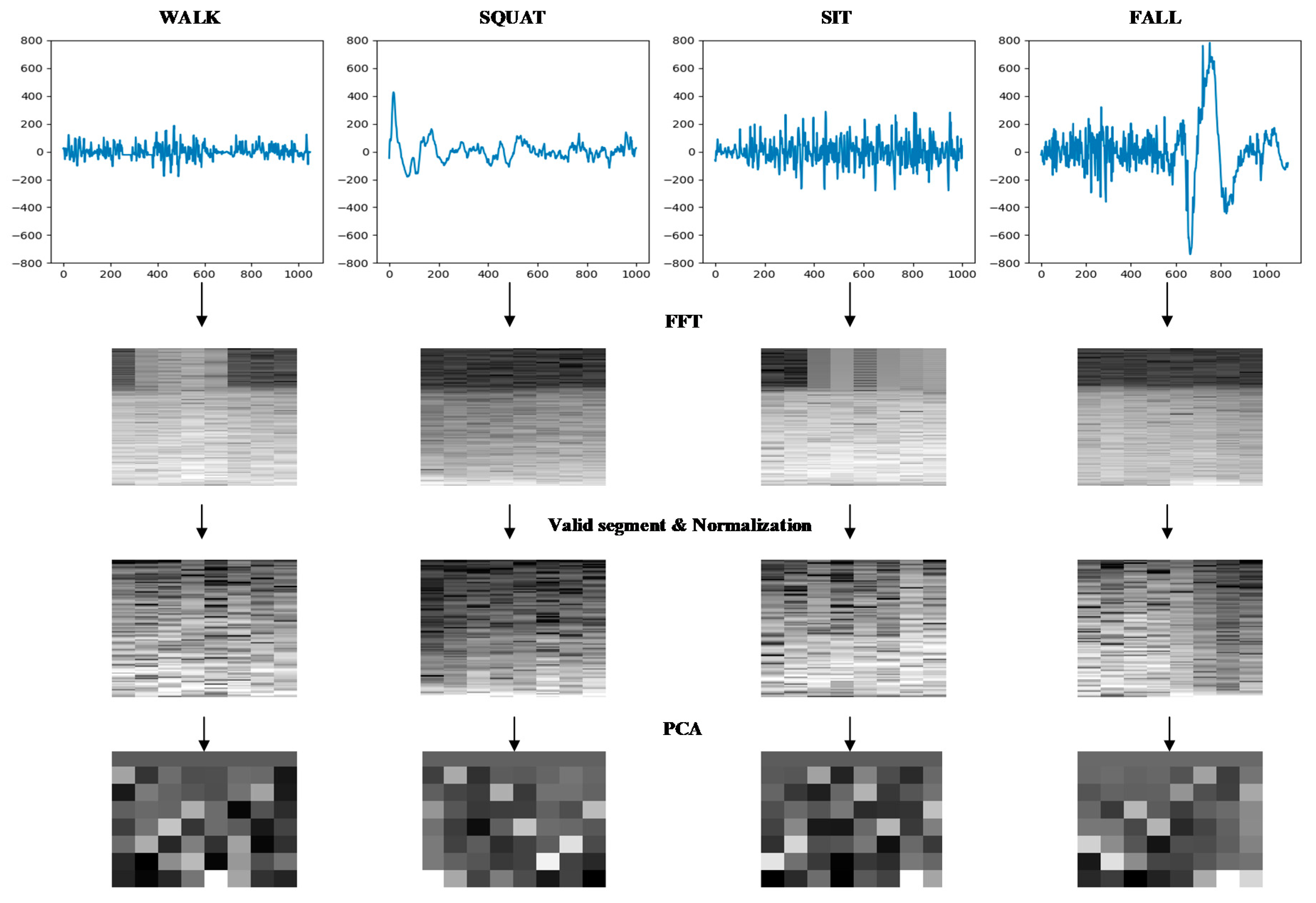

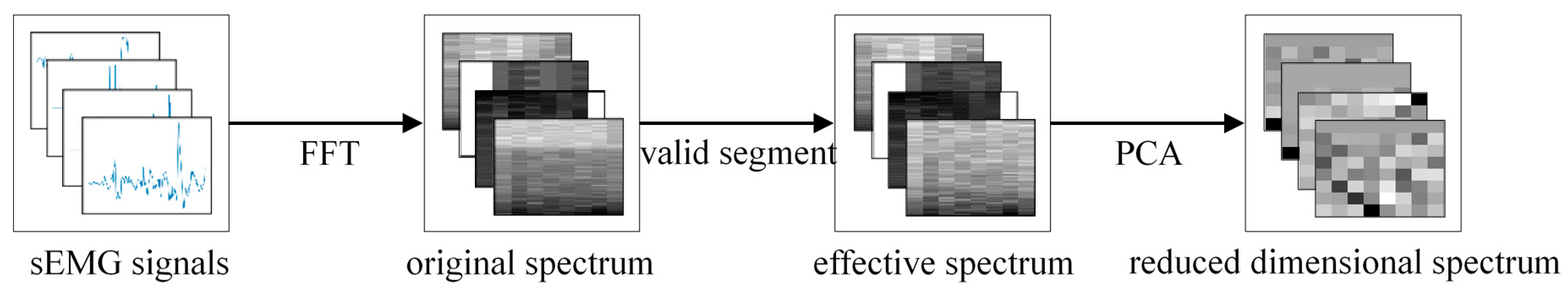

3.1. sEMG Spectrogram Extraction

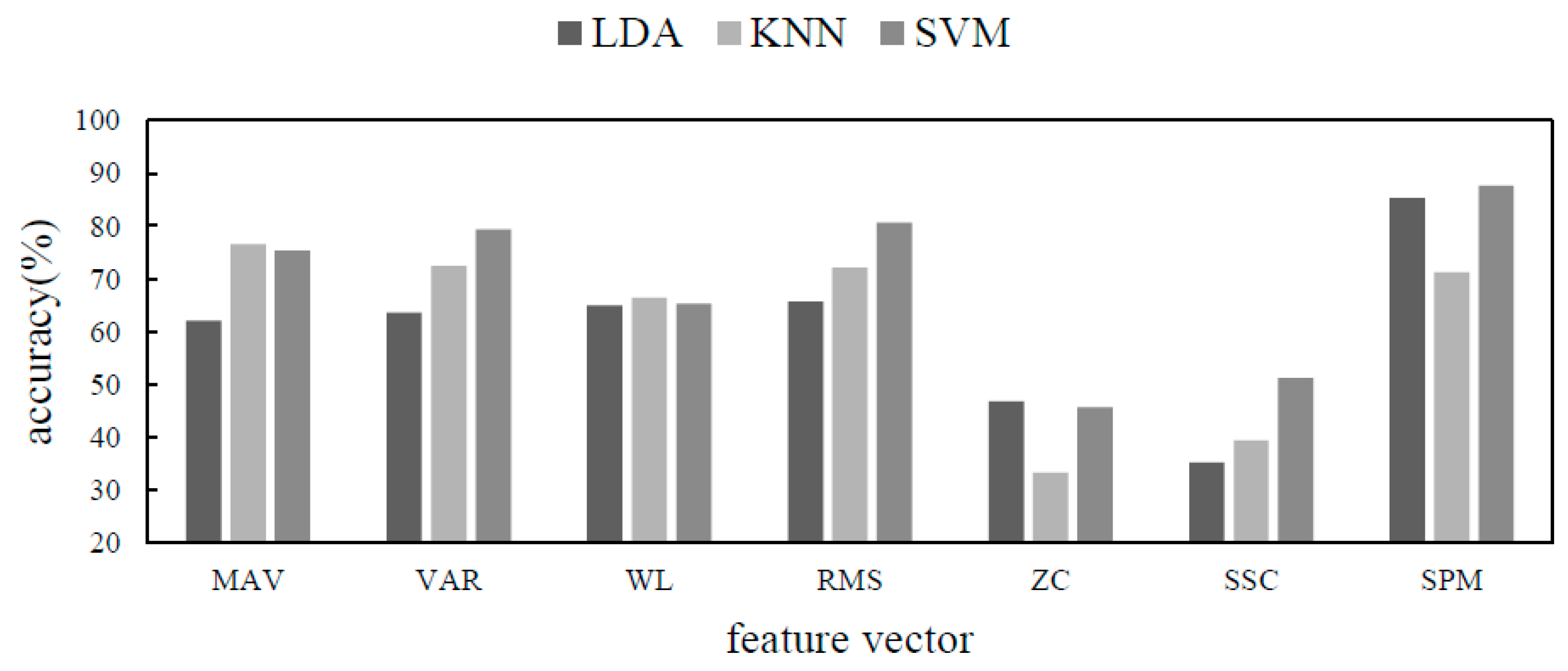
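A spectrogram is commonly obtained with a short-time Fourier transform (STFT): the signal is cut into overlapping windowed frames and the magnitude spectrum of each frame becomes one column of a time-frequency image. The sketch below is a minimal NumPy version of that idea, assuming a Hann window; the sampling rate `fs`, window length `win_len`, hop size `hop` and the function name `semg_spectrogram` are hypothetical and are not the settings used in this study.

```python
import numpy as np

def semg_spectrogram(x, fs=1000.0, win_len=256, hop=128):
    """Magnitude STFT spectrogram of one sEMG channel.

    fs, win_len and hop are hypothetical values chosen for illustration.
    Returns (freqs, times, S) with S of shape (win_len // 2 + 1, n_frames).
    """
    x = np.asarray(x, dtype=float)
    if len(x) < win_len:
        raise ValueError("signal is shorter than one analysis window")
    window = np.hanning(win_len)                          # taper each frame
    n_frames = 1 + (len(x) - win_len) // hop              # frames that fit completely
    frames = np.stack([x[i * hop:i * hop + win_len] * window
                       for i in range(n_frames)])
    S = np.abs(np.fft.rfft(frames, axis=1)).T             # one column per frame
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)          # bin centre frequencies (Hz)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs  # frame centres (s)
    return freqs, times, S
```

The resulting 2-D array `S` can then be treated as an image, which is what makes spectrograms a natural input for a convolutional network.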

3.2. sEMG Feature Selection

- mean absolute value:

- variance:

- waveform length:

- root mean square:

- zero crossing:

- slope sign change:
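The six features listed above have widely used time-domain definitions for a window $x_1, \dots, x_N$ of sEMG samples. The sketch below implements those common textbook forms in NumPy; the exact variants used in this study may differ. The function name `semg_time_features` and the zero-crossing and slope-sign-change thresholds are hypothetical, and the variance is computed as $\frac{1}{N-1}\sum_i x_i^2$, assuming a zero-mean sEMG window.

```python
import numpy as np

def semg_time_features(x, zc_threshold=0.0, ssc_threshold=0.0):
    """Six common time-domain features of one sEMG window.

    The two thresholds (hypothetical defaults) suppress counts caused by
    low-amplitude noise in the zero-crossing and slope-sign-change counts.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    diff = np.diff(x)                              # x[i+1] - x[i]
    mav = np.mean(np.abs(x))                       # mean absolute value
    var = np.sum(x ** 2) / (n - 1)                 # variance of a zero-mean signal
    wl = np.sum(np.abs(diff))                      # waveform length
    rms = np.sqrt(np.mean(x ** 2))                 # root mean square
    # zero crossing: neighbours of opposite sign whose step reaches the threshold
    zc = np.sum((x[:-1] * x[1:] < 0) & (np.abs(diff) >= zc_threshold))
    # slope sign change: interior sample where (x[i]-x[i-1]) * (x[i]-x[i+1])
    # exceeds the threshold, i.e. a local peak or trough
    slope_prod = (x[1:-1] - x[:-2]) * (x[1:-1] - x[2:])
    ssc = np.sum(slope_prod > ssc_threshold)
    return {"MAV": float(mav), "VAR": float(var), "WL": float(wl),
            "RMS": float(rms), "ZC": int(zc), "SSC": int(ssc)}
```

For example, the window `[1, -1, 2, -2, 0]` gives MAV = 1.2, VAR = 2.5, WL = 11, RMS = √2, and three zero crossings and three slope sign changes.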

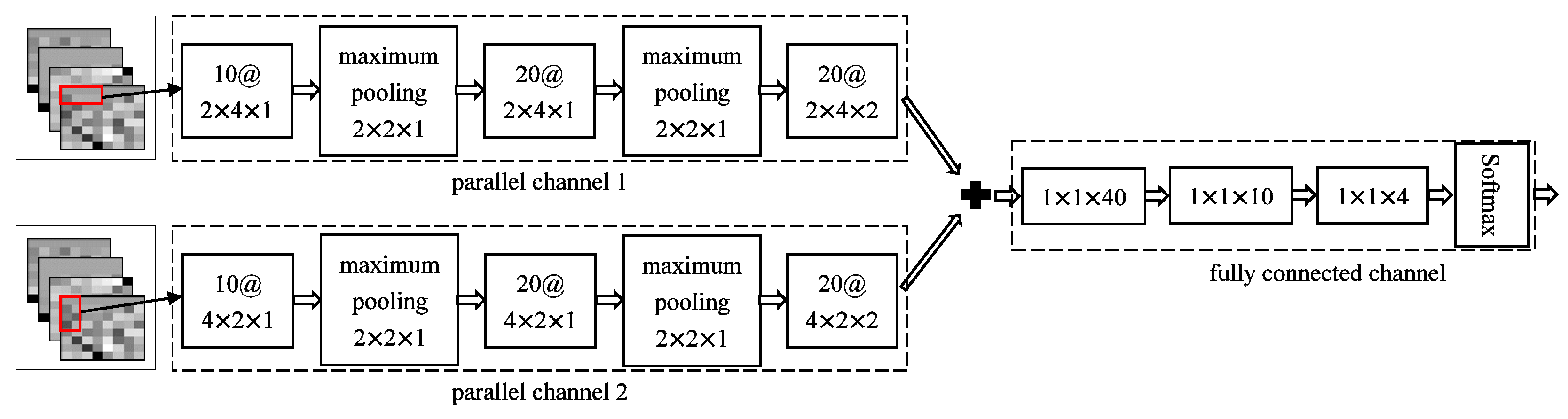

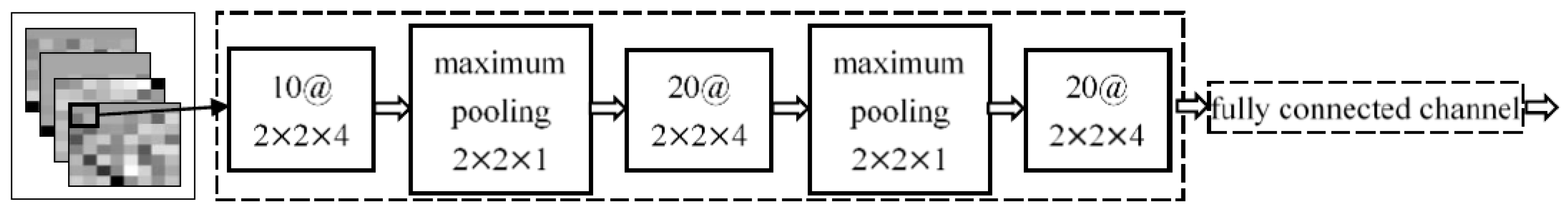

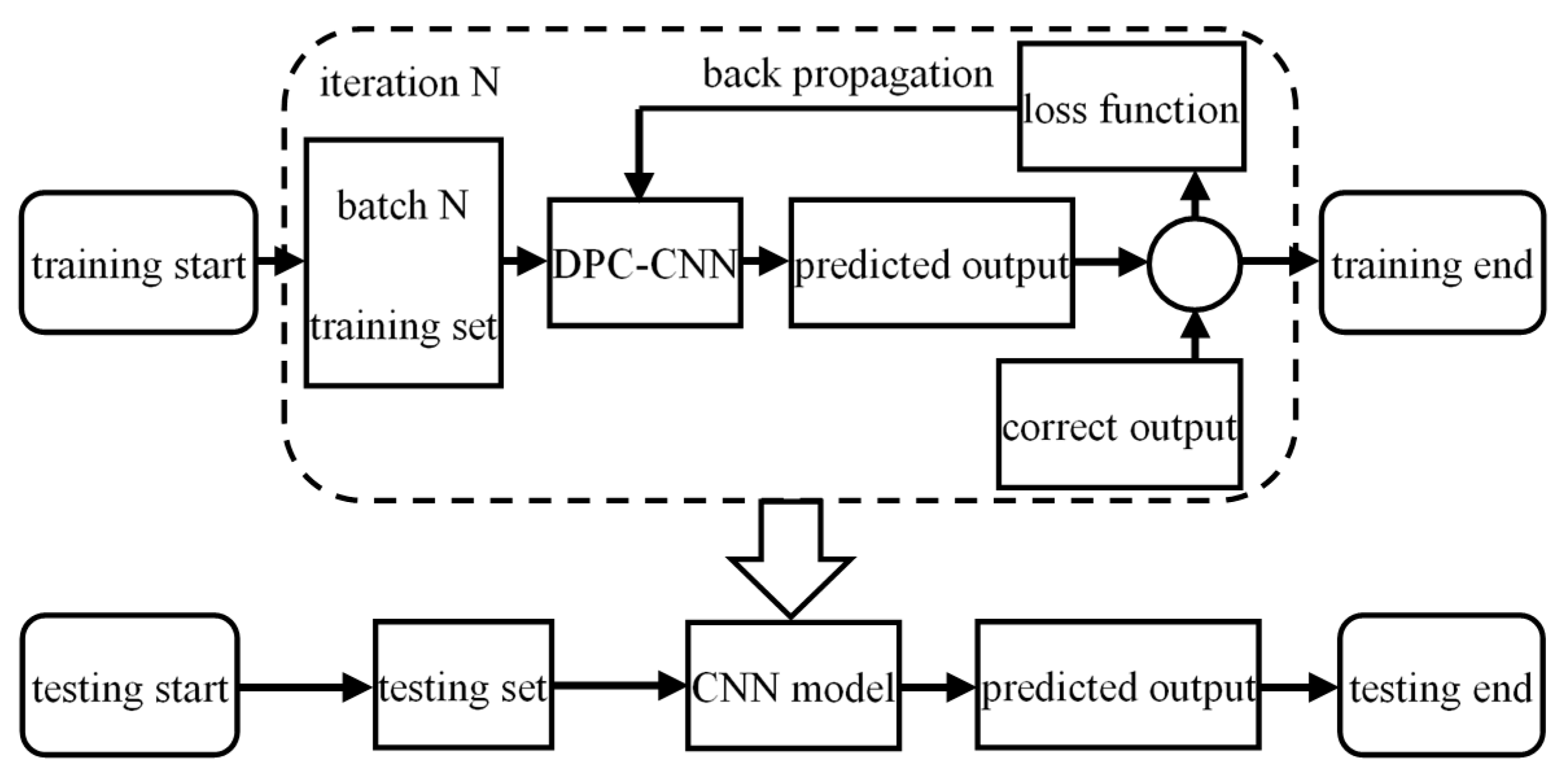

3.3. Fall Detection Method Based on IDPC-CNN

4. Experiment

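- Accuracy, the proportion of all samples that are classified correctly:

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Sensitivity, the detection rate over all fall samples:

  $$\text{Sensitivity} = \frac{TP}{TP + FN}$$

- Specificity, the detection rate over all daily-activity samples:

  $$\text{Specificity} = \frac{TN}{TN + FP}$$

where TP and FN are the numbers of fall samples classified as falls and as daily activities, respectively, and TN and FP are the numbers of daily-activity samples classified as daily activities and as falls, respectively.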
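Accuracy, sensitivity and specificity can all be computed directly from the ground-truth and predicted labels of the test samples. A minimal sketch, assuming labels encoded as 1 for a fall and 0 for a daily activity (the encoding and the name `fall_metrics` are hypothetical):

```python
def fall_metrics(y_true, y_pred):
    """Return (accuracy, sensitivity, specificity) for binary fall detection.

    Assumed label encoding: 1 = fall, 0 = daily activity.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # falls detected as falls
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # falls missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # daily activities kept as such
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # daily activities flagged as falls
    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity
```

With four falls of which three are detected, and six daily activities of which one is flagged as a fall, this gives an accuracy of 0.8, a sensitivity of 0.75 and a specificity of 5/6.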

5. Results

6. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Subject | Gender | Age (years) | Height (cm) | Weight (kg) | Lower-Limb Disease |
|---|---|---|---|---|---|
| 1 | Male | 24 | 176 | 74 | No |
| 2 | Male | 23 | 175 | 78 | No |
| 3 | Male | 23 | 172 | 72 | No |
| 4 | Male | 25 | 179 | 83 | No |
| 5 | Male | 24 | 170 | 70 | No |
| 6 | Female | 27 | 168 | 51 | No |
| 7 | Female | 23 | 165 | 47 | No |
| 8 | Female | 24 | 162 | 45 | No |
| 9 | Female | 24 | 170 | 55 | No |
| 10 | Female | 23 | 160 | 44 | No |

| Principal Component | Variance Contribution Rate (%) | Accumulated Variance Contribution Rate (%) |
|---|---|---|
| 1 | 47.6 | 47.6 |
| 2 | 25.5 | 73.1 |
| 3 | 10.2 | 83.3 |
| 4 | 5.3 | 88.6 |
| 5 | 3.1 | 91.7 |
| 6 | 1.8 | 93.5 |
| 7 | 1.1 | 94.6 |
| 8 | 0.7 | 95.3 |
| 9 | 0.2 | 95.5 |
| 10 | 0.1 | 95.6 |
| 20 | 0.06 | 96.38 |
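The variance contribution rate of a principal component is its eigenvalue of the feature covariance matrix divided by the sum of all eigenvalues, and the accumulated rate is the running sum of these rates. A minimal NumPy sketch, assuming the features are only mean-centred (standardising them first is a common alternative that the table does not specify); `variance_contribution`, `components_needed` and the 90% cut-off are hypothetical:

```python
import numpy as np

def variance_contribution(features):
    """Per-component and accumulated variance contribution rates (%) from PCA.

    features: array of shape (n_samples, n_features); features are only
    mean-centred here, which is an assumption.
    """
    x = np.asarray(features, dtype=float)
    x = x - x.mean(axis=0)                      # centre each feature
    cov = np.cov(x, rowvar=False)               # n_features x n_features covariance
    eigvals = np.linalg.eigvalsh(cov)[::-1]     # eigenvalues sorted largest first
    rate = 100.0 * eigvals / eigvals.sum()      # variance contribution rate
    return rate, np.cumsum(rate)                # accumulated contribution rate

def components_needed(accumulated, threshold=90.0):
    """Smallest number of leading components whose accumulated rate reaches threshold."""
    return int(np.searchsorted(accumulated, threshold) + 1)
```

Applied to the accumulated rates in the table, a 90% cut-off would keep five components, since four components reach only 88.6% while five reach 91.7%.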

| Performance Indicator | RMS | SPM |
|---|---|---|
| Signal preprocessing time (ms) | 25.09 | 25.09 |
| Feature extraction time (ms) | 101.37 | 357.16 |
| Classifier training time (h) | 7.5 | 10.6 |
| Classifier test time (ms) | 57.12 | 63.5 |
| Accuracy (%) | 84.21 | 92.55 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, X.; Li, H.; Lou, C.; Liang, T.; Liu, X.; Wang, H. A New Approach to Fall Detection Based on Improved Dual Parallel Channels Convolutional Neural Network. Sensors 2019, 19, 2814. https://doi.org/10.3390/s19122814
