Abstract

The extended target probability hypothesis density (ET-PHD) filter relies on measurement set partitioning algorithms that employ the Mahalanobis distance between measurements, and therefore cannot work well if the density of measurements varies from target to target. To tackle this problem, two measurement set partitioning approaches, shared nearest neighbors similarity partitioning (SNNSP) and SNN density partitioning (SNNDP), are proposed in this paper. In SNNSP, the shared nearest neighbors (SNN) similarity, which incorporates neighboring measurement information, is introduced into distance partitioning (DP) in place of the Mahalanobis distance between measurements. Furthermore, SNNDP is developed by combining the DBSCAN algorithm with the SNN similarity to enhance the reliability of partitions. Simulation results show that the ET-PHD filters based on the two proposed partitioning algorithms achieve better tracking performance with less computation than the compared algorithms.

1. Introduction

In most multi-target tracking applications, it is assumed that each target produces at most one measurement per time step. This is reasonable when the target’s extension is negligible in comparison to the sensor resolution. However, with the increase in sensor resolution capabilities, this assumption is no longer valid. In this case, different scattering centers of one target may give rise to several distinct detections that vary from scan to scan, both in number and in relative origin location. Corresponding cases can be found in the following situations: (1) Vehicles use radar sensors to track other road users. (2) Ground radar stations track airplanes which are sufficiently close to the sensor. (3) Pedestrians are tracked using laser range sensors in mobile robotics. In addition, due to the high inner density of a group target, it is neither practical nor necessary to track all individual targets within the group. A group target can be tracked as a whole, and the problem formulation for tracking a group target is the same as that for tracking an extended target. Thus, in some works, such as [1,2,3,4,5,6,7], the extended target and the group target are studied together. Moreover, some studies treat a group target as an extended target [1,4,5,6], which is also the approach taken in this paper.

Extended target tracking has attracted much attention in the last decade. Studies on single extended target tracking mainly focus on the measurement distribution, and on the description and estimation of the target extension [1,6,7,8,9,10]. Multiple extended target tracking is a challenging problem due to the complexities caused by data association. In [11,12], the random matrix model and mixture random hypersurface models (RHMs), respectively, were integrated into the probabilistic multi-hypothesis tracking (PMHT) framework to track multiple extended targets. In [13], the joint probabilistic data association (JPDA) was applied to tackle the problem of multiple extended target tracking. A box particle filter was developed to track multiple extended objects by combining interval-based techniques and the Bayesian framework [14]. Another family of methods is based on random finite sets (RFS), such as the probability hypothesis density (PHD) filter [15], the cardinalized probability hypothesis density (CPHD) filter [16] and the multi-Bernoulli filter [17]. In [18], an extended-target PHD (ET-PHD) filter was presented by extending the PHD filter to multiple extended targets. A CPHD filter for extended targets was proposed in [19], and a unified CPHD filter was proposed to track extended targets and unresolved group targets [20]. A Gaussian-mixture implementation of the ET-PHD filter, called the extended target Gaussian-mixture PHD (ET-GMPHD) filter, was proposed in [21,22]. The generalized labeled multi-Bernoulli filter was applied to track multiple extended targets based on the gamma Gaussian inverse Wishart model [17]. In [23], the Gaussian surface matrix was introduced into the PHD filter for multiple extended targets. Although the RFS-based works [17,18,19,20,21,22,23] avoid explicit associations between measurements and targets, all possible partitions of the measurement set need, in theory, to be considered.
In addition, the number of all possible partitions grows dramatically with the number of measurements. Distance Partitioning (DP) and Distance Partitioning with Sub-Partitioning (DPSP) were proposed by Granstrom et al. in [22] to obtain a reasonable subset of all possible partitions. A fast partitioning algorithm based on a fuzzy ART model was proposed for the ET-GMPHD filter [24]. The algorithm consumed less computation time than DP without losing tracking performance. Since many of the cell and Gaussian mixture component pairs will be distant, the effect of updating that part of the PHD intensity with that cell will be negligible. Based on this observation, Scheel et al. proposed a data segmentation method to alleviate computational complexity in [25]. A shape selection measurement partitioning algorithm was proposed in [26]. The algorithm first calculated potential centres and shapes of targets, and then combined each centre with different shapes to divide the measurements into subcells. In [27], the generalised distance partitioning (GDP), which applied the distance partitioning and Lmax-partitioning, was proposed to reduce the number of partitions and decrease computational complexity. The DBSCAN algorithm and the relaxed intersection were used to deal with data association and reduce the computational complexity in the data association process for a multiple extended target box particle filter [14].

When the density of measurements varies from target to target, measurement partitioning algorithms that apply the Mahalanobis distance between measurements [28], such as DP, DPSP and GDP, cannot work well. In this paper, we employ the shared nearest neighbors (SNN) similarity [29], which can reflect the local configuration of measurements in the measurement space, to propose two measurement set partitioning approaches. The two approaches, SNN Similarity Partitioning (SNNSP) and SNN Density Partitioning (SNNDP), are relatively insensitive to variation in the measurement density of extended targets. In SNNSP, the SNN similarity is introduced instead of the Mahalanobis distance between measurements, and the SNNDP is developed by combining the DBSCAN algorithm [30] with the SNN similarity to enhance the reliability of partitions.

Although it takes some time to calculate the SNN similarity, the ET-PHD filter based on the proposed partitioning approaches reduces the computational burden because fewer partitions are produced. Especially in high clutter scenarios, a significant reduction in computational complexity can be achieved. Simulation results demonstrate that the proposed partitioning approaches can outperform the compared ones in several typical scenarios, namely, differing densities of measurements, high clutter and proximity among extended targets.

The rest of the paper is organized as follows. We briefly describe the problem formulation in Section 2. Section 3 provides a summary of the DP and DPSP, which are proposed in [22]. Section 4 presents two proposed partitioning approaches in this paper. In Section 5, simulation results are given to compare the performance of the ET-GMPHD filter using proposed partitioning algorithms with that using the compared algorithms. Section 6 presents concluding remarks and outlines future research directions.

2. Problem Formulation

The PHD measurement update equation for the extended target PHD filter, based on the Poisson multitarget measurement model [31], is derived in [18]. The corrected PHD-intensity is the product of the predicted PHD-intensity and the measurement pseudo-likelihood:

$$D_{k|k}(x \mid Z) = L_{Z_k}(x)\, D_{k|k-1}(x \mid Z), \tag{1}$$

where $D_{k|k}(x \mid Z)$ is the corrected PHD-intensity and $D_{k|k-1}(x \mid Z)$ is the predicted PHD-intensity. $L_{Z_k}(x)$ is the measurement pseudo-likelihood function, which can be defined as

$$L_{Z_k}(x) = 1 - \left(1 - e^{-\gamma(x)}\right) p_D(x) + e^{-\gamma(x)}\, p_D(x) \sum_{p \angle Z_k} \omega_p \sum_{W \in p} \frac{\gamma(x)^{|W|}}{d_W} \prod_{z \in W} \frac{\phi_z(x)}{\lambda_k c_k(z)}, \tag{2}$$

where $p_D(x)$ is the detection probability of the target; $\gamma(x)$ is the mean number of measurements from one target; $\lambda_k$ is the mean number of clutter measurements per scan; $c_k(z)$ is the spatial distribution of the clutter over the surveillance region; $p \angle Z_k$ means that the partition $p$ partitions the measurement set $Z_k$ into non-empty cells $W$; $W \in p$ denotes that the set $W$ is a cell in the partition $p$; $\omega_p$ and $d_W$ are nonnegative coefficients defined for each partition and cell, respectively; and $\phi_z(x)$ is the likelihood function for a single target-generated measurement.

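The first summation on the right-hand side of (2) is taken over all partitions of the measurement set $Z_k$. In theory, all possible partitions of the measurement set need to be considered. For example, suppose the measurement set $Z_k = \{z^{(1)}, z^{(2)}, z^{(3)}\}$ contains three individual measurements. All possible partitions of $Z_k$ are as follows:

$$
\begin{aligned}
p_1 &: \; W_1 = \{z^{(1)}, z^{(2)}, z^{(3)}\}, \\
p_2 &: \; W_1 = \{z^{(1)}, z^{(2)}\}, \; W_2 = \{z^{(3)}\}, \\
p_3 &: \; W_1 = \{z^{(1)}, z^{(3)}\}, \; W_2 = \{z^{(2)}\}, \\
p_4 &: \; W_1 = \{z^{(2)}, z^{(3)}\}, \; W_2 = \{z^{(1)}\}, \\
p_5 &: \; W_1 = \{z^{(1)}\}, \; W_2 = \{z^{(2)}\}, \; W_3 = \{z^{(3)}\}.
\end{aligned}
$$

However, the number of all possible partitions grows dramatically with the number of sensor measurements. Thus, it is necessary to consider only a reasonable subset of all possible partitions in order to decrease computational complexity.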
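To make the growth concrete, the following minimal Python sketch enumerates every partition of a small measurement set and counts the partitions for increasing set sizes. The function name `set_partitions` and the measurement labels are hypothetical and serve only as an illustration.

```python
def set_partitions(items):
    """Yield every partition of `items` as a list of cells (each cell is a list)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing cell in turn ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or let it form a new cell of its own.
        yield [[first]] + partition


# The three-measurement example: five partitions in total.
for p in set_partitions(["z1", "z2", "z3"]):
    print(p)

# Number of partitions for 1 to 8 measurements (the Bell numbers).
print([sum(1 for _ in set_partitions(list(range(n)))) for n in range(1, 9)])
```

Three measurements give 5 partitions, while ten measurements already give 115,975, which is why an exhaustive enumeration is not practical for realistic scans.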

3. Review of Distance Partitioning and Distance Partitioning with Sub-Partitioning

3.1. Distance Partitioning (DP)

The DP introduced by Granstrom [22] is based on the distance between measurements. Given a set of measurements $Z = \{z^{(i)}\}_{i=1}^{N_z}$ and a distance measure $d(\cdot,\cdot)$, the distances between each pair of measurements can be calculated as

$$\Delta_{ij} = d\left(z^{(i)}, z^{(j)}\right), \quad 1 \le i \ne j \le N_z.$$

Granstrom has proved that, for a given threshold $d_l$, there is a unique partition that leaves all pairs of measurements satisfying $\Delta_{ij} \le d_l$ in the same cell. The algorithm is used to generate alternative partitions of the measurement set Z by selecting different thresholds $d_l$.

The thresholds are selected from the set $\mathcal{D}$ formed from the pairwise distances $\Delta_{ij}$, and the Mahalanobis distance is selected as the distance measure in [22]. If one uses all of the elements in $\mathcal{D}$ to form alternative partitions, $|\mathcal{D}|$ partitions are obtained. In order to reduce the computational load, partitions are computed only for a subset of the thresholds in $\mathcal{D}$.

For two target-originated measurements $z^{(i)}$ and $z^{(j)}$ belonging to the same target, the Mahalanobis distance between them is $\chi^2$ distributed with degrees of freedom equal to the dimension of the measurement vector. A unitless distance threshold $\delta_{P_G}$ can be computed as $\delta_{P_G} = \mathrm{invchi2}(P_G)$ for a given probability $P_G$, where invchi2(·) is the inverse cumulative $\chi^2$ distribution function. Granstrom et al. [21] have illustrated that good target tracking results are achieved with partitions computed using the subset of distance thresholds in $\mathcal{D}$ that lie between $\delta_{P_L}$ and $\delta_{P_U}$, for a lower probability $P_L$ and an upper probability $P_U$.
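The distance partitioning procedure described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions rather than the implementation of [22]: measurements are two-dimensional, the covariance used in the Mahalanobis distance is diagonal (passed as `var`), and the function names are hypothetical. For two degrees of freedom the inverse $\chi^2$ cumulative distribution function has the closed form $-2\ln(1-P)$, which avoids any external library.

```python
import math
from itertools import combinations


def mahalanobis_sq(z1, z2, var):
    """Squared-form Mahalanobis distance for an assumed diagonal covariance `var`."""
    return sum((a - b) ** 2 / v for a, b, v in zip(z1, z2, var))


def invchi2_2dof(prob):
    """Inverse chi-square CDF for 2 degrees of freedom (closed form)."""
    return -2.0 * math.log(1.0 - prob)


def distance_partition(measurements, var, threshold):
    """Cells are the connected components of the graph linking pairs
    whose distance does not exceed `threshold` (union-find)."""
    n = len(measurements)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in combinations(range(n), 2):
        if mahalanobis_sq(measurements[i], measurements[j], var) <= threshold:
            parent[find(i)] = find(j)

    cells = {}
    for i in range(n):
        cells.setdefault(find(i), []).append(i)
    return sorted(cells.values())


def dp_partitions(measurements, var, p_lower, p_upper):
    """Unique partitions for the pairwise distances lying strictly between
    the two chi-square bounds (strictness is an assumption of this sketch)."""
    lo, hi = invchi2_2dof(p_lower), invchi2_2dof(p_upper)
    dists = sorted({mahalanobis_sq(a, b, var)
                    for a, b in combinations(measurements, 2)})
    unique = []
    for t in (d for d in dists if lo < d < hi):
        part = distance_partition(measurements, var, t)
        if part not in unique:
            unique.append(part)
    return unique


Z = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
print(dp_partitions(Z, var=(1.0, 1.0), p_lower=0.3, p_upper=0.8))
```

Cells are returned as lists of measurement indices. The probabilities 0.3 and 0.8 in the usage line are illustrative values chosen for this sketch.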

3.2. Distance Partitioning with Sub-Partitioning (DPSP)

The results given by the ET-GMPHD filter using the DP show that the target set cardinality is underestimated in situations where two or more extended targets are spatially close [21]. In this situation, the DP puts measurements from more than one target in the same cell W, and thus the ET-PHD filter based on the DP interprets measurements from multiple targets as originating from the same target. The DPSP was proposed in [22] to remedy this problem.

Suppose that a set of partitions using the DP has been obtained. Then, maximum likelihood (ML) estimation is applied to estimate the number of targets for each cell $W$, denoted by $\hat{N}_x^{W}$. If this estimate is larger than one, the cell is split into $\hat{N}_x^{W}$ smaller cells. Finally, a new partition, consisting of the new cells along with the other cells of the original partition, is added to the list of partitions obtained by the DP. In [22], K-means++ clustering, which modifies the standard K-means algorithm to overcome the problem that the clustering result is strongly affected by the initial values [32], is applied to split the measurements in the cell.
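The ML step can be illustrated with a short sketch. It assumes, consistently with the Poisson measurement model of Section 2, that the number of measurements in a cell generated by n targets is Poisson distributed with rate n times the mean number of measurements per target. That likelihood, the search bound `max_targets` and the function names are assumptions of this sketch, not details confirmed by the text.

```python
import math


def poisson_log_pmf(count, rate):
    """Log of the Poisson probability of observing `count` events at `rate`."""
    return count * math.log(rate) - rate - math.lgamma(count + 1)


def ml_target_count(cell_size, gamma, max_targets=10):
    """ML estimate of how many targets generated a cell with `cell_size`
    measurements, when each target yields `gamma` measurements on average."""
    candidates = range(1, max_targets + 1)
    return max(candidates, key=lambda n: poisson_log_pmf(cell_size, gamma * n))


# A cell holding about twice the expected count suggests two close targets.
print(ml_target_count(cell_size=21, gamma=10.0))
```

A cell whose estimate exceeds one would then be passed to a clustering routine such as K-means++ with the estimated count as the number of clusters.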

4. The Proposed SNN Partitioning

The partitions produced by the DP depend solely on the distances between each pair of measurements, ignoring the information from the local configuration of measurements. Although the distance thresholds are restricted to the range between a lower and an upper bound to reduce the computational load, the number of partitions still grows dramatically with the number of measurements. Moreover, a considerable number of the partitions obtained by the DP are unreasonable, yet they still add to the computational complexity of the ET-PHD filter.

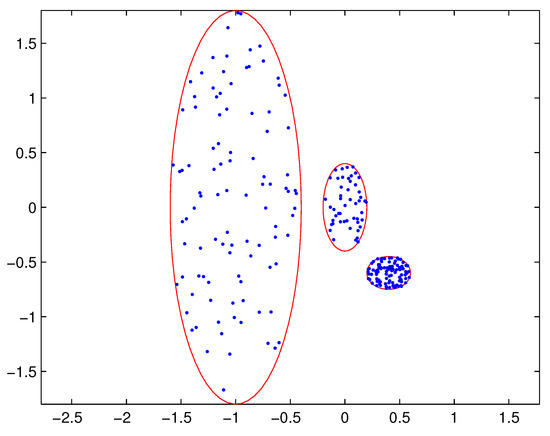

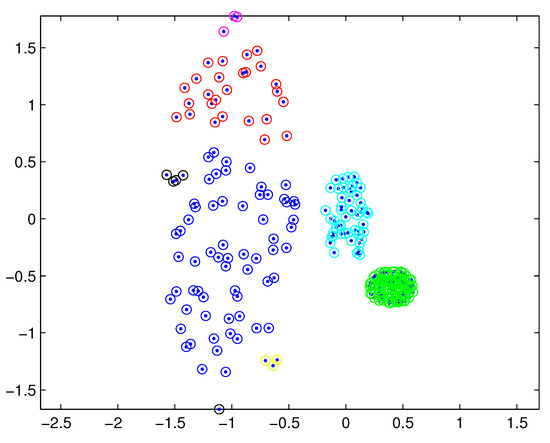

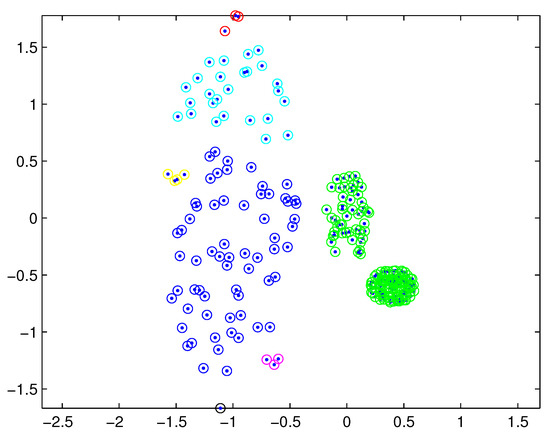

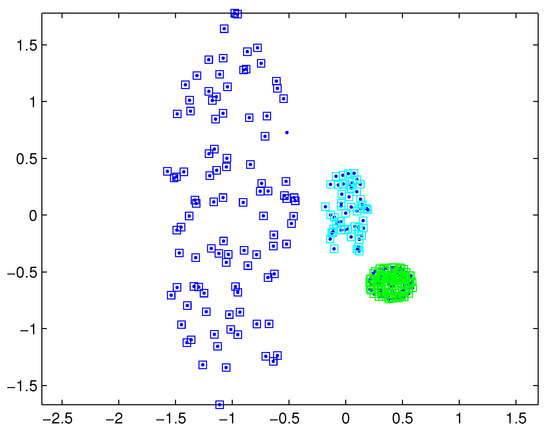

In practical applications, the densities of measurement sources may differ between extended targets, and thus the density of measurements varies from target to target. In this case, reasonable partitions may not be included in the partitions produced by the DP. For example, Figure 1 depicts measurements from three extended targets with different measurement densities at a certain time scan. For a small threshold, measurements from the same extended target are split into several small cells, as shown in Figure 2 (one cell is represented by circles of the same color). On the other hand, for a slightly bigger threshold, measurements from two targets are put in one cell, as shown in Figure 3. Indeed, no appropriate distance threshold exists for the measurements shown in Figure 1, and the DP cannot achieve a reasonable partition.

Figure 1. Measurements from three extended targets.

Figure 2. Partition by DP for a small threshold.

Figure 3. Partition by DP for a bigger threshold.

The computational complexity of the ET-PHD recursion is strongly dependent on the total number of cells in the resulting partitions. Since clutter measurements are usually far away from each other, each of them tends to form its own cell under the DP and DPSP. If there is a large number of clutter measurements over the surveillance region, the number of cells formed by clutter measurements is far larger than the number formed by target measurements. Thus, most of the computation of the ET-PHD filter based on the DP and DPSP is spent on dealing with clutter.

To handle high clutter and measurement densities that vary from target to target, we apply the SNN similarity, which reflects the local configuration of measurements in the measurement space, to develop two measurement partitioning algorithms, SNNSP and SNNDP. The SNNSP depends only on the SNN similarity to decide whether two measurements are in the same cell or not. To improve the reliability further, we develop the SNNDP by combining the SNN similarity with the DBSCAN algorithm.

4.1. SNN Similarity

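The SNN similarity was first proposed by Jarvis and Patrick [29]. The SNN similarity between two measurements $z^{(i)}$ and $z^{(j)}$ is defined as the number of nearest neighbors shared by the two measurements, provided that $z^{(i)}$ and $z^{(j)}$ have each other in their K nearest neighbor lists, and is zero otherwise:

$$s_{ij} = \begin{cases} \left| NN\left(z^{(i)}\right) \cap NN\left(z^{(j)}\right) \right|, & \text{if } z^{(i)} \in NN\left(z^{(j)}\right) \text{ and } z^{(j)} \in NN\left(z^{(i)}\right), \\ 0, & \text{otherwise}, \end{cases}$$

where $NN(z)$ denotes the K nearest neighbor list of the measurement $z$.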
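A minimal Python sketch of this definition is given below. It uses the Euclidean distance to build the K nearest neighbor lists, which is an assumption made for brevity (the paper uses the Mahalanobis distance), and the function names are hypothetical.

```python
import math


def k_nearest(points, k):
    """For each point index, return the set of indices of its k nearest neighbors."""
    neighbors = []
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        neighbors.append(set(others[:k]))
    return neighbors


def snn_similarity(points, k):
    """SNN similarity matrix: the number of shared neighbors when two points
    appear in each other's neighbor lists, and zero otherwise."""
    nn = k_nearest(points, k)
    n = len(points)
    sim = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if j in nn[i] and i in nn[j]:
                sim[i][j] = sim[j][i] = len(nn[i] & nn[j])
    return sim


# Two well-separated groups of four measurements each.
pts = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10), (11, 10), (10, 11), (11, 11)]
sim = snn_similarity(pts, k=3)
print(sim[0][1], sim[0][4])
```

In this example, measurements within the same group share two neighbors, while measurements from different groups are not in each other's lists and have zero similarity.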

4.2. SNN Partitioning Algorithm

This section introduces the SNNSP and SNNDP proposed in this paper.

4.2.1. SNNSP

The SNNSP algorithm, based on the SNN similarity, puts all pairs of measurements whose SNN similarity is not less than a similarity threshold in the same cell, and selects different similarity thresholds to obtain different partitions. The SNNSP can be described by the following steps.

- Step 1: Select the Mahalanobis distance as the distance measure, and then compute the distance between each pair of measurements.

- Step 2: Find the K nearest neighbors of each measurement, and then compute the SNN similarity between each pair of measurements.

- Step 3: Decide the similarity threshold set. The similarity thresholds can be selected from the effective range of the SNN similarity, i.e., between its minimum and maximum values. K is usually small, and so is the number of partitions by the SNNSP. Note that the neighborhood list size K determines the maximum of the upper similarity threshold.

- Step 4: For each given similarity threshold, leave all pairs of measurements whose SNN similarity is not less than the threshold in the same cell. One partition of the measurement set Z is generated for each similarity threshold. Some resulting partitions might be identical; the duplicates are discarded so that each remaining partition is unique.

The SNN similarity reflects the local configuration of measurements in the measurement space, and has built-in automatic scaling. When measurements are widely spread, the volume containing the K nearest neighbors expands, and conversely, the volume shrinks when measurements are tightly positioned. Therefore, the SNNSP does not critically depend on distance thresholds, and is relatively insensitive to variations in density.
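The four steps above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the Euclidean distance replaces the Mahalanobis distance, integer similarity thresholds start at 1 (a threshold of 0 would merge every measurement into one cell), and all function names are hypothetical.

```python
import math


def snn_similarity(points, k):
    """SNN similarity matrix from Euclidean k-nearest-neighbor lists (assumed metric)."""
    n = len(points)
    nn = []
    for i in range(n):
        order = sorted((j for j in range(n) if j != i),
                       key=lambda j: math.dist(points[i], points[j]))
        nn.append(set(order[:k]))
    sim = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if j in nn[i] and i in nn[j]:
                sim[i][j] = sim[j][i] = len(nn[i] & nn[j])
    return sim


def cells_from_links(n, linked):
    """Connected components of the graph whose edges are the pairs with linked(i, j) true."""
    label = [None] * n
    cells = []
    for start in range(n):
        if label[start] is not None:
            continue
        label[start] = len(cells)
        cell, stack = [], [start]
        while stack:
            i = stack.pop()
            cell.append(i)
            for j in range(n):
                if label[j] is None and linked(i, j):
                    label[j] = label[start]
                    stack.append(j)
        cells.append(sorted(cell))
    return cells


def snnsp_partitions(points, k):
    """One partition per similarity threshold, with duplicate partitions removed."""
    n = len(points)
    sim = snn_similarity(points, k)
    s_max = max(max(row) for row in sim)
    partitions = []
    for s in range(1, s_max + 1):
        part = cells_from_links(n, lambda i, j: sim[i][j] >= s)
        if part not in partitions:
            partitions.append(part)
    if not partitions:  # no pair is similar: every measurement is its own cell
        partitions.append([[i] for i in range(n)])
    return partitions


# A sparse group (spacing 1) and a dense group (spacing 0.1).
pts = [(0, 0), (1, 0), (0, 1), (1, 1),
       (10, 10), (10.1, 10), (10, 10.1), (10.1, 10.1)]
print(snnsp_partitions(pts, k=3))
```

Both groups are recovered as separate cells even though their spacings differ by a factor of ten, which illustrates the automatic scaling discussed above. The number of returned partitions is bounded by the largest similarity value, and hence by K.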

4.2.2. SNNDP

To enhance the reliability of partitions further, we propose the SNNDP by combining the SNN similarity with the DBSCAN algorithm. In the DBSCAN algorithm [30], the density of a point is obtained by counting the number of points in a specified radius around the point. Points with a density above a specified threshold are classified as core points. A point considered as a border point is in the neighborhood region of a certain core point, while points which are neither core points nor border points are taken as noise points. Noise points are discarded, and the clusters are formed around the core points. If two core points are neighbors of each other, then their clusters are joined.

Let $\mathcal{N}(z^{(i)})$ denote the set of measurements whose SNN similarity with the measurement $z^{(i)}$ is not less than a given similarity threshold. Then, the SNN density of $z^{(i)}$ is the number of measurements in $\mathcal{N}(z^{(i)})$. Since measurements with high SNN densities tend to be generated by extended targets, they are considered as core measurements, and measurements with low SNN densities are taken as border measurements. The details of the SNNDP are as follows.

Steps 1–3 are the same as those in the SNNSP. For each given similarity threshold, carry out Step 4 and Step 5 below.

- Step 4: For the given similarity threshold, compute the SNN density of every measurement. Measurements whose SNN densities are not less than a given SNN density threshold are considered as core measurements, while those whose SNN densities are less than the SNN density threshold but larger than 0 are considered as border measurements.

- Step 5: Leave all pairs of core measurements whose SNN similarity is not less than the similarity threshold in the same cell. For a border measurement $z^{(i)}$, if the core measurement $z^{(j)}$ is its nearest core measurement according to the SNN similarity, $z^{(i)}$ is put in the cell that contains $z^{(j)}$.
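Steps 4 and 5 can be sketched in Python for a single similarity threshold as follows. The sketch takes a precomputed SNN similarity matrix as input. Two details are assumptions of this sketch rather than statements of the paper: measurements with zero SNN density, and border measurements with no similar core measurement, are kept as singleton cells so that every measurement still belongs to a cell. The function name is hypothetical.

```python
def snndp_partition(sim, s, density_threshold):
    """Partition measurements from an SNN similarity matrix `sim`,
    a similarity threshold `s` and an SNN density threshold."""
    n = len(sim)
    # Step 4: SNN density = number of other measurements with similarity >= s.
    density = [sum(1 for j in range(n) if j != i and sim[i][j] >= s)
               for i in range(n)]
    core = [i for i in range(n) if density[i] >= density_threshold]

    # Step 5a: connected components over the core measurements only.
    cell_of, cells = {}, []
    for start in core:
        if start in cell_of:
            continue
        cell_of[start] = len(cells)
        cell, stack = [], [start]
        while stack:
            i = stack.pop()
            cell.append(i)
            for j in core:
                if j not in cell_of and sim[i][j] >= s:
                    cell_of[j] = cell_of[start]
                    stack.append(j)
        cells.append(cell)

    # Step 5b: each border measurement joins the cell of its most similar core.
    for i in range(n):
        if i in cell_of:
            continue
        best = max(core, key=lambda j: sim[i][j], default=None)
        if density[i] > 0 and best is not None and sim[i][best] > 0:
            cells[cell_of[best]].append(i)
        else:
            cells.append([i])  # assumption: isolated measurements stay singletons
    return sorted(sorted(c) for c in cells)


# Measurements 0-2 are mutually similar, 3 is only similar to 0, 4 is isolated.
sim = [[0, 3, 3, 2, 0],
       [3, 0, 3, 0, 0],
       [3, 3, 0, 0, 0],
       [2, 0, 0, 0, 0],
       [0, 0, 0, 0, 0]]
print(snndp_partition(sim, s=2, density_threshold=2))
```

In this toy matrix, measurements 0 to 2 become core measurements, measurement 3 is a border measurement attached to the cell of measurement 0, and measurement 4 remains alone. Running the function for each similarity threshold and discarding duplicate partitions yields the full set of SNNDP partitions.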

The pseudo-code of the SNNDP is given in Table 1.

Table 1. The pseudo-code of the SNNDP.

As in the SNNSP, identical partitions must be discarded to ensure that each remaining partition is unique. Note that the SNNDP reduces to the SNNSP when the SNN density threshold is 1.

The number of the partitions by the SNNSP and SNNDP is not larger than the neighborhood list size. Therefore, the computational complexity of the ET-PHD filter using the proposed partitioning algorithms is much less than that using distance partitioning, especially in the case of a large number of clutter measurements.

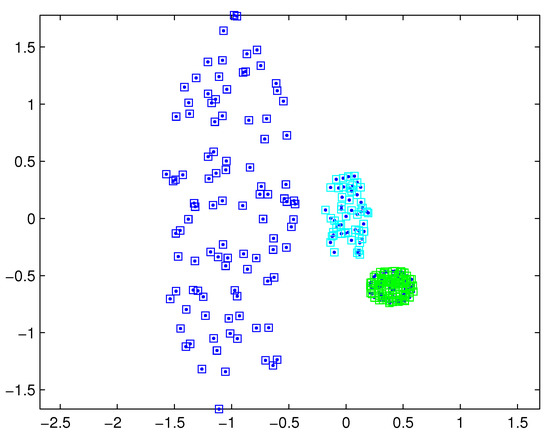

The SNNDP, like the SNNSP, can also handle the situation in which the density of measurements varies from target to target. Reconsidering the measurements shown in Figure 1, the partitions produced by the SNNSP and SNNDP contain the basically correct partition, as shown in Figure 4 and Figure 5, respectively, where the neighbor list size K is 20 and the SNN density threshold is set to 5.

Figure 4. Partition by SNNSP for a certain similarity threshold.

Figure 5. Partition by SNNDP for a certain similarity threshold.

4.3. Parameterizations

The neighborhood list size K and the SNN density threshold are two important parameters. If K is too small, the resulting partitions tend to focus on local variations, and thus measurements from one extended target would be broken up into multiple cells even with the minimum similarity threshold. Conversely, if K is too large, the resulting partitions tend to neglect local variations, and thus measurements from different extended targets would be put in one cell even with the maximum similarity threshold. The SNN density threshold is usually set to be greater than 1 in the SNNDP, because the SNNDP reduces to the SNNSP when the threshold is 1. If the SNN density threshold is too large, measurements from extended targets would be considered as clutter. The SNN density threshold can be selected according to K, since its appropriate value mainly depends on K for given measurements and a given similarity threshold.

Simulation experiments are carried out to analyze the effect of K and the SNN density threshold on the resulting partitions. The number of measurements follows the Poisson distribution, and measurements from extended targets follow either the uniform distribution or the Gaussian distribution. There is no significant difference in the experimental results between the two distributions. Table 2 and Table 3 show the ranges of K and the SNN density threshold for which the resulting partitions contain the correct or basically correct partition.

Table 2. Desirable range of the neighborhood list size.

Table 3. Desirable range of the SNN density threshold.

As can be seen from Table 2, the larger the expected number of measurements per target, the looser the requirement on the neighborhood list size K. An appropriate value of K can be obtained even when the expected numbers of measurements differ greatly between extended targets. From Table 3, it can be observed that the desirable range of the SNN density threshold is large. In addition, the SNNSP and SNNDP would not work well if the expected number of measurements per target is small.

5. Simulation Results

In this section, simulation results are given to compare the performance of the ET-PHD filter using the proposed partitioning approaches with that using existing partitioning algorithms. Here, we apply the Gaussian-mixture implementation of the ET-PHD filter (ET-GMPHD) proposed in [21,22]. Section 5.1 presents the simulation setup for tracking multiple extended targets. In Section 5.2, performance comparisons are conducted among the SNNSP, SNNDP, DP, DPSP and Distance Density Partitioning (DDP). The DDP applies the DBSCAN algorithm to cluster measurements and selects different specified radii to generate different partitions.

5.1. Simulation Setup

For illustration purposes, we consider a two-dimensional scenario over the surveillance region (in m). The kinematic state of each extended target consists of the corresponding position components and velocity components . denotes the transpose of a matrix .

The dynamic evolution of each target state is assumed to follow a linear Gaussian model

$$x_{k+1}^{(j)} = F x_k^{(j)} + G w_k^{(j)}$$

for $j = 1, \ldots, N_x$, where $F$ is the state transition matrix, $G$ is the noise gain, $w_k^{(j)}$ is Gaussian white noise with covariance $Q$, and $N_x$ is the number of extended targets.

The sensor measurements from target j are generated according to the following linear Gaussian model

$$z_k^{(j)} = H x_k^{(j)} + e_k^{(j)}$$

where $H$ is the observation matrix, and $e_k^{(j)}$ is white Gaussian noise with covariance $R$. Each target is assumed to give rise to measurements independently of the other targets.

The parameters in the dynamic and measurement models are shown as follows,

with sampling time s, covariance matrices for process noise and measurement noise (2 m/s) and (20 m), respectively. is identity matrix.
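As an illustration of how such a scenario can be simulated, the following sketch propagates one target and draws a Poisson-distributed number of position measurements around it. The model matrices are not reproduced here, so the sketch assumes the standard constant-velocity forms for a state ordered as position followed by velocity. The function names and all numeric values in the usage lines are hypothetical choices for this sketch.

```python
import math
import random


def cv_step(state, T, sigma_w, rng):
    """One step of an assumed constant-velocity model with state [x, y, vx, vy].
    The process noise is a random acceleration with standard deviation sigma_w."""
    x, y, vx, vy = state
    ax, ay = rng.gauss(0.0, sigma_w), rng.gauss(0.0, sigma_w)
    return [x + T * vx + 0.5 * T ** 2 * ax,
            y + T * vy + 0.5 * T ** 2 * ay,
            vx + T * ax,
            vy + T * ay]


def poisson_sample(rate, rng):
    """Draw a Poisson variate with Knuth's multiplication method
    (adequate for the moderate rates used here)."""
    limit, count, prod = math.exp(-rate), 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count


def target_measurements(state, gamma, sigma_e, rng):
    """Poisson(gamma) position measurements scattered around the target position."""
    n = poisson_sample(gamma, rng)
    return [(state[0] + rng.gauss(0.0, sigma_e),
             state[1] + rng.gauss(0.0, sigma_e)) for _ in range(n)]


rng = random.Random(1)
state = [0.0, 0.0, 10.0, 5.0]  # hypothetical initial state
for k in range(3):
    state = cv_step(state, T=1.0, sigma_w=2.0, rng=rng)
    scan = target_measurements(state, gamma=20.0, sigma_e=20.0, rng=rng)
    print(k, len(scan))
```

Clutter can be added in the same way by drawing a Poisson number of points uniformly over the surveillance region.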

The probability of survival is set to , and the probability of detection is . The birth intensity in the simulations is

with

The spawn intensity is

where , and is the target from which the new target is spawned.

5.2. Scenarios and Results

In this section, two scenarios are used to validate the performance of the SNNSP and SNNDP. The neighborhood list size K is set to 10. The density threshold of the SNNDP and DDP is set to 3. To keep the number of Gaussian components at a computationally tractable level, the pruning and merging algorithm is performed as in [33]. After pruning and merging, the ET-GMPHD filter selects the means of the Gaussian components that have weights greater than some threshold, e.g., 0.5, as multiple extended target state estimates.

The OSPA distance jointly evaluates the estimated number of targets and the estimates of the kinematic states. It was proposed as a metric for performance evaluation of multitarget filters in [34,35] and has been widely applied in recent years [20,21,22,36]. However, the OSPA distance is not suitable for comparing the performance of the ET-PHD filter with different partitioning approaches. If the cut-off parameter of the OSPA distance is relatively small, a large error in the estimated kinematic states cannot be reflected adequately. On the contrary, if the cut-off parameter is set to a large value, the OSPA distance stresses the error in the estimated number of targets and obscures the estimation error of the kinematic states.
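The effect of the cut-off parameter can be seen in a small sketch of the OSPA distance. It follows the commonly used definition (per-pair distances capped at the cut-off c, plus a penalty of c for each unmatched target) and finds the optimal assignment by brute force, which is only practical for a handful of targets. The function name and the example values are hypothetical.

```python
import math
from itertools import permutations


def ospa(X, Y, c, p=1):
    """OSPA distance of order p with cut-off c between two sets of points."""
    if len(X) > len(Y):
        X, Y = Y, X  # ensure X is the smaller set
    m, n = len(X), len(Y)
    if n == 0:
        return 0.0
    # Best assignment of the m points of X to distinct points of Y.
    best = min(
        sum(min(math.dist(x, Y[j]), c) ** p for x, j in zip(X, perm))
        for perm in permutations(range(n), m)
    )
    # Each of the n - m unmatched points costs the full cut-off.
    return ((best + (c ** p) * (n - m)) / n) ** (1.0 / p)


truth = [(0.0, 0.0)]
estimate = [(3.0, 4.0)]           # localization error of 5
print(ospa(truth, estimate, c=2))   # small cut-off caps the error
print(ospa(truth, estimate, c=10))  # larger cut-off reveals it
print(ospa(truth, [(0.0, 0.0), (100.0, 100.0)], c=10))  # one false target
```

With the small cut-off the localization error of 5 is capped at 2, while with the larger cut-off a single false target contributes as much as a substantial state error, which mirrors the trade-off described above.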

In this paper, the performance of the multi-target filter on kinematic states is evaluated by the mean error based on an —Wasserstein metric, which is defined as

where is the set of kinematic states; is the set of estimates of kinematic states; M is the number of Monte Carlo runs; is the Euclidean distance; represents the set of permutations of length m with elements taken from . To analyse the computational complexity of the ET-GMPHD filter with different partitioning algorithms, the number of partitions, the number of cells, the computational time of the partitioning algorithm, and the computational time of the ET-GMPHD filter recursion per scan are given. Each quantity is obtained by averaging over 100 Monte Carlo runs.

5.2.1. Differing Densities

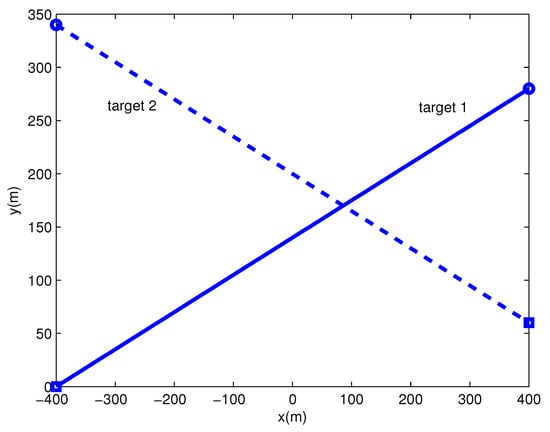

There are two extended targets with different measurement density in the surveillance region, and their trajectories are shown in Figure 6. Each extended target generates measurements per scan with Poisson rate 20. The density of measurements from target 2 is about five times that from target 1, and measurements from extended targets follow Gaussian distribution. Clutter measurements per scan are generated with Poisson rate 10, and uniformly distributed over the surveillance region.

Figure 6. Trajectories of extended targets (’o’ is the start point, is the end point).

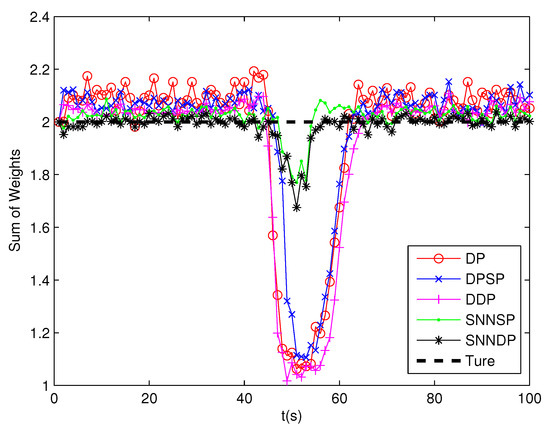

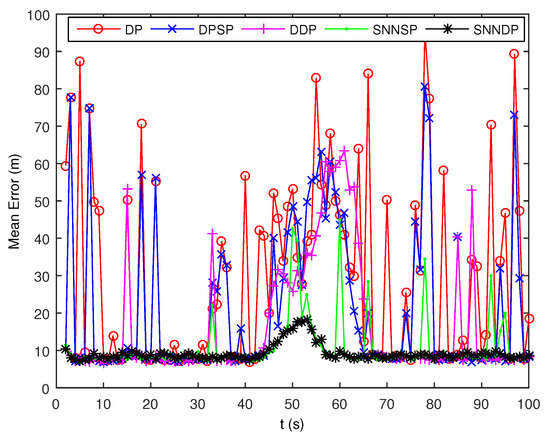

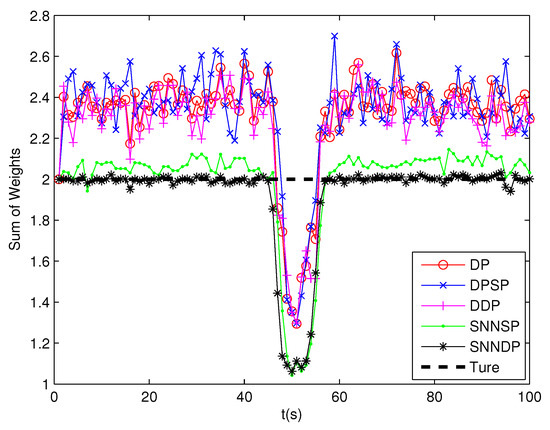

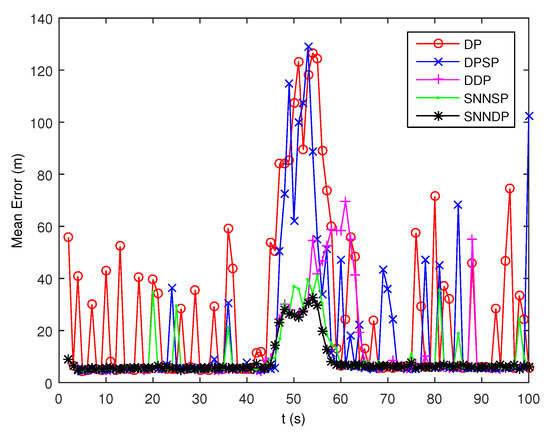

As seen from Figure 7 and Figure 8, the DPSP performs only very slightly better than the DP. The mean error of the kinematic states estimated by the ET-GMPHD filter using the SNNSP and SNNDP is smaller than that using the DP, DPSP and DDP, and the estimate of the number of targets is also better. The advantages are more evident when the two extended targets are spatially close at 45–60 s. The reason is that the SNN similarity measure reflects the local configuration of measurements in the measurement space, and thus is relatively insensitive to variations in density. In addition, because the DDP and SNNDP incorporate the density information of individual measurements, the ET-GMPHD filter with the DDP behaves better than that with the DP and DPSP, and likewise the ET-GMPHD filter using the SNNDP outperforms that using the SNNSP.

Figure 7. Sum of weights.

Figure 8. Mean error of estimated kinematic states.

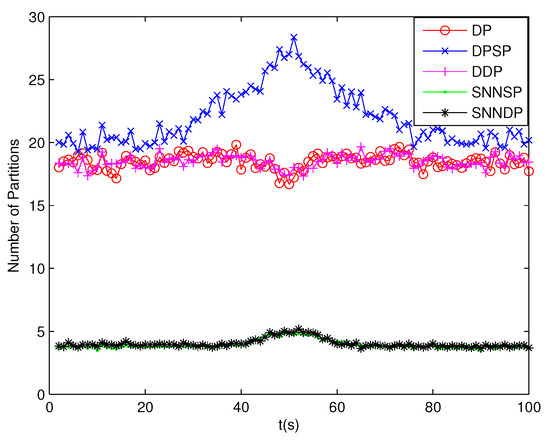

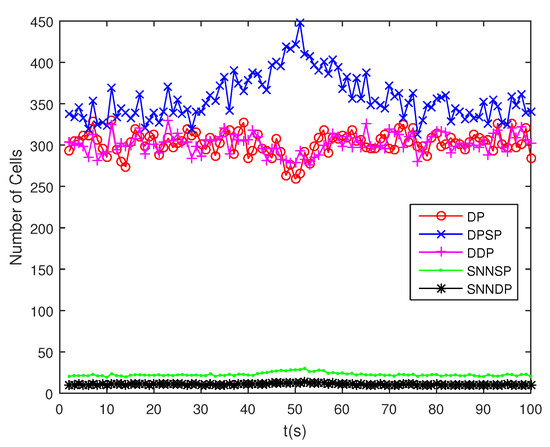

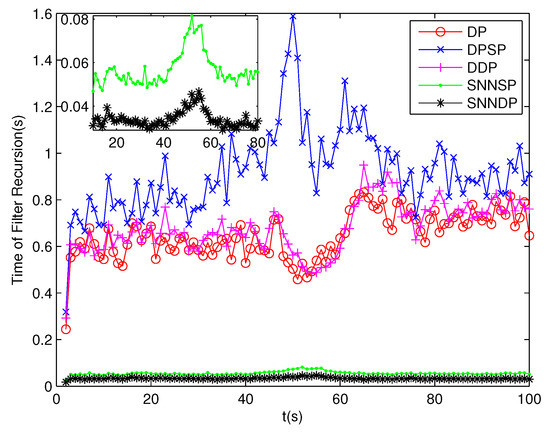

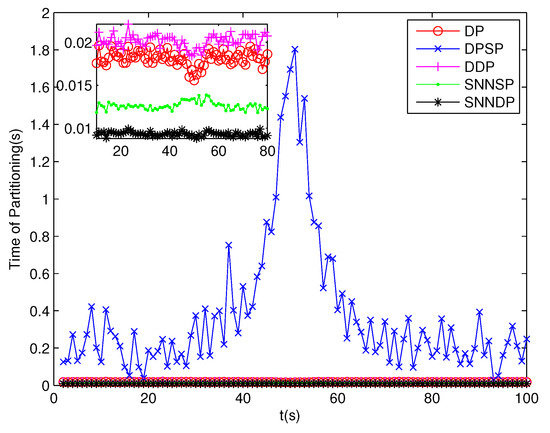

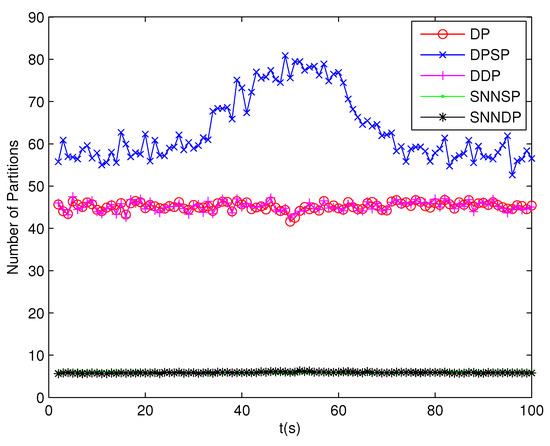

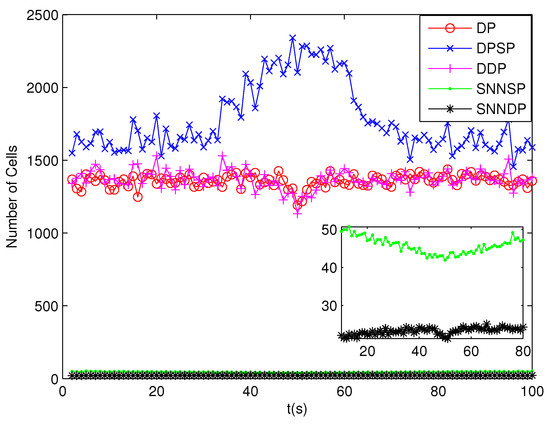

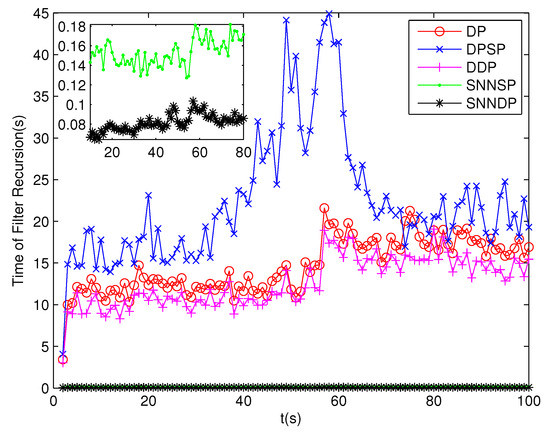

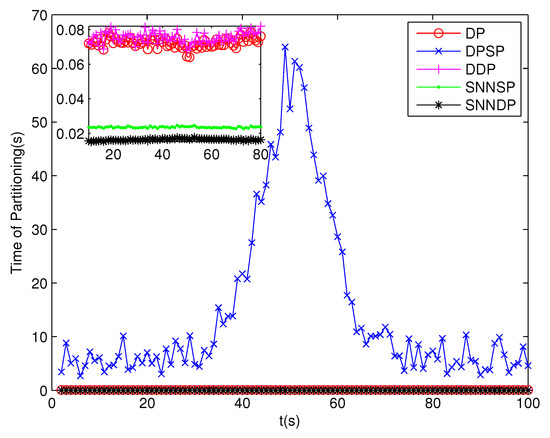

The number of partitions by the DP, DPSP and DDP grows rapidly with the number of measurements, while the number of partitions by the SNNSP and SNNDP mainly depends on the neighbor list size K, which is relatively small. Therefore, as can be seen from Figure 9, the number of partitions by the SNNSP and SNNDP is smaller than that by the DP, DPSP and DDP. The number of cells is reduced even further, to about one-tenth, as shown in Figure 10. Updating one Gaussian component of the predicted PHD intensity with the measurements in one cell yields one component of the posterior PHD intensity. Thus, the computational complexity of the ET-GMPHD recursion is strongly dependent on the number of cells in the resulting partitions. As can be seen from Figure 11, the computational time of the ET-GMPHD filter recursion using the SNNSP and SNNDP is dramatically smaller than that using the DP and DDP. Although the SNNSP and SNNDP need to compute the SNN similarity for each pair of measurements, their partitioning time is usually less than that of the DP and DDP because they produce fewer partitions, as shown in Figure 12. The time required by the DPSP is significantly longer because sub-partitioning adds further partitions. Note that the simulations are implemented in Matlab 2010 on an Intel Core i5-4570 3.20 GHz processor with 4 GB RAM.

Figure 9. Number of partitions.

Figure 10. Number of cells.

Figure 11. Computational time of the ET-GMPHD filter.

Figure 12. Computational time of Partitioning.

5.2.2. High Clutter

In this scenario with high clutter, the trajectories of two extended targets are the same as in the above scenario (shown in Figure 6). Clutter measurements per scan are generated with Poisson rate 40 (four times the clutter level in the first scenario). Each extended target generates measurements per scan with Poisson rate 20, and the densities of measurements from two targets are set to be the same.

Because clutter measurements are distributed randomly in the surveillance region, the number of distance thresholds increases greatly under high clutter. As can be seen from Figure 13 and Figure 14, the number of partitions and the number of cells obtained by the DP, DPSP and DDP are about ten and thirty times larger, respectively, than those obtained by the SNNSP and SNNDP. As a result, the ET-GMPHD filters based on the SNNSP and SNNDP show an evident decrease in computational time, as shown in Figure 15, and the partitioning time shows the same trend, as shown in Figure 16.

Figure 13. Number of partitions.

Figure 14. Number of cells.

Figure 15. Computational time of the ET-GMPHD filter.

Figure 16. Computational time of Partitioning.

The DP and DPSP tend to put each clutter measurement in its own cell, and thus the extended target PHD filter may mistake clutter for a target in a high clutter environment. Consequently, the number of targets is overestimated when using the DP and DPSP, as shown in Figure 17. As shown in Figure 18, the mean error of the kinematic states given by the ET-GMPHD filter based on the proposed approaches is smaller than that obtained using the DP, DPSP and DDP, especially when the extended targets are spatially close.

Figure 17. Sum of weights.

Figure 18. Mean error of estimated kinematic states.

6. Conclusions

This paper proposes two measurement set partitioning approaches for the ET-PHD filter based on the SNN similarity. The SNN similarity, which can reflect the local configuration of measurements in the measurement space, is applied to handle the situation that the density of measurements varies from target to target. To promote the reliability further, the SNNDP is developed by combining the SNN similarity with the DBSCAN algorithm. The resulting ET-PHD filter based on the proposed partitioning approaches decreases computational burden due to the smaller number of partitions. Especially in high clutter scenarios, a significant reduction in computational complexity can be achieved. Moreover, better estimates about the target number and the kinematic states can also be achieved in some challenging scenarios, such as differing densities of measurements, high clutter and proximity among extended targets. We will discuss the extension of the developed approaches for the extended target with complex shape, and extend our current result to the multi-sensor case with appropriate sensor management strategies [37,38] in our future work.

Author Contributions

Conceptualization, Y.H.; methodology, Y.H.; software, Y.H.; validation, Y.H. and C.H.; formal analysis, Y.H.; investigation, C.H.; resources, Y.H.; data curation, Y.H.; writing—original draft preparation, Y.H.; writing—review and editing, Y.H.; visualization, Y.H.; supervision, C.H.; project administration, Y.H.; funding acquisition, Y.H.

Funding

This research was funded by the Natural Science Foundation of Ningxia (NZ16004), the National Natural Science Foundation of China (61863030, 61650304, 61370037) and the Research Starting Funds for Imported Talents, Ningxia University (BQD2015007).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Koch, J.W. Bayesian approach to extended object and cluster tracking using random matrices. IEEE Trans. Aerosp. Electron. Syst. 2008, 44, 1042–1059.

2. Koch, J.W.; Feldmann, M. Cluster tracking under kinematical constraints using random matrices. Robot. Auton. Syst. 2009, 57, 296–309.

3. Feldmann, M.; Franken, D.; Koch, J.W. Tracking of extended objects and group targets using random matrices. IEEE Trans. Signal Process. 2011, 59, 1409–1420.

4. Baum, M.; Hanebeck, U.D. Random hypersurface models for extended object tracking. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Ajman, United Arab Emirates, 14–17 December 2009.

5. Baum, M.; Noack, B.; Hanebeck, U.D. Extended Object and Group Tracking with Elliptic Random Hypersurface Models. In Proceedings of the International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010.

6. Baum, M.; Hanebeck, U.D. Shape Tracking of Extended Objects and Group Targets with Star-Convex RHMs. In Proceedings of the International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011.

7. Aftab, W.; Hostettler, R.; De Freitas, A.; Arvaneh, M.; Mihaylova, L. Spatio-Temporal Gaussian Process Models for Extended and Group Object Tracking With Irregular Shapes. IEEE Trans. Veh. Technol. 2019, 68, 2137–2151.

8. Gilholm, K.; Salmond, D. Spatial distribution model for tracking extended objects. IEE Proc. Radar Sonar Navig. 2005, 152, 364–371.

9. Wahlström, N.; Özkan, E. Extended Target Tracking Using Gaussian Processes. IEEE Trans. Signal Process. 2015, 63, 4165–4178.

10. Zea, A.; Faion, F.; Baum, M.; Hanebeck, U.D. Level-set random hypersurface models for tracking nonconvex extended objects. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2990–3007.

11. Wieneke, M.; Koch, J.W. Probabilistic tracking of multiple extended targets using random matrices. Proc. SPIE Signal Data Process. Small Targets 2010, 7698, 769812.

12. Baum, M.; Noack, B.; Beutler, F.; Itt, D.; Hanebeck, U.D. Optimal Gaussian filtering for polynomial systems applied to association-free multi-target tracking. In Proceedings of the 14th International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011.

13. Vivone, G.; Braca, P. Joint probabilistic data association tracker for extended target tracking applied to x-band marine radar data. IEEE J. Ocean. Eng. 2016, 41, 1007–1019.

14. De Freitas, A.; Mihaylova, L.; Gning, A.; Schikora, M.; Ulmke, M.; Angelova, D.; Koch, W. A Box Particle Filter Method for Tracking Multiple Extended Objects. IEEE Trans. Aerosp. Electron. Syst. 2018.

15. Mahler, R. Multitarget Bayes filtering via first-order multi target moments. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1152–1178.

16. Mahler, R. PHD filters of higher order in target number. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 1523–1543.

17. Beard, M.; Reuter, S.; Granstrom, K.; Vo, B.T.; Vo, B.N.; Scheel, A. Multiple Extended Target Tracking with Labeled Random Finite Sets. IEEE Trans. Signal Process. 2016, 64, 1638–1653.

18. Mahler, R. PHD filters for nonstandard targets, I: Extended targets. In Proceedings of the International Conference on Information Fusion, Seattle, WA, USA, 6–9 July 2009.

19. Orguner, U.; Lundquist, C.; Granstrom, K. Extended target tracking with a cardinalized probability hypothesis density filter. In Proceedings of the 14th International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011.

20. Lian, F.; Han, C.; Liu, W.F.; Liu, J.; Sun, J. Unified cardinalized probability hypothesis density filters for extended targets and unresolved targets. Signal Process. 2012, 92, 1729–1744.

21. Granstrom, K.; Lundquist, C.; Orguner, U. A Gaussian mixture PHD filter for extended target tracking. In Proceedings of the International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010.

22. Granstrom, K.; Lundquist, C.; Orguner, U. Extended Target Tracking using a Gaussian Mixture PHD filter. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 3268–3286.

23. Yang, J.; Li, P.; Li, Z.; Yang, L. Multiple extended target tracking algorithm based on Gaussian surface matrix. J. Syst. Eng. Electron. 2016, 27, 279–289.

24. Zhang, Y.Q.; Ji, H.B. A novel fast partitioning algorithm for extended target tracking using a Gaussian mixture PHD filter. Signal Process. 2013, 93, 2975–2985.

25. Scheel, A.; Granström, K.; Meissner, D.; Reuter, S.; Dietmayer, K. Tracking and data segmentation using a GGIW filter with mixture clustering. In Proceedings of the 17th International Conference on Information Fusion, Salamanca, Spain, 7–10 July 2014.

26. Li, P.; Ge, H.; Yang, J.; Zhang, H. Shape selection partitioning algorithm for Gaussian inverse Wishart probability hypothesis density filter for extended target tracking. IET Signal Process. 2016, 10, 1041–1051.

27. Shen, X.; Song, Z.; Fan, H.; Fan, H.; Fu, Q. Generalized Distance Partitioning for Multiple-Detection Tracking Filter Based on Random Finite Set. IET Radar Sonar Navig. 2018, 12, 260–267.

28. McLachlan, G.J. Mahalanobis distance. Resonance 1999, 4, 20–26.

29. Jarvis, R.A.; Patrick, E.A. Clustering Using a Similarity Measure Based on Shared Nearest Neighbors. IEEE Trans. Comput. 1973, 22, 1025–1034.

30. Ester, M.; Kriegel, H.; Sander, J.; Xu, X.W. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 22–25 August 1996.

31. Gilholm, K.; Godsill, S.; Maskell, S.; Salmond, D. Poisson models for extended target and group tracking. In Proceedings of the Signal and Data Processing of Small Targets, San Diego, CA, USA, 15 September 2005.

32. Arthur, D.; Vassilvitskii, S. K-means++: The advantages of careful seeding. In Proceedings of the ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007.

33. Vo, B.-N.; Ma, W.-K. The Gaussian Mixture Probability Hypothesis Density Filter. IEEE Trans. Signal Process. 2006, 54, 4091–4104.

34. Schuhmacher, D.; Vo, B.T.; Vo, B.N. A consistent metric for performance evaluation of multi-object filters. IEEE Trans. Signal Process. 2008, 56, 3447–3457.

35. Ristic, B.; Vo, B.N.; Clark, D.; Vo, B.T. A Metric for Performance Evaluation of Multi-Target Tracking Algorithms. IEEE Trans. Signal Process. 2011, 59, 3452–3457.

36. Granstrom, K.; Orguner, U. A PHD Filter for Tracking Multiple Extended Targets using random matrices. IEEE Trans. Signal Process. 2012, 60, 5657–5671.

37. Lian, F.; Hou, L.M.; Liu, J.; Han, C.Z. Constrained multi-sensor control using a multi-target MSE bound and a δ-GLMB filter. Sensors 2018, 18, 2308.

38. Lian, F.; Hou, L.M.; Wei, B.; Han, C.Z. Sensor selection for decentralized large-scale MTT network. Sensors 2018, 18, 4115.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).