1. Introduction

An increasing number of urban manhole covers are being used in road administration (e.g., for electricity and gas supply, fire control, water supply and draining, communication, and sewage discharge) as rapid urban development in the digital age promotes constant growth, city planning improvements, and road construction. The daily management, maintenance, and inspection of these covers now involve increased workloads and difficulties. Frequent and heavy traffic also leads to cover damage and subsidence, which often reduces road and infrastructure safety, thus posing potential dangers to road users [

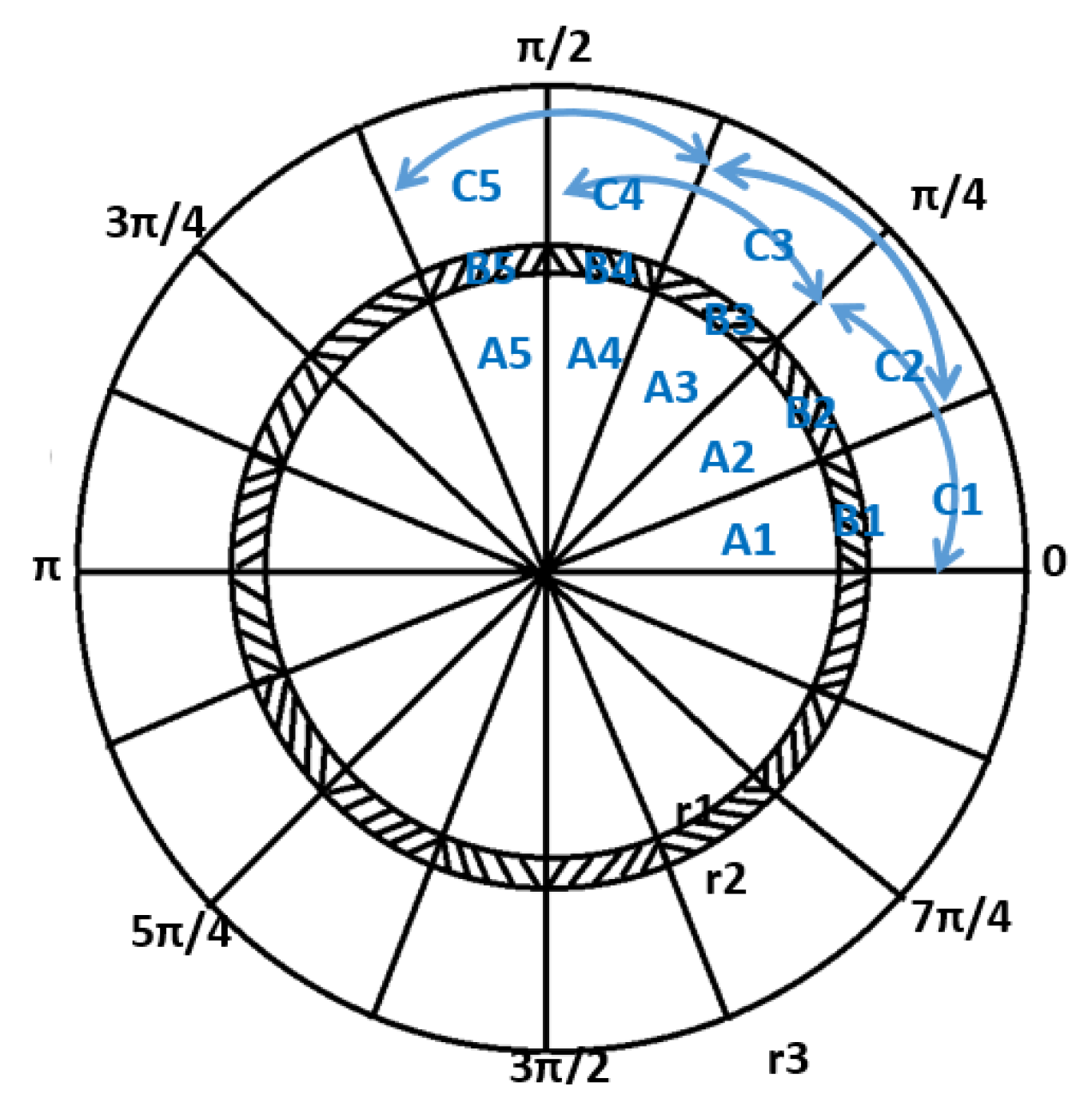

1,

2]. The manual detection and repair of defective manhole covers via traditional methods can no longer meet the demands of modern cities in terms of timeliness, safety, and inspection accuracy. The automation of urban manhole-cover management, which acquires accurate location and ownership information—and their inspection and maintenance at higher operational safety levels—is now a primary focus in intelligent road administrations. The recent emergence and rapid advancement of mobile mapping [

1,

2,

3] present effective methods for the monitoring and maintenance of defective manhole covers, while also functioning as data sources. The mobile mapping system is a new-generation multi-platform and multi-band mobile information acquisition system, this system is mounted on a carrier vehicle and integrated with various sensors, such as a laser scanner, inertial navigation system, global navigation and positioning system, and digital camera [

4,

5,

6,

7,

8,

9,

10,

11,

12,

13]. It offers a high-precision and high-efficiency means of acquiring large quantities of three-dimensional (3D) point cloud and image data in real-world environments, and has now become an indispensable component in the evolution of digital cities, providing long-term support in promoting the construction and management of modern cities and the transformation to digital management and operation. It also presents new possibilities and methods for the automated location, extraction, and identification of urban manhole covers.

Few studies to date have addressed the monitoring and maintenance of urban manhole covers. Nan et al. [14] developed a practical underground sensing system to detect manholes beneath urban pavements. Fu et al. [15] developed an intelligent system for manhole cover detection and management that deploys sensors at each manhole cover to provide real-time monitoring. However, this approach requires a considerable, and constantly increasing, amount of equipment to monitor all the manhole covers in a given urban area. Timofte et al. [16] presented a multi-view scheme for manhole detection and recognition that used a van-mounted camera and the global positioning system (GPS) for data acquisition, but its ability to detect manhole covers was limited, and ownership information could not be obtained because of the low image resolution. Monitoring all the manhole covers via radio-frequency identification tagging is also slow and inconvenient [17]. Murasaki et al. [18] estimated the degree of manhole cover wear with a texture recognition approach based on local binary pattern (LBP) image features. Ji et al. [19] presented a manhole detection method that employed multi-view matching and feature extraction based on close-range images, inertial navigation system data, and light detection and ranging (LiDAR) data, and used Canny edge detection for manhole cover recognition. However, this method depends on the detected edge of the manhole cover, which may be difficult to detect because the manhole cover and the road have similar intensities. Yu et al. [2] used multi-scale tensor voting and distance thresholds to extract manholes from two-dimensional georeferenced intensity feature images [1] generated from mobile LiDAR data, but this method is computationally intensive. Yu et al. [20] detected manhole covers with a supervised deep-learning model applied to georeferenced intensity feature maps generated by inverse-distance-weighted interpolation of laser points. This method yielded better manhole-cover detection results, but it relies solely on laser points from the RIEGL VMX-450 mobile laser scanning system. Consequently, detailed cover ownership information could not be acquired, and the method focused only on manhole cover detection, without identification or assessment.

Two problems are identified in the above manhole-cover detection methods. First, the data obtained by conventional mobile-mapping systems [1,2,20] are not high-definition ground images and therefore cannot provide detailed manhole-cover ownership information (e.g., ownership unit, use, and cover specifications), which is necessary for road administration. Second, most researchers determine manhole cover locations using either point cloud or image data alone, whereas combining both data sources achieves higher precision. Furthermore, no manhole cover assessments have been conducted during detection and identification to determine whether a given cover needs to be repaired or replaced. In summary, current conventional mobile-mapping systems do not meet the growing demands of manhole cover detection, identification, maintenance, and management for urban road administration.

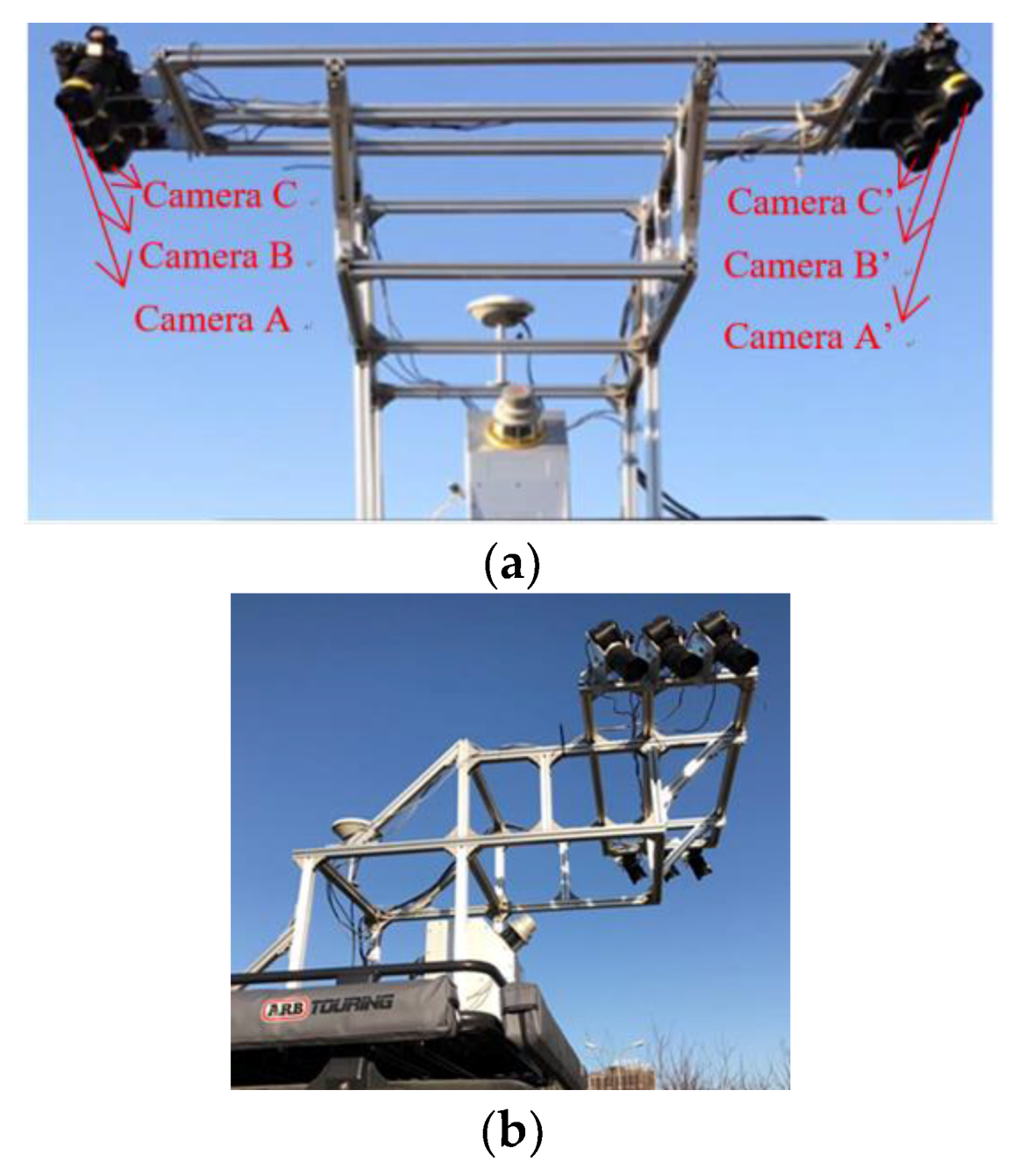

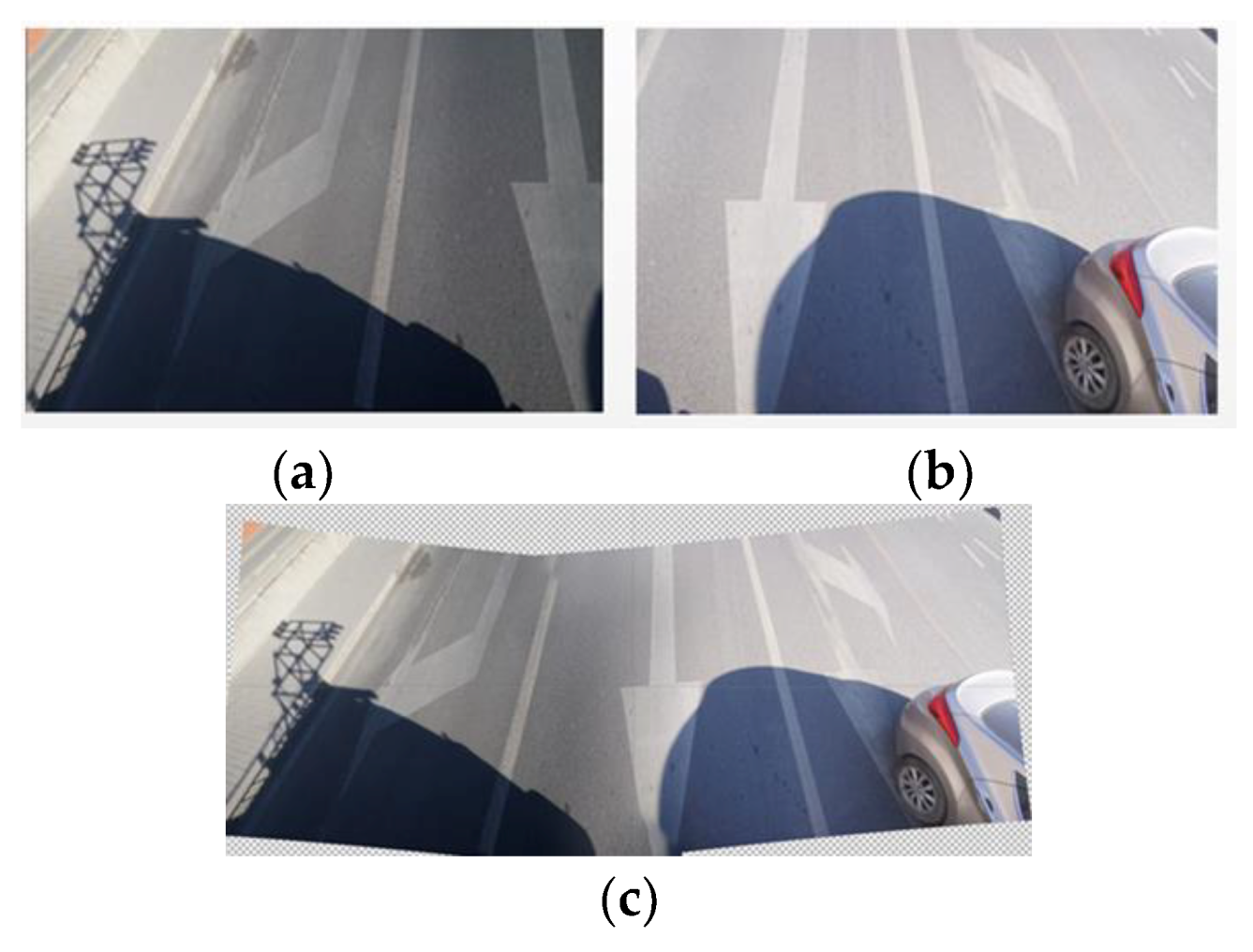

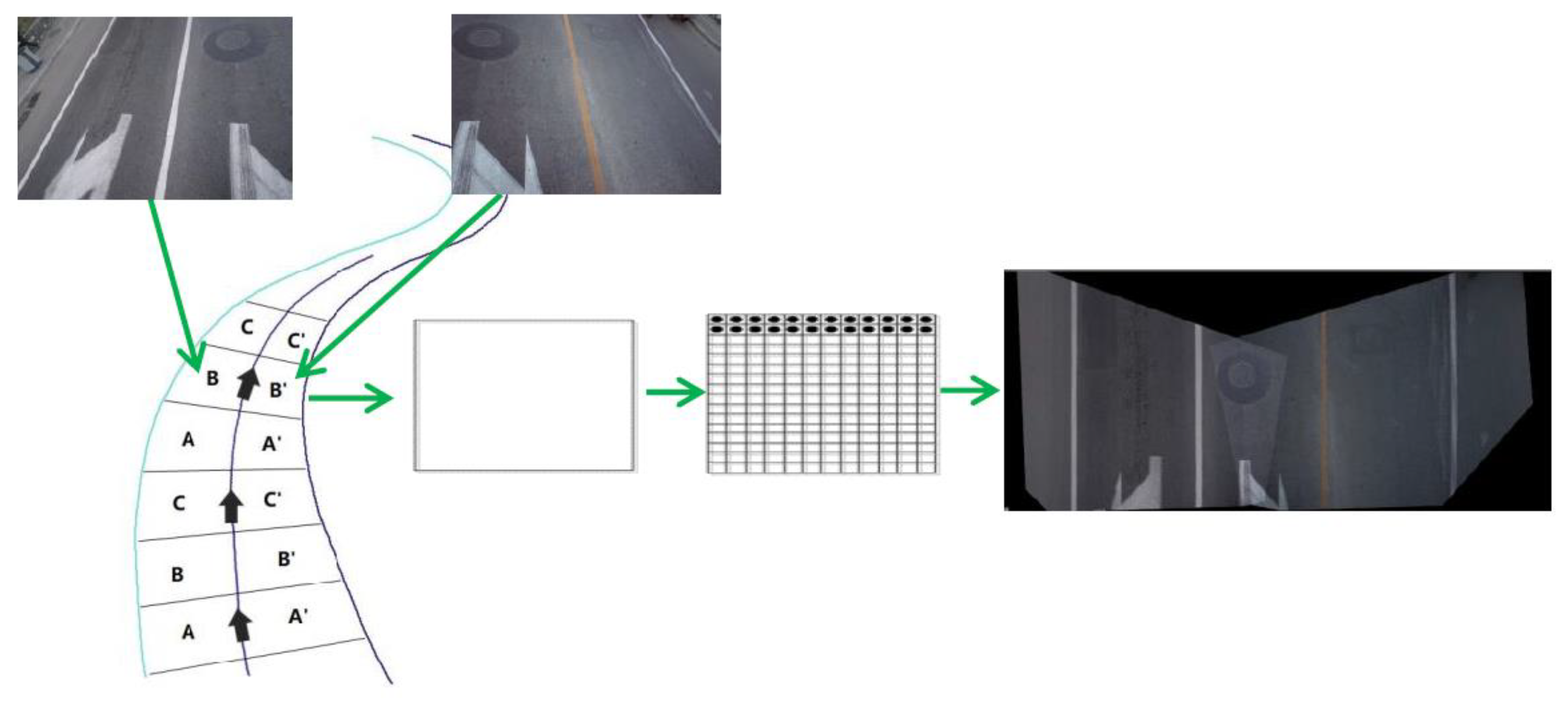

This study proposes a modified manhole-cover detection, recognition, and assessment system that integrates two image-acquisition devices. An improved sequential exposure scheme, using a symmetrical arrangement of multiple cameras in combination with a high-density laser scanner, is proposed to acquire high-density point clouds and ultra-high-resolution ground images simultaneously. For data processing, an improved method based on the histogram of oriented gradients (HOG) descriptor with symmetry features, a support vector machine (SVM), and statistical analysis is proposed. The improved system, combined with the proposed data-processing method, enables the efficient and rapid detection, identification, and assessment of manhole covers. In this paper, we first describe the developed manhole-cover detection system, then introduce the key technologies used in manhole cover detection and maintenance, and finally analyze the experimental results.

3. Results and Discussion

3.1. Experimental Data

The experimental datasets are acquired using the SSW-D Mobile LiDAR System described in Section 2 to verify the feasibility, practicality, and effectiveness of the proposed method. The SSW-D integrates a RIEGL VUX-1HA laser sensor, SONY A7 cameras, an inertial measurement unit, a GPS antenna, an odometer, and other components; the sensor parameters are listed in Table 1. The RIEGL VUX-1HA laser sensor in this customized mobile scanning system has a laser pulse repetition rate of 1014 kHz, a scan frequency (number of scan lines recorded per second) of 250 Hz, and a measuring range of 2–200 m at 80% reflectivity. The SONY A7 cameras have a focal length of 12 mm, a pixel size of 6 μm, and an image size of 24 megapixels. A POS2010 inertial navigation system (Beijing) [3] is also used.
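The scanner and camera parameters above fix the basic sampling geometry. As a minimal sketch, assuming the scanner spreads its pulses evenly over a 360° scan line and using a hypothetical 3 m range and 2 m camera-to-ground distance (neither value is given in the text), the along-track scan-line spacing at the 40 km/h survey speed reported in this section, the spacing between points on one scan line, and the pinhole ground sampling distance of one camera pixel can be estimated as follows. The function names are illustrative only.

```python
import math


def scan_line_spacing(speed_kmh: float, scan_freq_hz: float) -> float:
    """Along-track distance (m) between consecutive scan lines."""
    return (speed_kmh / 3.6) / scan_freq_hz


def point_spacing_on_line(prr_hz: float, scan_freq_hz: float,
                          range_m: float, fov_deg: float = 360.0) -> float:
    """Approximate distance (m) between neighbouring points on one scan line.

    Assumption: pulses are spread evenly over the field of view, and the
    arc length range * angular_step approximates the point spacing.
    """
    points_per_line = prr_hz / scan_freq_hz
    angular_step_rad = math.radians(fov_deg) / points_per_line
    return range_m * angular_step_rad


def ground_sampling_distance(pixel_size_m: float, focal_length_m: float,
                             distance_m: float) -> float:
    """Pinhole-camera footprint (m) of one pixel at the given distance."""
    return pixel_size_m * distance_m / focal_length_m


if __name__ == "__main__":
    # Sensor values from Section 3.1; the 3 m range and 2 m camera distance
    # are hypothetical values chosen only for illustration.
    print(f"scan-line spacing: {scan_line_spacing(40, 250) * 1000:.1f} mm")
    print(f"point spacing:     {point_spacing_on_line(1014e3, 250, 3.0) * 1000:.1f} mm")
    print(f"camera GSD:        {ground_sampling_distance(6e-6, 0.012, 2.0) * 1000:.1f} mm")
```

With these inputs the sketch gives about 44 mm between scan lines, about 4.6 mm between points on a line, and about 1 mm per image pixel. Because the scan-line spacing grows linearly with speed in this simple model, it is consistent with the recommendation, made later in this section, to limit vehicle speed.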

For convenience, an urban road in Beijing, China, with a large number of manhole covers is chosen as the experimental area to verify the effectiveness and feasibility of the proposed method. The vehicle is driven at 40 km/h during data acquisition. The entire study area is shown in Figure 9a, and Figure 9b–f shows samples A–E, which are located within the area in Figure 9a. A total of 216.885 km of roads were surveyed, and 1.80 × 10⁵ high-definition ground images and 6016 laser files were acquired. The laser files contain 1.36 × 10⁹ laser-scanned points at a point density of 1800 points/m². The data include various complex ground features, such as vehicles, trees, buildings, and acoustic barriers, and meet road administration requirements for effective and accurate manhole cover detection. The following experimental analyses are performed on the five road-section samples. The data acquired with the proposed system are used for the automated extraction of manhole covers, and the proposed method is used for their subsequent detection, identification, and assessment. This experiment confirms the feasibility of the acquisition system and the effectiveness of the method.
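The reported density also constrains the 0.01 m intensity-image resolution used in Section 3.2: at about 1800 points/m², a 0.01 m × 0.01 m pixel receives 1800 × 0.0001 = 0.18 points on average, so many pixels receive no point at all unless the raster is interpolated. The sketch below is a hypothetical per-cell mean-intensity rasterization; the function name `intensity_image` and the (x, y, z, intensity) column layout are assumptions, not the authors' implementation. Empty cells are left as NaN for a later interpolation step, such as inverse-distance weighting.

```python
import numpy as np


def intensity_image(points: np.ndarray, cell: float = 0.01):
    """Rasterize ground points into a mean-intensity image (hypothetical sketch).

    points: array of shape (N, 4) with columns x, y, z, intensity (assumed layout).
    Returns (image, x_min, y_max); pixel (r, c) covers the square whose
    upper-left corner is (x_min + c * cell, y_max - r * cell).
    Cells that receive no point are NaN and would need interpolation.
    """
    x, y, inten = points[:, 0], points[:, 1], points[:, 3]
    x_min, y_max = x.min(), y.max()
    cols = np.floor((x - x_min) / cell).astype(int)
    rows = np.floor((y_max - y) / cell).astype(int)  # row 0 at the top (max y)
    n_rows, n_cols = rows.max() + 1, cols.max() + 1
    flat = rows * n_cols + cols
    sums = np.bincount(flat, weights=inten, minlength=n_rows * n_cols)
    counts = np.bincount(flat, minlength=n_rows * n_cols)
    image = np.full(n_rows * n_cols, np.nan)
    hit = counts > 0
    image[hit] = sums[hit] / counts[hit]  # mean intensity per occupied cell
    return image.reshape(n_rows, n_cols), x_min, y_max


if __name__ == "__main__":
    # Expected number of points per 0.01 m pixel at the reported density.
    print(f"points per pixel: {1800 * 0.01 ** 2:.2f}")
    pts = np.array([[0.000, 0.0, 0.0, 10.0],
                    [0.005, 0.0, 0.0, 20.0],
                    [0.025, 0.0, 0.0, 30.0]])
    img, _, _ = intensity_image(pts)
    print(img)
```

Averaging intensities per cell keeps the sketch simple; a production pipeline would more likely interpolate, as Yu et al. [20] did with inverse-distance weighting.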

3.2. Manhole Cover Detection

Ground point extraction is the first step in manhole cover detection from laser-scanned point clouds. Selecting ground points and eliminating non-ground points significantly reduces the computational workload for large laser datasets. Ground points are extracted using the method outlined in our previous paper [3] and are used directly for manhole cover detection and identification. Intensity-based images are generated from the ground points at a resolution of 0.01 m. The modified HOG–SVM joint method with symmetry features used for extraction in this study requires training samples, which are created directly from the intensity images; in total, 13,838 positive samples and 10,846 negative samples are created. Five road-section samples are randomly selected in the study area to show the extraction results in more detail, as shown in Table 2. The first column shows the ground points, the second column shows the manhole-cover detection results as green rectangles, the third column shows the extracted manhole-cover outlines as blue circles, and the fourth column shows these results superimposed on the laser points. The proposed method effectively extracts the locations and outlines of the manhole covers from the intensity images generated from the laser points.
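The exact HOG–SVM formulation with symmetry features is given in Section 2 and is not reproduced here. As a rough, hypothetical sketch of the idea, the code below builds a simplified HOG-like descriptor (per-cell histograms of unsigned gradient orientation, without the block normalization of full HOG) and appends two mirror-symmetry scores, motivated by the symmetric, circular shape of manhole covers. The function names, the 8-pixel cell size, the 9 orientation bins, and the use of left-right and up-down flip correlation as the symmetry feature are all assumptions. Descriptors computed this way from the positive and negative samples could then train an SVM classifier, for example scikit-learn's `sklearn.svm.SVC`, which is not shown.

```python
import numpy as np


def hog_like(patch: np.ndarray, cell: int = 8, bins: int = 9) -> np.ndarray:
    """Simplified HOG: magnitude-weighted orientation histogram per cell.

    Omits the block normalization and bin interpolation of full HOG.
    """
    gy, gx = np.gradient(patch.astype(float))          # row, column gradients
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0        # unsigned, [0, 180)
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)
    h, w = patch.shape
    feats = []
    for r in range(0, h - cell + 1, cell):
        for c in range(0, w - cell + 1, cell):
            b = bin_idx[r:r + cell, c:c + cell].ravel()
            m = mag[r:r + cell, c:c + cell].ravel()
            feats.append(np.bincount(b, weights=m, minlength=bins))
    v = np.concatenate(feats)
    return v / (np.linalg.norm(v) + 1e-12)              # global L2 normalization


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation between two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def symmetry_features(patch: np.ndarray) -> np.ndarray:
    """Mirror-symmetry scores; a centred round cover scores close to 1."""
    p = patch.astype(float)
    return np.array([ncc(p, p[:, ::-1]), ncc(p, p[::-1, :])])


def descriptor(patch: np.ndarray) -> np.ndarray:
    """Feature vector for the classifier: HOG-like part plus symmetry part."""
    return np.concatenate([hog_like(patch), symmetry_features(patch)])


if __name__ == "__main__":
    yy, xx = np.mgrid[:32, :32]
    disk = (((xx - 15.5) ** 2 + (yy - 15.5) ** 2) < 12 ** 2).astype(float)
    noise = np.random.default_rng(0).random((32, 32))
    print(descriptor(disk).shape)
    print("disk symmetry: ", symmetry_features(disk))
    print("noise symmetry:", symmetry_features(noise))
```

The symmetry scores separate a centred disk (both near 1) from unstructured texture (near 0), which is the kind of cue that could help reject non-circular look-alikes.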

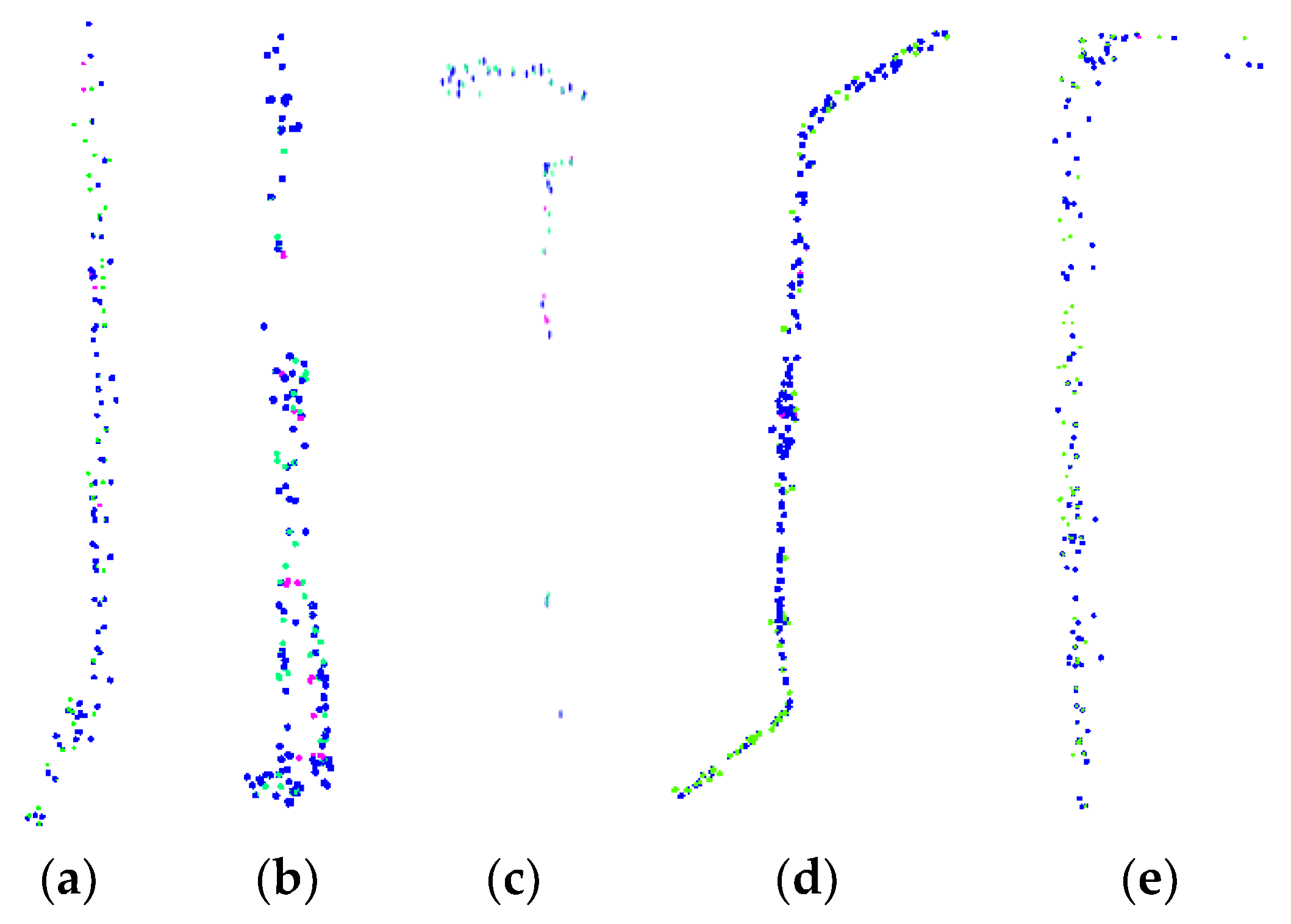

Manhole cover extraction from the images begins with generating ground orthophotos from the images; manhole covers are then extracted and detected using the method described in Section 2.2.3. Because the laser-scanned point clouds and image data agree closely, the two extraction results overlap, and the duplicates need to be removed. In total, 7015 positive samples and 8185 negative samples are generated from the ground orthophotos. The robustness of the proposed method is verified using the data from road samples A–E, as shown in Figure 10, where the blue points are the extraction results from the point clouds, the green points are the results from the image data, and the magenta points are undetected covers. Many of the structures detected from the two data sources overlap, as seen in Figure 10. However, some manhole covers can only be extracted from the intensity-based images, while others can only be extracted from the ground orthophotos. Improved results are therefore achieved by merging the extraction outcomes of the two data sources and removing the duplicates, as given in Table 3.
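Merging the two detection sets and removing repeats can be sketched as a greedy distance test on cover centres. This is a minimal illustration, not the authors' procedure: it assumes both sources yield planimetric centres (x, y) in the same georeferenced frame, keeps every laser detection, and adds an image detection only if it lies more than a hypothetical 0.5 m from every detection already kept.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # planimetric cover centre (x, y) in metres


def merge_detections(laser: List[Point], image: List[Point],
                     radius: float = 0.5) -> List[Point]:
    """Union of laser and image detections with duplicates removed.

    An image detection is kept only if it is farther than `radius` from every
    detection already kept (hypothetical rule and threshold).
    """
    merged: List[Point] = list(laser)
    for p in image:
        if all(math.dist(p, q) > radius for q in merged):
            merged.append(p)
    return merged


if __name__ == "__main__":
    laser = [(0.0, 0.0), (10.0, 0.0)]
    image = [(0.2, 0.1), (20.0, 0.0)]  # the first one duplicates a laser detection
    print(merge_detections(laser, image))  # [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
```

Keeping the laser position when both sources detect the same cover is an arbitrary choice in this sketch; averaging the two positions, or preferring the source with better localization accuracy, would be equally plausible.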

We computed the completeness (CPT), correctness (CRT), and F-measure [3] to assess the extraction results of the proposed method against manual inspection. CPT is the percentage of the actual manhole covers that are detected, and CRT is the percentage of the detected manhole covers that are correct. CPT = CA/TA, CRT = CA/TC, and F-measure = 2 × (CPT × CRT)/(CPT + CRT), where CA is the number of correctly extracted manhole covers, TA is the total number of manhole covers determined by manual inspection, and TC is the total number of extracted manhole covers. These results are compared with those of our previous method [28], which was based on the HOG descriptor without symmetry features and extracted manhole covers from laser points only, as given in Table 4.
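The metric definitions above can be checked with a short sketch. The function below implements CPT, CRT, and F-measure exactly as defined; the counts in the usage lines are hypothetical. Substituting the definitions shows that the F-measure simplifies to 2 × CA/(TA + TC). Plugging the average CPT (94.27%) and CRT (96.18%) reported for the proposed method into the F-measure formula reproduces the reported 95.22%.

```python
def detection_metrics(ca: int, ta: int, tc: int):
    """Completeness, correctness, and F-measure as defined in Section 3.2.

    ca: correctly extracted covers; ta: covers found by manual inspection;
    tc: all extracted covers.
    """
    cpt = ca / ta
    crt = ca / tc
    f = 2 * cpt * crt / (cpt + crt) if ca > 0 else 0.0
    return cpt, crt, f


def f_measure(cpt: float, crt: float) -> float:
    """Harmonic mean of completeness and correctness."""
    return 2 * cpt * crt / (cpt + crt)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    cpt, crt, f = detection_metrics(ca=90, ta=100, tc=95)
    print(f"CPT={cpt:.4f} CRT={crt:.4f} F={f:.4f}")  # F equals 2*90/(100+95)
    # Average values reported for the proposed method.
    print(f"F from reported averages: {f_measure(0.9427, 0.9618):.2%}")  # 95.22%
```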

By combining the laser points and images as in the proposed method, the average CRT, CPT, and F-measure for manhole cover detection are 96.18%, 94.27%, and 95.22%, respectively. Compared with our previous method [28], which used only laser points, the proposed method, which combines laser points and images, achieves higher CPT, CRT, and F-measure. Although the proposed method obtained better extraction results, it failed to extract 5.37% of the manhole covers, and 3.38% and 4.93% of the manhole covers were incorrectly extracted from the laser points and the ground images, respectively. Three key factors likely cause these extraction failures: (1) data integrity, as vehicles, pedestrians, and other obstructions cause unavoidable data loss in both the laser point and image acquisitions; (2) laser point density, which depends on the range, angle, long-term vehicle rolling, heat, weather, and other factors, with higher laser point densities yielding better extraction results; and (3) interference from ground objects similar to manhole covers (e.g., bicycle markings and wheels), which can easily be mistaken for manhole covers. The following three measures can be taken to improve the extraction results. First, the vehicle speed should be limited to avoid the reduction in point cloud density caused by fast travel. Second, data acquisition should be performed in the daytime during off-peak traffic hours to improve work efficiency and the integrity of the acquired data. Finally, interference from similar ground objects can be addressed by adding negative samples containing these interfering features or by incorporating a deep-learning method into the combined laser point/ground image-based manhole cover extraction.

It can be concluded from the above discussion that the two data sources acquired with the modified mobile LiDAR system presented in this study, processed via the proposed method, can effectively automate manhole cover detection and achieve better extraction results. The complementary advantages of the two data sources improve the accuracy and completeness of the results. These better extraction results also validate the effectiveness and feasibility of the proposed modification scheme.

3.3. Manhole Cover Identification

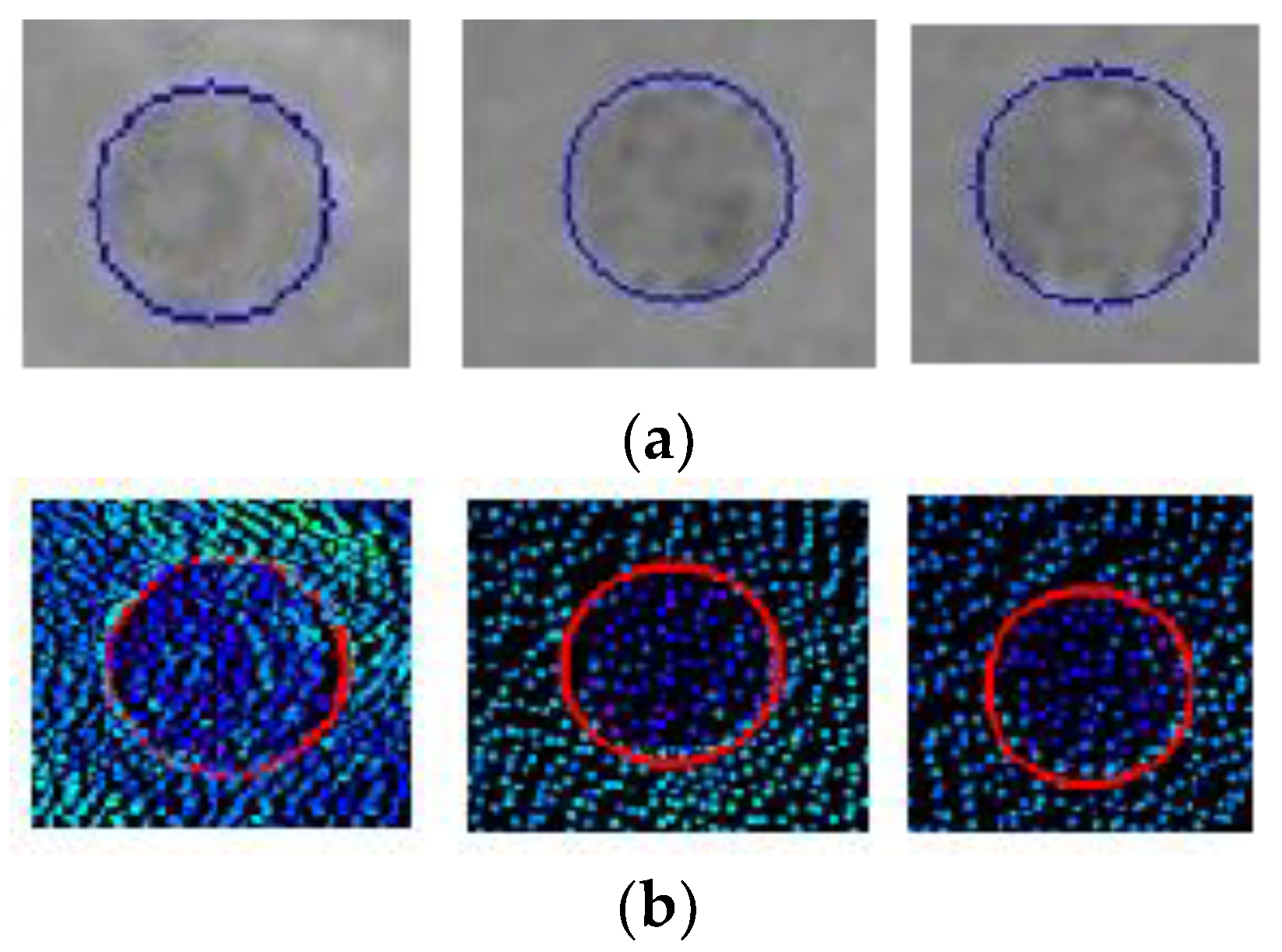

Manhole cover identification includes maintenance assessment and ownership information acquisition, which are performed on the basis of the extraction results, as described in Section 2.2.4. Manhole cover maintenance needs are determined from the elevation fluctuations of the manhole cover, its rim, and the surrounding areas, which requires the manhole cover outline. The outline is obtained via the proposed method: it is computed from the intensity-based images (Figure 11a) and then superimposed on the point cloud data (Figure 11b).
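To obtain a diameter from an extracted outline, one common option, assumed here rather than taken from the paper, is an algebraic (Kåsa) least-squares circle fit to the outline points, followed by snapping the fitted diameter to the nominal 60 cm or 80 cm sizes mentioned in this section. The function names and the synthetic outline are hypothetical.

```python
import numpy as np


def fit_circle(xy: np.ndarray):
    """Algebraic (Kasa) least-squares circle fit.

    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F over all outline points
    (array of shape (N, 2)) and returns centre (cx, cy) and radius r.
    """
    x, y = xy[:, 0], xy[:, 1]
    a = np.column_stack([x, y, np.ones_like(x)])
    b = -(x ** 2 + y ** 2)
    (d, e, f), *_ = np.linalg.lstsq(a, b, rcond=None)
    cx, cy = -d / 2.0, -e / 2.0
    r = np.sqrt(cx ** 2 + cy ** 2 - f)
    return cx, cy, r


def nominal_diameter(diameter_m: float, sizes=(0.6, 0.8)) -> float:
    """Snap a measured diameter to the closest nominal size (0.6 or 0.8 m)."""
    return min(sizes, key=lambda s: abs(s - diameter_m))


if __name__ == "__main__":
    # Synthetic outline: noisy circle of radius 0.31 m centred at (5, 2).
    rng = np.random.default_rng(1)
    t = np.linspace(0.0, 2.0 * np.pi, 60, endpoint=False)
    outline = np.column_stack([5 + 0.31 * np.cos(t), 2 + 0.31 * np.sin(t)])
    outline += rng.normal(scale=0.005, size=outline.shape)
    cx, cy, r = fit_circle(outline)
    print(f"centre=({cx:.3f}, {cy:.3f}) diameter={2 * r:.3f} m "
          f"-> nominal {nominal_diameter(2 * r)} m")
```

The algebraic fit is linear and fast, which suits large batches of covers; a geometric (orthogonal-distance) fit would be less biased on partial or very noisy outlines.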

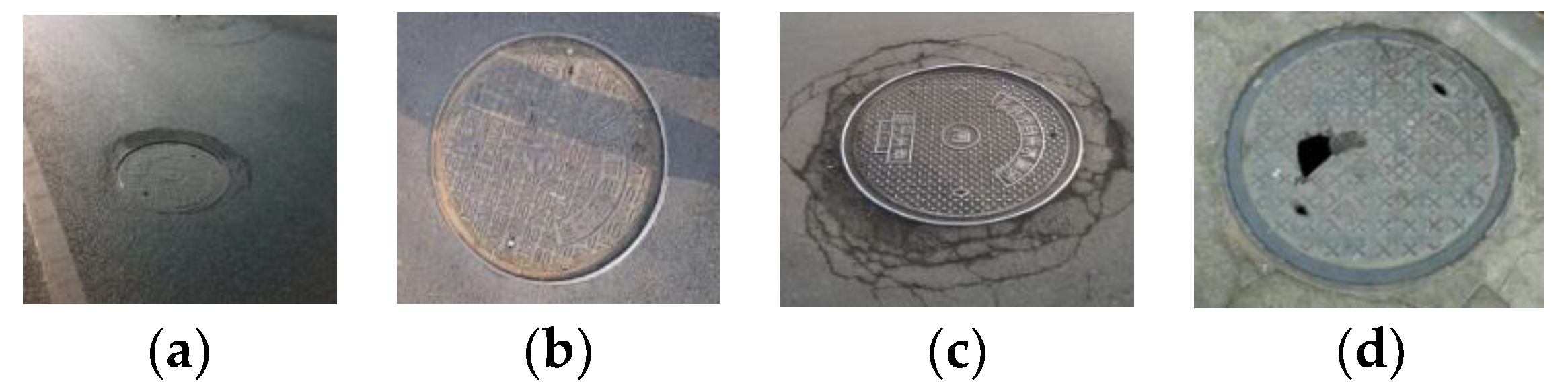

The elevation fluctuations of the manhole cover, its rim, and the surrounding areas can be calculated from the manhole cover outline to identify whether the cover requires maintenance or replacement. Ownership information is acquired based on the manhole location, with the aid of the real-time magnification function in the SWDY program developed by our team. The photo of each manhole cover is retrieved directly from its location to acquire the ownership information, which can also be used to verify the correctness of the test results. The manhole cover diameter, generally either 60 or 80 cm, is calculated from the outline data. The number of covers with abnormal elevations in road sections A–E is determined by statistical analysis: 2 damaged manhole covers, 10 sinking manhole covers, 13 sinking manhole rings, and 18 broken manhole covers are identified, accounting for 0.29%, 1.45%, 1.88%, and 2.61% of the manholes on these five road sections, respectively. This indicates that 6.24% of the manholes require maintenance. These results show the potential to guide on-site maintenance directly. For the entire experimental dataset, the proposed method identified 10,399 manhole covers from the laser point cloud data and 9759 from the ground image data. Manually detecting such a large number of covers would entail a heavy workload, high risk, and low detection accuracy.
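The elevation-fluctuation test can be sketched by comparing median elevations inside the fitted outline, in a narrow rim band, and in a surrounding annulus that serves as the local road-surface reference. This is a hypothetical illustration: the band widths (5 cm rim, 30 cm surroundings) and the 1 cm tolerance are placeholder values rather than the authors' criteria, and only the sinking-cover and sinking-ring cases are modeled; damaged and broken covers would need additional cues.

```python
import numpy as np


def elevation_check(points: np.ndarray, cx: float, cy: float, r: float,
                    rim_width: float = 0.05, surround_width: float = 0.30,
                    tol: float = 0.01) -> dict:
    """Compare cover, rim, and surrounding elevations around a fitted outline.

    points: (N, 3) array of x, y, z ground points. All widths and the
    tolerance are in metres and are placeholder values.
    """
    d = np.hypot(points[:, 0] - cx, points[:, 1] - cy)
    z = points[:, 2]
    cover = z[d < r]
    rim = z[(d >= r) & (d < r + rim_width)]
    around = z[(d >= r + rim_width) & (d < r + rim_width + surround_width)]
    if min(cover.size, rim.size, around.size) == 0:
        raise ValueError("a region contains no points")
    ref = np.median(around)  # local road-surface reference elevation
    dz_cover = float(np.median(cover) - ref)
    dz_rim = float(np.median(rim) - ref)
    return {"dz_cover": dz_cover, "dz_rim": dz_rim,
            "sinking_cover": dz_cover < -tol, "sinking_rim": dz_rim < -tol}


if __name__ == "__main__":
    # Synthetic flat road with a cover (r = 0.3 m) sunk by 3 cm.
    g = np.arange(-1.0, 1.0001, 0.02)
    xx, yy = np.meshgrid(g, g)
    zz = np.where(np.hypot(xx, yy) < 0.3, -0.03, 0.0)
    pts = np.column_stack([xx.ravel(), yy.ravel(), zz.ravel()])
    print(elevation_check(pts, 0.0, 0.0, 0.3))
```

Medians are used instead of means so that a few outlier points (for example, debris on the cover) do not dominate the comparison.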

The modified acquisition system presented in this study and the corresponding manhole-cover detection method transform the surveying, data processing, inspection, and maintenance of manhole covers into a primarily office-based task, which improves both work efficiency and detection accuracy while reducing the risks generally associated with road works. The manhole cover detection and identification procedure developed in this study has the potential to improve the management and maintenance of manhole covers for road administration.

Therefore, the developed system and the proposed method are effective for detecting and identifying manhole covers. The system acquires abundant ground data, including high-density, high-precision laser-scanned point clouds and high-resolution ground images. The manhole cover information extracted via the proposed method supports the data collection, inquiry, inspection, and statistical analysis of resources for road administration. Furthermore, the improved acquisition scheme and extraction method are not limited to manhole covers but can also be applied to other ground features, such as road markings and road edge boundaries.

Compared with traditional manual methods, this method improves automation, efficiency, accuracy, and safety. However, it also has limitations. Manhole covers located in grass are easily occluded during data acquisition, which causes their extraction and identification to fail; such covers still need to be checked with manual detection methods.

4. Conclusions

A sequential exposure scheme using multiple cameras in a symmetric arrangement is proposed as a modification and upgrade of the original acquisition design for manhole cover detection. Equipment models were carefully selected and installed to meet the requirements for effective manhole cover detection. High-density, high-precision laser-scanned point clouds and ultra-high-resolution ground images are acquired with this modified system, which also makes it possible to extract manhole cover conditions and ownership information. Modified HOG and SVM algorithms are applied to the intensity-based images generated from the point clouds and to the orthophotos generated from the ground images, respectively, to obtain the location, ownership, and state-of-health information of the manhole covers. The average detection correctness is 96.18%, the completeness is 94.27%, and the F-measure is 95.22%. The method described in this paper is highly efficient, accurate, and safe. It has been applied in practice and is currently used to inspect and analyze manhole covers on the roads of Beijing. This method provides strong technical support for the data acquisition, surveying, and inspection of manhole covers in road administration, and also promotes the intelligent construction and management of urban infrastructure.

While satisfactory experimental results and verifications are obtained with the manhole cover detection and identification methodology presented here, there is still room for improvement in the following areas. Deep learning should be integrated into the detection and extraction of manhole cover information to further improve the extraction results. Future research should also implement automatic manhole-cover identification, focusing on any unique identifiers engraved on the covers. Furthermore, data collection during peak traffic times should be avoided to reduce occlusion and data gaps.