1. Introduction

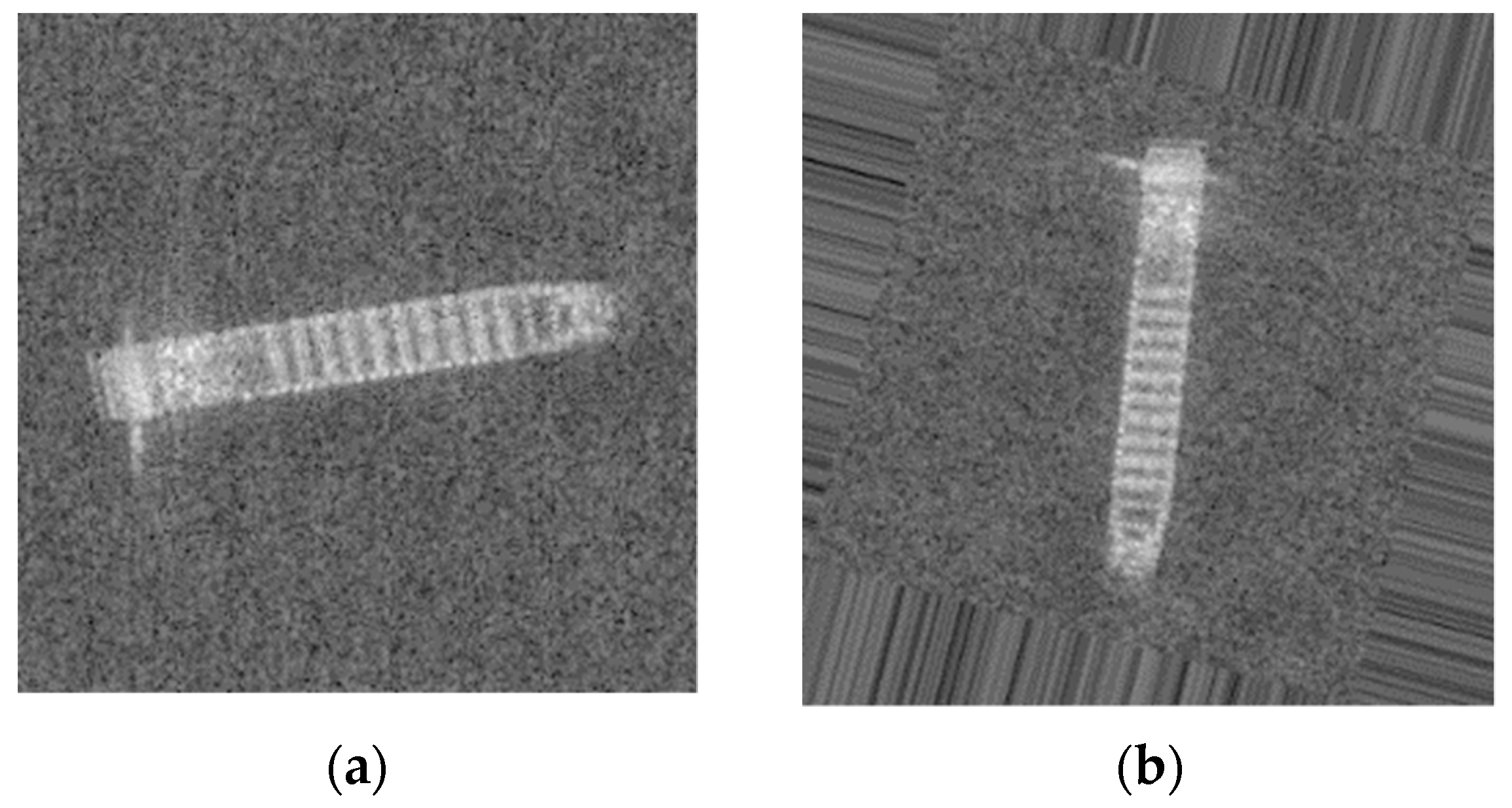

Synthetic aperture radar (SAR) is an active Earth observation system that can be mounted on aircraft, satellites, spacecraft, and other platforms. It can observe the ground day and night and in all weather conditions. Recent SAR satellites, e.g., RADARSAT-2 and TerraSAR-X [1,2], have made more high-resolution SAR images available, and these images enable many applications. Pieralace et al. [3] present a simple and very effective filtering technique that is able to process full-resolution SAR images. Gambardella et al. [4] present a methodological approach for fast and repeatable monitoring that can be applied to higher-resolution data. Ship classification is an important application of SAR images.

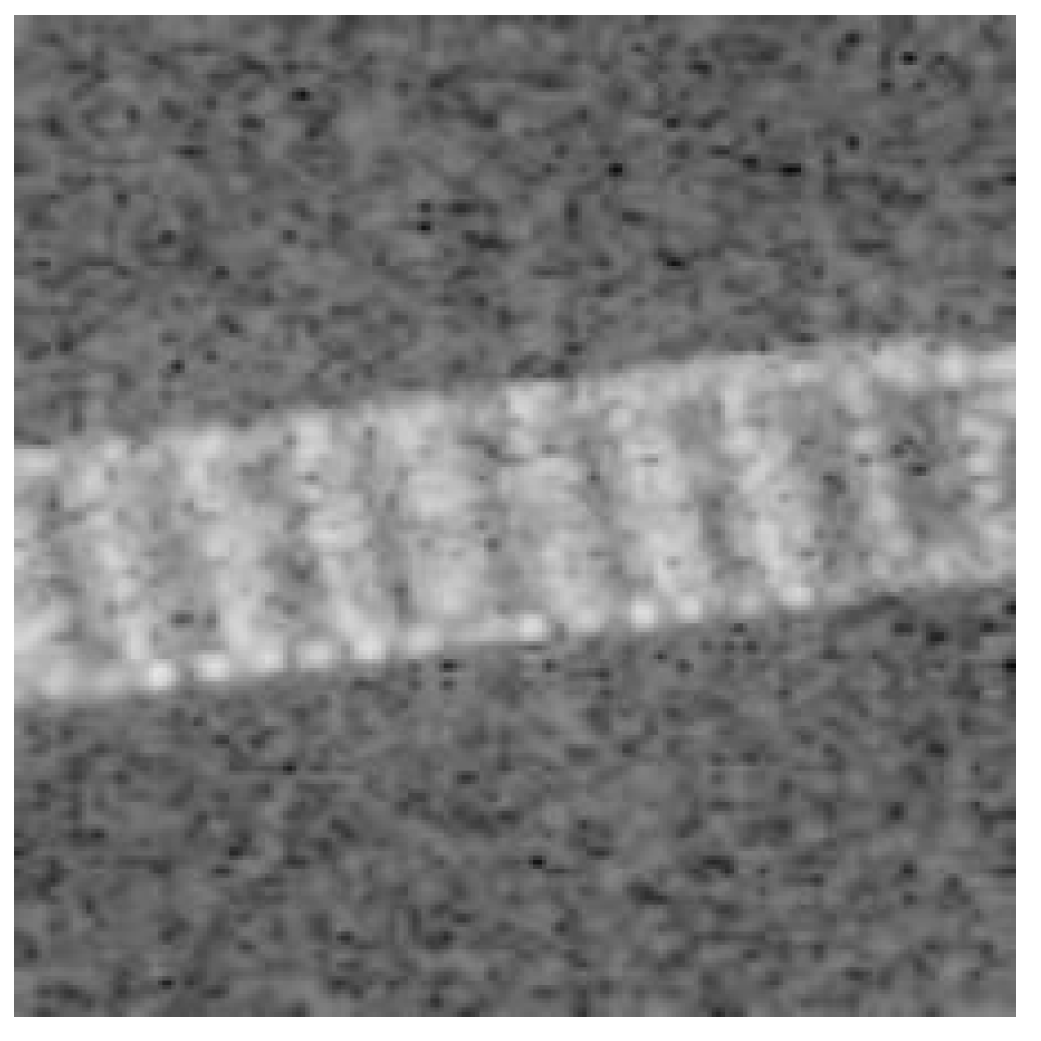

Researchers have usually relied on traditional methods for ship classification, which consist of image processing, feature extraction and selection, and classification. Feature extraction is a key step. Geometric features and scattering features are widely used [5]. Geometric features include ship area, ship rectangularity, moment of inertia, fractal dimension, spindle direction angle, and length-to-width ratio [6]. Scattering features include superstructure scattering features [7], three-dimensional scattering features [8], radar cross section (RCS) [9], and the symmetric scattering characterization method (SSCM) [10]. As for classifiers, artificial neural networks (ANNs) [11] can establish a general classification scheme through training, which has made them widely used in ship classification. The support vector machine (SVM) [12] is also a popular model. Researchers have also proposed methods that achieve high classification accuracy [13,14,15]; however, these methods place high demands on the features and classifiers, so they cannot readily be applied to other datasets. With the development of neural networks, researchers now focus on processing SAR images with deep neural networks (DNNs) [16,17,18].
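To make the geometric features above concrete, the following is a minimal sketch of how three of them (area, rectangularity, and length-to-width ratio) could be computed from a binary ship mask. The function name `geometric_features`, the mask input, and the use of an axis-aligned bounding box are assumptions made for illustration; the cited works may define these features differently, for example with a rotated minimum-area rectangle.

```python
import numpy as np


def geometric_features(mask):
    """Compute simple geometric features from a binary ship mask (2-D array).

    Assumption: the ship is roughly aligned with the image axes, so an
    axis-aligned bounding box is used instead of a rotated rectangle.
    """
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask contains no ship pixels")

    # Area is the number of pixels labelled as ship.
    area = int(rows.size)

    # Extent of the axis-aligned bounding box, in pixels.
    height = int(rows.max() - rows.min() + 1)
    width = int(cols.max() - cols.min() + 1)
    length, beam = max(height, width), min(height, width)

    return {
        "area": area,
        # Fraction of the bounding box that the ship fills (1.0 for a rectangle).
        "rectangularity": area / (height * width),
        "length_to_width": length / beam,
    }


# Hypothetical example: a 2 x 6 rectangular "ship" inside a 4 x 10 chip.
example_mask = np.zeros((4, 10), dtype=bool)
example_mask[1:3, 2:8] = True
example_features = geometric_features(example_mask)
```

Features such as these would then be passed to a classifier; the choice of features and classifier is what the traditional pipeline has to tune for each dataset.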

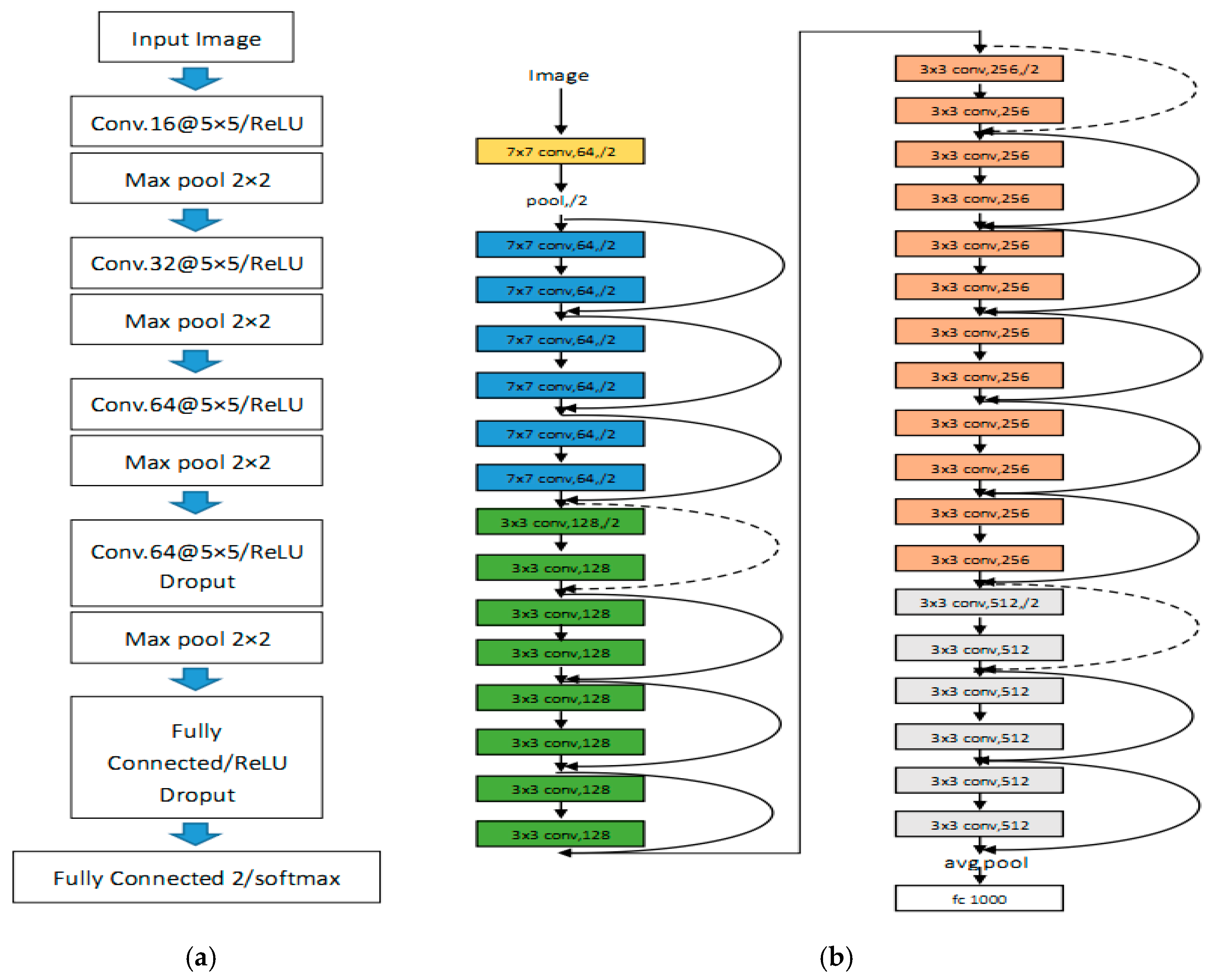

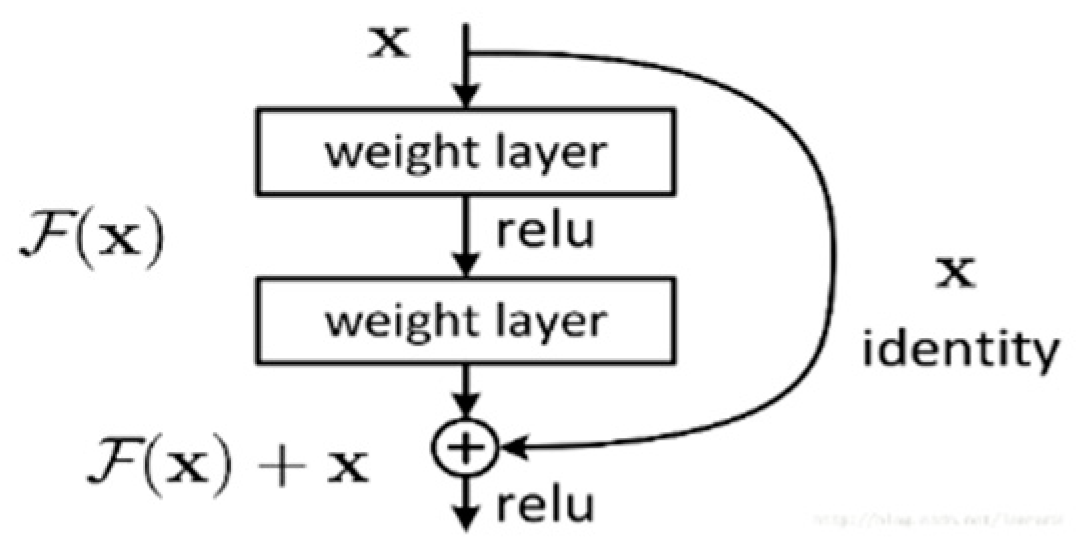

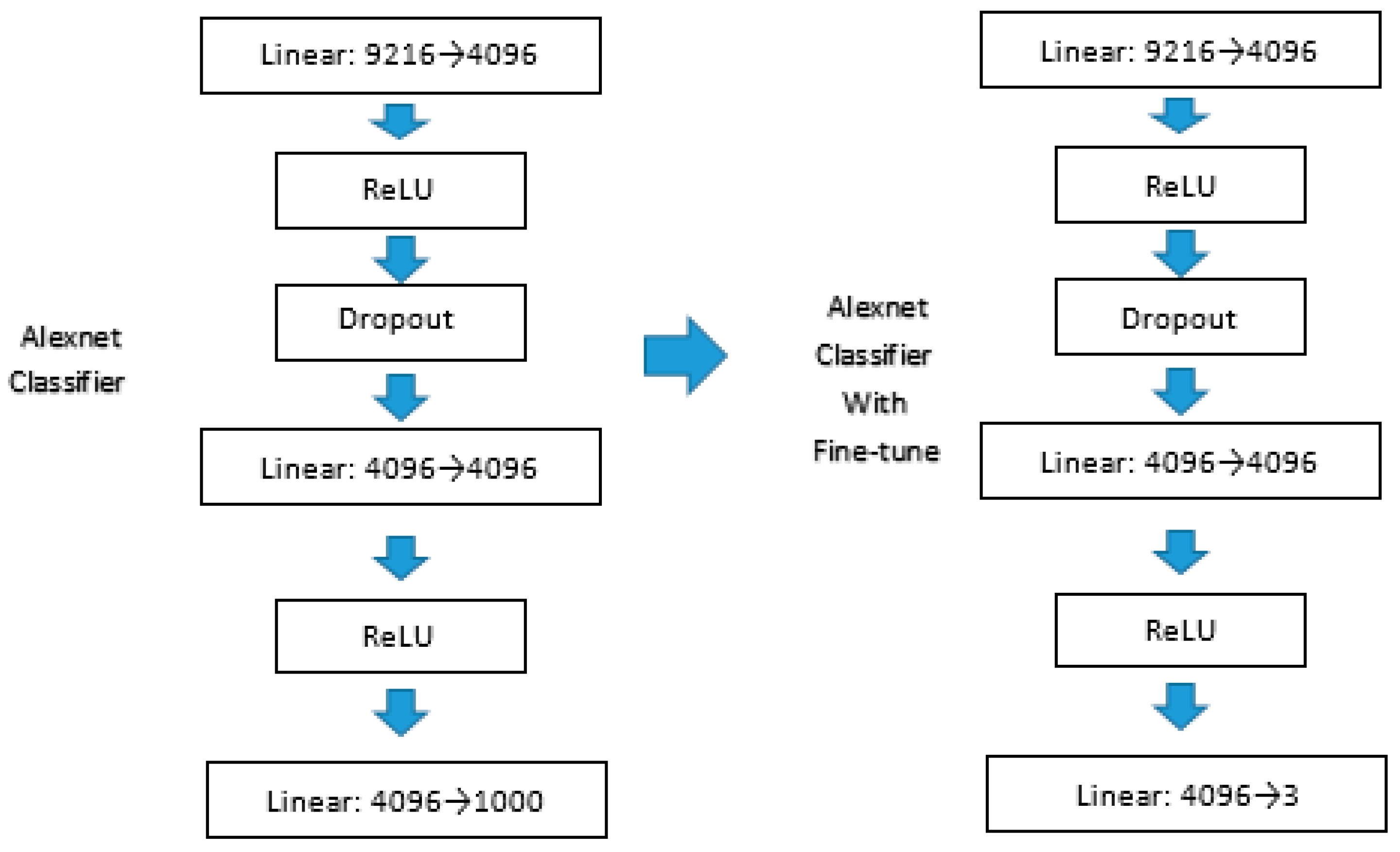

The deep neural network (DNN) is artificial neural network (ANNs)’s promotion, which includes lots of hidden layers between the input and output layers [

19,

20]. DNN can give good expression of an object by its deep architectures and performs well in modeling complex nonlinear relationships [

21]. DNN have many popular models, such as recurrent neural networks (RNNs) [

22] and convolutional deep neural networks (CNNs). Nowadays, CNNs [

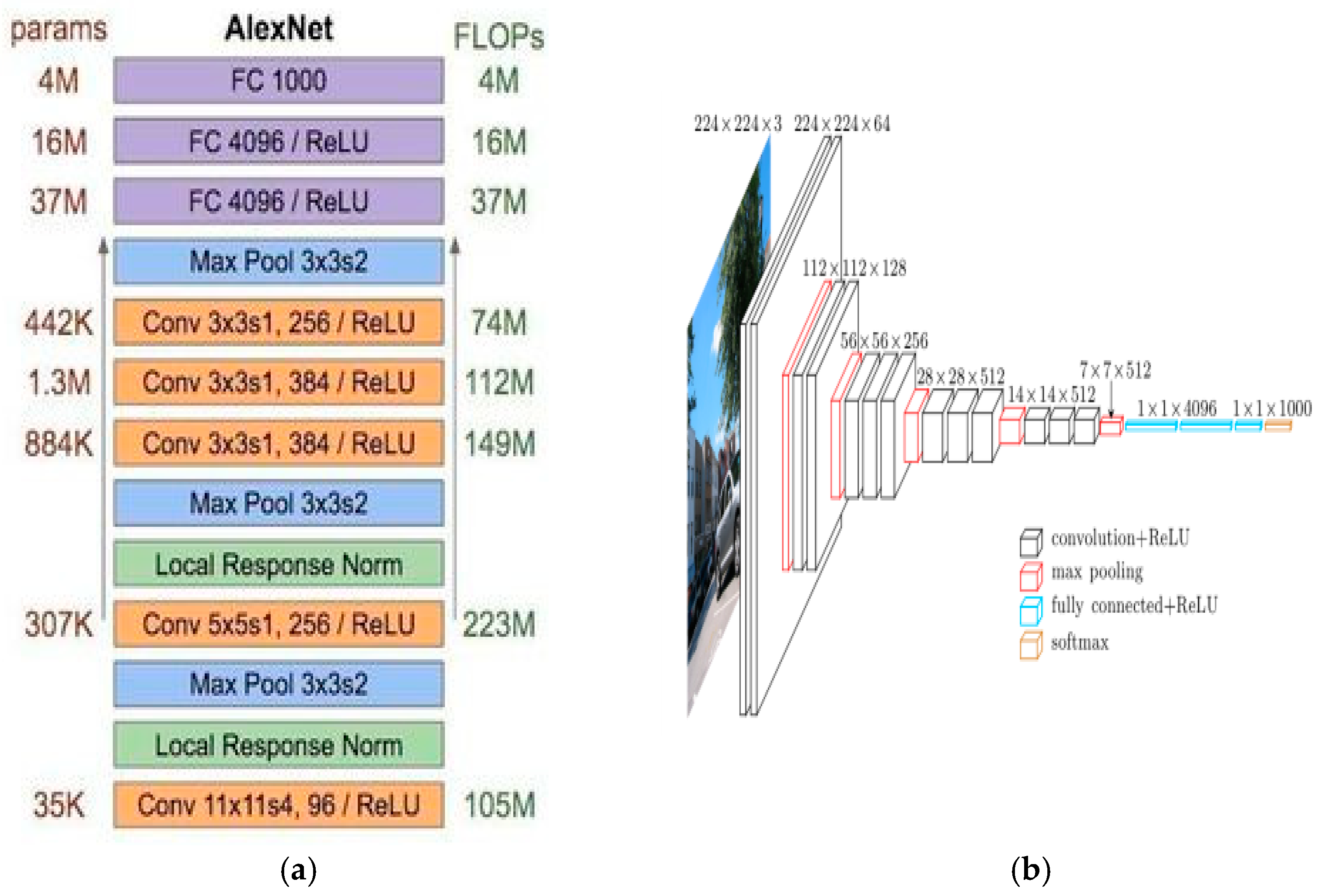

23] are playing an important role in detection and recognition. One of the most remarkable results was its application in the ImageNet data set. The ImageNet dataset includes over 15 million images with 22,000 different categories. By using a model called Alexnet [

24], the researchers achieved a remarkable result, which have reduced the error rates of 8.2% in top-one error rates and 8.7% in top-five error rates than the previous work [

24,

25]. Furthermore, CNNs have achieved many impressive results in computer the vision area, such as handwritten digits recognition [

26], traffic sign recognition [

27], and face recognition [

28].
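The top-one and top-five error rates mentioned above can be illustrated with a short sketch. The function `top_k_error` and the toy scores are hypothetical and only show the usual definition: a sample counts as an error when its true label is not among the k highest-scoring classes.

```python
def top_k_error(scores, labels, k=1):
    """Return the fraction of samples whose true label is not in the top k.

    scores: list of per-class score lists, one per sample.
    labels: list of true class indices, one per sample.
    """
    if not scores or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and equal in length")

    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy example with three samples and three classes (made-up numbers).
toy_scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
]
toy_labels = [1, 1, 0]
toy_top1 = top_k_error(toy_scores, toy_labels, k=1)
toy_top2 = top_k_error(toy_scores, toy_labels, k=2)
```

The top-k error can never exceed the top-one error for the same predictions, which is why top-five figures are always the lower of the two.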

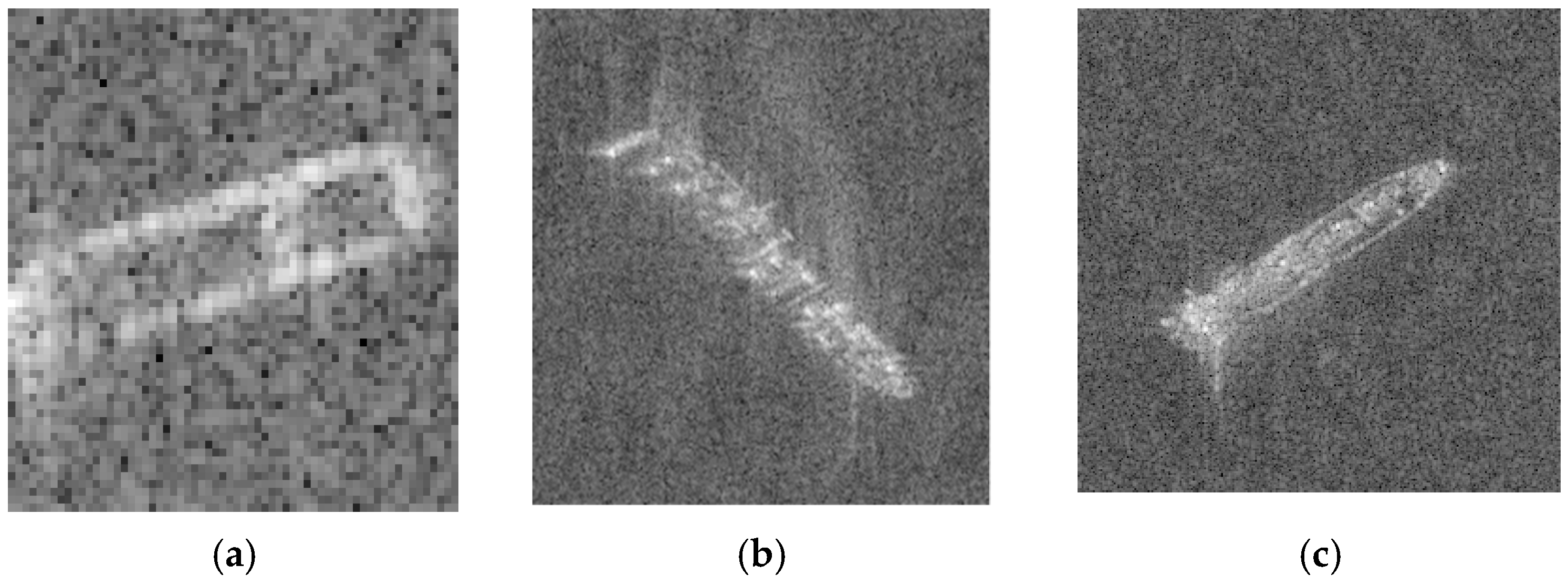

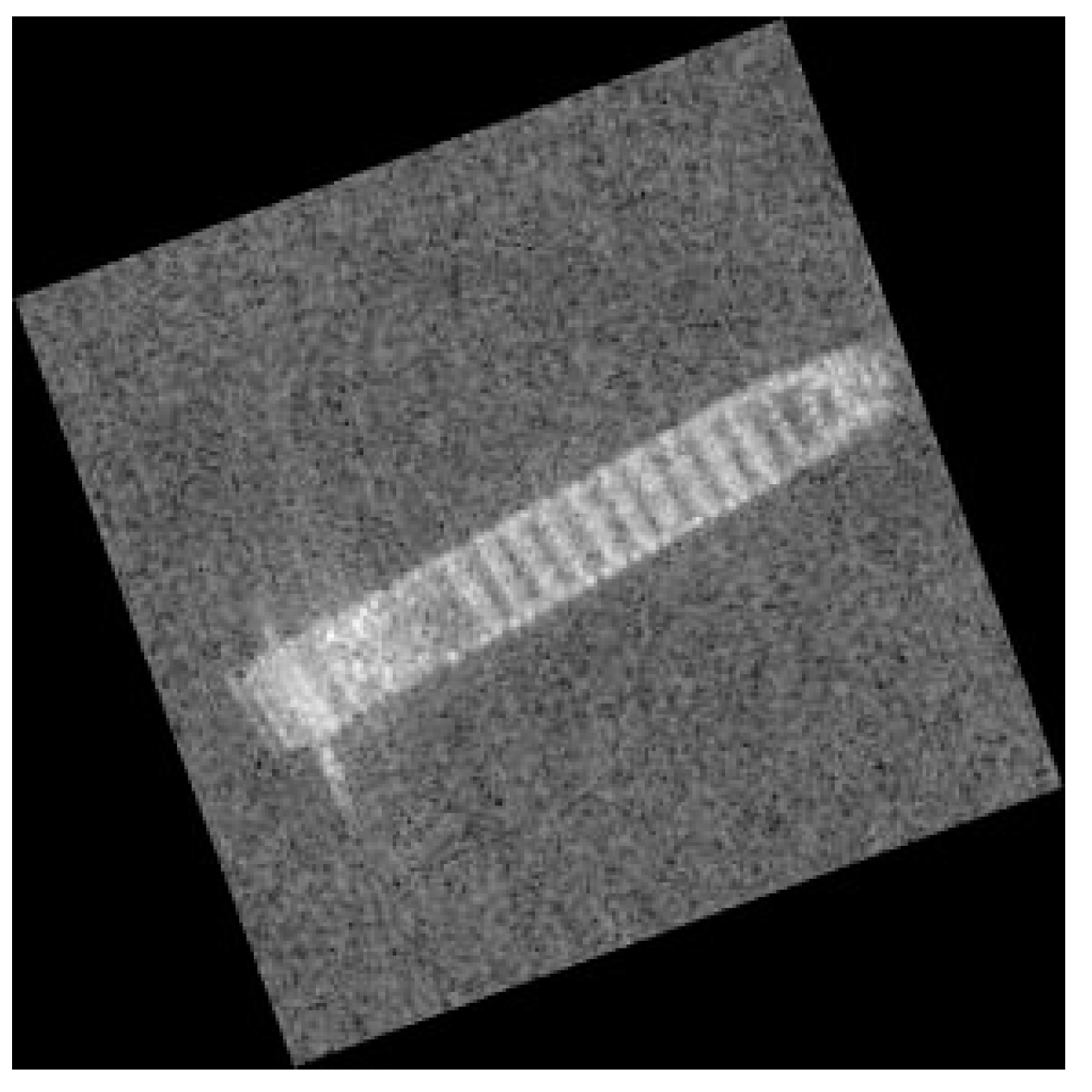

Deep learning applications in SAR images have been gradually used. Zhang et al. [

5] showed an application in ship classification with transfer learning and fine-tuning. Kang et al. [

16] used deep neural networks for SAR ship detection and get good results. Chen et al. [

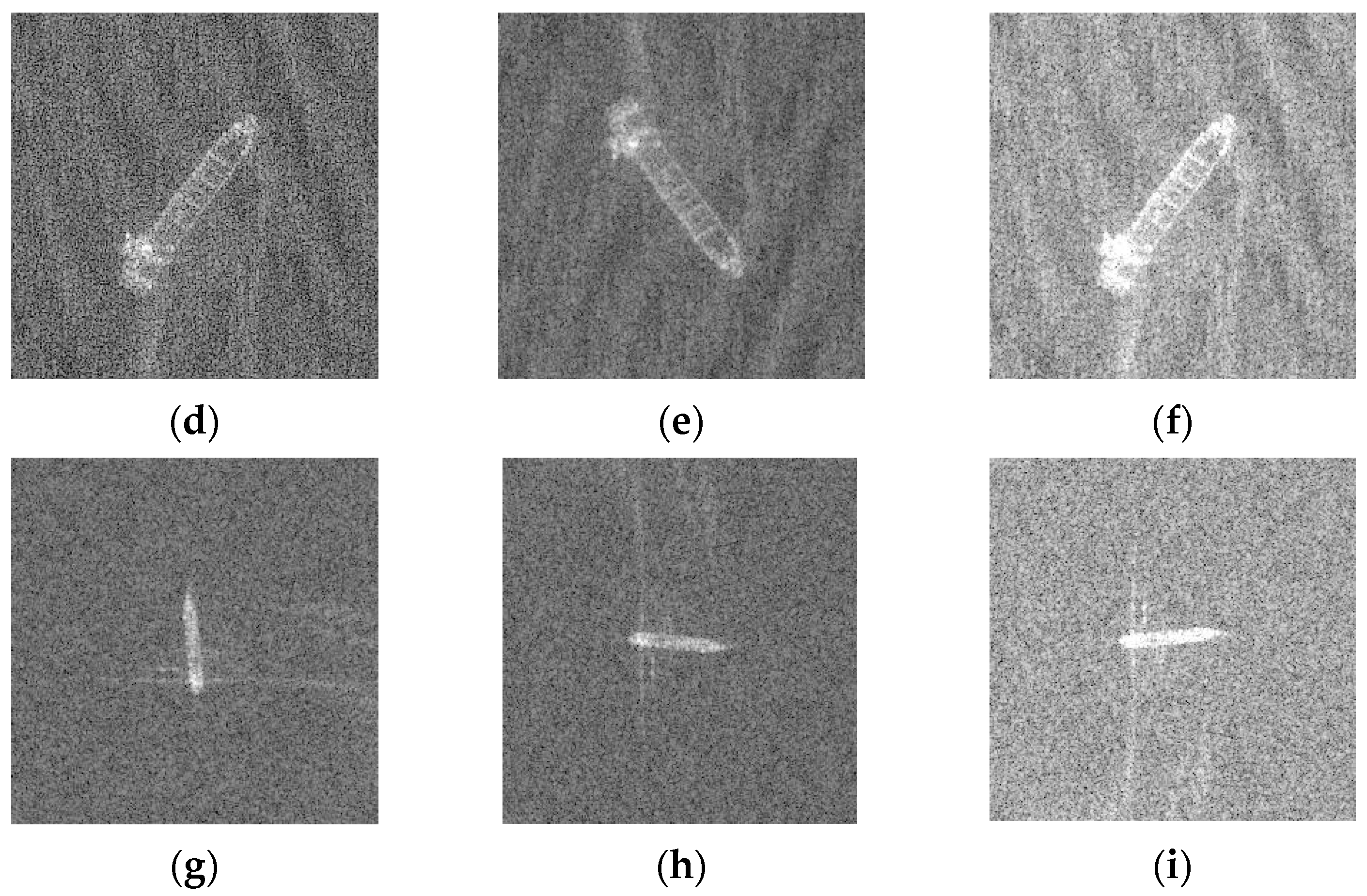

29] presented a method for classification of 10 categories of SAR images, and showed application in ship target recognition. By using deep learning methods, SAR images avoid complicated feature extraction, which completed by deep networks. It can obviously improve the performance of classifiers. However, there are still many problems. Compared with computer vision, SAR image interpretation has the same purpose—extracting useful information from images—but the processed SAR image is significantly different from visible light image, mainly reflected in the band, imaging principle, projection direction, angle of view, etc. Furthermore, the small dataset may be a problem, too. Therefore, when we use the method in SAR images, we need to fully consider these problems.

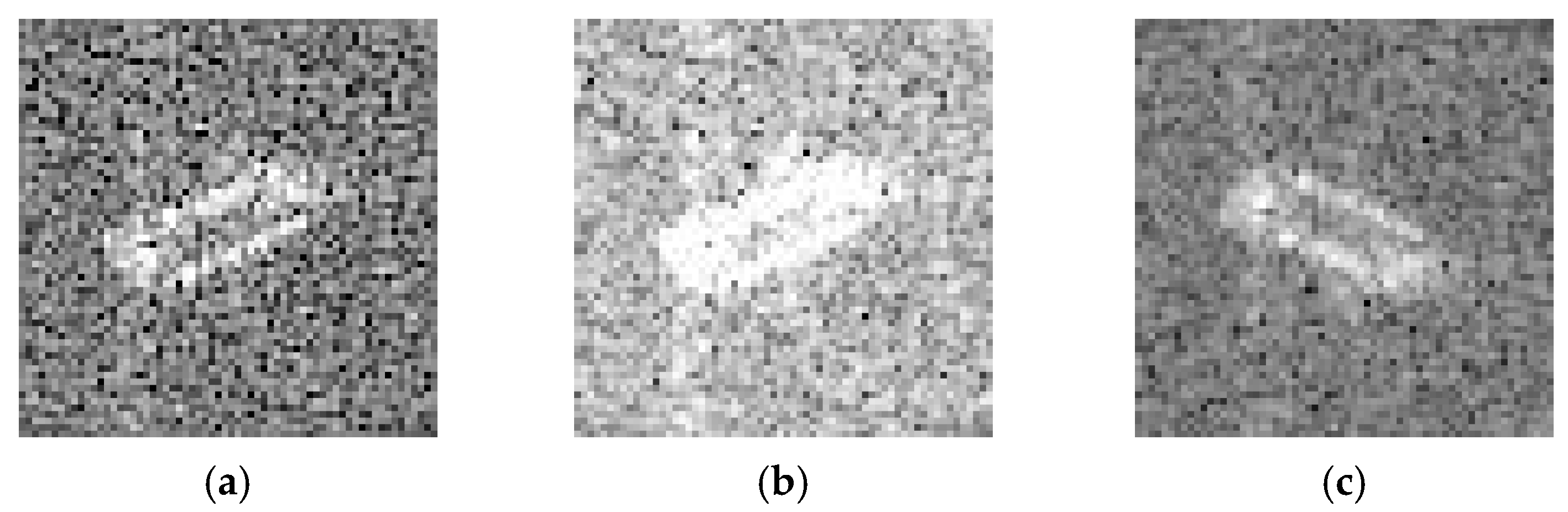

In ship classification, as in other tasks, many issues may arise when training CNNs. One common issue is over-fitting, in which the network models the training data too well and performs badly on data that differ from the training data. A model that learns too much of the detail and noise in the training data, such as random fluctuations, treats this useless information as part of what it has learned and therefore generalizes poorly to new data. CNNs are prone to over-fitting when the model learns rare dependencies in the training data. To avoid this, CNNs often require tens of thousands of examples to train adequately. However, in many SAR applications we cannot obtain enough training data, which may cause severe over-fitting. A popular way to address this problem is data augmentation, such as flipping, brightening, and contrast adjustment [30]. Another is transfer learning, which has often been applied to natural images [31].
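The generic augmentations named above (flipping, brightening, and contrast adjustment) can be sketched in a few lines of NumPy. This illustrates those standard operations only, not the augmentation method proposed in this paper; the function name `augment`, the parameter values, and the assumption that pixel values lie in [0, 1] are all hypothetical.

```python
import numpy as np


def augment(image, brightness=0.1, contrast=1.2):
    """Return simple augmented copies of a 2-D image with values in [0, 1].

    Assumption: brightness is an additive offset and contrast is a gain
    applied around the image mean; results are clipped back to [0, 1].
    """
    image = np.asarray(image, dtype=np.float64)
    mean = image.mean()
    return {
        "flip_lr": np.fliplr(image),  # mirror left-right
        "flip_ud": np.flipud(image),  # mirror up-down
        "brighter": np.clip(image + brightness, 0.0, 1.0),
        "contrast": np.clip((image - mean) * contrast + mean, 0.0, 1.0),
    }


# Hypothetical 2 x 2 image chip.
chip = np.array([[0.0, 0.5],
                 [1.0, 0.25]])
augmented = augment(chip)
```

Each call turns one labelled chip into several, all sharing the original label, which is how augmentation enlarges a small training set. Note that clipping discards information in pixels that are already near saturation.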

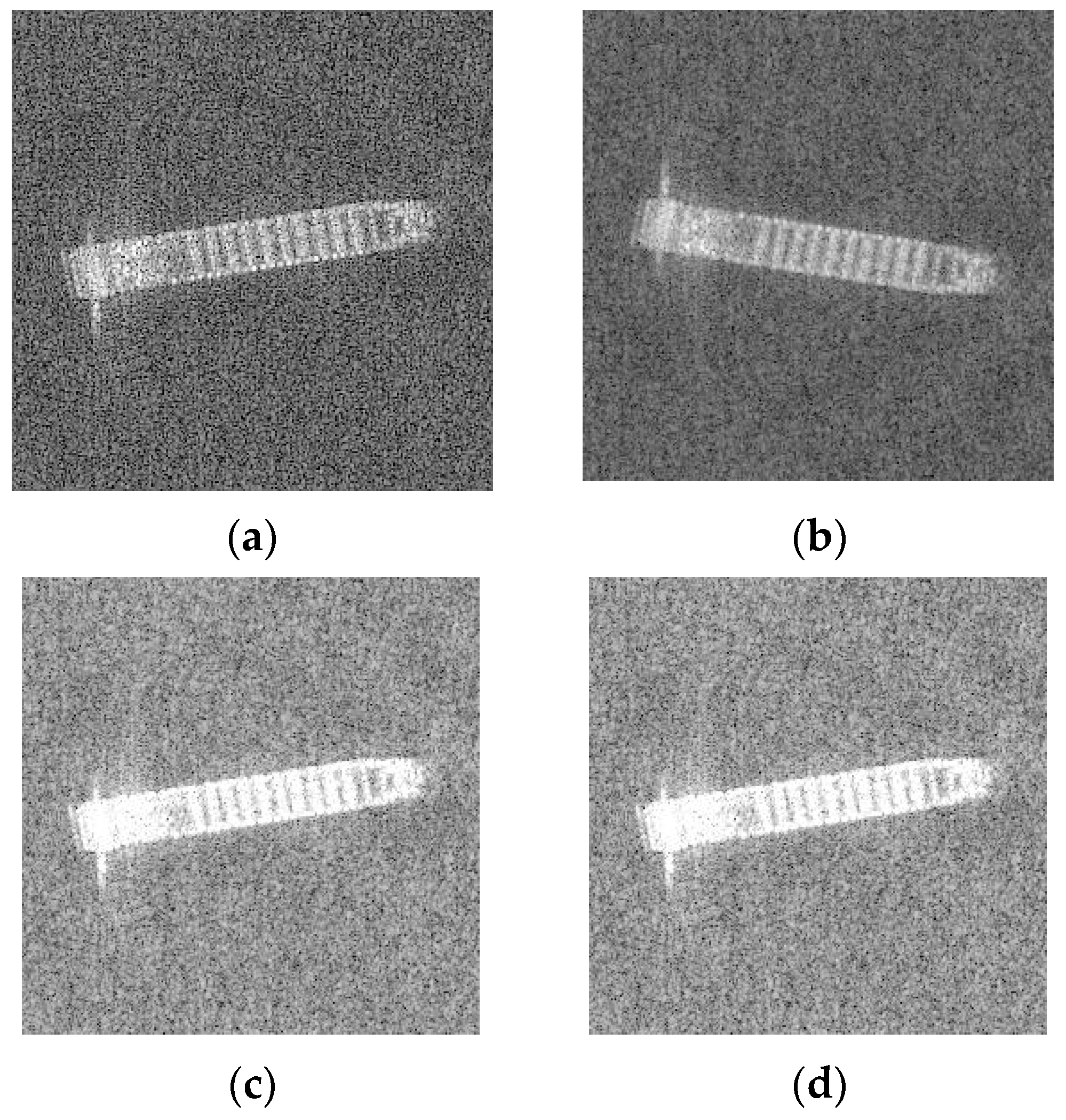

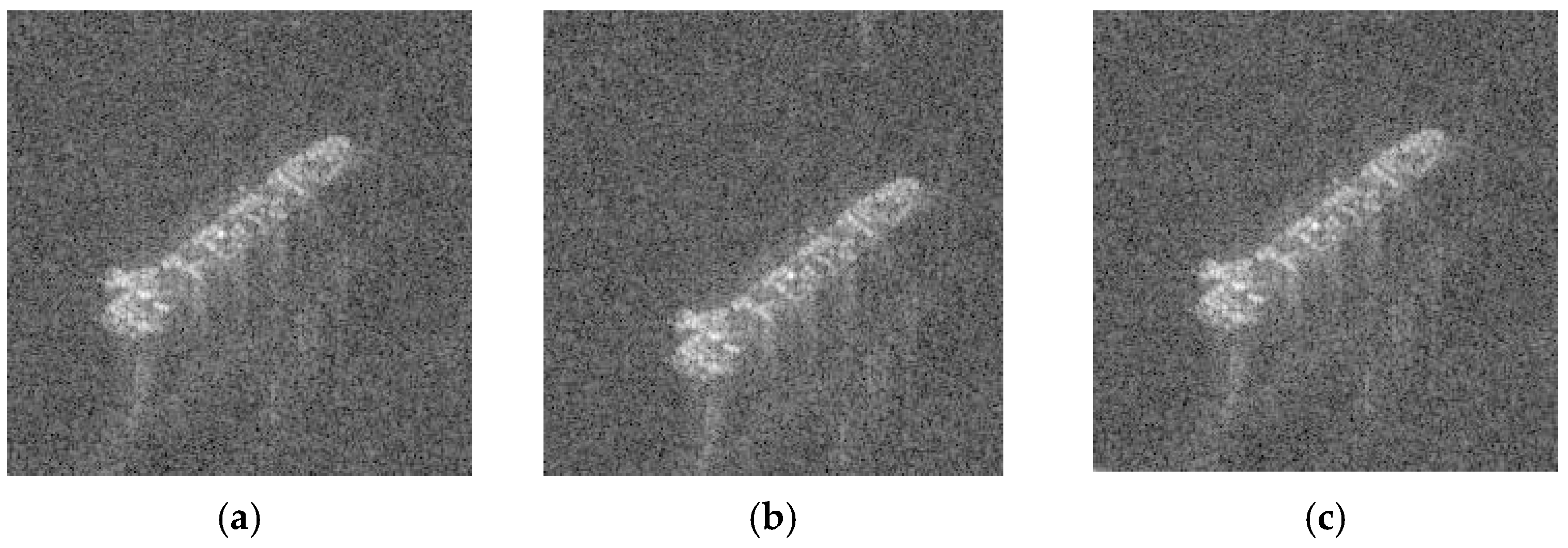

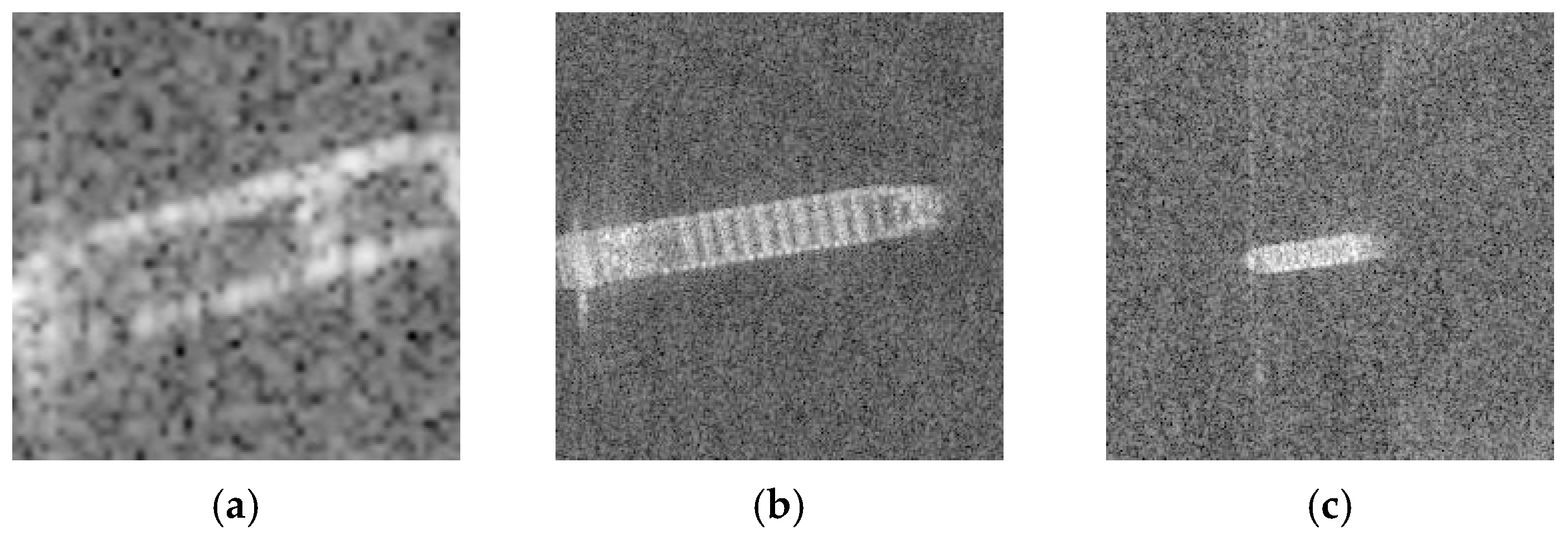

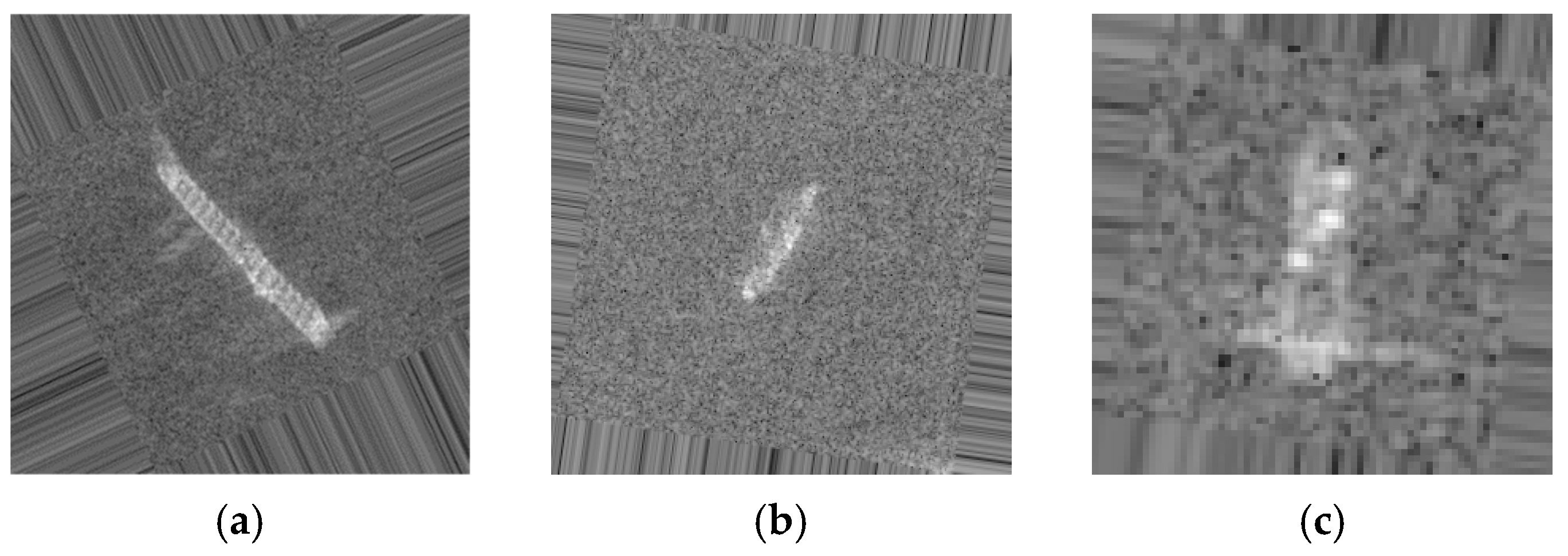

Applying deep neural networks to SAR ship images deserves attention as a way to improve SAR ship detection and classification. When dealing with SAR images, both the data processing method and the CNN training method should be taken into consideration. To obtain good performance, data augmentation should retain the important information in the images while producing enough of them, and a suitable model should be used to reduce the amount of training data required. In this paper, we propose a new method for SAR ship classification. First, we propose a new data augmentation method that preserves important information while increasing the amount of data to meet the requirements of the dataset. Then, by coupling transfer learning with the processed data, we obtain good classification performance. When the training dataset is small, the method can successfully enlarge it. With the enlarged dataset coupled with transfer learning, CNNs can avoid over-fitting and achieve excellent classification accuracy. Comparison experiments demonstrate the good performance of our method.

The remainder of this paper is organized as follows. Section 2 introduces the theory of CNNs, transfer learning, and the components of our method. Section 3 describes the experiments in detail and presents our discussion. Finally, Section 4 offers the conclusion.