Abstract

This paper proposes a new interferometric near-field 3-D imaging approach based on multi-channel joint sparse reconstruction. The approach addresses two problems of conventional methods. First, single-channel independent imaging ignores the correlation between channels, which may shift the estimated positions of scattering points. Second, the accuracy of the imaging azimuth angle is low for real anisotropic targets. The approach proceeds as follows. First, the full apertures of the two channels are divided into several sub-apertures according to the same criterion. Second, a joint sparse metric function is constructed from the multi-channel scattering characteristics of the target, and an improved Orthogonal Matching Pursuit (OMP) method is used to solve the imaging problem, yielding a high-precision 3-D image for each sub-aperture. Third, the 3-D images of all sub-apertures are synthesized into the final 3-D image. Finally, the validity of the proposed approach is verified using electromagnetic simulation data and data measured in an anechoic chamber. Experimental results show that, compared with traditional interferometric ISAR imaging approaches, the proposed algorithm reconstructs scattering centers more accurately and effectively preserves the relative phase information between channels.

1. Introduction

Near-field 3-D imaging is a microwave imaging technique developed from two-dimensional (2-D) synthetic aperture imaging. Owing to its high spatial resolution and ease of engineering implementation, near-field 3-D imaging is widely applied in Radar Cross Section (RCS) measurement [1], non-destructive testing and evaluation (NDTE) [2,3], security screening [4], concealed weapon detection [5,6,7], through-wall and inner-wall imaging [8,9], breast cancer detection [10,11], etc. A variety of techniques have been applied to improve its performance, such as imaging based on the range migration algorithm (RMA) [12,13,14] and the polar format algorithm (PFA) [14], tomographic imaging [15], microwave holography [16], confocal radar-based imaging [17], and NUFFT-based imaging [18,19].

The imaging approaches mentioned above are all based on the traditional Nyquist sampling theorem and matched filtering. In general, high range resolution is obtained by transmitting wideband signals, and high azimuth resolution is obtained through a longer synthetic aperture time. However, as resolution requirements rise, these approaches face an excessively high sampling rate, a very large data volume, and difficulty in fast processing. According to Compressed Sensing (CS) theory [20,21], a signal that is sparse, either directly or in a particular transform domain, can be sampled at a rate far below that required by the Nyquist sampling theorem, and the high-dimensional signal can be accurately reconstructed from the low-dimensional observations by solving a norm-minimization constrained optimization problem. Many researchers have combined CS with high-resolution near-field imaging, and their results demonstrate the great potential of CS for reducing the data sampling rate and improving imaging resolution [3,8,22,23].

At present, research on near-field 3-D imaging mainly focuses on planar-scanning 3-D imaging. Although planar scanning can produce high-resolution 3-D target images, the volume of sampled data is huge, the measurement is time-consuming, and the imaging efficiency is low. In the interferometric inverse synthetic aperture radar (InISAR) method [24,25], 3-D views of the target are obtained by multi-antenna phase interferometry, and both data acquisition and signal processing are relatively simple, which makes the system easy to implement. InISAR is therefore well suited to near-field 3-D imaging. CS-based InISAR 3-D imaging has the following advantages: (1) high-resolution ISAR images can be obtained from short observation data; over such a short processing interval the target rotation can be approximately considered uniform, which reduces the probability of range cell migration; (2) the imaging results are not affected by sidelobes, and the image resolution can be improved by refining the imaging grid, which helps to suppress the angular glint phenomenon in InISAR imaging; and (3) ISAR images can be reconstructed from sparsely sampled data, which relieves the pressure of data acquisition.

In interferometric imaging, compressed sampling and sparse reconstruction can be performed separately for each channel to reduce the system sampling rate and improve radar image quality. For example, reference [24] exploits the sparsity of ISAR images to propose a 3-D InISAR imaging method based on a sparse constraint model. However, traditional InISAR imaging methods have the following problems: (1) because all channels observe the same target, the multi-channel echoes of an InISAR system are strongly correlated, i.e., the images of all channels share the same target support set. Single-channel independent processing ignores this prior information and cannot guarantee consistent locations and numbers of scattering points across the channel images; that is, it cannot ensure that each scattering point on the target falls on the same pixel in the two interferometric images, which reduces the accuracy of the estimated interferometric phase; and (2) in InISAR imaging, the scattering characteristics of the target vary with the observation angle, so the imaging azimuth accuracy is limited by the scattering anisotropy of the target.

Motivated by the above problems of traditional InISAR imaging methods, this paper proposes interferometric near-field 3-D imaging based on multi-channel joint sparse reconstruction. First, a more general multi-channel interferometric near-field echo signal model is established. Second, the two observed full apertures are divided into several sub-apertures according to the same criterion. By analyzing the sparsity of the target echo in each channel, a joint sparse constrained optimization model is established, which transforms multi-channel high-resolution imaging into a multi-channel joint sparse reconstruction problem. This problem is solved by an improved orthogonal matching pursuit (OMP) algorithm to obtain the 3-D target image of each sub-aperture. Third, the 3-D images of all sub-apertures are synthesized to obtain the final full-aperture 3-D imaging result of the target. Finally, the effectiveness of the proposed approach is verified with point-target simulation data and Backhoe electromagnetic simulation data, and an InISAR system is set up in a microwave anechoic chamber to verify the practical applicability of the approach on measured data.
Compared with traditional InISAR imaging methods, the proposed method has the following advantages: (1) by exploiting the correlation between channels, it improves the reconstruction accuracy of strong scattering centers in the ISAR images and effectively preserves the relative phase information between channels, yielding a more accurate interferometric phase; (2) by using sub-aperture synthesis, it can accurately describe targets whose scattering is anisotropic, overcoming the limited accuracy of the imaging azimuth angle; and (3) by exploiting the complementarity and redundancy of multi-channel signals, it effectively suppresses false scattering points even at relatively high compression ratios, thereby improving image quality.

2. Signal Model of InISAR Near-Field Imaging

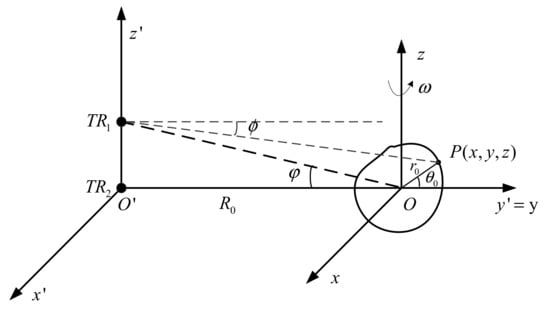

An InISAR system can include multiple antennas; this paper considers a dual-antenna ISAR imaging system. Figure 1 shows the geometric relationship between the antennas and the target, where antenna TR2 is located at the origin O′ and antennas TR1 and TR2 form a vertical baseline along axis z′. Two coordinate systems are defined. T′(x′, y′, z′) is the radar coordinate system, in which axis y′ is the radar line of sight, and x′ and z′ represent the horizontal and vertical directions, respectively. T(x, y, z) is the target coordinate system, in which axis y coincides with axis y′, and axes x and y represent the azimuth and range directions of ISAR, respectively. The distance between the origins of the two coordinate systems is R0 (R0 < 4D²/λ, where D is the maximum size of the target and λ is the wavelength of the incident wave). The target rotates at a constant angular velocity ω in plane (x, y), which is parallel to plane (x′, y′). Let the coordinates of any point P on the target be (x, y, z), or (r0, θ0, z) in the cylindrical coordinate system. Then, at time t, the distance from antenna i (i ∈ {TR1, TR2}) to point P is:

where αi is the pitch angle from antenna i to the origin of the target coordinate system. Assume that the antenna transmits a stepped-frequency wideband signal [26]:

where:

where the carrier frequency of the pulse centered at each transmit time increases in equal steps, with the pulses spaced equally in frequency and time; the frequency difference between successive steps in the pulse burst is the step interval; T is the time interval between pulses (the pulse repetition period within a burst); M is the number of pulses in each burst; and the burst index labels the emitted burst. For ease of understanding, rectangular coordinates are used, and the target echo received by antenna i after coherent demodulation is:

where the integral is taken over the imaging scene area, (x, y) is the position coordinate of the target, and the weighting function is the backscatter coefficient of the target at position (x, y) as received by antenna i. Since imaging is a linear process, and in the high-frequency region the total scattering of the target can be regarded as a linear superposition of multiple strong scattering points, the received echo is the superposition of the echoes of all scattering points in the entire imaging region. The backscatter coefficient is complex: its amplitude represents the scattering intensity of the scattering point, and the phase of each echo term contains the position information of the scattering point. Using signal processing, the target image is obtained by separating position and amplitude from the complex echo signal.
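The superposition model above can be sketched numerically. The following NumPy example is a minimal sketch, assuming ideal isotropic point scatterers, an exact two-way phase term exp(−j4πfR/c) with no plane-wave approximation, and a hypothetical antenna placement at distance R0 and pitch angle α in the y–z plane, with the target rotating about the z axis as described for Figure 1. The function names and all parameter values are illustrative and are not taken from the paper.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s

def antenna_position(r0, alpha):
    """Hypothetical antenna at distance r0 from the target origin and pitch
    angle alpha (rad), looking along +y (the line of sight)."""
    return np.array([0.0, -r0 * np.cos(alpha), r0 * np.sin(alpha)])

def rotate_about_z(points, angle):
    """Rotate (K, 3) target points about the z axis by `angle` (rad)."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T

def stepped_freq_echo(points, sigma, freqs, times, omega, r0, alpha):
    """Demodulated echo s[n, m] at slow time times[n] and frequency freqs[m]:
    sum_k sigma_k * exp(-j 4 pi f_m R_k(t_n) / c), with exact ranges R_k."""
    ant = antenna_position(r0, alpha)
    echo = np.zeros((len(times), len(freqs)), dtype=complex)
    for n, t in enumerate(times):
        pos = rotate_about_z(points, omega * t)
        ranges = np.linalg.norm(pos - ant, axis=1)         # (K,) near-field ranges
        phase = -4j * np.pi * np.outer(ranges, freqs) / C  # (K, M) two-way phase
        echo[n] = sigma @ np.exp(phase)                    # superpose all scatterers
    return echo

# Illustrative parameters only (not those of Table 1).
freqs = 10e9 + 20e6 * np.arange(64)   # initial frequency + m * step interval
times = np.linspace(0.0, 1.0, 32)
points = np.array([[0.0, 0.0, 0.0], [0.5, 0.2, 0.1]])
sigma = np.array([1.0, 0.5 + 0.2j])
s_tr1 = stepped_freq_echo(points, sigma, freqs, times, np.deg2rad(5.0), 10.0, np.deg2rad(0.05))
s_tr2 = stepped_freq_echo(points, sigma, freqs, times, np.deg2rad(5.0), 10.0, 0.0)
```

Computing exact ranges keeps the sketch valid inside the near-field distance; a far-field simulator would replace the range with its linearized form.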

Figure 1.

Geometric sketch of dual-antenna InISAR imaging.

The imaging scene is discretized. To express the radar signal in matrix form, the corresponding 2-D backscatter coefficient matrix is stacked row by row (or column by column) into a one-dimensional column vector:

where the column vector is the vectorized form of the backscatter coefficient matrix, and its length is the product of the numbers of discrete grid points along the x axis and the y axis.

According to Formula (4), the discrete echo data can be expressed as follows:

where the wavelength corresponds to each transmitted frequency, and the range term is the distance between antenna i and the target at time t.

In practice, the range (frequency) and the azimuth angle are also discrete. Assume a given number of range (frequency) samples and azimuth samples. The frequency of the m-th pulse in the burst equals the initial frequency plus m times the step interval, and the azimuth angle is discretized in uniform multiples of the angular interval. Formula (6) can then be discretized as:

Taking noise into account, Equation (7) can be written in matrix form as:

where the left-hand side is the vector of sampled echo data, whose length equals the total number of frequency and azimuth samples; the dictionary matrix, with one row per sample and one column per scene grid cell, is formed from the mapping between the target and the signal; the unknown vector, with one entry per grid cell, consists of the scene backscatter coefficients; and the last term is the additive complex noise in the channel. The composition of each vector and matrix in Formula (8) is as follows:

Let:

The following vectors are defined:

Then the dictionary matrix can be obtained as:

This gives the projection relationship between the scene and the signal in channel i. The radar signal model of the interferometric channels is then:

where the terms correspond to the echo signal, the dictionary matrix, the backscatter coefficients, and the additive noise of each interferometric channel, respectively. Note that the dictionary matrices of the two interferometric channels may be identical; for generality, however, they are written separately.
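A dictionary of the kind used in Formula (8) can be assembled column by column: each column is the noise-free response of a unit scatterer in one grid cell, and the 2-D grid is flattened row by row, so the dictionary times the vectorized coefficient matrix reproduces the stacked echo. The sketch below assumes a planar (x, y) grid at z = 0, a hypothetical antenna at zero pitch located at (0, −r0, 0), target rotation about z by each azimuth angle, and azimuth-major row ordering; the helper name is illustrative.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s

def build_dictionary(xs, ys, freqs, angles, r0):
    """Dictionary with one column per (x, y) grid cell and one row per sample.

    Rows are ordered azimuth-major: row n * len(freqs) + m is angle n, frequency m.
    Columns follow row-by-row vectorization of an (len(xs), len(ys)) grid.
    """
    gx, gy = np.meshgrid(xs, ys, indexing="ij")   # grid of shape (Nx, Ny)
    px, py = gx.ravel(), gy.ravel()               # column k -> (xs[k // Ny], ys[k % Ny])
    blocks = []
    for th in angles:
        # rotate the grid by the azimuth angle, then take the exact range
        xr = px * np.cos(th) - py * np.sin(th)
        yr = px * np.sin(th) + py * np.cos(th)
        rng = np.hypot(xr, yr + r0)               # antenna at (0, -r0, 0), z = 0
        blocks.append(np.exp(-4j * np.pi * np.outer(freqs, rng) / C))  # (M, Nx*Ny)
    return np.vstack(blocks)                      # (Na * M, Nx * Ny)

xs = np.linspace(-1.0, 1.0, 4)
ys = np.linspace(-1.0, 1.0, 5)
freqs = 9e9 + 1e7 * np.arange(8)
angles = np.deg2rad(np.linspace(-2.0, 2.0, 6))
A = build_dictionary(xs, ys, freqs, angles, 10.0)

# Echo of a scene: dictionary times the row-by-row vectorized coefficient matrix.
scene = np.zeros((4, 5), dtype=complex)
scene[1, 3] = 1.0
echo = A @ scene.ravel()
```

Because every entry is a pure phase, all columns have the same norm, which keeps the correlation step of matching pursuit unbiased across grid cells.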

To effectively reduce the sampled data of each interferometric channel, the transmit angular positions are randomly selected in azimuth, and the frequency points are randomly selected in range. After compressed sampling, the interferometric echo signal model can be represented as follows:

where the left-hand side is the CS echo signal of each channel, whose length equals the number of retained samples; each channel has its own measurement matrix; ⊗ denotes the Kronecker product; the sensing matrix has one row per retained sample and one column per scene grid cell; the scene backscatter coefficient vector has one entry per grid cell; and the noise vector has the same length as the CS echo signal.
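The following sketch illustrates why a Kronecker-structured measurement matrix matches random selection in both azimuth and range. It builds row-selection matrices for a random subset of azimuth positions and frequency points and checks that their Kronecker product, applied to the azimuth-major stacked echo, equals direct indexing of the 2-D data. The selection-matrix construction, sampling ratios, and ordering convention are assumptions for illustration; with a dictionary whose rows follow the same order, the sensing matrix would be the product of this measurement matrix and the dictionary.

```python
import numpy as np

def selection_matrix(n_total, keep_idx):
    """Rows of the identity picked by keep_idx (sorted): a random-sampling operator."""
    return np.eye(n_total)[np.sort(keep_idx)]

def compress(echo_2d, az_keep, f_keep):
    """Apply Phi = Phi_az (x) Phi_f to the azimuth-major vectorized echo.

    echo_2d has shape (n_az, n_f); ravel() in C order gives index n * n_f + m.
    """
    n_az, n_f = echo_2d.shape
    phi = np.kron(selection_matrix(n_az, az_keep), selection_matrix(n_f, f_keep))
    return phi, phi @ echo_2d.ravel()

rng = np.random.default_rng(0)
echo = rng.standard_normal((32, 64)) + 1j * rng.standard_normal((32, 64))
az_keep = rng.choice(32, 16, replace=False)   # keep 50% of the azimuth positions
f_keep = rng.choice(64, 32, replace=False)    # keep 50% of the frequency points
phi, y_cs = compress(echo, az_keep, f_keep)   # 25% of the samples remain
```

In practice the dense `phi` never needs to be formed; indexing `echo[np.ix_(...)]` gives the same measurements, and the sensing matrix is simply the corresponding subset of dictionary rows.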

Since the number of measurements is much smaller than the number of unknowns, recovering the scene from the measurements is ill-posed in general. However, according to CS theory, when the sensing matrix satisfies the Restricted Isometry Property (RIP) [27], it is indeed possible to recover the K largest coefficients from a similarly sized set of measurements (on the order of K log(N/K) measurements for N unknowns). The RIP is closely related to the incoherence between the measurement matrix and the dictionary: the rows of the measurement matrix do not provide a sparse representation of the columns of the dictionary, and vice versa.
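The RIP itself is hard to verify numerically, so mutual coherence, the largest normalized inner product between two distinct columns, is often used as a computable proxy. The sketch below computes it for a sensing matrix. It also evaluates the known coherence condition for noise-free OMP, under which every K-sparse vector is recovered exactly if K < (1 + 1/μ)/2. This check is a standard CS diagnostic added for illustration and is not part of the method described in this paper.

```python
import numpy as np

def mutual_coherence(theta):
    """max over j != k of |<t_j, t_k>| / (||t_j|| ||t_k||) for the columns of theta."""
    cols = theta / np.linalg.norm(theta, axis=0, keepdims=True)
    gram = np.abs(cols.conj().T @ cols)
    np.fill_diagonal(gram, 0.0)   # ignore each column's match with itself
    return float(gram.max())

def omp_sparsity_bound(mu):
    """Noise-free OMP recovers every K-sparse vector exactly if K < this bound."""
    return np.inf if mu == 0 else 0.5 * (1.0 + 1.0 / mu)

rng = np.random.default_rng(0)
theta = rng.standard_normal((64, 256)) + 1j * rng.standard_normal((64, 256))
mu = mutual_coherence(theta)
k_guaranteed = omp_sparsity_bound(mu)
```

The coherence bound is usually pessimistic for imaging dictionaries, whose neighbouring grid cells are strongly correlated, so it serves as a warning sign rather than a design rule.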

To obtain the target image of each channel, CS theory and the sparse spatial distribution of the target scene allow the interferometric near-field imaging problem to be converted into two independent optimization and reconstruction problems, one per channel:

where ‖·‖0 denotes the zero norm of a vector, namely the number of its non-zero elements, ‖·‖F denotes the Frobenius norm, and the tolerance is a positive number that depends on the noise level. In Formula (15), selecting an appropriate noise level is important for the final result: too high a noise level leads to the loss of some weak scattering points, while too low a noise level makes strong noise difficult to suppress. Formula (15) can be solved with the OMP method, which guarantees reconstruction accuracy and is computationally efficient.
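Formula (15) is usually solved greedily. A compact single-channel OMP in NumPy is sketched below. It assumes that the sensing matrix and measurements are available as complex arrays, that the sensing-matrix columns have equal norm (true for a phase-only dictionary), and that a residual-energy tolerance is used as the stopping rule. This is the generic textbook OMP, not the authors' code.

```python
import numpy as np

def omp(theta, y, max_iter, tol):
    """Greedy approximation of min ||x||_0 s.t. ||y - theta x||_2^2 <= tol."""
    n = theta.shape[1]
    support = []
    coef = np.zeros(0, dtype=complex)
    residual = np.asarray(y, dtype=complex)
    for _ in range(max_iter):
        if np.vdot(residual, residual).real <= tol:
            break                                      # residual energy small enough
        corr = np.abs(theta.conj().T @ residual)       # correlation with every atom
        corr[support] = 0.0                            # never pick an atom twice
        support.append(int(np.argmax(corr)))
        sub = theta[:, support]
        coef, *_ = np.linalg.lstsq(sub, y, rcond=None)  # least-squares projection
        residual = y - sub @ coef
    x = np.zeros(n, dtype=complex)
    x[support] = coef
    return x
```

Running this routine separately on each channel is the single-channel independent approach: the two calls may select different supports, which is exactly the inconsistency that the joint formulation of Section 3 removes.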

3. Joint Sparsity Constraint Near-Field 3-D Imaging of InISAR Based on CS

3.1. Algorithm Flow Description

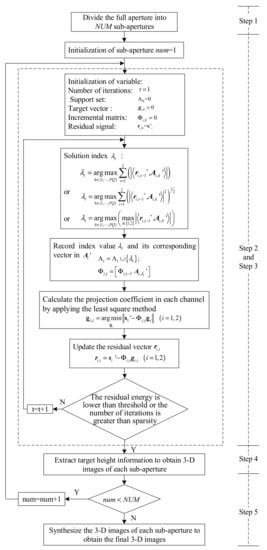

We first outline the proposed approach and then describe each part in detail in the following subsections. The procedure is as follows:

- Step 1:

- The full apertures of the two channels are divided into several sub-apertures according to the same criterion, each covering a very small azimuth angle range.

- Step 2:

- The global sparsity constraint and the improved OMP algorithm are applied to obtain the 2-D complex images of each sub-aperture in the two channels.

- Step 3:

- Interferometric processing is applied to the two images of each sub-aperture to obtain the projected coordinates of the scattering points along the baseline.

- Step 4:

- The 3-D image of the target in each sub-aperture is constructed by combining the 2-D ISAR images with the interferometric processing results.

- Step 5:

- The 3-D images of all sub-apertures are synthesized to obtain the final 3-D image.

The imaging flow is shown in Figure 2.

Figure 2. Imaging flow of proposed approach.
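Step 1 only requires that both channels be split by the same rule. The sketch below assumes contiguous, non-overlapping sub-apertures defined on the azimuth sample index, with azimuth on axis 0 of each echo array. This division criterion is an assumption, since the exact rule is not restated here. Applying identical index blocks to both channels keeps the two sub-aperture images of each block co-registered.

```python
import numpy as np

def subaperture_indices(n_az, n_sub):
    """Contiguous, non-overlapping azimuth index blocks (sizes differ by at most 1)."""
    return np.array_split(np.arange(n_az), n_sub)

def split_channels(echo1, echo2, n_sub):
    """Cut both channels' echoes (azimuth on axis 0) with the same index blocks."""
    if echo1.shape != echo2.shape:
        raise ValueError("both channels must share the same sampling grid")
    blocks = subaperture_indices(echo1.shape[0], n_sub)
    return [(echo1[b], echo2[b]) for b in blocks]

# Hypothetical sizes: 170 azimuth samples, 64 frequencies, 17 sub-apertures.
rng = np.random.default_rng(0)
e1 = rng.standard_normal((170, 64)) + 1j * rng.standard_normal((170, 64))
e2 = e1 * np.exp(1j * 0.2)
sub_apertures = split_channels(e1, e2, 17)
```

Each pair in `sub_apertures` is then imaged jointly (Step 2), and the per-block 3-D results are combined in Step 5.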


3.2. Joint Sparse Constrained Optimization Model

As discussed in Section 2, the single-channel independent CS approach uses a small amount of compressively sampled data to achieve high-resolution reconstruction of the scene. However, it cannot guarantee that the positions and numbers of the scattering points are consistent across channels, and it destroys the integrity of the cross-channel information; both effects hinder the extraction of target scattering information. In addition, the single-channel independent CS approach does not fully exploit the complementarity and redundancy of multi-channel data, so it cannot further improve the SNR gain or reduce the data volume of the system.

The two ISAR images used in InISAR imaging are usually highly correlated, so they exhibit strong joint sparsity. Based on this prior information, the following joint sparse metric function with global sparsity is obtained:

where the zero norm ‖·‖0 represents the number of non-zero elements in the vector, and different values of the mixing parameter correspond to different mixed-norm forms. This paper defines the following three global sparsity constraints:

- (1)

- when the pixel-wise amplitudes of the two images are combined by their sum (ℓ1 mixing), the metric is termed the mixed sum norm, i.e., the sparsity of the amplitude sum of the two ISAR images is taken as the global sparsity constraint;

- (2)

- when the pixel-wise amplitudes are combined by their Euclidean (ℓ2) norm, the metric is termed the mixed Euclidean norm;

- (3)

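- when the pixel-wise amplitudes are combined by their maximum (ℓ∞ mixing), the metric is termed the mixed infinite norm, i.e., at each pixel the larger amplitude of the two ISAR images is used, and the sparsity of the result is taken as the overall sparsity constraint.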

The mixed sum norm and the mixed Euclidean norm both measure global sparsity as the number of non-zero elements of the combined amplitude of the images of all channels. Since the scattering points in the images of the different channels (i.e., different viewing angles) are aligned, this addition is reasonable. The mixed infinite norm takes the number of non-zero elements of the pixel-wise maximum amplitude over the images as the joint sparsity, and it also ensures that the scattering points in the reconstructed sub-aperture images are aligned.
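The three constraints differ only in how the two channel amplitudes at a pixel are combined before the non-zeros are counted. The sketch below computes the pixel-wise combined amplitude for each case (sum, Euclidean, maximum) and the resulting joint ℓ0 count. It assumes that the two images are equally sized complex arrays; the function names and the tolerance used to decide "non-zero" are illustrative. A pixel is non-zero under any of the three combinations exactly when it is non-zero in at least one channel, so the counts themselves coincide. The choice of norm matters for how candidate pixels are ranked during reconstruction.

```python
import numpy as np

def combined_amplitude(img1, img2, kind):
    """Pixel-wise mixed norm of the two channel amplitudes."""
    amps = np.stack([np.abs(img1), np.abs(img2)])
    if kind == "sum":                      # mixed sum norm
        return amps.sum(axis=0)
    if kind == "euclidean":                # mixed Euclidean norm
        return np.sqrt((amps ** 2).sum(axis=0))
    if kind == "infinite":                 # mixed infinite norm
        return amps.max(axis=0)
    raise ValueError(f"unknown mixed norm: {kind}")

def joint_l0(img1, img2, kind, eps=1e-12):
    """Joint sparsity: number of pixels whose combined amplitude exceeds eps."""
    return int(np.count_nonzero(combined_amplitude(img1, img2, kind) > eps))

a = np.zeros((4, 4), dtype=complex)
b = np.zeros((4, 4), dtype=complex)
a[0, 0], a[1, 2] = 1.0, 2.0j
b[1, 2], b[3, 3] = 1.0, -1.0
counts = {k: joint_l0(a, b, k) for k in ("sum", "euclidean", "infinite")}
```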

3.3. Optimal Solution Algorithm Constrained by Joint Sparse

Using the constructed global sparsity constraint function, the InISAR imaging problem is transformed into a multi-channel joint sparse reconstruction problem:

where the tolerance is determined by the minimum noise level of the channels so that every channel can produce a target image. Formula (17) cannot be solved directly by traditional CS reconstruction algorithms. In [28], an improved convex optimization approach was proposed to solve the joint sparse imaging problem of interferometric channels. Because sparse reconstruction there is performed only in azimuth, its computational complexity is modest. When it is applied to the 2-D range and azimuth sparse reconstruction studied in this paper, however, the computational load becomes heavy, especially for high-resolution imaging: the target coefficient vector to be reconstructed is usually large, so practical applications inevitably run into memory shortages on the computing platform. The OMP algorithm is a widely used greedy algorithm that is computationally efficient and gives good reconstruction results. This paper therefore proposes an improved OMP algorithm to solve the multi-channel joint sparse reconstruction problem. For the three mixed norms proposed in this paper, the algorithm differs only in how the index is found in step (2):

- (1)

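- Initialization: set the iteration counter to 0 and the support set to the empty set. For each channel i, initialize the target vector to zero and the incremental matrix, which is composed of the sensing-matrix columns indexed by the support set, to empty. Denote by the residual the signal remaining after k iterations, and initialize it to the measured echo of channel i.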

- (2)

- Obtain the index by solving the following formulas (one for each mixed norm), in which the residual of each channel is correlated with each column vector of that channel's sensing matrix:

- (3)

- Add the obtained index to the support set, and append the corresponding column of each channel's sensing matrix to that channel's incremental matrix:

- (4)

- Use the least squares method to calculate the projection coefficients of each channel:

- (5)

- Update the residual signal of each channel:

- (6)

- Increment the iteration counter and repeat steps (2) to (5) until the energy of the residual signal is lower than the preset threshold Thres or the number of iterations reaches the preset sparsity K.
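The six steps can be sketched in NumPy as follows. The index-selection rule in step (2) is an assumption, because its formulas are not reproduced here. The sketch combines the per-channel correlation magnitudes between each residual and the sensing-matrix columns with the same sum, Euclidean, or maximum rule that defines the three mixed norms. The residual threshold defaults to 5% of the total echo energy, following the high-SNR guidance on Thres given in this section. Function and argument names are hypothetical.

```python
import numpy as np

COMBINE = {
    "sum": lambda c: c.sum(axis=0),                        # mixed sum norm
    "euclidean": lambda c: np.sqrt((c ** 2).sum(axis=0)),  # mixed Euclidean norm
    "infinite": lambda c: c.max(axis=0),                   # mixed infinite norm
}

def joint_omp(thetas, ys, max_iter, rule="euclidean", thres_ratio=0.05):
    """Joint OMP: all channels share one support set.

    thetas: list of (M, N) sensing matrices; ys: list of (M,) CS echoes.
    Returns the reconstructed coefficient vectors and the common support.
    """
    n = thetas[0].shape[1]
    combine = COMBINE[rule]
    # Step (1): empty support, residuals initialised to the echoes
    support = []
    residuals = [np.asarray(y, dtype=complex) for y in ys]
    coefs = [np.zeros(0, dtype=complex) for _ in ys]
    thres = thres_ratio * sum(np.vdot(r, r).real for r in residuals)
    for _ in range(max_iter):                      # Step (6): at most K iterations
        if sum(np.vdot(r, r).real for r in residuals) <= thres:
            break                                  # Step (6): residual below Thres
        # Step (2): combine the correlations of all channels, pick one index
        corr = np.stack([np.abs(t.conj().T @ r) for t, r in zip(thetas, residuals)])
        score = combine(corr)
        score[support] = -np.inf
        support.append(int(np.argmax(score)))      # Step (3): grow the shared support
        for i, (t, y) in enumerate(zip(thetas, ys)):
            sub = t[:, support]
            coefs[i], *_ = np.linalg.lstsq(sub, y, rcond=None)  # Step (4)
            residuals[i] = y - sub @ coefs[i]                   # Step (5)
    images = []
    for c in coefs:
        x = np.zeros(n, dtype=complex)
        x[support] = c
        images.append(x)
    return images, support
```

Because a single index is appended per iteration for all channels, the reconstructed vectors always have identical support, while each channel keeps its own complex coefficients and hence its interferometric phase.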

Compared with the standard OMP algorithm, the proposed algorithm is mainly improved in step (2). In the standard OMP algorithm, the support sets of different channels may differ, because the index is determined from each channel's own signal only:

Such independent processing leads to inconsistent positions and numbers of non-zero coefficients in the target vectors finally reconstructed in each channel, which hinders the extraction of target scattering information. The improved OMP algorithm uses the scattering information of all channels to select candidate vectors and solves the projection coefficients of every channel on the same support set, which ensures that the positions and numbers of non-zero coefficients in the reconstructed target vectors are consistent across channels.

The preset threshold Thres depends on the noise level of the echo signal. At high SNR, the threshold can generally be set to about 0.05 of the echo signal energy, in which case most scattering centers on the target can be accurately reconstructed; as the noise in the echo signal increases, the threshold should be raised to avoid false scattering points caused by noise in the generated image. If the sparsity is known, CS matching pursuit reconstruction algorithms give excellent results, but accurate sparsity is difficult to obtain in practical engineering. In that case, a large number of SAR images are usually needed for statistical analysis to determine the approximate sparsity range of different kinds of observation scenes, after which the optimal sparsity within that range is chosen according to an optimization criterion.

3.4. Extraction of Target Scattering Information

When the distance between the target and the antenna satisfies the near-field condition and the antenna baseline is much smaller than the distance R0, the distance in Formula (1) can be represented, according to plane spectrum theory [29], as:

where the angle term is the angle between the target and the antenna in plane Oxy. To simplify the expression, the Cartesian coordinates of the target are expressed in the cylindrical coordinate system, so Equation (25) becomes:

Then, the echo signal of the scattering point can be represented as:

where the remaining phase term is the same for the two antennas. Formula (26) shows that the phase of a scattering point in the target ISAR image contains the height information of that scattering point. Applying interferometric processing to the two ISAR images, the interferometric phase difference of the images is:

In the InISAR imaging system, the baseline length is much smaller than the distance between the antennas and the target, so the pitch angle difference between antennas TR1 and TR2 is very small. In this paper, antenna TR2 is at the origin, so its pitch angle is zero, and the height of the scattering point can be estimated as:

When antennas TR1 and TR2 are placed symmetrically about the coordinate origin, i.e., with pitch angles of equal magnitude and opposite sign, the height of the scattering point is estimated as:

For a target containing multiple scattering points, the height of each scattering point can be estimated by interferometric processing of the corresponding pixels in the two ISAR images.
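The per-pixel height estimate can be sketched as follows, under an assumed geometry and sign convention that may differ from the paper's formulas. With a small baseline, an antenna at pitch α sees a point at height z with a range of approximately R0 + y cos α − z sin α. With TR2 at zero pitch, the wrapped phase arg(s1·conj(s2)) is then approximately 4πz sin α1/λ, which gives z ≈ λΔφ/(4π sin α1). For the symmetric layout (±α1), this becomes z ≈ λΔφ/(8π sin α1). Because the phase is wrapped to [−π, π], heights are unambiguous only up to the value reached at Δφ = π. Function names are hypothetical.

```python
import numpy as np

def interferometric_phase(img1, img2):
    """Wrapped interferometric phase arg(s1 * conj(s2)), in [-pi, pi]."""
    return np.angle(img1 * np.conj(img2))

def height_from_phase(dphi, wavelength, alpha1, symmetric=False):
    """Small-angle height estimate under the assumed geometry:
    TR2 at zero pitch:              z = lambda * dphi / (4 pi sin(alpha1))
    TR1, TR2 at +alpha1 / -alpha1:  z = lambda * dphi / (8 pi sin(alpha1))"""
    factor = 8.0 if symmetric else 4.0
    return wavelength * dphi / (factor * np.pi * np.sin(alpha1))

def max_unambiguous_height(wavelength, alpha1, symmetric=False):
    """Largest |z| whose interferometric phase does not wrap."""
    return height_from_phase(np.pi, wavelength, alpha1, symmetric)

# Illustrative values: 3 cm wavelength, TR1 pitch 0.05 deg (the value used in Section 4.1.4).
z_max = max_unambiguous_height(0.03, np.deg2rad(0.05))   # about 8.6 m
```

Applied element-wise to two co-registered complex images, `height_from_phase(interferometric_phase(img1, img2), ...)` yields a height map. This works only if each scatterer occupies the same pixel in both images, which the joint reconstruction is designed to guarantee.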

In practice, to avoid possible ambiguity of the interferometric phase difference, the following checks on the interferometric phase difference are required:

4. Experiments and Analysis

To verify the effectiveness of the proposed algorithm, point-target simulation data, electromagnetic software simulation data, and data measured in an anechoic chamber are used for imaging verification and performance analysis, respectively.

4.1. Numerical Simulations

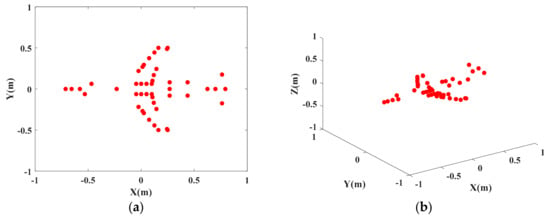

The simulation target is an aircraft-shaped model composed of 46 scattering centers, whose distribution is shown in Figure 3. The stepped-frequency transmit signal and the system parameter settings are listed in Table 1.

Figure 3.

Geometric distribution of the aircraft point-scatterer model. (a) 2-D distribution of the scattering points; (b) 3-D distribution of the scattering points.

Table 1.

Simulation parameters.

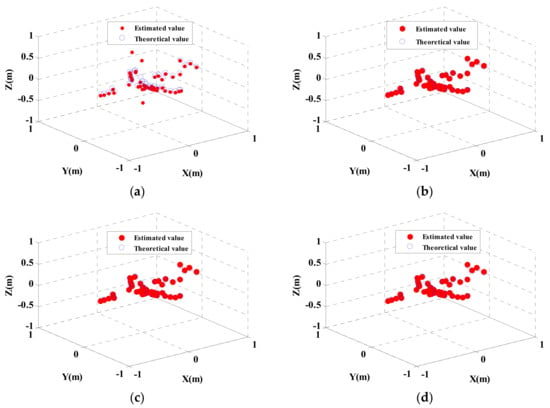

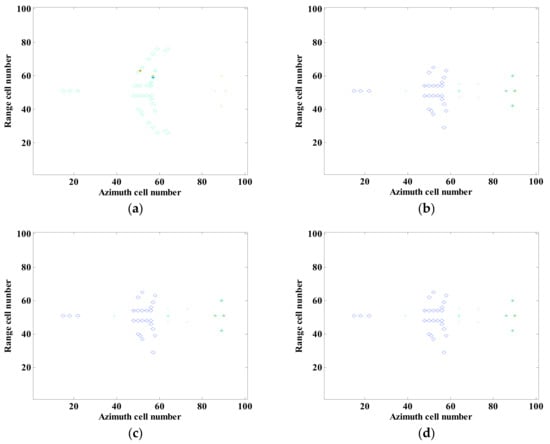

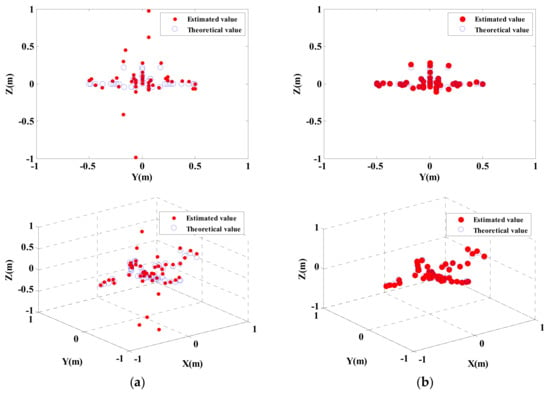

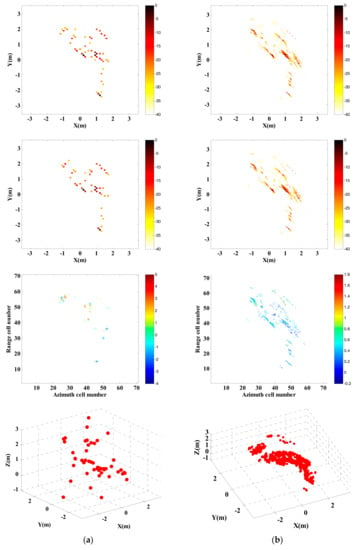

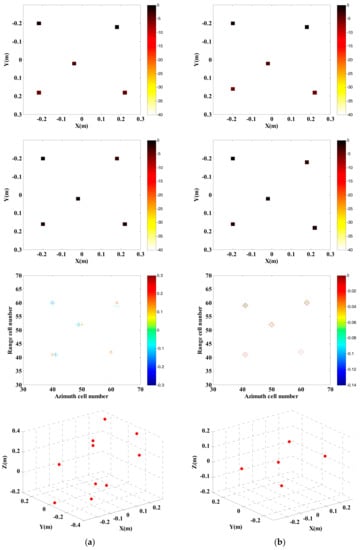

Independent CS processing and joint CS processing based on global sparsity are each used for InISAR imaging, and the resulting 3-D distributions of scattering points are shown in Figure 4. These experiments use full data and noise-free signals. Figure 4a shows the single-channel independent reconstruction result, and Figure 4b–d show the joint sparse reconstruction results under the three global sparsity constraints. The figures show that traditional single-channel independent processing broadly reflects the 3-D distribution of the target's scattering points but cannot guarantee consistent scattering-point locations across channels, which leads to deviated location estimates. The proposed method, in contrast, ensures consistent locations and numbers of scattering points and gives more accurate reconstruction results.

Figure 4.

3-D imaging results of near-field InISAR. (a) Traditional imaging approach; (b) Global sparsity mixed sum norm processing; (c) Global sparsity mixed Euclidean norm processing; (d) Global sparsity mixed infinite norm processing.

4.1.1. Precision Analysis of Scattering Point Coordinate Estimation

Based on the above experiments, three typical scattering points are selected for statistical comparison. The coordinates in Table 2 show that the conventional imaging approach has an obvious deviation in estimating the height coordinates of scattering points, whereas the proposed approach estimates their locations more accurately. For example, the maximum height deviation is 0.6438 for the traditional imaging approach but only 0.046 for the proposed approach. Statistical analysis of the location errors of all scattering points shows that the proposed approach improves accuracy by roughly 90% compared with the traditional method.

Table 2.

Comparison of coordinates of typical scattering points.

4.1.2. Precision Analysis of Interferometric Phase

In a near-field InISAR imaging system, the height of a target scattering point is estimated from the phase difference of corresponding pixels in the complex images of the channels, so the positions of strong scatterers must be consistent across the channel images. The accuracy of the interferometric phase therefore directly affects imaging quality. Figure 5 shows the interferometric phase distribution of the two channels' complex images after processing. Comparing Figure 5a–d, the proposed approach obtains accurate interferometric phases for more scattering points than the independent single-channel processing approach.

Figure 5.

Interferometric phase image of near-field InISAR 3-D imaging. (a) Traditional imaging approach; (b) Global sparsity mixed sum norm processing; (c) Global sparsity mixed Euclidean norm processing; (d) Global sparsity mixed infinite norm processing.

4.1.3. Noise Suppression Performance

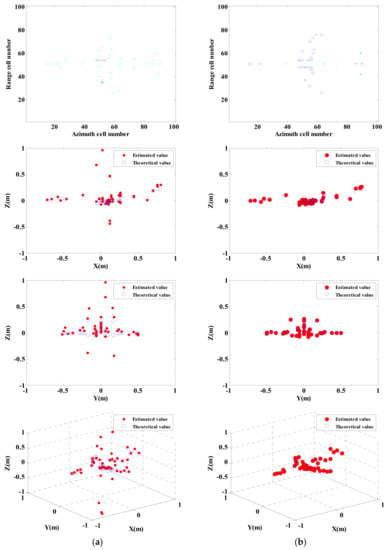

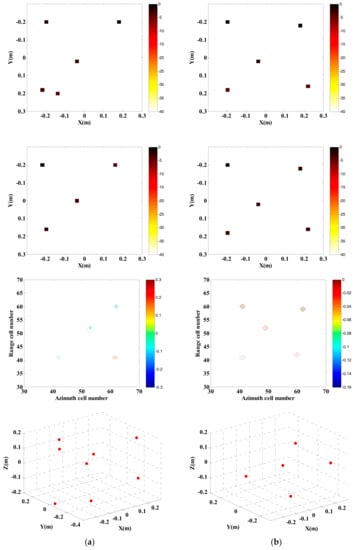

Figure 6 shows the results of the traditional approach and the proposed approach when the echo signal contains complex white Gaussian noise at an SNR of 5 dB. To evaluate performance statistically, complex white Gaussian noise with SNRs from −15 dB to 25 dB is added to the echo data, 100 Monte Carlo trials are run at each noise level, and the mean squared error (MSE) of the height estimate is calculated.
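The noise experiment can be reproduced in outline with the helpers below. The first adds circularly symmetric complex white Gaussian noise at a prescribed SNR, assumed here to mean mean signal power divided by noise power. The second computes the height MSE over repeated Monte Carlo trials. The estimator argument is a placeholder for either processing chain, and all names are hypothetical.

```python
import numpy as np

def add_complex_awgn(signal, snr_db, rng):
    """Add circular complex white Gaussian noise with P_signal / P_noise = 10^(snr_db / 10)."""
    p_signal = np.mean(np.abs(signal) ** 2)
    p_noise = p_signal / (10.0 ** (snr_db / 10.0))
    noise = np.sqrt(p_noise / 2.0) * (rng.standard_normal(signal.shape)
                                      + 1j * rng.standard_normal(signal.shape))
    return signal + noise

def height_mse(estimate_heights, echoes, true_heights, snr_db, n_trials=100, seed=0):
    """Monte Carlo MSE of height estimates at one SNR level.

    estimate_heights(noisy_echoes) must return one height per scatterer,
    in the same order as true_heights.
    """
    rng = np.random.default_rng(seed)
    errs = []
    for _ in range(n_trials):
        noisy = [add_complex_awgn(e, snr_db, rng) for e in echoes]
        errs.append(np.mean((estimate_heights(noisy) - true_heights) ** 2))
    return float(np.mean(errs))

snr_grid = np.arange(-15, 26, 5)   # -15 dB to 25 dB, as in the experiment
```

A sweep then calls `height_mse(estimator, [echo_tr1, echo_tr2], z_true, snr)` for each value in `snr_grid` and for each of the four processing approaches.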

Figure 6.

Imaging results of the traditional and proposed approaches at an SNR of 5 dB. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); interferometric phase images (top layer); complex images of channels 1 and 2 (second and third layers); final 3-D imaging results (last layer).

The imaging results in Figure 6 show that the traditional processing approach deviates considerably in estimating the height of the target scattering points, whereas the proposed approach (global sparsity, mixed Euclidean norm) estimates it more accurately.

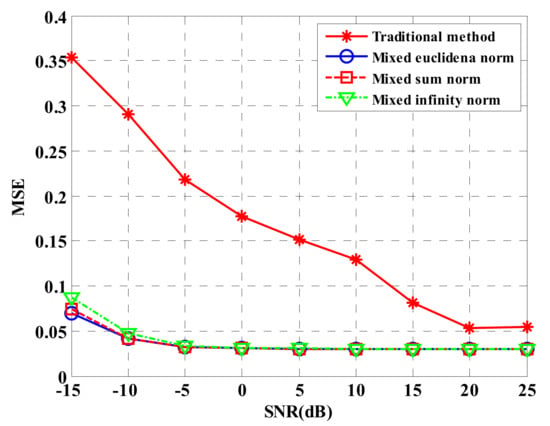

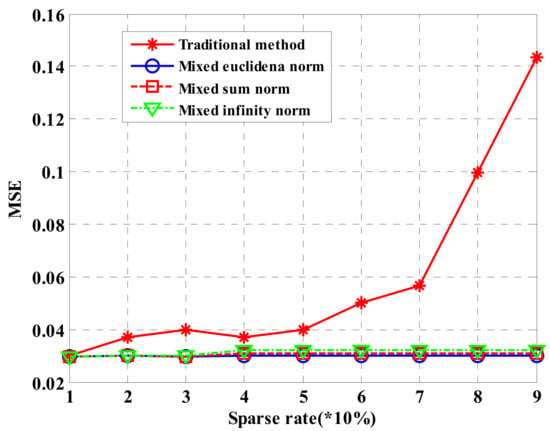

Figure 7 shows the height-estimation MSE of the four compressed sensing approaches under different SNRs. At low SNR, the height estimation performance of all four approaches is poor, and it improves as the SNR increases. However, the approaches based on global sparsity proposed in this paper clearly outperform the traditional processing approach.

Figure 7.

Comparison of the imaging performance of the four approaches under different SNRs.

4.1.4. Performance of Sparsity Sampling Imaging

To further investigate the influence of sparse sampling on height estimation, a certain amount of data is randomly selected from the echo data for InISAR imaging, and the height of each scattering point is then estimated by interferometric processing. The SNR is fixed at 10 dB, and the pitch angle of antenna TR1 is fixed at 0.05°. For each sparse sampling scheme, 100 Monte Carlo trials are run, and the MSE of the height estimate is calculated.

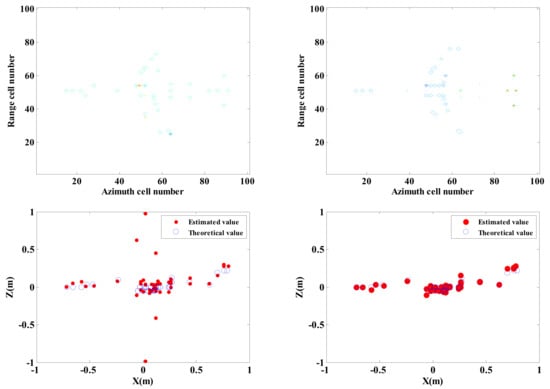

Figure 8 shows that the traditional approach fails to image the target effectively at an 80% undersampling rate (i.e., with 20% of the data retained), whereas the proposed approach still images the target accurately. Figure 9 shows that, under sparse sampling, height estimation based on global sparsity remains superior to that based on independent processing. Even when the number of measurements is small (10% of the full data), the global sparsity constraint still ensures better interferometric imaging performance.

Figure 8.

Imaging results of the traditional and proposed approaches with 20% of the data retained. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); interferometric phase images (top layer); complex images of channels 1 and 2 (second and third layers); final 3-D imaging results (last layer).

Figure 9.

Comparison of the imaging performance of the four approaches under different sparse sampling rates.

4.1.5. Computational Complexity

The running time of the traditional single-channel independent CS approach is dominated by step (2); its computational cost grows with the number of algorithm iterations and the number of signal samples. Compared with the single-channel independent CS approach, the proposed approach adds only a small number of addition operations, so it remains computationally efficient and the extra cost is negligible in practical applications. In terms of storage, the proposed approach occupies more memory than the single-channel independent CS approach, but this overhead can be effectively mitigated through parallelization.

4.2. Experiments and Analysis of Backhoe

Electromagnetic scattering echo data that are closer to actual measurements can be obtained by using high-frequency electromagnetic simulation software and a 3-D model of the target. In this paper, Backhoe electromagnetic simulation data are adopted to verify the effectiveness of the proposed approach. In the experiment, two groups of data at adjacent pitch angles of 42° and 42.07° are selected, and the whole aperture is divided into 17 sub-apertures. Specific parameters are shown in Table 3, and Figure 10 shows the CAD model of Backhoe.
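The sub-aperture division can be sketched as follows. The text does not state whether sub-apertures overlap or how many azimuth samples the full aperture holds, so this minimal sketch assumes contiguous, non-overlapping, near-equal sub-apertures and a hypothetical count of 170 azimuth samples. The same split is applied to both pitch-angle channels, so that sub-aperture k of each channel covers the same azimuth angles.

```python
def split_subapertures(num_angles, num_sub):
    """Contiguous, non-overlapping, near-equal index ranges covering the full aperture."""
    if not 1 <= num_sub <= num_angles:
        raise ValueError("need 1 <= num_sub <= num_angles")
    edges = [i * num_angles // num_sub for i in range(num_sub + 1)]
    return [range(edges[i], edges[i + 1]) for i in range(num_sub)]


def split_channels(channel_data, num_sub):
    """Apply the same split to every channel (list of per-channel azimuth samples)."""
    parts = split_subapertures(len(channel_data[0]), num_sub)
    return [[[ch[i] for i in part] for ch in channel_data] for part in parts]


# Hypothetical example: 170 azimuth samples per pitch-angle channel, 17 sub-apertures.
ch_42 = list(range(170))            # stand-ins for echoes at 42 deg pitch
ch_42_07 = list(range(1000, 1170))  # stand-ins for echoes at 42.07 deg pitch
subs = split_channels([ch_42, ch_42_07], 17)
print(len(subs), len(subs[0][0]))  # 17 10
```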

Table 3.

Parameters of electromagnetic simulation system.

Figure 10.

3-D CAD model of Backhoe.

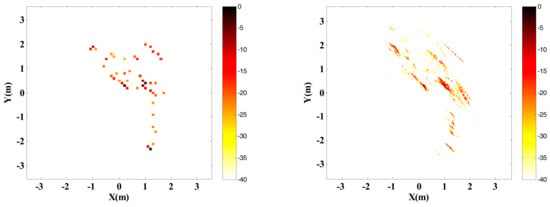

The traditional imaging approach and the proposed approach are each used to carry out InISAR imaging, and the resulting 3-D distributions of target scattering points are shown in Figure 11.

Figure 11.

InISAR imaging results of Backhoe with complete data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

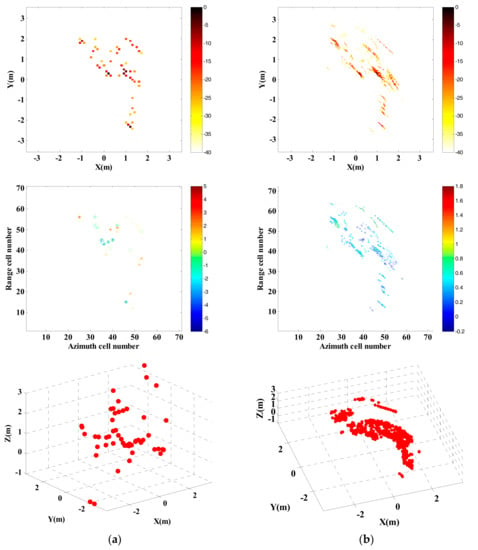

Next, 25% of the full data are randomly selected to generate sparsely sampled data, and the traditional imaging approach and the proposed approach are each used to carry out InISAR imaging. The 3-D distributions of target scattering points are shown in Figure 12. Figure 11 and Figure 12 show that, although the traditional approach can image the target with both complete and insufficient data, its reconstructed target information has a larger deviation, and its imaging performance degrades as the amount of data decreases. By contrast, the proposed approach yields higher-quality 3-D target images and, even with only 25% of the data, still estimates the height information accurately.

Figure 12.

InISAR imaging results of Backhoe with 25% data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

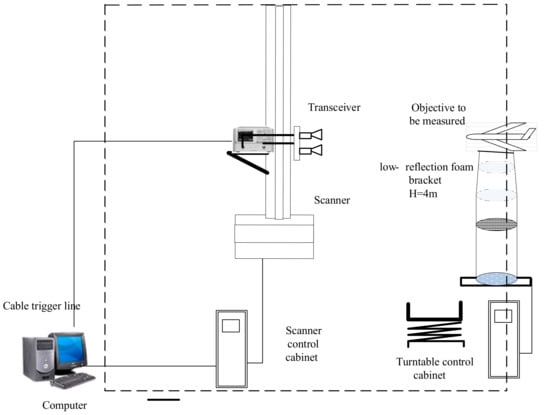

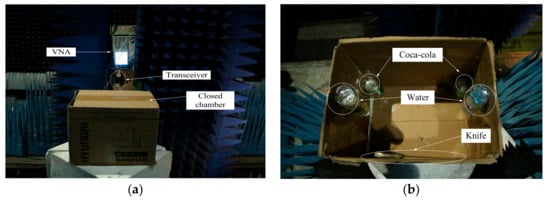

4.3. Actual Measurement Experiment in Anechoic Chamber

To further verify the effectiveness of the proposed approach from a practical-application perspective, a near-field InISAR test platform is established in an anechoic chamber; the test system is shown in Figure 13. The target is placed on a low-scattering foam bracket with a turntable underneath. The two antennas are fixed 0.2 m apart, and azimuth accumulation is achieved through the rotation of the turntable. Test parameters are shown in Table 4.

Figure 13.

Frame diagram for near-field InISAR imaging system in anechoic chamber.

Table 4.

Parameters of near-field InISAR test system.

The parameter calculation rules are as follows:

(1) Antenna baseline

The height information of the target is mainly calculated from the phase difference between the propagation paths of the two antennas. In general, the interferometric phase difference is a periodic function with a period of $2\pi$. To avoid height ambiguity, the interferometric phase difference must satisfy $|\Delta\varphi| \le \pi$, so the baseline length must satisfy , where  represents the transmitting frequency,  represents the distance from the receiving/transmitting antenna to the target, and  represents the maximum height of the target.
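The baseline bound itself is missing from the text, so the sketch below derives one under a stated assumption: one antenna transmits and both receive, so the two receive paths differ by about $L h / R$ and the interferometric phase is $\Delta\varphi \approx 2\pi f L h/(cR)$. Requiring $|\Delta\varphi| \le \pi$ for heights up to the maximum then gives $L \le cR/(2 f h_{\max})$. The numbers in the example are hypothetical, not the values of Table 4.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def interferometric_phase(freq_hz, baseline_m, range_m, height_m):
    """Assumed one-transmitter/two-receiver geometry: the receive paths differ by
    about baseline * height / range, giving this phase difference (radians)."""
    return 2 * math.pi * freq_hz * baseline_m * height_m / (C * range_m)


def max_unambiguous_baseline(freq_hz, range_m, max_height_m):
    """Largest baseline keeping |phase| <= pi for every height up to max_height_m."""
    return C * range_m / (2 * freq_hz * max_height_m)


# Hypothetical numbers: 10 GHz, 5 m range, 0.5 m maximum target height.
L_max = max_unambiguous_baseline(10e9, 5.0, 0.5)
print(f"{L_max:.4f} m")  # 0.1499 m
```

If instead each antenna transmits and receives its own echo, the path difference doubles and the admissible baseline halves.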

(2) Sampling principle

In ISAR imaging, the range resolution is $\rho_r = c/(2B)$ and the azimuth resolution is $\rho_a = \lambda_c/(2\Delta\theta)$, where $c$ is the speed of light, $B$ represents the signal bandwidth, $\lambda_c$ is the center wavelength, and $\Delta\theta$ represents the azimuth accumulation angle. In actual imaging, the range resolution is generally set equal to the azimuth resolution. Given the resolution requirements, the signal bandwidth and the azimuth accumulation angle can therefore be determined through $B = c/(2\rho_r)$ and $\Delta\theta = \lambda_c/(2\rho_a)$.

Given the range resolution requirement, the frequency sampling interval, which is also taken as the step frequency interval, is .

Given the azimuth resolution requirement, the azimuth sampling interval is , where  represents the maximum size of the target.
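The sampling rules can be turned into a small planning function. This is a sketch under textbook far-field assumptions, not the paper's exact formulas (the interval expressions are missing from the text): the frequency step is chosen so the unambiguous range window $c/(2\Delta f)$ equals the target size $D$, and the azimuth step is $\lambda_{\min}/(2D)$ using the shortest wavelength in the band. The inputs in the example are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def isar_sampling_plan(center_freq_hz, resolution_m, max_size_m):
    """Bandwidth, accumulation angle and sampling steps for a target of size D.

    Textbook far-field ISAR relations with equal range and azimuth resolution;
    near-field corrections are ignored.
    """
    bandwidth = C / (2 * resolution_m)              # rho_r = c / (2B)
    lam_c = C / center_freq_hz                      # center wavelength
    accum_angle = lam_c / (2 * resolution_m)        # rho_a = lambda_c / (2 dtheta), rad
    freq_step = C / (2 * max_size_m)                # range window c / (2 df) equals D
    lam_min = C / (center_freq_hz + bandwidth / 2)  # shortest wavelength in the band
    angle_step = lam_min / (2 * max_size_m)         # azimuth sampling for extent D
    return {
        "bandwidth_hz": bandwidth,
        "accum_angle_rad": accum_angle,
        "freq_step_hz": freq_step,
        "num_freqs": math.ceil(round(bandwidth / freq_step, 9)) + 1,
        "angle_step_rad": angle_step,
        "num_angles": math.ceil(round(accum_angle / angle_step, 9)) + 1,
    }


# Hypothetical inputs: 10 GHz center, 5 cm resolution, 0.5 m target.
plan = isar_sampling_plan(10e9, 0.05, 0.5)
for key, value in plan.items():
    print(key, value)
```

Note that the number of frequency points reduces to roughly $D/\rho_r + 1$, which is why a larger target or a finer resolution drives up the amount of echo data that compressed sampling later tries to reduce.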

(3) Distance from antenna and target

Since this paper focuses on near-field imaging, the distance $R$ from the antenna to the target should in principle satisfy $R < 2D^2/\lambda$, where $D$ represents the maximum size of the target and $\lambda$ represents the wavelength of the incident electromagnetic wave.
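A quick check of the near-field condition, assuming the conventional Fraunhofer boundary $2D^2/\lambda$; the target size and frequency in the example are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def fraunhofer_distance(max_size_m, freq_hz):
    """Conventional far-field boundary 2 D^2 / lambda for an object of size D."""
    wavelength = C / freq_hz
    return 2 * max_size_m ** 2 / wavelength


def is_near_field(range_m, max_size_m, freq_hz):
    """True when the antenna-target distance lies inside the Fraunhofer distance."""
    return range_m < fraunhofer_distance(max_size_m, freq_hz)


# Hypothetical example: a 0.5 m target illuminated at 10 GHz.
print(f"{fraunhofer_distance(0.5, 10e9):.2f} m")  # 16.68 m
print(is_near_field(5.0, 0.5, 10e9))              # True
```

Because the boundary grows with the square of the target size, even a modest target at X-band sits well inside the near field at typical anechoic-chamber distances.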

In addition, there is no scanning in the vertical direction: the two antennas are fixed, the beam center is fixed, and only the target rotates in InISAR. As mentioned in Section 4.3, in the experiment the range sampling interval and the azimuth sampling interval are  and , respectively.

The test uses a stepped-frequency signal, which readily achieves a wide bandwidth while placing low demands on the hardware system. To facilitate the application of CS in the test, this paper adopts a deterministic sparse observation approach based on the Cat sequence for compressed sampling in the range direction. The steps for generating the random sequence with the Cat map and constructing the observation matrix are as follows:

(1) Generate a chaotic sequence according to the Cat map equation, which is defined as:

where  denotes casting out the integer part of a real number (i.e., keeping its fractional part), namely . The  sequence is selected to construct the required deterministic random sequence.

(2) To use the chaotic sequence in its stable region, discard the values at the front of the sequence, i.e., select  as the starting point of sampling. Meanwhile, to ensure mutual independence of the elements in the chaotic sequence, sample the generated sequence at an interval of :

After obtaining the output sequence of Formula (33), divide  into  equal intervals. In each interval, locate the maximum value, assign 1 to the corresponding position of , and set all other entries to zero:

where each row is a random sequence of length , with the value 1 at the position of the maximum value of the chaotic sequence produced in  within the corresponding interval, and zeros elsewhere.
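The construction above can be sketched as follows. The Cat-map equation, starting point and decimation interval are missing from the text, so this sketch assumes the classical Arnold cat map $x' = (x + y) \bmod 1$, $y' = (x + 2y) \bmod 1$, keeps its x-component, and uses hypothetical values for the seed (`x0`, `y0`), the number of discarded iterates (`discard`) and the decimation step (`step`). It also assumes that an N-point sequence is split into M equal intervals, so each row selects exactly one frequency step.

```python
def cat_map_sequence(x0, y0, length, discard, step):
    """x-components of the assumed Arnold cat map x' = (x + y) mod 1,
    y' = (x + 2y) mod 1, after dropping `discard` iterates and keeping
    every `step`-th one."""
    x, y = x0, y0
    out = []
    n = 0
    while len(out) < length:
        x, y = (x + y) % 1.0, (x + 2.0 * y) % 1.0
        if n >= discard and (n - discard) % step == 0:
            out.append(x)
        n += 1
    return out


def cat_selection_matrix(num_meas, num_freqs, x0=0.31, y0=0.67, discard=500, step=3):
    """M x N 0/1 observation matrix: row i has a single 1 at the position of the
    largest chaotic value inside the i-th of M equal intervals of the N points."""
    if not 1 <= num_meas <= num_freqs:
        raise ValueError("need 1 <= num_meas <= num_freqs")
    seq = cat_map_sequence(x0, y0, num_freqs, discard, step)
    rows = []
    for i in range(num_meas):
        lo, hi = i * num_freqs // num_meas, (i + 1) * num_freqs // num_meas
        k = max(range(lo, hi), key=lambda j: seq[j])
        row = [0] * num_freqs
        row[k] = 1
        rows.append(row)
    return rows


# Hypothetical example: keep 8 of 64 frequency steps.
phi = cat_selection_matrix(8, 64)
selected = [row.index(1) for row in phi]  # indices of the frequency steps to transmit
print(selected)
```

Because the sequence is deterministic, the transmitter and the reconstruction side can regenerate the same observation matrix from the seed alone, and picking one point per interval spreads the retained frequencies across the whole band.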

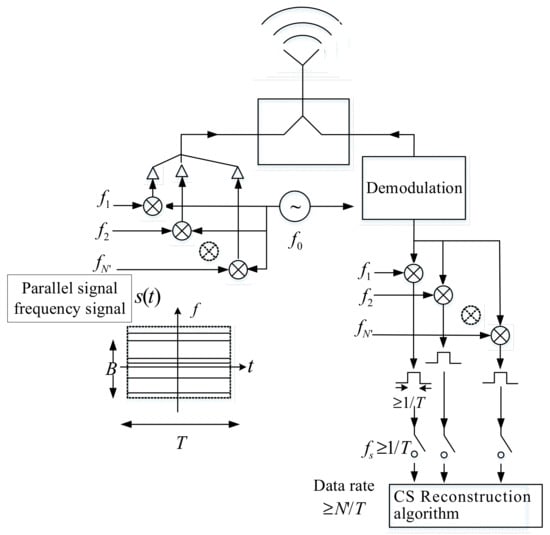

In practical applications, the randomly selected single-frequency signals are transmitted in parallel, with a data rate of . The implementation process is shown in Figure 14.

Figure 14.

Range compressed sampling process of stepped frequency signal.

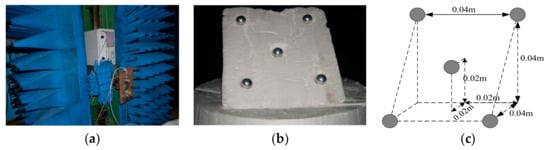

Figure 15 shows optical pictures of the measurement system and the target distribution. Figure 16 and Figure 17 show the imaging results of the two approaches with complete data and with compressed sampling data, respectively. With the traditional approach, the positions of the scattering points in the two channels do not fully correspond, so the target height information cannot be completely estimated; in particular, effective height information cannot be extracted at all when the compression rate is high. By contrast, the proposed approach estimates the target height accurately with both full and compressed sampling data.

Figure 15.

Target model of five metal balls. (a) Scanning frame and probe; (b) optical picture of five balls; (c) distribution of target spatial position.

Figure 16.

InISAR imaging results of five metal balls with complete data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

Figure 17.

InISAR imaging results of five metal balls with 20% data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

Table 5 and Table 6 show that the traditional approach cannot ensure that each scattering point occupies a consistent position in the images of different channels, and the inconsistency becomes more obvious as the compression rate increases. In contrast, the proposed approach performs reconstruction through multi-channel joint sparsity, which ensures that each scattering point falls in the same pixel in the images of all channels and thus facilitates extraction of the target height information.
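The link between pixel consistency and height extraction can be made concrete with a short sketch. It assumes the same one-transmitter/two-receiver phase model as the baseline discussion, $\Delta\varphi \approx 2\pi f L h/(cR)$, so $h = \Delta\varphi\, cR/(2\pi f L)$, and it only uses pixels where both channel images contain energy. A scatterer that lands on different pixels in the two channels therefore produces no height estimate. The images and parameters in the example are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s


def heights_from_images(img1, img2, freq_hz, baseline_m, range_m, threshold):
    """Per-pixel height from the interferometric phase of two co-registered
    channel images (2-D lists of complex values).

    Only pixels where BOTH channels exceed `threshold` are used. Assumed model:
    dphi = 2*pi*f*L*h / (c*R)  =>  h = dphi * c * R / (2*pi*f*L).
    """
    heights = {}
    for row, (line1, line2) in enumerate(zip(img1, img2)):
        for col, (s1, s2) in enumerate(zip(line1, line2)):
            if abs(s1) > threshold and abs(s2) > threshold:
                dphi = cmath.phase(s1 * s2.conjugate())  # wrapped to (-pi, pi]
                heights[(row, col)] = dphi * C * range_m / (2 * math.pi * freq_hz * baseline_m)
    return heights


# Hypothetical example: one scatterer co-registered at (1, 2); another one
# misregistered between (0, 0) in channel 1 and (0, 1) in channel 2.
f, L, R, h_true = 10e9, 0.1, 5.0, 0.1
dphi = 2 * math.pi * f * L * h_true / (C * R)
img1 = [[1.0, 0, 0], [0, 0, cmath.exp(1j * (0.3 + dphi))]]
img2 = [[0, 1.0, 0], [0, 0, cmath.exp(1j * 0.3)]]
print(heights_from_images(img1, img2, f, L, R, threshold=0.5))
```

Only the co-registered pixel yields a height; the misregistered scatterer is lost, which mirrors the failure mode of independent per-channel reconstruction.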

Table 5.

Target position information provided with complete data.

Table 6.

Target position information provided with compressed sampling data.

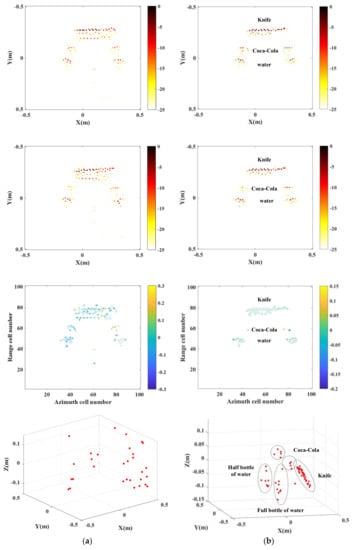

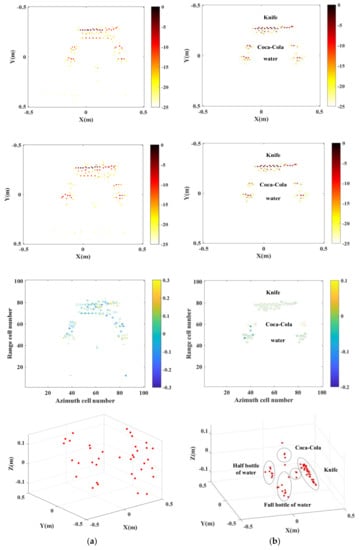

One practical application of the proposed approach is security inspection. Here, we detect and image targets inside a closed box placed in the anechoic chamber. The box simulates a luggage carrier and contains one knife, two bottles of water (one full and one half-full), and two bottles of Coca-Cola. The test parameters are the same as those shown in Table 4. Figure 18 shows the test system, the target scene, and the distribution of targets within the box, and the imaging results with complete data are shown in Figure 19.

Figure 18.

Imaging test of the closed box. (a) Imaging system and target scene; (b) distribution of targets.

Figure 19.

InISAR imaging results of anechoic chamber target with complete data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

As can be seen from Figure 20, with only 20% of the echo data, the traditional imaging approach cannot image the targets effectively. The proposed imaging approach, however, yields clear target images that show the shapes and locations of the knife, the full bottle of water, the half-full bottle of water, and the bottles of Coca-Cola in the box.

Figure 20.

InISAR imaging results of anechoic chamber target with 20% data. (a) Traditional imaging processing; (b) imaging processing of proposed approach (mixed Euclidean norm); complex images of channels 1 and 2 (first and second layers); interferometric phase images (third layer); final 3-D imaging results (last layer).

5. Conclusions

Focusing on near-field ISAR imaging, this paper puts forward an interferometric near-field 3-D imaging approach based on joint sparse reconstruction. Because the joint sparsity constraint effectively exploits the scattering characteristics of the target across different channels, the approach combines interferometric processing with sparse optimization. In addition to acquiring near-field high-resolution 3-D images from fewer observed echoes, it accurately reflects the position information of scattering points. Moreover, by adopting sub-aperture synthesis, it effectively addresses the low accuracy of the imaged scattering azimuth for anisotropic targets, whose scattering varies with direction. As verified by tests, the proposed imaging approach yields higher-quality 3-D views of the target, providing a reliable basis for target identification and other applications. Since the reconstruction is OMP-based, its computational complexity is low. Future work will investigate rapid InISAR near-field 3-D imaging approaches that combine the proposed method with traditional near-field imaging approaches.

Author Contributions

Y.F. was responsible for all of the theoretical work, performed the simulation and analyzed experimental data; B.W., Z.S. and C.S. conceived and designed the experiments; S.W. and J.H. revised the paper.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61472324 and 61771369. The APC was funded by grant 61472324.

Acknowledgments

The authors would like to thank the journal manager, the handling editor, and the anonymous reviewers for their valuable and helpful comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yu, D.; Liu, W.L.; Zhang, Z.H. Near field scattering measurement based on ISAR imaging technique. In Proceedings of the IEEE International Symposium on Antennas, Propagation & EM Theory, Xi’an, China, 22–26 October 2012; pp. 725–728.

- Kharkovsky, S.; Zoughi, R. Microwave and millimeter wave nondestructive testing and evaluation—Overview and recent advances. IEEE Instrum. Meas. Mag. 2007, 10, 26–38.

- Yang, X.H.; Zheng, Y.R.; Ghasr, M.T.; Donnell, K.M. Microwave imaging from sparse measurements for near-field synthetic aperture radar. IEEE Trans. Instrum. Meas. 2017, 66, 2680–2692.

- Sheen, D.M.; McMakin, D.L.; Hall, T.E. Near-field three-dimensional radar imaging techniques and applications. Appl. Opt. 2010, 49, E83–E93.

- Sheen, D.M.; McMakin, D.L.; Hall, T.E. Three-dimensional millimeter-wave imaging for concealed weapon detection. IEEE Trans. Microw. Theory Tech. 2001, 49, 1581–1592.

- Sheen, D.M.; McMakin, D.L.; Hall, T.E. Combined illumination cylindrical millimeter-wave imaging technique for concealed weapon detection. In Passive Millimeter-Wave Imaging Technology IV, Proceedings of the AeroSense, Orlando, FL, USA, 24–28 April 2000; SPIE: Washington, DC, USA, 2000; pp. 52–60.

- Sheen, D.M.; McMakin, D.L. Three-dimensional radar imaging techniques and systems for near-field applications. In Proceedings of the SPIE Defense + Security, Baltimore, MD, USA, 12 May 2016.

- Fang, Y.; Wang, B.P.; Sun, C.; Song, Z.X.; Wang, S.Z. Near field 3-D imaging approach for joint high-resolution imaging and phase error correction. J. Syst. Eng. Electron. 2017, 28, 199–211.

- Fallahpour, M.; Zoughi, R. Fast 3-D qualitative method for through-wall imaging and structural health monitoring. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2463–2467.

- Jalilvand, M.; Li, X.Y.; Zwirello, L.; Zwick, T. Ultra wideband compact near-field imaging system for breast cancer detection. IET Microw. Antennas Propag. 2015, 9, 1009–1014.

- Fear, E.C.; Hagness, S.C.; Meaney, P.M.; Okoniewski, M.; Stuchly, M.A. Enhancing breast tumor detection with near-field imaging. IEEE Microw. Mag. 2002, 3, 48–56.

- Zhu, R.Q.; Zhou, J.X.; Jiang, G.; Fu, Q. Range migration algorithm for near-field MIMO-SAR imaging. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2280–2284.

- Zhuge, X.D.; Yarovoy, A.G. Three-dimensional near-field MIMO array imaging using range migration techniques. IEEE Trans. Image Process. 2012, 21, 3026–3033.

- Fortuny, J. Efficient Algorithms for Three-Dimensional Near-Field Synthetic Aperture Radar Imaging. Ph.D. Thesis, University of Karlsruhe, Karlsruhe, Germany, 2001; pp. 15–43.

- Demirci, S.; Cetinkaya, H.; Tekbas, M.; Yigit, E.; Ozdemir, C.; Vertiy, A. Back-projection algorithm for ISAR imaging of near-field concealed objects. In Proceedings of the 2011 XXXth URSI General Assembly and Scientific Symposium, Istanbul, Turkey, 13–20 August 2011; pp. 1–4.

- Zhang, Y.; Deng, B.; Yang, Q.; Gao, J.K.; Qin, Y.L.; Wang, H.Q. Near-field three-dimensional planar millimeter-wave holographic imaging by using frequency scaling algorithm. Sensors 2017, 17, 2438.

- Li, X.; Bond, E.J.; van Veen, B.D. An overview of ultra-wideband microwave imaging via space-time beamforming for early-stage breast-cancer detection. IEEE Antennas Propag. Mag. 2005, 47, 19–34.

- Kan, Y.Z.; Zhu, Y.F.; Tang, L.; Fu, Q.; Pei, H.C. FGG-NUFFT-based method for near-field 3-D imaging using millimeter waves. Sensors 2016, 16, 1–15.

- Li, S.Y.; Zhu, B.C.; Sun, H.J. NUFFT-Based Near-Field Imaging Technique for Far-Field Radar Cross Section Calculation. IEEE Antennas Wirel. Propag. Lett. 2010, 9, 550–553.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candes, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Li, S.Y.; Zhao, G.Q.; Li, H.M.; Ren, B.L. Near-field radar imaging via compressive sensing. IEEE Trans. Antennas Propag. 2015, 63, 828–833.

- Bi, D.J.; Xie, Y.L.; Ma, L.; Li, X.F.; Yang, X.H.; Zheng, Y.R. Multifrequency compressed sensing for 2-D near-field synthetic aperture radar image reconstruction. IEEE Trans. Instrum. Meas. 2017, 66, 777–791.

- Liu, Y.B.; Li, N.; Wang, R.; Deng, Y.K. Achieving high-quality three-dimensional InISAR imaging of maneuvering via super-resolution ISAR imaging by exploiting sparseness. IEEE Geosci. Remote Sens. Lett. 2014, 11, 828–832.

- Zhang, Q.; Yeo, T.S.; Du, G.; Zhang, S.H. Estimation of three-dimensional motion parameters in interferometric ISAR imaging. IEEE Trans. Geosci. Remote Sens. 2004, 42, 292–300.

- Lazarov, A.D.; Minchev, C.N. ISAR imaging reconstruction technique with stepped frequency modulation and multiple receivers. In Proceedings of the 24th Digital Avionics Systems Conference (DASC’05), Washington, DC, USA, 30 October–3 November 2005; 14E2-11.

- Morabito, A.F.; Palmeri, R.; Isernia, T. A Compressive-Sensing-inspired procedure for array antenna diagnostics by a small number of phaseless measurements. IEEE Trans. Antennas Propag. 2016, 64, 3260–3265.

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Broquetas, A.; Palau, J.; Jofre, L.; Cardama, A. Spherical wave near-field imaging and radar cross-section measurement. IEEE Trans. Antennas Propag. 1998, 46, 730–735.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).