Abstract

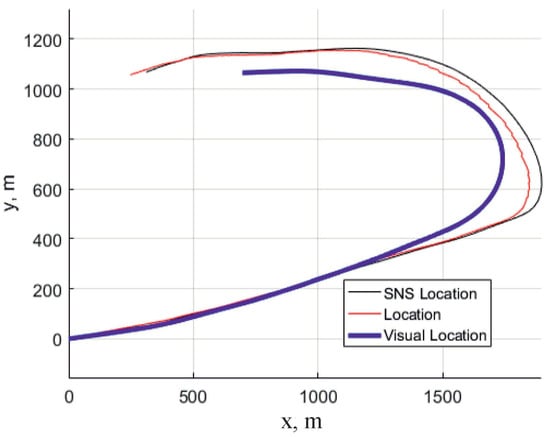

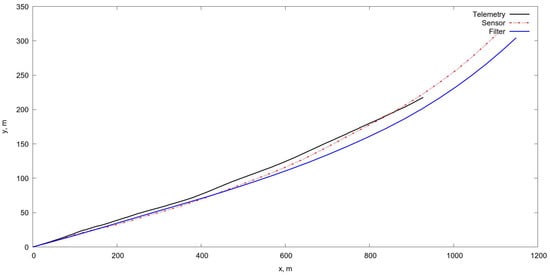

The article presents an overview of theoretical and experimental work on estimating the motion parameters of unmanned aerial vehicles (UAVs) by integrating video measurements from the on-board optoelectronic camera with data from the UAV’s own inertial navigation system (INS). Various approaches described in the literature show good characteristics in computer simulations or in fairly simple, near-laboratory conditions; their use, however, demonstrates the considerable complexity of adapting camera parameters to the changing conditions of a real flight. In our experiments, we applied computer simulation methods to real images and processed videos recorded during real flights. For example, it was noted that when the reference images differ greatly in scale and aspect angle from the images observed in flight, the methodology of singular (feature) points becomes very difficult to use. At the same time, matching the observed and reference images using rectilinear segments, such as images of road sections and building walls, looks quite promising. In addition, in our experiments we computed the projective transformation matrix from frame to frame, which, together with filtering estimates of the linear and angular velocities, provides additional possibilities for estimating the UAV position. Data on UAV position determination by video navigation methods, obtained during real flights, are presented. New approaches to video navigation based on matching rectilinear segments and characteristic curvilinear elements, and on segmentation of textured and colored regions, are demonstrated. The application of frame-to-frame projective transformations is also shown; it gives estimates of the displacements and rotations of the vehicle and thereby serves UAV position estimation by filtering.
Thus, the aim of the work was to analyze various approaches to UAV navigation using video data as an additional source of information about the position and velocity of the vehicle.

1. Introduction

The integration of observation channels in the control systems of objects whose motion is subject to perturbations and measurement errors is based on the theory of observation control, which originated in the early 1960s. The first works on this topic were based on a simple property of the Kalman filter: the root-mean-square estimation error can be determined in advance, without observations, by solving the Riccati equation for the error covariance matrix [1]. The development of this methodology made it possible to solve problems combining discrete and continuous observations for stochastic systems of discrete-continuous type. At the same time, methods were developed for problems with constraints on the composition of observations, including temporal and energy constraints both on individual channels and in aggregate. For a wide class of problems with convex structure, necessary and sufficient optimality conditions were obtained, both in the form of dynamic programming equations and of the generalized maximum principle, which opens the possibility of a numerical solution [2,3]. The tasks of integrating surveillance and control systems for UAVs open a wide new field of application for observation control methods, especially in autonomous flight. One of the most important problems is detecting faulty operation of individual observation subsystems, in which case the navigation tasks should be redistributed or transferred to backup subsystems or to other systems operating on different physical principles [4].
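The property that the estimation error can be computed in advance can be illustrated with a minimal sketch, assuming a linear discrete-time Kalman filter with a hypothetical constant-velocity model; the matrices `A`, `Q`, `H`, `R`, the numeric values and the helper `covariance_history` are illustrative assumptions, not taken from [1,2,3]. The recursion uses only the model and the observation schedule, never the measured values, so two candidate schedules can be compared before any data arrive.

```python
import numpy as np


def covariance_history(A, Q, H, R, P0, schedule):
    """Propagate the Kalman filter error covariance without any measured data.

    schedule[k] is True if an observation is taken at step k. Only the model
    matrices enter the Riccati recursion, so it can be evaluated before flight.
    """
    P = P0.copy()
    identity = np.eye(A.shape[0])
    history = []
    for observe in schedule:
        P = A @ P @ A.T + Q                      # time update (prediction)
        if observe:
            S = H @ P @ H.T + R                  # innovation covariance
            K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
            P = (identity - K @ H) @ P           # measurement update
            P = 0.5 * (P + P.T)                  # keep P numerically symmetric
        history.append(P.copy())
    return history


# Hypothetical constant-velocity model in which only the position is observed.
dt, q, r = 1.0, 0.01, 1.0
A = np.array([[1.0, dt], [0.0, 1.0]])
Q = q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
H = np.array([[1.0, 0.0]])
R = np.array([[r]])
P0 = 100.0 * np.eye(2)

steps = 200
every_step = covariance_history(A, Q, H, R, P0, [True] * steps)
every_other = covariance_history(A, Q, H, R, P0, [k % 2 == 1 for k in range(steps)])
print("final position variance, every step:    ", every_step[-1][0, 0])
print("final position variance, every 2nd step:", every_other[-1][0, 0])
```

Comparing the two printed variances shows, before any data are collected, how much accuracy is lost by observing half as often; this is the kind of trade-off that observation control optimizes under time and energy constraints.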

A typical example is navigation through satellite channels such as the global positioning system (GPS), which is quite reliable in simple flight conditions; in complex terrain (mountains, gorges), however, the vehicle has to determine its position with the help of other systems based, for example, on landmarks observed with optoelectronic cameras or radar [5,6,7,8]. This raises the serious problem of converting the signals of these systems into data suitable for navigation. A human operator copes with this task on the basis of training. For computer vision this is a serious problem, and it is one of the mainstream topics in UAV autonomous flight [9]. Meanwhile, the prospect of creating artificial intelligence systems of this level for UAV applications is still far from reality.

At the same time, simple flight tasks, such as reaching the aerial survey area or tracking a reference trajectory [10,11,12], organizing data transfer under limited time and energy storage [13,14], and even landing [15], are quite feasible for UAVs operating in autonomous mode with reliable navigation aids.

Unmanned aerial, land-based and underwater vehicles that perform autonomous missions use, as a rule, an on-board navigation system supplemented by sensors of various physical natures. Unlike remote control systems, in which these sensors present information in as operator-friendly a form as possible, autonomous systems must convert the measurement results into input signals for the control system, which requires other approaches. This is especially evident in the example of an optical or optoelectronic surveillance system, whose purpose in remote control mode is to provide the operator with the best possible image of the surrounding terrain. In autonomous flight, by contrast, the observing system should be able to search for characteristic objects in the observed landscape, give the control system their coordinates, and estimate the distances between them. Of course, providing an excellent image and determining the metric properties of the observed images are related tasks, and in no case does one cancel the other. However, what a human operator does automatically, based on a sufficiently high-quality image of the terrain, the readings of other sensors and, undoubtedly, previous experience, the control system algorithm must do using data from video and other systems, with the same accuracy as the human operator.
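As a minimal illustration of turning an image observation into metric data for the control system, the sketch below assumes an ideal pinhole camera pointing straight down (nadir), flat terrain, a known altitude and no lens distortion; the function names and parameters (`focal_px`, `cx`, `cy`) are hypothetical and do not come from the paper.

```python
import math


def pixel_to_ground(u, v, altitude_m, focal_px, cx, cy):
    """Back-project pixel (u, v) to metric offsets on the ground plane.

    Assumes a nadir-pointing pinhole camera over flat terrain: the offsets are
    measured from the point directly below the camera, along the image axes.
    """
    scale = altitude_m / focal_px          # metres per pixel on the ground
    return (u - cx) * scale, (v - cy) * scale


def ground_distance(p1, p2, altitude_m, focal_px, cx, cy):
    """Metric distance between two objects observed at pixels p1 and p2."""
    x1, y1 = pixel_to_ground(p1[0], p1[1], altitude_m, focal_px, cx, cy)
    x2, y2 = pixel_to_ground(p2[0], p2[1], altitude_m, focal_px, cx, cy)
    return math.hypot(x2 - x1, y2 - y1)


# Two objects 300 px apart horizontally and 400 px vertically, seen from 100 m
# with a 1000 px focal length: 0.1 m per pixel, i.e. about 50 m apart.
print(ground_distance((640, 360), (940, 760), 100.0, 1000.0, 640.0, 360.0))
```

With a tilted camera or uneven terrain, the same conversion would additionally require the camera attitude (for example from the INS) and an elevation model.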

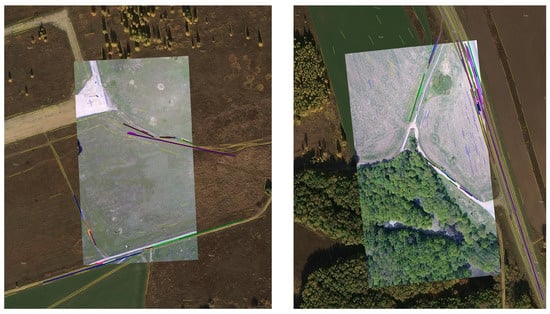

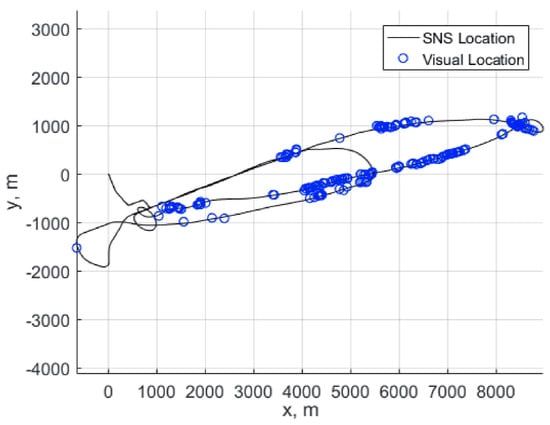

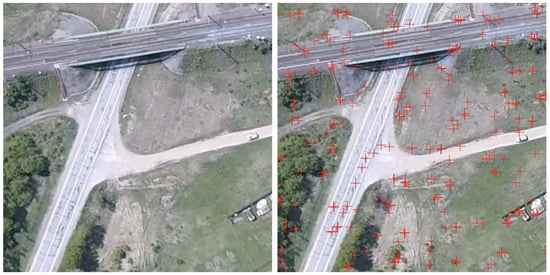

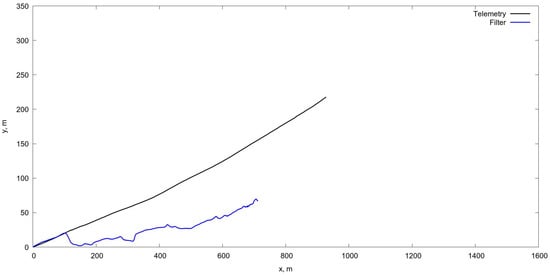

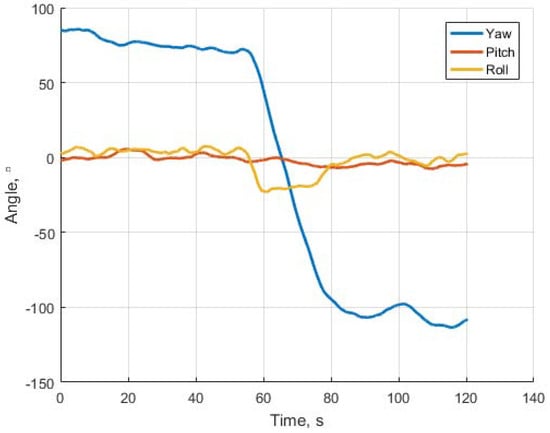

In this paper, various approaches to the integration of surveillance channels for UAVs are presented, some of which were tested on real data obtained during flights in which the underlying earth’s surface was recorded by an on-board video camera. In our previous works [16,17], we examined an approach to video navigation based on detecting special “points” whose coordinates are predetermined on a reference image of the terrain formed from satellite or aerial imagery [12]. However, in practical use of this method, difficulties were encountered in identifying these specific points. These difficulties are associated with a significant difference in quality between the on-board and reference images, combined with an essential difference in scale. Therefore, with real images the best results were obtained by matching images not by points but by rectilinear segments, such as images of road sections and building walls [18]. This algorithm is a new modification of navigation based on “sparse” optical flow (OF). Moreover, in areas where the template and on-board images cannot be matched, tracing the UAV trajectory requires methods based on so-called “dense” OF, which determine the angular and linear velocities of the camera.
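To illustrate how “dense” OF can yield the angular and linear velocities of the camera, the following sketch fits the classical instantaneous motion-field equations of a pinhole camera to a flow field by linear least squares. It assumes normalized image coordinates (unit focal length), a static scene, and a camera looking straight down at flat terrain so that every point has the same depth h; under these assumptions only the translational velocity divided by h is observable. The function names and synthetic values are hypothetical, and this is a generic technique rather than the specific algorithm of the works cited here.

```python
import numpy as np


def motion_field_rows(x, y):
    """Rows of the linear motion-field model for one normalized image point.

    Unknowns: (Tx/h, Ty/h, Tz/h, wx, wy, wz), i.e. the camera translational
    velocity scaled by the common depth h, and the camera angular velocity.
    """
    row_u = [-1.0, 0.0, x, x * y, -(1.0 + x * x), y]
    row_v = [0.0, -1.0, y, 1.0 + y * y, -x * y, -x]
    return row_u, row_v


def estimate_ego_motion(points, flow):
    """Least-squares ego-motion from dense flow, assuming all points at depth h."""
    A, b = [], []
    for (x, y), (u, v) in zip(points, flow):
        row_u, row_v = motion_field_rows(x, y)
        A += [row_u, row_v]
        b += [u, v]
    params, *_ = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)
    return params


# Synthetic check: a grid of normalized image points and a known motion.
xs, ys = np.meshgrid(np.linspace(-0.3, 0.3, 7), np.linspace(-0.3, 0.3, 7))
points = np.column_stack([xs.ravel(), ys.ravel()])
true_params = np.array([0.2, -0.05, 0.01, 0.002, -0.003, 0.01])
flow = [np.array(motion_field_rows(x, y)) @ true_params for x, y in points]
print(np.round(estimate_ego_motion(points, flow), 6))
```

In practice, the altitude h from a barometric altimeter or the INS would convert the scaled translation into metric velocity, and real flow is noisy, so a robust fit would be preferable to plain least squares.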

In the next section, Section 2, we present a review of various approaches to video navigation that were tested in work performed by a research group at the IITP RAS. Section 3 then considers various approaches to filtering for UAV position estimation. The approach to navigation based on computing projective matrices between successive frames recorded by the on-board camera is presented, together with the joint filtering algorithm, in Section 4. Section 5 concludes the paper.

We emphasize that the main contribution of the paper is a review of different approaches to UAV video navigation, together with the results of some experiments related to possible new developments that look promising for long-term autonomous missions performed by multi-purpose UAVs.

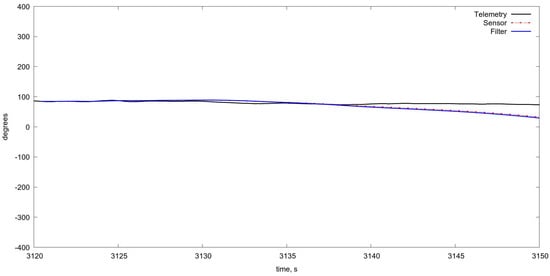

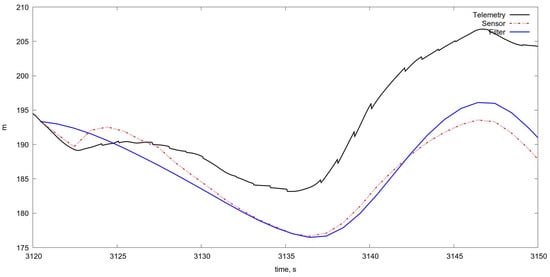

3. Joint Estimation of the UAV Attitude with the Aid of Filtering

3.1. Visual-Based Navigation Approaches

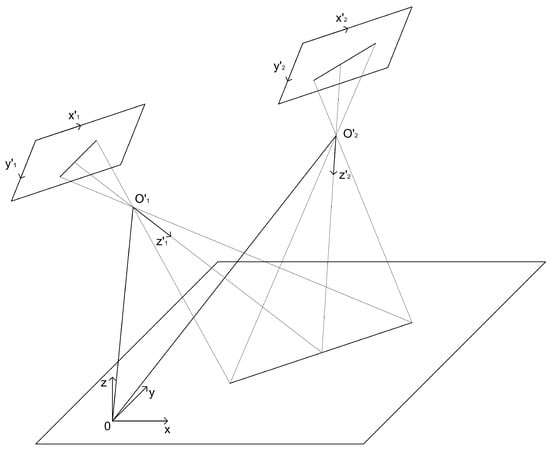

Several studies have demonstrated the effectiveness of approaches based on motion field estimation and feature tracking for visual odometry [70]. Vision-based methods have been proposed even for autonomous landing management [12]. In [47], a visual odometry based on geometric homography was proposed. However, homography analysis uses only the 2D coordinates of reference points, whereas evaluating the current UAV altitude requires 3D coordinates. All such approaches presume some recognition system that detects objects designated in advance, such as distinctive buildings, crossroads or mountain tops. The principal difficulties are the different scales and aspect angles of the observed and stored images, which necessitate a huge library of templates in the memory of the UAV control system. One can avoid this difficulty by using another approach based on observation of so-called feature points [71], which are invariant to scale and aspect angle. For this purpose, feature point technology [23] is used. In [10], an approach was suggested based on the correspondence between the coordinates of reference points observed by the on-board camera and those of reference points on a map loaded into the UAV’s memory before the mission starts. During the flight, these maps are compared with the frame of the terrain directly observed by the on-board video camera. As a result, one can determine the current location and orientation without error accumulation over time. These methods are invariant to some transformations and are also robust to noise, so the predetermined maps can differ in scale, aspect angle, season, luminosity, weather conditions, etc. This technology appeared in [72]. The contribution of [16] is the use of a modified unbiased pseudomeasurement filter for bearing-only observations of reference points with known terrain coordinates.
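The projective transformation used by homography-based approaches can be estimated from four or more point correspondences. Below is a minimal sketch of the normalized direct linear transform (DLT), assuming the correspondences are already matched and free of outliers; the helper names and the synthetic matrix `H_true` are hypothetical, and this is a generic textbook estimator rather than the specific method of [47].

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move the centroid to 0, mean distance to sqrt(2)."""
    pts = np.asarray(pts, dtype=float)
    centroid = pts.mean(axis=0)
    mean_dist = np.mean(np.linalg.norm(pts - centroid, axis=1))
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return (T @ pts_h.T).T, T


def estimate_homography(src, dst):
    """DLT estimate of the 3x3 matrix H with dst ~ H @ src (needs >= 4 points)."""
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y, _), (u, v, _) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)                # null vector = H in normalized coords
    H = np.linalg.inv(T_dst) @ H_n @ T_src    # undo both normalizations
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map 2D points through H and return inhomogeneous coordinates."""
    pts_h = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return pts_h[:, :2] / pts_h[:, 2:3]


H_true = np.array([[1.02, 0.01, 5.0],
                   [-0.01, 0.98, -3.0],
                   [1e-4, 2e-5, 1.0]])
src = np.array([[10, 20], [400, 30], [380, 300], [20, 290], [200, 150], [300, 80]], float)
dst = apply_homography(H_true, src)
print(np.round(estimate_homography(src, dst), 6))
```

With real feature matches, outliers are unavoidable, so a robust wrapper such as RANSAC would normally be used; OpenCV, for instance, offers `cv2.findHomography` with the `cv2.RANSAC` flag and `cv2.decomposeHomographyMat`, which returns candidate rotations, translations and plane normals given the camera matrix.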

3.2. Kalman Filter

To obtain metric data from visual observations, one first needs to make observations from different positions (i.e., triangulation) and then to apply nonlinear filtering. However, all nonlinear filters either have an unknown bias [48] or, like Bayesian-type estimation, are very difficult to implement on board [27,73]. Approaches to position estimation based on bearing-only observations were analyzed long ago, especially for submarine applications [49], and are now being applied to UAVs [46].
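The triangulation step can be sketched in 2D: each bearing taken from a known observer position defines a line through the landmark, and intersecting several such lines by linear least squares gives the landmark position. The sketch assumes noise-free bearings measured from the x-axis and known observer positions; names and numbers are hypothetical. With noisy bearings this linear (pseudolinear) estimate becomes biased, which is the difficulty with nonlinear filtering noted above.

```python
import numpy as np


def triangulate_bearings(observers, bearings):
    """Least-squares 2D landmark position from bearings at known observer positions.

    Each bearing theta (radians, measured from the x-axis) defines the line
    sin(theta) * (x - ox) - cos(theta) * (y - oy) = 0 through the observer.
    """
    observers = np.asarray(observers, dtype=float)
    bearings = np.asarray(bearings, dtype=float)
    s, c = np.sin(bearings), np.cos(bearings)
    A = np.column_stack([s, -c])
    b = s * observers[:, 0] - c * observers[:, 1]
    estimate, *_ = np.linalg.lstsq(A, b, rcond=None)
    return estimate


landmark = np.array([60.0, 120.0])
observers = np.array([[0.0, 0.0], [100.0, 0.0], [50.0, -80.0]])
bearings = np.arctan2(landmark[1] - observers[:, 1], landmark[0] - observers[:, 0])
print(triangulate_bearings(observers, bearings))
```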

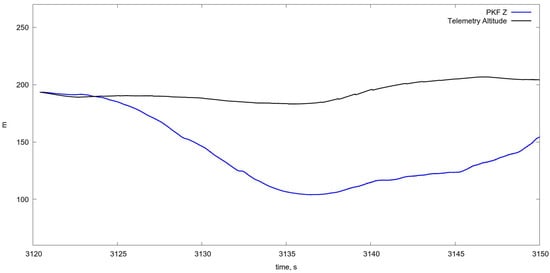

A comparison of different nonlinear filters for bearing-only observations in ground-based object localization [74] shows that the EKF (extended Kalman filter), the unscented Kalman filter, the particle filter and the pseudomeasurement filter give almost the same level of accuracy, while the pseudomeasurement filter is usually more stable and simpler to implement on board. This observation agrees with older results [49], where all these filters were compared for the localization of moving objects. It has been noted that all these filters are biased, which makes their use in data fusion rather problematic [45]. The principal requirement for such filters in data fusion is an unbiased estimate with a known mean-square error characterization. Among the variety of possible filters, the pseudomeasurement filter can easily be modified to satisfy these data fusion demands. The idea of such nonlinear filtering was developed by V. S. Pugachev and I. N. Sinitsyn in the form of so-called conditionally optimal filtering [50], which provides an unbiased estimate with minimum mean-square error within the class of linear filters. In our previous works, we developed such a filter (the so-called Pseudomeasurement Kalman Filter, PKF) for UAV position estimation and gave an algorithm for path planning along the reference trajectory under external perturbations and noisy measurements [16,53].
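The pseudomeasurement idea can be illustrated with a minimal recursive sketch: the nonlinear bearing equation is rewritten as a linear equation in the unknown position, and a standard Kalman update is applied to it. This is the generic pseudolinear filter, not the modified unbiased PKF of [16,53], whose bias compensation is not reproduced here; the hovering UAV, the landmark coordinates, the noise level and the function names are hypothetical assumptions.

```python
import numpy as np


def pseudolinear_update(x, P, landmark, bearing, sigma_bearing):
    """One pseudomeasurement Kalman update of the 2D UAV position.

    The bearing b from the UAV position p to a landmark L with known coordinates
    satisfies sin(b) * (Lx - px) - cos(b) * (Ly - py) = 0, which is rewritten as
    the linear pseudomeasurement z = H @ p with H = [sin b, -cos b].
    """
    s, c = np.sin(bearing), np.cos(bearing)
    H = np.array([[s, -c]])
    z = s * landmark[0] - c * landmark[1]
    predicted_range = np.linalg.norm(landmark - x)
    R = (predicted_range * sigma_bearing) ** 2      # first-order pseudo-noise variance
    S = (H @ P @ H.T).item() + R
    K = (P @ H.T) / S                               # 2x1 Kalman gain
    x = x + K.ravel() * (z - (H @ x).item())
    P = (np.eye(2) - K @ H) @ P
    return x, P


# Hypothetical scenario: hovering UAV, four landmarks with known coordinates (m).
gen = np.random.default_rng(0)
true_pos = np.array([10.0, -5.0])
landmarks = np.array([[200.0, 0.0], [0.0, 200.0], [-150.0, 100.0], [100.0, -180.0]])
sigma = 0.005                                       # bearing noise, rad (assumed)

x, P = np.zeros(2), 1e4 * np.eye(2)
for k in range(400):
    L = landmarks[k % len(landmarks)]
    d = L - true_pos
    bearing = np.arctan2(d[1], d[0]) + sigma * gen.standard_normal()
    x, P = pseudolinear_update(x, P, L, bearing, sigma)
print("estimate:", x, "error (m):", np.linalg.norm(x - true_pos))
```

Because `H` is built from the noisy bearing itself, the gain and the pseudomeasurement noise are correlated, which is the source of the bias discussed in [45]; removing this bias is the purpose of the modification described in the text.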

3.3. Optical Absolute Positioning

Aerospace maps of the terrain in the flight zone are loaded into the aircraft memory before the flight starts. During the flight, these maps are compared with the frame of the terrain directly observed by the on-board video camera. For this purpose, feature point technology [23] is used. As a result, one can determine the current location and orientation without error accumulation over time. These methods are invariant to some transformations and are also robust to noise, so the predetermined maps can differ in height, season, luminosity, weather conditions, etc. Moreover, since the previous aerial survey, the landscape may have changed due to human and natural activity. All approaches based on capturing objects designated in advance presume an on-board recognition system to detect and recognize such objects. Here we avoid this difficulty by observing feature points [71], which are invariant to scale and aspect angle. In addition, the modified Pseudomeasurement Kalman Filter (PKF) is used for UAV position estimation and in the control algorithm.

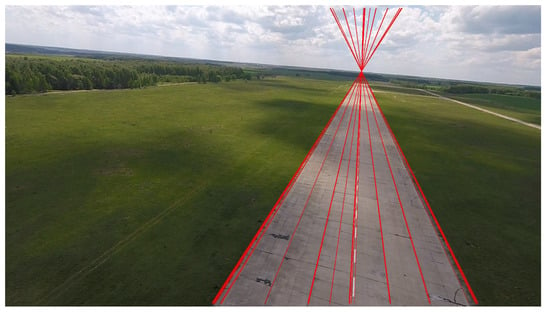

One should also mention epipole position estimation for absolute positioning [75], which helps when landing on a runway (see Figure 4).

Figure 4.

Landing on a runway with well-structured epipolar lines.
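Under pure camera translation, every flow vector points away from (or toward) the epipole, also called the focus of expansion, so the epipole can be found as the least-squares intersection of the lines carried by the flow vectors. The sketch below assumes rotation-free motion (in practice rotation would have to be compensated first, for example using the INS attitude) and synthetic flow; it is a generic estimator, not the algorithm of [75], and all names are hypothetical.

```python
import numpy as np


def estimate_epipole(points, flow):
    """Least-squares epipole (focus of expansion) from flow under pure translation.

    Each flow vector f at image point p lies on the line through p and the
    epipole e, so cross(f, e - p) = 0, which is linear in e:
        -f_y * e_x + f_x * e_y = f_x * p_y - f_y * p_x
    """
    points = np.asarray(points, dtype=float)
    flow = np.asarray(flow, dtype=float)
    A = np.column_stack([-flow[:, 1], flow[:, 0]])
    b = flow[:, 0] * points[:, 1] - flow[:, 1] * points[:, 0]
    e, *_ = np.linalg.lstsq(A, b, rcond=None)
    return e


# Synthetic radial flow away from a hypothetical epipole at (320, 240) pixels,
# with a different magnitude at each point (as varying scene depth would cause).
true_epipole = np.array([320.0, 240.0])
xs, ys = np.meshgrid(np.linspace(50, 600, 6), np.linspace(40, 440, 5))
points = np.column_stack([xs.ravel(), ys.ravel()])
scales = np.linspace(0.02, 0.08, len(points))
flow = scales[:, None] * (points - true_epipole)
print(estimate_epipole(points, flow))
```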

5. Conclusions

The article describes a number of approaches to video navigation based on observation of the earth’s surface during an autonomous UAV flight. It should be noted that their performance depends on external conditions and on the observed landscape. Therefore, it is difficult to choose the most promising approach in advance; most likely, it will be necessary to rely on a combination of algorithms, taking into account the environmental conditions and the computational and energy limitations of real UAVs. However, an important problem remains, namely, evaluating the estimation quality and selecting the most reliable observation channels during the flight. The theory of observation control opens the way to a solution, since filtering theory, as a rule, uses the discrepancy between predicted and observed values, and video data could help to detect such discrepancies. This is probably the main direction of future research in the integration of video with standard navigation systems.
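One common way to use the discrepancy between predicted and observed values for channel monitoring is a normalized innovation squared (NIS) test: the innovation is weighted by its covariance and compared with a chi-square quantile. The sketch assumes a 2D position fix from a video channel, a known innovation covariance `S` (in a Kalman filter, `H P H^T + R`) and a 95% gate; the function name and values are hypothetical, and this is a standard consistency check rather than a method proposed in the paper.

```python
import numpy as np

# 95% quantile of the chi-square distribution with 2 degrees of freedom.
CHI2_95_2DOF = 5.991


def innovation_gate(z, z_pred, S, threshold=CHI2_95_2DOF):
    """Normalized innovation squared (NIS) test for a 2D observation.

    z is the observed value, z_pred the filter prediction, and S the innovation
    covariance. Returns (accepted, nis); a large NIS flags the channel as suspect.
    """
    nu = np.asarray(z, dtype=float) - np.asarray(z_pred, dtype=float)
    nis = float(nu @ np.linalg.solve(S, nu))
    return nis <= threshold, nis


# Hypothetical video position fixes checked against an INS-based prediction
# with a 2 m standard deviation on each axis.
S = np.diag([4.0, 4.0])
predicted = [100.0, 50.0]
print(innovation_gate([101.0, 49.0], predicted, S))   # (True, 0.5): consistent
print(innovation_gate([110.0, 50.0], predicted, S))   # (False, 25.0): rejected
```

Repeated rejections from one channel would be the trigger for redistributing the navigation task to other subsystems, as discussed in the Introduction.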

Author Contributions

The work presented here was carried out in collaboration among all authors. All authors have contributed to, seen and approved the manuscript. B.M. is the main author, having conducted the survey and written the content. A.P. and I.K. developed and analyzed the projective matrix approach to UAV navigation. K.S. and A.M. were responsible for the parts related to the use of stochastic filtering in UAV position and attitude estimation. D.S. performed the analysis of video sequences for computing the sequence of projective matrices. E.K. developed the geolocalization algorithm based on linear objects.

Funding

This research was funded by Russian Science Foundation Grant 14-50-00150.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Miller, B.M. Optimal control of observations in the filtering of diffusion processes I, II. Autom. Remote Control 1985, 46, 207–214, 745–754.

- Miller, B.M.; Runggaldier, W.J. Optimization of observations: A stochastic control approach. SIAM J. Control Optim. 1997, 35, 1030–1052.

- Miller, B.M.; Rubinovich, E.Y. Impulsive Control in Continuous and Discrete-Continuous Systems; Kluwer Academic/Plenum Publishers: New York, NY, USA, 2003.

- Kolosov, K.S. Robust complex processing of UAV navigation measurements. Inf. Process. 2017, 17, 245–257.

- Andreev, K.; Rubinovich, E. UAV Guidance When Tracking a Ground Moving Target with Bearing-Only Measurements and Digital Elevation Map Support; Southern Federal University, Engineering Sciences: Rostov Oblast, Russia, 2015; pp. 185–195. (In Russian)

- Sidorchuk, D.S.; Volkov, V.V. Fusion of radar, visible and thermal imagery with account for differences in brightness and chromaticity perception. Sens. Syst. 2018, 32, 14–18. (In Russian)

- Andreev, K.V. Optimal Trajectories for Unmanned Aerial Vehicle Tracking the Moving Targets Using Linear Antenna Array. Control Sci. 2015, 5, 76–84. (In Russian)

- Abulkhanov, D.; Konovalenko, I.; Nikolaev, D.; Savchik, A.; Shvets, E.; Sidorchuk, D. Neural Network-based Feature Point Descriptors for Registration of Optical and SAR Images. In Proceedings of the 10th International Conference on Machine Vision (ICMV 2017), Vienna, Austria, 13–15 November 2017; Volume 10696.

- Kanellakis, C.; Nikolakopoulos, G. Survey on Computer Vision for UAVs: Current Developments and Trends. J. Intell. Robot. Syst. 2017, 87, 141–168.

- Konovalenko, I.; Miller, A.; Miller, B.; Nikolaev, D. UAV navigation on the basis of the feature points detection on underlying surface. In Proceedings of the 29th European Conference on Modelling and Simulation (ECMS 2015), Albena, Bulgaria, 26–29 May 2015; pp. 499–505.

- Karpenko, S.; Konovalenko, I.; Miller, A.; Miller, B.; Nikolaev, D. Visual navigation of the UAVs on the basis of 3D natural landmarks. In Proceedings of the Eighth International Conference on Machine Vision ICMV 2015, Barcelona, Spain, 19–20 November 2015; Volume 9875, pp. 1–10.

- Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A Vision-Based Guidance System for UAV Navigation and Safe Landing using Natural Landmarks. J. Intell. Robot. Syst. 2010, 57, 233–257.

- Miller, B.; Miller, G.; Semenikhin, K. Optimization of the Data Transmission Flow from Moving Object to Nonhomogeneous Network of Base Stations. IFAC PapersOnLine 2017, 50, 6160–6165.

- Miller, B.M.; Miller, G.B.; Semenikhin, V.K. Optimal Channel Choice for Lossy Data Flow Transmission. Autom. Remote Control 2018, 79, 66–77.

- Miller, A.B.; Miller, B.M. Stochastic control of light UAV at landing with the aid of bearing-only observations. In Proceedings of the 8th International Conference on Machine Vision (ICMV), Barcelona, Spain, 19–21 November 2015; Volume 9875.

- Konovalenko, I.; Miller, A.; Miller, B.; Popov, A.; Stepanyan, K. UAV Control on the Basis of 3D Landmark Bearing-Only Observations. Sensors 2015, 15, 29802–29820.

- Karpenko, S.; Konovalenko, I.; Miller, A.; Miller, B.; Nikolaev, D. Stochastic control of UAV on the basis of robust filtering of 3D natural landmarks observations. In Proceedings of the Conference on Information Technology and Systems, Olympic Village, Sochi, Russia, 7–11 September 2015; pp. 442–455.

- Kunina, I.; Terekhin, A.; Khanipov, T.; Kuznetsova, E.; Nikolaev, D. Aerial image geolocalization by matching its line structure with route map. Proc. SPIE 2017, 10341.

- Pestana, J.; Sanchez-Lopez, J.L.; Saripalli, S.; Campoy, P. Vision based GPS-denied Object Tracking and following for unmanned aerial vehicles. In Proceedings of the 2013 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Linkoping, Sweden, 21–26 October 2013.

- Pestana, J.; Sanchez-Lopez, J.L.; Saripalli, S.; Campoy, P. Computer Vision Based General Object Following for GPS-denied Multirotor Unmanned Vehicles. In Proceedings of the 2014 American Control Conference (ACC), Portland, OR, USA, 4–6 June 2014; pp. 1886–1891.

- Pestana, J.; Sanchez-Lopez, J.L.; Saripalli, S.; Campoy, P. A Vision-based Quadrotor Swarm for the participation in the 2013 International Micro Air Vehicle Competition. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 617–622.

- Pestana, J.; Sanchez-Lopez, J.L.; de la Puente, P.; Carrio, A.; Campoy, P. A Vision-based Quadrotor Multi-robot Solution for the Indoor Autonomy Challenge of the 2013 International Micro Air Vehicle Competition. J. Intell. Robot. Syst. 2016, 84, 601–620.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Morel, J.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- 2012 opencv/opencv Wiki GitHub. Available online: https://github.com/Itseez/opencv/blob/master/samples/python2/asift.ru (accessed on 24 September 2014).

- Bishop, A.N.; Fidan, B.; Anderson, B.D.O.; Dogancay, K.; Pathirana, P.N. Optimality analysis of sensor-target localization geometries. Automatica 2010, 46, 479–492.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Işik, Ş.; Özkan, K. A Comparative Evaluation of Well-known Feature Detectors and Descriptors. Int. J. Appl. Math. Electron. Comput. 2015, 3, 1–6.

- Adel, E.; Elmogy, M.; Elbakry, H. Image Stitching System Based on ORB Feature-Based Technique and Compensation Blending. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 55–62.

- Karami, E.; Prasad, S.; Shehata, M. Image Matching Using SIFT, SURF, BRIEF and ORB: Performance Comparison for Distorted Images. arXiv, 2017; arXiv:1710.02726.

- Burdziakowski, P. Low Cost Hexacopter Autonomous Platform for Testing and Developing Photogrammetry Technologies and Intelligent Navigation Systems. In Proceedings of the 10th International Conference on “Environmental Engineering”, Vilnius, Lithuania, 27–28 April 2017; pp. 1–6.

- Burdziakowski, P.; Janowski, A.; Przyborski, M.; Szulwic, J. A Modern Approach to an Unmanned Vehicle Navigation. In Proceedings of the 16th International Multidisciplinary Scientific GeoConference (SGEM 2016), Albena, Bulgaria, 28 June–6 July 2016; pp. 747–758.

- Burdziakowski, P. Towards Precise Visual Navigation and Direct Georeferencing for MAV Using ORB-SLAM2. In Proceedings of the 2017 Baltic Geodetic Congress (BGC Geomatics), Gdansk, Poland, 22–25 June 2017; pp. 394–398.

- Ershov, E.; Terekhin, A.; Nikolaev, D.; Postnikov, V.; Karpenko, S. Fast Hough transform analysis: Pattern deviation from line segment. In Proceedings of the 8th International Conference on Machine Vision (ICMV 2015), Barcelona, Spain, 19–21 November 2015; Volume 9875, p. 987509.

- Ershov, E.; Terekhin, A.; Karpenko, S.; Nikolaev, D.; Postnikov, V. Fast 3D Hough Transform computation. In Proceedings of the 30th European Conference on Modelling and Simulation (ECMS 2016), Regensburg, Germany, 31 May–3 June 2016; pp. 227–230.

- Savchik, A.V.; Sablina, V.A. Finding the correspondence between closed curves under projective distortions. Sens. Syst. 2018, 32, 60–66. (In Russian)

- Kunina, I.; Teplyakov, L.M.; Gladkov, A.P.; Khanipov, T.; Nikolaev, D.P. Aerial images visual localization on a vector map using color-texture segmentation. In Proceedings of the International Conference on Machine Vision, Vienna, Austria, 13–15 November 2017; Volume 10696, p. 106961.

- Teplyakov, L.M.; Kunina, I.A.; Gladkov, A.P. Visual localisation of aerial images on vector map using colour-texture segmentation. Sens. Syst. 2018, 32, 19–25. (In Russian)

- Kunina, I.; Gladilin, S.; Nikolaev, D. Blind radial distortion compensation in a single image using a fast Hough transform. Comput. Opt. 2016, 40, 395–403.

- Kunina, I.; Terekhin, A.; Gladilin, S.; Nikolaev, D. Blind radial distortion compensation from video using fast Hough transform. In Proceedings of the 2016 International Conference on Robotics and Machine Vision, ICRMV 2016, Moscow, Russia, 14–16 September 2016; Volume 10253, pp. 1–7.

- Kunina, I.; Volkov, A.; Gladilin, S.; Nikolaev, D. Demosaicing as the problem of regularization. In Proceedings of the Eighth International Conference on Machine Vision (ICMV 2015), Barcelona, Spain, 19–20 November 2015; Volume 9875, pp. 1–5.

- Karnaukhov, V.; Mozerov, M. Motion blur estimation based on multitarget matching model. Opt. Eng. 2016, 55, 100502.

- Bolshakov, A.; Gracheva, M.; Sidorchuk, D. How many observers do you need to create a reliable saliency map in VR attention study? In Proceedings of the ECVP 2017, Berlin, Germany, 27–31 August 2017; Volume 46.

- Aidala, V.J.; Nardone, S.C. Biased Estimation Properties of the Pseudolinear Tracking Filter. IEEE Trans. Aerosp. Electron. Syst. 1982, 18, 432–441.

- Osborn, R.W., III; Bar-Shalom, Y. Statistical Efficiency of Composite Position Measurements from Passive Sensors. IEEE Trans. Aerosp. Electron. Syst. 2013, 49, 2799–2806.

- Wang, C.-L.; Wang, T.-M.; Liang, J.-H.; Zhang, Y.-C.; Zhou, Y. Bearing-only Visual SLAM for Small Unmanned Aerial Vehicles in GPS-denied Environments. Int. J. Autom. Comput. 2013, 10, 387–396.

- Belfadel, D.; Osborn, R.W., III; Bar-Shalom, Y. Bias Estimation for Optical Sensor Measurements with Targets of Opportunity. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9–12 July 2013; pp. 1805–1812.

- Lin, X.; Kirubarajan, T.; Bar-Shalom, Y.; Maskell, S. Comparison of EKF, Pseudomeasurement and Particle Filters for a Bearing-only Target Tracking Problem. Proc. SPIE 2002, 4728, 240–250.

- Pugachev, V.S.; Sinitsyn, I.N. Stochastic Differential Systems. Analysis and Filtering; Wiley: Hoboken, NJ, USA, 1987.

- Miller, B.M.; Pankov, A.R. Theory of Random Processes; Phizmatlit: Moscow, Russia, 2007. (In Russian)

- Amelin, K.S.; Miller, A.B. An Algorithm for Refinement of the Position of a Light UAV on the Basis of Kalman Filtering of Bearing Measurements. J. Commun. Technol. Electron. 2014, 59, 622–631.

- Miller, A.B. Development of the motion control on the basis of Kalman filtering of bearing-only measurements. Autom. Remote Control 2015, 76, 1018–1035.

- Volkov, A.; Ershov, E.; Gladilin, S.; Nikolaev, D. Stereo-based visual localization without triangulation for unmanned robotics platform. In Proceedings of the 2016 International Conference on Robotics and Machine Vision, Moscow, Russia, 14–16 September 2016; Volume 10253.

- Ershov, E.; Karnaukhov, V.; Mozerov, M. Stereovision Algorithms Applicability Investigation for Motion Parallax of Monocular Camera Case. Inf. Process. 2016, 61, 695–704.

- Ershov, E.; Karnaukhov, V.; Mozerov, M. Probabilistic choice between symmetric disparities in motion stereo for lateral navigation system. Opt. Eng. 2016, 55, 023101.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Torr, P.H.S.; Zisserman, A. MLESAC: A New Robust Estimator with Application to Estimating Image Geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Zuliani, M.; Kenney, C.S.; Manjunath, B.S. The MultiRANSAC Algorithm and its Application to Detect Planar Homographies. In Proceedings of the 12th IEEE International Conference on Image Processing (ICIP 2005), Genova, Italy, 11–14 September 2005; Volume 3, pp. 2969–2972.

- Civera, J.; Grasa, O.G.; Davison, A.J.; Montiel, J.M.M. 1-Point RANSAC for EKF-Based Structure from Motion. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 11–15 October 2009; pp. 3498–3504.

- Popov, A.; Miller, A.; Miller, B.; Stepanyan, K. Application of the Optical Flow as a Navigation Sensor for UAV. In Proceedings of the 39th IITP RAS Interdisciplinary Conference & School, Olympic Village, Sochi, Russia, 7–11 September 2015; pp. 390–398, ISBN 978-5-901158-28-9.

- Popov, A.; Miller, A.; Miller, B.; Stepanyan, K.; Konovalenko, I.; Sidorchuk, D.; Koptelov, I. UAV navigation on the basis of video sequences registered by on-board camera. In Proceedings of the 40th Interdisciplinary Conference & School Information Technology and Systems 2016, Repino, St. Petersburg, Russia, 25–30 September 2016; pp. 370–376.

- Popov, A.; Stepanyan, K.; Miller, B.; Miller, A. Software package IMODEL for analysis of algorithms for control and navigations of UAV with the aid of observation of underlying surface. In Proceedings of the Abstracts of XX Anniversary International Conference on Computational Mechanics and Modern Applied Software Packages (CMMASS2017), Alushta, Crimea, 24–31 May 2017; pp. 607–611. (In Russian)

- Popov, A.; Miller, A.; Miller, B.; Stepanyan, K. Optical Flow as a Navigation Means for UAVs with Opto-electronic Cameras. In Proceedings of the 56th Israel Annual Conference on Aerospace Sciences, Tel-Aviv and Haifa, Israel, 9–10 March 2016.

- Miller, B.M.; Stepanyan, K.V.; Popov, A.K.; Miller, A.B. UAV Navigation Based on Videosequences Captured by the Onboard Video Camera. Autom. Remote Control 2017, 78, 2211–2221.

- Popov, A.K.; Miller, A.B.; Stepanyan, K.V.; Miller, B.M. Modelling of the unmanned aerial vehicle navigation on the basis of two height-shifted onboard cameras. Sens. Syst. 2018, 32, 26–34. (In Russian)

- Popov, A.; Miller, A.; Miller, B.; Stepanyan, K. Estimation of velocities via Optical Flow. In Proceedings of the International Conference on Robotics and Machine Vision (ICRMV), Moscow, Russia, 14 September 2016; pp. 1–5.

- Miller, A.; Miller, B. Pseudomeasurement Kalman filter in underwater target motion analysis & Integration of bearing-only and active-range measurement. IFAC PapersOnLine 2017, 50, 3817–3822.

- Popov, A.; Miller, A.; Miller, B.; Stepanyan, K. Optical Flow and Inertial Navigation System Fusion in UAV Navigation. In Proceedings of the Conference on Unmanned/Unattended Sensors and Sensor Networks XII, Edinburgh, UK, 26 September 2016; pp. 1–16.

- Caballero, F.; Merino, L.; Ferruz, J.; Ollero, A. Vision-based Odometry and SLAM for Medium and High Altitude Flying UAVs. J. Intell. Robot. Syst. 2009, 54, 137–161.

- Konovalenko, I.; Kuznetsova, E. Experimental Comparison of Methods for Estimation of the Observed Velocity of the Vehicle in Video Stream. Proc. SPIE 2015, 9445.

- Guan, X.; Bai, H. A GPU accelerated real-time self-contained visual navigation system for UAVs. In Proceedings of the IEEE International Conference on Information and Automation, Shenyang, China, 6–8 June 2012; pp. 578–581.

- Jauffet, C.; Pillon, D.; Pignoll, A.C. Leg-by-leg Bearings-Only Target Motion Analysis Without Observer Maneuver. J. Adv. Inf. Fusion 2011, 6, 24–38.

- Miller, B.M.; Stepanyan, K.V.; Miller, A.B.; Andreev, K.V.; Khoroshenkikh, S.N. Optimal filter selection for UAV trajectory control problems. In Proceedings of the 37th Conference on Information Technology and Systems, Kaliningrad, Russia, 1–6 September 2013; pp. 327–333.

- Ovchinkin, A.A.; Ershov, E.I. The algorithm of epipole position estimation under pure camera translation. Sens. Syst. 2018, 32, 42–49. (In Russian)

- Le Coat, F.; Pissaloux, E.E. Modelling the Optical-flow with Projective-transform Approximation for Large Camera Movements. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 199–203.

- Longuet-Higgins, H.C. The Visual Ambiguity of a Moving Plane. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1984, 223, 165–175.

- Raudies, F.; Neumann, H. A review and evaluation of methods estimating ego-motion. Comput. Vis. Image Underst. 2012, 116, 606–633.

- Yuan, D.; Liu, M.; Yin, J.; Hu, J. Camera motion estimation through monocular normal flow vectors. Pattern Recognit. Lett. 2015, 52, 59–64.

- Babinec, A.; Apeltauer, J. On accuracy of position estimation from aerial imagery captured by low-flying UAVs. Int. J. Transp. Sci. Technol. 2016, 5, 152–166.

- Martínez, C.; Mondragón, I.F.; Olivares-Méndez, M.A.; Campoy, P. On-board and Ground Visual Pose Estimation Techniques for UAV Control. J. Intell. Robot. Syst. 2011, 61, 301–320.

- Pachauri, A.; More, V.; Gaidhani, P.; Gupta, N. Autonomous Ingress of a UAV through a window using Monocular Vision. arXiv, 2016; arXiv:1607.07006v1.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).