Simultaneous Ship Detection and Orientation Estimation in SAR Images Based on Attention Module and Angle Regression

Abstract

1. Introduction

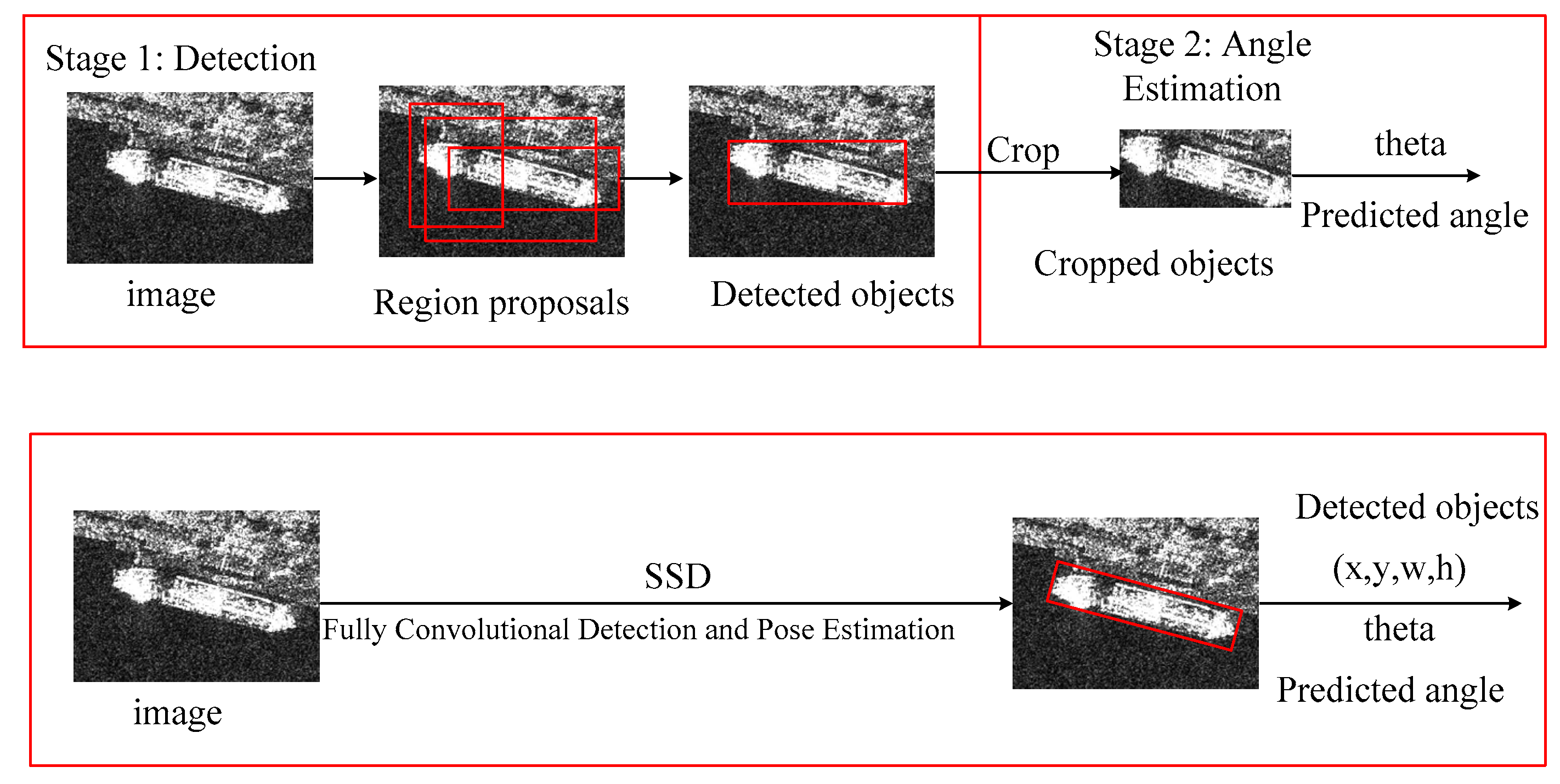

- We adopt an end-to-end framework to detect ships and estimate their orientations simultaneously. The method outputs the location, category, and orientation from a single model, without a tedious multi-stage pipeline.

- The rotatable bounding box is tighter and contains fewer background pixels. Ships are therefore easier to distinguish from the background, especially near docks.

- To boost performance further, we propose a semantic aggregation module, which adds semantic information to every layer in a top-down manner.

- An attention module adaptively selects meaningful features for classification and localization.

- Angular regression is used for predicting angles without increasing the computational load.
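The angle-regression idea in the last item can be illustrated with a small sketch. It is not the loss defined in this paper: it assumes a smooth L1 penalty on the angle difference and a 180° periodicity (a ship's bow and stern are not distinguished), and the function names are hypothetical. It only shows why regressing the angle adds a single scalar output per box rather than a separate orientation network.

```python
import math


def smooth_l1(x: float) -> float:
    """Smooth L1 penalty: quadratic near zero, linear for |x| >= 1."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def wrap_angle_deg(delta: float) -> float:
    """Wrap an angle difference into [-90, 90).

    Assumption: orientation has a period of 180 degrees.
    """
    return (delta + 90.0) % 180.0 - 90.0


def angle_regression_loss(pred_deg: float, target_deg: float) -> float:
    """Hypothetical per-box angle loss: smooth L1 on the wrapped difference (radians)."""
    diff = math.radians(wrap_angle_deg(pred_deg - target_deg))
    return smooth_l1(diff)


if __name__ == "__main__":
    # 175 degrees and 5 degrees differ by only 10 degrees under the 180-degree period.
    print(angle_regression_loss(175.0, 5.0))
    print(angle_regression_loss(10.0, 20.0))
```

Under these assumptions the angle term can simply be added to the usual box-regression loss, so the detector head grows by one output channel per anchor.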

2. Simultaneous Detection and Angle Estimation

3. Proposed Method

3.1. Overall Architecture

3.2. Attention Module

3.3. Rotatable Bounding Box

- The aspect ratio and size of a vertical bounding box do not reflect the real shape of a ship, whereas the width and height of a rotatable bounding box match the ship's actual size. Reasonable prior boxes can therefore be designed, as shown in the first row of Figure 4.

- Compared with a rotatable bounding box, a vertical bounding box cannot separate the ship from its background pixels: generally, most of the region inside a vertical bounding box is background. A rotatable bounding box therefore makes the classification task easier, as shown in the second row of Figure 4.

- Densely packed objects are difficult to separate with vertical bounding boxes. Rotatable bounding boxes separate them efficiently, with no overlapping areas between nearby targets. They also allow detection and orientation estimation to be performed simultaneously, in a fully end-to-end way without a multi-stage pipeline, as shown in the first row of Figure 4.
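The background-pixel argument above can be checked with a few lines of geometry. The sketch below assumes a rotatable box is parameterized as (cx, cy, w, h, θ), which this section does not state explicitly; the function names are illustrative. It compares the area of a rotated box with that of the smallest vertical (axis-aligned) box that encloses it.

```python
import math


def rbox_corners(cx, cy, w, h, theta_deg):
    """Corner points of a w x h box centered at (cx, cy), rotated by theta_deg."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in half]


def enclosing_vertical_box(corners):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the corners."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return min(xs), min(ys), max(xs), max(ys)


def fill_ratio(w, h, theta_deg):
    """Fraction of the enclosing vertical box that the rotated box covers."""
    x0, y0, x1, y1 = enclosing_vertical_box(rbox_corners(0.0, 0.0, w, h, theta_deg))
    return (w * h) / ((x1 - x0) * (y1 - y0))


if __name__ == "__main__":
    # A hypothetical 100 x 20 pixel ship at several orientations.
    for angle in (0, 15, 45, 90):
        print(angle, round(fill_ratio(100, 20, angle), 3))
```

For the hypothetical 100 × 20 ship at 45°, the rotated box covers only about 28% of its enclosing vertical box, so roughly 72% of the vertical box would be background.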

3.4. Multi-Orientation Anchors

3.5. Loss Function with Angle Regression

4. Results

4.1. Dataset

4.2. Details

4.3. Experiments

4.3.1. Evaluation Indicator

4.3.2. Overall Performance

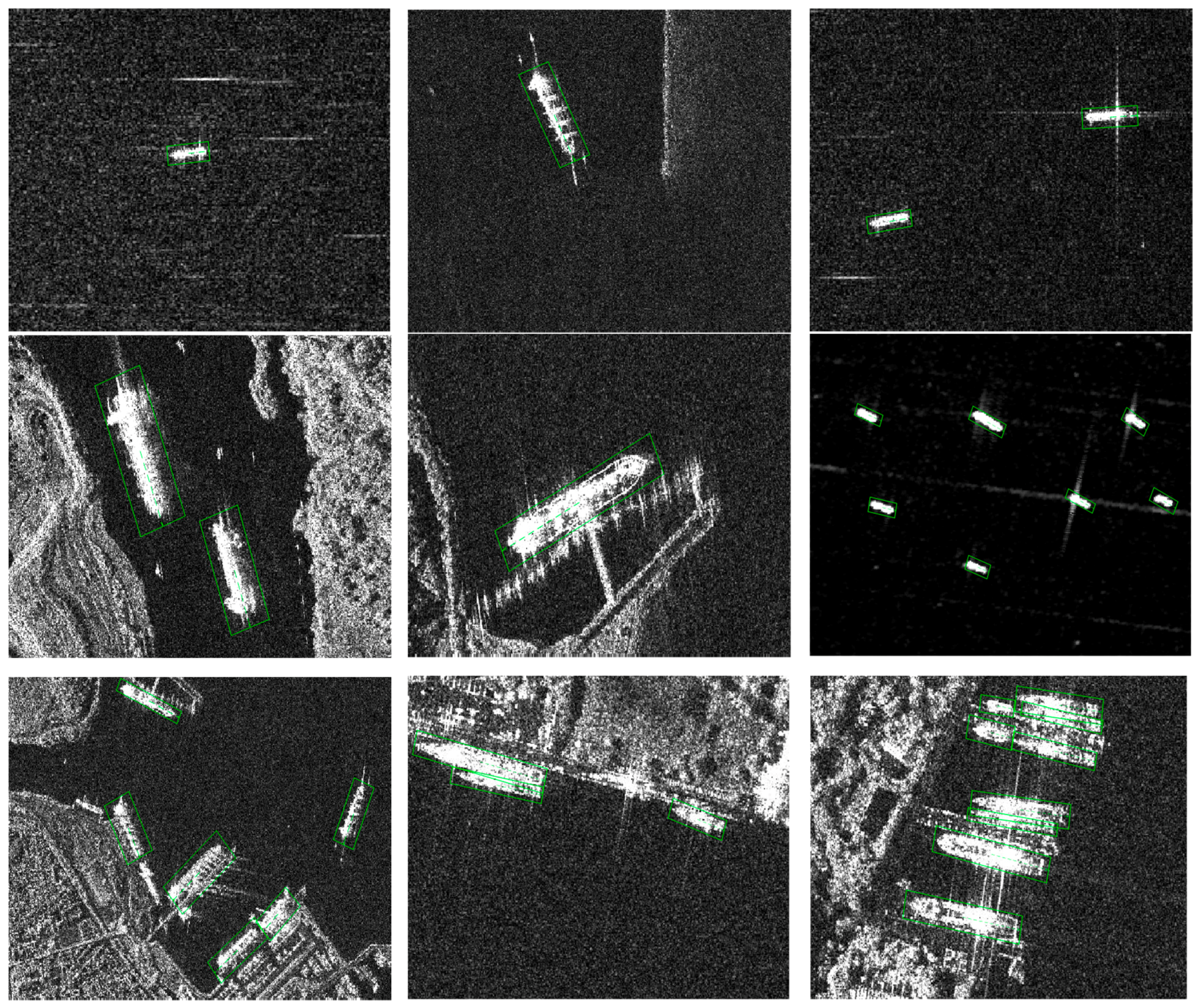

4.3.3. Detected Ships

4.4. False Alarms and Misses

5. Discussions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| NoS | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NoI | 725 | 183 | 89 | 47 | 45 | 16 | 15 | 8 | 4 | 11 | 5 | 3 | 3 | 0 |

| Type | Method | AAP (%) |
|---|---|---|
| Two-stage | Faster R-CNN + AlexNet | 77.8 |
| Two-stage | SSD + AlexNet | 78.5 |
| One-stage | DRBox | 81.9 |
| One-stage | Proposed | 84.2 |

| Semantic Aggregation | Attention | AAP (%) |
|---|---|---|
| × | × | 81.1 |
| √ | × | 82.0 |
| × | √ | 83.7 |
| √ | √ | 84.2 |

| Type | Method | Detector (FPS) | Angle (FPS) | Total (FPS) |
|---|---|---|---|---|
| Two-stage | Faster R-CNN + AlexNet | 7 (Faster R-CNN) | 80 | 6 |
| Two-stage | SSD + AlexNet | 48 (SSD) | 80 | 30 |
| One-stage | Proposed | - | - | 40 |
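The Total column for the two-stage rows is consistent with running the detector and the angle classifier one after the other, so that their per-image times add. This reading is an interpretation of the table, not something it states; the helper name below is hypothetical.

```python
def pipeline_fps(*stage_fps: float) -> float:
    """Throughput of stages run sequentially on each image.

    Assumption: per-image times (1 / FPS) add, with no overlap between stages.
    """
    return 1.0 / sum(1.0 / f for f in stage_fps)


if __name__ == "__main__":
    print(pipeline_fps(7, 80))   # Faster R-CNN followed by AlexNet
    print(pipeline_fps(48, 80))  # SSD followed by AlexNet
```

With the table's figures this gives about 6.4 FPS for Faster R-CNN + AlexNet and 30 FPS for SSD + AlexNet, in line with the reported totals of 6 and 30. The single-model method has no second stage, so its total is simply the detector speed.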

| No. of Anchors | 8732 × 6 | 8732 × 2 | 9102 × 6 | 9102 × 2 |
|---|---|---|---|---|
| AAP (%) | 80.2 | 81.7 | 84.6 | 84.2 |
| FPS | 15 | 45 | 14 | 40 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, J.; Lu, C.; Jiang, W. Simultaneous Ship Detection and Orientation Estimation in SAR Images Based on Attention Module and Angle Regression. Sensors 2018, 18, 2851. https://doi.org/10.3390/s18092851