1. Introduction

1.1. Background and Motivation

With the active development of computer and communication technologies, the estimation problem in multi-sensor network stochastic systems has become an important research topic in the last few years. The significant advantages of multi-sensor systems in practical situations (low cost, remote operation, simple installation, and maintenance) are obvious, and have triggered wide use of these systems in many areas, such as target tracking, communications, the manufacturing industry, etc. Moreover, they usually provide more information than traditional communication systems with a single sensor alone. In spite of these advantages, a sensor network is not generally a reliable communication medium, and together with the communication capacity limitations (network bandwidths or service capabilities, among others), may yield different uncertainties during data transmission, such as missing measurements, random delays, and packet dropouts.

The development of sensor networks motivates the design of fusion estimation algorithms which integrate the information from the different sensors and take these network-induced uncertainties into account to achieve a satisfactory performance. Depending on the way the information fusion is performed, there are two fundamental fusion techniques: the centralized fusion approach, in which the measurements from all sensors are sent to a central processor where the fusion is performed, and the distributed fusion approach, in which the measurements from each sensor are processed independently to obtain local estimators before being sent to the fusion center. The survey papers [1,2,3] can be examined for a wide view of these and other multi-sensor data fusion techniques.

As already indicated, centralized fusion architecture is based on a fusion center that is able to receive, fuse, and process the data coming from every sensor; hence, centralized fusion estimation algorithms provide optimal signal estimators based on the measured outputs from all sensors and, consequently, when all of the sensors work correctly and the connections are perfect, they have the optimal estimation accuracy. In light of these concerns, it is not surprising that the study of the centralized and distributed fusion estimation problems in multi-sensor systems with network-induced uncertainties (in both the sensor measured outputs and the data transmission) has become an active research area in recent years. The estimation problem in systems with uncertainties in the sensor outputs (such as missing measurements, stochastic sensor gain degradation and fading measurements) is addressed in refs. [4,5,6], among others. In refs. [7,8,9,10], systems with failures during transmission (such as uncertain observations, random delays, and packet dropouts) are considered. Also, recent advances in the estimation, filtering, and fusion of networked systems with network-induced phenomena can be reviewed in refs. [11,12], where detailed overviews on this field are presented.

Since our aim in this paper is the design of centralized fusion estimators in multi-sensor network systems with measurements perturbed by random parameter matrices subject to random transmission failures (delays and packet dropouts), and multi-packet processing is considered, we discuss the research status of the estimation problem in networked systems with some of these characteristics.

1.2. Multi-Sensor Measured Outputs with Random Parameter Matrices

It is well known that in sensor-network environments, the measured outputs can be subject not only to additive noises, but also to multiplicative noise uncertainties due to several reasons, such as the presence of an intermittent sensor or hardware failure, natural or human-made interference, etc. For example, measurement equations that model the above-mentioned situations involving degradation of the sensor gain, or missing or fading measurements, must include multiplicative noises described by random variables with values in $[0,1]$. So, random measurement parameter matrices provide a unified framework to address different simultaneous network-induced phenomena, and networked systems with random parameter matrices are used in different areas of science (see, e.g., refs. [13,14]). Also, systems with random sensor delays and/or multiple packet dropouts are transformed into equivalent observation models with random measurement matrices (see, e.g., ref. [15]). Hence, the estimation problem for systems with random parameter matrices has experienced increasing interest due to its diverse applications, and many estimation algorithms for such systems have been proposed over the last few years (see, e.g., refs. [16,17,18,19,20,21,22,23,24], and references therein).

1.3. Transmission Random Delays and Losses: Observation Predictor Compensation

Random delays and packet dropouts in the measurement transmissions are usually unavoidable and clearly deteriorate the performance of the estimators. For this reason, much effort has been made towards the study of the estimation problem to incorporate the effects of these transmission uncertainties, and several modifications of the standard estimation algorithms have been proposed (see, e.g., refs. [25,26,27], and references therein). In the estimation problem from measurements subject to transmission losses, when a packet drops out, the processor does not receive a valid measurement, and the most common compensation procedure is the hold-input mechanism, which consists of processing the last measurement that was successfully transmitted. In contrast to this hold-input approach, in ref. [28] the estimator of the lost measurement based on the information received previously is proposed as the compensator; this methodology significantly improves the estimations, since in cases of loss, all the previously received measurements are considered, instead of using only the last one. In view of this clear improvement of the estimators, the compensation strategy developed in ref. [28] has been adopted in some other recent investigations (see, e.g., refs. [29,30], and references therein).

1.4. Multi-Packet Processing

Another concern at the forefront of research in networked systems subject to random delays and packet dropouts is the number of packets that are processed to update the estimator at each moment, and different observation models have been proposed to deal with this issue. For example, to avoid losses as much as possible, in ref. [16] it is assumed that each packet is transmitted several times. In contrast, to avoid the network congestion that may be caused by multiple transmissions, in ref. [31] the packets are sent just once. These papers also assume that each packet is either received on time, delayed for, at most, one sampling time, or lost, and only one packet or no packets are processed to update the estimator at each moment. However, in refs. [32,33,34] two packets were able to arrive at each sampling time, in which case both were used to update the estimators, thus improving their performance. In these papers, different packet dropout compensation procedures have been employed. The last available measurement was used as compensation in refs. [32,34], while the observation predictor was considered in ref. [34].

1.5. Addressed Problem and Paper Contributions

Based on the considerations made above, we were motivated to address the study of the centralized fusion estimation problem for multi-sensor networked systems perturbed by random parameter matrices. This problem is discussed under the following assumptions: (a) each sensor transmits its measured outputs to a central processor over a different communication channel, and random delays and packet dropouts are assumed to occur during the transmission; (b) in order to avoid network congestion, at each time instant, the different sensors send their packets only once, but due to the random transmission failures, the processing center can receive more than one packet; specifically, either one packet, two packets, or nothing; and (c) the measurement output predictor is used as a loss compensation strategy.

The main contributions and advantages of the current work are summarized as follows: (1) A unified framework to deal with different network-induced phenomena in the measured outputs, such as missing measurements or sensor gain degradation, is provided by the use of random measurement matrices. (2) Besides the uncertainties in the measured outputs, random one-step delays and packet dropouts are assumed to occur during the transmission at different rates at each sensor. Unlike the authors’ previous papers concerning random measurement matrices and random transmission delays and losses, where only one packet is processed to update the estimator at each moment, in this paper the estimation algorithms are obtained under the assumption that either one packet, two packets, or nothing may arrive at each sampling time. (3) Concerning the compensation strategy, the use of the measurement predictor as the loss compensator, combined with the simultaneous processing of delayed packets, provides better estimators in comparison to other approaches where the last measurement successfully received is used to compensate for the lost data packets and only one packet is processed to update the estimator at each moment. (4) The centralized fusion estimation problem is addressed using covariance information, without requiring full knowledge of the state-space model generating the signal process, thus providing a general approach to deal with different kinds of signal processes. (5) The innovation approach is used to obtain prediction, filtering, and fixed-point smoothing algorithms which are recursive and computationally simple, and thus are suitable for online implementation. In contrast to the approaches where the state augmentation technique is used, the proposed algorithms are deduced without making use of augmented systems; therefore, they have lower computational costs than those based on the augmentation method.

1.6. Paper Structure and Notation

The remaining sections of the paper are organized as follows. Section 2 presents the assumptions for the signal process, the mathematical models of the multi-sensor measured outputs with random parameter matrices, and the measurements received by the central processor with random delays and packet losses. Section 3 provides the main results of the research, namely, the covariance-based centralized least-squares linear prediction and filtering algorithm (Theorem 1) and fixed-point smoothing algorithm (Theorem 2). A numerical example is presented in Section 4 to show the performance of the proposed centralized estimators, and some concluding remarks are drawn in Section 5. The proofs of Theorems 1 and 2 are presented in Appendix A and Appendix B, respectively.

The notations used throughout the paper are standard. $\mathbb{R}^{n}$ and $\mathbb{R}^{m\times n}$ denote the $n$-dimensional Euclidean space and the set of all $m\times n$ real matrices, respectively. $A^{T}$ and $A^{-1}$ denote the transpose and inverse of a matrix $A$, respectively. $I$ and $0$ denote the identity matrix and zero matrix, respectively. $\mathbf{1}$ denotes the all-ones vector. Finally, ⊗ and ∘ are the Kronecker and Hadamard products, respectively, and $\delta_{k,s}$ is the Kronecker delta function.
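As a quick illustration of the two products named above, the following sketch (Python with NumPy, used here only for illustration; all variable names are hypothetical) evaluates a Kronecker and a Hadamard product, and checks the identity $\mathrm{diag}(g)\,M\,\mathrm{diag}(g) = (g g^{T}) \circ M$. An identity of this kind is presumably what makes the Hadamard product convenient when diagonal matrices of random indicators multiply covariance matrices.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0]])

kron = np.kron(A, B)   # Kronecker product: 4x4 block matrix with blocks a_ij * B
hadamard = A * B       # Hadamard product: entrywise multiplication

# Identity: diag(g) @ M @ diag(g) == (g g^T) o M for any vector g and square M
g = np.array([1.0, 0.0, 1.0])        # e.g., one realization of 0/1 indicators
M = np.arange(9.0).reshape(3, 3)
lhs = np.diag(g) @ M @ np.diag(g)
rhs = np.outer(g, g) * M
```

Taking expectations on both sides of the identity replaces $g g^{T}$ by its second-order moment matrix, which only requires the first and second-order moments of the indicators.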

2. Observation Model and Preliminaries

The aim of this section is to design a mathematical model to allow the observations to be processed in the least-squares (LS) linear estimation problem of discrete-time signal processes from multi-sensor noisy measurements transmitted through imperfect communication channels where random one-step delay and packet dropouts may arise in the transmission process. More specifically, in order to avoid the network congestion, at every sampling time, it is assumed that the measured outputs from each sensor, which are perturbed by random parameter matrices, are transmitted just once to a central processor, and due to random delays and losses, the processing center (PC) may receive, from each sensor, either one packet, two packets, or nothing at each time instant.

In this context, our goal is to find recursive algorithms for the LS linear prediction, filtering, and fixed-point smoothing problems using the centralized fusion method. We assume that only information about the mean and covariance functions of the signal process is available, and this information is specified in the following hypothesis:

- (H1)

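The $n_x$-dimensional signal process, $\{x_k\}_{k\ge 1}$, has zero mean, and its autocovariance function is expressed in a separable form, $E[x_k x_s^{T}] = A_k B_s^{T}$, $s \le k$, where $A_k, B_s \in \mathbb{R}^{n_x \times n}$ are known matrices.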

2.1. Multi-Sensor Measured Outputs with Random Parameter Matrices

We assume that there are $m$ sensors which provide measured outputs of the signal process that are affected by random parameter matrices according to the following model:

$$z_k^{(i)} = H_k^{(i)} x_k + v_k^{(i)}, \quad k \ge 1, \quad i = 1, \ldots, m, \tag{1}$$

where $z_k^{(i)}$ is the signal measurement in the $i$-th sensor at time $k$, $H_k^{(i)}$ are random parameter matrices, and $v_k^{(i)}$ are the measurement noises. We assume the following hypotheses for these processes:

- (H2)

, for , are independent sequences of independent random parameter matrices. For and , we denote as the -th entry of , which has known first and second order moments, and .

- (H3)

The measurement noises , , are zero-mean second-order white processes with .

2.2. Observation Model. Properties

To address the estimation problem with the centralized fusion method, the observations coming from the different sensors are gathered and jointly processed at each sampling time to yield the optimal signal estimator. So, the problem is addressed by considering, at each time (

), the vector constituted by the measurements received from all sensors and for this purpose, Equations (

1) were combined to yield the following stacked measured output equation:

where

As already indicated, random one-step delays and packet dropouts occur during the transmissions to the PC. To model these failures, we introduced the following sequences of random variables:

, are sequences of Bernoulli random variables. Each , means that the output at the current sampling time, , arrives on time to be processed for the estimation, while means that this output is either delayed or dropped out; and

, are sequences of Bernoulli random variables. For each , means that is processed at sampling time k (because it was one-step delayed) and means that is not processed at sampling time k (because it was either received at time or dropped out). Since implies , it is clear that the value of is conditioned by that of .
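The interplay between the two indicator sequences can be made concrete with a small simulation for a single sensor. This is only a sketch under stated assumptions: the names `gamma` (on-time indicator) and `psi` (one-step-delay indicator), the function `simulate_transmission`, and the probability values are hypothetical choices for illustration, and the indicators are drawn independently across time.

```python
import random

def simulate_transmission(n_steps, p_on_time, p_delay_given_late, seed=0):
    """Classify each packet of one sensor as 'on_time', 'delayed' or 'lost'.

    gamma[k] = 1: the packet sent at time k arrives on time.
    psi[k]   = 1: the packet sent at time k-1 arrives one step late and is
                  processed at time k; this is only possible if gamma[k-1] = 0.
    """
    rng = random.Random(seed)
    gamma = [1 if rng.random() < p_on_time else 0 for _ in range(n_steps)]
    psi = [0] * (n_steps + 1)
    for k in range(1, n_steps + 1):
        if gamma[k - 1] == 0 and rng.random() < p_delay_given_late:
            psi[k] = 1
    status = []
    for k in range(n_steps):
        if gamma[k] == 1:
            status.append("on_time")
        elif psi[k + 1] == 1:
            status.append("delayed")
        else:
            status.append("lost")
    # Number of genuine packets processed at each time k: 0, 1, or 2
    processed = [gamma[k] + psi[k] for k in range(n_steps)]
    return gamma, psi, status, processed

gamma, psi, status, processed = simulate_transmission(10, 0.6, 0.5, seed=1)
for k, (s, n) in enumerate(zip(status, processed)):
    print(f"time {k}: packet {k} is {s}; packets processed at this time: {n}")
```

Two packets are processed at time k exactly when the current packet arrives on time and the previous one arrives with a one-step delay; nothing is processed when the current packet is late or lost and the previous one was not delayed.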

For the previous sequences of Bernoulli variables, we assume the following hypothesis:

- (H4)

, are independent sequences of independent random vectors, such that

, are sequences of Bernoulli random variables with known probabilities, , ; and

, are sequences of Bernoulli random variables such that the conditional probabilities (

) are known. Thus,

Moreover, the mutual independence hypothesis of the involved processes is also necessary:

- (H5)

For , the signal process , the random matrices , and the noises and are mutually independent.

Remark 1. From hypothesis (H4), for , the following correlations are clear:

In order to write jointly the sensor measurements to be processed at each sampling time, we defined the matrices

. and

. From the definition of variables

, it is clear that the non-zero components of vector

are those of

that arrive on time at the PC and, consequently, those processed at time

k. The other components of

are delayed or lost, and as compensation, the corresponding components of the predictor

, specified in

, are processed. Similarly, the non-zero components of

are those of

that are affected by one-step delay, and consequently, they are also processed at time

k. Hence, the processed observations at each time are expressed by the following model:

or equivalently,

where

and

Remark 2. For a better understanding of Model (4) for the measurements processed after the possible transmission one-step delays and losses, a single sensor is considered in the following comments. On the one hand, note that means that the output at the current sampling time () arrives on time to be processed. Then, if , the measurement processed at time k is , while if , then . On the other hand, if , the output is either delayed or dropped out, and its predictor is processed at time k. Then, if , the measurement processed at time k is , while if , then . Table 1 displays ten iterations of a specific simulation of packet transmission. From Table 1, it can be observed that , , , , and arrive on time to be processed; , and are one-step delayed; and and are lost. So, Model (4) describes possible one-step random transmission delays and packet dropouts in networked systems, where one or two packets can be processed for the estimation. Finally, note that the predictors , are used to compensate for the measurements that do not arrive on time.

The problem is then formulated as that of obtaining the LS linear estimator of the signal,

based on the observations

given in (

5). Next, some statistical properties of the processes involved in observation models (

2) and (

5), which are necessary to address the LS linear estimation problem, are specified:

- (P1)

is a sequence of independent random matrices with known means: .

- (P2)

The sequence is a zero-mean second-order process with where .

- (P3)

The random matrices

are independent, and their means are given by

- (P4)

The signal process, and the processes and are mutually independent.

- (P5)

is a zero-mean process with covariance matrices

, for

which, from (P4), are given by

with

, for

, and

where the

-th entries of the matrices

are given by

Remark 3. By denoting and , it is clear that and are known matrices whose entries are given in (3). Now, by defining and taking the Hadamard product properties into account, it is easy to check that the covariance matrices () are given by

3. Centralized Fusion Estimators

This section is concerned with the problem of obtaining recursive algorithms for the LS linear centralized fusion prediction, filtering, and fixed-point smoothing estimators. For this purpose, we used an innovation approach. Also the estimation error covariance matrices, which are used to measure the accuracy of the proposed estimators when the LS optimality criterion is used, were derived.

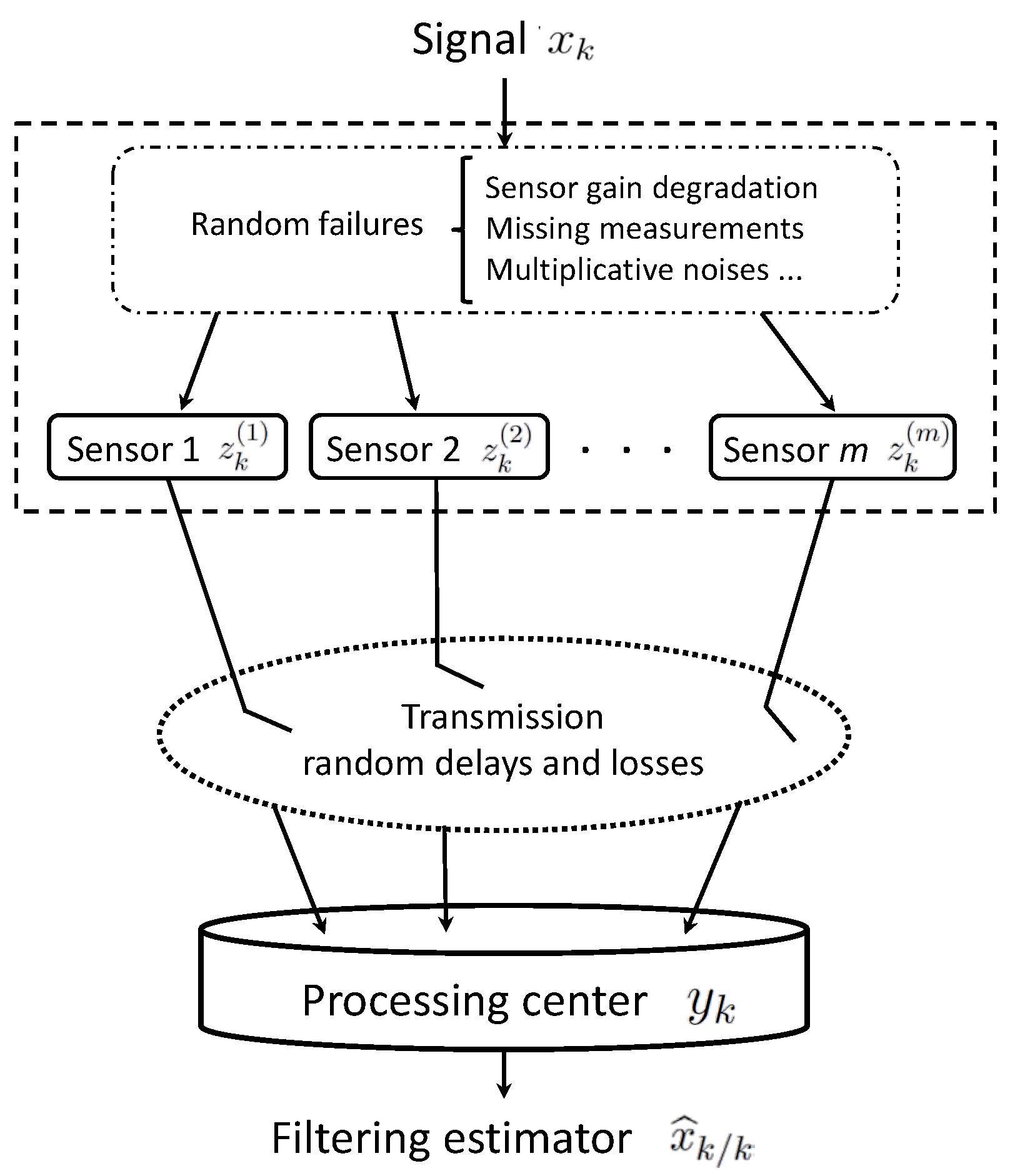

The centralized fusion structure for the considered networked systems with random uncertainties in the measured outputs and transmission is illustrated in Figure 1.

3.1. Innovation Technique

As indicated above, our aim was to obtain the optimal LS linear estimators,

, of the signal

based on the measurements

, given in (

5), by recursive algorithms. Since the estimator

is the orthogonal projection of the signal

onto the linear space spanned by the nonorthogonal vectors

, we used an innovation approach in which the observation process

was transformed into an equivalent one (

innovation process) of orthogonal vectors

; the equivalence means that each set

spans the same linear subspace as

.

The innovation at time

k is defined as

, where

and, for

,

, the one-stage linear predictor of

is the projection of

onto the linear space generated by

. Due to the orthogonality property of the innovations and since the innovation process is uniquely determined by the observations, by replacing the observation process by the innovation one, the following general expression for the LS linear estimators of any vector

based on the observations

was obtained

This expression is derived from the uncorrelation property of the estimation error with all of the innovations, which is guaranteed by the Orthogonal Projection Lemma (OPL). As shown by (

8), the first step to obtain the signal estimators is to find an explicit formula for the innovation or, equivalently, for the one-stage linear predictor of the observation.
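The equivalence between projecting onto the observations and projecting onto the innovations can be checked numerically on a toy problem. The sketch below is not the recursive algorithm derived in this paper: it assumes a finite set of zero-mean scalar observations with a known joint covariance (the names `S_yy`, `S_xy`, and `innovations_transform` are hypothetical), builds the innovations by Gram-Schmidt orthogonalization, and compares the estimator obtained as a sum over innovations with the direct batch LS solution.

```python
import numpy as np

def innovations_transform(S_yy):
    """Gram-Schmidt on zero-mean scalar observations y_1..y_L with covariance S_yy.

    Returns T (unit lower-triangular, so that the innovations are mu = T @ y)
    and the innovation variances Pi.
    """
    L = S_yy.shape[0]
    T = np.eye(L)
    Pi = np.zeros(L)
    for h in range(L):
        for j in range(h):
            c = T[j] @ S_yy[:, h]         # E[y_h * mu_j]
            T[h] -= (c / Pi[j]) * T[j]    # remove the component along mu_j
        Pi[h] = T[h] @ S_yy @ T[h]        # Var(mu_h)
    return T, Pi

rng = np.random.default_rng(0)
M = rng.standard_normal((6, 6))
S = M @ M.T + 6.0 * np.eye(6)    # joint covariance of (y_1..y_5, xi), positive definite
S_yy, S_xy = S[:5, :5], S[:5, 5]

T, Pi = innovations_transform(S_yy)
coef = (T @ S_xy) / Pi           # E[xi * mu_h] / Var(mu_h), one term per innovation
w_innov = coef @ T               # weights on y implied by the innovation expansion
w_batch = np.linalg.solve(S_yy, S_xy)   # direct LS weights on y
```

Because the innovations are mutually uncorrelated, each coefficient is computed independently of the others, which is what allows a new observation to be incorporated without recomputing the earlier terms.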

One-Stage Observation Predictor

To obtain

, the projection of

onto the linear space generated by

, we used (

5) and we note that

and

are correlated with the innovation

. So, to simplify the derivation of

, the observations (

5) were rewritten as follows:

Taking into account that

and

are independent of

, for

, it is easy to see that

for

. So, from the general expression (

8), we obtained

, where

. Hence, according to the projection theory,

satisfies

This expression for the one-stage observation predictor along with (

8) for the LS linear estimators are the starting points to get the recursive prediction, filtering, and fixed-point smoothing algorithms.

3.2. Centralized Fusion Prediction, Filtering, and Smoothing Algorithms

The following theorem presents a recursive algorithm for the LS linear centralized fusion prediction and filtering estimators

,

, of the signal

based on the observations

given in (

5) or equivalently, in (

9).

Theorem 1. Under hypotheses (H1)–(H5), the LS linear centralized predictors and filter , and the corresponding error covariance matrices are obtained by where the vectors and the matrices are recursively obtained from and the matrices satisfy where , for is defined by The innovations are given by and the coefficients are obtained by where , whose entries are given in (3). The innovation covariance matrices satisfy where the matrices are given in (7), , whose entries are obtained by (3), and the matrices and are given by

Next, a recursive algorithm for the LS linear centralized fusion smoothers at the fixed point k for any is established in the following theorem.

Theorem 2. Under hypotheses (H1)–(H5), the LS linear centralized fixed-point smoothers are calculated by whose initial condition is given by the centralized filter , and the matrices are obtained by The matrices satisfy the following recursive formula: The fixed-point smoothing error covariance matrices, , are calculated by with the initial condition given by the filtering error covariance matrix . The filter , the innovations , their covariance matrices and , and the matrices and are obtained from Theorem 1.

4. Numerical Simulation Example

The performance of the proposed centralized filtering and fixed-point smoothing algorithms was analyzed in a numerical simulation example which also shows how some of the sensor uncertainties covered by the current measurement model (1) with random parameter matrices influence the accuracy of the estimators. Also, the effect of the random transmission delays and packet losses on the performance of the estimators was analyzed.

4.1. Signal Process

Consider a discrete-time scalar signal process generated by the following model with the state-dependent multiplicative noise

where

is a standard Gaussian variable, and

are zero-mean Gaussian white processes with unit variance. Assuming that

,

and

are mutually independent, the signal covariance is given by

where

is obtained by

Hence, the hypothesis (H1) is satisfied with, for example, y .

This signal process has been considered in the current authors’ previous papers and shows that hypothesis (H1) regarding the signal autocovariance function is satisfied for uncertain systems with state-dependent multiplicative noise. Also, situations where the system matrix in the state-space model is singular are covered by hypothesis (H1) (see, e.g., ref. [9]). Hence, this hypothesis provides a unified general context to deal with different situations, thus avoiding the derivation of specific algorithms for each of them.
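To see how a separable covariance arises from state-dependent multiplicative noise, consider the following sketch. It assumes a scalar model of the form x_{k+1} = (a + b ε_k) x_k + ξ_k, with x_0 standard Gaussian and mutually independent unit-variance white noises, as described in the text; the coefficients `a` and `b` and all function names are hypothetical values chosen only for illustration. Under these assumptions, D_k = E[x_k²] obeys D_{k+1} = (a² + b²) D_k + 1 and E[x_k x_s] = a^{k−s} D_s for s ≤ k, which factorizes as A_k B_s with A_k = a^k and B_s = a^{−s} D_s.

```python
import numpy as np

a, b = 0.9, 0.01   # hypothetical coefficients, chosen only for illustration

def second_moments(n):
    """D[k] = E[x_k^2] for x_{k+1} = (a + b*eps_k) x_k + xi_k, with D[0] = 1."""
    D = np.empty(n + 1)
    D[0] = 1.0
    for k in range(n):
        D[k + 1] = (a**2 + b**2) * D[k] + 1.0
    return D

def A(k):
    """First factor of the separable covariance."""
    return a**k

def B(s, D):
    """Second factor of the separable covariance."""
    return D[s] * a**(-s)

def monte_carlo_cov(k, s, n_paths=200_000, seed=0):
    """Sample estimate of E[x_k x_s] for s <= k."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n_paths)      # x_0 ~ N(0, 1)
    x_s = x.copy()
    for t in range(k):
        if t == s:
            x_s = x.copy()                # store x_s before it is updated
        x = (a + b * rng.standard_normal(n_paths)) * x + rng.standard_normal(n_paths)
    if s == k:
        x_s = x.copy()
    return float(np.mean(x * x_s))

D = second_moments(5)
print("A_5 * B_3      =", A(5) * B(3, D))
print("sample E[x5 x3] =", monte_carlo_cov(5, 3))
```

The sample value should be close to the factorized one, up to Monte Carlo error.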

4.2. Sensor Measured Outputs

As in ref. [20], let us consider four sensors that provide scalar measurements with different random failures, which are described using random parameters according to the theoretical model (1). Namely, sensor 1 has continuous gain degradation, sensor 2 has discrete gain degradation, sensor 3 has missing measurements and sensor 4 has both missing measurements and multiplicative noise. Specifically, the scalar measured outputs are described according to the model

where the additive noises are defined as

, with

,

,

, and

is a Gaussian white sequence with a mean of 0 and variance of

. The additive noises are correlated with

The random measurement matrices are defined by

, for

, where

,

,

, and

, where the sequence

is a standard Gaussian white process, and

, are also white processes with time-invariant probability distributions that are given as follows:

are uniformly distributed over .

For are Bernoulli random variables with .
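Hypothesis (H2) requires the first and second-order moments of the random parameters to be known. For the two kinds of distributions named above these moments have closed forms, as the following sketch shows. The interval endpoints and the probability used here are hypothetical placeholders, not the values of this example, and the function names are illustrative.

```python
import numpy as np

def uniform_moments(lo, hi):
    """Mean and second moment of a variable uniformly distributed over [lo, hi]."""
    mean = (lo + hi) / 2.0
    second = (lo**2 + lo * hi + hi**2) / 3.0
    return mean, second

def bernoulli_moments(p):
    """Mean and second moment of a Bernoulli(p) variable (theta^2 equals theta)."""
    return p, p

# Hypothetical parameters, for illustration only
lo, hi, p = 0.2, 0.7, 0.8
m_u, s_u = uniform_moments(lo, hi)
m_b, s_b = bernoulli_moments(p)

# Monte Carlo sanity check of the closed forms
rng = np.random.default_rng(0)
u = rng.uniform(lo, hi, size=200_000)
t = (rng.random(200_000) < p).astype(float)
print("uniform:  ", (m_u, s_u), "sample:", (u.mean(), (u**2).mean()))
print("bernoulli:", (m_b, s_b), "sample:", (t.mean(), (t**2).mean()))
```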

4.3. Model for the Measurements Processed

Now, according to the theoretical study, we assume that the sensor measurements,

, that are processed to update the estimators are modeled by

where

and

, and for

,

and

are sequences of independent Bernoulli random variables whose distributions are determined by the following probabilities:

: probability that the measurement is not received at time k because it is delayed or lost.

: probability that the measurement is received at the current time (k), conditioned to the fact that it is not received on time.

: probability that the measurement is received and processed at the current time k.

Finally, in order to apply the proposed algorithms, it was assumed that all the processes involved in the observation equations satisfy the independence hypotheses imposed on the theoretical model.

To illustrate the feasibility and effectiveness of the proposed algorithms, they were implemented in MATLAB, and fifty iterations of the filtering and fixed-point smoothing algorithms were performed. The estimation accuracy was examined by analyzing the error variances for different probabilities of the Bernoulli variables modeling the random failures in sensors 3 and 4 (). Also, different values of the probabilities , corresponding to the transmission uncertainties, and different conditional probabilities , , were considered in order to analyze the effect of these failures on the estimation accuracy.

In the study of the performance of the centralized estimators, they were compared with local ones, which were computed using only the measurements received from each single sensor. In that case, the measurements processed at each local processor can be described by

where

is the one-stage predictor of

based on

, and the corresponding local estimators are obtained via recursive algorithms similar to those in Theorems 1 and 2.

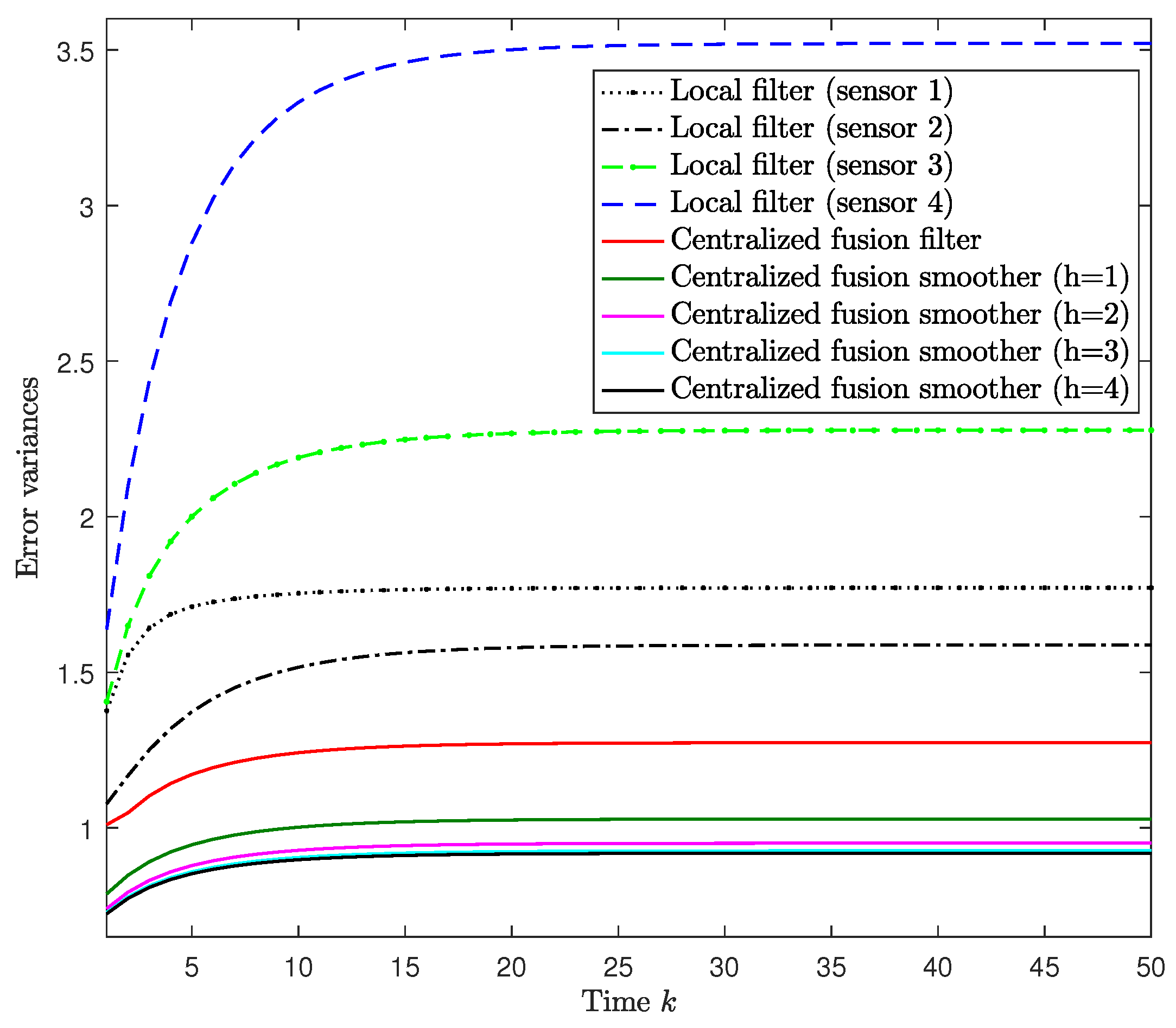

4.4. Performance of the Centralized Fusion Filtering and Smoothing Estimators

For

, we assumed that

, and that the missing probabilities

had the same value in sensors

, namely,

,

. The error variances of the local filtering estimators and both the centralized filtering and smoothing error variances are displayed in

Figure 2. This figure shows, on the one hand, that the error variances of the centralized fusion filtering estimators are significantly smaller than those of every local estimator. Consequently, agreeing with what is theoretically expected, the centralized fusion filter has better accuracy than the local ones, as it is the optimal one based on the information from all the contributing sensors. On the other hand,

Figure 2 also shows that as more observations are considered in the estimation, the error variances are lower and consequently, the performance of the centralized estimators becomes better. In other words, the smoothing estimators are more accurate than the filtering ones, and the accuracy of the smoothers at each fixed-point

k is better as the number of available observations

increases, although this improvement is practically imperceptible for

. Similar results were obtained for other values of the probabilities

,

and

.
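The reason a centralized estimator cannot do worse than any local one can be illustrated with a static toy problem. The sketch below is not the recursive algorithm of Theorems 1 and 2: it assumes a zero-mean scalar signal observed once by four sensors with uncorrelated additive noises, and the gains, noise variances, and function name are hypothetical.

```python
import numpy as np

def ls_error_variance(h, r, var_x=1.0):
    """Error variance of the LS linear estimator of a zero-mean scalar x
    from z = h * x + v, where the noises v are uncorrelated with variances r."""
    h = np.atleast_1d(np.asarray(h, dtype=float))
    r = np.atleast_1d(np.asarray(r, dtype=float))
    S_zz = var_x * np.outer(h, h) + np.diag(r)   # covariance of the stacked z
    S_xz = var_x * h                             # cross-covariance E[x z^T]
    return float(var_x - S_xz @ np.linalg.solve(S_zz, S_xz))

h = [1.0, 0.8, 0.5, 0.3]   # hypothetical sensor gains
r = [0.5, 0.4, 0.9, 0.2]   # hypothetical noise variances

local = [ls_error_variance(h[i], r[i]) for i in range(4)]
central = ls_error_variance(h, r)
print("local error variances:      ", local)
print("centralized error variance: ", central)
```

Each local estimator is a linear function of a subset of the stacked measurements, so the LS estimator based on the full stack has an error variance no larger than any of them.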

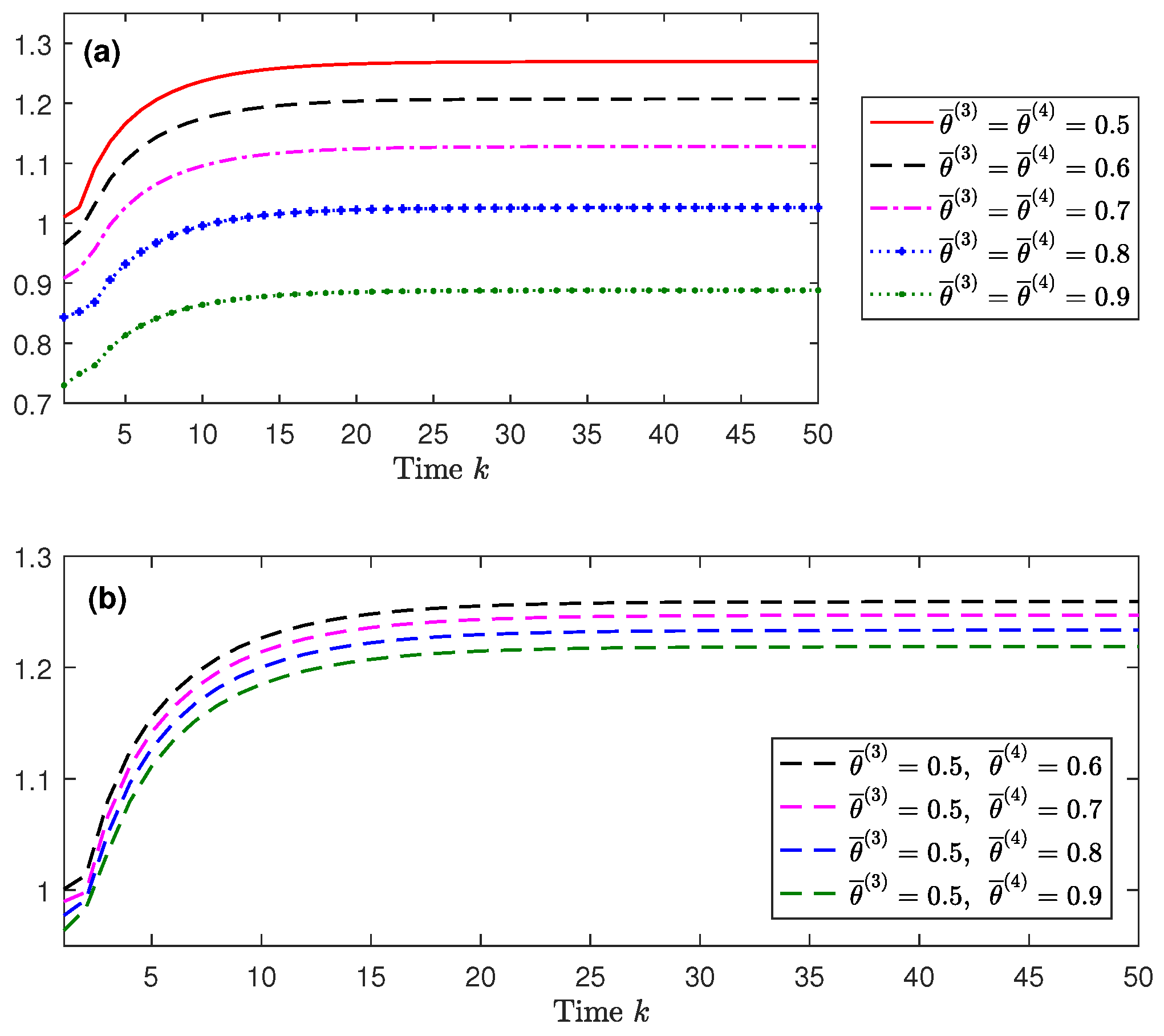

4.5. Influence of the Missing Measurement Phenomenon in Sensors 3 and 4

Considering

,

again, in order to show the effect of the missing probabilities in sensors

, the centralized filtering error variances are displayed in

Figure 3 for different values of these probabilities

. Specifically, in

Figure 3a, it is assumed that

with a range of values from 0.5 to 0.9, and in

Figure 3b,

and

varies from 0.6 to 0.9. From these figures, it is clear that the performance of the centralized fusion filter is indeed influenced by the probabilities

. Specifically, the performance of the centralized filter is poorer as

decreases, which means that, as expected, the lower the probability of missing measurements is, the better performance the filter has. Analogous results were obtained for the centralized smoothers and for other values of the probabilities.

Considering that the behavior of the error variances was analogous in all of the iterations, only the results at a specific iteration () are displayed in the following figures.

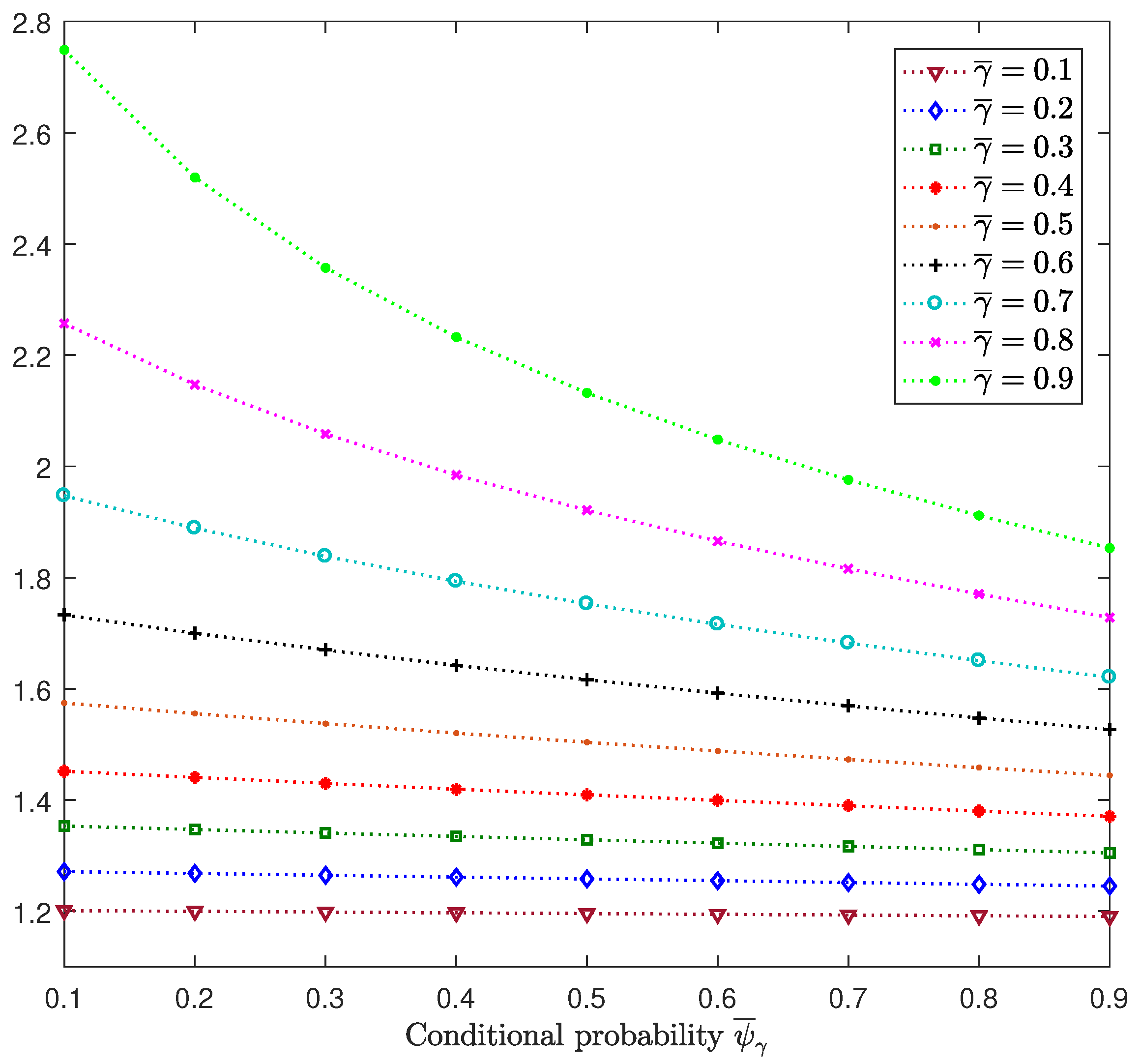

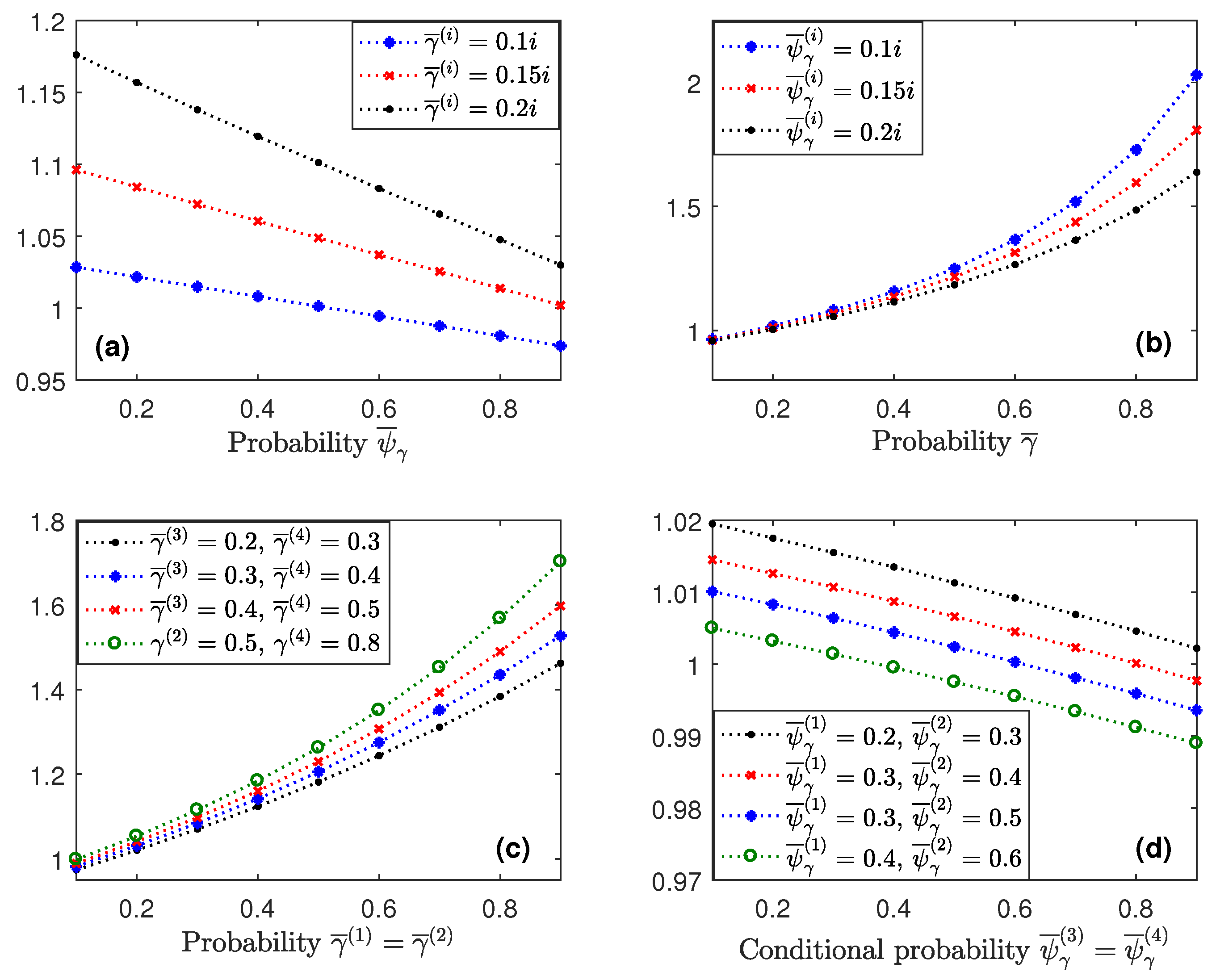

4.6. Influence of the Probabilities and

Considering

,

, as in

Figure 2, we analyze the influences of the random delays and packet dropouts on the performance of the centralized filtering estimators. We assume that the four sensors have the same probability of measurements not arriving on time (

) and also the same conditional probability (

,

).

Figure 4 displays the centralized filtering error variances at

versus

for

varying from 0.1 to 0.9. This figure shows that for each value of

, the error variances decrease when the conditional probability increases. This result was expected since, for a fixed arbitrary value of

, the increase in

entails that of

, which is the probability of processing, at the current time, the measurement from the previous time that was delayed. Also, we observed that the decrease in the error variances was more evident for higher values of

, which was also expected since

and hence,

specifies the increasing rate of

with respect to

.
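The relation invoked in this argument can be written out explicitly. Using, as an assumed notation, $\gamma_k^{(i)}$ for the on-time indicator and $\psi_k^{(i)}$ for the one-step-delay indicator of Section 2.2, and recalling that a measurement can only be processed late if it did not arrive on time, the law of total probability gives:

```latex
P\big(\psi_k^{(i)} = 1\big)
  = P\big(\psi_k^{(i)} = 1 \mid \gamma_{k-1}^{(i)} = 0\big)\,
    P\big(\gamma_{k-1}^{(i)} = 0\big),
\qquad\text{so that}\qquad
\frac{\partial\, P\big(\psi_k^{(i)} = 1\big)}
     {\partial\, P\big(\psi_k^{(i)} = 1 \mid \gamma_{k-1}^{(i)} = 0\big)}
  = P\big(\gamma_{k-1}^{(i)} = 0\big).
```

For instance, with a hypothetical probability of 0.5 of not arriving on time, raising the conditional probability from 0.2 to 0.6 raises the probability of processing a delayed measurement from 0.1 to 0.3, whereas with a probability of 0.1 of not arriving on time the same change only raises it from 0.02 to 0.06.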

Similar results to the previous ones and consequently, analogous conclusions, were deduced for the smoothing estimators and for different values of the probabilities

and

at each sensor. By way of example, the smoothing error variances

are displayed in

Figure 5 for some of the situations considered above.

5. Concluding Remarks

In this paper, recursive algorithms were designed for the LS linear centralized fusion prediction, filtering, and smoothing problems in networked systems with random parameter matrices in the measured outputs. At each sampling time, every sensor sends its measured output to the fusion centre where the data packets coming from all the sensors are gathered. Every data packet is assumed to be transmitted just once, but random delays and packet dropouts can occur during this transmission, so the estimator may receive either one packet, two packets, or nothing. When the current measurement of a sensor does not arrive punctually, the corresponding component of the stacked measured output predictor is used as the compensator in the design of the estimators.

Some of the main advantages of the current approach are the following ones:

The consideration of random measurement matrices provides a general framework to address different uncertainties, such as missing measurements, multiplicative noise, or sensor gain degradation, as has been illustrated by a simulation example.

The covariance-based approach used to design the estimation algorithms does not require the knowledge of the state-space model, even though it is also applicable to the classical formulation using this model.

In contrast to most estimation algorithms dealing with random delays and packet dropouts in the literature, the proposed ones do not require any state vector augmentation technique, and thus are computationally simpler.

The current estimation algorithms were designed using the LS optimality criterion by an innovation approach, and no particular structure of the estimators is required.

Author Contributions

All authors have contributed equally to this work. R.C.-Á., A.H.-C. and J.L.-P. provided original ideas for the proposed model and they all collaborated in the derivation of the estimation algorithms; they participated equally in the design and analysis of the simulation results; and the paper was also written and reviewed cooperatively.

Funding

This research is supported by Ministerio de Economía, Industria y Competitividad, Agencia Estatal de Investigación and Fondo Europeo de Desarrollo Regional FEDER (grant no. MTM2017-84199-P).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1. Based on the general expression (8), to obtain the LS linear estimators

,

, it is necessary to calculate the coefficients

Using (5) for

, the independence hypotheses and the factorization of the signal covariance (H1) lead to

, and

, with

given in (15). Now, using expression (10) for

together with (8) for

and

, the coefficients

,

, are expressed as follows:

which guarantees that

, with

given by

Then, by defining

and

, for

, and taking into account that

, for

, it is easy to obtain expressions (11)–(16).

Next, expression (17) for

is derived. Using (9) for

, we write

, and we calculate each of these expectations:

From (5), we write

, and from the independence properties, it is clear that

Now, from the Hadamard product properties, we obtain

; from property (P5),

, and using the OPL and (2),

. Then, using (11) and the definition of

, the following expression is obtained:

Using (2) and (5) again and the OPL, together with Hypothesis (H1) and (15) for

, we have

From the above items and using (15),

expression (17) is deduced with no difficulty.

To obtain expression (18) for

, we apply the OPL to write

.

On the one hand, using the OPL again, we express

which, taking (16) into account for

, and the definitions of

and

, clearly satisfies

On the other hand, to obtain

, we use (9) and (6) to write

, and since

, the following expression is obtained from the definition of

after some manipulations:

From the above expectations, again after some manipulations, expression (18) for

is obtained.

To complete the proof, expression (19) for

and

is derived. Using the OPL, we have

and

, and from (16) for

, expression (19) is straightforward. Then, the proof of Theorem 1 is complete.

Appendix B

Proof of Theorem 2. Using (8), the signal estimators are written as

, from which it is immediately deduced that the smoothers are recursively obtained by (20) from the filter

.

Taking into account that

, the recursive relation (21) is derived by just calculating each of these expectations as follows:

Hypothesis (H1), together with (15), leads to

From (16) for

, it is clear that

From the above items, (21) is proven simply by denoting

, whose recursive expression (22) is also obvious by using (12) for

.

Finally, using (20) for the smoothers

, the recursive formula for the fixed-point smoothing error covariance matrices

is immediately deduced. ☐

References

- Castanedo, F. A review of data fusion techniques. Sci. World J. 2013, 2013, 704504.

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor data fusion: A review of the state-of-the-art. Inform. Fusion 2013, 14, 28–44.

- Bark, M.; Lee, S. Distributed multisensor data fusion under unknown correlation and data inconsistency. Sensors 2017, 17, 2472.

- Lin, H.; Sun, S. State estimation for a class of non-uniform sampling systems with missing measurements. Sensors 2016, 16, 1155.

- Liu, Y.; Wang, Z.; He, X.; Zhou, D.H. Minimum-variance recursive filtering over sensor networks with stochastic sensor gain degradation: Algorithms and performance analysis. IEEE Trans. Control Netw. Syst. 2016, 3, 265–274.

- Li, W.; Jia, Y.; Du, J. Distributed filtering for discrete-time linear systems with fading measurements and time-correlated noise. Digit. Signal Process. 2017, 60, 211–219.

- Ma, J.; Sun, S. Centralized fusion estimators for multisensor systems with random sensor delays, multiple packet dropouts and uncertain observations. IEEE Sens. J. 2013, 13, 1228–1235.

- Chen, B.; Zhang, W.; Yu, L. Distributed fusion estimation with missing measurements, random transmission delays and packet dropouts. IEEE Trans. Autom. Control 2014, 59, 1961–1967.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Fusion estimation using measured outputs with random parameter matrices subject to random delays and packet dropouts. Signal Process. 2016, 127, 12–23.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Least-squares estimation in sensor networks with noise correlation and multiple random failures in transmission. Math. Probl. Eng. 2017, 2017, 1570719.

- Hu, J.; Wang, Z.; Chen, D.; Alsaadi, F.E. Estimation, filtering and fusion for networked systems with network-induced phenomena: New progress and prospects. Inform. Fusion 2016, 31, 65–75.

- Sun, S.; Lin, H.; Ma, J.; Li, X. Multi-sensor distributed fusion estimation with applications in networked systems: A review paper. Inform. Fusion 2017, 38, 122–134.

- Luo, Y.; Zhu, Y.; Luo, D.; Zhou, J.; Song, E.; Wang, D. Globally optimal multisensor distributed random parameter matrices Kalman filtering fusion with applications. Sensors 2008, 8, 8086–8103.

- Shen, X.J.; Luo, Y.T.; Zhu, Y.M.; Song, E.B. Globally optimal distributed Kalman filtering fusion. Sci. China Inf. Sci. 2012, 55, 512–529.

- Wang, S.; Fang, H.; Tian, X. Minimum variance estimation for linear uncertain systems with one-step correlated noises and incomplete measurements. Digit. Signal Process. 2016, 49, 126–136.

- Hu, J.; Wang, Z.; Gao, H. Recursive filtering with random parameter matrices, multiple fading measurements and correlated noises. Automatica 2013, 49, 3440–3448.

- Linares-Pérez, J.; Caballero-Águila, R.; García-Garrido, I. Optimal linear filter design for systems with correlation in the measurement matrices and noises: Recursive algorithm and applications. Int. J. Syst. Sci. 2014, 45, 1548–1562.

- Yang, Y.; Liang, Y.; Pan, Q.; Qin, Y.; Yang, F. Distributed fusion estimation with square-root array implementation for Markovian jump linear systems with random parameter matrices and cross-correlated noises. Inf. Sci. 2016, 370–371, 446–462.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Networked fusion filtering from outputs with stochastic uncertainties and correlated random transmission delays. Sensors 2016, 16, 847.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Optimal fusion estimation with multi-step random delays and losses in transmission. Sensors 2017, 17, 1151.

- Sun, S.; Tian, T.; Honglei, L. State estimators for systems with random parameter matrices, stochastic nonlinearities, fading measurements and correlated noises. Inf. Sci. 2017, 397–398, 118–136.

- Wang, W.; Zhou, J. Optimal linear filtering design for discrete time systems with cross-correlated stochastic parameter matrices and noises. IET Control Theory Appl. 2017, 11, 3353–3362.

- Han, F.; Dong, H.; Wang, Z.; Li, G.; Alsaadi, F.E. Improved Tobit Kalman filtering for systems with random parameters via conditional expectation. Signal Process. 2018, 147, 35–45.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J.; Wang, Z. A new approach to distributed fusion filtering for networked systems with random parameter matrices and correlated noises. Inform. Fusion 2019, 45, 324–332.

- Guo, Y. Switched filtering for networked systems with multiple packet dropouts. J. Franklin Inst. 2017, 354, 3134–3151.

- Yang, C.; Deng, Z. Robust time-varying Kalman estimators for systems with packet dropouts and uncertain-variance multiplicative and linearly correlated additive white noises. Int. J. Adapt. Control Signal Process. 2018, 32, 147–169.

- Xing, Z.; Xia, Y.; Yan, L.; Lu, K.; Gong, Q. Multisensor distributed weighted Kalman filter fusion with network delays, stochastic uncertainties, autocorrelated, and cross-correlated noises. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 716–726.

- Silva, E.I.; Solis, M.A. An alternative look at the constant-gain Kalman filter for state estimation over erasure channels. IEEE Trans. Autom. Control 2013, 58, 3259–3265.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. New distributed fusion filtering algorithm based on covariances over sensor networks with random packet dropouts. Int. J. Syst. Sci. 2017, 48, 1805–1817.

- Ding, J.; Sun, S.; Ma, J.; Li, N. Fusion estimation for multi-sensor networked systems with packet loss compensation. Inform. Fusion 2019, 45, 138–149.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Covariance-based fusion filtering for networked systems with random transmission delays and non-consecutive losses. Int. J. Gen. Syst. 2017, 46, 752–771.

- Zhu, C.; Xia, Y.; Xie, L.; Yan, L. Optimal linear estimation for systems with transmission delays and packet dropouts. IET Signal Process. 2013, 7, 814–823.

- Ma, J.; Sun, S. Linear estimators for networked systems with one-step random delay and multiple packet dropouts based on prediction compensation. IET Signal Process. 2017, 11, 197–204.

- Ma, J.; Sun, S. Distributed fusion filter for networked stochastic uncertain systems with transmission delays and packet dropouts. Signal Process. 2017, 130, 268–278.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).