Abstract

Under dynamic conditions, motion blur is introduced to star images obtained by a star sensor. Motion blur affects the accuracy of the star centroid extraction and the identification of stars, further reducing the performance of the star sensor. In this paper, a star image restoration algorithm is investigated to reduce the effect of motion blur on the star image. The algorithm includes a blur kernel calculation aided by a MEMS gyroscope, blur kernel correction based on the structure of the star strip, and a star image reconstruction method based on scaled gradient projection (SGP). Firstly, the motion trajectory of the star spot is deduced, aided by a MEMS gyroscope. Moreover, the initial blur kernel is calculated by using the motion trajectory. Then, the structure information star strip is extracted by Delaunay triangulation. Based on the structure information, a blur kernel correction method is presented by utilizing the preconditioned conjugate gradient interior point algorithm to reduce the influence of bias and installation deviation of the gyroscope on the blur kernel. Furthermore, a speed-up image reconstruction method based on SGP is presented for time-saving. Simulated experiment results demonstrate that both the blur kernel determination and star image reconstruction methods are effective. A real star image experiment shows that the accuracy of the star centroid extraction and the number of identified stars increase after restoration by the proposed algorithm.

1. Introduction

Being highly precise and highly reliable, a star sensor is one of the main devices of a celestial navigation system [1]. It is widely used in spacecraft attitude control [2], missile guidance [3], and ship and aircraft navigation [4,5], and is small in size and light in weight [6,7]. By executing star centroid extraction, star identification, and attitude estimation, the attitude information can be determined [8]. In spacecraft attitude determination, when the spacecraft is rapidly maneuvering, the relative motion between the star and imaging device during the exposure time makes the star spot move on the image plane, dispersing the energy of the stars, which causes motion blur. As a result, the signal to noise ratio of the star image decreases, and the accuracy of the centroid position of the star seriously reduces. Motion blur limits the performance of a star sensor under highly dynamic conditions, and can hardly be compensated effectively by traditional methods, such as mechanical, optical, or electronic approaches [9,10,11].

In the past years, many works have been done based on image processing, in order to improve the performance of star sensors under dynamic conditions. According to the working principle, they can be divided into three types.

Some researchers consider the location of the area with some special properties on the star strip as the position of the star spot. As in the literature [12], the position of a star is the centroid of the area between two negative correlation peaks on the gradient autocorrelation curve of the star strip. In the literature [13], the position of the corner points on the strip take the place of the position of the star strip. These two methods can only be performed well in the case of strips with uniform energy distribution. From the perspective of energy accumulation, the energy of a strip is accumulated from one endpoint to another along a specific direction [14,15,16]. However, in this type of method, the direction and length of the accumulation should be known precisely.

There is an offset between the centroid of the strip and its actual position. In this case, some researchers draw support from other sensors to obtain this offset. In the literature [17], the author derives the offset between the centroid of the strip and the actual position of the strip according the relationship between the angular velocity and the motion of the star spot in the image. A deeply-coupled method with a gyroscope and star sensor is proposed by the authors of [18], in which the error of the gyroscope and the offset of the star spot position are estimated simultaneously by an extended Kalman filter. This type of method is easy to implement, but the performance is limited by the cooperative sensors.

The most common method is image restoration. There are two problems that should be solved in star image restoration. One is blur kernel determination, and the other is the image reconstruction method. A large number of work has been done regarding these two issues. For blur kernel determination, it is assumed that the star strips are composed of a set of parallel lines, so the Radon transform can be used to obtain the length and angle of the star strip, and then the blur kernel is calculated [19,20]. Once the star strip cannot be considered as a set of parallel lines (this case often happens in boresight rotation), error will be introduced to the blur kernel. In the literature [21,22,23,24], the motion model of the star sensor is used to determine the blur kernel. These methods are very effective, and they can determine the blur kernel in any motion. However, the methods cannot work well when the inputs of the motion model are inaccurate. For star image reconstruction, there are three types of common methods. The first one is the Wiener filter [25,26,27]. Quan and Zhang [25] and Zhang et al. [26] use weight allocation between the background pixels and strip pixels to reduce the ring phenomenon in the restored image. Wang et al. [27] use the idea of descending dimension to decompose the two-dimensional Wiener filter into two one-dimensional (1D) Wiener filters according to the movement of the pixel on the image plane. This method can double the speed of calculation. Even though the Wiener filter can run very fast, it will amplify the image noise. The Richardson–Lucy (RL) method can effectively suppress noise [19,20,21,22]. For the shortcoming of the long, heavy computation for the RL method, Jiang et al. [19] adopt vector extrapolation to accelerate the RL method. However, the ringing phenomenon is enhanced with iteration by using the RL method. To overcome the ringing phenomenon, Zhang et al. [24] use a regularization method. A mathematical model of motion blur with a regularization item is deduced according to the sparsity of the star image, then a convex optimization is performed to restore the blurred image. This method is sensitive to the errors of the blur kernel.

As discussed above, the Radon transform can only be used on the condition that there is no rotation along the boresight. As a star strip is mainly caused by the rotation of the star sensor, the MEMS gyroscope can be used at any angular velocity (including boresight rotation) for angular velocity measurement, because it is small in size, light in weight, and easy to integrate. In this study, a MEMS gyroscope is used as an assistant instrument for blur kernel determination. In order to mitigate the influence of the bias of the MEMS gyroscope and the installation deviation between the gyroscope and the star sensor on the blur kernel, the structure information of the star strip is extraction using Delaunay triangulation. By utilizing the structure information, the preconditioned conjugate gradient interior point method is used to correct the blur kernel from the gyroscope. For star image reconstruction, the current method used for star image reconstruction is either time-consuming or results in unwanted artifacts. The maximum likelihood estimate with scaled gradient projection (SGP) is used to reconstruct the star image to improve the reconstruction speed and quality.

This paper is organized as follows: The introduction is given in Section 1. The problem introduced by motion blur and the mathematical model of star image restoration are outlined in Section 2. The overall scheme of the proposed algorithm is presented in Section 3. The blur kernel determination method is described in detail in Section 4. Section 5 reports the star image reconstruction. In Section 6, the results of simulation and real image experience are shown. At the end, conclusions are drawn in Section 7.

2. Problem Formulation

2.1. Problem Introduced by Motion Blur

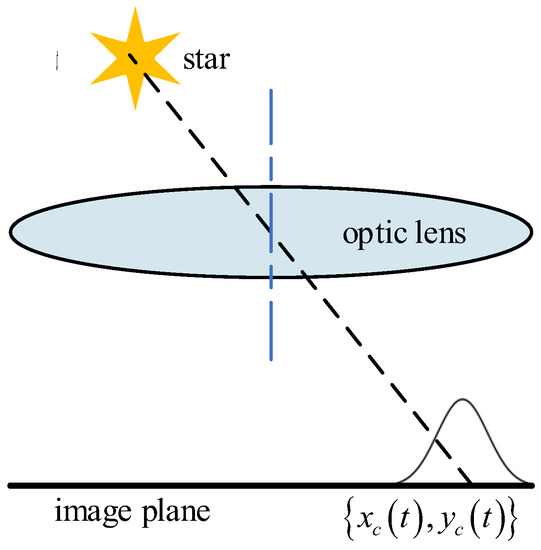

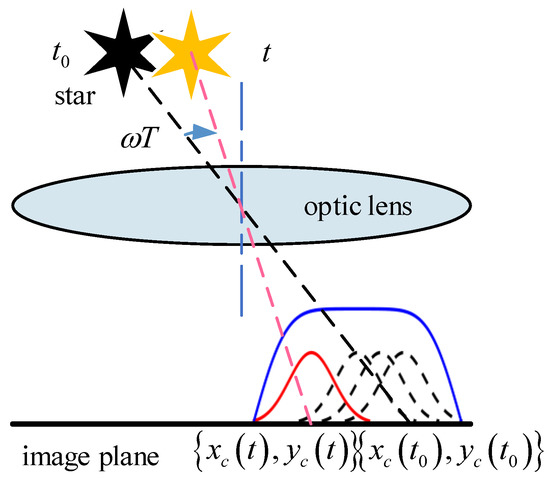

Extracting the centroid of a star spot in a star image accurately is the necessary precondition for attitude, which is determined by the star sensor. Like camera imaging, the star spot in a star image is generated by accumulating star energy in a certain exposure time. Under static conditions the energy of a star spot can be described as a two-dimension Gaussian distribution [28], as shown in Figure 1. When the star sensor is under dynamic conditions, star spots move on the image plane, and a star stripe is generated, as shown in Figure 2. This process leads to the motion blur of the star image. In this case, the energy of the star spot disperses over the star strip and makes the strip dim [29]. When the rotation of the star sensor becomes faster and faster, more and more stars in the image will break into pieces or submerge into the background. This causes the centroid of the star to be shifted by several pixels and reduces the number of detectable stars, which makes it difficult for star identification and attitude determination. Therefore, it is necessary to restore the blurred star image and reconstruct the star spot from the star strip.

Figure 1.

Imaging process of a star spot under static conditions.

Figure 2.

Imaging process of a star spot under dynamic conditions.

2.2. Mathematical Model of Star Image Restoration

According to the authors of [30], motion blur of a star image is one type of image degradation. It is assumed that the degeneration process is linear space invariant, and that the process of image degeneration can be modeled by the convolution operation between the original image and the degradation function, as follows:

where is the blur star image that is obtained by the star sensor, is the original image, is the blur kernel that is the matrix form of the degradation function, and is the convolution operation. In this paper, it describes the motion of the star spot in the image plane during the exposure time. is the image noise.

On the contrary, image restoration uses prior information of image degradation to restore the blur image , and the result of restoration is the estimation of the original image. In this paper, the prior information of the image degradation is , so the star image restoration is a non-blind deconvolution. By representing a two-dimensional image as a vector , the entirety of X is stacked column by column. is the circulate matrix of the blur kernel K. is the same operation of Y as X.

Therefore, the image restoration can be modeled as a minimum problem, as follows:

where is the -norm. Equation (2) is the mathematical model of the star image restoration, A (or ) plays a key role in restoration; its accuracy will directly affect the result of the restoration.

2.3. Mathematical Model of the Blur Kernel

The energy distribution model of a star strip can describe the blur process explicitly, so the blur kernel can be determined by this model.

According to the authors of [28], the star spot on the image plane is static under static conditions. It is assumed that the energy of a star spot can be described as a two-dimension Gaussian distribution, so the function of its energy distribution is as follows:

where is the incident flux of the star in the image plane, T is the exposure time, is the variance of the Gaussian distribution, and represents the coordinates of the center of the star spot.

The position of a star spot on the image plane will change with time in a dynamic condition, and the function of its energy distribution is as follows:

The integral in Equation (4) expresses that the process of the star spot as it moves on the image plane according to the motion trajectory , which generates a strip. Therefore, the motion trajectory in Equation (4) is the mathematical model of the blur kernel.

3. Overview of the Proposed Method

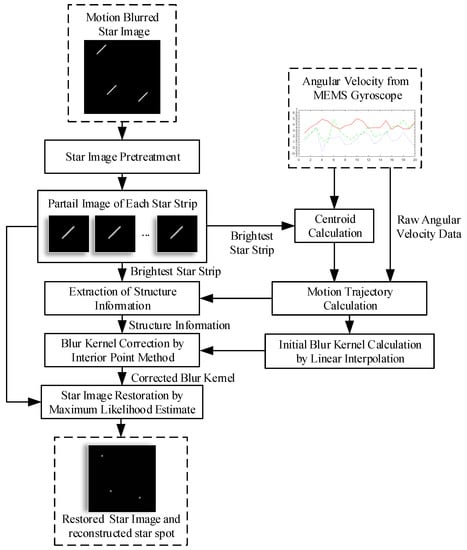

As mentioned above, a blur kernel is necessary to restore the blurred star image. The blur kernel is concerned with motion trajectory. As a star strip is mainly caused by the rotation of the star sensor, a MEMS gyroscope can be used as an assistant device to obtain the blur kernel at any angular velocity. In ideal conditions, the blur kernel can be deduced from the precious angular velocity. As the bias and installation deviation of the gyroscope can affect the accuracy of the blur kernel, a correction process is need. The strip in the star image is the intuitionistic behavior of motion blur, the structure information of the star strip is used to correct the blur kernel from the gyroscope in this paper. Afterwards, a fast star spot reconstruction is carried out with the corrected blur kernel. The flow chart is shown in Figure 3.

Figure 3.

The flowchart of proposed method

Firstly, it is assumed that the brightest star strip can be detected. Before the proposed method is performed, denoising and star segmentation operations are required to obtain partial images, and each partial image contains a star strip. The motion trajectory points can be calculated with the centroid of the brightest strip and the angular velocity data from the gyroscope. With these trajectory points, the blur kernel is generated by linear interpolation. The result of this step is the initial blur kernel.

Then, as the initial blur kernel is not precise, a correction process is needed. The strip in the star image is the intuitionistic behavior of motion blur, and the structure information of the star strip can be used to correct the blur kernel. In this paper, the structure information of the brightest star strip is extracted by Delaunay triangulation. The brightest strip may break into pieces when the rotation is fast enough. The pieces that belong to the same star strip are determined aided by the motion trajectory. After the structure information is extracted, a preconditioned conjugate gradient interior point method is used to obtain an optimized blur kernel with this structure information. This process achieves the purpose of correction. The result of the correction is the corrected blur kernel.

Finally, a maximum likelihood estimate is used to reconstruct the star image with the corrected blur kernel. To improve the reconstruction speed and reconstruction quality without introducing significant additional costs and unwanted artifacts, SGP is used in the reconstruction process. This completes the star image restoration process.

4. Blur Kernel Determination

4.1. Initial Blur Kernel Calculation Aided by the Gyroscope

During exposure, the trajectory of the star spot on image plane reflects the process of motion blur, so, the trajectory contains information about the blur kernel.

For the sake of convenience, the exposure time is divided into several short time intervals, . Let be the attitude matrix of star sensor at present moment, the observed vector of a star in star sensor coordinates is defined as , and , is the corresponding vector in inertial coordinate, so the relationship of these variance is as follows:

where is the attitude matrix of the next moment, it can be calculated from Equation (5), as follows:

where is the cross-product matrix populated with the components of the angular velocity vector . Then, the relationship between and in Equation (7) can be obtained in terms of Equations (5) and (6) as follows:

where can be calculated with the star position in the image plane coordinates. Suppose that during the period of , the angular velocity is constant. For the ith star in the image plane, its trajectory can be calculated by the following:

In Equation (8), the focus length f is much greater than , so the denominator of Equation (8) is approximate to 1, and Equation (8) can be rewritten as follows:

From Equation (9), the motion trajectory of a star spot on the image plane can be obtained during the rotation. Thus, to calculate the position of the star spot in the image plane before exposure, a set of angular velocities and time intervals during exposure are variables that must necessarily be known. As the position of the star spot is difficult to obtain when the exposure begins, herein, the centroid of the star strip is chosen as a substitute. Suppose this centroid can be taken as the position when time , which is exactly at half of the exposure time. Then, the trajectory after can be calculated by Equation (9), and the trajectory before can be calculated by Equation (10), as follows:

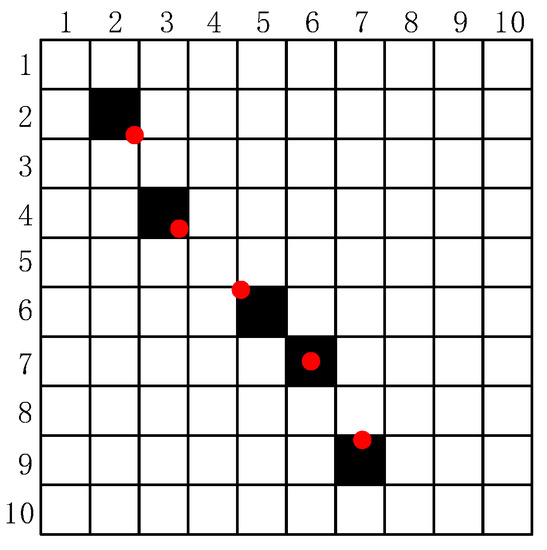

Suppose there are n trajectory points after exposure. The coordinates of each trajectory point are not integers, so a rounding operation is done and expresses them in a matrix. As shown in Figure 4, the red dots are the actual position of the trajectory points, the black squares are their position by rounding operation, and the white squares are zeros elements. As the trajectory points in the matrix are disconnected, and the value of non-zero elements in the matrix are unknown, a post-process is needed.

Figure 4.

The trajectory points in image.

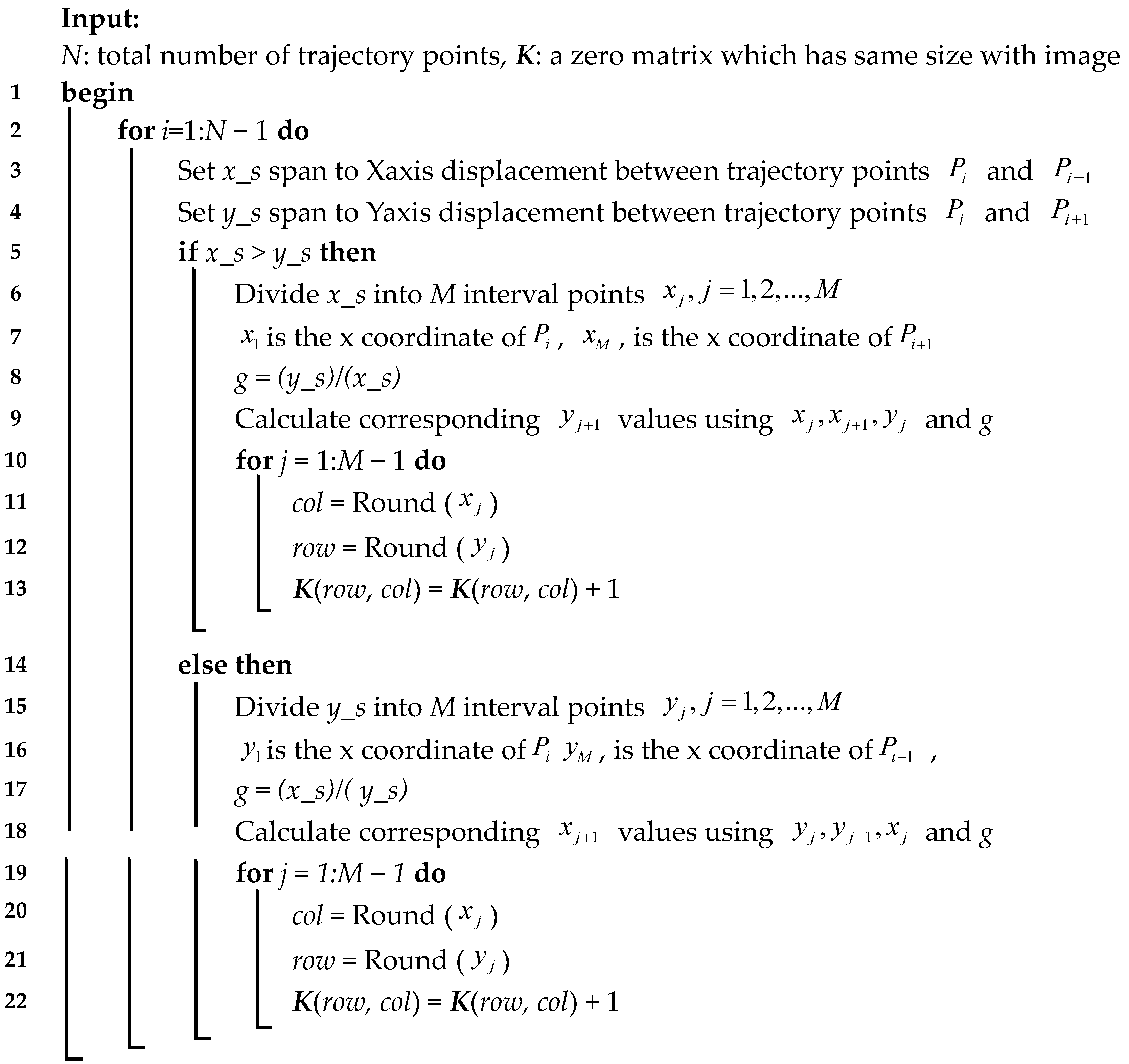

To solve the problem above, the adjacent two trajectory points of P are divided into several interval points. The coordinates of each interval point are calculated by using the linear interpolation method. After a round operation, if there are interval points at the position of a certain pixel, the value of this pixel is . The details of are shown in Algorithm 1.

| Algorithm 1: linear interpolation between adjacent two trajectory points |

|

After the zero elements around the trajectory points in K are deleted, the initial blur kernel is obtained.

4.2. Structure Information Extraction of the Star Strip

The bias of the gyroscope data and the installation deviation between the gyroscope and the star sensor can affect the accuracy of the blur kernel from the gyroscope. The star strip is the intuitionistic behavior of motion blur, so, the structure information of the strip is used to correct the blur kernel. As the strip is an elongated shape, its centerline contains the structure information. The skeleton is an important feature in describing the geometrical shapes of the star strips. It is a one-pixel width polyline that crosses the center of the region. Thus, the skeleton of the strip can be considered as the centerline and the structure information of the star strip can be obtained by skeletonization.

As the structure information is extracted in the binary image, it is assumed that the binarization of the partial star image has been done. If the strip breaks into pieces, a pretreatment is need before skeletonization. The pieces that are crossed by the same motion trajectory belong to the same strip. Then, morphological dilation and erosion are used to connect these pieces to a whole strip.

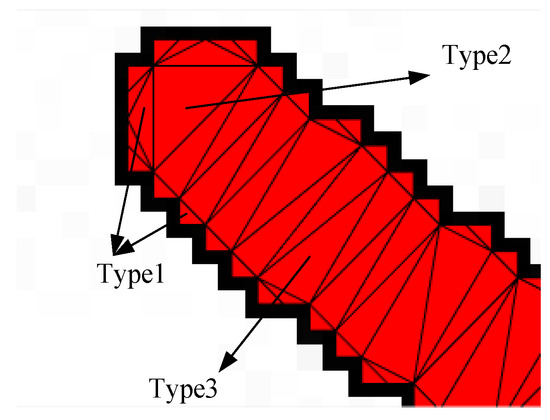

To obtain the skeleton of a star strip quickly, a meshing method is adopted. As triangles have a great deal of flexibility in the representation of geometric shapes, they are chosen as the element of the mesh. Delaunay triangulation is the most well-developed and effective method in all two-dimensional planar triangulation methods. It has the advantage of simplicity for implementation, little memory expense, and it can adapt to a variety of irregular shapes. Therefore, it is suitable for the skeletonization of a star strip. The detail of the Delaunay triangulation can be found in the literature [31]. For the triangle mesh of star strip, there are three types of triangle after triangulation, as shown in Figure 5.

Figure 5.

Triangle mesh of a star strip.

The first type is small-sized triangles, which mainly appear at the border of the strip area. The second type is the end triangles, which are large in size, and mainly appear at both ends of the strip area. The third type is structure triangles, which form the body of the strip. The first type of triangles has no contribution to the skeletonization, but do the opposite. So, they should be ignored.

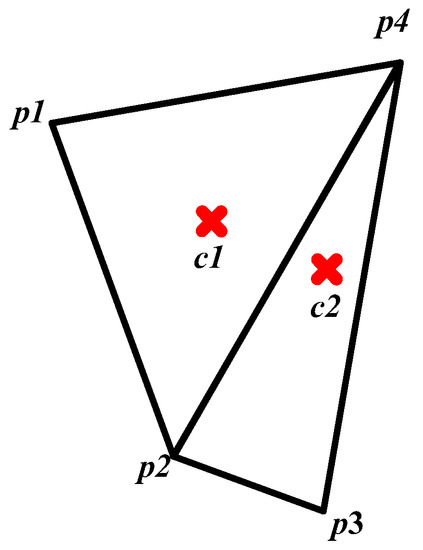

The skeleton is formed by the local symmetry points of the local area [32]. Thus, to each triangle, a point that reflects the local symmetry should be selected as the skeleton. As shown in Figure 6, the end triangle is similar to the equilateral triangle, so its circumcenter, c1, is the skeleton point, as follows:

Figure 6.

Skeleton points of a triangle mesh.

The structure triangle is similar to the isosceles triangle; its height is much longer than its base-side, so the skeleton point c2 is the middle of the line which connects the midpoints of two hypotenuses, as follows:

Expressing the skeleton points in a matrix T, the value of each matrix element is determined as follows:

where I is a partial star image that contains only one star strip. In the next section, a correction method is adopted to correct the blur kernel with matrix T.

4.3. Blur Kernel Correction with Structure Information of a Star Strip

The blur kernel correction can be expressed as a small-scaled convex optimization model, as follows:

where is the vector form of the blur kernel to be estimated, A is the data matrix, is the observed item, here, the vector form of matrix T. is the regularization coefficient.

Equation (14) is a -regularized least square problem, and the objective function is convex, but not differentiable. There are no analytic formulae or expressions for the optimal solution; its solution must be computed numerically.

To solve Equation (14), the interior point method is adopted. Firstly, a logarithmic barrier for the bound constraints is defined, replacing the inequality constraint, as follows:

Substituting Equation (15) into Equation (14), when , the 1-norm item in Equation (14) can be replaced by Therefore, the objective function can be transformed into the following:

where t > 0 is the penalty factor. Equation (16) can be minimized by the Newton method. For any t > 0, the central path consists of the unique minimizer, , of the convex function of Equation (16).

For faster convergence, a preconditioned conjugate gradient algorithm is used to compute the search step as the exact solution to the Newton system, which avoids the calculation of the Hessian, as follows:

where, .

As a stopping criterion, a duality gap is defined as the lower bound of the difference between the primal function value and the dual function value, as Equation (18), as follows:

where is the dual function, and is the dual feasible point, which can be expressed, respectively, as follows:

The detail of the dual function is in Appendix A. In Equation (20), s is the step length, and can be determined by the following:

The lower bound of the optimal value of the primal function can be given through the dual feasible point. Thus, the optimal value of is the optimal lower bound of the primal function. According to weak duality, the ratio of duality gap to the dual function value is the optimal upper bound, as follows:

where is the optimal value of the objective function, and f(x) is the primal objective computed with the point. When , the iteration is stopped, or t is updated by the following rule, and continues iterating as follows:

where is the scale factor and .

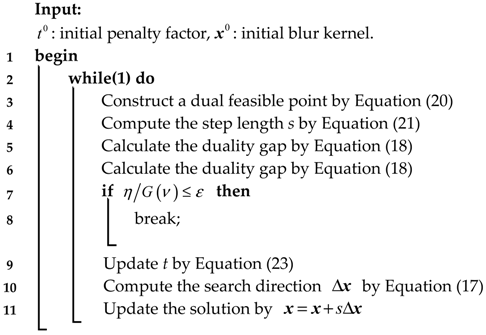

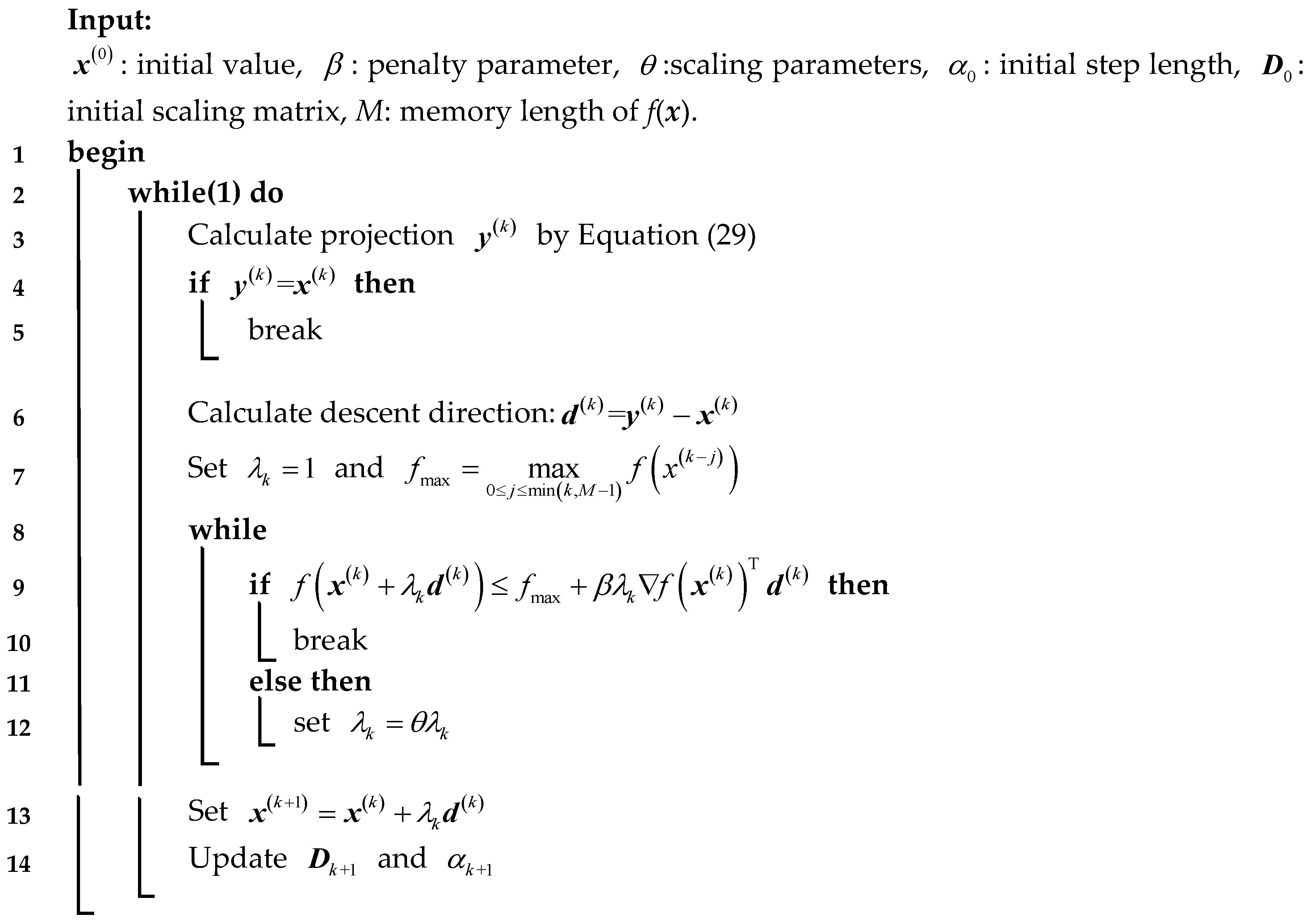

The whole process to solve Equation (14) is shown in Algorithm 2.

| Algorithm 2: preconditioned conjugate gradient interior point algorithm |

|

5. Star Image Reconstruction

In Equation (2), its objective function is a continuously differentiable convex function, and the constraints force the non-negativity of the solution. It can be solved by a maximum likelihood estimate. b is the sum of two terms, the blur image and Poisson noise . Thus, the maximum likelihood estimate to solve the star image reconstruction can be transformed to a minimized problem of the Kullback–Leibler divergence [33,34], the objective function is as follows:

To improve the calculation speed without introducing an additional cost, here, a SGP is used to solve the minimized problem of Equation (24). The gradient and hessian of are as follows:

In Equations (25) and (26), is a vector whose elements are all equal to one, and and are diagonal matrices. In the kth iteration, the solution is updated by the following:

where is the line search parameter, used to reduce the size of the step along the feasible descent direction . It can be determined by a non-monotone strategy [35]. is with respect to the elements of the matrix, D, in a non-negative quadrant, when x ≥ 0, can be written as follows:

In Equation (29), is the step-length, is a diagonal scaling matrix, with its elements satisfying the following:

The update rule of scaling matrix is as follows:

For the step-length parameter, a selection strategy derived by an updating rule is introduced in the literature [36]. This step-length selection is based on an adaptive alternation of the two Barzilai and Borwein (BB) rules [37], which, in the case of the scaled gradient directions, are defined by the following:

In Equation (32) , . The step-length is determined by the following:

where is the alternating parameter of , is a positive integer, which controls the memory length of , and and are calculated by the following:

The whole process to solve the minimization of Equation (24) is shown in Algorithm 3.

| Algorithm 3: Image reconstruction by SGP |

|

6. Experiment and Results

The experiments in this section aim to show the performance of the proposed method. All of the experiments are conducted with MATLAB on a computer with Intel(R) Core(TM) i5-480M processor and 8 GB of random-access memory (RAM).

6.1. Simulated Experiment

There are three simulated experiments. Firstly, the comparison of different blur kernel determination methods is conducted to verify the performance of the proposed blur kernel determination method. Then, the star images are reconstructed based on the blur kernels which are determined by different methods to further verify the superiority of the proposed blur kernel determination method. All of the images are reconstructed by the same reconstruction method. Finally, the comparison of the different reconstruction methods with the same blur kernel is conducted to verify the performance of the proposed star image reconstruction method.

6.1.1. Simulation Condition

In this experiment, the hardware parameters of the simulated star sensor are firstly set, as follows: the angle of view is 23° (H) × 23° (V), the focal length is 18 mm, the image size is 1024 × 1024 pixels, the size of each pixel is 10 μm × 10 μm, the sampling frequency is 5 Hz, and the time of exposure is 100 ms. The star images are simulated using the Smithsonian Astrophysical Observatory (SAO) star catalogue. Only the stars with a magnitude no greater than 4 Mv are simulated. All of the images are added by Gaussian noise with 0 mean and 0.001 variance.

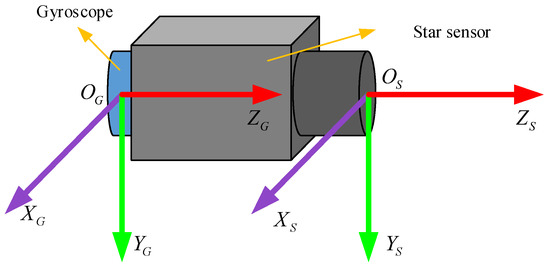

It is assumed that the installation parameters between the star sensor and gyroscope are known. As shown in Figure 7, the axis of the gyroscope coincides with the boresight of the star sensor, and and are parallel to and , respectively.

Figure 7.

Installation of the star sensor and gyroscope.

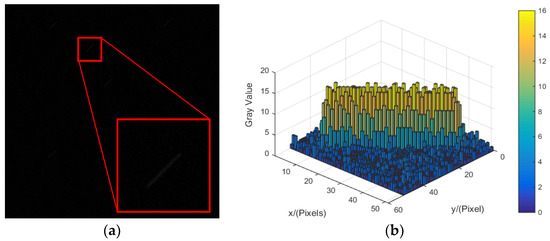

The actual rotation angular velocity of the star sensor is In this condition, the blurred star image is simulated, as shown in Figure 8.

Figure 8.

The simulated blur star image and its energy distribution. (a) The simulated blur star image. (b) The energy distribution of the blur star image.

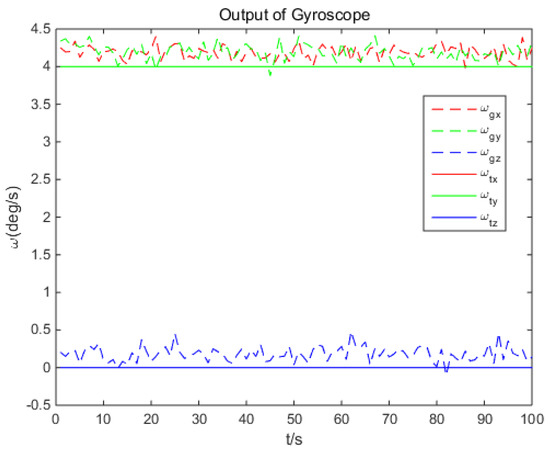

The simulated output of gyroscope is based on , with an additional noise of 0.2°/s bias and 0.1 (°/s)2 variance, as shown in Figure 9. The output rate of gyroscope is 100 Hz.

Figure 9.

Simulated output data of gyroscope.

6.1.2. Results

(1) Comparison of Different Blur Kernel Determination Methods

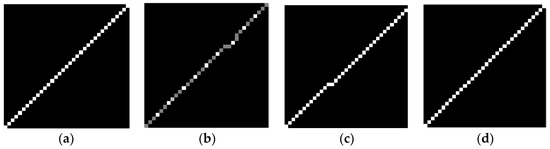

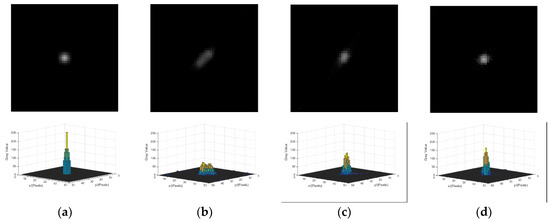

This experiment is conducted to verify the error between the blur kernels determined by different methods and the truth. The true blur kernel of the star strip in Figure 8 is shown in Figure 10a. The blur kernel that is calculated directly by the angular velocity from the MEMS gyroscope is shown in Figure 10b. Figure 10c shows the blur kernel by the improved Radon transform from the literature [19]. In the proposed correction method, A is the identity matrix; the structure information T is the observer; , , , ; and the corrected blur kernel is shown in Figure 10d. As the shape and size of the blur kernel are both the factors that influence restoration, the mean square error (MSE) and size are chosen as the evaluation indicator to evaluate the similarity to the truth. The MSE of the three blur kernels are ,, and , and the result of the proposed method is the lowest. As the blur kernels and the truth are not a coincident, the MSEs of the blur kernel obtained by the improved Radon transform method and gyroscope are higher. However, the overall shape is closer to the truth. Additionally, as the width of the blur kernel is equal to one, the length is the size parameter. The length of the three blur kernels are 33 pixels, 35 pixels, and 35 pixels, and the errors are 2 pixels, 0 pixels, and 0 pixels. The proposed method and the improved Radon transform are the same as the truth.

Figure 10.

Blur kernel determined by a different method. (a) The true blur kernel. (b) The blur kernel obtained by gyroscope. (c) The blur kernel obtained by improved Radon transform. (d) The blur kernel obtained by the proposed method.

To verify the performance of the different blur kernel determination methods at different angular velocities, these methods are carried out at six groups of angular velocity , , , , , and .

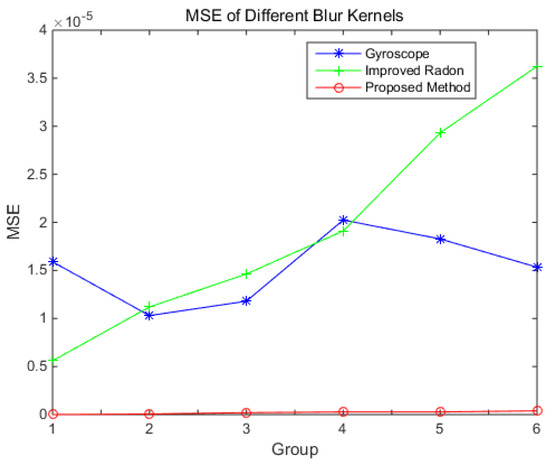

Figure 11.

Mean square error (MSE) of the blur kernel by different method.

Figure 12.

Length of Blur Kernel by different method.

It can be known that the MSE of the blur kernel from the gyroscope is irregular with the angular velocity. The MSE of the blur kernel from the improved Radon transform method increases with the increase of the angular velocity. The MSE of the blur kernel obtained by the proposed method is the lowest, and it increases slowly with the angular velocity. The length of the blur kernel obtained by the gyroscope method is always shorter than the truth, and it may cause the image to not be fully reconstructed. The length of the blur kernel obtained by the improved Radon transform method is longer than the truth, and the length error increases with the increase of the angular velocity. The length of the blur kernel obtained by the proposed method is similar to the truth.

From the result above, the error of the blur kernel obtained from the gyroscope is only related to the gyroscope’s bias. The improved Radon transform method is based on the image. When the angular velocity increases, the strip becomes dim, which induces the error of the blur kernel increase. When the star sensor rotates along the boresight (e.g., groups 3 and 5), the error is larger than that without rotating along the boresight. In the proposed method, the blur kernel is corrected by the structure of the star strip. As the energy of the strip has little effect on its structure, the influence of the angular velocity on the error of the blur kernel is slight.

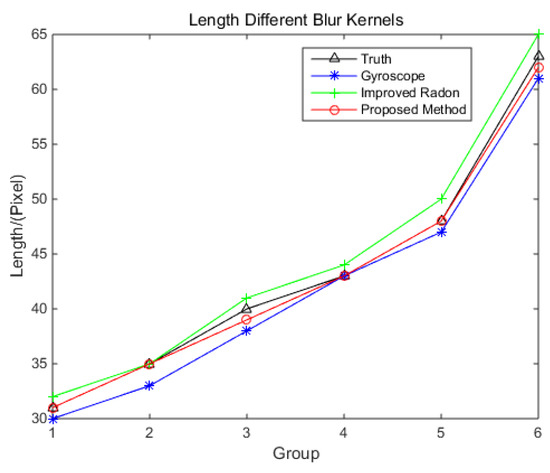

The computation times of the different blur kernel determination methods are shown in Figure 13.

Figure 13.

The computation time of different blur kernel determination methods

As the blur kernel determined by the gyroscope is the motion trajectory, it can be calculated very quickly. So, the computation time is short. The improved Radon transform needs image enhancement, so the computation time is longer, and increases with the angular velocity. As the size of the blur kernel increases with the angular velocity, the calculation becomes larger and larger. At a low angular velocity, the proposed method is faster than the improved Radon transform. At a high low-angular velocity, the contrary is the case.

(2) Star Images Reconstruction with Different Blur Kernels

The comparison of the star image reconstructions with different blur kernels are carried out in this experiment.

The parameters of the reconstruction method are set as , , , , , , and M = 1, and the initial value of the iteration is the blur image. As the star image is sparse, it is not necessary to reconstruct the whole image. In order to improve the experiment efficiency, only partial images that contain a stripe are reconstructed. To compare the quality of the result using different reconstruction methods, the energy distribution of the reconstructed star spot, the centroid position error of the star spot, and the peak signal noise ratio (PSNR) of the reconstructed star image is chosen as the evaluation indicator. Figure 14a shows the star spot in the static condition. The reconstructed results with the three blur kernels above are shown in Figure 14b–d, respectively. The result with the blur kernel achieved by the gyroscope is shown in Figure 14b. The reconstructed star is still a stripe with the smaller size, and the energy of the star is not concentrated. The result with the blur kernel achieved by the improved Radon transform is shown Figure 14c. The energy distribution of the reconstructed star spot in Figure 14c is better than that in Figure 14b, but there is still a darker trailing at end of the star spot. The result with blur kernel achieved by the proposed method is shown in Figure 14d. The shape of star spot is approximately a round spot, and the energy distribution of the star is more concentrated which is close to the Gaussian. Therefore, the reconstructed result with the corrected blur kernel is more similar to the star spot in the static condition.

Figure 14.

Result of energy distribution after reconstruction with different blur kernels. (a) The star spot in the static condition. (b) The reconstruction result with blur kernel obtained by the gyroscope. (c) The reconstruction result with the blur kernel obtained by the improved Radon transform. (d) The reconstruction result with the blur kernel obtained by the proposed method.

In view of the centroid position error of the star spot, the centroid position of the reconstructed stars is calculated after the star spots segmentation. Table 1 shows the results of the average centroid position error with the three blur kernels at different angular velocities. The first column is the result with the blur kernel obtained by the gyroscope. The second column is the result with the blur kernel obtained by the improved Radon transform. The third column is the result with the blur kernel achieved by the proposed method. The results show that using the corrected blur kernel can obtain a higher accuracy of the centroid position.

Table 1.

Average centroid position error after reconstruction with different blur kernels.

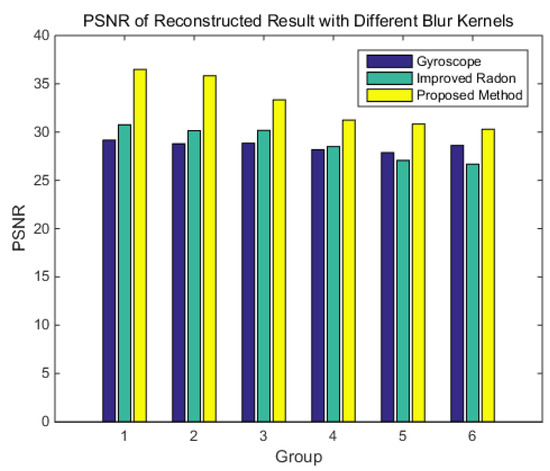

PSNR is a parameter used to compare the quality of the reconstructed image with the original image. The greater the PSNR, the more similarity exists between the two images. The result of the PSNR is shown in Figure 15. The results show that the PSNR of the reconstructed image with the blur kernel obtained by the gyroscope is not affected by angular velocity. The PSNR of the reconstructed image with the blur kernel obtained by the improved Radon transform decreases with the increase of the angular velocity. The reconstructed image with the blur kernel obtained by the proposed method are higher than those of the other two methods, that is, the reconstructed star image is closer to the original image.

Figure 15.

PSNR result after reconstruction by different blur kernels.

The results of the above experiments prove that the proposed blur kernel correction method is effective.

(3) Star Spot Reconstruction with Different Reconstruction Methods

The methods involved in the comparison are the Wiener filter [25], accelerated RL [19] method, and -regularization [24], and the parameters of the comparison methods are set according to [19,24,25]. All of the reconstruction methods above are carried out with the corrected blur kernel as the uniform condition.

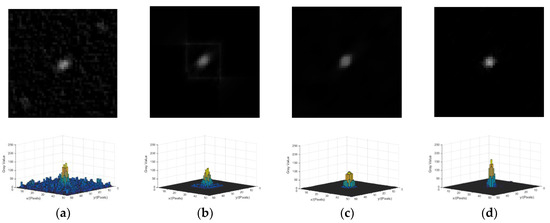

As the Wiener filter can amplify noise, the noise is enhanced in Figure 16a. Figure 16b is the result of accelerated RL method, and the image is reconstructed gradually with the increase in the number of iterations. However, the ringing phenomenon around the spot is more obvious. Figure 16c shows the result of -regularization, and it is a maximum posteriori probability method that utilizes the prior information of the energy distribution of the star. -regularization has good robustness to noise, but the overall brightness of the reconstructed star spot is low. The energy concentration of the reconstructed star spot using the -regularization method is better than that using the accelerated RL method and the Wiener filter. Figure 16d shows the result of the proposed method. The energy concentration and the overall brightness of the reconstructed star spot by using the proposed method are better than the other three methods, and the proposed method is robust to noise.

Figure 16.

Reconstruction results of different methods. (a) Reconstruction result of the Wiener filter. (b) Reconstruction result of the accelerated Richardson–Lucy (RL) method. (c) Reconstruction result of -regularization. (d) Reconstruction result of the proposed method.

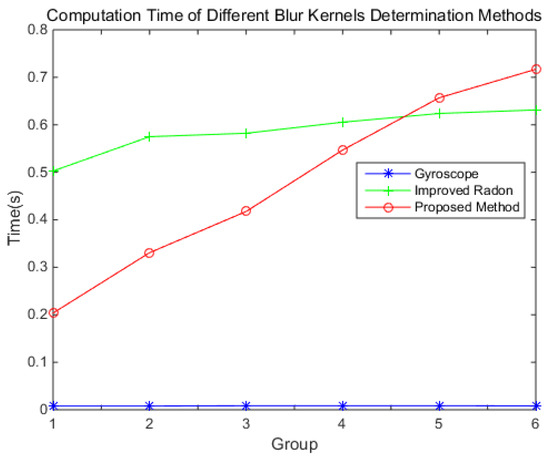

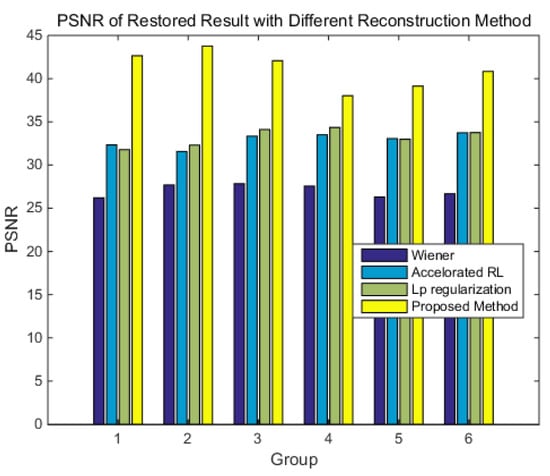

To verify the performance of the reconstruction methods for the different blurred star images, the comparison experiment is carried out at different angular velocities. The results are evaluated based on the centroid position error, PSNR, and the computational speed. Table 2 is the average centroid position error of the different reconstruction methods at different angular velocities.

Table 2.

The average centroid position error of different reconstruction methods.

The result shows that all of the reconstruction methods can guarantee sub-pixel accuracy at different angular velocities. The first column is the result of the Wiener filter, and the Wiener filter amplifies the noise in the image, which enlarges the error. The second column is the result of the accelerated RL method. The accelerated RL method is robust to noise, but the ringing phenomenon limits its accuracy. The third column is the result of -regularization, which solves the ringing phenomenon. Its accuracy is higher than the accelerated RL method, however, the overall brightness is low, which limits the accuracy improvement. The forth column is the method proposed in this paper. As the SGP method can always find the optimal solution along the feasible descent direction, using the proposed method can obtain a higher precision centroid position.

Figure 17 is the results of PSNR, the results show that the reconstructed image is more similar to the truth using the method proposed in this paper. Therefore, the results above show that the proposed reconstruction method has better performance.

Figure 17.

The PSNR of the different reconstruction methods.

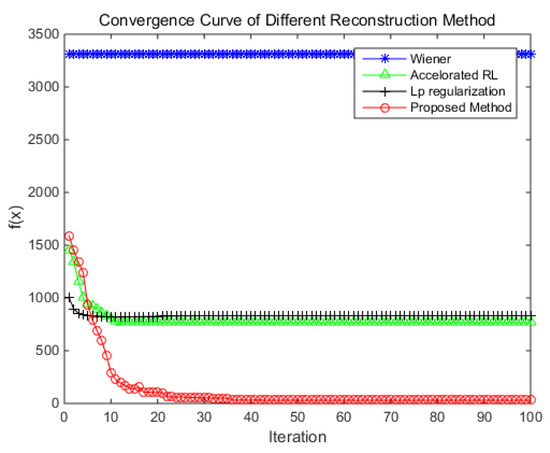

For the computation speed, the convergence rate and the total computation time are chosen as the evaluation indicators. The convergence curve of each method is shown in Figure 18, where the horizontal axis is the number of iterations. The vertical axis is the value of function f(x); the function represents the similarity between the reconstruction result after blur processing and observed blur image. It is defined as follows:

where is the circulate matrix of the blur kernel; x is a vector where the entirety of restored image is stacked column by column; b is the same operation of the observed blur image; and is the 1-norm of the vector. When the difference between the function values of the current two iterations is less than 1, the objective function is considered to be converged. The experiment result shows that the Wiener filter is a non-iterative method. Therefore, its convergence curve is a horizontal line, and the value of the target function is the maximum. The convergence value of the accelerated RL method and -regularization are similar. For the accelerated RL method, the objective function value reaches 768.78 after 26 iterations. For -regularization, the objective function value reaches 831.11 after 28 iterations. For the proposed method, the objective function value reaches 50.78 after 35 iterations. Although it takes slightly more iterations, the value of the objective function is much lower. This shows that the proposed reconstruction result is closer to the truth.

Figure 18.

The convergence curve of different methods.

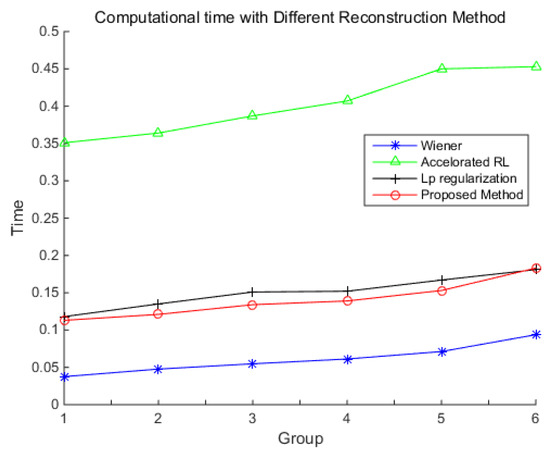

The computation time of each method at the angular velocities in Table 2 is shown in Figure 19. The result shows that the Wiener filter is the fastest, and the accelerated RL method is the slowest. The proposed method is slightly faster than -regularization, and offers a speedup of nearly three times more than the accelerated RL method. As the length of the star strip increases with the increase of the angular velocity, the size of the partial image to be reconstructed is larger, which makes the computation time increase, and this can be also found in Figure 18.

Figure 19.

The computation time of different methods.

The results above show that, in the acceptable calculation time, the result of the proposed reconstruction method is the most similar to the truth, and the position accuracy is the highest. Therefore, this reconstruction method is effective and available for blurred star images.

6.2. Star Extraction and Identification in Real Star Image

In this experiment, the proposed algorithm is performed entirely to restore the whole blurred star image. The brightness enhancement of stars, centroid position error, and number of identified stars before and after restoration are the evaluation indicators to verify the proposed algorithm.

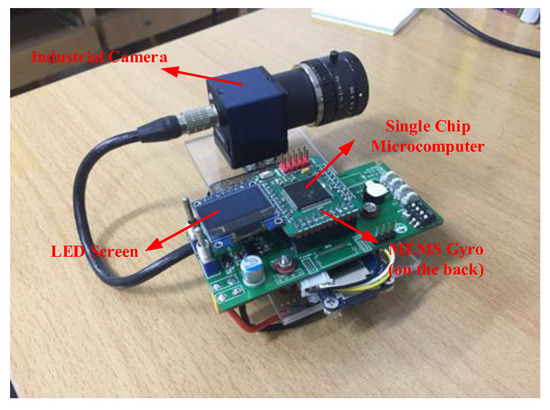

The star image and angular velocity are obtained by an integrated system that includes an industrial camera (taking the place of the star sensor), a MEMS gyroscope, and a single-chip microcomputer system, as shown in Figure 20. The angular velocity is sampled by the single-chip microcomputer and recorded in a memory card. The star image is sampled by the host computer software on the PC. The synchronization between the camera and the gyroscope is realized by the hardware trigger signal of single-chip microcomputer. The parameters of the integrated system are shown in Table 3.

Figure 20.

The integrated system.

Table 3.

The parameters of integrated system.

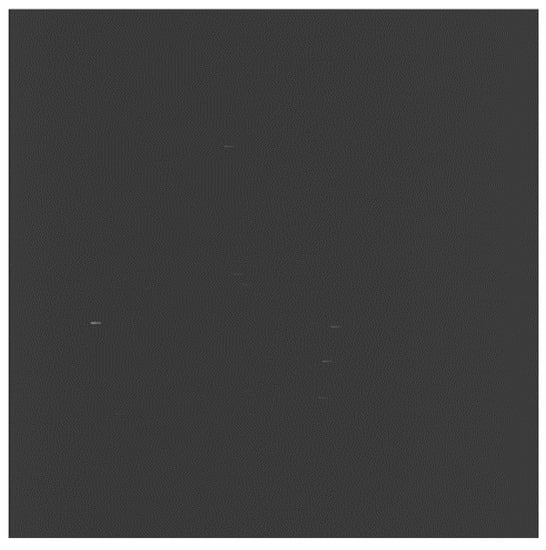

The integrated system is fixed on the turntable. When the turntable turns, the camera begins to capture the image, and angular velocity data from the gyroscope is recorded at the same time. The blurred star image is shown in Figure 21.

Figure 21.

Real blur star image.

After restoration, the centroid extraction and star identification are performed with both the blurred star image and the reconstructed star image, and the comparison results are shown in Figure 22, Table 4 and Table 5.

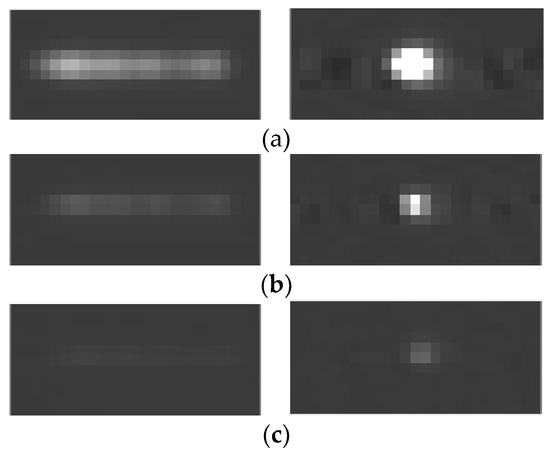

Figure 22.

Brightness comparison before and after restoration. (a) Brightness comparison of high brightness star strip before and after restoration. (b) Brightness comparison of mid brightness star strip before and after restoration. (c) Brightness comparison of low brightness star strip before and after restoration.

Table 4.

Centroid position error before and after restoration.

Table 5.

Star identification before and after restoration.

Figure 22 shows the brightness comparison before and after restoration. In the left column of Figure 22, the star strips are dim before the restoration. Some of the star strips are broken into several pieces and submerged in the background, which cannot be detected. In the right column of Figure 22, the dim pieces of the strip get together after restoration, so the brightness of the star after restoration is enhanced, which becomes easier to detect. The average value of the background pixels is 61. In Figure 22a, the maximum value of the pixel on the stripe is 177, and this value becomes 255 after restoration, and many pixels are saturation. In Figure 22b, the maximum value of pixel on the stripe is 91, and this value become 255 after restoration. In Figure 22c, the maximum value of the pixel on the stripe is 67, and this value become 97 after restoration.

For the centroid position error, it is difficult to determine the truth of the centroid position of the restored star spot. Here, the centroid of the brightest star is chosen as the fiducial point, and the centroid of each star spot relative to the fiducial point is calculated as the relative position. The truth is the relative position in the static star image, and the error with the truth before and after restoration is shown in Table 4.

Table 4 shows that the number of the detectable stars obviously increases after restoration. As the false detection caused by fracture decreases, the centroid accuracy improves.

The detailed information of the identified stars, including the index number and the corresponding magnitude in the Smithsonian Astrophysical Observatory (SAO) Star Catalog of the experiment, is shown in Table 5. It can be observed that the number of identified stars increases from 10 to 24, while the identification rate rises from 37.04% to 88.89%, and the magnitude rises from 4.2 to 5.2. Therefore, the identification rate of the star in the dynamic condition significantly improves after restoration. The performance of the star sensor in the dynamic condition is improved.

7. Conclusions

To overcome the problem of the decreased accuracy of the centroid extraction and the failure of the star identification introduced by motion blur, a new image restoration algorithm is investigated in this paper. The new algorithm includes an initial blur kernel calculation, blur kernel correction, and star image reconstruction. In the initial blur kernel calculation, the motion trajectory of the star in the image is calculated, aided by the angular velocity from a MEMS gyroscope. The initial blur kernel is calculated by linear interpolation with the motion trajectory points. In the blur kernel correction, the structure information of the star strip is extracted by Delaunay triangulation. Based on the structure information, the initial blur kernel is corrected by the preconditioned conjugate gradient interior point. In the star image reconstruction, the SGP is used to reduce the computation time. Experiments with simulated star images have been conducted to compare the proposed restoration algorithm with others. The MSE and the length of the blur kernels at different angular velocities show that the proposed blur kernel determination method has a better MSE and length error than those determined by other methods. The same image reconstruction method is carried out for a further comparison of the different blur kernels. The reconstruction results show that the centroid position error, PSNR, energy concentration, and visual quality are better when using the proposed blur kernel determination method. The influence of angular velocity on the blur kernel has also been analyzed. Subsequently, the same blur kernel is used in the comparison of different reconstruction methods. The reconstruction results at different angular velocities show that the proposed reconstruction method is superior in the centroid position error, PSNR, energy concentration, and visual quality. The computation speed of the proposed reconstruction method offers a speedup of nearly three times over the accelerated RL method without introducing unwanted artifacts. In the result of the experiment with a real star image, the accuracy of the centroid has been improved and more stars have been detected after restoration. In addition, the identification rate is up to 88.9%. Therefore, the star sensor can provide attitude information more accurately in the dynamic condition by using the proposed method.

Author Contributions

S.W. performed the experiments and finished the draft manuscript. S.Z. proposed the main idea. M.N. reviewed and edited the manuscript. B.Z. contributed to the hardware and software design in real star image experiment.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

An equality constrain is introduced into Equation (14). The Equation (14) is transformed into the following:

For the dual feasible point , the Lagrangian is as follows:

The dual function of (A1) as the minimum value of Lagrangian over x and z is as follows:

As the is a convex quadratic function of z, the minimizing z from the optimality condition as follows:

which yields . According to the literature [38] (Equation (5.2) in page 216)

According to the literature [38] (Equation (5.12) in pages 221–222), the dual function for problem (A1) is as follows:

where is the dual objective of (A1). According to the literature [39], the dual feasible point is multiplied by a step size s.

References

- Liebe, C.C. Accuracy performance of star trackers-A Tutorial. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 587–599. [Google Scholar] [CrossRef]

- Mayuresh, S.; Mathew, J.; Sreejith, A.G. A software package for evaluating the performance of a star sensor operation. Exp. Astron. 2017, 43, 99–117. [Google Scholar]

- Hou, B.; He, Z.; Li, D. Maximum correntropy unscented kalman filter for ballistic missile navigation system based on SINS/CNS deeply integrated mode. Sensors 2018, 18, 1724. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q.Y.; Cui, X.F.; Li, Y.B. Performance enhancement of a USV INS/CNS/DVL integration navigation system based on an adaptive information sharing factor federated filter. Sensors 2017, 17, 239. [Google Scholar] [CrossRef] [PubMed]

- Ning, X.; Zhang, J.; Gui, M. A fast calibration method of the star sensor installation error based on observability analysis for the tightly coupled SINS/CNS integrated navigation system. IEEE Sens. J. 2018. [Google Scholar] [CrossRef]

- Muruganandan, V.A.; Park, J.H.; Lee, S. Star Selection algorithm for Arcsecond Pico Star Tracker. In Proceedings of the AIAA Aerospace Sciences Meeting, Kissimmee, FL, USA, 8–12 January 2018; p. 2199. [Google Scholar] [CrossRef]

- Saleem, R.; Lee, S. Reflective curved baffle for micro star trackers. In Proceedings of the 2017 IEEE International Symposium on. Robotics Intelligent Sensors, Ottawa, ON, Canada, 5–7 October 2017. [Google Scholar]

- Li, J.; Wei, X.; Zhang, G. An extended kalman filter-based attitude tracking algorithm for star sensors. Sensors 2017, 17, 1921. [Google Scholar] [CrossRef]

- Bezooijen, R.W.H.V. SIRTF autonomous star tracker. IR Space Telesc. Instrum. 2003, 4850, 108–121. [Google Scholar] [CrossRef]

- Bezooijen, R.W.H.V.; Kevin, A.A. Performance of the AST-201 star tracker for the microwave anisotropy probe. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Monterey, CA, USA, 5–8 August 2002; pp. 2002–4582. [Google Scholar]

- Gong, D.Z.; Wu, Y.P.; Lu, X. An attempt at improving dynamic performance of star tracker by motion compensation. Aerosp. Control Appl. 2009, 35, 19–23. [Google Scholar]

- Sun, T.; Xing, F.; You, Z. Motion-blurred star acquisition method of the star tracker under high dynamic conditions. Opt. Express 2013, 21, 20096–20110. [Google Scholar] [CrossRef] [PubMed]

- Sease, B.; Koglin, R.; Flewelling, B. Long-integration star tracker image processing for combined attitude-attitude rate estimation. In Proceedings of the Sensors. Systems. Space Applications VI, Baltimore, MD, USA, 29 April–3 May 2013; p. 87390. [Google Scholar] [CrossRef]

- Hou, W.; Liu, H.; Lei, Z. Smeared star spot location estimation using directional integral method. Appl. Opt. 2014, 53, 2073–2086. [Google Scholar] [CrossRef] [PubMed]

- Yu, W.; Jiang, J.; Zhang, G. Multiexposure imaging and parameter optimization for intensified star trackers. Appl. Opt. 2016, 55, 10187–10197. [Google Scholar] [CrossRef] [PubMed]

- Pasetti, A.; Habinc, S.; Creasey, R.C. Dynamical binning for high angular rate star tracking. Spacecr. Guid. Navig. Control Syst. 2000, 425, 255. [Google Scholar]

- Liao, Y.; Liu, E.; Zhong, J. Processing centroids of smearing star image of star sensor. Math. Prob. Eng. 2014, 1–8. [Google Scholar] [CrossRef]

- Fei, X.; Nan, C.; Zheng, Y. A novel approach based on MEMS-gyro’s data deep coupling for determining the centroid of star spot. Math. Prob. Eng. 2012, 1–20. [Google Scholar] [CrossRef]

- Jiang, J.; Huang, J.; Zhang, G. An accelerated motion blurred star restoration based on single image. IEEE Sens. J. 2017, 17, 1306–1315. [Google Scholar] [CrossRef]

- Zhang, H.; Niu, Y.; Lu, J. Accurate and autonomous star acquisition method for star sensor under complex conditions. Math. Prob. Eng. 2017, 1–12. [Google Scholar] [CrossRef]

- Sun, T.; Xing, F.; You, Z. Smearing model and restoration of star image under conditions of variable angular velocity and long exposure time. Opt. Express 2014, 22, 6009–6024. [Google Scholar] [CrossRef] [PubMed]

- Ma, L.; Bernelli-Zazzera, F.; Jiang, G. Region-confined restoration method for motion-blurred star image of the star sensor under dynamic conditions. Appl. Opt. 2016, 55, 4621–4631. [Google Scholar] [CrossRef] [PubMed]

- Wu, X.J.; Wang, X.L. Multiple blur of star image and the restoration under dynamic conditions. Acta Astronaut. 2011, 68, 1903–1913. [Google Scholar] [CrossRef]

- Zhang, C.; Zhao, J.; Yu, T. Fast restoration of star image under dynamic conditions via lp regularized intensity prior. Aerosp. Sci. Technol. 2017, 61, 29–34. [Google Scholar] [CrossRef]

- Quan, W.; Zhang, W.N. Restoration of motion-blurred star image based on Wiener filter. In Proceedings of the 2011 Fourth International Conference on Intelligent Computation Technology and Automation, Shenzhen, Guangdong, China, 28–29 May 2011; pp. 691–694. [Google Scholar] [CrossRef]

- Zhang, W.; Quan, W.; Guo, L. Blurred star image processing for star sensors under dynamic conditions. Sensors 2012, 12, 6712–6726. [Google Scholar] [CrossRef] [PubMed]

- Ma, X.; Xia, X.; Zhang, Z. Star image processing of SINS/CNS integrated navigation system based on 1DWF under high dynamic conditions. In Proceedings of the Position, Location and Navigation Symposium IEEE, Savannah, GA, USA, 11–14 April 2016; pp. 514–518. [Google Scholar] [CrossRef]

- Yan, J.; Jiang, J.; Zhang, G. Dynamic imaging model and parameter optimization for a star tracker. Opt. Express 2016, 24, 5961–5983. [Google Scholar] [CrossRef] [PubMed]

- Liebe, C.C.; Gromov, K.; Meller, D.M. Toward a stellar gyroscope for spacecraft attitude determination. J. Guid. Control Dyn. 2004, 27, 91–99. [Google Scholar] [CrossRef]

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 2nd ed.; Prentice Hall: Englewood Cliffs, NJ, USA, 2002. [Google Scholar]

- Lee, D.T.; Schachter, B.J. Two algorithms for constructing a Delaunay triangulation. Int. J. Comput. Inf. Sci. 1980, 9, 219–242. [Google Scholar] [CrossRef]

- Brady, M. Smoothed local symmetries and their implementation. Int. J. Robot. Res. 1984, 3, 36–61. [Google Scholar] [CrossRef]

- Bonettini, S.; Zanella, R.; Zanni, L. A scaled gradient projection method for constrained image deblurring. Inverse Prob. 2008, 25, 015002. [Google Scholar] [CrossRef]

- Bonettini, S.; Prato, M. New convergence results for the scaled gradient projection method. Inverse Prob. 2015, 31, 095008. [Google Scholar] [CrossRef]

- Grippo, L.; Lampariello, F.; Lucidi, S. A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 1986, 23, 707–716. [Google Scholar] [CrossRef]

- Frassoldati, G.; Zanni, L.; Zanghirati, G. New adaptive stepsize selections in gradient methods. J. Ind. Manag. Opt. 2008, 4, 299–312. [Google Scholar] [CrossRef]

- Barzilai, J.; Borwein, J.M. Two-point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148. [Google Scholar] [CrossRef]

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: New York, NY, USA, 2017. [Google Scholar]

- Kim, S.J.; Koh, K.; Lustig, M. An interior-point method for large-scale l1-regularized least squares. IEEE J. Sel. Top. Sign Process 2007, 1, 606–617. [Google Scholar] [CrossRef]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).