A Novel Method for Estimating Free Space 3D Point-of-Regard Using Pupillary Reflex and Line-of-Sight Convergence Points

Abstract

1. Introduction

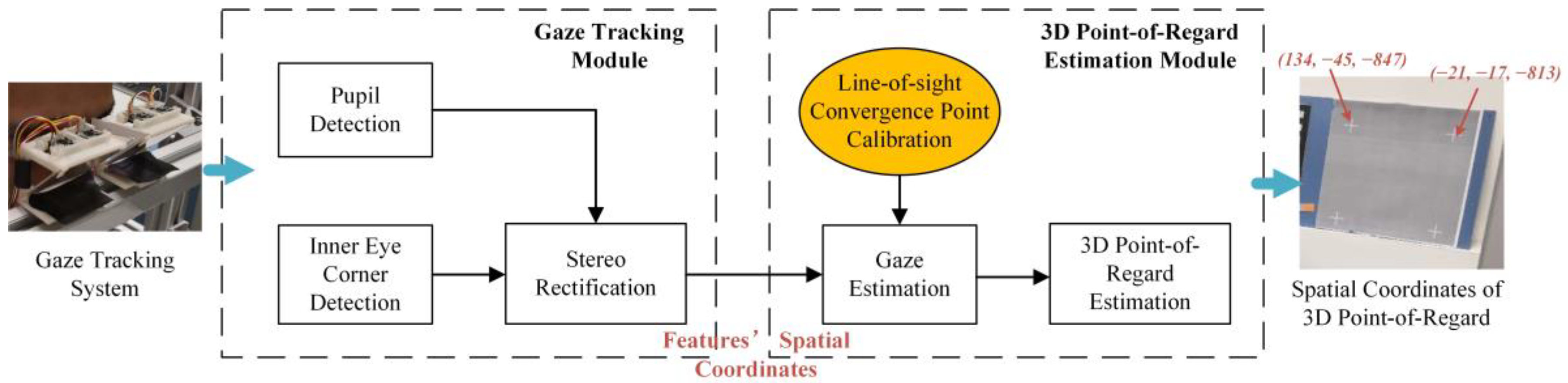

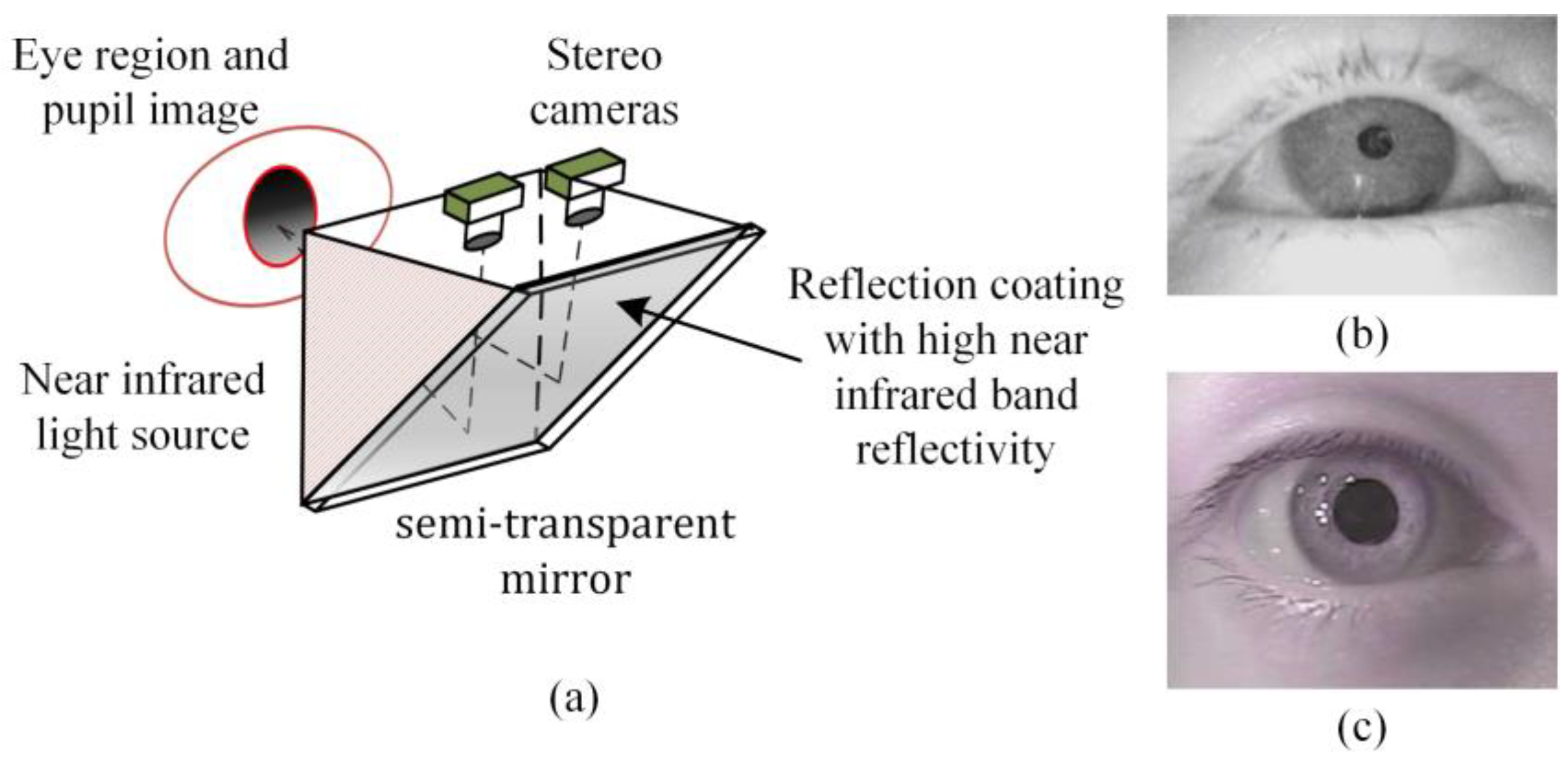

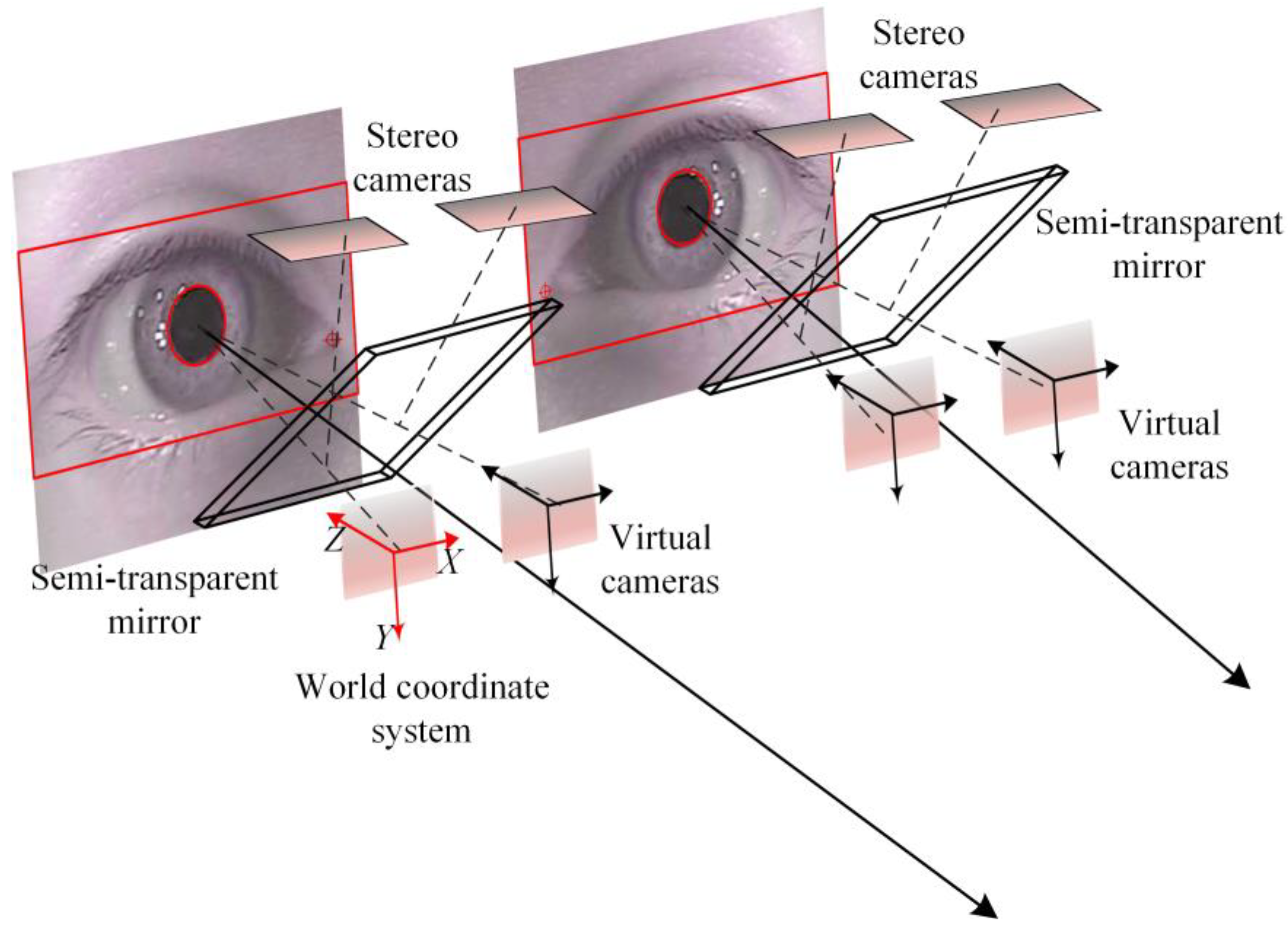

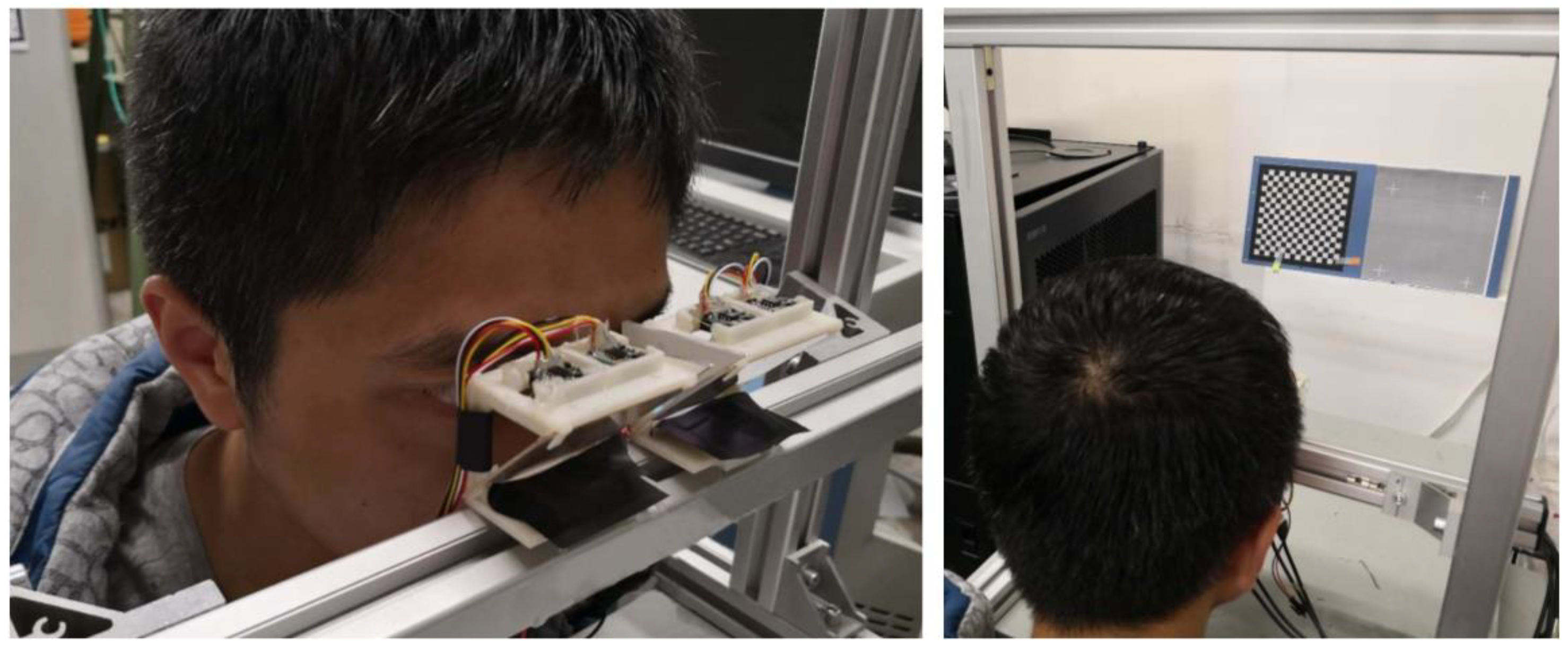

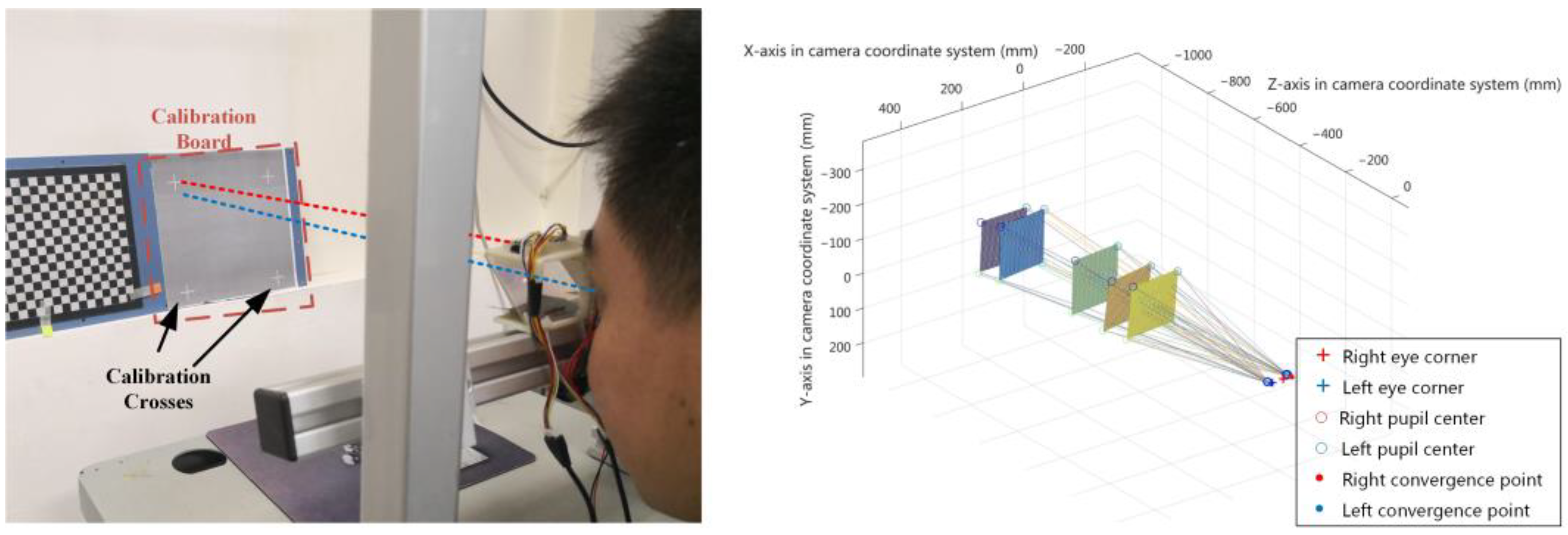

- A novel stereo-camera-based gaze tracking system is proposed to accurately track a person’s eyes. The system is equipped with a pair of novel light sources and semi-transparent mirrors, which are designed to capture clearer eye images without disturbing the user’s natural view. This paper also describes the key parameters of the stereo cameras and their stereo vision model. The design could potentially be adapted into a head-mounted gaze tracking device.

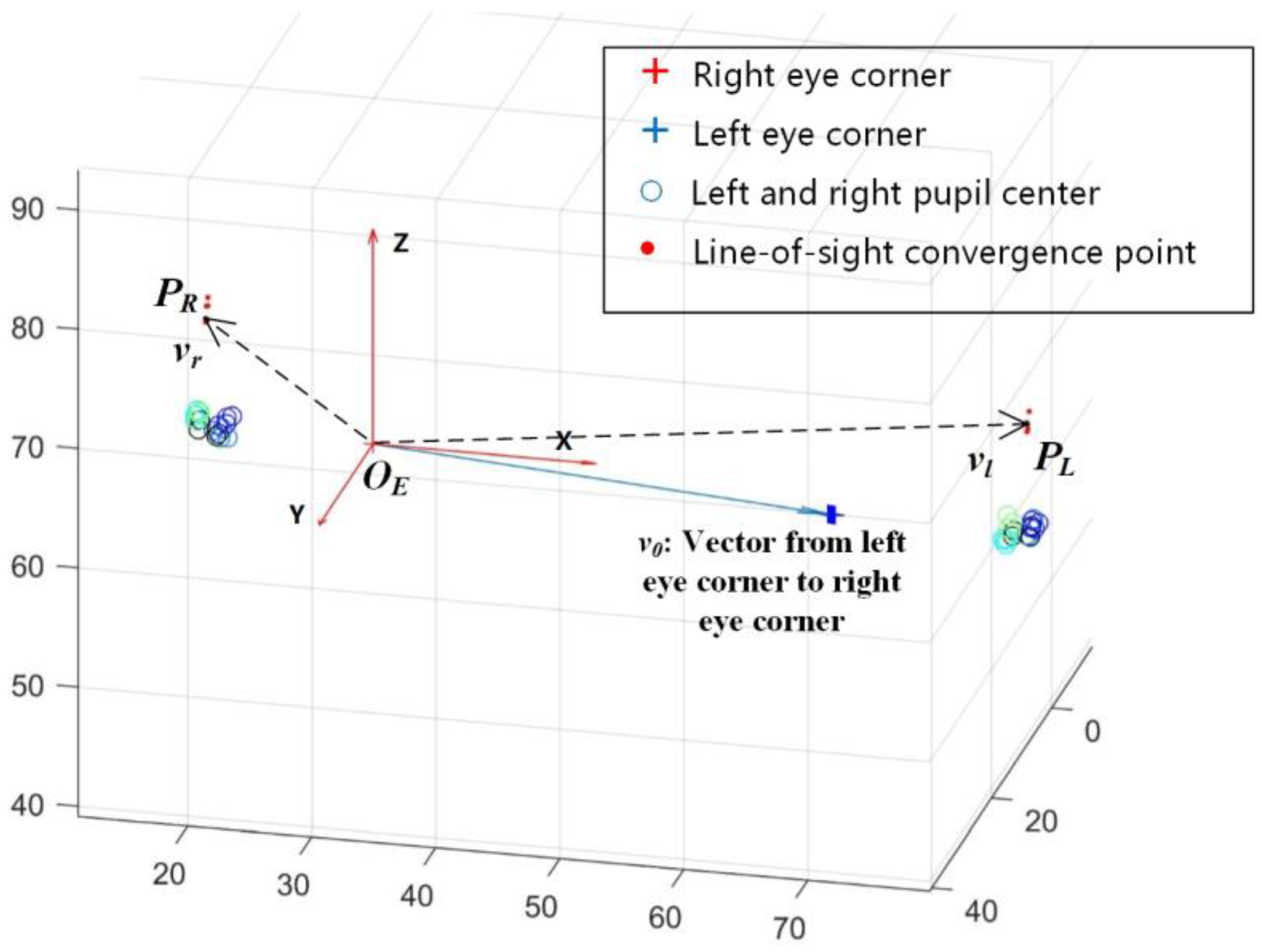
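The stereo vision model itself is not reproduced in this section. The sketch below shows how a calibrated stereo pair can recover the spatial coordinates of an eye feature, such as the inner eye corner or a pupil-edge point. It uses standard linear (DLT) triangulation and assumes that each camera is described by a known 3×4 projection matrix and that the feature has already been matched in both images. The function name `triangulate_dlt` and all numbers are illustrative; this is not the authors' implementation.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen by two calibrated cameras.

    P1, P2: 3x4 projection matrices (assumed known from stereo calibration).
    x1, x2: (u, v) pixel coordinates of the same eye feature in each image.
    Returns the 3D point in the frame the projection matrices are expressed in.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view contributes two linear equations in the homogeneous point X.
    A = np.vstack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # The least-squares solution of A X = 0 (with |X| = 1) is the right
    # singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    # Convert from homogeneous to Euclidean coordinates.
    return X[:3] / X[3]
```

With noise-free projections the point is recovered exactly. With real feature detections, the SVD gives an algebraic least-squares estimate, which is often refined afterwards by minimizing the reprojection error.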

- This paper presents a simplified geometric model. Its key feature is that light entering the eye converges at a line-of-sight convergence point inside the eye. The model uses two eye features: the pupil edge and the inner eye corner. Because the stereo cameras recover the spatial coordinates of these features, those coordinates can be used directly to determine the spatial coordinates of the line-of-sight convergence point. The model requires only a relatively simple calibration process and allows the spatial intersection point of the two (binocular) lines of sight to be calculated.

- This paper also proposes a calibration process. A dedicated calibration target is placed at several different positions, and the user looks at the four calibration points on the target. The calibration data are then processed with a search approach to obtain the line-of-sight convergence points, and the relationship between pupil size and the position of the line-of-sight convergence point is solved. This calibration process could serve as a general framework for calibrating free-space gaze tracking systems.
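Two measured lines of sight almost never intersect exactly in 3D. A common way to define their spatial intersection is the midpoint of the shortest segment joining the two lines. This is offered as a hedged sketch, not the paper's formulation. Each line of sight is assumed to be given by a point on it, for instance a line-of-sight convergence point, and a direction vector. `binocular_por` is a hypothetical name.

```python
import numpy as np

def binocular_por(p_left, d_left, p_right, d_right, eps=1e-12):
    """Midpoint of the shortest segment between two lines of sight.

    Each line is p + t * d. Returns (point_of_regard, gap), where gap is the
    length of the shortest segment (0 if the lines truly intersect).
    """
    p1, d1 = np.asarray(p_left, float), np.asarray(d_left, float)
    p2, d2 = np.asarray(p_right, float), np.asarray(d_right, float)
    w0 = p1 - p2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d1w, d2w = d1 @ w0, d2 @ w0
    denom = a * c - b * b
    if denom < eps * a * c:
        raise ValueError("lines of sight are (nearly) parallel")
    # Parameters of the closest points, from setting the derivatives of
    # |w0 + t1*d1 - t2*d2|^2 with respect to t1 and t2 to zero.
    t1 = (b * d2w - c * d1w) / denom
    t2 = (a * d2w - b * d1w) / denom
    q1 = p1 + t1 * d1
    q2 = p2 + t2 * d2
    return (q1 + q2) / 2, float(np.linalg.norm(q1 - q2))
```

The returned gap is a useful sanity check. A gap that is large compared with the expected PoR error suggests that one of the two lines of sight is unreliable.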

2. Related Works

3. Methods

3.1. Customized Gaze Estimation System

- The user should have a large field of view;

- The cameras should avoid or minimize the influence of ambient illumination;

- Head-mounted devices should be as light as possible.

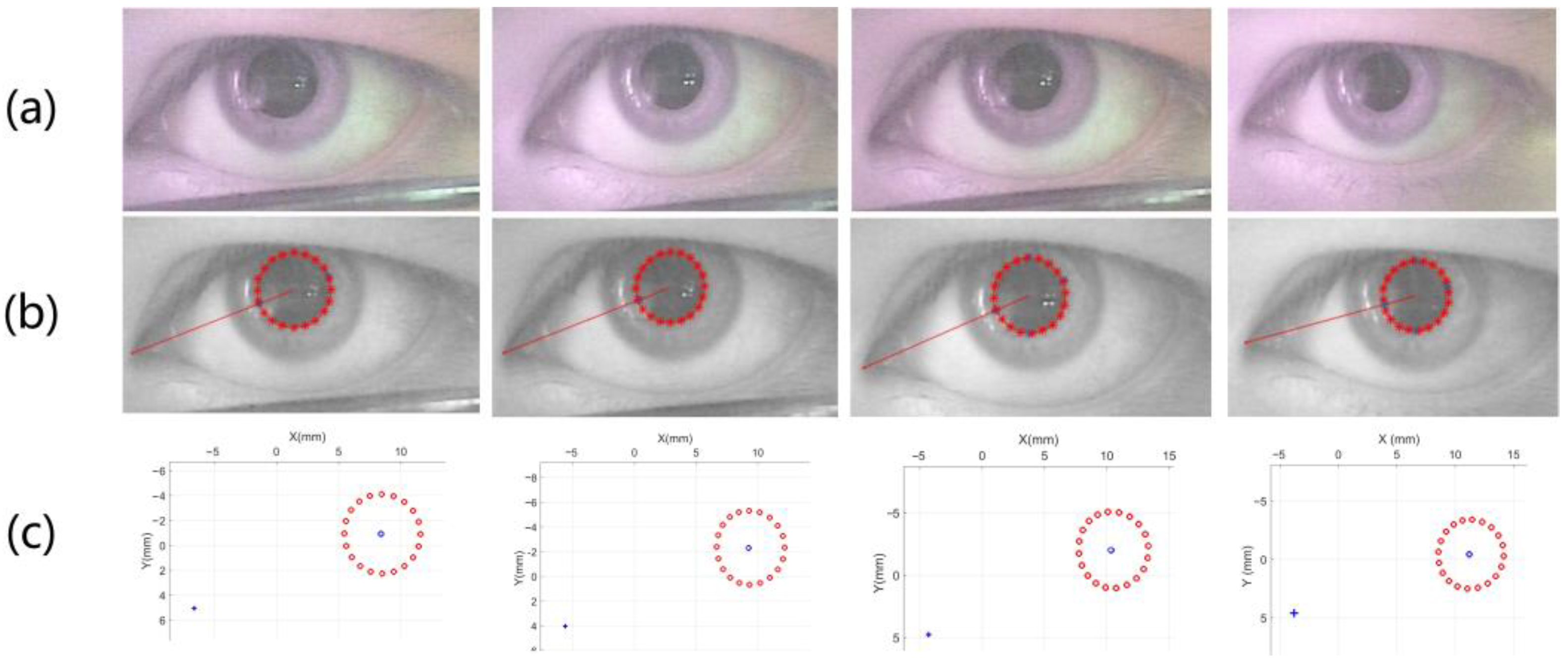

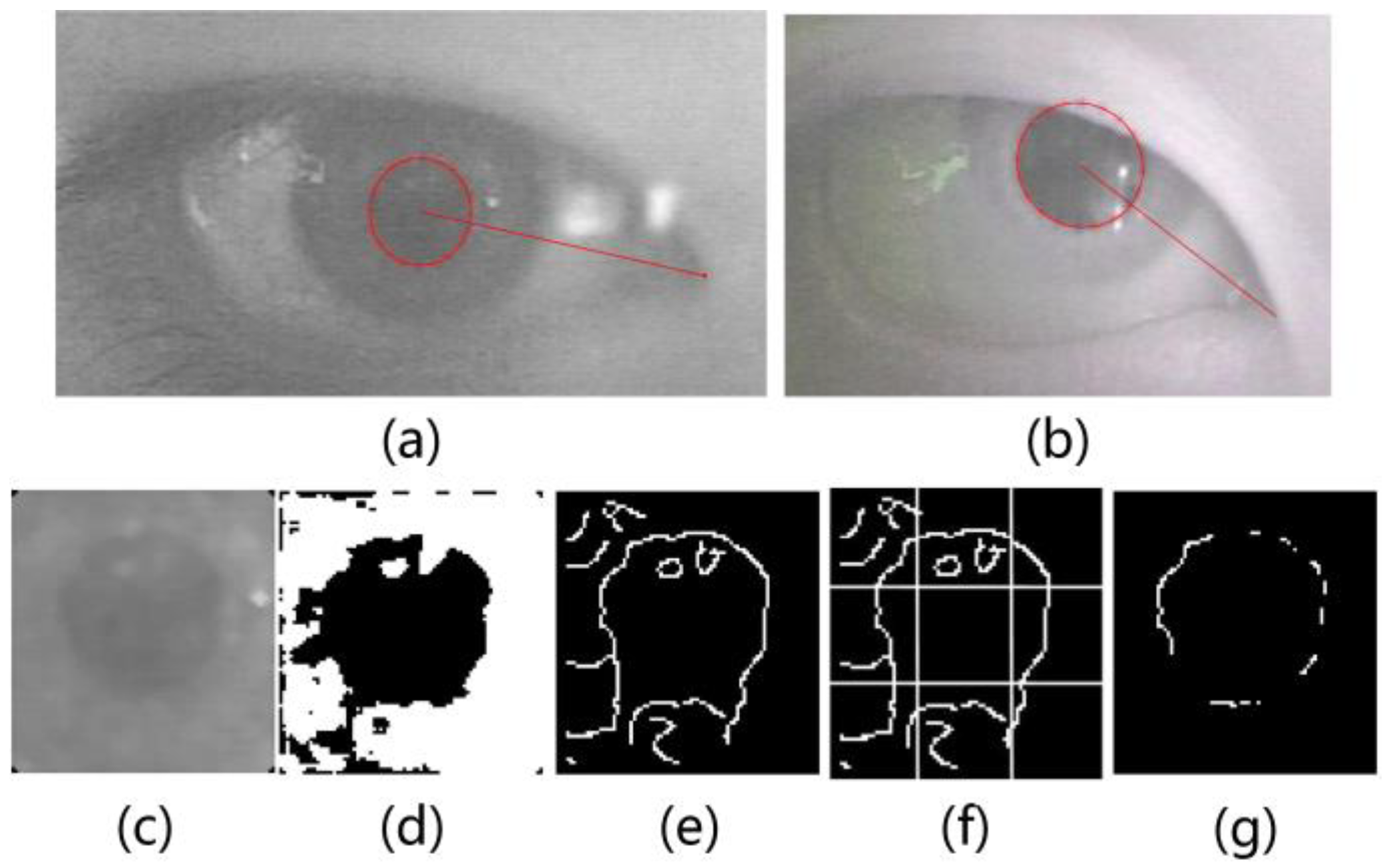

3.2. Eye Features for Gaze Estimation

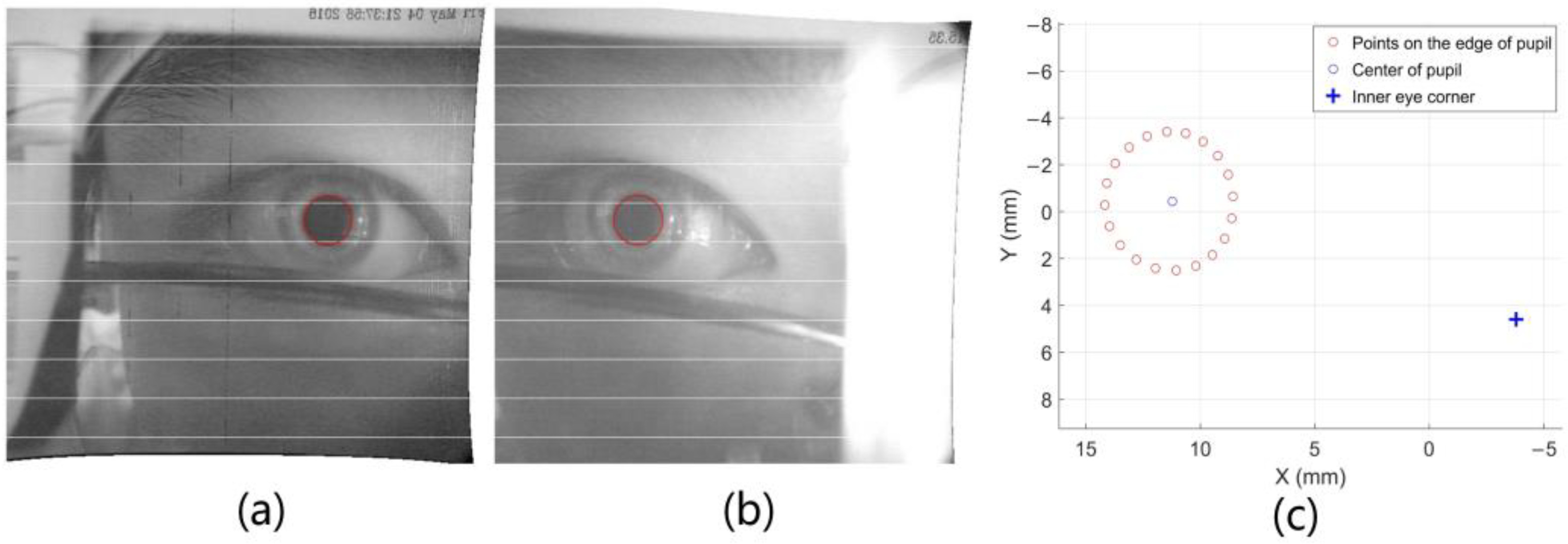

3.3. Estimation of the 3D Point-of-Regard and the System Calibration

3.3.1. Calibration Process

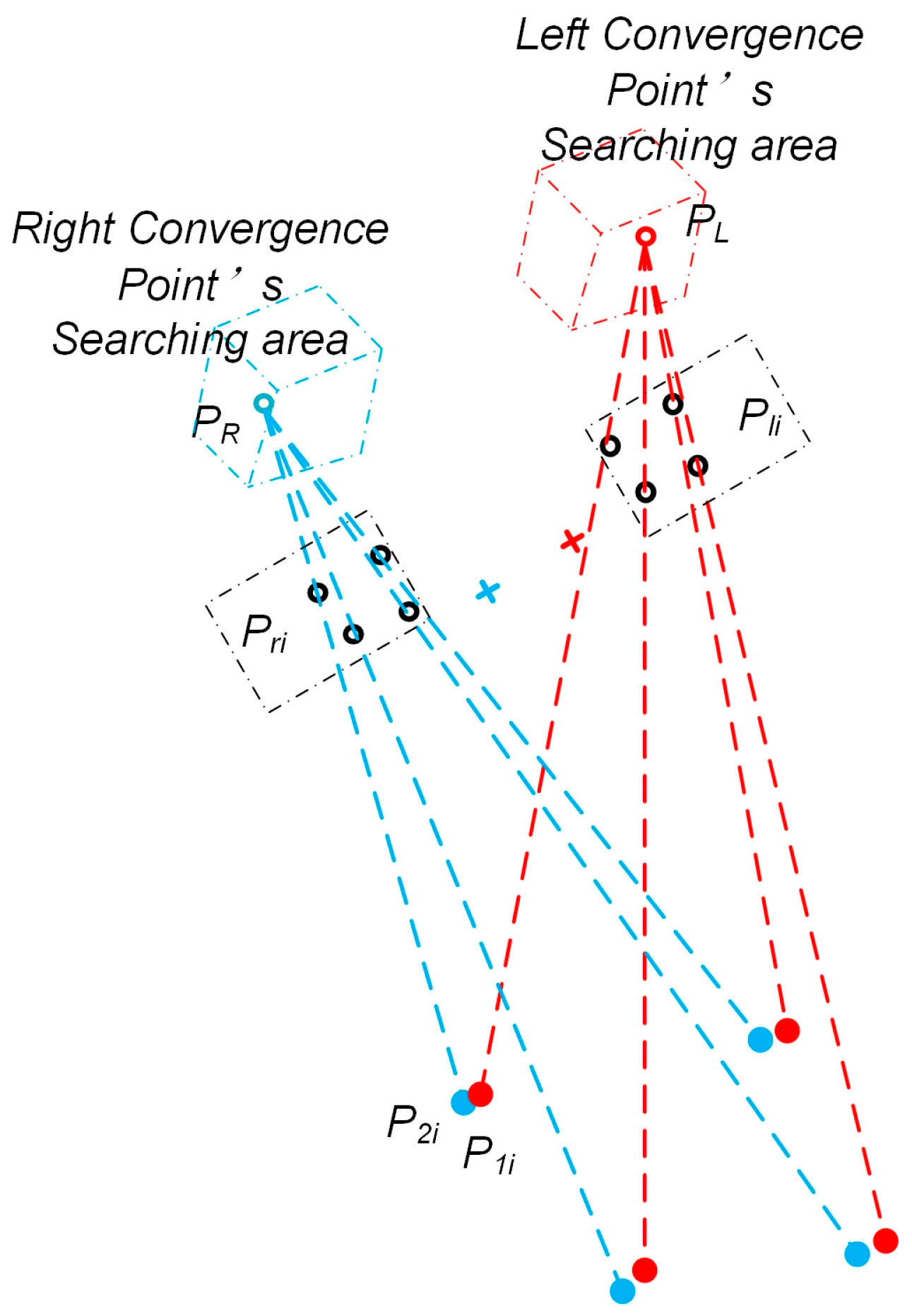

1. Set the initial values of PL and PR, the initial search areas Ainit1 and Ainit2, and the search step length st.

2. Calculate the judgment value of each search point according to Equation (14), and take the best PL and PR values in the current search areas as the centers of the next search.

3. Redefine the current search areas Acur1 and Acur2 and the search step length st: the boundary width of Acur1 and Acur2 and the value of st are each half of those in the previous search.

4. Repeat step (2) to obtain the best PL and PR values in the current search areas, and use them as the centers of the next search areas.

5. Repeat step (3). If st < 0.0005 mm, the calculation is finished and the current PL and PR values are the final results; otherwise, return to step (2).
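The procedure above is a coarse-to-fine grid search. The sketch below illustrates it under several assumptions:

- `judgment` is a hypothetical stand-in for Equation (14), which is not reproduced here, and a smaller score is assumed to be better.
- The search area is modelled as a cube of half-width `half_width` around the current center.
- The stopping rule is checked after every halving. This slightly simplifies the alternation between the "repeat" steps.

```python
import itertools
import numpy as np

def coarse_to_fine_search(judgment, center, half_width, step, tol=0.0005):
    """Grid search whose area and step length are halved after every pass.

    judgment:   hypothetical stand-in for Equation (14); maps a parameter
                vector to a score, assumed here to be better when smaller.
    center:     initial parameter vector (e.g. PL, or PL and PR stacked).
    half_width: half the boundary width of the initial search area (mm).
    step:       initial search step length st (mm).
    tol:        stop once st falls below this value (mm).
    """
    center = np.asarray(center, dtype=float)
    while True:
        # Evaluate every grid point in the current search area and keep
        # the best one as the center of the next search.
        axis = np.arange(-half_width, half_width + step / 2, step)
        best_score, best_point = np.inf, center
        for offset in itertools.product(axis, repeat=center.size):
            point = center + np.asarray(offset)
            score = judgment(point)
            if score < best_score:
                best_score, best_point = score, point
        center = best_point
        # Halve the search area and the step length, then test the stop rule.
        half_width /= 2
        step /= 2
        if step < tol:
            return center
```

If PL and PR are searched jointly, the parameter vector stacks both points (six values). Each pass then evaluates (2·half_width/step + 1)^6 points. Because the area and the step are halved together, this count stays constant from pass to pass.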

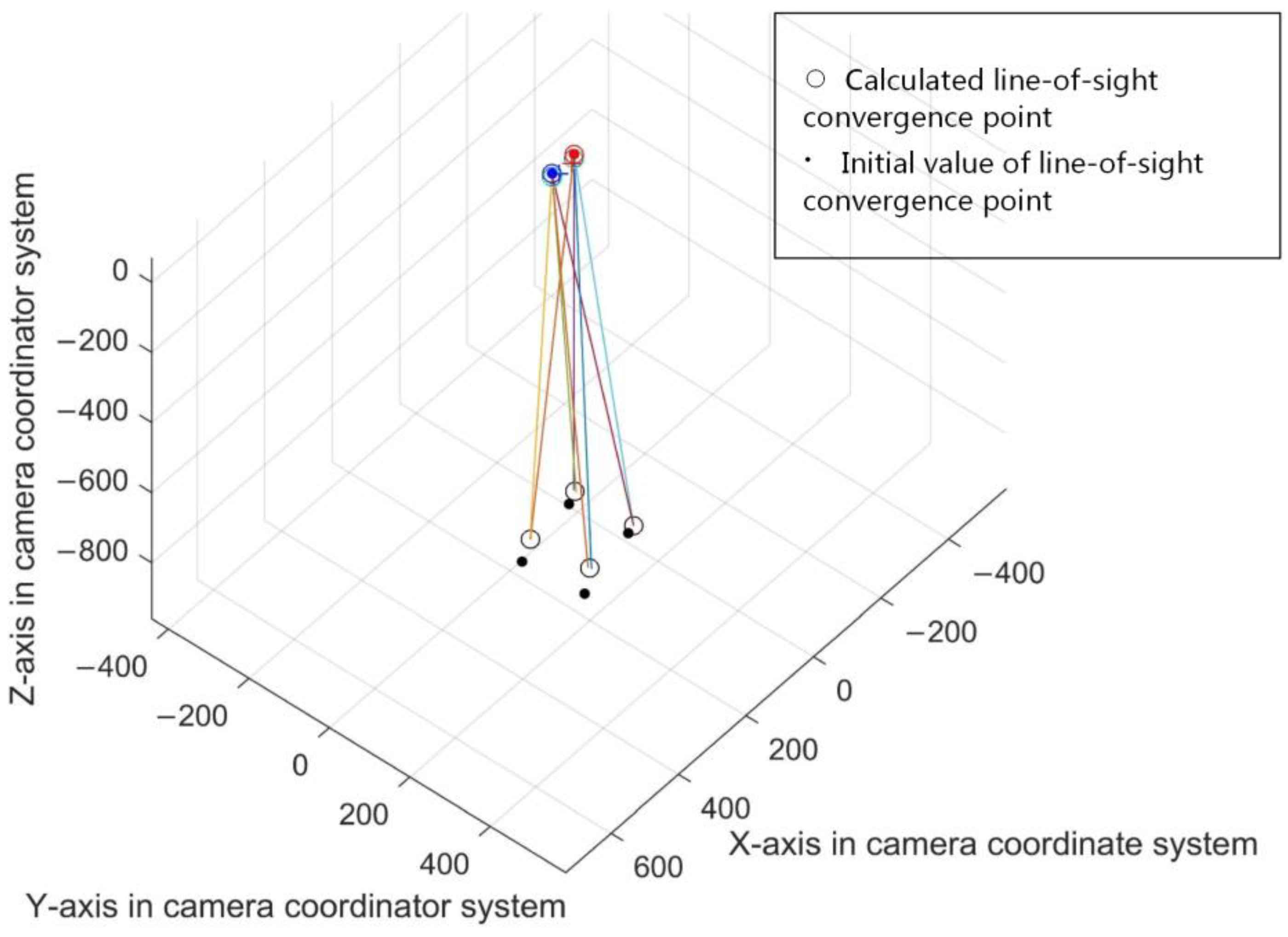
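Besides PL and PR, the calibration outlined in the Introduction also solves the relationship between pupil size and the position of the line-of-sight convergence point. The form of that relationship is not given in this section. The sketch below is purely illustrative and assumes that each convergence-point coordinate depends linearly on pupil diameter. Under that assumption, the fit can be computed by ordinary least squares from one (pupil diameter, convergence point) pair per calibration-target position. The linear model and the function names are assumptions.

```python
import numpy as np

def fit_convergence_vs_pupil(pupil_diameters, convergence_points):
    """Least-squares fit of the assumed model P(d) = P0 + d * k.

    pupil_diameters:    shape (n,), one pupil size per calibration position.
    convergence_points: shape (n, 3), convergence point found at each position.
    Returns (P0, k), each of shape (3,).
    """
    d = np.asarray(pupil_diameters, dtype=float)
    P = np.asarray(convergence_points, dtype=float)
    # Design matrix [1, d]; lstsq solves for one coefficient column per coordinate.
    A = np.column_stack([np.ones_like(d), d])
    coeffs, *_ = np.linalg.lstsq(A, P, rcond=None)
    return coeffs[0], coeffs[1]

def convergence_point_at(pupil_diameter, P0, k):
    """Evaluate the fitted (assumed linear) model for a new pupil diameter."""
    return P0 + pupil_diameter * k
```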

3.3.2. Coordinate Alignment and Line-of-Sight Convergence Point Fitting Method

4. Experiments

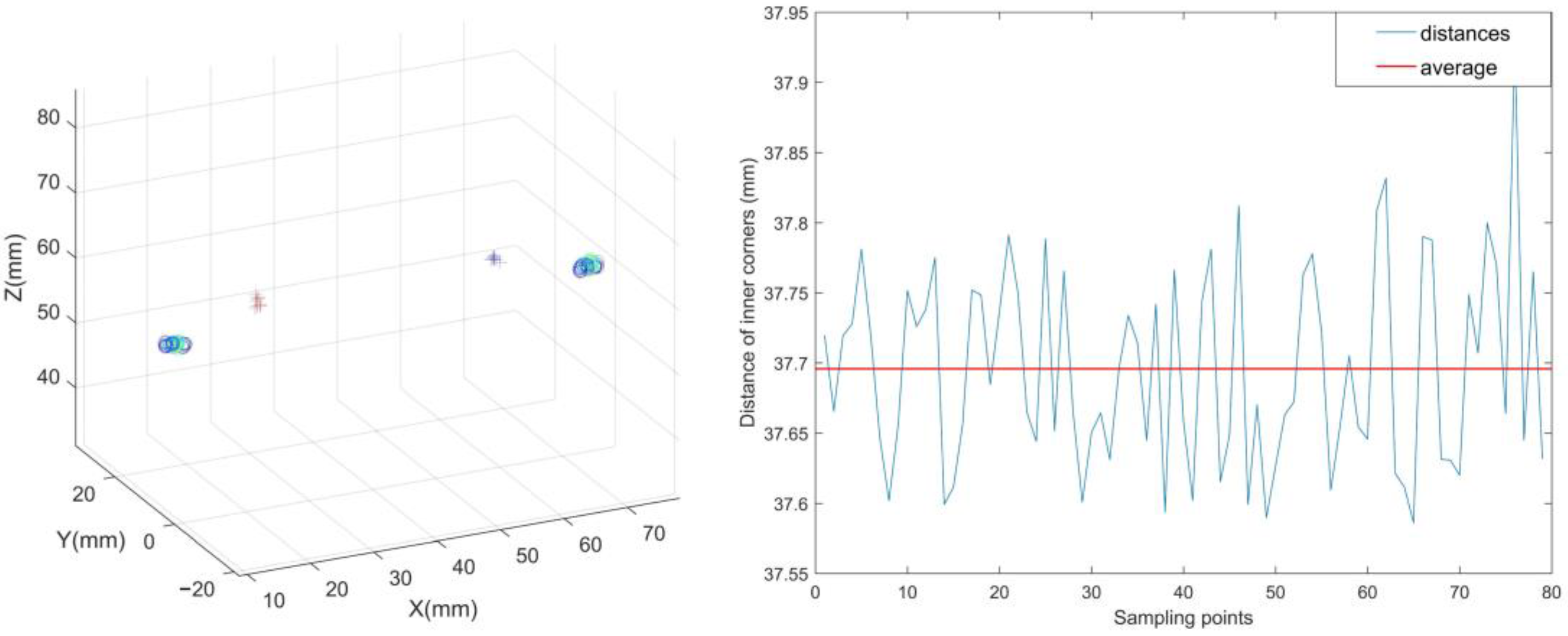

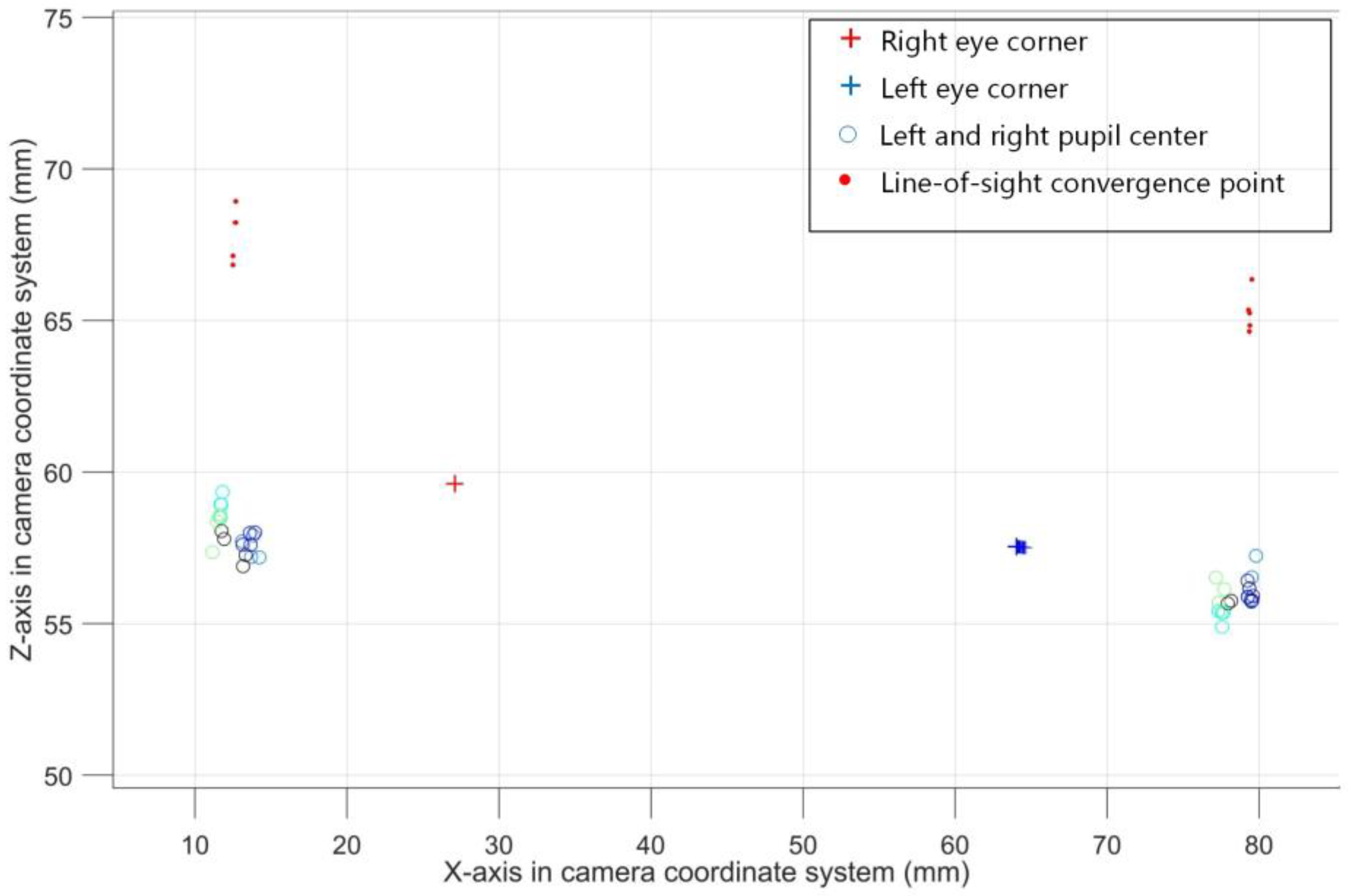

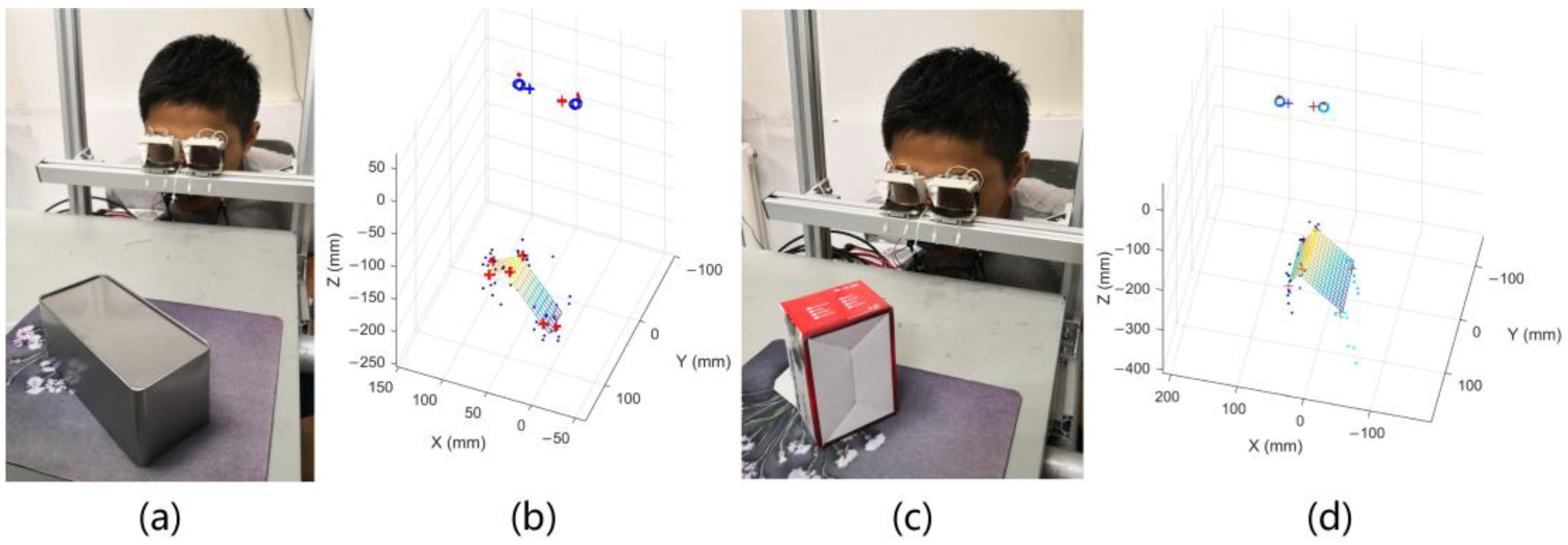

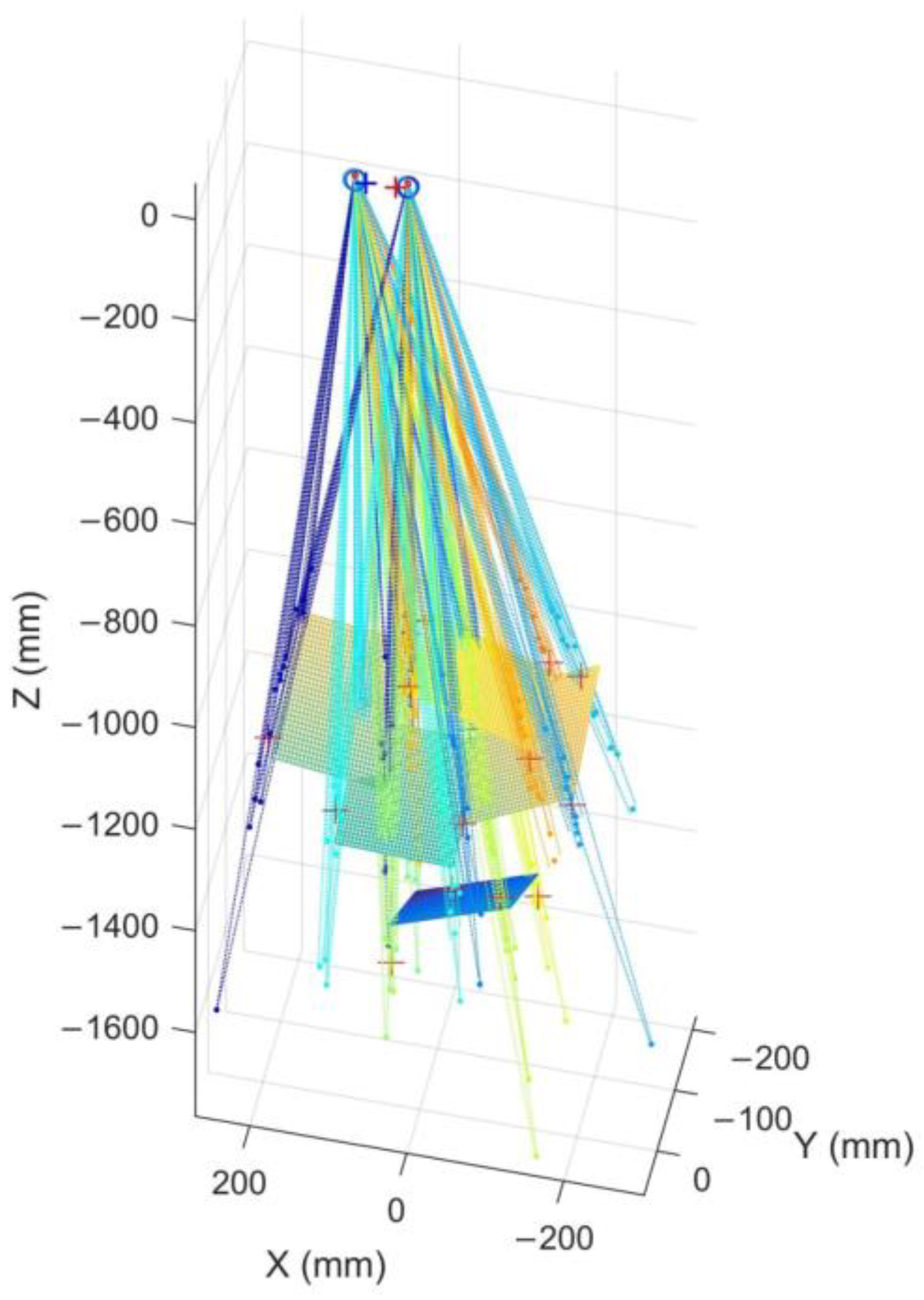

4.1. Intuitive 3D Point-of-Regard Estimation Experiments

4.2. Influence of Different Testers and Illumination Conditions

- The illumination conditions in this experiment were the same as those during calibration. Nine testers were invited to look at the calibration board in turn. Each tester took part in one group of experiments, and each group consisted of eight repeated trials with an interval of 30 s between trials. The average Cartesian error of each tester’s 3D PoR, computed from the distribution of the solved 3D PoRs in each group, is listed in Table 1.
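Table 1 reports each error as a value ± a spread. The sketch below shows how such statistics can be computed from repeated trials. It assumes that the per-axis errors are absolute coordinate differences from the true gazed point, that "Overall" is the Euclidean distance, and that ± is the population standard deviation (NumPy's default `ddof=0`). The paper's exact definitions are not stated in this section, and `por_error_stats` is a hypothetical name.

```python
import numpy as np

def por_error_stats(estimated, ground_truth):
    """Mean and standard deviation of per-axis and Euclidean 3D PoR errors.

    estimated:    shape (n, 3), solved 3D PoRs from repeated trials.
    ground_truth: shape (n, 3) or (3,), true positions of the gazed points.
    Returns a dict mapping 'X', 'Y', 'Z', 'Overall' to (mean, std).
    """
    diff = np.asarray(estimated, float) - np.asarray(ground_truth, float)
    per_axis = np.abs(diff)                  # assumed per-axis error definition
    overall = np.linalg.norm(diff, axis=1)   # assumed Euclidean "Overall" error
    stats = {name: (per_axis[:, i].mean(), per_axis[:, i].std())
             for i, name in enumerate("XYZ")}
    stats["Overall"] = (overall.mean(), overall.std())
    return stats
```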

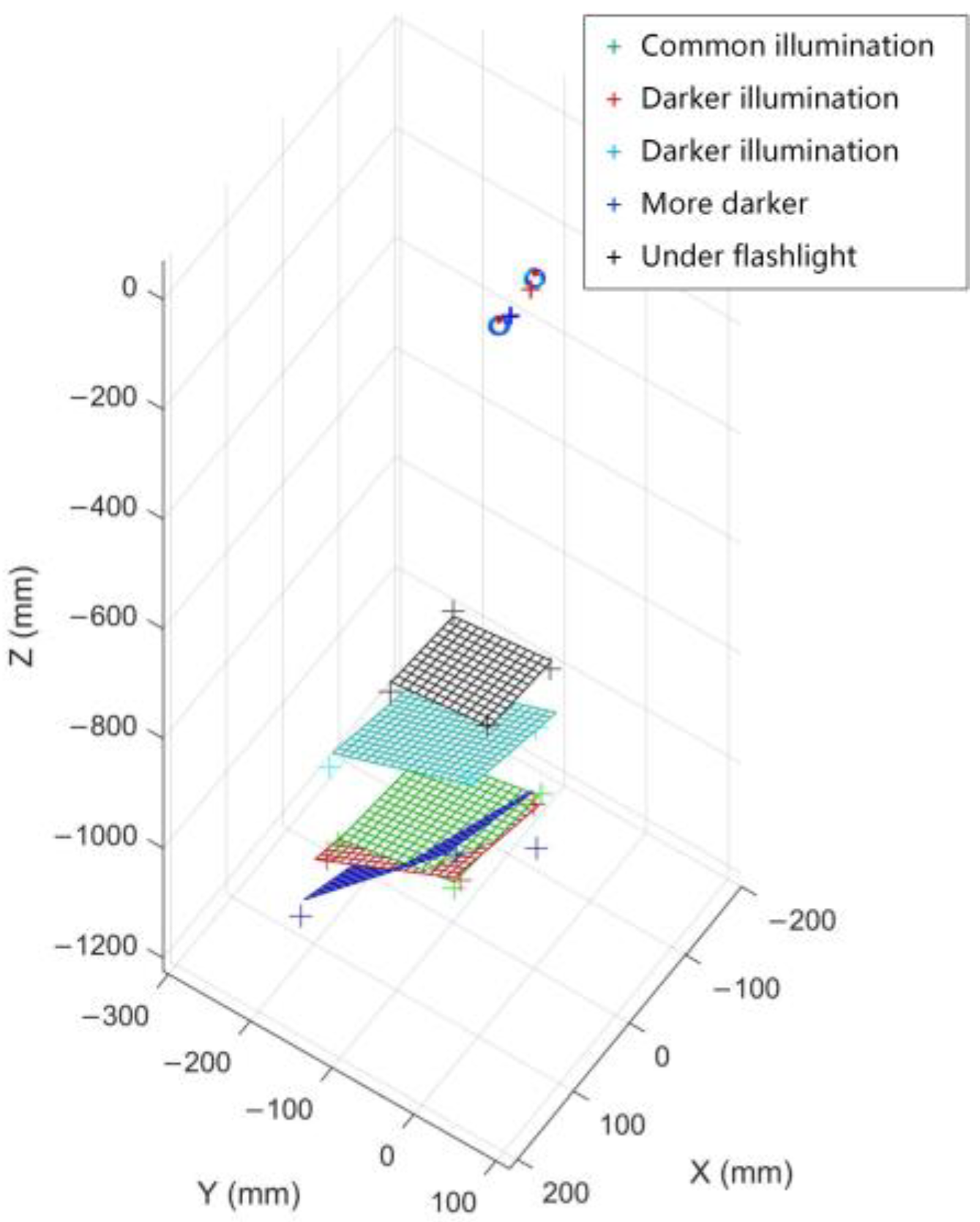

- A tester looked at the calibration board under different lighting conditions, and the distribution of the 3D PoR was observed. During the experiment, the indoor illumination was changed from normal to dark in four steps. Once the room was dark, a flashlight was used to illuminate the calibration board and one more trial was run. The results are shown in Figure 16.

4.3. Error of the Method at Different Distances

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Jacob, R.J.K. Hot topics-eye-gaze computer interfaces: What you look at is what you get. Computer 1993, 26, 65–66.

- Talmi, K.; Liu, J. Eye and gaze tracking for visually controlled interactive stereoscopic displays. Signal Process. Image Commun. 1999, 14, 799–810.

- D’Orazio, T.; Leo, M.; Distante, A. Eye detection in face images for a driver vigilance system. In Proceedings of the IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004; pp. 95–98.

- Sesin, A.; Adjouadi, M.; Cabrerizo, M.; Ayala, M.; Barreto, A. Adaptive eye-gaze tracking using neural-network-based user profiles to assist people with motor disability. J. Rehabil. Res. Dev. 2008, 45, 801–817.

- Ji, Q.; Wechsler, H.; Duchowski, A.; Flickner, M. Special issue: Eye detection and tracking. Comput. Vis. Image Underst. 2005, 98, 1–3.

- Hansen, D.W.; Ji, Q. In the Eye of the Beholder: A Survey of Models for Eyes and Gaze. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 478–500.

- Beymer, D.; Flickner, M. Eye gaze tracking using an active stereo head. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003.

- Cho, D.C.; Yap, W.S.; Lee, H.; Lee, I.; Kim, W.Y. Long range eye gaze tracking system for a large screen. IEEE Trans. Consum. Electron. 2012, 58, 1119–1128.

- Cho, C.W.; Lee, J.W.; Shin, K.Y.; Lee, E.C.; Park, K.R.; Lee, H.; Cha, J. Gaze Detection by Wearable Eye-Tracking and NIR LED-Based Head-Tracking Device Based on SVR. ETRI J. 2012, 34, 542–552.

- Villanueva, A.; Cabeza, R. A novel gaze estimation system with one calibration point. IEEE Trans. Syst. Man Cybern. Part B 2008, 38, 1123–1138.

- Model, D.; Eizenman, M. An Automatic Personal Calibration Procedure for Advanced Gaze Estimation Systems. IEEE Trans. Biomed. Eng. 2010, 57, 1031–1039.

- Chen, J.; Ji, Q. Probabilistic gaze estimation without active personal calibration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 609–616.

- Chi, J.N.; Xing, Y.-Y.; Liu, L.-N.; Gou, W.-W.; Zhang, G.-S. Calibration method for 3D gaze tracking systems. Appl. Opt. 2017, 56, 1536–1541.

- Baluja, S.; Pomerleau, D. Non-Intrusive Gaze Tracking Using Artificial Neural Networks; Carnegie Mellon University: Pittsburgh, PA, USA, 1994.

- Ishikawa, T. Passive Driver Gaze Tracking with Active Appearance Models; Robotics Institute: Pittsburgh, PA, USA, 2004.

- Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. Appearance-based gaze estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4511–4520.

- Sugano, Y.; Matsushita, Y.; Sato, Y. Learning-by-synthesis for appearance-based 3D gaze estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1821–1828.

- Wood, E.; Baltrušaitis, T.; Morency, L.P.; Robinson, P.; Bulling, A. Learning an appearance-based gaze estimator from one million synthesised images. In Proceedings of the Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; pp. 131–138.

- Sugano, Y.; Matsushita, Y.; Sato, Y.; Koike, H. An Incremental Learning Method for Unconstrained Gaze Estimation. In Proceedings of the 10th European Conference on Computer Vision: Part III, Marseille, France, 12–18 October 2008; pp. 656–667.

- Lu, F.; Sugano, Y.; Okabe, T.; Sato, Y. Adaptive Linear Regression for Appearance-Based Gaze Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2033–2046.

- George, A.; Routray, A. Fast and accurate algorithm for eye localisation for gaze tracking in low-resolution images. IET Comput. Vis. 2016, 10, 660–669.

- Wang, C.; Shi, F.; Xia, S.; Chai, J. Realtime 3D eye gaze animation using a single RGB camera. ACM Trans. Graph. 2016, 35, 118.

- Takemura, K.; Takahashi, K.; Takamatsu, J.; Ogasawara, T. Estimating 3-D Point-of-Regard in a Real Environment Using a Head-Mounted Eye-Tracking System. IEEE Trans. Hum.-Mach. Syst. 2014, 44, 531–536.

- Su, D.; Li, Y.F. Toward flexible calibration of head-mounted gaze trackers with parallax error compensation. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Macau, China, 5–8 December 2017; pp. 491–496.

- Li, B.; Fu, H.; Wen, D.; Lo, W. Etracker: A Mobile Gaze-Tracking System with Near-Eye Display Based on a Combined Gaze-Tracking Algorithm. Sensors 2018, 18, 1626.

- Li, S.; Liu, Z.; Sun, M.T. Real time gaze estimation with a consumer depth camera. Inf. Sci. 2015, 320, 346–360.

- Li, J.; Li, S. Gaze Estimation from Color Image Based on the Eye Model with Known Head Pose. IEEE Trans. Hum.-Mach. Syst. 2016, 46, 414–423.

- Cazzato, D.; Leo, M.; Distante, C. An Investigation on the Feasibility of Uncalibrated and Unconstrained Gaze Tracking for Human Assistive Applications by Using Head Pose Estimation. Sensors 2014, 14, 8363–8379.

- Li, S.; Zhang, X.; Webb, J. 3D-Gaze-based Robotic Grasping through Mimicking Human Visuomotor Function for People with Motion Impairments. IEEE Trans. Biomed. Eng. 2017, 64, 2824–2835.

- Pirri, F.; Pizzoli, M.; Rudi, A. A general method for the point of regard estimation in 3D space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 921–928.

- Ferhat, O.; Vilariño, F. Low Cost Eye Tracking: The Current Panorama. Comput. Intell. Neurosci. 2016, 2016, 8680541.

- Loewenfeld, I.E.; Lowenstein, O. The Pupil: Anatomy, Physiology, and Clinical Applications; Iowa State University Press: Ames, IA, USA; Wayne State University Press: Detroit, MI, USA, 1993.

- Schaeffel, F. Kappa and Hirschberg ratio measured with an automated video gaze tracker. Optom. Vis. Sci. 2002, 79, 329–334.

- Fuhl, W.; Tonsen, M.; Bulling, A.; Kasneci, E. Pupil detection for head-mounted eye tracking in the wild: An evaluation of the state of the art. Mach. Vis. Appl. 2016, 27, 1275–1288.

- Zhang, X.; Li, B.; Yang, D. A novel Harris multi-scale corner detection algorithm. J. Electron. Inf. Technol. 2007, 29, 1735–1738.

- Toates, F.M. Accommodation function of the human eye. Physiol. Rev. 1972, 52, 828–863.

- Van Nes, F.L.; Koenderink, J.J.; Nas, H.; Bouman, M.A. Spatiotemporal modulation transfer in the human eye. J. Opt. Soc. Am. 1967, 57, 1082–1088.

Table 1. Average Cartesian error of each tester’s 3D PoR (cm).

| Error (cm) | Tester 1 | Tester 2 | Tester 3 | Tester 4 | Tester 5 | Tester 6 | Tester 7 | Tester 8 | Tester 9 |
|---|---|---|---|---|---|---|---|---|---|
| Error on X | 0.2 ± 0.2 | 0.4 ± 0.2 | 0.4 ± 0.4 | 0.4 ± 0.3 | 0.4 ± 0.3 | 0.3 ± 0.2 | 0.4 ± 0.3 | 0.4 ± 0.2 | 0.5 ± 0.4 |
| Error on Y | 0.4 ± 0.3 | 0.6 ± 0.3 | 0.6 ± 0.5 | 0.5 ± 0.4 | 0.6 ± 0.5 | 0.5 ± 0.4 | 0.6 ± 0.5 | 0.5 ± 0.5 | 0.5 ± 0.5 |
| Error on Z | 3.8 ± 2.6 | 4.8 ± 2.9 | 5.1 ± 3.1 | 4.3 ± 2.8 | 5.1 ± 3.4 | 4.1 ± 3.0 | 4.8 ± 3.1 | 4.6 ± 3.1 | 5.3 ± 4.0 |
| Overall | 3.8 ± 2.6 | 4.9 ± 2.9 | 5.2 ± 3.1 | 4.3 ± 2.8 | 5.1 ± 3.4 | 4.2 ± 2.9 | 4.9 ± 3.1 | 4.7 ± 3.1 | 5.3 ± 3.9 |

| Error (cm) | 0.8 m | 1.5 m | 2 m | 2.5 m | 3 m | 4 m | 6 m |
|---|---|---|---|---|---|---|---|
| Error on X | 0.4 ± 0.5 | 0.6 ± 0.5 | 0.7 ± 0.6 | 1.1 ± 0.6 | 0.9 ± 0.5 | 1.3 ± 0.6 | 2.3 ± 0.8 |
| Error on Y | 0.5 ± 0.5 | 0.8 ± 0.7 | 0.6 ± 0.4 | 0.8 ± 0.6 | 0.8 ± 0.5 | 1.3 ± 1.1 | 3.0 ± 0.4 |
| Error on Z | 5.2 ± 3.1 | 6.1 ± 3.8 | 8.3 ± 6.0 | 9.6 ± 7.1 | 13.8 ± 7.8 | 13.3 ± 10.5 | 23.7 ± 12.5 |
| Overall | 5.3 ± 3.3 | 6.3 ± 4.3 | 8.4 ± 5.9 | 9.9 ± 7.0 | 13.9 ± 7.7 | 14.5 ± 10.9 | 24.3 ± 12.5 |
| Overall in [30] | 2.1 ± 0.3 | / | 3.6 ± 0.3 | / | 5.7 ± 0.5 | / | 16.9 ± 1.2 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wan, Z.; Wang, X.; Zhou, K.; Chen, X.; Wang, X. A Novel Method for Estimating Free Space 3D Point-of-Regard Using Pupillary Reflex and Line-of-Sight Convergence Points. Sensors 2018, 18, 2292. https://doi.org/10.3390/s18072292