Manipulation of Unknown Objects to Improve the Grasp Quality Using Tactile Information

Abstract

1. Introduction

- From the hand point of view, the optimization of the hand configuration, i.e., searching for a particular hand configuration satisfying some specific constraints that can be arbitrarily defined.

- From the grasp point of view (hand-object relation), the optimization of the grasp quality, i.e., searching for a grasp that can resist external force perturbations on the object.

- From the object point of view, the optimization of the object configuration, i.e., searching for an appropriate object position and orientation that satisfy the requirements of a given task.

2. Proposed Approach

2.1. Problem Statement, Approach Overview and Assumptions

- The robotic hand has tactile sensors to obtain information about the contacts with the manipulated object, and no other feedback source, such as visual information, is available.

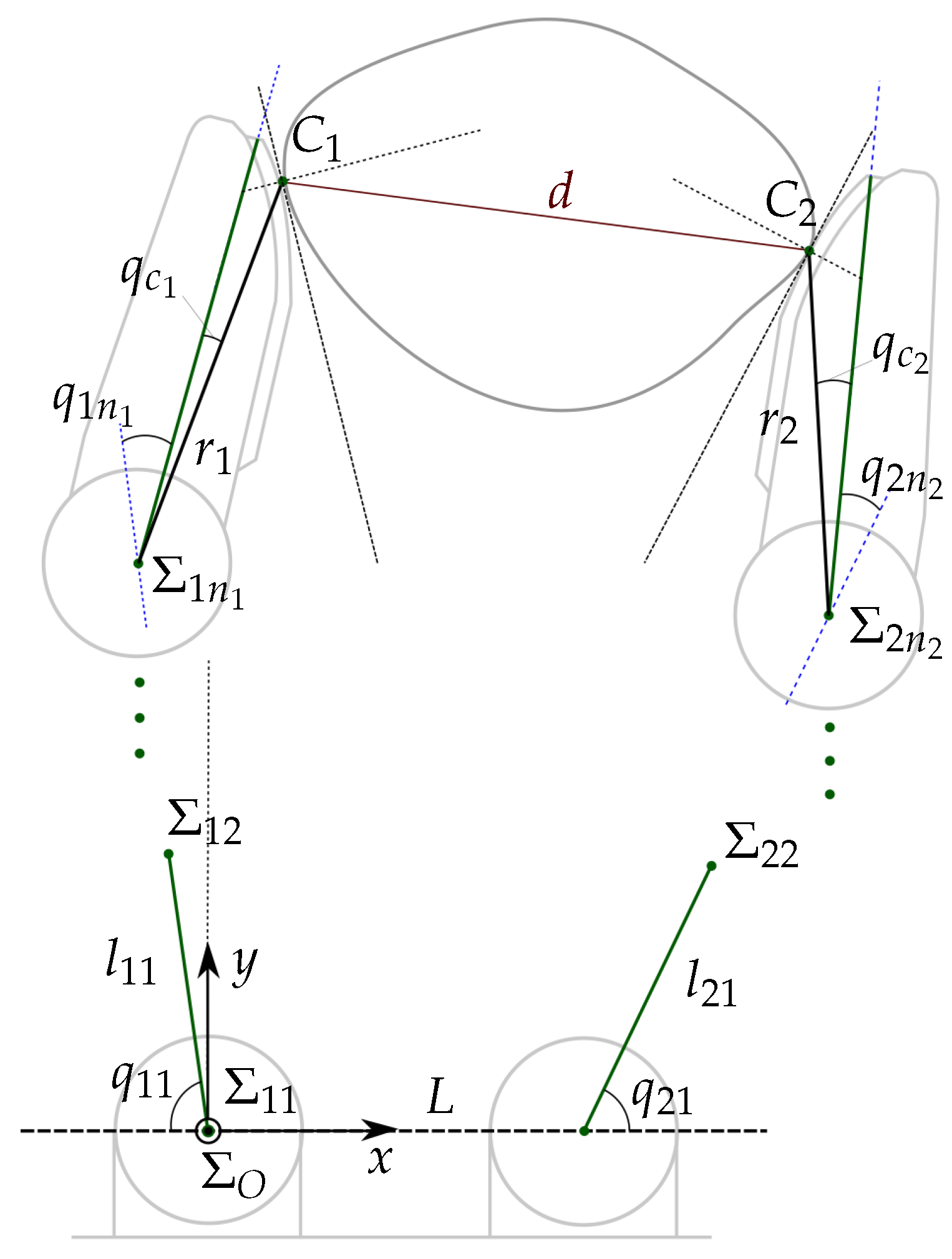

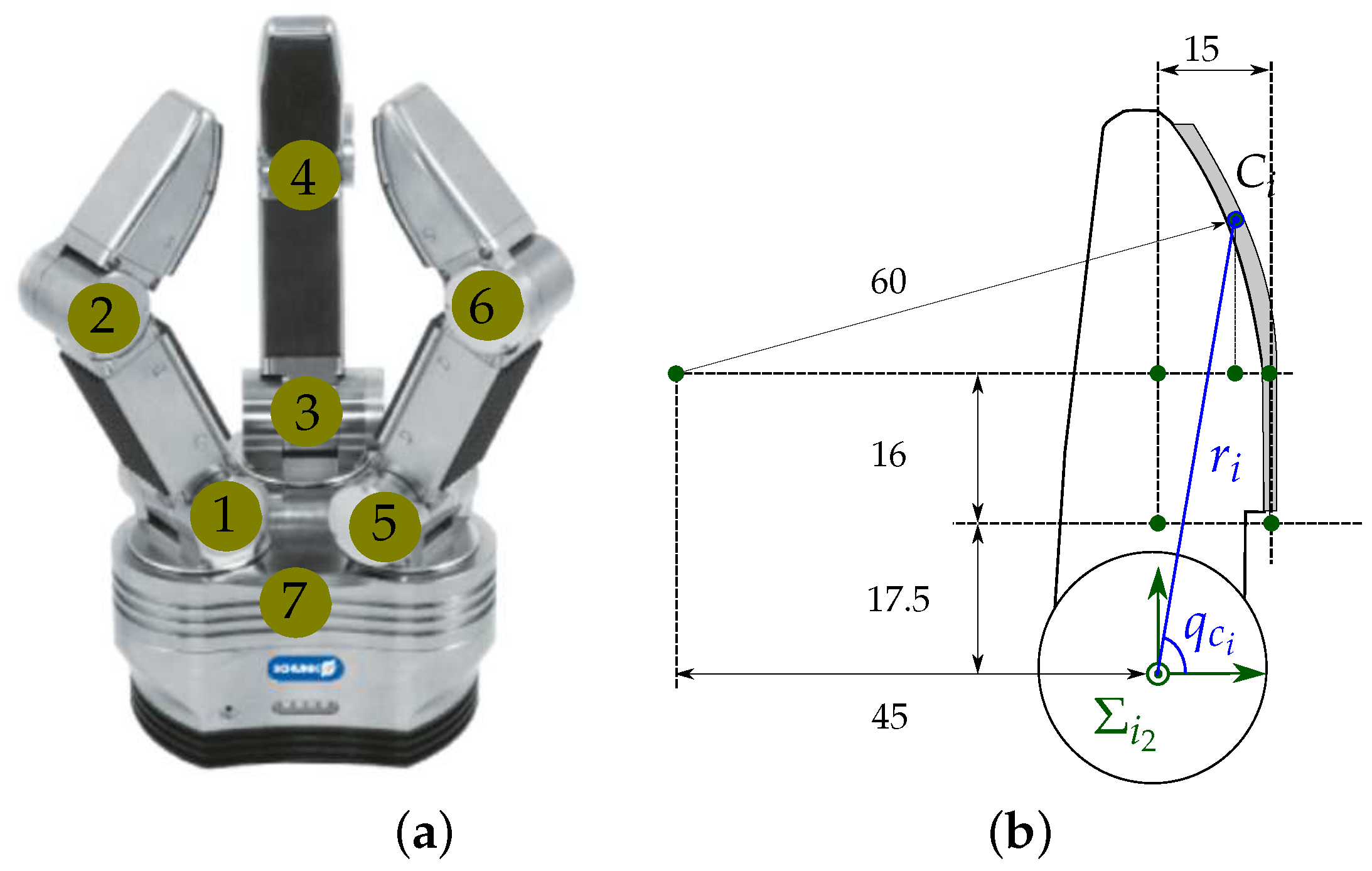

- Two fingers of the hand are used for the manipulation. These fingers perform a grasp comparable to a human grasp using the thumb and index fingers, with the fingertip movements lying on a plane [37]. This type of grasp limits the movement of the object to a plane, but it allows different actions in everyday and industrial tasks, for instance, matching the orientation of two pieces to be assembled or inspecting an object [38,39].

- The manipulated objects are rigid bodies and their shape is unknown. The approach could also work for soft objects, since it imposes no specific constraint that excludes them, but this work does not determine any limit on the acceptable softness.

- The friction coefficient is not identified during the manipulation. It is assumed to be above a minimum safe value, which can be roughly determined by considering the object material and the rubber surface of the fingertips. In the experiments, the movements are computed using this minimum value of the friction coefficient between the fingertip material and the objects used, i.e., a value below the real friction coefficient.

- The finger joints have a low-level position controller that makes them reach the commanded positions, which is the most frequent case in commercial hands with a closed controller. No force control is required at the level of the hand controller. The proposed approach uses the tactile measurements to generate the commanded positions; thus, it actually acts as an implicit upper-level force control loop.
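The friction assumption can be made concrete with a small check. The Python sketch below uses the classical planar condition for two frictional point contacts (Nguyen [40]): the segment joining the two contacts must lie inside both friction cones, whose half-angle is the arctangent of the friction coefficient. It is not necessarily the exact test used by the authors; the function names, the 2D tuple representation, and the convention that each normal points into the object are assumptions made for illustration.

```python
import math

Vec2 = tuple[float, float]


def in_friction_cone(contact: Vec2, other: Vec2, inward_normal: Vec2, mu: float) -> bool:
    """True if the direction from `contact` to `other` lies inside the planar
    friction cone of half-angle atan(mu) centred on `inward_normal`.
    Assumption: `inward_normal` points from the fingertip into the object."""
    dx, dy = other[0] - contact[0], other[1] - contact[1]
    nx, ny = inward_normal
    dist, nlen = math.hypot(dx, dy), math.hypot(nx, ny)
    if dist == 0.0 or nlen == 0.0:
        return False  # degenerate geometry: treat the constraint as violated
    cos_angle = (dx * nx + dy * ny) / (dist * nlen)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding errors
    return math.acos(cos_angle) <= math.atan(mu)


def friction_constraints_ok(c1: Vec2, n1: Vec2, c2: Vec2, n2: Vec2, mu_min: float) -> bool:
    """Two-contact planar test: the segment c1-c2 must lie inside both cones.
    Using a lower bound mu_min makes the test conservative: a grasp accepted
    with mu_min is also accepted with any larger (real) friction coefficient."""
    return (in_friction_cone(c1, c2, n1, mu_min)
            and in_friction_cone(c2, c1, n2, mu_min))


if __name__ == "__main__":
    # Hypothetical geometry: fingertips pressing along +x and -x.
    print(friction_constraints_ok((0.0, 0.0), (1.0, 0.0), (1.0, 0.1), (-1.0, 0.0), 0.3))
    print(friction_constraints_ok((0.0, 0.0), (1.0, 0.0), (1.0, 0.5), (-1.0, 0.0), 0.3))
```

Because the cone half-angle grows with the friction coefficient, underestimating the coefficient can only reject grasps that would in fact hold, never accept grasps that would slip, which is why the minimum value is the safe choice.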

2.2. Grasp Modeling

2.3. Main Manipulation Algorithm

- Computation of the relevant variables of the current grasp state (lines 6 to 7). The current contact points are obtained using the hand kinematics and the tactile information, and the magnitude of the grasping force is obtained as the average of the magnitudes of the contact forces measured on each fingertip. Although the two contact forces should have the same magnitude and opposite directions, averaging both measurements reduces the effect of potential measurement errors.

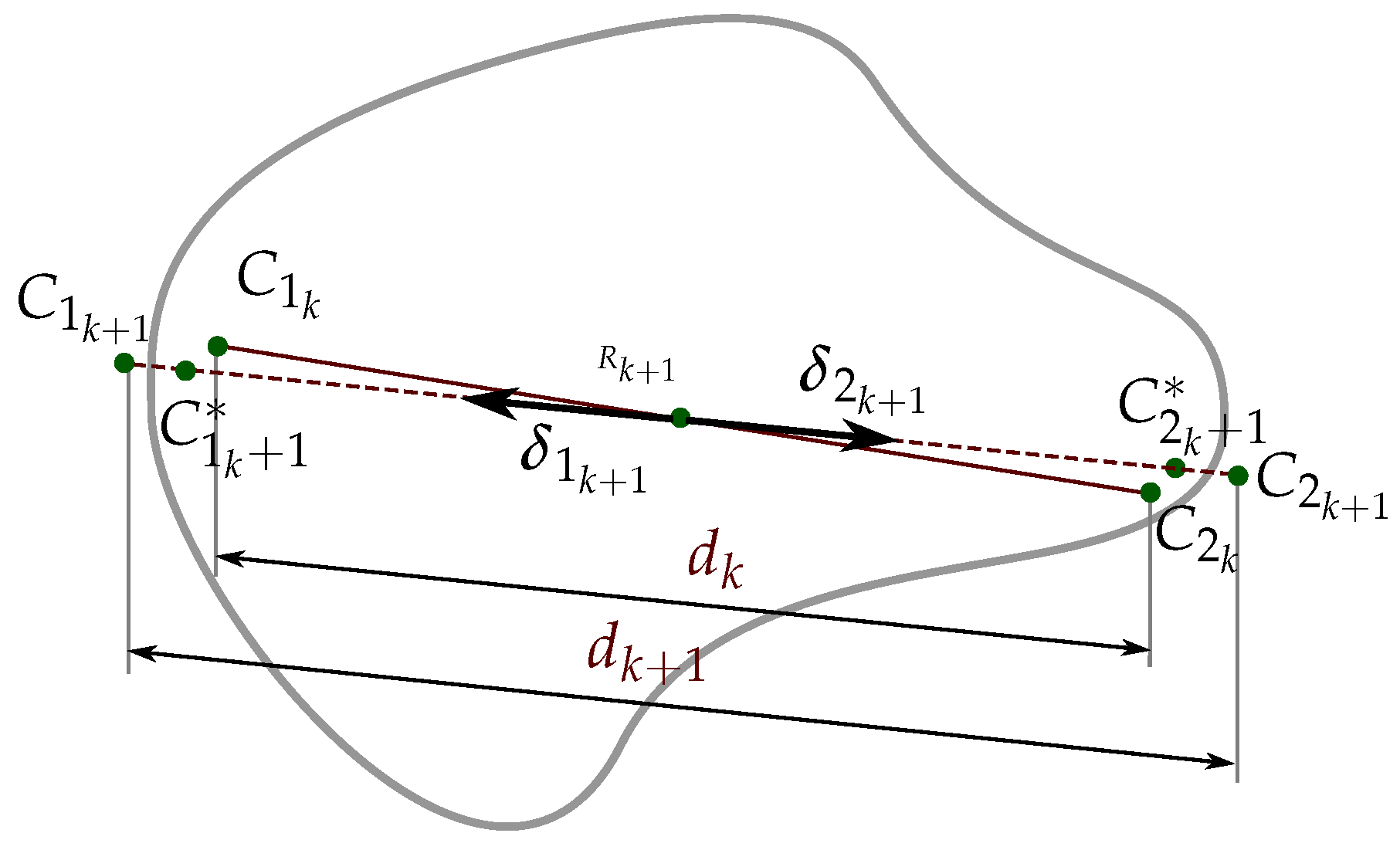

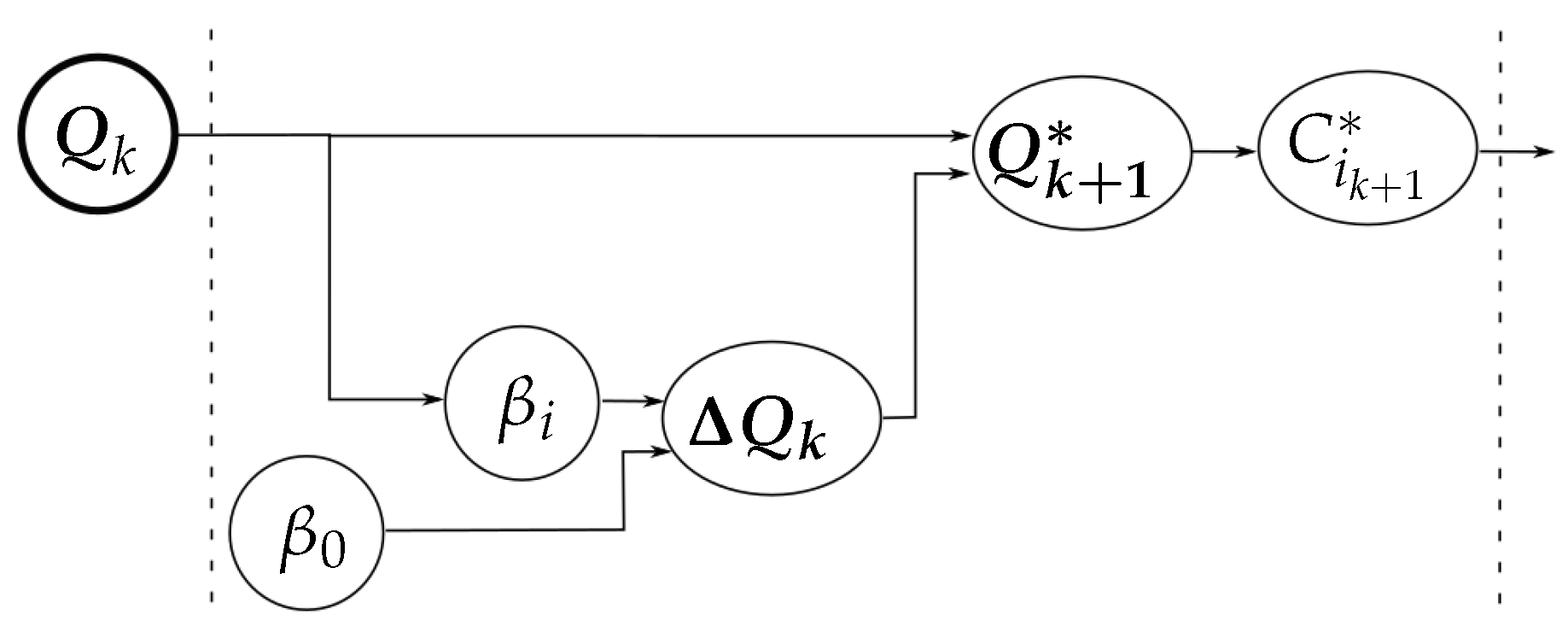

- Computation of two virtual contact points (line 8). These points are chosen so that moving the fingers to make them the new contact points changes the grasp toward the selected goal. How the virtual contact points are computed from the current contact points for each manipulation strategy is detailed in the next section.

- Computation of the new hand configuration (lines 10 to 12). Since the shape of the object is unknown, any movement of the fingers may alter the contact force: an increase may damage the object or the hand, and a decrease may let the object fall. To reduce the error between the desired and the measured grasping force, the distance between the virtual contact points is adjusted in each iteration by an amount given by one of two user-defined functions of that error, selected according to its sign. In this work, both functions are defined in terms of a predefined constant. The reason for this choice is that a potential fall of the object (measured force below the desired one) is considered more critical than a potential application of large grasping forces (measured force above the desired one); therefore, the former case has a larger gain, especially for large errors.

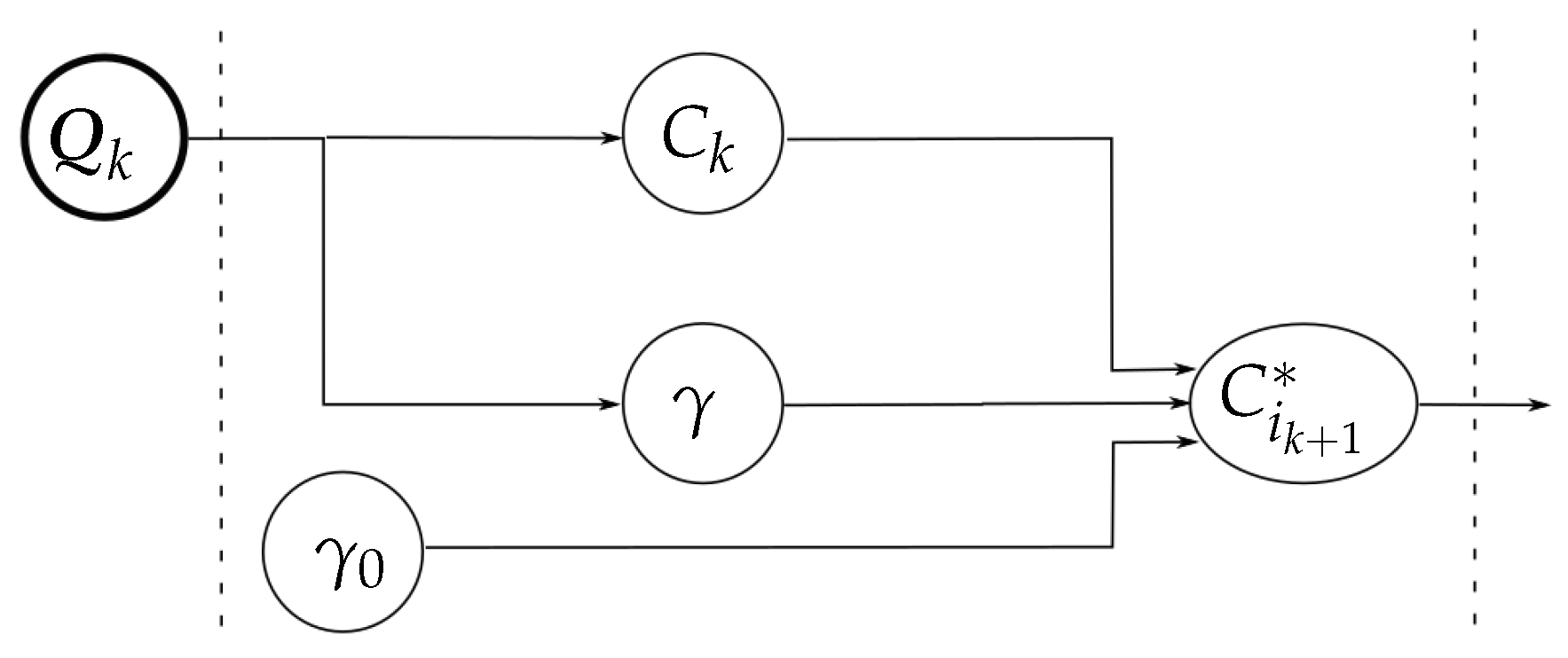

Algorithm 1: Tactile Manipulation

Now, the two virtual contact points are moved along the line they define to obtain the actual target contact points, which lie at the adjusted distance from each other, placed symmetrically about the central point between the virtual contact points along the unit vector that joins them (see Figure 2). Finally, the corresponding hand configuration is obtained from the target contact points using the inverse kinematics of the fingers. Figure 3 illustrates the relationship between the measured variables, the role played by the manipulation strategy in the computation of the auxiliary variables, and the variables involved in the final adjustment to obtain the new hand configuration (independently of the manipulation strategy).

- Termination conditions (line 13). The iterative manipulation procedure is applied until any of the following four stop conditions is activated, two of them associated with the quality index and the other two with the motion constraints:

- The quality index reaches the optimal value.

- The current optimal value of the quality index is not improved during a predetermined number of iterations. Note that the index may not improve monotonically: it may become worse, or oscillate, alternating small improvements and worsenings.

- The expected grasp at the computed contact points does not satisfy the friction constraints.

- The computed contact points do not belong to the workspace of the fingers. This condition is activated when the computed target contact points are not reachable by the fingers, i.e., the hand configuration required to reach them does not lie within the hand workspace.

- Finger movements (line 14). When none of the termination conditions is activated, the hand is moved towards the new hand configuration so that the fingers reach the desired target contact points. After the finger movements, a new manipulation iteration begins.
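The force-related steps of one iteration can be sketched in Python as follows. The sketch averages the two measured contact forces, corrects the separation of the virtual contact points with an asymmetric gain, places the target contact points symmetrically about their midpoint, and tracks the best quality index seen so far for the stagnation stop condition. The correction functions used in the paper are not reproduced in this text, so `distance_correction` is a hypothetical law that only mimics the stated intent (a larger gain when the force is too low, growing with the error); all names and the 2D representation are likewise assumptions, and the friction and workspace checks are omitted.

```python
import math

Vec2 = tuple[float, float]


def grasp_force(f1: float, f2: float) -> float:
    """Grasping-force magnitude: mean of the two measured contact-force magnitudes."""
    return 0.5 * (abs(f1) + abs(f2))


def distance_correction(error: float, k: float) -> float:
    """HYPOTHETICAL asymmetric correction law, not the paper's functions.
    error = desired - measured grasping force.
    error > 0: force too low (risk of a fall) -> larger, super-linear gain.
    error <= 0: force too high -> plain linear gain."""
    if error > 0.0:
        return k * (error + error * error)
    return k * error


def target_contact_points(v1: Vec2, v2: Vec2, f1: float, f2: float,
                          f_desired: float, k: float) -> tuple[Vec2, Vec2]:
    """Slide the virtual contact points v1, v2 along the line they define so
    that their separation becomes the corrected distance (midpoint kept)."""
    dx, dy = v2[0] - v1[0], v2[1] - v1[1]
    d_virtual = math.hypot(dx, dy)
    if d_virtual == 0.0:
        raise ValueError("virtual contact points must be distinct")
    ux, uy = dx / d_virtual, dy / d_virtual                # unit vector v1 -> v2
    mx, my = 0.5 * (v1[0] + v2[0]), 0.5 * (v1[1] + v2[1])  # central point
    error = f_desired - grasp_force(f1, f2)
    # Assumption: with position-controlled fingers on a rigid object, a smaller
    # commanded separation increases the squeezing force.
    d = max(0.0, d_virtual - distance_correction(error, k))
    half = 0.5 * d
    return (mx - half * ux, my - half * uy), (mx + half * ux, my + half * uy)


class StagnationMonitor:
    """Stop when the best quality index has not improved for `patience`
    consecutive iterations; the best value is kept because the index may oscillate."""

    def __init__(self, patience: int, maximize: bool = True) -> None:
        self.patience = patience
        self.maximize = maximize
        self.best = None
        self.stale = 0

    def update(self, value: float) -> bool:
        """Record a new index value; return True if the procedure should stop."""
        if self.best is None or (value > self.best if self.maximize
                                 else value < self.best):
            self.best, self.stale = value, 0
        else:
            self.stale += 1
        return self.stale >= self.patience


if __name__ == "__main__":
    # Measured force below the desired one: the targets move closer together.
    t1, t2 = target_contact_points((0.0, 0.0), (2.0, 0.0), 0.5, 0.5,
                                   f_desired=1.0, k=0.1)
    print(t1, t2)
```

Keeping the best value rather than the last one matters because the quality index may oscillate; comparing only consecutive values would stop the procedure at the first worsening.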

3. Manipulation Strategies

3.1. Optimizing the Hand Configuration

3.1.1. Index to be Optimized

3.1.2. Optimization Strategy

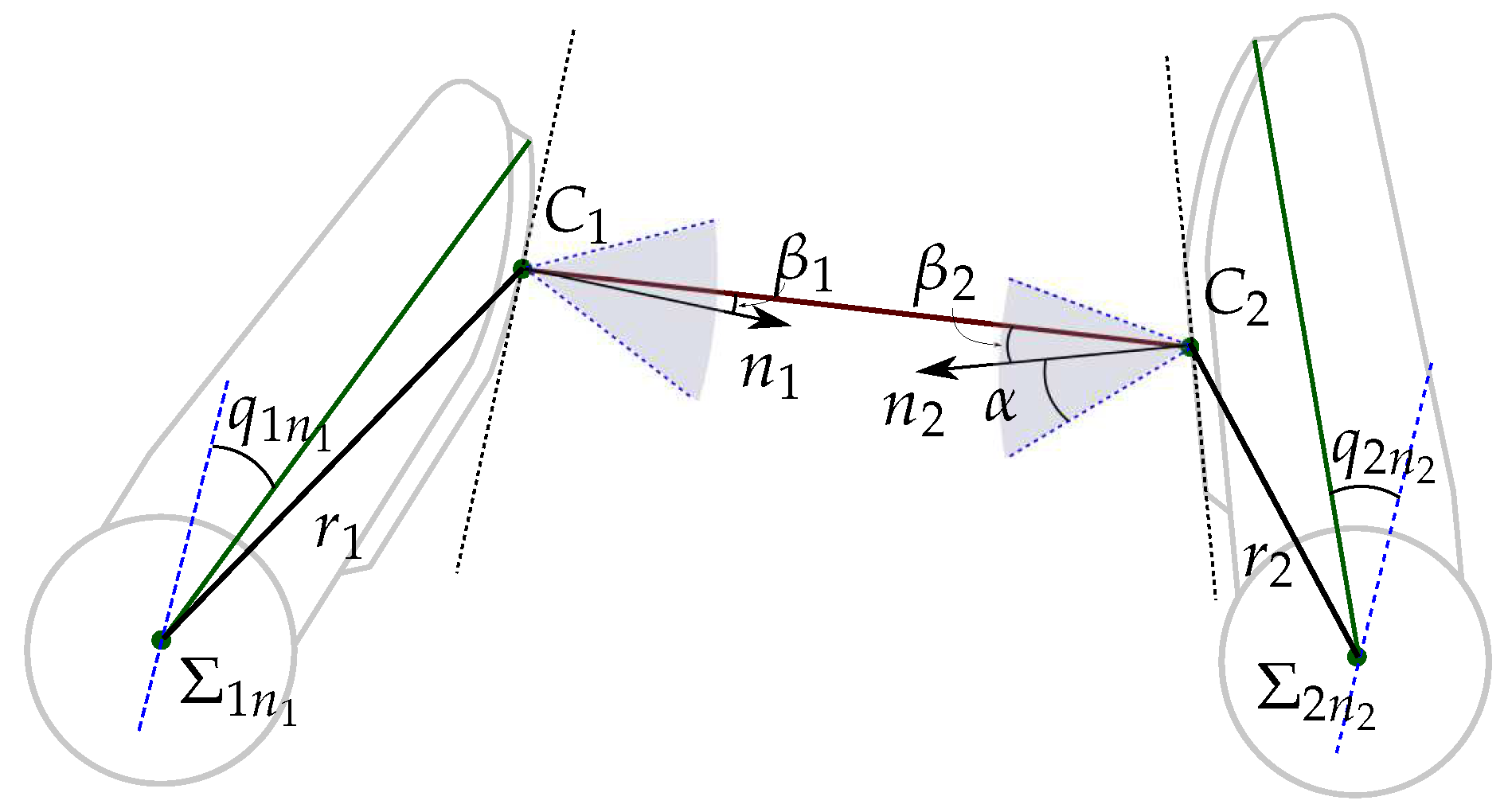

3.2. Optimizing the Grasp Quality

3.2.1. Index to be Optimized

3.2.2. Optimization Strategy

3.3. Optimizing the Object Orientation

3.3.1. Index to be Optimized

3.3.2. Optimization Strategy

- the average of the two angles between an arbitrary reference axis attached to the object and the directions normal to each fingertip at the corresponding contact point,

- the value of this average angle at the initial grasp,

- the current value of each finger joint,

- the value of the same joint at the initial grasp,

- the distance between the contact points, and

- R, the radius of the fingertip.

3.4. Combining Manipulation Strategies

3.4.1. Index to be Optimized

3.4.2. Optimization Strategy

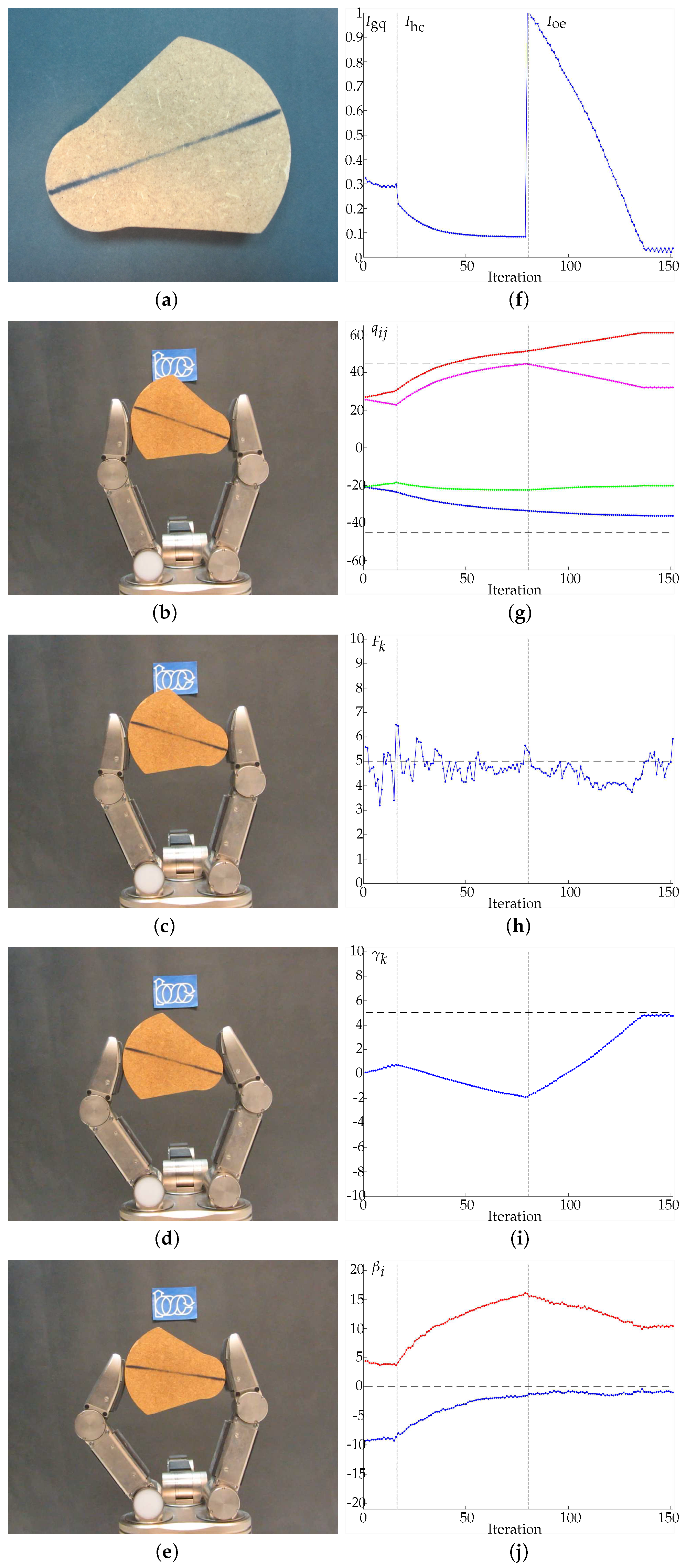

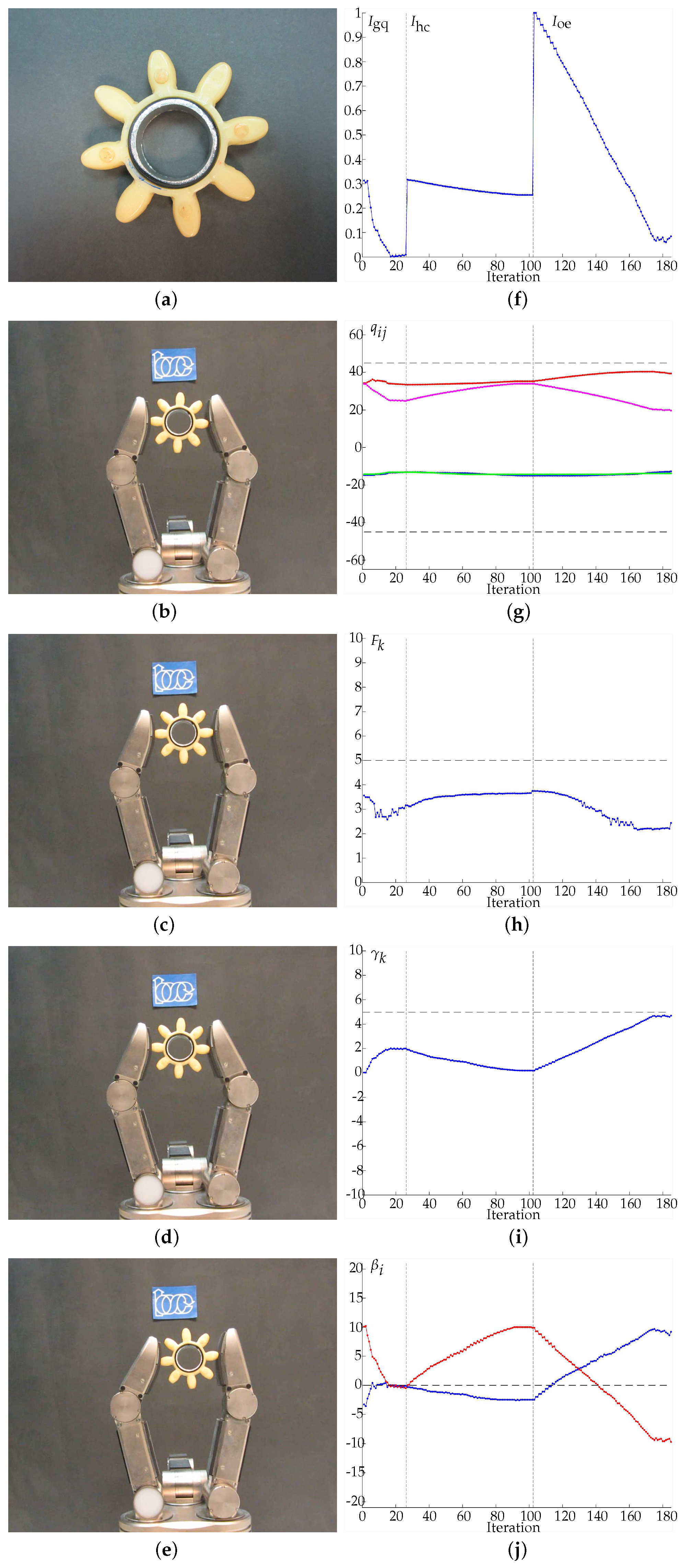

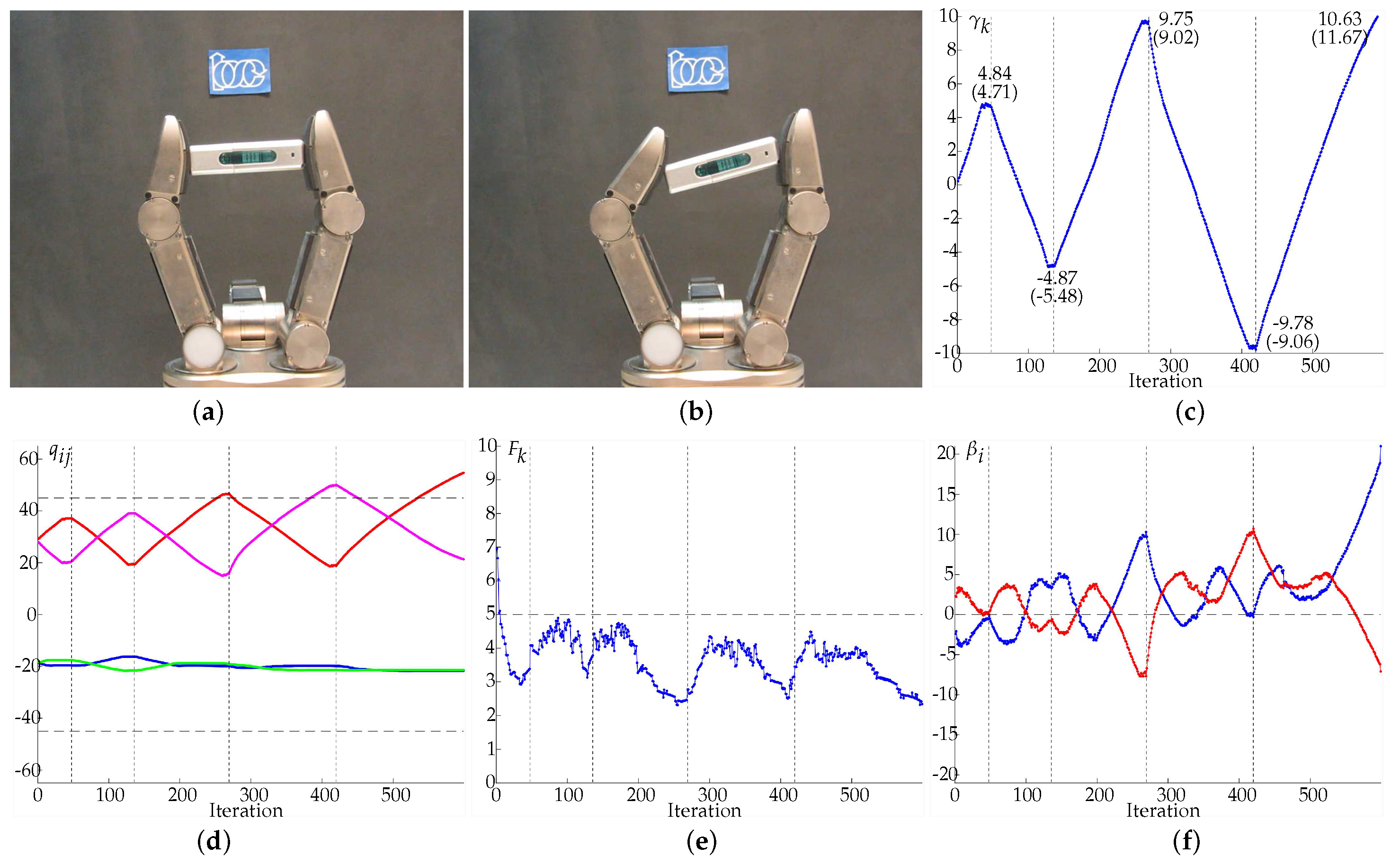

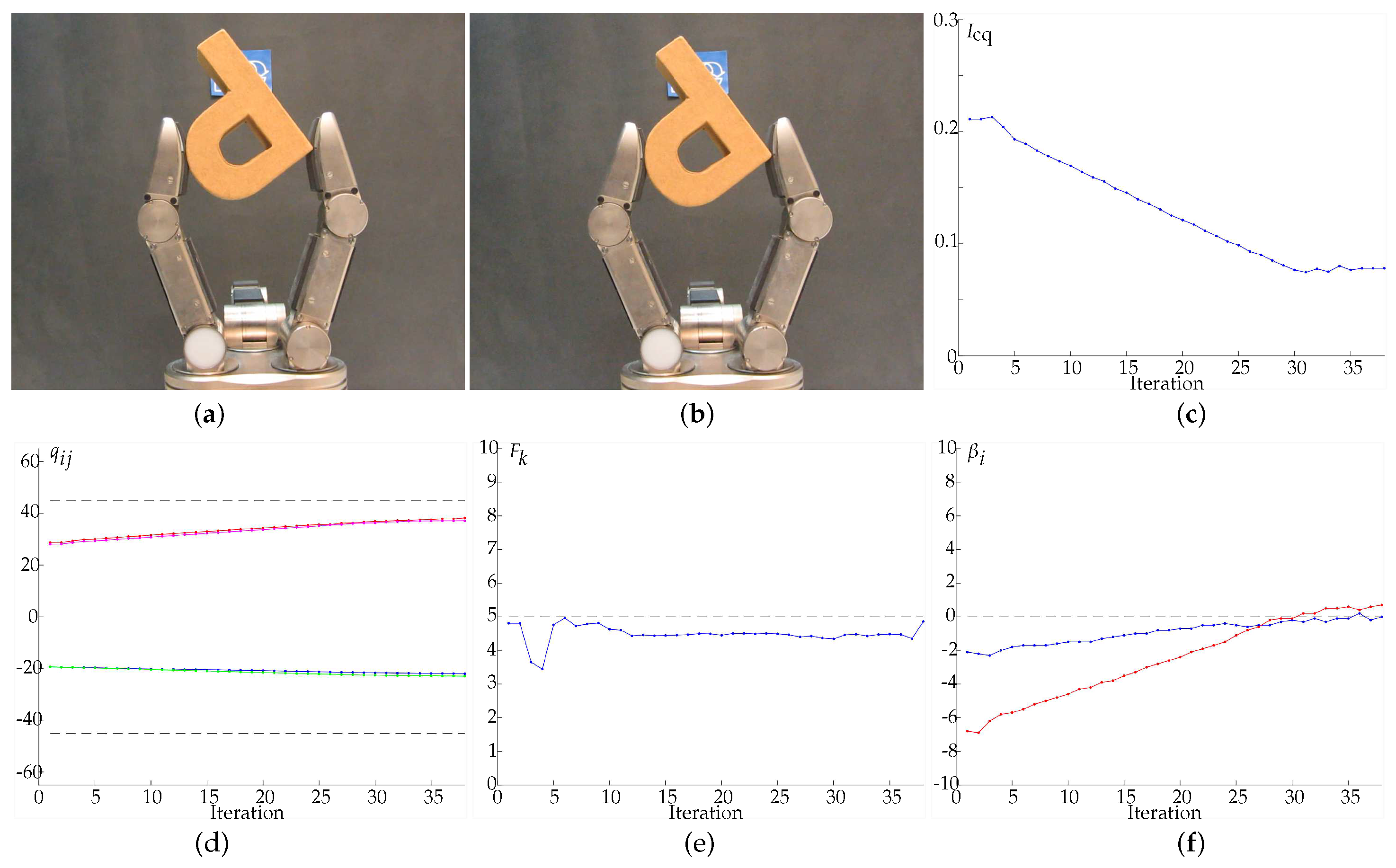

4. Experimental Validation

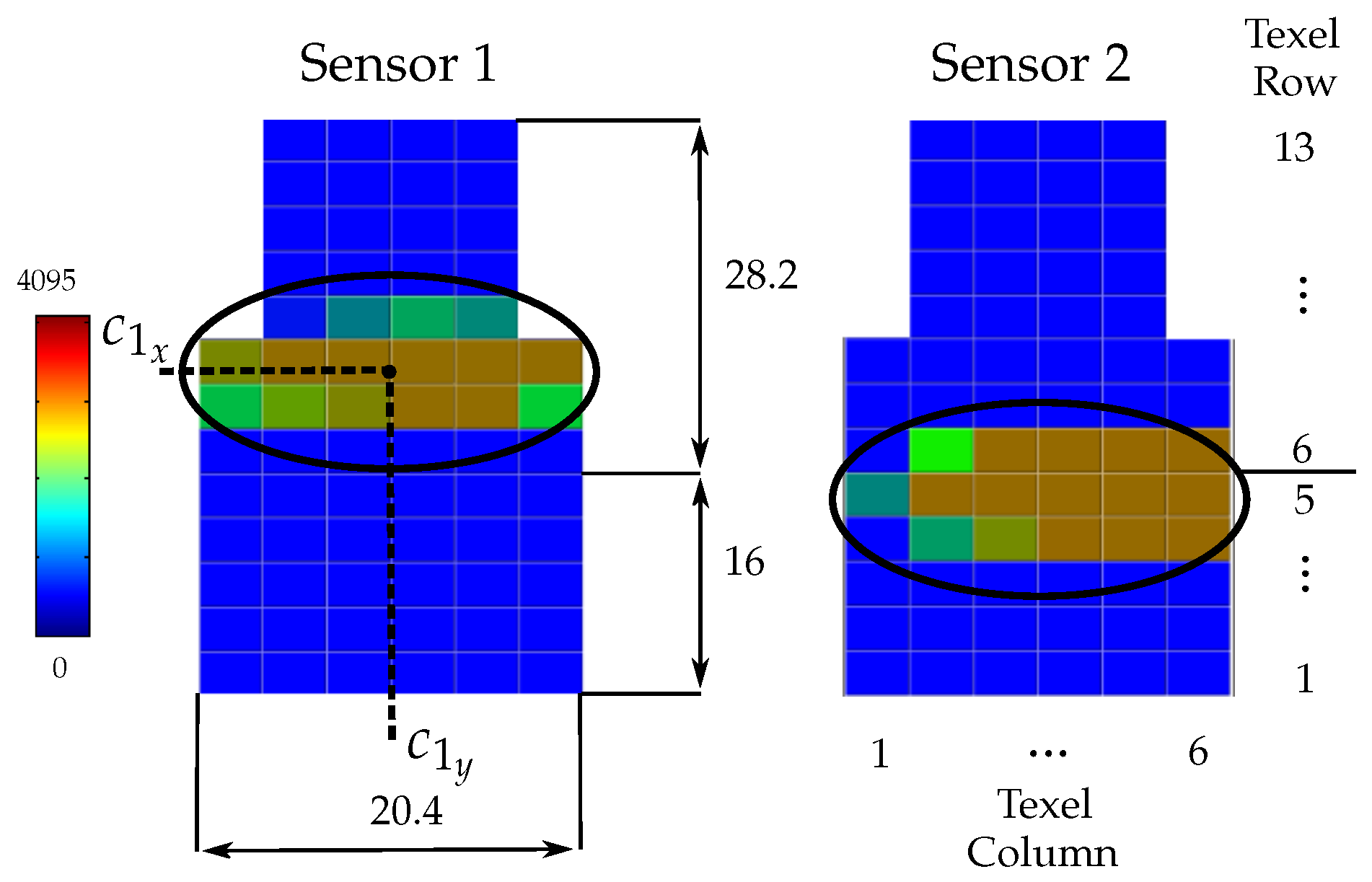

4.1. System Setup

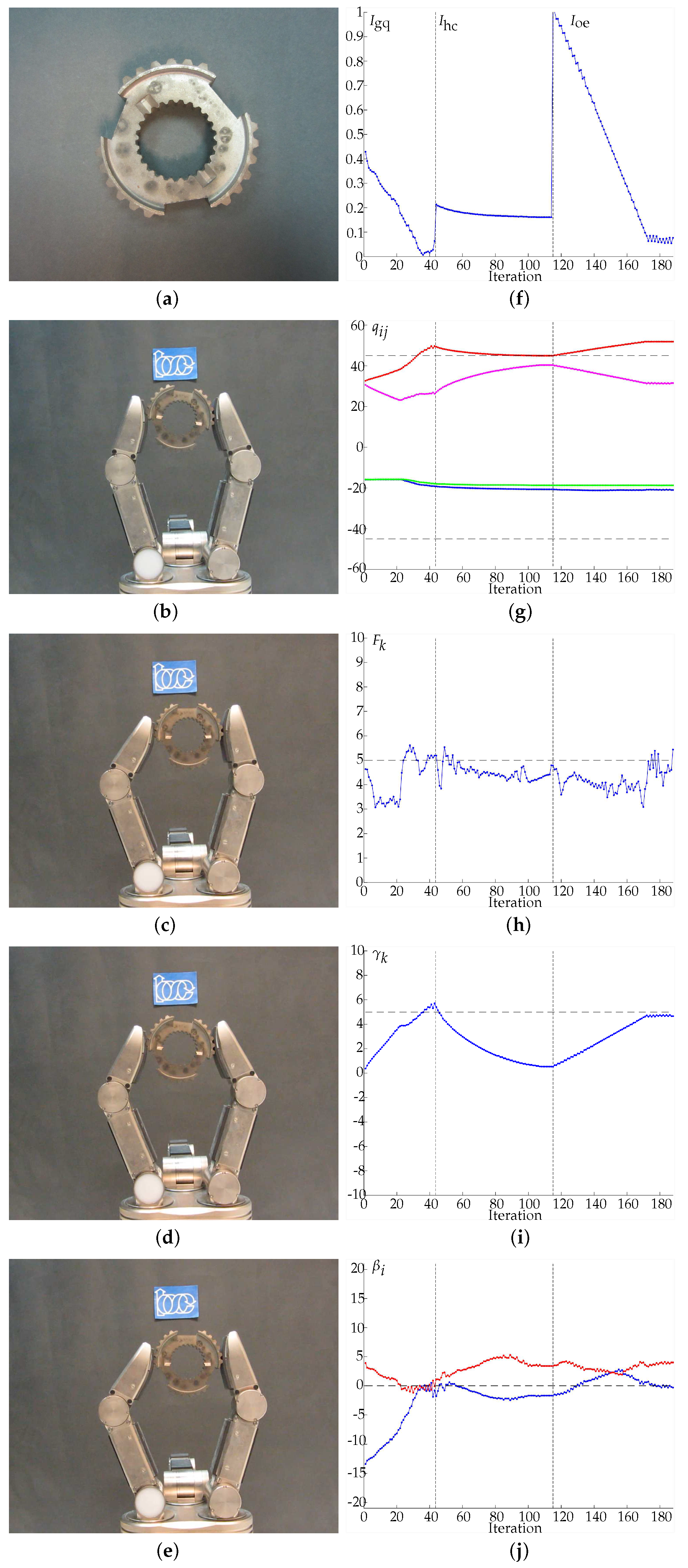

4.2. Experimental Results

5. Summary and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Bicchi, A. Hands for Dextrous Manipulation and Powerful Grasping: A Difficult Road Towards Simplicity. IEEE Trans. Robot. Autom. 2000, 16, 652–662.

- Montaño, A.; Suárez, R. Unknown object manipulation based on tactile information. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 5642–5647.

- Bohg, J.; Morales, A.; Asfour, T.; Kragic, D. Data-Driven Grasp Synthesis—A Survey. IEEE Trans. Robot. 2014, 30, 289–309.

- Miller, A.T.; Allen, P.K. GraspIt!: A Versatile Simulator for Robotic Grasping. IEEE Robot. Autom. Mag. 2004, 11, 110–122.

- Diankov, R.; Kuffner, J. OpenRAVE: A Planning Architecture for Autonomous Robotics; Technical Report July; Robotics Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2008.

- Vahrenkamp, N.; Kröhnert, M.; Ulbrich, S.; Asfour, T.; Metta, G.; Dillmann, R.; Sandini, G. Simox: A robotics toolbox for simulation, motion and grasp planning. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2013; Volume 1, pp. 585–594.

- Rosales, C.; Suárez, R.; Gabiccini, M.; Bicchi, A. On the synthesis of feasible and prehensile robotic grasps. In Proceedings of the IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 550–556.

- Cornella, J.; Suarez, R. Efficient Determination of Four-Point Form-Closure Optimal Constraints of Polygonal Objects. IEEE Trans. Autom. Sci. Eng. 2009, 6, 121–130.

- Prado, R.; Suárez, R. Heuristic grasp planning with three frictional contacts on two or three faces of a polyhedron. In Proceedings of the IEEE International Symposium on Assembly and Task Planning, Montreal, QC, Canada, 19–21 July 2005; Volume 2005, pp. 112–118.

- Roa, M.A.; Suárez, R. Finding locally optimum force-closure grasps. Robot. Comput. Integr. Manuf. 2009, 25, 536–544.

- Tovar, N.A.; Suárez, R. Grasp analysis and synthesis of 2D articulated objects with n links. Robot. Comput. Integr. Manuf. 2015, 31, 81–90.

- Hang, K.; Li, M.; Stork, J.A.; Bekiroglu, Y.; Pokorny, F.T.; Billard, A.; Kragic, D. Hierarchical Fingertip Space: A Unified Framework for Grasp Planning and In-Hand Grasp Adaptation. IEEE Trans. Robot. 2016, 32, 960–972.

- Przybylski, M.; Asfour, T.; Dillmann, R. Unions of balls for shape approximation in robot grasping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 1592–1599.

- Goldfeder, C.; Allen, P.K. Data-driven grasping. Auton. Robots 2011, 31, 1–20.

- Li, M.; Hang, K.; Kragic, D.; Billard, A. Dexterous grasping under shape uncertainty. Robot. Auton. Syst. 2016, 75, 352–364.

- Nogueira, J.; Martinez-Cantin, R.; Bernardino, A.; Jamone, L. Unscented Bayesian optimization for safe robot grasping. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 1967–1972.

- Chen, D.; Dietrich, V.; Liu, Z.; von Wichert, G. A Probabilistic Framework for Uncertainty-Aware High-Accuracy Precision Grasping of Unknown Objects. J. Intell. Robot. Syst. 2018, 90, 19–43.

- Sommer, N.; Billard, A. Multi-contact haptic exploration and grasping with tactile sensors. Robot. Auton. Syst. 2016, 85, 48–61.

- Cornellà, J.; Suárez, R.; Carloni, R.; Melchiorri, C. Dual programming based approach for optimal grasping force distribution. Mechatronics 2008, 18, 348–356.

- Roa, M.A.; Suárez, R. Grasp quality measures: Review and performance. Auton. Robots 2014, 38, 65–88.

- Roa, M.A.; Suárez, R.; Cornellà, J. Medidas de calidad para la prensión de objetos [Quality measures for object grasping]. Rev. Iberoam. Autom. Inf. Ind. 2008, 5, 66–82.

- Ferrari, C.; Canny, J. Planning optimal grasps. In Proceedings of the IEEE International Conference on Robotics and Automation, Nice, France, 12–14 May 1992; pp. 2290–2295.

- Bicchi, A. On the Closure Properties of Robotic Grasping. Int. J. Robot. Res. 1995, 14, 319–334.

- Garcia, G.J.; Corrales, J.A.; Pomares, J.; Torres, F. Survey of Visual and Force/Tactile Control of Robots for Physical Interaction in Spain. Sensors 2009, 9, 9689–9733.

- Zou, L.; Ge, C.; Wang, Z.J.; Cretu, E.; Li, X. Novel Tactile Sensor Technology and Smart Tactile Sensing Systems: A Review. Sensors 2017, 17.

- Kappassov, Z.; Corrales-Ramón, J.A.; Perdereau, V. Tactile sensing in dexterous robot hands—Review. Robot. Auton. Syst. 2015, 74, 195–220.

- Bekiroglu, Y.; Detry, R.; Kragic, D. Learning Tactile Characterizations of Object- and Pose-Specific Grasps. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1554–1560.

- Dang, H.; Weisz, J.; Allen, P.K. Blind grasping: Stable robotic grasping using tactile feedback and hand kinematics. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 5917–5922.

- Boutselis, G.I.; Bechlioulis, C.P.; Liarokapis, M.; Kyriakopoulos, K.J. An integrated approach towards robust grasping with tactile sensing. In Proceedings of the IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 3682–3687.

- Montaño, A.; Suárez, R. Object shape reconstruction based on the object manipulation. In Proceedings of the IEEE International Conference on Advanced Robotics, Montevideo, Uruguay, 25–29 November 2013; pp. 1–6.

- Jara, C.A.; Pomares, J.; Candelas, F.A.; Torres, F. Control Framework for Dexterous Manipulation Using Dynamic Visual Servoing and Tactile Sensors’ Feedback. Sensors 2014, 14, 1787–1804.

- Cole, A.B.A.; Hauser, J.E.; Sastry, S.S. Kinematics and control of multifingered hands with rolling contact. IEEE Trans. Autom. Control 1989, 34, 398–404.

- Li, Q.; Haschke, R.; Ritter, H.; Bolder, B. Towards unknown objects manipulation. IFAC Proc. Volumes 2012, 45, 289–294.

- Song, S.K.; Park, J.B.; Choi, Y.H. Dual-fingered stable grasping control for an optimal force angle. IEEE Trans. Robot. 2012, 28, 256–262.

- Platt, R. Learning and Generalizing Control-Based Grasping and Manipulation Skills. Ph.D. Thesis, University of Massachusetts Amherst, Amherst, MA, USA, 2006.

- Tahara, K.; Arimoto, S.; Yoshida, M. Dynamic object manipulation using a virtual frame by a triple soft-fingered robotic hand. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 4322–4327.

- MacKenzie, C.L.; Iberall, T. The Grasping Hand; Elsevier: New York, NY, USA, 1994; Volume 104, p. 482.

- Chang, L.Y.; Pollard, N.S. Video survey of pre-grasp interactions in natural hand activities. RSS Workshop: Understanding the Human Hand for Advancing Robotic Manipulation, Seattle, WA, USA, 28 June–1 July 2009; pp. 1–2.

- Toh, Y.P.; Huang, S.; Lin, J.; Bajzek, M.; Zeglin, G.; Pollard, N.S. Dexterous telemanipulation with a multi-touch interface. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Osaka, Japan, 29 November–1 December 2012; pp. 270–277.

- Nguyen, V.D. Constructing Force-Closure Grasps. Int. J. Robot. Res. 1988, 7, 3–16.

- Wörn, H.; Haase, T. Force approximation and tactile sensor prediction for reactive grasping. In Proceedings of the World Automation Congress, Puerto Vallarta, Mexico, 24–28 June 2012; pp. 1–6.

- Liégeois, A. Automatic Supervisory Control of the Configuration and Behavior of Multibody Mechanisms. IEEE Trans. Syst. Man Cybern. 1977, 7, 868–871.

- Ozawa, R.; Arimoto, S.; Nguyen, P.; Yoshida, M.; Bae, J.H. Manipulation of a circular object in a horizontal plane by two finger robots. In Proceedings of the 2004 IEEE International Conference on Robotics and Biomimetics, Shenyang, China, 22–26 August 2004; pp. 517–522.

- Montaño, A.; Suárez, R. Commanding the object orientation using dexterous manipulation. In Advances in Intelligent Systems and Computing; Springer Verlag: Berlin, Germany, 2016; Volume 418, pp. 69–79.

- Tomo, T.P.; Schmitz, A.; Wong, W.K.; Kristanto, H.; Somlor, S.; Hwang, J.; Jamone, L.; Sugano, S. Covering a Robot Fingertip With uSkin: A Soft Electronic Skin With Distributed 3-Axis Force Sensitive Elements for Robot Hands. IEEE Robot. Autom. Lett. 2018, 3, 124–131.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Montaño, A.; Suárez, R. Manipulation of Unknown Objects to Improve the Grasp Quality Using Tactile Information. Sensors 2018, 18, 1412. https://doi.org/10.3390/s18051412