Abstract

Among general geometric primitives, plane-based features are widely used for indoor localization because of their robustness against noise. However, a lack of linearly independent planes may lead to a non-trivial estimation problem. This in turn can cause a degenerate state in which not all states can be estimated. To solve this problem, this paper first proposes a degeneracy detection method. It then explains a compensation method that fixes orientations by projecting the information of an inertial measurement unit (IMU). Experiments were conducted using an IMU-Kinect v2 integrated sensor system, which is prone to degenerate cases owing to its narrow field-of-view. The results show that the proposed framework enhances map accuracy by successfully detecting and compensating for degenerate orientations.

1. Introduction

Plane features have been widely used for simultaneous localization and mapping (SLAM) of indoor environments due to the following two reasons: (1) there are abundant planes in man-made indoor spaces; and (2) sensor noise can be sufficiently reduced by conventional plane extraction algorithms.

However, the use of plane features can fall into degenerate cases when the detected planes do not provide enough linearly independent information for pose estimation. In other words, if fewer than three independent planes are matched between two consecutive 3D poses, the relative geometric relationship cannot be fully estimated. For example, in a long and flat corridor, the amount of translation along the corridor’s direction cannot be detected even with wide field-of-view (FoV) LiDAR sensors. With narrow FoV sensors such as the Kinect (Microsoft), these degeneracies are frequently encountered, even in sufficiently complex indoor spaces [1].

In this paper, we propose a way to handle these degeneracy cases using a cost-effective sensor combination: a Kinect v2 with an inertial measurement unit (IMU). A large body of research has addressed degeneracy problems [2–21]. This research can be categorized in two ways: (1) directly eliminating degeneracy by employing constrained motion [2,3], sensor fusion [4,5,6,7], additional or new types of feature(s) [8,9,10,11,12,13], online calibration [14], determining system configurations [15], or a probabilistic depth map [16]; and (2) indirectly reducing the effect of degeneracy by minimizing null-space components in the direction of the degeneracy. Pathak et al. [17] proposed a method that substitutes null-space components with odometry readings. Our method is complementary to the work of Pathak et al. in that our method considers the orientation, while Pathak et al. handle the translation. Recently, Zhang et al. [18] suggested a method that covers degeneracies in both rotation and translation. However, their method is designed for LiDAR, which has a wider FoV than the Kinect v2 used in the present study.

The contribution of this paper consists of two parts. The first is the detection of degeneracy between two sets of plane features by evaluating ratios of eigenvalues of a second moment matrix. Here, we use the fact that degeneracy induces significant uncertainty, which is reflected in the second moment matrix as a relatively small or infinitesimal eigenvalue. The second is the compensation of orientations that encounter degeneracy by projecting the orientation value of the IMU onto those of the corresponding plane features. This is possible because the subspaces of the second moment matrix are orthogonal, which enables projection only along the degenerate direction.

On the basis of the proposed detection and compensation method, we implemented a seamless 3D SLAM as shown in Figure 1 using a Kinect v2 and IMU. Experiments were conducted in a typical indoor place where more than 30% of place indices corresponded to the degeneracy case. Results showed that the proposed framework enhanced map accuracy compared to the method of Pathak et al. [17] by successfully detecting and compensating degenerated orientations.

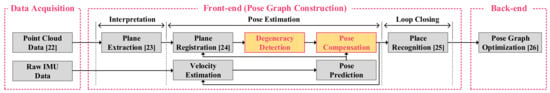

Figure 1.

Schematic diagram of our proposed continuous 3D SLAM framework [22,23,24,25,26]. Yellow-colored boxes are contributions of this paper.

2. Seamless 3D SLAM Framework

The proposed pipeline in Figure 1 has the conventional Graph SLAM structure with three main modules: data acquisition, front-end construction, and back-end optimization. In the first block (data acquisition), raw 3D point cloud data from the Kinect v2 and 6-DoF (degrees of freedom) measurements from the IMU are acquired. Those data are passed to the second block, where plane features are extracted by using the algorithms in [23,24]. Here, an $i$-th plane feature ($\pi_i$) consists of a unit normal ($\hat{n}_i$), which is orthogonal to the surface, and a perpendicular distance ($d_i$) from the origin to the surface.
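
As an illustration of the plane-feature parameterization (a unit normal plus a perpendicular distance from the origin), the following minimal sketch fits one plane to a point patch by least squares. It is not the extraction algorithm of [23,24]; the function name `fit_plane`, the synthetic data, and the sign convention (non-negative distance) are assumptions made for this example only.

```python
import numpy as np

def fit_plane(points):
    """Fit a plane to an (N, 3) array; return (unit_normal, distance)
    such that unit_normal . x = distance for points x on the plane."""
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value of the
    # centred points is the direction of least spread: the plane normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    distance = float(normal @ centroid)
    if distance < 0:  # assumed convention: keep the distance non-negative
        normal, distance = -normal, -distance
    return normal, distance

# Synthetic planar patch z = 1.5 m (hypothetical data, not from the experiments)
rng = np.random.default_rng(0)
xy = rng.uniform(-1.0, 1.0, size=(200, 2))
patch = np.column_stack([xy, np.full(200, 1.5)])
normal, distance = fit_plane(patch)
print(normal, distance)
```

For this noise-free patch the recovered normal is the z-axis and the distance is 1.5 m; with real depth data the smallest singular value also gives a rough measure of how planar the patch is.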

At this point, degeneracy can be induced if fewer than three independent plane-pairs are used. As these degeneracies leave clear evidence in the second moment matrix, the degeneracy detection algorithm (Section 2.1, our first contribution) can analyze the matrix and pinpoint the degeneracy direction. Then, the pose compensation algorithm (Section 2.2, our second contribution) compensates for the degeneracy by projecting the IMU's orientation estimate onto the degenerate direction. The remaining procedures are conventional loop detection and back-end optimization, adopted from the methods in [25] and [26], respectively.

2.1. Degeneracy Detection Algorithm

Relative pose estimation with plane features is illustrated in Figure 2, where sets of plane features are associated with each other. When there are three or more pairs of planes (as in Figure 2a), all 6-DoF parameters (3-DoF for rotation and 3-DoF for translation) can be estimated. However, when only one pair of planes with identical normals exists (as in Figure 2d), 1-DoF and 2-DoF degeneracies arise for rotation and translation, respectively. These two cases are out of the scope of this paper, as noise (from motion as well as sensors) either does not significantly affect pose estimation or does not exist. However, in the case of 2-pair correspondence, noise can turn a 1-pair correspondence into a false 2-pair correspondence, as shown in Figure 2c. The role of this subsection is to propose an algorithm that discriminates false 2-pair correspondences from real ones.

Figure 2.

Left-to-right point clouds for possible situations of plane-feature-based pose estimation. (a) 3-pair correspondence; (b) 2-pair correspondence; (c) false 2-pair correspondence; (d) 1-pair correspondence with identical normals.
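
The degrees of freedom lost in each situation can be counted from the rank of the stacked plane normals. The sketch below is only an illustration under stated assumptions: it ignores noise, it uses the fact that each independent normal constrains the translation along that normal, and the helper name `degenerate_dof` and the example normals are hypothetical.

```python
import numpy as np

def degenerate_dof(normals, tol=1e-6):
    """Return (rotational, translational) unobservable DoF for a set of
    matched plane normals, ignoring noise."""
    N = np.atleast_2d(np.asarray(normals, dtype=float))  # shape (m, 3)
    rank = int(np.linalg.matrix_rank(N, tol=tol))
    # Each independent normal fixes the translation along that normal only.
    translational = 3 - rank
    # One direction leaves the rotation about it free; two or more
    # independent directions determine the full rotation.
    rotational = {0: 3, 1: 1}.get(rank, 0)
    return rotational, translational

three_pair = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
corridor = [[1, 0, 0], [-1, 0, 0], [0, 0, 1], [0, 0, -1]]  # two walls, floor, ceiling
one_pair = [[0, 0, 1]]

for name, normals in [("3-pair", three_pair), ("corridor", corridor), ("1-pair", one_pair)]:
    print(name, degenerate_dof(normals))
```

The corridor case has four planes but only two independent normals, so the translation along the corridor axis stays unobservable, while a single plane leaves one rotational and two translational DoF free.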

For that purpose, we adopted the second moment matrix, in which the rank and ratio of eigenvalues indicate the number of linearly independent plane correspondences and distinctiveness among independent correspondences, respectively.

Let us assume that there are $m$ corresponding planes ($i = 1, \ldots, m$) between two consecutive frames from left to right. In the left coordinate frame, a stack of the unit normals ($\hat{n}_i$) of these planes is defined as $N = [\hat{n}_1, \ldots, \hat{n}_m]$. Here, the second moment matrix ($M$) is constituted by

$$M = \frac{1}{m} N N^{\top} = \frac{1}{m} \sum_{i=1}^{m} \hat{n}_i \hat{n}_i^{\top}. \qquad (1)$$

To detect the rank and the ratio of its eigenvalues, let us conduct the eigenvalue decomposition as

$$M = V \Lambda V^{\top}, \qquad (2)$$

where $V$ is the orthonormal square matrix whose columns are eigenvectors and $\Lambda$ is the diagonal matrix whose elements are the associated eigenvalues ($\lambda_1, \lambda_2, \lambda_3$). For convenient representation, eigenvectors are sorted according to their eigenvalues in descending order ($\lambda_1 \geq \lambda_2 \geq \lambda_3$). These eigenvalues are equal to or greater than 0 because $M$ is a positive semi-definite matrix.

Now, let us exploit the ratio of eigenvalues ($\lambda_2/\lambda_1$). Its purpose is to discriminate the effective rank. Note that real and false 2-pair correspondences can be distinguished by evaluating this ratio, as its value tends to be close to 0 (i.e., $\lambda_2/\lambda_1 \approx 0$) for false cases but higher than a certain threshold for real cases.
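
The eigenvalue-ratio test can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the second moment matrix is assumed to be averaged over the normals (the ratios do not depend on this scaling), and the threshold value `1e-3` is a placeholder chosen for the example.

```python
import numpy as np

def second_moment(normals):
    """Averaged second moment matrix of m unit normals given as (m, 3)."""
    N = np.asarray(normals, dtype=float).T          # shape (3, m)
    return N @ N.T / N.shape[1]

def effective_rank(normals, ratio_threshold):
    """Count eigenvalues whose ratio to the largest one exceeds the threshold."""
    eigvals = np.linalg.eigvalsh(second_moment(normals))[::-1]  # descending
    eigvals = np.clip(eigvals, 0.0, None)           # remove tiny negative round-off
    ratios = eigvals / eigvals[0]
    return int(np.sum(ratios > ratio_threshold)), ratios

threshold = 1e-3                                    # placeholder value
tilt = np.deg2rad(1.0)
real_pair = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]      # two orthogonal walls
false_pair = [[0.0, 0.0, 1.0],                      # one plane seen twice,
              [0.0, np.sin(tilt), np.cos(tilt)]]    # split by 1 degree of noise

rank_real, ratios_real = effective_rank(real_pair, threshold)
rank_false, ratios_false = effective_rank(false_pair, threshold)
print(rank_real, rank_false)
```

For two unit normals separated by an angle θ, the ratio of the second to the first eigenvalue equals tan²(θ/2), which is why nearly parallel normals produce a ratio close to 0.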

Finally, the remaining task is to select a proper threshold considering the sensor noise level. For the Kinect v2, it has been shown that its depth distortion yields a fluctuation error within ±6 mm [27]. With this amount of distortion, the rotational covariance between poses is evaluated to be 4° for our plane-based method. In accordance with this empirical analysis, let us assume that there are two unit normals $\hat{n}_1$ and $\hat{n}_2$ whose included angle is 4°. Then, the eigenvalues of the second moment matrix for $\hat{n}_1$ and $\hat{n}_2$ can be calculated as

$$\lambda_1 = \frac{1 + \cos 4^{\circ}}{2} \approx 9.988 \times 10^{-1}, \qquad \lambda_2 = \frac{1 - \cos 4^{\circ}}{2} \approx 1.2 \times 10^{-3}. \qquad (3)$$

Here, note that the dimensionality of the second moment matrix is reduced from 3 to 2 because the number of samples ($\hat{n}_1$, $\hat{n}_2$) is smaller than the dimension of the vector space ($\mathbb{R}^3$). Thus, using these eigenvalues in Equation (3), we set the threshold of the ratio to be $1.2 \times 10^{-3}$ ($\approx 1.2 \times 10^{-3} / 9.988 \times 10^{-1}$).
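
A short numerical check of this threshold is given below. It assumes that the second moment matrix is averaged over the two normals, an assumption made here because it reproduces the 9.988 mantissa quoted above; the particular normals are arbitrary apart from their 4° separation.

```python
import numpy as np

angle = np.deg2rad(4.0)
n1 = np.array([0.0, 0.0, 1.0])
n2 = np.array([np.sin(angle), 0.0, np.cos(angle)])  # 4 degrees away from n1

# Averaged second moment matrix of the two normals (assumed normalization)
M = (np.outer(n1, n1) + np.outer(n2, n2)) / 2.0
eigvals = np.sort(np.linalg.eigvalsh(M))[::-1]      # descending order
lam1, lam2, lam3 = eigvals
ratio = lam2 / lam1

print(f"lambda_1 = {lam1:.4e}, lambda_2 = {lam2:.4e}, ratio = {ratio:.4e}")
```

The two non-zero eigenvalues come out at roughly 0.9988 and 0.0012, the third is zero up to round-off, and the ratio agrees with tan²(2°).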

2.2. Compensation Method for Degenerate Rotation

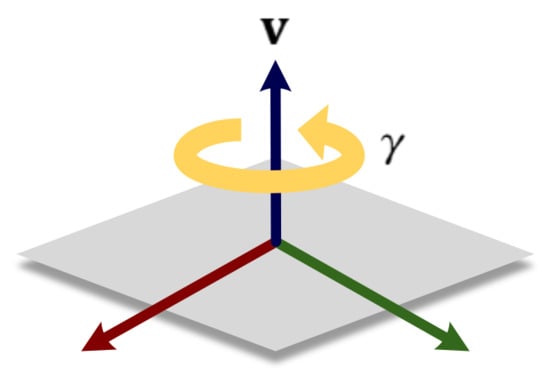

In the 1-pair correspondence case, as shown in Figure 3, the amount of rotation about the normal to the plane ($\hat{v}_1$) cannot be estimated, where $\hat{v}_1$ corresponds to the one effective eigenvector of the second moment matrix in Equation (2). Here, IMU measurements are used only to compensate for this ill-conditioned component of the state, which cannot be estimated from the features. For the other, well-conditioned components, whose values can be estimated from the features, IMU measurements are not utilized because they are less accurate than those of the features.

Figure 3.

Illustration of rotational singularity under the constraint of 1-pair correspondence.

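Before looking into the mathematical details, let us state some quaternion definitions. A unit quaternion ($q$) for the relative rotation is represented by

$$q = \begin{bmatrix} q_0 \\ \mathbf{q}_v \end{bmatrix}, \qquad (4)$$

where $q_0$ and $\mathbf{q}_v$ are the scalar and vector parts, respectively. The conjugate of $q$ is

$$q^{*} = \begin{bmatrix} q_0 \\ -\mathbf{q}_v \end{bmatrix}. \qquad (5)$$

The product of two quaternions $p$ and $q$ is

$$p \otimes q = \begin{bmatrix} p_0 q_0 - \mathbf{p}_v \cdot \mathbf{q}_v \\ p_0 \mathbf{q}_v + q_0 \mathbf{p}_v + \mathbf{p}_v \times \mathbf{q}_v \end{bmatrix}, \qquad (6)$$

where · is the dot product and × is the cross product.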
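
These quaternion operations can be sketched in a few lines. The code assumes the scalar-first ordering and the Hamilton product, and it rotates a vector as q ⊗ v ⊗ q*; the function names and these conventions are assumptions for illustration rather than statements about the implementation used in the experiments.

```python
import numpy as np

def q_conj(q):
    """Conjugate: keep the scalar part, negate the vector part."""
    return np.array([q[0], -q[1], -q[2], -q[3]])

def q_mul(p, q):
    """Hamilton product of two scalar-first quaternions."""
    p0, pv = p[0], p[1:]
    q0, qv = q[0], q[1:]
    scalar = p0 * q0 - pv @ qv
    vector = p0 * qv + q0 * pv + np.cross(pv, qv)
    return np.concatenate(([scalar], vector))

def q_rotate(q, v):
    """Rotate a 3-vector v by the unit quaternion q (assumed q * v * q^-1)."""
    v_quat = np.concatenate(([0.0], v))
    return q_mul(q_mul(q, v_quat), q_conj(q))[1:]

# 90-degree rotation about the z-axis applied to the x-axis
half = np.deg2rad(90.0) / 2.0
q_z90 = np.array([np.cos(half), 0.0, 0.0, np.sin(half)])
rotated = q_rotate(q_z90, np.array([1.0, 0.0, 0.0]))
identity = q_mul(q_z90, q_conj(q_z90))
print(rotated, identity)
```

Under these conventions the x-axis is mapped to the y-axis, and a unit quaternion multiplied by its conjugate gives the identity quaternion.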

The main idea of this subsection is to set the relative rotation by projecting the IMU’s estimate so that it becomes parallel to the plane. In other words, this idea is a hybrid of two different estimates. One is derived from the plane-based estimation: it keeps the plane constraint but has an uncertain value in the degenerate direction. The other is derived from the IMU: it does not necessarily keep the plane constraint but has an accurate value (at least for the given short time interval).

Now, let us denote the IMU prediction to be and the amount of rotation that projects parallel to the plane to be . Here, can be derived from two normal vectors, and . They are updated normal vectors by IMU () and plane information (), respectively:

Now, can be calculated by projecting parallel to in the shortest arc length as
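
A minimal sketch of such a shortest-arc projection is given below under assumed notation: `q_imu` is the IMU-predicted relative rotation, `n_left` and `n_right` are the matched unit normals in the two frames, and a rotation acts on a vector as q ⊗ v ⊗ q*. All names, the composition order, and the synthetic numbers are assumptions for illustration and are not taken from the paper's equations.

```python
import numpy as np

def q_conj(q):
    return np.array([q[0], -q[1], -q[2], -q[3]])

def q_mul(p, q):
    """Hamilton product of two scalar-first quaternions."""
    scalar = p[0] * q[0] - p[1:] @ q[1:]
    vector = p[0] * q[1:] + q[0] * p[1:] + np.cross(p[1:], q[1:])
    return np.concatenate(([scalar], vector))

def q_rotate(q, v):
    return q_mul(q_mul(q, np.concatenate(([0.0], v))), q_conj(q))[1:]

def axis_angle(axis, deg):
    half = np.deg2rad(deg) / 2.0
    return np.concatenate(([np.cos(half)], np.sin(half) * np.asarray(axis, float)))

def shortest_arc(a, b):
    """Unit quaternion rotating unit vector a onto unit vector b by the smallest angle."""
    a, b = a / np.linalg.norm(a), b / np.linalg.norm(b)
    c = float(a @ b)
    if c < -1.0 + 1e-12:  # antiparallel: any axis perpendicular to a works
        helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
        axis = np.cross(a, helper)
        return np.concatenate(([0.0], axis / np.linalg.norm(axis)))
    q = np.concatenate(([1.0 + c], np.cross(a, b)))  # half-angle identity
    return q / np.linalg.norm(q)

def compensate_rotation(q_imu, n_left, n_right):
    """Keep the IMU rotation, corrected just enough to satisfy the plane constraint."""
    n_imu = q_rotate(q_imu, n_left)          # normal as predicted by the IMU
    dq = shortest_arc(n_imu, n_right)        # minimal correction onto the plane normal
    return q_mul(dq, q_imu)

# Synthetic case: true motion is a 30-degree yaw about a floor normal;
# the IMU prediction carries an extra 3-degree tilt error about x.
n_left = np.array([0.0, 0.0, 1.0])
q_true = axis_angle([0, 0, 1], 30.0)
n_right = q_rotate(q_true, n_left)
q_imu = q_mul(axis_angle([1, 0, 0], 3.0), q_true)
q_fixed = compensate_rotation(q_imu, n_left, n_right)
print(q_rotate(q_fixed, n_left), n_right)
```

In this synthetic case the correction removes the tilt error while the rotation about the plane normal, which the plane cannot observe, is taken entirely from the IMU.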

2.3. Further Processes

Although the orientation in the degeneracy direction is compensated for by Equation (9), the translation in that direction remains degenerate. Pathak et al. [17] proposed a method that corrects the uncertain translation by using the IMU’s acceleration measurements. We adopted this method for translation.

For loop-closure, we used a 3D Gestalt descriptor based method proposed in [25]. Finally, for back-end optimization, we implemented IRLS (iteratively reweighted least squares) that excluded less accurate outliers as in [26].
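
To illustrate the general idea of iteratively reweighted least squares, the following sketch fits a line in the presence of gross outliers. It is a generic example, not the back-end of [26]: Huber weights are used as a stand-in because the weighting function is not specified in this text, and the function name, data, and parameter values are hypothetical.

```python
import numpy as np

def irls(A, b, delta=1.0, iters=20):
    """Solve A x ~ b robustly by IRLS with Huber weights (assumed weighting)."""
    x = np.linalg.lstsq(A, b, rcond=None)[0]         # ordinary least-squares start
    for _ in range(iters):
        abs_r = np.maximum(np.abs(A @ x - b), 1e-12)
        # Residuals inside delta keep weight 1; larger ones are down-weighted.
        w = np.where(abs_r <= delta, 1.0, delta / abs_r)
        sw = np.sqrt(w)
        x = np.linalg.lstsq(A * sw[:, None], b * sw, rcond=None)[0]
    return x

# Synthetic line y = 2 x + 1 with three gross outliers (hypothetical data)
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 50)
A = np.column_stack([t, np.ones_like(t)])
b = 2.0 * t + 1.0 + 0.05 * rng.standard_normal(t.size)
b[[5, 20, 40]] += 30.0

x_ols = np.linalg.lstsq(A, b, rcond=None)[0]
x_irls = irls(A, b)
print("OLS :", x_ols)
print("IRLS:", x_irls)
```

The ordinary least-squares fit is pulled towards the outliers, whereas the reweighted fit stays close to the underlying line because large residuals receive small weights.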

3. Experiments

For performance validation, SLAM experiments were conducted with two different sensor systems, as shown in Figure 4. One (Figure 4a) is a low-cost sensor system with a Kinect v2 and a low-cost IMU (CH-UM7: ±4° for dynamic pitch/roll accuracy, ±8° for dynamic yaw accuracy). The other (Figure 4b) is a high-cost sensor system [28] with a Velodyne LiDAR (HDL-32E) and a MicroStrain IMU (3DM-GX3-45: ±2° for dynamic pitch/roll/yaw accuracy). The purpose of the high-cost system is to provide the ground truth.

Figure 4.

Two different sensor systems used in the experiment. (a) a hand-held low-cost sensor system; (b) a backpack high-cost sensor system. The low-cost system is rigidly attached to the high-cost system for rigorous validation.

For data acquisition, an operator carried the system (as shown in Figure 5) through a small building with narrow pathways. The operator traveled 62 m, and a total of 410 place indices were generated. As a result, a graph was built in which 255, 145, and 9 edges had 3-pair, 2-pair, and 1-pair plane correspondences, respectively.

Figure 5.

The sensor system used to acquire several datasets.
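
The edge counts reported above can be cross-checked with simple arithmetic. The sketch assumes one edge between each pair of consecutive place indices, so that 410 indices give 409 edges; the variable names are illustrative only.

```python
edges = {"3-pair": 255, "2-pair": 145, "1-pair": 9}

total_edges = sum(edges.values())
degenerate_edges = edges["2-pair"] + edges["1-pair"]   # fewer than three plane pairs
degenerate_share = 100.0 * degenerate_edges / total_edges

print(total_edges, degenerate_edges, f"{degenerate_share:.1f}%")  # 409 154 37.7%
```

The resulting share of about 37.7% matches the proportion of degenerate poses quoted in the conclusions.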

Given the information, two methods were applied. One was a plane-IMU integrated (pl-IMU) method, which was an extension of the plane-based method [17] in a way that IMU information could be embedded. The other was our proposed method, which was identical to the previous method except that 1-pair corresponding degeneracy was detected and compensated for.

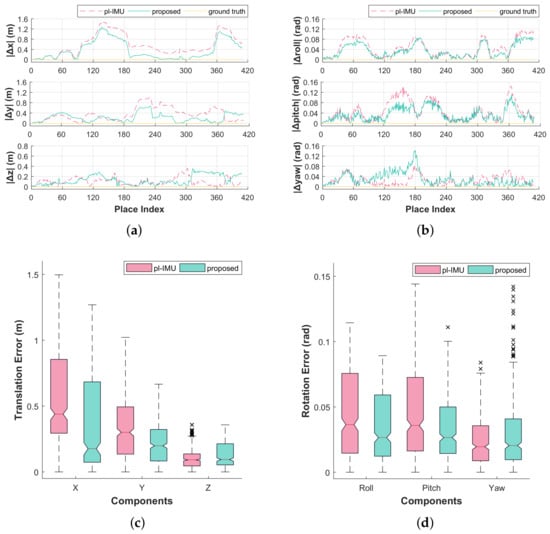

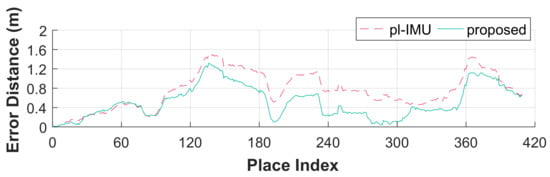

As shown in Figure 6, the accuracy of all components increased except for the z and yaw components, whose median values are similar in both methods. Figure 7 shows the error distances of the two methods relative to the ground truth for the 3D pose states. The average errors were significantly reduced by the proposed method, as shown in Table 1.

Figure 6.

Graphs and box-plots for local pose error compared to the ground truth for each compensation method (pl-IMU and our proposed method). (a) local errors of translational components; (b) local errors of rotational components; (c) box-plot of translation error; (d) box-plot of rotation error.

Figure 7.

Local error distances compared to the ground truth for each compensation method.

Table 1.

Average errors of 3D poses for two different methods.

Here, note that compensating the rotation decreased both translation and rotation errors. This is a natural consequence, as the rotational updates allow graph optimization to increase the overall map accuracy.

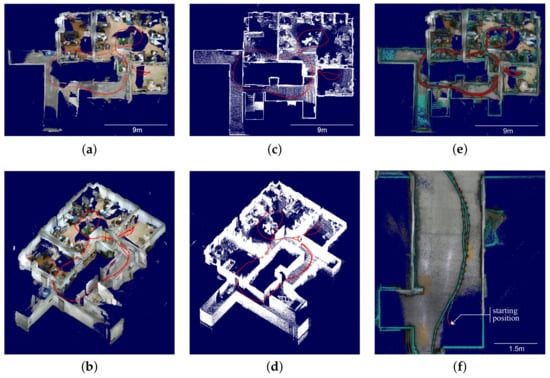

The performance can also be verified by 3D mapping. The map created by the proposed method showed high-quality consistency (Figure 8a,b), similar to that of the ground truth (Figure 8c,d). Figure 8e shows a top view in which two trajectories, corresponding to the ground truth (green line) and the estimate of the proposed method (red dots), are superimposed. In Figure 8f, it can be verified that the loop closure is successfully conducted and that the accumulated errors in Figure 8e are significantly reduced.

Figure 8.

Trajectories and mapping results with truncated ceiling. (a) optimized map top view; (b) optimized map bird’s-eye view; (c) ground truth map top view; (d) ground truth map bird’s-eye view; (e) superimposed map top view; (f) superimposed map close-up view. (a,b) display RGB-D map optimized by the proposed method using low-cost sensor system. (c,d) show ground truth acquired using high-cost sensor system. (e,f) show superimposed map between the optimized map and the ground truth, where green-colored point cloud and trajectory are the ground truth. To improve depth visualization, an eye-dome lighting shader is applied to (e,f) where the point cloud of ground truth is randomly subsampled by 10%.

4. Conclusions

This paper proposed a degeneracy detection and compensation method for orientations, for use when 3D SLAM algorithms rely on plane features. This degeneracy is induced when fewer than three plane-pairs are detected for two consecutive poses. It correlates significantly with the narrowness of the sensor’s field of view or of the target environment. Our experiment showed that, when a 550 m² indoor space was mapped with a Kinect v2, 37.7% of data acquisition poses were in a degeneracy situation.

The proposed method detected degeneracy by using the rank of the second moment matrix constituted by plane normals. To compensate for orientations affected by the degeneracy, IMU orientations were projected in a direction that was orthogonal to the non-degenerate orientations.

Acknowledgments

This work was partly supported by the Brain Korea 21 Plus Project of School of Electrical Engineering, Korea University, a National Research Foundation of Korea (NRF) grant (No. 2011-0031648) funded by the Ministry of Science and ICT (MSIT), a Korea Evaluation Institute of Industrial Technology (KEIT) grant (No. 10073166) funded by the Ministry of Trade, Industry and Energy (MOTIE), and a grant (No. 17NSIP-B135746-01) funded by the Ministry of Land, Infrastructure and Transport (MOLIT), Republic of Korea.

Author Contributions

HyunGi Cho and Nakju Doh conceived the ideas and concepts. HyunGi Cho and Suyong Yeon implemented these ideas. HyunGi Cho and Hyunga Choi performed the experiments. HyunGi Cho, Suyong Yeon, and Nakju Doh analyzed the data. HyunGi Cho and Nakju Doh wrote the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cho, H.G.; Yeon, S.; Choi, H.; Doh, N.L. 3D Pose Estimation with One Plane Correspondence using Kinect and IMU. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1970–1975.

- Tardioli, D.; Villarroel, J. Odometry-less Localization in Tunnel-like Environments. In Proceedings of the 2014 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Espinho, Portugal, 14–15 May 2014; pp. 65–72.

- Johannsson, H.; Kaess, M.; Fallon, M.; Leonard, J.J. Temporally Scalable Visual SLAM using a Reduced Pose Graph. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 54–61.

- Rosell-Polo, J.R.; Gregorio, E.; Gené, J.; Llorens, J.; Torrent, X.; Arnó, J.; Escolà, A. Kinect v2 Sensor-Based Mobile Terrestrial Laser Scanner for Agricultural Outdoor Applications. IEEE/ASME Trans. Mechatron. 2017, 22, 2420–2427.

- Aghili, F.; Su, C.Y. Robust Relative Navigation by Integration of ICP and Adaptive Kalman Filter Using Laser Scanner and IMU. IEEE/ASME Trans. Mechatron. 2016, 21, 2015–2026.

- Panahandeh, G.; Jansson, M. Vision-Aided Inertial Navigation Based on Ground Plane Feature Detection. IEEE/ASME Trans. Mechatron. 2014, 19, 1206–1215.

- Aghili, F.; Salerno, A. Driftless 3D Attitude Determination and Positioning of Mobile Robots By Integration of IMU With Two RTK GPSs. IEEE/ASME Trans. Mechatron. 2013, 18, 21–31.

- Raposo, C.; Lourenço, M.; Antunes, M.; Barreto, J.P. Plane-based Odometry using an RGB-D Camera. In Proceedings of the British Machine Vision Conference (BMVC 2013), Bristol, UK, 9–13 September 2013.

- Taguchi, Y.; Jian, Y.D.; Ramalingam, S.; Feng, C. Point-plane SLAM for Hand-held 3D Sensors. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 5182–5189.

- Li, R.; Liu, Q.; Gui, J.; Gu, D.; Hu, H. A Novel RGB-D SLAM Algorithm Based on Points and Plane-Patches. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016; pp. 1348–1353.

- Proença, P.F.; Gao, Y. Probabilistic Combination of Noisy Points and Planes for RGB-D Odometry. In Proceedings of the Towards Autonomous Robotic Systems 18th Annual Conference, Guildford, UK, 19–21 July 2017; pp. 340–350.

- Sun, Q.; Yuan, J.; Zhang, X.; Sun, F. RGB-D SLAM in Indoor Environments with STING-Based Plane Feature Extraction. IEEE/ASME Trans. Mechatron. 2017.

- Ma, K.; Zhu, J.; Dodd, T.J.; Collins, R.; Anderson, S.R. Robot Mapping and Localisation for Feature Sparse Water Pipes Using Voids as Landmarks. In Proceedings of the 16th Annual Conference Towards Autonomous Robotic Systems, Liverpool, UK, 8–10 September 2015; pp. 161–166.

- Keivan, N.; Sibley, G. Online SLAM with Any-time Self-calibration and Automatic Change Detection. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5775–5782.

- Tribou, M.J.; Wang, D.W.; Waslander, S.L. Degenerate Motions in Multicamera Cluster SLAM with Non-Overlapping Fields of View. Image Vis. Comput. 2016, 50, 27–41.

- Zhou, Y.; Yan, F.; Zhou, Z. Probabilistic Depth Map Model for Rotation-Only Camera Motion in Semi-Dense Monocular SLAM. In Proceedings of the 2016 International Conference on Virtual Reality and Visualization (ICVRV), Hangzhou, China, 24–26 September 2016; pp. 8–15.

- Pathak, K.; Birk, A.; Vaskevicius, N.; Poppinga, J. Fast Registration based on Noisy Planes with Unknown Correspondences for 3D Mapping. IEEE Trans. Robot. 2010, 26, 424–441.

- Zhang, J.; Kaess, M.; Singh, S. On Degeneracy of Optimization-based State Estimation Problems. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 809–816.

- Rong, Z.; Michael, N. Detection and Prediction of Near-Term State Estimation Degradation via Online Nonlinear Observability Analysis. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 28–33.

- Choi, D.G.; Bok, Y.; Kim, J.S.; Kweon, I.S. Extrinsic Calibration of 2-D Lidars Using Two Orthogonal Planes. IEEE Trans. Robot. 2016, 32, 83–98.

- Kang, J.; Doh, N.L. Full-DOF Calibration of a Rotating 2-D LIDAR With a Simple Plane Measurement. IEEE Trans. Robot. 2016, 32, 1245–1263.

- Wiedemeyer, T. IAI Kinect2. 2014–2015. Available online: https://github.com/code-iai/iai_kinect2 (accessed on 12 January 2018).

- Yeon, S.; Jun, C.; Choi, H.; Kang, J.; Yun, Y.; Doh, N.L. Robust-PCA-based Hierarchical Plane Extraction for Application to Geometric 3D Indoor Mapping. Int. J. Ind. Robot 2014, 41, 203–212.

- Yun, Y.; Yeon, S.; Jun, C.; Choi, H.; Kang, J.; Doh, N.L. RANSAC-based Data Association Algorithm for the application to 3D SLAM. In Proceedings of the Korea Robotics Society Annual Conference, Gangwon-do, Korea, 21–23 June 2012; pp. 109–111.

- Bosse, M.; Zlot, R. Place Recognition using Keypoint Voting in Large 3D Lidar Datasets. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 2677–2684.

- Zlot, R.; Bosse, M. Efficient Large-scale Three-dimensional Mobile Mapping for Underground Mines. J. Field Robot. 2014, 31, 758–779.

- Fankhauser, P.; Bloesch, M.; Rodriguez, D.; Kaestner, R.; Hutter, M.; Siegwart, R. Kinect v2 for Mobile Robot Navigation: Evaluation and Modeling. In Proceedings of the 2015 International Conference on Advanced Robotics (ICAR), Istanbul, Turkey, 27–31 July 2015; pp. 388–394.

- Lee, K.; Ryu, S.H.; Yeon, S.; Cho, H.G.; Jun, C.; Kang, J.; Choi, H.; Hyeon, J.; Baek, I.; Jung, W.; et al. Accurate Continuous Sweeping Framework in Indoor Spaces With Backpack Sensor System for Applications to 3D Mapping. IEEE Robot. Autom. Lett. 2016, 1, 316–323.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).