New Dark Area Sensitive Tone Mapping for Deep Learning Based Traffic Sign Recognition

Abstract

1. Introduction

2. Related Works

2.1. Traffic Sign Detection

2.2. Detection Methods

2.3. Tone Mapping

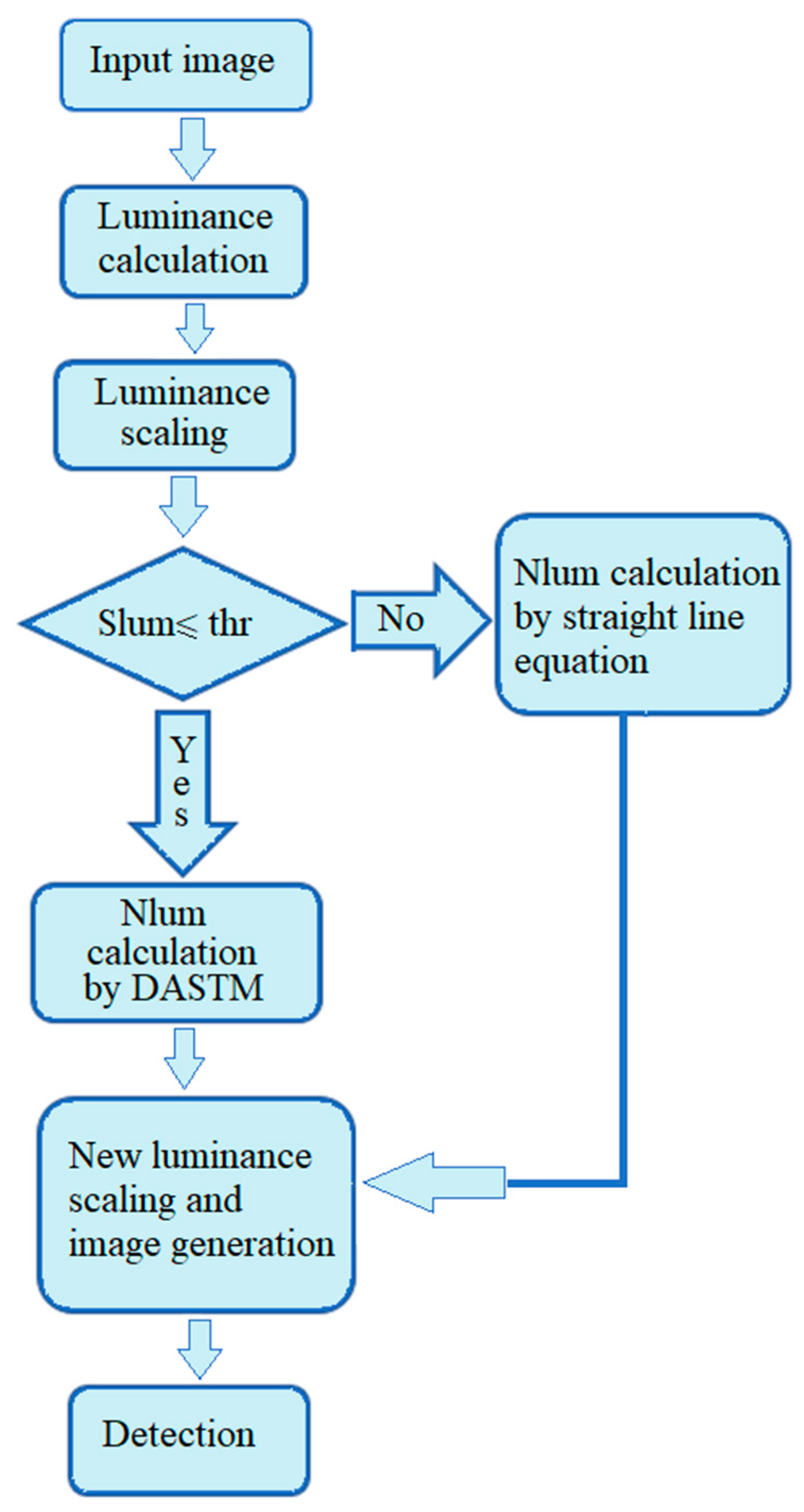

3. Proposed Intelligent Traffic Sign Recognition (ITSR) System

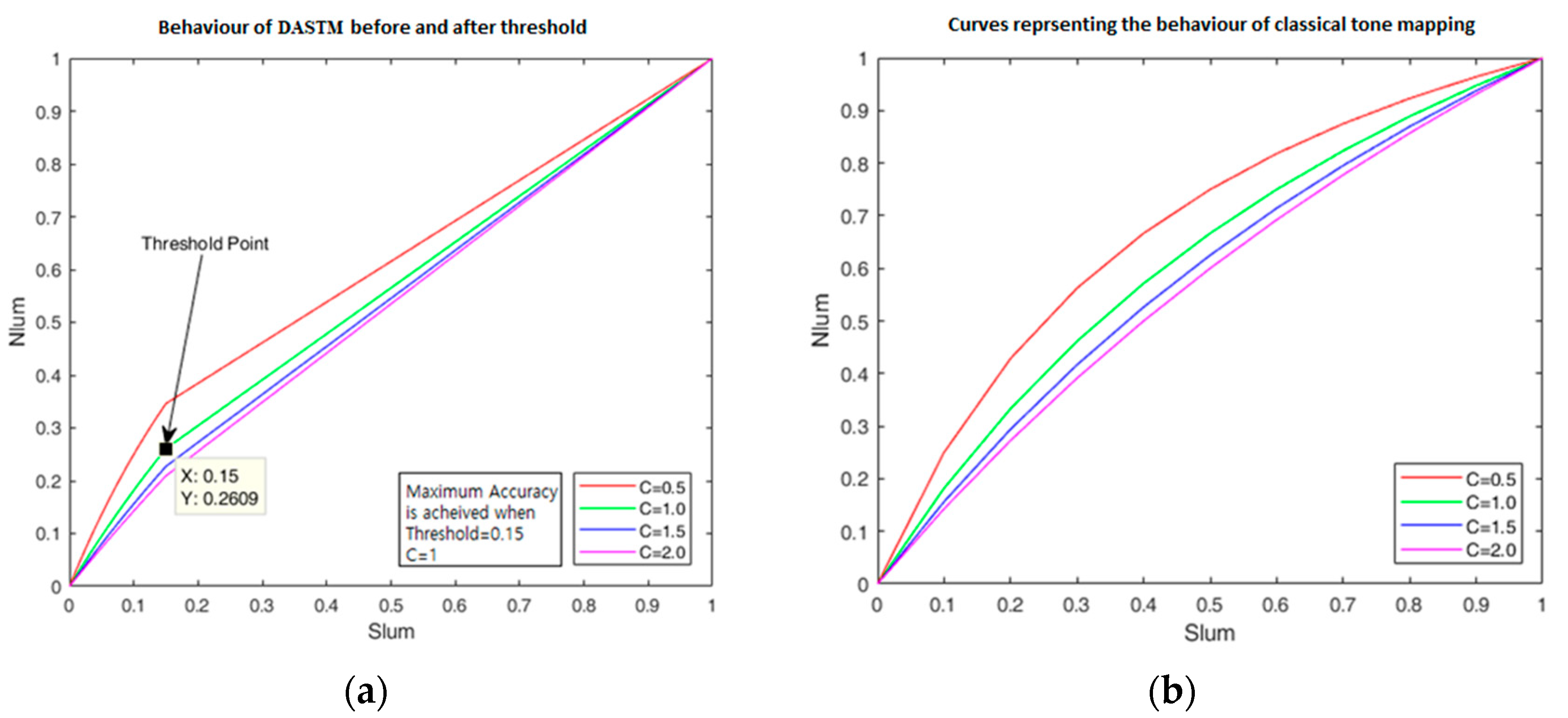

3.1. Failure of Classical Tone Mapping and Need of DASTM

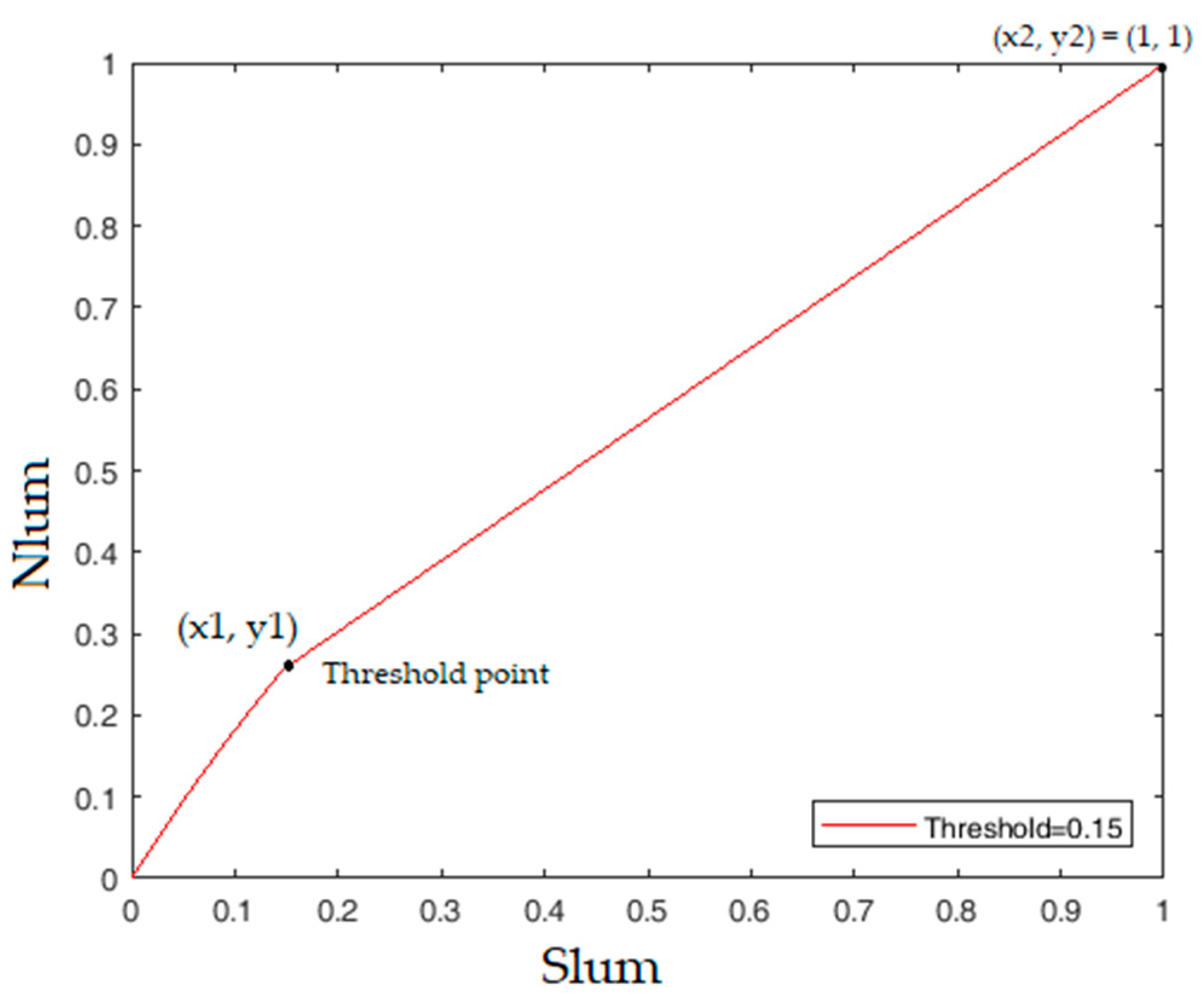

3.2. Dark Area Sensitive Tone Mapping (DASTM)

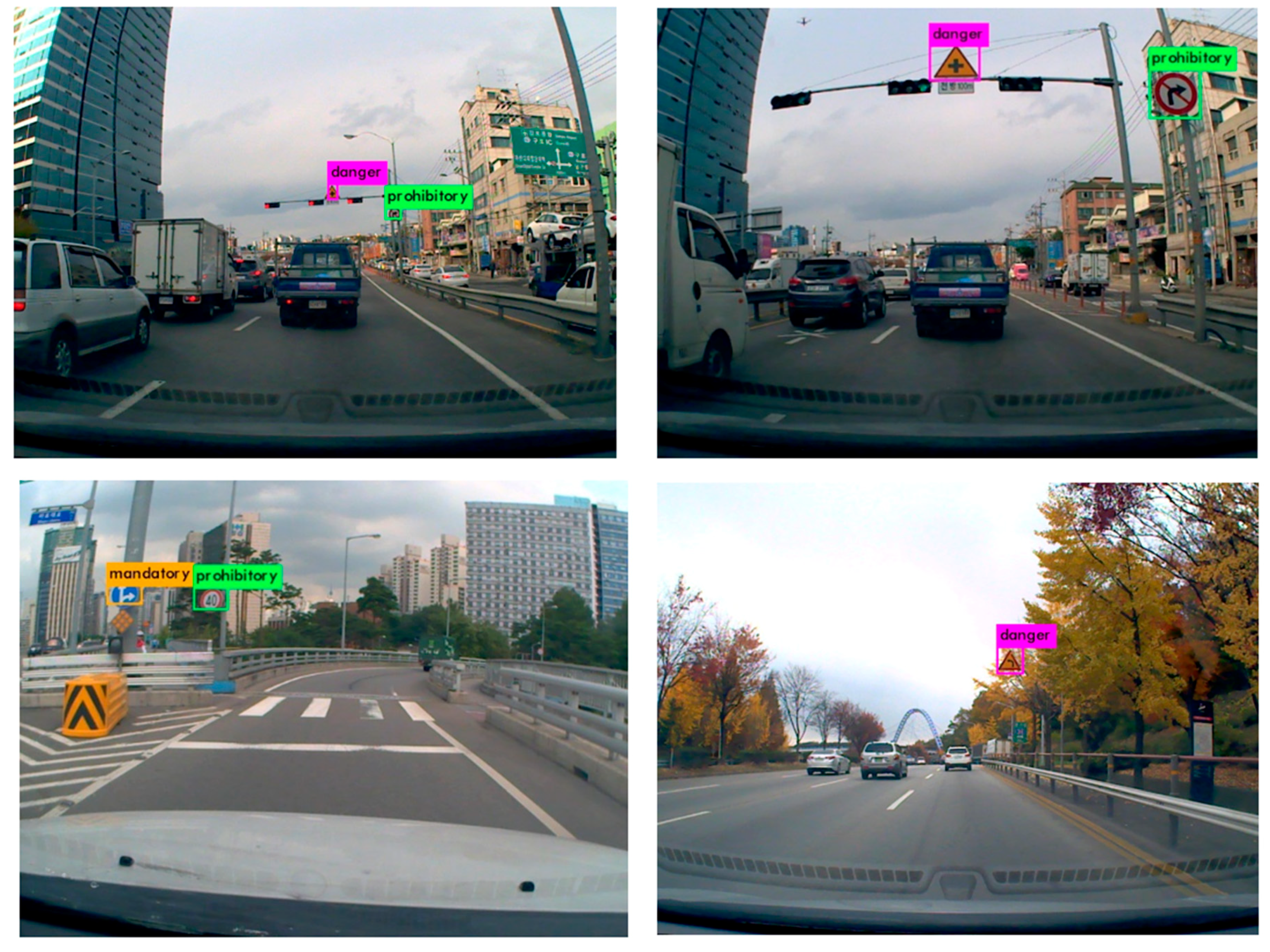

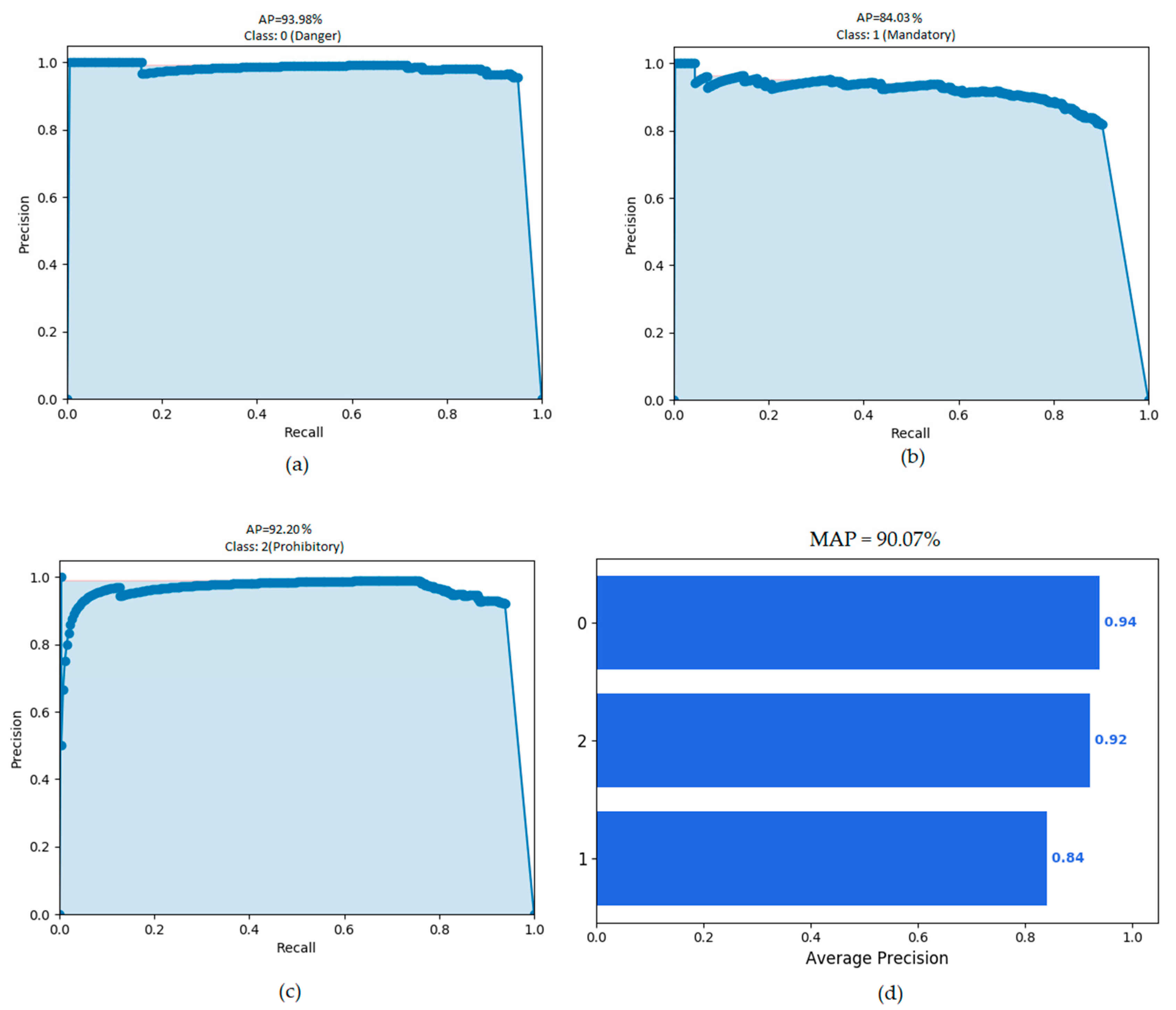

4. Experimental Results

4.1. Making New Training Dataset

4.2. Training and Testing

4.3. Evaluation Method

5. Discussion on Experimental Results

Possible Future Research

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Reinhard, E.; Stark, M.; Shirley, P.; Ferwerda, J. Photographic Tone Reproduction for Digital Images. In Proceedings of the 29th International Conference on Computer Graphics and Interactive Techniques (SIGGRAPH), San Antonio, TX, USA, 21–26 July 2002; pp. 267–276.

- Shin, H.; Yu, T.; Ismail, Y.; Saeed, B. Rendering high dynamic range images by using integrated global and local processing. Opt. Eng. 2011, 50, 117002.

- Rehman, Y.; Riaz, I.; Shin, H. D-patches: Effective traffic sign detection with occlusion handling. IET Comput. Vis. 2017, 11, 368–377.

- YOLOv3. Available online: https://pjreddie.com/yolo/ (accessed on 22 September 2018).

- Manocha, P.; Kumar, A.; Khan, J.A.; Shin, H. Korean Traffic Sign Detection Using Deep Learning; ISOCC: Daegu, Korea, November 2018.

- Houben, S.; Stallkamp, J.; Salmen, J.; Schlipsing, M.; Igel, C. Detection of traffic signs in real-world images: The German Traffic Sign Detection Benchmark. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013.

- Dollar, P.; Appel, R.; Belongie, S.; Perona, P. Fast Feature Pyramids for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Zhu, Z.; Liang, D.; Zhang, S.; Huang, X.; Li, B.; Hu, S. Traffic-Sign Detection and Classification in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Traffic Sign Detection Using You Only Look Once Framework. Available online: http://cs231n.stanford.edu/reports/2016/pdfs/263_Report.pdf (accessed on 27 September 2018).

- Belgium TS Dataset. Available online: https://btsd.ethz.ch/shareddata/ (accessed on 27 September 2018).

- Eichner, M.L.; Breckon, T.P. Integrated Speed Limit Detection and Recognition from Real-Time Video. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven University of Technology, Eindhoven, The Netherlands, 4–6 June 2008.

- Ellahyani, A.; Ansari, M.E.; Jaafari, I.E. Traffic Sign Detection and Recognition using Features Combination and Random Forests. Int. J. Adv. Comput. Sci. Appl. 2016, 7, 686–693.

- Kumar, A.D.; Karthika, R.; Parameswaran, L. Novel Deep Learning Model for Traffic Sign Detection Using Capsule Networks. Int. J. Pure Appl. Math. 2018, 118, 4543–4548.

- Liu, C.; Chang, F.; Chen, Z.; Liu, D. Fast Traffic Sign Recognition via High-Contrast Region Extraction and Extended Sparse Representation. IEEE Trans. Intell. Transp. Syst. 2016, 17, 79–92.

- Zuo, Z.; Yu, K.; Zhou, Q.; Wang, X.; Li, T. Traffic Sign Detection Based on Faster R-CNN. In Proceedings of the IEEE 37th International Conference on Distributed Computing Systems Workshops, Atlanta, GA, USA, 5–8 June 2017.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Available online: https://arxiv.org/abs/1506.01497 (accessed on 27 September 2018).

- Zeng, Z.; Li, Z.; Cheng, D.; Zhang, H.; Zhan, K.; Yang, Y. Two-Stream Multirate Recurrent Neural Network for Video-Based Pedestrian Reidentification. IEEE Trans. Ind. Inform. 2018, 14, 3179–3186.

- Chang, X.; Nie, F.; Wang, S.; Yang, Y. Compound Rank-k Projection for Bilinear Analysis. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1502–1513.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. Available online: https://arxiv.org/pdf/1612.08242.pdf (accessed on 27 September 2018).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016.

- IMAGENET. Available online: http://image-net.org/ (accessed on 27 September 2018).

- Drago, F.; Myszkowski, K.; Annen, T.; Chiba, N. Adaptive Logarithmic Mapping for Displaying High Contrast Scenes. Comput. Graph. Forum 2003, 22, 419–426.

- CUDA Processing Flow. Available online: https://commons.wikimedia.org/wiki/File:CUDA_processing_flow_ (accessed on 27 October 2018).

- GitHub—Cartucho/mAP: Mean Average Precision. Available online: https://github.com/Cartucho/mAP (accessed on 27 September 2018).

| Tone Mapping Method | Dataset | Maximum MAP |
|---|---|---|
| DASTM | KTSD | 90.07% |
| Classical | KTSD | 83.35% |
| DASTM | GTSBD | 100% |
| Classical | GTSBD | 95.26% |

| Detection Method | MAP on KTSD ± STD | MAP on GTSBD ± STD |
|---|---|---|
| ITSR (DASTM) | 90.07% ± 4.33 | 100% ± 0 |
| D-Patches | 79.60% ± 5.82 | 100% ± 0 |
| YOLOv3 | 73.94% ± 6.12 | 96.53% ± 2.31 |
| TS detector | 86.61% ± 5.33 | 97.82% ± 1.91 |

| Detection Method | Resolution | Time to Process 1 Frame |
|---|---|---|
| ITSR (DASTM) | 800 × 600 | 0.134 s (on GPU) |
| D-Patches | 800 × 600 | 2.2 s (on CPU) |
| YOLOv3 | 800 × 600 | 0.050 s (on GPU) |
| TS detector | 800 × 600 | 0.059 s (on GPU) |
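As a reading aid, the per-frame times in the table above can be converted to frame rates by taking the reciprocal. The sketch below does only that arithmetic on the reported values; the names `FRAME_TIMES_S` and `frames_per_second` are illustrative and do not come from the paper. Note that the D-Patches time was measured on CPU while the others were measured on GPU, so the rates are not a like-for-like comparison.

```python
# Per-frame processing times in seconds, copied from the timing table.
# The dictionary and function names are illustrative, not from the paper.
FRAME_TIMES_S = {
    "ITSR (DASTM)": 0.134,  # GPU
    "D-Patches": 2.2,       # CPU
    "YOLOv3": 0.050,        # GPU
    "TS detector": 0.059,   # GPU
}


def frames_per_second(seconds_per_frame: float) -> float:
    """Convert a per-frame processing time into a frame rate."""
    if seconds_per_frame <= 0:
        raise ValueError("seconds_per_frame must be positive")
    return 1.0 / seconds_per_frame


if __name__ == "__main__":
    for method, seconds in FRAME_TIMES_S.items():
        print(f"{method}: {frames_per_second(seconds):.2f} frames/s")
```

At 0.134 s per frame, ITSR with DASTM works out to roughly 7.5 frames per second at 800 × 600.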

| C | Threshold | Nlum at Point Slum = thr | MAP |
|---|---|---|---|
| 0.5 | 0.05 | 0.13 | 82.40% |
| 0.5 | 0.10 | 0.25 | 89.71% |
| 0.5 | 0.15 | 0.34 | 84.50% |
| 0.5 | 0.20 | 0.42 | 83.33% |
| 1 | 0.10 | 0.18 | 86.16% |
| 1 | 0.15 | 0.26 | 90.07% |
| 1 | 0.20 | 0.33 | 84.50% |
| 1.5 | 0.10 | 0.15 | 84.50% |
| 1.5 | 0.15 | 0.22 | 87.50% |
| 1.5 | 0.20 | 0.29 | 87.20% |
| 2 | 0.10 | 0.14 | 84.01% |
| 2 | 0.15 | 0.20 | 86.02% |
| 2 | 0.20 | 0.27 | 88.06% |
| 2 | 0.25 | 0.33 | 84.50% |
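The parameter sweep in the table above is easier to read once the best row overall and the best threshold for each value of C are pulled out. The following is a minimal sketch that only re-tabulates the reported numbers; the `SweepRow` type and the helper names are hypothetical, and nothing here reimplements DASTM itself.

```python
from typing import Dict, List, NamedTuple


class SweepRow(NamedTuple):
    """One row of the (C, threshold) sweep table; field names are illustrative."""
    c: float
    threshold: float
    nlum_at_thr: float
    map_percent: float


# Values copied from the sweep table above.
SWEEP: List[SweepRow] = [
    SweepRow(0.5, 0.05, 0.13, 82.40),
    SweepRow(0.5, 0.10, 0.25, 89.71),
    SweepRow(0.5, 0.15, 0.34, 84.50),
    SweepRow(0.5, 0.20, 0.42, 83.33),
    SweepRow(1.0, 0.10, 0.18, 86.16),
    SweepRow(1.0, 0.15, 0.26, 90.07),
    SweepRow(1.0, 0.20, 0.33, 84.50),
    SweepRow(1.5, 0.10, 0.15, 84.50),
    SweepRow(1.5, 0.15, 0.22, 87.50),
    SweepRow(1.5, 0.20, 0.29, 87.20),
    SweepRow(2.0, 0.10, 0.14, 84.01),
    SweepRow(2.0, 0.15, 0.20, 86.02),
    SweepRow(2.0, 0.20, 0.27, 88.06),
    SweepRow(2.0, 0.25, 0.33, 84.50),
]


def best_setting(rows: List[SweepRow]) -> SweepRow:
    """Return the row with the highest MAP."""
    return max(rows, key=lambda row: row.map_percent)


def best_per_c(rows: List[SweepRow]) -> Dict[float, SweepRow]:
    """Return, for each value of C, the row with the highest MAP."""
    best: Dict[float, SweepRow] = {}
    for row in rows:
        if row.c not in best or row.map_percent > best[row.c].map_percent:
            best[row.c] = row
    return best


if __name__ == "__main__":
    top = best_setting(SWEEP)
    print(f"Best overall: C={top.c}, threshold={top.threshold}, MAP={top.map_percent}%")
    for c, row in sorted(best_per_c(SWEEP).items()):
        print(f"C={c}: threshold={row.threshold}, Nlum={row.nlum_at_thr}, MAP={row.map_percent}%")
```

Read this way, the best row for each C has an Nlum value between 0.22 and 0.27 at the threshold point, with the overall maximum of 90.07% at C = 1 and a threshold of 0.15.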

| C | MAP |
|---|---|
| 0.5 | 82.91% |
| 1.0 | 83.20% |
| 1.5 | 83.35% |
| 2.0 | 82.88% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, J.A.; Yeo, D.; Shin, H. New Dark Area Sensitive Tone Mapping for Deep Learning Based Traffic Sign Recognition. Sensors 2018, 18, 3776. https://doi.org/10.3390/s18113776
