Abstract

This paper considers the parameter estimation problem in sensor networks under non-stationary environments, where the unknown parameter vector is a time-varying sequence. To improve estimation performance, this paper proposes a novel diffusion logarithm-correntropy algorithm for each node in the network. The algorithm applies both a logarithm operation and the correntropy criterion to the estimation error. Moreover, if the error grows because of the non-stationary environment, the algorithm responds immediately by taking relatively steeper steps, so it reduces the error more quickly. The tracking performance of the proposed logarithm-correntropy algorithm is analyzed. Finally, experiments verify the validity of the proposed algorithm and compare it with other recently proposed parameter estimation algorithms.

1. Introduction

Sensor networks are useful tools for disaster relief management, target localization and tracking, and environmental monitoring [1,2,3,4]. Distributed parameter estimation plays an essential role in sensor networks [5,6,7]. Its objective is to estimate parameters of interest from noisy measurements through cooperation between nodes. Distributed strategies are well suited to parameter estimation in sensor networks because of their robustness against imperfections, low complexity, and low power demands.

Among these distributed schemes, in the incremental strategy [8], a cyclic path is defined over the nodes and data are processed in a cyclic manner through the network until optimization is achieved. However, determining a cyclic path that runs across all nodes is generally a challenging (NP-hard) task to perform. In the consensus strategy [9], vanishing step sizes are used to ensure that nodes can reach consensus and converge to the same optimizer in steady-state. In the diffusion strategy, information is processed locally and simultaneously at all nodes. The processed data are diffused through a real-time sharing mechanism that ripples through the network continuously [10,11]. The diffusion strategies are particularly attractive because they are robust [12,13,14,15], flexible, and fully distributed compared with incremental and consensus strategies, so we adopt diffusion strategies in this paper.

Most prior work considers the stationary case, in which nodes collaboratively estimate a fixed parameter vector over sensor networks [10,16]. In the real world, however, non-stationary conditions are common. In this work, we mainly consider parameter estimation in the non-stationary case, where the parameter is time-varying. The observation data are nonlinear and non-Gaussian, since they may be disturbed by changing communication links or outliers in non-stationary environments.

Inspired by the differentiability and mathematical tractability of logarithmic functions, we introduce a logarithmic function into the error cost function [17]. Moreover, correntropy is a nonlinear measure of similarity between two random variables [18] and a robust optimality criterion that has been used successfully in non-Gaussian signal processing. To make the error cost function more suitable for non-stationary environments, we propose a diffusion signal processing framework with a logarithm-correntropy cost function for the parameter estimation problem; the framework gradually adjusts the cost function during optimization according to the size of the error.

A. Related Works

The tracking behavior of a wide range of adaptive networks under non-stationary conditions was thoroughly investigated in [19,20,21,22]. Under stationary conditions, a diffusion minimum average p-power (dLMP) algorithm based on the p-norm error criterion was proposed to estimate parameters in wireless sensor networks [23]. Proportionate-type normalized least mean square algorithms were proposed in [24] to estimate the mean-square weight deviations under zero-mean stationary measurement noise. The diffusion normalized least-mean-square (dNLMS) algorithm was proposed for parameter estimation in a distributed network [25], where its variable step size was obtained by minimizing the mean-square deviation to achieve a fast convergence rate. The gradient-descent total least-squares (dTLS) algorithm is a stochastic-gradient adaptive filtering algorithm that compensates for errors in both the input and output data [26]; its steady-state analysis was inspired by the energy-conservation approach to the performance analysis of adaptive filters. When the measurement noise involves impulsive interference, Ni, Chen, and Chen [27] designed a diffusion sign-error LMS (dSE-LMS) algorithm for parameter estimation. The tracking performance of a variable step-size diffusion LMS algorithm in non-stationary environments was considered in [28], but that work did not derive closed-form expressions for the steady-state mean-square deviation (MSD) or excess mean-square error (EMSE) of the network; consequently, its theory and simulations do not match well. To date, the performance of distributed estimation algorithms has been studied predominantly under stationary conditions, and these algorithms may perform worse in non-stationary environments.

To find the optimal adaptation step sizes over networks, Abdolee, Vakilian, and Champagne [29] formulated a constrained nonlinear optimization problem and solved it iteratively with a log-barrier Newton algorithm. Using the optimal step size at each node improves the performance of diffusion least-mean squares (DLMS) in non-stationary signal environments. In contrast, the proposed algorithm responds immediately to larger errors by taking relatively steeper steps, so it can perform well in non-stationary environments without finding the optimal step size at each node.

B. Our Contributions and Organization

To further improve estimation performance in non-stationary environments over sensor networks, a new algorithm is needed. In this paper, the random-walk model is adopted to describe non-stationary environments, and we propose the logarithm-correntropy algorithm for parameter estimation in sensor networks under such environments. The algorithm applies both a logarithm operation and the correntropy criterion to the estimation error. If the error grows because of the non-stationary environment, the algorithm responds immediately by taking relatively steeper steps, so it reduces the error more quickly. We analyze the tracking performance of the proposed algorithm and present simulation results to evaluate it.

The rest of this paper is organized as follows. Section 2 describes the estimation problem in a non-stationary environment. Section 3 introduces the adapt-then-combine (ATC) diffusion logarithmic-correntropy algorithm. Section 4 presents the tracking performance analysis of the proposed algorithm. Simulation results are presented in Section 5, and conclusions are drawn in Section 6.

Notation: In what follows, bold letters denote random variables and non-bold letters denote their realizations. The operators $(\cdot)^T$ and $\mathbb{E}[\cdot]$ denote transposition and expectation, respectively. $I$ denotes an identity matrix, $\mathbb{1}$ is an all-unity vector, and $|\cdot|$ is the absolute value of a scalar.

2. Estimation Problem in a Non-Stationary Environment

Consider a network with N nodes (sensors) deployed to observe physical phenomena and specific events in a given environment. For practical applications, it is necessary to analyze parameter estimation under non-stationary conditions, since one challenge in real-world applications is the non-stationary nature of the underlying parameters. This requires a data model with a time-varying parameter. In this paper, we use the random walk model in [19] to describe the non-stationary condition.

Assumption 1.

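(Random Walk Model): The parameter vector $\mathbf{w}^o_i$ varies according to the following model:

$$\mathbf{w}^o_i = \mathbf{w}^o_{i-1} + \mathbf{q}_i, \qquad (1)$$

where $\mathbf{w}^o_i$ is a random variable with a constant mean, $i$ is the time index, and $\mathbf{q}_i$ is a zero-mean random sequence with covariance matrix $Q$.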
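
The random walk in Assumption 1 can be simulated in a few lines. The sketch below is illustrative only: it assumes an isotropic covariance $Q = \sigma_q^2 I$ (the value of $Q$ is not specified here), and the function name `random_walk` and the parameter `q_std` are hypothetical.

```python
import random

def random_walk(w0, q_std, num_steps, seed=0):
    """Hypothetical helper: simulate w_i = w_{i-1} + q_i with q_i ~ N(0, q_std^2 I)."""
    rng = random.Random(seed)
    w = list(w0)
    trajectory = [list(w)]
    for _ in range(num_steps):
        # independent zero-mean Gaussian increment for every component
        w = [wj + rng.gauss(0.0, q_std) for wj in w]
        trajectory.append(list(w))
    return trajectory

traj = random_walk([1.0, -0.5], q_std=0.01, num_steps=1000)
print(traj[-1])
```

Because every increment has zero mean, the expected parameter stays at `w0` while its spread grows with the time index, which is the constant-mean behavior stated in Assumption 1.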

Assumption 2.

The sequence is independent of and for all k and i.

Assumption 3.

The initial conditions are independent of all , , , and .

At every time i, every node k can only exchange information with the nodes in its neighborhood $\mathcal{N}_k$ (including node k itself), and it takes a scalar measurement according to:

$$\mathbf{d}_k(i) = \mathbf{u}_{k,i}\,\mathbf{w}^o_i + \mathbf{v}_k(i), \qquad (2)$$

where $\mathbf{u}_{k,i}$ denotes the random regression input vector and $\mathbf{v}_k(i)$ is Gaussian noise with zero mean and variance $\sigma^2_{v,k}$. The problem is to estimate the unknown time-varying vector $\mathbf{w}^o_i$ at each node k from the collected measurements. The objective of the network is to find, in a distributed manner, the estimate $w$ that minimizes the MSE cost function:

The cost function of the global network can be described as:

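
For illustration only, the measurement model in Equation (2) and a sample version of the network MSE cost can be sketched as follows. Assumptions not taken from the paper: Gaussian regressors with a per-node standard deviation `sigma_u[k]`, a parameter held fixed during the snapshot, and the global cost taken as the sum over nodes of the empirical mean-square error. All names and numeric values are hypothetical.

```python
import random

def dot(u, w):
    return sum(a * b for a, b in zip(u, w))

def measure(w_true, sigma_u, sigma_v, rng):
    """One scalar measurement d = u w + v at a single node."""
    u = [rng.gauss(0.0, sigma_u) for _ in w_true]  # zero-mean Gaussian regressor
    v = rng.gauss(0.0, sigma_v)                    # zero-mean Gaussian noise
    return u, dot(u, w_true) + v

def global_mse(w, samples):
    """Sum over nodes of the empirical MSE; samples[k] lists the (u, d) pairs of node k."""
    return sum(sum((d - dot(u, w)) ** 2 for u, d in node) / len(node) for node in samples)

rng = random.Random(0)
w_true = [0.5, -1.0]
sigma_u = [1.0, 0.8, 1.2]   # hypothetical per-node regressor std
sigma_v = [0.1, 0.2, 0.05]  # hypothetical per-node noise std
samples = [[measure(w_true, su, sv, rng) for _ in range(500)]
           for su, sv in zip(sigma_u, sigma_v)]
print(global_mse(w_true, samples), global_mse([0.0, 0.0], samples))
```

At the true parameter the cost reduces to roughly the sum of the node noise variances, and any other candidate gives a larger value.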
The optimization problem in Equation (3) can be solved by the diffusion strategies proposed in [30,31]. In these strategies, the estimate of each node is generated through a fixed combination strategy, which assigns different weights to the estimates of node k's neighbors to minimize the local function as follows:

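where $c_{lk}$ is the combination coefficient. For simplicity and good performance, we use the Metropolis rule in this work, which is described as:

$$c_{lk} = \begin{cases} \dfrac{1}{1+\max(n_k,\,n_l)}, & l \in \mathcal{N}_k \setminus \{k\},\\ 1 - \sum_{m \in \mathcal{N}_k \setminus \{k\}} c_{mk}, & l = k,\\ 0, & \text{otherwise}, \end{cases}$$

where $n_k$ is the degree of node k (the number of nodes connected to node k). The combining coefficients also satisfy $\mathbb{1}^T C = \mathbb{1}^T$ and $C\,\mathbb{1} = \mathbb{1}$, where $C$ is an $N \times N$ matrix with non-negative real entries $c_{lk}$.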
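
A sketch of the Metropolis rule, assuming the common form $c_{lk} = 1/(1+\max(n_k, n_l))$ for neighbors $l \neq k$, with the self-weight chosen so that each column sums to one. `metropolis_weights` is a hypothetical helper, and the adjacency matrix is a plain 0/1 list of lists without self-loops.

```python
def metropolis_weights(adjacency):
    """Hypothetical helper: Metropolis combination matrix C = [c_lk].

    adjacency is a symmetric 0/1 list of lists with zeros on the diagonal;
    the degree n_k counts the neighbours of node k, excluding k itself.
    """
    n = len(adjacency)
    degree = [sum(row) for row in adjacency]
    C = [[0.0] * n for _ in range(n)]
    for k in range(n):
        for l in range(n):
            if l != k and adjacency[l][k]:
                C[l][k] = 1.0 / (1 + max(degree[k], degree[l]))
        # self-weight: whatever is left so that column k sums to one
        C[k][k] = 1.0 - sum(C[l][k] for l in range(n) if l != k)
    return C

path = [[0, 1, 0],
        [1, 0, 1],
        [0, 1, 0]]
for row in metropolis_weights(path):
    print([round(c, 3) for c in row])
```

For the path graph 0-1-2 the resulting matrix is symmetric with non-negative entries, so its rows and columns all sum to one and both combination conditions hold.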

3. Diffusion Logarithmic-Correntropy Algorithm

In the non-stationary case, the parameter is time-varying. We propose a new logarithmic-correntropy method to solve the parameter estimation problem. Since nodes in sensor networks have access to the observed data, we can solve Equation (3) by exploiting node cooperation through distributed diffusion learning.

In this paper, we are inspired by recent developments in information theoretic learning (ITL) related to the “logarithmic cost function” and “correntropy”-based approaches [17,32]. The logarithmic function is differentiable, which makes it mathematically tractable, so we use it as an efficient cost function in the adaptive algorithm. In this framework, we introduce an error cost function built on the logarithmic function:

$$J\big(e_k(i)\big) = F\big(e_k(i)\big) - \frac{1}{\alpha}\ln\Big(1 + \alpha F\big(e_k(i)\big)\Big), \qquad (7)$$

where $\alpha > 0$ is a small design parameter and $F(e_k(i))$ is a conventional cost function of the estimation error at node k. The estimation error is $e_k(i) = d_k(i) - u_{k,i}w_{k,i-1}$. In this paper, we use the correntropy criterion to formulate the conventional cost function $F(\cdot)$. Correntropy is a similarity measure based on the ITL criterion. Given two random variables X and Y, the correntropy between them is defined as [33]:

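$$V_\sigma(X,Y) = \mathbb{E}\big[\kappa_\sigma(X-Y)\big] = \iint \kappa_\sigma(x-y)\,\mathrm{d}F_{XY}(x,y),$$

where $\kappa_\sigma(\cdot)$ is a continuous, symmetric, positive-definite function with bandwidth $\sigma$, also called a Mercer kernel, $\mathbb{E}$ is the expectation operator, and $F_{XY}(x,y)$ is the joint distribution function of X and Y. This paper mainly uses the Gaussian kernel:

$$\kappa_\sigma(x-y) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\!\left(-\frac{(x-y)^2}{2\sigma^2}\right).$$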
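
In practice, the expectation in the correntropy definition is replaced by a sample average over paired observations. The sketch below does this with the normalized Gaussian kernel; the function names and the bandwidth value are illustrative assumptions.

```python
import math

def gaussian_kernel(x, sigma):
    """Normalised Gaussian kernel kappa_sigma(x)."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (math.sqrt(2.0 * math.pi) * sigma)

def correntropy(xs, ys, sigma):
    """Sample estimate of V_sigma(X, Y) = E[kappa_sigma(X - Y)] from paired samples."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal length")
    return sum(gaussian_kernel(x - y, sigma) for x, y in zip(xs, ys)) / len(xs)

clean = correntropy([0.0, 0.1, -0.2, 0.05], [0.0, 0.0, 0.0, 0.0], sigma=1.0)
outlier = correntropy([0.0, 0.1, -0.2, 1e6], [0.0, 0.0, 0.0, 0.0], sigma=1.0)
print(clean, outlier)
```

Each term is bounded by the kernel peak, so a single gross outlier lowers the estimate by at most 1/L of that peak for L samples, whereas a squared-error criterion would be dominated by it. This boundedness is what makes correntropy robust to impulsive noise.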

For each node k, based on the correntropy criterion, the instantaneous conventional cost function is:

In non-stationary conditions over networks, the communication among nodes is subject to link noise, and it is natural that the observation vectors are affected by noise. The total least squares (TLS) method for estimation can have desirable performance by reducing the noise effect from both the observation vector and the data matrix [34]. We briefly explain the TLS method as follows:

Consider the linear parameter estimation problem $\mathbf{U}w \approx \mathbf{d}$, where $\mathbf{U}$ is the data matrix, $\mathbf{d}$ is the observation vector, and $w$ is the unknown parameter vector. The least squares (LS) approach assumes that the observation vector is noisy while the data matrix is noiseless, whereas the total least squares (TLS) approach assumes that both the observation vector and the data matrix are noisy [35]. The LS approach estimates the unknown parameter vector by minimizing the sum of squared residuals:

$$J_{\mathrm{LS}}(w) = \sum_{j} \big(d_j - \mathbf{u}_j w\big)^2,$$

where $\mathbf{u}_j$ is the $j$-th row of $\mathbf{U}$ and $d_j$ is the $j$-th entry of $\mathbf{d}$, while the TLS approach minimizes a sum of weighted squared residuals:

$$J_{\mathrm{TLS}}(w) = \sum_{j} \frac{\big(d_j - \mathbf{u}_j w\big)^2}{1 + \|w\|^2}.$$

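
For a scalar parameter, both criteria have closed-form minimizers, which makes their difference easy to see. The sketch below is a hypothetical illustration, not the paper's estimator: `ls_slope` minimizes the LS cost, and `tls_slope` minimizes the TLS cost $\sum_j (d_j - u_j w)^2/(1+w^2)$, whose stationary points solve $S_{ud}w^2 + (S_{uu}-S_{dd})w - S_{ud} = 0$ with $S_{uu}=\sum_j u_j^2$, $S_{dd}=\sum_j d_j^2$, $S_{ud}=\sum_j u_j d_j$.

```python
import math

def ls_slope(u, d):
    """Scalar LS: minimise sum_j (d_j - w u_j)^2."""
    return sum(a * b for a, b in zip(u, d)) / sum(a * a for a in u)

def tls_slope(u, d):
    """Scalar TLS: minimise sum_j (d_j - w u_j)^2 / (1 + w^2)."""
    s_uu = sum(a * a for a in u)
    s_dd = sum(b * b for b in d)
    s_ud = sum(a * b for a, b in zip(u, d))
    if s_ud == 0:
        raise ValueError("degenerate data: sum of u_j * d_j is zero")
    # Stationary points solve s_ud*w^2 + (s_uu - s_dd)*w - s_ud = 0;
    # the root with the same sign as s_ud is the minimiser.
    return ((s_dd - s_uu) + math.sqrt((s_dd - s_uu) ** 2 + 4.0 * s_ud ** 2)) / (2.0 * s_ud)

u = [1.0, 2.1, 2.9, 4.2]
d = [1.1, 1.9, 3.2, 3.9]
print(ls_slope(u, d), 1.0 / ls_slope(d, u))    # two different lines
print(tls_slope(u, d), 1.0 / tls_slope(d, u))  # the same line
```

Because TLS treats both variables as noisy, swapping the roles of $u$ and $d$ yields exactly the reciprocal slope; the two LS fits do not share this symmetry.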
From the matrix algebra viewpoint, the total least squares (TLS) approach is a refinement of the LS method when there are errors in both the observation vector and the data matrix. Inspired by the desirable features of the TLS method, to make the logarithm-correntropy method more suitable for non-stationary environments, we rewrite the conventional cost function as:

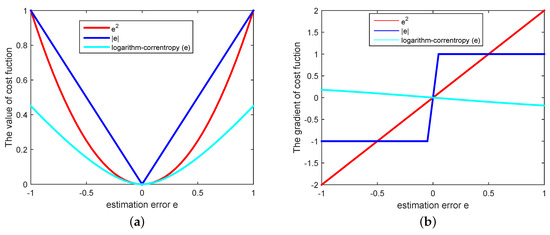

To demonstrate the advantages of the proposed logarithm-correntropy method, we consider other stochastic cost functions, such as the least-mean-square cost $e_k^2(i)$ and the absolute-difference cost $|e_k(i)|$. Figure 1 compares these cost functions with the proposed logarithm-correntropy cost function. The proposed cost function is less sensitive to tiny perturbations of the error and shows comparable steepness for large errors. Furthermore, the logarithm-correntropy cost function benefits from mapping the original input space into a potentially higher-dimensional “feature space”, where linear methods can be employed. In particular, if the error grows because of the non-stationary environment, the algorithm responds immediately by taking relatively steeper steps. Thus, the proposed algorithm reduces the error more quickly in time while taking more gradual steps in the parameter space.

Figure 1.

(a) Values of the cost functions; (b) gradients of the cost functions.
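
The qualitative behavior in Figure 1 can be reproduced with the logarithmic framework of [17], $J = F - \frac{1}{\alpha}\ln(1+\alpha F)$. The sketch below assumes, purely for illustration, the conventional cost $F(e) = e^2$; the paper's correntropy/TLS-based $F$ is not reproduced, and all function names are hypothetical. By the chain rule, $dJ/de = F'(e)\,\alpha F/(1+\alpha F)$.

```python
import math

def log_cost(e, alpha, F=lambda e: e * e):
    """Logarithmic wrapper J(e) = F(e) - ln(1 + alpha F(e)) / alpha around a conventional cost F."""
    f = F(e)
    return f - math.log1p(alpha * f) / alpha

def log_cost_grad(e, alpha, F=lambda e: e * e, dF=lambda e: 2.0 * e):
    """dJ/de = F'(e) * alpha F(e) / (1 + alpha F(e))."""
    f = F(e)
    return dF(e) * alpha * f / (1.0 + alpha * f)

def lms_grad(e):
    return 2.0 * e  # derivative of e^2

def abs_grad(e):
    return math.copysign(1.0, e) if e != 0 else 0.0  # (sub)gradient of |e|

for e in (0.01, 0.1, 1.0, 10.0, 100.0):
    print(f"e={e:>6}: LMS {lms_grad(e):9.4f}  abs {abs_grad(e):4.1f}  log {log_cost_grad(e, 1.0):9.4f}")
```

With this stand-in, the gradient is roughly $2\alpha e^3$ for small errors, much flatter than the LMS gradient, so tiny perturbations barely move the estimate; for large errors it approaches the LMS gradient $2e$, matching the "less sensitive to tiny errors, comparable steepness for large errors" behavior described for Figure 1.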

Given the data model, all nodes observe data generated by the data model in Equation (2). Collaboration between nodes is naturally expected to benefit a distributed sensor network, since neighboring nodes can share information with each other as permitted by the network topology. Therefore, combining Equations (7) and (13), we define the following global cost function, which allows all nodes in the sensor network to adapt in a distributed manner:

To develop the distributed diffusion logarithm-correntropy algorithm in non-stationary environments over sensor networks, we can build the following new diffusion Logarithm-Correntropy Algorithm (dLCA) local cost function at every node k as:

where $\mathbf{w}_{k,i}$ is the local estimate obtained by node k at time i, $e_l(i)$ is the estimation error at node l, $l \in \mathcal{N}_k$ denotes any neighbor of node k, and $c_{lk}$ are combining coefficients, which also follow the Metropolis rule.

To minimize the local cost in Equation (15), it is natural to use the steepest-descent method. Taking the derivative of Equation (15), we have

where and .

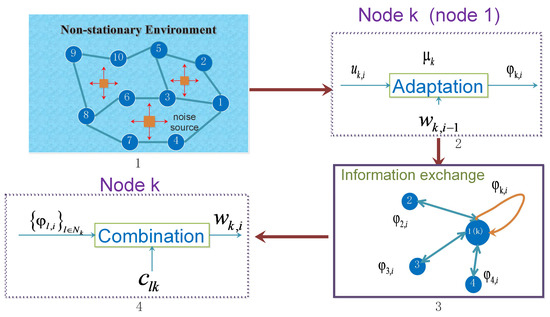

Since nodes in sensor networks have access to the observed data, we can take advantage of node cooperation by introducing a diffusion strategy to estimate the parameter in a fully distributed manner. This paper adopts the Adapt-then-Combine (ATC) scheme of the diffusion strategy. As Figure 2 shows, in the ATC scheme each node first updates its estimate using the observed data (adaptation) and then combines the intermediate estimates received from its immediate neighbors (combination), as follows:

Figure 2.

Adapt-then-Combine (ATC) diffusion strategies. Step 1 depicts a sensor network working in a non-stationary environment. In the adaptation stage (Step 2), each node uses observed data to update its intermediate estimate $\boldsymbol{\psi}_{k,i}$. Step 3 shows the exchange of information between nodes. In the combination stage (Step 4), each node combines the intermediate estimates collected from its neighbors.

(1) Adaptation: To obtain an intermediate estimate, we introduce a step-size parameter $\mu_k$. Each node updates its current estimate of the true parameter with a steepest-descent step, which gives the intermediate estimate:

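(2) Combination: In this step, also called the diffusion step, each node obtains its new estimate by aggregating the intermediate estimates of all its neighbors, including its own:

$$\mathbf{w}_{k,i} = \sum_{l \in \mathcal{N}_k} a_{lk}\,\boldsymbol{\psi}_{l,i},$$

where $a_{lk}$ are the non-negative combination coefficients, $\boldsymbol{\psi}_{l,i}$ is the intermediate estimate of node l, and $\mathcal{N}_k$ is the neighborhood of node k.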

For clarity, we summarize the procedure of the diffusion Logarithm-Correntropy Algorithm (dLCA) in Algorithm 1:

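| Algorithm 1: diffusion Logarithm-Correntropy Algorithm |
| --- |
| Initialize: start with an initial estimate for all l; for each node k, initialize the step-size $\mu_k$ and the cooperative coefficients. |
| for each time instant i: |
| for each node k: |
| Adaptation: compute the intermediate estimate $\boldsymbol{\psi}_{k,i}$ with the steepest-descent update. |
| Communication: transmit the intermediate estimate $\boldsymbol{\psi}_{k,i}$ to all neighbors in $\mathcal{N}_k$. |
| Combination: $\mathbf{w}_{k,i} = \sum_{l \in \mathcal{N}_k} a_{lk}\,\boldsymbol{\psi}_{l,i}$, where $\mathcal{N}_k$ are the neighbor nodes of node k in the communication subnetwork. |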
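
The adapt, communicate, and combine steps of Algorithm 1 can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the exact dLCA gradient is not reproduced, so the adaptation step uses a hypothetical stand-in, namely the logarithmic cost $J = F - \frac{1}{\alpha}\ln(1+\alpha F)$ of [17] with $F(e)=e^2$ evaluated on each node's own data only (the data-sharing coefficients $c_{lk}$ are ignored). `atc_step`, its argument layout, and the two-node example are hypothetical.

```python
import random

def atc_step(W, data, A, mu, alpha):
    """One adapt-then-combine iteration over all nodes (hypothetical helper).

    W[k]:    current estimate of node k (list of floats)
    data[k]: (u, d) pair measured at node k at this time instant
    A[l][k]: combination weight a_lk, non-negative, columns sum to one,
             zero when l is not a neighbour of k
    """
    n = len(W)
    psi = []
    # 1) Adaptation: steepest-descent step on the stand-in log cost with F(e) = e^2
    for k in range(n):
        u, d = data[k]
        e = d - sum(uj * wj for uj, wj in zip(u, W[k]))
        # -dJ/dw = 2 e u * alpha e^2 / (1 + alpha e^2)
        gain = 2.0 * e * alpha * e * e / (1.0 + alpha * e * e)
        psi.append([wj + mu * gain * uj for wj, uj in zip(W[k], u)])
    # 2) Communication is implicit here: every node can read psi[l] of its neighbours.
    # 3) Combination: w_k = sum_l a_lk * psi_l
    dim = len(W[0])
    return [[sum(A[l][k] * psi[l][j] for l in range(n)) for j in range(dim)]
            for k in range(n)]

rng = random.Random(0)
w_true = [1.0, -2.0]
A = [[0.5, 0.5], [0.5, 0.5]]  # two fully connected nodes (hypothetical)
W = [[0.0, 0.0], [0.0, 0.0]]
for _ in range(2000):
    data = []
    for k in range(2):
        u = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        data.append((u, sum(a * b for a, b in zip(u, w_true))))  # noiseless for brevity
    W = atc_step(W, data, A, mu=0.01, alpha=1.0)
print(W)
```

Keeping adaptation and combination as two separate passes mirrors the ATC ordering: every node finishes its local update before any node combines, so all combinations use the same set of intermediate estimates.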

4. Tracking Performance Analysis

The tracking performance of the proposed diffusion logarithm-correntropy algorithm is analyzed in this section. The convergence condition is first studied with the weight-error vector $\tilde{\mathbf{w}}_{k,i}$, which is time-varying under the random walk model:

$$\tilde{\mathbf{w}}_{k,i} = \mathbf{w}^o_i - \mathbf{w}_{k,i}.$$

It was shown in [10] that, under stationary conditions, subtracting $w^o$ from both sides of the update at a node and then taking expectations leads to the following relation:

Then, considering Assumptions 2 and 3 on the random sequence $\mathbf{q}_i$, we observe that $\mathbf{w}^o_i$ has a constant mean, and hence $\mathbb{E}\,\mathbf{w}^o_i = \mathbb{E}\,\mathbf{w}^o_{i-1}$ under the relation in Equation (1). Taking expectations under the non-stationary model in Equation (1), we obtain

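In Equation (21), $\mathbf{q}_i$ is a zero-mean random sequence with covariance matrix $Q$. Our goal is to evaluate the mean-square deviation (MSD) and excess mean-square error (EMSE) of each node, which are defined as:

$$\mathrm{MSD}_k = \lim_{i\to\infty}\mathbb{E}\,\|\tilde{\mathbf{w}}_{k,i}\|^2, \qquad \mathrm{EMSE}_k = \lim_{i\to\infty}\mathbb{E}\,\big|\mathbf{u}_{k,i}\big(\mathbf{w}^o_i - \mathbf{w}_{k,i-1}\big)\big|^2.$$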

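
In simulations, the expectations in the MSD and EMSE are approximated by averaging over Monte Carlo runs (150 in Section 5), and the curves are usually reported in dB. The helpers below are hypothetical and compute the single-run quantities that would be averaged, assuming the standard tracking definitions $\|\mathbf{w}^o_i - \mathbf{w}_{k,i}\|^2$ for the deviation and $|\mathbf{u}_{k,i}(\mathbf{w}^o_i - \mathbf{w}_{k,i-1})|^2$ for the a-priori error power.

```python
import math

def network_msd_db(w_true, estimates):
    """Network MSD in dB for one run: 10*log10 of the node average of ||w_true - w_k||^2."""
    msd = sum(sum((a - b) ** 2 for a, b in zip(w_true, w_k)) for w_k in estimates) / len(estimates)
    return 10.0 * math.log10(msd)

def apriori_error_power(u, w_true, w_prev):
    """|u (w_true - w_prev)|^2 for one node: a single-sample term of the EMSE expectation."""
    ea = sum(uj * (a - b) for uj, a, b in zip(u, w_true, w_prev))
    return ea * ea

print(network_msd_db([1.0, 0.0], [[1.1, 0.0], [0.9, 0.0]]))
print(apriori_error_power([1.0, 2.0], [1.0, 1.0], [0.0, 0.0]))
```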
In the non-stationary case with , based on the definition in Equation (15), is twice continuously differentiable when . Then we obtain the Hessian matrix of , which is defined as .

From Lemma 1 and Theorem 1 in [12], the Hessian is bounded as and .

In Equation (26), as a positive-definite random matrix, is defined as

Applying Jensen’s inequality to Equation (27), the variance of is bounded by

where is a convex function and represents the squared Euclidean norm.

According to [12], substituting Equation (32) into Equation (30), we get

where is a constant. The global MSD is introduced, which leads to

We collect the into matrices , such that . The combination matrix $A$ is left-stochastic, that is, $A^T\mathbb{1} = \mathbb{1}$, where $\mathbb{1}$ denotes the all-ones vector. From Equations (29) and (35), it holds that

where ⪯ denotes element-wise ordering and

In order to ensure the stability of the proposed algorithm in the mean sense, according to Theorem 1 in Reference [12], it should hold that

Since . As , which indicates

where is the norm. When the step-sizes are sufficiently small, we can further conclude that

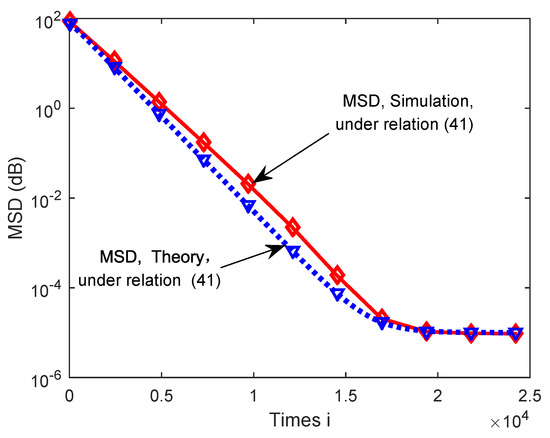

According to the bound in Equation (41), if the step-sizes are sufficiently small, the MSD of each node is , which can be made arbitrarily small.

5. Simulation Results

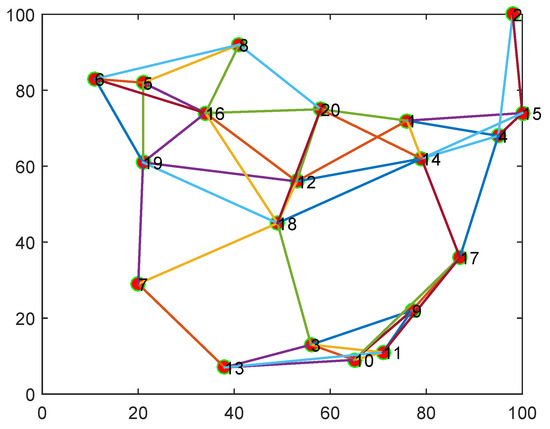

In this section, to verify the performance of the proposed diffusion logarithm-correntropy algorithm, we considered a network consisting of 20 nodes and 50 communication links, whose topology is shown in Figure 3. The sensor nodes were randomly deployed in an area of and the communication distance between nodes was set to 35. All results below were averaged over 150 independent Monte Carlo simulations with randomly generated samples.

Figure 3.

The topology of sensor network with 20 nodes.

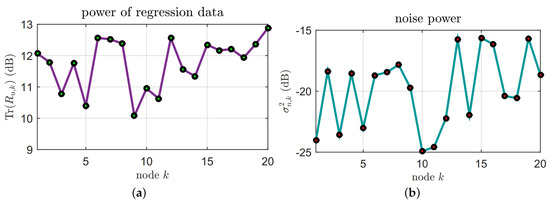

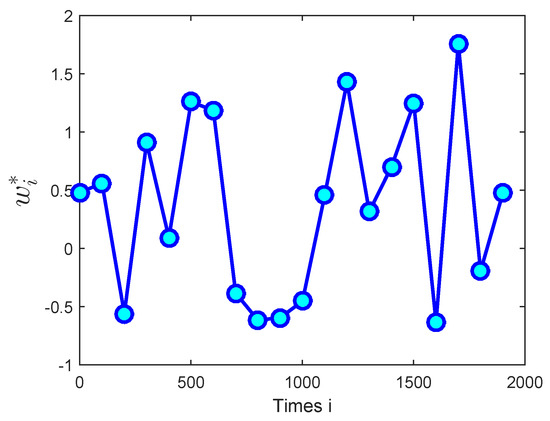

First, we verified the performance of the proposed algorithm in a non-stationary environment over sensor networks with ideal communication links. As Figure 4 shows, the regression inputs are independent and identically distributed (i.i.d.) zero-mean Gaussian vectors with covariance matrices $R_{u,k} = \sigma^2_{u,k} I$, where $\sigma^2_{u,k}$ is the input variance. The background noises are i.i.d. and drawn independently of the regressors. The unknown parameter vector is time-varying, as Figure 5 shows. The fixed step-size = is used in the simulations.

Figure 4.

(a) Regressor statistics; (b) Noise variances.

Figure 5.

The desired time-varying vector, .

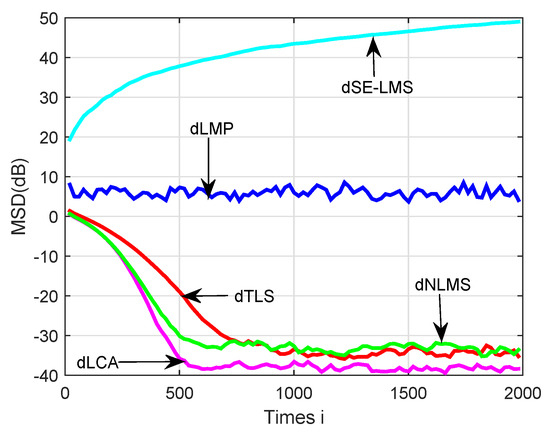

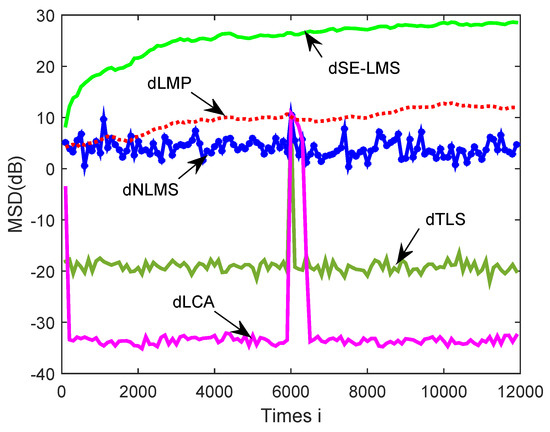

The MSD learning curves are plotted in Figure 6. The proposed dLCA algorithm obtained the fastest convergence rate among the compared dSE-LMS, dLMP, dTLS, and dNLMS algorithms, and it also achieved a smaller network MSD than these algorithms. These results show that the diffusion logarithm-correntropy algorithm exhibited better tracking ability in non-stationary environments than the existing classical algorithms.

Figure 6.

A comparison of simulated MSD learning curves in a non-stationary environment over sensor networks for the diffusion sign-error least-mean-square (dSE-LMS), diffusion minimum average p-power (dLMP), gradient-descent total least-squares (dTLS), diffusion normalized least-mean-square (dNLMS), and diffusion logarithm-correntropy (dLCA) algorithms.

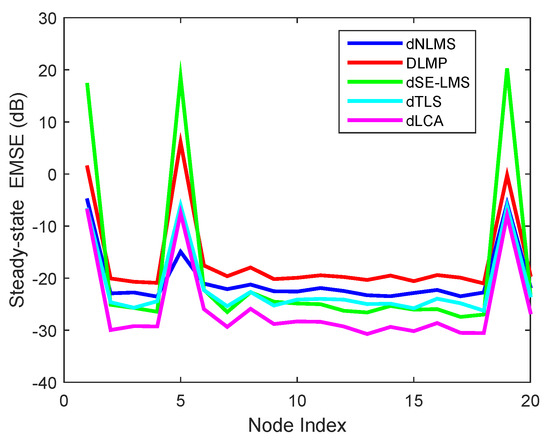

Figure 7 compares the steady-state EMSE performance of the related algorithms at each node of the sensor network. Large differences between the algorithms can be observed at some nodes with low EMSE. The steady-state EMSE values were obtained by averaging over 150 experiments and over 50 time samples after convergence. The proposed algorithm showed better steady-state performance than the other algorithms.
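
The averaging described above can be written as a small helper. It is a hypothetical sketch that assumes each run's EMSE curve is stored on a linear scale and that the last `tail` samples form the post-convergence window; averaging is done before converting to dB, which gives the arithmetic mean of the error power rather than a geometric mean.

```python
import math

def steady_state_db(curves, tail=50):
    """Average learning curves over runs and over their last `tail` samples, then convert to dB.

    curves[r][i] is the linear-scale EMSE (or MSD) of run r at time i.
    """
    values = [v for run in curves for v in run[-tail:]]
    return 10.0 * math.log10(sum(values) / len(values))

runs = [[1.0] * 100 + [0.01] * 50 for _ in range(3)]
print(steady_state_db(runs))
```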

Figure 7.

Estimated accuracy comparison in terms of excess mean-square error (EMSE) on each node for the dSE-LMS, dLMP, dTLS, dNLMS, and dLCA algorithms.

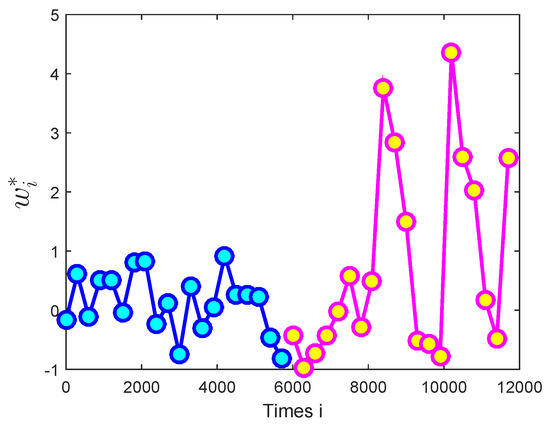

Second, to further simulate non-stationary scenarios in sensor networks, the links were assumed to change at time instant 4000. The unknown parameter vector was time-varying with the link change, as Figure 8 shows.

Figure 8.

The desired time-varying vector with link changing, .

As the simulation results in Figure 9 show, in non-stationary environments over sensor networks with changing links, the diffusion logarithm-correntropy algorithm had a smaller MSD than the other related algorithms, namely the dSE-LMS, dLMP, dTLS, and dNLMS algorithms. This further shows that the proposed dLCA algorithm had better tracking ability in non-stationary environments.

Figure 9.

A comparison of simulated MSD learning curves of the global network for the dSE-LMS, dLMP, dTLS, dNLMS, and dLCA algorithms in non-stationary environments over sensor networks with links changing.

Finally, Figure 10 compares the simulated network MSD curves with the theoretical results given by Equation (41). The theoretical network MSD curves of the proposed algorithm match its simulated MSD curves well.

Figure 10.

Theoretical and simulated MSD curves of the proposed dLCA algorithm under Equation (41).

6. Conclusions

To solve the problem of parameter estimation in non-stationary environments over sensor networks, each node in the sensor network was equipped with the logarithm-correntropy cost function. The proposed algorithm gradually adjusts the cost function during optimization according to the size of the estimation error. We investigated the tracking behavior of the proposed algorithm under non-stationary conditions. Furthermore, simulations were carried out in non-stationary environments in which the parameters were time-varying and the links changed. The simulation experiments verified the analytical results and illustrated that the proposed algorithm outperformed existing algorithms such as dSE-LMS, dLMP, dTLS, and dNLMS.

Author Contributions

Data curation, F.C.; Funding acquisition, S.D.; Project administration, L.H.; Software, L.H.; Supervision, S.D. and L.W.; Writing–original draft, L.H.; Writing–review and editing, F.C. and L.W.

Funding

This work was supported in part by the Doctoral Fund of Southwest University (No. SWU113067), the Fundamental Research Funds for the Central Universities (Grant Nos. XDJK2017B053, XDJK2017D176, XDJK2017D180, XDJK2017D181), Chongqing Research Program of Basic Research and Frontier Technology (No. cstc2017jcyjAX0265) and Program for New Century Excellent Talents in University (Grant No. [2013] 47).

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers for their constructive comments that improved the quality of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Safdarian, A.; Fotuhi-Firuzabad, M.; Lehtonen, M. A distributed algorithm for managing residential demand response in smart grids. IEEE Trans. Ind. Inf. 2014, 10, 2385–2393.

- Talebi, S.P.; Kanna, S.; Mandic, D.P. A Distributed Quaternion Kalman Filter with Applications to Smart Grid and Target Tracking. IEEE Trans. Signal Inf. Process. Netw. 2016, 2, 477–488.

- Harris, N.; Cranny, A.; Rivers, M. Application of distributed wireless chloride sensors to environmental monitoring: Initial results. IEEE Trans. Instrum. Meas. 2016, 4, 736–743.

- Sayed, A.H. Adaptive Filters; John Wiley and Sons: Hoboken, NJ, USA, 2008.

- Tan, T.H.; Gochoo, M.; Chen, Y.F.; Hu, J.J.; Chiang, J.Y.; Chang, C.S.; Hsu, J.C. Ubiquitous emergency medical service system based on wireless biosensors, traffic information, and wireless communication technologies: Development and evaluation. Sensors 2017, 17, 202.

- Jiang, P.; Xu, Y.; Liu, J. A Distributed and Energy-Efficient Algorithm for Event K-Coverage in Underwater Sensor Networks. Sensors 2017, 17, 186.

- Kang, X.; Huang, B.; Qi, G. A Novel Walking Detection and Step Counting Algorithm Using Unconstrained Smartphones. Sensors 2018, 18, 297.

- Lopes, C.G.; Sayed, A.H. Incremental adaptive strategies over distributed networks. IEEE Trans. Signal Process. 2007, 55, 4064–4077.

- Soatti, G.; Nicoli, M.; Savazzi, S. Consensus-Based Algorithms for Distributed Network-State Estimation and Localization. IEEE Trans. Signal Inf. Process. Netw. 2017, 3, 430–444.

- Liu, Y.; Li, C.; Zhang, Z. Diffusion sparse least-mean squares over networks. IEEE Trans. Signal Process. 2012, 60, 4480–4485.

- Abdolee, R.; Vakilian, V. An Iterative Scheme for Computing Combination Weights in Diffusion Wireless Networks. IEEE Wirel. Commun. Lett. 2017, 6, 510–513.

- Chen, J.; Sayed, A.H. Diffusion adaptation strategies for distributed optimization and learning over networks. IEEE Trans. Signal Process. 2012, 60, 4289–4305.

- Chen, F.; Shao, X. Broken-motifs Diffusion LMS Algorithm for Reducing Communication Load. Signal Process. 2017, 133, 213–218.

- Chouvardas, S.; Slavakis, K.; Theodoridis, S. Adaptive robust distributed learning in diffusion sensor networks. IEEE Trans. Signal Process. 2011, 10, 4692–4707.

- Chen, F.; Shi, T.; Duan, S.; Wang, L. Diffusion least logarithmic absolute difference algorithm for distributed estimation. Signal Process. 2018, 142, 423–430.

- Sayed, A.H. Fundamentals of Adaptive Filtering; Wiley: Hoboken, NJ, USA, 2003.

- Sayin, M.O.; Vanli, N.D.; Kozat, S.S. A Novel Family of Adaptive Filtering Algorithms Based on the Logarithmic Cost. IEEE Trans. Signal Process. 2014, 62, 4411–4424.

- Chen, B.; Xing, L.; Liang, J.; Zheng, N.; Principe, J.C. Steady-state mean-square error analysis for adaptive filtering under the maximum correntropy criterion. IEEE Signal Process. Lett. 2014, 21, 880–884.

- Nosrati, H.; Shamsi, M.; Taheri, S.M.; Sedaagh, M.H. Adaptive networks under non-stationary conditions: Formulation, performance analysis, and application. IEEE Trans. Signal Process. 2015, 63, 4300–4314.

- Predd, J.B.; Kulkarni, S.B.; Poor, H.V. Distributed learning in wireless sensor networks. IEEE Signal Process. Mag. 2006, 23, 56–69.

- Bertsekas, D.P.; Tsitsiklis, J.N. Gradient convergence in gradient methods with errors. SIAM J. Optim. 2000, 10, 627–642.

- Arablouei, R.; Dogancay, K. Adaptive Distributed Estimation Based on Recursive Least-Squares and Partial Diffusion. IEEE Trans. Signal Process. 2014, 14, 3510–3522.

- Wen, F. Diffusion least-mean P-power algorithms for distributed estimation in alpha-stable noise environments. Electron. Lett. 2013, 49, 1355–1356.

- Wagner, K.; Doroslovacki, M. Proportionate-type normalized least mean square algorithms with gain allocation motivated by mean-square-error minimization for white input. IEEE Trans. Signal Process. 2011, 59, 2410–2415.

- Jung, S.M.; Seo, J.H.; Park, P. A variable step-size diffusion normalized least-mean-square algorithm with a combination method based on mean-square deviation. Circuits Syst. Signal Process. 2015, 34, 3291–3304.

- Arablouei, R.; Werner, S. Analysis of the gradient-descent total least-squares adaptive filtering algorithm. IEEE Trans. Signal Process. 2014, 62, 1256–1264.

- Ni, J.; Chen, J.; Chen, X. Diffusion sign-error LMS algorithm: Formulation and stochastic behavior analysis. Signal Process. 2016, 128, 142–149.

- Zhao, X.; Tu, S.; Sayed, A.H. Diffusion adaptation over networks under imperfect information exchange and non-stationary data. IEEE Trans. Signal Process. 2012, 60, 3460–3475.

- Abdolee, R.; Vakilian, V.; Champagne, B. Tracking Performance and Optimal Step-Sizes of Diffusion LMS Algorithms in Nonstationary Signal Environment. IEEE Trans. Control Netw. Syst. 2016, 5, 67–78.

- Cattivelli, F.S.; Sayed, A.H. Diffusion LMS Strategies for Distributed Estimation. IEEE Trans. Signal Process. 2010, 58, 1035–1048.

- Lopes, C.G.; Sayed, A.H. Diffusion least-mean squares over adaptive networks: Formulation and performance analysis. IEEE Trans. Signal Process. 2008, 56, 3122–3136.

- Chen, B.; Xing, L.; Zhao, H.; Zheng, N.; Principe, J.C. Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 2016, 64, 3376–3387.

- Chen, B.; Xing, L.; Xu, B.; Zhao, H.; Principe, J.C. Insights into the Robustness of Minimum Error Entropy Estimation. IEEE Trans. Neural Netw. Learn. Syst. 2016, 3, 1–7.

- Rahman, M.D.; Yu, K.B. Total least squares approach for frequency estimation using linear prediction. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 1440–1454.

- Markovsky, I.; Van Huffel, S. Overview of total least-squares methods. Signal Process. 2007, 87, 2283–2302.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).