1. Introduction

Dey [1] defined context knowledge in a broad sense as “any information that can be used to characterize the situation of an entity”. For intelligent service robots, however, the concrete context knowledge of the robot’s domain needs to be redefined. Turner [2] divided context knowledge for intelligent agents into three categories, on the basis of which Bloisi [3] redefined robot-oriented context knowledge as environmental knowledge, task-related knowledge, and self-knowledge. Environmental knowledge includes physical-space information about the environment outside the robot, such as the locations of people and objects and an environmental map. Task-related knowledge includes the tasks that robots can perform and the constraints of these tasks. Self-knowledge includes the internal conditions of robots, such as joint angles and battery level. According to Bloisi’s definition, the context knowledge of robots includes not only static knowledge, such as common sense, but also dynamic knowledge, which is highly time-dependent because it changes continuously in real time. This context knowledge is indispensable for generating robot task plans (task planning) [4,5], for human–robot or multi-robot collaboration [6,7], and for context-knowledge-providing services [8,9]. Therefore, the performance and application scope of a service robot depend on the diversity and complexity of the context knowledge it can recognize and understand.

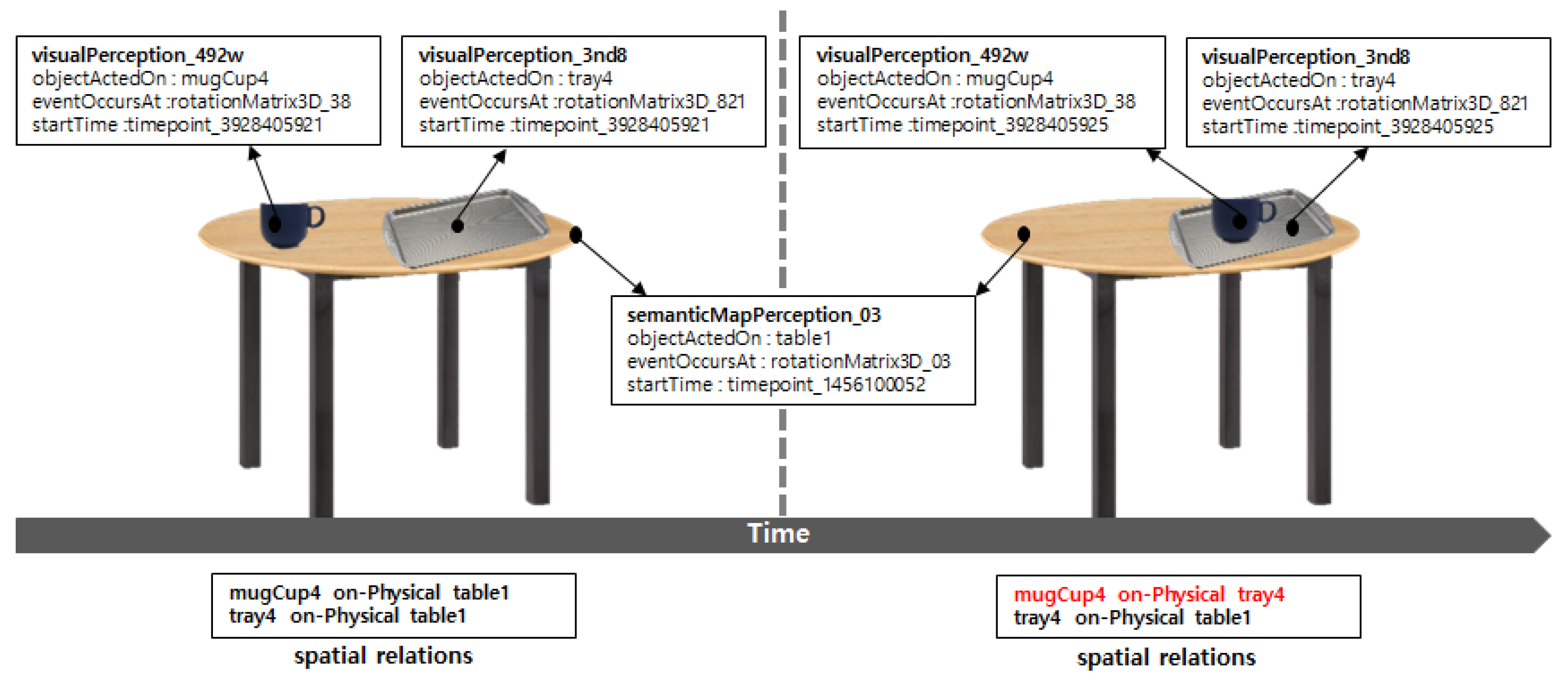

Among the many types of context knowledge, this study focused on knowledge related to the environment: in particular, the location of individual objects and the three-dimensional (3D) spatial relations among objects in a home environment. Locational information (the pose of each object) is sub-symbolic knowledge obtained from sensors such as RGB-depth (RGB-D) cameras, whereas 3D spatial relations are abstracted symbolic knowledge that must be derived from this locational information. Three-dimensional spatial relations are general knowledge required in most robot domains and are essential prior knowledge for deriving more complex context knowledge that is harder to determine, for example, the intentions of external agents [10,11]. Because 3D spatial relations change continuously over time, independently of the robot, they must be tracked in real time, and past context knowledge must be stored so that it can be retrieved later.
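To make the step from sub-symbolic poses to a symbolic relation concrete, the following minimal sketch decides an "on" relation from axis-aligned 3D bounding boxes. The `Box3D` representation, the 2 cm contact tolerance, and the rule itself are illustrative assumptions, not the rule used in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    """Axis-aligned bounding box in metres (hypothetical representation)."""
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float


def overlaps_1d(a_min: float, a_max: float, b_min: float, b_max: float) -> bool:
    """True if the closed intervals [a_min, a_max] and [b_min, b_max] intersect."""
    return a_min <= b_max and b_min <= a_max


def is_on(a: Box3D, b: Box3D, tolerance: float = 0.02) -> bool:
    """Illustrative rule: A is on B if A's bottom face rests on B's top face
    (within `tolerance`) and their footprints overlap in the x-y plane."""
    touching = abs(a.min_z - b.max_z) <= tolerance
    footprint = overlaps_1d(a.min_x, a.max_x, b.min_x, b.max_x) and overlaps_1d(
        a.min_y, a.max_y, b.min_y, b.max_y
    )
    return touching and footprint


red_mug = Box3D(0.40, 0.40, 0.75, 0.48, 0.48, 0.85)
table = Box3D(0.0, 0.0, 0.0, 1.2, 0.8, 0.75)
print(is_on(red_mug, table))  # True
print(is_on(table, red_mug))  # False
```

A real system would also account for sensor noise and object orientation; the point here is only that the symbolic fact is computed from, not stored alongside, the perceived geometry.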

Two examples illustrate how 3D spatial relations are applied. First, for a robot to generate a task plan, the preconditions of each task must be satisfied. For example, to generate a plan for delivering a drink to a person, the following preconditions must hold: a cup is filled with the drink, and the robot is holding this cup. Second, a context-knowledge-providing service must provide the various context knowledge that users request in an indoor environment, such as “Is there orange juice in the refrigerator now?” and “Where is the tumbler, which was on the table yesterday, now?” These examples show that, in terms of service, the context knowledge ultimately required by a robot is abstracted symbolic knowledge.

A service robot must be able to retrieve context knowledge stored in its working memory at any time and to infer abstracted context knowledge when necessary. Retrieving context knowledge requires a context query language and a processing method for accessing and manipulating the knowledge in the robot’s working memory. The important requirements of a context query language are discussed in [12,13]. Context knowledge is typically closely tied to physical spatial relations and is highly time-dependent because it changes frequently. Therefore, the context query language must be expressive enough to query context knowledge over various periods. Furthermore, because the main purpose of a context query is to retrieve and infer abstracted symbolic knowledge, the grammar of the query language must allow highly concise and intuitive queries.

Because the grammatical structure of a query language depends heavily on the knowledge representation model used to store context knowledge, the representation model must be defined first. Knowledge-based agents such as service robots generally represent knowledge with description logic-based ontologies [14,15,16,17]. Every piece of knowledge in an ontology is represented as a statement composed of a subject, a predicate, and an object, so the query language and processing method for retrieving context knowledge depend on this triple format. However, because a triple expresses only a single fact, it is difficult to represent when that fact occurred and whether it is still valid. For example, the triple “<red_mug> <on> <table>” cannot express that this fact was valid yesterday but no longer holds today because the red mug has been moved to the shelf.
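The red-mug example can be made concrete by attaching a valid-time interval to each triple. The sketch below is a minimal illustration, assuming half-open intervals [start, end) and `end=None` for a fact that is still valid; the class and method names are hypothetical and do not describe the representation used in this study.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str


@dataclass(frozen=True)
class TemporalTriple:
    triple: Triple
    start: datetime
    end: Optional[datetime] = None  # None: the fact is still valid

    def valid_at(self, t: datetime) -> bool:
        """Half-open validity check: start <= t < end."""
        return self.start <= t and (self.end is None or t < self.end)


mug_on_table = TemporalTriple(
    Triple("red_mug", "on", "table"),
    start=datetime(2018, 7, 6, 9, 0),
    end=datetime(2018, 7, 7, 8, 0),  # the mug was moved to the shelf
)
mug_on_shelf = TemporalTriple(
    Triple("red_mug", "on", "shelf"), start=datetime(2018, 7, 7, 8, 0)
)
facts = [mug_on_table, mug_on_shelf]

yesterday = datetime(2018, 7, 6, 12, 0)
today = datetime(2018, 7, 7, 12, 0)
print([f.triple.obj for f in facts if f.valid_at(yesterday)])  # ['table']
print([f.triple.obj for f in facts if f.valid_at(today)])      # ['shelf']
```

The plain `Triple` alone cannot distinguish the two situations; only the added interval lets a query ask about yesterday versus today.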

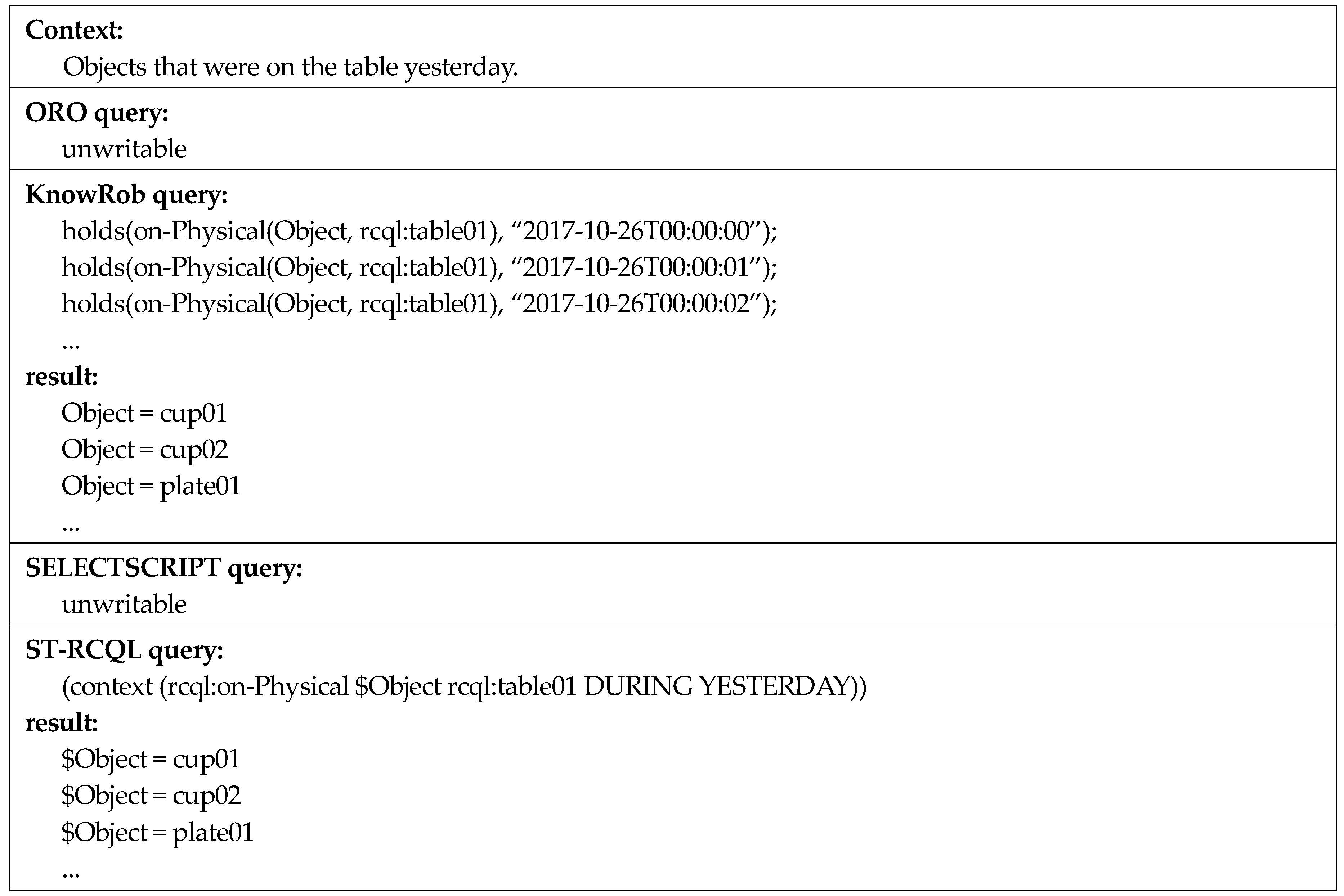

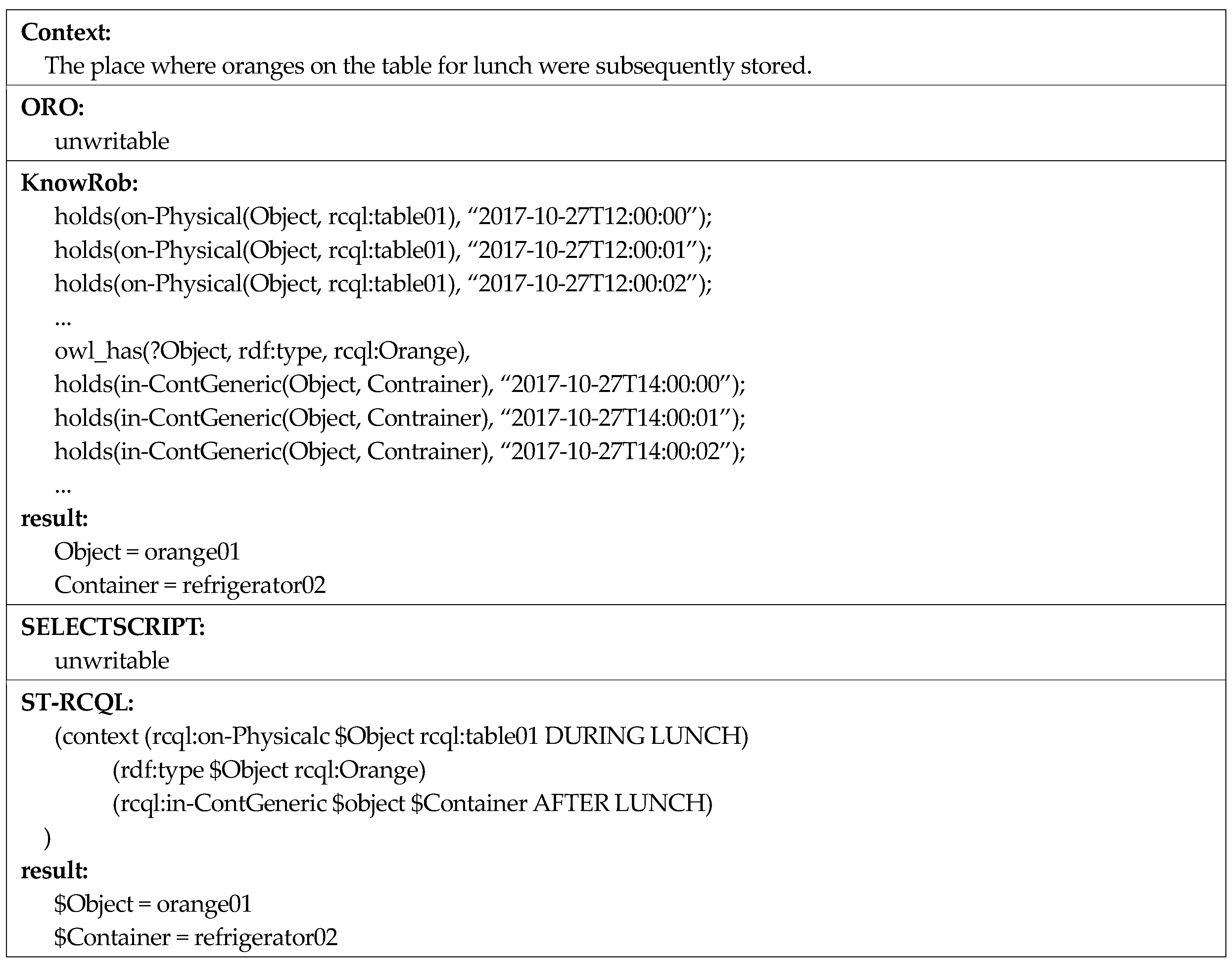

Existing work on robot context queries includes OpenRobots Ontology (ORO) [16], KnowRob [17], and SELECTSCRIPT [18]. ORO provides a knowledge-query API (application programming interface) based on SPARQL [19], the semantic Web query language for retrieving and manipulating data stored in RDF (Resource Description Framework); ORO can thus retrieve and manipulate knowledge written in RDF. However, ORO has no specific knowledge representation method for specifying the valid time of knowledge and does not consistently maintain past context knowledge, so only the current context knowledge can be queried. KnowRob provides Prolog query predicates based on first-order logic, built on the semantic Web library of SWI-Prolog (a free implementation of the Prolog programming language) [20]. KnowRob’s query predicates can express the valid times of perception information obtained from sensors and can query and infer context knowledge over various periods. However, KnowRob does not support time operators or time constants for querying various periods, so queries become complex and inefficient. SELECTSCRIPT is a declarative, script-format query language inspired by SQL (Structured Query Language). It provides embedded unary and binary operators for querying context knowledge. However, like ORO, SELECTSCRIPT has no specific knowledge representation method for specifying the valid time of knowledge and does not consistently store past context knowledge, so it too can query only the current context knowledge. Moreover, none of these three works considers efficient query-processing methods such as spatio-temporal indexing.

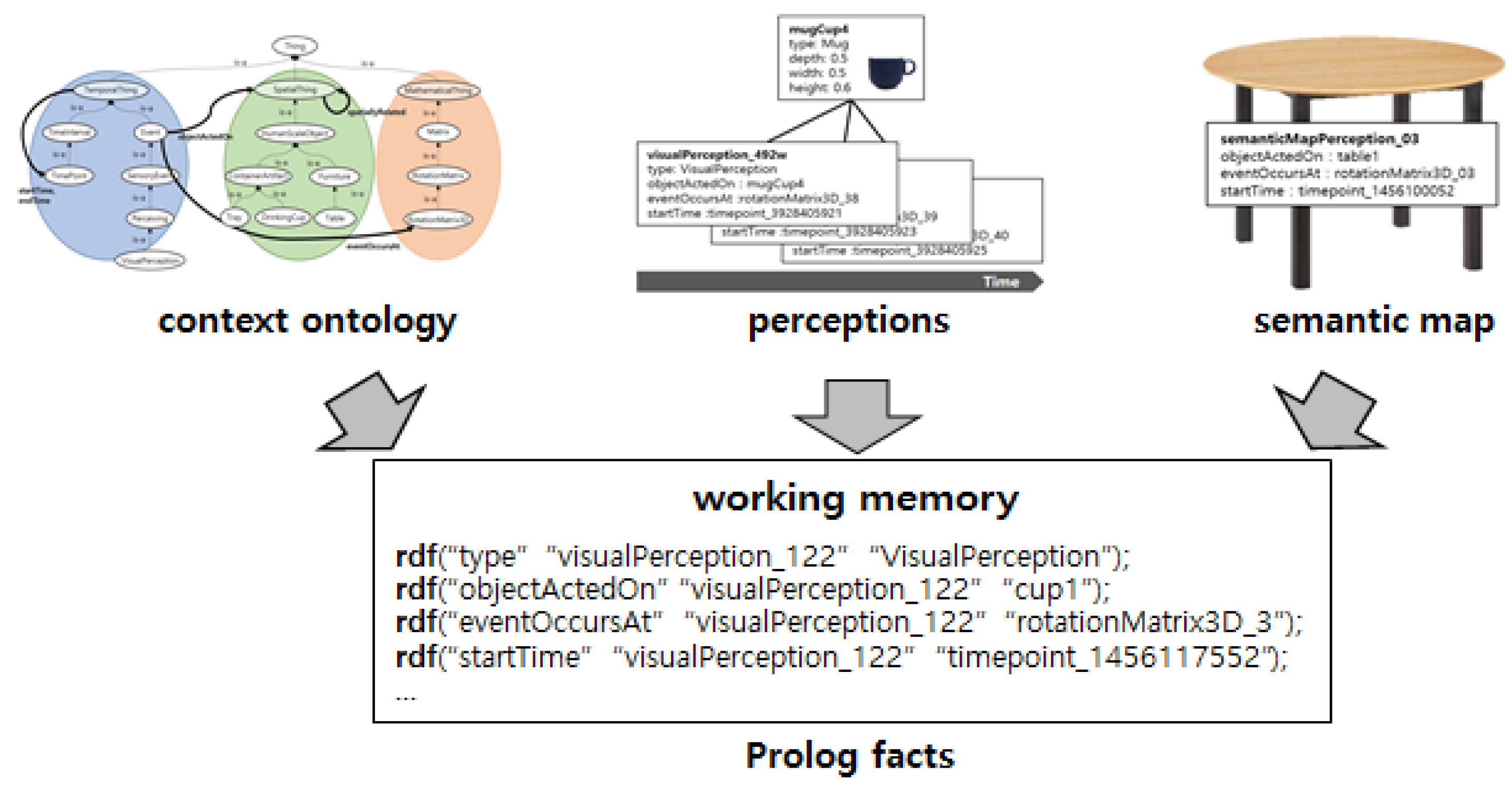

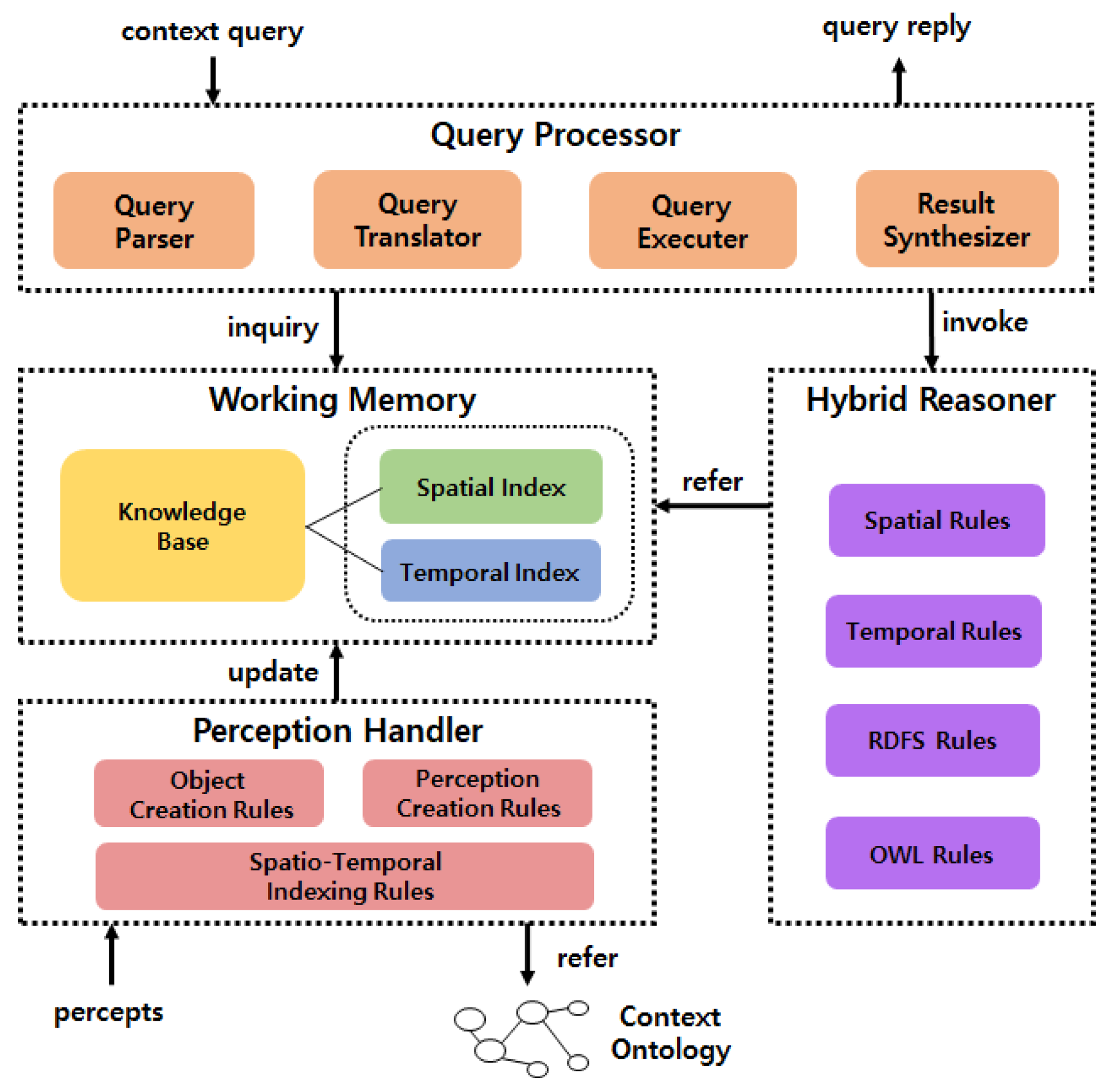

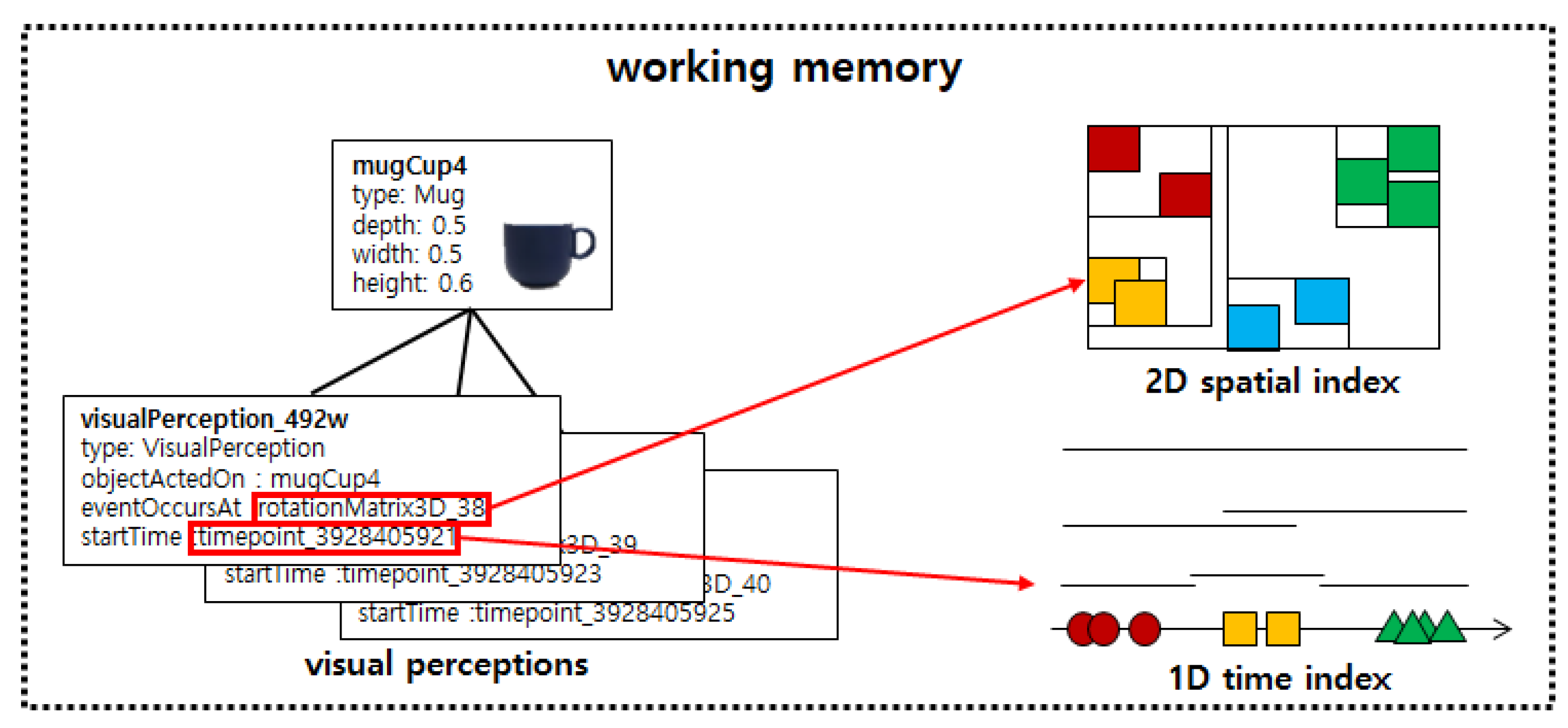

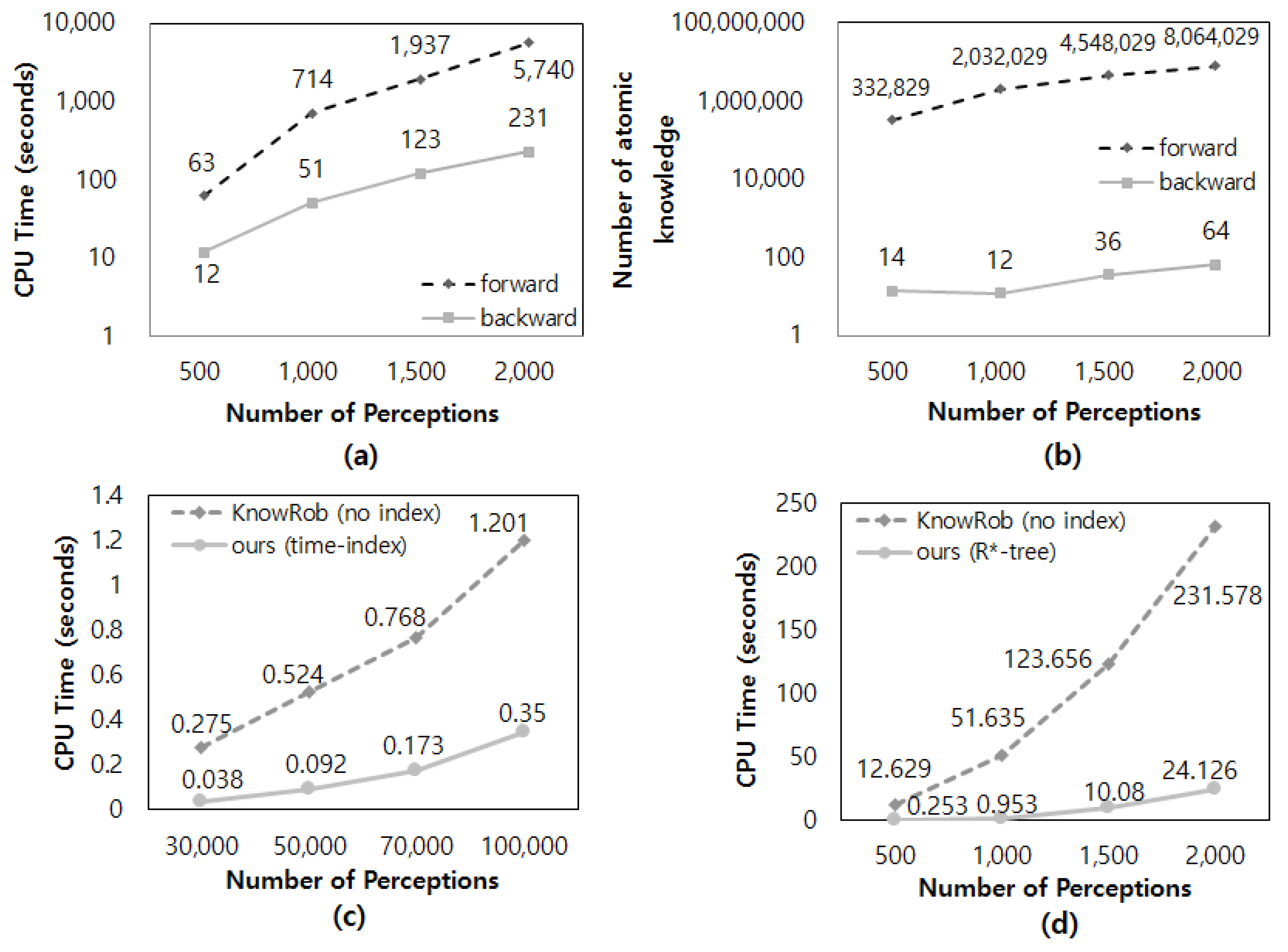

Against this backdrop, this study proposes the spatio-temporal robotic context query language (ST-RCQL), which allows time-dependent context knowledge of service robots to be queried, and the spatio-temporal robotic context query-processing system (ST-RCQP), which processes these queries in real time. ST-RCQL assumes that the 3D spatial relations among objects are derived from the individual spatio-temporal perceptions of indoor objects obtained from RGB-D cameras. Although many spatial query systems adopt this assumption, it has the disadvantage that very complex queries must be written to obtain symbolic knowledge [21,22]. Because service robots mostly require abstract symbolic knowledge, the query grammar in this study was designed to allow very concise and intuitive queries instead of complex ones. For actual query processing, query-translation rules were designed to automatically translate these concise queries into the complex queries used for internal processing. Furthermore, the query grammar provides time operators based on Allen’s interval algebra, enabling queries about context knowledge over various periods. From the perspective of the query-processing system, query processing was accelerated with a spatio-temporal index to meet the real-time requirements of service robots. In addition, it is more appropriate for robots to obtain the specific context knowledge they need at a given time than to infer and accumulate all context knowledge explicitly at all times. Therefore, we adopted a query-processing method based on backward chaining [23]. To verify the suitability of ST-RCQL as a robot context query language and the efficiency of ST-RCQP, a query-processing system was implemented in SWI-Prolog and Java, and the results of qualitative and quantitative experiments with this system are presented in the following sections.
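As an illustration of why an index helps, here is a minimal sketch of the temporal half of such an index: per-object timestamps kept sorted so that binary search finds the most recent pose perceived at or before a query time. The `PerceptionIndex` class and the position-only `Pose` tuple are hypothetical; this is not the spatio-temporal index of ST-RCQP, whose structure is not described here.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Pose = Tuple[float, float, float]  # hypothetical: position only, no orientation


class PerceptionIndex:
    """Per-object time index over perceptions (illustrative only)."""

    def __init__(self) -> None:
        self._times: Dict[str, List[float]] = defaultdict(list)
        self._poses: Dict[str, List[Pose]] = defaultdict(list)

    def add(self, obj: str, t: float, pose: Pose) -> None:
        """Insert a perception, keeping each object's timestamps sorted."""
        i = bisect.bisect_right(self._times[obj], t)
        self._times[obj].insert(i, t)
        self._poses[obj].insert(i, pose)

    def pose_at(self, obj: str, t: float) -> Optional[Pose]:
        """Most recent pose perceived at or before time t (binary search)."""
        times = self._times.get(obj, [])
        i = bisect.bisect_right(times, t)
        return self._poses[obj][i - 1] if i > 0 else None


index = PerceptionIndex()
index.add("milk01", 100.0, (0.5, 0.2, 0.8))
index.add("milk01", 200.0, (1.5, 0.9, 0.4))
print(index.pose_at("milk01", 150.0))  # (0.5, 0.2, 0.8)
print(index.pose_at("milk01", 50.0))   # None
```

Lookups cost O(log n) per object instead of a scan over all perceptions; a full spatio-temporal index would additionally partition space.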

2. Related Works

2.1. Representation and Storage of Context Knowledge

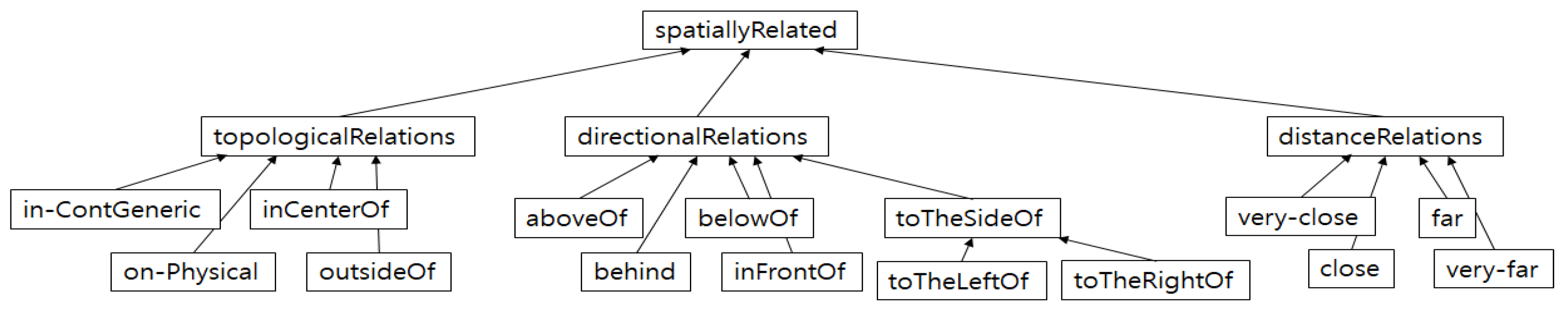

OpenCyc [14] provides an upper ontology, agreed on by the semantic Web community, based on OWL-DL (the description logic-based sublanguage of OWL, the Web Ontology Language). To express knowledge about the specific domain of a robot, ORO [16] and KnowRob [17] extend the OpenCyc upper ontology in a robot-oriented manner. ORO extends it to represent the specific objects and actions that appear in scenarios such as packing and cleaning a table. The context knowledge stored by ORO comprises spatial relations, including topological, directional, and distance relations, as well as abstracted symbolic knowledge such as the visibility of agents and the reachability of objects. Context knowledge is stored in working memory using the OpenJena triple store. The perception information collected from sensors is used to infer context knowledge but is not itself stored in working memory. For efficient management of working memory, ORO stores short-term, episodic, and long-term knowledge separately. Short-term and episodic knowledge are deleted after 10 s and 5 min, respectively, whereas long-term knowledge is never deleted. Examples of short-term, episodic, and long-term knowledge are spatial relations, actions, and the TBox (the ontology schema), respectively.
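The retention policy can be pictured with a small model in which every stored triple carries a memory profile. This sketch assumes the 10 s and 5 min figures are per-fact lifespans and that expiry is triggered explicitly; `WorkingMemory` and the profile names are hypothetical, and ORO itself relies on its triple store rather than on code like this.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Triple = Tuple[str, str, str]

# Assumed lifespans in seconds for each memory profile.
LIFESPAN = {"short_term": 10.0, "episodic": 300.0, "long_term": math.inf}


@dataclass
class WorkingMemory:
    facts: List[Tuple[Triple, str, float]] = field(default_factory=list)

    def add(self, triple: Triple, profile: str, now: float) -> None:
        """Store a triple together with its memory profile and insertion time."""
        self.facts.append((triple, profile, now))

    def expire(self, now: float) -> None:
        """Drop every fact that has outlived the lifespan of its profile."""
        self.facts = [
            (t, p, added) for (t, p, added) in self.facts if now - added < LIFESPAN[p]
        ]

    def triples(self) -> List[Triple]:
        return [t for (t, _, _) in self.facts]


wm = WorkingMemory()
wm.add(("cup", "on", "table"), "short_term", now=0.0)
wm.add(("robot", "performed", "pick_up"), "episodic", now=0.0)
wm.add(("Cup", "subClassOf", "Container"), "long_term", now=0.0)
wm.expire(now=60.0)
print(wm.triples())
# [('robot', 'performed', 'pick_up'), ('Cup', 'subClassOf', 'Container')]
```

Under this policy a spatial relation older than 10 s is gone, which is exactly why past spatial relations cannot be queried later.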

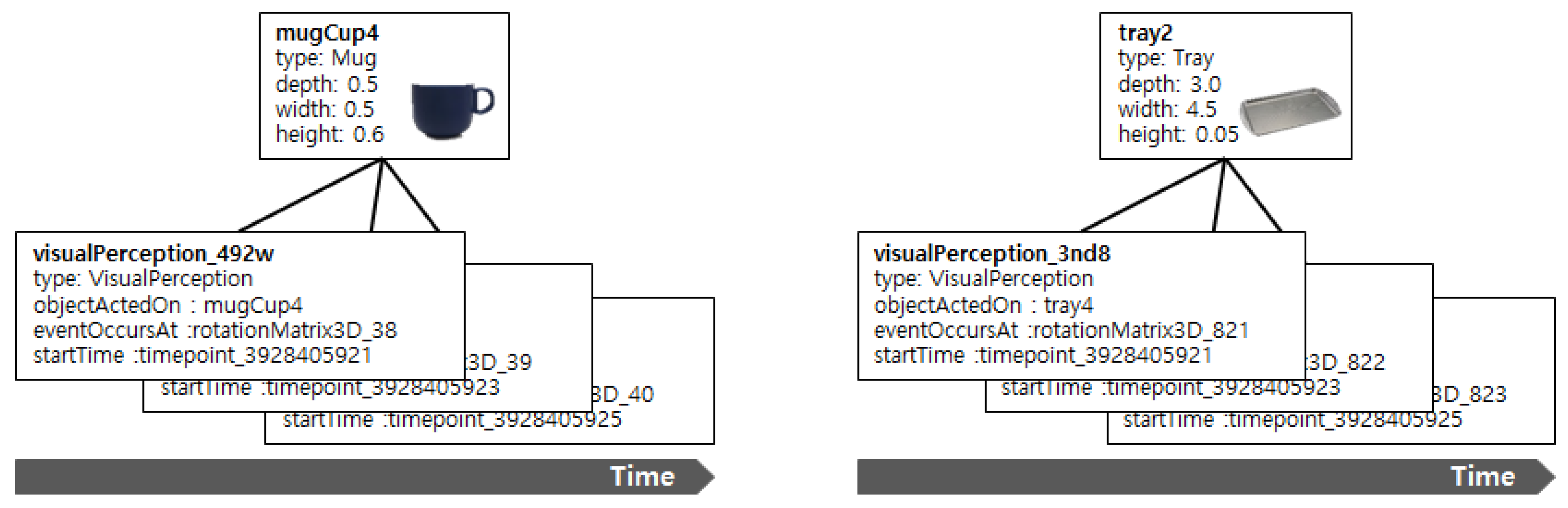

KnowRob extends the OpenCyc upper ontology for the specific objects, tasks, actions, and perception information observed in household scenarios, such as making pancakes in the kitchen. The context knowledge stored by KnowRob is mostly perception information, such as the poses and bounding boxes of objects obtained from sensors; it does not store abstracted symbolic knowledge such as spatial relations. Context knowledge is stored in working memory using the rdf_db triple store included in the semantic Web library of SWI-Prolog.

Ontology-based Unified Robot Knowledge (OUR-K) [15] is another context knowledge representation model. OUR-K categorizes knowledge into context, space, object, action, and feature classes, each of which has three layers. The bottom layer of the context class represents spatial context, that is, the spatial relations between objects. In the middle layer, spatial context is combined with temporal context to yield more abstracted contexts.

2.2. Context Query Language

ORO provides an API for context queries whose main function is find. Because a SPARQL engine runs behind the find function, context knowledge can be retrieved with triple patterns, inheriting the full expressive power of SPARQL. However, ORO cannot query past context knowledge because it stores and maintains only the most recent spatial relations.

For context queries, KnowRob extends the semantic Web library of SWI-Prolog with Prolog predicates for constructing queries that include the valid time of knowledge. The main predicate for knowledge retrieval is holds, which can retrieve context knowledge valid at a specific time in the present or past because both the triple pattern of the context predicate and its valid time can be passed as arguments. However, the valid time in holds can be given only as a time point, and at is the only supported time operator, which results in very complex queries.

Another query language is SELECTSCRIPT [18], which constructs queries over continuously updated XML (eXtensible Markup Language)-based simulation files. In particular, SELECTSCRIPT supports embedded binary operators for querying spatial relations in the WHERE clause and can return query results as Prolog predicates. However, SELECTSCRIPT cannot query past context knowledge because the simulation file always holds only the latest information.

2.3. Context Reasoning

ORO infers abstracted context knowledge with the spatial reasoning and knowledge (SPARK) module, a geometric reasoning module. Whenever new perception information arrives from sensors, such as 2D fiducial marker tracking and human skeleton tracking, SPARK infers the spatial relations among objects, robots, and the user, as well as visibility and reachability. SPARK uses forward reasoning: it infers context knowledge, delivers the result to the ontology module of ORO, and stores it in working memory. When context knowledge is stored in working memory, consistency checks are performed with the Pellet reasoner.

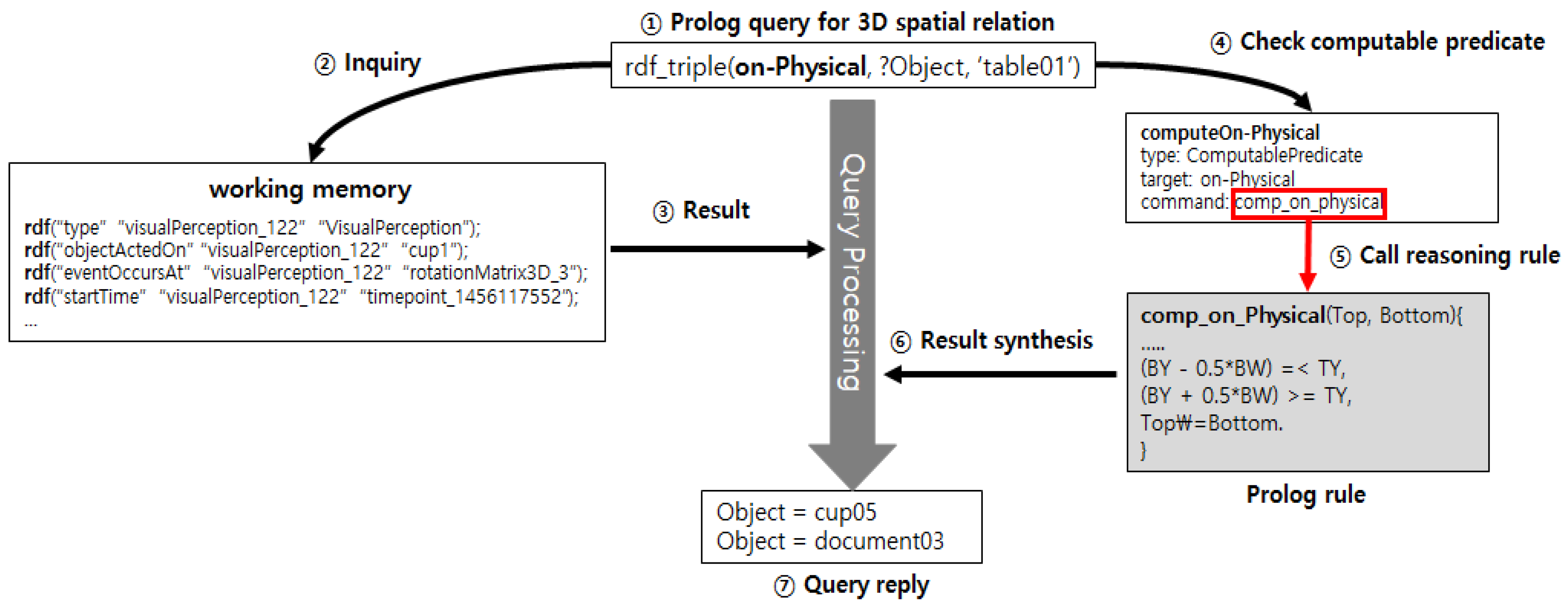

KnowRob infers abstracted context knowledge with a spatio-temporal reasoning module developed in SWI-Prolog. This module infers spatial relations between objects from the bounding boxes and centers of objects obtained from sensors. Unlike ORO’s forward reasoning, KnowRob uses backward reasoning: it infers context knowledge with computable predicates only when a query requests it.
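The contrast between the two strategies can be sketched in a few lines: a forward reasoner materializes every relation whenever perception changes, whereas a backward reasoner evaluates only the relation a query asks about. The one-dimensional `left_of` rule and the object positions are hypothetical stand-ins, not SPARK or KnowRob code.

```python
from itertools import permutations
from typing import Dict, Set, Tuple

positions: Dict[str, float] = {"cup": 0.2, "plate": 0.5, "bottle": 0.9}


def left_of(a: str, b: str) -> bool:
    """Hypothetical geometric rule over 1D x-coordinates."""
    return positions[a] < positions[b]


def forward_update() -> Set[Tuple[str, str]]:
    """Forward reasoning: materialize all relations after each perception update."""
    return {(a, b) for a, b in permutations(positions, 2) if left_of(a, b)}


def backward_query(a: str, b: str) -> bool:
    """Backward reasoning: compute only the relation the query asks about."""
    return left_of(a, b)


stored = forward_update()  # 3 objects -> 6 ordered pairs evaluated, 3 stored
print(("cup", "bottle") in stored)      # True
print(backward_query("bottle", "cup"))  # False
```

With n objects, the forward approach evaluates n(n-1) pairs per update for every relation type, which is the cost that query-driven (backward) evaluation avoids.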

Another spatial reasoner is QSRlib (a library for computing qualitative spatial relations and calculi) [24], a software library implemented in Python that can be embedded in various intelligent systems. From video data obtained from RGB-D cameras, QSRlib provides geometric reasoning for distance relations, such as qualitative distance calculus [25]; directional relations, such as cardinal direction (CD) [26] and ternary point configuration calculus [27]; and topological relations, such as rectangle algebra [28] and region connection calculus [29]. It also provides geometric reasoning about object movements, such as qualitative trajectory calculus [30].
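As an example of what a qualitative distance relation means in practice, the sketch below maps the Euclidean distance between two points onto a small set of symbols. The labels and thresholds are hypothetical, and this is not QSRlib’s API; it only illustrates the kind of abstraction such calculi provide.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]

# Hypothetical thresholds in metres, sorted ascending; beyond the last is "far".
THRESHOLDS: List[Tuple[str, float]] = [("touch", 0.05), ("near", 0.5), ("medium", 2.0)]


def qualitative_distance(p: Point, q: Point) -> str:
    """Map the Euclidean distance between two points to a qualitative symbol."""
    d = math.dist(p, q)
    for label, limit in THRESHOLDS:
        if d <= limit:
            return label
    return "far"


origin = (0.0, 0.0, 0.0)
print(qualitative_distance(origin, (0.3, 0.0, 0.0)))  # near
print(qualitative_distance(origin, (3.0, 0.0, 0.0)))  # far
```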

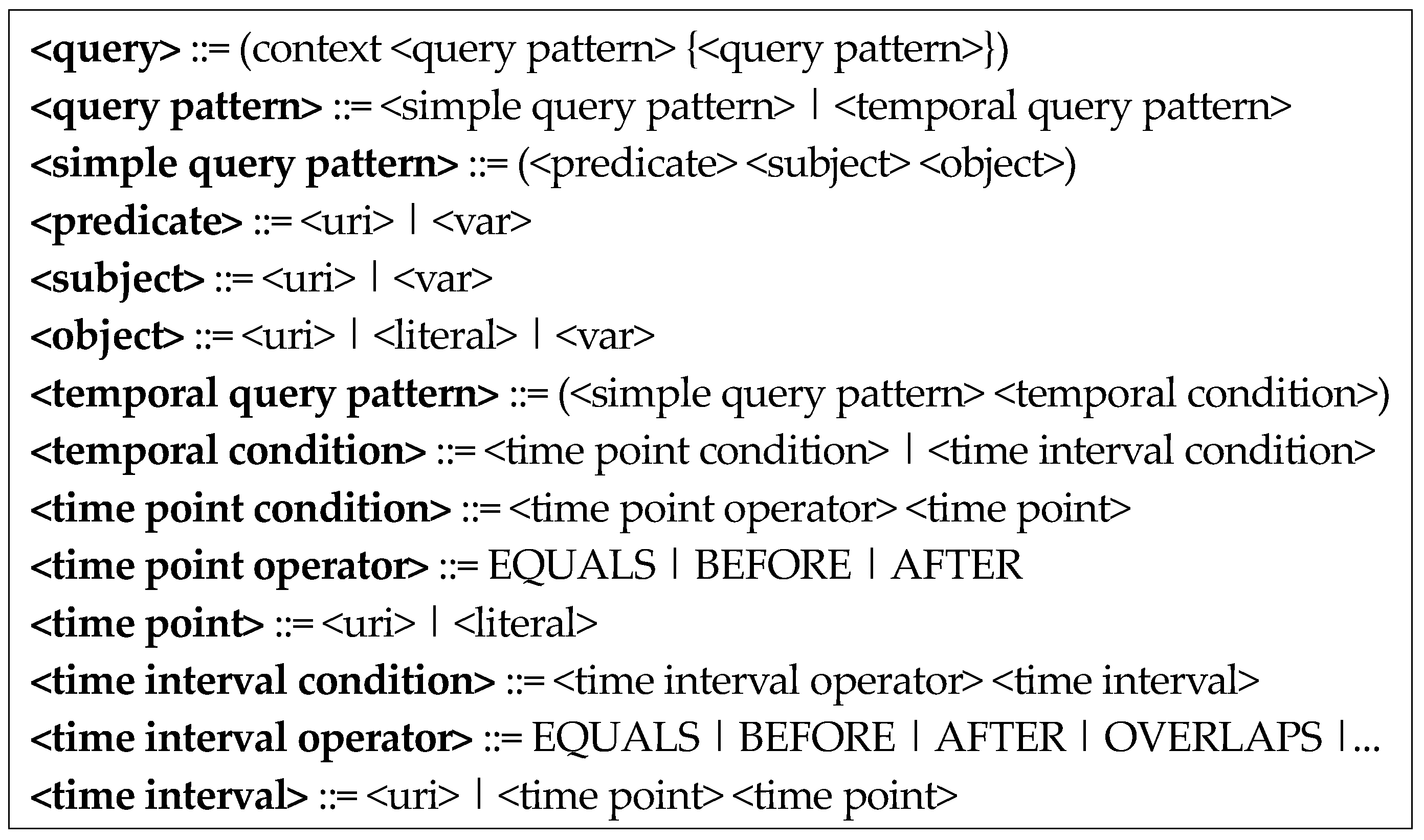

4. Design of Robot Context Query Language

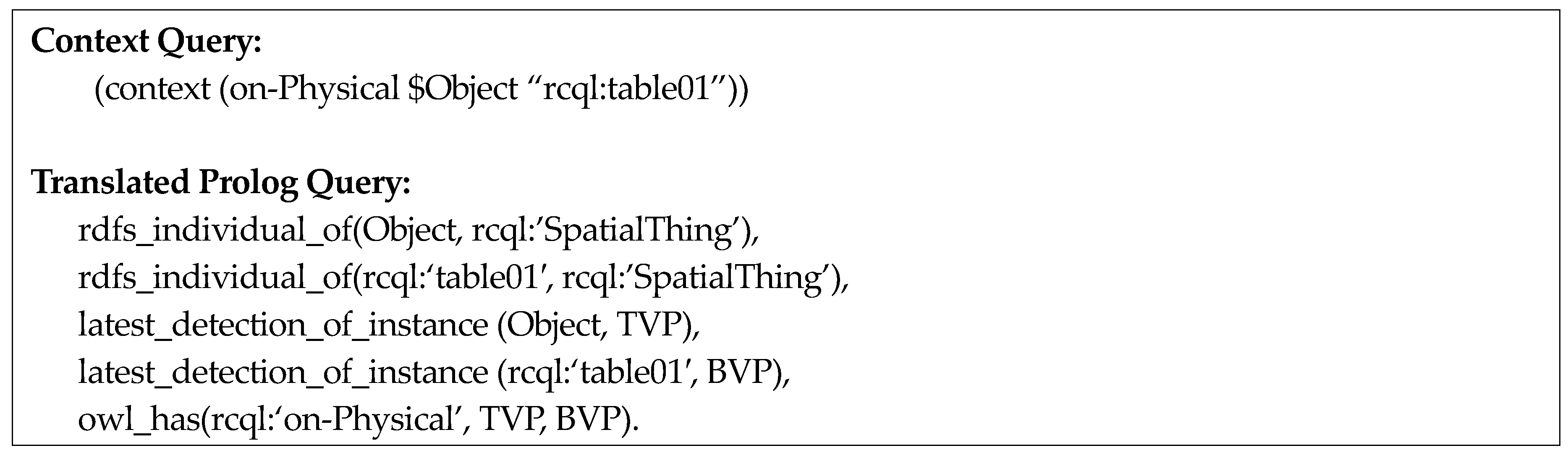

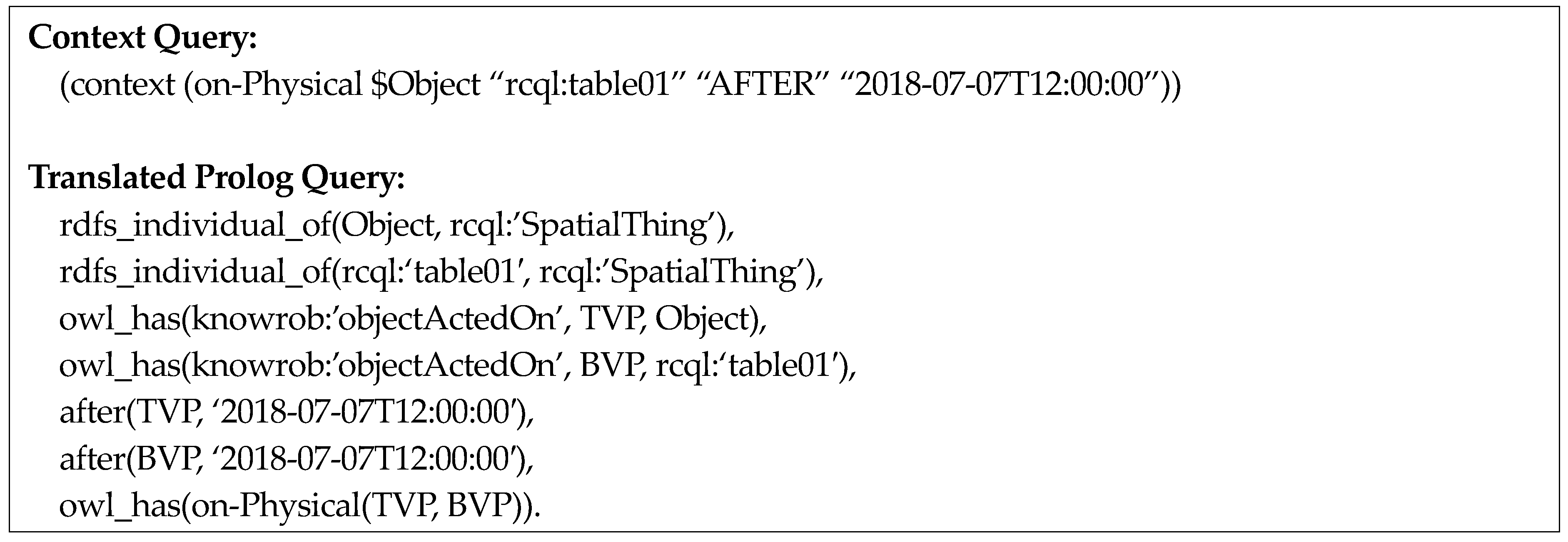

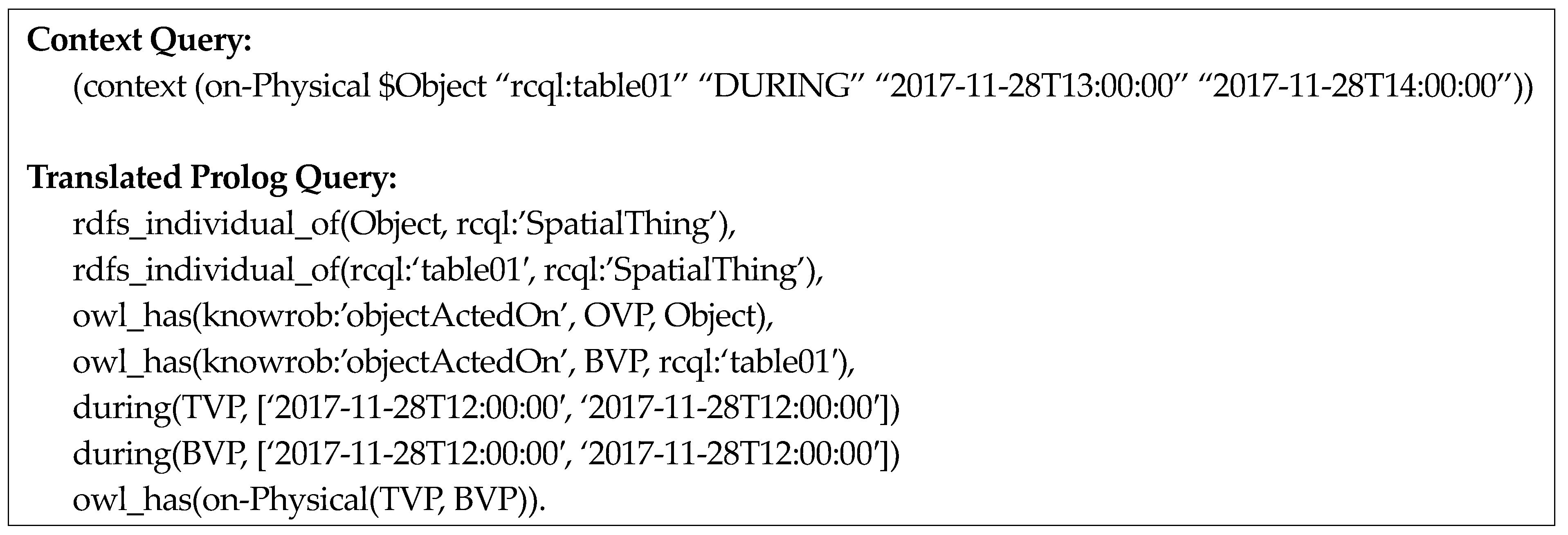

Because 3D spatial relations change continuously over time, a time-dependent context query language is required. Furthermore, because the context knowledge that robots mainly require in terms of service is abstracted symbolic knowledge, such as 3D spatial relations, rather than low-level values, such as object poses, context queries must be very concise and intuitive to write. To satisfy these requirements, we propose the grammatical structure of the context query language shown in Figure 7, written in extended Backus–Naur form (EBNF).

This grammatical structure is interpreted as follows. A query is a repetition of a query pattern, which is either a simple or temporal query pattern. A simple query pattern is a triple format consisting of a predicate, a subject, and an object. The predicate and subject are a URI (uniform resource identifier) or variable, and the object is a URI, literal, or variable. The temporal query pattern is composed of a simple query pattern and a temporal condition, which is composed of a time-point condition and a time-interval condition. The time point condition is composed of a time-point operator and a time point. The time-point operators include EQUALS, BEFORE, and AFTER, while the time point is a URI or literal. The time-interval condition is composed of a time-interval operator and a time interval. Thirteen time-interval operators are present and are based on Allen’s theory; these include EQUALS, BEFORE, AFTER, and OVERLAPS. The time interval is a URI or consists of two time points. The grammatical structure of the context query language in

Figure 7 can be used to write context queries, as shown in

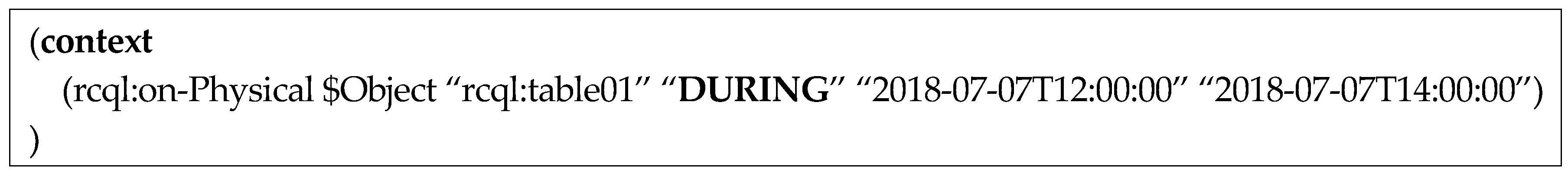

Figure 8,

Figure 9 and

Figure 10.
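The thirteen time-interval operators correspond to Allen’s thirteen mutually exclusive relations between two intervals. The following sketch classifies a pair of intervals, assuming closed numeric intervals with start < end. EQUALS, BEFORE, AFTER, OVERLAPS, and DURING appear in the text; the remaining operator names (MEETS, MET_BY, STARTS, and so on) are assumed spellings, not necessarily ST-RCQL’s keywords.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (start, end) with start < end


def allen_relation(x: Interval, y: Interval) -> str:
    """Return the Allen interval relation that holds between x and y."""
    (xs, xe), (ys, ye) = x, y
    if xe < ys:
        return "BEFORE"
    if ye < xs:
        return "AFTER"
    if xe == ys:
        return "MEETS"
    if ye == xs:
        return "MET_BY"
    if xs == ys and xe == ye:
        return "EQUALS"
    if xs == ys:
        return "STARTS" if xe < ye else "STARTED_BY"
    if xe == ye:
        return "FINISHES" if xs > ys else "FINISHED_BY"
    if ys < xs and xe < ye:
        return "DURING"
    if xs < ys and ye < xe:
        return "CONTAINS"
    # Remaining cases: the intervals partially overlap.
    return "OVERLAPS" if xs < ys else "OVERLAPPED_BY"


print(allen_relation((12.5, 13.0), (12.0, 14.0)))  # DURING
print(allen_relation((10.0, 11.0), (12.0, 14.0)))  # BEFORE
```

Under strict Allen semantics, a condition such as DURING between 12:00 and 14:00 would accept a fact whose validity interval satisfies `allen_relation(fact_interval, (12.0, 14.0)) == "DURING"`; whether ST-RCQL applies exactly this reading is an assumption here.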

Figure 8 shows a context query using a time-point operator; it represents the query “What is the object on the table at 12:00?” In this example, the first three elements after the header context are a predicate (rcql:on-Physical), a subject ($Object), and an object (rcql:table01), respectively. Here, the subject is a variable. The fourth element is the time-point operator EQUAL, and the fifth and last element is the time literal 2018-07-07T12:00:00, which is the operand of the time-point operator.
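One way to read the Figure 8 query is as a filter over time-stamped facts that binds $Object. The sketch below assumes facts are stored with half-open validity intervals and gives the three time-point operators plausible but assumed semantics (EQUAL/EQUALS: the fact holds at t; BEFORE: it stopped holding at or before t; AFTER: it started after t). The `Fact` type, `objects_on` helper, and the object URIs rcql:cup01 and rcql:milk01 are hypothetical.

```python
from datetime import datetime
from typing import List, NamedTuple, Optional


class Fact(NamedTuple):
    predicate: str
    subject: str
    obj: str
    start: datetime
    end: Optional[datetime]  # None: the fact is still valid


def point_condition(fact: Fact, op: str, t: datetime) -> bool:
    """Assumed semantics of the time-point operators over a half-open [start, end)."""
    if op in ("EQUAL", "EQUALS"):  # the fact holds at t
        return fact.start <= t and (fact.end is None or t < fact.end)
    if op == "BEFORE":  # the fact stopped holding at or before t
        return fact.end is not None and fact.end <= t
    if op == "AFTER":  # the fact started holding after t
        return fact.start > t
    raise ValueError(f"unknown time-point operator: {op}")


def objects_on(facts: List[Fact], furniture: str, op: str, t: datetime) -> List[str]:
    """Bindings of $Object for the pattern (rcql:on-Physical, $Object, furniture)."""
    return [
        f.subject
        for f in facts
        if f.predicate == "rcql:on-Physical"
        and f.obj == furniture
        and point_condition(f, op, t)
    ]


facts = [
    Fact("rcql:on-Physical", "rcql:cup01", "rcql:table01",
         datetime(2018, 7, 7, 11, 0), datetime(2018, 7, 7, 13, 0)),
    Fact("rcql:on-Physical", "rcql:milk01", "rcql:table01",
         datetime(2018, 7, 7, 12, 30), None),
]
noon = datetime.fromisoformat("2018-07-07T12:00:00")
print(objects_on(facts, "rcql:table01", "EQUAL", noon))  # ['rcql:cup01']
print(objects_on(facts, "rcql:table01", "AFTER", noon))  # ['rcql:milk01']
```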

Figure 9 illustrates a context query using a time-interval operator.

Figure 9 represents the query, “What is the object that was on the table between 12:00 and 14:00?” The fourth element in this query is the time-interval operator DURING and the fifth and sixth elements represent the start time (

2018-07-07T12:00:00) and end time (

2018-07-07T14:00:00), respectively, for the operand of the time-interval operator. Finally,

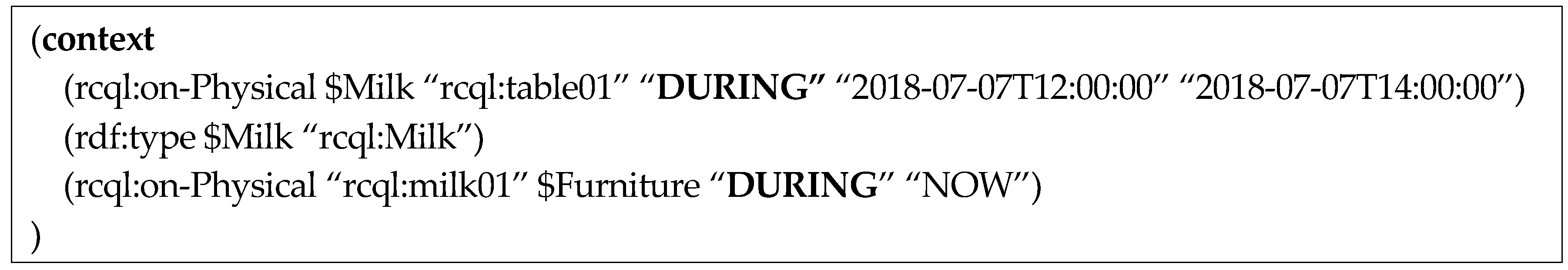

Figure 10 illustrates a multiple context query using time-interval operators.

As shown in Figure 10, a multiple context query consists of two or more query patterns. The query in this figure represents “Where is the milk that was on the table during lunch, between 12:00 and 14:00, now?” The first query pattern uses a time-interval operator to query the objects that were on the table during lunch. The second query pattern restricts the objects found by the first pattern to milk. The last query pattern queries the furniture on which that milk is currently placed. Here, NOW is a time constant that is dynamically bound to the current time.
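A multiple context query behaves like a conjunctive query: patterns are evaluated in order, and variables such as $Object carry bindings from one pattern to the next. The sketch below is a minimal nested-loop join under stated assumptions: times are hours, facts carry half-open validity intervals, DURING uses strict Allen semantics, and the names rcql:type, rcql:Milk, rcql:milk01, rcql:bread01, and rcql:refrigerator01 are hypothetical. It is not ST-RCQP’s translation or processing method.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (predicate, subject, object, start, end); times in hours, end=None means "still valid"
Fact = Tuple[str, str, str, float, Optional[float]]
Condition = Callable[[float, Optional[float]], bool]
Pattern = Tuple[str, str, str, Condition]
Binding = Dict[str, str]


def match_term(term: str, value: str, binding: Binding) -> Optional[Binding]:
    """Unify one pattern term with a fact value; terms starting with '$' are variables."""
    if not term.startswith("$"):
        return binding if term == value else None
    if term in binding:
        return binding if binding[term] == value else None
    return {**binding, term: value}


def evaluate(patterns: List[Pattern], facts: List[Fact]) -> List[Binding]:
    """Evaluate patterns left to right, joining them on shared variables."""
    bindings: List[Binding] = [{}]
    for pred, subj, obj, cond in patterns:
        extended: List[Binding] = []
        for b in bindings:
            for f_pred, f_subj, f_obj, start, end in facts:
                if not cond(start, end):
                    continue
                b1 = match_term(pred, f_pred, b)
                b2 = match_term(subj, f_subj, b1) if b1 is not None else None
                b3 = match_term(obj, f_obj, b2) if b2 is not None else None
                if b3 is not None:
                    extended.append(b3)
        bindings = extended
    return bindings


def during(lo: float, hi: float) -> Condition:
    """Strict Allen DURING: the fact's validity interval lies inside (lo, hi)."""
    return lambda start, end: end is not None and lo < start and end < hi


def at(t: float) -> Condition:
    """The fact is valid at time point t (half-open interval [start, end))."""
    return lambda start, end: start <= t and (end is None or t < end)


def always(start: float, end: Optional[float]) -> bool:
    return True


NOW = 18.0  # hypothetical: bound to the current time when the query is processed
facts: List[Fact] = [
    ("rcql:on-Physical", "rcql:milk01", "rcql:table01", 12.25, 13.5),
    ("rcql:on-Physical", "rcql:bread01", "rcql:table01", 12.5, 13.0),
    ("rcql:on-Physical", "rcql:milk01", "rcql:refrigerator01", 13.5, None),
    ("rcql:type", "rcql:milk01", "rcql:Milk", 0.0, None),
    ("rcql:type", "rcql:bread01", "rcql:Bread", 0.0, None),
]
query: List[Pattern] = [
    ("rcql:on-Physical", "$Object", "rcql:table01", during(12.0, 14.0)),
    ("rcql:type", "$Object", "rcql:Milk", always),
    ("rcql:on-Physical", "$Object", "$Furniture", at(NOW)),
]
print(evaluate(query, facts))
# [{'$Object': 'rcql:milk01', '$Furniture': 'rcql:refrigerator01'}]
```

Each pattern narrows the candidate bindings, so placing the most selective pattern first keeps the intermediate results small.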

The query languages of ORO and SELECTSCRIPT can query only current context knowledge because the valid time of context knowledge cannot be specified. In contrast, ST-RCQL can query context knowledge valid at specific times in both the past and the present. KnowRob also allows valid past context knowledge to be queried; however, it provides only one time operator, whereas ST-RCQL provides a rich set of 13 time operators based on Allen’s interval algebra, allowing context knowledge of different periods to be queried much more concisely. Furthermore, ST-RCQL supports time constants such as NOW and TODAY, enabling even more concise and abstracted queries.
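Time constants are resolved when a query is processed rather than when it is written. The sketch below shows one plausible resolution, assuming NOW becomes a time point and TODAY becomes the half-open interval from today’s midnight to tomorrow’s; the function name and these semantics are assumptions, not ST-RCQL’s definitions.

```python
from datetime import datetime, time, timedelta
from typing import Tuple, Union

Resolved = Union[datetime, Tuple[datetime, datetime]]


def resolve_time_constant(name: str, now: datetime) -> Resolved:
    """Bind a time constant when the query is processed (assumed semantics).

    NOW resolves to a time point; TODAY resolves to the half-open interval
    [today 00:00, tomorrow 00:00) in the timezone of `now`.
    """
    if name == "NOW":
        return now
    if name == "TODAY":
        midnight = datetime.combine(now.date(), time.min, tzinfo=now.tzinfo)
        return (midnight, midnight + timedelta(days=1))
    raise ValueError(f"unknown time constant: {name}")


now = datetime(2018, 7, 7, 15, 30)
print(resolve_time_constant("NOW", now))  # 2018-07-07 15:30:00
print(resolve_time_constant("TODAY", now))
```

Because the binding happens at processing time, the same stored query text yields different results on different days.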

7. Conclusions

In this paper, we proposed the context query language ST-RCQL and the query-processing system ST-RCQP for service robots working in indoor environments. ST-RCQL was designed to query 3D spatial relations among objects over various periods based on Allen’s interval algebra. Automatic query-translation rules were designed so that very concise and intuitive queries can be written, reflecting the nature of service robots, which mainly handle abstracted symbolic knowledge in terms of service. Furthermore, to support the real-time requirements of service robots, this study proposed a backward-reasoning query-processing method and a method for accelerating query processing by building spatio-temporal indices over the individual perception information of objects. Various experiments verified the suitability of ST-RCQL as a robot context query language and the efficient performance of ST-RCQP.

From the perspective of storing and retrieving context knowledge, uncertainty is a problem that must be handled as carefully as time dependence, but it was not addressed in this study. In future work, we plan to research a context query language and processing method that consider both the time dependence and the uncertainty of context knowledge.