Abstract

Impairments in gait occur after alcohol consumption, and, if detected in real-time, could guide the delivery of “just-in-time” injury prevention interventions. We aimed to identify the salient features of gait that could be used for estimating blood alcohol content (BAC) level in a typical drinking environment. We recruited 10 young adults with a history of heavy drinking to test our research app. During four consecutive Fridays and Saturdays, every hour from 8 p.m. to 12 a.m., they were prompted to use the app to report alcohol consumption and complete a 5-step straight-line walking task, during which 3-axis acceleration and angular velocity data was sampled at a frequency of 100 Hz. BAC for each subject was calculated. From sensor signals, 24 features were calculated using a sliding window technique, including energy, mean, and standard deviation. Using an artificial neural network (ANN), we performed regression analysis to define a model determining association between gait features and BACs. Part (70%) of the data was then used as a training dataset, and the results tested and validated using the rest of the samples. We evaluated different training algorithms for the neural network and the result showed that a Bayesian regularization neural network (BRNN) was the most efficient and accurate. Analyses support the use of the tandem gait task paired with our approach to reliably estimate BAC based on gait features. Results from this work could be useful in designing effective prevention interventions to reduce risky behaviors during periods of alcohol consumption.

1. Introduction

Acute alcohol intoxication is associated with numerous health risks. For example, impaired driving due to alcohol was implicated in 31% of the 33,000 deaths from motor vehicle accidents in the USA in 2014 [1]. These consequences largely stem from alcohol’s impairing effects on psychomotor performance [2]. Compounding this risk are impaired decision-making [3] and lack of awareness of the degree of alcohol-related impairment during drinking episodes [4]. Strategies to measure alcohol-related psychomotor impairments and provide real-time feedback to individuals could deter involvement in activities that require psychomotor function (e.g., driving), thus reducing the likelihood of injury [5].

One measure of psychomotor performance that is sensitive to alcohol is walking (gait). Gait requires coordination of multiple sensory and motor systems. Research in a controlled laboratory setting has shown that alcohol affects both postural stability [6] and gait [7]. Although law enforcement professionals have used subjective performance on a heel-to-toe tandem gait task as a field sobriety test for years, there is no current process to objectively measure aspects of gait during drinking occasions. The rapid growth of smartphone ownership [8] suggests that these devices could be useful to objectively measure gait impairment during drinking episodes. Although a couple of small field studies have used smartphone accelerometers and gyroscopes to detect gait abnormalities during alcohol consumption [9,10], none has determined the association of gait features with blood alcohol content (BAC) levels.

The purpose of this work was to identify the features of movement patterns (gait) that can be measured through a smartphone’s 3-axis accelerometer and gyroscope and that could be used for estimating the BAC level in a typical drinking environment. To accomplish this aim, we designed an iPhone app (DrinkTRAC) to collect smartphone sensor-based data on gait (3-axis accelerometer, gyroscope, magnetometer) and ecological momentary assessment (EMA) measures of the self-reported number of drinks consumed each hour, from 8 p.m. to 12 a.m., during weekend evenings (Fridays and Saturdays). We did not collect detailed data on drinking before 7 p.m. (1 h before the first assessment at 8 p.m.), which could have led to under-estimated BAC in some cases. However, based on prior research in a similar cohort of young adults [11], less than 15% of drinking occasions start before 7 p.m. We enrolled 10 young adults with a history of heavy drinking in a repeated-measures study to provide smartphone sensor and self-report data over a period of four consecutive weeks. We used a Bayesian regularized neural network (BRNN) to perform regression analysis to estimate BAC. Results from this work could be useful in designing effective prevention interventions to reduce risky behaviors during periods of alcohol intoxication.

In this paper, we propose a novel approach for analyzing the movement patterns of people and develop a supervised learning model to associate their gait anomalies with BAC levels. The gait features are captured using smartphone-based inertial measurements, using accelerometer, gyroscope, and magnetometer sensors. By exploring the aspects of gait and extracting their salient features, we input them to a supervised machine learning technique. Thus, the primary goal of this work is to develop and implement a gait analyzing system, using the inertial sensors of smartphones inside a user’s pocket, while simultaneously capturing their movement pattern for the detection of alcohol-induced changes in gait patterns. The results of this work provide us with an in-depth understanding of the spatiotemporal properties of human gait that are affected by alcohol. In summary, the contributions of the paper are:

- Exploring and identifying gait properties and extracting some features from gait signals measured by smartphone sensors that could estimate BAC values in a typical drinking environment.

- Comparing different machine-learning techniques to predict BAC values.

- Demonstrating the feasibility of using smartphone sensor measurements to estimate BAC values.

The rest of the paper is structured as follows: in Section 2, we explain methods to measure alcohol consumption and the ways in which BAC levels are computed. In Section 3, we describe our method for collecting data through the smartphone-based application. In Section 4, we explain sensor data processing for movement pattern analysis and extracting gait features. We also discuss some details of our network architecture and training procedures. In Section 5, we present the results comparing different training techniques for the artificial neural network and the process of finding the most efficient technique. Furthermore, we evaluate two other regression algorithms. In Section 6, we discuss some related work. Finally, in Section 7, we provide concluding remarks and future directions.

2. Background: Methods to Measure Alcohol Consumption

The ability to accurately measure BAC in the real world is vital for understanding the relationship between alcohol consumption patterns and the impairments of normal functioning that occur (such as those related to gait). BACs vary as a function of gender, total amount of alcohol consumed, type of alcohol, time spent drinking, food consumption, body weight and individual differences in absorption and metabolism rates [12]. Available methods to measure BAC outside of healthcare facilities include self-reporting and non-invasive monitoring methods, i.e., breathalyzers or transdermal alcohol monitors [13]. Due to limitations in feasibility of measuring in-vivo BACs using monitors, the majority of scientific literature on alcohol consumption has been based on retrospective self-reports [14]. In 1932, the first equation to estimate blood-alcohol content from self-reported alcohol consumption was published [15]. Since then, researchers have identified ways to improve the accuracy of that formula, such as modifications primarily in how they adjust body weight to account for gender differences in water content of the body and secondarily, in how the overall dose of alcohol is calculated. One of the most accurate formulas was created by Matthews and Miller in 1979 [16]:

$$\mathrm{BAC} = \left[\frac{c}{2} \times \frac{GC}{w}\right] - \left(\beta_{60} \times t\right) \quad (1)$$

Here, BAC is blood alcohol concentration expressed in g/dL; c is the number of standard drinks reported; GC is a gender constant (9.0 for women and 7.5 for men); w is body weight in pounds; β60 is the metabolism rate of alcohol per hour (0.017 g/dL); and t is the number of hours spent drinking. This formula was found to have a significantly stronger intraclass correlation with breath alcohol concentrations (criterion standard) than did the other equations when measured after an uncontrolled episode of drinking [17]. Still, the accuracy of self-reported eBAC values depends on respondents’ ability to recall the number of drinks they consumed, their knowledge of standardized drink sizes, and the absence of reporting biases due to minimizing sensitive information [18].
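As a worked illustration, the following minimal sketch evaluates the formula under the commonly cited form of the Matthews and Miller equation, eBAC = (c/2)(GC/w) − β60·t, with body weight w in pounds. The function name, the argument names, and the flooring of negative values at zero are assumptions made for this example, not part of the study's software.

```python
def estimate_bac(drinks, weight_lb, is_female, hours, beta60=0.017):
    """Estimated BAC (g/dL) from self-reported standard drinks.

    Assumed form: eBAC = (c / 2) * (GC / w) - beta60 * t, with w in pounds.
    """
    gender_constant = 9.0 if is_female else 7.5
    ebac = (drinks / 2.0) * (gender_constant / weight_lb) - beta60 * hours
    # Assumption for this sketch: a negative estimate is reported as zero.
    return max(ebac, 0.0)


# Example: a 160 lb man reporting 4 standard drinks over 2 hours.
example = estimate_bac(drinks=4, weight_lb=160, is_female=False, hours=2)
```

For this example the estimate is about 0.060 g/dL: (4/2) × (7.5/160) = 0.09375, minus 0.017 × 2 = 0.034.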

In this study, we chose not to use transdermal alcohol monitors or breathalyzers for several reasons. First, transdermal alcohol monitors (e.g., WrisTAS, SCRAM) are relatively costly to acquire and maintain, which can limit their wide use. Second, transdermal alcohol monitors and breathalyzers involve some burden for participants (e.g., possible minor skin irritation from SCRAM, need to carry breathalyzer) [19,20,21,22]. By comparison, smartphone sensor data can be collected with relatively low burden unobtrusively on an individual’s personal phone [23]. Third, transdermal alcohol monitors have been found to be less useful in detecting lower drinking quantities, as compared to self-reports, and content readings tend to lag behind consumption by up to several hours [23,24].

We used retrospective self-reports and the Matthews and Miller formula to estimate eBAC. To assist with recall, we asked participants to report their number of drinks per hour. The use of experience sampling methods to collect self-reports of alcohol use that is more proximal to drinking occasions can minimize any biases associated with retrospective reporting [25]. To ensure the standardization of drink amounts, the DrinkTRAC app presented participants with a color picture of “standard drink” sizes (based on National Institute on Alcohol Abuse and Alcoholism guidelines [26]: 12 oz of beer, 5 oz of wine, 1.5 oz of liquor) and asked: “How many standard drinks did you have in the past hour?” with a drop-down menu ranging from 0 to 30. In this way, participants reported alcohol consumption in standard drink units, in order to minimize error in self-report of alcohol consumption. Self-report of alcohol use using EMA has shown validity [27,28]. To reduce reporting biases, we used a technology platform to collect sensitive data, which has been shown to be more accurate than in-person reporting [29].

3. Data Collection

3.1. Smartphone Application (“DrinkTRAC”) for Data Collection

This prospective study recruited a convenience sample of young adults who were identified in the emergency department (ED) as reporting past hazardous drinking between 19 February and 9 May 2016. All participants completed informed consent protocols prior to study procedures and were provided with resources for alcohol treatment.

3.2. Participants

Participants were young adults (aged 21–26 years) who presented to an urban ED. A total of 28 medically stable ED patients who were not seeking treatment for substance use, not intoxicated, and who were going to be discharged to home, were approached by research staff. Among those eligible to be approached, 23 patients provided consent to complete an alcohol use severity screen. Those who reported recent hazardous alcohol consumption based on an Alcohol Use Disorder Identification Test for Consumption (AUDIT-C) score of ≥3 for women or ≥4 for men [30] and who drank primarily on weekends were eligible for participation. We excluded those who reported any medical condition that resulted in impaired thinking, memory, or gait, those who reported past treatment for alcohol use disorder, and those without an iOS phone. A total of 10 participants met the study enrollment criteria and installed the DrinkTRAC app on their phone. We instructed participants to refrain from any non-alcohol substance use (excluding cigarette use) during the sampling days. We also informed participants that they would receive $10 for completing the baseline survey and app-based tasks in the ED, $10 for completing the exit survey at four weeks, and $1 per completed EMA (up to an additional $40). Table 1 shows the sample descriptive statistics.

Table 1.

Sample descriptive statistics.

3.3. Smartphone Application Design

The DrinkTRAC app was developed using Apple’s ResearchKit platform, as it allowed for convenient and professional-appearing modular builds that incorporated timed psychomotor tasks. Baseline survey questions included socio-demographic measures and severity of alcohol use. The app then presented participants with EMA, including two questions (cumulative number of drinks consumed and perceived intoxication) followed by psychomotor tasks, including a 5-step tandem gait task. The research associate was present to ensure understanding and to observe compliance with instructions on the initial trial of the app’s tasks, which were conducted in the ED.

Over four consecutive Fridays and Saturdays, every hour from 8 p.m. to 12 a.m., participants were sent an electronic notification to log in to the DrinkTRAC app and complete the EMA. We chose to sample data on weekend evenings, given that this is a time when young adults typically drink alcohol [31]. We collected EMAs hourly from 8 p.m. to 12 a.m. on those nights, with an intention of capturing both the ascending and descending limbs of alcohol intoxication. We used fixed hourly assessment times, given that they would provide a predictable framework for participants and would allow us to more easily calculate eBAC changes over the course of the evening. Given the deleterious effect of alcohol on memory, we chose to collect drinks consumed since last report as opposed to cumulative drinks over an entire drinking occasion. We designed the tandem gait task to take less than 45 s to optimize completion and reduce potential for disruptions that could interfere with task performance. Basic text instructions were given prior to the tandem gait task, and when the task was completed, participants were presented with a figure of their completion rates for the day.

3.4. Estimated Blood Alcohol Concentration

We calculated eBAC during each hour when data were available using the aforementioned formula, created by Matthews and Miller [16]. Estimates produced by this formula correlate with breath alcohol concentration and were found to perform best, relative to estimates from other commonly used eBAC formulas [17]. When drinks consumed in any prior hours were missing, we assumed no drinks were consumed during that period. When drinks had been consumed in prior hours, we incorporated those drinks into BAC calculations (with alcohol clearance taken into account). As shown in related research using the same data set [32], participants completed 32% of EMA. Higher rates of missing EMA data occurred later in the evening and over time in the study. Within the 128 completed EMA, we captured 38 unique drinking episodes, with each participant reporting at least three drinking episodes. Almost half of the EMA (n = 60, 46.9%) were completed either prior to drinking or on non-drinking evenings, 55 EMA (43.0%) were completed on the ascending eBAC limb, and 13 (10.1%) were completed on the descending eBAC limb. However, on a number of occasions, data on alcohol consumption in the hours prior to a given hour were missing and thus assumed to be zero, which may have resulted in under-estimation of BAC. On a drinking day, participants reported consuming a mean of 3.6 drinks (SD = 2.2; range: 1–10). The mean eBAC was 0.04 (SD = 0.05), with a peak of 0.23.
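The hourly bookkeeping described above can be sketched as follows. This is only an illustration under stated assumptions: missing reports are passed as `None` and counted as zero drinks (as in the text), each report is taken to cover the preceding hour, the drinking time t is counted from the first hour with a reported drink, and the formula is taken as eBAC = (c/2)(GC/w) − β60·t with w in pounds. The function and argument names are hypothetical.

```python
def hourly_ebac(drinks_per_hour, weight_lb, is_female, beta60=0.017):
    """Return one eBAC estimate (g/dL) per hourly report.

    drinks_per_hour: drinks reported for each hour; None marks a missing report.
    """
    gender_constant = 9.0 if is_female else 7.5
    total_drinks = 0.0
    hours_drinking = 0      # hours elapsed since the first reported drink
    started = False
    series = []
    for reported in drinks_per_hour:
        drinks = 0 if reported is None else reported  # missing -> assumed zero
        if drinks > 0:
            started = True
        if started:
            hours_drinking += 1
        total_drinks += drinks
        ebac = (total_drinks / 2.0) * (gender_constant / weight_lb) \
            - beta60 * hours_drinking
        series.append(max(ebac, 0.0))  # assumption: floor estimates at zero
    return series


# One evening for a 160 lb man: the 8 p.m. report is missing, then 2, 2, 0, 0.
evening = hourly_ebac([None, 2, 2, 0, 0], weight_lb=160, is_female=False)
```

In this example the estimates rise while drinks are being reported and fall once only clearance remains, which corresponds to the ascending and descending limbs mentioned above.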

3.5. Inertial Data Acquisition During Tandem Gait Task

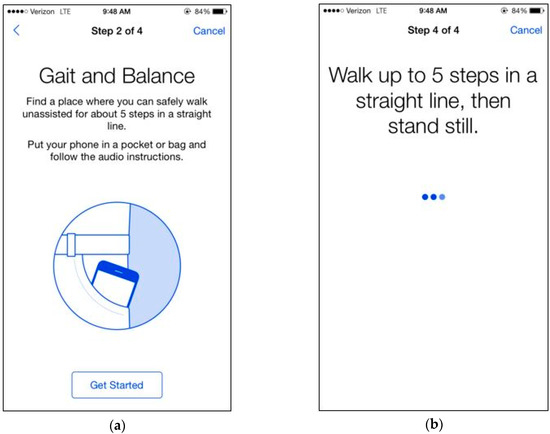

In the tandem gait task, participants were instructed to walk in a straight line for five steps. We advised participants not to continue if they felt that they could not safely walk five steps in a straight line unassisted. If participants clicked “next”, they were shown a picture of a phone in a front pocket and told: “Find a place where you can safely walk unassisted for about five steps in a straight line”, followed by the text: “Put the phone in a pocket or bag and follow the audio instructions. If you do not have somewhere to put the phone, keep it in your hand”. When the participant clicked “Get Started”, the app displayed a timer and played an audio recording of a voice counting down from 5 to 1. If the audio option was turned on, participants heard “Walk up to five steps in a straight line, then stand still”. We used the accelerometer and gyroscope sensors embedded in the phone to collect 3-axis acceleration and angular velocity at a sampling frequency of 100 Hz for 30 s. Figure 1a,b shows DrinkTRAC app screenshots of the tandem gait task.

Figure 1.

DrinkTRAC app screenshots of the tandem gait task. (a) Screenshot showing an example instruction screen from the Gait Task; (b) Screenshot showing another example instruction screen from the Gait Task.

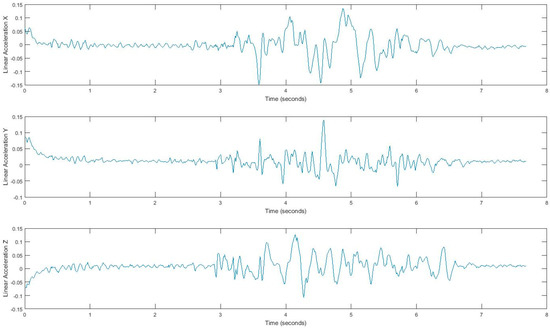

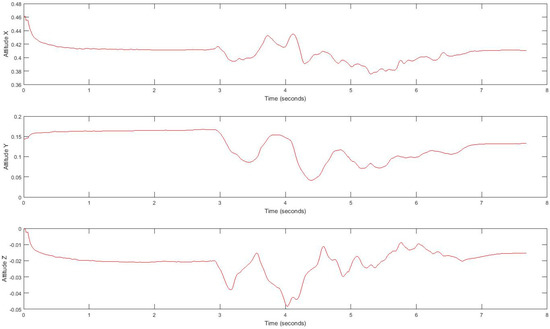

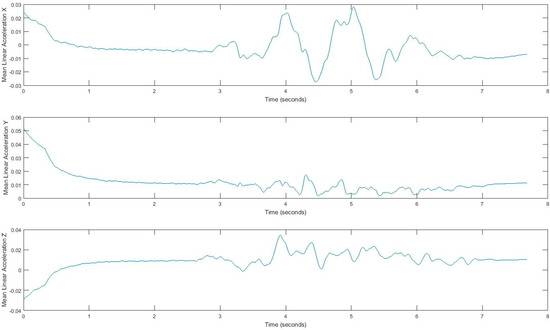

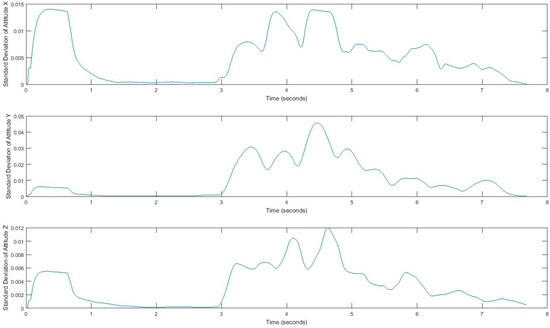

In our experiment, subjects’ movement generates linear acceleration and attitude signals measured by the smartphone. Raw acceleration data incorporate both gravity and the acceleration of the device. We removed the effect of gravity to obtain the acceleration of the device itself, called linear acceleration, which is determined by refining the gravity estimate with sensor fusion. Thus, the best result for computing linear acceleration requires not only an accelerometer but also a gyroscope. We also measured the attitude of the device, which is the device orientation computed from the accelerometer, magnetometer, and gyroscope. These values yield the Euler angles of the device. Figure 2 shows the linear acceleration signal for one subject and Figure 3 shows the three-axis device attitude signal for the same subject.
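To make the idea of separating gravity from device motion concrete, the sketch below uses a simple exponential low-pass filter as a stand-in: the slowly varying part of the raw signal is treated as gravity and subtracted. This is not the sensor-fusion procedure described above (which also uses the gyroscope); the function name and the smoothing factor `alpha` are assumptions chosen for illustration.

```python
def remove_gravity(samples, alpha=0.9):
    """Approximate linear acceleration from raw 3-axis accelerometer samples.

    samples: list of (ax, ay, az) tuples in m/s^2.
    alpha:   smoothing factor of the low-pass gravity estimate (assumed value).
    """
    gravity = list(samples[0])  # initialise the gravity estimate
    linear = []
    for sample in samples:
        # Low-pass filter: the gravity estimate follows slow changes only.
        gravity = [alpha * g + (1.0 - alpha) * a for g, a in zip(gravity, sample)]
        # What remains after subtracting gravity is the device's own motion.
        linear.append(tuple(a - g for a, g in zip(sample, gravity)))
    return linear


# A phone lying still measures only gravity, so its linear acceleration is ~0.
still = remove_gravity([(0.0, 0.0, 9.81)] * 50)
```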

Figure 2.

Tandem gait linear acceleration signal.

Figure 3.

Tandem gait attitude signal.

4. BAC Regression with Movement Pattern and Gait Features

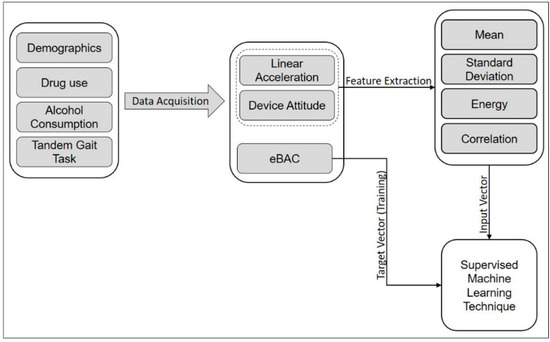

Inertial data are obtained from the sensor observations collected by the smartphone app (DrinkTRAC) during the tandem gait task. This set of data is used to explore gait and extract features to find the relationship between movement patterns and eBAC. We used these features as the input vector to a supervised learning model that performs regression analysis to estimate the target eBAC value. Figure 4 shows a schematic diagram of the data flow for eBAC estimation. The procedures of data acquisition and feature extraction are explained in more detail in subsequent sections.

Figure 4.

Schematic diagram of the data flow for eBAC estimation.

4.1. Feature Extraction for Gait Exploration

Raw inertial data are noisy and less informative than processed signals. Hence, we extract features that describe the properties of each gait signal. We consider four features, i.e., mean, standard deviation, correlation, and energy, computed over a sliding window. The extracted features belong to either the time domain or the frequency domain: mean, standard deviation, and correlation are time-domain features, and energy is a frequency-domain feature. Each feature is computed in three dimensions for each of the two signals (linear acceleration and attitude), resulting in a total of 24 possible features. The efficiency of these features has been discussed in [33,34,35]. Energy is computed by using a fast Fourier transform (FFT), which converts the signal to the frequency domain. Using a window size of 128 samples enables us to calculate these parameters quickly. The energy feature is the sum of the squared discrete FFT coefficient magnitudes of the signal, divided by the window length for normalization [33,34,35]. If $x_1, x_2, \ldots, x_n$ are the FFT components of the sliding window, then $\mathrm{Energy} = \frac{\sum_{i=1}^{n} |x_i|^2}{n}$. Energy and mean values differ for each movement pattern. Also, correlation effectively demonstrates translation in one dimension. Figure 5 and Figure 6 show the extracted features for a gait signal.

Figure 5.

Mean of linear acceleration, using a sliding window.

Figure 6.

Standard deviation of the attitude signal, computed using a sliding window.
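The feature computation for one sliding window can be sketched in plain Python as follows. Several details are assumptions for illustration rather than the study's settings: the 50% window overlap (`step=64`), the use of the population standard deviation, the pairwise form of the correlation feature (x-y, x-z, y-z), and all function names. A direct discrete Fourier transform is used instead of an FFT library so that the example stays self-contained; it yields the same coefficients.

```python
import cmath
import math
from statistics import mean, pstdev


def windows(signal, size=128, step=64):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def energy(window):
    """Sum of squared DFT coefficient magnitudes, divided by window length."""
    n = len(window)
    total = 0.0
    for k in range(n):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(window))
        total += abs(coeff) ** 2
    return total / n


def correlation(a, b):
    """Pearson correlation between two axes (0.0 if either axis is constant)."""
    mean_a, mean_b = mean(a), mean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def window_features(x, y, z):
    """12 features for one window of one 3-axis signal."""
    features = {}
    for name, axis in (("x", x), ("y", y), ("z", z)):
        features["mean_" + name] = mean(axis)
        features["std_" + name] = pstdev(axis)
        features["energy_" + name] = energy(axis)
    features["corr_xy"] = correlation(x, y)
    features["corr_xz"] = correlation(x, z)
    features["corr_yz"] = correlation(y, z)
    return features
```

Applying `window_features` to both the linear acceleration and the attitude signal gives 2 × 12 = 24 values per window, matching the feature count stated above. At 100 Hz, one 128-sample window spans 1.28 s.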

4.2. Bayesian Regularized Neural Network (BRNN) for BAC Regression

Neural networks can model the relationship between an input vector x and an output y. The learning process involves adjusting the parameters in a way that enables the network to predict the output value for new input vectors. One advantage of using neural networks for regression is that the nonlinear sigmoid function in the hidden layer gives them greater computational flexibility than a standard linear regression model [36]. Thus, we applied a neural network model to estimate eBAC. In order to efficiently design and train a neural network, we must find an appropriate network architecture, determine a suitable training set, and compute the corresponding parameters of the network (such as weights and learning rate) by using an efficient and effective algorithm. In the rest of this section, we explain the overall system architecture and the training process for the parameters.

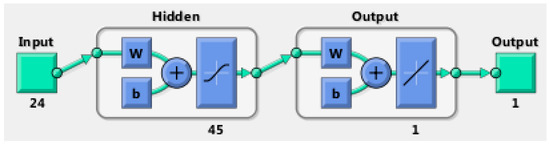

4.3. Neural Network Architecture

In this work, we used a multilayer perceptron (MLP) trained with Bayesian regularization (a BRNN) to model the nonlinear relationship between the input vector (the extracted gait features) and the output (the eBAC value). The basic MLP network is designed by arranging units in a layered structure, where each neuron in a layer takes its input from the output of the previous layer or from an external input. Figure 7 shows a schematic diagram of our MLP structure. The transfer function of the hidden layer in our feedforward network is a sigmoid function (Equation (2)). Since we use the MLP as a regression technique, the network must be able to produce output values outside the range [−1, 1]. Hence, in the output layer, we use a linear transfer function. This type of network can therefore serve as a general function approximator that approximates the eBAC as a function of the gait features. The mathematical model is given by Equations (2) and (3):

$$\hat{y} = \sum_{j} w_j \, f\Big(\sum_{i} w_{ij}\, x_i\Big) \quad (3)$$

where $x_i$ is the input, $w_{ij}$ are the nonlinear weights that connect input neurons to hidden layer neurons, $w_j$ are the linear weights that connect the hidden neurons with the output layer, and $f$ is the sigmoid transfer function of Equation (2).

Figure 7.

The architecture of the feedforward neural network.
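The forward pass of such a network, with one sigmoid hidden layer and a linear output, can be sketched as follows. The hyperbolic tangent is assumed here as the sigmoid because the text refers to the range [−1, 1]; the bias terms, the function name, and the argument names are likewise assumptions for illustration.

```python
import math


def mlp_forward(features, hidden_weights, hidden_biases,
                output_weights, output_bias):
    """Single-hidden-layer MLP: tanh hidden units, linear output unit.

    hidden_weights: one weight list per hidden neuron (same length as features).
    """
    hidden = [
        math.tanh(sum(w * x for w, x in zip(weights, features)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The linear output layer lets the prediction leave the range [-1, 1].
    return sum(v * h for v, h in zip(output_weights, hidden)) + output_bias


# Two saturated hidden units weighted by 3 give an output close to 6.
prediction = mlp_forward([10.0], [[1.0], [1.0]], [0.0, 0.0], [3.0, 3.0], 0.0)
```

Each hidden activation is bounded by the sigmoid, but the weighted sum in the output layer is not, which is why a linear output unit suits regression targets.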

4.4. Training

Numerous training algorithms and learning rules have been proposed for setting the weights and parameters in neural networks; however, none can guarantee finding a global minimum. Therefore, training is one of the most crucial steps in neural network design. Backpropagation, which is basically a gradient descent optimization technique, is the standard technique for training feedforward neural networks; however, it has some limitations, such as slow convergence, its local search nature, and overfitting or overtraining, which can cause the network to lose its ability to correctly estimate the output [36]. As a result, the validation of the models can be problematic. Moreover, optimization of the network architecture is sometimes time-consuming. Modifications of backpropagation, such as the conjugate-gradient and Levenberg-Marquardt algorithms, converge faster than standard gradient-descent backpropagation [37,38]. The Levenberg-Marquardt algorithm minimizes a sum of squared errors [39,40] and overcomes some of the limitations of the standard backpropagation algorithm, such as the overfitting problem.

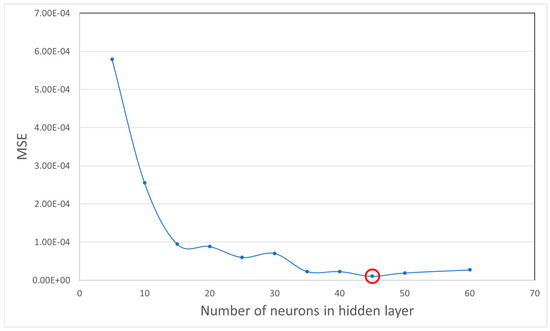

Avoiding overfitting can be a serious challenge, because we try to achieve an accurate estimation of the modeled function with a minimum number of input variables and parameters. Having too many neurons in the hidden layer can cause overfitting, since the noise in the data is modeled along with the trends. Conversely, an insufficient number of neurons in the hidden layer can prevent the network from learning the data. To find the optimum number of neurons in the hidden layer, we conducted a model selection experiment with the number of neurons ranging from 5 to 60 and calculated the cross-validation error for each setting. Figure 8 presents the error for networks with one hidden layer, where the X-axis shows the number of neurons and the Y-axis shows the error value. As highlighted in the figure, a hidden layer with 45 neurons is a good fit for the dataset.

Figure 8.

Mean square error for different numbers of neurons in the hidden layer.

According to the universal approximation theorem, a neural network with only one hidden layer and a bounded, continuous activation function can approximate any continuous function to arbitrary accuracy, given enough hidden neurons [41,42]. Hence, in all configurations in the experiments and tests, we used a single hidden layer. MacKay [43] proposed a Bayesian regularization algorithm to address the overfitting challenge. Moreover, irrelevant and highly correlated parameters can deteriorate the capability of the network to approximate the function, a problem that regularization can also mitigate [36]. Regularization can be modeled by incorporating Bayesian statistics. Through this method, we can remove most of the disadvantages of the feedforward neural network. In this study, we use a Bayesian regularized neural network (BRNN) for the regression analysis. Below, we review this technique, which is a modification of the Levenberg-Marquardt algorithm.

4.5. Levenberg-Marquardt Algorithm

The Levenberg-Marquardt algorithm is an iterative algorithm that finds the minimum of a multivariate function expressed as the sum of squares of nonlinear real-valued functions [44]. It is widely used for solving nonlinear least-squares problems and is usually considered the standard technique for doing so. This curve-fitting method combines two minimization methods: the gradient descent update and the Gauss-Newton update. Equation (4) represents the gradient descent update, and the normal equation for the Gauss-Newton update is shown by Equation (5):

where:

As seen in Equation (5), the Levenberg-Marquardt algorithm is a linear combination of the gradient descent update and the Gauss-Newton update, where the parameter updates adaptively vary between them. The damping parameter λ determines this variation: when λ is small, the update tends toward the Gauss-Newton update; when λ is large, it is closer to the gradient descent update. We started with a large λ value, so the first updates were small steps in the steepest-descent direction, as in gradient descent:
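As a minimal sketch of this damping behavior, the example below fits a toy two-parameter curve, y = a·exp(−k·x), rather than a neural network. It assumes the common damped normal equations (JᵀJ + λI)h = Jᵀr, where r is the residual vector and J the Jacobian of the model; the toy model, the factor of 10 used to adapt λ, and all names are assumptions made for illustration.

```python
import math


def levenberg_marquardt(xs, ys, a, k, lam=10.0, iterations=100):
    """Fit y = a * exp(-k * x) with a basic Levenberg-Marquardt loop."""

    def cost(a_value, k_value):
        return sum((y - a_value * math.exp(-k_value * x)) ** 2
                   for x, y in zip(xs, ys))

    current = cost(a, k)
    for _ in range(iterations):
        # Accumulate J^T J and J^T r for the two parameters (a, k).
        jtj_aa = jtj_ak = jtj_kk = g_a = g_k = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(-k * x)
            r = y - a * e                 # residual
            j_a, j_k = e, -a * x * e      # model derivatives w.r.t. a and k
            jtj_aa += j_a * j_a
            jtj_ak += j_a * j_k
            jtj_kk += j_k * j_k
            g_a += j_a * r
            g_k += j_k * r
        # Solve (J^T J + lam * I) h = J^T r for the 2x2 case (Cramer's rule).
        m_aa, m_kk = jtj_aa + lam, jtj_kk + lam
        det = m_aa * m_kk - jtj_ak * jtj_ak
        h_a = (g_a * m_kk - jtj_ak * g_k) / det
        h_k = (m_aa * g_k - jtj_ak * g_a) / det
        trial = cost(a + h_a, k + h_k)
        if trial < current:
            # Step accepted: trust the Gauss-Newton direction more.
            a, k, current = a + h_a, k + h_k, trial
            lam /= 10.0
        else:
            # Step rejected: fall back toward small gradient-descent steps.
            lam *= 10.0
    return a, k, current


xs = [0.1 * i for i in range(21)]
ys = [2.0 * math.exp(-1.5 * x) for x in xs]
fit_a, fit_k, final_cost = levenberg_marquardt(xs, ys, a=1.0, k=1.0)
```

Starting with a large λ keeps the first steps short and safe; as steps are accepted, λ shrinks and the update approaches the faster Gauss-Newton step.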

4.6. Bayesian Regularization of Neural Networks

Neural networks offer advantages over other regression techniques. The first is that they are universal approximators, which can model any continuous nonlinear function [43], although appropriate training data are essential to this process. Nevertheless, they are prone to both overfitting and overtraining. When overfitting occurs, the error on data not used for fitting decreases at the beginning of training, as expected, but then starts to increase again. The model then fits the training data closely but generalizes poorly.

This problem is addressed by using the Bayesian approach, where the weights of the network are considered as random variables. It enables us to apply statistical techniques to estimate distribution parameters [45]. Furthermore, because Bayesian regularization considers not only the weight, but also the network structure as a probabilistic framework, it makes neural networks insensitive to the architecture of the network if a minimal architecture has been provided [36]. In other words, Bayesian regularization can avoid overfitting by converting nonlinear systems into “well posed” problems [36,43]. In conventional training, an optimal set of weights is chosen by minimizing the sum squared error of the model output and target value; in the Bayesian regularization, one more term is added to the objective function:

$$F = \beta E_D + \alpha E_W \quad (8)$$

where $E_D$ is the sum of squared errors, and $E_W$, which is called weight decay, is the sum of squares of the weights in the network. $\alpha$, the decay rate, and $\beta$ are the objective function parameters [46]. Considering the objective function in Equation (8) and according to Bayes’s rule, the posterior distribution of the neural network weights can be written as in [47]:

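$$P(w \mid D, \alpha, \beta, M) = \frac{P(D \mid w, \beta, M)\, P(w \mid \alpha, M)}{P(D \mid \alpha, \beta, M)} \quad (9)$$

where D is the dataset, M is the network, and w is the weight vector. Also, $P(w \mid \alpha, M)$ is the prior density, which represents our knowledge of the weights before any data is collected. $P(D \mid w, \beta, M)$ is the likelihood function, which is the probability of the data occurring, given weights w. The denominator of Equation (9), $P(D \mid \alpha, \beta, M)$, is a normalization factor, which makes the total probability equal to 1 [45]: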

where:

so we have:

Foresee and Hagan [45] demonstrated that maximizing the posterior probability is equivalent to minimizing the regularized objective function $F = \beta E_D + \alpha E_W$. By using Bayes’s rule, the objective function parameters are optimized as follows [45]:

where $H$ is the Hessian matrix ($H = \beta \nabla^2 E_D + \alpha \nabla^2 E_W$) of the objective function, and MAP stands for maximum a posteriori. By substituting the H matrix, we are able to solve for the optimal values of $\alpha$ and $\beta$:

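$$\alpha^{MAP} = \frac{\gamma}{2 E_W(w^{MAP})}, \qquad \beta^{MAP} = \frac{n - \gamma}{2 E_D(w^{MAP})}$$

where $\gamma$ is the effective number of parameters, calculated as $\gamma = N - 2\alpha^{MAP}\, \mathrm{tr}\big((H^{MAP})^{-1}\big)$; N is the total number of parameters in the network; and n is the number of error terms in $E_D$. The Hessian matrix of F(w) must be computed; to avoid this cost, Foresee and Hagan [45] proposed using the Gauss-Newton approximation to the Hessian, which is readily available when the Levenberg-Marquardt optimization algorithm is used to find the minimum point.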
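The quantities involved in one re-estimation step can be sketched as follows, assuming the Foresee and Hagan formulation: objective F = βE_D + αE_W, effective number of parameters γ = N − 2α·tr(H⁻¹), and updates α = γ/(2E_W), β = (n − γ)/(2E_D). The trace of the inverse Hessian is passed in as a number rather than computed, and all function and argument names are hypothetical.

```python
def regularized_objective(errors, weights, alpha, beta):
    """Return (F, E_D, E_W) for F = beta * E_D + alpha * E_W."""
    e_d = sum(e * e for e in errors)      # sum of squared errors
    e_w = sum(w * w for w in weights)     # sum of squared weights
    return beta * e_d + alpha * e_w, e_d, e_w


def reestimate_hyperparameters(e_d, e_w, n_params, n_errors,
                               alpha, trace_inverse_hessian):
    """One update of gamma, alpha, and beta at the current minimum."""
    gamma = n_params - 2.0 * alpha * trace_inverse_hessian
    new_alpha = gamma / (2.0 * e_w)
    new_beta = (n_errors - gamma) / (2.0 * e_d)
    return gamma, new_alpha, new_beta


# Toy numbers: two residuals and two weights.
objective, e_d, e_w = regularized_objective(
    errors=[0.1, -0.2], weights=[1.0, 2.0], alpha=0.5, beta=2.0)
gamma, alpha, beta = reestimate_hyperparameters(
    e_d, e_w, n_params=2, n_errors=2, alpha=0.5, trace_inverse_hessian=1.0)
```

In training, these updates alternate with Levenberg-Marquardt steps on F until the weights and hyperparameters settle; γ then indicates how many of the N parameters the data effectively constrain.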

5. Results and Validation

In this section, we evaluate the designed network and present the results. First, we compare different training algorithms; then we conduct an experiment to see how effective an artificial neural network is when applied to this dataset, comparing the BRNN to a support vector machine (SVM) and linear regression.

5.1. Comparison of Different Training Algorithms

We compared three training algorithms to show that the BRNN approach is the most effective. Table 2 shows the mean squared error (MSE) and R values for conjugate gradient, Levenberg-Marquardt, and Bayesian regularization. Although the MSE and R results on the training data set are close, testing the networks on an independent data set that was kept separate from all computations reveals that Bayesian regularization outperforms the other two algorithms. Moreover, we prefer Bayesian regularization because it also adjusts the effective number of parameters and thereby influences the architecture of the network. Table 2 and Table 3 show the results of comparing the different training and optimization algorithms.

Table 2.

Testing training algorithms with testing data.

Table 3.

Testing training algorithms with independent samples.

5.2. Performance

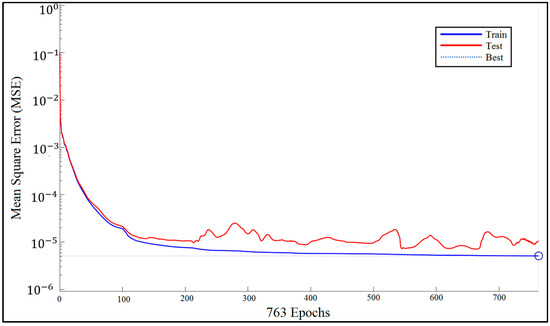

Figure 9 shows how network performance is improved during the training procedure. We measured the MSE for each of the training and test data sets. The BRNN algorithm does not use validation data.

Figure 9.

Bayesian regularized neural network (BRNN) performance.

5.3. Error Histogram

The blue bars represent training data and the red bars represent testing data (Figure 10). The histogram can give an indication of outliers, which are data points where the fit is significantly worse than that of most of the data.

Figure 10.

Error histogram (20 bins) of the errors between target values and predicted values after training the feedforward neural network.

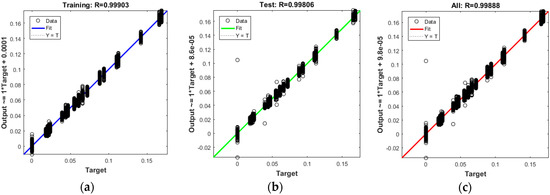

In this case, we can see that while most errors fall between −0.012 and +0.012, there are some training points and just a few test points that are outside of that range. These outliers are also visible on the testing regression plot (Figure 11). If the outliers are valid data points but are unlike the rest of the data, then the network is extrapolating for these points. It means more data similar to the outlier points should be considered in training analysis and that the network should be retrained.
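A minimal sketch of this kind of error analysis is shown below: it bins the errors (target minus prediction) into 20 equal-width bins and flags points whose absolute error exceeds 0.012, the range mentioned above. The function names and sample values are hypothetical.

```python
def error_histogram(targets, outputs, bins=20):
    """Return (errors, bin_edges, counts) for errors = target - output."""
    errors = [t - o for t, o in zip(targets, outputs)]
    low, high = min(errors), max(errors)
    # Fall back to a unit width if every error is identical.
    width = (high - low) / bins or 1.0
    counts = [0] * bins
    for e in errors:
        # The maximum error would land in bin index `bins`; clamp it.
        index = min(int((e - low) / width), bins - 1)
        counts[index] += 1
    edges = [low + i * width for i in range(bins + 1)]
    return errors, edges, counts


def find_outliers(errors, threshold=0.012):
    """Indices of samples whose absolute error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if abs(e) > threshold]


# Hypothetical eBAC targets and network outputs.
targets = [0.05, 0.08, 0.10, 0.02]
outputs = [0.051, 0.079, 0.070, 0.02]
errors, edges, counts = error_histogram(targets, outputs)
outliers = find_outliers(errors)
```

The flagged indices can then be inspected to decide whether the corresponding samples are valid but under-represented in the training data.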

Figure 11.

The best fit linear regression between outputs and targets for (a) Training data; (b) Test data; (c) All data.

5.4. Regression Results

The plots in Figure 11 show the regression results for the training data, the testing data, and all data combined. The dashed line in each plot represents the perfect result, in which outputs = targets. The solid line in each plot represents the best-fit linear regression line between outputs and targets. At the top of each plot, we also report the R value as an indication of the relationship between the outputs and the targets. If R = 1, there is an exact linear relationship between the outputs and the targets. If R is close to zero, there is no linear relationship between the outputs and the targets. In Figure 11, as well as in Table 2 and Table 3, the R values show a reliable fit. The test results also show R values that are greater than 0.9. However, the scatter plot is helpful in showing that certain data points have poor fits.
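The regression lines and R values in such plots can be reproduced along the following lines. This is only a sketch with hypothetical names and data: it fits outputs ≈ slope × targets + intercept by ordinary least squares, derives R from the same sums, and lists the points that lie farthest from the outputs = targets line.

```python
import math


def fit_line(targets, outputs):
    """Least-squares fit of outputs on targets; returns (slope, intercept, r)."""
    n = len(targets)
    mean_t = sum(targets) / n
    mean_o = sum(outputs) / n
    s_tt = sum((t - mean_t) ** 2 for t in targets)
    s_oo = sum((o - mean_o) ** 2 for o in outputs)
    s_to = sum((t - mean_t) * (o - mean_o) for t, o in zip(targets, outputs))
    slope = s_to / s_tt
    intercept = mean_o - slope * mean_t
    r = s_to / math.sqrt(s_tt * s_oo)
    return slope, intercept, r


def worst_fits(targets, outputs, k=1):
    """Indices of the k points farthest from the outputs = targets line."""
    order = sorted(range(len(targets)),
                   key=lambda i: abs(outputs[i] - targets[i]),
                   reverse=True)
    return order[:k]


# Hypothetical values: every output is offset from its target by 0.1.
slope, intercept, r = fit_line([0.0, 1.0, 2.0], [0.1, 1.1, 2.1])
```

A slope near 1 and an intercept near 0 indicate that the solid line nearly coincides with the dashed line; R alone does not guarantee this, because it measures linearity rather than agreement.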

5.5. Comparison with Other Regression Techniques

We compared the MLP with SVM and linear regression, evaluating all three techniques on the same extracted features. For each technique, we calculated the correlation coefficient, mean absolute error, root mean squared error, relative absolute error, and root relative squared error. Table 4 shows the results of the comparison. Based on these results, and most importantly on the root mean squared error, the MLP outperforms SVM and linear regression.

Table 4.

Comparison of different regression techniques for BAC estimation.
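The error measures used in this comparison can be computed as in the sketch below, which follows their common definitions: the relative errors normalize by the error of a baseline that always predicts the mean of the actual values. The function name and the sample numbers are hypothetical.

```python
import math


def regression_metrics(actual, predicted):
    """Return MAE, RMSE, RAE, and RRSE for paired actual/predicted values."""
    n = len(actual)
    mean_actual = sum(actual) / n
    abs_err = sum(abs(p - a) for a, p in zip(actual, predicted))
    sq_err = sum((p - a) ** 2 for a, p in zip(actual, predicted))
    # Errors of the baseline that always predicts the mean of `actual`.
    base_abs = sum(abs(a - mean_actual) for a in actual)
    base_sq = sum((a - mean_actual) ** 2 for a in actual)
    return {
        "mae": abs_err / n,
        "rmse": math.sqrt(sq_err / n),
        "rae": abs_err / base_abs,
        "rrse": math.sqrt(sq_err / base_sq),
    }


# Hypothetical values chosen so the results are easy to check by hand.
metrics = regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
```

Relative errors below 1 (or below 100% when expressed as percentages) mean the model does better than the mean-only baseline.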

6. Related Work

Smartphone-based detection of alcohol consumption from gait patterns captured by inertial sensors was proposed by Kao et al. [48], who labeled each gait signal with a Yes or a No in relation to alcohol intoxication. That study did not examine the quantity of drinks consumed, but focused its analyses solely on classifying a subject as intoxicated or not, thus limiting applicability across different ranges of BAC. Park et al. [49] used a machine learning classifier to distinguish sober walking from alcohol-impaired walking by measuring gait features with a shoe-mounted accelerometer, which is impractical to use in the real world. Arnold et al. [9] also used smartphone inertial sensors to determine the number of drinks (not BAC), an approach that could be prone to errors given that the association between number of drinks and BAC varies by sex and weight.

Kao et al. [48] conducted a gait anomaly detection analysis by processing acceleration signals. Arnold et al. [9] utilized naive Bayes, decision trees, SVMs, and random forest methods, where random forest turned out to be the best classifier for their task. Also, in Virtual Breathalyzer [50] AdaBoost, gradient boosting, and decision trees were used for classifying whether the subject was intoxicated (yes or no). Furthermore, they implemented AdaBoost regression and regression trees (RT), as well as Lasso for estimation of BrAC.

Our approach differs from prior work in several ways. First, we use a widely available software platform for collecting movement data (Apple ResearchKit). Second, we calculate eBAC using established formulas, thus providing a more accurate representation of actual blood alcohol content than drink counting alone. Third, we standardized the gait task, thus removing random variability in naturalistic walking. Fourth, we use not only accelerometers to understand movement data, but also consider gyroscope and magnetometer measurements. Fifth, we use a sliding window technique for extracting features and feeding them to an MLP, which outperformed the other evaluated approaches. Finally, instead of simply modeling the association of gait with drinking (yes/no values), we examine these relationships across a range of BAC values.
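To make the sliding window idea concrete, the sketch below splits one sensor axis into overlapping windows and computes the mean, standard deviation, and energy of each window. The window length (100 samples, i.e., 1 s at the 100 Hz sampling rate), the 50% overlap, and the definition of energy as the mean of squared samples are assumptions made for illustration, not the settings used in this study.

```python
import statistics


def sliding_windows(samples, window_size, step):
    """Yield consecutive windows of `window_size` samples, advancing by `step`."""
    for start in range(0, len(samples) - window_size + 1, step):
        yield samples[start:start + window_size]


def window_features(window):
    """Mean, population standard deviation, and energy of one window."""
    return {
        "mean": statistics.fmean(window),
        "std": statistics.pstdev(window),
        # Energy taken here as the mean of squared samples (an assumption).
        "energy": sum(x * x for x in window) / len(window),
    }


def extract_features(samples, window_size=100, step=50):
    """Feature dictionaries for every full window in a single-axis signal."""
    return [window_features(w)
            for w in sliding_windows(samples, window_size, step)]


# Tiny hypothetical signal so the result can be checked by hand.
features = extract_features([1.0, 2.0, 3.0, 4.0], window_size=2, step=2)
```

Applying the same routine to each accelerometer and gyroscope axis and concatenating the results would give one feature vector per window for the regression model.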

7. Conclusions and Future Directions

This work provides initial support for the utility of using movement analysis in the real world to detect alcohol intoxication in terms of eBAC. We designed and used a smartphone application (DrinkTRAC) for collecting both self-reported alcohol consumption data to calculate eBAC and smartphone sensor-based movement data during a tandem gait task to understand gait impairments. We processed the raw data and extracted some features using a sliding window. These features were fed into a Bayesian regularized neural network, and we were able to model and fit a curve to the function of eBAC with movement pattern. The results indicated that the approach is reliable and that it can be used to identify the level of blood alcohol content during naturalistic drinking occasions. However, there are some limitations.

Other aspects of body movement, such as body sway, can also be used for detecting intoxication and can be captured using the same phone sensor data. To capture these additional aspects of body movement and to improve the efficiency of the current model, more sophisticated gait features, such as total harmonic distortion (THD), should be studied and evaluated in order to select the feature set that achieves the best results. Such studies of body movement and gait-related feature selection are a possible direction for future research.

This pilot study is limited by its small sample size (n = 10) and by the amount of missing EMA data (~70%), particularly for the descending limb of alcohol intoxication. Nevertheless, as a proof-of-concept study, it demonstrates the potential of accurate detection of drinking episodes using phone sensor data in the natural environment. Our findings may not be applicable to other populations, such as young adults with lighter alcohol use, or to other age groups, such as adolescents. The majority of participants were female and white, which limits our study’s overall generalizability. Therefore, the model needs to be replicated in larger samples in future work. Furthermore, individual differences in tolerance to alcohol, which were not examined in this study, might affect the accuracy of the model and warrant future research. Moreover, because it is important to test the relationship between BAC and gait in a more controlled environment, a crucial next step is to conduct a similar study while more tightly controlling alcohol intake.

Participants were expected to refrain from non-alcohol substance use during the sampling days; however, in-the-moment data on other substance use were not collected, and there was no self-report or objective verification of such use, which might have affected eBAC and model accuracy. Additionally, the DrinkTRAC app was made only for iOS devices, which affected study eligibility and limits the generalizability of results to other mobile devices. Self-reporting of alcohol use using EMA has demonstrated reliability and validity [51], but may be subject to bias. Future work could use transdermal alcohol sensors to validate findings and EMA schedule flexibility to reduce missing data.

Acknowledgments

The study was approved by the University of Pittsburgh IRB.

Author Contributions

Brian Suffoletto and Tammy Chung conceived, designed, and conducted the experiments. Hassan A. Karimi and Pedram Gharani designed the analysis approach and analyzed the final results. Pedram Gharani used existing tools and developed the necessary code to analyze the data.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ANN | Artificial neural network |
| BAC | Blood alcohol content |
| BRNN | Bayesian regularized neural network |
| eBAC | Estimated blood alcohol content |
| EMA | Ecological momentary assessment |
| FFT | Fast Fourier transform |
| MLP | Multilayer perceptron |
| SVM | Support vector machine |

References

1. National Center for Statistics and Analysis. Alcohol-Impaired Driving: 2014 Data; Traffic Safety Facts, DOT HS 812 231; National Highway Traffic Safety Administration: Washington, DC, USA, 2015. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/Publication/812231 (accessed on 13 December 2017).

2. Christoforou, Z.; Karlaftis, M.G.; Yannis, G. Reaction times of young alcohol-impaired drivers. Accid. Anal. Prev. 2013, 61, 54–62.

3. Steele, C.M.; Josephs, R.A. Alcohol myopia: Its prized and dangerous effects. Am. Psychol. 1990, 45, 921–933.

4. Morris, D.H.; Treloar, H.R.; Niculete, M.E.; McCarthy, D.M. Perceived danger while intoxicated uniquely contributes to driving after drinking. Alcohol. Clin. Exp. Res. 2014, 38, 521–528.

5. Shults, R.A.; Elder, R.W.; Sleet, D.A.; Nichols, J.L.; Alao, M.O.; Carande-Kulis, V.G.; Zaza, S.; Sosin, D.M.; Thompson, R.S. Reviews of evidence regarding interventions to reduce alcohol-impaired driving. Am. J. Prev. Med. 2001, 21, 66–88.

6. Nieschalk, M.; Ortmann, C.; West, A.; Schmal, F.; Stoll, W.; Fechner, G. Effects of alcohol on body-sway patterns in human subjects. Int. J. Leg. Med. 1999, 112, 253–260.

7. Jansen, E.C.; Thyssen, H.H.; Brynskov, J. Gait analysis after intake of increasing amounts of alcohol. Int. J. Leg. Med. 1985, 94, 103–107.

8. Pew Research Center Internet & Technology. Available online: http://www.pewinternet.org/2015/10/29/the-demographics-of-device-ownership/ (accessed on 12 December 2017).

9. Arnold, Z.; Larose, D.; Agu, E. Smartphone Inference of Alcohol Consumption Levels from Gait. In Proceedings of the 2015 International Conference on Healthcare Informatics, Dallas, TX, USA, 21–23 October 2015; pp. 417–426.

10. Aiello, C.; Agu, E. Investigating postural sway features, normalization and personalization in detecting blood alcohol levels of smartphone users. In Proceedings of the IEEE Wireless Health (WH), Bethesda, MD, USA, 25–27 October 2016; pp. 1–8.

11. Bae, S.; Chung, T.; Ferreira, D.; Dey, K.A.; Suffoletto, B. Mobile phone sensors and supervised machine learning to identify alcohol use events in young adults: Implications for just-in-time adaptive interventions. Addict. Behav. 2017.

12. Winek, C.L.; Esposito, F.M. Blood alcohol concentrations: Factors affecting predictions. Leg. Med. 1985, 34–61. Available online: http://europepmc.org/abstract/med/3835425 (accessed on 13 December 2017).

13. Greenfield, T.K.; Bond, J.; Kerr, W.C. Biomonitoring for improving alcohol consumption surveys: The new gold standard? Alcohol Res. Curr. Rev. 2014, 36, 39–45.

14. Wechsler, H.; Lee, J.E.; Kuo, M.; Seibring, M.; Nelson, T.F.; Lee, H. Trends in college binge drinking during a period of increased prevention efforts: Findings from 4 Harvard School of Public Health College Alcohol Study surveys: 1993–2001. J. Am. Coll. Health 2002, 50, 203–217.

15. Widmark, E.M.P. Die Theoretischen Grundlagen und die Praktische Verwendbarkeit der Gerichtlich-Medizinischen Alkoholbestimmung; Urban & Schwarzenberg: Berlin, Germany, 1932. (In German)

16. Matthews, D.B.; Miller, W.R. Estimating blood alcohol concentration: Two computer programs and their applications in therapy and research. Addict. Behav. 1979, 4, 55–60.

17. Hustad, J.T.P.; Carey, K.B. Using calculations to estimate blood alcohol concentrations for naturally occurring drinking episodes: A validity study. J. Stud. Alcohol 2005, 66, 130–138.

18. Babor, T.F.; Steinberg, K.; Anton, R.A.Y.; del Boca, F. Talk is cheap: Measuring drinking outcomes in clinical trials. J. Stud. Alcohol 2000, 61, 55–63.

19. Alessi, S.M.; Barnett, N.P.; Petry, N.M. Experiences with SCRAMx alcohol monitoring technology in 100 alcohol treatment outpatients. Drug Alcohol Depend. 2017, 178, 417–424.

20. Simons, J.S.; Wills, T.A.; Emery, N.N.; Marks, R.M. Quantifying alcohol consumption: Self-report, transdermal assessment, and prediction of dependence symptoms. Addict. Behav. 2015, 50, 205–212.

21. Alessi, S.M.; Petry, N.M. A randomized study of cellphone technology to reinforce alcohol abstinence in the natural environment. Addiction 2013, 108, 900–909.

22. Suffoletto, B.; Gharani, P.; Chung, T.; Karimi, H. Using Phone Sensors and an Artificial Neural Network to Detect Gait Changes During Drinking Episodes in the Natural Environment. Gait Posture 2017.

23. Leffingwell, T.R.; Cooney, N.J.; Murphy, J.G.; Luczak, S.; Rosen, G.; Dougherty, D.M.; Barnett, N.P. Continuous objective monitoring of alcohol use: Twenty-first century measurement using transdermal sensors. Alcohol. Clin. Exp. Res. 2013, 37, 16–22.

24. Karns-Wright, T.E.; Roache, J.D.; Hill-Kapturczak, N.; Liang, Y.; Mullen, J.; Dougherty, D.M. Time Delays in Transdermal Alcohol Concentrations Relative to Breath Alcohol Concentrations. Alcohol Alcohol. 2017, 52, 35–41.

25. Muraven, M.; Collins, R.L.; Shiffman, S.; Paty, J.A. Daily fluctuations in self-control demands and alcohol intake. Psychol. Addict. Behav. 2005, 19, 140–147.

26. US Department of Health and Human Services. Helping Patients Who Drink Too Much: A Clinician’s Guide; National Institute on Alcohol Abuse and Alcoholism: Bethesda, MD, USA, 2005. Available online: http://pubs.niaaa.nih.gov/publications/Practitioner/CliniciansGuide2005/guide.pdf (accessed on 13 December 2017).

27. Shiffman, S. Ecological momentary assessment (EMA) in studies of substance use. Psychol. Assess. 2009, 21, 486–497.

28. Wray, T.B.; Merrill, J.E.; Monti, P.M. Using Ecological Momentary Assessment (EMA) to Assess Situation-Level Predictors of Alcohol Use and Alcohol-Related Consequences. Alcohol Res. 2014, 36, 19–27.

29. Lucas, G.M.; Gratch, J.; King, A.; Morency, L.-P. It’s only a computer: Virtual humans increase willingness to disclose. Comput. Hum. Behav. 2014, 37, 94–100.

30. Babor, T.F.; de la Fuente, J.R.; Saunders, J.B.; Grant, M. AUDIT: The Alcohol Use Disorders Identification Test: Guidelines for Use in Primary Health Care; World Health Organization: Geneva, Switzerland, 1992.

31. Del Boca, F.K.; Darkes, J.; Greenbaum, P.E.; Goldman, M.S. Up close and personal: Temporal variability in the drinking of individual college students during their first year. J. Consult. Clin. Psychol. 2004, 72, 155–164.

32. Suffoletto, B.; Goyal, A.; Puyana, J.C.; Chung, T. Can an App Help Identify Psychomotor Function Impairments During Drinking Occasions in the Real World? A Mixed Method Pilot Study. Subst. Abus. 2017, 38, 438–449.

33. Bao, L.; Intille, S.S. Activity Recognition from User-Annotated Acceleration Data. In Pervasive Computing; Pervasive 2004; Ferscha, A., Mattern, F., Eds.; Springer: Heidelberg, Germany, 2004.

34. Ravi, N.; Dandekar, N.; Mysore, P.; Littman, M.L. Activity recognition from accelerometer data. In Proceedings of the 17th Conference on Innovative Applications of Artificial Intelligence, Pittsburgh, PA, USA, 9–13 July 2005; Volume 5, pp. 1541–1546.

35. Gharani, P.; Karimi, H.A. Context-aware obstacle detection for navigation by visually impaired. Image Vis. Comput. 2017, 64, 103–115.

36. Burden, F.; Winkler, D. Bayesian regularization of neural networks. In Artificial Neural Network: Method and Application; Livingstone, D.J., Ed.; Humana Press: Totowa, NJ, USA, 2008; pp. 25–44.

37. Masters, T. Advanced Algorithms for Neural Networks: A C++ Sourcebook; John Wiley & Sons, Inc.: New York, NY, USA, 1995.

38. Mohanty, S.; Jha, M.K.; Kumar, A.; Sudheer, K.P. Artificial neural network modeling for groundwater level forecasting in a river island of eastern India. Water Resour. Manag. 2010, 24, 1845–1865.

39. Roweis, S. Levenberg-Marquardt Optimization; Notes; University of Toronto: Toronto, ON, Canada, 1996.

40. Gavin, H.P. The Levenberg-Marquardt Method for Nonlinear Least Squares Curve-Fitting Problems; Duke Civil and Environmental Engineering, Duke University: Durham, NC, USA, 2013; pp. 1–17.

41. Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

42. Hornik, K. Some new results on neural network approximation. Neural Netw. 1993, 6, 1069–1072.

43. Mackay, D.J.C. Bayesian Methods for Adaptive Models. Ph.D. Thesis, California Institute of Technology, Pasadena, CA, USA, 1991; p. 98.

44. Lourakis, M.I.A. A Brief Description of the Levenberg-Marquardt Algorithm Implemened by levmar. Matrix 2005, 3, 2.

45. Foresee, F.D.; Hagan, M.T. Gauss-Newton approximation to Bayesian regularization. In Proceedings of the International Conference on Neural Networks, Houston, TX, USA, 12 June 1997; pp. 1930–1935.

46. MacKay, D.J.C. A Practical Bayesian Framework for Backpropagation Networks. Neural Comput. 1992, 4, 448–472.

47. Kayri, M. Predictive Abilities of Bayesian Regularization and Levenberg–Marquardt Algorithms in Artificial Neural Networks: A Comparative Empirical Study on Social Data. Math. Comput. Appl. 2016, 21, 20.

48. Kao, H.-L.; Ho, B.-J.; Lin, A.C.; Chu, H.-H. Phone-based gait analysis to detect alcohol usage. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; p. 661.

49. Park, E.; Lee, S.I.; Nam, H.S.; Garst, J.H.; Huang, A.; Campion, A.; Arnell, M.; Ghalehsariand, N.; Park, S.; Chang, H.J.; et al. Unobtrusive and Continuous Monitoring of Alcohol-impaired Gait Using Smart Shoes. Methods Inf. Med. 2017, 56, 74–82.

50. Nassi, B.; Rokach, L.; Elovici, Y. Virtual Breathalyzer. arXiv, 2016; arXiv:1612.05083. Available online: https://arxiv.org/abs/1612.05083 (accessed on 12 December 2017).

51. Piasecki, T.M.; Alley, K.J.; Slutske, W.S.; Wood, P.K.; Sher, K.J.; Shiffman, S.; Heath, A.C. Low sensitivity to alcohol: Relations with hangover occurrence and susceptibility in an ecological momentary assessment investigation. J. Stud. Alcohol Drugs 2012, 73, 925–932.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).