Consistently Sampled Correlation Filters with Space Anisotropic Regularization for Visual Tracking

Abstract

1. Introduction

- (1)

- We propose a new DCF-based tracking model which integrates two strategies (anisotropic spatially-regularized constraints and consistent sampling) into a unified DCF-based tracking model.

- (2)

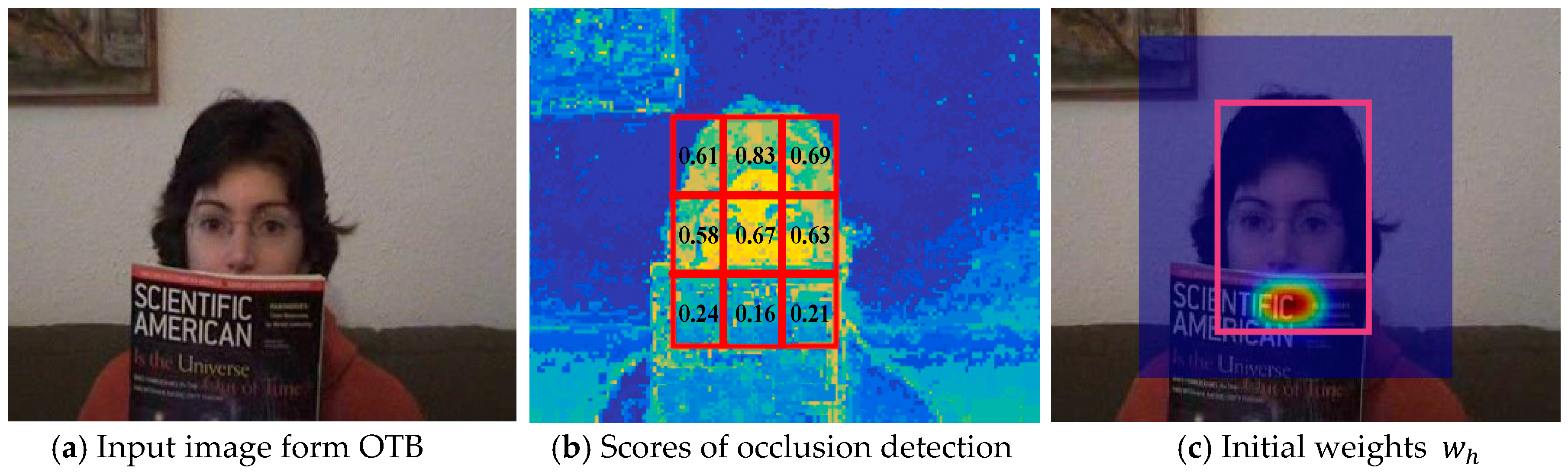

- We propose to study an anisotropic spatially-regularized filter, which is used to penalize the response of occluded areas of the target.

- (3)

- We propose to use a spatially-weighted function for every training and detection sample. This strategy can alleviate redundancies in training samples caused by cyclical shifts and eliminate inconsistencies between training samples and detection samples.

- (4)

- We propose to further develop an optimization strategy including a closed-form solution and an iterative method. The iterative method is based on the Gauss-Seidel method which can make online learning robust and efficient.
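The exact form of the anisotropic regularizer is not given in this section. As a rough illustration only, the sketch below assumes an SRDCF-style quadratic weight map whose growth rate differs along the vertical and horizontal axes (`eta_y`, `eta_x`), plus an optional extra penalty over a hypothetical occlusion mask. All names and values (`anisotropic_weights`, `mu`, `occ_penalty`, `regularization_energy`) are illustrative assumptions, not the authors' formulation. Filter coefficients located where the weights are large contribute more to the regularization term and are therefore suppressed during training.

```python
import numpy as np


def anisotropic_weights(h, w, mu=0.1, eta_y=3.0, eta_x=1.0,
                        occlusion_mask=None, occ_penalty=10.0):
    """Hypothetical anisotropic spatial-regularization weight map (assumption)."""
    # Normalized coordinates in [-1, 1] relative to the patch centre
    ys = np.linspace(-1.0, 1.0, h)[:, None]
    xs = np.linspace(-1.0, 1.0, w)[None, :]
    # Direction-dependent quadratic growth: eta_y != eta_x makes the map anisotropic
    weights = mu + eta_y * ys ** 2 + eta_x * xs ** 2
    if occlusion_mask is not None:
        # Hypothetical: extra penalty on coefficients in regions flagged as occluded
        weights = weights + occ_penalty * occlusion_mask
    return weights


def regularization_energy(f, weights):
    """Spatial regularization term ||weights * f||^2 for a filter f."""
    return float(np.sum((weights * f) ** 2))


# Usage: a 5x5 map with an assumed occlusion on the right-hand columns
mask = np.zeros((5, 5))
mask[:, 3:] = 1.0
print(anisotropic_weights(5, 5, occlusion_mask=mask).round(2))
```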
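A minimal single-channel sketch of the consistent-sampling idea, assuming a separable Hann (cosine) window as the spatial weighting function and a MOSSE-style closed-form filter in the Fourier domain. The helper names and parameters (`cosine_window`, `gaussian_label`, `train`, `detect`, `lam`, `sigma`) are hypothetical and do not reproduce the paper's exact weighting. The same window multiplies both the training sample and the detection sample, so the filter is learned on and applied to samples of the same form, and the window tapers the patch borders where cyclic shifts wrap around.

```python
import numpy as np


def cosine_window(h, w):
    # Separable Hann window: weights decay to zero at the patch borders
    return np.outer(np.hanning(h), np.hanning(w))


def gaussian_label(h, w, sigma=2.0):
    # Desired response: Gaussian peaked at (0, 0), using cyclic distances
    ys = np.minimum(np.arange(h), h - np.arange(h))
    xs = np.minimum(np.arange(w), w - np.arange(w))
    d2 = ys[:, None] ** 2 + xs[None, :] ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


def train(x, y, window, lam=1e-4):
    # Training sample is weighted before the FFT
    X = np.fft.fft2(x * window)
    Y = np.fft.fft2(y)
    # Closed-form single-channel ridge-regression filter (MOSSE style)
    return Y * np.conj(X) / (X * np.conj(X) + lam)


def detect(H, z, window):
    # Detection sample is weighted with the SAME window as in training
    Z = np.fft.fft2(z * window)
    return np.real(np.fft.ifft2(H * Z))


# Usage: detecting on the training patch itself should reproduce the label peak
rng = np.random.default_rng(0)
patch = rng.random((32, 32))
win = cosine_window(32, 32)
H = train(patch, gaussian_label(32, 32), win)
response = detect(H, patch, win)
print(np.unravel_index(np.argmax(response), response.shape))
```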
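The section does not spell out the linear system solved at each frame, so the sketch below only illustrates a generic Gauss-Seidel iteration for `A x = b` on a small diagonally dominant matrix. The function name `gauss_seidel` and its parameters are hypothetical, and passing the previous solution as `x0` (a warm start) is an assumption about how such a solver would be used in online learning.

```python
import numpy as np


def gauss_seidel(A, b, x0=None, iters=50, tol=1e-10):
    """Solve A x = b with Gauss-Seidel sweeps (A: SPD or strictly diagonally dominant)."""
    n = len(b)
    x = np.zeros(n) if x0 is None else np.array(x0, dtype=float)
    for _ in range(iters):
        x_old = x.copy()
        for i in range(n):
            # x[:i] already holds this sweep's updated values; x[i+1:] still holds the old ones
            s = A[i, :i] @ x[:i] + A[i, i + 1:] @ x[i + 1:]
            x[i] = (b[i] - s) / A[i, i]
        # Stop once a full sweep changes no entry by more than tol
        if np.linalg.norm(x - x_old, ord=np.inf) < tol:
            break
    return x


A = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])
b = np.array([1.0, 2.0, 3.0])
x = gauss_seidel(A, b, iters=200)
# Warm start: reuse the previous solution so a single sweep suffices
x_warm = gauss_seidel(A, b, x0=x, iters=1)
print(x, x_warm)
```

Gauss-Seidel is guaranteed to converge for symmetric positive definite or strictly diagonally dominant `A`; in a tracker, a warm start from the previous frame's filter would typically let a few sweeps per frame suffice.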

2. Related Works

2.1. Generative Trackers

2.2. Discriminative Trackers

2.3. DCF-Based Trackers

3. Proposed Method

3.1. Standard Discriminative Correlation Filter

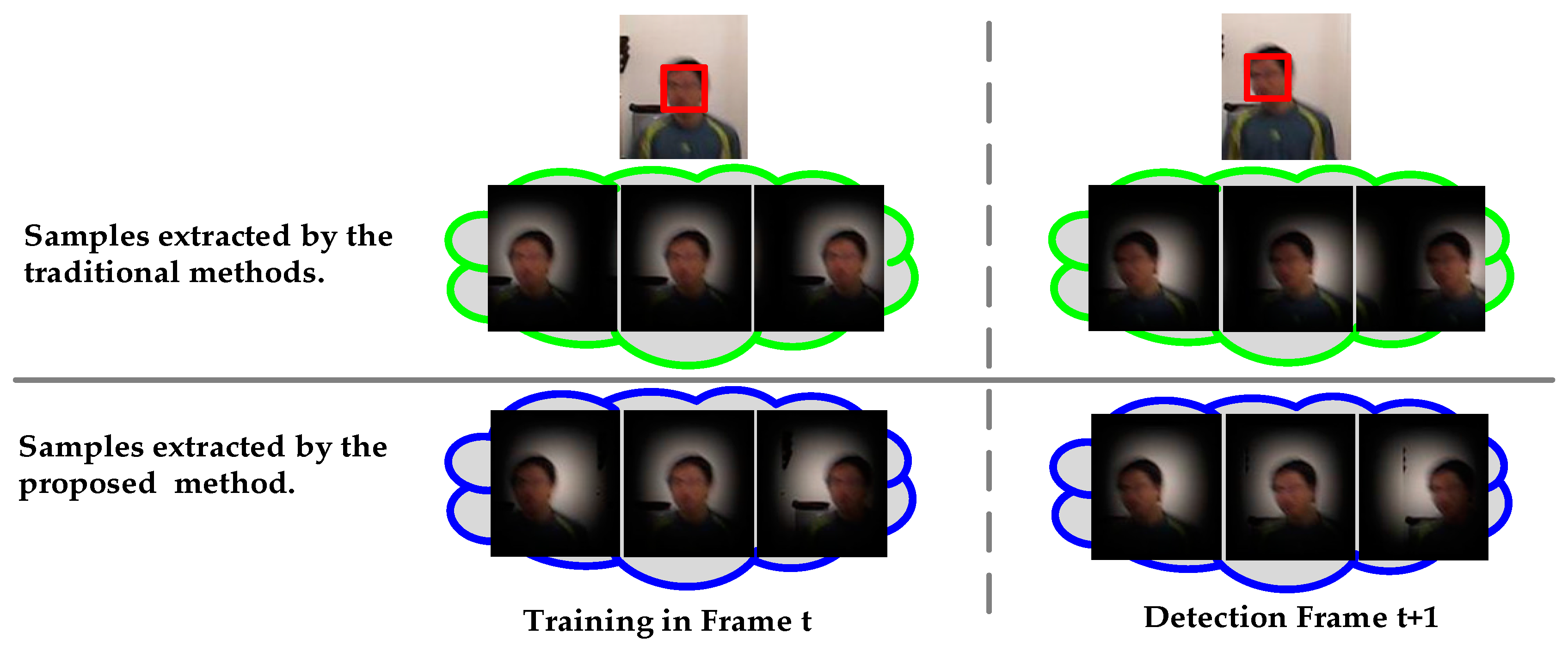

3.2. Consistently Sampled Correlation Filters

3.2.1. Spatially-Consistent Sampling in Training Steps

3.2.2. Temporally-Consistent Sampling in the Detection Step

3.3. Anisotropic Spatially-Regularized Correlation Filters

3.4. Solutions to the Proposed CSSAR Problem

4. Experiments

4.1. Features and Parameters

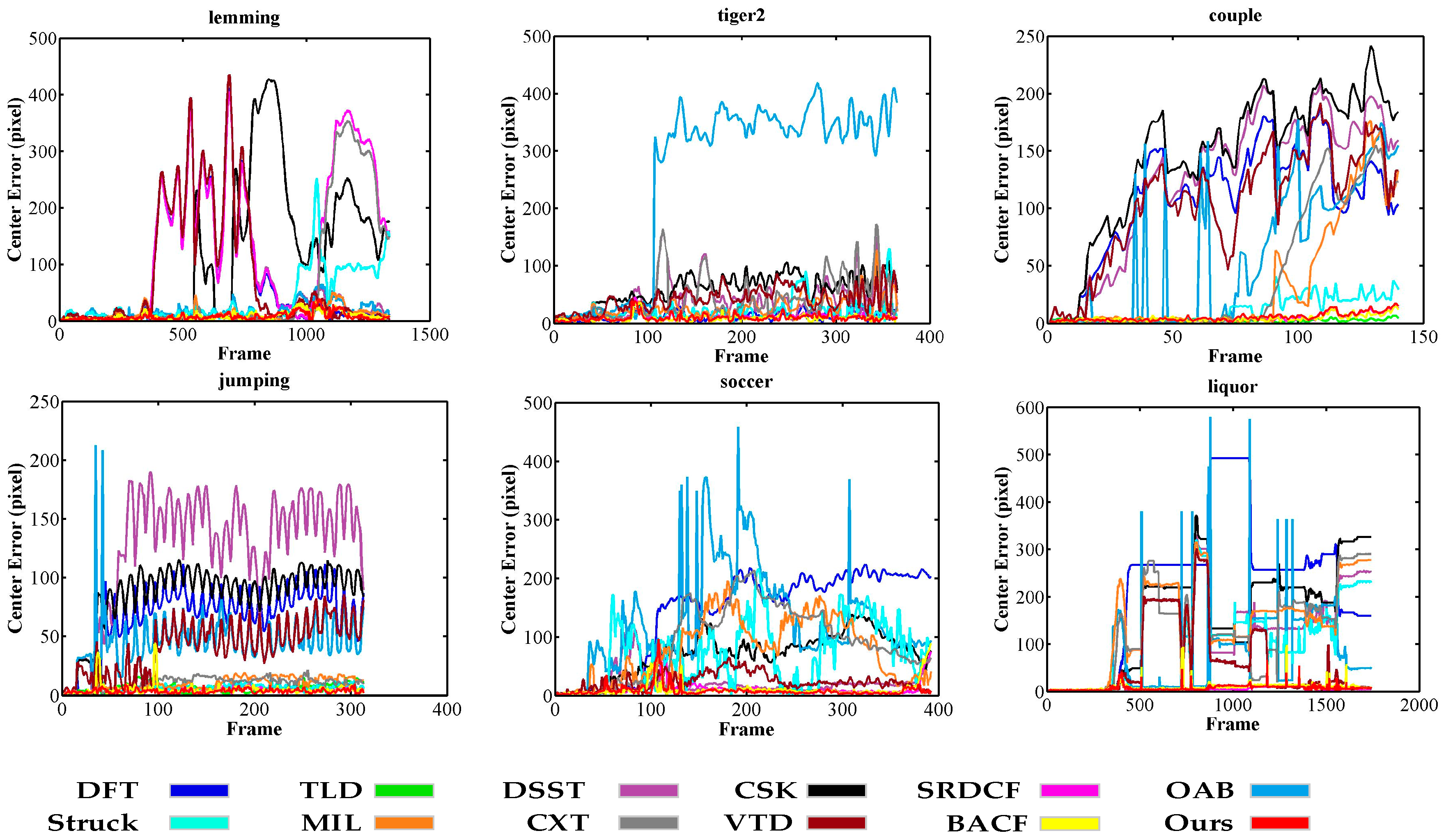

4.2. Qualitative Evaluation

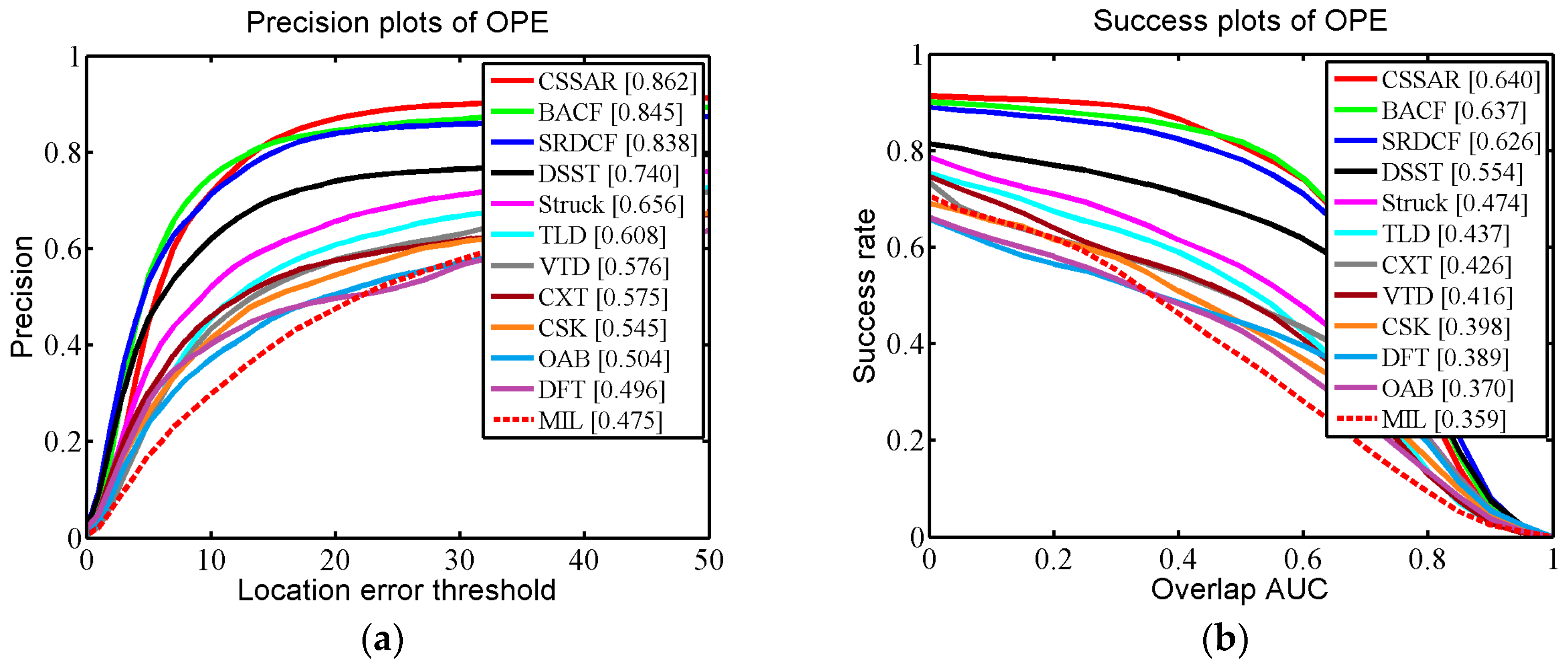

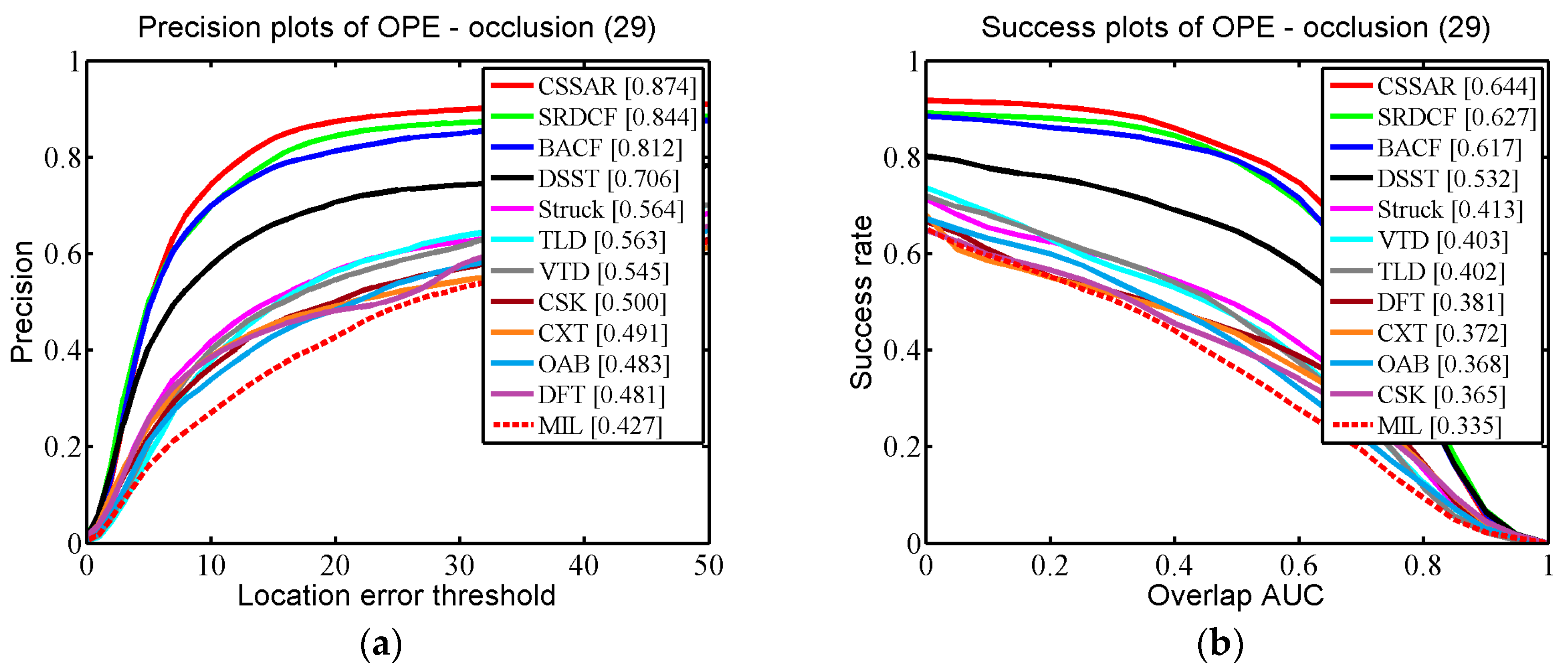

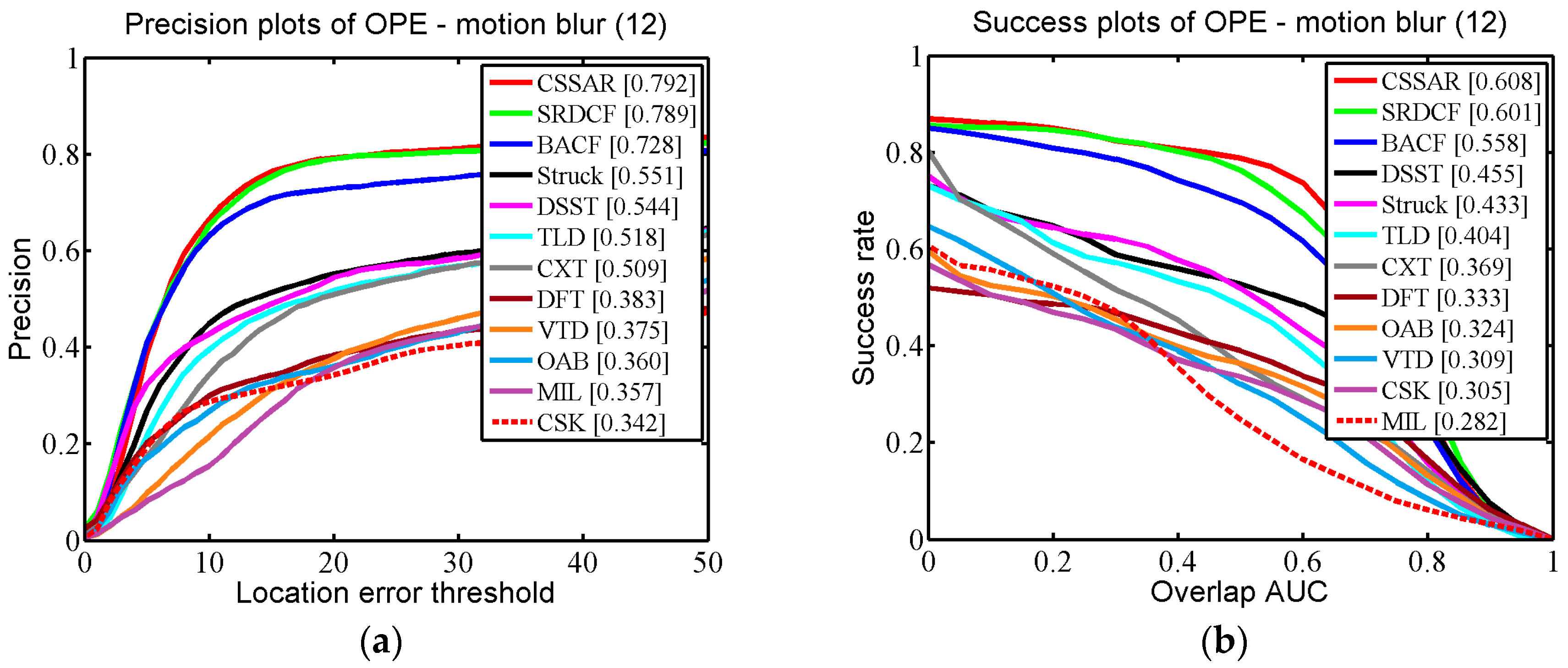

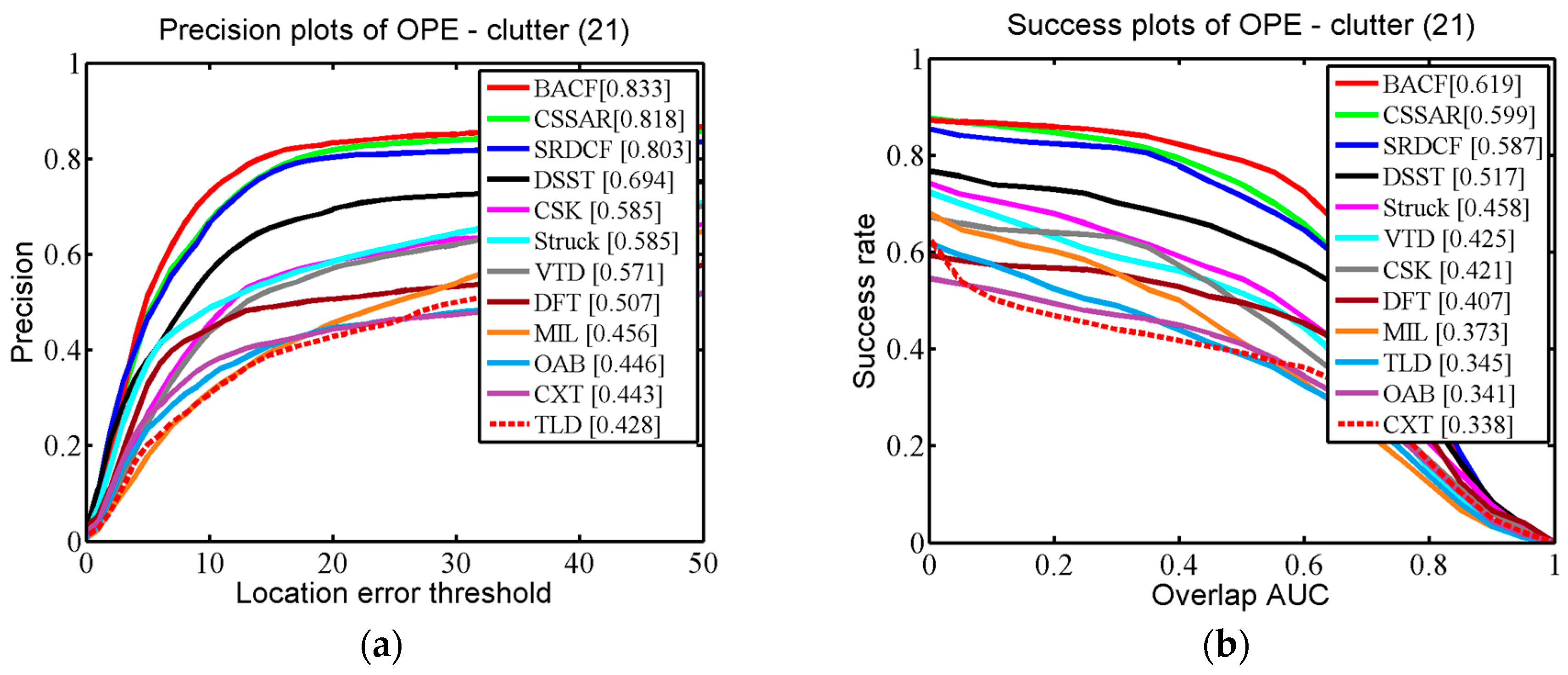

4.3. Quantitative Evaluation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Sequences | DFT | TLD | DSST | CSK | SRDCF | OAB | Struck | MIL | CXT | VTD | BACF | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Lemming | 77.75 | 15.74 | 81.89 | 114.2 | 134.5 | 18.05 | 37.75 | 12.06 | 61.39 | 79.22 | 9.170 | 8.130 |
| Tiger2 | 12.22 | 73.16 | 41.45 | 59.56 | 11.62 | 252.7 | 21.64 | 27.17 | 41.44 | 40.88 | 8.660 | 9.220 |
| Couple | 108.6 | 2.540 | 125.2 | 144.6 | 3.970 | 57.62 | 11.33 | 34.53 | 41.76 | 104.3 | 4.110 | 5.140 |
| Jumping | 67.08 | 5.940 | 125.5 | 85.97 | 4.470 | 46.35 | 6.550 | 9.990 | 9.990 | 41.39 | 4.830 | 3.320 |
| Soccer | 139.5 | 136.2 | 20.25 | 70.51 | 10.83 | 127.5 | 71.36 | 77.85 | 89.22 | 23.56 | 10.28 | 7.910 |
| Liquor | 221.1 | 55.95 | 98.53 | 160.6 | 4.730 | 71.07 | 90.99 | 141.9 | 131.8 | 60.17 | 9.010 | 7.210 |
| Average | 104.4 | 48.26 | 82.12 | 105.9 | 28.35 | 95.55 | 39.94 | 50.58 | 62.60 | 58.25 | 7.677 | 6.822 |
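The Average row is the arithmetic mean of the six per-sequence values. A quick Python check for the three columns with the lowest averages, with the values copied from the table:

```python
# Per-sequence values copied from the table
# (order: Lemming, Tiger2, Couple, Jumping, Soccer, Liquor)
rows = {
    "SRDCF": [134.5, 11.62, 3.970, 4.470, 10.83, 4.730],
    "BACF": [9.170, 8.660, 4.110, 4.830, 10.28, 9.010],
    "Ours": [8.130, 9.220, 5.140, 3.320, 7.910, 7.210],
}
# Values reported in the Average row
reported = {"SRDCF": 28.35, "BACF": 7.677, "Ours": 6.822}


def mean(values):
    # Arithmetic mean over the sequences
    return sum(values) / len(values)


averages = {name: mean(vals) for name, vals in rows.items()}
for name, avg in averages.items():
    print(f"{name}: computed {avg:.3f}, reported {reported[name]}")
```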

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shi, G.; Xu, T.; Guo, J.; Luo, J.; Li, Y. Consistently Sampled Correlation Filters with Space Anisotropic Regularization for Visual Tracking. Sensors 2017, 17, 2889. https://doi.org/10.3390/s17122889
