The target location data were obtained in real time during a UAV flight. The evaluation is based on UAV videos captured over a Changji highway from 9:40 to 11:10. The video resolution is 1024 × 768 and the frame rate is 25 frames per second (fps).

5.4. Test 2: Multi-Target Geo-Location Using a Single Aerial Image with Distortion Correction

The data in Table 1 are substituted into Equations (1)–(20) to calculate the geodetic coordinates of each target in a single image, as shown in Table 3.

The calculated geodetic coordinates of each target in Table 3 are compared with the nominal geodetic coordinates measured by a GPS receiver on the ground to obtain the geo-location error in a single image, as shown in Table 4. Comparing the data in Table 4 with those in Table 2 shows that the geo-location error of each target is within the expected error range. The geodetic height errors of all the targets are about 18 m, which is broadly consistent with the assumption in Section 3.2 that “the ground area corresponding to a single image is flat and the relative altitudes between targets and electro-optical stabilized imaging system are the same”.
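The comparison between calculated and nominal geodetic coordinates can be illustrated with a short sketch. It is not the paper's procedure (which works through Equations (1)–(20) and the ECEF frame); it assumes a local small-angle approximation on the WGS-84 ellipsoid, and the function name `geodetic_error_m` and the sample coordinates are hypothetical.

```python
import math

# WGS-84 ellipsoid constants (semi-major axis in metres, flattening).
WGS84_A = 6378137.0
WGS84_F = 1.0 / 298.257223563


def geodetic_error_m(calculated, nominal):
    """Approximate (north, east, up) error in metres.

    Both arguments are (latitude_deg, longitude_deg, height_m) tuples.
    Assumption: the error is small enough for a local linearisation
    around the nominal position to hold.
    """
    lat0 = math.radians(nominal[0])
    e2 = WGS84_F * (2.0 - WGS84_F)                 # first eccentricity squared
    w = math.sqrt(1.0 - e2 * math.sin(lat0) ** 2)
    meridional = WGS84_A * (1.0 - e2) / w ** 3     # radius of curvature, north-south
    prime_vertical = WGS84_A / w                   # radius of curvature, east-west

    d_lat = math.radians(calculated[0] - nominal[0])
    d_lon = math.radians(calculated[1] - nominal[1])

    north = d_lat * (meridional + nominal[2])
    east = d_lon * (prime_vertical + nominal[2]) * math.cos(lat0)
    up = calculated[2] - nominal[2]
    return north, east, up


# Hypothetical values, for illustration only (not taken from Table 3 or Table 4).
north, east, up = geodetic_error_m((44.0002, 87.3003, 618.0), (44.0000, 87.3000, 600.0))
print(f"north={north:.2f} m, east={east:.2f} m, up={up:.2f} m")
```

The north and east components obtained this way play the role of the planar error pair used later for the CEP analysis, while the up component corresponds to the geodetic height error.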

The latitude and longitude geo-location errors of sub-targets are bigger than those of main targets for the following reasons: (1) the error arising from the slope distance difference between a main target and a sub-target: the longer the slope distance of a target to the image border, the bigger the geo-location error [39]; (2) the coordinate transformation error caused by the attitude measurement error and the angle measurement error (measured by the electro-optical stabilized imaging system) during the calculation of altitude and distance of a sub-target relative to the electro-optical stabilized imaging system; (3) the pixel coordinate error of a sub-target found in target detection; and (4) the pixel coordinate error of a sub-target caused by image distortion.

The geo-location errors of a target can be reduced in three ways: (1) reducing the flight height or increasing the platform elevation angle (the horizontal forward direction is 0°) to shorten the slope distance, which is constrained by the flight conditions; (2) selecting a high-precision attitude measuring system and improving the angle measurement accuracy of the electro-optical stabilized imaging system, which is constrained by hardware cost; and (3) distortion correction. Because the first two options depend on flight conditions and hardware cost, the discussion below focuses on the influence of distortion correction on multi-target geo-location accuracy.

Sub-target pixel coordinate error is mainly caused by image distortion. The correction method is discussed in Section 4.1 and Section 5.1. We calculate the corrected pixel coordinates using Equations (25)–(28) and then use them to calculate the geodetic coordinates of each target. The geo-location errors of the multiple targets after distortion correction are shown in Table 5.

A comparison of the data in Table 5 with those in Table 4 shows that, after the distortion correction, the latitude and longitude errors of each target are generally smaller than before the correction, while the geodetic height error remains basically unchanged. The reduction in longitude and latitude errors is more significant for sub-targets farther from the image center. Therefore, lens distortion correction can improve the geo-location accuracy of sub-targets and thus raise the overall accuracy of multi-target geo-location.

Target geo-location accuracy and missile hit accuracy are usually evaluated through the circular error probability (CEP) [41]. CEP is defined as the radius of a circle centered on the target point within which the hit probability is 50%. In the ECEF frame, let the geo-location error along the $X$ direction be $x$ and the geo-location error along the $Y$ direction be $y$; $x$ and $y$ can be calculated from the longitude and latitude errors listed in Table 4 and Table 5. Supposing that both $x$ and $y$ are normally distributed, the joint probability density function of $(x, y)$ can be expressed as:

$$f(x, y) = \frac{1}{2\pi\sigma_x\sigma_y\sqrt{1-\rho^2}} \exp\left\{-\frac{1}{2(1-\rho^2)}\left[\frac{(x-\mu_x)^2}{\sigma_x^2} - \frac{2\rho(x-\mu_x)(y-\mu_y)}{\sigma_x\sigma_y} + \frac{(y-\mu_y)^2}{\sigma_y^2}\right]\right\}$$

where $\mu_x$ and $\mu_y$ are the mean geo-location errors along the $X$ and $Y$ directions, respectively; $\sigma_x$ and $\sigma_y$ are the standard deviations of the geo-location errors along the $X$ and $Y$ directions, respectively; and $\rho$ is the correlation coefficient of the geo-location errors along the $X$ and $Y$ directions.

Suppose

,

and

, the R satisfying the following equation will be CEP:

where:

,

.
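The CEP condition (a 50% probability of falling inside a circle of radius R centered on the target point) can be explored numerically. The following is a minimal sketch rather than the authors' implementation: it assumes the standard bivariate normal density with means, standard deviations and correlation coefficient as described above, integrates it over the circle on a polar grid with the midpoint rule, and finds R by bisection. The function names, grid sizes and tolerance are illustrative assumptions.

```python
import math


def bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Joint density of two correlated normal errors (requires |rho| < 1)."""
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    one_minus_rho2 = 1.0 - rho * rho
    norm = 2.0 * math.pi * sigma_x * sigma_y * math.sqrt(one_minus_rho2)
    q = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / one_minus_rho2
    return math.exp(-0.5 * q) / norm


def prob_in_circle(radius, params, n_r=120, n_theta=120):
    """Probability mass inside a circle centered on the origin (true target)."""
    dr = radius / n_r
    dtheta = 2.0 * math.pi / n_theta
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr                      # midpoint in radius
        for j in range(n_theta):
            theta = (j + 0.5) * dtheta          # midpoint in angle
            x, y = r * math.cos(theta), r * math.sin(theta)
            total += bivariate_normal_pdf(x, y, *params) * r
    return total * dr * dtheta


def cep_radius(mu_x, mu_y, sigma_x, sigma_y, rho, tol=1e-4):
    """Radius R with prob_in_circle(R) = 0.5, found by bisection."""
    params = (mu_x, mu_y, sigma_x, sigma_y, rho)
    lo = 0.0
    hi = math.hypot(mu_x, mu_y) + 6.0 * max(sigma_x, sigma_y)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prob_in_circle(mid, params) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Sanity check on a case with a known closed form: zero mean, unit sigmas,
# no correlation gives CEP = sqrt(2 ln 2).
print(cep_radius(0.0, 0.0, 1.0, 1.0, 0.0), math.sqrt(2.0 * math.log(2.0)))
```

Bisection is used because the probability inside the circle increases monotonically with the radius, so no derivative information is needed.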

If the mean geo-location errors, the standard deviations of the geo-location errors, or the correlation coefficient of the geo-location errors are unknown, they can be replaced by the corresponding sample means, sample standard deviations, and sample correlation coefficient, respectively. Likewise, if the total mean geo-location error or the total standard deviation of the geo-location error is unknown, it can be replaced by the total sample mean or the total sample standard deviation of the geo-location errors.

Suppose the number of geo-location error samples is $n$: $(x_1, y_1)$, $(x_2, y_2)$, $(x_3, y_3)$, …, $(x_n, y_n)$. The sample mean geo-location errors along the $X$ and $Y$ directions are:

The total sample mean geo-location error is:

The sample standard deviations of the geo-location errors along the $X$ and $Y$ directions are:

The total sample standard deviation of the geo-location errors is:

The sample correlation coefficient of the geo-location errors is:

When the number of geo-location error samples is more than 30, the confidence of the CEP calculation result can reach 90% [41]. Therefore, through the multi-target geo-location and distortion correction test on five aerial images, 32 samples of target geo-location errors are obtained both before and after the distortion correction: eight in the 1st image, six in the 2nd image, five in the 3rd image, five in the 4th image, and eight in the 5th image. The normality and independence tests of the sample data show that the samples conform to a normal distribution but are not independent (the sample correlation coefficient before the distortion correction is

, and the sample correlation coefficient after the distortion correction is

).
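The sample statistics and an empirical CEP can be computed directly from a list of error pairs. The sketch below is illustrative only and rests on stated assumptions: the n − 1 denominator for the standard deviations, the total mean and total standard deviation taken over the radial errors sqrt(x² + y²), and the empirical CEP taken as the median radial error (the radius containing half of the samples) are choices made for this example and may differ from the paper's exact formulas. The sample values are made up.

```python
import math
import statistics


def sample_statistics(errors):
    """Sample statistics for a list of (x, y) geo-location errors in metres."""
    n = len(errors)
    xs = [e[0] for e in errors]
    ys = [e[1] for e in errors]
    radial = [math.hypot(x, y) for x, y in errors]

    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    mean_r = sum(radial) / n

    # Assumption: unbiased (n - 1) estimators.
    s_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs) / (n - 1))
    s_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys) / (n - 1))
    s_r = math.sqrt(sum((r - mean_r) ** 2 for r in radial) / (n - 1))

    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in errors) / (n - 1)
    corr = cov_xy / (s_x * s_y)

    return {
        "mean_x": mean_x, "mean_y": mean_y, "mean_total": mean_r,
        "std_x": s_x, "std_y": s_y, "std_total": s_r,
        "correlation": corr,
    }


def empirical_cep(errors):
    """Radius of the origin-centered circle containing half of the samples."""
    return statistics.median(math.hypot(x, y) for x, y in errors)


# Made-up error pairs, for illustration only.
demo = [(3.0, 4.0), (6.0, 8.0), (0.0, 5.0), (5.0, 12.0)]
print(sample_statistics(demo))
print(empirical_cep(demo))
```

With an even number of samples, such as the 32 used here, the median falls between the two middle radial errors, which matches the idea of a circle that encloses half of the samples.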

The geo-location errors of the eight targets in the 1st image (a subset of the 32 targets) before the correction are shown in Table 4, and those after the correction are shown in Table 5.

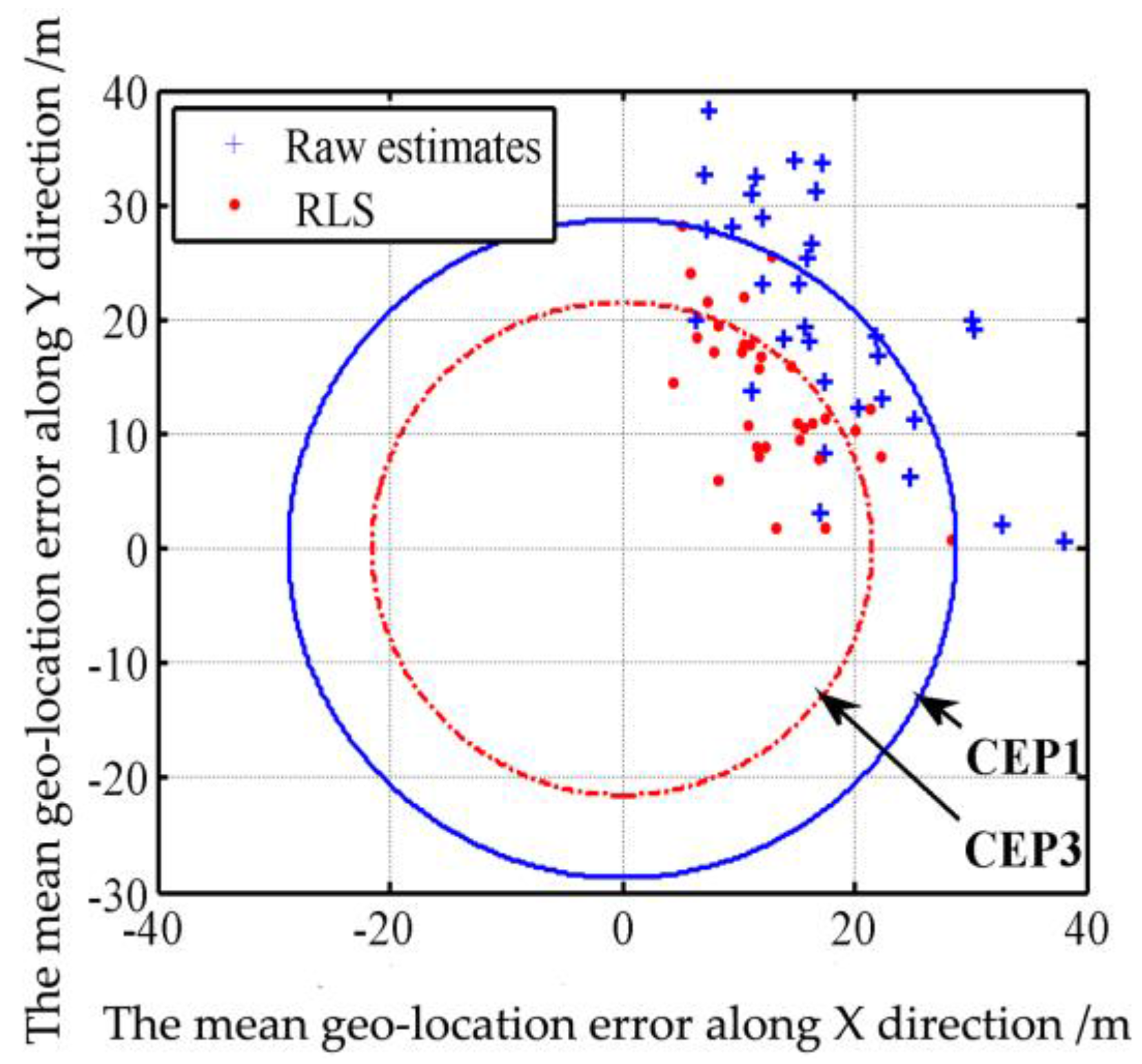

The multi-target geo-location errors before the distortion correction are processed through Equations (43)–(50) to calculate the CEP. The mean geo-location errors along the $X$ and $Y$ directions are 17.41 m and 20.34 m, respectively; the standard deviations of the geo-location errors along the $X$ and $Y$ directions are 7.77 m and 10.05 m, respectively; and the total mean geo-location error and total standard deviation are 28.98 m and 6.08 m, respectively. Numerical integration of Equation (46) finds that, among the 32 samples, 16 lie within a circle with a radius of 28.74 m, which corresponds to a probability of 50%. The numbers of samples inside and outside the solid circle in Figure 12 also show that the CEP1 of multi-target geo-location before the distortion correction is 28.74 m.

The multi-target geo-location errors after the distortion correction are then processed through Equations (43)–(50) to calculate the CEP. The mean geo-location errors along the $X$ and $Y$ directions are 17.04 m and 18.63 m, respectively; the standard deviations of the geo-location errors along the $X$ and $Y$ directions are 6.86 m and 8.25 m, respectively; and the total mean geo-location error and total standard deviation are 26.91 m and 5.31 m, respectively. Numerical integration of Equation (43) finds that, among the 32 samples, 16 lie within a circle with a radius of 26.80 m, which corresponds to a probability of 50%. The numbers of samples inside and outside the dotted circle in Figure 12 also show that the CEP2 of multi-target geo-location after the distortion correction is 26.80 m, 7% smaller than before the distortion correction.

Note: the CEP circle is calculated from the original geo-location errors, whose 32 samples are scattered over all four quadrants. Because such a scattered plot is not conducive to error analysis, we plot the absolute value of each geo-location error, so all points lie in the first quadrant in Figure 12.

To compare the performance of our multi-target geo-location algorithm with that of other algorithms, these localization accuracy results are compared with the accuracies of the geo-location methods reported in [7,8,9], as shown in Table 6. Table 6 shows that the target geo-location accuracy in this paper is close to that reported in [7]. However, the geo-location accuracy in [7] depends on the distance between the projection centers of two consecutive images (namely, the baseline length). Higher accuracy requires a longer baseline, so the time interval between the two consecutive images used for geo-location in [7] is large. Moreover, because the SIFT algorithm is needed to extract feature points from multi-frame images for 3D reconstruction, the algorithm in [7] has a heavy computational load, which hinders real-time implementation. In [8], the geo-location accuracy is about 20 m before filtering, so the real-time geo-location accuracy of the two methods is almost the same; however, our UAV flight altitude is much higher than that in [8]. In [9], the geo-location accuracy is about 39.1 m before compensation, so our real-time geo-location accuracy is higher. In [9], after the UAV flies around an orbit many times, the geo-location accuracy can be improved to 8.58 m, but this accuracy cannot be obtained in real time.

The algorithms in [7,8,9] are implemented on a computer in the ground station. Since the images are transmitted to, and processed on, a computer in the ground station, a delay occurs between the moment of data capture and the completion of processing. In comparison, the geo-location algorithm in this paper is programmed and implemented on a multi-target localization circuit board (model: THX-IMAGE-PROC-02, Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences, Changchun, China) with a TMS320DM642 (720 MHz clock rate, 32-bit instructions/cycle) and 1 GB DDR. It takes 0.4 ms on average to calculate the geo-location of a single target while also correcting zoom lens distortion. Therefore, this algorithm has great advantages in both geo-location accuracy and real-time performance. The multi-target location method in this paper can be widely applied in many areas such as UAVs and robots.

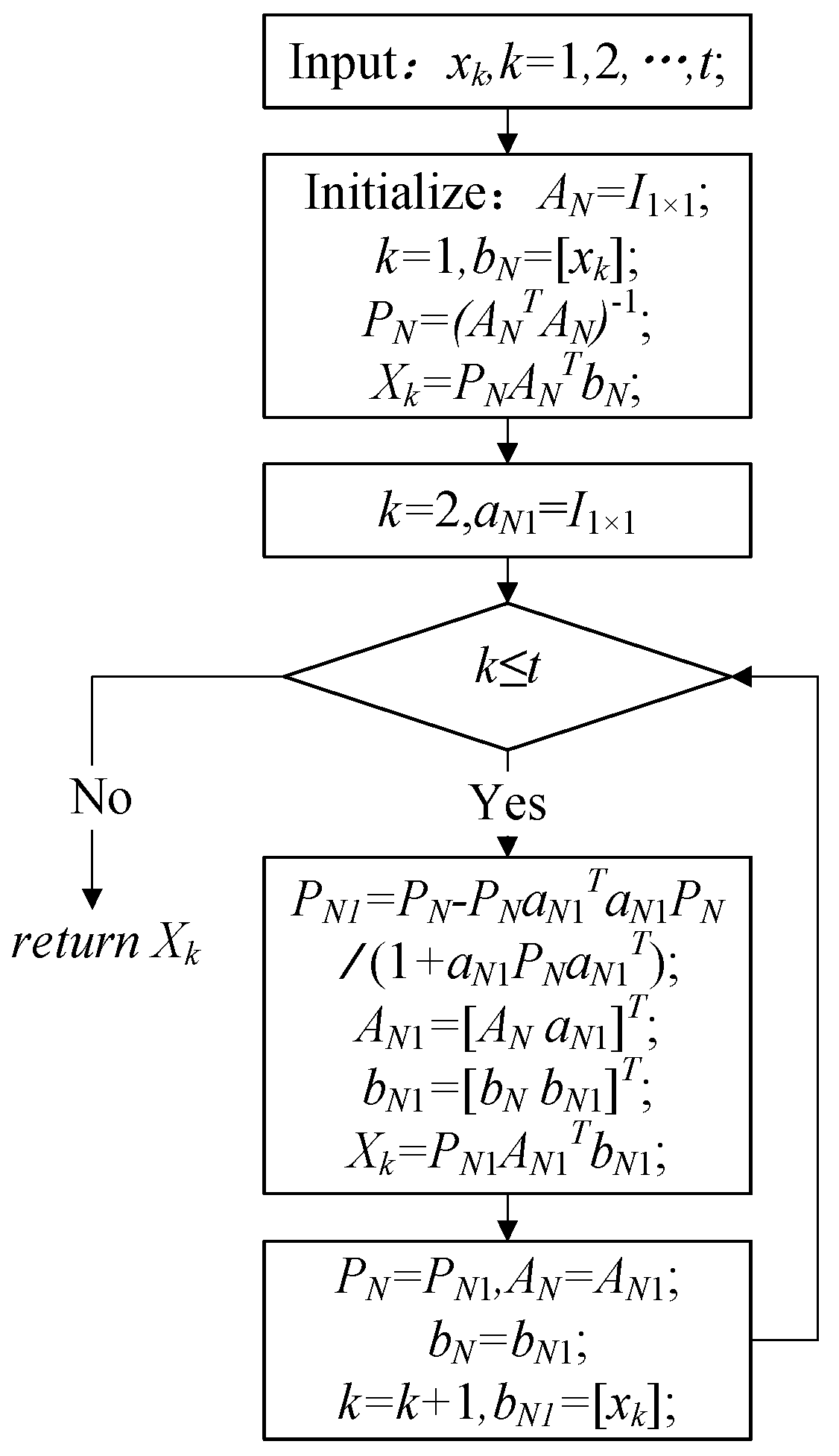

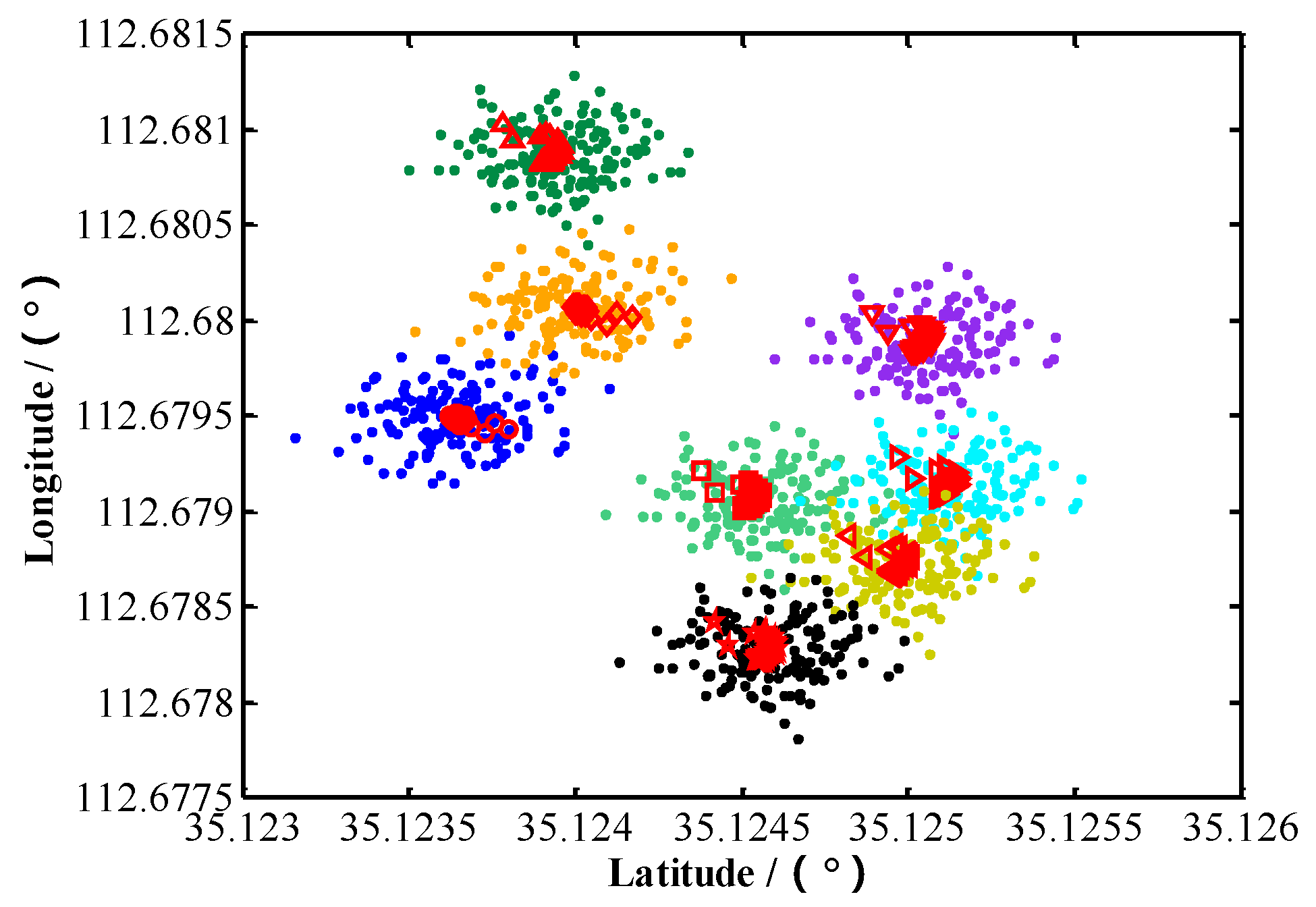

5.5. Test 3: RLS Filter for Geo-Location Data of Multiple Stationary Ground Targets

The targets in the above tests are all stationary ground targets. After lens distortion correction, we use the 1st aerial image (eight targets) as the initial frame for target tracking. Each of the eight targets is tracked over 150 frames starting from the 1st image. The coordinates of the UAV are calculated using Equations (25) and (26). The update rate of the UAV coordinates is synchronized with the camera frame rate. The geo-location data of each target are adaptively estimated by the RLS algorithm. The geo-location results of the eight targets before and after RLS filtering are shown in Figure 13 (the dots “•” in different colors in Figure 13 represent the original geo-location data of the eight targets, while □, ◁, ▷, ☆, ▽, ○, ◇ and △ represent the geo-location data of the main target and sub-targets 1, 2, …, 7 after RLS filtering). Figure 13 shows that, after RLS filtering, the dispersion of the geo-location data decreases sharply for each target, converging rapidly to a small area adjacent to the true position of the target.

Figure 14 shows how the plane geo-location error of sub-target 2 changes with the number of image frames (the corresponding time) during RLS filtering. Figure 14 shows that, after filtering the geo-location data of 100~150 images (the corresponding time is 3~5 s), the geo-location error of the target has converged to a stable value. Thus, a more accurate stationary target location is available immediately after 150 images, and it is no longer necessary to run RLS beyond that point.
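The convergence behavior described here can be illustrated with a scalar recursive least-squares estimator. This is a minimal sketch, not the paper's filter: it assumes each coordinate of a stationary target is a constant observed with noise (regressor equal to 1), and the function name `rls_constant`, the forgetting factor and the initial covariance are illustrative assumptions. Under these assumptions, with a forgetting factor of 1 the estimate approaches the running mean of the measurements.

```python
def rls_constant(measurements, forgetting=1.0, p0=1e6):
    """Recursive least-squares estimate of a constant from noisy samples.

    measurements: per-frame values of one coordinate of a stationary target.
    forgetting:   forgetting factor (1.0 weights all frames equally).
    p0:           large initial covariance, i.e. no prior knowledge.
    Returns the list of estimates after each frame.
    """
    estimate = 0.0
    p = p0
    history = []
    for z in measurements:
        gain = p / (forgetting + p)            # RLS gain for regressor = 1
        estimate += gain * (z - estimate)      # correct with the innovation
        p = (1.0 - gain) * p / forgetting      # covariance update
        history.append(estimate)
    return history


# Hypothetical noisy readings of one coordinate (metres), for illustration.
readings = [10.0, 12.0, 14.0, 11.0, 13.0]
print(rls_constant(readings)[-1])
```

Applying the same recursion independently to each coordinate of each target would give one filtered track per target, which is the per-target usage described in this test.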

By comparing the stabilized RLS filtering results with the nominal geodetic coordinates of each target, the geo-location errors of the targets after RLS filtering can be determined, as shown in Table 7.

A comparison of the data in Table 7 with those in Table 5 shows that, after RLS filtering, the longitude and latitude errors of each target are much smaller than the single-image multi-target geo-location errors obtained with lens distortion correction alone. The geodetic height error also decreases slightly.

After lens distortion correction, the other four aerial images (24 targets) are likewise used as initial frames for target tracking (the five images contain eight, six, five, five, and eight targets, respectively).

For each of the 2nd to 5th images, the targets in that image (six, five, five, and eight targets, respectively) are tracked over 150 frames starting from that image, and the geo-location data of each target are adaptively estimated by the RLS algorithm.

The multi-target geo-location data after RLS filtering are processed through Equations (43)–(50) to calculate the CEP. The mean geo-location errors along the $X$ and $Y$ directions are 13.78 m and 14.53 m, respectively, and the standard deviations of the geo-location errors along the $X$ and $Y$ directions are 5.79 m and 7.34 m, respectively. Numerical integration of Equation (43) finds that, among the 32 samples, 17 lie within a circle with a radius of 21.52 m, which corresponds to a probability of 53%. The numbers of samples inside and outside the dotted circle in Figure 15 also show that the CEP3 of multi-target geo-location after RLS filtering is 21.52 m, 25% smaller than the CEP1 of multi-target geo-location from a single image.

Note: the CEP circle is calculated from the original geo-location errors, whose 32 samples are scattered over all four quadrants. Because such a scattered plot is not conducive to error analysis, we plot the absolute value of each geo-location error, so all points lie in the first quadrant in Figure 15.

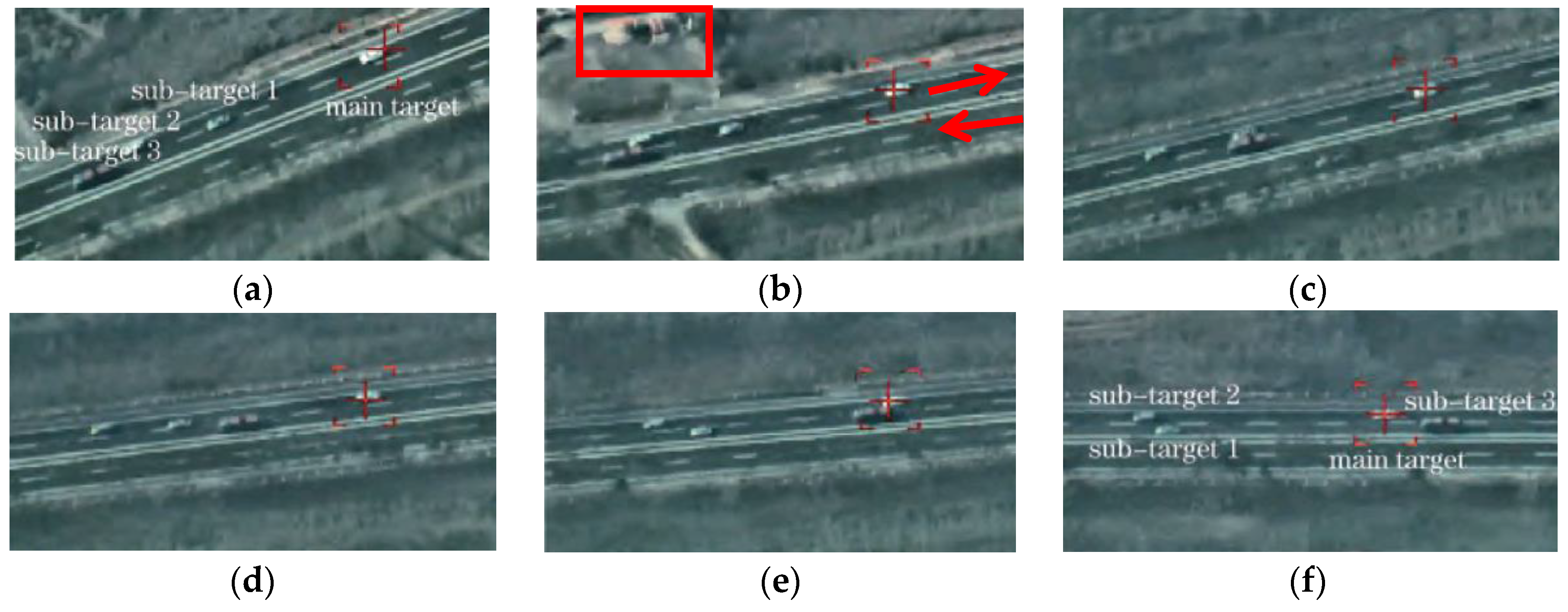

5.6. Test 4: Real-Time Geo-Location and Tracking of Multiple Moving Ground Targets

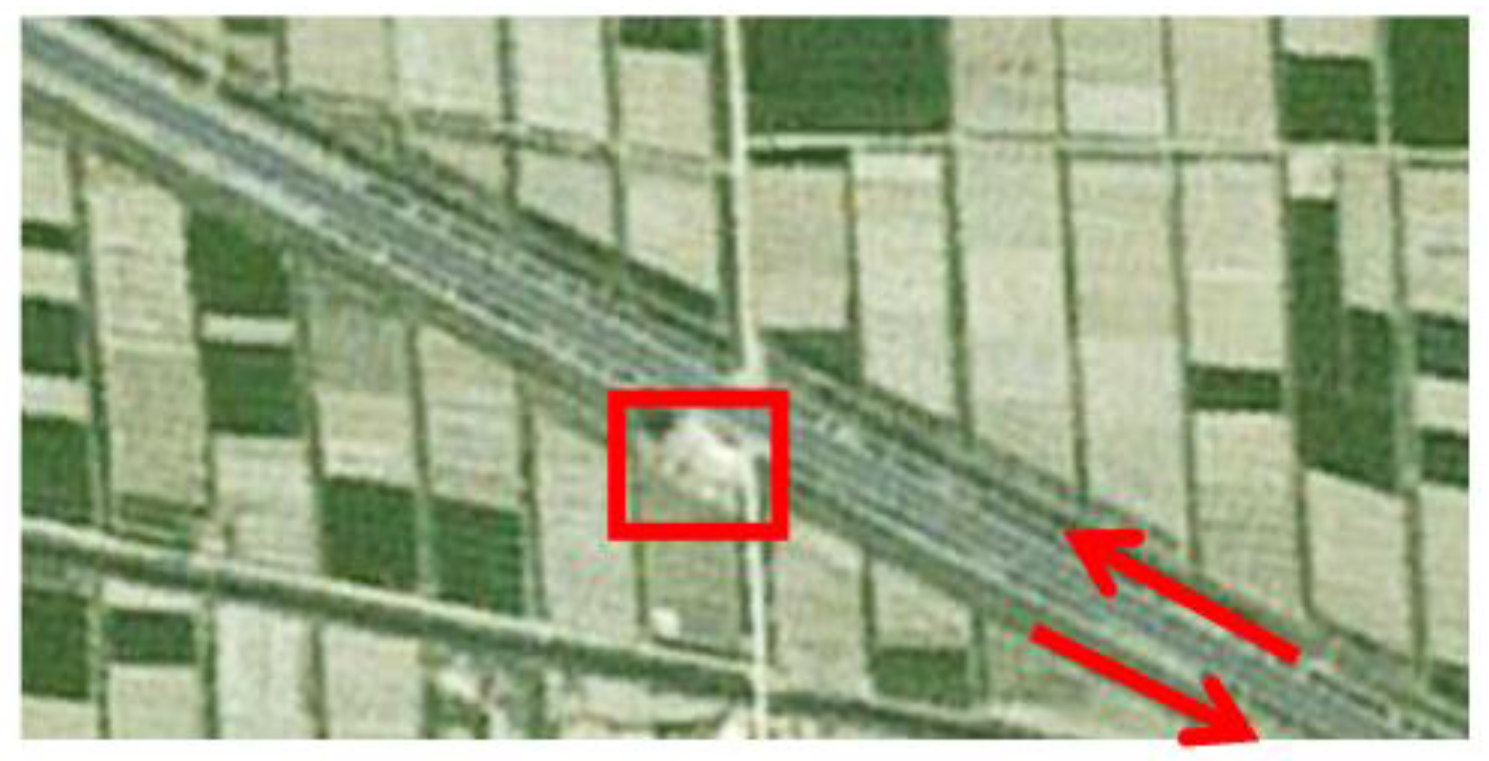

This test localizes and tracks four targets moving on a Changji highway in the video taken by the UAV, as shown in Figure 16 (part of the image). The size of every image is

, the pixel size is

, and the focal length

. The target in the image center is chosen as the main target, and three targets in other positions are chosen as sub-targets. The pixel coordinates of each target in the 1st image and the location and attitude data of the electro-optical stabilized imaging system at the corresponding time are shown in Table 8.

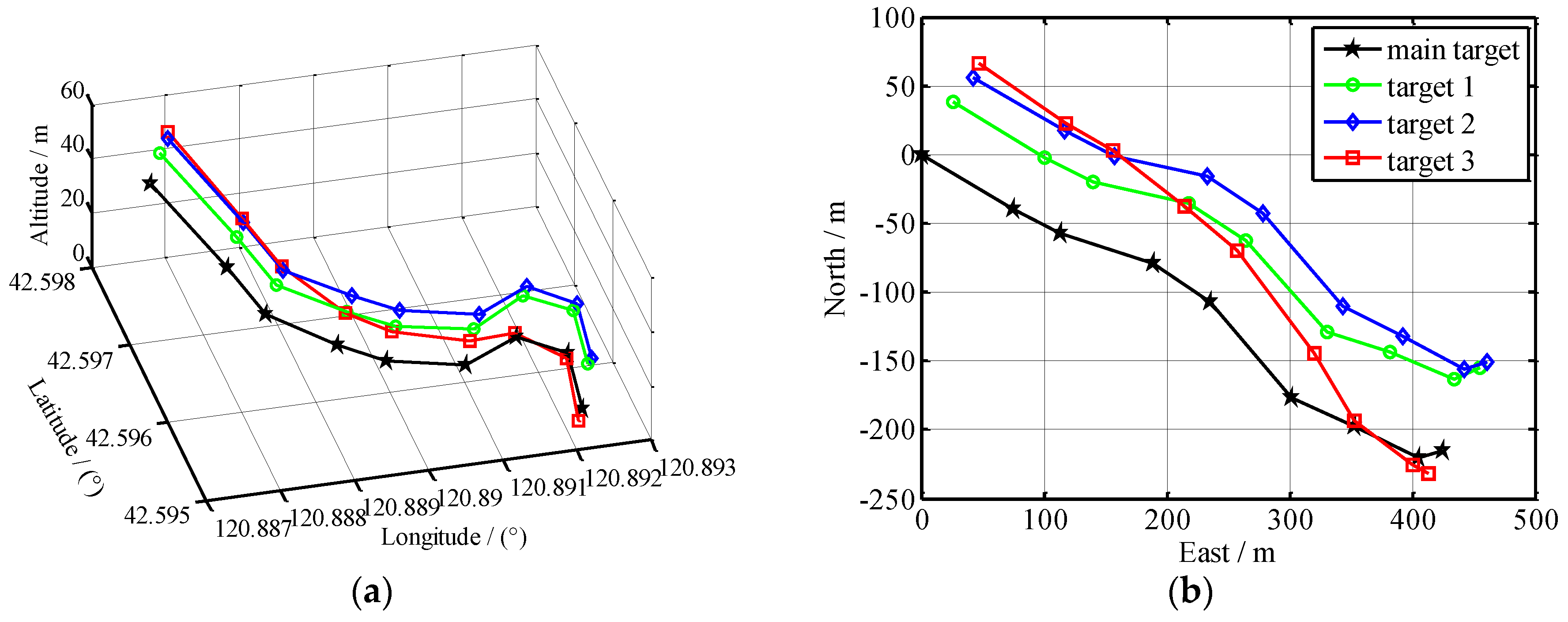

Nine chronological images are selected from the video to calculate the geo-location data of each target in every image. The resulting spatial position distribution of all the targets is shown in Figure 17a. With the main target position in the 1st image as the origin of coordinates, the spatial positions of all the targets are projected onto the ground to obtain their planar motion trails, as shown in Figure 17b. The time span of those nine frames is 30 s.

Since the UAV yaw and the azimuth of the electro-optical stabilized imaging system are generally not 0, the aerial images are somewhat rotated and distorted. The aerial orthographic projection of the highway, shown in Figure 18, is acquired from Google Maps. Figure 18 shows that the highway actually runs northwest–southeast. In China, cars drive on the right side of the road, so the house in Figure 18 is next to the carriageway that runs from northwest to southeast. This house is visible in Figure 16b, so all the cars are travelling from northwest to southeast, which agrees with the localization results in Figure 17.

On the basis of Figure 17, the motion of each target is analyzed as follows. In the 1st image, the targets, ordered from front to back, are sub-target 3, sub-target 2, sub-target 1 and the main target. In the 3rd–4th images, sub-target 2 catches up with and overtakes sub-target 3; in the 4th–6th images, sub-target 1 catches up with and overtakes sub-target 3; in the 7th–9th images, the main target catches up with and overtakes sub-target 3. At the end, the targets, ordered from front to back, are sub-target 2, sub-target 1, the main target and sub-target 3. This coincides completely with the motion of all the targets in the video images shown in Figure 16, demonstrating that this geo-location algorithm can correctly locate and track multiple moving targets. The speed of each target can also be determined. This test further verifies the correctness of our multi-target geo-location model.
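As noted, the speed of each target can be determined from its planar positions over time. The sketch below shows one simple way to do this, assuming planar positions in metres (relative to a common origin, as in the projected motion trails) and their capture times in seconds; the function name `average_speed` and the sample track are hypothetical and not taken from the test data.

```python
import math


def average_speed(track, timestamps):
    """Average ground speed (m/s) along a planar track.

    track:      list of (x, y) positions in metres, in chronological order.
    timestamps: capture time in seconds of each position.
    """
    if len(track) != len(timestamps) or len(track) < 2:
        raise ValueError("need at least two positions with matching timestamps")
    # Sum of straight-line segment lengths between consecutive positions.
    path_length = sum(
        math.hypot(x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    )
    elapsed = timestamps[-1] - timestamps[0]
    return path_length / elapsed


# Hypothetical track of one target, for illustration only.
positions = [(0.0, 0.0), (30.0, 40.0), (60.0, 80.0)]
times = [0.0, 2.0, 4.0]
print(average_speed(positions, times))
```

Because single-frame geo-location errors are of the order of tens of metres, speeds estimated from closely spaced frames would be noisy; using positions spread over a longer time span, such as the nine frames covering 30 s, reduces that effect.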