Abstract

Multimodal medical image fusion (MIF) plays an important role in clinical diagnosis and therapy. Existing MIF methods tend to introduce artifacts, lead to loss of image details or produce low-contrast fused images. To address these problems, a novel spiking cortical model (SCM) based MIF method has been proposed in this paper. The proposed method can generate high-quality fused images using the weighting fusion strategy based on the firing times of the SCM. In the weighting fusion scheme, the weight is determined by combining the entropy information of pulse outputs of the SCM with the Weber local descriptor operating on the firing mapping images produced from the pulse outputs. The extensive experiments on multimodal medical images show that compared with the numerous state-of-the-art MIF methods, the proposed method can preserve image details very well and avoid the introduction of artifacts effectively, and thus it significantly improves the quality of fused images in terms of human vision and objective evaluation criteria such as mutual information, edge preservation index, structural similarity based metric, fusion quality index, fusion similarity metric and standard deviation.

1. Introduction

With the development of medical imaging technology, various imaging modalities such as ultrasound (US) imaging, computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET) and single-photon emission computed tomography (SPECT) are finding a range of applications in the diagnosis and assessment of medical conditions that affect the brain, breast, lungs, soft tissues, bones and so on [1]. Owing to the differences in imaging mechanisms and the high complexity of human histology, medical images of different modalities provide a variety of complementary information about the human body. For example, CT is well-suited for imaging dense structures like non-metallic implants and bones with relatively little distortion. Likewise, MRI can visualize pathological soft tissues better, whereas PET can measure the amount of metabolic activity at a site in the body. Multimodal medical image fusion (MIF) aims to integrate complementary information from multimodal images into a single new image to improve the understanding of the clinical information in a new space. Thus, MIF plays an important role in the diagnosis and treatment of diseases and has found wide clinical applications, such as US-MRI for prostate biopsy [1], PET-CT in lung cancer [2], MRI-PET in brain disease [3] and SPECT-CT in breast cancer [4].

Numerous image fusion algorithms have been proposed by working at pixel level, feature level or decision level. Among these methods, the pixel-level fusion scheme has been investigated most widely due to its advantage of containing the original measured quantities, easy implementation and computational efficiency [5]. Existing pixel-level image fusion methods generally include substitution methods, multi-resolution fusion methods and neural network based methods. The substitution methods such as intensity hue saturation [6,7], principal component analysis [8] based methods can be implemented with high efficiency but at the expense of reduced contrast and distortion of the spectral characteristics. Image fusion methods based on the multi-resolution decomposition techniques can preserve important image features better than substitution methods via the decomposition of images at a different scale to several components using pyramid (e.g., contrast pyramid [9] and gradient pyramid [10]), empirical mode decomposition [11] or various transforms including wavelet transform [12,13,14], curvelet transform [15], ripplet transform [16], contourlet transform [17], non-subsampled contourlet transform (NSCT) [18,19,20,21,22] and shift-invariant shearlet transform [23,24]. However, the transform based fusion methods involve much higher computational complexity than the substitution methods, and it is challenging to adaptively determine the involved parameters in these methods for the different medical images.

Various neural networks such as the self-generating neural network [25] and the pulse coupled neural network (PCNN) have been used for image fusion. Unlike some traditional neural networks, the PCNN, as a third-generation artificial neural network, has a biological background and is derived from the phenomenon of synchronous pulse bursts in the visual cortex of mammals [26,27]. PCNN based MIF methods have gained much attention because they are very generic and require no training. A parallel image fusion method using multiple PCNNs has been proposed by Li et al. [28]. A multi-channel PCNN (m-PCNN) based fusion method has been proposed by Wang et al. [29], and it has been further improved by Zhao et al. [30]. Recently, PCNN has been combined with multi-resolution decomposition methods such as the wavelet transform [31], the NSCT [32,33,34,35,36], the shearlet transform [37,38] and the empirical mode decomposition [39]. These methods suffer from such disadvantages as high computational complexity, difficulty in adaptively determining PCNN parameters for various source images, and image contrast reduction or loss of image details. In view of the high computational complexity of PCNN, Zhan et al. [40] have recently proposed a computationally more efficient spiking cortical model (SCM), a single-layer, locally connected, two-dimensional neural network. Wang et al. [41] have presented a fusion method based on the firing times of the SCM (SCM-F). Despite the superiority of SCM over PCNN in computational efficiency, the SCM-F method leads to loss of image details during fusion because it utilizes only the firing times of individual neurons in the SCM to establish the fusion rule and employs an overly simple fusion strategy.

To address the problem of unwanted image degradation during fusion for the above-mentioned fusion methods, we have proposed a distinctive SCM based weighting fusion method. In the proposed method, the weight is computed based on the multi-features of pulse outputs produced by SCM neurons in a neighborhood rather than the individual neurons. The multi-features include the entropy information of pulse outputs, which can characterize the gray-level information of source images, and the Weber local descriptor (WLD) feature [42] of firing mapping images produced from pulse outputs, which can represent the local structural information of source images. Compared with the PCNN based fusion method, the proposed SCM based method using the multi-features of pulse outputs (SCM-M) has such advantages as higher computational efficiency, simpler parameter tuning as well as less contrast reduction and loss of image details. Meanwhile, the proposed SCM-M method can preserve the details of source images better than the SCM-F method. Extensive experiments on CT and MR images demonstrate the superiority of the proposed method over numerous state-of-the-art fusion methods.

2. Spiking Cortical Model

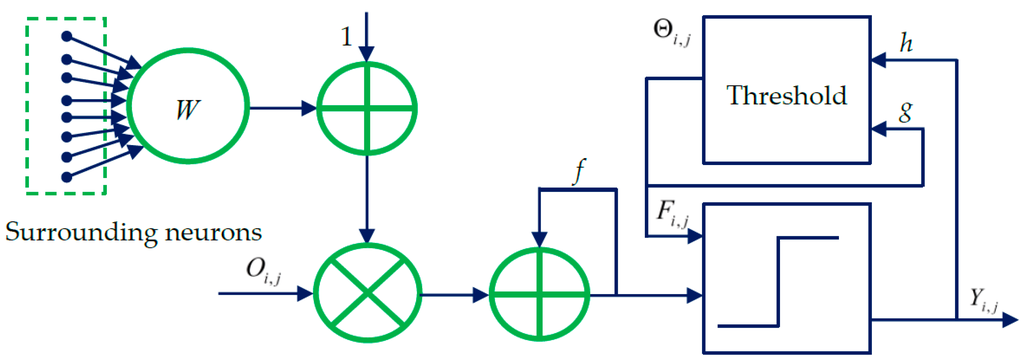

The spiking cortical model (SCM) is derived from several other visual cortex models such as Eckhorn’s model [26,27]. The SCM has been specially designed for image processing applications. The structural model of the SCM is presented in Figure 1. As shown in Figure 1, each neuron at (i,j) corresponds to one pixel in an input image, receiving its normalized intensity as the feeding input and the local stimuli from its neighboring neurons as the linking input. The feeding input and the linking input are combined as the internal activity of the neuron. The neuron fires and generates a pulse output if its internal activity exceeds a dynamic threshold. The above process can be expressed by [40]:

where f and g are decay constants less than 1; h is a scalar of large value; n denotes the iteration number (1 ≤ n ≤ Nmax, where Nmax is the maximum number of iterations); and the synaptic weight between each neuron and its linking neuron is defined as:

Figure 1.

The structural model illustrating the spiking cortical model (SCM).

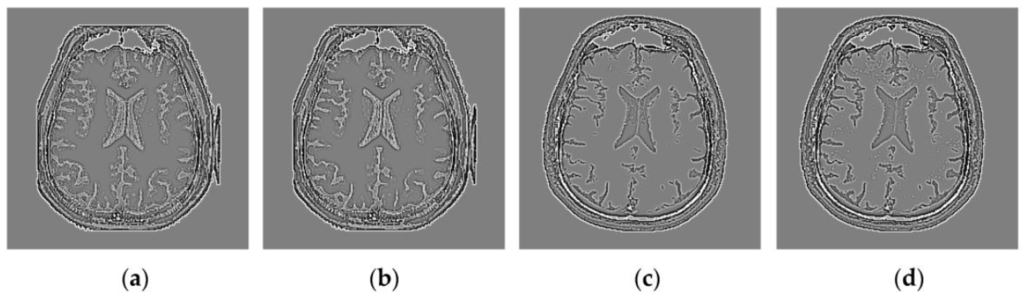

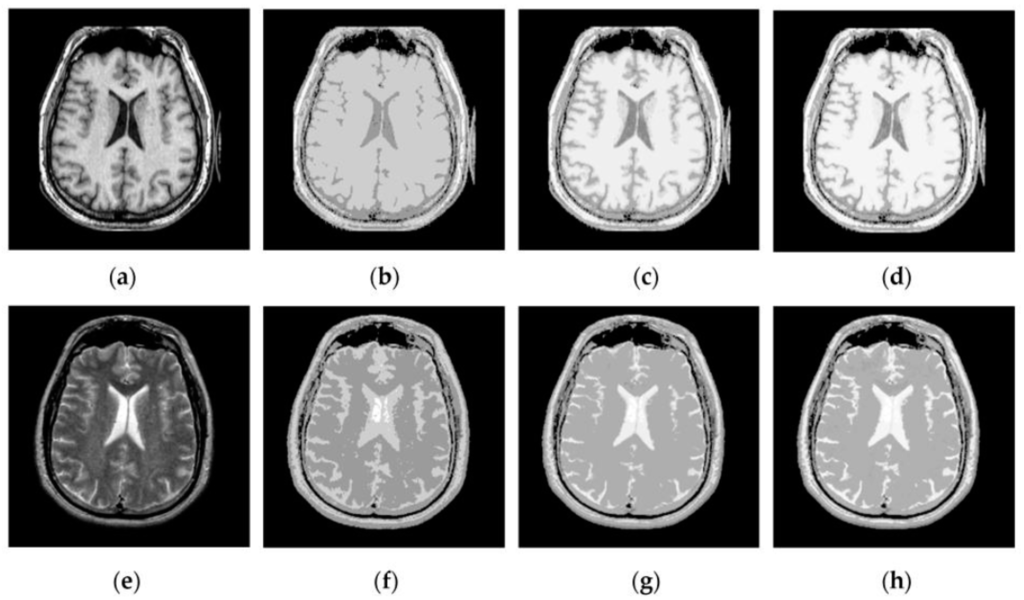

Through iterative computation, the SCM neurons output the temporal series of binary pulse images. The temporal series contain much useful information of input images. To explain this point better, Figure 2 shows the temporal series produced by the SCM with f = 0.9, g = 0.3, h = 20 and Nmax = 7 operating on an input MR image shown in Figure 2a. In Figure 2, we can see that during the various iterations, the output binary images contain different image information and the outputs of the SCM typically represent such important information as the segments and edges of the input image. The observation from Figure 2 indicates that the SCM can describe human visual perception. Therefore, the pulse outputs of the SCM can be utilized for image fusion.
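The iterative behaviour described above can be illustrated with a minimal sketch. It assumes the commonly cited SCM update form (internal activity decays by f and is driven by the stimulus plus stimulus-modulated linking input; the threshold decays by g and is raised by h after a neuron fires; a neuron fires when its activity exceeds its threshold). The function name `scm_pulse_series` and the 3 × 3 linking kernel are hypothetical choices for illustration and are not taken from Equation (4).

```python
def scm_pulse_series(image, f=0.9, g=0.3, h=20.0, n_max=7):
    """Run an SCM-style network on a normalized 2-D image (values in [0, 1]).

    Returns a list of n_max binary pulse images (lists of lists of 0/1).
    """
    rows, cols = len(image), len(image[0])
    # Hypothetical linking kernel (assumption; the paper's weights may differ).
    kernel = [[0.5, 1.0, 0.5],
              [1.0, 0.0, 1.0],
              [0.5, 1.0, 0.5]]
    u = [[0.0] * cols for _ in range(rows)]  # internal activity
    e = [[0.0] * cols for _ in range(rows)]  # dynamic threshold
    y = [[0] * cols for _ in range(rows)]    # pulse output of previous iteration
    series = []
    for _ in range(n_max):
        new_u = [[0.0] * cols for _ in range(rows)]
        new_e = [[0.0] * cols for _ in range(rows)]
        new_y = [[0] * cols for _ in range(rows)]
        for i in range(rows):
            for j in range(cols):
                # Linking input: weighted sum of the neighbours' previous pulses.
                link = 0.0
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        k, l = i + di, j + dj
                        if 0 <= k < rows and 0 <= l < cols:
                            link += kernel[di + 1][dj + 1] * y[k][l]
                s = image[i][j]  # feeding input (normalized intensity)
                new_u[i][j] = f * u[i][j] + s * link + s
                new_e[i][j] = g * e[i][j] + h * y[i][j]
                new_y[i][j] = 1 if new_u[i][j] > new_e[i][j] else 0
        u, e, y = new_u, new_e, new_y
        series.append(y)
    return series
```

With these assumptions, every pixel with positive intensity fires in the first iteration, and the large value of h then suppresses it for several iterations, which is what produces a temporal series of distinct binary images.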

Figure 2.

Temporal series of pulse outputs generated by the SCM operating on magnetic resonance (MR) image: (a) MR image; and (b–h) the binary pulse images from the first to the seventh iteration, respectively.

3. SCM Based Image Fusion

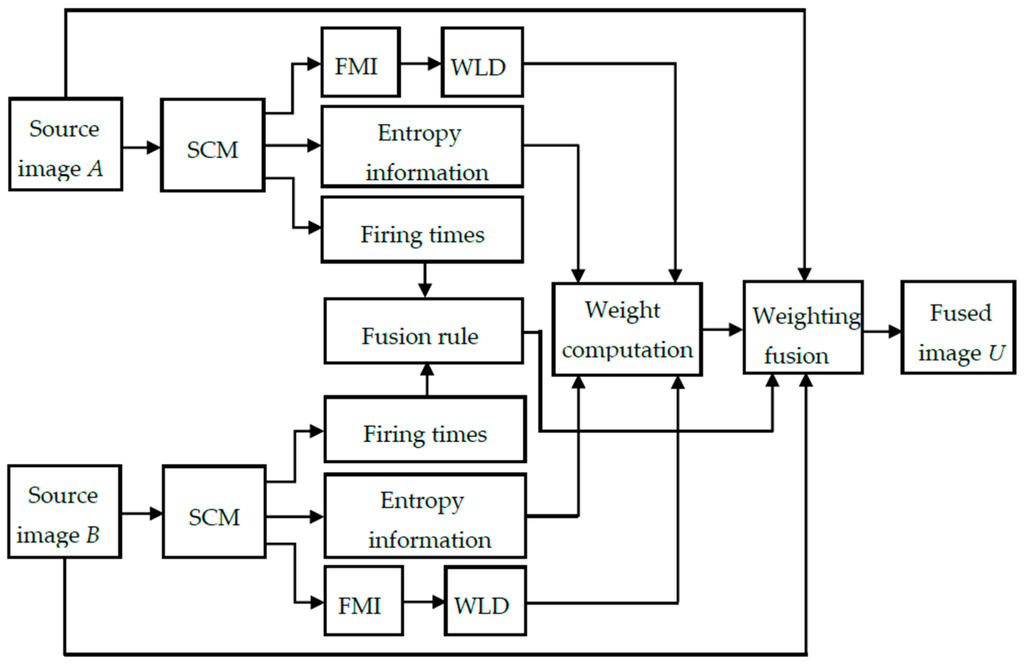

The weighting fusion framework of the proposed SCM-M method is given in Figure 3. The key components of this method include the fusion rule and the weight computation. In the proposed method, the fusion rule is established based on the firing times of pulse outputs generated by the SCM. The weight is computed based on the similarity between the two source images, which is determined by combining the entropy information of pulse outputs from the SCM with the WLD operating on the resultant firing mapping image (FMI).

Figure 3.

The flowchart of the SCM-M method.

3.1. Fusion Rule

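The two source images, A and B, are normalized and fed into two SCMs as the external stimuli. By running the SCMs for Nmax iterations, we obtain the firing times $T^A_{ij}$ and $T^B_{ij}$ for each pixel at (i,j) in the source images as:

$$T^A_{ij} = \sum_{n=1}^{N_{\max}} Y^A_{ij}(n) \tag{5}$$

$$T^B_{ij} = \sum_{n=1}^{N_{\max}} Y^B_{ij}(n) \tag{6}$$

where $Y^A_{ij}(n)$ and $Y^B_{ij}(n)$ denote the binary pulse outputs of the neuron at (i,j) at the n-th iteration for A and B, respectively.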

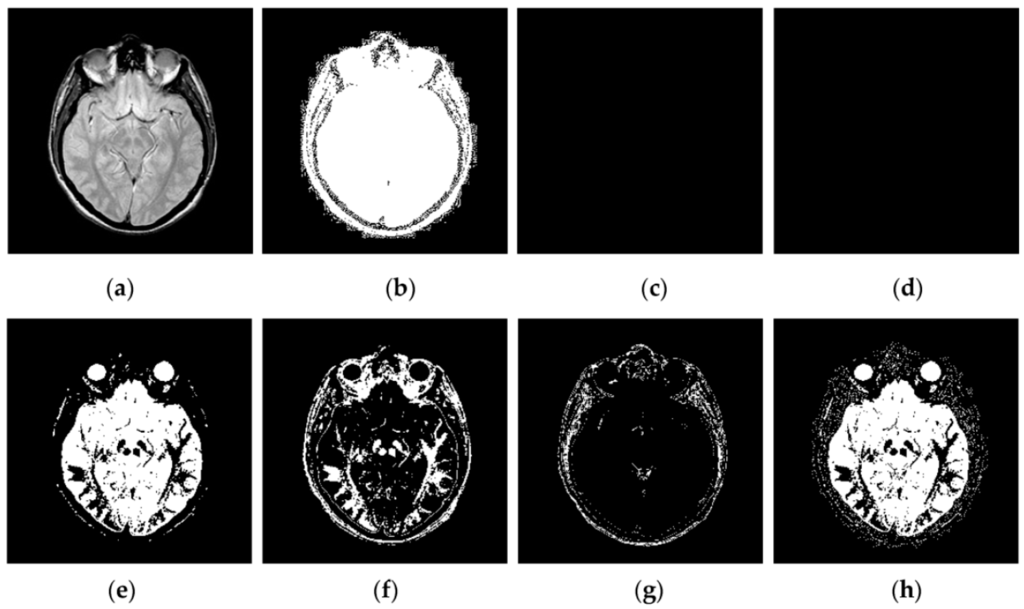

Based on the firing times of the two source images, two FMIs are produced, one for A and one for B. From Equations (5) and (6), we can see that the FMI is actually the sum of the temporal series of pulse outputs produced by the SCM. Figure 4 shows the FMIs generated by the SCM with f = 0.9, g = 0.3, h = 20 and different Nmax operating on a pair of MR images. It should be noted that here the FMIs have been scaled linearly to fit the range [0, 255]. In Figure 4, we can see that the FMI provides a means of representing the information of the source images. The representation ability is related to the parameters of the SCM, especially the parameter Nmax. A value of Nmax that is too small (e.g., Nmax = 10) or too large (e.g., Nmax = 50) tends to lose important image details, as shown in Figure 4b,d. A proper Nmax allows the SCM to represent the image details in the source images very well, which is of great significance for medical image fusion.
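As a small illustration of the statement that the FMI is the sum of the temporal series of pulse outputs, the following sketch accumulates a list of binary pulse images and rescales the result linearly to [0, 255]. The function names are hypothetical, and the pulse images are toy data rather than SCM outputs.

```python
def firing_map(pulse_series):
    """Sum a temporal series of binary pulse images into a firing mapping image."""
    rows, cols = len(pulse_series[0]), len(pulse_series[0][0])
    return [[sum(p[i][j] for p in pulse_series) for j in range(cols)]
            for i in range(rows)]


def scale_to_display_range(fmi):
    """Linearly rescale an FMI to the range [0, 255] for display."""
    lo = min(min(row) for row in fmi)
    hi = max(max(row) for row in fmi)
    if hi == lo:
        # A constant map has no contrast to stretch.
        return [[0.0 for _ in row] for row in fmi]
    return [[255.0 * (v - lo) / (hi - lo) for v in row] for row in fmi]


# Toy series of three 2 x 2 pulse images (illustrative data only).
pulses = [[[1, 0], [0, 1]],
          [[1, 1], [0, 0]],
          [[1, 0], [0, 0]]]
fmi = firing_map(pulses)
scaled = scale_to_display_range(fmi)
```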

Figure 4.

Firing mapping images corresponding to MR-T2 and MR-T1 images: (a,e) MR-T2 and MR-T1 images; (b,f) FMIs with Nmax = 10 for (a,e); (c,g) FMIs with Nmax = 30 for (a,e); and (d,h) FMIs with Nmax = 50 for (a,e).

For each pixel at (i,j) in the two FMIs, two image patches of size (2Lp + 1) × (2Lp + 1) centered at this pixel are considered in order to represent the local statistical characteristics, which are more advantageous for the effective fusion of the source images than the characteristics of individual pixels. The statistical characteristics of the two image patches are characterized by their local energies, defined as:

According to the relationship between the two local energies, the following fusion rule is established, and the intensity of the pixel at (i,j) in the fused image U is expressed as:

where $A_{ij}$ and $B_{ij}$ denote the pixel intensities at (i,j) in the source images A and B, respectively, and $w_{ij}$ denotes the weight, which has an important influence on the quality of the fused image because it determines the contribution of each source image to the fused result. To ensure a good fusion effect, the weighting is generally chosen so that the source image whose FMI patch has the larger local energy contributes more to the fused pixel, while the other source image contributes less.
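The following sketch shows one plausible reading of an energy-guided weighted fusion rule. Two points are assumptions rather than the paper's definitions: the local energy is taken as the sum of squared FMI values over the patch (clipped at the image border), and the weight w is applied to the pixel from the source whose patch has the larger local energy. The function names are hypothetical.

```python
def local_energy(fmi, i, j, lp=1):
    """Sum of squared FMI values in the (2*lp+1) x (2*lp+1) patch at (i, j).

    The patch is clipped at the image border (assumption).
    """
    rows, cols = len(fmi), len(fmi[0])
    total = 0.0
    for k in range(max(0, i - lp), min(rows, i + lp + 1)):
        for l in range(max(0, j - lp), min(cols, j + lp + 1)):
            total += fmi[k][l] ** 2
    return total


def fuse_pixel(a, b, energy_a, energy_b, w):
    """Weighted sum of two source pixels, favouring the higher-energy source.

    w is expected to lie in [0.5, 1] so that the favoured source dominates.
    """
    if energy_a >= energy_b:
        return w * a + (1.0 - w) * b
    return (1.0 - w) * a + w * b
```

For example, with `w = 0.75` a pixel pair `(100, 50)` fuses to 87.5 when the patch in A has the larger energy and to 62.5 otherwise.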

3.2. Weight Computation

To obtain a suitable weight, the local neighborhood (i.e., image patch) of size (2Lp + 1) × (2Lp + 1) centered at (i,j) in each source image is considered. It is desirable to compute this weight based on the similarity between the two image patches, one from each source image. To determine this similarity effectively, the gray-level information and the saliency of each patch are utilized simultaneously. Here, the entropy of pulse outputs and the Weber local descriptor proposed in [42] are adopted to characterize the gray-level distribution of each patch and its saliency, respectively.

3.2.1. Similarity Computation Based on the Entropy Information

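For any pixel at (i,j) in each pulse image at the n-th iteration, the image patch of size (2Lp + 1) × (2Lp + 1) centered at this pixel is considered. To describe the information contained in this binary patch, its Shannon entropy $H_{ij}(n)$ is utilized. The entropy is computed as:

$$H_{ij}(n) = -P_1 \log P_1 - P_0 \log P_0 \tag{10}$$

where $P_1$ and $P_0$ denote the probabilities of the 1’s and 0’s in the patch, respectively, with the convention $0 \log 0 = 0$. Here, the probability is defined as:

$$P_1 = \frac{N_1}{(2L_p + 1)^2}, \qquad P_0 = 1 - P_1 \tag{11}$$

where $N_1$ denotes the number of 1’s in the patch.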

The Shannon entropies from the various iterations form the feature vector $(H_{ij}(1), H_{ij}(2), \ldots, H_{ij}(N_{\max}))$, where $H_{ij}(n)$ is the entropy of the patch at the n-th iteration. From Equation (10), we can see that if the image patch in any source image is a homogeneous region, the Shannon entropy for all the iterations will be zero because the probability of 1’s in the patch is either 0 or 1, thereby producing a zero feature vector. Otherwise, because the entropy will not be zero for some iterations, the feature vector will include nonzero elements, whose values depend on the gray-level distribution of the patch. The above analysis indicates that the gray-level information of the patch can be characterized by this feature vector. Accordingly, the feature vector can be considered as the feature extracted from the patch.

The difference between the feature vectors of the two image patches, one in A and one in B, is calculated as:

where $\|\cdot\|$ denotes the Euclidean norm.
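A minimal sketch of the entropy feature and its Euclidean difference is given below. It assumes base-2 logarithms and a patch clipped at the image border; both are illustrative choices, and the function names are hypothetical.

```python
import math


def binary_patch_entropy(pulse, i, j, lp=1):
    """Shannon entropy (in bits) of the binary patch centered at (i, j)."""
    rows, cols = len(pulse), len(pulse[0])
    ones = 0
    count = 0
    for k in range(max(0, i - lp), min(rows, i + lp + 1)):
        for l in range(max(0, j - lp), min(cols, j + lp + 1)):
            ones += pulse[k][l]
            count += 1
    p1 = ones / count
    if p1 == 0.0 or p1 == 1.0:
        return 0.0  # homogeneous patch, using the convention 0 * log 0 = 0
    p0 = 1.0 - p1
    return -(p1 * math.log2(p1) + p0 * math.log2(p0))


def entropy_feature(pulse_series, i, j, lp=1):
    """One entropy value per iteration, collected into a feature vector."""
    return [binary_patch_entropy(p, i, j, lp) for p in pulse_series]


def feature_difference(ha, hb):
    """Euclidean distance between two entropy feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(ha, hb)))
```

A homogeneous patch gives a zero feature vector, so two homogeneous patches have zero difference regardless of their gray levels, which is why the entropy feature is combined with a structural descriptor.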

Based on this difference, the similarity between the two image patches based on the entropy information is defined as:

where CS1 is a constant.

3.2.2. Similarity Computation Based on the WLD

In this paper, the WLD is adopted to extract the salient features of an image patch of interest in the firing mapping image, which can be utilized to represent the saliency of the image patch in any source image. The WLD is chosen due to its high computational efficiency and excellent ability to find local salient patterns within an image, simulating the pattern perception of human beings [42]. Indeed, there are many other sparse and dense descriptors such as the scale invariant feature transform (SIFT) and the local binary pattern (LBP). Compared with the SIFT and the LBP, the WLD is computed around a relatively small square region and it extracts the local salient patterns by means of the differential excitation [42]. Therefore, the WLD can capture more local salient patterns than the SIFT and the LBP.

The computation of the WLD stems from Weber’s Law, which states that the ratio of the increment threshold (i.e., a just noticeable difference) to the background intensity is a constant. For the current pixel at (i,j) in the FMI of A or B, the difference between this pixel and its neighbors in an image patch of size (2Lp + 1) × (2Lp + 1) is given by:

It can be seen from Equation (14) that the computation of this difference is very similar to the Laplacian operation. Following the hints in Weber’s Law, the differential excitation of the current pixel for the WLD is computed as [42]:

where the arctangent function is used to prevent the output from increasing or decreasing too quickly when the input becomes larger or smaller [42].
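The differential excitation can be sketched as follows, using the standard WLD form: the arctangent of the summed neighbour-minus-center differences divided by the center intensity. The small constant `eps`, which guards against division by zero when a pixel never fires, and the border clipping are assumptions added for this illustration.

```python
import math


def differential_excitation(fmi, i, j, lp=1, eps=1e-6):
    """WLD differential excitation of the pixel at (i, j) in an FMI."""
    rows, cols = len(fmi), len(fmi[0])
    center = fmi[i][j]
    diff = 0.0
    for k in range(max(0, i - lp), min(rows, i + lp + 1)):
        for l in range(max(0, j - lp), min(cols, j + lp + 1)):
            diff += fmi[k][l] - center  # the center itself contributes zero
    # arctan bounds the response to the open interval (-pi/2, pi/2).
    return math.atan(diff / (center + eps))
```

A flat patch yields zero excitation, a pixel brighter than its surroundings yields a negative value, and a darker pixel yields a positive value, so the magnitude highlights edges and isolated structures.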

As discussed in [42], the WLD can indicate the saliency of the local neighborhood very well because of its powerful representation ability for such important features as edges and textures. To explain this point better, Figure 5 shows the results of the WLD operating on the FMIs shown in Figure 4c,d,g,h. The comparisons between Figure 4 and Figure 5 show that both the strong and weak edges in Figure 4 have become more salient in the results of the WLD than in the FMIs. Therefore, the WLD operating on the FMIs can bring out the local structural features of the source images very well, which is highly beneficial for medical diagnosis based on different imaging modalities. It is desirable to utilize these extracted salient image features to determine the similarity between the two image patches in the source images, i.e.,

where CS2 is a constant.

3.2.3. Weight Determining Based on the Combined Similarity

By combining the entropy-based similarity with the WLD-based similarity, the weight in Equation (9) is given by:

From Equation (17), we can see that if the two image patches centered at (i,j) in the source images have the same local structure, which means the same WLD, the weight is determined by the entropy-based similarity, which is related to the intensity distributions of the image patches in the source images. Likewise, if the two image patches have the same gray-level distributions, the weight depends on the WLD-based similarity, which is related to the local image structure. The above analysis shows that the weight can represent the similarity between two image patches effectively by combining their gray-level information with their local structure. It should be noted that the entropy-based similarity could also be computed using non-Euclidean similarity measures such as the cosine distance measure and Pearson correlation, the latter being scale and translation invariant. When Pearson correlation is used to measure the similarity between the Shannon entropy feature vectors of the two considered image patches, it can address scale and translation changes of the feature vectors. However, this correlation requires that the variables follow a bivariate normal distribution. The possibility of utilizing non-Euclidean similarity measures for the similarity computation in the weighting fusion strategy will be explored in depth in future work.
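The invariance properties mentioned above can be checked with a short sketch of the two non-Euclidean measures. The feature vectors are illustrative toy values, and returning 0.0 for a zero-norm or constant vector is a convention chosen here, not part of either definition.

```python
import math


def cosine_similarity(x, y):
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    nx = math.sqrt(sum(a * a for a in x))
    ny = math.sqrt(sum(b * b for b in y))
    if nx == 0.0 or ny == 0.0:
        return 0.0  # undefined for a zero vector; 0.0 by convention here
    return dot / (nx * ny)


def pearson_correlation(x, y):
    """Pearson correlation coefficient between two feature vectors."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0.0 or sy == 0.0:
        return 0.0  # undefined for a constant vector; 0.0 by convention here
    return cov / (sx * sy)


# Toy entropy feature vector and a scaled-and-shifted copy of it.
h_a = [0.0, 0.5, 1.0, 0.25]
h_b = [2.0 * v + 3.0 for v in h_a]
```

Here `pearson_correlation(h_a, h_b)` is 1 up to rounding, because the correlation ignores both the scale factor and the offset, whereas `cosine_similarity(h_a, h_b)` drops below 1 because the cosine measure is invariant to scaling only.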

3.3. Implementation of the SCM-M Method

The implementation of the proposed SCM-M method can be summarized as the following steps:

- (1) The two source images A and B are input into two SCMs. After running each SCM for Nmax iterations, the series of binary pulse images is obtained for each source image using Equations (1)–(4).

- (2) For each pixel at (i,j) in A and B, the Shannon entropy from the various iterations is computed on the output pulse images using Equation (10) to generate two feature vectors, one for each source image. Based on the difference between the two feature vectors, the entropy-based similarity between the two image patches centered at (i,j) is computed using Equation (13).

- (3) The output pulse images are utilized to generate the firing mapping images for the two source images. For any pixel at (i,j) in the two FMIs, the local energies of the two image patches centered at this pixel are computed using Equations (7) and (8), respectively. Meanwhile, the WLD is computed for the two image patches using Equation (15) to determine the WLD-based similarity between them using Equation (16).

- (4) The weight is determined by the entropy-based similarity and the WLD-based similarity using Equation (17).

- (5) According to the relationship between the two local energies, the fused image is produced by the weighted sum of the two source images using Equation (9).

4. Experimental Results and Discussions

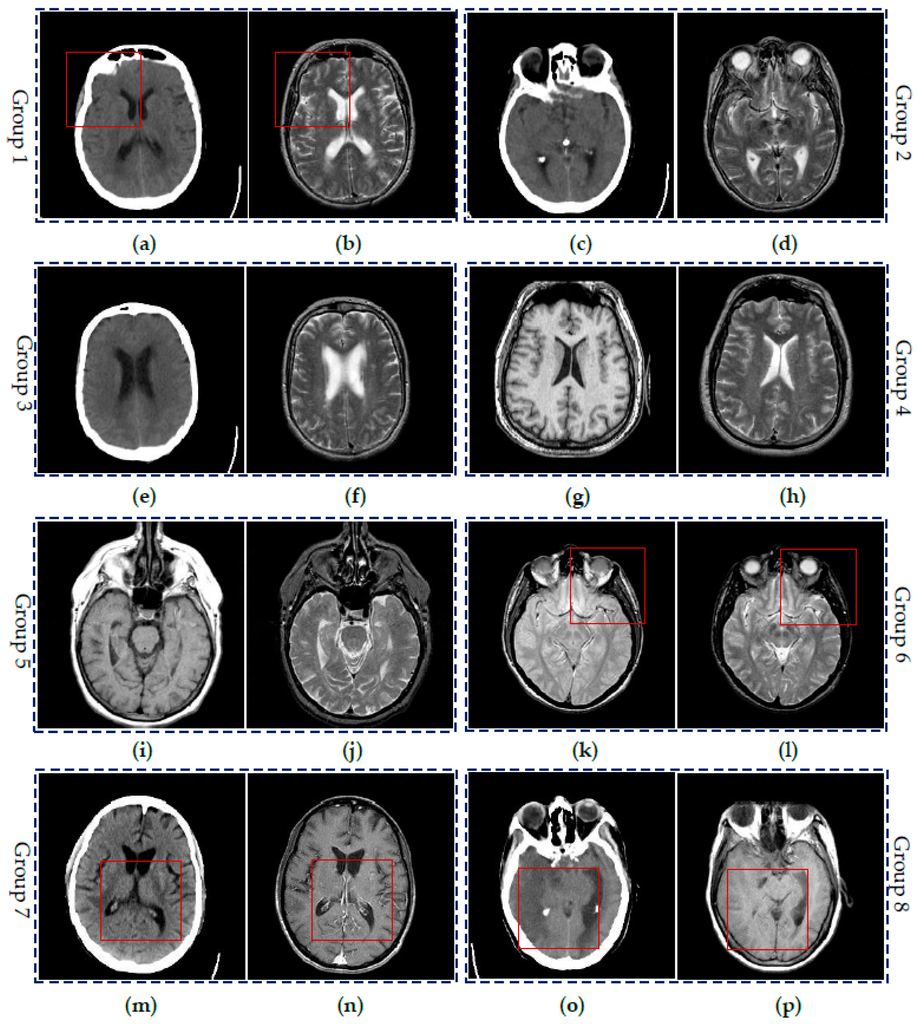

To demonstrate the effectiveness of the proposed SCM-M method, extensive experiments have been done on eight groups of CT and MR images shown in Figure 6. All the images are chosen from the website [43]. Each image is of size 256 × 256. Two images in each image pair include the complementary information. Here, Groups 1–3 are three pairs of CT and MR images of different regions in the brain of a patient with acute stroke. Group 4 includes the transaxial MR images of the normal brain. Groups 5 and 6 are MR images of the brain of patients with vascular dementia and AIDS dementia, respectively. Groups 7 and 8 are two pairs of CT and MR images of the brain of the patients with cerebral toxoplasmosis and fatal stroke. Please note that intensity standardization and inhomogeneity correction have been performed on all MR images by the above website.

Figure 6.

Eight groups of source medical images: (a,c,e,m,o) CT images; (b,d,f,h,j,l) MR-T2 images; (g,i,p) MR-T1 images; (k) MR-PD image; and (n) MR-GAD image.

To verify the advantage of the SCM-M method, it has been compared with the discrete wavelet transform (DWT), the NSCT [21], the combination of NSCT and sparse representation (NSCT-SR) [21], m-PCNN [29], PCNN-NSCT [32] and SCM-F [41] based fusion methods. The code of the DWT and PCNN-NSCT methods is available on the websites [44,45], respectively. The code of the NSCT and NSCT-SR methods can be found on the website [46].

4.1. Parameter Settings

For the DWT fusion method, the wavelet and the number of decomposition levels are chosen to be DBSS(2,2) and 4, respectively. For the m-PCNN method and the SCM-F method, all the involved parameters are chosen as suggested in [29,41], respectively. For the NSCT and NSCT-SR methods, we have used the “pyrexc” as the pyramid filter and the “vk” as the directional filter. The number of directions of the four decomposition levels from coarse to fine is selected as 2, 3, 3, 4, respectively. For the PCNN-NSCT method, we have chosen the decay constant , the linking strength and the amplitude gain in the PCNN while keeping other parameters to be the same as those in [32]. As regards the proposed method, we have fixed f = 0.9, g = 0.3, h = 20, , CS1 = 3 × Nmax, CS2 = 5 × and chosen Nmax to be close to 20.

4.2. Visual Comparisons of Fused Results

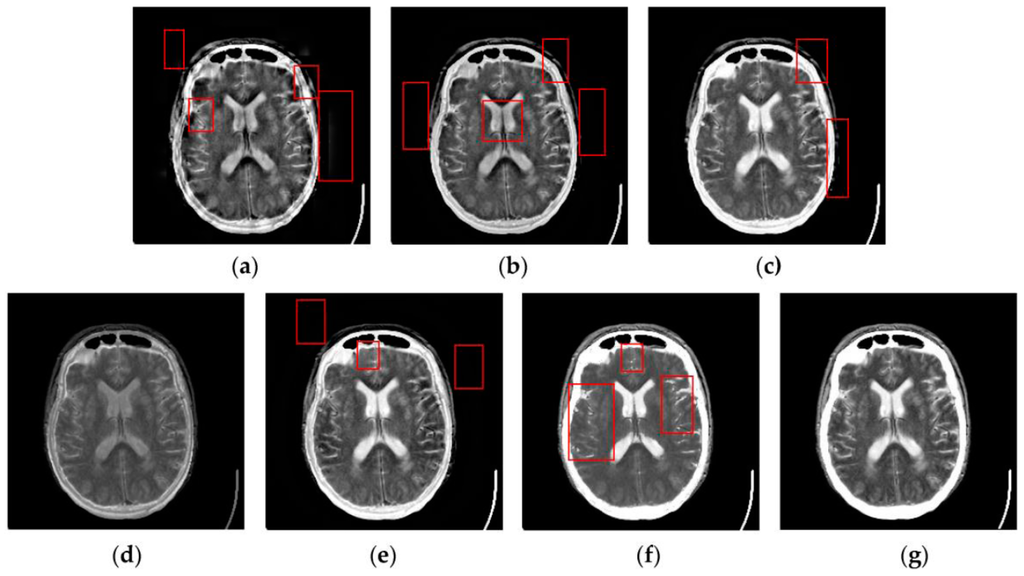

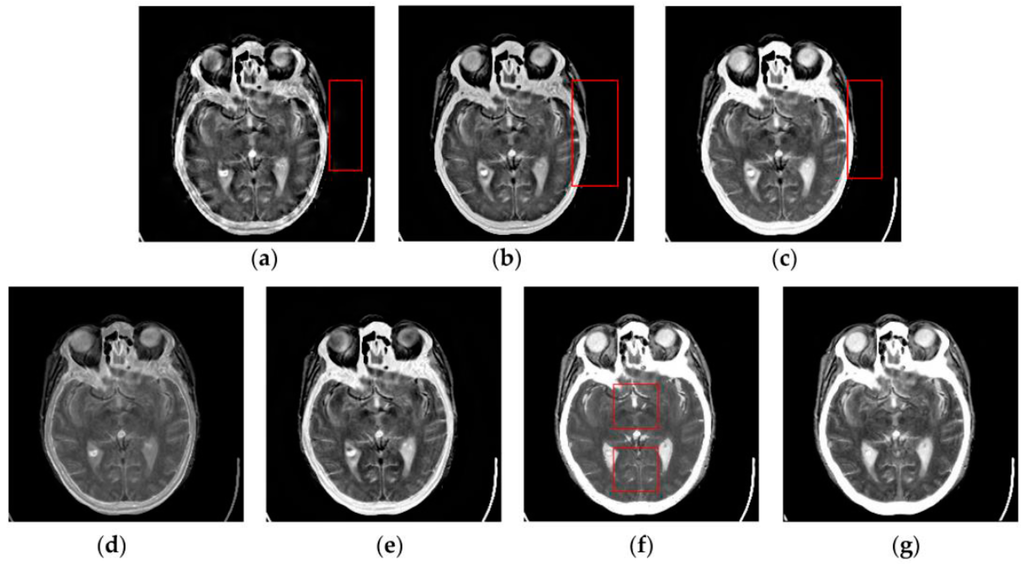

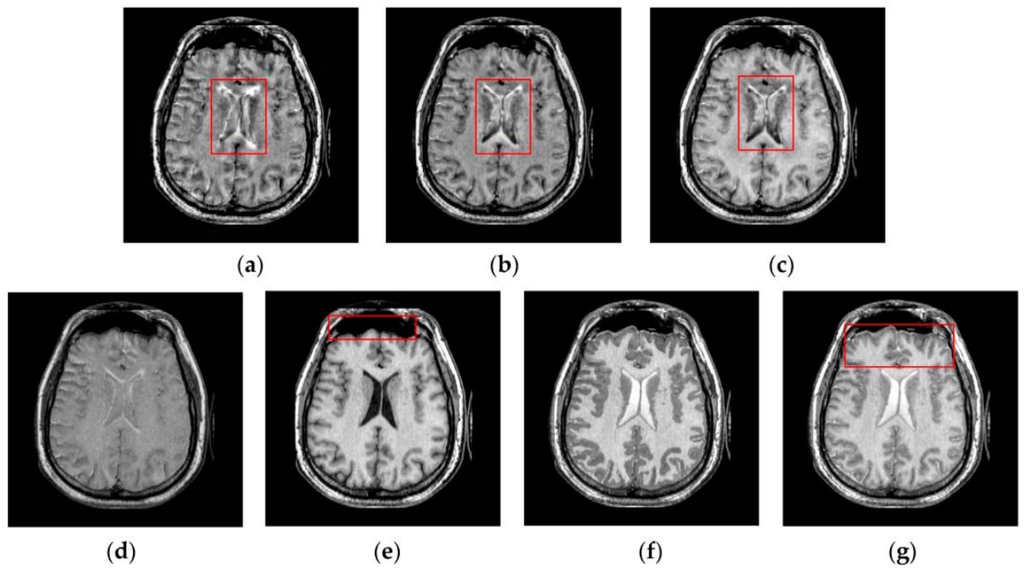

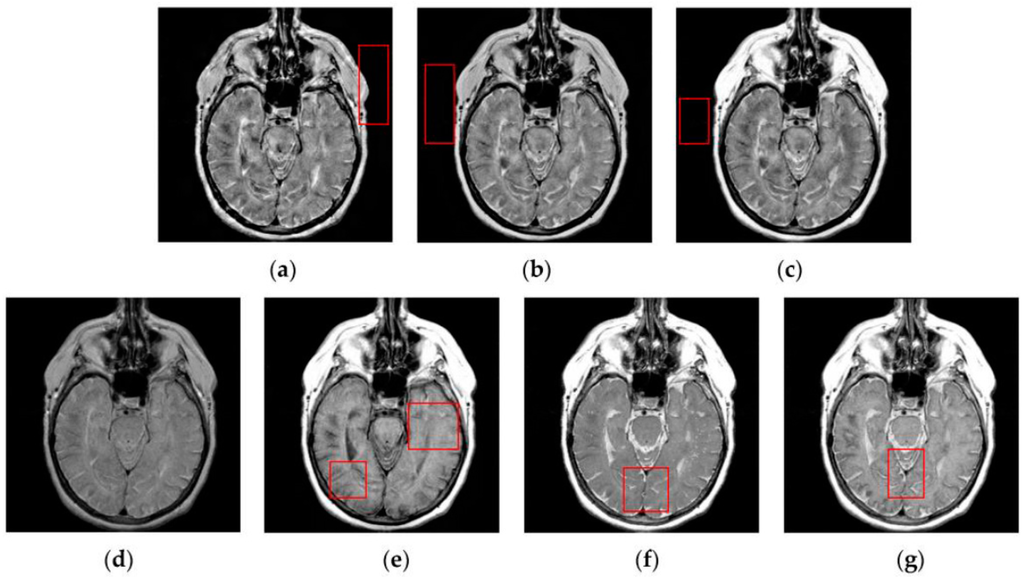

Figure 7, Figure 8, Figure 9 and Figure 10 show the fused results for the evaluated seven methods operating on such medical image pairs as Groups 1, 2, 4 and 5 shown in Figure 6, respectively. The observation from Figure 7, Figure 8 and Figure 10 shows that the DWT, NSCT and NSCT-SR methods introduce artifacts as well as false information in the fused results as indicated by the red boxes, which will greatly influence the quality of the fused images. Meanwhile, it is shown in Figure 7 and Figure 9 that the above three fusion methods cannot preserve image details well in that they produce the obvious distortion of image details marked by the red boxes in the fused results. The m-PCNN method cannot maintain the luminance of the fused results and it produces such low-contrast fused images that some important image details are difficult to identify, which is very disadvantageous for clinical diagnosis. The PCNN-NSCT method and the SCM-F method lead to loss of some important details in the source images to different extent. For example, for Groups 4 and 5, although almost all the details in the MR-T1 images can be transferred to the fused images by the PCNN-NSCT method very well, many details in the MR-T2 images have not been preserved by this method as indicated by the red boxes in the fused images shown in Figure 9e and Figure 10e. For Groups 1, 2, and 5, some image details have been seriously damaged by the SCM-F method as shown by the red boxes in Figure 7f, Figure 8f and Figure 10f.

Figure 7.

Fused results of the evaluated methods for the first group of source images shown in Figure 6a,b: (a) the discrete wavelet transform (DWT) method; (b) the non-subsampled contourlet transform (NSCT) method; (c) the NSCT-SR method; (d) the multi-channel pulse coupled neural network (m-PCNN) method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

Figure 8.

Fused results of the evaluated methods for the second group of source images shown in Figure 6c,d: (a) the DWT method; (b) the NSCT method; (c) the NSCT-SR method; (d) the m-PCNN method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

Figure 9.

Fused results of the evaluated methods for the fourth group of source images shown in Figure 6g,h: (a) the DWT method; (b) the NSCT method; (c) the NSCT-SR method; (d) the m-PCNN method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

Figure 10.

Fused results of the evaluated methods for the fifth group of source images shown in Figure 6i,j: (a) the DWT method; (b) the NSCT method; (c) the NSCT-SR method; (d) the m-PCNN method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

By comparison, the SCM-M method not only provides high contrast for the fused images, but also effectively maintains important information from the various source images in the fused results. In particular, the proposed method can preserve fine image details very well, as shown by the red boxes in Figure 9g and Figure 10g, without introducing artifacts or blurring edges. The above comparisons demonstrate the superiority of the SCM-M method over the other compared methods in that the fused images obtained by this method are clearer, more informative, and have higher contrast.

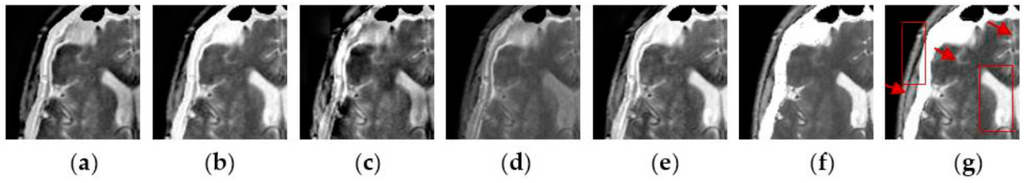

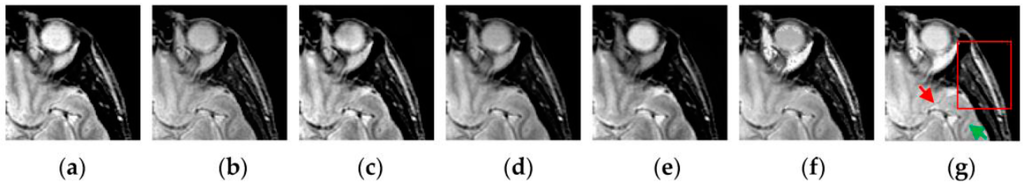

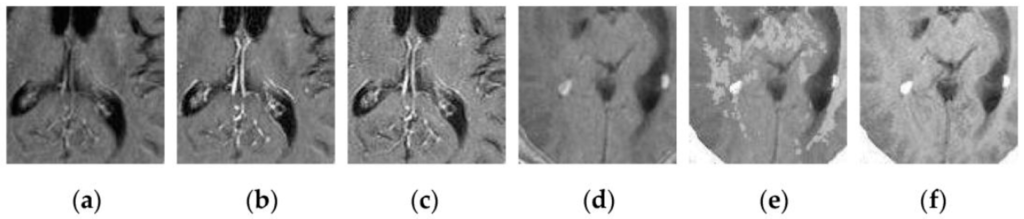

To further verify the advantage of the proposed SCM-M method in multimodal image fusion, Figure 11 and Figure 12 show the enlarged views of fused results for all evaluated methods operating on regions of interest (ROIs) denoted by the red boxes in Groups 1 and 6 in Figure 6, respectively. Figure 13 shows the enlarged views of fused results for the proposed method, the m-PCNN method and the SCM-F method operating on ROIs denoted by the red boxes in Groups 7 and 8 shown in Figure 6. In Figure 11 and Figure 12, we can see that the SCM-M method can maintain the salient information in the source images and provide better visual perception with less loss in luminance or contrast than other compared methods. To explain this point better, some edges and regions have been chosen from Figure 11g and Figure 12g. It can be seen from Figure 11 that the SCM-M method can provide better edge preservation than all other methods as pointed by the three red arrows. Meanwhile, compared with the DWT, NSCT and NSCT-SR methods, the SCM-M method can maintain the information in the MR image shown in Figure 6f better without introducing artifacts as indicated by the two red boxes. In Figure 12, we can see that the proposed method can keep the integrity of the edge marked by the red arrow best among all evaluated methods. Likewise, as pointed by the green arrow, the edge can be preserved very well by the proposed method while it has been damaged very seriously by other methods. Besides, the sharpness of the region shown by the red box can be maintained by the proposed method better than by the compared method. Furthermore, it can be seen in Figure 13 that compared with the m-PCNN and SCM-F methods, the SCM-M method can preserve fine image details and maintain image contrast better.

Figure 11.

Enlarged views of fused results of ROIs denoted by the red boxes in Group 1 in Figure 6 for the seven methods: (a) the DWT method; (b) the NSCT method; (c) the NSCT-SR method; (d) the m-PCNN method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

Figure 12.

Enlarged views of fused results of ROIs denoted by the red boxes in Group 6 in Figure 6 for the seven methods: (a) the DWT method; (b) the NSCT method; (c) the NSCT-SR method; (d) the m-PCNN method; (e) the PCNN-NSCT method; (f) the SCM-F method; and (g) the SCM-M method.

Figure 13.

Enlarged views of fused results of ROIs denoted by the red boxes in Groups 7 and 8 shown in Figure 6 for the m-PCNN, SCM-F and SCM-M methods: (a) the m-PCNN method for Group 7; (b) the SCM-F method for Group 7; (c) the SCM-M method for Group 7; (d) the m-PCNN method for Group 8; (e) the SCM-F method for Group 8; and (f) the SCM-M method for Group 8.

4.3. Quantitative Comparison of Fused Results

The performance of the compared methods is evaluated in terms of quantitative indexes including mutual information [47], the edge preservation index [48], the structural similarity (SSIM) [49] based metric [50], the fusion quality index [51], the fusion similarity metric [52] and standard deviation (STD). Higher values for these indexes indicate better fusion results.

(1)

The mutual information metric reflects the total amount of information that the fused image contains about the two source images, and it is defined as:

where

where $p_{AU}(a,u)$ and $p_{BU}(b,u)$ are the discrete joint probabilities, and $p_A(a)$, $p_B(b)$ and $p_U(u)$ are the marginal discrete probabilities of A, B and U, respectively, obtained by summing the joint probabilities over the other variable.
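As an illustration of this metric, the sketch below estimates mutual information from gray-level co-occurrence counts and sums the two source-to-fused terms. It assumes the common definition in which the fusion metric is the mutual information between A and U plus that between B and U, with base-2 logarithms; the function names and toy images are hypothetical.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """Mutual information (in bits) between two equal-length gray-level sequences."""
    n = len(x)
    joint = Counter(zip(x, y))  # joint histogram
    px = Counter(x)             # marginal histogram of x
    py = Counter(y)             # marginal histogram of y
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((px[a] / n) * (py[b] / n)))
    return mi


def fusion_mutual_information(img_a, img_b, img_u):
    """Sum of the mutual information between each source image and the fused image."""
    def flat(img):
        return [v for row in img for v in row]
    u = flat(img_u)
    return mutual_information(flat(img_a), u) + mutual_information(flat(img_b), u)


# Toy 2 x 2 images: the fused image copies A and is independent of B.
a = [[0, 0], [1, 1]]
b = [[0, 1], [0, 1]]
total = fusion_mutual_information(a, b, a)
```

In this toy case the fused image carries 1 bit about A and nothing about B, which shows why a good fused image needs to draw on both sources to score highly.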

(2)

The edge preservation index measures the similarity between the edges transferred during the fusion process, and it is defined as:

where

where and denote the edge strength and orientation preservation values at (m, n) in the image A or B, respectively; and denotes the weight for and , respectively; and and are the width and the height of , respectively.

(3)

The SSIM-based metric employs the local SSIM between the source images as a match measure, according to which different operations are applied to the evaluations of different local regions [50]. This metric is defined as:

where

where $w$ is a sliding window, $\lambda(w)$ is the local weight, $W$ is the family of all sliding windows, $|W|$ is the cardinality of $W$, $\mathrm{SSIM}(A, U \mid w)$ is a measure of the similarity between the sliding window in A and that in U, and a similar definition extends to $\mathrm{SSIM}(B, U \mid w)$ and $\mathrm{SSIM}(A, B \mid w)$. For the computation of this metric, all the involved parameters are kept the same as those in [50] except that the two constants C1 and C2 are chosen to be $2 \times 10^{-6}$ for the computation of SSIM.

(4)

The fusion quality index is computed as:

where , and denote the edge images of A, B and U, respectively. is defined as:

where represents the overall saliency of the sliding window and it is chosen as with , and denoting the variance of the window in the image A and that in the image B, and the difference between the maximum variance of all sliding windows in A and that in B, respectively.

(5)

The fusion similarity metric is computed as:

where the similarity terms between each source image and the fused image are computed based on the universal image quality index [53], and the weighting term depends on the similarity in the spatial domain between the input images and the fused image and is defined as a piecewise function presented in [52].
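For reference, the universal image quality index of [53] can be sketched for two flattened windows as below. The sketch covers only that index, not the fusion similarity metric built on it; the use of sample (n − 1) variances and the return value for a zero denominator are choices made for this illustration.

```python
def universal_quality_index(x, y):
    """Universal image quality index between two equal-length pixel sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    var_x = sum((a - mx) ** 2 for a in x) / (n - 1)
    var_y = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    denom = (var_x + var_y) * (mx ** 2 + my ** 2)
    if denom == 0:
        return 0.0  # undefined case; 0.0 returned here by convention (assumption)
    return 4.0 * cov * mx * my / denom
```

The index equals 1 only when the two windows are identical, and it decreases with loss of correlation, a shift in mean luminance, or a change in contrast.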

(6)

The STD metric measures the contrast of the fused image, and it is defined as:

where $\mu$ is the mean intensity of the fused image.
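A minimal sketch of this contrast measure follows. It assumes the population form of the standard deviation (division by the number of pixels); a normalization by the number of pixels minus one would differ only slightly for images of size 256 × 256.

```python
import math


def image_std(img):
    """Standard deviation of pixel intensities about the image mean."""
    values = [v for row in img for v in row]
    n = len(values)
    mu = sum(values) / n  # mean intensity of the image
    return math.sqrt(sum((v - mu) ** 2 for v in values) / n)
```

A constant image has zero STD, while an image whose intensities are spread widely around the mean has a high STD, which is why the metric serves as a proxy for contrast.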

Table 1, Table 2, Table 3, Table 4, Table 5 and Table 6 list the mutual information, edge preservation index, SSIM-based metric, fusion quality index, fusion similarity metric and STD of the fused results for the seven evaluated methods operating on the eight groups of multimodal medical images, respectively. In these tables, the bold value denotes the highest one for each metric. From these tables, we can see that among all evaluated methods, the DWT produces the lowest values and the NSCT method provides the smallest and for all test images. For the majority of medical image pairs, the m-PCNN method provides the lowest and values while the PCNN-NSCT method produces the lowest values. Compared with the other evaluated methods, the SCM-M method provides higher values for all six metrics in all cases except that it is outperformed by the NSCT and NSCT-SR methods in terms of for Groups 7 and 8 and by the SCM-F method in terms of for Group 6. The above comparisons demonstrate the superiority of the proposed method over the compared fusion methods in maintaining the information of the source images, preserving the local image structure and image details, and ensuring the contrast of the fused image.

Table 1. Mutual information for the seven methods operating on the eight groups of medical images.

Table 2. Edge preservation index for the seven methods operating on the eight groups of medical images.

Table 3. SSIM-based metric for the seven methods operating on the eight groups of medical images.

Table 4. Fusion quality index for the seven methods operating on the eight groups of medical images.

Table 5. Fusion similarity metric for the seven methods operating on the eight groups of medical images.

Table 6. STD for the seven methods operating on the eight groups of medical images.

To further demonstrate the superiority of the proposed method over the other compared methods, paired t-tests have been performed based on the data in Table 1, Table 2, Table 3, Table 4, Table 5 and Table 6. The test results are listed in Table 7. The p values in Table 7 indicate that there is a very significant difference between the proposed method and the other evaluated methods in terms of mutual information, the structural similarity based metric, the fusion similarity metric and standard deviation. As regards the edge preservation index, there is no significant difference between the proposed method and the NSCT and NSCT-SR methods, but there is still a significant difference between the proposed method and the DWT, PCNN-NSCT and SCM-F methods. As for the fusion quality index, the difference between the SCM-M method and the NSCT-SR and SCM-F methods is significant, while the difference between the proposed method and the remaining four methods is very significant.

Table 7.

Paired t-test results for the compared methods operating on the eight groups of medical images.
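The paired t-test used for such a comparison can be sketched as follows. This is a minimal illustration rather than the authors' code: the metric values are hypothetical placeholders and not entries from Tables 1–6, and the reading of "significant" as p < 0.05 and "very significant" as p < 0.01 is an assumed convention that the text does not state. With eight image groups the test has seven degrees of freedom, for which the two-sided critical values of Student's t are 2.365 (p = 0.05) and 3.499 (p = 0.01).

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for a paired t-test on two samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two samples of equal length, at least 2")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("identical paired differences: t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical metric values for eight image groups (NOT from the paper).
proposed = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
baseline = [1.0, 2.5, 3.0, 4.5, 5.0, 6.5, 7.0, 8.5]

t, df = paired_t_statistic(proposed, baseline)

# Two-sided critical values of Student's t distribution for df = 7.
T_CRIT_05 = 2.365
T_CRIT_01 = 3.499

if abs(t) > T_CRIT_01:
    verdict = "very significant (assumed threshold p < 0.01)"
elif abs(t) > T_CRIT_05:
    verdict = "significant (assumed threshold p < 0.05)"
else:
    verdict = "not significant"
```

The statistic is computed on the per-group differences, so a method that wins by a small but consistent margin on every image group can still yield a large t value.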

Here we briefly analyze why the proposed method generally outperforms the m-PCNN and SCM-F methods, which resemble our method in that they also use third-generation neural networks. In the m-PCNN method, the fused image is produced from the internal activity, which is related only to the pulse output of the individual neuron. In other words, only the individual neurons corresponding to the individual pixels in the two source images are used for image fusion, while the characteristics of the neighboring neurons, which help to represent the local image structure, are not considered. The same problem exists in the SCM-F method, in which only the firing times of the individual neurons are used to generate the fused image through a simple choosing and averaging strategy. By comparison, in the proposed SCM-M method, the characteristics of the neurons in a local neighborhood are considered both in constructing the fusion rule and in determining the fusion weight. As regards the fusion rule, the firing times of all the neurons in a neighborhood are a more effective metric than those of an individual neuron. For the fusion weight, the WLD operating on the firing mapping image and the entropy information of the pulse outputs of the SCM are computed in a local neighborhood to produce the weight. Combining the WLD with the entropy information determines the fusion weight effectively because together they describe the local image structure and the gray-level information of the source images very well.
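The contrast between a single-neuron rule and a neighborhood-based rule can be illustrated with a small sketch. It is not the SCM-M fusion rule itself: the 3×3 window, the helper names, the `eps` guard against division by zero and the toy firing-time maps are all assumptions made for illustration. The differential excitation follows the standard WLD definition, the arctangent of the summed neighbor-minus-center differences divided by the center value.

```python
import math


def neighbors3x3(img, r, c):
    """Values of the up-to-8 neighbors of (r, c) that lie inside the image."""
    h, w = len(img), len(img[0])
    return [img[i][j]
            for i in range(r - 1, r + 2)
            for j in range(c - 1, c + 2)
            if (i, j) != (r, c) and 0 <= i < h and 0 <= j < w]


def local_firing_sum(firing, r, c):
    """Total firing times of the neuron at (r, c) and its 3x3 neighbors."""
    return firing[r][c] + sum(neighbors3x3(firing, r, c))


def wld_excitation(firing, r, c, eps=1e-6):
    """WLD differential excitation at (r, c); eps is an assumed zero guard."""
    center = firing[r][c]
    diff = sum(v - center for v in neighbors3x3(firing, r, c))
    return math.atan(diff / (center + eps))


# Hypothetical firing-time maps for two source images (not from the paper).
firing_a = [[1, 1, 1],
            [1, 5, 1],
            [1, 1, 1]]
firing_b = [[2, 2, 2],
            [2, 2, 2],
            [2, 2, 2]]

# A single-neuron comparison at the center pixel favors source A ...
single_prefers_a = firing_a[1][1] > firing_b[1][1]
# ... whereas the neighborhood totals (13 versus 18) favor source B.
local_prefers_a = local_firing_sum(firing_a, 1, 1) > local_firing_sum(firing_b, 1, 1)

excitation_a = wld_excitation(firing_a, 1, 1)  # strongly negative: isolated peak
excitation_b = wld_excitation(firing_b, 1, 1)  # zero: flat neighborhood
```

In this toy case the isolated high firing count in source A wins a pixel-wise comparison but loses once its neighbors are taken into account, which is the kind of local structure a neighborhood-based rule is meant to capture.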

5. Conclusions

A novel spiking cortical model based medical image fusion method is presented in this paper. The proposed method utilizes the pulse outputs of the SCM to realize pixel-level image fusion. By combining the gray-level image information represented by the entropy of the pulse outputs with the local image structure represented by the Weber local descriptor operating on the firing mapping image, the proposed method realizes an effective weighted fusion of the source images. Extensive experiments on various CT and MR images demonstrate that, in terms of human vision, the proposed method produces clearer, more informative and higher-contrast fused images than numerous existing methods. Meanwhile, the objective comparison indicates that the proposed method outperforms the compared methods in terms of mutual information, the edge preservation index, structural similarity and standard deviation. Future work will focus on extending our method to the fusion of multi-spectral medical images such as PET and SPECT images.

Acknowledgments

This work is partly supported by the Fundamental Research Funds for the Central Universities (No. 2015TS094) and Science and Technology Program of Wuhan, China (No. 2015060101010027).

Author Contributions

Xuming Zhang conceived the fusion method and designed the experiments; Jinxia Ren wrote the code of the fusion method; Zhiwen Huang analyzed all the data; and Fei Zhu performed the experiments.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Kaplan, I.; Oldenburg, N.E.; Meskell, P.; Blake, M.; Church, P.; Holupka, E.J. Real time MRI-ultrasound image guided stereotactic prostate biopsy. Magn. Reson. Imaging 2002, 20, 295–299.

- Buck, A.K.; Herrmann, K.; Schreyögg, J. PET/CT for staging lung cancer: Costly or cost-saving? Eur. J. Nucl. Med. Mol. Imaging 2011, 38, 799–801.

- Barra, V.; Boire, J.Y. A general framework for the fusion of anatomical and functional medical images. NeuroImage 2001, 13, 410–424.

- Van der Ploeg, I.M.C.; Valdés Olmos, R.A.; Nieweg, O.E.; Rutgers, E.J.T.; Kroon, B.B.R.; Hoefnagel, C.A. The additional value of SPECT/CT in lymphatic mapping in breast cancer and melanoma. J. Nucl. Med. 2007, 48, 1756–1760.

- Redondo, R.; Sroubek, F.; Fischer, S.; Cristóbal, G. Multifocus image fusion using the log-Gabor transform and a multisize windows technique. Inf. Fusion 2009, 10, 163–171.

- Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Daneshvar, S.; Ghassemian, H. MRI and PET image fusion by combining IHS and retina-inspired models. Inf. Fusion 2010, 11, 114–123.

- Wang, H.Q.; Xing, H. Multi-mode medical image fusion algorithm based on principal component analysis. In Proceedings of the International Symposium on Computer Network and Multimedia Technology, Wuhan, China, 18–20 January 2009; pp. 1–4.

- Toet, A.; van Ruyven, J.J.; Valeton, J.M. Merging thermal and visual images by a contrast pyramid. Opt. Eng. 1989, 28, 789–792.

- Petrovic, V.S.; Xydeas, C.S. Gradient-based multiresolution image fusion. IEEE Trans. Image Process. 2004, 13, 228–237.

- Ehsan, S.; Abdullah, S.M.U.; Akhtar, M.J.; Mandic, D.P.; McDonald-Maier, K.D. Multi-scale pixel-based image fusion using multivariate empirical mode decomposition. Sensors 2015, 15, 10923–10947.

- Qu, G.; Zhang, D.; Yan, P. Medical image fusion by wavelet transform modulus maxima. Opt. Express 2001, 9, 184–190.

- Yang, Y.; Park, D.S.; Huang, S.; Rao, N. Medical image fusion via an effective wavelet-based approach. EURASIP J. Adv. Signal Process. 2010, 2010, 579341.

- Singh, R.; Khare, A. Fusion of multimodal medical images using Daubechies complex wavelet transform—A multiresolution approach. Inf. Fusion 2014, 19, 49–60.

- Ali, F.E.; El-Dokany, I.M.; Saad, A.A.; Abd El-Samie, F.E. Curvelet fusion of MR and CT images. Prog. Electromagn. Res. C 2008, 3, 215–224.

- Das, S.; Chowdhury, M.; Kundu, M.K. Medical image fusion based on ripplet transform type-I. Prog. Electromagn. Res. B 2011, 30, 355–370.

- Yang, L.; Guo, B.L.; Ni, W. Multimodality medical image fusion based on multiscale geometric analysis of contourlet transform. Neurocomputing 2008, 72, 203–211.

- Li, T.; Wang, Y. Biological image fusion using a NSCT based variable-weight method. Inf. Fusion 2011, 12, 85–92.

- Liu, C.; Chen, S.; Fu, Q. Multi-modality image fusion using the nonsubsampled contourlet transform. IEICE Trans. Inf. Syst. 2013, 96, 2215–2223.

- Bhatnagar, G.; Wu, Q.M.J.; Liu, Z. Directive contrast based multimodal medical image fusion in NSCT domain. IEEE Trans. Multimed. 2013, 15, 1014–1024.

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Wang, J.; Lai, S.; Li, M. Improved image fusion method based on NSCT and accelerated NMF. Sensors 2012, 12, 5872–5887.

- Wang, L.; Li, B.; Tian, L. Multi-modal medical image fusion using the inter-scale and intra-scale dependencies between image shift-invariant shearlet coefficients. Inf. Fusion 2014, 19, 20–28.

- Wang, L.; Li, B.; Tian, L. EGGDD: An explicit dependency model for multi-modal medical image fusion in shift-invariant shearlet transform domain. Inf. Fusion 2014, 19, 29–37.

- Jiang, H.; Tian, Y. Fuzzy image fusion based on modified self-generating neural network. Expert Syst. Appl. 2011, 38, 8515–8523.

- Eckhorn, R.; Reitboeck, H.J.; Arndt, M.; Dicke, P.W. Feature linking via synchronization among distributed assemblies: Simulation of results from cat cortex. Neural Comput. 1990, 2, 293–307.

- Eckhorn, R.; Frien, A.; Bauer, R.; Woelbern, T.; Kehr, H. High frequency (60–90 Hz) oscillations in primary visual cortex of awake monkey. Neuroreport 1993, 4, 243–246.

- Li, M.; Cai, W.; Tan, Z. A region-based multi-sensor image fusion scheme using pulse coupled neural network. Pattern Recognit. Lett. 2006, 27, 1948–1956.

- Wang, Z.; Ma, Y. Medical image fusion using m-PCNN. Inf. Fusion 2008, 9, 176–185.

- Zhao, Y.; Zhao, Q.; Hao, A. Multimodal medical image fusion using improved multi-channel PCNN. Bio-Med. Mater. Eng. 2014, 24, 221–228.

- Chai, Y.; Li, H.F.; Qu, J.F. Image fusion scheme using a novel dual-channel PCNN in lifting stationary wavelet domain. Opt. Commun. 2010, 283, 3591–3602.

- Qu, X.; Yan, J.; Xiao, H.; Zhu, Z. Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Autom. Sin. 2008, 34, 1508–1514.

- Kong, W.W.; Lei, Y.J.; Lei, Y.; Lu, S. Image fusion technique based on non-subsampled contourlet transform and adaptive unit-fast-linking pulse-coupled neural network. IET Image Process. 2011, 5, 113–121.

- El-taweel, G.S.; Helmy, A.K. Image fusion scheme based on modified dual pulse coupled neural network. IET Image Process. 2013, 7, 407–414.

- Das, S.; Kundu, M.K. NSCT-based multimodal medical image fusion using pulse-coupled neural network and modified spatial frequency. Med. Biol. Eng. Comput. 2012, 50, 1105–1114.

- Das, S.; Kundu, M.K. A neuro-fuzzy approach for medical image fusion. IEEE Trans. Biomed. Eng. 2013, 60, 3347–3353.

- Ganasala, P.; Kumar, V. Feature-motivated simplified adaptive PCNN-based medical image fusion algorithm in NSST domain. J. Digit. Imaging 2016, 29, 73–85.

- Geng, P.; Wang, Z.; Zhang, Z.; Xiao, Z. Image fusion by pulse couple neural network with shearlet. Opt. Eng. 2012, 51, 067005.

- Feng, K.; Zhang, X.; Li, X. A novel method of medical image fusion based on bidimensional empirical mode decomposition. J. Converg. Inf. Technol. 2011, 6, 84–89.

- Zhan, K.; Zhang, H.; Ma, Y. New spiking cortical model for invariant texture retrieval and image processing. IEEE Trans. Neural Netw. 2009, 20, 1980–1986.

- Wang, R.; Wu, Y.; Ding, M.; Zhang, X. Medical image fusion based on spiking cortical model. In Proceedings of the SPIE 8676, Medical Imaging 2013: Digital Pathology, Lake Buena Vista, FL, USA, 9–14 February 2013.

- Chen, J.; Shan, S.; He, C.; Zhao, G.; Pietikäinen, M.; Chen, X.; Gao, W. WLD: A robust local image descriptor. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1705–1720.

- The Whole Brain Atlas. Available online: http://www.med.harvard.edu/aanlib/home.html (accessed on 1 July 2016).

- Image Fusion Toolbox. Available online: http://www.metapix.de/toolbox.html (accessed on 1 July 2016).

- NSCT-SF-PCNN-ImageFusion-Toolbox. Available online: http://dspace.xmu.edu.cn/dspace/handle/2288/8332 (accessed on 1 July 2016).

- MST_SR_Fusion_Toolbox. Available online: http://home.ustc.edu.cn/~liuyu1/publications/MST_SR_fusion_toolbox (accessed on 1 July 2016).

- Qu, G.; Zhang, D.; Yan, P. Information measurement for performance of image fusion. Electron. Lett. 2002, 38, 313–315.

- Xydeas, C.S.; Petrovic, V. Objective image fusion performance measure. Electron. Lett. 2000, 36, 308–309.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yang, C.; Zhang, J.; Wang, X.; Liu, X. A novel similarity based quality metric for image fusion. Inf. Fusion 2008, 9, 156–160.

- Piella, G.; Heijmans, H. A new quality metric for image fusion. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Barcelona, Spain, 14–18 September 2003; pp. 173–176.

- Cvejic, N.; Loza, A.; Bull, D.; Canagarajah, N. A similarity metric for assessment of image fusion algorithms. Int. J. Signal Process. 2005, 2, 178–182.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).