1. Introduction

Human Activity Recognition (HAR) systems are already integrated into many of our daily routine activities [1]. Applications, such as Google Fit [2] or Apple Health [3], are able to detect some activities, such as walking and running, that are linked to health and fitness parameters. Many other HAR-related applications are also available either using the sensors embedded in smart phones or using wearable devices. Applications such as Lumo [4] are developed to provide a gentle vibration when a person slouches to remind them to sit or stand straight and correct their posture [5]. In fact, HAR algorithms based on applying machine learning techniques to data gathered from wearable devices [6] have established themselves as a convenient alternative to vision-based activity detection algorithms [7,8]. Using wearable sensors provides a non-intrusive, always available companion compared to vision-based systems [6].

Health monitoring systems based on recognising human activities from wearable devices are applied to remote health monitoring for long-term recording, management and clinical access to patient’s activity information [9,10]. Knowing the activities that a patient with long-term conditions, such as Cardio Vascular Disease (CVD), Chronic Obstructive Pulmonary Disease (COPD), Parkinson’s Disease (PD) or diabetes, is performing could help in providing assistance [9]. In fact, the activity performed is part of the environment of a particular user, which provides valuable information for the implementation of personal recommender systems [11]. Home monitoring, assisted living and sports and leisure are other applications that are benefiting from wearable sensors to detect human activities [12].

Although detecting activities provides relevant contextual information for many applications, sometimes, it is desirable to go further in order to detect specific movements and gestures (either those made inside activities [13] or those that occur in sporadic moments [14]). Spotting sporadically-occurring movements from continuous data streams is a challenging task, especially when pre-detection techniques are to be deployed on the sensor systems themselves. Wearable sensor devices are relatively limited in their computational performance. In addition, battery consumption and memory usage are further limitations [6]. Having to recharge the batteries of wearable sensors too often is a major drawback for their adoption by many users. Communications over wireless interfaces should therefore be minimised as much as possible. This requires performing pre-detection computations on the wearable sensor devices. However, extensive computations on limited devices also consume energy, and therefore, simple, but reliable algorithms should be conceived for pre-detecting segments in the data stream from each sensor in order to limit communications to those particular segments [14]. The overall performance of an HAR system in terms of accuracy, precision and recall could later be improved by combining the information from several sensors [11].

This paper proposes a novel approach to in-sensor pre-detection of movements using an algorithm that concentrates on the stochastic properties of local maxima and minima from the sensed data stream in order to detect specific movements. The hypothesis behind the design of the algorithm is that some atomic movements can be detected with sufficient accuracy. This can be achieved by considering the stochastic properties of the values and time variations of local maximum and minimum points from the sensed data (points that are easy to detect from raw sensed data by using simple mathematical operations and only requiring a limited amount of memory, adapted to low energy consumption and computational requirements when implemented on sensor devices). The algorithm could be trained for a particular individual or made partially independent of users by considering data from different users when training the algorithm. The performance of the pre-detection of movements could be improved if several movements are performed together within a gesture. Combining movements in order to detect gestures is also a challenging task in general due to the variability of execution (and their related time series) both for intra- and inter-person data. Some approaches are based on requiring non-overlapping movements with pauses between consecutive movements [15]. Moreover, using data from wearable sensors introduces additional challenges, such as noise and overlapping movements from the sensor itself if it is not tightly attached to the body.

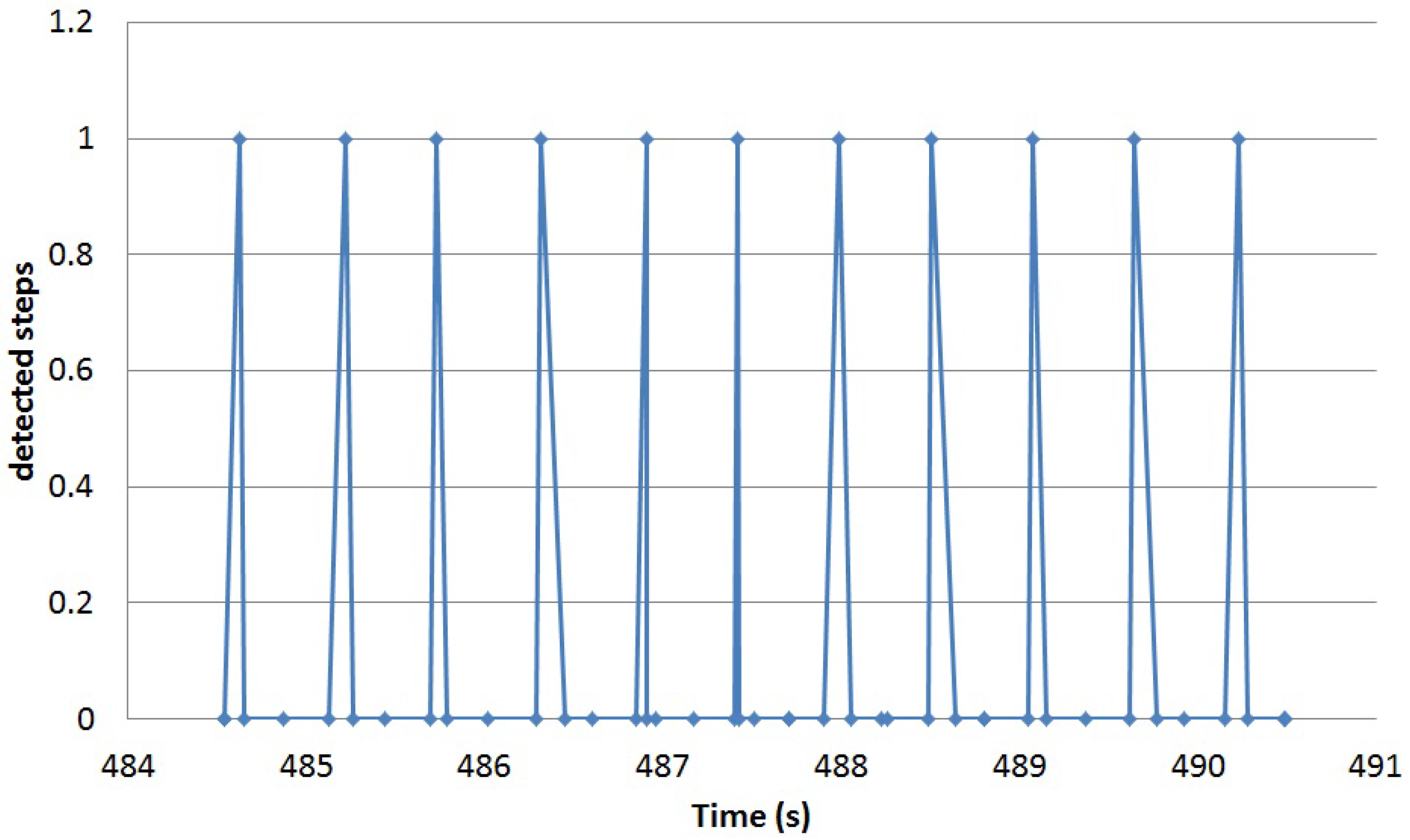

This paper will also present the results for two particular scenarios in which the proposed algorithm is implemented in order to (a) detect single steps and (b) identify and classify different types of falls using a single tri-axial accelerometer. The single step detection algorithm is trained to detect the behaviour when each foot initially comes in contact with the ground. By combining consecutive movements of “foot-ground contact”, a walking gesture can be detected. Moreover, sporadic failures in the detection of a particular step when walking could be used to fine tune the detection parameters of the algorithm by incorporating the missing steps into a new training phase of the parameters for a particular person. The fall detection and classification algorithms are able to detect and categorise the type of fall out of 30 falls in a public database.

The rest of the paper is organised as follows.

Section 2 presents an overview of the previous work and justifies the research in this paper.

Section 3 details the proposed algorithm.

Section 4 particularises the algorithm for the particular case of step detection.

Section 5 presents the adaptation of the algorithm for detecting and classifying falls.

Section 6 presents the details of the experiment carried out to validate the algorithm.

Section 7 captures the conclusions and the future work.

2. Related Work

The first papers on HAR were published in the late 1990s [16]. There are two main approaches to implement HAR systems, using external and wearable sensors [6]. In the former, the devices are fixed at predefined locations of observation in order to monitor the user (using cameras or microphones, for example). In the latter, the devices are attached to (worn by) the user. Each approach has its advantages and disadvantages. However, some privacy, as well as availability issues are promoting a shift in research towards wearable sensors.

The availability of sensors, such as Microsoft Kinect [17], PrimeSense Carmine and Leap Motion [18], has helped with the advances in capturing human motion. The non-intrusive nature of these sensors, their low cost and wide availability for developers have inspired numerous healthcare-related research projects in areas, such as medical disorder diagnosis [19], assisted living [20] and rehabilitation [21]. However, most of these tools were originally developed for computer games.

In this paper, the concentration will be on wearable sensors to solve human movement detection problems. To detect activities with wearable sensors, two major approaches are adopted by researchers. The reviewed literature is presented below.

2.1. Sliding Window-Based Approaches

The data stream is divided into time overlapping windows to detect a particular activity among a set of pre-trained activities. The size of the window is defined so that enough information about the activity is present and only one activity is performed in that window (stationary). The window length is related to the achieved performance [22]. In fact, most of the detection errors occur in windows that contain transitions from one activity into a different activity. The data stream is pre-processed into some pre-selected features. Two major sets of features could be extracted from the time series data: statistical and structural [23]. In order to minimise the impact of the set of selected features, sparse representations could be used, with the training samples serving directly as the basis to construct an over-complete dictionary [24]. The application of different classification algorithms to the detected features, such as decision trees, Bayesian methods, Hidden Markov Models (HMM), nearest neighbours, Support Vector Machines (SVM) or ensembles of classifiers, could determine the activity being performed with accuracies greater than 95% if simple activities are being classified [6,25,26]. Taking into account the uncertainty and flexibility in human activities, fuzzy rule-based systems [27] or other forms of fuzzy classifiers [28] are used and show their effectiveness in classifying activities.
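The windowing step described above can be sketched as follows. This is a minimal illustration, not the method of any of the cited works: the function names, the 50% overlap and the four statistical features are assumptions chosen for the example.

```python
import numpy as np

def sliding_windows(signal, window_size, overlap):
    """Yield (start_index, window) pairs over a 1-D signal.

    overlap is the fraction of each window shared with the next one.
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    step = max(1, int(round(window_size * (1 - overlap))))
    for start in range(0, len(signal) - window_size + 1, step):
        yield start, signal[start:start + window_size]

def statistical_features(window):
    """Simple statistical features (an illustrative choice, not a fixed set)."""
    return {
        "mean": float(np.mean(window)),
        "std": float(np.std(window)),
        "min": float(np.min(window)),
        "max": float(np.max(window)),
    }

# Toy signal: ten samples, windows of four samples with 50% overlap.
samples = np.arange(10, dtype=float)
windows = list(sliding_windows(samples, window_size=4, overlap=0.5))
features = [statistical_features(w) for _, w in windows]
```

Each feature dictionary would then be passed to whichever classifier is selected; the window length and overlap remain tuning parameters that depend on the activities of interest.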

The performance of existing HAR approaches based on inertial sensors for detecting activities and transitions between different activities and postures is affected by sensor placement and by movements of the sensor relative to the body. To overcome these limitations, one possibility is to use additional information or sensor drift compensation techniques. The authors in [27] propose to use the additional information from a barometric pressure sensor to improve the performance of a tri-axial accelerometer. The research presented in [29] proposes the use of a combination of sensors to compensate for the weaknesses of either sensor in recognising various activities. In fact, to be able to classify more complex activities, such as taking a medicine or cooking, it could be essential to combine the information gathered from several sensors [30]. In [31], the authors propose a mechanism to compensate for the accelerometer bias by computing the average of each acceleration component over the sliding window. A similar approach of using a low-pass averaging filter to detect sensor movements relative to the body is used in this paper. The windowed average of each acceleration component is used to estimate the orientation of the sensor so that, using rotation operations, vertical and longitudinal acceleration components could be extracted.

2.2. Decomposition of Activities into Basic Primitives

To decompose the activities into a sequence of elementary building blocks, two major approaches are adopted. A top-down approach first tries to identify the activity that a user is performing and then divides the activity into smaller segments containing specific movements inside the activity. A bottom-up approach focuses on detecting movements and tries to compose them into gestures and activities. The authors in [32] propose a motion primitive-based model that captures the invariance aspects of the local features and provides insights for better understanding of human motion. The continuous activity signal is transformed into a string of symbols where each symbol represents a primitive. String-matching-based approaches can then be used to detect activities out of detected primitives, or other methods, such as “a bag of features”, could be used to improve the detection performance [32]. The authors in [13] also approach the problem of activity recognition by modelling it as a combination of movements. They use a two-step approach in which they first use a sliding window to detect activities and later try to classify movements inside activities. The authors in [14] focus on detecting sporadic movements by directly processing the time series of the data sensed. They use a modified version of the Piecewise Linear Representation (PLR) algorithm in order to detect segments in the data stream that may contain movements of interest. They use different sensors that are combined in order to improve the detection accuracy. The approach is promising, but the algorithm deployed on sensor devices could be better optimised in order to reduce the complexity of the PLR algorithm, as well as to take into account the stochastic characteristics of the detected points of interest (linear pieces). The authors in [33] also decompose activities into atomic pieces and propose the use of shapelets as the basis to classify atomic activities. Therefore, human movements are recognised by calculating the distance between the sensed time series and a dictionary of pre-recorded segments, which try to capture the particularities of each movement. They propose the use of the Euclidean distance, although they recognise that other alternatives would also be applicable. Once atomic activities are recognised, they use them to assess sequential, concurrent and complex activities. Although the shapelet approach tries to simplify the information in the time series recorded from wearable sensors into significant fragments, the variations in intra- and inter-person execution could be large, and a measure based on the distance to pre-recorded segments will not take into account the variety of cases unless a complex and high volume training process is performed.
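The distance-based matching discussed above can be illustrated with a short sketch. It assumes a plain Euclidean distance between a pre-recorded segment and every same-length sub-sequence of the sensed series; the function name and the toy data are hypothetical and are not taken from [33].

```python
import numpy as np

def min_shapelet_distance(series, shapelet):
    """Return (distance, start) of the best Euclidean match of shapelet in series."""
    series = np.asarray(series, dtype=float)
    shapelet = np.asarray(shapelet, dtype=float)
    m = len(shapelet)
    if len(series) < m:
        raise ValueError("series must be at least as long as the shapelet")
    best_dist, best_start = np.inf, -1
    for start in range(len(series) - m + 1):
        dist = float(np.linalg.norm(series[start:start + m] - shapelet))
        if dist < best_dist:
            best_dist, best_start = dist, start
    return best_dist, best_start

# Toy example: the shapelet appears unchanged at index 2.
series = [0.0, 0.1, 1.0, 2.0, 1.0, 0.0]
shapelet = [1.0, 2.0, 1.0]
distance, start = min_shapelet_distance(series, shapelet)
```

Because the comparison is sample by sample, the same movement executed more slowly or more quickly produces a larger distance, which is the sensitivity to execution variability noted above.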

Taking into account the low energy constraints for on-sensor implementation, the approach presented in this paper is to generate a simple movement detection algorithm. This is based on a stochastic model characterising the local maxima and minima relationships from the time series measured from a single tri-axial accelerometer. The stochastic model will capture the information from the execution of a particular movement by different people and at different execution speeds. Movement elasticity models based on previous proposals, such as Dynamic Time Warping (DTW) [34], do not properly consider non-linearities and do not provide the required flexibility for capturing rich human movements. The time elasticity model proposed in this paper will be based on the characterisation of the stochastic features of inter-time and amplitude variations among local maxima and minima when performing the same movement by different people at different speeds. The average gravity estimation and compensation method presented in [31] will be used to minimise the impact of sensor placement and of sensor movements relative to the body over time. Estimating the gravity vector allows us to apply rotation operations to isolate vertical and longitudinal movement components.

3. Atomic Movement Detection Algorithm

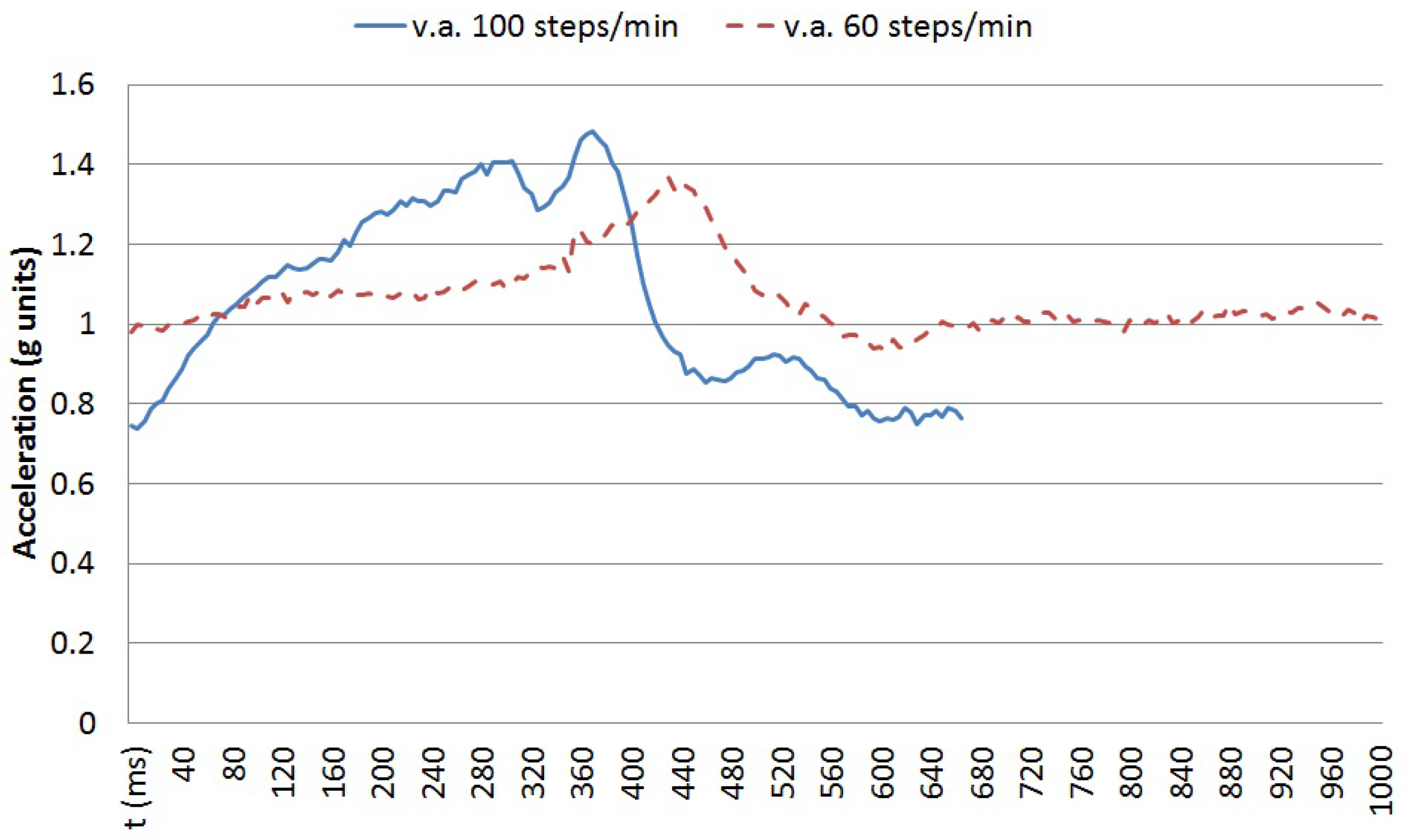

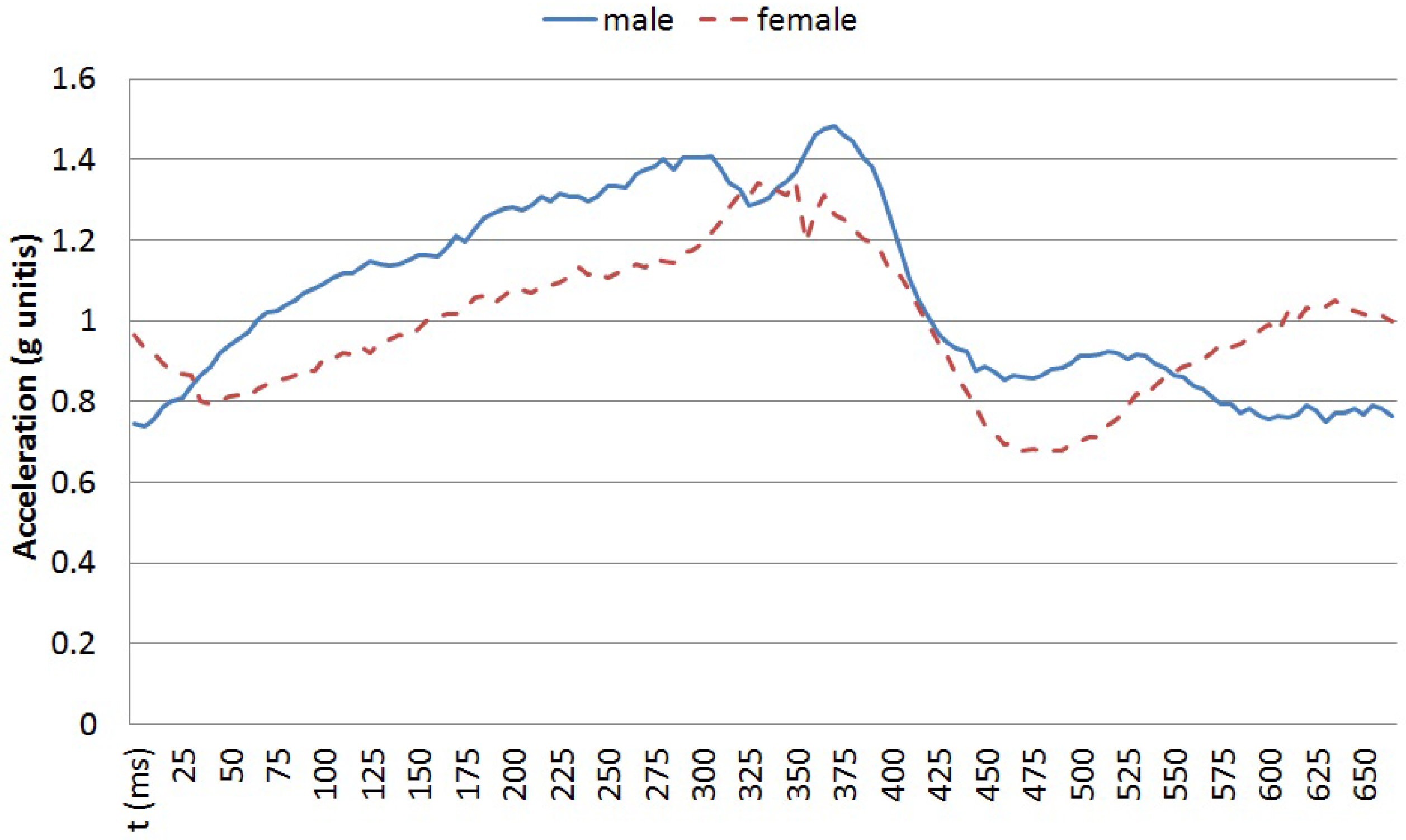

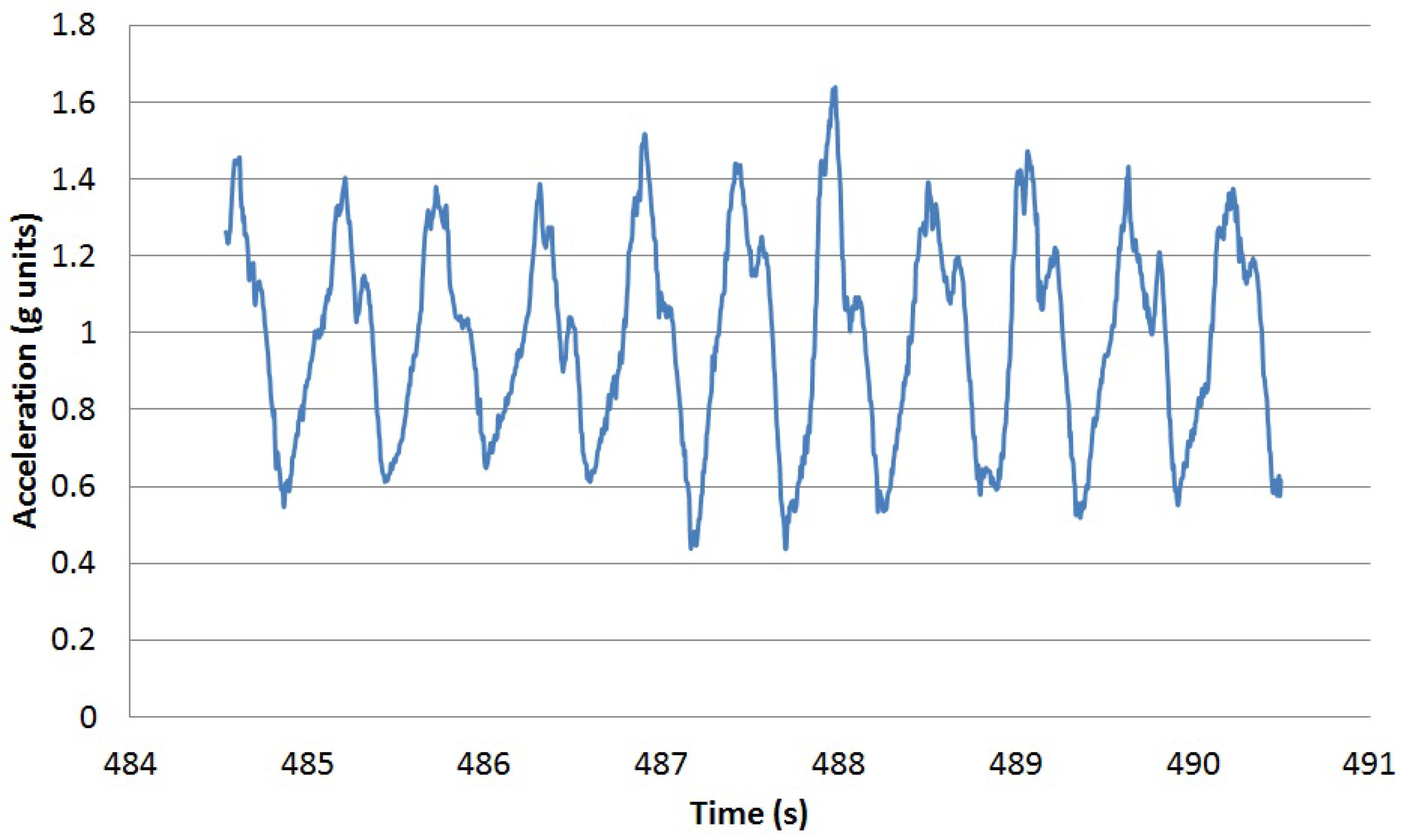

Atomic movements are short gestures or primitives executed when performing more complex activities or in sporadic ways. Taking a step or moving a spoon to one’s mouth are two simple examples. Each atomic movement can be performed at different speeds and following different trajectories by different people or even by the same person at different moments. The traces of the execution of each atomic movement can be captured by wearable sensors, such as tri-axial accelerometers. These sensors capture data as time series of scalar values. The vertical acceleration for a single step is shown in Figure 1. Executing the same movement at different speeds does not provide a time-scaled version of the sensed data, but a non-linear variation over time. Figure 2 captures the vertical acceleration for a single step at two speeds for the same person: 60 steps per minute and 100 steps per minute. In order to detect the execution of a particular movement, an algorithm based on the stochastic characterisation of the movement performed at different speeds by different users is proposed. Using a tri-axial accelerometer, the temporal elasticity of the movement is described by the changes both in amplitudes and time shifts among the different axes.

Let us call

the speed of executing a particular instance

i of a particular movement. Let us call

the duration of the execution of that movement and call

. For each segment of the sensed data of

duration, from which it is desired to estimate the speed of execution, the posterior distribution for the speed of execution of the atomic movement over the sensed data is given by Equation (1).
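As a hedged sketch, a posterior of this kind would follow Bayes' rule, combining the likelihood of the extracted features with a prior over the speed of execution. The symbol $v$ for the speed of execution is an assumption used here for illustration only:

```latex
% v: speed of execution (assumed symbol); D: features extracted from the segment
P(v \mid D) = \frac{P(D \mid v)\, P(v)}{P(D)}
```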

In the above equation,

D represents the data extracted features in a particular segment. The probability of the data based on a particular speed of execution

could be estimated by extracting the stochastic properties from sample data by different users. In order to use Equation (

1), data could be described using different features as described in

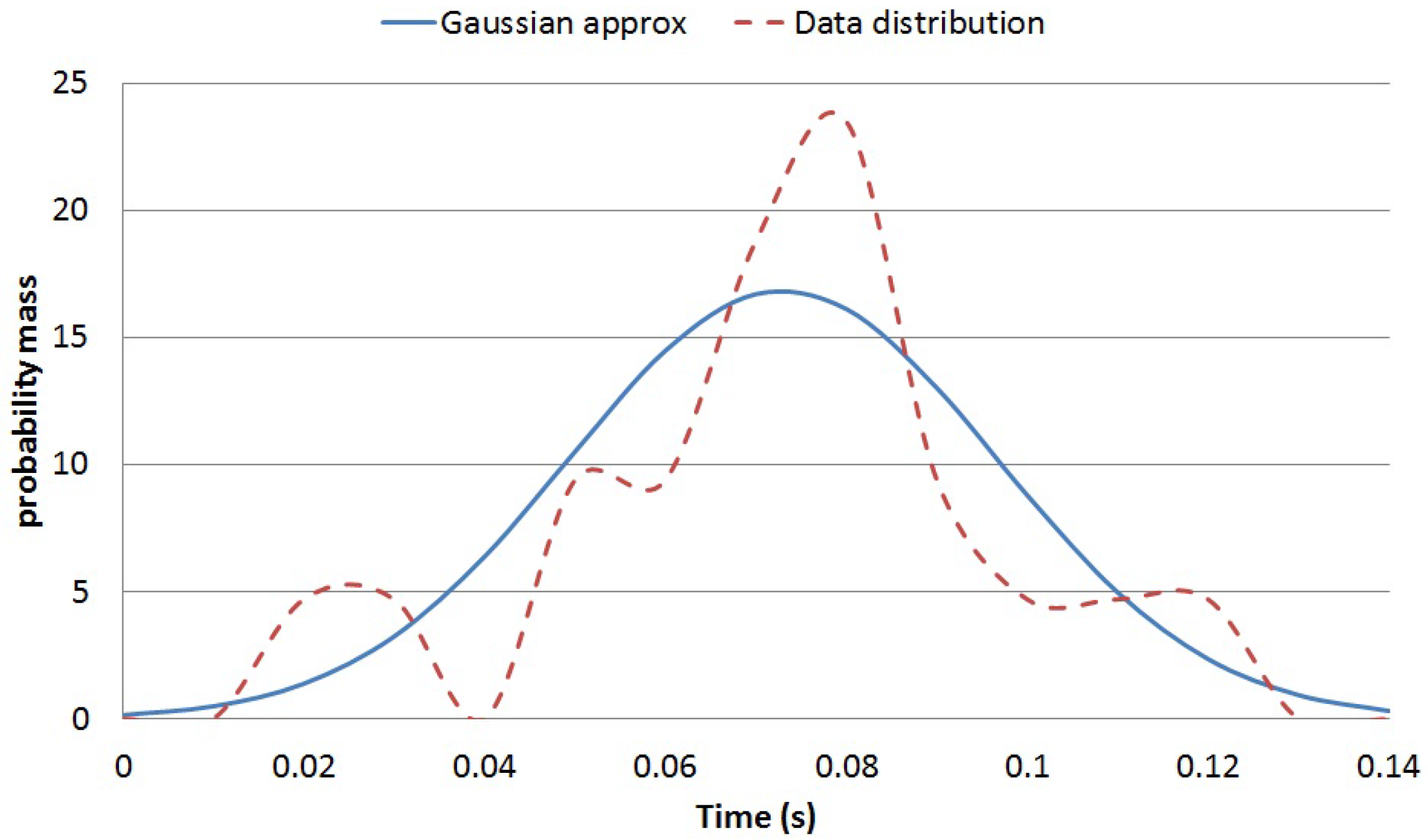

Section 2. Our approach is to characterise the time series using features that can be detected with low energy pre-processing algorithms. In particular, it is proposed to characterise the sensed time series by the times and amplitudes of the local maximum and minimum in a

time window. When using tri-axial accelerometers, different sub-features can be computed in order to capture the particularities of each movement, such as the time difference between the maximum value of the vertical acceleration and the horizontal acceleration or the relative variation in amplitude between vertical and horizontal accelerations. For each feature, a Gaussian mixture model will be used in order to simplify the stochastic representation and the required training phase. The probability mass of a particular feature extracted from the data samples

executed at speed

could be approximated by Equation (

2):

where N is the normal distribution with mean λ and variance σ. In order to simplify the model, a combined multivariate Gaussian probability for all features could be approximated by assuming independence as captured in Equation (3).
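One possible way to write the per-feature approximation and the independence assumption is sketched below. The symbols $f_j$ (the $j$-th extracted feature) and $v$ (the speed of execution) are assumptions introduced for illustration; $\lambda$ and $\sigma$ are the mean and variance named in the text, indexed here by feature and speed:

```latex
\begin{align}
  % per-feature Gaussian approximation at speed v
  P(f_j \mid v) &\approx \mathcal{N}\!\left(f_j;\ \lambda_j(v),\ \sigma_j(v)\right) \\
  % joint likelihood under the independence assumption
  P(D \mid v) &\approx \prod_{j} \mathcal{N}\!\left(f_j;\ \lambda_j(v),\ \sigma_j(v)\right)
\end{align}
```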

The prior distribution over the speed of execution could capture the prior knowledge of the particularities when performing the particular movement by a particular individual. In the case of step detection, for example, the normal walking speed for a particular user could be used to generate a user-fitted prior distribution.
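The local maxima and minima used as features in this section can be found with a single pass that compares each sample with its two neighbours. The following is a minimal sketch under that assumption; the function names are hypothetical, plateaus are ignored and no noise filtering is applied.

```python
def local_extrema(samples, timestamps):
    """Return (kind, time, amplitude) for each strict local maximum or minimum.

    Only three consecutive samples are inspected at a time, so a streaming
    version would need constant memory.
    """
    extrema = []
    for i in range(1, len(samples) - 1):
        prev, cur, nxt = samples[i - 1], samples[i], samples[i + 1]
        if cur > prev and cur > nxt:
            extrema.append(("max", timestamps[i], cur))
        elif cur < prev and cur < nxt:
            extrema.append(("min", timestamps[i], cur))
    return extrema

def consecutive_differences(extrema):
    """Time and amplitude differences between each pair of adjacent extrema."""
    return [
        (t2 - t1, a2 - a1)
        for (_, t1, a1), (_, t2, a2) in zip(extrema, extrema[1:])
    ]

# Toy data; timestamps are in milliseconds.
samples = [0.0, 1.0, 0.5, -1.0, 0.2, 0.1]
timestamps = [0, 20, 40, 60, 80, 100]
extrema = local_extrema(samples, timestamps)
differences = consecutive_differences(extrema)
```

The time and amplitude differences between adjacent extrema are the kind of quantities whose distributions a stochastic model could then describe.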

4. Atomic Movement Detection Algorithm for Step Detection

In order to validate the proposed approach, following the design criteria presented in Section 3, a new algorithm is proposed here. This algorithm is used for the particular case of step detection.

The generic problem of detecting specific movements (or patterns) from sensor-generated time series can be approached in two phases: feature extraction and movement classification based on the extracted features [23]. Both phases could be implemented in the sensor device and/or on external computing systems. However, the final architecture should provide energy efficiency awareness in order to maximise the battery lifetime for sensors and therefore maximise their user acceptance. Deploying on-sensor algorithms will minimise communication energy costs at the expense of energy expended on embedded computations. On the other hand, sending raw data to external systems will minimise the sensor energy spent on computations at the expense of higher communication energy requirements.

Our aim is to optimise energy requirements by performing on-sensor pre-detection based on low energy features in order to minimise both computing and communication requirements from the energy perspective. Complex features based on time-frequency or wavelet transforms [6] or full time-series computations based on approximated shapes [14,23] and distance-based similarities will penalise the energy required for on-sensor device computations. Approaches based on sparse dictionaries [24] will require sending the complete sensed data to external servers, increasing the communication energy costs. In order to simplify the feature extraction from the acceleration time series, the proposed algorithm only uses the amplitudes and times of consecutive maxima and minima data points. A stochastic model for estimating the likelihood of a particular data segment to be a step based on the times and amplitudes of consecutive maxima and minima is developed and trained so that the operations required for detection are energy efficient. In particular, the algorithm proposed in this section has selected two simple features, which have shown good correlations with minimal complexity for step detection. The selected features are:

where

A refers to the amplitude of the sensed time series,

is the vertical acceleration,

is the acceleration in the movement direction,

T is the time difference and Max and Min represent the local maximum and minimum in the time window.
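To make the ingredients of such features concrete, the sketch below extracts the maximum and minimum amplitudes and their times from a window of vertical and forward acceleration and combines them into two quantities. The two quantities computed here (`vertical_range` and `max_delay`) are hypothetical examples of amplitude and time-difference features; they are assumptions for illustration and are not claimed to be the two features selected by the algorithm.

```python
import numpy as np

def window_extrema(signal, timestamps):
    """Amplitude and time of the maximum and minimum inside one window."""
    signal = np.asarray(signal, dtype=float)
    i_max, i_min = int(np.argmax(signal)), int(np.argmin(signal))
    return {
        "a_max": signal[i_max], "t_max": timestamps[i_max],
        "a_min": signal[i_min], "t_min": timestamps[i_min],
    }

def step_features(vertical, forward, timestamps):
    """Two illustrative (hypothetical) features built from window extrema."""
    v = window_extrema(vertical, timestamps)
    m = window_extrema(forward, timestamps)
    return {
        # amplitude difference between vertical maximum and minimum
        "vertical_range": v["a_max"] - v["a_min"],
        # time between the vertical maximum and the forward maximum
        "max_delay": m["t_max"] - v["t_max"],
    }

# Toy window; timestamps are in seconds.
timestamps = [0.0, 0.1, 0.2, 0.3, 0.4]
vertical = [0.0, 3.0, -1.0, 0.5, 0.0]
forward = [0.0, 0.5, 2.0, -0.5, 0.0]
features = step_features(vertical, forward, timestamps)
```

Both quantities need only comparisons and subtractions, which is in line with the low energy requirement stated above.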

Assuming that features are independent of each other, at first, the speed of the execution of the movement is estimated by using the following equation:

In order to apply Equation (6) to a dataset that includes segments of data containing steps and control segments in which no steps are executed (null class), the stochastic information for the null class also has to be taken into account. In our case, the null class is modelled as two single-sided distributions with an exponential shape as represented in Equation (7):

where

represents the null class and

,

,

and

are the parameters of the distribution.

After estimating the values for

based on the training data, these values are used to assign a probability for a segment of validation data to contain a step using Equation (8).

The output of Equation (8) will provide a measure of likelihood that a step movement is performed. In order to simplify the proposed model, the

variable is sampled at particular points of interest that capture the range of walking speeds that will be detected. In our case, the stochastic distributions of

and

are characterised based on values for

in the range of 60 to 100 steps per minute (corresponding to walking speeds between 3 and 5 km/h approximately). Equation (6) is approximated by a Gaussian mixture model based on normal distributions for the selected values for

. In this model, computing Equation (8) will be based on simple evaluations of the normal distribution based on the values of

,

and the result of Equation (6).
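The evaluation described above can be sketched as a small Bayesian computation over a few sampled speeds. Everything specific in this sketch is an assumption made for illustration: the feature names, the means and standard deviations, the uniform prior, the null-class likelihood value and the way the step probability is normalised are hypothetical and are not the trained parameters or the exact form of Equations (6) to (8).

```python
import math

def normal_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def speed_posterior(features, model, prior):
    """Posterior over sampled speeds, assuming independent Gaussian features.

    features: {name: value}; model: {speed: {name: (mean, std)}}; prior: {speed: p}.
    Returns (posterior, evidence), where evidence is the sum of the joint terms.
    """
    joint = {}
    for speed, params in model.items():
        likelihood = 1.0
        for name, value in features.items():
            mean, std = params[name]
            likelihood *= normal_pdf(value, mean, std)
        joint[speed] = likelihood * prior[speed]
    evidence = sum(joint.values())
    if evidence == 0.0:
        raise ValueError("features are too unlikely under every sampled speed")
    return {s: j / evidence for s, j in joint.items()}, evidence

def step_probability(step_evidence, null_likelihood, p_step=0.5):
    """Probability of a step against a null class (illustrative normalisation)."""
    num = step_evidence * p_step
    return num / (num + null_likelihood * (1.0 - p_step))

# Hypothetical parameters for two sampled speeds (steps per minute).
model = {
    60: {"amp": (4.0, 1.0), "dt": (0.50, 0.10)},
    100: {"amp": (8.0, 1.5), "dt": (0.30, 0.05)},
}
prior = {60: 0.5, 100: 0.5}
features = {"amp": 7.5, "dt": 0.32}
posterior, evidence = speed_posterior(features, model, prior)
p_step = step_probability(evidence, null_likelihood=0.01)
```

With these made-up numbers the posterior concentrates on the faster speed. Only a handful of normal density evaluations are required per segment, which is the property the text relies on for on-sensor use.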

In order to compensate for the misplacement of the sensor device (a common issue with wearable sensors for daily activity monitoring), a low-pass filter based on averaging over a 2-s window is implemented (as proposed in [31]). This moving average is able to capture both the original placement rotation of the sensor and slow drifts in the sensor location relative to the human body. The average vector estimates the gravity component. The projection of each accelerometer sample onto the average vector is used to estimate the vertical acceleration component in our algorithm.
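A minimal sketch of this compensation is given below. It assumes a causal moving average over the last 2 s of samples as the gravity estimate and subtracts the gravity magnitude after projection so that a motionless sensor yields zero; the subtraction, the function name and the sampling rate in the example are assumptions for illustration.

```python
import numpy as np

def vertical_acceleration(acc, sample_rate_hz, window_s=2.0):
    """Estimate the vertical component of each tri-axial sample.

    acc: array of shape (n, 3). The gravity direction is estimated as the
    mean of the most recent window_s seconds of samples.
    """
    acc = np.asarray(acc, dtype=float)
    n = max(1, int(round(window_s * sample_rate_hz)))
    vertical = np.empty(len(acc))
    for i in range(len(acc)):
        start = max(0, i - n + 1)
        gravity = acc[start:i + 1].mean(axis=0)  # moving-average gravity estimate
        norm = np.linalg.norm(gravity)
        unit = gravity / norm if norm > 0 else np.zeros(3)
        # projection onto the gravity direction, with gravity itself removed
        vertical[i] = acc[i] @ unit - norm
    return vertical

# A tilted but motionless sensor: every sample is the same gravity vector.
acc = np.tile([0.0, 6.0, 8.0], (200, 1))
vertical = vertical_acceleration(acc, sample_rate_hz=50)
```

On a sensor, the per-sample mean would normally be replaced by a running sum so that each new sample costs a constant number of operations.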

5. Atomic Movement Detection Algorithm for Detecting and Classifying Falls

In order to validate the proposed approach, following the design criteria presented in Section 3, a second algorithm is proposed in this section. This algorithm is used for the particular case of fall detection and classification.

The proposed algorithm is divided into two consecutive phases: fall detection and fall classification. In order to detect falls, the algorithm proposed in this section has selected two simple features, which have shown good correlations with minimal complexity for fall detection:

where A refers to the amplitude of the sensed time series, G is the amplitude of the acceleration vector, T is the time difference and Max and Min represent the local maximum and minimum in the time window.

In order to detect falls, the probability of having a fall conditioned to having measured

and

should exceed a certain threshold, τ, as captured in Equation (11).

Assuming that

and

are independent variables, a fall is detected when the condition in Equation (12) is met.
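The thresholding rule can be sketched as a two-class Bayesian test. The sketch assumes that the per-feature likelihoods under the fall class and under a non-fall class are already available and multiplies them under the independence assumption; the function names, the likelihood values, the prior and the threshold used in the example are hypothetical.

```python
import math

def fall_posterior(lik_fall, lik_other, p_fall):
    """Posterior probability of a fall from independent per-feature likelihoods."""
    joint_fall = math.prod(lik_fall) * p_fall
    joint_other = math.prod(lik_other) * (1.0 - p_fall)
    total = joint_fall + joint_other
    return joint_fall / total if total > 0 else 0.0

def is_fall(lik_fall, lik_other, p_fall, tau):
    """A fall is flagged when the posterior exceeds the threshold tau."""
    return fall_posterior(lik_fall, lik_other, p_fall) > tau

# Hypothetical likelihoods for the two features of one segment.
posterior = fall_posterior([0.8, 0.5], [0.1, 0.2], p_fall=0.1)
flag_low_threshold = is_fall([0.8, 0.5], [0.1, 0.2], p_fall=0.1, tau=0.5)
flag_high_threshold = is_fall([0.8, 0.5], [0.1, 0.2], p_fall=0.1, tau=0.9)
```

Raising the threshold trades missed falls for fewer false alarms, so its value has to be chosen from the training data.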

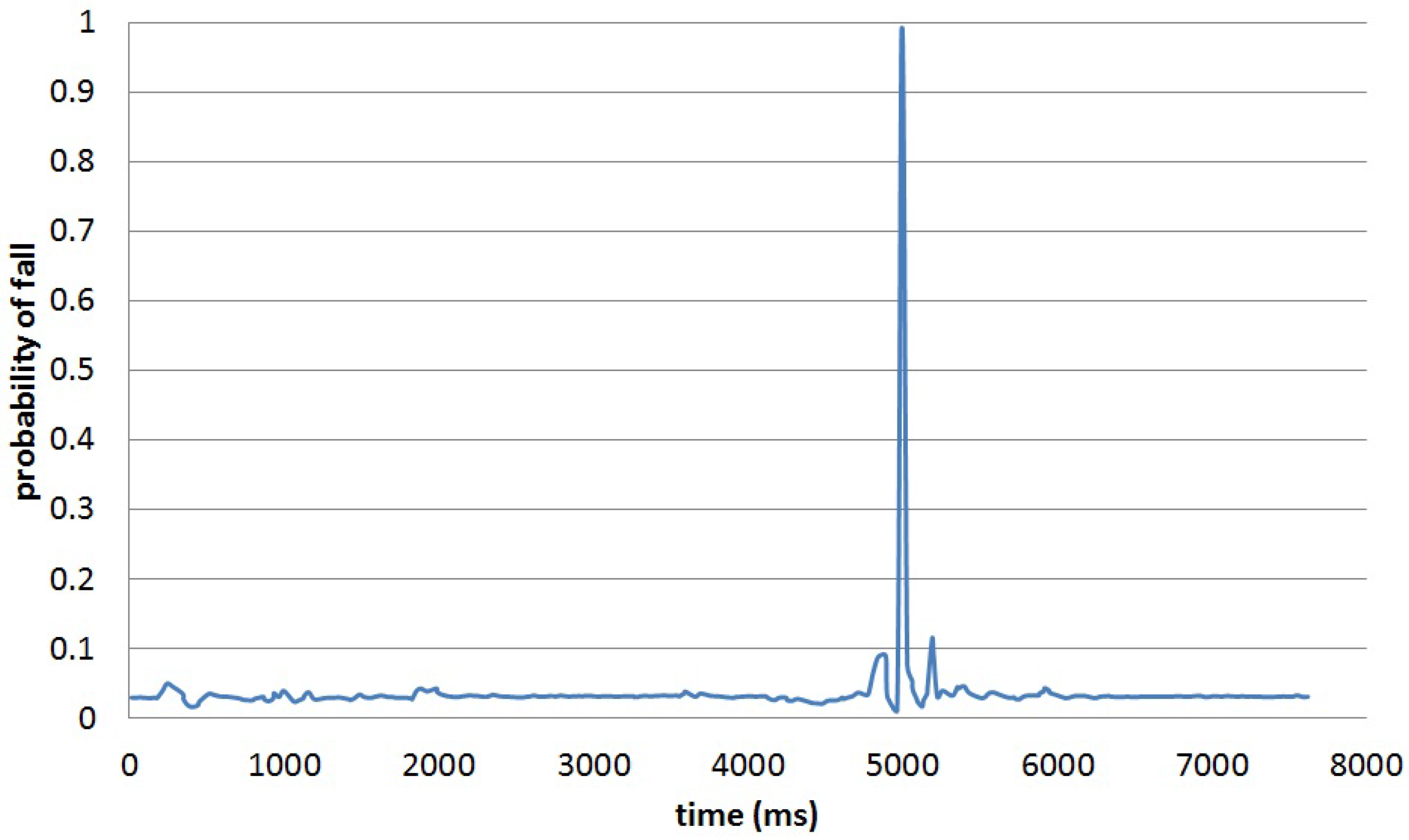

Based on the results in this study, a value of

for

has proven to be valid to distinguish 100% of falls in the database [35].

Once a fall is detected, the proposed algorithm will estimate the class of fall that occurred. Based on the available experimental data, the attention is focused on detecting frontal falls. The falls occur while the user is walking or sitting on a chair. The database in [35] contains 30 falls; six of them are frontal falls with no rolling over once the user contacts the ground. Out of these six falls, three start from a walking state and three start from a sitting position. The following three basic features are used:

where

A refers to the amplitude of the sensed time series,

G is the amplitude of the acceleration vector and

,

and

are the individual acceleration components.

All available 30 falls in the database are classified into three classes:

The detection of each class implies having the probability for the class conditioned on the measured feature above a certain threshold. The computation can be performed based on Equation (19).

7. Conclusions

In this paper, a novel algorithm is presented to detect atomic human movements based on the stochastic properties of local maxima and minima of the sensed time series from a tri-axial accelerometer. The algorithm is designed to work on simple operations, such as storing the maximum and minimum values and times for the three acceleration axes and performing simple estimations for the probability of steps based on the normal distribution. The proposed algorithm is applied to two particular scenarios: detecting single steps while walking and detecting and classifying falls. The training set and the validation set for the step detection have used different individuals, hardware and software for the sensors (with location compensation). The fall classification is tested on a publicly available falls database.

The results validate that it is possible to detect atomic movements based on the stochastic characterisation of the elasticity of the sensed time series when performing the movement by different individuals at different speeds. The time elasticity is mapped into the deformations in the relative location and values for adjacent maxima and minima.

For future work, our model will be applied for the detection of other atomic movements and the detection of more complex activities by the recognition of a combination of atomic movements. We will also perform an on-sensor deployment to assess real-time detection.