Real-Time Robust Tracking for Motion Blur and Fast Motion via Correlation Filters

Abstract

1. Introduction

2. Related Works

2.1. Correlation Filter Based Tracking

2.2. Blur Motion and Fast Motion Handling

2.3. Scale Variation Handling

3. Approach

3.1. Re-Formulate Kernelized Correlation Filter Tracking with Scale Handling

Algorithm 1. The KCF tracker with target scale estimation

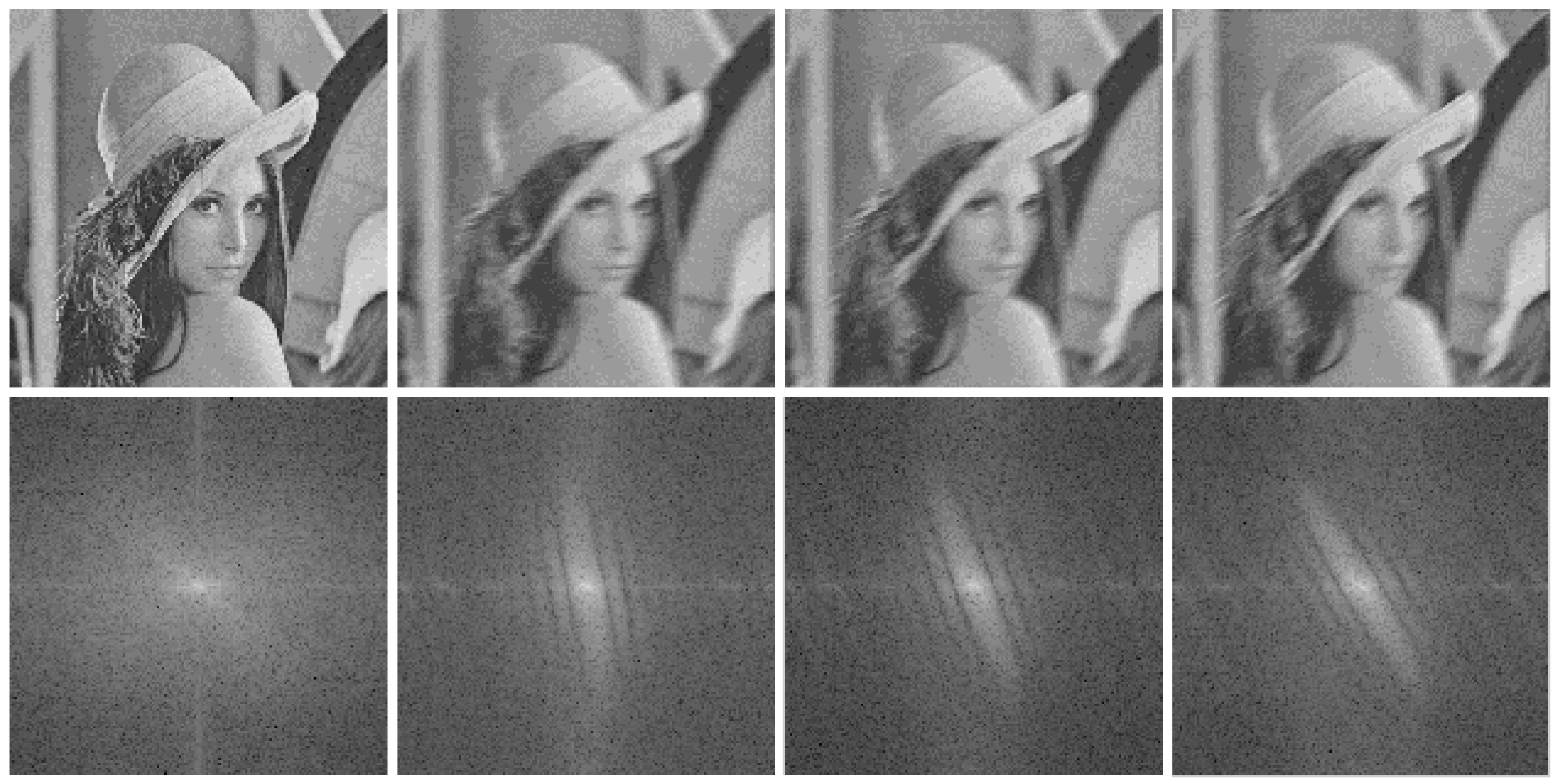
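Algorithm 1 builds on the kernelized correlation filter (KCF) of Henriques et al. As a rough reference point, the sketch below implements only the core single-channel KCF steps with a Gaussian kernel: the kernel correlation for all cyclic shifts computed with the FFT, closed-form training $\hat{\alpha} = \hat{y} / (\hat{k}^{xx} + \lambda)$, and detection as the peak of $\mathcal{F}^{-1}(\hat{\alpha} \odot \hat{k}^{xz})$. It is a minimal sketch under stated assumptions, not the authors' Algorithm 1: it omits the scale estimation, cosine window, HOG features and model update, and the function names and parameter values (`sigma=0.5`, `lam=1e-4`, label width `s`) are illustrative choices.

```python
import numpy as np

def gaussian_correlation(x, z, sigma):
    """Gaussian kernel between x and every cyclic shift of z, computed at once via the FFT."""
    xf = np.fft.fft2(x)
    zf = np.fft.fft2(z)
    # xz[s] = sum_n x[n] * z[n + s]  (circular cross-correlation)
    xz = np.real(np.fft.ifft2(np.conj(xf) * zf))
    dist = np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * xz
    # Distances are normalised by the number of elements; clip tiny negative rounding errors.
    return np.exp(-np.maximum(dist, 0.0) / (sigma ** 2 * x.size))

def gaussian_label(shape, s):
    """Gaussian regression target peaked at (0, 0), wrapping around the borders."""
    rows = np.arange(shape[0])
    cols = np.arange(shape[1])
    dr = np.minimum(rows, shape[0] - rows)[:, None]  # circular distance to row 0
    dc = np.minimum(cols, shape[1] - cols)[None, :]  # circular distance to column 0
    return np.exp(-0.5 * (dr ** 2 + dc ** 2) / s ** 2)

def train(x, y, sigma=0.5, lam=1e-4):
    """Kernel ridge regression solved element-wise in the Fourier domain."""
    kf = np.fft.fft2(gaussian_correlation(x, x, sigma))
    return np.fft.fft2(y) / (kf + lam)

def detect(alphaf, x, z, sigma=0.5):
    """Response map for patch z and the signed (row, col) shift of its peak."""
    kzf = np.fft.fft2(gaussian_correlation(x, z, sigma))
    response = np.real(np.fft.ifft2(alphaf * kzf))
    r, c = np.unravel_index(np.argmax(response), response.shape)
    # Peaks past the half-way point correspond to negative (wrapped) shifts.
    if r > response.shape[0] // 2:
        r -= response.shape[0]
    if c > response.shape[1] // 2:
        c -= response.shape[1]
    return response, (int(r), int(c))

# Usage: a random patch stands in for a feature map; the "target" then moves.
rng = np.random.default_rng(0)
x = rng.standard_normal((32, 32))
alphaf = train(x, gaussian_label(x.shape, s=2.0))
z = np.roll(x, shift=(3, -5), axis=(0, 1))  # moved 3 px down, 5 px left
print(detect(alphaf, x, z)[1])
```

Because the Gaussian kernel only depends on distances, shifting the patch by d shifts the whole response map by d, so the peak location reads off the displacement directly; a scale-aware tracker would repeat `detect` on resampled patches and keep the strongest response.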

3.2. Analysis of Motion Blur and Fast Motion in Frequency Domain
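A frequency-domain view of blur rests on the convolution theorem: a blurred patch is the sharp patch convolved with a point spread function (PSF), so its spectrum is the sharp spectrum multiplied by the PSF's transfer function. The sketch below illustrates this for the simplest case, assumed here only for illustration: uniform horizontal motion over `length` pixels, modelled as a 1-D box PSF with circular boundaries. It does not reproduce the specific analysis of this section, and all function names are hypothetical.

```python
import numpy as np

def box_psf(n, length):
    """1-D box PSF (assumed model of uniform motion over `length` pixels), zero-padded to n samples."""
    psf = np.zeros(n)
    psf[:length] = 1.0 / length  # normalised so the DC gain (mean brightness) is preserved
    return psf

def motion_blur(row, length):
    """Blur a 1-D signal by multiplying its spectrum with the PSF spectrum (circular convolution)."""
    return np.real(np.fft.ifft(np.fft.fft(row) * np.fft.fft(box_psf(row.size, length))))

def transfer_magnitude(n, length):
    """|H(k)| of the box PSF; it vanishes at non-zero multiples of n / length when length divides n."""
    return np.abs(np.fft.fft(box_psf(n, length)))

def high_freq_energy_ratio(row, cutoff):
    """Fraction of spectral energy at integer frequencies |k| >= cutoff."""
    power = np.abs(np.fft.fft(row)) ** 2
    k = np.abs(np.fft.fftfreq(row.size, d=1.0 / row.size))
    return power[k >= cutoff].sum() / power.sum()

rng = np.random.default_rng(1)
row = rng.standard_normal(256)  # white-noise stand-in for a textured image row
print(transfer_magnitude(256, 8)[32])  # first zero of the transfer function
print(high_freq_energy_ratio(row, 32), high_freq_energy_ratio(motion_blur(row, 8), 32))
```

With n = 256 and a blur length of 8 pixels, the transfer function is zero at k = 32, and the share of energy above that frequency drops sharply after blurring: motion blur behaves like a low-pass filter along the motion direction.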

3.3. The Tracker

Algorithm 2. The Blur KCF tracker

4. Experiments and Results

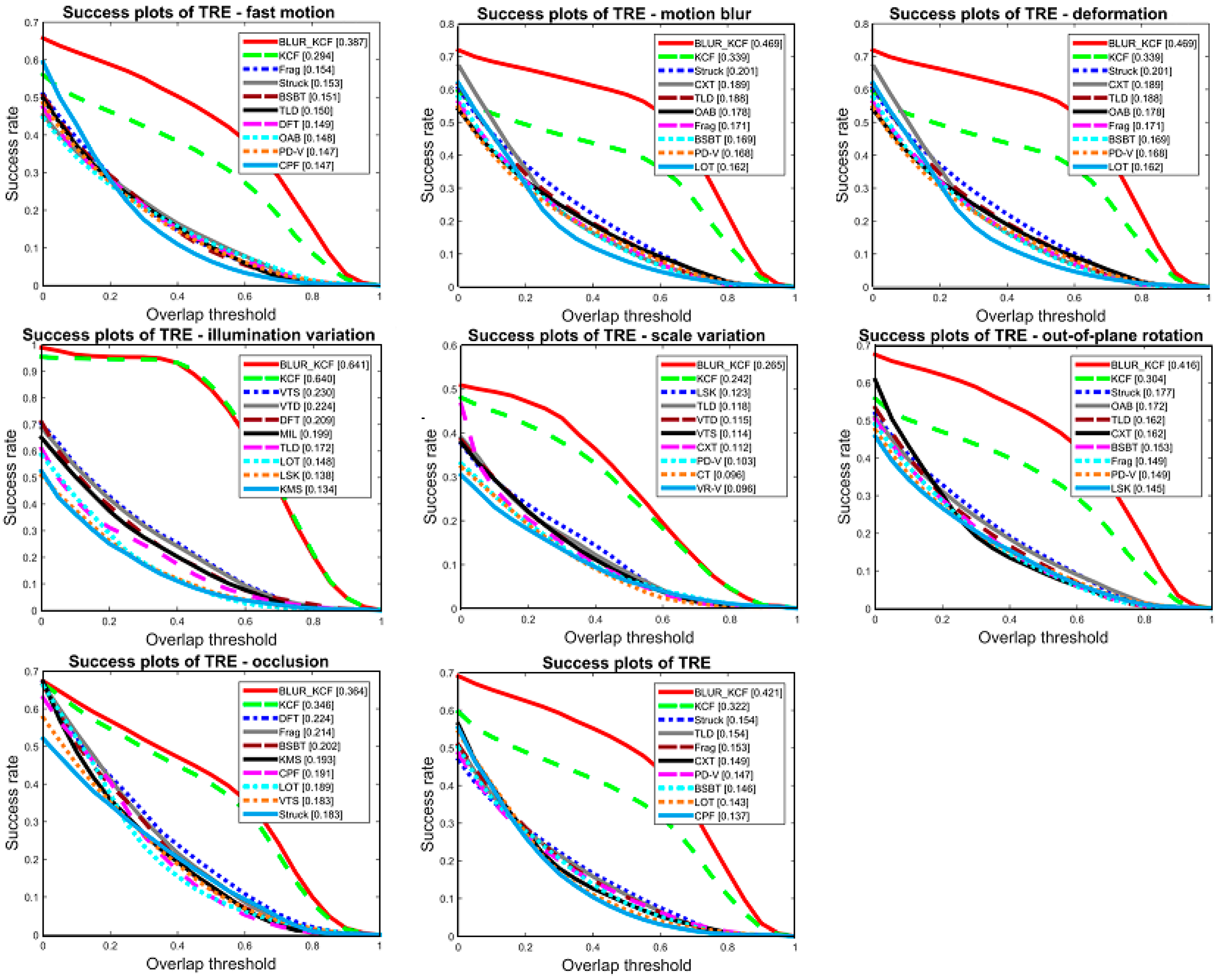

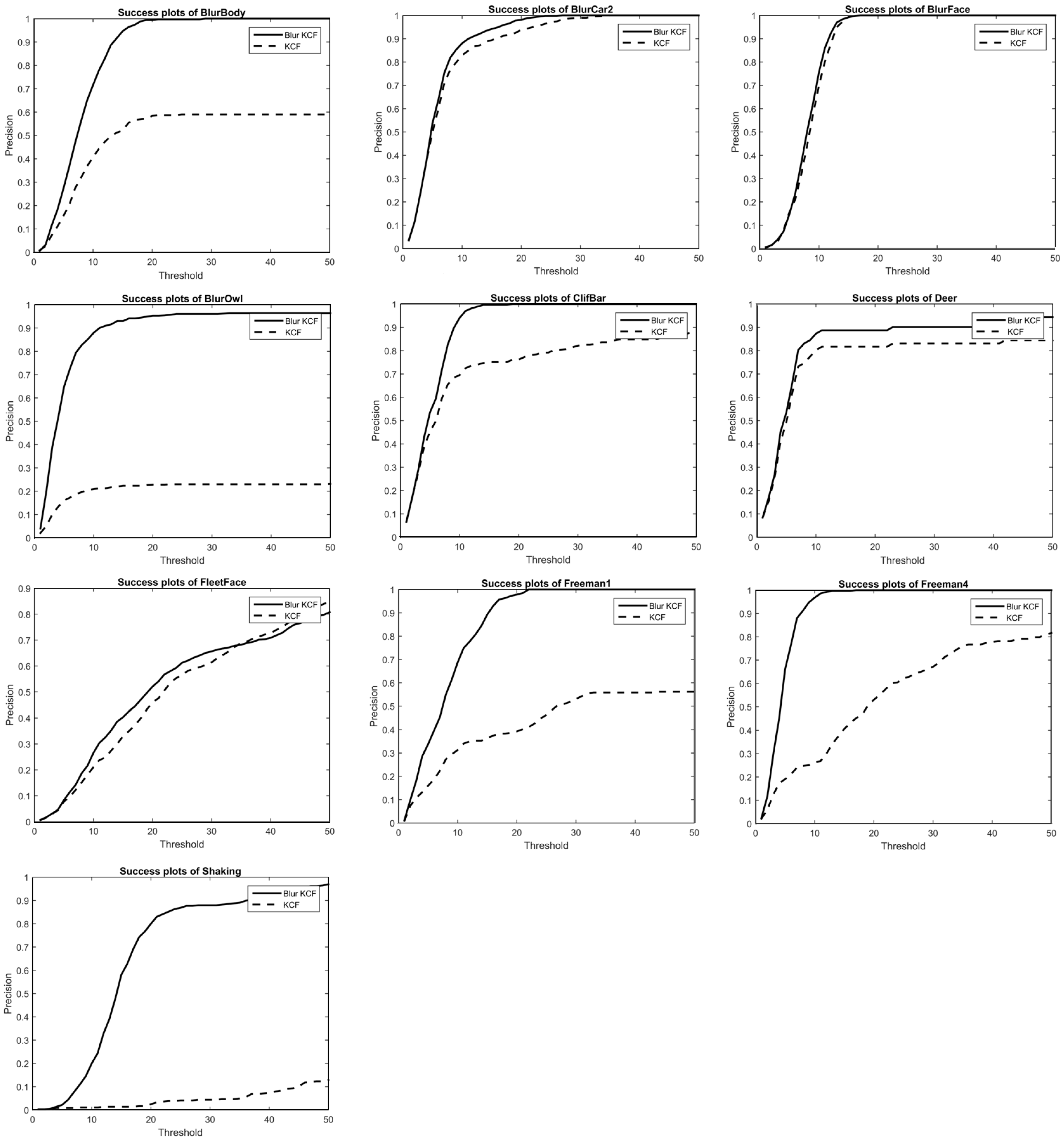

4.1. Quantitative Evaluation and Speed Analysis

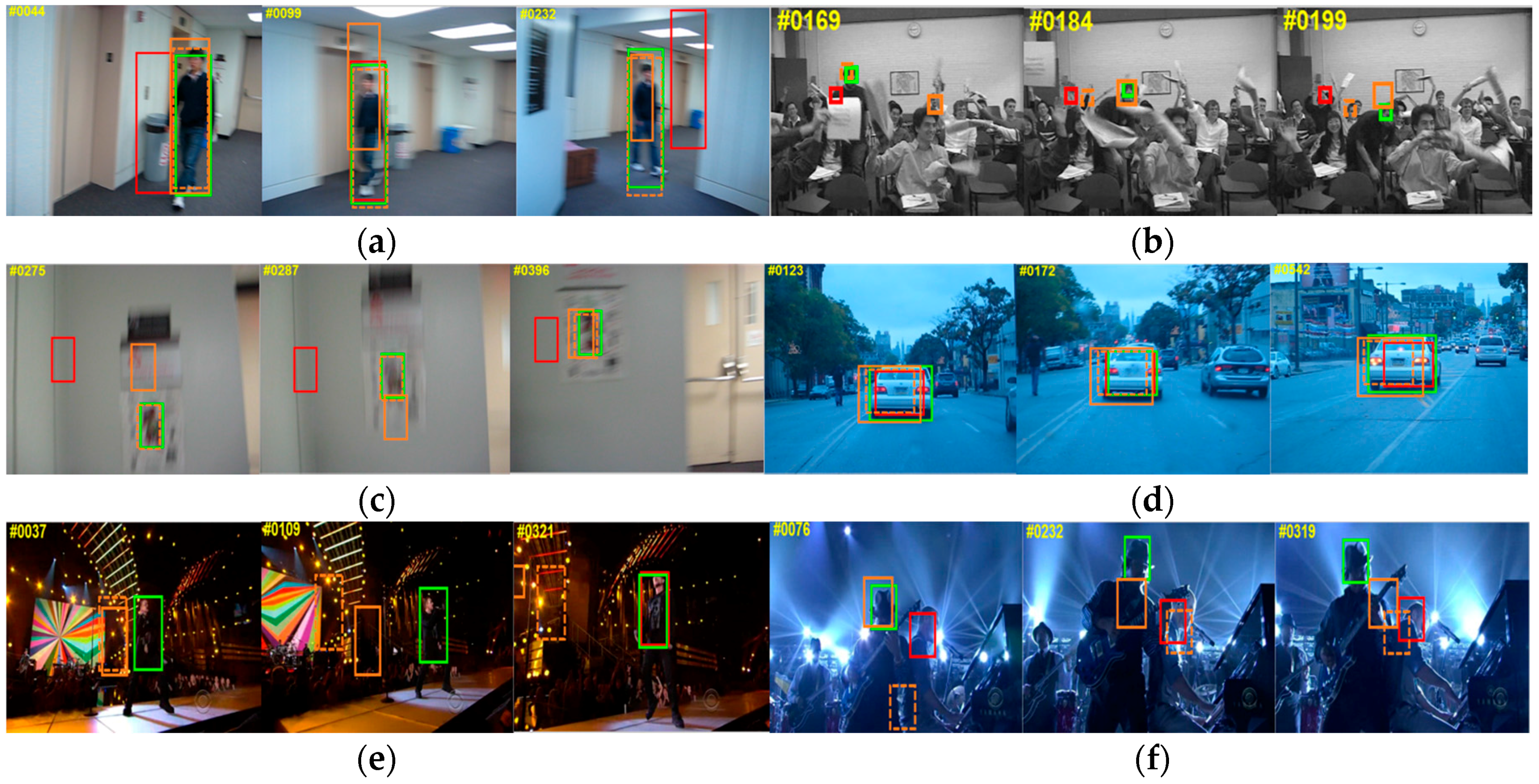

4.2. Qualitative Evaluation

5. Conclusions

Author Contributions

Conflicts of Interest

| Tracker | Blur Body | Blur Car2 | Blur Face | Blur Owl | Clif Bar | Deer | Fleetface | Freeman1 | Freeman4 | Shaking | Speed (fps) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CXT | 25.94 | 26.8 | 19.29 | 57.33 | 33.08 | 19.99 | 57.3 | 20.41 | 67.46 | 157.39 | 9 |
| Struck | 12.86 | 19.36 | 21.65 | 12.86 | 20.08 | 12.51 | 43.39 | 24.7 | 59.14 | 65.14 | 15 |
| KCF | 64.12 | 6.81 | 8.36 | 92.2 | 36.7 | 21.16 | 26.37 | 94.88 | 27.11 | 112.5 | 360 |
| Ours | 11.95 | 5.82 | 8.01 | 8.88 | 6.04 | 9.46 | 26.37 | 8.06 | 4.5 | 17.5 | 186 |
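The per-tracker values in the table can be summarized by averaging over the ten sequences. The snippet below does only that arithmetic on the numbers as printed. The table does not name its metric, so treating lower values as better (as for a tracking-error measure) is an assumption here, and the names `RESULTS`, `mean_per_tracker` and `best_per_sequence` are hypothetical.

```python
# Per-sequence values copied from the table (Speed column excluded).
SEQUENCES = ["Blur Body", "Blur Car2", "Blur Face", "Blur Owl", "Clif Bar",
             "Deer", "Fleetface", "Freeman1", "Freeman4", "Shaking"]
RESULTS = {
    "CXT":    [25.94, 26.8, 19.29, 57.33, 33.08, 19.99, 57.3, 20.41, 67.46, 157.39],
    "Struck": [12.86, 19.36, 21.65, 12.86, 20.08, 12.51, 43.39, 24.7, 59.14, 65.14],
    "KCF":    [64.12, 6.81, 8.36, 92.2, 36.7, 21.16, 26.37, 94.88, 27.11, 112.5],
    "Ours":   [11.95, 5.82, 8.01, 8.88, 6.04, 9.46, 26.37, 8.06, 4.5, 17.5],
}

def mean_per_tracker(results):
    """Arithmetic mean of each tracker's per-sequence values."""
    return {name: sum(vals) / len(vals) for name, vals in results.items()}

def best_per_sequence(results, sequences):
    """Trackers with the lowest value on each sequence (ties kept), ASSUMING lower is better."""
    best = {}
    for i, seq in enumerate(sequences):
        low = min(vals[i] for vals in results.values())
        best[seq] = [name for name, vals in results.items() if vals[i] == low]
    return best

for name, m in mean_per_tracker(RESULTS).items():
    print(f"{name:7s} {m:7.2f}")
```

The means come out at about 48.50 (CXT), 29.17 (Struck), 49.02 (KCF) and 10.66 (Ours); `best_per_sequence` also shows that KCF and Ours share the same value on Fleetface.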

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, L.; Luo, H.; Hui, B.; Chang, Z. Real-Time Robust Tracking for Motion Blur and Fast Motion via Correlation Filters. Sensors 2016, 16, 1443. https://doi.org/10.3390/s16091443
