A Saliency Guided Semi-Supervised Building Change Detection Method for High Resolution Remote Sensing Images

Abstract

1. Introduction

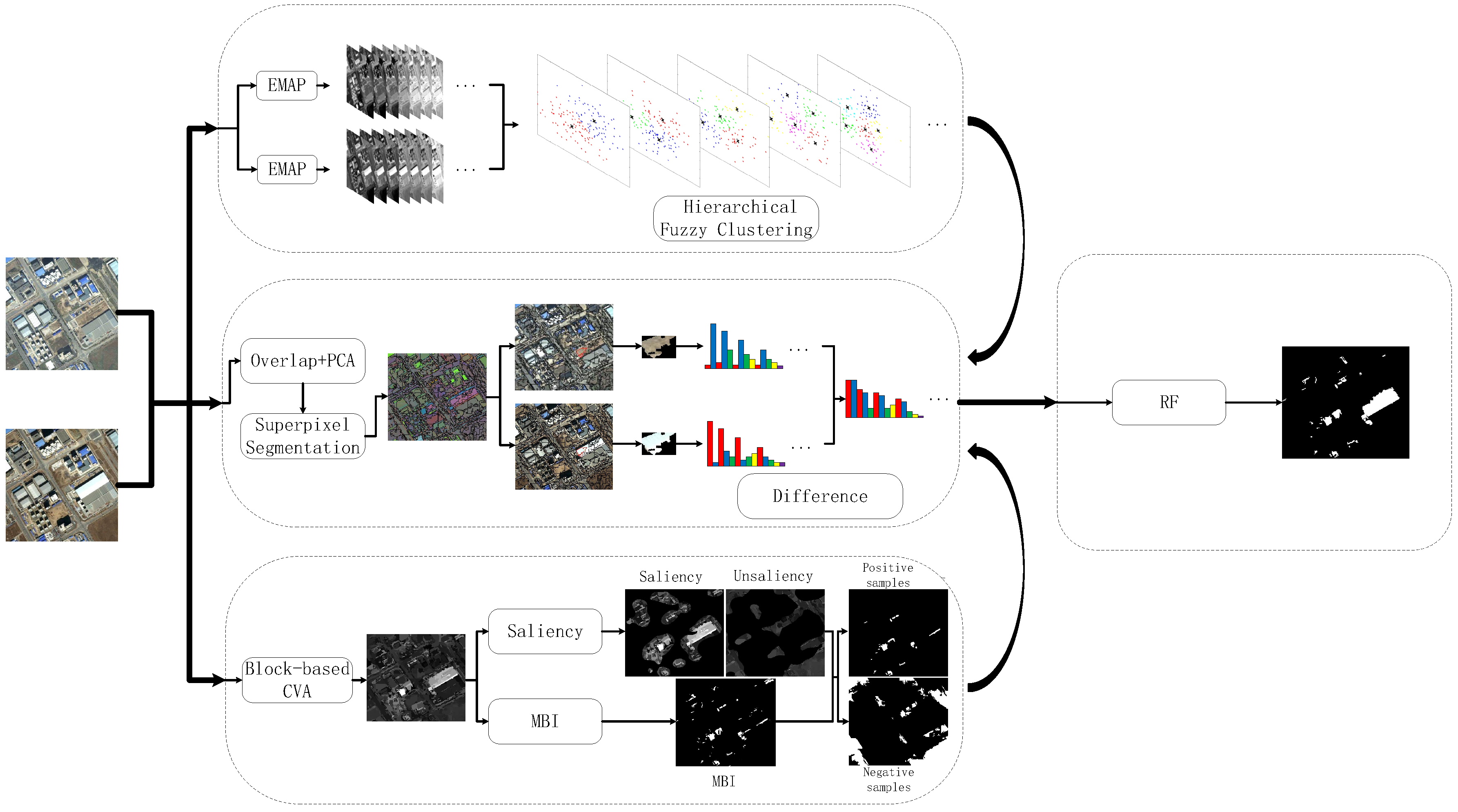

2. Methodology

2.1. Feature Extraction and Representation

- area of the region (a measure of the region's size, denoted as ‘a’);

- standard deviation (a measure of the region's homogeneity, denoted as ‘s’);

- diagonal of the region's bounding box (another measure of the region's size, denoted as ‘d’);

- moment of inertia (a measure of the region's elongation, denoted as ‘i’).
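The sketch below computes the four attributes listed above for a single region, given as a boolean mask. It is a minimal NumPy sketch that rests on assumptions the text does not state:

- the function name `region_attributes` is hypothetical;
- the bounding-box height and width count pixels inclusively;
- the moment of inertia is taken to be Hu's first moment invariant, $\eta_{20} + \eta_{02} = (\mu_{20} + \mu_{02})/\mu_{00}^2$. This is a common choice in attribute profiles, not a definition confirmed by this paper.

```python
import numpy as np


def region_attributes(image, mask):
    """Hypothetical helper: attributes a, s, d, i of one region.

    image: 2-D grey-level array; mask: boolean array of the same shape
    marking the pixels that belong to the region.
    """
    rows, cols = np.nonzero(mask)
    values = image[mask].astype(np.float64)
    a = rows.size                                  # area: number of pixels
    s = values.std()                               # homogeneity: std of grey levels
    height = rows.max() - rows.min() + 1           # inclusive bounding-box size
    width = cols.max() - cols.min() + 1
    d = float(np.hypot(height, width))             # bounding-box diagonal
    # Assumed moment of inertia: Hu's first invariant eta20 + eta02
    mu20 = np.sum((cols - cols.mean()) ** 2)
    mu02 = np.sum((rows - rows.mean()) ** 2)
    i = (mu20 + mu02) / a ** 2
    return {"a": a, "s": s, "d": d, "i": i}
```

For a solid, uniformly grey 3 × 4 rectangle, this gives a = 12, s = 0, d = 5 and i = 23/144.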

2.2. Super-Pixel Segmentation and Hierarchical Fuzzy Histogram Construction

2.3. Saliency and MBI for Final Change Detection

- Calculation of brightness: The brightness of each pixel is the maximum value over its multispectral bands,
  $$v(t) = \max_{1 \le i \le K} b_i(t), \tag{9}$$
  where $b_i(t)$ indicates the intensity of the $t$-th pixel in the $i$-th band and $K$ is the number of bands.

- Calculation of $\mathrm{DMP}_{\mathrm{TH}}$: The top-hat transformation emphasizes locally bright structures, and buildings have high local contrast compared with their spatially adjacent shadows. Therefore, the spectral-structural characteristics of buildings can be represented using the differential morphological profiles (DMPs) [27] of the top-hat transformation with multiscale and multidirectional structuring elements (SEs), i.e.,
  $$\mathrm{DMP}_{\mathrm{TH}}(d,s) = \left|\mathrm{TH}(d, s+\Delta s) - \mathrm{TH}(d, s)\right|, \qquad \mathrm{TH}(d,s) = v - \gamma_{v}^{\mathrm{re}}(d,s),$$
  where $\mathrm{TH}(d,s)$ indicates the top-hat transformation, with $d$ and $s$ being the direction and scale of a linear SE, respectively. $\gamma_{v}^{\mathrm{re}}(d,s)$ represents the opening by reconstruction of the brightness $v$ in (9), and $\Delta s$ is the interval of the profiles.

- Calculation of MBI: The MBI is calculated as
  $$\mathrm{MBI} = \frac{\sum_{d,s} \mathrm{DMP}_{\mathrm{TH}}(d,s)}{D \times S},$$
  where $D$ and $S$ are the total numbers of directions and scales, respectively. We consider four directions (i.e., 45°, 90°, 135° and 180°) and eleven scales.
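The three steps above (brightness, top-hat DMPs, MBI) can be sketched in NumPy as follows. This is a minimal, unoptimized sketch, not the authors' implementation. Its assumptions are:

- all helper names and the `DIRECTIONS` table are hypothetical;
- a linear SE of scale $s$ is a line of $s$ pixels centred on the origin;
- opening by reconstruction is implemented as erosion followed by grey-level reconstruction by dilation with 8-connectivity;
- `scales` is given in increasing order with constant step $\Delta s$;
- the result averages over all computed DMPs (one per direction and consecutive scale pair), which may differ from the $D \times S$ normalization by a constant factor.

```python
import numpy as np

# Assumed unit steps (row, col) for the four SE directions in the text.
DIRECTIONS = {45: (-1, 1), 90: (1, 0), 135: (1, 1), 180: (0, 1)}


def brightness(img):
    """Eq. (9): per-pixel maximum over the bands of an (H, W, K) image."""
    return img.max(axis=2).astype(np.float64)


def _shift(img, dy, dx, fill):
    """Translate img by (dy, dx); cells shifted in from outside get `fill`."""
    h, w = img.shape
    out = np.full_like(img, fill)
    if abs(dy) >= h or abs(dx) >= w:
        return out
    sy, ty = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    sx, tx = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[ty, tx] = img[sy, sx]
    return out


def _erode_line(img, step, length):
    """Flat grey erosion by a line of `length` pixels along `step`."""
    out = np.full_like(img, np.inf)
    for k in range(-(length // 2), length - length // 2):
        # out(p) = min over SE offsets b = k * step of img(p + b)
        out = np.minimum(out, _shift(img, -k * step[0], -k * step[1], np.inf))
    return out


def _reconstruct(marker, mask):
    """Grey-level reconstruction by dilation of marker under mask (8-connected)."""
    cur = np.minimum(marker, mask)
    while True:
        grown = cur.copy()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                grown = np.maximum(grown, _shift(cur, dy, dx, -np.inf))
        grown = np.minimum(grown, mask)
        if np.array_equal(grown, cur):
            return cur
        cur = grown


def mbi(img, scales, directions=(45, 90, 135, 180)):
    """Mean of DMP_TH(d, s) over all directions and consecutive scale pairs."""
    v = brightness(img)
    dmps = []
    for d in directions:
        # TH(d, s) = v - opening by reconstruction of v with a linear SE (d, s)
        th = [v - _reconstruct(_erode_line(v, DIRECTIONS[d], s), v) for s in scales]
        # DMP_TH(d, s) = |TH(d, s + ds) - TH(d, s)| for consecutive scales
        dmps += [np.abs(th[j + 1] - th[j]) for j in range(len(th) - 1)]
    return np.mean(dmps, axis=0)
```

Consider a 4 × 4 bright square on a dark background with `scales=(2, 7, 12)`. In every direction, the square survives the opening at scale 2 but is removed at scales 7 and 12. Its pixels therefore receive an MBI of 0.5, while the background receives 0.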

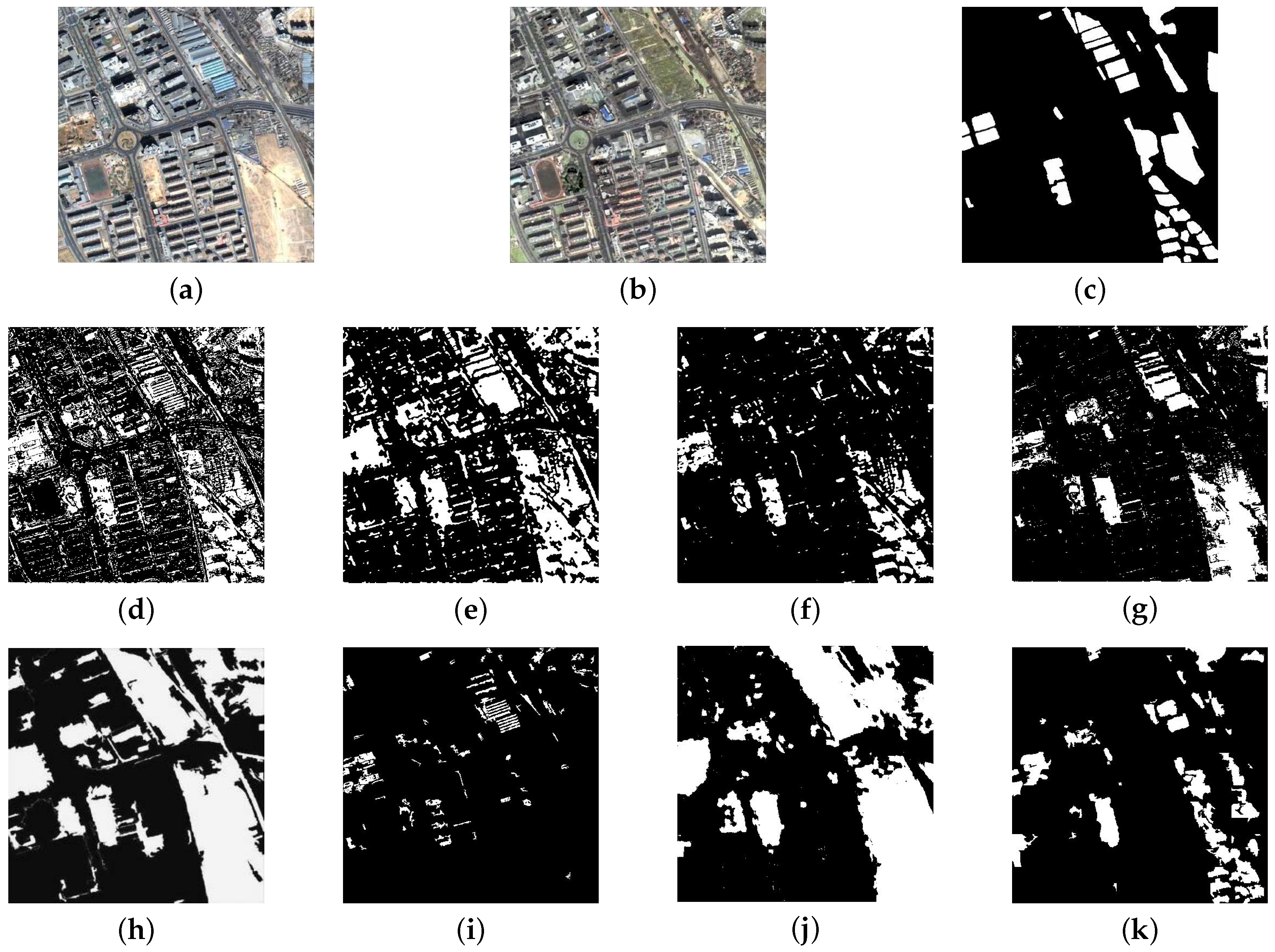

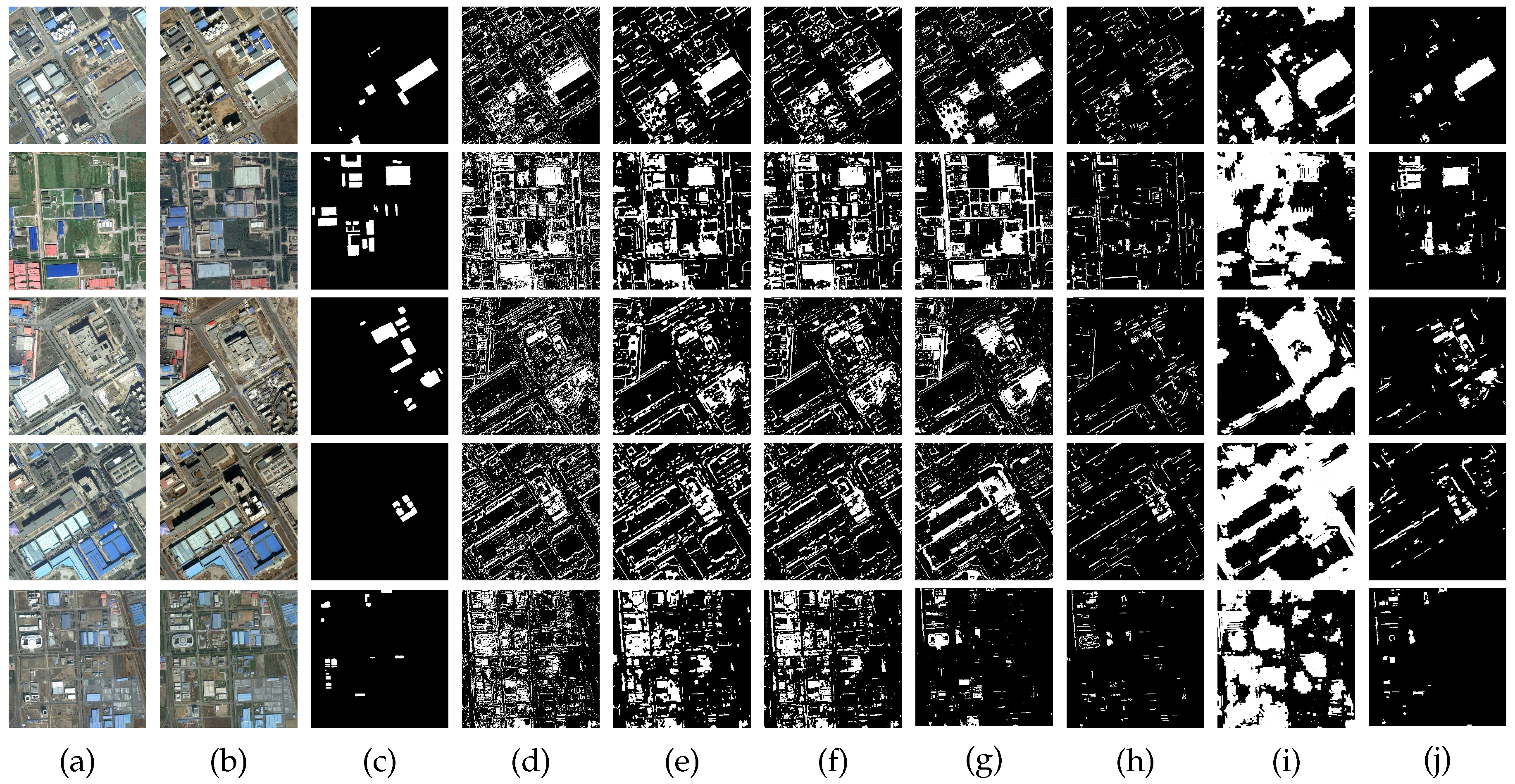

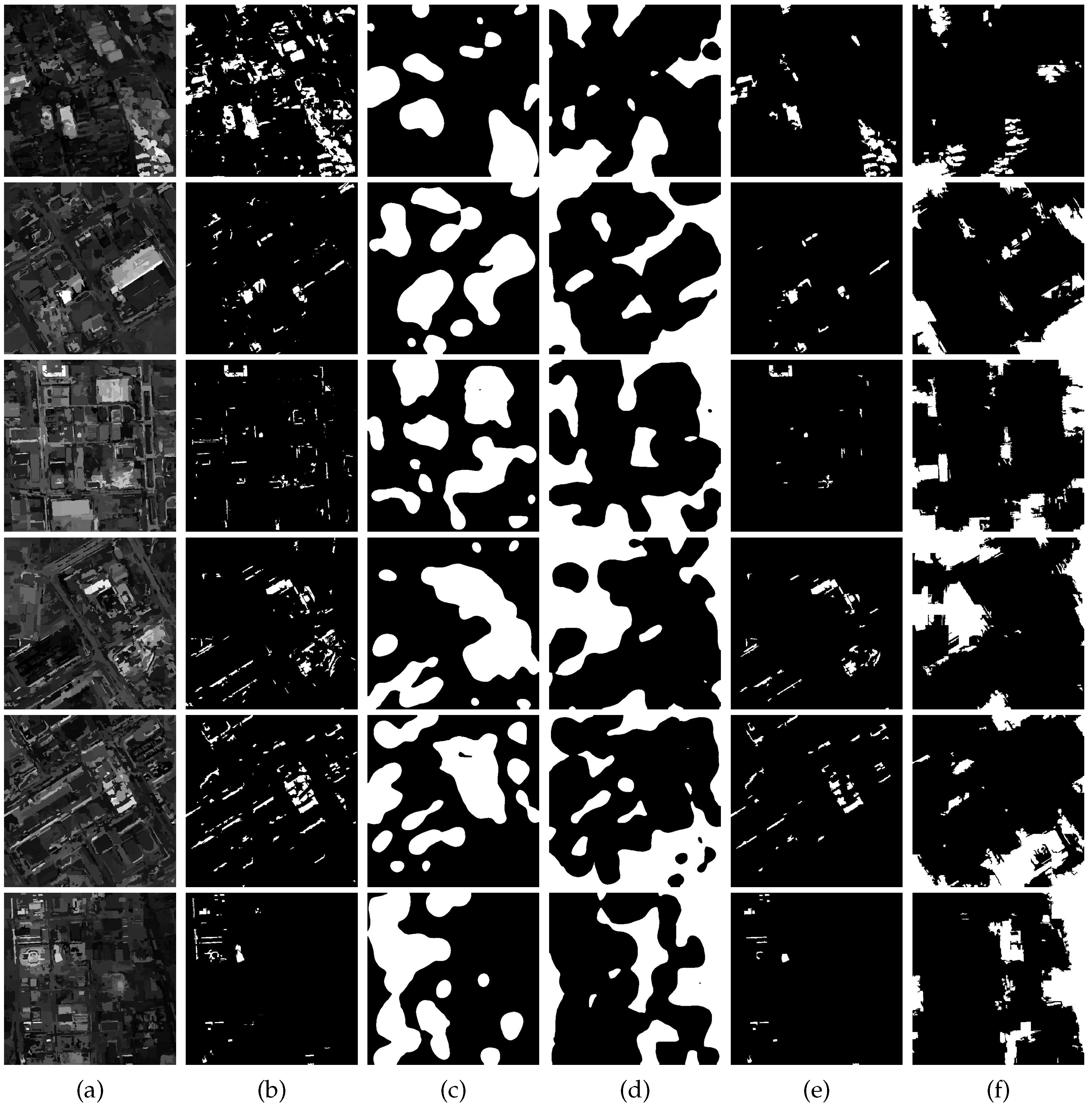

3. Results and Discussion

3.1. Datasets

3.2. Experiments

- Evaluation indexes: The following indexes are used to evaluate the accuracy of the above-mentioned methods.

  - False alarms (FAs): the number of unchanged pixels that are incorrectly detected as changed. The false alarm rate is $\mathrm{FAR} = \mathrm{FA}/N_u$, where $N_u$ is the total number of unchanged pixels;

  - Missed alarms (MAs): the number of changed pixels that are incorrectly detected as unchanged. The missed alarm rate is $\mathrm{MAR} = \mathrm{MA}/N_c$, where $N_c$ is the total number of changed pixels;

  - Overall alarms (OAs): the total number of errors, i.e., $\mathrm{OA} = \mathrm{FA} + \mathrm{MA}$. The overall alarm rate is $\mathrm{OAR} = \mathrm{OA}/(N_c + N_u)$;

  - Kappa coefficient ($\kappa$): the agreement between the experimental results and the ground truth, expressed as $\kappa = (P_o - P_e)/(1 - P_e)$, where $P_o$ indicates the observed (real) agreement and $P_e$ the agreement expected by chance (theoretical).
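The sketch below makes these definitions concrete. It writes $N_c$ and $N_u$ for the total numbers of changed and unchanged pixels and computes the indexes from FA, MA, $N_c$ and $N_u$, or from a pair of binary change maps. The function names are hypothetical. Applied to the EM-based counts in the first results table (FA = 43,113, MA = 11,840, $N_c$ = 31,198, $N_u$ = 191,586), it reproduces the tabulated rates 0.2250, 0.3795 and 0.2467 and a kappa of about 0.2786.

```python
import numpy as np


def alarm_rates(fa, ma, n_changed, n_unchanged):
    """FAR = FA / N_u, MAR = MA / N_c, OAR = (FA + MA) / (N_c + N_u)."""
    far = fa / n_unchanged
    mar = ma / n_changed
    oar = (fa + ma) / (n_changed + n_unchanged)
    return far, mar, oar


def kappa_from_counts(fa, ma, n_changed, n_unchanged):
    """Cohen's kappa (P_o - P_e) / (1 - P_e) from the 2 x 2 confusion matrix."""
    n = n_changed + n_unchanged
    tp = n_changed - ma              # changed pixels detected as changed
    tn = n_unchanged - fa            # unchanged pixels detected as unchanged
    p_o = (tp + tn) / n              # observed agreement
    pred_changed = tp + fa
    pred_unchanged = tn + ma
    # agreement expected by chance from the row and column marginals
    p_e = (pred_changed * n_changed + pred_unchanged * n_unchanged) / n ** 2
    return (p_o - p_e) / (1 - p_e)


def counts_from_maps(pred, truth):
    """FA, MA, N_c, N_u from boolean change maps (True = changed)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    fa = int(np.sum(pred & ~truth))
    ma = int(np.sum(~pred & truth))
    return fa, ma, int(truth.sum()), int((~truth).sum())
```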

- Parameter setting: The methods used for comparison are implemented with the parameter settings reported in their respective papers. The EM-based method is parameter-free. The MRF-based method depends on the parameter β (β = … in our experiments), which tunes the influence of the spatial contextual information. The PCA-based method has two parameters: the size h of the non-overlapping blocks (h = … in our experiments) and the dimension S of the eigenvector space (S = … in our experiments). In the parcel-based method, the parameters of the hierarchical segmentation are tuned to achieve the best performance, as in [15]. The MBI-based method is implemented as in [19], with the thresholds of the spectral condition, the MBI condition, the area and the geometrical index set to 0.3, 0.2, 30 and 2.0, respectively. For the SHC-based method, we adopt the same parameter setting as in [21]. For the fast object-level method, we likewise use the parameter setting of [16].

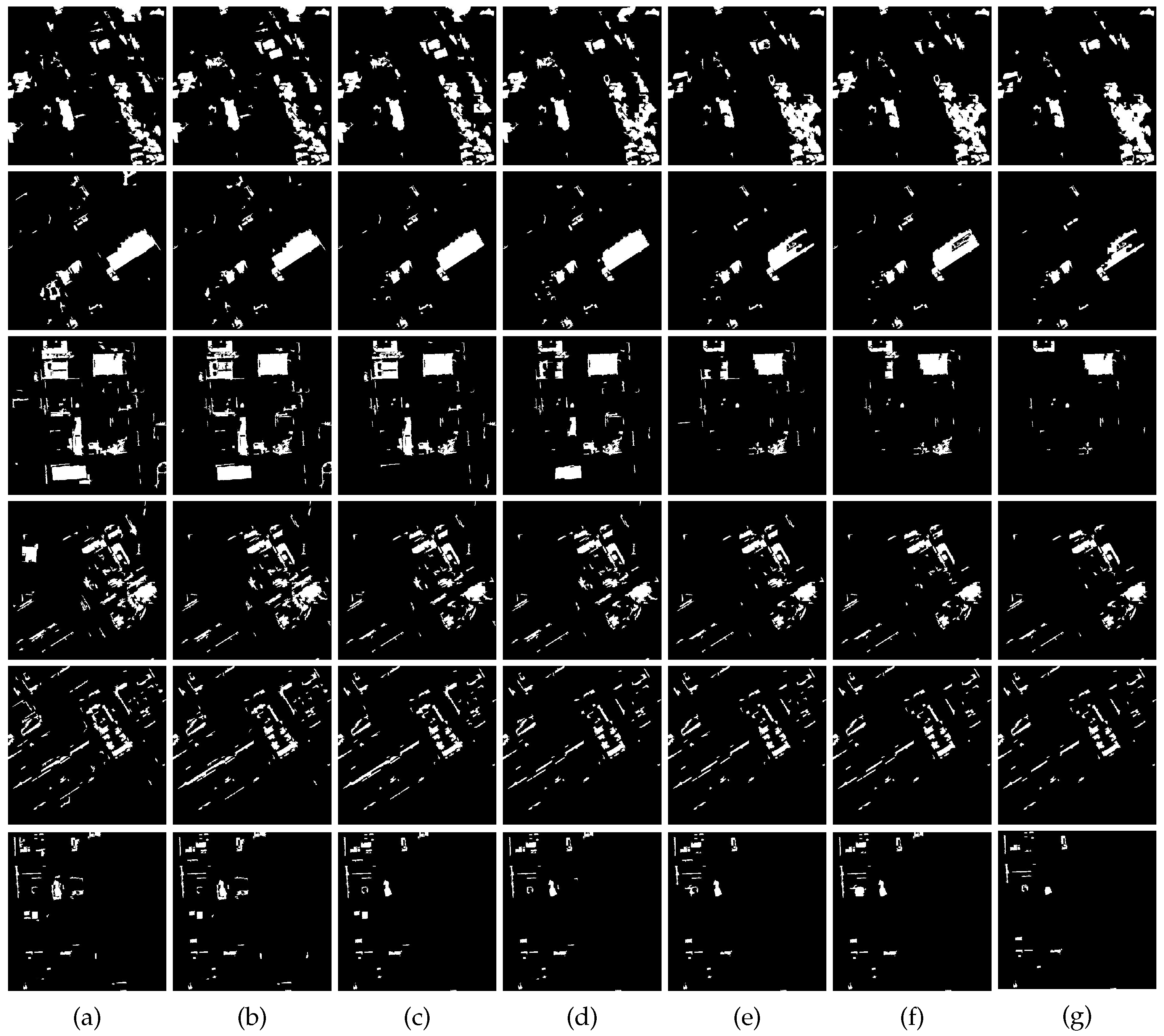

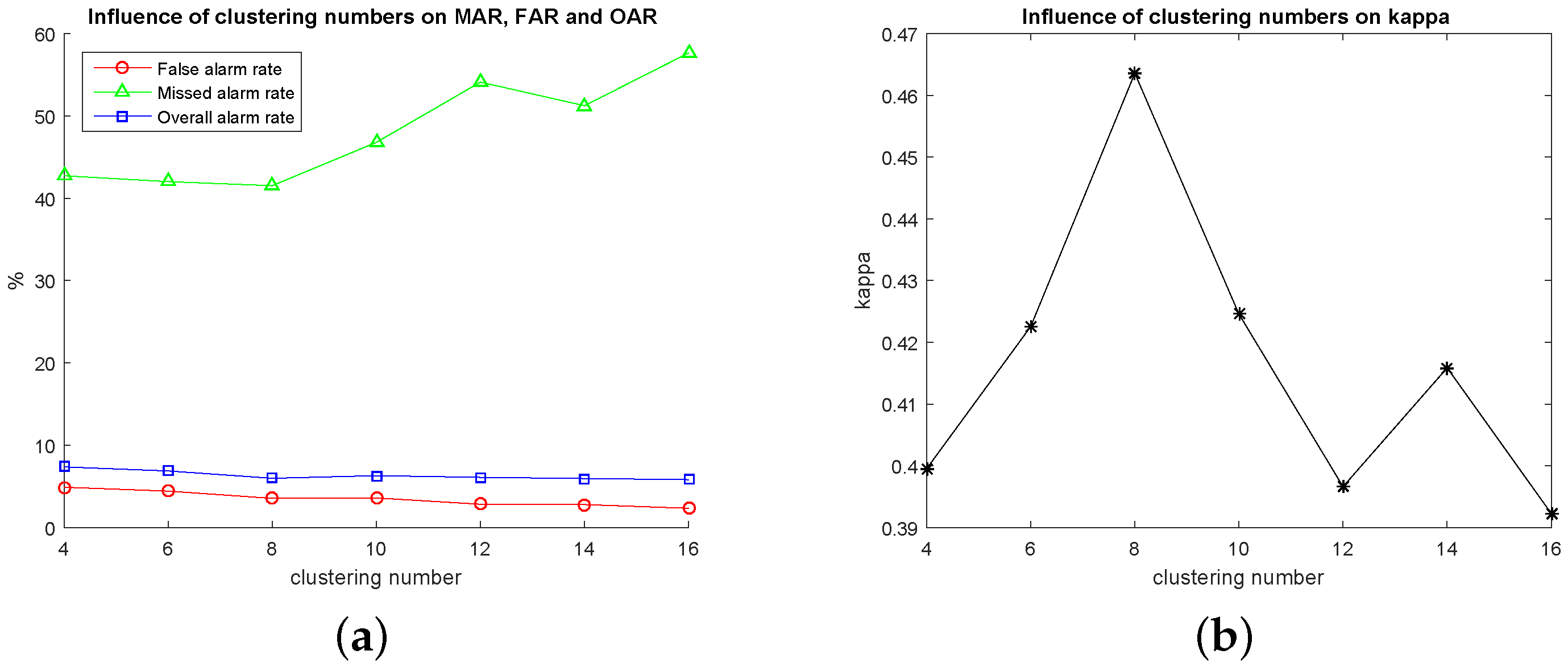

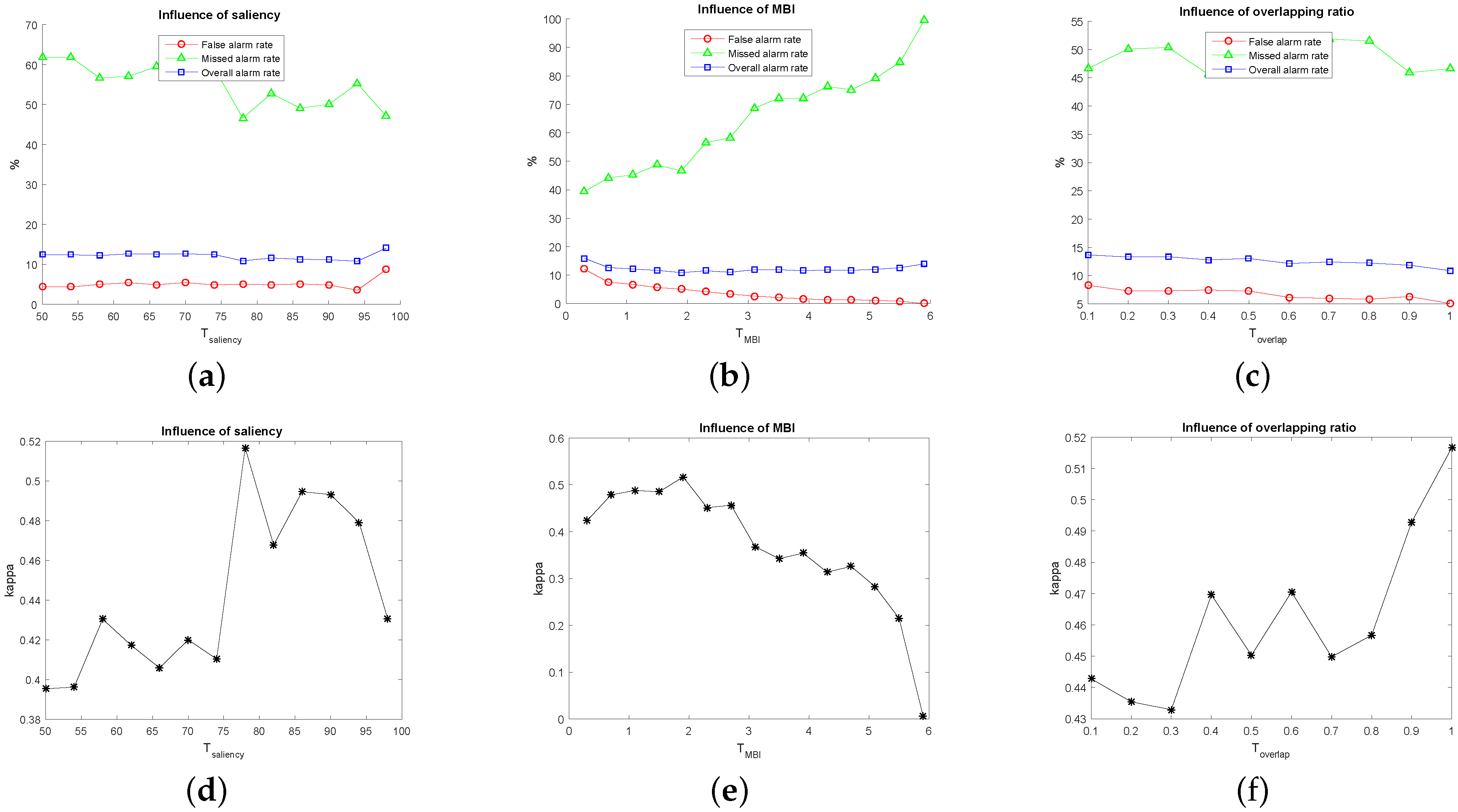

3.3. Results and Analyses

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

2. Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

3. Molina, I.; Martinez, E.; Arquero, A.; Pajares, G.; Sanchez, J. Evaluation of a change detection methodology by means of binary thresholding algorithms and informational fusion processes. Sensors 2012, 12, 3528–3561.

4. Yetgin, Z. Unsupervised change detection of satellite images using local gradual descent. IEEE Trans. Geosci. Remote Sens. 2012, 50, 1919–1929.

5. Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

6. Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236.

7. Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

8. Yuan, F.; Sawaya, K.E.; Loeffelholz, B.C.; Bauer, M.E. Land cover classification and change analysis of the Twin Cities (Minnesota) metropolitan area by multitemporal Landsat remote sensing. Remote Sens. Environ. 2005, 98, 317–328.

9. Ghosh, A.; Mishra, N.S.; Ghosh, S. Fuzzy clustering algorithms for unsupervised change detection in remote sensing images. Inf. Sci. 2011, 181, 699–715.

10. Seebach, L.; Strobl, P.; Vogt, P.; Mehl, W.; San-Miguel-Ayanz, J. Enhancing post-classification change detection through morphological post-processing: A sensitivity analysis. Int. J. Remote Sens. 2013, 34, 7145–7162.

11. Peiman, R. Pre-classification and post-classification change-detection techniques to monitor land-cover and land-use change using multi-temporal Landsat imagery: A case study on Pisa Province in Italy. Int. J. Remote Sens. 2011, 32, 4365–4381.

12. Im, J.; Jensen, J.; Tullis, J. Object-based change detection using correlation image analysis and image segmentation. Int. J. Remote Sens. 2008, 29, 399–423.

13. Chen, G.; Hay, G.J.; Carvalho, L.M.T.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

14. Zhou, W.; Troy, A.; Grove, M. Object-based land cover classification and change analysis in the Baltimore metropolitan area using multitemporal high resolution remote sensing data. Sensors 2008, 8, 1613–1636.

15. Bovolo, F. A multilevel parcel-based approach to change detection in very high resolution multitemporal images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 33–37.

16. Huo, C.; Zhou, Z.; Lu, H. Fast object-level change detection for VHR images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 118–122.

17. Mura, M.D.; Benediktsson, J.A.; Bovolo, F.; Bruzzone, L. An unsupervised technique based on morphological filters for change detection in very high resolution images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 433–437.

18. Falco, N.; Mura, M.D.; Bovolo, F.; Benediktsson, J.A.; Bruzzone, L. Change detection in VHR images based on morphological attribute profiles. IEEE Geosci. Remote Sens. Lett. 2013, 10, 636–640.

19. Huang, X.; Zhang, L.; Zhu, T. Building change detection from multitemporal high-resolution remotely sensed images based on a morphological building index. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 105–115.

20. Huang, X.; Zhang, L. Morphological building/shadow index for building extraction from high-resolution imagery over urban areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 161–172.

21. Ding, K.; Huo, C.; Xu, Y.; Zhong, Z.; Pan, C. Sparse hierarchical clustering for VHR image change detection. IEEE Geosci. Remote Sens. Lett. 2015, 12, 577–581.

22. Zhong, Y.; Liu, W.; Zhang, L. Change detection based on pulse-coupled neural networks and the NMI feature for high spatial resolution remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 537–541.

23. Robertson, L.D.; King, D.J. Comparison of pixel- and object-based classification in land cover change mapping. Int. J. Remote Sens. 2011, 32, 1505–1529.

24. Mura, M.D.; Benediktsson, J.A.; Waske, B.; Bruzzone, L. Morphological attribute profiles for the analysis of very high resolution images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3747–3762.

25. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

26. Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

27. Pesaresi, M.; Benediktsson, J.A. A new approach for the morphological segmentation of high-resolution satellite imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320.

28. Benediktsson, J.A.; Palmason, J.A.; Sveinsson, J.R. Classification of hyperspectral data from urban areas based on extended morphological profiles. IEEE Trans. Geosci. Remote Sens. 2005, 43, 480–491.

29. Palmason, J.A.; Benediktsson, J.A.; Sveinsson, J.R.; Chanussot, J. Classification of hyperspectral data from urban areas using morphological preprocessing and independent component analysis. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 25–29 July 2005; pp. 176–179.

30. Breen, E.J.; Jones, R. Attribute openings, thinnings and granulometries. Comput. Vis. Image Understand. 1996, 64, 377–389.

31. Salembier, P.; Oliveras, A.; Garrido, L. Antiextensive connected operators for image and sequence processing. IEEE Trans. Image Process. 1998, 7, 555–570.

32. Pedergnana, M.; Marpu, P.R.; Mura, M.D.; Benediktsson, J.A.; Bruzzone, L. A novel technique for optimal feature selection in attribute profiles based on genetic algorithms. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3514–3528.

33. Marpu, P.; Pedergnana, M.; Mura, M.D.; Benediktsson, J.A.; Bruzzone, L. Automatic generation of standard deviation attribute profiles for spectral-spatial classification of remote sensing data. IEEE Geosci. Remote Sens. Lett. 2013, 10, 293–297.

34. Ghosh, S.; Mishra, N.S.; Ghosh, A. Unsupervised change detection of remotely sensed images using fuzzy clustering. In Proceedings of the 7th International Conference on Advances in Pattern Recognition, Kolkata, India, 4–6 February 2009; pp. 385–388.

35. Wen, D.; Huang, X.; Zhang, L.; Benediktsson, J.A. A novel automatic change detection method for urban high-resolution remotely sensed imagery based on multiindex scene representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 609–625.

36. Zhou, L.; Zhou, Z.; Hu, D. Scene classification using a multiresolution bag-of-features model. Pattern Recognit. 2013, 46, 424–433.

37. Huang, Y.; Huang, K.; Wang, C.; Tan, T. Exploring relations of visual codes for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 20–25 June 2011; pp. 1649–1656.

38. Zhong, Y.; Zhu, Q.; Zhang, L. Scene classification based on the multifeature fusion probabilistic topic model for high spatial resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6207–6222.

| Accuracy | EM-Based | MRF-Based | PCA-Based | Parcel-Based | Fast Object-Level | MBI-Based | SHC-Based | Proposed |
|---|---|---|---|---|---|---|---|---|
| Changed pixels | 31,198 | 31,198 | 31,198 | 31,198 | 31,198 | 31,198 | 31,198 | 31,198 |
| Unchanged pixels | 191,586 | 191,586 | 191,586 | 191,586 | 191,586 | 191,586 | 191,586 | 191,586 |
| False alarms (FAR) | 43,113 (0.2250) | 40,298 (0.2103) | 12,505 (0.0653) | 19,347 (0.1010) | 51,372 (0.2681) | 3,672 (0.0192) | 47,912 (0.2501) | 9,711 (0.0507) |
| Missed alarms (MAR) | 11,840 (0.3795) | 7,497 (0.2403) | 16,647 (0.5336) | 13,379 (0.4288) | 2,197 (0.0704) | 26,132 (0.8376) | 4,337 (0.1390) | 14,543 (0.4662) |
| Overall alarms (OAR) | 54,953 (0.2467) | 47,795 (0.2145) | 29,152 (0.1309) | 32,726 (0.1469) | 53,569 (0.2405) | 29,804 (0.1338) | 52,249 (0.2345) | 24,254 (0.1089) |
| Kappa (κ) | 0.2786 | 0.3815 | 0.4247 | 0.4353 | 0.3985 | 0.2050 | 0.3855 | 0.5167 |

| Dataset | Accuracy | EM-Based | MRF-Based | PCA-Based | Parcel-Based | MBI-Based | SHC-Based | Proposed |
|---|---|---|---|---|---|---|---|---|
| Image 1 | Changed pixels | 11,613 | 11,613 | 11,613 | 11,613 | 11,613 | 11,613 | 11,613 |
| | Unchanged pixels | 238,387 | 238,387 | 238,387 | 238,387 | 238,387 | 238,387 | 238,387 |
| | False alarms (FAR) | 44,974 (0.1887) | 32,915 (0.1381) | 33,189 (0.1392) | 31,828 (0.1335) | 11,855 (0.0497) | 52,964 (0.2222) | 4,379 (0.0184) |
| | Missed alarms (MAR) | 1,507 (0.1298) | 855 (0.0736) | 1,464 (0.1261) | 1,913 (0.1647) | 8,394 (0.7228) | 312 (0.0269) | 1,836 (0.1581) |
| | Overall alarms (OAR) | 46,481 (0.1859) | 33,770 (0.1351) | 34,653 (0.1386) | 33,741 (0.1350) | 20,249 (0.0810) | 53,276 (0.2131) | 6,215 (0.0249) |
| | Kappa (κ) | 0.2451 | 0.3408 | 0.3195 | 0.3154 | 0.1992 | 0.2379 | 0.7458 |
| Image 2 | Changed pixels | 22,402 | 22,402 | 22,402 | 22,402 | 22,402 | 22,402 | 22,402 |
| | Unchanged pixels | 227,598 | 227,598 | 227,598 | 227,598 | 227,598 | 227,598 | 227,598 |
| | False alarms (FAR) | 84,846 (0.3728) | 55,480 (0.2438) | 61,075 (0.2683) | 63,925 (0.2809) | 15,245 (0.0670) | 98,316 (0.4320) | 9,343 (0.0411) |
| | Missed alarms (MAR) | 9,505 (0.4243) | 7,240 (0.3232) | 11,449 (0.5111) | 11,489 (0.5129) | 18,878 (0.8427) | 3,632 (0.1621) | 10,788 (0.4816) |
| | Overall alarms (OAR) | 94,351 (0.3774) | 62,720 (0.2509) | 72,524 (0.2901) | 75,414 (0.3017) | 34,123 (0.1365) | 101,948 (0.4078) | 20,131 (0.0805) |
| | Kappa (κ) | 0.0806 | 0.2197 | 0.1104 | 0.1004 | 0.0974 | 0.1397 | 0.4917 |
| Image 3 | Changed pixels | 14,347 | 14,347 | 14,347 | 14,347 | 14,347 | 14,347 | 14,347 |
| | Unchanged pixels | 235,653 | 235,653 | 235,653 | 235,653 | 235,653 | 235,653 | 235,653 |
| | False alarms (FAR) | 49,440 (0.2098) | 39,160 (0.1662) | 39,461 (0.1675) | 40,730 (0.1728) | 10,775 (0.0457) | 66,260 (0.2812) | 9,334 (0.0396) |
| | Missed alarms (MAR) | 7,082 (0.4936) | 6,851 (0.4775) | 6,398 (0.4459) | 5,184 (0.3613) | 11,971 (0.8344) | 879 (0.0613) | 8,250 (0.5750) |
| | Overall alarms (OAR) | 56,522 (0.2261) | 46,011 (0.1840) | 45,859 (0.1834) | 45,914 (0.1837) | 22,746 (0.0910) | 67,139 (0.2686) | 17,584 (0.0703) |
| | Kappa (κ) | 0.1243 | 0.1732 | 0.1857 | 0.2153 | 0.1248 | 0.2094 | 0.3722 |
| Image 4 | Changed pixels | 3,384 | 3,384 | 3,384 | 3,384 | 3,384 | 3,384 | 3,384 |
| | Unchanged pixels | 246,616 | 246,616 | 246,616 | 246,616 | 246,616 | 246,616 | 246,616 |
| | False alarms (FAR) | 49,500 (0.2007) | 51,376 (0.2083) | 38,816 (0.1574) | 43,902 (0.1780) | 13,646 (0.0553) | 133,047 (0.5395) | 11,489 (0.0466) |
| | Missed alarms (MAR) | 1,012 (0.2991) | 738 (0.2181) | 766 (0.2264) | 1,093 (0.3230) | 1,227 (0.3626) | 44 (0.0130) | 1,094 (0.3233) |
| | Overall alarms (OAR) | 50,512 (0.2020) | 52,114 (0.2085) | 39,582 (0.1583) | 44,995 (0.1800) | 14,873 (0.0595) | 133,091 (0.5324) | 12,583 (0.0503) |
| | Kappa (κ) | 0.0620 | 0.0685 | 0.0942 | 0.0689 | 0.2072 | 0.0220 | 0.2506 |
| Image 5 | Changed pixels | 4,103 | 4,103 | 4,103 | 4,103 | 4,103 | 4,103 | 4,103 |
| | Unchanged pixels | 245,897 | 245,897 | 245,897 | 245,897 | 245,897 | 245,897 | 245,897 |
| | False alarms (FAR) | 57,854 (0.2353) | 49,947 (0.2031) | 50,753 (0.2064) | 14,108 (0.0574) | 6,840 (0.0278) | 98,441 (0.4003) | 3,895 (0.0158) |
| | Missed alarms (MAR) | 1,153 (0.2810) | 808 (0.1969) | 1,244 (0.3032) | 3,516 (0.8569) | 3,290 (0.8019) | 812 (0.1979) | 2,001 (0.4877) |
| | Overall alarms (OAR) | 59,007 (0.2360) | 50,755 (0.2030) | 51,997 (0.2080) | 17,624 (0.0705) | 10,130 (0.0405) | 99,253 (0.3970) | 5,896 (0.0236) |
| | Kappa (κ) | 0.0621 | 0.0871 | 0.0707 | 0.0378 | 0.1195 | 0.0316 | 0.4046 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Hou, B.; Wang, Y.; Liu, Q. A Saliency Guided Semi-Supervised Building Change Detection Method for High Resolution Remote Sensing Images. Sensors 2016, 16, 1377. https://doi.org/10.3390/s16091377
