Hyperspectral Image-Based Night-Time Vehicle Light Detection Using Spectral Normalization and Distance Mapper for Intelligent Headlight Control

Abstract

1. Introduction

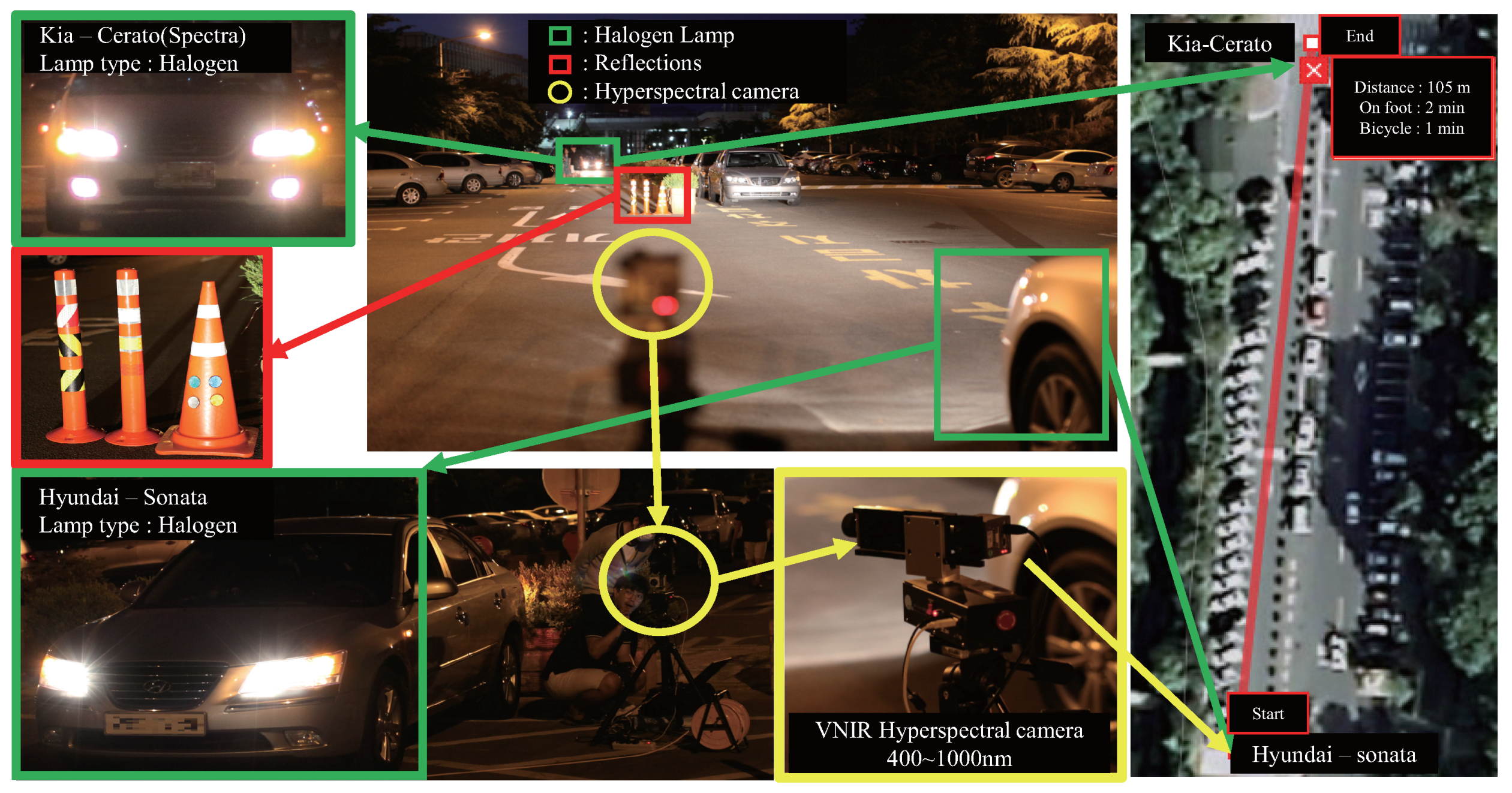

2. Research Objectives and Experiment Scenario

3. Proposed Vehicle Headlight Detection System Using Hyperspectral Images

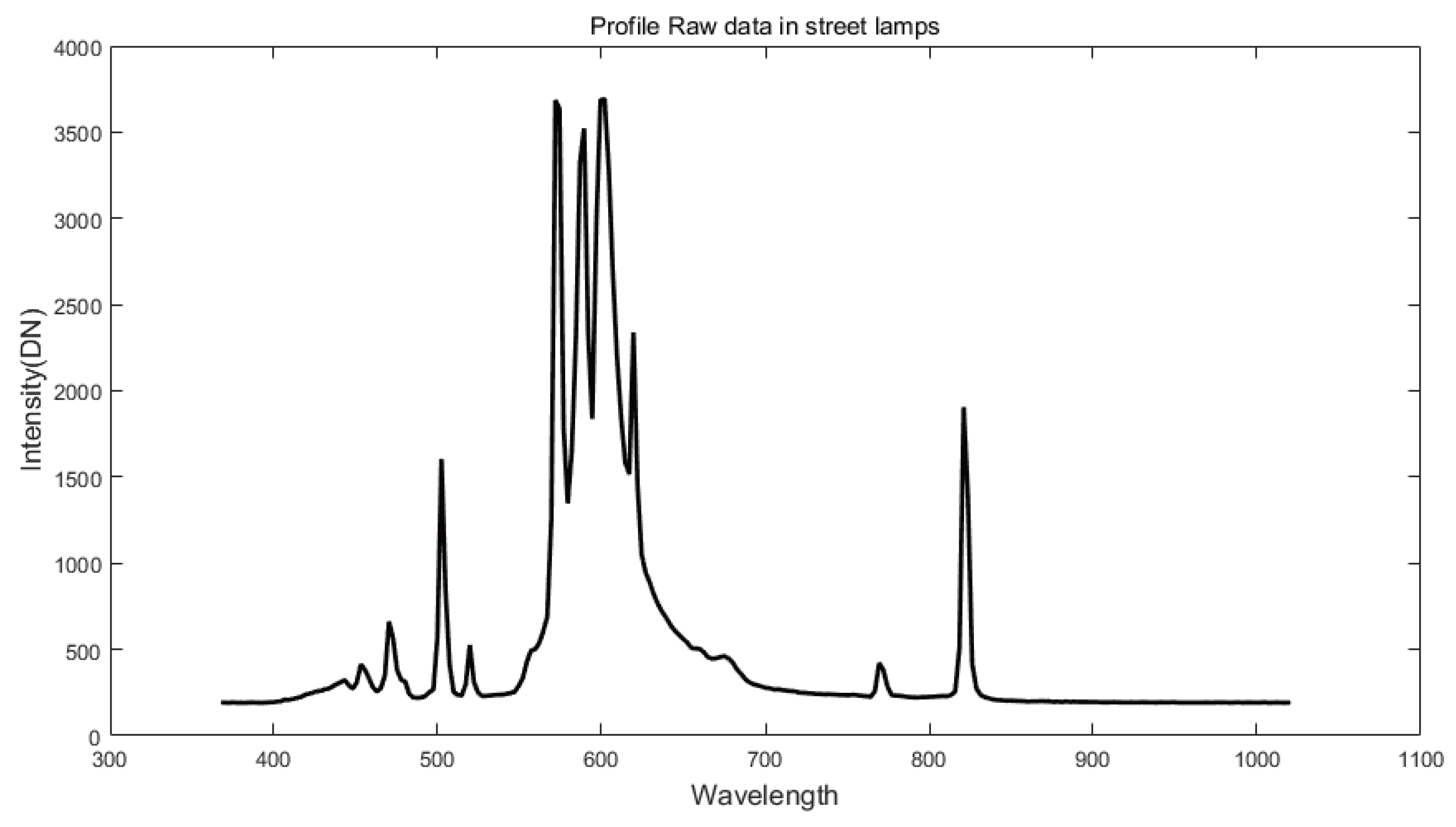

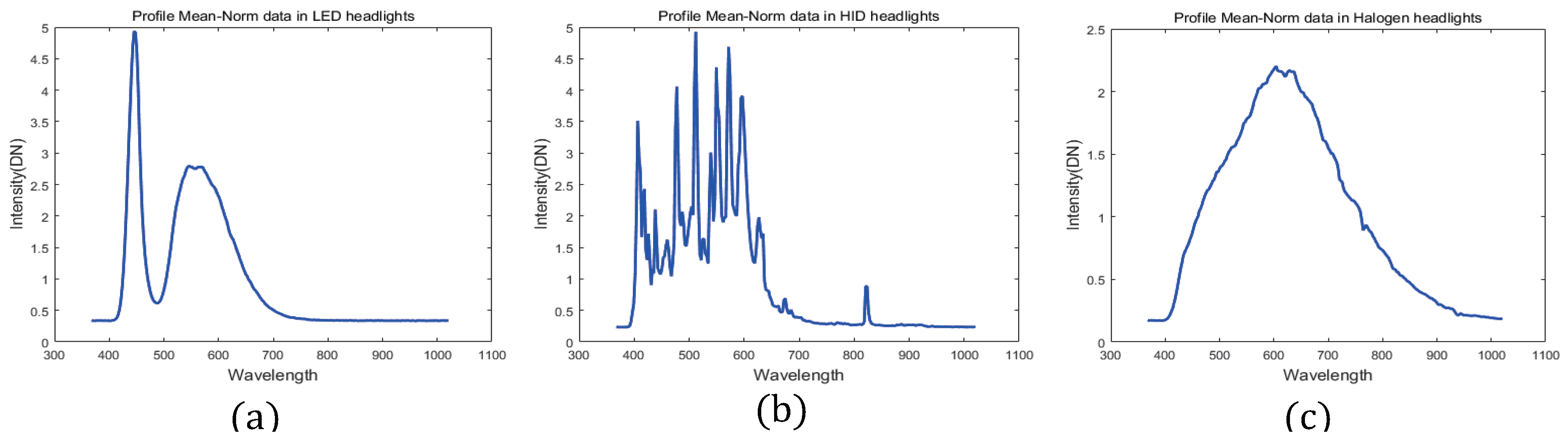

3.1. Preprocessing Spectral Profile (Normalized Spectral Profile)
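
A minimal Python sketch of one common form of spectral mean normalization follows. It assumes that each pixel spectrum is divided by its own band-averaged intensity; the paper's exact definition is not reproduced here. Dividing by the mean removes a global brightness scale, so a dim and a bright view of the same lamp map to the same normalized profile.

```python
from typing import List, Sequence


def mean_normalize(spectrum: Sequence[float]) -> List[float]:
    """Divide a pixel spectrum by its mean over all spectral bands.

    Assumption: "mean normalization" is taken to mean division by the
    band-averaged intensity; other normalizations (e.g. unit L2 norm) exist.
    """
    if not spectrum:
        raise ValueError("spectrum must contain at least one band")
    mean = sum(spectrum) / len(spectrum)
    if mean == 0:
        raise ValueError("spectrum has zero mean and cannot be normalized")
    return [value / mean for value in spectrum]


# A dim and a bright measurement that differ only by a scale factor of 4.
dim = [10.0, 20.0, 30.0]
bright = [40.0, 80.0, 120.0]
print(mean_normalize(dim))     # [0.5, 1.0, 1.5]
print(mean_normalize(bright))  # [0.5, 1.0, 1.5]
```

The normalized profile keeps the spectral shape (the ratio between bands) while discarding overall intensity, which is what a shape-based comparison of lamp spectra needs.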

3.2. Headlight Detection Processing

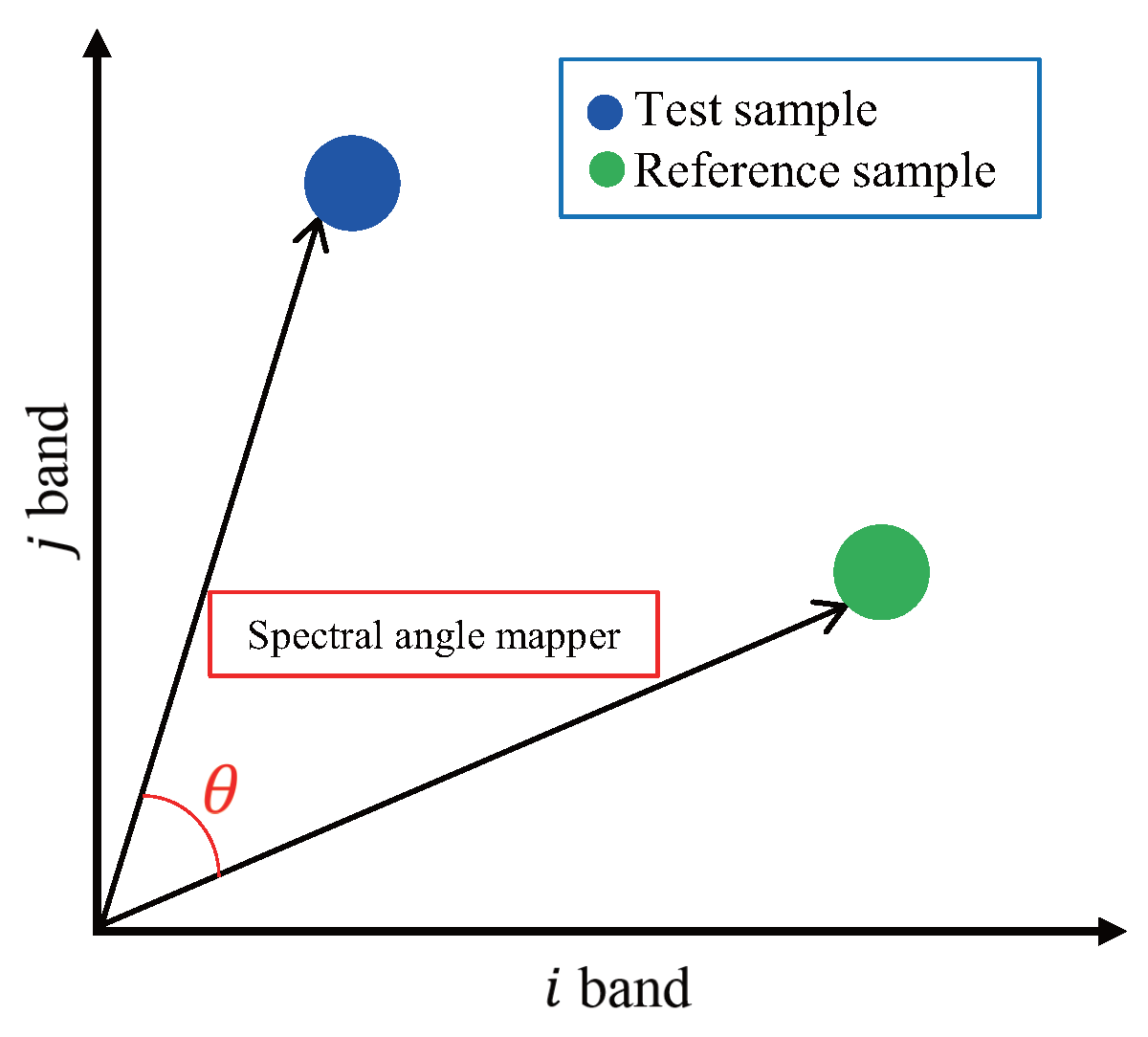

3.2.1. Spectral Angle Mapper (SAM)
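
The Spectral Angle Mapper treats two spectra as vectors and measures the angle between them, θ = arccos(t·r / (‖t‖ ‖r‖)); a small angle means a similar spectral shape, regardless of a positive brightness scale. The Python sketch below is a generic implementation of that standard formula, not the authors' code; the `is_headlight_pixel` helper and its `threshold` are hypothetical.

```python
import math
from typing import Sequence


def spectral_angle(target: Sequence[float], reference: Sequence[float]) -> float:
    """Return the spectral angle (radians) between a pixel spectrum and a reference."""
    if len(target) != len(reference):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(t * r for t, r in zip(target, reference))
    norm_t = math.sqrt(sum(t * t for t in target))
    norm_r = math.sqrt(sum(r * r for r in reference))
    if norm_t == 0 or norm_r == 0:
        raise ValueError("spectra must be non-zero vectors")
    # Clamp to [-1, 1] so floating-point round-off cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_t * norm_r)))
    return math.acos(cos_theta)


def is_headlight_pixel(target: Sequence[float], reference: Sequence[float],
                       threshold: float) -> bool:
    """Hypothetical decision rule: accept the pixel if its angle is below threshold."""
    return spectral_angle(target, reference) < threshold


reference = [1.0, 2.0, 3.0]
print(spectral_angle([2.0, 4.0, 6.0], reference))  # ~0: same shape, twice as bright
print(spectral_angle([3.0, 2.0, 1.0], reference))  # clearly larger: different shape
```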

3.2.2. Spectral Correlation Mapper (SCM)
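
The Spectral Correlation Mapper is usually described as a variant of SAM that first subtracts each spectrum's mean, which makes it the Pearson correlation coefficient R between the two spectra; some authors convert R to an angle as arccos((R + 1) / 2). The Python sketch below follows that common formulation, assuming it matches the one used here. Unlike SAM, the result is unchanged by a constant additive offset as well as by positive scaling.

```python
import math
from typing import Sequence


def spectral_correlation(target: Sequence[float], reference: Sequence[float]) -> float:
    """Pearson correlation between two spectra (the core of the SCM)."""
    if len(target) != len(reference) or len(target) < 2:
        raise ValueError("spectra need the same number of bands (at least 2)")
    n = len(target)
    mean_t = sum(target) / n
    mean_r = sum(reference) / n
    # Mean-centering is what distinguishes SCM from SAM.
    dt = [t - mean_t for t in target]
    dr = [r - mean_r for r in reference]
    numerator = sum(a * b for a, b in zip(dt, dr))
    denominator = math.sqrt(sum(a * a for a in dt) * sum(b * b for b in dr))
    if denominator == 0:
        raise ValueError("a spectrum with zero variance has undefined correlation")
    return numerator / denominator


def scm_angle(target: Sequence[float], reference: Sequence[float]) -> float:
    """Map R in [-1, 1] to an angle in [0, pi/2]; 0 means identical shape."""
    r = max(-1.0, min(1.0, spectral_correlation(target, reference)))
    return math.acos((r + 1.0) / 2.0)


reference = [1.0, 2.0, 3.0]
offset = [11.0, 12.0, 13.0]  # same shape shifted by a constant
print(scm_angle(offset, reference))  # 0.0
```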

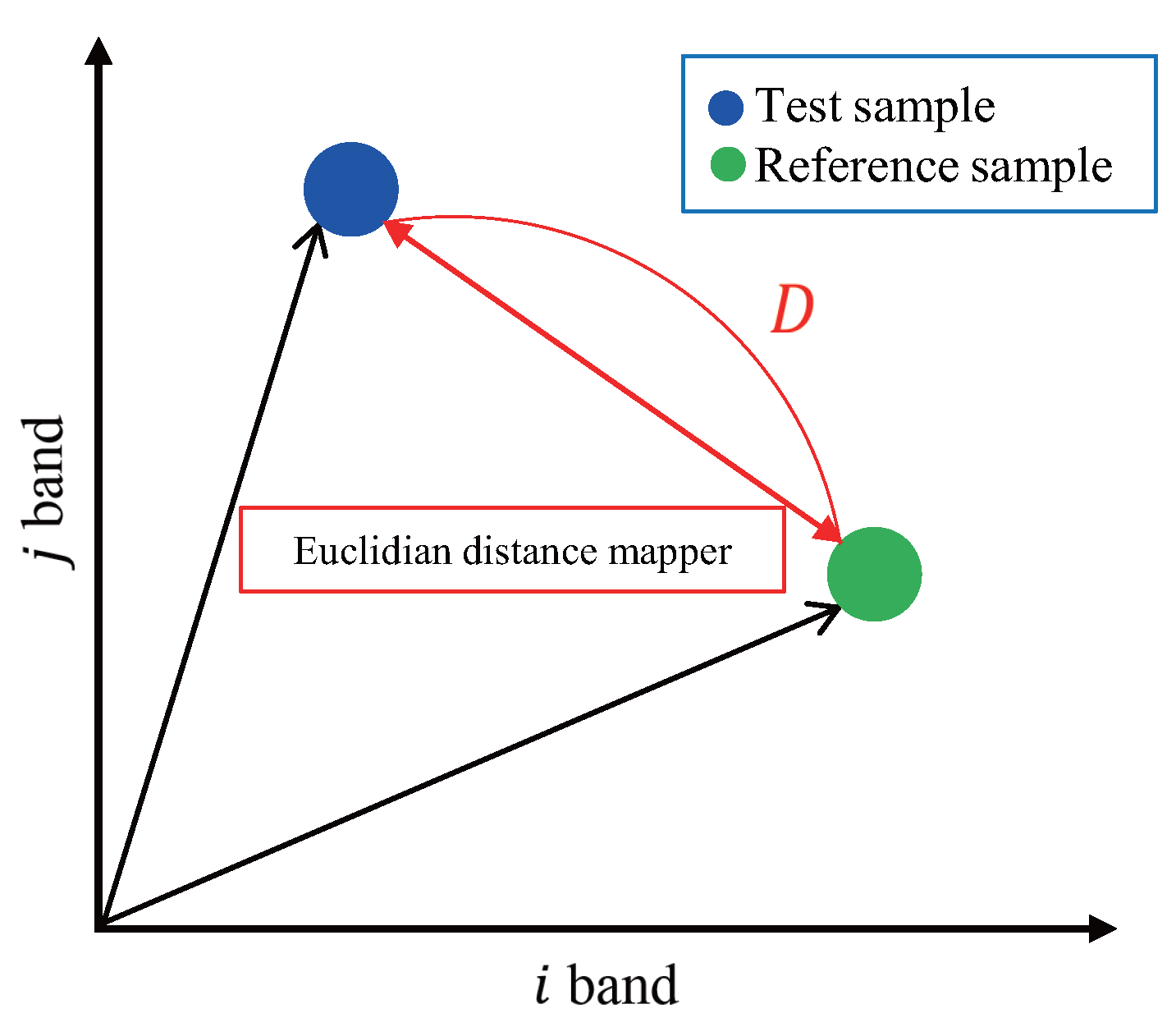

3.2.3. Euclidean Distance Mapper (EDM)
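
The Euclidean Distance Mapper compares spectra by the straight-line distance d = √Σ(tᵢ − rᵢ)² across bands. Because this distance grows with overall brightness, it reacts to a pure scale difference that SAM ignores. The Python sketch below is a generic implementation, not the authors' code; it shows the effect and how computing the distance on mean-normalized spectra (division by the band mean is an assumed normalization) removes a pure scale difference.

```python
import math
from typing import List, Sequence


def euclidean_distance(target: Sequence[float], reference: Sequence[float]) -> float:
    """Euclidean distance between two spectra with the same number of bands."""
    if len(target) != len(reference):
        raise ValueError("spectra must have the same number of bands")
    return math.sqrt(sum((t - r) ** 2 for t, r in zip(target, reference)))


def mean_normalize(spectrum: Sequence[float]) -> List[float]:
    """Assumed normalization: divide each band by the band-averaged intensity."""
    mean = sum(spectrum) / len(spectrum)
    return [value / mean for value in spectrum]


dim = [10.0, 20.0, 30.0]
bright = [40.0, 80.0, 120.0]
print(euclidean_distance(dim, bright))  # large: the raw distance reflects brightness
print(euclidean_distance(mean_normalize(dim), mean_normalize(bright)))  # 0.0
```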

4. Experimental Results

4.1. Hyperspectral Image Acquisition System

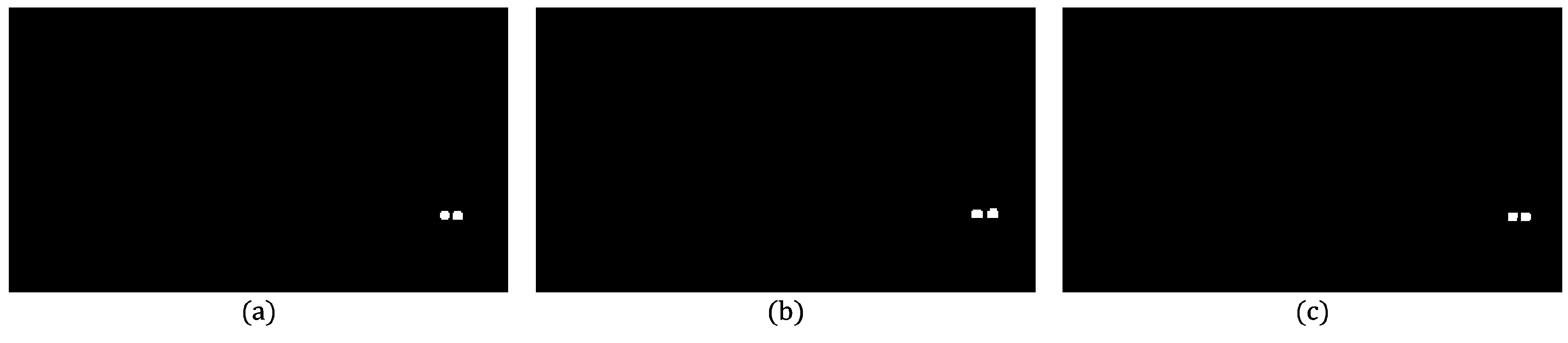

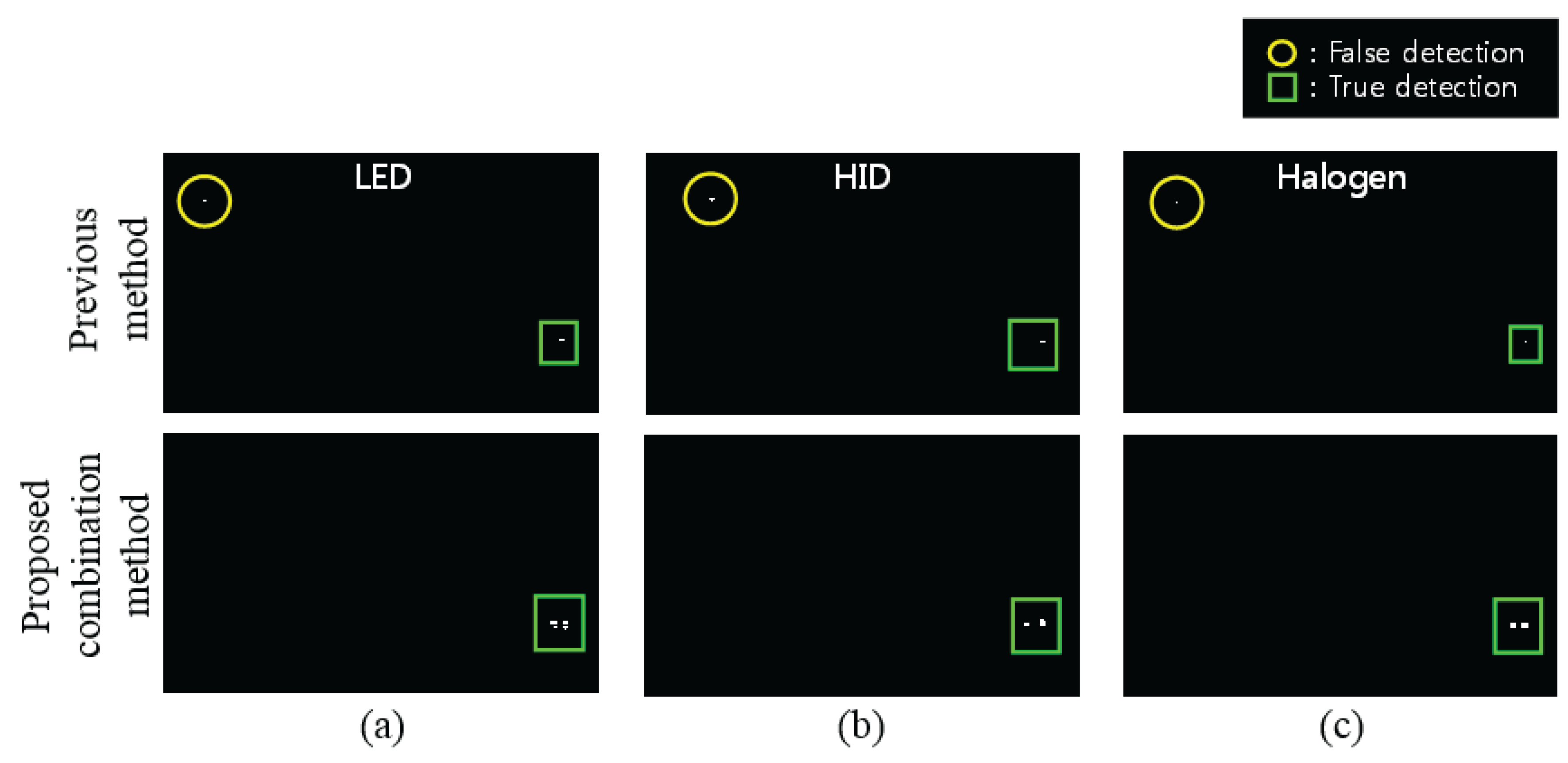

4.2. First Experiment: Detection of Three Types of Lamps

4.2.1. Performance Evaluation Using ROC and AUC in the First Experiment
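
A receiver operating characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR) as a decision threshold sweeps over the detector scores; the area under the curve (AUC) summarizes it in one number. The Python sketch below is a generic, dependency-free way to compute both from per-pixel scores and ground-truth labels. It assumes larger scores mean "more headlight-like", so distance-type outputs such as a SAM angle or an EDM distance are negated first.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[bool]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points from sweeping a threshold from high to low.

    A pixel is called positive (headlight) when its score >= threshold.
    """
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative sample")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample with this score, so ties move together.
        if i == len(ranked) - 1 or ranked[i + 1][0] != score:
            points.append((fp / negatives, tp / positives))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Negated distances: a smaller distance to the reference gives a higher score.
distances = [0.05, 0.20, 0.10, 0.40]
is_headlight = [True, False, True, False]
points = roc_points([-d for d in distances], is_headlight)
print(auc(points))  # 1.0: every headlight pixel is closer than every background pixel
```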

4.2.2. Detection Performance Given the Same False Positive Rate (FPR)
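
Comparing detectors at the same FPR means fixing an allowable false-alarm rate and reading off the TPR that each method reaches at that operating point. The Python sketch below assumes, as above, that higher scores mean "more headlight-like"; the function name and the `max_fpr` parameter are illustrative, not taken from the paper.

```python
import math
from typing import Sequence, Tuple


def tpr_at_fixed_fpr(scores: Sequence[float], labels: Sequence[bool],
                     max_fpr: float) -> Tuple[float, float]:
    """Return (threshold, TPR) for the lowest threshold whose FPR <= max_fpr.

    Pixels with score >= threshold are declared headlights. If the FPR budget
    is exceeded even at the highest threshold, (inf, 0.0) is returned,
    i.e. nothing is detected.
    """
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative sample")
    best_threshold, best_tpr = math.inf, 0.0
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if y and s >= threshold)
        fp = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
        if fp / negatives > max_fpr:
            break  # lowering the threshold further can only add false positives
        best_threshold, best_tpr = threshold, tp / positives
    return best_threshold, best_tpr


scores = [0.9, 0.8, 0.7, 0.6]
labels = [True, False, True, False]
print(tpr_at_fixed_fpr(scores, labels, max_fpr=0.0))  # (0.9, 0.5)
print(tpr_at_fixed_fpr(scores, labels, max_fpr=0.5))  # (0.7, 1.0)
```

Because both FPR and TPR can only grow as the threshold drops, the last threshold that stays within the budget also gives the highest attainable TPR.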

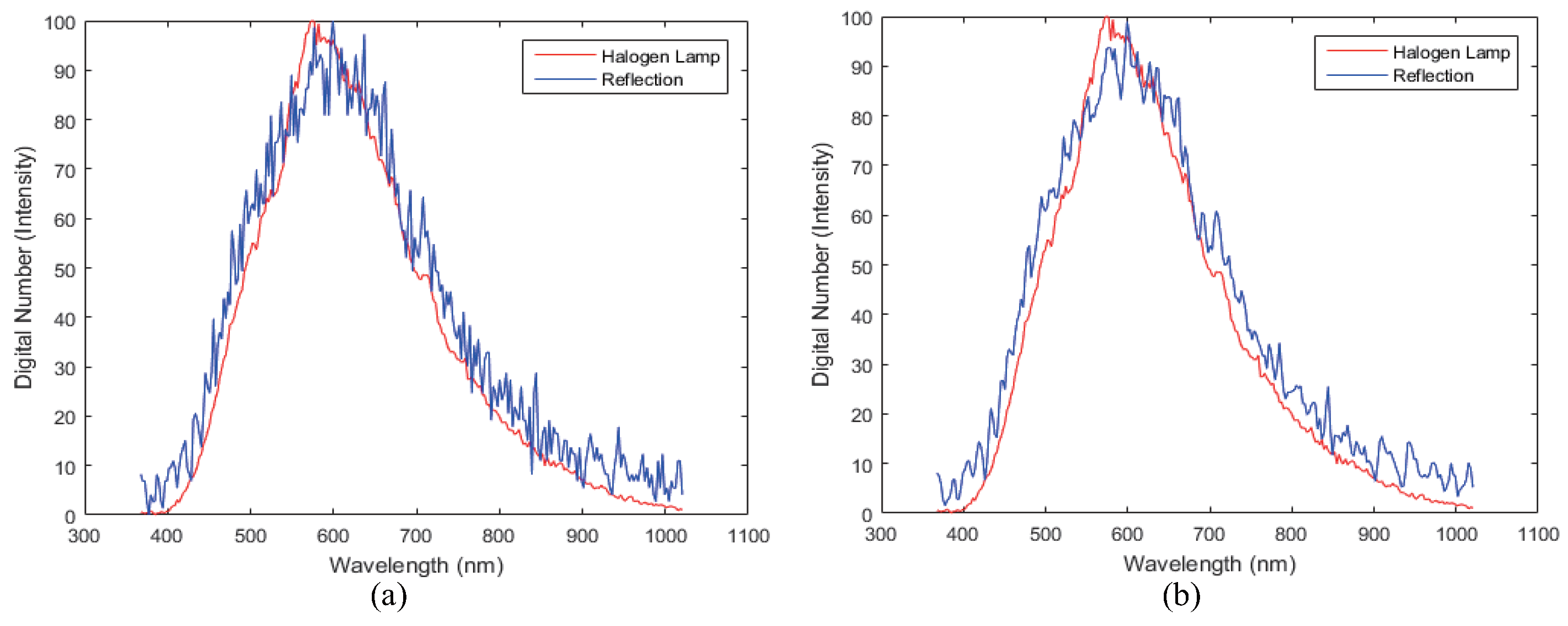

4.3. Second Experiment: Distinguishing Headlights from Ego-Car Reflections

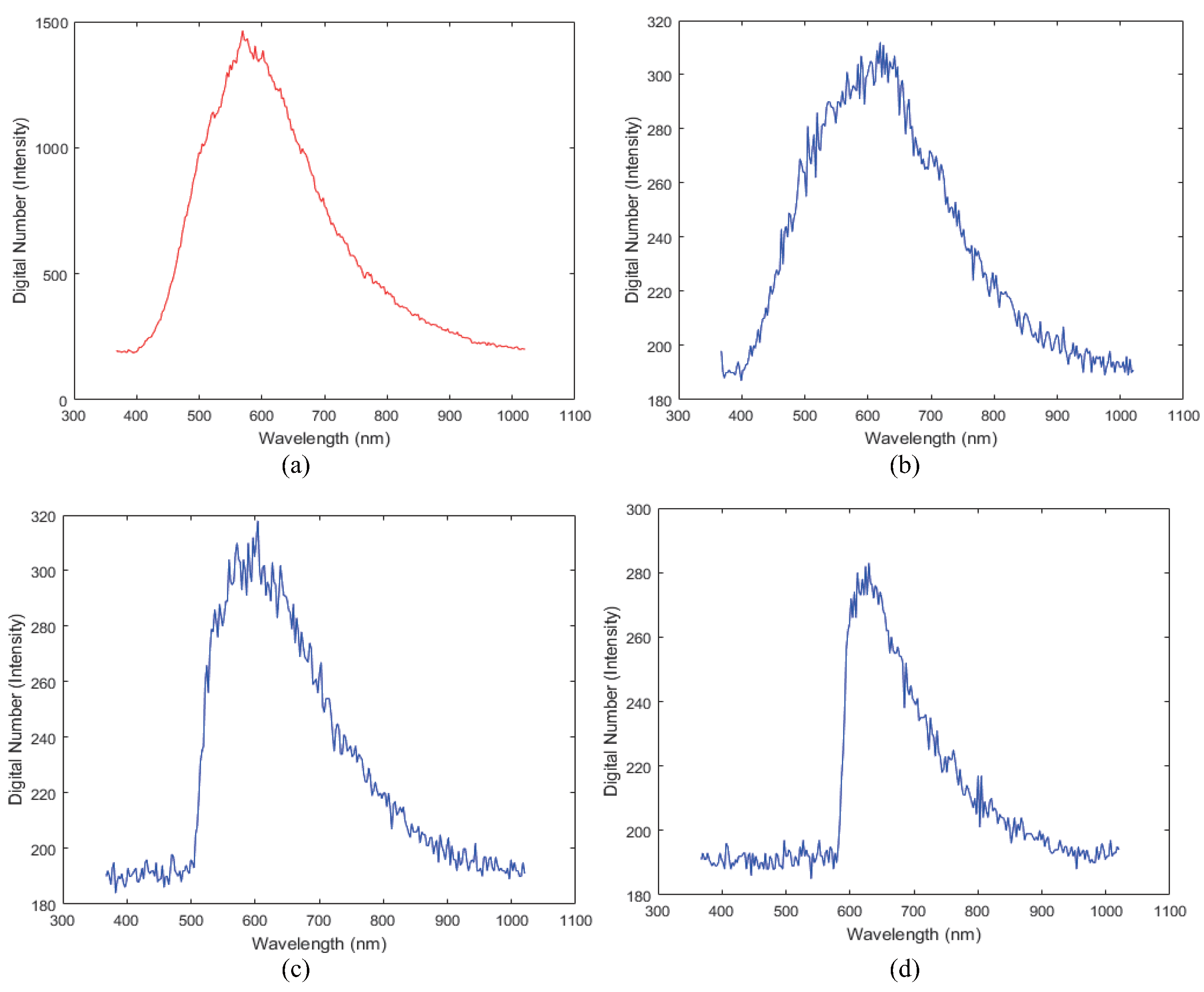

4.3.1. Comparison of the Spectral Information between a Halogen Lamp and the Reflection of a Halogen Lamp

4.3.2. Headlight Detection Results in the Presence of Ego-Car Reflections

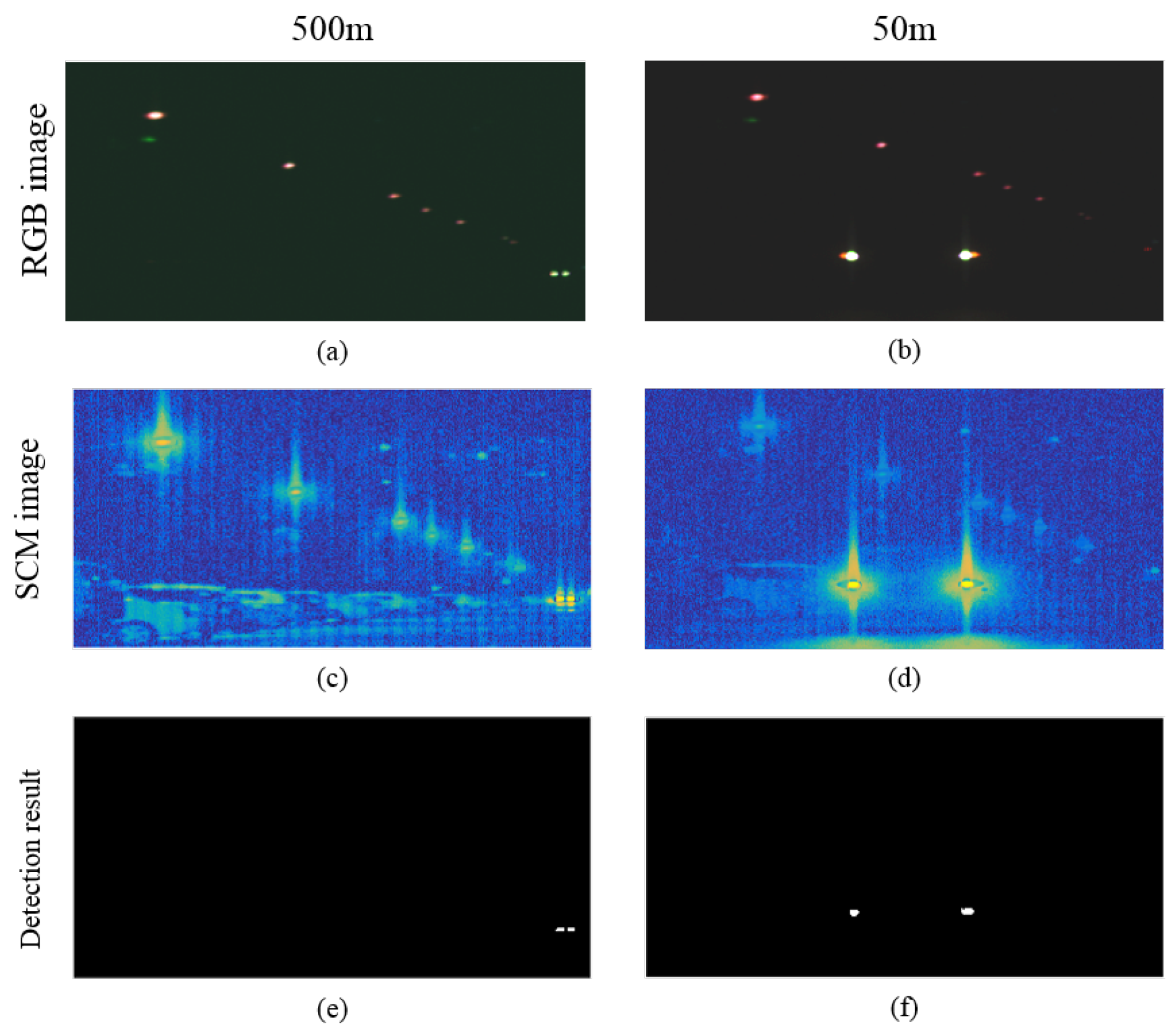

4.4. Third Experiment: Comparison of Headlight Detection at Near and Far Distances

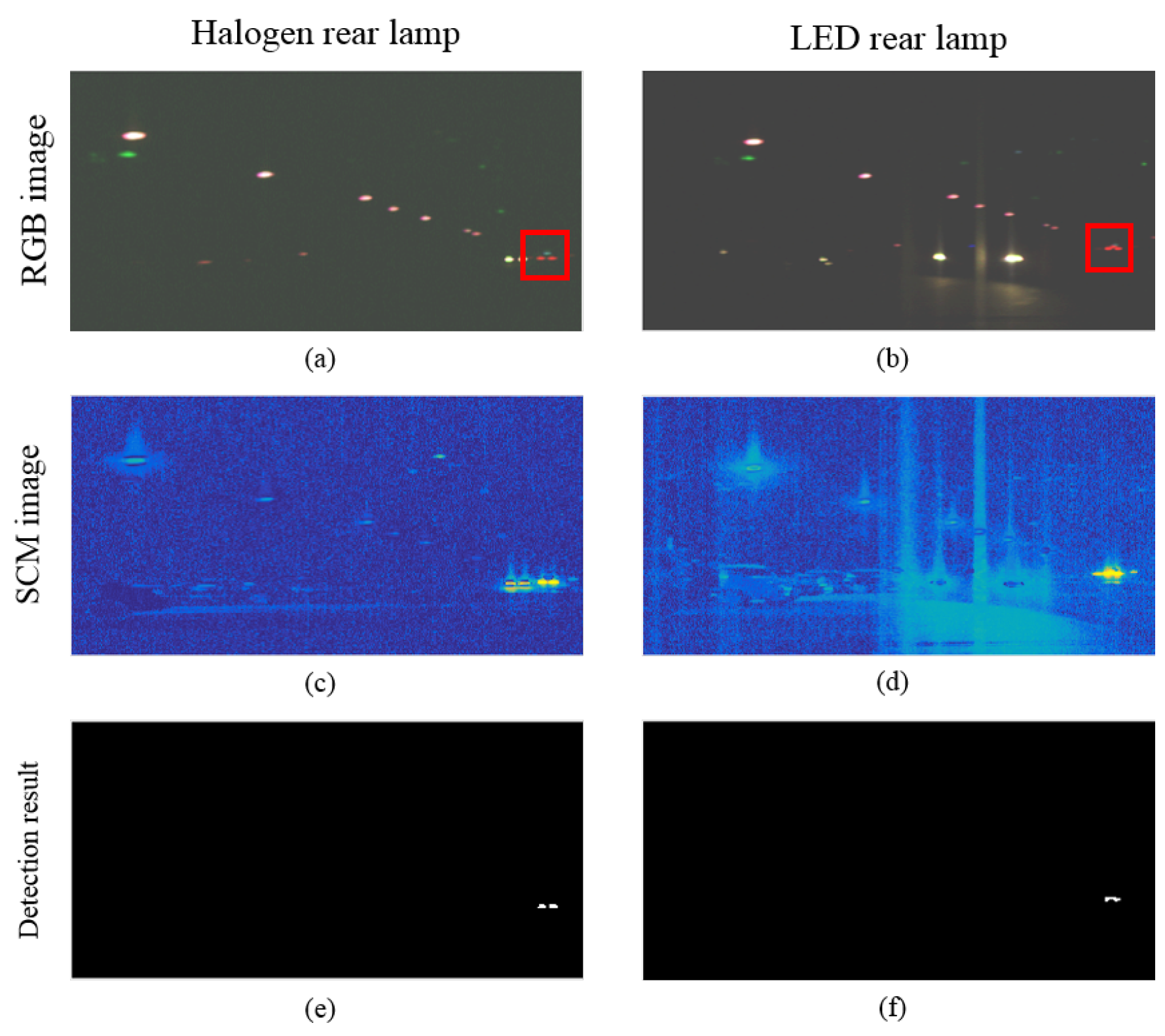

4.5. Fourth Experiment: Detection of Rear Lamps and Their Distinction from Other Lamps

4.5.1. Comparison of the Spectral Information of Rear Lamps (Halogen and LED)

4.5.2. Rear Lamp Detection Results in the Presence of Other Lights

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Item | Specifications |
|---|---|
| Spectral range | 400–1000 nm (VNIR) |
| Spectrograph | ImSpector V10E, 30 μm slit, 2.8 nm spectral resolution |
| Camera | Kappa, 1392 × 1040 pixels, 12 bits, 11 fps, FireWire interface |
| Scanner | Rotational tripod, maximum scan angle 160° |

| Headlight Type | Detection Method | Raw | Mean-Normalized |
|---|---|---|---|
| LED (Otsu = 0.9964) | SAM | 0.9949 | 0.9857 |
| | SCM | 0.9992 | 0.9992 |
| | EDM | 0.9970 | 0.9858 |
| HID (Otsu = 0.9937) | SAM | 0.9980 | 0.9981 |
| | SCM | 0.9965 | 0.9965 |
| | EDM | 0.9970 | 0.9980 |
| Halogen (Otsu = 0.9800) | SAM | 0.9366 | 0.9351 |
| | SCM | 0.9656 | 0.9654 |
| | EDM | 0.9936 | 0.9334 |
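
The table reports an Otsu value next to each lamp type, presumably as a thresholding baseline for the spectral detectors. Otsu's method picks the gray-level threshold that maximizes the between-class variance of an intensity histogram. The Python sketch below is a generic implementation for integer intensities; applying it to a single-band or gray-scale intensity image is an assumption about how the baseline was set up, not something the table states.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the threshold t that maximizes the between-class variance.

    Pixels with value > t are treated as foreground (bright lamp blobs).
    Values must be integers in [0, levels).
    """
    if not pixels:
        raise ValueError("image must contain at least one pixel")
    histogram = [0] * levels
    for p in pixels:
        histogram[p] += 1
    total = len(pixels)
    total_sum = sum(level * count for level, count in enumerate(histogram))
    weight_bg = 0
    sum_bg = 0
    best_t, best_variance = 0, -1.0
    for t in range(levels):
        weight_bg += histogram[t]
        sum_bg += t * histogram[t]
        weight_fg = total - weight_bg
        if weight_bg == 0:
            continue
        if weight_fg == 0:
            break
        mean_bg = sum_bg / weight_bg
        mean_fg = (total_sum - sum_bg) / weight_fg
        # Unnormalized between-class variance: w_bg * w_fg * (mu_bg - mu_fg)^2.
        variance = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if variance > best_variance:
            best_t, best_variance = t, variance
    return best_t


dark_background = [10] * 5
bright_lamp = [200] * 5
t = otsu_threshold(dark_background + bright_lamp)
print([p > t for p in dark_background + bright_lamp])
```

Unlike SAM, SCM, and EDM, this baseline uses only intensity, so it cannot by itself separate lamps that are equally bright but spectrally different.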

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, H.; Kwon, S.; Kim, S. Hyperspectral Image-Based Night-Time Vehicle Light Detection Using Spectral Normalization and Distance Mapper for Intelligent Headlight Control. Sensors 2016, 16, 1058. https://doi.org/10.3390/s16071058
