Automatic Recognition of Aggressive Behavior in Pigs Using a Kinect Depth Sensor

Abstract

1. Introduction

2. Aggressive Behavior Recognition of Pigs Using a Kinect Depth Sensor

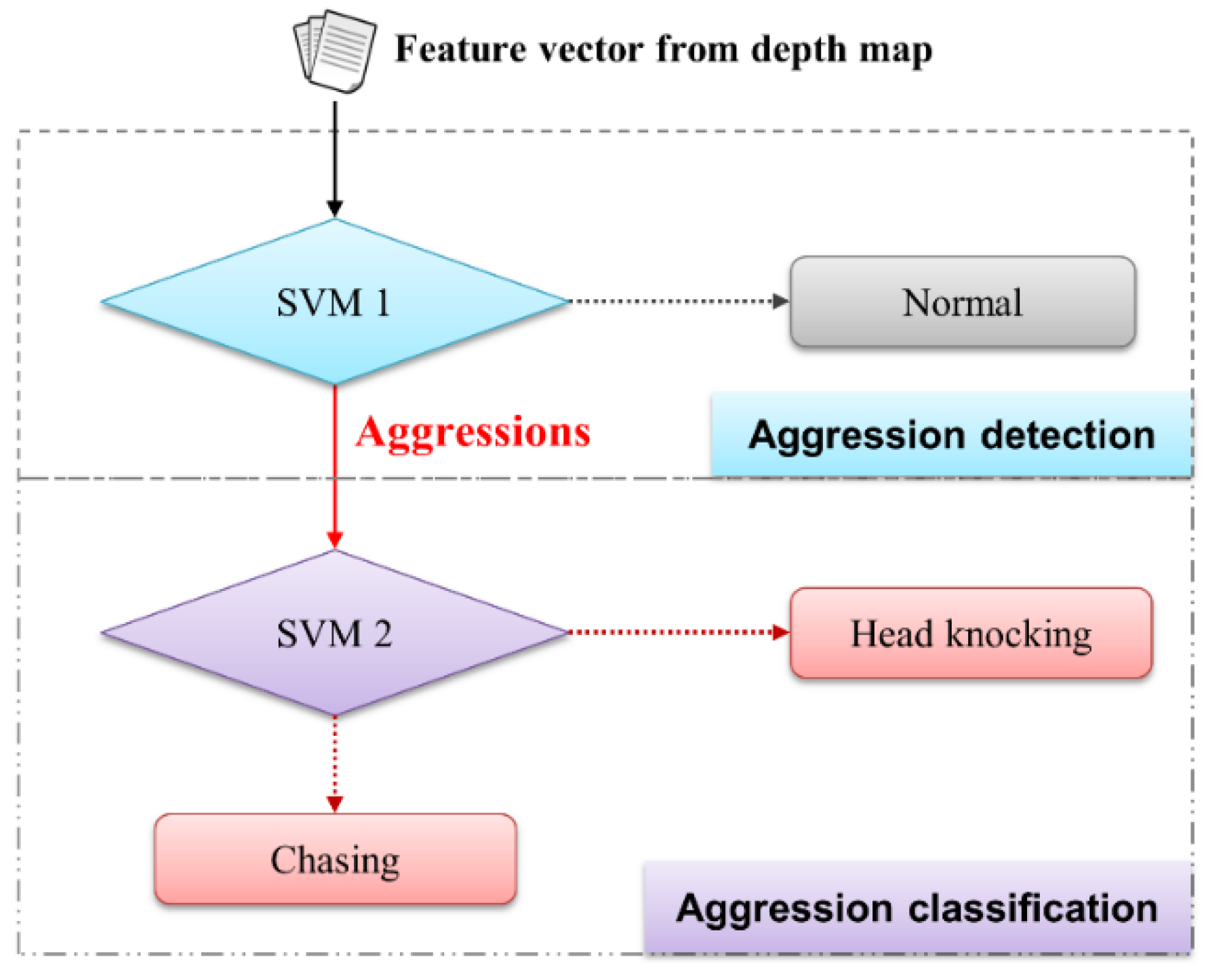

2.1. The Proposed Pig Aggression Recognition System

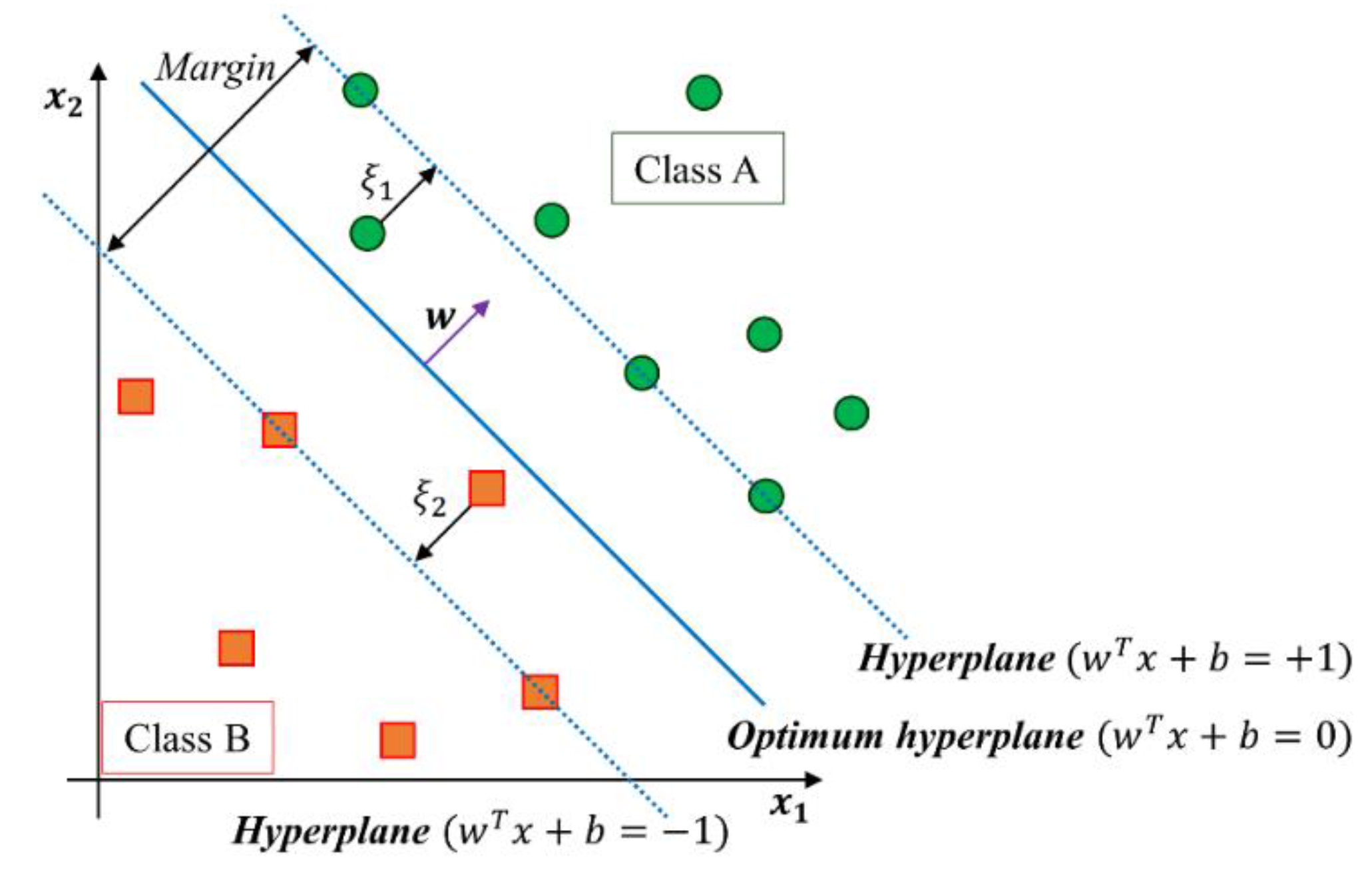

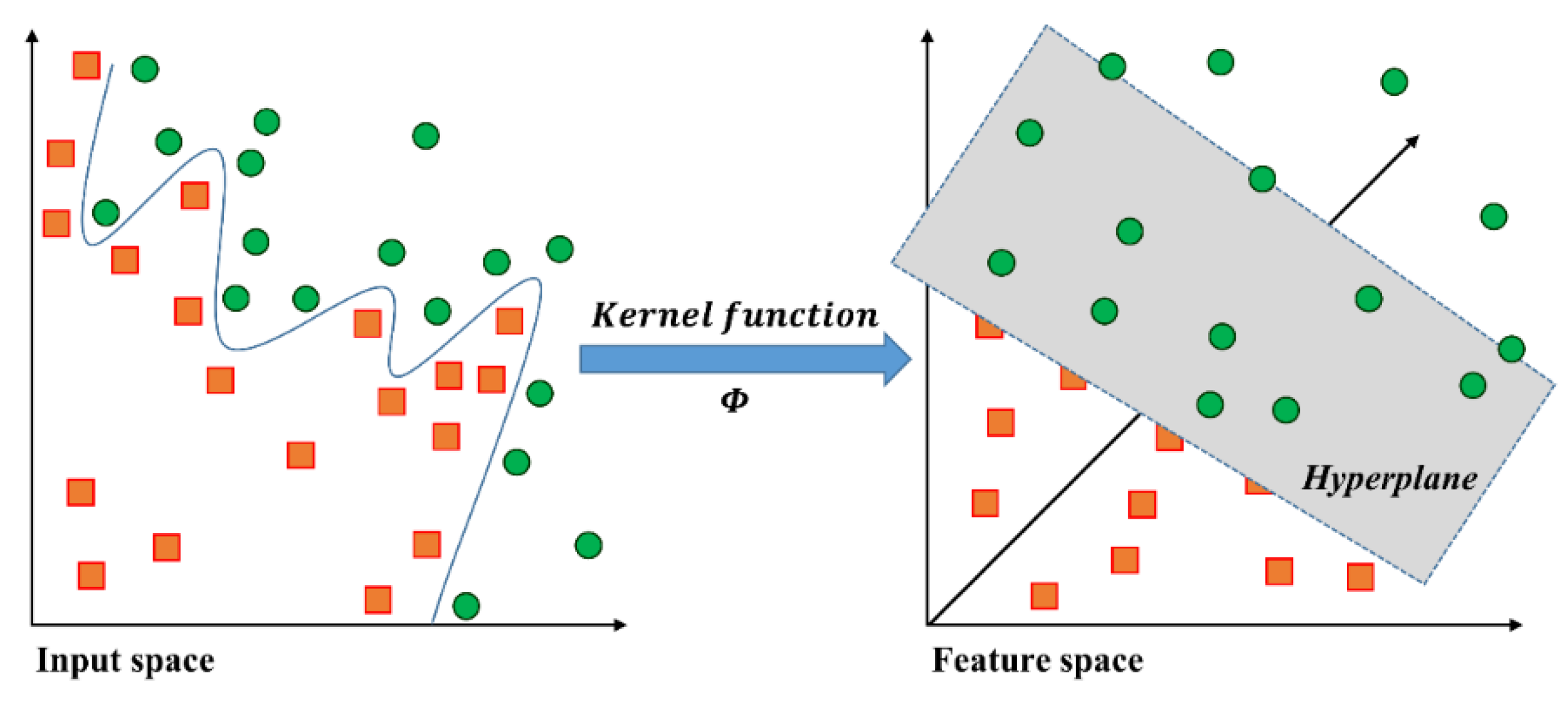

2.2. Binary Classifier Support Vector Machine (SVM)
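
The result tables refer to two binary SVMs. SVM 1 separates aggressive from normal episodes, and SVM 2 separates head-to-head (or body) knocking from chasing. The sketch below is a minimal, hypothetical version of that two-stage cascade. It assumes a linear kernel trained with the Pegasos subgradient method on the hinge loss, chosen only because it needs nothing beyond the Python standard library. The kernel, solver and parameters used by the authors are not given in this section. The names `train_linear_svm`, `predict` and `recognize`, and the 2-D toy points, are illustrative assumptions.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Linear soft-margin SVM trained with the Pegasos subgradient method.

    X: list of feature vectors; y: labels in {-1, +1}.
    A constant 1.0 is appended to every vector, so the last weight acts
    as a (regularized) bias. Returns the augmented weight vector.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size 1 / (lambda * t)
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            # Gradient of the L2 regularizer: shrink every weight.
            w = [(1.0 - eta * lam) * wi for wi in w]
            # Hinge-loss subgradient: applied only when the margin is below 1.
            if margin < 1.0:
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Return +1 or -1 for one (non-augmented) feature vector."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


def recognize(w_detect, w_type, x):
    """Two-stage cascade: the first SVM flags aggression, the second names its type."""
    if predict(w_detect, x) < 0:
        return "normal"
    return "head knocking" if predict(w_type, x) > 0 else "chasing"


# Synthetic, linearly separable 2-D points (hypothetical, not the paper's data).
normal = [(-2.0, 0.2), (-3.0, -0.2)]
aggressive = [(2.0, 0.2), (3.0, -0.2)]
head_knock = [(1.0, 2.0), (1.5, 2.5)]
chasing = [(1.0, -2.0), (1.5, -2.5)]

w1 = train_linear_svm(aggressive + normal, [1, 1, -1, -1])   # plays the role of SVM 1
w2 = train_linear_svm(head_knock + chasing, [1, 1, -1, -1])  # plays the role of SVM 2
print(recognize(w1, w2, (1.2, 2.2)))   # head knocking
print(recognize(w1, w2, (-2.5, 0.0)))  # normal
```

Appending a constant 1 to each vector lets the bias be learned like any other weight, which keeps the update rule to two lines. A real system would more likely use a kernel SVM library and tune `lam` by cross-validation.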

3. Results

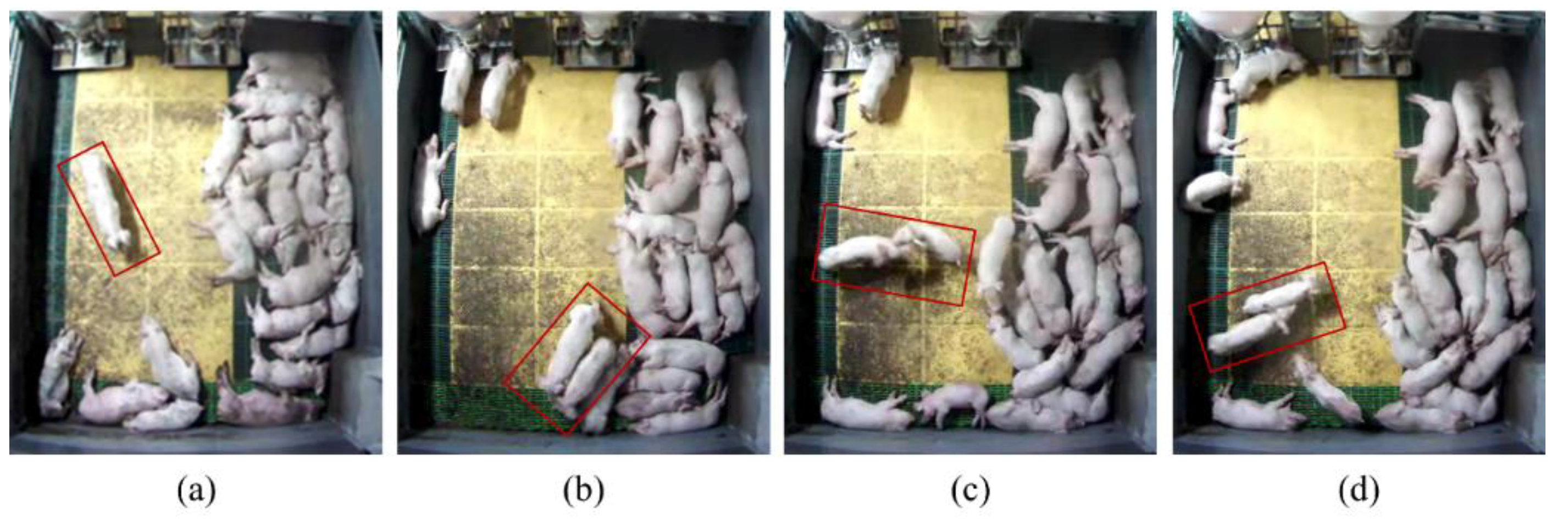

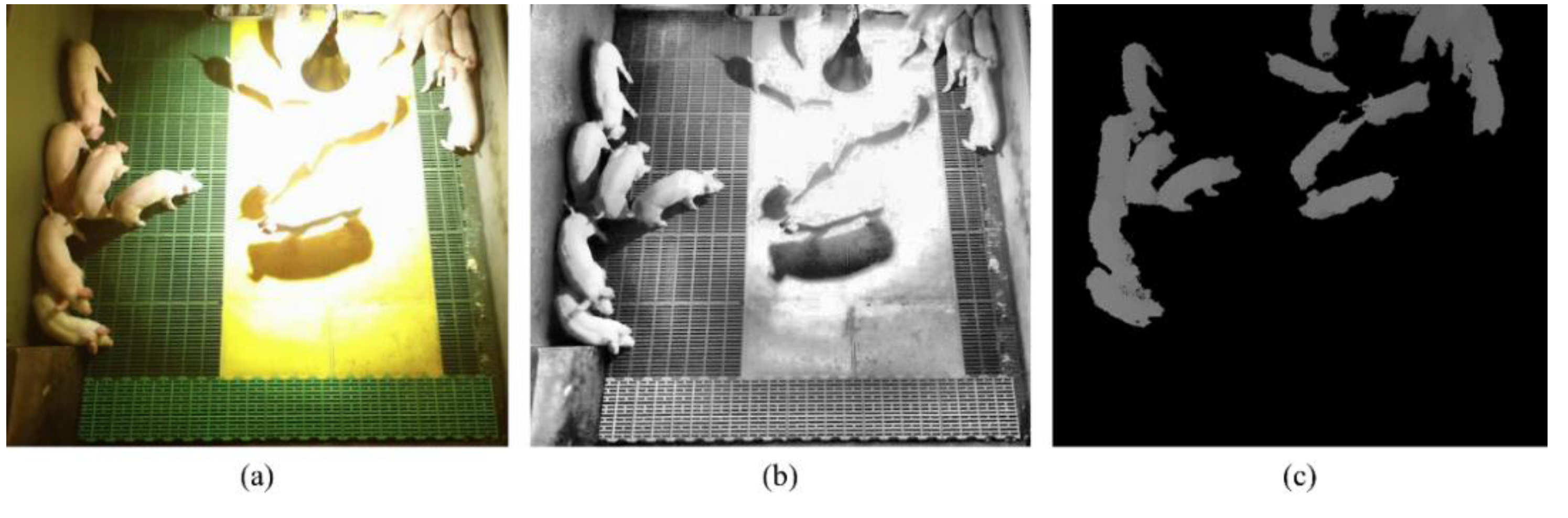

3.1. Data Collection and Data Sets

3.2. The Pig Aggression Detection and Classification Results

3.3. Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Chung, Y.; Oh, S.; Lee, J.; Park, D.; Chang, H.; Kim, S. Automatic detection and recognition of pig wasting diseases using sound data in audio surveillance systems. Sensors 2013, 13, 12929–12942.

2. Chung, Y.; Lee, J.; Oh, S.; Park, D.; Chang, H.; Kim, S. Automatic detection of cow’s oestrus in audio surveillance system. Asian Austr. J. Anim. Sci. 2013, 26, 1030–1037.

3. Lee, J.; Noh, B.; Jang, S.; Park, D.; Chung, Y.; Chang, H.-H. Stress detection and classification of laying hens by sound analysis. Asian Austr. J. Anim. Sci. 2015, 28, 592–598.

4. Mendes, L.B.; Ogink, N.W.; Edouard, N.; Van Dooren, H.J.C.; Tinôco, I.D.F.F.; Mosquera, J. NDIR gas sensor for spatial monitoring of carbon dioxide concentrations in naturally ventilated livestock buildings. Sensors 2015, 15, 11239–11257.

5. Lee, J.; Zuo, S.; Chung, Y.; Park, D.; Chang, H.-H.; Kim, S. Formant-based acoustic features for cow’s estrus detection in audio surveillance system. In Proceedings of the 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS 2014), Seoul, Korea, 26–29 August 2014; pp. 236–240.

6. Ahmed, S.T.; Mun, H.S.; Islam, M.M.; Yoe, H.; Yang, C.J. Monitoring activity for recognition of illness in experimentally infected weaned piglets using received signal strength indication ZigBee-based wireless acceleration sensor. Asian Austr. J. Anim. Sci. 2016, 29, 149–156.

7. Camerlink, I.; Turner, S.P.; Ursinus, W.W.; Reimert, I.; Bolhuis, J.E. Aggression and affiliation during social conflict in pigs. PLoS ONE 2014, 9, e113502.

8. Mendl, M.; Zanella, A.J.; Broom, D.M. Physiological and reproductive correlates of behavioural strategies in female domestic pigs. Anim. Behav. 1992, 44, 1107–1121.

9. Forde, J.N.M. Piglet- and stockperson-directed sow aggression after farrowing and the relationship with a pre-farrowing, human approach test. Appl. Anim. Behav. Sci. 2002, 75, 115–132.

10. D’Eath, R.B.; Turner, S.P. The natural behaviour of the pig. In The Welfare of Pigs; Springer: Dordrecht, The Netherlands, 2009; pp. 13–45.

11. Poletto, R.; Kretzer, F.C.; Hötzel, M.J. Minimizing aggression during mixing of gestating sows with supplementation of a tryptophan-enriched diet. Physiol. Behav. 2014, 132, 36–43.

12. McGlone, J.J.; Kelley, K.W.; Gaskins, C.T. Lithium and porcine aggression. J. Anim. Sci. 1980, 51, 447–455.

13. Stookey, J.M.; Gonyou, H.W. The effects of regrouping on behavioral and production parameters in finishing swine. J. Anim. Sci. 1994, 72, 2804–2811.

14. Faucitano, L. Causes of skin damage to pig carcasses. Can. J. Anim. Sci. 2001, 81, 39–45.

15. Bracke, M.B.M.; Spruijt, B.M.; Metz, J.H.M.; Schouten, W.G.P. Decision support system for overall welfare assessment in pregnant sows A: Model structure and weighting procedure. J. Anim. Sci. 2002, 80, 1819–1834.

16. Viazzi, S.; Ismayilova, G.; Oczak, M.; Sonoda, L.T.; Fels, M.; Guarino, M.; Vranken, E.; Hartung, J.; Bahr, C.; Berckmans, D. Image feature extraction for classification of aggressive interactions among pigs. Comput. Electron. Agric. 2014, 104, 57–62.

17. Oczak, M.; Viazzi, S.; Ismayilova, G.; Sonoda, L.T.; Roulston, N.; Fels, M.; Bahr, C.; Hartung, J.; Guarino, M.; Berckmans, D.; Vranken, E. Classification of aggressive behaviour in pigs by activity index and multilayer feed forward neural network. Biosyst. Eng. 2014, 119, 89–97.

18. Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

19. Kim, H.; Lee, S.; Lee, D.; Choi, S.; Ju, J.; Myung, H. Real-time human pose estimation and gesture recognition from depth images using superpixels and SVM classifier. Sensors 2015, 15, 12410–12427.

20. Lee, J.; Choi, H.; Park, D.; Chung, Y.; Kim, H.-Y.; Yoon, S. Fault detection and diagnosis of railway point machines by sound analysis. Sensors 2016, 16, 549.

21. Kavzoglu, T.; Colkesen, I. A kernel functions analysis for support vector machines for land cover classification. Int. J. Appl. Earth Obs. Geoinf. 2009, 11, 352–359.

22. Zuo, S.; Jin, L.; Chung, Y.; Park, D. An index algorithm for tracking pigs in pigsty. WIT Trans. Eng. Sci. Southampt. 2014, 93, 797–803.

23. Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I. The WEKA data mining software: An update. SIGKDD Explor. 2009, 11, 10–18.

24. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann: San Francisco, CA, USA, 2012.

25. Theodoridis, S.; Koutroumbas, K. Pattern Recognition, 4th ed.; Academic Press: Kidlington, UK, 2009.

26. Jin, L. Automatic Detection of Abnormal Behavior in Video Monitoring System. Master’s Thesis, Department of Computer and Information Science, Korea University, Sejong, Korea, February 2015.

27. Dalmau, A.; Temple, D.; Rodriguez, P.; Llonch, P.; Velarde, A. Application of the Welfare Quality® protocol at pig slaughterhouses. Anim. Welf. 2009, 18, 497–505.

28. Ni, B.; Dat, N.C.; Moulin, P. RGBD-camera based get-up event detection for hospital fall prevention. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2012), Kyoto, Japan, 25–30 March 2012; pp. 1405–1408.

29. Ni, B.; Wang, G.; Moulin, P. RGBD-HuDaAct: A color-depth video database for human daily activity recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCV 2011), Barcelona, Spain, 6–13 November 2011; pp. 193–208.

30. Xia, L.; Aggarwal, J.K. Spatio-temporal depth cuboid similarity feature for activity recognition using depth camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR 2013), Portland, OR, USA, 23–28 June 2013; pp. 2834–2841.

31. Antonakaki, P.; Kosmopoulos, D.; Perantonis, S.J. Detecting abnormal human behaviour using multiple cameras. Signal Process. 2009, 89, 1723–1738.

32. Datta, A.; Shah, M.; Lobo, N.D.V. Person-on-person violence detection in video data. In Proceedings of the 16th International Conference on Pattern Recognition (ICPR 2002), Quebec, QC, Canada, 11–15 August 2002; pp. 433–438.

33. Shen, Y.; Hao, Z.; Wang, P.; Ma, S.; Liu, W. A novel human detection approach based on depth map via Kinect. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR 2013), Portland, OR, USA, 23–28 June 2013; pp. 535–541.

34. Xia, L.; Chen, C.C.; Aggarwal, J.K. Human detection using depth information by Kinect. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR 2011), Colorado Springs, CO, USA, 20–25 June 2011; pp. 15–22.

35. Zuiderveld, K. Contrast limited adaptive histogram equalization. In Graphics Gems IV; Academic Press: Cambridge, MA, USA, 1994; pp. 474–485.

36. Han, J.; Shao, L.; Xu, D.; Shotton, J. Enhanced computer vision with Microsoft Kinect sensor: A review. IEEE Trans. Cybern. 2013, 43, 1318–1334.

| Aggressive Type | Behavior Label | Description |
|---|---|---|
| Head knocking | Head-to-head knocking | Hitting the snout against the head of the receiving pig |
| | Head-to-body knocking | Hitting the snout against the body of the receiving pig |
| Chasing | Chasing | Following another pig rapidly, usually with biting or attempted biting |

| Aggression Detector | ADR | FPR | FNR |
|---|---|---|---|
| SVM 1 | 95.7% | 4.2% | 4.3% |
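
If aggressive episodes are taken as the positive class, the three rates can be read as ADR = TP/(TP + FN), FNR = FN/(TP + FN) = 100% - ADR, and FPR = FP/(FP + TN). These definitions are an assumption here, although they are consistent with ADR + FNR = 100% in every column of the comparison with previous methods. The hypothetical helper `detection_rates` computes the rates from a confusion matrix. The counts in the usage line are also hypothetical: TP = 110, FN = 5, FP = 9, TN = 206 is just one split of the 115 aggressive and 215 normal episodes listed for the proposed method that reproduces the reported percentages.

```python
def detection_rates(tp, fn, fp, tn):
    """Return (ADR, FPR, FNR) in percent from a binary confusion matrix.

    Assumed definitions, with "aggressive" as the positive class:
    ADR = TP / (TP + FN), FNR = FN / (TP + FN), FPR = FP / (FP + TN).
    """
    adr = 100.0 * tp / (tp + fn)
    fnr = 100.0 * fn / (tp + fn)
    fpr = 100.0 * fp / (fp + tn)
    return adr, fpr, fnr


# Hypothetical counts for 115 aggressive and 215 normal episodes.
adr, fpr, fnr = detection_rates(tp=110, fn=5, fp=9, tn=206)
print(f"ADR {adr:.1f}%  FPR {fpr:.1f}%  FNR {fnr:.1f}%")  # ADR 95.7%  FPR 4.2%  FNR 4.3%
```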

| Parameter | Viazzi et al. [16] | Jin [26] | Proposed Method |

|---|---|---|---|

| Normal data size | 150 | 60 | 215 |

| Aggressive data size | 150 | 60 | 115 |

| Used data | Private | Private | Private |

| Camera type | Color | Color | Depth |

| Camera resolution | |||

| Tracking | N/A | Yes | Yes |

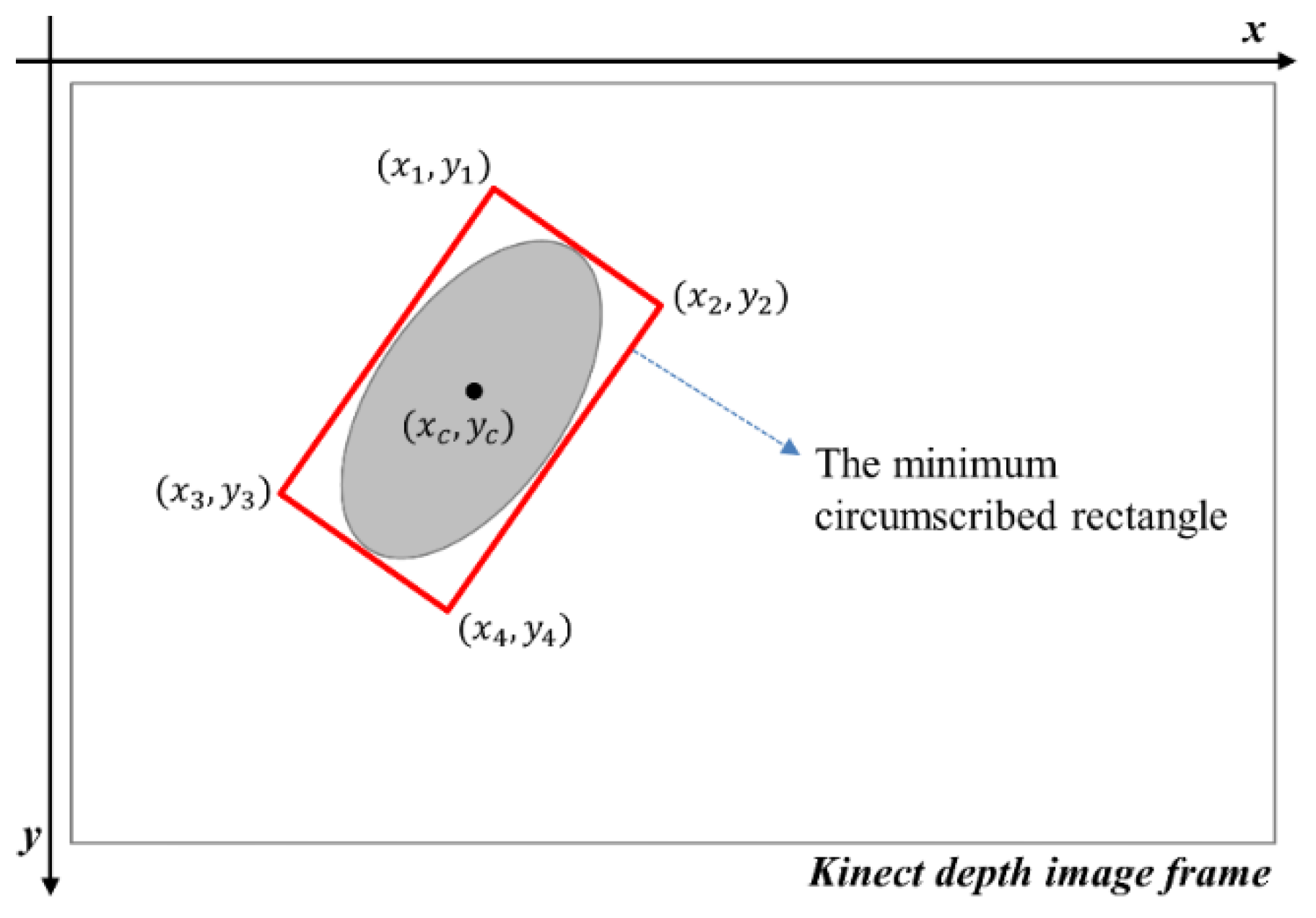

| Features | Mean activity and occupation index | Mean circumscribed rectangle and velocity | Minimum, maximum, average, standard deviation of velocity, and distance between the pigs |

| Feature vector dimension | 2 | 2 | 5 |

| Method | Linear discriminant analysis | SVM | SVM |

| ADR | 88.7% | 93.3% | 95.7% |

| FPR | 10.7% | 8.3% | 4.2% |

| FNR | 11.3% | 6.7% | 4.3% |
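
Both comparison tables list the proposed feature vector as five values: the minimum, maximum, average and standard deviation of velocity, plus the distance between the pigs. The following is a minimal sketch of how such a vector could be computed from tracked centroids. Several choices are assumptions made for illustration and are not details from this section: the per-frame `(x, y)` centroid input, using the faster pig's speed in each frame, using the population standard deviation, and using the mean centroid distance. The function name `episode_features` is likewise hypothetical. The code requires Python 3.8+ for `math.dist` and `statistics.fmean`.

```python
import math
import statistics


def episode_features(track_a, track_b, fps=30.0):
    """Hypothetical 5-D feature vector for one episode of two interacting pigs.

    track_a, track_b: per-frame (x, y) centroids of the two pigs, assumed to
    come from the tracking step. Returns
    [min speed, max speed, mean speed, std of speed, mean distance].
    """
    if len(track_a) != len(track_b) or len(track_a) < 2:
        raise ValueError("need two tracks of equal length with at least 2 frames")
    speeds = []
    for t in range(1, len(track_a)):
        # Displacement between consecutive frames, scaled to units per second.
        speed_a = math.dist(track_a[t], track_a[t - 1]) * fps
        speed_b = math.dist(track_b[t], track_b[t - 1]) * fps
        # Assumption: the faster pig's speed represents the pair in this frame.
        speeds.append(max(speed_a, speed_b))
    # Assumption: "distance between the pigs" is the mean centroid distance.
    distances = [math.dist(a, b) for a, b in zip(track_a, track_b)]
    return [
        min(speeds),
        max(speeds),
        statistics.fmean(speeds),
        statistics.pstdev(speeds),  # population standard deviation
        statistics.fmean(distances),
    ]


# Two pigs moving side by side, 4 units apart, accelerating (fps=1 for readability).
print(episode_features([(0, 0), (1, 0), (3, 0), (6, 0)],
                       [(0, 4), (1, 4), (3, 4), (6, 4)], fps=1.0))
```

In this toy episode the per-frame speeds are 1, 2 and 3 units, so the vector starts with 1.0, 3.0 and 2.0 and ends with a constant distance of 4.0.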

| Aggression Classifier | Class | Precision | Recall |
|---|---|---|---|
| SVM 2 | Head-to-head (or body) knocking | 88.9% | 92.3% |
| | Chasing | 91.5% | 87.8% |
| | Average | 90.2% | 90.1% |
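
The Average row equals the unweighted (macro) mean of the two per-class values. Precision is (88.9 + 91.5) / 2 = 90.2%, and recall is (92.3 + 87.8) / 2 = 90.05%, shown rounded as 90.1%. A tiny helper, `macro_average` (a hypothetical name), makes the calculation explicit:

```python
def macro_average(per_class):
    """Unweighted mean of per-class (precision, recall) pairs, in percent."""
    n = len(per_class)
    precision = sum(p for p, _ in per_class.values()) / n
    recall = sum(r for _, r in per_class.values()) / n
    return precision, recall


# Per-class values of SVM 2, copied from the table.
svm2 = {
    "head-to-head (or body) knocking": (88.9, 92.3),
    "chasing": (91.5, 87.8),
}
p, r = macro_average(svm2)
print(f"precision {p:.2f}%, recall {r:.2f}%")
```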

| Parameter | Oczak et al. [17] | Proposed Method |
|---|---|---|
| Aggressive behavior type | Medium and high aggression | Head-to-head (or body) knocking and chasing |
| Aggressive behavior data size | 634/1253 seconds | 61/54 episodes |
| Used data | Private | Private |
| Camera type | Color | Depth |
| Camera resolution | | |
| Tracking | N/A | Yes |
| Features | Average, maximum, minimum, summation and variance of activity index | Minimum, maximum, average, standard deviation of velocity, and distance between the pigs |
| Feature vector dimension | 5 | 5 |
| Method | Artificial neural network | SVM |
| Precision | 87.7% | 90.2% |
| Recall | 81.9% | 90.1% |

| Parameter | Execution Time (ms) | Frame Rate (fps) |
|---|---|---|
| Depth information acquisition (per frame) | 56 | 17.85 |
| Feature extraction (per pair of interacting pigs) | 10 | 100 |
| Aggression detection/classification (per episode) | 1 | 30,000 |
| Total * | 1981 (per episode) | 15.14 |
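
The Total row can be reproduced if one episode is assumed to span 30 frames. That number is not stated in this section, but it is consistent with the table itself, because 1 ms per episode corresponds to 30,000 fps. Under that assumption, 30 × 56 ms for depth acquisition plus 30 × 10 ms for feature extraction plus 1 ms for detection and classification gives 1981 ms, and 30 frames / 1.981 s ≈ 15.14 fps. The helper `episode_timing` and its defaults are hypothetical:

```python
FRAMES_PER_EPISODE = 30  # inferred from the table, not stated in the text


def episode_timing(acquire_ms=56.0, extract_ms=10.0, classify_ms=1.0,
                   frames=FRAMES_PER_EPISODE):
    """Total time per episode (ms) and the resulting overall frame rate (fps).

    Depth acquisition and feature extraction run once per frame; detection
    and classification run once per episode.
    """
    total_ms = frames * (acquire_ms + extract_ms) + classify_ms
    fps = frames / (total_ms / 1000.0)
    return total_ms, fps


total_ms, fps = episode_timing()
print(f"{total_ms:.0f} ms per episode, {fps:.2f} fps")  # 1981 ms per episode, 15.14 fps
```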

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.; Jin, L.; Park, D.; Chung, Y. Automatic Recognition of Aggressive Behavior in Pigs Using a Kinect Depth Sensor. Sensors 2016, 16, 631. https://doi.org/10.3390/s16050631
