3-D Imaging Systems for Agricultural Applications—A Review

Abstract

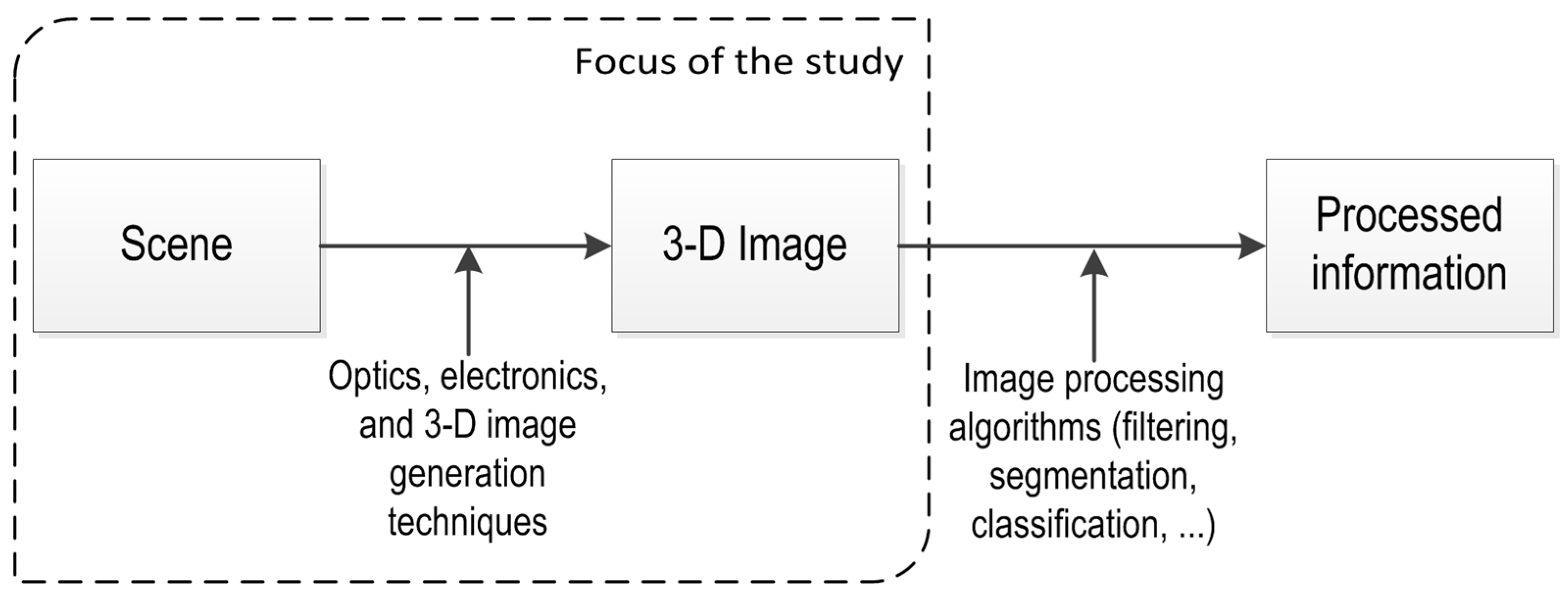

1. Introduction

2. 3-D Vision Techniques

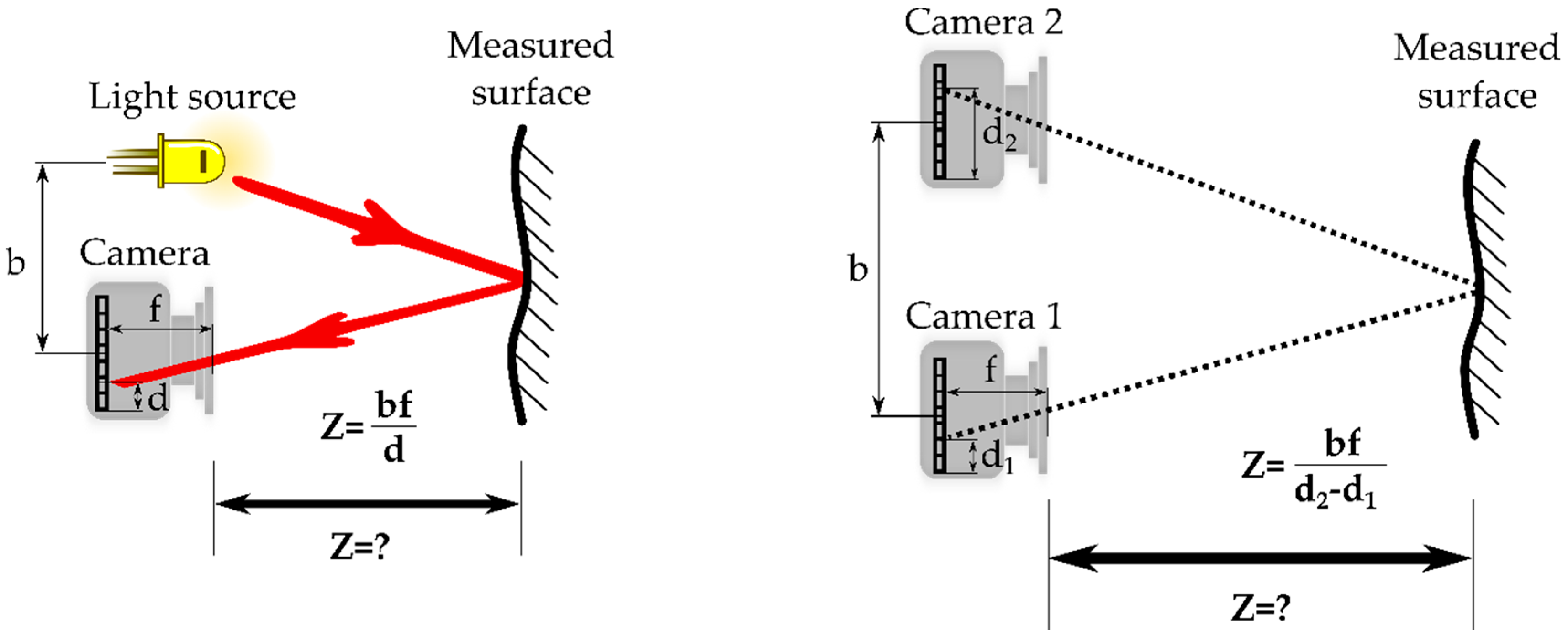

2.1. Triangulation

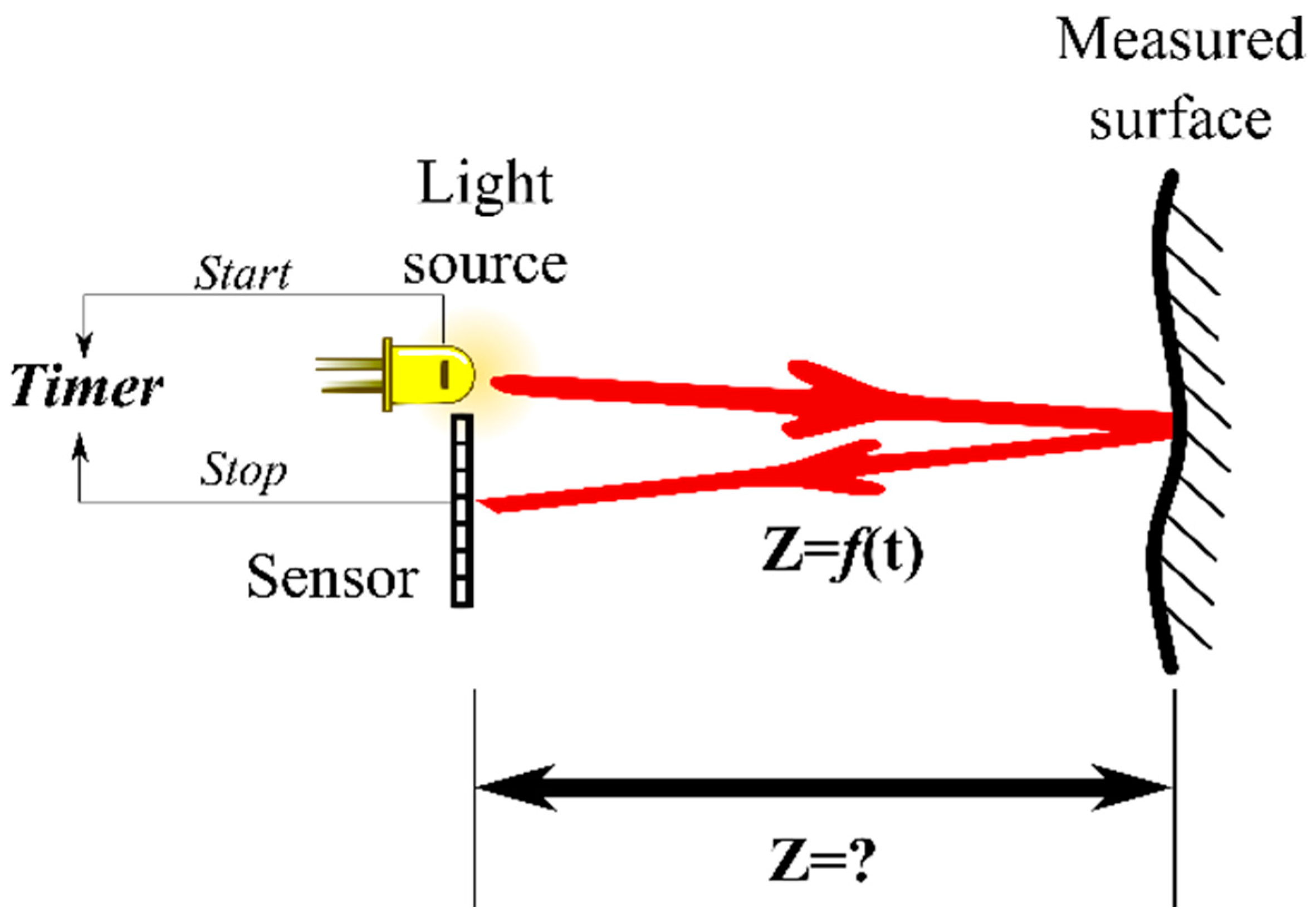

2.2. TOF

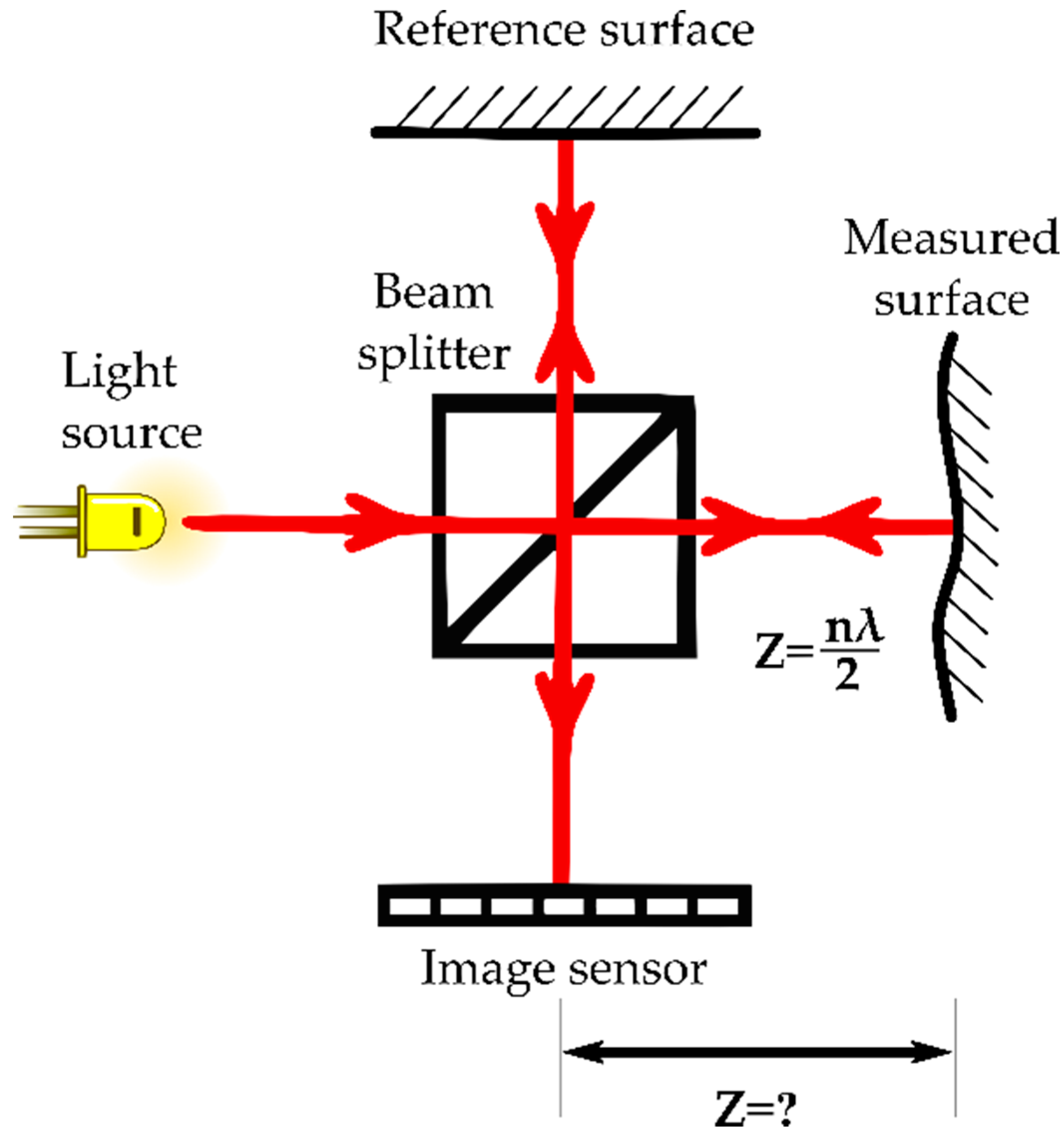

2.3. Interferometry

2.4. Comparison of the Most Common 3-D Vision Techniques

3. Applications in Agriculture

3.1. Vehicle Navigation

3.1.1. Triangulation

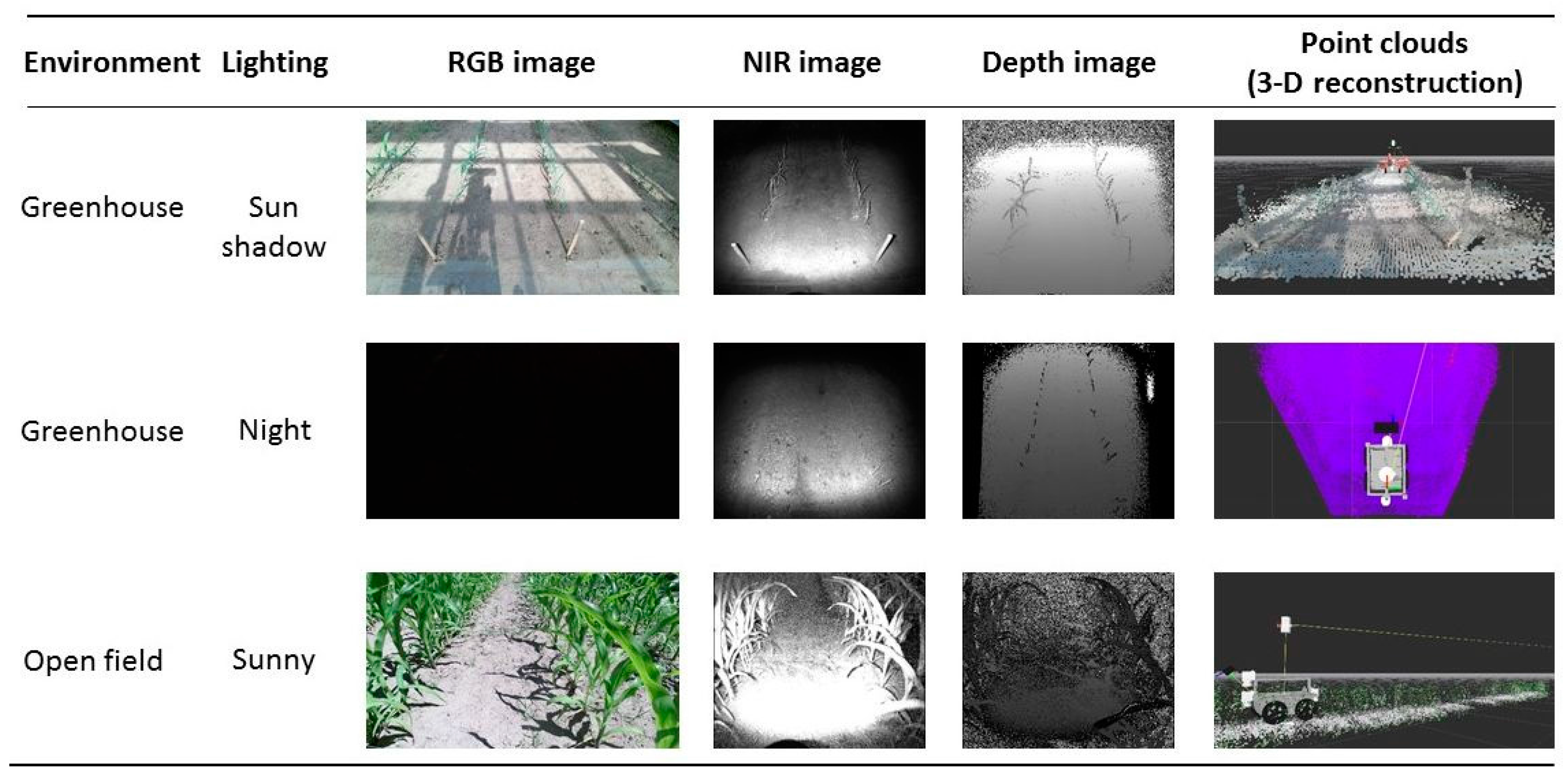

3.1.2. TOF

3.2. Crop Husbandry

3.2.1. Triangulation

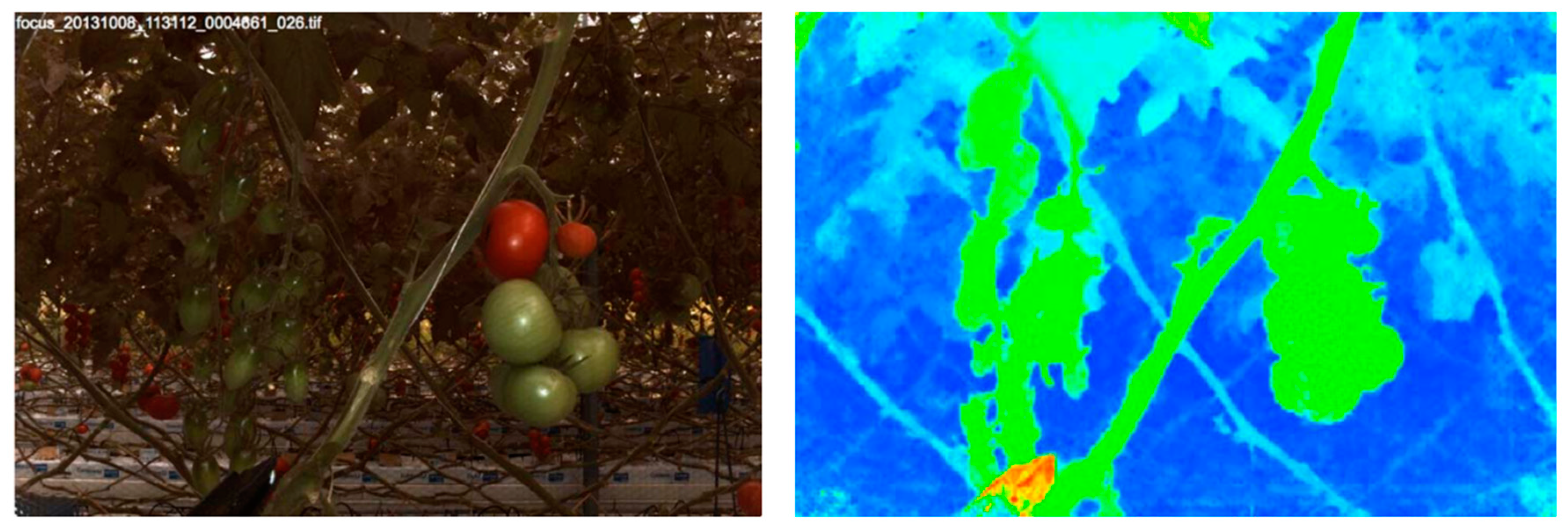

3.2.2. TOF

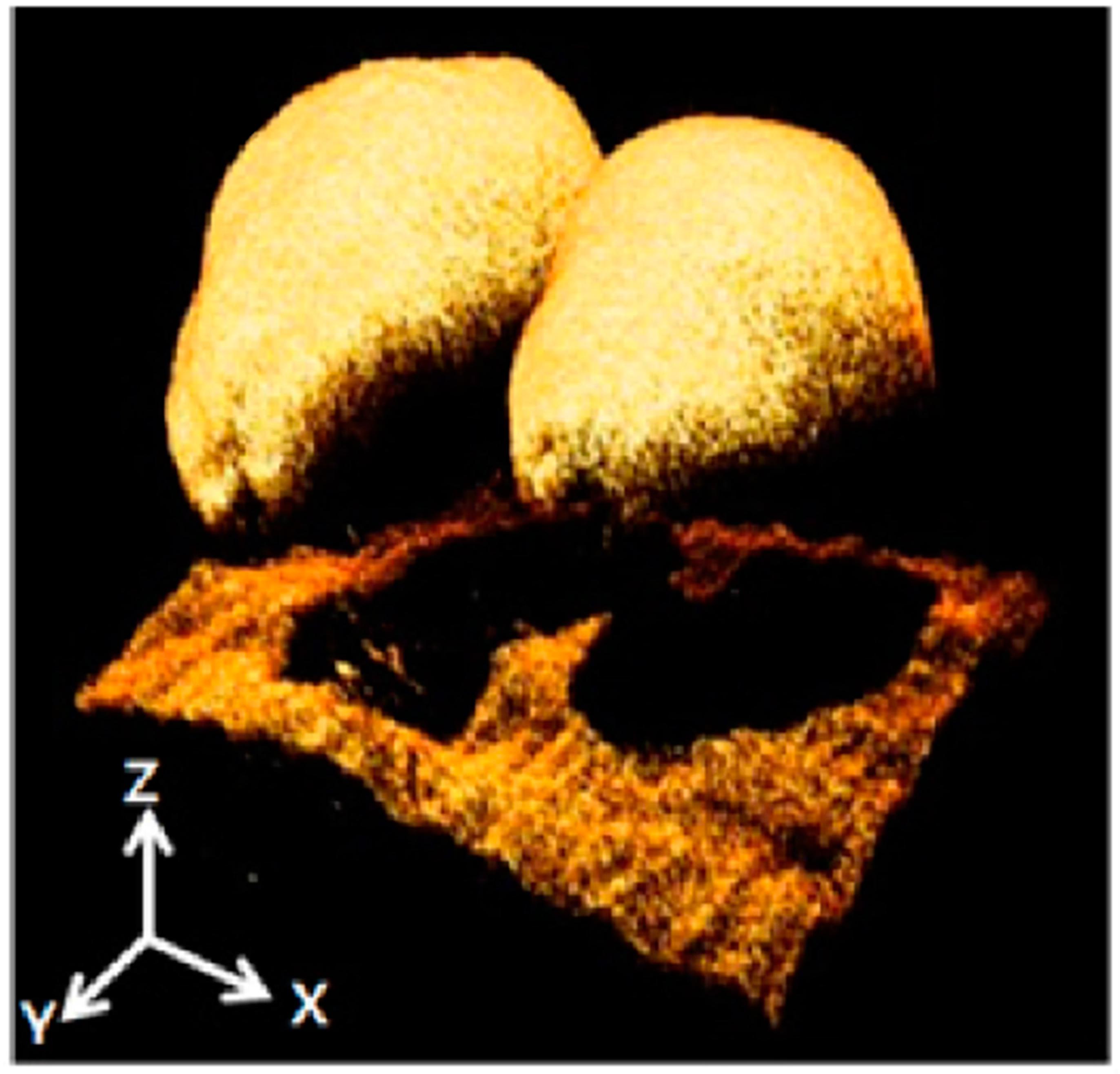

3.2.3. Interferometry

3.3. Animal Husbandry

3.3.1. Triangulation

3.3.2. TOF

3.4. Summary

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Hertwich, E. Assessing the Environmental Impacts of Consumption and Production: Priority Products and Materials, A Report of the Working Group on the Environmental Impacts of Products and Materials to the International Panel for Sustainable Resource Management; UNEP: Nairobi, Kenya, 2010.

- Bergerman, M.; van Henten, E.; Billingsley, J.; Reid, J.; Mingcong, D. IEEE Robotics and Automation Society Technical Committee on Agricultural Robotics and Automation. IEEE Robot. Autom. Mag. 2013, 20, 20–24.

- Edan, Y.; Han, S.; Kondo, N. Automation in agriculture. In Handbook of Automation; Nof, S.Y., Ed.; Springer: West Lafayette, IN, USA, 2009; pp. 1095–1128.

- Joergensen, R.N. Study on Line Imaging Spectroscopy as a Tool for Nitrogen Diagnostics in Precision Farming; The Royal Veterinary and Agricultural University: Copenhagen, Denmark, 2002.

- Eddershaw, T. IMAGING & Machine Vision Europe; Europa Science: Cambridge, UK, 2014; pp. 34–36.

- Ma, Y.; Kosecka, J.; Soatto, S.; Sastry, S. An Invitation to 3-D Vision: From Images to Geometric Models; Antman, S., Sirovich, L., Marsden, J.E., Wiggins, S., Eds.; Springer Science+Business Media: New York, NY, USA, 2004.

- Bellmann, A.; Hellwich, O.; Rodehorst, V.; Yilmaz, U. A Benchmarking Dataset for Performance Evaluation of Automatic Surface Reconstruction Algorithms. In IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Minneapolis, MN, USA, 2007; pp. 1–8.

- Jarvis, R. A perspective on range finding techniques for computer vision. IEEE Trans. Pattern Anal. Mach. Intell. 1983, PAMI-5, 122–139.

- Blais, F. Review of 20 years of range sensor development. J. Electron. Imaging 2004, 13, 231–240.

- Grift, T. A review of automation and robotics for the bioindustry. J. Biomechatron. Eng. 2008, 1, 37–54.

- McCarthy, C.L.; Hancock, N.H.; Raine, S.R. Applied machine vision of plants: A review with implications for field deployment in automated farming operations. Intell. Serv. Robot. 2010, 3, 209–217.

- Besl, P.J. Active, Optical Range Imaging Sensors. Mach. Vis. Appl. 1988, 1, 127–152.

- Büttgen, B.; Oggier, T.; Lehmann, M. CCD/CMOS lock-in pixel for range imaging: challenges, limitations and state-of-the-art. In 1st Range Imaging Research Day; Hilmar Ingensand and Timo Kahlmann: Zurich, Switzerland, 2005; pp. 21–32.

- Schwarte, R.; Heinol, H.; Buxbaum, B.; Ringbeck, T. Principles of Three-Dimensional Imaging Techniques. In Handbook of Computer Vision and Applications; Jähne, B., Haußecker, H., Geißler, P., Eds.; Academic Press: Heidelberg, Germany, 1999; pp. 464–482.

- Jähne, B.; Haußecker, H.; Geißler, P. Handbook of Computer Vision and Applications; Jähne, B., Haußecker, H., Geißler, P., Eds.; Academic Press: Heidelberg, Germany, 1999; Volume 1–2.

- Lange, R. Time-of-Flight Distance Measurement with Solid-State Image Sensors in CMOS/CCD-Technology; University of Siegen: Siegen, Germany, 2000.

- Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- Seitz, S.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A Comparison and Evaluation of Multi-View Stereo Reconstruction Algorithms. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition; IEEE: New York, NY, USA, 2006; pp. 519–528.

- Okutomi, M.; Kanade, T. A multiple-baseline stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 353–363.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-Motion” photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Lavest, J.M.; Rives, G.; Dhome, M. Three-dimensional reconstruction by zooming. IEEE Trans. Robot. Autom. 1993, 9, 196–207.

- Sun, C. Fast optical flow using 3D shortest path techniques. Image Vis. Comput. 2002, 20, 981–991.

- Cheung, K.G.; Baker, S.; Kanade, T. Shape-From-Silhouette Across Time Part I: Theory and Algorithms. Int. J. Comput. Vis. 2005, 62, 221–247.

- Kutulakos, K.N.; Seitz, S.M. A theory of shape by space carving. Int. J. Comput. Vis. 2000, 38, 199–218.

- Savarese, S. Shape Reconstruction from Shadows and Reflections; California Institute of Technology: Pasadena, CA, USA, 2005.

- Lobay, A.; Forsyth, D.A. Shape from Texture without Boundaries. Int. J. Comput. Vis. 2006, 67, 71–91.

- Salvi, J.; Fernandez, S.; Pribanic, T.; Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010, 43, 2666–2680.

- Horn, B.K.P. Shape From Shading: A Method for Obtaining the Shape of a Smooth Opaque Object From One View; Massachusetts Institute of Technology: Cambridge, MA, USA, 1970.

- Woodham, R. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144.

- Nayar, S.K.; Nakagawa, Y. Shape from focus. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 824–831.

- Favaro, P.; Soatto, S. A Geometric Approach to Shape from Defocus. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1–12.

- Tiziani, H.J. Optische Methoden der 3-D-Messtechnik und Bildverarbeitung. In Ahlers, Rolf-Jürgen (Hrsg.): Bildverarbeitung: Forschen, Entwickeln, Anwenden; Techn. Akad. Esslingen: Ostfildern, Germany, 1989; pp. 1–26.

- Lachat, E.; Macher, H.; Mittet, M.; Landes, T.; Grussenmeyer, P. First experiences with Kinect v2 sensor for close range 3D modelling. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; ISPRS: Avila, Spain, 2015; Volume XL-5/W4, pp. 93–100.

- Griepentrog, H.W.; Andersen, N.A.; Andersen, J.C.; Blanke, M.; Heinemann, O.; Nielsen, J.; Pedersen, S.M.; Madsen, T.E.; Wulfsohn, D. Safe and Reliable—Further Development of a Field Robot. In 7th European Conference on Precision Agriculture (ECPA), 6–8 July 2009; Wageningen Academic Publishers: Wageningen, The Netherlands; pp. 857–866.

- Shalal, N.; Low, T.; McCarthy, C.; Hancock, N. A review of autonomous navigation systems in agricultural environments. In Innovative Agricultural Technologies for a Sustainable Future; Society for Engineering in Agriculture (SEAg): Barton, Australia, 2013; pp. 1–16.

- Mousazadeh, H. A technical review on navigation systems of agricultural autonomous off-road vehicles. J. Terramech. 2013, 50, 211–232.

- Ji, B.; Zhu, W.; Liu, B.; Ma, C.; Li, X. Review of recent machine-vision technologies in agriculture. In Second International Symposium on Knowledge Acquisition and Modeling; IEEE: New York, NY, USA, 2009; pp. 330–334.

- Kise, M.; Zhang, Q.; Rovira Más, F. A Stereovision-based Crop Row Detection Method for Tractor-automated Guidance. Biosyst. Eng. 2005, 90, 357–367.

- Rovira-Más, F.; Han, S.; Wei, J.; Reid, J.F. Autonomous guidance of a corn harvester using stereo vision. Agric. Eng. Int. CIGR Ejournal 2007, 9, 1–13.

- Hanawa, K.; Yamashita, T.; Matsuo, Y.; Hamada, Y. Development of a stereo vision system to assist the operation of agricultural tractors. Jpn. Agric. Res. Q. JARQ 2012, 46, 287–293.

- Blas, M.R.; Blanke, M. Stereo vision with texture learning for fault-tolerant automatic baling. Comput. Electron. Agric. 2011, 75, 159–168.

- Wang, Q.; Zhang, Q.; Rovira-Más, F.; Tian, L. Stereovision-based lateral offset measurement for vehicle navigation in cultivated stubble fields. Biosyst. Eng. 2011, 109, 258–265.

- Reina, G.; Milella, A. Towards autonomous agriculture: Automatic ground detection using trinocular stereovision. Sensors 2012, 12, 12405–12423.

- Reina, G.; Milella, A.; Nielsen, M.; Worst, R.; Blas, M.R. Ambient awareness for agricultural robotic vehicles. Biosyst. Eng. 2016.

- Wei, J.; Reid, J.F.; Han, S. Obstacle Detection Using Stereo Vision To Enhance Safety of Autonomous Machines. Trans. Am. Soc. Agric. Eng. 2005, 48, 2389–2397.

- Yang, L.; Noguchi, N. Human detection for a robot tractor using omni-directional stereo vision. Comput. Electron. Agric. 2012, 89, 116–125.

- Nissimov, S.; Goldberger, J.; Alchanatis, V. Obstacle detection in a greenhouse environment using the Kinect sensor. Comput. Electron. Agric. 2015, 113, 104–115.

- Kaizu, Y.; Choi, J. Development of a tractor navigation system using augmented reality. Eng. Agric. Environ. Food 2012, 5, 96–101.

- Choi, J.; Yin, X.; Yang, L.; Noguchi, N. Development of a laser scanner-based navigation system for a combine harvester. Eng. Agric. Environ. Food 2014, 7, 7–13.

- Yin, X.; Noguchi, N.; Choi, J. Development of a target recognition and following system for a field robot. Comput. Electron. Agric. 2013, 98, 17–24.

- CLAAS CAM PILOT. Available online: http://www.claas.de/produkte/easy/lenksysteme/optische-lenksysteme/cam-pilot (accessed on 26 January 2016).

- IFM Electronic 3D Smart Sensor—Your Assistant on Mobile Machines. Available online: http://www.ifm.com (accessed on 22 January 2016).

- CLAAS AUTO FILL. Available online: http://www.claas.de/produkte/easy/cemos/cemos-automatic (accessed on 26 January 2016).

- New Holland IntelliFill System. Available online: http://agriculture1.newholland.com/eu/en-uk?market=uk (accessed on 26 January 2016).

- Hernandez, A.; Murcia, H.; Copot, C.; de Keyser, R. Towards the Development of a Smart Flying Sensor: Illustration in the Field of Precision Agriculture. Sensors 2015, 15, 16688–16709.

- Naio Technologies Oz. Available online: http://naio-technologies.com (accessed on 10 February 2016).

- Li, L.; Zhang, Q.; Huang, D. A review of imaging techniques for plant phenotyping. Sensors 2014, 14, 20078–20111.

- Rosell, J.R.; Sanz, R. A review of methods and applications of the geometric characterization of tree crops in agricultural activities. Comput. Electron. Agric. 2012, 81, 124–141.

- Wulder, M.A.; White, J.C.; Nelson, R.F.; Næsset, E.; Ørka, H.O.; Coops, N.C.; Hilker, T.; Bater, C.W.; Gobakken, T. Lidar sampling for large-area forest characterization: A review. Remote Sens. Environ. 2012, 121, 196–209.

- Vos, J.; Marcelis, L.; de Visser, P.; Struik, P.; Evers, J. Functional–Structural Plant Modelling in Crop Production; Springer: Wageningen, The Netherlands, 2007; Volume 61.

- Moreda, G.P.; Ortiz-Cañavate, J.; García-Ramos, F.J.; Ruiz-Altisent, M. Non-destructive technologies for fruit and vegetable size determination—A review. J. Food Eng. 2009, 92, 119–136.

- Bac, C.W.; van Henten, E.J.; Hemming, J.; Edan, Y. Harvesting Robots for High-value Crops: State-of-the-art Review and Challenges Ahead. J. Field Robot. 2014, 31, 888–911.

- CROPS. Intelligent Sensing and Manipulation for Sustainable Production and Harvesting of High Value Crops, Clever Robots for Crops. Available online: http://cordis.europa.eu/result/rcn/90611_en.html (accessed on 1 February 2016).

- Jay, S.; Rabatel, G.; Hadoux, X.; Moura, D.; Gorretta, N. In-field crop row phenotyping from 3D modeling performed using Structure from Motion. Comput. Electron. Agric. 2015, 110, 70–77.

- Santos, T.T.; de Oliveira, A.A. Image-based 3D digitizing for plant architecture analysis and phenotyping. In Proceedings of the XXV Conference on Graphics, Patterns and Images, Ouro Preto, Brazil, 22–25 August 2012; pp. 21–28.

- Martínez-Casasnovas, J.A.; Ramos, M.C.; Balasch, C. Precision analysis of the effect of ephemeral gully erosion on vine vigour using NDVI images. Precis. Agric. 2013, 13, 777–783.

- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.

- Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

- Herrero-Huerta, M.; González-Aguilera, D.; Rodriguez-Gonzalvez, P.; Hernández-López, D. Vineyard yield estimation by automatic 3D bunch modelling in field conditions. Comput. Electron. Agric. 2015, 110, 17–26.

- Moonrinta, J.; Chaivivatrakul, S.; Dailey, M.N.; Ekpanyapong, M. Fruit detection, tracking, and 3D reconstruction for crop mapping and yield estimation. In Proceedings of the 2010 11th International Conference on Control Automation Robotics Vision, Singapore, 7–10 December 2010; pp. 1181–1186.

- Wang, Q.; Nuske, S.; Bergerman, M.; Singh, S. Experimental Robotics; Desai, P.J., Dudek, G., Khatib, O., Kumar, V., Eds.; Springer International Publishing: Heidelberg, Germany, 2013; pp. 745–758.

- Arikapudi, R.; Vougioukas, S.; Saracoglu, T. Orchard tree digitization for structural-geometrical modeling. In Precision Agriculture’15; Stafford, J.V., Ed.; Wageningen Academic Publishers: Wageningen, The Netherlands, 2015; pp. 329–336.

- UMR Itap Becam. Available online: http://itap.irstea.fr/ (accessed on 1 February 2016).

- Deepfield Robotics BoniRob. Available online: http://www.deepfield-robotics.com/ (accessed on 1 February 2016).

- Busemeyer, L.; Mentrup, D.; Möller, K.; Wunder, E.; Alheit, K.; Hahn, V.; Maurer, H.; Reif, J.; Würschum, T.; Müller, J.; et al. BreedVision—A Multi-Sensor Platform for Non-Destructive Field-Based Phenotyping in Plant Breeding. Sensors 2013, 13, 2830–2847.

- Optimalog Heliaphen. Available online: http://www.optimalog.com/ (accessed on 1 February 2016).

- The University of Sydney Ladybird. Available online: http://www.acfr.usyd.edu.au/ (accessed on 1 February 2016).

- Koenderink, N.J.J.P.; Wigham, M.; Golbach, F.; Otten, G.; Gerlich, R.; van de Zedde, H.J. MARVIN: High speed 3D imaging for seedling classification. In Proceedings of the European Conference on Precision Agriculture, Wageningen, The Netherlands, 6–8 July 2009; pp. 279–286.

- INRA PhenoArch. Available online: http://www.inra.fr/ (accessed on 1 February 2016).

- Polder, G.; Lensink, D.; Veldhuisen, B. PhenoBot—a robot system for phenotyping large tomato plants in the greenhouse using a 3D light field camera. In Phenodays; Wageningen UR: Vaals, Holland, 2013.

- Phenospex PlantEye. Available online: https://phenospex.com/ (accessed on 1 February 2016).

- Alenyà, G.; Dellen, B.; Foix, S.; Torras, C. Robotic leaf probing via segmentation of range data into surface patches. In IROS Workshop on Agricultural Robotics: Enabling Safe, Efficient, Affordable Robots for Food Production; IEEE/RSJ: Vilamoura, Portugal, 2012; pp. 1–6.

- Alci Visionics & Robotics Sampling Automation System: SAS. Available online: http://www.alci.fr/ (accessed on 1 February 2016).

- LemnaTec Scanalyzer. Available online: http://www.lemnatec.com/ (accessed on 1 February 2016).

- Polder, G.; van der Heijden, G.W.A.M.; Glasbey, C.A.; Song, Y.; Dieleman, J.A. Spy-See—Advanced vision system for phenotyping in greenhouses. In Proceedings of the MINET Conference: Measurement, Sensation and Cognition, London, UK, 10–12 November 2009; pp. 115–119.

- BLUE RIVER TECHNOLOGY Zea. Available online: http://www.bluerivert.com/ (accessed on 1 February 2016).

- Noordam, J.; Hemming, J.; Van Heerde, C.; Golbach, F.; Van Soest, R.; Wekking, E. Automated rose cutting in greenhouses with 3D vision and robotics: Analysis of 3D vision techniques for stem detection. In Acta Horticulturae 691; van Straten, G., Bot, G.P., van Meurs, W.T.M., Marcelis, L.F., Eds.; ISHS: Leuven, Belgium, 2005; pp. 885–889.

- Hemming, J.; Golbach, F.; Noordam, J.C. Reverse Volumetric Intersection (RVI), a method to generate 3D images of plants using multiple views. In Bornimer Agrartechn. Berichte; Inst. für Agrartechnik e.V. Bornim: Potsdam-Bornim, Germany, 2005; Volume 40, pp. 17–27.

- Tabb, A. Shape from Silhouette probability maps: Reconstruction of thin objects in the presence of silhouette extraction and calibration error. In Proceedings of the 2013 IEEE Conference Computer Vision Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 161–168.

- Billiot, B.; Cointault, F.; Journaux, L.; Simon, J.-C.; Gouton, P. 3D image acquisition system based on shape from focus technique. Sensors 2013, 13, 5040–5053.

- Jin, J.; Tang, L. Corn plant sensing using real-time stereo vision. J. Field Robot. 2009, 26, 591–608.

- Zhao, Y.; Sun, Y.; Cai, X.; Liu, H.; Lammers, P.S. Identify Plant Drought Stress by 3D-Based Image. J. Integr. Agric. 2012, 11, 1207–1211.

- Piron, A.; van der Heijden, F.; Destain, M.F. Weed detection in 3D images. Precis. Agric. 2011, 12, 607–622.

- Lino, A.C.L.; Dal Fabbro, I.M. Fruit profilometry based on shadow Moiré techniques. Ciênc. Agrotechnol. 2001, 28, 119–125.

- Šeatović, D.; Kutterer, H.; Anken, T.; Holpp, M. Automatic weed detection in grassland. In Proceedings of the 67th International Conference on Agricultural Engineering, Hanover, Germany, 6–7 November 2009; pp. 187–192.

- Wolff, A. Phänotypisierung in Feldbeständen Mittels 3D-Lichtschnitt-Technik; Strube Research GmbH: Söllingen, Germany, 2012.

- Kise, M.; Zhang, Q. Creating a panoramic field image using multi-spectral stereovision system. Comput. Electron. Agric. 2008, 60, 67–75.

- Rovira-Más, F.; Zhang, Q.; Reid, J.F. Creation of three-dimensional crop maps based on aerial stereoimages. Biosyst. Eng. 2005, 90, 251–259.

- Strothmann, W.; Ruckelshausen, A.; Hertzberg, J. Multiwavelength laser line profile sensing for agricultural crop. In Proceedings SPIE 9141 Optical Sensing and Detection III; Berghmans, F., Mignani, A.G., de Moor, P., Eds.; SPIE: Brussels, Belgium, 2014.

- Guthrie, A.G.; Botha, T.R.; Els, P.S. 3D computer vision contact patch measurements inside off-road vehicles tyres. In Proceedings of the 18th International Conference of the ISTVS, Seoul, Korea, 22–25 September 2014; Volume 4405, pp. 1–6.

- Jiang, L.; Zhu, B.; Cheng, X.; Luo, Y.; Tao, Y. 3D surface reconstruction and analysis in automated apple stem-end/calyx identification. Trans. ASABE 2009, 52, 1775–1784.

- Möller, K.; Jenz, M.; Kroesen, M.; Losert, D.; Maurer, H.-P.; Nieberg, D.; Würschum, T.; Ruckelshausen, A. Feldtaugliche Multisensorplattform für High-Throughput Getreidephänotypisierung—Aufbau und Datenhandling. In Intelligente Systeme—Stand Der Technik Und Neue Möglichkeiten; Ruckelshausen, A., Meyer-Aurich, A., Rath, T., Recke, G., Theuvsen, B., Eds.; Gesellschaft für Informatik e.V. (GI): Osnabrück, Germany, 2016; pp. 137–140.

- Polder, G.; Hofstee, J.W. Phenotyping large tomato plants in the greenhouse using a 3D light-field camera. ASABE CSBE/SCGAB Annu. Int. Meet. 2014, 1, 153–159.

- Vision Robotics Proto Prune. Available online: http://www.visionrobotics.com/ (accessed on 26 January 2016).

- Marinello, F.; Pezzuolo, A.; Gasparini, F.; Sartori, L. Three-dimensional sensor for dynamic characterization of soil microrelief. In Precision Agriculture’13; Stafford, J., Ed.; Wageningen Academic Publishers: Wageningen, The Netherlands, 2013; pp. 71–78.

- Garrido, M.; Paraforos, D.S.; Reiser, D.; Arellano, M.V.; Griepentrog, H.W.; Valero, C. 3D Maize Plant Reconstruction Based on Georeferenced Overlapping LiDAR Point Clouds. Remote Sens. 2015, 7, 17077–17096.

- Weiss, U.; Biber, P.; Laible, S.; Bohlmann, K.; Zell, A. Plant Species Classification using a 3D LIDAR Sensor and Machine Learning. In Machine Learning and Applications; IEEE: Washington, DC, USA, 2010; pp. 339–345.

- Weiss, U.; Biber, P. Plant detection and mapping for agricultural robots using a 3D LIDAR sensor. Robot. Auton. Syst. 2011, 59, 265–273.

- Saeys, W.; Lenaerts, B.; Craessaerts, G.; de Baerdemaeker, J. Estimation of the crop density of small grains using LiDAR sensors. Biosyst. Eng. 2009, 102, 22–30.

- Nakarmi, A.; Tang, L. Automatic inter-plant spacing sensing at early growth stages using a 3D vision sensor. Comput. Electron. Agric. 2012, 82, 23–31.

- Adhikari, B.; Karkee, M. 3D Reconstruction of apple trees for mechanical pruning. ASABE Annu. Int. Meet. 2011, 7004.

- Gongal, A.; Amatya, S.; Karkee, M. Identification of repetitive apples for improved crop-load estimation with dual-side imaging. In Proceedings of the ASABE and CSBE/SCGAB Annual International Meeting, Montreal, QC, Canada, 13–16 July 2014; Volume 7004, pp. 1–9.

- Tanaka, T.; Kataoka, T.; Ogura, H.; Shibata, Y. Evaluation of rotary tillage performance using resin-made blade by 3D-printer. In Proceedings of the 18th International Conference of the ISTVS, Seoul, Korea, 22–25 September 2014.

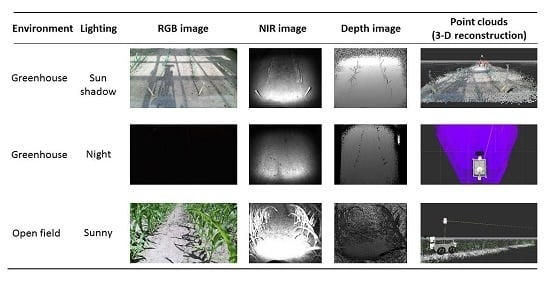

- Vázquez-Arellano, M.; Reiser, D.; Garrido, M.; Griepentrog, H.W. Reconstruction of geo-referenced maize plants using a consumer time-of-flight camera in different agricultural environments. In Intelligente Systeme—Stand der Technik und neue Möglichkeiten; Ruckelshausen, A., Meyer-Aurich, A., Rath, T., Recke, G., Theuvsen, B., Eds.; Gesellschaft für Informatik e.V. (GI): Osnabrück, Germany, 2016; pp. 213–216.

- Lee, C.; Lee, S.-Y.; Kim, J.-Y.; Jung, H.-Y.; Kim, J. Optical sensing method for screening disease in melon seeds by using optical coherence tomography. Sensors 2011, 11, 9467–9477.

- Lee, S.-Y.; Lee, C.; Kim, J.; Jung, H.-Y. Application of optical coherence tomography to detect Cucumber green mottle mosaic virus (CGMMV) infected cucumber seed. Hortic. Environ. Biotechnol. 2012, 53, 428–433.

- Barbosa, E.A.; Lino, A.C.L. Multiwavelength electronic speckle pattern interferometry for surface shape measurement. Appl. Opt. 2007, 46, 2624–2631.

- Madjarova, V.; Toyooka, S.; Nagasawa, H.; Kadono, H. Blooming processes in flowers studied by dynamic electronic speckle pattern interferometry (DESPI). Opt. Soc. Jpn. 2003, 10, 370–374.

- Fox, M.D.; Puffer, L.G. Holographic interferometric measurement of motions in mature plants. Plant Physiol. 1977, 60, 30–33.

- Thilakarathne, B.L.S.; Rajagopalan, U.M.; Kadono, H.; Yonekura, T. An optical interferometric technique for assessing ozone induced damage and recovery under cumulative exposures for a Japanese rice cultivar. Springerplus 2014, 3, 89.

- Ben Azouz, A.; Esmonde, H.; Corcoran, B.; O’Callaghan, E. Development of a teat sensing system for robotic milking by combining thermal imaging and stereovision technique. Comput. Electron. Agric. 2015, 110, 162–170.

- Akhloufi, M. 3D vision system for intelligent milking robot automation. In Proceedings of the Intelligent Robots and Computer Vision XXXI: Algorithms and Techniques, San Francisco, CA, USA, 2 February 2014.

- Van der Stuyft, E.; Schofield, C.P.; Randall, J.M.; Wambacq, P.; Goedseels, V. Development and application of computer vision systems for use in livestock production. Comput. Electron. Agric. 1991, 6, 243–265.

- Ju, X.; Siebert, J.P.; McFarlane, N.J.B.; Wu, J.; Tillett, R.D.; Schofield, C.P. A stereo imaging system for the metric 3D recovery of porcine surface anatomy. Sens. Rev. 2004, 24, 298–307.

- Hinz, A. Objective Grading and Video Image Technology; E+V Technology GmbH & Co. KG: Oranienburg, Germany, 2012.

- Viazzi, S.; Bahr, C.; van Hertem, T.; Schlageter-Tello, A.; Romanini, C.E.B.; Halachmi, I.; Lokhorst, C.; Berckmans, D. Comparison of a three-dimensional and two-dimensional camera system for automated measurement of back posture in dairy cows. Comput. Electron. Agric. 2014, 100, 139–147.

- Kawasue, K.; Ikeda, T.; Tokunaga, T.; Harada, H. Three-Dimensional Shape Measurement System for Black Cattle Using KINECT Sensor. Int. J. Circuits Syst. Signal Process. 2013, 7, 222–230.

- Kuzuhara, Y.; Kawamura, K.; Yoshitoshi, R.; Tamaki, T.; Sugai, S.; Ikegami, M.; Kurokawa, Y.; Obitsu, T.; Okita, M.; Sugino, T.; et al. A preliminarily study for predicting body weight and milk properties in lactating Holstein cows using a three-dimensional camera system. Comput. Electron. Agric. 2015, 111, 186–193.

- Menesatti, P.; Costa, C.; Antonucci, F.; Steri, R.; Pallottino, F.; Catillo, G. A low-cost stereovision system to estimate size and weight of live sheep. Comput. Electron. Agric. 2014, 103, 33–38.

- Pallottino, F.; Steri, R.; Menesatti, P.; Antonucci, F.; Costa, C.; Figorilli, S.; Catillo, G. Comparison between manual and stereovision body traits measurements of Lipizzan horses. Comput. Electron. Agric. 2015, 118, 408–413.

- Wu, J.; Tillett, R.; McFarlane, N.; Ju, X.; Siebert, J.P.; Schofield, P. Extracting the three-dimensional shape of live pigs using stereo photogrammetry. Comput. Electron. Agric. 2004, 44, 203–222.

- Zion, B. The use of computer vision technologies in aquaculture—A review. Comput. Electron. Agric. 2012, 88, 125–132.

- Underwood, J.; Calleija, M.; Nieto, J.; Sukkarieh, S. A robot amongst the herd: Remote detection and tracking of cows. In Proceedings of the 4th Australian and New Zealand spatially enabled livestock management symposium; Ingram, L., Cronin, G., Sutton, L., Eds.; The University of Sydney: Camden, Australia, 2013; p. 52.

- Belbachir, A.N.; Schraml, S.; Mayerhofer, M.; Hofstätter, M. A Novel HDR Depth Camera for Real-time 3D 360° Panoramic Vision. In IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Columbus, OH, USA, 2014; pp. 425–432.

- Velodyne Puck VLP-16. Available online: http://velodynelidar.com/ (accessed on 3 December 2015).

- Frey, V. PMD Cameras for Automotive & Outdoor Applications; IFM Electronic: Munich, Germany, 2010; Volume 3.

- Kazmi, W.; Foix, S.; Alenyà, G.; Andersen, H.J. Indoor and outdoor depth imaging of leaves with time-of-flight and stereo vision sensors: Analysis and comparison. ISPRS J. Photogramm. Remote Sens. 2014, 88, 128–146.

- Klose, R.; Penlington, J.; Ruckelshausen, A. Usability of 3D time-of-flight cameras for automatic plant phenotyping. Bornimer Agrartech. Berichte 2011, 69, 93–105.

- Odos Imaging Real.iZ VS-1000 High-Resolution Time-of-Flight. Available online: http://www.odos-imaging.com/ (accessed on 26 January 2016).

| Triangulation Approach | Visual Cue | 3-D Image Generation Techniques |
|---|---|---|
| Digital photogrammetry | Stereopsis | Stereo vision [17] |
| Digital photogrammetry | Stereopsis | Multi-view stereo [18] |
| Digital photogrammetry | Stereopsis | Multiple-baseline stereo [19] |
| Digital photogrammetry | Motion | Structure-from-motion [20] |
| Digital photogrammetry | Motion | Shape-from-zooming [21] |
| Digital photogrammetry | Motion | Optical flow [22] |
| Digital photogrammetry | Silhouette | Shape-from-silhouette [23] |
| Digital photogrammetry | Silhouette | Shape-from-photoconsistency [24] |
| Digital photogrammetry | Silhouette | Shape-from-shadow [25] |
| Structured light | Texture | Shape-from-texture [26] |
| Structured light | Texture | Shape-from-structured light [27] |
| Shading | Shading | Shape-from-shading [28] |
| Shading | Shading | Photometric stereo [29] |
| Focus | Focus | Shape-from-focus [30] |
| Focus | Focus | Shape-from-defocus [31] |
| Theodolite | Stereopsis | Trigonometry [32] |

| Basic Principle | Sensor/Technique | Advantages | Disadvantages |

|---|---|---|---|

| Triangulation | Consumer triangulation sensor (CTS) | -Off-the-shelf -Low cost -Provide RGB stream -Good community support, good documentation -Open source libraries available | -Vulnerable to sunlight, where no depth information is produced -Depth information is not possible at night or in very dark environments -Not weather resistant -Warm-up time required to stabilize the depth measurements (~1 h) |

| Stereo vision | -Good community support, good documentation -Off-the-shelf smart cameras (with parallel computing) available -Robust enough for open field applications | -Low texture produce correspondence problems -Susceptible to direct sunlight -Computationally expensive -Depth range is highly dependent on the baseline distance | |

| Structure-from-motion | -Digital cameras are easily and economically available -Open source and commercial software for 3-D reconstruction -Suitable for aerial applications -Excellent portability | -Camera calibration and field references are a requirement for reliable measurements -Time consuming point cloud generation process is not suitable for real-time applications -Requires a lot of experience for obtaining good raw data | |

| Light sheet triangulation | -High precision -Fast image data acquisition and 3-D reconstruction -Limited working range due to the focus -Do not depend on external light sources -New versions have light filtering systems that allow them to handle sunlight | -High cost -Susceptible to sunlight -Time consuming data acquisition | |

| TOF | TOF camera | -Active illumination independent of an external lighting source -Able to acquire data at night or in dark/low light conditions -Commercial 3-D sensors in agriculture are based on the fast-improving photonic mixer device (PMD) technology -New versions have pixel resolutions of up to 4.2 Megapixels -New versions have depth measurement ranges of up to 25 m | -Most of them have low pixel resolution -Most of them are susceptible to direct sunlight -High cost |

| Light sheet (pulse modulated) LIDAR | -Emitted light beams and are robust against sunlight -Able to retrieve depth measurements at night or in dark environments -Robust against interference -Widely used in agricultural applications -Many research papers and information available -New versions perform well in adverse weather conditions (rain, snow, mist and dust) | -Poor performance in edge detection due the spacing between the light beams -Warm-up time required to stabilize the depth measurements (up to 2.5 h) -Normally bulky and with moving parts -Have problems under adverse weather conditions (rain, snow, mist and dust) | |

| Interferometry | Optical coherence tomography (OCT) | -High accuracy -Near surface light penetration -High resolution | -High cost -Limited range -Highly-textured surfaces scatter the light beams -Relative measurements -Sensitive to vibrations -Difficult to implement |
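
The stereo vision entry above notes that the depth range depends strongly on the baseline distance. A minimal sketch of that relationship follows, assuming a rectified stereo pair and an ideal pinhole model. The function names, the 800 px focal length and the baseline values are illustrative assumptions and do not come from any system reviewed here.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(focal_px, baseline_m, depth_m, disparity_step_px=1.0):
    """Approximate depth change per disparity step: dZ ~ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


if __name__ == "__main__":
    focal_px = 800.0   # assumed focal length in pixels
    target_m = 5.0     # assumed distance to the crop row
    for baseline_m in (0.06, 0.12, 0.24):  # assumed baselines in metres
        step = depth_resolution(focal_px, baseline_m, target_m)
        print(f"baseline {baseline_m:.2f} m: one-pixel disparity step spans {step:.3f} m at {target_m} m")
```

Under these assumptions, doubling the baseline halves the depth uncertainty at a given distance, at the cost of a larger minimum working distance and a harder correspondence search.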

| Platform | Basic Principle | Shadowing Device | Environment | Institution | Type |
|---|---|---|---|---|---|
| Becam [73] | Triangulation | √ | Open field | UMR-ITAP | Research |
| BoniRob [74] | TOF | √ | Open field | Deepfield Robotics | Commercial |
| BredVision [75] | TOF | √ | Open field | University of Applied Sciences Osnabrück | Research |
| Heliaphen [76] | Triangulation | × | Greenhouse | Optimalog | Research |
| Ladybird [77] | TOF and triangulation | √ | Open field | University of Sydney | Research |
| Marvin [78] | Triangulation | √ | Greenhouse | Wageningen University | Research |
| PhenoArch [79] | Triangulation | √ | Greenhouse | INRA-LEPSE (by LemnaTec) | Research |
| Phenobot [80] | TOF and triangulation | × | Greenhouse | Wageningen University | Research |
| PlantEye [81] | Triangulation | × | Open field, Greenhouse | Phenospex | Commercial |
| Robot gardener [82] | Triangulation | × | Indoor | GARNICS project | Research |
| SAS [83] | Triangulation | × | Greenhouse | Alci | Commercial |
| Scanalyzer [84] | Triangulation | × | Open field, Greenhouse | LemnaTec | Commercial |
| Spy-See [85] | TOF and triangulation | × | Greenhouse | Wageningen University | Research |
| Zea [86] | Triangulation | √ | Open field | Blue River | Commercial |

| Basic Principle | Technique | Application | Technical Difficulties |
|---|---|---|---|
| Triangulation | Stereo vision | -Autonomous navigation [38,39,40,42,44,46] -Crop husbandry [71,98,100] -Animal husbandry [121,132] | -Blank pixels at some locations, especially those farther from the camera -Low light (cloudy sky) affects 3-D point generation -Direct sunlight and shadows on a sunny day strongly affect depth image generation -Uniform texture of long leaves affects 3-D point generation -Limited field of view -External illumination is required for night implementations -Correspondence and parallax problems -Robust disparity estimation is difficult in areas of homogeneous colour or occlusion -Specular reflections -Colour heterogeneity of the target object -A constant altitude needs to be maintained if a stereo vision system is mounted on a UAV -Camera calibration is necessary -Occlusion of leaves -Selection of a suitable camera position |
| | Multi-view stereo | -Crop husbandry [65] -Animal husbandry [124] | -Surface integration from multiple views is the main obstacle -Challenging software engineering if high-resolution surface reconstruction is desired -Software obstacles associated with handling large images during system calibration and stereo matching |
| | Multiple-baseline stereo | -Autonomous navigation [43] | -Handling rich 3-D data is computationally demanding |
| | Structure-from-motion | -Crop husbandry [64,67,68,69,97] | -Occlusion of leaves -Plants change position from one image to the next due to the wind -High computational power is required to generate a dense point cloud -Determination of a suitable image overlap percentage -Greater hectare coverage requires higher altitudes when using UAVs -The camera’s pixel resolution determines the field spatial resolution -Image mosaicking from UAVs is technically difficult due to the translational and rotational movements of the camera |
| | Shape-from-Silhouette | -Crop husbandry [87,88,89] | -3-D reconstruction results strongly depend on good image pre-processing -Camera calibration is important if several cameras are used -Dense and random canopy branching is more difficult to reconstruct -Post-processing filtering may be required to remove noisy regions |
| | Structured light (light volume) sequentially coded | -Crop husbandry [93] | -Limited projector depth of field -High dynamic range scene -Internal reflections -Thin objects -Occlusions |
| | Structured light (light volume) pseudo random pattern | -Autonomous navigation [47] -Animal husbandry [122,128] | -Strong sensitivity to natural light -Small field of view -Smooth and shiny surfaces do not produce reliable depth measurements -Misalignment between the RGB and depth image due to the difference in pixel resolution -Time delay (30 s) for a stable depth measurement after a quick rotation -Mismatch between the RGB and depth images’ field of view and point of view |
| | Shape-from-Shading | -Crop husbandry [101] | -A zigzag effect is generated at the target object’s boundary (in interlaced video) if it moves at high speed |
| | Structured light shadow Moiré | -Crop husbandry [94] | -Sensitive to disturbances (e.g., surface reflectivity) that become a source of noise |
| | Shape-from-focus | -Crop husbandry [90] | -Limited depth of field decreases the accuracy of the 3-D reconstruction |
| TOF | Pulse modulation (light sheet) | -Autonomous navigation [49] -Crop husbandry [106,109] | -Limited perception of the surrounding structures -Requires movement to obtain 3-D data -Pitching, rolling or yawing using servo motors (i.e., pan-tilt unit) is a method to extend the field of view, but adds technical difficulties -Point cloud registration requires sensor fusion -Small plants are difficult to detect -Lower sampling rate and accuracy compared to continuous wave modulation TOF |
| | Pulse modulation (light volume) | -Autonomous navigation and crop husbandry [107] | -Limited pixel resolution -Difficulty distinguishing small structures with complex shapes |
| | Continuous wave modulation (light sheet) | -Crop husbandry [109] | -Limited distance measurement range (up to 3 m) |
| | Continuous wave modulation (light volume) | -Crop husbandry [110,111,112] -Animal husbandry [122] | -Small field of view -Low pixel resolution -Calibration could be required to correct radial distortion -Requires a sunlight cover for better results -Limited visibility due to occlusion -Lack of colour output, which could be useful for better image segmentation |
| Interferometry | White-light | -Crop husbandry [115,116,120] | -The scattering surface of the plant forms speckles that affect the accuracy -Complexity of implementation |
| | Holographic | -Crop husbandry [119] | -Need for a reference object in the image to detect disturbances |
| | Speckle | -Crop husbandry [117,118] | -Agricultural products with rough surfaces can be difficult to reconstruct -High camera resolutions provide better capabilities to resolve high fringe densities |
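
The table lists a measurement range of up to 3 m for continuous wave modulation (light sheet) sensors. One general property of continuous wave TOF that bounds the range is phase wrapping: distance is recovered from a phase shift, so it is only unambiguous up to c/(2f) for a modulation frequency f. The sketch below illustrates this textbook relationship. The 50 MHz and 20 MHz frequencies and the function names are assumptions chosen for illustration, and the 3 m figure reported in [109] may stem from other factors such as emitted optical power.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def unambiguous_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps: c / (2 f)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


def phase_to_distance(phase_rad, mod_freq_hz):
    """Distance from the measured phase shift: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    for freq_hz in (50e6, 20e6):  # assumed modulation frequencies
        print(f"{freq_hz / 1e6:.0f} MHz: unambiguous range {unambiguous_range(freq_hz):.2f} m")
    # A half-cycle phase shift corresponds to half of the unambiguous range.
    print(f"phase pi at 50 MHz: {phase_to_distance(math.pi, 50e6):.2f} m")
```

Lowering the modulation frequency extends the unambiguous range, but it generally reduces distance precision for a given phase noise, which is one reason multi-frequency schemes are used in some sensors.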

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Vázquez-Arellano, M.; Griepentrog, H.W.; Reiser, D.; Paraforos, D.S. 3-D Imaging Systems for Agricultural Applications—A Review. Sensors 2016, 16, 618. https://doi.org/10.3390/s16050618